6

THE TIMEKEEPERS

The Uncertainties of Scientific Dating

In every case, the story [of man] seemed to hinge on the age of things.

JAMES SHREEVE, THE NEANDERTAL ENIGMA

Current geological dating is arbitrary at best, and wildly inaccurate at worst.

DAVID HATCHER CHILDRESS, LOST CITIES OF NORTH AND CENTRAL AMERICA

I pay not the least attention to the generally received chronologies.

GODFREY HIGGINS, ANACALYPSIS

INFLATIONARY TIMES

Does mankind (or Earth itself, for that matter) have as long a history as we’ve been taught? Millions and billions of years? I have come to doubt it. One century ago, geologists like George Frederick Wright placed the first forms of life at only 24 mya and the age of man at no more than 100 kyr, in contrast to the five million years currently assigned to him. (The subject of Earth’s age is touched on again in chapter 10.)

Historically, it was Darwin’s ponderously gradual and long evolution that kicked off deep time, for his theory demanded vast eons for organisms to evolve. Yet it is a plain fact that with hybridization of the races, new types can arise quickly, in a matter of generations. Darwin postulated minute adaptations accumulating over huge amounts of time; after all, “millions on millions of generations” are needed for speciation to happen.1 Darwin’s detractors, however, twitted him for his conveniently “indefinite time . . . an unlimited number of generations for the transitions to take place.”2

I want to stress that the inflated dates handed to us for life on Earth bear the heavy imprint of evolutionism. Long dating stands at the very opposite extreme (the opposite fallacy) from the young Earth of the biblical account, which places Adam a meager 6,000 or 10,000 years ago, a figure just as suspect as the dates given us by today’s deep-time enthusiasts. I think the truth actually lies somewhere in between. Sure, dating by the bone people is reliable enough up to, say, 40 or 50 kya (the carbon-14 limit), but beyond that point, things go hog wild, off the charts. I would sooner accept their dates as relative (reflecting sequences) than as an absolute count of years.

Absolute dating . . . is still subject to a very large margin of error.

GEORGE GAYLORD SIMPSON, THE MAJOR FEATURES OF EVOLUTION

ADDING ZEROS

“Not a trace of unquestionable evidence of man’s existence has been found in strata older than the Pleistocene,” said William J. Sollas, Oxford geologist.3 Before the 1950s, the Pleistocene was believed to extend back some 700 kyr; today, that’s tripled to at least 2 million years.

Man today has no more conception of how many years ago the Pleistocene commenced, or the length in years of any geological time, era, or period, than the ancient fossil on my library table.

JAMES CHURCHWARD, THE LOST CONTINENT OF MU

Moving man (hominids) beyond the Pleistocene, back to the Tertiary (up to 6 mya), has been de rigueur since Louis Leakey’s time (as per Zinj) and the rise of potassium-argon dating (K-Ar method) in the 1960s. This is how the bone people keep score, and one thing is for sure: the older the better. Given the academic race to outstrip the earliest dated man, most dates are suspiciously overestimated.

Figure 6.1. Louis Leakey as a young man.

And the dates fell like dominoes. Almost overnight, H. erectus of Java was made to jump from 500 kyr to 1 million years old, while Louis Leakey’s headline-making Olduvai finds pushed everything back even further. (A cute consequence: Ashley Montagu’s 1957 book was titled Man: His First Million Years; when it was reprinted in 1969, the revised title was Man: His First Two Million Years.)

Just one century ago, earliest man’s age was set (reasonably) at around 100 kyr. I believe this figure is very close to correct (see more on this in chapter 10). Marcellin Boule, in 1923, had man no older than 125 kyr; at that time the Paleolithic was thought to have begun only 50 kya, but in 1929 that was changed to 400 kya.4 What is going on here? Comparing these dates, we soon find that man’s age has been increased in the past century by a factor of twenty! In the 1940s and 1950s, Australopithecus (then the earliest known hominid) was dated to no more than 500 kyr. By the 1970s the first hominids were “at least a million years old.”5 And with American anthropologist F. Clark Howell’s finds in Ethiopia (just after the K-Ar method was brought out), that figure instantly doubled to 2 myr.

Nevertheless, Le Gros Clark (in 1955) noted that “all the australopithecine deposits in South Africa are not very widely separated in geological time.”6 But after Leakey and Howell broke the Tertiary barrier, their new expanded dates became the gold standard, and everything had to be fitted around this benchmark, or even surpass it.

Now mtDNA says the mod type of man arose perhaps 130 kya: Ethiopia’s Omo I, originally dated at less than 50 kyr, is now offered as possibly the oldest Homo sapiens anywhere at 130 kyr.7 But this figure will not stay put; watch it change to 200 kyr (to fit the African Eve theory). What is the oldest reliable date for the modern type? Back in 1955, Le Gros Clark dated AMHs to a modest 30,000 years ago. But since the 1990s, given the push for the out-of-Africa paradigm, date changes seem more politics, more agenda, than impartial science.

Long dating had a good head start, for it was advanced from the beginning by Darwinists in order to supply a plausible length of time for man to develop his great brain. British naturalist Alfred Russel Wallace (as we will see in chapters 7 and 9) thought at least 15 million years of evolution were needed to produce the human brain (or “the symphonies of Beethoven”). Evolution theory needs very substantial time also to get back to the less specialized pongids of the Miocene, from which both apes and humans presumably evolved (especially since the more recent Pliocene apes are not too different than today’s editions).

WORLD’S GREATEST FOSSIL: NE PLUS ULTRA

Let me ask you this: How many times have you read the phrase we now know—persuading the innocent reader to put his or her faith in the most recent findings of science? Let’s try to remember, though, that hominid discoveries in Africa have caused the family tree to be rewritten several times. Which one is right? “[A]ll the evolutionary stories I learned as a student . . . have now been ‘debunked,’” owned Derek V. Ager.8 What are the chances of today’s “breakthroughs,” with all their splashy fanfare, holding up to tomorrow’s surprises?

Let’s not be naive. Latest studies are not necessarily the best. To assume otherwise is to follow the misleading rule of progress (forever onward and upward) that Darwinism itself inculcates. For example, American paleoanthropologist John Hawks, in his weblog, imperiously dismisses earlier work, criticizing authors who use “completely obsolete anthropological information” more than fifty years out of date. Objection! More often than we may suppose, the older theorists (using their brains and immense fount of knowledge) came closer to the truth than many of their modern replacements (using supercomputers and the latest vogue in their field).

And how would Charles Darwin fare under Hawks’s reproof? Isn’t Darwin 150 years out of date? I have come to suspect the vaunted claims that new or recent studies are automatically better. Franz Weidenreich, as an example, was on the money 75 years ago, when he rejected an out-of-Africa or any other single-origin theory of man, showing instead that H. erectus was followed by mods everywhere (not just Africa). As occasionally noted in these chapters, I frankly find the work of some of the self-made old-timers (such as Weidenreich, Hooton, Dixon, and Coon) more impressive, reliable, and realistic than the smoke and drone of today’s generation of skin-deep theorists and sycophants.

But this is how it’s done: New finds are trumpeted as groundbreaking fossils, rewriting human history, seriously revolutionizing everything, and threatening to deal a major blow to previous concepts. Headline-seeking superlatives like “utterly dazzling find” and “oldest human ancestor” sound more like marketing than sober science. Feeding off the glamour of deep time, for example, Lee Berger’s Australopithecus sediba, dated to a whopping 2 myr, “changes the game.”9 Don Johanson well knew the value of “the world’s oldest humans . . . to dangle before foundations and private funders.”10

Discovered (but in fact only rediscovered) in 2003, hobbit Flo made her flashy debut as the hominid that “overturns everything”11 (utterly disregarding earlier hobbits found in the 1950s). It was the same with Kenya’s Black Skull, which was touted as “overturn[ing] all previous notions of the course of early hominid evolution,” the swashbuckling posture answered by head-shaking critics who aver it was exactly “what you would expect to find . . . it isn’t even a new fossil” (the very same type had been found back in 1967 by French diggers).12

Combine the funds-seeking diggers with the sensation-seeking press and boom—each new specimen is an electrifying challenge to orthodoxy. The script: “Our assumptions were wrong, but we now know . . .” deceptively creates the impression of a humble, open-minded, self-correcting science.

How shall I account for the difference betwixt thy arguments now and the other time?

OAHSPE, BOOK OF FRAGAPATTI 9:17

In reality, such finds are blowback—exposing the bogus theories and pseudobreakthroughs of yesterday’s news. One disgusted observer commented facetiously: “Every new fossil hominid specimen is the most important ever found . . . and is completely different from all previous ones, and probably a new genus, and therefore deserve a new name.”13

I fear that much of this date changing, this time inflation, is a form of damage control, saving evolution from the wrecking ball of hard facts. It is difficult to get a footing here. Whose dates shall we believe? Some say Neanderthal and H. sapiens split off from an (imagined) common ancestor 300 kya, but others say that happened 800 kya.14 Was there an unstated reason for setting Neanderthal farther back in time? Yes, there was: Greater age is needed for Neanderthal, to account for huge differences in physiology between his lineage and all others. In the continuing chaos of dates for Neanderthals, analysts have dated them anywhere from 150 to 353 kya. Still others give Neanderthal no more than 100,000 years on this Earth.15

Robert Braidwood dated the earliest Neanderthal to 85,000 years.16 There is no undisputed documentation of Neanderthal, some say, before 75 kya. Neanderthal is basically man of the Mousterian age, with most finds clustering around 40 to 50 kyr. He does not show up earlier than 50 kya in most parts of Europe, Russia, Siberia, and Iran17; and his tenure on Earth can be no more than 50,000 years altogether.18 His remains are found in a largely organic state (not fossilized), indicating relatively recent time.

CONTROVERSY, CONTAMINATION, AND CONFUSION

These wide and indeterminate date ranges are called scatter. Vértesszőlős Man, a problematic fellow for evolutionists, with awfully early AMH features, has been dated (scattered) anywhere from 100 kyr to 700 kyr! Petralona Man, another early European troublemaker, is dated anywhere from 70 to 700 kya19: the 70 kyr tag fits the modern aspects of his morphology, while the older date, 700 kyr, fits the primitive ones. Why even bother with such indeterminate ranges? In the case of Jebel Irhoud Man of Morocco, one method gave him 40 kyr and another gave him 125 kyr, and the older date was favored because it helped him fit the bill as a transitional species in the out-of-Africa theory. Dates changed for Africa’s Kabwe Man, too, scattered from 11 kya to 40 kya to 300 or 400 kya,20 also to better fit the out-of-Africa model.

It’s a rollercoaster ride: Java’s Ngandong Man, found in 1931, has been dated anywhere from 77 to 170 to 300 kya. But consider the largely ignored report by zoologist Emil Selenka and zoologist and paleontologist Margarethe Selenka concerning Java’s deposits of Homo erectus. Here violent eruptions of a nearby volcano and subsequent flooding changed the landscape dramatically: the Solo River actually changed its course. Those beds might have been less than 1,000 years old, for the degree of fossilization was the “result of the chemical nature of the volcanic material, not the result of vast age.”21 *81 Keith, a century ago, had cautioned that “the mineralized condition of bones does not signify much . . . [if] impregnated with iron and silica from the stratum in which they were embedded.”22

Modern man’s age has been scattered anywhere from 30 kya up to 500 kya. But don’t make any AMH too old, as that would put mods too early in the record, throwing off the whole evolutionary staircase, onward and upward. Let’s see how this works: The AMH Keilor skull from Australia was thought to be of very early (third interglacial) age, but being AMH, it was automatically reassigned to “definitely postglacial times,”23 possibly as young as 15 kyr. Before the long-dating craze began a half century ago, if they found an AMH too early in the record (as some indeed are), they simply revised the date to make him more recent. Nowadays they’re more inclined to leave the old date as is and say the whole shooting match began earlier than we thought, in the Pliocene. Deep time. But man did not exist in the Pliocene (see discussion in chapter 10).

How do you like the scatter for China’s Choukoutien Homo erectus, variously dated anywhere from 200 kyr to 750 kyr? Good thing I used a pencil (with a stout eraser) during my note gathering for these dates. How does one know which guess is correct? They will shelve any hominid at mid-Pleistocene (say, 800 kya) automatically if the morphology is H. erectus–like. This is called dating by morphology; for example, “We must regard a small brain cavity . . . as an indication of antiquity.”24 But beware: That was before the “shocking” discovery of “too young” H. erectus types like Flores’s hobbit, with her pea brain of 380 cc, who lived as recently as 12 kya. Forget antiquity.

TABLE 6.1. EXAMPLES OF DEEP-TIME GAFFES

| Item | Date Originally Assigned | Subsequent Date Assigned |
|---|---|---|
| Skeletons, United States | 38,000–70,000 BP (AAR method) | 7,000 BP*82 |
| Neanderthal, Croatia | 130,000 BP | ca. 30,000 BP†83 |
| Fontéchevade Man, France | 800,000 BP | 100,000, 70,000, or 40,000 BP |
| Galley Hill Man, UK | mid-Pleistocene, ca. 1 myr | Neolithic, less than 10,000 BP‡84 |
| Hamburg skull, Germany | 36,000 BP (carbon dated) | ca. 14,000 BP |
| KBS tuff, Africa | 220 myr (K-Ar method) | 2 to 6 mya |
| Krapina Man, Croatia | Third interglacial | Upper Paleolithic |
| Moab Man, Utah | 65 mya | Native American bones from the past few centuries |
| Olduvai Bed II | 500 kyr (dated by Louis Leakey) | 17,000 BP§85 |
| Skull 1470, Africa | 230 mya (volcanic rock test) | 1.8 myr |
| Solo River H. erectus, Java | 900,000 BP | 30,000 BP¶86 |

THE DIFFICULTIES OF ACCURATE DATING

Radiometric Dating

Radiocarbon dating (C-14) was introduced in the late 1940s and works all right for relatively recent material only: “The men who run the tests would report that they cannot date with accuracy beyond 3,000 years.”25 C-14 analysis has actually given shells of living mollusks an age of 2,300 years. One of the problems is this: the decay rate of C-14 may not have been so constant in the past, particularly if you factor in the great abundance of vegetation on the landscape in earlier times. The globe was once more tropical, with luxuriant plant life; if this is not taken into account, C-14 readings give ages that are too old. Older readings may also result from changes in the proportion of C-14 in the atmosphere, which has not always been the same. There is also the problem of contamination by natural coal, which slants carbon dates in the too-old direction. The real question is: Does organic material take on C-14 at a constant rate? The C-14 margin of error is open to debate; some say it is as high as 80 percent (up to 10 kya).26 *87
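The arithmetic behind a radiocarbon age is simple, and it shows why the assumed starting level of C-14 matters so much. The following is a minimal illustration, not any laboratory’s procedure: it assumes the conventional Libby half-life of 5,568 years and pure exponential decay, and the 10 percent shortfall in starting C-14 is a hypothetical figure chosen only to show the direction of the error.

```python
import math

# Conventional radiocarbon ages are reported with the Libby half-life
# (5,568 years); pure exponential decay is assumed throughout.
LIBBY_HALF_LIFE = 5568.0
MEAN_LIFE = LIBBY_HALF_LIFE / math.log(2)  # roughly 8,033 years


def radiocarbon_age(fraction_modern: float) -> float:
    """Apparent age (years) from the measured C-14 level as a fraction of the
    assumed starting (atmospheric) level: t = -mean_life * ln(F)."""
    if not 0.0 < fraction_modern <= 1.0:
        raise ValueError("fraction_modern must be in (0, 1]")
    return -MEAN_LIFE * math.log(fraction_modern)


def fraction_remaining(age_years: float) -> float:
    """Fraction of the original C-14 still present after age_years."""
    return math.exp(-age_years / MEAN_LIFE)


if __name__ == "__main__":
    # How little C-14 is left as samples approach the method's practical limit.
    for age in (5_000, 10_000, 40_000, 50_000):
        print(f"{age:>6} yr: {fraction_remaining(age):.4%} of original C-14")

    # Hypothetical case: the sample started with 10% less C-14 than the
    # baseline the calculation assumes, so the same measurement reads older.
    true_age = 10_000
    measured = 0.9 * fraction_remaining(true_age)
    print(f"apparent age {radiocarbon_age(measured):,.0f} yr vs true {true_age:,} yr")
```

Because the age depends on the logarithm of the measured fraction, a sample that began with less C-14 than the assumed baseline reads older than it is, and by about 50 kyr only a fraction of a percent of the original C-14 remains to be measured.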

Potassium-argon dating (K-Ar), another radiometric method, enabled geologists and archaeologists to date very old materials. First developed in the late 1940s, it wasn’t used in an archaeological setting until 1959. This new method began to give astoundingly old dates. It was an instant media sensation, attracting headlines and funding. Come up with a fossil specimen older than the record holder, and you’re a player. A 165,000-year-old shellfish dinner, for example, “pushes back [e.a.] the earliest known seafood meal by 40,000 years”27: how about that! When Richard Leakey dated his Lake Rudolf Skull 1470 to 2.8 myr, he bragged that “our past has now been pushed back at least 10,000 centuries [by this] extraordinarily important [find] . . . the oldest skull of early man yet discovered.”28 Competition among diggers, even “science wars,” has become a game of breaking records, like Ardi, who “smashed the 4 myr barrier.”29

In K-Ar dating, the key parameter is the decay of potassium-40, a radioactive isotope with a supposedly steady decay rate: apparently it takes almost 3 myr for 0.1 percent of “parent” K-40 to turn into “daughter” Ar-40. It is not the fossil itself that is dated but the potassium-bearing deposits in which it is found. However, collecting undisturbed and uncontaminated rock samples of indisputable association with the fossils is more iffy than we are led to believe. We are also relying on a constant rate of decay from potassium into argon: even though the margin of error is claimed to be less than 1 percent,30 decay rates are only statistical averages.
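For readers who want to see what the method actually computes, here is a minimal sketch of the standard K-Ar age equation. It assumes the decay constants commonly used in K-Ar work (about 0.581 × 10⁻¹⁰ per year for the branch that yields Ar-40 and 4.962 × 10⁻¹⁰ per year for the branch that yields calcium-40), and it builds in the method’s two key assumptions: no argon at the start, and no gain or loss of argon or potassium since.

```python
import math

# Assumed K-40 decay constants (per year), as conventionally used in K-Ar work.
LAMBDA_EC = 0.581e-10     # branch producing Ar-40
LAMBDA_BETA = 4.962e-10   # branch producing Ca-40
LAMBDA_TOTAL = LAMBDA_EC + LAMBDA_BETA


def k_ar_age(ar40_over_k40: float) -> float:
    """Apparent age (years) from the molar ratio of radiogenic Ar-40 to the
    K-40 present today: t = (1/lambda) * ln(1 + (lambda/lambda_ec) * Ar/K)."""
    if ar40_over_k40 < 0:
        raise ValueError("ratio must be non-negative")
    return math.log1p(LAMBDA_TOTAL / LAMBDA_EC * ar40_over_k40) / LAMBDA_TOTAL


def ar40_over_k40(age_years: float) -> float:
    """Inverse of k_ar_age: the Ar-40/K-40 ratio expected after age_years,
    assuming no initial argon and a closed system ever since."""
    return LAMBDA_EC / LAMBDA_TOTAL * math.expm1(LAMBDA_TOTAL * age_years)


if __name__ == "__main__":
    half_life = math.log(2) / LAMBDA_TOTAL
    print(f"K-40 half-life: {half_life / 1e9:.3f} billion years")
    for age in (100_000, 2_000_000, 3_000_000):
        print(f"{age:>9,} yr -> expected Ar-40/K-40 = {ar40_over_k40(age):.3e}")
```

Running the loop shows how small the radiogenic argon signal is even after millions of years, which is why trace contamination, argon loss, or argon gain weighs so heavily on the result.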

The story of K-Ar began in 1959 with Zinjanthropus boisei at East Africa’s Olduvai Gorge; at that time the University of California had just brought out the K-Ar method. Louis Leakey’s first guesstimate for Zinj’s age had been 600 kyr, which itself was looked on as extravagant, even outrageous, compared to the mere 10 kyr presumed by other workers.31 But K-Ar now dated Zinj to almost 2 myr. Quite a leap, from five digits to seven! An overnight sensation.

Let’s take a closer look: Radiometric analysis by the K-Ar method is used specifically to date lava and other volcanic rocks, despite the fact that volcanic ash is apt to be infused with much older crystals. Ash samples that are badly weathered are also useless. Consider this: The K-Ar method performs best on things 2 to 3 myr old; in fact, K-Ar cannot date rocks younger than 100 kyr, and it works reliably only on deposits older than 500 kyr. It is this method that has more than tripled man’s age and inaugurated the wayward trend toward deep-time or long dating, back-dating genus Homo into the millions of years.

Given that the K-Ar method refers to the age of volcanic soils, not to the bones themselves, it is a poor method if the bones are not securely in place, and many if not most fossils are, indeed, surface finds, kicked up from who knows where. Since argon-40 is a gas, it easily migrates in and out; potassium is also mobile. K-Ar in certain minerals will therefore show a bogus age if argon gets trapped inside; indeed, argon could have already been in the rocks before they solidified. How can we be sure of the parent-to-daughter ratio in the original sample? The delusory K-Ar method has yielded dates ranging anywhere from 160 million to 3 billion years for the same material, rocks actually formed fewer than 200 years ago!
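The effect of argon already present when a rock solidifies can be sketched with the same age equation. This is an illustration under stated assumptions, not a reanalysis of any real sample: the decay constants are the conventional ones, the rock is taken to be 200 years old, and the trapped-argon amounts are hypothetical values expressed per unit of K-40.

```python
import math

# Assumed K-40 decay constants (per year), as conventionally used in K-Ar work.
LAMBDA_EC = 0.581e-10
LAMBDA_TOTAL = 0.581e-10 + 4.962e-10


def k_ar_age(ar40_over_k40: float) -> float:
    """Apparent K-Ar age (years) from the total Ar-40/K-40 ratio measured."""
    return math.log1p(LAMBDA_TOTAL / LAMBDA_EC * ar40_over_k40) / LAMBDA_TOTAL


def apparent_age_with_inherited_argon(true_age: float, inherited_ratio: float) -> float:
    """Apparent age when argon trapped at formation (inherited_ratio, per unit
    K-40) is counted as though it had been produced by decay."""
    radiogenic = LAMBDA_EC / LAMBDA_TOTAL * math.expm1(LAMBDA_TOTAL * true_age)
    return k_ar_age(radiogenic + inherited_ratio)


if __name__ == "__main__":
    # A rock that solidified 200 years ago, with hypothetical amounts of
    # trapped argon; none of these ratios are measurements.
    for inherited in (0.0, 1e-5, 1e-3, 1e-2):
        age = apparent_age_with_inherited_argon(200, inherited)
        print(f"inherited Ar/K = {inherited:.0e}: apparent age {age:,.0f} yr")
```

Because the radiogenic argon produced in two centuries is vanishingly small, even a modest amount of inherited argon dominates the measurement, and the equation converts it into an age of many millions of years.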

First man Ardi was dated wildly at 23 myr by testing the basalt (lava deposit) closest to the fossils. They realized, of course, that was way too old for any hominid, so they sampled some other basalt a ways off and “selected” those samples that gave a comfortable age of 4.4 mya—because that would be about right in the current scheme of evolutionary dating. We find a similar scenario in connection with one very controversial Homo habilis–like specimen, a skull, for which early testing again gave a too-old date of 230 mya! But humans weren’t around then, of course, so they kept sampling volcanic rocks in the area, until settling on one that gave an age of 2.8 myr. Why? Because this age aligned with previous published studies and was assumed to be about right.

Radiometric dating, as David Pitman, Australia’s brilliant young cosmogonist, has explained (in a personal communication), makes the blithe assumption that

no (or very little) new material has been added to the earth since its initial formation. . . . But the planet has undergone periods when vast amounts of new material, both organic and inorganic, have been thrown down to the earth. Apparently, this is where our fossil fuels came from (i.e., they are not fossils at all!). . . . And the age you are measuring is greater than the length of time that the material has been on the earth. . . . It probably spent a whole bunch of time rolling around in space before it was caught in the earth’s vortex and dumped on the surface. The accuracy of radiometric dates depends on these conditions. . . . It is impossible to know which particular measurements will be accurate without first establishing the vortexian history of the fossil location.

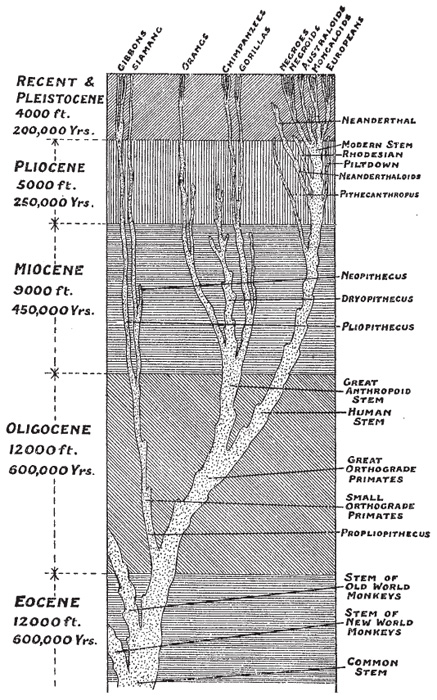

Figure 6.2. Genealogical tree (1929) shows estimated depth of deposits (left-hand column).

Our Earth is composed of material that gathered as it formed, whirled into shape by its powerful vortexian (field) currents*88; the tendency to accumulate outside (extraterrestrial) material increases the farther back we go. Nonterrestrial material could have been in space for millions of years before being pulled into the earth-forming vortex. Without factoring in the influx of very old interstellar particles, radiometric dates are distorted in the too-old direction. Are we measuring Earth’s age—or stuff that fell to the planet from dissolved stars and spheres?

As Pitman has observed,

decay rates are likely to change over time . . . [and are] highly subject to the change in atmospherean currents. Think of the meteoris belts where solid elements like iron are apparently held in solution and condense out to create meteorites. . . . At this altitude, iron would be radioactive because it would be capable of decaying into pure energy. These belts, their location and intensity, are controlled by the atmospherean currents, and these currents change over time. Therefore the decay rate of a particular sample will depend on its vortexian environment, and this environment changes greatly over time. . . . The decay rate [is] mostly tending to slow down over time. . . . Using it for dating becomes very very shaky the more distant into the past you go. . . . Yep, we just need to take a bunch of zeros off those dates! . . . Heavy elements will tend to decay faster; the degree of how much faster will get greater the further into the past we go (when the vortex’s rotation is proportionally faster). Thus there will be a direct relationship between the speed of the earth’s rotation and the rate of radioactive decay—and thus the degree of inaccuracy of radiometric dating.

Indeed, some areas of the deepest ocean are highly radioactive, suggesting a much higher rate of radioactive decay in times past.32 The quantity of cosmic rays penetrating the atmosphere may also affect the amount of C-14, research indicating that past surges of radiocarbon affected all parts of the globe. It is thought that the amount of rays varies as Earth passes through magnetic clouds; the strength of the magnetic field (which is ever decreasing) also affects the amount of rays that reach us. Baby Earth registered a fabulously turbo-charged atmosphere in ancient rocks, showing a hundred times the expected magnetism. The rocks are faithful recorders of the enormous amplitude of our planet’s original force field, that force in turn having led to bigger earthquakes and greater volcanic activity—leaving lava beds almost a mile deep in the western United States. Lava flows in the Cretaceous covered land the size of India: today the Deccan Traps still extend over 200 thousand square miles, a mile and a half thick in some places.

Figure 6.3. Vortex energy: the rotary force behind all motion; the force that driveth all things. The force is movement toward center, from external to internal, symbolized by the whirlwind.

From Molecular Dating to Genetic Analysis

When evolution turned “molecular” in the 1960s, we were told that this new science was based on proteins evolving at a known and constant rate, even though different proteins have different mutation rates. Anyway, this molecular revolution was followed, in the 1970s, by the sequencing of DNA. Just as the DNA breakthroughs of the late twentieth century revolutionized the identification, the “fingerprint,” of individuals (especially criminals), they also transformed the paleoanthropological arts, the celebrity status of DNA even threatening to steal the thunder of the good old fossil hunters.

Genetics (Mendelian), having once saved Darwinism (when it fell out of favor around the turn of the twentieth century), now made another go at it. Recent advances in molecular biology and genetics, it is now bruited about, can determine the history of species and virtually replace morphology (referring to the skeletal anatomy recovered at digs). Genetic distance can be measured, telling us when species diverged (split off), all of which is then nicely plotted on an evolutionary tree.

But outside the English-speaking cabal, there are geneticists like Maciej Giertych (Polish Academy of Sciences) who, working in tree genetics, find no biological data there relevant to evolution. Giertych says he could pursue his entire career without ever mentioning evolution. Built on supposition, this new school of population genetics assumes (1) that there is a constant rate of mutations and (2) that species have arisen by splitting off (diverging) from a parent stock, the theory blithely assuming a common ancestor, which it is supposed to confirm! All this is null and void if there has been extensive crossbreeding in the human family.

Race mixture is a very ancient phenomenon.

EARNEST HOOTON, UP FROM THE APE

Geneticists using mtDNA contend they can assess the amount of time that separates two species by measuring the accumulated mutations, the method called, appealingly, “the molecular clock.” Offsetting this confidence is the fact that mtDNA is only a small fragment of our genetic heritage. And what if variations in mtDNA are due to factors other than mutation (like hybridization)? There is also the little problem of species that have not changed, mutated, or evolved in millions of years. Do we really have an “exact” (or relevant) science here? In an intriguing interview shortly before her death, biologist Lynn Margulis called the population geneticists who have “taken over evolutionary biology . . . reductionists ad absurdum.”33
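The basic molecular-clock calculation described above fits in a few lines. This is a minimal sketch assuming a strict clock (one constant rate shared by both lineages) and a raw proportion of differing sites, with no correction for repeated mutations at the same site; the distance and rates are hypothetical numbers chosen only to show how the answer moves with the assumed rate.

```python
def divergence_time(pairwise_distance: float, rate_per_site_per_year: float) -> float:
    """Years since two lineages split under a strict clock. Both lineages
    accumulate changes independently, so T = d / (2 * r)."""
    if rate_per_site_per_year <= 0:
        raise ValueError("rate must be positive")
    if not 0.0 <= pairwise_distance < 1.0:
        raise ValueError("distance must be a proportion in [0, 1)")
    return pairwise_distance / (2.0 * rate_per_site_per_year)


if __name__ == "__main__":
    d = 0.02  # hypothetical: 2% of compared sites differ
    # Hypothetical rates; the point is how strongly T depends on the choice.
    for rate in (1e-8, 2e-8, 4e-8):
        print(f"rate {rate:.0e}/site/yr -> split {divergence_time(d, rate):,.0f} years ago")
```

Because the estimated split time is inversely proportional to the assumed rate, doubling the rate halves the date; everything hangs on a rate that has to be assumed or calibrated from elsewhere.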

Bunny Suits

Ancient DNA is easily swamped by extraneous modern DNA, which makes the search for it extremely sensitive to contamination. Workers at an excavation site in Spain have taken to wearing “clean-room” bunny suits (jumpsuit, gloves, booties, plastic face mask) to protect samples from their own DNA. Handling, or even breathing on, a sample can easily throw off results.

Current work on ancient biomolecules has researchers claiming to have isolated collagen from a 68-myr Tyrannosaurus rex. Other scientists, however, suspect that the collagen comes from bacteria rather than dinosaurs, citing similar claims, for instance, of 80-myr dinosaur DNA that actually came from a human. In any event, despite the claim to have found blood cells in some unfossilized dinosaur bone, it is doubtful that cells could last more than a few thousand years, let alone 65 myr!

Dinosaurs have been gone too long for any genetic material to remain in fossils.

BRYAN WALSH, “THE WALKING DEAD,” TIME, APRIL 15, 2013

Most recovered Neanderthal bones have been extensively contaminated with modern human DNA. Isolating these ancient genomes is a painstaking process; as a rule, bacteria contaminate fossils. Recovering genetic material from such remains is fraught with difficulty, if only because DNA itself degrades rapidly. (Protein and DNA break down so fast, it is hard to believe they could survive more than 30 kyr or so.) Svante Pääbo’s genome project was based on a leg bone from Croatia, and there were no bunny suits around when those Vindija Neanderthal bones were first collected; the remains sat in a drawer in Zagreb, their DNA unprotected, for 30 years.
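The claim that DNA breaks down too fast to last is usually framed in terms of first-order decay, in which a fixed fraction of the remaining intact molecules is lost per unit of time. The sketch below assumes exactly that model; the 1,000-year half-life is a hypothetical placeholder, not a measured value, since real rates depend heavily on temperature, fragment length, and burial conditions.

```python
def surviving_fraction(age_years: float, half_life_years: float) -> float:
    """Fraction of intact target molecules left after age_years, assuming
    simple first-order (exponential) decay with a fixed half-life."""
    if half_life_years <= 0:
        raise ValueError("half-life must be positive")
    return 0.5 ** (age_years / half_life_years)


if __name__ == "__main__":
    # Hypothetical half-life, for illustration only.
    half_life = 1_000.0
    for age in (1_000, 10_000, 30_000, 100_000):
        frac = surviving_fraction(age, half_life)
        print(f"{age:>7,} yr: {frac:.3e} of the original fragments intact")
```

Under any fixed half-life, each additional half-life halves what is left, so ages amounting to tens of half-lives leave essentially nothing to sequence.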

As molecular genetics steps into anthropology’s bailiwick, it boldly presumes to trump the paleontological arts. With DNA sequencing technology*89 upstaging morphological analysis (the bones) and claiming greater rigor, “fresh evolutionary insights do not necessarily require any fossils at all.”34 Highly specialized biochemists (who are not historians, archaeologists, anatomists, or paleoanthropologists) are then left to call the shots on the human record; and these population geneticists are saying that H. sapiens and Neanderthal did not interbreed.35 This, we know, can’t be right.

Evolutionary geneticists Svante Pääbo and Johannes Krause of Germany’s Max Planck Institute, using high-tech lab equipment and supercomputers, were the first to identify a novel Siberian hominid (Denisova) by using genetic analysis alone, without any real fossilized remains (the analysis was based on fragments of a pinky finger). Studying that precious pinky’s mtDNA, scientists determined it was “twice as distant from us as Neanderthals.” OK, but you cannot infer anything about the organism from the genes, which is to say, they have no idea what this creature looked like—its anatomy, context, ecology, or behavior. Nothing.

You don’t discover a Lucy in the molecular lab, protested one anthropologist, others agreeing that genetics can never replace the hard work of the dig. Cold weather, significantly, helps preserve ancient DNA, but, hey, most early fossils are from tropical regions where conditions for DNA survival are poor. Neanderthal DNA, we learn, is only viable for 35 kyr; and protein will not last more than 100 kyr.36 How then can this science tell us anything about creatures who roamed Earth (supposedly) millions of years ago?

Organic matter, metals and nonmetals . . . disintegrate within a few thousand years . . . a million years from now, the skeletons of Rodin, Renoir, Einstein . . . will have turned to dust, along with the coffins in which they were buried.

ROBERT CHARROUX, MAN’S UNKNOWN HISTORY

Passing the buck . . . the evolutionists resolve all their problems by pushing them over to the geneticists.

GORDON RATTRAY TAYLOR, THE GREAT EVOLUTION MYSTERY

And so molecules (and radiometrics) are now setting the time frame for human evolution, the molecular clock supposedly measuring the passage of species time, even though mutation rates are not so fixed or reliable, as shown in the work of molecular biologist John Cairns, who found that bacteria mutate in a stress-dependent way, faster when faced with an environmental challenge. Even the esteemed Ernst Mayr, considered perhaps the greatest twentieth-century evolutionary biologist, warned this eager new generation of scientists against the alleged constancy of rates.

The vaunted molecular clock uses average mutation rates, although there is “no theoretical reason why the accumulation of genetic change should be steady through time.”37 The rate of DNA mutations can fluctuate: “they fail to take account of the different rates at which mutations can accumulate.”38 Mutations can be brought on by cosmic rays and other radiation, just as X-rays can produce mutations.39 In excess, radiation “gives an array of freaks, not evolution.” We well know that radiation can increase mutations; the atomic age has made such horrible genetic effects plain.

Some scientists think supernova events have showered Earth with colossal amounts of radiation. “The explosion of supernovae has subjected the earth at least fifteen times to showers of radiation strong enough to kill most forms. . . . These bouts of high radiation could well have been a significant factor in the evolution of life,” says Lyall Watson in Supernature. David Raup, paleobiologist at Chicago’s Field Museum, predicts in The Nemesis Affair that “as we near the plane of the galaxy, various aspects of our cosmic environment change. . . . Interstellar clouds of gas and dust, and the levels of certain kinds of radiation may increase . . . [which] might produce biological effects on earth.”

And how much stock shall we place in genetic distance when chimpanzees are said to be genetically closer to human beings than to gorillas, and we humans are said to be 98.4 percent identical to chimps? Or when 95 percent of DNA is dismissed as “junk DNA”?40 Or when Neanderthal sequences come out as “somewhere between modern human and chimp sequences”? Paradoxically, one molecular biologist, John Marcus, after performing his own DNA distance comparisons, found that “the Neandertal sequence is actually further away from . . . the chimpanzee sequences than the modern human sequences are.” Humans closer to chimps than Neanderthals are? Marcus concluded: “the Neandertal is no more related to chimps than any of the humans. If anything, Neandertal is less related to chimps.”41 Huh?

Intimidating outsiders with complicated formulas and daunting nomenclature, the geneticist’s work has been duly scientized by such terms as molecular phylogenetics, allopatric populations, epistatic interactions, allelic combinations, prezygotic isolation, sympatric speciation, and amphiploids, none of which anyone outside the cabal understands, and little of which in fact pertains to humans rather than to plants and fruit flies. Does the number of bristles on fruit flies tell us anything about human evolution?

Chemical and Faunal Dating

One chemical dating technique measures the amount of nitrogen lost and fluorine gained in bones buried in a deposit. But the amount of fluorine in the bones depends on the amount of fluorine in the soil; where the soil is rich in it, buried bones can become rapidly saturated. Nor does fluorine, which yields highly variable results, accrue at a uniform rate; its absorption in volcanic areas tends to be quite erratic. Result: Bones of wildly different dates may have similar fluorine contents.

Fontéchevade Man has been loosely assigned a meaningless scatter of ages, anywhere from 40,000 to 800,000 years. But the fossil was recovered from damp, clayey soil, which makes the latter date most unlikely. The nitrogen preserved in bone, a factor used in dating, varies greatly depending on the amount of clay in the soil. England’s acclaimed Ipswich skeleton, found beneath only four feet of clay, was nevertheless assumed to antedate the last glaciation, even though “the geological evidence of antiquity was actually quite inadequate,” as noted by Le Gros Clark in The Fossil Evidence for Human Evolution; he further remarked that “even so, it was seized with avidity by those who were particularly anxious to bolster up arguments for the remote origin” of our species. (To Boule, these AMHs were “in reality barely prehistoric.”)

Concerning Au dating, Le Gros Clark went on to warn that South African deposits are mostly in the form of breccias in which stratification (observable layers) is either absent or too poorly defined to permit geological dating. The alternative was to date these finds by their associated fauna, even though South African faunal correlations for those early periods had not yet been worked out. Some of these fossil mammals put Au in association with Pliocene fauna, even though a number of the same mammals actually survived to a much later date.

Faunal dating uses animal bones to determine the age of sedimentary layers or fossils buried in those layers. This method is a touch circular: How is the geological age of rock determined? By its index fossils, meaning the fauna (animals) most characteristic of a particular stratum; but those fossils in turn are dated according to an assumed evolutionary sequence determined by the rocks. In other words, rocks are used to date fossils; fossils are used to date rocks. Trilobites, for example, have been dated to about 550 myr because they are found in Cambrian layers; how do we know it is the Cambrian? Because of the presence of trilobites (index fossil). Faunal fossil markers (animal remains) may be mistakenly labeled, say, Tertiary, when they might actually be much more recent. As an example, the fauna used to long-date Krapina Man to 130 kyr actually survived to a much later time, 30 kyr. When such “index fossils” turn out to be long lived throughout several strata (or in fact still extant), you can throw out the whole ball game. (Some extant species previously thought to have been extinct for millions of years are listed in chapter 12.) Although Australopithecus africanus was dated by faunal assemblages, “African faunal sequences are not very precise indicators,” warned William Howells; faunal dating is “notoriously capricious.”42

Paleomagnetic Dating

Paleomagnetic dating is yet another method of determining age, this one based on magnetic field reversals, in which the magnetic north pole becomes the south pole and vice versa. Such pole shifts are evidenced by the alternating zig or zag direction of magnetization in ancient rocks: as volcanic basalt (the commonest rock on Earth) cools, its iron-bearing minerals lock in the direction of the prevailing magnetic field. Solidified lava is thus magnetized like a compass needle, pointing toward whichever pole was magnetic north at the time.

It was paleomagnetic dating that came up with that extraordinarily old date for Au. sediba. But, trust me, the timing of these magnetic pole shifts is still indeterminate. Magnetic polar shifts are not the same as geographical pole shifts, the notion that Earth’s rotational axis flips or turns over in space, through either crustal slippage or movement of the entire planet. Geographical pole shifts have been investigated in John W. White’s Pole Shift and found by him to be pseudoscientific, without basis in fact.

I have researched these shifts and have never succeeded in finding any scientific consensus on the rate of these reversals. Again, it’s a question of time. To give you a taste of the discrepancies: Some say a reversal occurs once every 5,000, 7,000, or 28,000 years; others say every 100 kyr; others 200 kyr; still others 250, 500, 550, or 780 kyr, or 1 myr. The Mammoth reversal supposedly lasted from 3.1 to 3.0 mya; the Gilbert reversal from 3.6 to 3.4 mya. Alternatively, a pole shift happened 26,500 years ago.43 Or the Mungo event occurred some 35 kya; the Gothenburg event 13 kya; a “fully confirmed” field reversal 10,000 years ago44; or twelve pole flips have happened in the last 5 myr. Take your pick!

A GEOLOGICAL NIGHTMARE

Earth’s crust and ancient beds, it is well known, may be disturbed by faulting, folding, redeposited gravels, or material carried from elsewhere: newer material can get wedged into gaps in an older rock layer. When a hominid fossil is labeled intrusive, that means it has been accidentally reburied in deposits to which it does not belong. Louis Leakey’s 1-myr Oldoway (Olduvai) Man, for example, turned out to be a modern H. sapiens accidentally buried less than 20 kya in older deposits, which had been scrambled by faulting.45 Workers know Olduvai Gorge is a place that has seen recurrent faulting and deformation. Kanjera Man, another Leakey exploit in East Africa, was touted as even older than Olduvai Man and as the world’s earliest H. sapiens; it, too, turned out to be a modern human somehow buried in older sediments.

Nor are river plains a good stratigraphic context: flowing water mixes up their sediments. Yet many important fossils were recovered from river plains; Java’s famous H. erectus specimen was found right at the edge of a river. Alkaline washing, too, gives dates much older than they should be; exposure to such treatment apparently removes some of the carbon-14 necessary for accurate dating, thus inflating the apparent age of the sample. In Africa, many of the key Kenyan fossils are from dry streambeds. Turkana Boy, found in a riverbed, was long dated to 1.6 mya. In Europe, an early dated Neanderthal type, Steinheim, was found in river gravels and dated to 300 kyr.

With many geologists frankly doubting that sedimentation rates are uniform, nineteenth-century uniformitarianism has been seriously challenged. So many factors can affect the transportation of Earth materials: speed of flow, roughness of channel, temperature, type of material carried, direction and volume of flow, depth, slope, and chemicals present. And most fossil bones are indeed found in sedimentary rock.

In places like Ethiopia, where Tim White discovered what he trumpeted as “5.5-million-year-old hominids,” the setting is nonetheless a “geological nightmare. You have a patchwork quilt of different aged sediments on the surface.”46 White’s old partner in East Africa, Donald Johanson, said: “At Hadar the minerals in some of the volcanic ash layers we normally use to date fossil-bearing formations had been altered or contaminated by later geologic processes.”47

Ethiopia’s now-famous Ardi was discovered in a rainy region. Torrential downpours wash up traces of ancient stone and bone from different eras; remains as old as 5.7 myr can get mixed up with stuff as recent as 80 kyr. And that’s just the point: We will return to these dates in chapter 10, where that figure, 80 kyr, is proposed as the oldest possible date for man. Extended pluvial periods in East Africa complicate the reading of deposits; here dating is surrounded by “clouds of dust.”48

Red sandstone*90 . . . limestone, gravel conglomerates, and other formations extend over exceedingly wide geographical areas. The problem posed by these strata is that they suggest a blanketing of the planet from extraterrestrial sources. Sedimentary rocks are something more than we have been taught. . . . Our planet has been blanketed by much extraterrestrial matter in previous times.

VINE DELORIA JR., RED EARTH, WHITE LIES

Surface finds present their own share of problems. Hominid fossils found near the surface are much more likely to be contaminated with extraneous material of different origin and different age. Java’s Sangiran hominids almost all occur on the surface, making their real age uncertain. In Africa, H. habilis femurs were found right on the surface, which didn’t stop Richard Leakey from dating them to 2.6 myr. Few of the locations where important paleoanthropological discoveries have been made can thus qualify as secure contexts, and that includes the whole australopithecine family of Africa, as well as H. habilis and H. erectus, which occur on the surface or in cave deposits, the latter also notoriously hard to gauge geologically.

Most hominid fossils recovered before World War II were taken from caves. Yet dating cave finds is iffy, for they tend to get “churned” up; recent material can get sucked down in “swallow holes.” Glaciers also mixed up cave deposits. Significantly, most caves older than 150 kyr have collapsed or been flushed of deposits, yet Sterkfontein and Swartkrans and, for that matter, most other South African Au are from caves and insouciantly dated ten times older than that! “Time resolution of cave deposits is poor,” admits Richard Leakey.49 Often a jumble of material, such finds tend to be hopelessly scrambled. Most Neanderthals were found in caves, as were the hominids at Sima de los Huesos, Grimaldi, Krapina, Chapelle, Belgium, Dusseldorf, Moravia, Mt. Carmel, and Peking.

Volcanic regions are also notoriously problematic. On Java, those (surface) Sangiran hominids were found at the foot of a volcano; here, Trinil’s H. erectus, in volcanic rock, is dated to the early Pleistocene or even Pliocene (more than 2 myr). Africa’s Skull 1470 was also found in a volcanic context; the KBS tuff for 1470 gave a scatter from 500 kyr to 17 myr! While volcanic activity does enhance fossilization, the ash and pumice in such beds tend to become eroded and transported by streams and rivers.

The Olduvai Beds in Tanzania are also interspersed with volcanic material. In Ethiopia, radiometric dating of the ash layers encasing Ardi’s deposits gives Ardi an age of 4.4 myr. This date, however, has been questioned, for Ardi’s region is difficult to date radiometrically. Also dated in the millions of years, East Africa’s controversial Laetoli footprints are impressed in volcanic ash, just as Lucy herself was dated from lava flows; but lava flows may easily contain older rocks.

COSMIC CLIMATE

While evolutionists routinely turn to climate shifts and other supposed environmental challenges as the alleged trigger of natural selection and, therefore, of evolution itself, they largely overlook the vagaries of cosmic change, the cosmic climate, and its inexorable effect on terrestrial geology. Not much is known about these nonterrestrial imponderables.

Scientists have spoken of the difficulty of dating objects that have been exposed to electricity, which can distort radiometric dates as well as mutation rates. This has been shown experimentally in plant seeds and fish eggs. A high-concentration electrical field, or solar flares, can alter decay rates. In this regard, Stephen E. Robbins has warned: “When the atoms of the nucleus are excited, decay is much quicker, making things look vastly older. Cataclysms on a vast scale involve high energies that could easily alter radiometric clocks.”50

Decay rates were much faster . . . in the past.

JONATHAN SARFATI, REFUTING COMPROMISE

Blanketing is another phenomenon: extraneous material (extraterrestrial debris) falling to Earth, resulting in contamination by older cosmic material gathered up in Earth’s vortex. This interstellar dust (which NASA calls space clouds and which, according to the nebular theory, actually formed Earth) is older than Earth itself. As reported by the U.S. Geological Survey, tens of thousands of tons of interstellar dust still fall to Earth each year (some say 80 million pounds of space dust are added to Earth per annum). This cosmic dust influx, others estimate, could amount to anywhere from 10,000 to 700,000 tons per day. With so much space dust accumulated, could a 5-million-year-old creature even be dug up from the depths?

Consider some of the highly charged events in our planet’s history, as witnessed by regions of Earth like the Gobi Desert with its irradiated sands, or by Earth scars and bombardments like the ancient tektites (glassy blobs) in Java, India, Australia, and France; or the strange black stones called harras in the Arabian desert; or vitrified areas in India and Libya, so like those in America’s Death Valley and the fused stone ruins near the Gila River (remembered by the Hopi as fire from heaven). Or the melted stones at Brazil’s Sete Cidades. Or Scotland’s and Ireland’s vitrified hill forts, calcinated by extreme heat. Or Turkey’s and Iran’s fused rocks that appear blasted from above (similar to the New Mexico desert floor scarred by the first A-bomb test).

Do these represent the brimstone of high antiquity? Seventy-five thousand years ago, when Earth’s atmosphere had not quite settled down, “the gases of her low regions [were] purified to make more places for mortals. . . . Fire, brimstone,*91 iron and phosphorus fell upon the earth . . . and this shower reached into the five divisions of the earth.”51

Sometime around 22,000 years ago, “the earth . . . [was] dripping wet and cold in the ji’ayan eddies . . . [bringing] a spell of darkness.”52 And again, ca 8,000 years ago, “a’ji began to fall. The belt of meteoris gave up its stones, and showers of them rained down on the earth.”53 Aren’t thick rock layers (showing little time between layers) consistent with the rapid deposition of such sky falls?

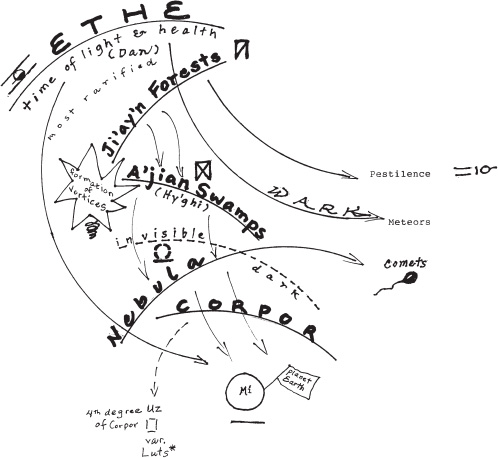

Figure 6.4. A’ji: degree of density needed to create a world; dark period on Earth sometimes accompanied by stone showers and other strange phenomena. A’ji signifies interstellar fields of greater densities and different properties.

Contrary to the uniformitarian view, so essential to evolutionism, early Earth and past processes cannot be judged altogether by present ones. “Extraterrestrial forces periodically disrupt the normal course of life. . . . The episodic course of natural history [redefines] the uniformitarianism of Hutton and Lyell . . . looking beyond the planet.”54 Thomas Henry Huxley, in fact, thought the remote period saw Earth passing through physical and chemical conditions “which it can no more see again than a man can recall his infancy.”55 His good friend Charles Darwin had to acknowledge Huxley’s and Lord Kelvin’s view that early Earth was subject to more rapid and violent changes. Indeed, Darwin deleted his section on steady sedimentation as a reliable chronometer from the 1868 edition of Origin after contemplating Lord Kelvin’s work, including Kelvin’s much younger age for Earth (see chapter 10).

Thousands of feet of sediment have buried the coral reefs, but not always in steady increments. Certain (polystrate) fossil trees seem to have been laid down rapidly, and there are signs of other episodes of super-sedimentation: consider the times of “Luts wherein there falls on a planet condensed earthy substances, such as clay, stones, ashes, and disseminated molten metals, in such great quantities that it can be compared to snowstorms, piling up corporeal substance on the earth to a depth of many feet, and in drifts up to hundreds of feet. Luts was . . . a time of destruction.”56 Around 12 kya, “there came great darkness on the earth, with falling ashes and heat and fevers.”57

Figure 6.5. Luts: seasons of the firmament. Luts is the opposite of dan (light). It is also the brimstone of biblical fame. “Great cities [were] . . . covered up by falling nebulae” (Oahspe, Book of Sethantes 9.13).

The Finnish Kalevala recalls hailstones of iron that fell from the sky. Before our planet stabilized, long periods of darkness, luts, meteorites, stone showers, “star oil,” and other destructive sky falls assailed Earth, lasting sometimes for hundreds of years. The quantity and quality of such precipitations to Earth have varied greatly. The substance of a’ji and falling nebulae may be so fine that it is invisible to the eye; nevertheless, it is capable of building up in very short periods. “Mortals did not see the a’ji; but they saw their cities and temples sinking, as it were, into the ground; yet in truth they were not sinking, but were covered by the a’ji falling and condensing.”58

BIG POPULATIONS AND EARLY CIVILIZATIONS

[A]ll the growing body of evidence for “art” before 40,000 years ago is simply dismissed and ignored.

PAUL BAHN, NATURE MAGAZINE

Ironically, the flip side of science’s extravagant long dating is the stubborn refusal to recognize any sign of civilization earlier than, say, 6 or 7 kya. The same intellectual establishment that has overestimated man’s time on Earth (by millions of years) turns around and underestimates man’s achievements by at least 40,000 years.

Similarly, there is the fixed idea that human populations in the Pleistocene were small. This belief reflects academia’s wholesale dismissal of lost races and extinct civilizations, a dismissal that, sooner or later, will blow up in its face.

A World Filled with People

Spencer Wells, a population geneticist and molecular anthropologist who collected DNA samples from (living) people all over the world to trace the roots of human history, states that his data reveal that “as few as 2,000 people were alive some 70,000 years ago.”59 This outrageous claim is perhaps on a par with Brian Fagan’s assertion that mankind became almost extinct 73,000 years ago due to a Sumatran volcanic eruption: “there were very few of them indeed.”60

The geneticists’ assumptions concerning human populations may be off target. The problem here is that each population has a separate demographic history, which invalidates the use of mtDNA to clock past events in any standard way. Population size alone can throw off its accuracy: for example, if a population grows more in one region than in another, that growth can produce greater (genetic) diversity; contrary to the usual claim, the more diverse population would not necessarily be older. That circumstance queers the whole genetic distance premise.

Banish the thought that primitive populations were always sparse.

WILLIAM CORLISS, THE UNEXPLAINED

Small population? Why, man filled Earth, at least according to Genesis 1:28, 6:1, and 6:11, as well as chapters 10 and 11. Ironically, it was Malthus’s very idea of too-large populations that inspired Darwin to postulate fierce competition and survival only of the fittest. Despite this, Darwin did indeed embrace small populations, if only because his model of speciation required small isolated groups in order for changes to take hold.

The world hath been peopled over many times and many times laid desolate.

OAHSPE, BOOK OF APOLLO 2:7

Peak populations followed by swift collapse have been well documented by British historian Arnold Toynbee and more recently by Jared Diamond. There were, to give one example, up to fourteen million people in Central Peten (classic Mayan) civilization, reduced to a mere thirty thousand by the sixteenth century. In tribal legend, too, the people of Melanesia and Vanuatu actually say the mortality of men (the origin of death) was due to overcrowding on Earth. Likewise does Vietnamese legend speak of primordial overcrowding that got so bad the poor lizard could not go about without someone stepping on its tail. Sources such as Babylonian and Persian creation cycles give overpopulation as the reason for the flood, just as the Greek Zeus planned a war to reduce population.

The Oahspe chronicles recount that the Ihins alone once “covered over the whole earth, more than a thousand million of them.”61 Worldwide population counts embedded in these scriptural histories may be summarized as follows.62

TABLE 6.2. WORLDWIDE POPULATION COUNTS

| Years Ago | Population |
| --- | --- |
| 70,000 | Over 2 billion |
| 67,000 | Almost 4 billion |
| 63,000 | 4.8 billion |
| 60,000 | 6.4 billion |
| 57,000 | 8 billion |
| 53,000 | 9 billion |
| 50,000 | 9.4 billion |
| 41,000 | 10.8 billion |

While William Whiston, an eighteenth-century Cambridge theorist, correctly, I think, estimated the preflood population at more than 8 billion, paleontologists today guess at only a few million people or possibly as few as 1.3 million humans ca 50 kya,63 at which time there were supposedly only 15,000 to 20,000 Neanderthals in Europe and Eurasia: “tiny numbers of people,”64 or as Erik Trinkaus put it: “There were very few people on the landscape.”65

Around this time (Aurignacian), however, a great accumulation of Cro-Magnon “cultural debris”66 speaks of quite large populations in Europe as well as a “very large population in Swaziland” (35 kya).67 All the continents and islands of Earth were inhabited. There was no wilderness, for mortals were prolific, many of the mothers bringing forth two score sons and daughters, and from two to four at a birth.*92

The inhabitants of the Earth were before the Flood vastly more numerous than the present Earth.

THOMAS BURNET, SACRED THEORY OF THE EARTH

There were also great cities in all the divisions of Earth before the flood, but all were cast down. Later, after the flood, in the time of Apollo (ca 18 kya), the Ihins in America alone numbered 4 million souls; and at that time there were in the world hundreds of millions of Ihuans and ground people.68 Yet, for this period, the Mesolithic, paleontologists put a mere 10 million people on Earth, or as few as 5 million.69

Twenty Thousand Years of Civilization

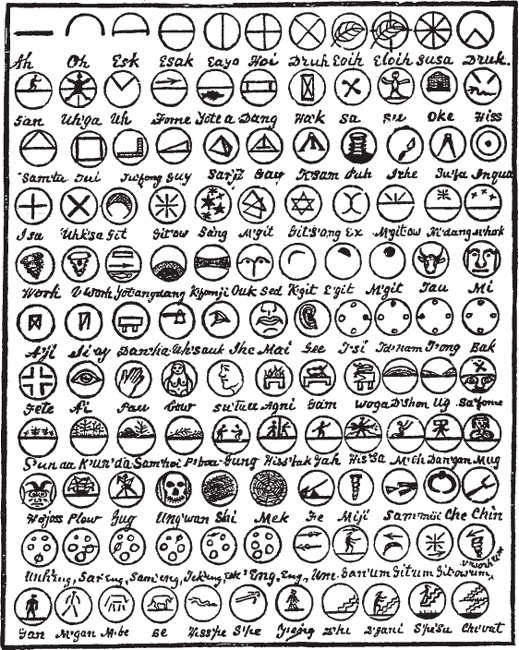

If human beings didn’t really put together an advanced society until well into the Holocene, why does the Paleolithic give us cities tens of thousands of years ago? Freeze-frame 70 kya: “Mortals had given up the . . . [wilderness] and come to live in villages and cities.”70 Oahspe tells us that around this time the first written language in the world (Semoin) was developed, with characters engraved and copies brought to all the cities in the world (see figure 6.6).71

Figure 6.6. Semoin tablet from Oahspe.

Independent researchers have discovered that cultured AMHs coexisted with archaic types: “parallel to the hunter gatherer societies . . . a higher level of civilization also existed. . . . Humans more than 20,000 years ago had precise knowledge of major star coordinates. . . . The Egyptian calendar’s starting date . . . is within 400 years of the oldest Mayan date, recorded as 51,611 years ago on the Chincultic ceramic disk.”72 *93 Indeed, Martianus Capella stated that the Egyptians had secretly studied the stars for 40,000 years before revealing their knowledge; Diogenes Laertius also dated Egyptian astronomy to 48,863 years before Alexander the Great.

The Babylonian priest Kidinnu, about 15,000 years ago, was an astronomer who knew the facts relating to the yearly movement of the sun and moon, this science also predicting lunar eclipses with precision. North of Babylon (and possibly its mother culture), Gobekli Tepe, with its ancient observatory (the site also a ritual center, a sanctuary), is dated to 14,000 BP. This recently discovered star chamber in the highlands of Old Turkey, with finely carved reliefs on massive columns (five meters high), is aligned north–south (as is the much later Great Pyramid of Egypt), betraying a precise knowledge of geodesy 7,000 years earlier than the presumed beginning of exact science. Similar temples of the same age are being explored at Karahantepe, Sefertepe, and Hamzantepe, all antedating the Neolithic.73

The first period of civilization brought navigation, printed books, schools, astronomy, and agriculture.74 Agriculture and other arts may go back as far as 45 kya: “Her people have tilled all the soil of the earth . . . feeding great centers with hundreds of thousands of inhabitants in all the five divisions of the earth.”75 Pottery fragments found in Belgium in Mousterian layers are dated to 50 kyr, and here some of the Spy II specimens show AMH traits.76 The ceramic arts (usually associated with sedentary agriculturalists) are in evidence in the Czech Republic (Moravia) as well, at the 29 kyr site of Dolni Vestonice, whose people also produced carvings, engravings, and portraiture. “Explain Dolni Vestonice, and you explain humanity.”77 This extensive settlement, boasting dwellings up to fifty by thirty feet, was a thriving town long before the Holocene, even though its ceramics (fired clay) and kilns are not supposed to appear until the agricultural revolution of the Neolithic, 20 kyr later. Archaeologists have also uncovered 28 kyr agriculture (evidence of cultivated grains and related tools) in the Solomon Islands.78

Twenty thousand years ago, in a short-lived golden age, there were thousands of cities with great canal works, but all were destroyed by the time of Apollo.79 However, in time, they built up again, and by 12 kya thousands of cities thrived in the lands of Ham (Africa) and Guatama (America).80 In America, it has been estimated that the great mounds along the lower Mississippi had “a population as numerous as . . . the Nile or the Euphrates. . . . Cities similar to those of ancient Mexico, of several hundred thousand souls, have existed in this country.”81 Man’s existence in America, we realize, has been steadily pushed back from 6 kya to 10, 20, 30, and even 40 kya (see more on this in appendix F). Louis Leakey, in his New World foray, ignored conventional chronologies and dated American material culture to 48 kya.

Even if archaeologists say the first cities in the world came about 4500 BP (and strictly in the Old World), a lost horizon lies buried deep in the hidden earth: “The Ihins . . . built mounds of wood and earth . . . hundreds and thousands of cities and mounds built they.”82 Nor should we dismiss records of such lost cultures as known to the Hindus, Tibetans, Persians, Chinese, Polynesians, Maya, Zimbabweans—each with traditions of previous “worlds” and every one of them waved off, discredited, or simply ignored by the same experts who promote the fable of 5-myr man! Proceed cautiously within their precincts. Therein lies wizardry.

If our dating is wrong, “we might,” mused Robert Schoch, “be forced to not only rethink our science, but to rethink our history as well. . . . The stakes could not be higher.”83