Areas and Networks

Psyche Loui and Emily Przysinda

Introduction

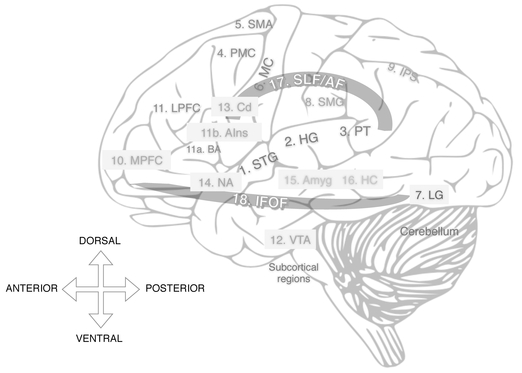

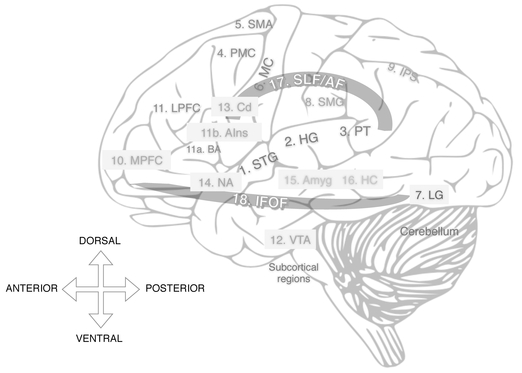

Scientists and philosophers alike have long asked questions about the cerebral localization of human faculties such as language and music. In recent years, however, human cognitive neuroscience has shifted from “blobology” (a dogmatic focus on identifying individual brain areas that subserve specific cognitive functions) to a more network-based approach. This sea change is aided by the development of sophisticated tools for sensing and seeing the brain, as well as more mature theories with which neuroscientists think about the relationship between brain and behavior. The newly developed tools include magnetic resonance imaging (MRI) and its many uses including functional (fMRI) as well as structural imaging, electroencephalography (EEG) and magnetoencephalography (MEG), and brain stimulation techniques (transcranial magnetic stimulation, or TMS, and transcranial direct current stimulation, or tDCS) with which it is possible to test the causal roles of specific targeted brain areas. Here, we review representative studies that use music as a domain for understanding specific brain regions, as well as studies that identify widespread networks of regions that enable specific aspects of musical experience. General anatomical locations of brain regions that will be highlighted are shown in Figure 2.1 below. For a more comprehensive review of brain anatomy including all its structures and functions, the reader is directed to more general cognitive neuroscience sources such as Purves et al. (2013). In this chapter, we focus on brain areas and networks as they relate to specific aspects of musical experience.

Two main focus points may serve as guideposts for this chapter’s wide-ranging discussions: First, there is no single region for music, as there is no single musical experience: Distributed areas of the brain form networks to give rise to different aspects of musical experience. Second, music offers an appropriate test case for existing hypotheses about how brain areas and networks enable human behavior.

Figure 2.1 Anatomical locations of some grey matter regions involved in music perception and cognition. Shaded grey regions represent mesial structures, i.e., areas that are seen in cross-section rather than in the present surface view of the brain. Shaded orange regions represent white matter pathways. Colors of text represent general areas of the brain: Blue = temporal lobe; red = frontal lobe; brown = occipital lobe; green = parietal lobe; pink and violet = subcortical structures. See insert for color figure.

1. STG: Superior temporal gyrus

2. HG: Heschl’s gyrus

3. PT: Planum temporale

4. PMC: Premotor cortex

5. SMA: Supplementary motor area

6. MC: Motor cortex

7. LG: Lingual gyrus

8. SMG: Supramarginal gyrus

9. IPS: Intraparietal sulcus

10. MPFC: Medial prefrontal cortex

11. LPFC: Lateral prefrontal cortex

a. BA: Broca’s area

b. AIns: Anterior insula

12. VTA: Ventral tegmental area

13. Cd: Caudate

14. NA: Nucleus accumbens

15. Amyg: Amygdala

16. HC: Hippocampus

17. SLF/AF: Superior Longitudinal Fasciculus/Arcuate Fasciculus

18. IFOF: Inferior Frontal-Occipital Fasciculus

Understanding Areas and Networks

Inferring structure and function in the human brain is a science that dates back centuries. Renaissance scholars such as Leonardo da Vinci believed that the soul was contained in the ventricles of the brain, whereas in the 1600s, Descartes believed that the soul was contained in the pineal gland (Purves et al., 2013). More systematic study of brain-behavior relationships comes from neuropsychological findings from the nineteenth century onwards, where patients with stroke, tumor, and/or traumatic brain injury presented clinically with unique behavioral symptoms. Perhaps the best known of these cases was Monsieur LeBorgne, neurologist Paul Broca’s famous patient “Tan,” who, following a stroke-induced lesion to the left frontal lobe, suffered a complete loss of speech production other than the single syllable “Tan,” but was nevertheless able to understand spoken sentences and was also able to sing. This paradoxical dissociation between music (singing ability) and language (spoken sentences) was a major step towards a fundamental postulate of cognitive neuroscience: that specific brain areas give rise to specific mental functions such as language. Today, neuropsychological approaches still offer important insights on how specific brain areas may give rise to behaviors, by relating the presence of lesions in specific areas of the brain to disruptions in behavioral function.

At a fundamental level, neuroscience is based on the neuron doctrine: The idea that individual neurons communicate with each other to give rise to all behavior. Cell bodies of neurons and their supporting cells (glia) make up regions of grey matter, which perform many of the computations to enable neuronal signaling. In contrast to grey matter, white matter generally consists of myelin-sheathed axonal connections between neuronal cell bodies. Bundles of these axonal connections together constitute tracts and fasciculi, which are major white matter pathways that connect networks of regions in the brain, thus enabling neuronal communication.

Following the neuron doctrine, technological advances have enabled the sensing of the structure and activity of large groups of neurons, thus revolutionizing our understanding of the brain. Notably, the cognitive neuroscience of music has been facilitated by the invention in the 1920s of electroencephalography (EEG) from the recording of scalp electrical potentials, as well as the discovery of nuclear magnetic resonance, which first enabled structural and then functional magnetic resonance imaging (fMRI). EEG and fMRI are complementary methods that provide different types of information about the function of the brain. Since EEG captures the electrical fluctuations of large groups of neurons as they occur, it is a highly time-sensitive tool; however, with EEG we can only obtain readings from the surface of the head, which leads to less spatial sensitivity (e.g. less information about functions of structures deep within the brain). In contrast, fMRI uses changes in the oxygenation level of blood to provide information about where (as opposed to when) changes occur in the brain. This gives us higher spatial resolution, but poorer time resolution (due to the relatively slow process of blood flow changes as opposed to direct neuronal activity). Magnetoencephalography (MEG), which uses magnetic flux sensors to detect fluctuations of neural activity, is also increasingly a tool of choice, as it provides a reasonable tradeoff between its abilities to detect where and when changes occur in the brain.

In addition to functional neuroimaging, structural neuroimaging includes different methods of acquiring and analyzing MRI data: These methods are rapidly being developed to enable stronger inferences on brain structure and function and their relationship with behavior. One possible limitation of these techniques, however, is their reliance on correlations between brain and behavior, rather than causal claims, such as one that identifies a certain brain area as being necessary and sufficient for certain brain functions. Lesion methods (as mentioned above) and even open-brain recordings may provide more direct information about causality, but they are invasive for subjects and thus, for ethical reasons, they can only be naturally occurring or produced as a result of medical need (such as in open-brain recordings in patients undergoing brain surgery for epilepsy). Thus, in addition to being invasive for subjects, these studies also pose scientific difficulties, as they are difficult to control in an experimental setting.

To circumvent these difficulties, noninvasive brain-stimulation methods enable hypothesis testing through the creation of virtual (temporary) lesions in targeted regions of the brain. These methods include transcranial direct current stimulation (tDCS) and transcranial magnetic stimulation (TMS). tDCS stimulates specific regions of the brain by applying a weak electrical current through electrodes placed on the scalp over targeted brain regions. TMS stimulates the brain by inducing electrical current as a result of magnetic pulses that are applied via a magnetic coil placed over the head. Due to physical constraints of magnetic and electrical forces, TMS applies more focused stimulation (i.e. more targeted over specific areas) compared to tDCS; however, TMS can be mildly uncomfortable, and the coil emits sounds, which might add confounds to auditory studies. Despite these tradeoffs, TMS and tDCS both allow carefully controlled studies that can be used to gain a deeper understanding of specific roles of brain areas.

Today, these neuroimaging, neuropsychology, and brain-stimulation techniques are often used in combination, and novel techniques are rapidly being developed not only to localize specific brain functions, but also to understand the relationships and connections between specific brain areas and mental functions. Networks of brain areas give rise to all brain functions: from primary senses such as audition and vision, to motor functions, to association networks such as multisensory integration and spatial navigation, and to networks responsible for higher-level cognitive functions such as attention, working memory, and learning, all of which are required for intact musical functioning.

Auditory Areas

Although much of the neuroimaging work in music and the brain focuses on the level of the auditory cortex and beyond, the pathway that sounds take from the air to the brain clearly includes many subcortical way stations. For a more comprehensive overview of the subcortical pathway that sound takes from the air to the brain, the reader is referred to the preceding chapter in this volume. Hereafter, we focus on responsiveness to musical sounds from the level of the auditory cortex and beyond.

Once auditory input reaches the primary auditory cortex, the pathways taken by neural impulses are relatively variable and involve distributed brain areas throughout the cortex. Very generally, cortical auditory processing begins at “core” areas and progresses towards “belt” and “parabelt” areas, which correspond to primary and secondary auditory cortices. These structures are collectively located in the superior temporal lobe. The primary auditory cortex is thought to be located in Heschl’s gyrus, part of the superior temporal lobe. Posterior to Heschl’s gyrus lie the superior temporal plane (planum temporale) and the rest of the superior temporal gyrus which, together with the upper bank of the superior temporal sulcus, constitute parts of the secondary auditory cortex.

A computational model derived from fMRI data suggests that the planum temporale may function as a computational hub that extracts spectrotemporal information to be routed to higher cortical areas for further processing (Griffiths & Warren, 2002). Anatomical MRIs have shown that the planum temporale is larger in the left hemisphere than in the right, and that this leftward asymmetry is more pronounced among people with absolute pitch (AP) (Schlaug, Jancke, Huang, & Steinmetz, 1995). As AP entails superior categorization ability, these findings suggest that the planum temporale may extract pitch information for further categorization. More evidence for this role of the planum temporale comes from another study on people with AP, using diffusion tensor imaging (DTI) and diffusion tensor tractography, which are specialized MRI techniques for detecting white matter differences (Loui, Li, Hohmann, & Schlaug, 2011). AP possessors were shown, relative to non-AP possessors, to have higher volume of white matter connectivity from the left posterior superior temporal gyrus (STG) to the middle temporal gyrus, a region known to be involved in categorical perception. The posterior STG was also observed to be more functionally connected to the motor and emotional systems in the brain among AP possessors, a finding which again highlights the role of secondary auditory areas in sound extraction and categorization (Loui, Zamm, & Schlaug, 2012).

Pathways Beyond Auditory Areas

Perisylvian Network

Beyond the auditory cortices, musical sounds activate distributed grey matter throughout the brain. Researchers have proposed various functional networks or pathways beyond the level of the primary auditory cortex. These functional networks subserve language and generalized auditory processing as well as music. Language researchers describe a dual-stream pathway in speech and language processing. The two streams are dorsal and ventral: A dorsal stream projects from the superior temporal lobe (superior temporal gyrus and superior temporal sulcus) via the parietal lobe towards the inferior frontal gyrus and the premotor areas. In contrast, a ventral stream extends from the same superior temporal structures ventrally towards the inferior portions of the temporal lobe. The dorsal stream is involved in articulation and sensorimotor components of speech, and the ventral stream is implicated in lexical and conceptual representations (Hickok & Poeppel, 2007). Similar regions are also activated in musical tasks such as singing. Auditory researchers have also posited a ventral vs. dorsal distinction for the processing of complex sounds that involves most of the same brain areas, with the ventral stream allowing for auditory object recognition, while the dorsal stream enables sensorimotor integration (Rauschecker, 2012).

Although language, music, and auditory processing are ostensibly different neural functions, their underlying brain networks all share many overlapping areas in the brain (the chapter by Besson and colleagues in this volume discusses this matter from a functional as well as neurological perspective). Such overlapping areas include, but are not limited to, a perisylvian network: regions surrounding the sylvian fissure, which separates the temporal lobe from the parietal and frontal lobes. Notably, the superior longitudinal fasciculus is a major white matter pathway that includes the arcuate fasciculus, an arc-like structure that connects the frontal lobe and the temporal lobe. The importance of the arcuate fasciculus for language has been known since the nineteenth century, when it was observed that patients with conduction aphasia, who suffer lesions in the arcuate fasciculus, have trouble repeating sentences that are spoken to them. Due to its positioning as a connective pathway between auditory regions in the temporal lobe and motor regions in the frontal lobe, the arcuate fasciculus is hypothesized to play a key role in mapping sounds to motor movements (such as relating pitch to laryngeal tension). This auditory-motor mapping is a crucial process in speech and music.

To assess the relationship between auditory-motor connectivity and musical skills, we used DTI to compare people with tone-deafness (also known as congenital amusia, see Tillmann et al., this volume) against matched controls. Tone-deaf individuals showed decreased volume in the arcuate fasciculus. Furthermore, the volumes of specific branches of the arcuate fasciculus are correlated with pitch perception and production abilities: People with more accurate pitch discrimination ability had larger branches in their arcuate fasciculus (Loui, Alsop, & Schlaug, 2009). These findings dovetail with fMRI and structural MRI studies (Albouy et al., 2013) that showed grey matter differences in the temporal and frontal lobe areas, endpoints of the arcuate fasciculus, among tone-deaf individuals.

Further studies on the arcuate fasciculus also show larger volume in the left arcuate among singers compared to non-vocal instrumentalists, as well as larger right arcuate volume among singers as well as instrumentalists compared to non-musician controls (Halwani, Loui, Rüber, & Schlaug, 2011). In a combined behavioral and DTI study, participants were taught a novel, unfamiliar musical system, with pitch categories that differed from all existing musical scales. Learning success was significantly correlated with white matter in the turning point of the arcuate fasciculus in the right hemisphere: Better learners had higher connectivity in this white matter pathway (Loui, Li, & Schlaug, 2011). Taken together, these data patterns highlight individual differences in musical behaviors that are reflected by individual differences in brain anatomy, and these individual brain–behavior variations may fall along continua rather than belonging in discrete categories such as would arise from a rigid criterion defining tone-deafness. Rather than categorizing individuals into groups such as “tone-deaf” vs. “normal,” it may be fruitful to identify continua of behavioral variations, as we see with many other kinds of neurobehavioral conditions.

Areas of the Motor System

A major hub of the dorsal pathway in the perisylvian network is the premotor cortex, a region dorsal to Broca’s area. The premotor cortex is involved in action selection, such as in the identification and sequencing of rhythmic sequences (Janata & Grafton, 2003). It is also the site of the putative human mirror neuron system and is shown to respond to acquired actions such as learned melodies (Lahav, Saltzman, & Schlaug, 2007).

Posterior to the premotor areas are the motor cortices of the brain, which, in addition to enabling motor movements, are part of the perception-action network that is central to musical behaviors. Listening to metric rhythms is shown to activate motor cortices as well as the basal ganglia (Grahn & Brett, 2007). These studies, reviewed elsewhere in this volume (Henry & Grahn, this volume), together suggest that rhythm and timing prepare the motor system for response and action, which may explain why humans are so compelled to clap along with or move to the beat.

In addition to functional activity during rhythm perception, areas of the motor system are also sensitive to structural changes as a result of musical training. Bengtsson et al. (2005) examined concert pianists via DTI and showed that white matter integrity in the motor system was associated with childhood practicing. This and other findings that relate musical training to microstructural changes are now being observed across many areas and networks of the brain. Such findings, reviewed elsewhere in this volume (see especially Gordon & Magne, this volume), provide increasing support for structural neuroplasticity: the crucial notion that brain structure changes as a result of experience.

Multisensory Perception

Although the auditory modality is the primary pathway through which music is processed, much of musical experience includes more than auditory perception. The concurrent influence of visual information is verified by many behavioral studies in music perception and cognition (for a more thorough overview, refer to chapters by Eitan and by Tan, this volume). The underlying brain networks that subserve audiovisual integration appear to differ between musicians and nonmusicians: Network analysis of MEG data suggested that musicians rely more on auditory cues compared to nonmusicians (who rely more on visual cues) during the integration of audiovisual information (Paraskevopoulos, Kraneburg, Herholz, Bamidis, & Pantev, 2015), suggesting that long-term musical training can affect visual as well as auditory processing.

Enhanced Multisensory Perception

While audiovisual integration is ubiquitous in everyday functioning, in the unique population of people with synesthesia, audiovisual or other cross-sensory experiences are enhanced. Synesthesia, the fusion of the senses, is a neurological phenomenon in which experienced sensations trigger concurrent sensations in a different modality. For instance, people with music–color synesthesia report seeing colors when they hear musical sounds, and this can be both bothersome to synesthetes and a source of artistic inspiration (Cytowic & Eagleman, 2009). Although the general reported incidence of synesthesia is between 1 and 4% of the population, it is reported to be eight times higher among people in the creative industries and possibly as frequent as 10% or higher for the visualization of overlearned sequences (such as seeing number lines or days of the week as being laid out in a specific manner). In a DTI study comparing music–color synesthetes and matched controls, a major white matter pathway known as the inferior fronto-occipital fasciculus (IFOF) was found to be higher in fractional anisotropy (an index of white matter integrity) among the music–color synesthetes. Furthermore, the right hemisphere IFOF was correlated with the subjective report of consistency between musical stimuli (pitches, timbres, and chords) and color associations (Zamm, Schlaug, Eagleman, & Loui, 2013). As the IFOF connects the frontal lobe with areas important for visual perception in the occipital lobe, via auditory and categorization areas in the temporal lobe, this white matter pathway may act as a crucial highway of connectivity that holds together the network of areas necessary for multisensory integration.
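For readers unfamiliar with fractional anisotropy, the following is a minimal sketch of how the index is conventionally computed from the three eigenvalues of a diffusion tensor. It uses the standard textbook definition and is not taken from the studies cited here; the function name and the eigenvalues are hypothetical and chosen only for illustration.

```python
import math


def fractional_anisotropy(l1, l2, l3):
    """Standard FA definition from the three diffusion tensor eigenvalues.

    Returns 0 when diffusion is equal in all directions and approaches 1
    when diffusion occurs along a single axis.
    """
    mean = (l1 + l2 + l3) / 3.0
    # Sum of squared deviations of the eigenvalues from their mean
    num = (l1 - mean) ** 2 + (l2 - mean) ** 2 + (l3 - mean) ** 2
    # Sum of squared eigenvalues
    den = l1 ** 2 + l2 ** 2 + l3 ** 2
    if den == 0:
        return 0.0  # no measurable diffusion; FA is undefined, report 0
    return math.sqrt(1.5 * num / den)


# Hypothetical eigenvalues, in units of 1e-3 mm^2/s (illustration only)
isotropic = fractional_anisotropy(1.0, 1.0, 1.0)   # equal in all directions
fiber_like = fractional_anisotropy(1.7, 0.3, 0.3)  # mostly along one axis
print(isotropic, round(fiber_like, 2))
```

With these illustrative values, the isotropic case gives an FA of 0, and the fiber-like case gives roughly 0.8, which is the sense in which higher FA along a pathway such as the IFOF is read as more directionally coherent white matter.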

Occipital Lobe

The occipital lobe has long been known to be the seat of vision. Although the organization of visual structures in the occipital lobe is complex, it is sensitive to plastic changes as a function of experience from other senses. Many studies on blind subjects show that the occipital lobe may be taken over by auditory processing when deprived of visual experience (Voss & Zatorre, 2012). Occipital lobe regions are also activated during musical imagery (Herholz, Halpern, & Zatorre, 2012; for more on musical imagery, see the next chapter in this volume; for more on cross modal connections, see Eitan, this volume). Studies with special populations are also informative: During a pitch memory task in an fMRI study, a blind AP musician activated visual association areas in the occipital lobe, whereas sighted AP musicians used more typical auditory regions in the superior temporal lobe (Gaab et al., 2006). Also interestingly, when music–color synesthetes listen to music, they activate color-sensitive areas in the lingual gyrus of the occipital lobe, in addition to classic auditory temporal lobe structures (Loui et al., 2012). These findings converge to show that areas classically viewed as visual cortex in the occipital lobe can be sensitive to nonvisual aspects of musical experience as well.

Learning and Memory

Learning of and memory for music involve perceptual, motor, affective, and even autobiographical memory processes. The interaction of these processes poses a challenge for neuroscientists who must choose between different behavioral testing methods that may present conflicting results. For example, patients who have had a right temporal lobectomy were impaired in their retrieval of melodies, suggesting that the right temporal lobe stores long-term memories for musical melody (Samson & Zatorre, 1992). On the other hand, conflicting evidence comes from a report of a professional musician with severe amnesia (anterograde, interfering with new memory formation, and retrograde, involving loss of previous memories) who had lesions in most of the right temporal lobe, as well as large portions of the left temporal lobe and parts of left frontal and insular cortices, but who paradoxically had preserved memory for music (Finke et al., 2012). Furthermore, the left temporal pole (the most anterior portion of the temporal lobe) is also implicated in melodic identification, as patients with left temporal pole damage performed worse than a brain-damaged control group on melody naming but not on melody recognition (Belfi & Tranel, 2014). In contrast to neuropsychological studies, fMRI studies show a more distributed network for musical learning and memory, including bilateral superior temporal gyrus, as well as superior parietal regions and the supramarginal gyrus in the parietal lobe (Gaab, Gaser, Zaehle, Jancke, & Schlaug, 2003). Together these studies remind us that in the study of learning and memory, a direct mapping between musical materials and their underlying brain substrates will probably remain elusive. Instead, results depend on the specific research question of each study, and the stimuli used. Results will also depend on task differences such as naming versus recognition, perception versus production, and task-dependent involvement of related cognitive processes such as attention and mental imagery. Nevertheless, here we review studies that very generally converge upon a frontoparietal network for learning and memory. Specifically, the supramarginal gyrus and intraparietal sulcus in the parietal lobe and the lateral prefrontal and medial prefrontal cortices in the frontal lobe show fairly consistent activity in various studies.

Parietal Lobe

Classic parietal lobe functions include sensory integration, memory retrieval, and mental rotation. In the domain of music, these mental functions translate to cognitive and perceptual manipulations of musical materials, such as learning and memory of sequences of pitches and rhythms. The supramarginal gyrus, near the temporal-parietal junction, is a region within the parietal lobe that has appeared in several studies on learning and memory. Activity in the supramarginal gyrus was significantly associated with memory performance, especially in musically trained subjects (Gaab et al., 2003). Other fMRI studies have refined our understanding of the role of the parietal lobe in music to cognitive operations on abstract musical materials. In one fMRI study, participants were given an encoding and retrieval task of unfamiliar microtonal compositions (i.e., using intervals smaller than those found in conventional Western music), and the right parietal lobe was activated during retrieval of these newly-encoded musical stimuli (Lee et al., 2011). Another fMRI study showed that the intraparietal sulcus, a parietal region dorsal to the supramarginal gyrus, was involved in melody transposition (Foster & Zatorre, 2010). White matter underlying the supramarginal gyrus was also correlated with individual differences in learning a grammar of musical pitches (Loui et al., 2011b). Finally, transcranial direct current stimulation (tDCS) and repetitive transcranial magnetic stimulation (rTMS) over the supramarginal gyrus modulated performance on pitch memory tasks (Schaal, Williamson, & Banissy, 2013).

Taken together, the role of the parietal lobe in music appears to encompass cognitive operations on musical materials: learning, memory, and mental transformations. Some of these operations will be addressed elsewhere in this volume (Schaefer, this volume).

Prefrontal Cortex

Although debates are still ongoing regarding the optimal division of the prefrontal cortex, the majority of researchers agree on a functional division between lateral and medial prefrontal structures. In the musical domain, the medial prefrontal cortex is shown to track tonal structure (Janata et al., 2002) as well as autobiographical memories (Janata, 2009) and is also relatively active during free-form music making such as in jazz improvisation (Limb & Braun, 2008). Studies on emotional responses to music have also consistently found activation of the ventromedial prefrontal cortex as part of the reward network, which we will review in more detail in a later section (see also Granot, this volume). In contrast to the medial prefrontal cortex, the lateral prefrontal cortex plays roles in cognitive operations such as working memory and task-related attentional processing (Janata, Tillmann, & Bharucha, 2002). Notably, Broca’s area lies within the lateral prefrontal cortex and has long been known to be crucial for language, but is sensitive to musical syntax as well (see Besson, Barbaroux, & Dittinger, this volume, for an in-depth review). Towards the midline of the brain from Broca’s area is the anterior insula, an area that predominantly activates in vocal tasks such as singing (Zarate, Wood, & Zatorre, 2010). Due to its role in vocal tasks and its proximity to other regions within the perisylvian network reviewed above, the anterior insula is frequently considered part of the dorsal sensorimotor network used in singing and speaking (Hickok & Poeppel, 2007). Taken together, the roles of the prefrontal cortex are many and complex, but very generally they fall under the categories of attention and memory, vocal-motor, and emotional and self-referential processing.

Emotion and Reward

Music is widely reported as one of the most emotionally rewarding experiences in human life. While other chapters in this Companion (Granot, this volume; Timmers, this volume) will give more specific overviews on emotion and pleasure, here we discuss briefly the brain areas and networks that enable the experience of emotion, which include the amygdala and hippocampus within the medial temporal lobe, the insula and medial prefrontal cortex within the frontal lobe, and the reward network, which is driven by the neurotransmitter dopamine.

The dopamine network includes the substantia nigra and ventral tegmental area in the brainstem, and the caudate and nucleus accumbens. Salimpoor et al. (2011) found temporally distinct activations between caudate and nucleus accumbens during the experience of intensely pleasurable moments in music: The caudate was more active during the anticipation of pleasurable moments, whereas the nucleus accumbens was active during the actual experience of the pleasurable stimulus. This coupling between dopaminergic areas may give rise to reward processing of slightly unexpected events, consistent with the widely influential view that the systematic fulfillment and violation of expectations give rise to emotional arousal in music (Meyer, 1956).

Neuropsychological evidence shows the involvement of the amygdala in the medial temporal lobe in fear responses to music. In Gosselin’s (2005) study, for example, patients with lesions in the amygdala had trouble identifying specific emotional categories, for example recognizing “scary” music as scary. Trost et al. (2012) completed an fMRI study to compare nine different emotions that varied in valence and arousal. They found that high-arousal emotions, such as power or joy, were associated with increased activation in the premotor and motor cortex, which suggests that these emotions might be the cause of an urge to move along to the beat or dance. Low-arousal emotions such as tenderness, calm, and sadness showed activation in the medial prefrontal cortex (MPFC), which, as mentioned above, is often linked to autobiographical or self-referential processing. This finding is further supported by a study that showed increased connectivity between auditory areas and frontal lobe areas, including anterior insula and MPFC, in individuals who experience chills (Sachs, Ellis, Schlaug, & Loui, 2016). These results may suggest that music can evoke self-referential processing, i.e. the consideration of one’s self relative to others, as part of its emotional influence. These findings suggest that social and emotional communications may serve as candidate evolutionary functions of music.

Conclusions

The human experience of music requires multiple functions of the mind that engage distributed networks in the brain. While some of these networks may be defined by their anatomical relationships (e.g. perisylvian areas), more networks are defined by their functions (e.g. learning and memory, emotion and reward). Taken together, the results reviewed in this introductory chapter echo many findings from cognitive neuroscience more generally. They also expand our knowledge by enabling rigorous tests of neuroanatomical models that are offered by other studies outside the realm of music. The study of music and the brain is rapidly gaining in theoretical as well as empirical momentum, and it is clear that music can also be helpful to neuroscientists by supplying a rich source of stimulus materials with which to test out contemporary hypotheses with regard to regional and/or network views of the human brain.

Core Reading

Janata, P. (2009). The neural architecture of music-evoked autobiographical memories. Cerebral Cortex, 19(11), 2579–2594.

Loui, P., Alsop, D., & Schlaug, G. (2009). Tone deafness: A new disconnection syndrome? Journal of Neuroscience, 29(33), 10215–10220.

Purves, D., Cabeza, R., Huettel, S. A., LaBar, K. S., Platt, M. L., & Woldorff, M. G. (2013). Principles of cognitive neuroscience (2nd ed.). Sunderland, MA: Sinauer Associates, Inc.

Salimpoor, V. N., Benovoy, M., Larcher, K., Dagher, A., & Zatorre, R. J. (2011). Anatomically distinct dopamine release during anticipation and experience of peak emotion to music. Nature Neuroscience, 14(2), 257–262.

Schlaug, G., Jancke, L., Huang, Y., & Steinmetz, H. (1995). In vivo evidence of structural brain asymmetry in musicians. Science, 267(5198), 699–701.

Further References

Albouy, P., Mattout, J., Bouet, R., Maby, E., Sanchez, G., Aguera, P. E., Daligault, S., Delpeuch, C., Bertrand, O., Caclin, A., & Tillmann, B. (2013). Impaired pitch perception and memory in congenital amusia: The deficit starts in the auditory cortex. Brain, 136(Pt 5), 1639–1661.

Belfi, A. M., & Tranel, D. (2014). Impaired naming of famous musical melodies is associated with left temporal polar damage. Neuropsychology, 28(3), 429–435.

Bengtsson, S. L., Nagy, Z., Skare, S., Forsman, L., Forssberg, H., & Ullén, F. (2005). Extensive piano practicing has regionally specific effects on white matter development. Nature Neuroscience, 8(9), 1148–1150.

Cytowic, R. E., & Eagleman, D. M. (2009). Wednesday is indigo blue: Discovering the brain of synesthesia. Cambridge, MA: MIT Press.

Finke, C., Esfahani, N. E., & Ploner, C. J. (2012). Preservation of musical memory in an amnesic professional cellist. Current Biology, 22(15), R591–R592.

Foster, N. E. V., & Zatorre, R. J. (2010). A role for the intraparietal sulcus in transforming musical pitch information. Cerebral Cortex, 20(6), 1350–1359.

Gaab, N., Gaser, C., Zaehle, T., Jancke, L., & Schlaug, G. (2003). Functional anatomy of pitch memory—an fMRI study with sparse temporal sampling. NeuroImage, 19(4), 1417–1426.

Gaab, N., Schulze, K., Ozdemir, E., & Schlaug, G. (2006). Neural correlates of absolute pitch differ between blind and sighted musicians. Neuroreport, 17(18), 1853–1857.

Gosselin, N. (2005). Impaired recognition of scary music following unilateral temporal lobe excision. Brain, 128(3), 628–640.

Grahn, J. A., & Brett, M. (2007). Rhythm and beat perception in motor areas of the brain. Journal of Cognitive Neuroscience, 19(5), 893–906.

Griffiths, T. D., & Warren, J. D. (2002). The planum temporale as a computational hub. Trends in Neurosciences, 25(7), 348–353.

Halwani, G. F., Loui, P., Rüber, T., & Schlaug, G. (2011). Effects of practice and experience on the arcuate fasciculus: Comparing singers, instrumentalists, and non-musicians. Frontiers in Psychology, 2(July), 1–9.

Herholz, S. C., Halpern, A. R., & Zatorre, R. J. (2012). Neuronal correlates of perception, imagery, and memory for familiar tunes. Journal of Cognitive Neuroscience, 24(6), 1382–1397.

Hickok, G., & Poeppel, D. (2007). The cortical organization of speech processing. Nature Reviews Neuroscience, 8(5), 393–402.

Janata, P., Birk, J. L., Van Horn, J. D., Leman, M., Tillmann, B., & Bharucha, J. J. (2002). The cortical topography of tonal structures underlying Western music. Science, 298(5601), 2167–2170.

Janata, P., & Grafton, S. T. (2003). Swinging in the brain: Shared neural substrates for behaviors related to sequencing and music. Nature Neuroscience, 6(7), 682–687.

Janata, P., Tillmann, B., & Bharucha, J. J. (2002). Listening to polyphonic music recruits domain-general attention and working memory circuits. Cognitive, Affective, & Behavioral Neuroscience, 2(2), 121–140.

Lahav, A., Saltzman, E., & Schlaug, G. (2007). Action representation of sound: Audiomotor recognition network while listening to newly acquired actions. Journal of Neuroscience, 27(2), 308–314.

Lee, Y.-S., Janata, P., Frost, C., Hanke, M., & Granger, R. (2011). Investigation of melodic contour processing in the brain using multivariate pattern-based fMRI. NeuroImage, 57(1), 293–300.

Limb, C. J., & Braun, A. R. (2008). Neural substrates of spontaneous musical performance: An fMRI study of jazz improvisation. PLoS ONE, 3(2), e1679.

Loui, P., Li, H. C. C., Hohmann, A., & Schlaug, G. (2011). Enhanced cortical connectivity in absolute pitch musicians: A model for local hyperconnectivity. Journal of Cognitive Neuroscience, 23(4), 1015–1026.

Loui, P., Li, H. C., & Schlaug, G. (2011). White matter integrity in right hemisphere predicts pitch-related grammar learning. NeuroImage, 55(2), 500–507.

Loui, P., Zamm, A., & Schlaug, G. (2012). Enhanced functional networks in absolute pitch. NeuroImage, 63(2), 632–640.

Meyer, L. (1956). Emotion and meaning in music. Chicago, IL: University of Chicago Press.

Paraskevopoulos, E., Kraneburg, A., Herholz, S. C., Bamidis, P. D., & Pantev, C. (2015). Musical expertise is related to altered functional connectivity during audiovisual integration. Proceedings of the National Academy of Sciences, 112(40), 12522–12527.

Rauschecker, J. P. (2012). Ventral and dorsal streams in the evolution of speech and language. Frontiers in Evolutionary Neuroscience, 4. http://dx.doi.org/10.3389/fnevo.2012.00007

Sachs, M. E., Ellis, R. J., Schlaug, G., & Loui, P. (2016). Brain connectivity reflects human aesthetic responses to music. Social Cognitive and Affective Neuroscience, 1–6.

Samson, S., & Zatorre, R. J. (1992). Learning and retention of melodic and verbal information after unilateral temporal lobectomy. Neuropsychologia, 30(9), 815–826.

Schaal, N. K., Williamson, V. J., & Banissy, M. J. (2013). Anodal transcranial direct current stimulation over the supramarginal gyrus facilitates pitch memory. European Journal of Neuroscience, 38(February), 3513–3518.

Trost, W., Ethofer, T., Zentner, M., & Vuilleumier, P. (2012). Mapping aesthetic musical emotions in the brain. Cerebral Cortex, 22(12), 2769–2783.

Voss, P., & Zatorre, R. J. (2012). Organization and reorganization of sensory-deprived cortex. Current Biology, 22(5), R168–R173.

Zamm, A., Schlaug, G., Eagleman, D. M., & Loui, P. (2013). Pathways to seeing music: Enhanced structural connectivity in colored-music synesthesia. NeuroImage, 74, 359–366.

Zarate, J. M., Wood, S., & Zatorre, R. J. (2010). Neural networks involved in voluntary and involuntary vocal pitch regulation in experienced singers. Neuropsychologia, 48(2), 607–618.