3

SCARCE AND ENDANGERED WORKS

Using Network-Level Holdings Data in Preservation Decision-Making and Stewardship of the Printed Record

Jacob Nadal, Annie Peterson, and Dawn Aveline

This chapter proposes methods of making preservation decisions based on holdings data for library collections, rather than on intrinsic characteristics of the damaged items. Although focused on monographic collections, it builds on related work in serials preservation and argues that there is potential for a common framework that encompasses both types of published materials. This chapter evaluates the role of regional and consortial holdings, as well as the relationship of print to digitized holdings, to help tie local preservation decisions to larger shared print initiatives. These methods borrow from economic assessments of risk and use simple, readily available data in a way that encourages fast and predictable decision-making. The outcome is a risk-management framework focused on ensuring access to a complete copy of a work at a given point well into the future, without requiring a specific set of future conditions for its success. Instead, these approaches are designed to serve as an adaptive framework for ensuring access to the records and artifacts that are in the library’s care.

Beginning in 2008, the University of California, Los Angeles (UCLA) Library conducted a series of experiments and pilot projects to explore the issues at stake in stewardship of the printed record and the best ways to structure preservation programs to support the Library’s commitments. The “preservation review” function developed for UCLA Library uses holdings data to expedite preservation responses to critically damaged or decaying materials as soon as they are identified in the course of day-to-day library operations. Because the process uses a variety of regional and national holdings data, it also lends itself to preservation management in a shared print environment.

The first section of this chapter describes the process of collecting and evaluating holdings data and applying these results to preservation decision-making. The second section extrapolates from the lessons learned in local preservation work to issues implicit in collaborative print archiving projects and other types of network-level collection management. In both sections, the problems inherent in making local operational decisions in the face of incomplete or imperfect information are confronted. This chapter expands upon a white paper published by Peterson and Nadal in 2011, updating that work to include additional data from UCLA along with Aveline’s observations about the development of the preservation review program.1

BACKGROUND AND LITERATURE REVIEW

Large-scale digitization efforts in research libraries have progressed rapidly. By July 2014, the HathiTrust contained over eleven million volumes, a quantity comparable to any of the world’s major collections of record.2 According to a recent report, however, only 27 percent of the then ten million volumes in HathiTrust were in the public domain.3 The existence of this body of digitized materials does not immediately create ubiquitous access to the pre-twenty-first century printed record. Numerous factors remain at play to determine the future methods of providing access to library collections: intellectual property, licensing, and copyright; the applicability of fair-use standards and first-sale doctrine to reformatted and born-digital works; the role of libraries in material culture scholarship; and diverse and changing user preferences for print and digital versions of a work. The list can go on, but whatever the near-term obstacles and unresolved questions, the intention of the library community is clear: the effort is well underway to create ubiquitous digital access to the published record. In turn, the print collection is being positioned as an adjunct or interdependent part of the library. Printed books satisfy a reading preference, function as a source of artifactual evidence, serve as a backup in case of loss to digital collections, and provide a future source for re-mastering to create new digital objects.4

In general, preservation decision-making, cost management, and selection for preservation have been discussed from the perspective of how local decisions were made to manage capacity or to argue the need and value of libraries investing in preservation efforts overall. The national microfilming effort that began with the US Newspaper Program in the 1980s provided the impetus for much of this work. Robert Hayes’ 1987 report, The Magnitude, Costs and Benefits of the Preservation of Brittle Books, identifies key factors in selection and costs associated with the microfilm projects into the 1980s and will be used as a source for cost comparisons in this chapter.5 Michael Lesk’s “Selection for Preservation of Research Library Materials” provides a thorough overview of selection methods for preservation efforts.6 The focus of selection for reformatting in the late twentieth century was on pre-coordinated sets of materials, drawn from the areas of the collection identified as likely to yield preservation problems. These sets were generated through a “great collections” approach or through consultation of a scholarly bibliography. A great collections approach “begins with an evaluation of the relative strength of collections by classification” to produce “a ranking of comprehensiveness of various libraries in specific subject areas.”7 Scholarly lists are to be taken from published bibliographies or based on consultation with scholarly societies or ad hoc academic committees. Once a list is established or a great collection selected, preservation reformatting proceeds within that collection.

In his overview of the preservation selection methods that were becoming established in the 1990s, Robert Mareck describes a two-part process of selection for preservation and selection in preservation.8 The first part considers the decision about whether a particular library ought to preserve something; the second addresses the decision about how to preserve the item in question. Implicitly, this process represents a shift from a collection-driven strategy, which declares the value of a set of library resources in advance, to an item-driven strategy. Mareck describes his model in terms of a “macro preservation decision” that “is not based on the individual discrete item, but on its inclusion in a larger coherent body of material.”9 Despite the language of macro decisions and bodies of material, however, the critical distinction must be made between two broad types of library decision-making processes. Item-driven approaches originate from an item-in-hand and then investigate relevant context. Collection-driven approaches begin with a hypothetically complete corpus and then investigate whether the specific members of that corpus exist in a given location and in serviceable condition.

Mareck provides a four-part model for connecting the critical, or “macro,” assessments with the technical, or “micro,” assessments. Although Mareck presents a structured container for the critical and technical decisions, and even allowing that he is summarizing practices, his description of the “macro” stage remains loosely structured at best. He provides a list of questions to guide decision-making but offers no metrics for determining an outcome and no indication of what specific data would prompt a decisively positive or negative decision within any set of questions. Mareck’s second stage, the “micro preservation decision,” is more focused, and consists of a set of advantage and disadvantage statements for each standard preservation outcome. This chapter proposes an alternative that allows for more efficient decision-making by establishing clear metrics for the “macro” decision-making, and by using some of the data for “macro” decisions to guide “micro” decisions, as well.

Surveys and statistical approaches to preservation decision-making trace their roots to the Yale condition survey of the 1980s10 and to the survey strategies that Carl Drott developed for collection management beginning in the 1960s.11 These surveys have primarily focused on understanding the physical condition of library collections as a guide to developing preservation strategies. Twenty-first century preservation efforts have shifted toward a balanced strategy of artifact conservation, digital reformatting, and the development of cooperative repositories for long-term storage. Much of the earlier literature has lost its currency due to simple changes in technology or more complex changes in the preservation decision-making environment. Jan Merrill-Oldham’s article “Taking Care” in the Journal of Library Administration offers a clear summary of the current context for preservation decision-making.12 Merrill-Oldham reviews the primary arenas of preservation activity and discusses the primary factors at play in each. In this survey article, however, Merrill-Oldham does not investigate specific methodologies for decision-making, selection, or specifics of cost assessment.

Julie Mosbo and John Ballestro write about the feasibility of purchasing from secondary sellers, finding that purchases off the used, secondary, and out-of-print markets meet the needs of the Southern Illinois University Morris Library at a net cost savings.13 They found that only 3.5 percent of the items ordered required preservation treatment, but these were also works printed within the last decade. Very little has been written on the use of replacement as a preservation strategy specifically.

Libraries have a substantial history of cooperating to retain the published record, through formal efforts such as the Center for Research Libraries (CRL), and through the uncoordinated network effect, where numerous research libraries across the world purchase copies to serve their local users but also share scarce resources via interlibrary loan. Cooperative print-archiving projects developed in the first decades of the twenty-first century as a corollary to large-scale digitization of print collections and the broad shift in usage of serials from print to electronic versions. On July 21, 2003, CRL convened “Preserving America’s Printed Resources (PAPR),” a conference focused on “the role of repositories, depositories, and libraries of record.”14 This conference considered the major issues around the mission of libraries in cooperative preservation as well as key operational concerns implicit in collaborations around shared resources.

In October 2004—just over a year after the PAPR conference—Google launched the Google Books Libraries Project, followed shortly after by the Open Content Alliance and Microsoft LiveSearch collaboration.15 The advent of large-scale digitization placed a new focus on the role of print in library collections and raised questions about the relevancy of previous methods of selecting candidates for preservation reformatting. These trends are described in Oya Rieger’s 2008 report, Preservation in the Age of Large-Scale Digitization.16

Late twentieth-century preservation reformatting efforts selected damaged items from within the larger collection. The large-scale digitization projects of the twenty-first century do the opposite. To create a workflow with high and predictable throughput, these digitization efforts reject damaged items from a workflow that is not strictly constrained by subject parameters or scholarly input. A desire for large amounts of text in digital form is the motivation for these reformatting programs. Although preservation is not the primary goal of these projects, they do offer an ancillary benefit, since more copies in more formats spread risk across a more diverse set of formats with different advantages and disadvantages.

In 2009, Ithaka S+R released What to Withdraw? Print Collections Management in the Wake of Digitization.17 This report encapsulates the lessons learned from the JSTOR project, digitization projects with Google and the Internet Archive, and the work conducted on print archives during the first decade of the twenty-first century. The report and accompanying Excel-based calculator introduce a methodology for deciding which print journals libraries can withdraw responsibly and how that set of materials can be expanded to allow libraries the maximum possible flexibility in managing their collections. What to Withdraw? builds on a study that Ithaka commissioned from Candace Arai Yano and her colleagues in the Department of Industrial Engineering and Operations Research at University of California, Berkeley. Yano’s study has also been used as the fundamental building block for the decision-making process described in this study.

METHODOLOGY I

RISK ASSESSMENT AND REPLACEMENT MODEL

Yano’s framework considers time and rate of loss for serials, based on data from the JSTOR validation process, library claims rates filed with insurance companies, and loss rates for circulating materials.18 These data are used in a calculation for estimating the number of initial items required to produce a single perfect copy at a given point in the future. This method of assessing survival probabilities is similar to methods used to resolve supply chain management issues around the production of spare parts for manufactured goods or to do risk modeling for redundant array of inexpensive disks (RAID) systems.19
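The survival probabilities reported in table 3.3 of this chapter are consistent with a simple independent-loss calculation: each copy survives a year with probability one minus the annual loss rate, and a work survives if at least one copy does. The following Python sketch illustrates that reading. It is an inference from the tabulated values, not a restatement of Yano’s published formulation, and the function names and the ninety-year default horizon are illustrative assumptions.

```python
import math


def survival_probability(copies: int, annual_loss_rate: float, years: int = 90) -> float:
    """Chance that at least one of `copies` still exists after `years` years.

    Assumes each copy is lost independently at a constant annual rate
    (an assumption made for this sketch).
    """
    single_copy = (1.0 - annual_loss_rate) ** years
    return 1.0 - (1.0 - single_copy) ** copies


def copies_needed(target: float, annual_loss_rate: float, years: int = 90) -> int:
    """Smallest number of starting copies whose survival probability reaches `target`.

    `target` and `annual_loss_rate` must lie strictly between 0 and 1.
    """
    single_copy = (1.0 - annual_loss_rate) ** years
    # Solve 1 - (1 - single_copy)^n >= target for the smallest whole n.
    n = math.log(1.0 - target) / math.log(1.0 - single_copy)
    return max(1, math.ceil(n))


if __name__ == "__main__":
    # Table 3.3 lists 40.4732 and 16.2311 for a single copy at 1% and 2% loss.
    for rate in (0.01, 0.02):
        print(f"1 copy, {rate:.0%} loss: {100 * survival_probability(1, rate):.4f}%")
    # Copies required for at least an 88% chance of survival at 2% annual loss.
    print(copies_needed(0.88, 0.02))
```

Under these assumptions the single-copy figures agree with table 3.3 to four decimal places, which suggests the table can be regenerated for other loss rates or planning horizons by changing the arguments.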

Yano assumes that the intention for this final copy is perfect information content, not necessarily a single perfect artifact. Because her study focuses on journals, hybridization of a complete work from multiple sources is feasible as part of a later digitization process. More specifically, her work assumes that “virtually all of the journals will be stored in the form of bound volumes, so we take a bound volume . . . as the unit of analysis.”20 Applying this methodology to monographs raises the question of whether they exhibit the same survival characteristics as journals.

If one considers the problem to be merely the loss of bound volumes, then serials and monographs should be interchangeable. The performance of book structures bears no necessary relationship to the intellectual content of the work. However, the process of loss and reconstruction is more precisely understood as the loss of some knowable percentage of a serial, itself composed of bound volumes, over time. The extant volumes from one instance of a serial replace the lost elements from other instances of the serial, each of which has also suffered loss. The survival probability represents a measurement of the likelihood that all of the extant serials have not lost the same parts and thus eliminated the possibility of creating a perfect hybrid serial. Using this model for monographic assessment becomes intuitively problematic. For clarity, the crucial question should be framed around the notion of repetition of parts in a monographic series. The mathematics remain agnostic about bibliographic conventions, so intellectual series in and of themselves yield to the relationship of parts to wholes, or members to sets.

Although monographs are not explicitly cataloged as continuing resources, they are easily conceptualized into a variety of sets, based on common points of bibliographic identity, such as their author, year of publication, or language. Individual monographs can also be treated simply as members in a set of all instances of that monograph. Subsets could be conceived of in a variety of ways, including geographical regions and consortia, which are already common points of identity and collaboration among libraries.

In this vein, Yano’s work draws on Martin L. Weitzman’s “The Noah’s Ark Problem,” which uses what Weitzman calls a “library model” to evaluate biodiversity risks.21 He treats the underlying conservation unit, the species, as a library, observing that “the book collections in various libraries may overlap to some degree,” and develops a measurement of diversity as “the size of the set that consists of the union of all the different books in all the libraries” in the study.22

The method suggested by this chapter follows Weitzman by treating WorldCat as the assemblage of libraries and UCLA Library’s collections as one branch within the larger set. Weitzman’s model proposes a diversity function, V, for a set of libraries, S. Each library within S holds a set of books held in common with other libraries, J, and a set of books unique to that library, Elibrary. This process examines each book in preservation review to determine if it belongs to the set EUCLA or J, and if the latter, to decide if its withdrawal adversely affects V(global), the diversity function of libraries globally. Because real libraries are being analyzed rather than the abstract library that Weitzman models, it is possible to investigate EUCLA, EUC, and ECA, in addition to J. Furthermore, for all of the theoretical value of Weitzman’s model, it is Yano’s formulation that does the heavy lifting by quantifying the extent of risk to the shared collection, the J set of V(global), created by withdrawal or replacement.
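The set relationships in this model can be illustrated with a short Python sketch. The library names and title identifiers below are hypothetical placeholders, not UCLA data, and the diversity function is reduced to a count of distinct titles, which is only one simplified reading of V.

```python
def diversity(libraries: dict[str, set[str]]) -> int:
    """V(S) in simplified form: the number of distinct titles across all libraries."""
    return len(set().union(*libraries.values()))


def unique_to(libraries: dict[str, set[str]], name: str) -> set[str]:
    """E for one library: titles it holds that no other library in the set holds."""
    others = set().union(
        *(held for lib, held in libraries.items() if lib != name)
    )
    return libraries[name] - others


def diversity_after_withdrawal(
    libraries: dict[str, set[str]], name: str, title: str
) -> int:
    """Diversity of the whole set once `name` withdraws its copy of `title`."""
    remaining = {
        lib: (held - {title} if lib == name else held)
        for lib, held in libraries.items()
    }
    return diversity(remaining)


# Hypothetical holdings: each set contains made-up title identifiers.
holdings = {
    "UCLA": {"title-a", "title-b", "title-c"},
    "Other UC campus": {"title-b", "title-c", "title-d"},
    "Library elsewhere": {"title-c", "title-e"},
}

print(diversity(holdings))                                      # 5 distinct titles
print(unique_to(holdings, "UCLA"))                              # {'title-a'}
print(diversity_after_withdrawal(holdings, "UCLA", "title-a"))  # 4: a unique title is lost
print(diversity_after_withdrawal(holdings, "UCLA", "title-b"))  # 5: held in common, no loss
```

In this toy example, withdrawing a title from the unique set reduces diversity, while withdrawing a commonly held title does not. The count alone does not express how much risk the remaining copies carry, which is the part of the problem that the survival calculation addresses.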

The question at issue, “of the set of items, what is the chance of loss,” can be meaningful whether the items are individual bound titles (monographs), sets of bound volumes in unanalyzed serials, or multi-volume monographic sets. The issue that remains is determining the loss rate for monographs, in case it differs from the loss rate for serials or sets. Supposing 100 instances of a serial or set with 100 volumes, 1 percent loss means the loss of one volume. In the monographic parallel, say 100 copies of a monograph with 100 pages, 1 percent loss means one page. Although the same risk evaluation formulas can be applied, monographs may seem more fragile than serials, since 1 percent loss occurs at a dramatically lower threshold. To account for this in the analyses below, loss rates are derived from observation and the rates are doubled to seek thresholds that are safe for both. In addition, several sets of monographs (UCLA, UC, CA, and Global) are used, in effect creating series at different consortium and regional levels, imitating the pattern of serials.

METHODOLOGY II

DATA COLLECTION, ACCURACY, AND APPLICABILITY

For this study, data was used from 1,408 items selected at random from among the brittle materials identified at circulation points and by collection managers during the preceding decade at UCLA Library. This provided a set of materials from across the Library’s entire collection that is large enough to be statistically useful, provided it is truly random and free from major biases in selection. This set would ideally draw on a random sample of damaged materials from the entire collection to develop policies, strategies, and preservation actions at the institutional level, but there are significant barriers to the discovery of a sufficient sample of these materials.

The common traits of interest among preservation candidates—paper decay and mechanical failure of book structures—are caused by inherent factors as well as external influences. Neither set of causes is tracked in library databases, so automated generation of a randomized sampling list is impossible. The only readily available proxies for severe damage are publication date and sometimes country of publication, but these factors show low correlation to severe damage: although severely damaged materials are somewhat likely to be old, older materials are not as likely to be severely damaged.23 Locating an ideal random sample therefore requires a hands-on survey across the entire collection. For UCLA Library, with a collection of over nine million items, the walking distance of the survey alone could approach forty miles.24 The sample used for this study matched the profile of severely damaged materials as they appear in a general preservation collection survey that included materials from all UCLA Library branches and collection areas, suggesting that this sample is representative of the collections as a whole. Because the preservation department is a fairly new entity in the UCLA Library, no systematic plan has been in place for collecting or treating brittle materials, so it is unlikely that the sample is biased by any long-running and consistent past practice for collecting candidate volumes for preservation treatment.25

PRESERVATION TREATMENT OUTCOMES

Preservation options for severely damaged materials are limited. These items are characterized by extreme paper decay, severe structural damage, or both. As a consequence of paper decay, the materials used for repair often exceed the mechanical strength of the paper. The double-fold test characterized this problem by counting how many times a page could fold before breaking apart.26 A sheet of paper in good condition will survive dozens of folds at least; hundreds if one has the patience. The books with paper decay in this study often fail before one fold is complete, or at best, two or three. Even the small level of adhesion generated by a repositionable note (such as the Post-it brand from 3M), which is on the order of 0.1–2.7 N/25mm, suffices to peel away a layer of paper from many of the items considered.27 Structural damage to the case or text block of the volume forms the other main factor. The materials in this study included some that the dog literally ate; most others exhibited enough damage that conventional repair or commercial rebinding was not possible. In many instances, chemical decay and structural damage play upon one another to create items with complex, compound problems. The physical integrity of all items subjected to preservation review has been so severely compromised that the item can no longer serve its function as an information carrier, and cannot be circulated without risk of further damage or content loss.

In this study, preservation review leads to two broad outcomes: withdrawal or replacement. Replacement options include reformatting to a digital surrogate, print facsimile (or both), or purchase of another copy of the same edition in acceptable condition.28 The final disposition of the original volume is implicit withdrawal following reformatting or replacement. If an item of substantial artifactual value is identified for replacement and another print copy cannot be substituted, the item is reformatted as it came to preservation review, the original artifact is placed in a suitable enclosure, and then deposited into closed storage with an in-house use only status. In principle, the option of heroic conservation is available should materials of dramatic artifactual or associative value come to light, but this has not occurred in practice.29

Yano’s survival probability algorithm assumes a survival scenario for a hybrid print and digital information environment where i) the availability of a digital version will lead to low print usage, ii) the movement of print materials from open stacks to closed depositories will reduce loss and damage, and iii) chemical decay can be reduced through environmental controls.30 The treatment outcomes developed for the preservation review process are designed to achieve these conditions through digitization of scarcely held materials and, when replacement copies are purchased or a retention decision is made for the original book, relocating it to the Southern Regional Library Facility (SRLF). SRLF deposit with a restricted use status performs the functional role of a dark archive in Yano’s model, with good environmental conditions, near-zero loss, and an option for later reformatting. When the replacement option leads to the discarding of a print item, it is only after digitization.31 The loss to the library system is minimal in these instances. The materials in question are severely damaged, making them poor exemplars of the artifactual value of that work. Creating a digital surrogate allows the library system to gain the added preservation benefits of working in a hybrid environment and spreads the risks to the survival of content across multiple formats.

HOLDINGS-BASED DECISION-MAKING SCENARIOS

This project was predicated on the idea that complex decision-making strategies could be replaced with simple holdings analysis. To the extent that the simplified strategy is successful, the total cost of preservation actions can be more closely evaluated and controlled. In addition, the otherwise diffuse decision-making efforts of library staff can be clearly located in the organization, creating an opportunity for better accountability, reduced costs, and fewer delays in service. To test the assumption that holdings-based decision-making could be an effective proxy for other means of preservation decision-making, three decision-making formulas were created. These holdings-based scenarios are described as aggressive, moderate, and conservative, in reference to the degree to which they lean toward the “aggressive” option of disposal of materials instead of replacement, repair, or reformatting.

Each of the three formulas has two thresholds, leading to three classes of preservation decisions. The first is the replacement threshold: the number of holdings in WorldCat below which the default decision is to replace or reformat. The total number of WorldCat holdings includes only items of the same edition and format. The second threshold, withdrawal, marks the level of holdings above which the default is to withdraw the copy. Items that fall between the two thresholds form the third class and are referred for review.

The selected replacement thresholds were created for the specific survival probabilities based on Yano’s model, as were the upper thresholds in the aggressive and conservative formulas.32 The withdrawal threshold in the moderate formula reflects local practice, and was derived from an analysis of past collection management decisions at the UCLA Library. These decisions showed a strong trend toward withdrawal when global holdings in WorldCat were greater than twenty-six, so this number was selected as the upper boundary for the moderate scenario. The UCLA Library Preservation Department currently follows the moderate formula. Finally, a policy decision was implemented always to take preservation action when UCLA holds the last copy in the University of California system or one of only two copies in the state of California, independent of other holdings data. Although almost an afterthought in the early investigations, this policy has turned out to be one of the most compelling features of this method.

The withdrawal and retention thresholds bracket a zone in which additional data, such as the existence of digitized copies and where they are held, are collected to make a decision. This category was established initially because the thresholds do not perfectly align, and it had been the intention for items in this middle group to be candidates for referral to a decision-maker outside of preservation. This category drew on the assumption that this group of materials would benefit from further knowledge, such as collection priorities or local user needs, in the preservation decision-making process. In practice, only about 1 percent of items fell into the middle category and almost none had their default decision changed by a secondary reviewer. The value of this group warrants further consideration for future implementations of this decision-making framework.

FORMULAS FOR REPLACEMENT AND WITHDRAWAL

The moderate formula indicates replacement of items with fewer than twelve global holdings, fewer than three California holdings, and zero additional UC holdings; withdrawal of items with more than twenty-six global holdings, more than five California holdings, and more than two UC holdings; and review by collection managers of all other items. Based on Yano’s model, and assuming an annual loss rate of 1 percent or less, these thresholds allow for estimating the probability that, after taking the local preservation action, a complete copy can be found or reconstructed in 2100 CE. For California alone this probability comes to approximately 78 percent, and surpasses 99.5 percent across global holdings.

The aggressive formula replaces items with fewer than four global holdings, fewer than one California holding, and zero UC holdings, and withdraws items with more than twelve global holdings, more than three California holdings, and more than one UC holding; all other items will be reviewed. This formula reduces California’s perfect copy survival probability to about 65 percent and places the worldwide survival odds at 92.5 percent.

Application of the conservative formula leads to replacing items with fewer than sixteen global holdings, fewer than four California holdings, and one other UC holding, and withdrawal of items with more than thirty global holdings, more than ten California holdings, and more than three UC holdings; all other items will be reviewed. This formula produces a California state-level perfect copy survival probability of 87 percent, based on four copies, and worldwide survival odds upward of 99.9 percent. Table 3.1 shows the withdrawal and replacement thresholds for each formula.

Table 3.1 | Thresholds for preservation action

| Formula | Grouping | Replace below | Withdraw above |
|---|---|---|---|
| Aggressive | WorldCat Global | 4 | 12 |
| | California | 1 | 3 |
| | UC | 0 | 1 |
| Moderate | WorldCat Global | 12 | 26 |
| | California | 3 | 5 |
| | UC | 0 | 2 |
| Conservative | WorldCat Global | 16 | 30 |
| | California | 4 | 10 |
| | UC | 1 | 3 |
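The thresholds in table 3.1 can be expressed as a small decision routine. The Python sketch below is one possible reading, not the Library’s actual procedure, and it rests on several assumptions that the chapter does not settle: that all three holdings conditions must be met together for a default decision, that the UC replacement value is an upper limit on other UC holdings (so that zero means no additional UC copy), that the UC withdrawal value is also counted against other UC holdings, and that the California count includes UCLA’s own copy. The function and parameter names are hypothetical.

```python
from typing import NamedTuple


class Thresholds(NamedTuple):
    replace_below: tuple[int, int, int]   # (global, California, other UC)
    withdraw_above: tuple[int, int, int]  # (global, California, other UC)


# Values transcribed from table 3.1.
FORMULAS = {
    "aggressive": Thresholds((4, 1, 0), (12, 3, 1)),
    "moderate": Thresholds((12, 3, 0), (26, 5, 2)),
    "conservative": Thresholds((16, 4, 1), (30, 10, 3)),
}


def preservation_decision(
    global_holdings: int,
    california_holdings: int,
    other_uc_holdings: int,
    formula: str = "moderate",
) -> str:
    """Return 'replace', 'withdraw', or 'review' for one damaged item."""
    # Policy override described in the text: always act when UCLA holds the
    # last UC copy or one of only two copies in California.
    if other_uc_holdings == 0 or california_holdings <= 2:
        return "replace"

    thresholds = FORMULAS[formula]
    r_global, r_ca, r_uc = thresholds.replace_below
    w_global, w_ca, w_uc = thresholds.withdraw_above

    # Assumption: the three conditions are combined with "and".
    if (global_holdings < r_global and california_holdings < r_ca
            and other_uc_holdings <= r_uc):
        return "replace"
    if (global_holdings > w_global and california_holdings > w_ca
            and other_uc_holdings > w_uc):
        return "withdraw"
    return "review"


print(preservation_decision(61, 8, 4))                   # widely held item
print(preservation_decision(20, 6, 3))                   # between thresholds
print(preservation_decision(200, 2, 1))                  # policy override
print(preservation_decision(10, 3, 1, "conservative"))   # below all limits
```

Keeping the thresholds in a single lookup table makes it straightforward to compare how the same item would be handled under each of the three formulas.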

Table 3.2 shows the WorldCat holdings and associated survival probabilities for each scenario described above and listed in table 3.1. In table 3.2, the survival probabilities can be read as risk tolerances. They could also be read as a statement about the level of risk at which the stakeholders feel compelled to hold the line against further loss (the replacement threshold) and above which there is confidence in the system’s ability to absorb the loss of UCLA’s damaged copy—and still maintain access well into the future (the withdrawal threshold). This implicit reading of the moderate threshold, which UCLA has been developing for actual application rather than theoretical study, suggests that UCLA Library evaluates preservation risks out to the end of the century, always takes preservation action when a work’s chance of survival drops below 90 percent, and never withdraws materials unless there is a 99 percent or better chance that the global library system can preserve that work until the year 2100 CE.

Table 3.2 | Survival probabilities at each preservation threshold

| Scenario | Replacement threshold | Survival probability, % | Withdrawal threshold | Survival probability, % |
|---|---|---|---|---|
| Aggressive | 4 | 50.76 | 12 | 88.06 |
| Moderate | 12 | 88.06 | 26 | 99.00 |
| Conservative | 16 | 94.12 | 30 | 99.51 |

Table 3.3 shows the range of probabilities for the library network to be able to produce a single perfect copy in 2100 CE, given an initial number of starting copies, based on Yano’s study.33 The table shows probabilities for two loss rates. There are strong reasons to include the 1 percent loss rate projection as the default assumption for planning purposes. This level closely matches the internal estimates of loss based on interlibrary loan data, circulation data from the Southern and Northern Regional Library Facilities, and data from the UCLA Library catalog and circulation records.34 A second section of the table shows the equivalent risks at a 2 percent loss rate to provide additional context and a worse- (if not worst-) case projection. In the interest of being risk-averse, the 2 percent loss scenario is employed in table 3.2 and in other analyses throughout this chapter.

Accurate assessment of risk is most important at the lower threshold, where the decision branches between defaulting to replace the item in question, or to send the item for additional review. At the withdrawal threshold, the difference in survival probabilities narrows to about 1 percent. Even that 1 percent difference causes little real impact on survival probabilities in the sample studied, because very few items actually comprise holdings precisely matching the threshold level. Table 3.4 shows the median, average, and maximum holdings levels for global, California, and the UC system across the entire sample. At the median global holdings level of sixty-one copies, the difference in survival probability between the 1 and 2 percent loss rate projections shrinks to 0.002 percent. The high level of median holdings supplies important information for the risk assessment. Even if the real rate of loss is twice the estimated rate of loss—2 percent instead of 1 percent—at least half of the materials considered in preservation review will maintain the same survival outlook.
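The narrowing gap between the two projections can be checked with a short calculation. This is a sketch under the same assumed independence model that reproduces table 3.3 (each copy survives 90 years with probability (1 - loss rate)^90); the names are hypothetical.

```python
def survival_probability(copies: int, annual_loss_rate: float, years: int = 90) -> float:
    # Assumed model: independent copies, constant annual loss rate.
    single_copy_survives = (1.0 - annual_loss_rate) ** years
    return 1.0 - (1.0 - single_copy_survives) ** copies


def projection_gap(copies: int, years: int = 90) -> float:
    """Difference, in percentage points, between the 1% and 2% projections."""
    optimistic = survival_probability(copies, 0.01, years)
    pessimistic = survival_probability(copies, 0.02, years)
    return (optimistic - pessimistic) * 100


# Moderate withdrawal threshold and the median global holdings cited in the text.
for copies in (26, 61):
    print(f"{copies} copies: gap of {projection_gap(copies):.4f} percentage points")
```

At twenty-six copies the gap is about one percentage point, and at sixty-one copies it falls to roughly 0.002, matching the figures quoted above.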

Table 3.3 | Probabilities for survival of a single perfect copy in 2100 CE (based on Yano et al.)

Projection 1: single copy; 1% loss until 2100 CE (planning horizon: 90 years; loss rate: 1%). Projection 2: single copy; 2% loss until 2100 CE (planning horizon: 90 years; loss rate: 2%).

| Initial number of copies | Projection 1 survival probability, % | Initial number of copies | Projection 2 survival probability, % |
|---|---|---|---|
| 1 | 40.4732 | 1 | 16.2311 |
| 2 | 64.5656 | 2 | 29.8276 |
| 3 | 78.9070 | 3 | 41.2174 |
| 4 | 87.4440 | 4 | 50.7584 |
| 5 | 92.5258 | 5 | 58.7508 |
| 6 | 95.5509 | 6 | 65.4460 |
| 7 | 97.3516 | 7 | 71.0545 |
| 8 | 98.4235 | 8 | 75.7527 |
| 9 | 99.0615 | 9 | 79.6883 |
| 10 | 99.4414 | 10 | 82.9851 |
| 11 | 99.6675 | 11 | 85.7468 |
| 12 (moderate replacement threshold) | 99.8021 | 12 (moderate replacement threshold) | 88.0602 |
| 13 | 99.8822 | 13 | 89.9982 |
| 14 | 99.9299 | 14 | 91.6216 |
| 15 | 99.9582 | 15 | 92.9815 |
| 16 | 99.9751 | 16 | 94.1207 |
| 17 | 99.9852 | 17 | 95.0749 |
| 18 | 99.9912 | 18 | 95.8743 |
| 19 | 99.9948 | 19 | 96.5440 |
| 20 | 99.9969 | 20 | 97.1049 |
| 21 | 99.9981 | 21 | 97.5748 |
| 22 | 99.9989 | 22 | 97.9685 |
| 23 | 99.9993 | 23 | 98.2982 |
| 24 | 99.9996 | 24 | 98.5744 |
| 25 | 99.9998 | 25 | 98.8058 |
| 26 (moderate withdrawal threshold) | 99.9999 | 26 (moderate withdrawal threshold) | 98.9996 |
| 27 | 99.9999 | 27 | 99.1620 |
| 28 | 100.0000 | 28 | 99.2980 |
| 29 | 100.0000 | 29 | 99.4120 |
| 30 | 100.0000 | 30 | 99.5074 |

Table 3.4 | Median, average, and maximum holdings

| | WorldCat global holdings | CA holdings | UC holdings |
|---|---|---|---|
| Median | 61 | 3 | 1 |
| Average | 146 | 11 | 2 |
| Maximum | 4,085 | 263 | 23 |

Figure 3.1 shows the relationship of the 1 and 2 percent loss rate projections to one another. Both projections approach 100 percent probability for the survival of a perfect copy in 2100 CE at the twenty-six copy threshold for withdrawal decisions that is used in the moderate scenario.

Figure 3.1 | Comparison of asymptotic survival probability curves, 1 and 2 percent loss rate projections

When applying the three different formulas to the study materials, the findings emerged as expected. The aggressive formula led to more items withdrawn, fewer items replaced, and more items reviewed. The conservative formula led to more items replaced, fewer withdrawn, and slightly fewer reviewed. Table 3.5 shows the numbers of items, in a total sample of 1,408 items, that receive default decisions of withdraw, replace, or review.

Table 3.5 | Actions derived from each formula

| | Aggressive | % | Moderate | % | Conservative | % |
|---|---|---|---|---|---|---|
| Withdraw | 765 | 54 | 631 | 45 | 521 | 37 |
| Replace | 585 | 42 | 760 | 54 | 872 | 62 |
| Review | 58 | 4 | 17 | 1 | 15 | 1 |

IMPLICATIONS FOR COLLECTION STRATEGY

Although these data were collected to facilitate local preservation management, they can also be analyzed with a view to shared collections management. For example, a telling indicator from the preservation review materials reveals that median holdings are lower than average holdings by a factor of roughly three (table 3.6).

Table 3.6 | Average and median holdings

| | WorldCat global holdings | CA holdings | UC holdings |
|---|---|---|---|
| Average | 146 | 11 | 2 |
| Median | 43 | 3 | 1 |

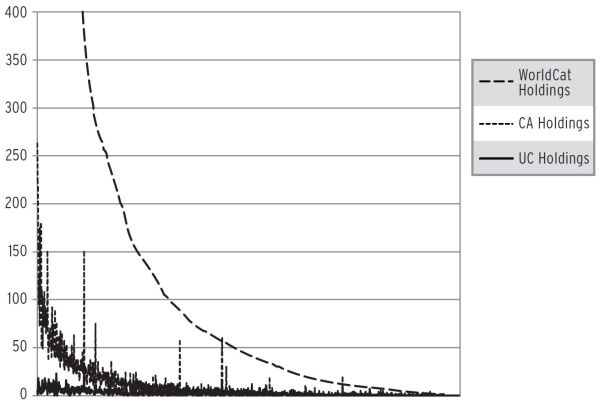

This suggests that a relatively small number of very commonly held works inflates the average. Figure 3.2 illustrates this pattern, commonly called a “long tail,” after the book and article by Chris Anderson.35 Significantly, figure 3.2 is truncated at 400 holdings, the ninetieth percentile for holdings. The top 10 percent of global holdings for these materials cover an order of magnitude change from 407 to 4,085 holdings.
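The way a few very widely held titles pull the average above the median can be illustrated with a small calculation. The holdings counts below are invented for illustration only and are not drawn from the study data.

```python
from statistics import mean, median

# Hypothetical holdings counts for thirteen titles (illustrative, not study data).
holdings = [1, 2, 3, 5, 8, 12, 20, 43, 60, 90, 150, 407, 4085]

print(f"average = {mean(holdings):.1f}, median = {median(holdings)}")

# Dropping the single most widely held title shows how strongly it drives the average.
trimmed = sorted(holdings)[:-1]
print(f"average without the top title = {mean(trimmed):.2f}")
```

In a distribution shaped like this, the median is the better guide to the holdings level of a typical item in preservation review.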

Figure 3.2 | Holdings of the sample set in WorldCat, California, and the University of California

If the holdings for the entire set of 1,408 items are examined, a clear long tail trend appears, with the exception of the UC holdings. The fairly flat curve of UC holdings likely arises from the small number of UC campuses compared to the number of libraries in the world. This also fits with the shift in UC policy toward limiting the purchase and maintenance of multiple copies across the system wherever possible. Given the origin of this dataset in preservation review, the low UC-wide holdings remain consistent with expectations and UC collection development policies.36

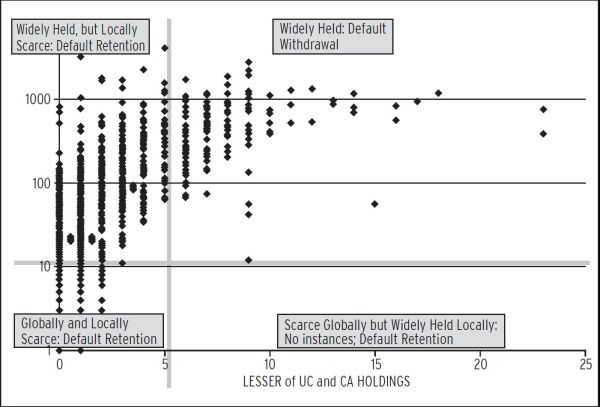

To view these data in another way, global holdings are plotted against the lower of UC or California state holdings. In figure 3.3, global holdings are plotted logarithmically, since they greatly outpace state- or system-level holdings, and the resulting scatter plot is divided into quadrants that show the default actions for each combination of local or worldwide scarcity.

Figure 3.3 | Logarithmic plot of holdings with implied preservation activity

Materials that are widely held from a global perspective but scarcely held within the local system are particularly visible in this presentation, where they cluster in the upper left-hand quadrant. As noted above, adding replacement thresholds for local or consortial groups makes almost no impact on system-wide risk to the loss of print materials. However, it transforms preservation decision-making and the ability of a given library or system to independently meet its users’ needs.

Forty percent of the materials in the study fall into this locally scarce but globally common set of materials. This is an important counterbalance to the idea of a single, centrally planned, national collection of record. The analysis suggests that preservation strategies may be best articulated on a regional or consortial basis, with national goals emerging from those efforts. To illustrate how local collection management decisions can create positive global outcomes, two scenarios can be played out using the data and risk projections presented thus far, by adding additional retain and withdraw thresholds for consortia or regional collections.

THE INFLUENCE OF REGIONAL AND CONSORTIAL HOLDINGS

In the first scenario, thresholds are added for the number of copies in the UC system and in the state of California. The differences between the thresholds to withdraw, replace, and review in each of these scenarios are small, as table 3.7 demonstrates. The Difference column of table 3.7 shows how much closer the UC and California numbers are to each other as compared to the global numbers, effectively narrowing the band of “review” materials needing input from outside the preservation department. Consequently, fewer items require secondary review in scenarios that include UC or California holdings, even though the system-wide risk of loss (driven by the global “withdraw above” threshold) stays the same.
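A minimal sketch of how thresholds for several holdings groups might combine into one default action follows. The combination rule is an assumption, since the chapter does not spell it out: replace if any group is scarce, withdraw only if every group is plentiful, and otherwise send the item to review. Treating both boundaries as inclusive is also an assumption. The threshold values are the moderate-formula figures from table 3.7; all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    retain_below: int    # at or below this count, default to replace (assumed inclusive)
    withdraw_above: int  # at or above this count, the group permits withdrawal (assumed inclusive)


# Moderate-formula thresholds as listed in table 3.7.
MODERATE = {
    "global": Thresholds(retain_below=12, withdraw_above=26),
    "california": Thresholds(retain_below=3, withdraw_above=5),
    "uc": Thresholds(retain_below=1, withdraw_above=2),
}


def default_action(holdings: dict, thresholds: dict) -> str:
    """Return 'replace', 'withdraw', or 'review' under the assumed combination rule."""
    # Scarcity in any one group is enough to trigger replacement.
    if any(holdings[g] <= t.retain_below for g, t in thresholds.items()):
        return "replace"
    # Withdrawal requires every group to clear its withdrawal threshold.
    if all(holdings[g] >= t.withdraw_above for g, t in thresholds.items()):
        return "withdraw"
    return "review"


# A globally common but locally scarce title defaults to replacement.
print(default_action({"global": 300, "california": 2, "uc": 1}, MODERATE))
# The same title judged on global holdings alone would default to withdrawal.
print(default_action({"global": 300}, {"global": MODERATE["global"]}))
```

This rule is at least directionally consistent with the results reported in this section: adding holdings groups raises the count of replacements and lowers the count of withdrawals.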

The total numbers of withdraw and replace decisions do not vary greatly between any of the original formulas; however, when one of the holdings groups is removed from the formula, the differences increase. Table 3.8 shows the percentage of each type of decision in the three formulas with and without UC holdings data.

Table 3.7 | Withdraw and retain thresholds for each formula and holdings group

| Holdings | Aggressive: retain below | Aggressive: difference | Aggressive: withdraw above | Moderate: retain below | Moderate: difference | Moderate: withdraw above | Conservative: retain below | Conservative: difference | Conservative: withdraw above |
|---|---|---|---|---|---|---|---|---|---|
| WorldCat Global | 4 | 8 | 12 | 12 | 14 | 26 | 16 | 14 | 30 |
| California | 1 | 2 | 3 | 3 | 2 | 5 | 4 | 6 | 10 |
| UC System | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 3 |

Table 3.8 | Percentage of decisions with varied holdings groups

| | Aggressive, % | Aggressive without UC, % | Moderate, % | Moderate without UC, % | Conservative, % | Conservative without UC, % |
|---|---|---|---|---|---|---|
| Withdraw | 54 | 66 | 45 | 52 | 37 | 44 |
| Replace | 42 | 25 | 54 | 48 | 62 | 54 |
| Review | 4 | 8 | 1 | 0 | 1 | 2 |

If UC holdings are ignored, but other constraints remain, the difference between the categories shifts by 16 percent toward withdrawal of material. Purely within the boundaries of this model, and if it had no obligations to the UC system, UCLA could, in effect, justify an 11 percent increase in the rate at which it deferred preservation responsibility to other institutions. This is not, in fact, the aspiration, policy, mission, or vision of the UCLA Library. And fortunately, there is some evidence that adding consortium or regional thresholds creates important benefits for the local library: savings in labor, by reducing secondary review of materials, and an additional level of protection against loss of materials. Taken together, these indicators provide a useful object lesson in how to situate evidence-driven practice in library management. These data allow the exploration of many possible paths and outcomes, but as a matter of institutional mission, UCLA Library intends to serve as a collection of record that “develops, organizes, and preserves collections for optimal use.”37

Interpreted from an intention to preserve, these models reinforce the importance of explicitly declared networks for preservation, and provide an incentive to associate with other collecting institutions, the better to safeguard against withdrawal activity that would threaten our mission. In effect, the constraints of system-wide policy and obligations to local or regional groups enjoin the parties to a greater degree of collective preservation effort.

The inclusion of California holdings information makes less of an impact than the addition of UC data, but it keeps items out of the review category in the moderate formula, and leads to a slight shift in the proportion of materials retained or withdrawn. The review category in the moderate formula increases significantly if the California holdings data are ignored, illustrating the common theme that having more decision-making data about each title allows for more default actions to withdraw or retain, and fewer review decisions, shown in table 3.9. The effect of removing the California holdings, but leaving UC and global holdings, is less dramatic than removing the UC holdings.

Table 3.9 | Retention scenarios without California holdings group

| | Aggressive | % | Moderate | % | Conservative | % |
|---|---|---|---|---|---|---|
| Withdraw | 763 | 54 | 685 | 49 | 492 | 35 |
| Replace | 582 | 41 | 642 | 46 | 900 | 64 |
| Review | 63 | 4 | 81 | 6 | 16 | 1 |

One conclusion is that California is simply a large enough state with enough major libraries that it operates as a good proxy for the world. This may be less true as the scale of analysis shifts from this case study to the entire collective collection, but the alignment between these data and the work on mega-regions merits further attention.38 States, regions, or other large affinity groups may provide a valuable scoping mechanism for planning preservation and shared collection networks. Such groupings may be large enough to approximate the global patterns of retention necessary for the long-term survival of materials, while also being small enough and having enough shared interests among stakeholders to organize collaboration. Indeed, at this scale, there are likely to be preexisting collaborative ventures that can be leveraged for print archive efforts.

Table 3.10 shows how removing either the global holdings or the local holdings (UC and California) from the moderate formula (replace below 12, withdraw above 26) changes the outcome. The full moderate formula is more conservative than a scenario that looks only at global holdings through WorldCat, and more aggressive than an exclusively local scenario. Considering only global holdings leads to 30 percent more withdrawal but 50 percent less automatic replacement. Considering only local holdings, in this case California state holdings and UC system holdings, leads to somewhat less withdrawal (-19 percent) and almost identical replacement outcomes (-2 percent).

Table 3.10 | Moderate formula with varied holdings groups

| | Moderate | Moderate, only Global | % Change versus moderate | Moderate, only UC+CA | % Change versus moderate |
|---|---|---|---|---|---|
| Withdraw | 630 | 822 | 30 | 511 | -19 |
| Replace | 761 | 382 | -50 | 747 | -2 |
| Review | 17 | 204 | 1,100 | 150 | 782 |

The elimination of either the global or regional holdings increases review cases as well. Although the percentage change is staggering (782 to 1,100 percent), the actual number of review cases only becomes significant where global holdings are not evaluated. In that instance, there would be 150 items for review, 11 percent of the total sample. As we expanded this work from a pilot project with 376 samples to this case study of 1,408, the review category diminished in importance whenever groups of holdings data were added. From a purely practical view, within this model of preservation review, one could argue that it is worthwhile to always evaluate the global holdings just to reduce the amount of selector review required, and by happy coincidence, this also helps to avoid global scarcity problems.
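The percentage changes quoted here follow from the counts in table 3.10 by ordinary arithmetic, which also shows why a small baseline produces such large percentages. The sketch below recomputes them; the function and variable names are illustrative.

```python
def percent_change(baseline: int, variant: int) -> float:
    """Change from baseline to variant, as a percentage of the baseline."""
    return (variant - baseline) / baseline * 100


TOTAL_ITEMS = 1408

# Counts from table 3.10: full moderate formula, global only, UC + CA only.
counts = {
    "withdraw": (630, 822, 511),
    "replace": (761, 382, 747),
    "review": (17, 204, 150),
}

for action, (moderate, global_only, local_only) in counts.items():
    print(
        f"{action:>8}: global only {percent_change(moderate, global_only):+.0f}%, "
        f"UC + CA only {percent_change(moderate, local_only):+.0f}%"
    )

# Share of the whole sample left for review when global holdings are not evaluated.
print(f"review share without global holdings: {150 / TOTAL_ITEMS * 100:.0f}%")
```

Because the review baseline is only 17 items, an increase to 150 registers as 782 percent even though it represents about 11 percent of the sample.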

The impact of these scenarios on the review category has been of particular interest for two main reasons. First, keeping items out of the review category means fewer backlogs in the preservation department. Second, placing items into the review category shifts some decision-making work from preservation staff to collection managers. The time required to get the UC holdings from WorldCat is small and easily measured, unlike the time it takes for collection managers to receive and review an item in order to make a decision based on their knowledge of or beliefs about its usefulness to a field of study and relevance to the collection.

Another factor considered in the decision-making was whether or not an item appears in HathiTrust. The original plan was to use HathiTrust data to resolve items left in a review status for several months, so that if collection managers had not made a decision on review materials and a full-view HathiTrust copy were available, it would revert to a withdrawal decision. Although the small number of materials in the study requiring outside review has made this a moot point, the rationale behind this consideration deserves attention. Reformatting options are evaluated for items that fall into a lower-risk category, indicating the existence of other copies. The damaged copy in-hand is a poor candidate for digitization in any case, so its withdrawal does not have an adverse effect on the system-wide possibility of creating a better digital version in the future, or using a better copy from another library.

The results of fully incorporating HathiTrust data into the decision-making are shown in table 3.11. Using the moderate formula as the starting point, the presence of a HathiTrust copy was used to change review decisions into withdrawals. In the scenario that only uses global holdings, changing the decisions based on the existence of a copy in HathiTrust only affects outcomes for eight volumes. However, in the localized scenario that includes the UC and California holdings, this approach greatly reduces the number of volumes left in review status, from 150 to 9, a 94 percent decrease. This application of HathiTrust data suggests some potential for consortial networks to do effective internal coordination of print and digital preservation efforts.
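The HathiTrust adjustment described here is a simple override applied after the holdings-based decision. The sketch below shows one way to express it, using the moderate-formula counts from table 3.11; the count of eight review items with a HathiTrust copy is derived from the table (17 minus 9), and the function name is hypothetical.

```python
from collections import Counter


def apply_hathitrust(decision: str, full_view_in_hathitrust: bool) -> str:
    """Convert a review decision into a withdrawal when a full-view copy exists."""
    if decision == "review" and full_view_in_hathitrust:
        return "withdraw"
    return decision


# (decision, has full-view HathiTrust copy) pairs rebuilt from table 3.11 counts.
# The flag is irrelevant for non-review items, so it is left False for them.
items = (
    [("withdraw", False)] * 630
    + [("replace", False)] * 761
    + [("review", True)] * 8
    + [("review", False)] * 9
)

adjusted = Counter(apply_hathitrust(decision, flag) for decision, flag in items)
print(dict(adjusted))
```

Replacement decisions are untouched by this override, which is why the replace row in table 3.11 does not change within the moderate formula.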

Table 3.11 | Impact of HathiTrust holdings on the moderate formula, with varied holdings groups

| | Moderate | Moderate with HathiTrust | % Change | Moderate UC + CA | UC + CA with HathiTrust | % Change |
|---|---|---|---|---|---|---|
| Withdraw | 630 | 638 | 1 | 511 | 638 | 25 |
| Replace | 761 | 761 | 0 | 747 | 761 | 2 |
| Review | 17 | 9 | -47 | 150 | 9 | -94 |

Although this model of preservation decision-making shows many advantages, there are concerns about a hypothetical situation in which the library withdraws the damaged copy in its collection, but no other libraries with holdings of that title have a policy that would ensure they retain their copies. In the moderate formula, UCLA withdraws when there are more than twenty-five extant copies, so the specific version of this concern would be that two dozen other libraries will lose their copies or withdraw them without any consideration of the network impact of that action. The likelihood of that is very difficult to determine. Although it is assumed the probability would be quite low, there are no definitive data to affirm or negate this assumption. This dilemma supports an additional, compelling justification for having a second set of replacement criteria built around a region or consortium, in this case California and the University of California system.

As discussed above, adding the consortial checks reduced the quantity of materials withdrawn as a default by 30 percent in the study (see table 3.10). Further, as figures 3.1 and 3.2 show, a substantial amount of material that could be withdrawn without affecting system-wide survival rates must be retained in order to protect consortial preservation and access. This point bears some additional emphasis, given the amount of activity focused on network-level collection development. For several of these network efforts, the wish to avoid duplicate maintenance costs and the possibility of recovering space in library facilities form the chief motivations; these projects focus primarily on journal backfiles.39 Monographs are receiving increased attention, though. Robert Darnton recently made a broader argument for a renewed national library building effort, for example, and recent announcements by the HathiTrust and Internet Archive indicate their engagement with the idea of building a monographic print archive to mirror their digital holdings.40 None of these projects specifically say that one and only one print copy of a work is the end game. However, most of these efforts center their activity on a small number of copies, identified on the network level and placed into trusted archives on behalf of the community. The authors endorse the core concerns identified by these projects’ leaders, but the analysis suggests that at least a dozen regional or consortial efforts, rather than a few national efforts, will lead to more favorable outcomes. Localized efforts are more likely to see implementation and will be easier to maintain, since they build on existing communities and established operations. The data show that multiple systems acting independently can provide reliable preservation, especially if they have a means of disclosing their actions.41

As different replacement scenarios are tested, it is not sufficient to simply develop an internal matrix for decisions and declare success. Preservation operations have two stewardship responsibilities. One is the basic fiduciary responsibility to expend funds in an effective and transparent fashion. The other is the larger goal of ensuring that those funds actually support the survival of cultural and artifactual heritage. The methodology described above places both stewardship responsibilities into a measurable framework that supports meaningful comparison and collaboration between institutions.

FURTHER RESEARCH

The approach to making preservation review decisions in this chapter only governs UCLA Library, not the decisions of the other holding libraries in WorldCat. As discussed above, replacement and withdrawal thresholds are selected to allow for a margin of safety against the possibility of system-wide loss of access to a work. Further hedges against the risk of loss are made by applying consortia holdings as a decision-making element, so that even if a work is widely held, a copy in the state or UC system is maintained. A secondary benefit arises as well, since the more holdings groups included in the formula, the fewer items fall into the review category. The effect of adding additional real or hypothetical groups to this analysis was not evaluated, but that is a promising direction for future research.

Further studies should be conducted to determine which pieces of data are the most important to collect and what replacement thresholds most closely mirror collection managers’ levels of risk tolerance. By providing proposed decisions to collection managers without identifying whether the aggressive, moderate, or conservative protocol was used, it would be possible to track the way in which their decisions align with the pattern of default preservation decisions and to see which data most strongly correlate to their decisions. Additionally, areas where managers consistently decide against the numerical indicators offer valuable clues about the library’s collecting interests and intentions. This information may be key to developing more effective assessment and decision-making routines. Ideally, only data with the strongest relationship to a desired replacement pattern will be collected. For example, if it is determined that decisions based on the UC system holdings data correlated with 95 percent of the collection manager decisions, and the global holdings data correlated with 94 percent or 96 percent of the collection manager’s decisions, the utility of collecting global holdings data might be questioned. As the data-driven decisions start to more closely mimic collection managers’ decision-making, it may be necessary to reevaluate the utility of the review category between the withdrawal and retention thresholds. Eliminating the review category would further the goals of reducing a backlog in preservation and of making efficient, timely, evidence-based decisions.
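One way to track how closely default decisions align with collection managers' decisions is a simple agreement rate. The sketch below assumes decisions are recorded as matching lists of labels; the sample data and the function name are hypothetical and do not come from the study.

```python
def agreement_rate(default_decisions: list, manager_decisions: list) -> float:
    """Fraction of items where the default decision matches the manager's decision."""
    if len(default_decisions) != len(manager_decisions):
        raise ValueError("decision lists must be the same length")
    if not default_decisions:
        raise ValueError("at least one decision is required")
    matches = sum(d == m for d, m in zip(default_decisions, manager_decisions))
    return matches / len(default_decisions)


# Hypothetical decisions for four items (illustrative only).
defaults = ["withdraw", "replace", "review", "withdraw"]
managers = ["withdraw", "replace", "withdraw", "withdraw"]
print(f"agreement: {agreement_rate(defaults, managers):.0%}")
```

Computing this rate separately for each holdings group would show which data most closely track manager judgment, and the items where the two disagree are the ones that offer clues about collecting interests.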

In addition, better analysis tools for the WorldCat database would facilitate the investigation of questions such as overlap of holdings and classes of libraries that hold various types of materials. For instance, if it were found that of the thirty-two copies held for a given title, the thirty-one non-UCLA copies were in small school and public libraries, it might be concluded that the preservation responsibility would belong to UCLA. Contemporary library collection management strategies increasingly incorporate cross-collection availability patterns and the potential for systematic collaborations in developing shared print collections. For this, the holdings data in WorldCat needs to be much more accessible. The WorldCat Collection Analysis tool made some progress in this direction, but its restricted access and high pricing impeded its use; its publicly available documentation and interfaces did not disclose clearly how one could create selected title lists for comparison, rather than entire library collections; and the tool provided limited ability to create customized datasets that would be useful for analysis in external tools. Collection Evaluation replaced the Collection Analysis tool in late 2013, after this study. The authors have not yet evaluated this new tool for its usefulness.42

CONCLUSION

This project’s primary value stems from its addressing the review needs that emerge from the subset of the collection used in day-to-day library operations. It prevents the accumulation of large amounts of unusable materials with no clear outcomes assigned to them, while freeing limited local resources to be spent in a way that provides the greatest return. By using a risk-assessment model to guide decisions, it can ensure that funds are spent to immediate, positive effect and avoid costs that can be safely pushed elsewhere in the global library network and to a later point in time.

The broader secondary value of this project derives from its ability both to improve network-level library services and to provide models for local action within large-scale collections strategies. In the work to date, several hundred volumes that would otherwise be excluded from large-scale digitization efforts have been identified. Additional volumes of substantial scholarly and artifactual value were discovered that appear to be unique, or so scarcely held that UCLA Library became the only realistic point of access in North America. This has been achieved through a balancing of efforts. Holdings-based analysis is used to make the majority of decisions quickly, but the process includes a simple method of detecting characteristics that indicate the need for more complex decision-making. This ensures that objects with scholarly or artifactual value do not get overlooked within the large-scale process. This combination of a broad view of collection management coupled to a clear set of local needs is highly effective in preservation management and suggests a strategy for shared library collection management efforts.

Library preservation activities can benefit from new techniques for management and new technologies for treatment, yet the mission of preservation remains inherently cautious. There is a vital place for this same balance in shared print projects. Preservation librarians and collection managers must be keenly aware of the opportunity costs involved in delayed or slow-moving decisions, but they must pay equal attention to the opportunity costs involved in working only in data-driven abstractions and neglecting the individual value of the items in their care. A book kept is not a book lost, after all.

Notes

1. Jacob Nadal and Annie Peterson, “Scarce and Endangered Works,” 2011. http://jacobnadal.com/162.

2. “2014 Mid-Year Review,” HathiTrust Digital Library, July 7, 2014, www.hathitrust.org/updates_mid-year2014.

3. HathiTrust Digital Library, “Ten Million and Counting,” Perspectives from HathiTrust (blog), January 6, 2012, www.hathitrust.org/blogs/perspectives-from-hathitrust/ten-million-and-counting.

4. Gary Frost, Future of the Book: A Way Forward, Iowa Book Works, 2011.

5. Robert M. Hayes, The Magnitude, Costs and Benefits of the Preservation of Brittle Books (Washington, DC: Council on Library Resources, 1987), 2.

6. Michael Lesk, “Selection for Preservation of Research Library Materials,” (Washington, DC: The Commission on Preservation and Access, 1989), www.clir.org/pubs/reports/lesk/select.html.

7. Ibid.

8. Robert Mareck, “Practicum on Preservation Selection,” in Collection Management for the 1990s: Proceedings of the Midwest Collection Management and Development Institute, University of Illinois at Chicago, August 17–20, 1989, ed. Joseph Branin (Chicago: American Library Association, 1993), 114.

9. Ibid., 115.

10. Robin Gay Walker, Jane Greenfield, and John Fox, “The Yale Survey: A Large-Scale Study of Book Deterioration in the Yale University Library,” College and Research Libraries 46 (March 1985): 111–32.

11. M. Carl Drott, “Random Sampling: A Tool for Library Research,” College and Research Libraries 30 (March 1969): 119–25.

12. Jan Merrill-Oldham, “Chapter 8. Taking Care,” Journal of Library Administration 38, no. 1–2 (2003): 73–84.

13. Julie Mosbo and John Ballestro, “Buying from Secondary Markets: Acquiring Dollars and Sense,” Technical Services Quarterly 28, no. 2 (2011): 121–31.

14. Bernard Reilly, “Preserving America’s Printed Resources Update,” CRL Focus on Global Resources 24, no. 1 (2004), www.crl.edu/focus/fall-2004.

15. Joseph O’Sullivan and Adam Smith, “All Booked Up,” The Official Google Blog, December 14, 2004, https://googleblog.blogspot.com/2004/12/all-booked-up.html.

16. Oya Y. Rieger, Preservation in the Age of Large-Scale Digitization: A White Paper (Washington, DC: Council on Library and Information Resources, 2008), www.clir.org/pubs/abstract/pub141abst.html.

17. Roger C. Schonfeld and Ross Housewright, What to Withdraw? Print Collections Management in the Wake of Digitization (New York: Ithaka S+R), September 29, 2009, www.sr.ithaka.org/sites/default/files/reports/What_to_Withdraw_Print_Collections_Management_in_the_Wake_of_Digitization.pdf.

18. Candace Arai Yano, Zuo-Jun Max Shen, and Stephen Chan, “Optimising the Number of Copies and Storage Protocols for Print Preservation of Research Journals,” International Journal of Production Research 51, no. 23-24 (2013): 7456–69, doi:10.1080/00207543.2013.827810.

19. Ibid., 7458.

20. Ibid., 7461.

21. Martin L. Weitzman, “The Noah’s Ark Problem,” Econometrica 66, no. 6 (November 1998): 1279–98, www.jstor.org/stable/2999617.

22. Ibid., 1281.

23. These inferences are drawn from the published literature on library condition surveys as well as a collection condition survey project conducted by the UCLA Library Preservation Department. More information on this effort is available at www.jacobnadal.com/82. For other library condition surveys, see Thomas Teper, “Building Preservation: The University of Illinois at Urbana-Champaign’s Stacks Assessment,” College and Research Libraries 64, no. 3 (2003): 211–27, and Gay Walker, Jane Greenfield, John Fox, and Jeffrey S. Simonoff, “The Yale Survey: A Large-Scale Study of Book Deterioration in the Yale University Library,” College and Research Libraries 46 (1985), 111–32.

24. These calculations assume an average book thickness of 1.5," with stacks assembled from six 36" shelves, filled to 90 percent capacity. These dimensions are drawn from UCLA Library’s internal collection surveys.

25. Jacob Nadal, “Developing a Preservation Program for the UCLA Library,” Archival Products News 16, no. 1 (2009), www.archival.com/newsletters/apnewsvol16no1.pdf.

26. International Standards Organization, ISO 5626:1993, Paper—Determination of folding endurance (Geneva: 2003).

27. The adhesive used in Post-it notes is described in Spencer Ferguson Silver, “Acrylate Copolymer Microspheres,” US Patent 3691140. This patent describes a method of manufacture, but not the performance characteristics of the resulting adhesive. Information about the peel strength is inferred from John A. Miller, “Repositionable Adhesive Tape,” US Patent 5389438, February 14, 1995.

28. UCLA buys replacement copies listed by their booksellers as “Very Good” or better and with no defects noted. To date, we have used every one of the more than one hundred books purchased, with only two requiring minor stabilization treatments. See the Independent Online Booksellers Association Book Condition Definitions (www.ioba.org/pages/resources/condition-definitions/) for a listing and discussion of bookseller’s terminology.

29. One of the critical issues latent in preservation decision-making is the research library’s role in the maintenance of material culture outside of its dedicated special collections. We have two working assumptions in this area. One is that within the library staff, the preservation and conservation staff is the best-equipped to evaluate the material significance of the library’s artifacts. The other is that the severely damaged materials in preservation review are of little or no value to the study of the material culture of the book. Of course, severely damaged books are of value as a study collection for preservation and conservation, arguably a related field to material culture studies, but in this regard their presence in preservation review means they are accessible to the scholarly community that needs to interrogate them.

30. Yano, Shen, and Chan, 11.

31. Because of restrictions on providing full-view access to in-copyright materials in the HathiTrust, the copy in preservation review is sometimes placed in an enclosure and transferred to the SRLF, in lieu of withdrawal, until the emergence of a consistent means of managing full-view access for in-copyright materials in HathiTrust.

32. Yano, Shen, and Chan, 33–34.

33. In this instance, a perfect copy retains all of its contents so that it could be used to create a usable digital version. Perfect copies are assumed to be subject to the normal decay that affects all cellulosic materials, a problem preservation efforts guard against through preventative treatment such as deacidification and environmental controls.

34. These data were collected by the Persistence Policy Implementation Task Force, on which Jacob Nadal served as a member, in the course of developing replacement guidelines to support the UC Libraries Persistence Policy. The Persistence Policy and related reports are available at http://libraries.universityofcalifornia.edu/cdc/documents.

35. Chris Anderson, “The Long Tail,” Wired, October 2004, http://archive.wired.com/wired/archive/12.10/tail.html, and Chris Anderson, The Long Tail: Why the Future of Business Is Selling Less of More (New York: Hyperion, 2006).

36. University of California Libraries, Collection Development Committee website, http://libraries.universityofcalifornia.edu/cdc.

37. UCLA Library, Mission Statement, www.library.ucla.edu/about.

38. Brian Lavoie, Constance Malpas, and J. D. Shipengrover, Print Management at “Mega-scale”: A Regional Perspective on Print Book Collections in North America (Dublin, OH: OCLC Research, 2012), www.oclc.org/research/publications/library/2012/2012-05.pdf.

39. See, for example, WEST: Western Regional Storage Trust (www.cdlib.org/west) and Ithaka S+R, What to Withdraw (www.ithaka.org/ithaka-s-r/research/what-to-withdraw).

40. See, for example, Heather Christenson, “HathiTrust: Sharing a Federal Print Repository: Issues and Opportunities” (www.hathitrust.org/documents/HathiTrust-FLICC-201105.ppt); Robert Darnton, “Can We Create a National Digital Library?” in The New York Review of Books, October 28, 2010, www.nybooks.com/articles/archives/2010/oct/28/can-we-create-national-digital-library; and Brewster Kahle, “Why Preserve Books? The New Physical Archive of the Internet Archive,” in Internet Archive Blogs, June 6, 2011, http://blog.archive.org/2011/06/06/why-preserve-books-the-new-physical-archive-of-the-internet-archive.

41. It is worth noting that what we propose is, in effect, the same model as the LOCKSS project, only for print rather than digital resources. It is no mere coincidence that LOCKSS networks become robust when there are ten or more LOCKSS appliances in the network. More information on LOCKSS is available at: http://lockss.stanford.edu.

42. WorldShare Collection Analysis, www.oclc.org/collectionanalysis.