Somewhere beyond the edge of our solar system, hurtling into interstellar space, is a phonograph and a golden record with hieroglyphic instructions on the sleeve. They are attached to the Voyager 2 space probe, launched in 1977 to transmit photographs and data back to us from the outer planets in our solar system. Now that it has flown by Neptune and its thrilling scientific mission is over, it serves as an interplanetary calling card from us to any spacefaring extraterrestrial that might snag it.

The astronomer Carl Sagan was the record producer, and he chose sights and sounds that captured our species and its accomplishments. He included greetings in fifty-five human languages and one “whale language,” a twelve-minute sound essay made up of a baby’s cry, a kiss, and an EEG record of the meditations of a woman in love, and ninety minutes of music sampled from the world’s idioms: Mexican mariachi, Peruvian panpipes, Indian raga, a Navajo night chant, a Pygmy girl’s initiation song, a Japanese shakuhachi piece, Bach, Beethoven, Mozart, Stravinsky, Louis Armstrong, and Chuck Berry singing “Johnny B. Goode.”

The disk also bore a message of peace from our species to the cosmos. In an unintended bit of black comedy, the message was recited by the secretary-general of the United Nations at the time, Kurt Waldheim. Years later historians discovered that Waldheim had spent World War II as an intelligence officer in a German army unit that carried out brutal reprisals against Balkan partisans and deported the Jewish population of Salonika to Nazi death camps. It is too late to call Voyager back, and this mordant joke on us will circle the center of the Milky Way galaxy forever.

The Voyager phonograph record, in any case, was a fine idea, if only because of the questions it raised. Are we alone? If not, do alien life forms have the intelligence and the desire to develop space travel? If so, would they interpret the sounds and images as we intended, or would they hear the voice as the whine of a modem and see the line drawings of people on the cover as showing a race of wire frames? If they understood it, how would they respond? By ignoring us? By coming over to enslave us or eat us? Or by starting an interplanetary dialogue? In a Saturday Night Live skit, the long-awaited reply from outer space was “Send more Chuck Berry.”

These are not just questions for late-night dorm-room bull sessions. In the early 1990s NASA allocated a hundred million dollars to a ten-year Search for Extraterrestrial Intelligence (SETI). Scientists were to listen with radio antennas for signals that could have come only from intelligent extraterrestrials. Predictably, some congressmen objected. One said it was a waste of federal money “to look for little green men with mis-shapen heads.” To minimize the “giggle factor,” NASA renamed the project the High-Resolution Microwave Survey, but it was too late to save the project from the congressional ax. Currently it is funded by donations from private sources, including Steven Spielberg.

The opposition to SETI came not just from the know-nothings but from some of the world’s most distinguished biologists. Why did they join the discussion? SETI depends on assumptions from evolutionary theory, not just astronomy—in particular, about the evolution of intelligence. Is intelligence inevitable, or was it a fluke? At a famous conference in 1961, the astronomer and SETI enthusiast Frank Drake noted that the number of extraterrestrial civilizations that might contact us can be estimated with the following formula:

(1) (The number of stars in the galaxy) ×

(2) (The fraction of stars with planets) ×

(3) (The number of planets per solar system with a life-supporting environment) ×

(4) (The fraction of these planets on which life actually appears) ×

(5) (The fraction of life-bearing planets on which intelligence emerges) ×

(6) (The fraction of intelligent societies willing and able to communicate with other worlds) ×

(7) (The longevity of each technology in the communicative state).

The astronomers, physicists, and engineers at the conference felt unable to estimate factor (6) without a sociologist or a historian. But they felt confident in estimating factor (5), the proportion of life-bearing planets on which intelligence emerges. They decided it was one hundred percent.
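Because the formula is a plain product of seven factors, it is easy to see how much rides on factor (5). The sketch below is only an illustration: the function name `drake_estimate` is hypothetical, and every numeric value is a placeholder invented for the example, not a figure from the conference or from any measurement.

```python
from math import prod

def drake_estimate(factors):
    """Multiply the seven factors of the formula, in the order listed."""
    if len(factors) != 7:
        raise ValueError("expected exactly seven factors")
    return prod(factors)

# Hypothetical placeholder values, chosen only for illustration.
factors = [
    1e11,   # (1) stars in the galaxy
    0.5,    # (2) fraction of stars with planets
    1.0,    # (3) life-supporting planets per solar system
    0.1,    # (4) fraction of those planets on which life appears
    1.0,    # (5) fraction on which intelligence emerges (the conference's 100%)
    0.1,    # (6) fraction willing and able to communicate
    1e-6,   # (7) longevity term, in arbitrary units
]

baseline = drake_estimate(factors)

# Same inputs, but with factor (5) set to one in a million instead of one.
skeptical = factors.copy()
skeptical[4] = 1e-6
reduced = drake_estimate(skeptical)

print(baseline, reduced, reduced / baseline)
```

Whatever values are plugged into the other six slots, the estimate scales in direct proportion to factor (5): cutting that factor to one in a million cuts the result by the same ratio.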

Finding intelligent life elsewhere in the cosmos would be the most exciting discovery in human history. So why are the biologists being such grinches? It is because they sense that the SETI enthusiasts are reasoning from a pre-scientific folk belief. Centuries-old religious dogma, the Victorian ideal of progress, and modern secular humanism all lead people to misunderstand evolution as an internal yearning or unfolding toward greater complexity, climaxing in the appearance of man. The pressure builds up, and intelligence emerges like popcorn in a pan.

The religious doctrine was called the Great Chain of Being—amoeba to monkey to man—and even today many scientists thoughtlessly use words like “higher” and “lower” life forms and the evolutionary “scale” and “ladder.” The parade of primates, from gangly-armed gibbon through stoop-shouldered caveman to upright modern man, has become an icon of pop culture, and we all understand what someone means when she says she turned down a date because the guy is not very evolved. In science fiction like H. G. Wells’ The Time Machine, episodes of Star Trek, and stories from Boy’s Life, the momentum is extrapolated to our descendants, shown as bald, varicose-veined, bulbous-brained, spindly-bodied homunculi. In The Planet of the Apes and other stories, after we have blown ourselves to smithereens or choked in our pollutants, apes or dolphins rise to the occasion and take on our mantle.

Drake expressed these assumptions in a letter to Science defending SETI against the eminent biologist Ernst Mayr. Mayr had noted that only one of the fifty million species on earth had developed civilizations, so the probability that life on a given planet would include an intelligent species might very well be small. Drake replied:

The first species to develop intelligent civilizations will discover that it is the only such species. Should it be surprised? Someone must be first, and being first says nothing about how many other species had or have the potential to evolve into intelligent civilizations, or may do so in the future. … Similarly, among many civilizations, one will be the first, and temporarily the only one, to develop electronic technology. How else could it be? The evidence does suggest that planetary systems need to exist in sufficiently benign circumstances for a few billion years for a technology-using species to evolve.

To see why this thinking runs so afoul of the modern theory of evolution, consider an analogy. The human brain is an exquisitely complex organ that evolved only once. The elephant’s trunk, which can stack logs, uproot trees, pick up a dime, remove thorns, powder the elephant with dust, siphon water, serve as a snorkel, and scribble with a pencil, is another complex organ that evolved only once. The brain and the trunk are products of the same evolutionary force, natural selection. Imagine an astronomer on the Planet of the Elephants defending SETT, the Search for Extraterrestrial Trunks:

The first species to develop a trunk will discover that it is the only such species. Should it be surprised? Someone must be first, and being first says nothing about how many other species had or have the potential to evolve trunks, or may do so in the future. … Similarly, among many trunk-bearing species, one will be the first, and temporarily the only one, to powder itself with dust. The evidence does suggest that planetary systems need to exist in sufficiently benign circumstances for a few billion years for a trunk-using species to evolve.…

This reasoning strikes us as cockeyed because the elephant is assuming that evolution did not just produce the trunk in a species on this planet but was striving to produce it in some lucky species, each waiting and hoping. The elephant is merely “the first,” and “temporarily” the only one; other species have “the potential,” though a few billion years will have to pass for the potential to be realized. Of course, we are not chauvinistic about trunks, so we can see that trunks evolved, but not because a rising tide made it inevitable. Thanks to fortuitous preconditions in the elephants’ ancestors (large size and certain kinds of nostrils and lips), certain selective forces (the problems posed by lifting and lowering a huge head), and luck, the trunk evolved as a workable solution for those organisms at that time. Other animals did not and will not evolve trunks because in their bodies and circumstances it is of no great help. Could it happen again, here or elsewhere? It could, but the proportion of planets on which the necessary hand has been dealt in a given period of time is presumably small. Certainly it is less than one hundred percent.

We are chauvinistic about our brains, thinking them to be the goal of evolution. And that makes no sense, for reasons articulated over the years by Stephen Jay Gould. First, natural selection does nothing even close to striving for intelligence. The process is driven by differences in the survival and reproduction rates of replicating organisms in a particular environment. Over time the organisms acquire designs that adapt them for survival and reproduction in that environment, period; nothing pulls them in any direction other than success there and then. When an organism moves to a new environment, its lineage adapts accordingly, but the organisms who stayed behind in the original environment can prosper unchanged. Life is a densely branching bush, not a scale or a ladder, and living organisms are at the tips of the branches, not on lower rungs. Every organism alive today has had the same amount of time to evolve since the origin of life—the amoeba, the platypus, the rhesus macaque, and, yes, Larry on the answering machine asking for another date.

But, a SETI fan might ask, isn’t it true that animals become more complex over time? And wouldn’t intelligence be the culmination? In many lineages, of course, animals have become more complex. Life began simple, so the complexity of the most complex creature alive on earth at any time has to increase over the eons. But in many lineages they have not. The organisms reach an optimum and stay put, often for hundreds of millions of years. And those that do become more complex don’t always become smarter. They become bigger, or faster, or more poisonous, or more fecund, or more sensitive to smells and sounds, or able to fly higher and farther, or better at building nests or dams—whatever works for them. Evolution is about ends, not means; becoming smart is just one option.

Still, isn’t it inevitable that many organisms would take the route to intelligence? Often different lineages converge on a solution, like the forty different groups of animals that evolved complex designs for eyes. Presumably you can’t be too rich, too thin, or too smart. Why wouldn’t humanlike intelligence be a solution that many organisms, on this planet and elsewhere, might converge on?

Evolution could indeed have converged on humanlike intelligence several times, and perhaps that point could be developed to justify SETI. But in calculating the odds, it is not enough to think about how great it is to be smart. In evolutionary theory, that kind of reasoning merits the accusation that conservatives are always hurling at liberals: they specify a benefit but neglect to factor in the costs. Organisms don’t evolve toward every imaginable advantage. If they did, every creature would be faster than a speeding bullet, more powerful than a locomotive, and able to leap tall buildings in a single bound. An organism that devotes some of its matter and energy to one organ must take it away from another. It must have thinner bones or less muscle or fewer eggs. Organs evolve only when their benefits outweigh their costs.

Do you have a Personal Digital Assistant, like the Apple Newton? These are the hand-held devices that recognize handwriting, store phone numbers, edit text, send faxes, keep schedules, and perform many other feats. They are marvels of engineering and can organize a busy life. But I don’t have one, though I am a gadget-lover. Whenever I am tempted to buy a PDA, four things dissuade me. First, they are bulky. Second, they need batteries. Third, they take time to learn to use. Fourth, their sophistication makes simple tasks, like looking up a phone number, slow and cumbersome. I get by with a notebook and a fountain pen.

The same disadvantages would face any creature pondering whether to evolve a humanlike brain. First, the brain is bulky. The female pelvis barely accommodates a baby’s outsize head. That design compromise kills many women during childbirth and requires a pivoting gait that makes women biomechanically less efficient walkers than men. Also, a heavy head bobbing around on a neck makes us more vulnerable to fatal injuries in accidents such as falls. Second, the brain needs energy. Neural tissue is metabolically greedy; our brains take up only two percent of our body weight but consume twenty percent of our energy and nutrients. Third, brains take time to learn to use. We spend much of our lives either being children or caring for children. Fourth, simple tasks can be slow. My first graduate advisor was a mathematical psychologist who wanted to model the transmission of information in the brain by measuring reaction times to loud tones. Theoretically, the neuron-to-neuron transmission times should have added up to a few milliseconds. But there were seventy-five milliseconds unaccounted for between stimulus and response—“There’s all this cogitation going on, and we just want him to push his finger down,” my advisor grumbled. Lower-tech animals can be much quicker; some insects can bite in less than a millisecond. Perhaps this answers the rhetorical question in the sporting equipment ad: The average man’s IQ is 107. The average brown trout’s IQ is 4. So why can’t a man catch a brown trout?

Intelligence isn’t for everyone, any more than a trunk is, and this should give SETI enthusiasts pause. But I am not arguing against the search for extraterrestrial intelligence; my topic is terrestrial intelligence. The fallacy that intelligence is some exalted ambition of evolution is part of the same fallacy that treats it as a divine essence or wonder tissue or all-encompassing mathematical principle. The mind is an organ, a biological gadget. We have our minds because their design attains outcomes whose benefits outweighed the costs in the lives of Plio-Pleistocene African primates. To understand ourselves, we need to know the how, why, where, and when of this episode in history. They are the subject of this chapter.

One evolutionary biologist has made a prediction about extraterrestrial life—not to help us look for life on other planets, but to help us understand life on this planet. Richard Dawkins has ventured that life, anywhere it is found in the universe, will be a product of Darwinian natural selection. That may seem like the most overreaching prognosis ever made from an armchair, but in fact it is a straightforward consequence of the argument for the theory of natural selection. Natural selection is the only explanation we have of how complex life can evolve, putting aside the question of how it did evolve. If Dawkins is right, as I think he is, natural selection is indispensable to understanding the human mind. If it is the only explanation of the evolution of little green men, it certainly is the only explanation of the evolution of big brown and beige ones.

The theory of natural selection—like the other foundation of this book, the computational theory of mind—has an odd status in modern intellectual life. Within its home discipline, it is indispensable, explaining thousands of discoveries in a coherent framework and constantly inspiring new ones. But outside its home, it is misunderstood and reviled. As in Chapter 2, I want to spell out the case for this foundational idea: how it explains a key mystery that its alternatives cannot explain, how it has been verified in the lab and the field, and why some famous arguments against it are wrong.

Natural selection has a special place in science because it alone explains what makes life special. Life fascinates us because of its adaptive complexity or complex design. Living things are not just pretty bits of bric-a-brac, but do amazing things. They fly, or swim, or see, or digest food, or catch prey, or manufacture honey or silk or wood or poison. These are rare accomplishments, beyond the means of puddles, rocks, clouds, and other nonliving things. We would call a heap of extraterrestrial matter “life” only if it achieved comparable feats.

Rare accomplishments come from special structures. Animals can see and rocks can’t because animals have eyes, and eyes have precise arrangements of unusual materials capable of forming an image: a cornea that focuses light, a lens that adjusts the focus to the object’s depth, an iris that opens and closes to let in the right amount of light, a sphere of transparent jelly that maintains the eye’s shape, a retina at the focal plane of the lens, muscles that aim the eyes up-and-down, side-to-side, and in-and-out, rods and cones that transduce light into neural signals, and more, all exquisitely shaped and arranged. The odds are mind-bogglingly stacked against these structures’ being assembled out of raw materials by tornados, landslides, waterfalls, or the lightning bolt vaporizing swamp goo in the philosopher’s thought experiment.

The eye has so many parts, arranged so precisely, that it appears to have been designed in advance with the goal of putting together something that sees. The same is true for our other organs. Our joints are lubricated to pivot smoothly, our teeth meet to shear and grind, our hearts pump blood—every organ seems to have been designed with a function in mind. One of the reasons God was invented was to be the mind that formed and executed life’s plans. The laws of the world work forwards, not backwards: rain causes the ground to be wet; the ground’s benefiting from being wet cannot cause the rain. What else but the plans of God could effect the teleology (goal-directedness) of life on earth?

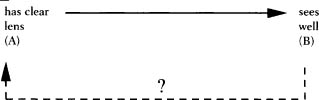

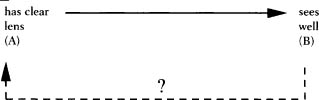

Darwin showed what else. He identified a forward-causation physical process that mimics the paradoxical appearance of backward causation or teleology. The trick is replication. A replicator is something that can make a copy of itself, with most of its traits duplicated in the copy, including the ability to replicate in turn. Consider two states of affairs, A and B. B can’t cause A if A comes first. (Seeing well can’t cause an eye to have a clear lens.)

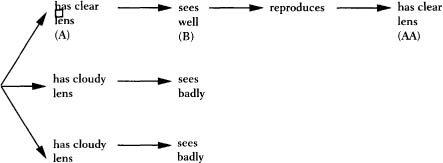

But let’s say that A causes B, and B in turn causes the protagonist of A to make a copy of itself—let’s call it AA. AA looks just like A, so it appears as if B has caused A. But it hasn’t; it has only caused AA, the copy of A. Suppose there are three animals, two with a cloudy lens, one with a clear lens. Having a clear lens (A) causes an eye to see well (B); seeing well causes the animal to reproduce by helping it avoid predators and find mates. The offspring (AA) have clear lenses and can see well, too. It looks as if the offspring have eyes so that they can see well (bad, teleological, backward causation), but that’s an illusion. The offspring have eyes because their parents’ eyes did see well (good, ordinary, forward causation). Their eyes look like their parents’ eyes, so it’s easy to mistake what happened for backward causation.

There’s more to an eye than a clear lens, but the special power of a replicator is that its copies can replicate, too. Consider what happens when the clear-lensed daughter of our hypothetical animal reproduces. Some of her offspring will have rounder eyeballs than others, and the round-eyed versions see better because the images are focused from center to edge. Better vision leads to better reproduction, and the next generation has both clear lenses and round eyeballs. They, too, are replicators, and the sharper-visioned of their offspring are more likely to leave a new generation with sharp vision, and so on. In every generation, the traits that lead to good vision are disproportionately passed down to the next generation. That is why a late generation of replicators will have traits that seem to have been designed by an intelligent engineer (see figure on page 158).

I have introduced Darwin’s theory in an unorthodox way that highlights its extraordinary contribution: explaining the appearance of design without a designer, using ordinary forward causation as it applies to replicators. The full story runs as follows. In the beginning was a replicator. This molecule or crystal was a product not of natural selection but of the laws of physics and chemistry. (If it were a product of selection, we would have an infinite regress.) Replicators are wont to multiply, and a single one multiplying unchecked would fill the universe with its great-great-great-…-great-grandcopies. But replicators use up materials to make their copies and energy to power the replication. The world is finite, so the replicators will compete for its resources. Because no copying process is one hundred percent perfect, errors will crop up, and not all of the daughters will be exact duplicates. Most of the copying errors will be changes for the worse, causing a less efficient uptake of energy and materials or a slower rate or lower probability of replication. But by dumb luck a few errors will be changes for the better, and the replicators bearing them will proliferate over the generations. Their descendants will accumulate any subsequent errors that are changes for the better, including ones that assemble protective covers and supports, manipulators, catalysts for useful chemical reactions, and other features of what we call bodies. The resulting replicator with its apparently well-engineered body is what we call an organism.
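The story in the preceding paragraph can be played out as a toy simulation. This is a minimal sketch under loud assumptions, not a model of real chemistry or biology: each replicator is reduced to a single number (its replication efficiency), the finite world is a fixed `capacity`, and the parameter names and values (`error_rate`, `harmful_share`, `step`) are all hypothetical.

```python
import random

def simulate(generations=300, capacity=500, error_rate=0.02,
             harmful_share=0.9, step=0.1, seed=0):
    """Return the mean replication efficiency recorded at each generation."""
    rng = random.Random(seed)
    population = [1.0] * capacity
    history = [1.0]
    for _ in range(generations):
        # Finite world: the next generation has room for only `capacity`
        # copies, and a replicator's chance of being copied is proportional
        # to its efficiency.
        parents = rng.choices(population, weights=population, k=capacity)
        population = []
        for efficiency in parents:
            if rng.random() < error_rate:            # imperfect copy
                if rng.random() < harmful_share:     # most errors hurt
                    efficiency *= 1 - step
                else:                                # a few help by luck
                    efficiency *= 1 + step
            population.append(efficiency)
        history.append(sum(population) / capacity)
    return history

with_lucky_errors = simulate()
only_harmful_errors = simulate(harmful_share=1.0)
print(with_lucky_errors[-1], only_harmful_errors[-1])
```

The second run removes the rare helpful errors, so no replicator can ever exceed the starting efficiency. Comparing the two runs shows that the improvement in the first comes from the few lucky errors being copied more often, not from any tendency of errors to be helpful.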

Natural selection is not the only process that changes organisms over time. But it is the only process that seemingly designs organisms over time. Dawkins stuck out his neck about extraterrestrial evolution because he reviewed every alternative to selection that has been proposed in the history of biology and showed that they are impotent to explain the signature of life, complex design.

The folk theory that organisms respond to an urge to unfold into more complex and adaptive forms obviously won’t do. The urge—and, more important, the power to achieve its ambitions—is a bit of magic that is left unexplained.

The two principles that have come to be associated with Darwin’s predecessor Jean Baptiste Lamarck—use and disuse, and the inheritance of acquired characteristics—are also not up to the job. The problem goes beyond the many demonstrations that Lamarck was wrong in fact. (For example, if acquired traits really could be inherited, several hundred generations of circumcision should have caused Jewish boys today to be born without foreskins.) The deeper problem is that the theory would not be able to explain adaptive complexity even if it had turned out to be correct. First, using an organ does not, by itself, make the organ function better. The photons passing through a lens do not somehow wash it clear, and using a machine does not improve it but wears it out. Now, many parts of organisms do adjust adaptively to use: exercised muscle bulks up, rubbed skin thickens, sunlit skin darkens, rewarded acts increase and punished ones decrease. But these responses are themselves part of the evolved design of the organism, and we need to explain how they arose: no law of physics or chemistry makes rubbed things thicken or illuminated surfaces darken. The inheritance of acquired characteristics is even worse, for most acquired characteristics are cuts, scrapes, scars, decay, weathering, and other assaults by the pitiless world, not improvements. And even if a blow did lead to an improvement, it is mysterious how the size and shape of the helpful wound could be read off the affected flesh and encoded back into DNA instructions in the sperm or egg.

Yet another failed theory is the one that invokes the macromutation: a mammoth copying error that begets a new kind of adapted organism in one fell swoop. The problem here is that the laws of probability astronomically militate against a large random copying error creating a complex functioning organ like the eye out of homogeneous flesh. Small random errors, in contrast, can make an organ a bit more like an eye, as in our example where an imaginable mutation might make a lens a tiny bit clearer or an eyeball a tiny bit rounder. Indeed, way before our scenario begins, a long sequence of small mutations must have accumulated to give the organism an eye at all. By looking at organisms with simpler eyes, Darwin reconstructed how that could have happened. A few mutations made a patch of skin cells light-sensitive, a few more made the underlying tissue opaque, others deepened it into a cup and then a spherical hollow. Subsequent mutations added a thin translucent cover, which subsequently was thickened into a lens, and so on. Each step offered a small improvement in vision. Each mutation was improbable, but not astronomically so. The entire sequence was not astronomically impossible because the mutations were not dealt all at once like a big gin rummy hand; each beneficial mutation was added to a set of prior ones that had been selected over the eons.
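The gin rummy point is at bottom a piece of arithmetic, and a rough sketch makes the gap concrete. The numbers here are made up for illustration, and the model is deliberately crude: it assumes the small steps are independent, that each has the same chance `p` of arising in a generation, and that selection keeps a step once it has arisen.

```python
from fractions import Fraction

def all_at_once(p, steps):
    """Chance that `steps` independent improvements arise in one event."""
    return p ** steps

def expected_wait_stepwise(p, steps):
    """Expected generations when each improvement is kept once it arises.

    Each step is a geometric wait with mean 1/p, and the waits add up.
    """
    return steps / p

p = Fraction(1, 1000)   # hypothetical chance per generation of one small step
steps = 20              # hypothetical number of small steps to the organ

single_event = all_at_once(p, steps)
stepwise_wait = expected_wait_stepwise(p, steps)
print(float(single_event), float(stepwise_wait))
```

Under these assumed numbers the whole hand dealt at once has a chance of one in 10^60, while the step-by-step route is expected to take about 20,000 generations. The exact figures mean nothing; the contrast between multiplying the odds and adding the waits is the point.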

A fourth alternative is random genetic drift. Beneficial traits are beneficial only on average. Actual creatures suffer the slings and arrows of outrageous fortune. When the number of individuals in a generation is small enough, an advantageous trait can vanish if its bearers are unlucky, and a disadvantageous or neutral one can take over if its bearers are lucky. Genetic drift can, in principle, explain why a population has a simple trait, like being dark or light, or an inconsequential trait, like the sequence of DNA bases in a part of the chromosome that doesn’t do anything. But because of its very randomness, random drift cannot explain the appearance of an improbable, useful trait like an ability to see or fly. The required organs need hundreds or thousands of parts to work, and the odds are astronomically stacked against the required genes accumulating by sheer chance.
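The claim that an advantageous trait can vanish by bad luck when a generation is small can be checked with a short simulation. This is a sketch only: it assumes a fixed population size, a single trait carried by half the individuals at the start, and a hypothetical ten percent reproductive `advantage`; the function name and all parameter values are invented for the example.

```python
import random

def loss_probability(size, advantage=0.1, trials=200, seed=0):
    """Estimate how often an advantageous trait vanishes by chance.

    Each generation, `size` offspring are drawn; each one carries the trait
    with probability equal to the trait's fitness-weighted share of the
    parent generation.
    """
    rng = random.Random(seed)
    lost = 0
    for _ in range(trials):
        carriers = size // 2            # start with half the population
        while 0 < carriers < size:      # run until the trait is lost or fixed
            weighted = carriers * (1 + advantage)
            share = weighted / (weighted + (size - carriers))
            carriers = sum(rng.random() < share for _ in range(size))
        if carriers == 0:
            lost += 1
    return lost / trials

small = loss_probability(size=10)
large = loss_probability(size=200)
print(small, large)
```

In the small population the beneficial trait is lost in a sizable fraction of runs, whereas in the larger one the same advantage almost always carries it through. Drift of this kind shuffles which simple traits a population ends up with; nothing in the procedure assembles a many-part organ.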

Dawkins’ argument about extraterrestrial life is a timeless claim about the logic of evolutionary theories, about the power of an explanans to cause the explanandum. And indeed his argument works against two subsequent challenges. One is a variant of Lamarckism called directed or adaptive mutation. Wouldn’t it be nice if an organism could react to an environmental challenge with a slew of new mutations, and not wasteful, random ones, but mutations for traits that would allow it to cope? Of course it would be nice, and that’s the problem—chemistry has no sense of niceness. The DNA inside the testes and ovaries cannot peer outside and considerately mutate to make fur when it’s cold and fins when it’s wet and claws when there are trees around, or to put a lens in front of the retina as opposed to between the toes or inside the pancreas. That is why a cornerstone of evolutionary theory—indeed, a cornerstone of the scientific worldview—is that mutations are indifferent overall to the benefits they confer on the organism. They cannot be adaptive in general, though of course a tiny few can be adaptive by chance. The periodic announcements of discoveries of “adaptive mutations” inevitably turn out to be laboratory curiosities or artifacts. No mechanism short of a guardian angel can guide mutations to respond to organisms’ needs in general, there being billions of kinds of organisms, each with thousands of needs.

The other challenge comes from the fans of a new field called the theory of complexity. The theory looks for mathematical principles of order underlying many complex systems: galaxies, crystals, weather systems, cells, organisms, brains, ecosystems, societies, and so on. Dozens of new books have applied these ideas to topics such as AIDS, urban decay, the Bosnian war, and, of course, the stock market. Stuart Kauffman, one of the movement’s leaders, suggested that feats like self-organization, order, stability, and coherence may be an “innate property of some complex systems.” Evolution, he suggests, may be a “marriage of selection and self-organization.”

Complexity theory raises interesting issues. Natural selection presupposes that a replicator arose somehow, and complexity theory might help explain the “somehow.” Complexity theory might also pitch in to explain other assumptions. Each body has to hang together long enough to function rather than fly apart or melt into a puddle. And for evolution to happen at all, mutations have to change a body enough to make a difference in its functioning but not so much as to bring it to a chaotic crash. If there are abstract principles that govern whether a web of interacting parts (molecules, genes, cells) has such properties, natural selection would have to work within those principles, just as it works within other constraints of physics and mathematics like the Pythagorean theorem and the law of gravitation.

But many readers have gone much further and concluded that natural selection is now trivial or obsolete, or at best of unknown importance. (Incidentally, the pioneers of complexity theory themselves, such as Kauffman and Murray Gell-Mann, are appalled by that extrapolation.) This letter to the New York Times Book Review is a typical example:

Thanks to recent advances in nonlinear dynamics, nonequilibrium thermodynamics and other disciplines at the boundary between biology and physics, there is every reason to believe that the origin and evolution of life will eventually be placed on a firm scientific footing. As we approach the 21st century, those other two great 19th century prophets—Marx and Freud—have finally been deposed from their pedestals. It is high time we freed the evolutionary debate from the anachronistic and unscientific thrall of Darwin worship as well.

The letter-writer must have reasoned as follows: complexity has always been treated as a fingerprint of natural selection, but now it can be explained by complexity theory; therefore natural selection is obsolete. But the reasoning is based on a pun. The “complexity” that so impresses biologists is not just any old order or stability. Organisms are not just cohesive blobs or pretty spirals or orderly grids. They are machines, and their “complexity” is functional, adaptive design: complexity in the service of accomplishing some interesting outcome. The digestive tract is not just patterned; it is patterned as a factory line for extracting nutrients from ingested tissues. No set of equations applicable to everything from galaxies to Bosnia can explain why teeth are found in the mouth rather than in the ear. And since organisms are collections of digestive tracts, eyes, and other systems organized to attain goals, general laws of complex systems will not suffice. Matter simply does not have an innate tendency to organize itself into broccoli, wombats, and ladybugs. Natural selection remains the only theory that explains how adaptive complexity, not just any old complexity, can arise, because it is the only nonmiraculous, forward-direction theory in which how well something works plays a causal role in how it came to be.

Because there are no alternatives, we would almost have to accept natural selection as the explanation of life on this planet even if there were no evidence for it. Thankfully, the evidence is overwhelming. I don’t just mean evidence that life evolved (which is way beyond reasonable doubt, creationists notwithstanding), but that it evolved by natural selection. Darwin himself pointed to the power of selective breeding, a direct analogue of natural selection, in shaping organisms. For example, the differences among dogs—Chihuahuas, greyhounds, Scotties, Saint Bernards, shar-peis—come from selective breeding of wolves for only a few thousand years. In breeding stations, laboratories, and seed company greenhouses, artificial selection has produced catalogues of wonderful new organisms befitting Dr. Seuss.

Natural selection is also readily observable in the wild. In a classic example, the white peppered moth gave way in nineteenth-century Manchester to a dark mutant form after industrial soot covered the lichen on which the moth rested, making the white form conspicuous to birds. When air pollution laws lightened the lichen in the 1950s, the then-rare white form reasserted itself. There are many other examples, perhaps the most pleasing coming from the work of Peter and Rosemary Grant. Darwin was inspired to the theory of natural selection in part by the thirteen species of finches on the Galápagos islands. They clearly were related to a species on the South American mainland, but differed from them and from one another. In particular, their beaks resembled different kinds of pliers: heavy-duty lineman’s pliers, high-leverage diagonal pliers, straight needle-nose pliers, curved needle-nose pliers, and so on. Darwin eventually reasoned that one kind of bird was blown to the islands and then differentiated into the thirteen species because of the demands of different ways of life on different parts of the islands, such as stripping bark from trees to get at insects, probing cactus flowers, or cracking tough seeds. But he despaired of ever seeing natural selection happen in real time: “We see nothing of these slow changes in progress, until the hand of time has marked the lapse of ages.” The Grants painstakingly measured the size and toughness of the seeds in different parts of the Galápagos at different times of the year, the length of the finches’ beaks, the time they took to crack the seeds, the numbers and ages of the finches in different parts of the islands, and so on—every variable relevant to natural selection. Their measurements showed the beaks evolving to track changes in the availability of different kinds of seeds, a frame-by-frame analysis of the movie that Darwin could only imagine. Selection in action is even more dramatic among faster-breeding organisms, as the world is discovering to its peril in the case of pesticide-resistant insects, drug-resistant bacteria, and the AIDS virus in a single patient.

And two of the prerequisites of natural selection—enough variation and enough time—are there for the having. Populations of naturally living organisms maintain an enormous reservoir of genetic variation that can serve as the raw material for natural selection. And life has had more than three billion years to evolve on earth, complex life a billion years, according to a recent estimate. In The Ascent of Man, Jacob Bronowski wrote:

I remember as a young father tiptoeing to the cradle of my first daughter when she was four or five days old, and thinking, “These marvelous fingers, every joint so perfect, down to the fingernails. I could not have designed that detail in a million years.” But of course it is exactly a million years that it took me, a million years that it took mankind … to reach its present stage of evolution.

Finally, two kinds of formal modeling have shown that natural selection can work. Mathematical proofs from population genetics show how genes combining according to Gregor Mendel’s laws can change in frequency under the pressure of selection. These changes can occur impressively fast. If a mutant produces just 1 percent more offspring than its rivals, it can increase its representation in a population from 0.1 percent to 99.9 percent in just over four thousand generations. A hypothetical mouse subjected to a selection pressure for increased size that is so weak it cannot be measured could nonetheless evolve to the size of an elephant in only twelve thousand generations.
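
To get a feel for how a 1 percent edge compounds, here is a minimal sketch in Python. It is not the Mendelian model behind the figures above. It assumes the simplest possible case: an asexual (haploid) population so large that chance plays no role, with the function name and its parameters invented for illustration. Because it leaves out dominance and sexual recombination, its generation count will differ from the one quoted in the text; the point is only that a small advantage, multiplied generation after generation, carries a rare variant to near-fixation.

```python
def generations_to_spread(advantage=0.01, start=0.001, target=0.999):
    """Count generations for a favored variant to rise from `start` to `target`.

    Assumptions (not from the text): haploid inheritance, no drift,
    non-overlapping generations, constant relative advantage.
    """
    freq = start
    generations = 0
    while freq < target:
        # The variant leaves (1 + advantage) offspring for every 1 left by a rival.
        favored = freq * (1.0 + advantage)
        rivals = 1.0 - freq
        freq = favored / (favored + rivals)
        generations += 1
    return generations


if __name__ == "__main__":
    print(generations_to_spread())               # 1 percent advantage
    print(generations_to_spread(advantage=0.1))  # a stronger advantage spreads faster
```

Under these simplifying assumptions the variant takes over in roughly fourteen hundred generations, and raising the advantage shortens the wait in proportion.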

More recently, computer simulations from the new field of Artificial Life have shown the power of natural selection to evolve organisms with complex adaptations. And what better demonstration than everyone’s favorite example of a complex adaptation, the eye? The computer scientists Dan Nilsson and Susanne Pelger simulated a three-layer slab of virtual skin resembling a light-sensitive spot on a primitive organism. It was a simple sandwich made up of a layer of pigmented cells on the bottom, a layer of light-sensitive cells above it, and a layer of translucent cells forming a protective cover. The translucent cells could undergo random mutations of their refractive index: their ability to bend light, which in real life often corresponds to density. All the cells could undergo small mutations affecting their size and thickness. In the simulation, the cells in the slab were allowed to mutate randomly, and after each round of mutation the program calculated the spatial resolution of an image projected onto the slab by a nearby object. If a bout of mutations improved the resolution, the mutations were retained as the starting point for the next bout, as if the slab belonged to a lineage of organisms whose survival depended on reacting to looming predators. As in real evolution, there was no master plan or project scheduling. The organism could not put up with a less effective detector in the short run even if its patience would have been rewarded by the best conceivable detector in the long run. Every change it retained had to be an improvement.
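
The procedure just described (mutate at random, measure, keep the change only if it helps) can be sketched in a few lines of Python. This is a toy, not Nilsson and Pelger's optical model: the `resolution` function is a made-up smooth score standing in for image resolution, and `TARGET`, `evolve`, and the three numbers describing the slab are hypothetical names and values chosen for illustration.

```python
import random

# Hypothetical "best" values for three traits (say curvature, thickness,
# refractive index). The real study computed optics; this is a stand-in.
TARGET = (0.8, 0.3, 1.4)


def resolution(shape):
    """Toy score: higher is better, with a single peak at TARGET."""
    return -sum((s - t) ** 2 for s, t in zip(shape, TARGET))


def evolve(start, rounds=5000, step=0.01, seed=0):
    """Blind variation plus selective retention, with no look-ahead."""
    rng = random.Random(seed)
    current = list(start)
    best = resolution(current)
    history = [best]
    for _ in range(rounds):
        # A bout of small random mutations to every trait.
        candidate = [x + rng.uniform(-step, step) for x in current]
        score = resolution(candidate)
        # Keep the mutant only if it is an improvement right now.
        if score > best:
            current, best = candidate, score
        history.append(best)
    return current, history


if __name__ == "__main__":
    final, history = evolve(start=(0.0, 0.0, 1.0))
    print(final, history[0], history[-1])
```

Nothing in the loop knows where the peak is. The score never goes down because a worse mutant is simply discarded, which is the sense in which every retained change has to be an improvement.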

Satisfyingly, the model evolved into a complex eye right on the computer screen. The slab indented and then deepened into a cup; the transparent layer thickened to fill the cup and bulged out to form a cornea. Inside the clear filling, a spherical lens with a higher refractive index emerged in just the right place, resembling in many subtle details the excellent optical design of a fish’s eye. To estimate how long it would take in real time, rather than in computer time, for an eye to unfold, Nilsson and Pelger built in pessimistic assumptions about heritability, variation in the population, and the size of the selective advantage, and even forced the mutations to take place in only one part of the “eye” each generation. Nonetheless, the entire sequence in which flat skin became a complex eye took only four hundred thousand generations, a geological instant.

I have reviewed the modern case for the theory of natural selection because so many people are hostile to it. I don’t mean fundamentalists from the Bible Belt, but professors at America’s most distinguished universities from coast to coast. Time and again I have heard the objections: the theory is circular, what good is half an eye, how can structure arise from random mutation, there hasn’t been enough time, Gould has disproved it, complexity just emerges, physics will make it obsolete someday.

People desperately want Darwinism to be wrong. Dennett’s diagnosis in Darwin’s Dangerous Idea is that natural selection implies there is no plan to the universe, including human nature. No doubt that is a reason, though another is that people who study the mind would rather not have to think about how it evolved because it would make a hash of cherished theories. Various scholars have claimed that the mind is innately equipped with fifty thousand concepts (including “carburetor” and “trombone”), that capacity limitations prevent the human brain from solving problems that are routinely solved by bees, that language is designed for beauty rather than for use, that tribal people kill their babies to protect the ecosystem from human overpopulation, that children harbor an unconscious wish to copulate with their parents, and that people could just as easily be conditioned to enjoy the thought of their spouse being unfaithful as to be upset by the thought. When advised that these claims are evolutionarily improbable, they attack the theory of evolution rather than rethinking the claim. The efforts that academics have made to impugn Darwinism are truly remarkable.

One claim is that reverse-engineering, the attempt to discover the functions of organs (which I am arguing should be done to the human mind), is a symptom of a disease called “adaptationism.” Apparently if you believe that any aspect of an organism has a function, you absolutely must believe that every aspect has a function, that monkeys are brown to hide amongst the coconuts. The geneticist Richard Lewontin, for example, has defined adaptationism as “that approach to evolutionary studies which assumes without further proof that all aspects of the morphology, physiology and behavior of organisms are adaptive optimal solutions to problems.” Needless to say, there is no such madman. A sane person can believe that a complex organ is an adaptation, that is, a product of natural selection, while also believing that features of an organism that are not complex organs are a product of drift or a by-product of some other adaptation. Everyone acknowledges that the redness of blood was not selected for itself but is a by-product of selection for a molecule that carries oxygen, which just happens to be red. That does not imply that the ability of the eye to see could easily be a by-product of selection for something else.

There also are no benighted fools who fail to realize that animals carry baggage from their evolutionary ancestors. Readers young enough to have had sex education or old enough to be reading articles about the prostate may have noticed that the seminal ducts in men do not lead directly from the testicles to the penis but snake up into the body and pass over the ureter before coming back down. That is because the testes of our reptilian ancestors were inside their bodies. The bodies of mammals are too hot for the production of sperm, so the testes gradually descended into a scrotum. Like a gardener who snags a hose around a tree, natural selection did not have the foresight to plan the shortest route. Again, that does not mean that the entire eye could very well be useless phylogenetic baggage.

Similarly, because adaptationists believe that the laws of physics are not enough to explain the design of animals, they are also imagined to be prohibited from ever appealing to the laws of physics to explain anything. A Darwin critic once defiantly asked me, “Why has no animal evolved the ability to disappear and instantly reappear elsewhere, or to turn into King Kong at will (great for frightening predators)?” I think it is fair to say that “not being able to turn into King Kong at will” and “being able to see” call for different kinds of explanations.

Another accusation is that natural selection is a sterile exercise in after-the-fact storytelling. But if that were true, the history of biology would be a quagmire of effete speculation, with progress having to wait for today’s enlightened anti-adaptationists. Quite the opposite has happened. Mayr, the author of a definitive history of biology, wrote,

The adaptationist question, “What is the function of a given structure or organ?” has been for centuries the basis of every advance in physiology. If it had not been for the adaptationist program, we probably would still not yet know the functions of thymus, spleen, pituitary, and pineal. Harvey’s question “Why are there valves in the veins?” was a major stepping stone in his discovery of the circulation of blood.

From the shape of an organism’s body to the shape of its protein molecules, everything we have learned in biology has come from an understanding, implicit or explicit, that the organized complexity of an organism is in the service of its survival and reproduction. This includes what we have learned about the nonadaptive by-products, because they can be found only in the course of a search for the adaptations. It is the bald claim that a feature is a lucky product of drift or of some poorly understood dynamic that is untestable and post hoc.

Often I have heard it said that animals are not well engineered after all. Natural selection is hobbled by shortsightedness, the dead hand of the past, and crippling constraints on what kinds of structures are biologically and physically possible. Unlike a human engineer, selection is incapable of good design. Animals are clunking jalopies saddled with ancestral junk and occasionally blunder into barely serviceable solutions.

People are so eager to believe this claim that they seldom think it through or check the facts. Where do we find this miraculous human engineer who is not constrained by availability of parts, manufacturing practicality, and the laws of physics? Of course, natural selection does not have the foresight of engineers, but that cuts both ways: it does not have their mental blocks, impoverished imagination, or conformity to bourgeois sensibilities and ruling-class interests, either. Guided only by what works, selection can home in on brilliant, creative solutions. For millennia, biologists have discovered to their astonishment and delight the ingenious contrivances of the living world: the biomechanical perfection of cheetahs, the infrared pinhole cameras of snakes, the sonar of bats, the superglue of barnacles, the steel-strong silk of spiders, the dozens of grips of the human hand, the DNA repair machinery in all complex organisms. After all, entropy and more malevolent forces like predators and parasites are constantly gnawing at an organism’s right to life and do not forgive slapdash engineering.

And many of the examples of bad design in the animal kingdom turn out to be old spouses’ tales. Take the remark in a book by a famous cognitive psychologist that natural selection has been powerless to eliminate the wings of any bird, which is why penguins are stuck with wings even though they cannot fly. Wrong twice. The moa had no trace of a wing, and penguins do use their wings to fly—under water. Michael French makes the point in his engineering textbook using a more famous example:

It is an old joke that a camel is a horse designed by a committee, a joke which does grave injustice to a splendid creature and altogether too much honour to the creative power of committees. For a camel is no chimera, no odd collection of bits, but an elegant design of the tightest unity. So far as we can judge, every part is contrived to suit the difficult role of the whole, a large herbivorous animal to live in harsh climates with much soft going, sparse vegetation and very sparse water. The specification for a camel, if it were ever written down, would be a tough one in terms of range, fuel economy and adaptation to difficult terrains and extreme temperatures, and we must not be surprised that the design that meets it appears extreme. Nevertheless, every feature of the camel is of a piece: the large feet to diffuse load, the knobbly knees that derive from some of the design principles of Chapter 7 [bearings and pivots], the hump for storing food and the characteristic profile of the lips have a congruity that derives from function and invests the whole creation with a feeling of style and a certain bizarre elegance, borne out by the beautiful rhythms of its action at a gallop.

Obviously, evolution is constrained by the legacies of ancestors and the kinds of machinery that can be grown out of protein. Birds could not have evolved propellers, even if that had been advantageous. But many claims of biological constraints are howlers. One cognitive scientist has opined that “many properties of organisms, like symmetry, for example, do not really have anything to do with specific selection but just with the ways in which things can exist in the physical world.” In fact, most things that exist in the physical world are not symmetrical, for obvious reasons of probability: among all the possible arrangements of a volume of matter, only a tiny fraction are symmetrical. Even in the living world, the molecules of life are asymmetrical, as are livers, hearts, stomachs, flounders, snails, lobsters, oak trees, and so on. Symmetry has everything to do with selection. Organisms that move in straight lines have bilaterally symmetrical external forms because otherwise they would go in circles. Symmetry is so improbable and difficult to achieve that any disease or defect can disrupt it, and many animals size up the health of prospective mates by checking for minute asymmetries.
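
The probability argument can be checked by brute force on a miniature world. The sketch below, in Python, counts every way of coloring a small square grid black or white and asks what fraction are mirror images of themselves. The grid is an assumption introduced for illustration (the text speaks only of "arrangements of a volume of matter"), and `symmetric_fraction` is a hypothetical helper.

```python
from fractions import Fraction
from itertools import product


def symmetric_fraction(n):
    """Fraction of n-by-n black/white grids with left-right mirror symmetry."""
    total = 0
    symmetric = 0
    for cells in product((0, 1), repeat=n * n):
        rows = [cells[r * n:(r + 1) * n] for r in range(n)]
        total += 1
        # A grid is symmetric if every row reads the same in both directions.
        if all(row == row[::-1] for row in rows):
            symmetric += 1
    return Fraction(symmetric, total)


if __name__ == "__main__":
    for n in (2, 3, 4):
        print(n, symmetric_fraction(n))
```

The fraction is 1/4 for a two-by-two grid, 1/8 for three-by-three, and 1/256 for four-by-four, and it keeps shrinking as the grid grows, which is the sense in which symmetry is improbable among arbitrary arrangements.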

Gould has emphasized that natural selection has only limited freedom to alter basic body plans. Much of the plumbing, wiring, and architecture of the vertebrates, for example, has been unchanged for hundreds of millions of years. Presumably they come from embryological recipes that cannot easily be tinkered with. But the vertebrate body plan accommodates eels, cows, hummingbirds, aardvarks, ostriches, toads, gerbils, seahorses, giraffes, and blue whales. The similarities are important, but the differences are important, too! Developmental constraints only rule out broad classes of options. They cannot, by themselves, force a functioning organ to come into being. An embryological constraint like “Thou shalt grow wings” is an absurdity. The vast majority of hunks of animal flesh do not meet the stringent engineering demands of powered flight, so it is infinitesimally unlikely that the creeping and bumping cells in the microscopic layers of the developing embryo are obliged to align themselves into bones, skin, muscles, and feathers with just the right architecture to get the bird aloft—unless, of course, the developmental program had been shaped to bring about that outcome by the history of successes and failures of the whole body.

Natural selection should not be pitted against developmental, genetic, or phylogenetic constraints, as if the more important one of them is, the less important the others are. Selection versus constraints is a phony dichotomy, as crippling to clear thinking as the dichotomy between innateness and learning. Selection can only select from alternatives that are growable as carbon-based living stuff, but in the absence of selection that stuff could just as easily grow into scar tissue, scum, tumors, warts, tissue cultures, and quivering amorphous protoplasm as into functioning organs. Thus selection and constraints are both important but are answers to different questions. The question “Why does this creature have such-and-such an organ?” by itself is meaningless. It can only be asked when followed by a compared-to-what phrase. Why do birds have wings (as opposed to propellers)? Because you can’t grow a vertebrate with propellers. Why do birds have wings (as opposed to forelegs or hands or stumps)? Because selection favored ancestors of birds that could fly.

Another widespread misconception is that if an organ changed its function in the course of evolution, it did not evolve by natural selection. One discovery has been cited over and over in support of the misconception: the wings of insects were not originally used for locomotion. Like a friend-of-a-friend legend, that discovery has mutated in the retelling: wings evolved for something else but happened to be perfectly adapted for flight, and one day the insects just decided to fly with them; the evolution of insect wings refutes Darwin because they would have had to evolve gradually and half a wing is useless; the wings of birds were not originally used for locomotion (probably a misremembering of another fact, that the first feathers evolved not for flight but for insulation). All one has to do is say “the evolution of wings” and audiences will nod knowingly, completing the anti-adaptationist argument for themselves. How can anyone say that any organ was selected for its current function? Maybe it evolved for something else and the animal is only using it for that function now, like the nose holding up spectacles and all that stuff about insect wings that everyone knows about (or was it bird wings?).

Here is what you find when you check the facts. Many organs that we see today have maintained their original function. The eye was always an eye, from light-sensitive spot to image-focusing eyeball. Others changed their function. That is not a new discovery. Darwin gave many examples, such as the pectoral fins of fishes becoming the forelimbs of horses, the flippers of whales, the wings of birds, the digging claws of moles, and the arms of humans. In Darwin’s day the similarities were powerful evidence for the fact of evolution, and they still are. Darwin also cited changes in function to explain the problem of “the incipient stages of useful structures,” perennially popular among creationists. How could a complex organ gradually evolve when only the final form is usable? Most often the premise of unusability is just wrong. For example, partial eyes have partial sight, which is better than no sight at all. But sometimes the answer is that before an organ was selected to assume its current form, it was adapted for something else and then went through an intermediate stage in which it accomplished both. The delicate chain of middle-ear bones in mammals (hammer, anvil, stirrup) began as parts of the jaw hinge of reptiles. Reptiles often sense vibrations by lowering their jaws to the ground. Certain bones served both as jaw hinges and as vibration transmitters. That set the stage for the bones to specialize more and more as sound transmitters, causing them to shrink and move into their current shape and role. Darwin called the earlier forms “pre-adaptations,” though he stressed that evolution does not somehow anticipate next year’s model.

There is nothing mysterious about the evolution of birds’ wings. Half a wing will not let you soar like an eagle, but it will let you glide or parachute from trees (as many living animals do), and it will let you leap or take off in bursts while running, like a chicken trying to escape a farmer. Paleontologists disagree about which intermediate stage is best supported by the fossil and aerodynamic evidence, but there is nothing here to give comfort to a creationist or a social scientist.

The theory of the evolution of insect wings proposed by Joel Kingsolver and Mimi Koehl, far from being a refutation of adaptationism, is one of its finest moments. Small cold-blooded animals like insects struggle to regulate their temperature. Their high ratio of surface area to volume makes them heat up and cool down quickly. (That is why there are no bugs outside in cold months; winter is the best insecticide.) Perhaps the incipient wings of insects first evolved as adjustable solar panels, which soak up the sun’s energy when it is colder out and dissipate heat when it’s warmer. Using thermodynamic and aerodynamic analyses, Kingsolver and Koehl showed that proto-wings too small for flight are effective heat exchangers. The larger they grow, the more effective they become at heat regulation, though they reach a point of diminishing returns. That point is in the range of sizes in which the panels could serve as effective wings. Beyond that point, they become more and more useful for flying as they grow larger and larger, up to their present size. Natural selection could have pushed for bigger wings throughout the range from no wings to current wings, with a gradual change of function in the middle sizes.

So how did the work get garbled into the preposterous story that one day an ancient insect took off by flapping unmodified solar panels and the rest of them have been doing it ever since? Partly it is a misunderstanding of a term introduced by Gould, exaptation, which refers to the adaptation of an old organ to a new function (Darwin’s “pre-adaptation”) or the adaptation of a non-organ (bits of bone or tissue) to an organ with a function. Many readers have interpreted it as a new theory of evolution that has replaced adaptation and natural selection. It’s not. Once again, complex design is the reason. Occasionally a machine designed for a complicated, improbable task can be pressed into service to do something simpler. A book of cartoons called 101 Uses for a Dead Computer showed PCs being used as a paperweight, an aquarium, a boat anchor, and so on. The humor comes from the relegation of sophisticated technology to a humble function that cruder devices can fulfill. But there will never be a book of cartoons called 101 Uses for a Dead Paperweight showing one being used as a computer. And so it is with exaptation in the living world. On engineering grounds, the odds are against an organ designed for one purpose being usable out of the box for some other purpose, unless the new purpose is quite simple. (And even then the nervous system of the animal must often be adapted for it to find and keep the new use.) If the new function is at all difficult to accomplish, natural selection must have revamped and retrofitted the part considerably, as it did to give modern insects their wings. A housefly dodging a crazed human can decelerate from rapid flight, hover, turn in its own length, fly upside down, loop, roll, and land on the ceiling, all in less than a second. As an article entitled “The Mechanical Design of Insect Wings” notes, “Subtle details of engineering and design, which no man-made airfoil can match, reveal how insect wings are remarkably adapted to the acrobatics of flight.” The evolution of insect wings is an argument for natural selection, not against it. A change in selection pressure is not the same as no selection pressure.

Complex design lies at the heart of all these arguments, and that offers a final excuse to dismiss Darwin. Isn’t the whole idea a bit squishy? Since no one knows the number of kinds of possible organisms, how can anyone say that an infinitesimal fraction of them have eyes? Perhaps the idea is circular: the things one calls “adaptively complex” are just the things that one believes couldn’t have evolved any other way than by natural selection. As Noam Chomsky wrote,

So the thesis is that natural selection is the only physical explanation of design that fulfills a function. Taken literally, that cannot be true. Take my physical design, including the property that I have positive mass. That fulfills some function—namely, it keeps me from drifting into outer space. Plainly, it has a physical explanation which has nothing to do with natural selection. The same is true of less trivial properties, which you can construct at will. So you can’t mean what you say literally. I find it hard to impose an interpretation that doesn’t turn it into the tautology that where systems have been selected to satisfy some function, then the process is selection.

Claims about functional design, because they cannot be stated in exact numbers, do leave an opening for a skeptic, but a little thought about the magnitudes involved closes it. Selection is not invoked to explain mere usefulness; it’s invoked to explain improbable usefulness. The mass that keeps Chomsky from floating into outer space is not an improbable condition, no matter how you measure the probabilities. “Less trivial properties”—to pick an example at random, the vertebrate eye—are improbable conditions, no matter how you measure the probabilities. Take a dip net and scoop up objects from the solar system; go back to life on the planet a billion years ago and sample the organisms; take a collection of molecules and calculate all their physically possible configurations; divide the human body into a grid of one-inch cubes. Calculate the proportion of samples that have positive mass. Now calculate the proportion of samples that can form an optical image. There will be a statistically significant difference in the proportions, and it needs to be explained.

At this point the critic can say that the criterion—seeing versus not seeing—is set a posteriori, after we know what animals can do, so the probability estimates are meaningless. They are like the infinitesimal probability that I would have been dealt whatever poker hand I happened to have been dealt. Most hunks of matter cannot see, but then most hunks of matter cannot flern either, where I hereby define flern as the ability to have the exact size and shape and composition of the rock I just picked up.

Recently I visited an exhibition on spiders at the Smithsonian. As I marveled at the Swiss-watch precision of the joints, the sewing-machine motions by which it drew silk from its spinnerets, the beauty and cunning of the web, I thought to myself, “How could anyone see this and not believe in natural selection!” At that moment a woman standing next to me exclaimed, “How could anyone see this and not believe in God!” We agreed a priori on the facts that need to be explained, though we disagreed about how to explain them. Well before Darwin, theologians such as William Paley pointed to the engineering marvels of nature as proof of the existence of God. Darwin did not invent the facts to be explained, only the explanation.

But what, exactly, are we all so impressed by? Everyone might agree that the Orion constellation looks like a big guy with a belt, but that does not mean we need a special explanation of why stars align themselves into guys with belts. But the intuition that eyes and spiders show “design” and that rocks and Orion don’t can be unpacked into explicit criteria. There has to be a heterogeneous structure: the parts or aspects of an object are unpredictably different from one another. And there has to be a unity of function: the different parts are organized to cause the system to achieve some special effect—special because it is improbable for objects lacking that structure, and special because it benefits someone or something. If you can’t state the function more economically than you can describe the structure, you don’t have design. A lens is different from a diaphragm, which in turn is different from a photopigment, and no unguided physical process would deposit the three in the same object, let alone align them perfectly. But they do have something in common—all are needed for high-fidelity image formation—and that makes sense of why they are found together in an eye. For the flerning rock, in contrast, describing the structure and stating the function are one and the same. The notion of function adds nothing.

And most important, attributing adaptive complexity to natural selection is not just a recognition of design excellence, like the expensive appliances in the Museum of Modern Art. Natural selection is a falsifiable hypothesis about the origin of design and imposes onerous empirical requirements. Remember how it works: from competition among replicators. Anything that showed signs of design but did not come from a long line of replicators could not be explained by—in fact, would refute—the theory of natural selection: natural species that lacked reproductive organs, insects growing like crystals out of rocks, television sets on the moon, eyes spewing out of vents on the ocean floor, caves shaped like hotel rooms down to the details of hangers and ice buckets. Moreover, the beneficial functions all have to be in the ultimate service of reproduction. An organ can be designed for seeing or eating or mating or nursing, but it had better not be designed for the beauty of nature, the harmony of the ecosystem, or instant self-destruction. Finally, the beneficiary of the function has to be the replicator. Darwin pointed out that if horses had evolved saddles, his theory would immediately be falsified.

Rumors and folklore notwithstanding, natural selection remains the heart of explanation in biology. Organisms can be understood only as interactions among adaptations, by-products of adaptations, and noise. The by-products and noise don’t rule out the adaptations, nor do they leave us staring blankly, unable to tell them apart. It is exactly what makes organisms so fascinating—their improbable adaptive design—that calls for reverse-engineering them in the light of natural selection. The by-products and noise, because they are defined negatively as un-adaptations, also can be discovered only via reverse-engineering.

This is no less true for human intelligence. The major faculties of the mind, with their feats no robot can duplicate, show the handiwork of selection. That does not mean that every aspect of the mind is adaptive. From low-level features like the sluggishness and noisiness of neurons, to momentous activities like art, music, religion, and dreams, we should expect to find activities of the mind that are not adaptations in the biologists’ sense. But it does mean that our understanding of how the mind works will be woefully incomplete or downright wrong unless it meshes with our understanding of how the mind evolved. That is the topic of the rest of the chapter.

Why did brains evolve to start with? The answer lies in the value of information, which brains have been designed to process.

Every time you buy a newspaper, you are paying for information. Economic theorists have explained why you should: information confers a benefit that is worth paying for. Life is a choice among gambles. One turns left or right at the fork in the road, stays with Rick or leaves with Victor, knowing that neither choice guarantees fortune or happiness; the best one can do is play the odds. Stripped to its essentials, every decision in life amounts to choosing which lottery ticket to buy. Say a ticket costs $1.00 and offers a one-in-four chance of winning $10.00. On average, you will net $1.50 per play ($10.00 divided by 4 equals $2.50, minus $1.00 for the ticket). The other ticket costs $1.00 and offers a one-in-five chance of winning $12.00. On average, you will net $1.40 per play. The two kinds of tickets come in equal numbers, and neither has the odds or winnings marked on it. How much should you pay for someone to tell you which is which? You should pay up to four cents. With no information, you would have to choose at random, and you could expect to make $1.45 on average ($1.50 half the time, $1.40 half the time). If you knew which had the better average payoff, you would make an average of $1.50 each play, so even if you paid four cents you would be ahead by one cent each play.
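The lottery arithmetic can be checked in a few lines. This is only a sketch of the calculation in the paragraph above; the function and variable names are invented for illustration, and exact fractions are used so that the cents come out without rounding error.

```python
from fractions import Fraction

def expected_net(cost, win_probability, prize):
    """Average net winnings per play: expected prize minus the ticket price."""
    return win_probability * prize - cost

# The two tickets described in the text (amounts in dollars).
ticket_a = expected_net(Fraction(1), Fraction(1, 4), Fraction(10))  # $1.50
ticket_b = expected_net(Fraction(1), Fraction(1, 5), Fraction(12))  # $1.40

# With no information you pick at random, so you get the average of the two.
uninformed = (ticket_a + ticket_b) / 2                               # $1.45

# With information you always pick the better ticket.
informed = max(ticket_a, ticket_b)                                   # $1.50

# The most the information can be worth is the difference between the two.
value_of_information = informed - uninformed                         # $0.05
```

The difference is five cents per play, which is the break-even price. Paying four cents therefore leaves you one cent ahead, as the text says.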

Most organisms don’t buy lottery tickets, but they all choose between gambles every time their bodies can move in more than one way. They should be willing to “pay” for information—in tissue, energy, and time—if the cost is lower than the expected payoff in food, safety, mating opportunities, and other resources, all ultimately valuated in the expected number of surviving offspring. In multicellular animals the information is gathered and translated into profitable decisions by the nervous system.

Often, more information brings a greater reward and earns back its extra cost. If a treasure chest has been buried somewhere in your neighborhood, the single bit of information that locates it in the north or the south half is helpful, because it cuts your digging time in half. A second bit that told you which quadrant it was in would be even more useful, and so on. The more digits there are in the coordinates, the less time you will waste digging fruitlessly, so you should be willing to pay for more bits, up to the point where you are so close that further subdivision would not be worth the cost. Similarly, if you were trying to crack a combination lock, every number you bought would cut down the number of possibilities to try, and could be worth its cost in the time saved. So very often more information is better, up to a point of diminishing returns, and that is why some lineages of animals have evolved more and more complex nervous systems.
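The diminishing returns in the treasure example can be made concrete with a small sketch. It assumes, purely for illustration, that digging time is proportional to the area left to search, that each bit halves that area, and that every bit costs the same fixed amount in the same units; none of these numbers come from the text.

```python
def remaining_area(total_area, bits):
    """Area still to be searched after `bits` halvings."""
    return total_area / (2 ** bits)

def marginal_saving(total_area, bits):
    """Digging saved by the last bit bought (bit number `bits`)."""
    return remaining_area(total_area, bits - 1) - remaining_area(total_area, bits)

def bits_worth_buying(total_area, cost_per_bit):
    """Keep buying bits while the next one saves more than it costs."""
    bits = 0
    while marginal_saving(total_area, bits + 1) > cost_per_bit:
        bits += 1
    return bits
```

With a hypothetical plot of 1024 units and a price of 10 units per bit, the savings from successive bits are 512, 256, 128, 64, 32, 16, and then 8, so `bits_worth_buying(1024, 10)` stops at six bits: the seventh would cost more than the digging it saves.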

Natural selection cannot directly endow an organism with information about its environment, or with the computational networks, demons, modules, faculties, representations, or mental organs that process the information. It can only select among genes. But genes build brains, and different genes build brains that process information in different ways. The evolution of information processing has to be accomplished at the nuts-and-bolts level by selection of genes that affect the brain-assembly process.

Many kinds of genes could be the targets of selection for better information processing. Altered genes could lead to different numbers of proliferative units along the walls of the ventricles (the cavities in the center of the brain), which beget the cortical neurons making up the gray matter. Other genes could allow the proliferative units to divide for different numbers of cycles, creating different numbers and kinds of cortical areas. Axons connecting the neurons can be re-routed by shifting the chemical trails and molecular guideposts that coax the axons in particular directions. Genes can change the molecular locks and keys that encourage neurons to connect with other ones. As in the old joke about how to carve a statue of an elephant (remove all the bits that don’t look like an elephant), neural circuits can be sculpted by programming certain cells and synapses to commit suicide on cue. Neurons can become active at different points in embryogenesis, and their firing patterns, both spontaneous and programmed, can be interpreted downstream as information about how to wire together. Many of these processes interact in cascades. For example, increasing the size of one area allows it to compete better for real estate downstream. Natural selection does not care how baroque the brain-assembly process is, or how ugly the resulting brain. Modifications are evaluated strictly on how well the brain’s algorithms work in guiding the perception, thought, and action of the whole animal. By these processes, natural selection can build a better and better functioning brain.

But could the selection of random variants really improve the design of a nervous system? Or would the variants crash it, like a corrupted byte in a computer program, and the selection merely preserve the systems that do not crash? A new field of computer science called genetic algorithms has shown that Darwinian selection can create increasingly intelligent software. Genetic algorithms are programs that are duplicated to make multiple copies, though with random mutations that make each one a tiny bit different. All the copies have a go at solving a problem, and the ones that do best are allowed to reproduce to furnish the copies for the next round. But first, parts of each program are randomly mutated again, and pairs of programs have sex: each is split in two, and the halves are exchanged. After many cycles of computation, selection, mutation, and reproduction, the surviving programs are often better than anything a human programmer could have designed.
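The cycle of selection, mutation, and recombination can be sketched with a toy genetic algorithm. The example below is an illustration under stated assumptions, not a reconstruction of any particular system: the "programs" are just strings of bits, the "problem" is to have as many 1s as possible, and all names and parameter values are invented. It also carries the single best individual over unchanged each round (a common convenience called elitism, which the text does not mention) so that the best score can never slip backward.

```python
import random

def fitness(genome):
    """Score a genome: here, simply the number of 1 bits."""
    return sum(genome)

def mutate(genome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]

def crossover(mother, father, rng):
    """Split both parents at one random point and join the two halves."""
    cut = rng.randrange(1, len(mother))
    return mother[:cut] + father[cut:]

def evolve(length=30, population_size=40, generations=60, rate=0.02, seed=0):
    """Run the toy algorithm and return the best score seen each generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(population_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: population_size // 2]   # the better half breeds
        children = [population[0]]                     # elitism: keep the best
        while len(children) < population_size:
            mother, father = rng.sample(parents, 2)
            children.append(mutate(crossover(mother, father, rng), rate, rng))
        population = children
    history.append(max(fitness(genome) for genome in population))
    return history
```

Calling `evolve()` returns a list of best scores, one per generation plus the final one. Because of the elitism step the list never decreases, and in a typical run it climbs toward the maximum of 30 even though no step in the loop knows what a good genome looks like.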

More apropos of how a mind can evolve, genetic algorithms have been applied to neural networks. A network might be given inputs from simulated sense organs and outputs to simulated legs and placed in a virtual environment with scattered “food” and many other networks competing for it. The ones that get the most food leave the most copies before the next round of mutation and selection. The mutations are random changes in the connection weights, sometimes followed by sexual recombination between networks (swapping some of their connection weights). During the early iterations, the “animals”—or, as they are sometimes called, “animats”—wander randomly over the terrain, occasionally bumping into a food source. But as they evolve they come to zip directly from food source to food source. Indeed, a population of networks that is allowed to evolve innate connection weights often does better than a single neural network that is allowed to learn them. That is especially true for networks with multiple hidden layers, which complex animals, especially humans, surely have. If a network can only learn, not evolve, the environmental teaching signal gets diluted as it is propagated backward to the hidden layers and can only nudge the connection weights up and down by minuscule amounts. But if a population of networks can evolve, even if they cannot learn, mutations and recombinations can reprogram the hidden layers directly, and can catapult the network into a combination of innate connections that is much closer to the optimum. Innate structure is selected for.

Evolution and learning can also go on simultaneously, with innate structure evolving in an animal that also learns. A population of networks can be equipped with a generic learning algorithm and can be allowed to evolve the innate parts, which the network designer would ordinarily have built in by guesswork, tradition, or trial and error. The innate specs include how many units there are, how they are connected, what the initial connection weights are, and how much the weights should be nudged up and down on each learning episode. Simulated evolution gives the networks a big head start in their learning careers.

So evolution can guide learning in neural networks. Surprisingly, learning can guide evolution as well. Remember Darwin’s discussion of “the incipient stages of useful structures”—the what-good-is-half-an-eye problem. The neural-network theorists Geoffrey Hinton and Steven Nowlan invented a fiendish example. Imagine an animal controlled by a neural network with twenty connections, each either excitatory (on) or neutral (off). But the network is utterly useless unless all twenty connections are correctly set. Not only is it no good to have half a network; it is no good to have ninety-five percent of one. In a population of animals whose connections are determined by random mutation, a fitter mutant, with all the right connections, arises only about once every million (2²⁰) genetically distinct organisms. Worse, the advantage is immediately lost if the animal reproduces sexually, because after having finally found the magic combination of weights, it swaps half of them away. In simulations of this scenario, no adapted network ever evolved.

But now consider a population of animals whose connections can come in three forms: innately on, innately off, or settable to on or off by learning. Mutations determine which of the three possibilities (on, off, learnable) a given connection has at the animal’s birth. In an average animal in these simulations, about half the connections are learnable, the other half on or off. Learning works like this. Each animal, as it lives its life, tries out settings for the learnable connections at random until it hits upon the magic combination. In real life this might be figuring out how to catch prey or crack a nut; whatever it is, the animal senses its good fortune and retains those settings, ceasing the trial and error. From then on it enjoys a higher rate of reproduction. The earlier in life the animal acquires the right settings, the longer it will have to reproduce at the higher rate.

Now with these evolving learners, or learning evolvers, there is an advantage to having less than one hundred percent of the correct network. Take all the animals with ten innate connections. About one in a thousand (2¹⁰) will have all ten correct. (Remember that only one in a million nonlearning animals had all twenty of its innate connections correct.) That well-endowed animal will have some probability of attaining the completely correct network by learning the other ten connections; if it has a thousand occasions to learn, success is fairly likely. The successful animal will reproduce earlier, hence more often. And among its descendants, there are advantages to mutations that make more and more of the connections innately correct, because with more good connections to begin with, it takes less time to learn the rest, and the chances of going through life without having learned them get smaller. In Hinton and Nowlan’s simulations, the networks thus evolved more and more innate connections. The connections never became completely innate, however. As more and more of the connections were fixed, the selection pressure to fix the remaining ones tapered off, because with only a few connections to learn, every organism was guaranteed to learn them quickly. Learning leads to the evolution of innateness, but not complete innateness.
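The odds quoted in the last few paragraphs can be worked out directly. The sketch below is not Hinton and Nowlan's simulation; it is only the underlying probability arithmetic, under two simplifying assumptions: each innate connection is equally likely to be on or off, and each learning trial is an independent random guess at all the learnable settings at once. The function names are invented for illustration.

```python
def chance_all_innate_correct(n_innate):
    """Probability that n randomly set on/off connections are all correct."""
    return 0.5 ** n_innate

def chance_of_learning(n_learnable, n_trials):
    """Probability of hitting the right settings at least once in n_trials
    independent random guesses at n_learnable on/off connections."""
    p_hit = 0.5 ** n_learnable
    return 1.0 - (1.0 - p_hit) ** n_trials

one_in_a_million = chance_all_innate_correct(20)   # 1 / 1,048,576
one_in_a_thousand = chance_all_innate_correct(10)  # 1 / 1,024

# Ten connections left to learn, a thousand occasions to learn them.
ten_to_learn = chance_of_learning(10, 1000)        # roughly 0.62

# Only five left to learn: success is all but certain.
five_to_learn = chance_of_learning(5, 1000)

# All twenty left to learn: a thousand guesses almost never suffice.
twenty_to_learn = chance_of_learning(20, 1000)
```

Under these assumptions an animal with ten correct innate connections succeeds a little more than six times out of ten, which fits "fairly likely." With only five connections left to learn, success is so close to certain that making a sixth connection innate buys almost nothing, which is one way to see why the pressure toward complete innateness tapers off.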

Hinton and Nowlan submitted the results of their computer simulations to a journal and were told that they had been scooped by a hundred years. The psychologist James Mark Baldwin had proposed that learning could guide evolution in precisely this way, creating an illusion of Lamarckian evolution without there really being Lamarckian evolution. But no one had shown that the idea, known as the Baldwin effect, would really work. Hinton and Nowlan showed why it can. The ability to learn alters the evolutionary problem from looking for a needle in a haystack to looking for the needle with someone telling you when you are getting close.

The Baldwin effect probably played a large role in the evolution of brains. Contrary to standard social science assumptions, learning is not some pinnacle of evolution attained only recently by humans. All but the simplest animals learn. That is why mentally uncomplicated creatures like fruit flies and sea slugs have been convenient subjects for neuroscientists searching for the neural incarnation of learning. If the ability to learn was in place in an early ancestor of the multicellular animals, it could have guided the evolution of nervous systems toward their specialized circuits even when the circuits are so intricate that natural selection could not have found them on its own.

Complex neural circuitry has evolved in many animals, but the common image of animals climbing up some intelligence ladder is wrong. The common view is that lower animals have a few fixed reflexes, and that in higher ones the reflexes can be associated with new stimuli (as in Pavlov’s experiments) and the responses can be associated with rewards (as in Skinner’s). On this view, the ability to associate gets better in still higher organisms, and eventually it is freed from bodily drives and physical stimuli and responses and can associate ideas directly to each other, reaching an apex in man. But the distribution of intelligence in real animals is nothing like this.