10

Methods of Media Visualization

Media visualization is a deeply qualitative rather than quantitative approach. It allows us to work with big data without counting something first or translating it into very different semiotic signs, such as text and numbers.

But how is this possible? Media visualization exploits the fact that real-world image collections always contain some metadata. This metadata can be used to sort images and group them in various categories. For example, in the case of digital video, the ordering of individual frames is built into the format itself. Depending on the genre, other higher-level sequences can be also present: shots and scenes in a narrative film, the order of subjects presented in a news program, the weekly episodes of a TV drama. In the case of single images, we also usually have at least some information: dates and times of creation, modification, or sharing; creators’ names; titles, hashtags, or short descriptions; metadata captured by the camera; and so on.

We can exploit this already existing information in two complementary ways. On the one hand, we can visualize all images in a collection together in the order provided by metadata. For example, in our visualization of 4,535 Time magazine covers of all issues published from 1923 to 2009, the images are organized by publication date, going from left to right and top to bottom (see plate 12). On the other hand, to reveal patterns that such an organization may hide, we can also organize the images via new sequences and layouts. In doing this, we deliberately go against the conventional understanding of cultural image sets, which metadata often reify. I call such conceptual operation remapping. By changing the accepted ways of sequencing media artifacts and organizing them in categories, we create alternative “maps” of our familiar media universes and landscapes.

Media visualization techniques can be also situated along a second conceptual dimension depending on whether they use all media in a collection or only a sample. We may sample in time (i.e., use only some of the available images) or in space (i.e., use only parts of the images). An example of the first technique is my visualization of Dziga Vertov’s The Eleventh Year, which uses one frame from every shot (see figure 10.2). The example of the second technique is a “slice” of 4,535 covers of Time magazine that uses only a single-pixel-width vertical line from each cover (see plate 13).

The third conceptual dimension that helps us sort possible media visualization techniques is the types and sources of information they use. As I already explained, media visualization exploits the presence of at least minimal metadata in a media collection, so it does not require the addition of new metadata about the individual media items. However, if we decide to add such metadata—for example, content tags we create via manual content analysis, labels for groups of similar images generated via automatic cluster analysis, automatically detected semantic concepts (such as objects, types of scenes, or photographic techniques used), face detection data, or visual features extracted with digital image processing—all this information can also be used in visualization.

In fact, media visualization offers a new way to work with such information. For example, consider information about content we added manually to our Time covers dataset, such as whether each cover features portraits of particular individuals, or whether it illustrates some concept or issue. I can use standard graphing techniques such as a line graph to display how the proportions of covers in these two categories change over time of publication (see figure 10.1). But I can also create a media visualization that shows all cover images and uses color borders or some other technique to label categories. Since such a visualization shows the content of all images, potentially it will let us notice many more patterns than a graph that shows the same information but without any images.

In the rest of this chapter, I discuss these distinctions in more detail, illustrating them with visualizations created in our lab. I will start with the simplest technique: using all images in a collection and organizing them in a sequence defined by existing metadata. Next, I will cover temporal and spatial sampling techniques. The final section discusses and illustrates the operation of remapping.

Image Montage

Conceptually and technically, the simplest technique is to show all images in a collection or a sample in a single visualization. I call this media visualization technique an image montage. We can order images by existing metadata or by a visual feature extracted from all images using image processing. For example, the image montage of Time covers organizes them by order of publication (plate 12).

Figure 10.1

Selected changes in the design and content of 4,535 Time magazine covers, 1923–2009. X-axis represents time; y-axis represents the proportion of covers with particular characteristic in a given year.

This technique can be seen as an extension of the most basic intellectual operations of media studies and humanities—comparing a set of related items. In 2000, John Unsworth proposed that there are seven scholarly primitives common to all scholarship: discovering, annotating, comparing, referring, sampling, illustrating, and representing.1 An image montage enables comparing many images and discovering trends in content and visual form.

Twentieth-century technologies only allowed for comparison among a small number of artifacts at the same time; for example, the standard lecturing method in art history (introduced around 1900) was to use two slide projectors to show and discuss two images side by side. Contemporary software running on computer devices now allows simultaneous display of thousands of images. As discussed earlier, all commonly used photo organizers and editors, including macOS Finder, Preview and Photos, and Adobe Bridge, can show images in a grid format. Similarly, image search engines such as Google image search and media sharing sites such as Instagram and Flickr also use image grids. However, typically they do not allow users to sort images in arbitrary ways or fully control the presentation layout. And though they can often show images automatically divided into a few categories—such as favorites, selfies, panoramas, and screenshots in the Photos app on an iPhone—the user often cannot create her own categories and use them to organize collection display.

To use image grids for comparison and discovery, we need to be able to sort images using metadata fields or extracted features, create our own categories, and control all details of the presentation. Such controls over elements of a visualization are standard in data visualization software, but not in media organizing applications. At the end of 2008, I realized that the open-source image analysis software ImageJ, popular in a number of scientific fields, could be adapted to visualize image collections as grids and provide control I wanted. (You can also use Processing, Python, Java, or other programming languages to make such visualizations or to program interactive apps.) ImageJ calls its command to make image grids make montage.2 So after we started to use ImageJ in our lab, we began to refer to such media visualization as montages. In 2009 I wrote a program in the ImageJ scripting language to add additional controls not available in the standard commands. The largest montage we made in 2010 contains a sequence of 22,500 frames sampled from 62.5 hours playing the Kingdom Hearts video game from beginning to end (see plate 14).

The quantitative increase in the number of images that can be visually compared using the image montage technique leads to a qualitative change in the type of observations you can make. Being able to display thousands of images simultaneously allows us to see patterns in composition, color, content, and other characteristics. If we sort the images by creation or publication dates, we can see if any of these characteristics change over time and if the changes are gradual or sudden. We can notice the images that stand out from the rest (i.e., outliers). We can also discover groups of images that share some characteristics in common (i.e., clusters). If we find some patterns during exploratory media analysis and we want to quantify any of them, in many cases we can extract the appropriate features and then analyze them using standard statistical and data visualization techniques. This approach where visual exploration of a media collection comes first before generating hypotheses extends Turkey’s paradigm of exploratory data analysis to media data.3

To be effective, even this simple media visualization technique may require some transformation of all the media being visualized. For example, to be able to observe similarities, differences, and patterns in large image collections, it is crucial to make all images the same size. In some cases, it is best to place images side by side without any gaps; in other cases, we need to add some empty spaces in between and change the background color. And rather than being satisfied with a single image montage, it is better to make a few of them, sorting the images in different ways.

One member of our lab, Damon Crockett, wrote software in Python that speeds such explorations. You can use this software to create six different media visualization layouts: rectilinear and circular montages, Cartesian and polar image histograms, and Cartesian and polar image plots.4 All visualizations and the code that generates them are presented in a single Jupiter Notebook document that can be edited, expanded, and shared with others. The software can also extract features from images and process them using clustering and dimension reduction techniques. The results of this analysis can be then used to organize images according to their visual characteristics. Another interactive media visualization software we developed for large tiled displays allows for very quick generation of montages sorted in different ways. We also wrote scripts for ImageJ and ImageMagic that render new montage layouts for particular datasets.

Figure 7.3 shows one such layout we developed to visualize twenty-one thousand historical photographs in the MoMA’s photography collection. Each row shows photographs from the collection for a given year, from 1844 to 1989 (top to bottom). The visualization reveals how the collection represents some historical periods much better than other periods. So rather than thinking of an image montage as a single technique, think of it as a more general strategy for exploratory media analysis that supports many different montage variations—and you can invent new ones to fit your project needs.

The following example illustrates the kinds of patterns a basic montage can help to see. This example is a visualization of 4,535 Time magazine covers for all issues from 1923 to 2009 (see plate 12). A large percentage of the covers included red borders. We cropped these borders and scaled all images to the same size. Here are the patterns I found by studying this visualization:

- Medium: In the 1920s and 1930s, Time covers mostly use photography. After 1941, the magazine switches to paintings. In the later decades, photography gradually comes to dominate again. In the 1990s, we see the emergence of the contemporary software-based visual language, which combines manipulated photography, graphic, and typographic elements.

- Color versus black and white: The shift from early black-and-white covers to full-color covers happens gradually, with both types coexisting for many years. Because full-color printing was quite expensive, in the beginning we see occasional covers that that use only a single color for a side area. Eventually all covers start to be printed in color.

- Hue: Distinct “color periods” appear in bands over time: green, yellow/brown, red/blue, yellow/brown again, yellow, and a lighter yellow/blue in the 2000s.

- Brightness: The changes in brightness between 1923 and 2009 (the mean of all pixels’ grayscale values for each cover) follow a similar cyclical pattern.

- Contrast and saturation: Both gradually increase over time. However, since the end of the 1990s, this trend is reversed: recent covers have lower contrast and lower saturation.

- Content: Initially most covers are portraits of individuals set against neutral backgrounds. Over time, portrait backgrounds change to feature compositions representing concepts. Later, these two different strategies come to coexist: portraits return to neutral backgrounds, while concepts are now represented by compositions that may include both objects and people—but not particular individuals.

- Metapattern: The visualization also reveals an important metapattern: almost all changes are gradual. Each of the new communication strategies emerges slowly over a number of months, years or even decades.

This image montage takes advantage of the serial and periodic nature of a magazine publication. Because the issues are published every week, we have an equal number of of Time covers’ visual images per year over eighty-six years. And because all covers have the same size and proportions, we can clearly see which visual characteristic change, when the changes start, and if they are rapid or slow.

Human vision is very good at noticing patters that involve repetition and deviations from this repetition. In the case of Time covers, we can, for example, notice occasional color covers that stand out against the black-and-white covers in the 1920s. We are also good at picking up gradual changes and seeing 2-D configurations when everything else is kept equal. The first ability enables bar plots and line graphs, and the second enables scatter plots. For example, if all bars have the same width and color, then the brain can focus on their respective lengths, detecting even small differences. In the case of the Time visualization, all cover images have exactly the same size and proportions, similar to bars in a bar chart. And this allows us to notice a number of temporal patterns across these images.

In both the natural and human-created worlds, particular semantics are often correlated with particular visual characteristics. The sky in the summer tends to be blue in color, and trees are green; clouds do not have sharp boundaries, but pebbles on the beach do; airplanes all have a similar shape, and cars have four wheels; most Instagram photos tagged with #selfie indeed show one or more people. These correlations mean that when our brain notices visual patterns in a media visualization, often these patterns correspond to particular content. For example, in the Time covers montage, we see a large area in yellow-orange colors in the upper part (if you divide the montage into five parts from the top, this will be the second part). Zooming in, we learn that this area corresponds to the 1940s and war years, and these are all hand-drawn portraits of military leaders and others associated with the war. This gives the overall yellow-orange look to this whole period.

However, seeing certain content patterns in the image montage of Time covers is more difficult. Once we reach the 1950s, the semantic and visual content of the covers in this and following decades is just too varied. The visual cortex of the brain cannot quickly compute how often each subject appears over time, the ratio of symmetrical versus asymmetrical compositions, the ratio of men versus women, patterns in head sizes and angles, and so on. To investigate such characteristics, we need to either automatically detect subjects, the positions of people and objects, and visual characteristics of covers using computer vision, or tag them manually and then analyze and visualize this new metadata.

We have manually tagged every Time cover using fourteen different categories to indicate if a cover is a portrait of a particular person or an illustration of some concept; if it is a drawing or a photograph or if a portrait is that of a man or a woman; the ethnicity suggested by the skin color of a person; and so on. Plotting the frequencies of these tags over time shows a number of important patterns we would not see in an image montage. (A few of these plots are shown in figure 10.1.) A number of trends start in the early 1950s and grow stronger over the following decades. Their speed of growth is similar. They include an increase in the proportion of concept illustrations versus portraits; use of photos versus drawings; an increase in the number of topics shown; and a decrease in the number of covers that show a male portrait (or full-figure view). It is tempting to think these all are expressions of a larger megatrend that contains trends that all support each other: development of a consumer and youth culture, growth of travel and improvements in color photography all happening in the 1950s. Also, in the 1960s, Time and many other publications around the world start covering more subjects—travel, science, lifestyles, sports, and more. This may be the main reason that covers from 1960 onward have such visual variety in contrast to earlier decades.

Although media visualizations certainly cannot reveal every pattern contained in an image collection, they can often show some patterns, as the example of Time covers image montage demonstrates. In general, we found that media visualizations are very useful if all images in a collection have some common characteristics. For example, Time covers all come from a single publication, have the same proportions, and feature the word time in approximately the same position in the top area. Other image collections that work well for media visualizations are a group of images in a single medium that have a time dimension: paintings created over time by one artist or by members of an artistic movement, key frames from a movie or a video, or pages from a number of magazine issues published consequently.

One example of such image collections are photos shared on social media over a period of time. I have used this method to visualize photos shared on Instagram in the center of Kiev during five days of 2014 Ukrainian Revolution (see our project The Exceptional and the Everyday: 144 hours in Kiev; the-everyday.net). Here I will discuss another example: two image montages from our 2013 Phototrails project led by Nadav Hochman (phototrails.info). They are shown in plate 15. Each montage is made of fifty thousand images shared on Instagram in a central area of a particular city over a few days in the spring of 2012. The top montage uses images shared in New York, and bottom montage uses images shared in Tokyo. These images represent a random sample from all publicly shared images with geoinformation we collected from a 5 × 5 km area. In New York case, this area covers Manhattan from its south end to Central Park. In the montage, the images are organized by sharing date and time (top to bottom and left to right).

The photographs were captured and shared by thousands of people, as opposed to a single artist or a single publication. They did not coordinate what and when to photograph. But when we place these images together along the time dimension, the result is a new kind of “city film.” This film has systematic patterns, but also lots of variability. In other words, it visualizes well how a a city (or society in general) functions: individuals have free will and can determine their own actions, and at the same time they are likely to follow certain regular routines, creating “social facts.”

For example, the New York and Tokyo montages show alternating lighter and darker vertical areas, and this pattern repeats horizontally. The lighter parts correspond to daytime, while darker parts correspond to nighttime. But this pattern does not repeat mechanically: each day and each night and their transitions are all different, as people share more or fewer images. At the time of our data collection, people were sharing more images in New York than in Tokyo: we see approximately three twenty-four-hour cycles in the upper montage versus four such cycles in the bottom montage. Each montage has variety of colors, but in Tokyo certain colors are more prominent. These are the yellow and brown of traditional food dishes. Finally, the Tokyo montage is more uniform than the New York City montage: the photos that are next to each other are often more similar in Tokyo than in New York. This difference in visual diversity of images does a good job of capturing the difference in cultural diversity between two giant cities: for example, while Tokyo saw approximately fifteen million foreign tourists in 2018, New York saw sixty-five million.

This example demonstrates how important it is to experiment with organizing images in a media visualization in different ways. Compare the image montage in plate 15 with the radial image plot in plate 16. The latter sorts the same fifty thousand Instagram images shared in Manhattan by average brightness and hue values, which control images distances from the center and their angles. While our image montage is in effect a 1-D visualization, since images are only sorted by their timestamp, it is more informative than the 2-D radial image plot that uses two extracted features.

The image montage technique has been pioneered in digital art projects such as Brendan Dawes’s Cinema Redux (2004) and Jonathan Harris’s The Whale Hunt (2007). To create their montages, artists wrote custom code; in each case, the code was used with particular datasets and not released publicly. In 2009, I wrote and published the code to create image montages with the open-source, free ImageJ software. The code was later expanded and refined by our lab members (github.com/culturevis/imagemontage).

When I started creating image montage visualizations in 2008, there were only a few examples of this technique used by digital artists. But a few years later, Apple added dynamic montage-like display modes into its Photo app in iOS. You can view photos by years, collections, and moments, and the app automatically arranges them in grids, making individual photos bigger or smaller depending on how many it needs to show.5 If you are using an iPhone or Apple laptop that comes with the Photos software that offers the same display modes, you can see them for yourself.

Although the montage method is the simplest conceptually and technically, it is quite challenging to characterize it theoretically. Given that information visualization normally takes data that is not visual and represents it in a visual domain, is it appropriate to think of a montage as a visualization method? To create a montage, we start in the visual domain and we end up in the same domain; that is, we start with individual images, put them together, and then zoom out to see them all at once.

I believe that calling this method visualization is justified if instead of focusing on the transformation of data in information visualization (from numbers and categories to images), we focus on its other key operation: arranging the elements of visualization in such a way as to allow the user to easily notice the patterns that are hard to observe otherwise. From this perspective, a montage is a legitimate visualization technique. For instance, the current interface of Google Books can only show 10 covers of any magazine on one page, so it is hard to observe the longer historical patterns. However, if we gather all the covers of a magazine and arrange them in a particular way, these patterns are now easy to see. Specifically, we scale all covers to exactly the same size and display them in a rectangular grid in publication date order as we did in Time covers montage. In other words, just as in the bar chart example I evoked, we make everything the same so the eye can focus on comparing what is different—the content of covers, their layouts, colors, and other visual characteristics.

Sampling versus Summarization

The next two techniques I will present add another conceptual step. Instead of displaying all the images in a collection, we can sample them using some procedure to select only some images and/or their parts. (For examples, see figure 10.2 and plate 13.)

In statistics, a sample is a part of data that is representative of the whole, and I am using this term in the same way here. However, if our whole is a large collection of images or videos, representative can be defined in many ways. One type of sample may be best in revealing some characteristics and patterns, while another may reveal different patterns. Therefore, there is not one correct way to sample a media collection. We should try different sampling strategies and visualize these samples not because we want to come up with one “correct” strategy, but because we want to enrich our understanding of a collection and create its alternative visual representations. In other words, we want to create not a single map, but many different ones.

We can contrast such an approach with one adopted in the area of computer science called automatic video summarization. Here the goal is to produce the “best” compact summary of a given video that satisfies the following principles:

The video summary must contain high priority entities and events from the video. For example, a summary of a soccer game must show goals, spectacular goal attempts, as well as any other notable events such as the ejection of a player from the game, any fistfights or scuffles and so on. In addition, the summary itself should exhibit reasonable degrees of continuity—the summary must not look like a bunch of video segments blindly concatenated together. A third criterion is that the summary should be free of repetition—this can be difficult to achieve. For example, it is common in soccer videos for the same goal to be replayed several times. It is not that easy to automatically detect that the same event is being shown over and over again. These three tenets, named the CPR (Continuity, Priority and no Repetition) form the basic core of all strong video summarization methods.6

Video summarization is used in the interfaces of some online media collections; for instance, the Internet Archive includes regularly sampled frames for some of the videos in its collection. There are also mobile apps that produce short video summaries.

The algorithms that can quickly process videos and produce their visual summaries are certainly useful for practical applications. But if we want to explore a media collection to find different patterns and to question our standard understanding of the material, is video summarization the right approach? This approach samples videos to summarize them, but why would we want to use sampling for media exploratory analysis? After all, we have entered the “big data” research paradigm, and our computers can render visualizations showing millions of images. So why would we want instead to only use a small part of the whole? Why do we want to engage again in reduction after we critiqued and promoted media visualization as an alternative?

The reason for video sampling is to highlight the unique elements of the whole and leave out repetitive parts. This allows the brain to compare these unique elements without being distracted by the repetitive elements, which, to use the language of Shannon’s mathematical theory of communication, do not carry any new information. However, we do not have to follow the goal of video summarization to always include “high priority” events. Importance is defined by convention, and we may want to question such conventions. In fact, we should always generate a few different media visualizations that sample the collection is alternative ways and highlight different kinds of events and patterns.

The rich traditions of video and media art offer many examples of such alternative sampling of original media that not only reveal meanings intended by the media producers but also read this media against the grain, expose its ideologies, and construct new audiovisual experiences from sampled material. Equally rich and inspiring is the history of sampling in music. These traditions are the opposites of video summarization research aiming to create a single best solution that is “true” to the original. The beauty of cultural analytics is that it does not have to choose between the objective approach of computer scientists and the transformative approach of artists.

The companies that use this computer science research create practical applications for mass audiences, and they adapt the commonsense but (in reality) only one possible interpretation of media and the world. When they automate our interactions with media, such as summarizing video contents or promoting social media photos in recommendations that we are likely to like, what is being automated is the mainstream “average” taste and mainstream and seemingly normal understanding of reality.

In the 1970s, members of the Birmingham School of Cultural Studies argued that receiving “objective” (i.e., intended) messages encoded in mass communication is not the only correct outcome. In his well-known 1973 article “Encoding and Decoding in the Television Discourse,” Stuart Hall proposed that the subject decoding a mass media message can follow three strategies: hegemonic, negotiated, and oppositional.7 In the first case, the subject decodes the message as it was intended—that is, the way it was encoded. (Hegemonic refers to “definitions of situations and events which are ‘in dominance.’”8) In the second case, the subject understands the intended message but then modifies it to fit her/his situation. Finally, in the third case the subject rejects the intended code. Here is one such example Hall provides: “This is the case of the viewer who listens to a debate on the need to limit wages but ‘reads’ every mention of the ‘national interest’ as ‘class interest.’”9

From this perspective, the subject taken for granted by most computer science publications dealing with media is the one that follows a hegemonic strategy of decoding. This is the subject of a consumer society that accepts it and only looks for help to make life more efficient and satisfying, without questioning this society. So many areas in computer science want to help—by improving image search engines, by providing better movies and book recommendations, by making UIs more efficient. At the same time, they see their functions as serving industry needs, which means, for example, making more people click on online and app ads. In other words, lots of research in computer science is aligned with the hegemonic system. One indirect support for such an interpretation is a phrase that was popular in the 2010s: “The best minds of my generation are thinking about how to make people click ads.”10 Of course, in reality things are not so black and white. Talking to many computer scientists made it clear to me that what they really care about is interesting research and working on hard problems, but regardless everybody has to justify their research in publications by referring to industry needs and people’s needs.

This means that, for example, video summarization as it is presented in computer science publications is an instance of such hegemonic decoding. When researchers say that “a summary of a soccer game must show goals, spectacular goal attempts, as well as any other notable events,” such a summarization follows the intended messages of sports as they exist today (live global broadcasting and selling of ads that require the sports to have frequent “spectacular” moments).

For cultural analytics, many other parts of a media collection or a video and its overall patterns are often more interesting. Figure 10.3 is one example of an oppositional media visualization I created. By sampling a movie in an unconventional way, it reveals the largely static character of a film by a director famous for his dynamism. (This example will be discussed in more detail later in the chapter).

Temporal Sampling

Because time-based visual media exists in space (images are 2-D representations) and in time (a sequence of images like Time covers, frames of a video, states of an interactive interface), we can sample either in space or time, or use both types of sampling together. Temporal sampling means selecting a subset of images from a larger image sequence. We assume that this sequence is defined by existing metadata, such as frame numbers in a video, sharing dates of images on a social media site, or page numbers in a comic book. We keep the original sequence, but instead of showing every image or video frame, we show only some of them.

Temporal sampling is particularly useful in representing cultural artifacts, processes, and experiences that unfold over significant periods of time—such as playing a video game. Completing a single-player game may take dozens or even hundreds of hours. In the case of massively multiplayer online role-playing games (MMORPGs), users may be playing for years. (A 2005 study by Nick Yee found that MMORPG players spend an average of twenty-one hours per week in the game world.11) In the following example, two visualizations created by William Huber in our lab together represent one hundred hours of game play.

The games in question are Kingdom Hearts (2002) and Kingdom Hearts II (2005). Each game was played by one player from beginning to end over a number of sessions. The Kingdom Hearts gameplay was 62.5 hours in twenty-nine sessions over twenty days. The Kingdom Hearts II gameplay was thirty-seven hours in sixteen sessions over eighteen days. The video captured from all game sessions of each game were assembled into a single sequence. The sequences were sampled at six frames per second. This resulted in 225,000 frames for Kingdom Hearts and 133,000 frames for Kingdom Hearts II. The visualizations use every tenth frame from the complete frame sets. Frames are organized in a grid in order of game play (left to right, top to bottom). Plate 14 shows one of the two visualizations, for the original Kingdom Hearts gameplay.

Kingdom Hearts is a franchise of video games and other media properties created in 2002 via a collaboration between Tokyo-based videogame publisher Square (now Square Enix) and the Walt Disney Company. The franchise includes original characters created by Square traveling through worlds representing Disney-owned media properties (Tarzan, Alice in Wonderland, The Nightmare before Christmas, etc.). Each world has distinct characters derived from the respective Disney-produced film. It also features distinct color palettes and rendering styles, which are related to visual styles of the corresponding Disney film. Visualizations reveal the structure of the gameplay, which cycles between progressing through the story and visiting various Disney worlds.

For an example of using temporal sampling for the visualization of films, see figure 10.2. The visualization of Vertov’s The Eleventh Year shown in this figure relies on a semantically and visually important segmentation of the film—the shots sequence. The film is fifty-two minutes long and contains 654 shots. Instead of mechanically sampling the film at a fixed rate (e.g., one frame for every second), we sample the film at shot boundaries. Each shot is represented by its second frame, but middle or last frames can be also used. The frames are organized left to right and top to bottom following the shot order in the film. We can think of this visualization as a reverse-engineered imaginary storyboard of the film—a reconstructed plan of its cinematography, editing, and content. You can use free shot detection software shotdetect and our ImageMontage program to make such visualizations for any film. (For more examples of image montages and other visualizations of films, video, and TV programs, see our projects Visualizing Vertov, Media Species, ObamaVideo, PoliticalVideoAds.viz, and Remix.viz, and Adelheid Heftberger’s book Digital Humanities and Film Studies: Visualising Dziga Vertov’s Work.)

Spatial Sampling

Spatial sampling involves selecting parts of images according to a chosen procedure. For example, our visualization of Time covers used spatial sampling because we cropped some of the images to get rid of the repeating red borders that the magazine employed for decades. Because of their saturated color, these borders attract the attention of a viewer, making it harder to see patterns in the rest of the covers. Therefore, we removed these red parts from all images prior to rendering the final visualization. However, as the next example demonstrates, often more dramatic sampling, which leaves only a small part of an image, can be quite effective in revealing additional patterns that a full image montage may not show as well. In keeping with the standard name for such visualizations used in medical and biological research and in the ImageJ software, we refer to them as slices.12

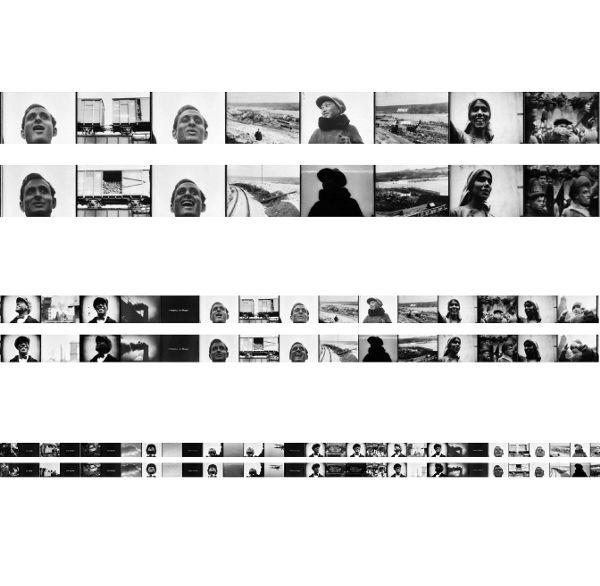

Figure 10.2

Visualization of The Eleventh Year (Dziga Vertov, fifty-two minutes, 1928). Every one of the 654 shots in the film is represented by its second frame. Frames are organized from left to right and top to bottom following the order of the shots in the film. A close-up of a single row of frames is shown separately below.

In biology, neuroscience, and medical sciences, researchers use slicing techniques to study and visualize brain activity. For example, current research to produce very detailed maps of the brain uses microthin slices created 100 μm apart.13 MRI and fMRI technologies also image the brain by recording information in slices. Subsequently, software such as ImageJ is used to assemble individual slices together and study them.14 In fact, ImageJ and its predecessor Image were originally developed at the U.S. National Institutes of Health (NIH) for working with images obtained using light microscopes, and the software has been particularly popular in biomedical image processing. These origins are clearly visible in ImageJ’s UI: it calls individual images slices, and a sequence of these slices is called a stack.15 When I started to explore the program, I came across one of the commands designed to work with slices: orthogonal views. The User Guide described the command as follows: “if a stack displays sagittal sections, coronal (YZ projection image) and transverse (XZ projection image) will be displayed through the data-set.”16 I realized that these are terms from medical science, but instead of looking them up, I just applied this command to my own sequence of images (all frames from a short music video). The resulting visualizations turned out to be very revealing, and we started referring to them as slices. The slicing operation extracts a narrow column from the center of each image in a sequence and assembles them together. You can control the width of a column. This is conceptually similar to an image montage, but instead of assembling full images, here each image is first sampled.

The visualization in plate 13 demonstrates the use of slices using images of 4,535 covers of Time. Each cover has been sampled to leave a one-pixel-wide vertical slice from its center. These slices are positioned one after another in order of publication (1923–2009, left to right). This visualization makes easily visible some patterns that are harder to see in an image montage of full covers. The magazine name keeps changing its vertical position and design over the decades: first it’s black and relatively large, then it becomes smaller, later it almost disappears, and later again it becomes more visible printed in red. The use of photography in the first decade after beginning of the publication gives way to a period of the painted portraits during the years of the World War II (the uniform orange area). While most patterns change gradually, one important change takes place quickly over just a few months in 1952. Before an image occupied only a part of the cover; now it fills the whole cover. We see how this change happens in two stages: first an image is extended to the top edge of a cover, and after a few months it is also extended to the bottom edge.

What are the relations between the techniques for visual media sampling as illustrated here and the theory and practice of sampling in statistics and the social sciences?17 Statistics is concerned with selecting a sample in such a way as to yield some reliable knowledge about the larger population. Because it was often not practical to collect data about the whole population, the idea of sampling was the foundation of twentieth-century applications of statistics. Today, in the era of big data, in retrospect it may look strange that throughout the twentieth century countless studies and practical policies were based on very small samples of human populations. And the use of small samples is still standard today in many areas such as population surveys. (For a detailed discussion of the limitations of sampling when used for the study of society and culture, see chapter 5.)

The design of experiments, including types and sizes of samples, has been an important area of modern statistics development. The theory and methods of sampling were elaborated by Charles Peirce, Ronald Fisher, and others between 1870 and 1930. Statisticians developed a variety of methods that allow them to draw conclusions about a larger population using carefully designed experiments and small samples.

When using media visualization, we often face the same limitations. A ninety-minute-long feature film sampled at twenty-four frames per second is 129,600 frames. A video recording of a gameplay that lasts fifty hours sampled at the same rate is 1,728,000 frames. This is why in our visualizations of Kingdom Hearts we sampled the complete gameplay videos to show only a selection of frames.

Ideally, if the ImageJ software was capable of creating a montage using all the video frames, we would not have to do this. However, as the Time covers slice visualization and the image montage of Vertov’s film demonstrate, sometimes dramatically limiting part of the data shown is more effective in revealing certain patterns than using all the data. While we could have noticed changes in cover layout over time in the full-images montage, a slice montage is much clearer. We see irregular placement and the changing size of the word time, and the switch to full images covering the whole cover in the early 1950s. We also see that the visual variability of the covers sequence is much lower until around 1960. After that, variability increases, and it becomes hard to predict the colors and content of the next cover based on the previous ones.

Remapping

Any representation can be understood as a result of a mapping operation between two entities. In terms of semiology developed by Ferdinand de Saussure, the mapping is from a signified to a signifier. In terms of Charles Peirce’s semiotics, the mapping is from object to sign. The taxonomy of signs defined by Peirce—icon, index, symbol, and diagram—defines different types of mapping between an object and its representation.

Mathematically, mapping is a function that creates a correspondence between elements in two domains. For a familiar example of such mappings, consider geometric projection techniques that are used to create two-dimensional images of three-dimensional scenes, such as isometric projection and perspective projection. Another example is two-dimensional maps that represent physical spaces.

Twentieth-century cultural theory often stressed that all cultural representations are partial maps because they can only show some aspects of the objects. A cultural representation is always selective, and what gets represented is often determined by power interests or ideologies, as well as the media technology used. However, this assumption needs to be rethought today, given the dozens of technologies developed in the last decades for capturing data about physical objects and biological bodies and the ability to process massive amounts of data to extract features and other information. Google, for instance, crawls the web continuously to update its search database, which contained one trillion links by 2008.18

Modern media technologies—photography, film, audio records, fax and copy machines, audio and video magnetic recording, media editing software, the web—led to a new practice of cultural mapping: using an existing media artifact and creating new meaning or a new aesthetic effect by sampling and rearranging parts of this artifact. While this strategy was already used in the second part of the nineteenth century (e.g., with photomontages), it became more central to modern media arts in the 1910s and 1920s, and even more important since the second part of the 1950s. Its different manifestations include pop art, compilation films, appropriation art, remixes, and a significant part of media art—from Bruce Conner’s very first compilation film A Movie (1958) to Dara Birnbaum’s Technology/Transformation: Wonder Woman (1979), Douglas Gordon’s 24 Hour Psycho (1993), Joachim Sauter and Dirk Lüsebrink’s The Invisible Shapes of Things Past (1995), Mark Napier’s Shredder (1998), Jennifer and Kevin McCoy’s Every Shot/Every Episode (2001), Cinema Redux by Brendan Dawes (2004), Natalie Bookchin’s Mass Ornament (2009), Christian Marclay’s The Clock (2011), and many others. While earlier remappings were done manually and had a small number of elements, use of computers allowed artists to automatically create new media representations containing thousands of elements.

If the original media artifact, such as a news photograph, a feature film, or a website, can be understood as a map of some “reality,” an art project that rearranges the elements of this artifact can be understood as a remapping. These projects derive their meanings and aesthetic effects from systematically rearranging the samples of original media in new configurations. In retrospect, many of these art projects can also be understood as media visualizations. They examine ideological patterns in mass media, experiment with new ways to navigate and interact with media, defamiliarize our media perceptions, or create new dynamic media landscapes.

For instance, visual artist and video director Marco Brambilla produced a series of stunning videos for the elevators of The Standard Hotel in New York’s Chelsea area (they were installed in 2008). One such video, Civilization (Megaplex), uses over four hundred video clips drawn from historical films that are composited in an elaborate, overlapping, dynamic “map.”19 As you take the elevator to the top floor, the video progresses from images of hell to images of heaven. In other videos in this series, the artist and his production company similarly combine hundreds of movie clips that represent iconic moments in twentieth-century visual culture. The characters and scenes appear as miniatures each following its own movement. Together they form a media history galaxy unfolding before our eyes. The animations build numerous links among faces, bodies, set designs, and periods accumulate as we watch, making the overall map thicker with references and denser visually.

The methods of media visualization described in this chapter use some of the techniques already explored earlier by artists. However, the practices of photomontages, film editing, sampling, remixing, and digital arts are likely to contain many other strategies that can be appropriated for media visualization. Just as with the sampling concept, the relationship between these artistic practices of media remapping and the use of media visualization as a research method for media studies is a rich topic for further reflection. As a way to begin, we can consider important differences between these two paradigms.

Artistic projects typically sample media artifacts, select parts, and then deliberately assemble these parts in a new order. For example, in a classical work of video art titled Technology/Transformation: Wonder Woman (1979), Dara Birnbaum sampled the television series Wonder Woman to isolate a number of moments, such as the transformation of the woman into a superhero. These short clips were then repeated many times to create a new narrative. The order of the clips did not follow their order in the original television episodes.

In cultural analytics, we are interested in revealing the patterns across a complete media artifact or a series of artifacts. Therefore, regardless of whether we are using all media objects (such as in the Time covers montage or Kingdom Hearts montages) or their samples (the Time covers slices), we typically start by arranging them in the same order as in the original work.

Additionally, rather than selecting samples intuitively or intentionally to present our new interpretation of the cultural media or expressing our opinion, we start with a more systematic sampling method—for instance, selecting the first frame of every shot in a film or every frame when some parameter in a video changes significantly (a new person enters the shot; a change in color palette; a change in shot composition; etc.).

However, we also intentionally experiment with various sampling strategies and also different spatial arrangements of the media objects in a visualization. Such deliberate remappings are closer to the practice of rearrangements of media materials by designers and artists. (Their rearrangements may be sometimes quite “violent” in terms of how they treat media samples. I am thinking of photomontages of the 1920s and 1930s by Hannah Hoch, László Moholy-Nagy, and John Heartfield, montages by surrealist Gherasim Luca, and works by 1960s pop artists.) However, typically our goal is revealing the patterns that are already present, as opposed to making new statements about the world using media samples.

Whether this goal is realizable is a different question. It is certainly possible that our remappings are reinterpretations of the media works that force viewers to notice some patterns at the expense of others. In other words, even the seemingly most “natural” and “objective” sampling method or visualization layout is already a strong interpretation of the material—a reading that is negotiated or oppositional, rather than hegemonic.

As an example of our use of a remapping technique, figure 10.3 shows parts of the visualization I created that compares the first and last frame of every shot in Vertov’s The Eleventh Year. The complete visualization is 133,204 × 600 pixels; in order to make the frames visible in the figure, I have zoomed into one small part, shown at zoom levels.

Vertov is a neologism invented by the director, who adopted it as his last name early in his career. It comes from the Russian verb vertet, which means “to rotate.” Vertov may refer to the basic motion involved in filming in the 1920s—rotating the handle of a camera—as well as to the dynamism of film language developed by Vertov. Along with a number of other Russian and European artists, designers, and photographers working in that decade, Vertov wanted to defamiliarize the familiar reality by using dynamic diagonal compositions and shooting from unusual points of view.

However, our visualization suggests a very different picture of Vertov. Almost every shot of The Eleventh Year starts and ends with practically the same composition and subject. In other words, the shots are largely static. Going back to the actual film and studying these shots further, we find that some of them are indeed completely static—such as the close-ups of people’s faces looking in various directions without moving. Other shots employ a static camera that frames some movement—such as working machines, or workers at work—but the movement is localized completely inside the frame; that is, the objects and human figures do not move outside or across frame boundaries. Of course, a number of shots in Vertov’s most famous film, Man with a Movie Camera (1929), were specifically designed to be dynamic: shooting from a moving car meant that the subjects were constantly crossing the camera view. But even in this most experimental film by Vertov, such shots constitute a very small part of a film.

Figure 10.3

Close-ups of a small part of the visualization of all 654 shots in The Eleventh Year (Vertov, 1928). Each shot is represented by its first frame (top row) and last frame (bottom row). The shots are organized from left to right following their order in the film.

In this chapter, I described visual techniques for exploring large media collections. These techniques follow the same general idea: use the content of the collection—all images, their subsets (temporal sampling), or their parts (spatial sampling)—and present it in various spatial configurations to make visible the patterns and the overall “shape” of a collection. To contrast this approach to the more familiar practice of information visualization, I call it media visualization.

I included examples that show how media visualization can be used with already existing metadata such as magazine issues dates or film frame numbers. However, if researchers add new information to the collection, this new information also can be used to create media visualizations. For example, images can be plotted using manually coded or automatically detected content characteristics, or visual features extracted via digital image processing. In the case of videos, we also can use automatically extracted or coded information about editing techniques, framing, presence of human figures and other objects, amount of movement in each shot, and so on. Thus, to compare the first and last frames of every shot in Vertov’s film, I used shotdetect software that finds boundaries between the shots.

Conceptually, media visualization is based on three operations: zooming out to see the whole collection (image montage), temporal and spatial sampling, and remapping (rearranging the samples of media in new configurations). Achieving meaningful results with remapping techniques often involves experimentation and time. The first two methods usually produce informative results more quickly. Therefore, every time we assemble or download a new media dataset, we first explore it using image montages and slices of all items.

Consider this definition of browse from Wiktionary: “to scan, to casually look through in order to find items of interest, especially without knowledge of what to look for beforehand.”20 Also relevant is one of the meanings of the word exploration: “to travel somewhere in search of discovery.”21 How can we discover interesting things in massive media collections? In other words, how can we browse through them efficiently and effectively, without prior knowledge of what we want to find? Media visualization techniques give us some ways of doing this.