ROBOTS IN YOUR LIFE

Seeing a robot in a domestic situation was something of a novelty until recent years, unless it was a toy. From the wind-up tin models of the 1940s that only looked like robots, to Sony’s robot dog AIBO in the 2000s, these have become steadily more sophisticated over the years. AIBO had sensors, processors and a computer brain smart enough to learn new tricks and behaviours. It could obey instructions, follow a ball and respond to being stroked. AIBO was the state of the art in electronic toys, but could not do anything useful. Similarly, Lego Mindstorms have more computing power than NASA had when it put a man on the Moon, but they are still toys.

The situation is changing with domestic robots. Millions of people have Roombas and similar self-driving cleaners in their homes, and a growing number also have a robotic lawnmower like the Automower. The iPal goes beyond ‘toy’ robots, acting as a combined child monitor and entertainment/education device for small children. Millions of older children and adults have a Mavic Pro drone – this might be seen as an expensive item of camera kit or a robotic toy, but either way its capabilities are impressive.

At the hospital, you are increasingly likely to encounter a medical robot like the da Vinci Surgical System for minor surgery, while machines like the Flex Robotic System allow surgeons to perform tricky operations in otherwise inaccessible parts of the body. STAR is an autonomous robot surgeon that will take over the less demanding tasks, such as stitching up a patient. Soft exoskeletons, among them the Wyss Robotic Exosuit, are already helping stroke patients to recover mobility; in future they may become common as aids to active living for the elderly. Some amputees now have sophisticated robot prosthetics, such as the bebionic Hand.

Robots also look set to play a much greater part in everyday life. The Waymo driverless car has received considerable publicity. These robotic vehicles are likely to reshape our cities and their impact may be as great as the invention of the car itself. Meanwhile, Amazon Prime Air’s delivery drones might have had a sci-fi edge a decade ago, but their prototypes are already carrying out deliveries.

The Care-O-bot is effectively a robot butler. Although initially aimed at providing help for the elderly, its makers see it as the ‘perfect gentleman’, always on hand to carry out domestic chores. The Vahana is – very nearly – a flying car. This electrically powered robotic air taxi for urban environments is able to take off vertically from a small landing pad and carry passengers for a comparable price to a ground taxi.

Not all of these robots will succeed in the real world. Delivery drones and driverless cars – not to mention flying taxis – require changes to existing legislation. Getting the relevant laws changed may take much longer than developing the technology. Simply because a technology is possible does not mean it is desirable. We will need to consider very carefully just how much of a child’s upbringing should be left to robots, and how much elderly care and, indeed, how much responsibility in the operating theatre.

Nevertheless, history teaches us that new technology becomes part of the furniture with amazing speed. It shifts seamlessly from novelty to commonplace. Anyone who grows up with a technology finds it completely unremarkable, however astonishing motorcars, televisions or mobile phones might have seemed to their parents a decade before.

Before long the question will be not whether to have a robot butler, but whether we need one for each member of the family – and how well it can make a soufflé. When everyone has an Automower, people will want robots that can prune trees and shrubs, or plant attractive flower beds. And how about delivery drones for larger items, such as furniture?

Ordinary people in the industrialised West enjoy luxury that was unattainable to any except the rich a few generations before. Machines clean our clothes, hot water arrives at the twist of a tap and our homes are heated at the flick of a switch. Smartphones and the Internet provide what is effectively the sum total of human knowledge in our pockets to carry with us wherever we go. Robots will only extend this process.

We are starting to see robots in our lives. We are likely to see many more.

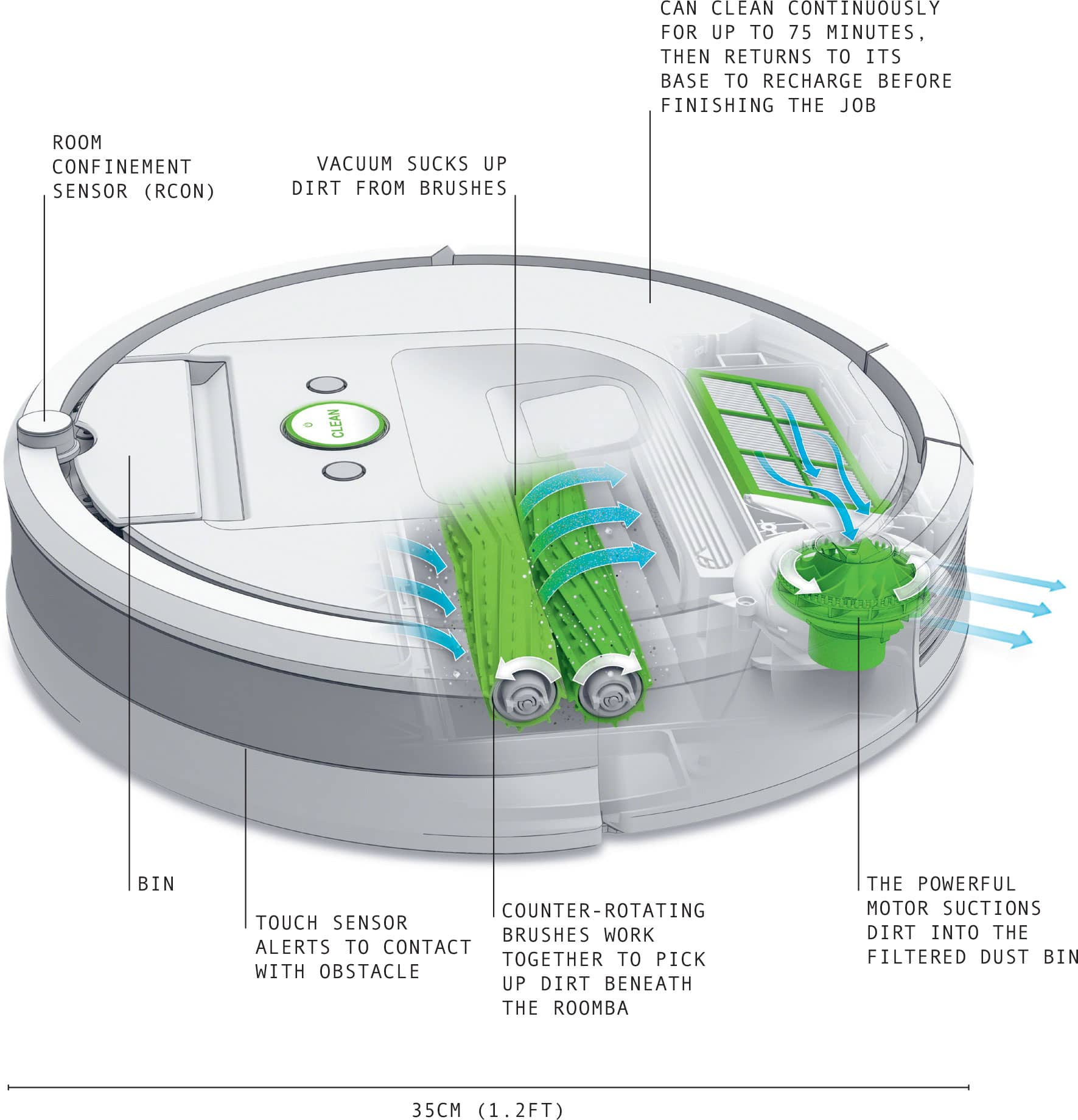

ROOMBA 966

| Specification | Value |
| --- | --- |
| Width | 35cm (1.2ft) |
| Weight | 4kg (8.8lb) |
| Year | 2015 |
| Construction material | Composite |
| Main processor | Classified |
| Power source | Battery |

If housework is not your thing, perhaps you should buy a Roomba. This small, compact device is the first successful domestic cleaning robot, and undoubtedly the most popular robot in the world. More than twenty million Roombas have been sold, all of them working diligently, cleaning floors in homes across dozens of countries.

The original Roomba was launched in 2002 by US company iRobot®. Several generations later, the Roomba 900 series looks much like its predecessors – a flat disc the size of a dinner plate and about 9cm (3.5in) high. The most noticeable difference is that, at 4kg (8.8lb), the machine is about 1kg (2.2lb) heavier than the 2002 original, owing to improvements in the vacuum system.

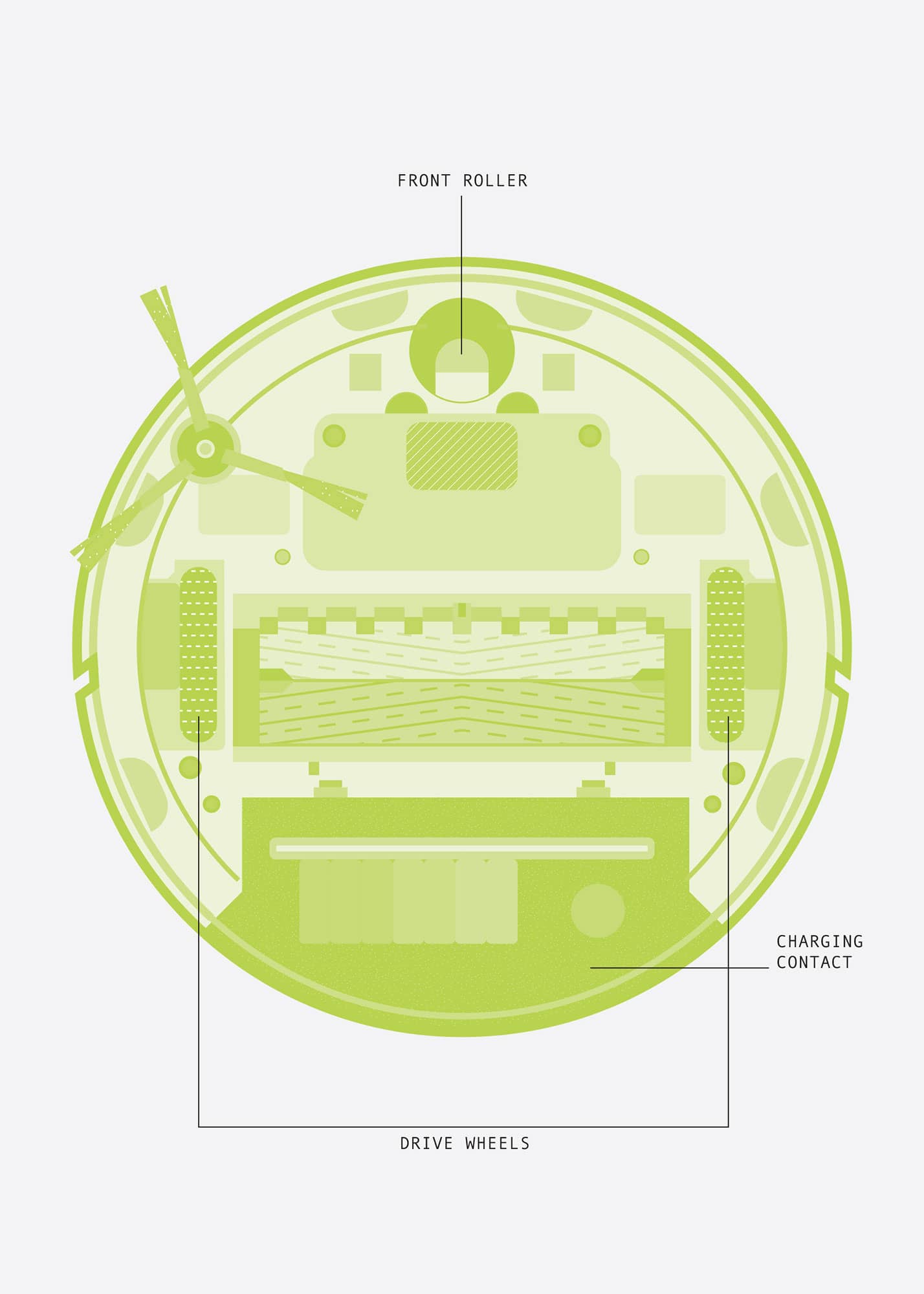

Colin Angle, founder and CEO of iRobot®, originally had a very different vision of what a domestic robot would look like. The first designs had legs for maximum mobility around the house. Angle soon realised that this would not work; legged robots were expensive and unreliable machines, while a cleaning robot needed to be as cheap as other household appliances, and just as dependable. So he reframed the problem: how could a small, cheap robot clean floors? It was not possible to simply miniaturise a vacuum cleaner, so Roomba relies on sophisticated brushwork. Two large rotating rubber brushes lie beneath the machine, while a third, spinning, brush attached at one edge extends to the edges of a room and into corners. All three direct dirt into the path of a vacuum, which sucks it up and stores it in a dustbin.

Roomba trundles along on two large wheels, each driven by its own motor. This arrangement gives the robot a zero turning circle, so it can spin in place to go in any direction. Roomba’s low height allows it to scoot beneath furniture and the kickboards in modern kitchens. An infrared sensor warns Roomba when it is approaching an obstacle such as a wall, so that it slows down. A touch sensor in the front bumper tells Roomba when it runs into something, at which point Roomba repeats a sequence of reversing, rotating and advancing as many times as needed to get past or around whatever is blocking the way. Additional infrared sensors underneath Roomba prevent it from falling off what its makers call ‘cliffs’ – stairs or similar drops. Should the sensors detect power cords and carpet tassels, Roomba reverses its brushes to avoid tangling. The brushes are not as effective as those of a vacuum cleaner, but the robot can keep going over the floor until the job is done. In fact, the piezoelectric and optical ‘dirt sensors’ detect the volume of dirt being swept up over a particular spot to ensure just this. Roomba simply goes over the area again and again.
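The ‘zero turning circle’ follows directly from having two independently driven wheels. The short Python sketch below works through the standard arithmetic for a two-wheeled robot; it is an illustration only, not iRobot’s software, and the function names and the 0.25m wheel spacing are assumptions made for the example.

```python
import math


def body_motion(v_left, v_right, track_width):
    """Return (forward speed in m/s, turn rate in rad/s) for a two-wheeled robot."""
    forward = (v_left + v_right) / 2.0
    turn_rate = (v_right - v_left) / track_width  # anticlockwise is positive
    return forward, turn_rate


def turning_radius(v_left, v_right, track_width):
    """Radius of the circle traced by the robot's centre (inf when driving straight)."""
    forward, turn_rate = body_motion(v_left, v_right, track_width)
    if turn_rate == 0:
        return math.inf
    return abs(forward / turn_rate)


# Assumed figure for illustration only: 0.25 m between the wheels.
print(body_motion(0.2, 0.2, 0.25))      # equal speeds: straight ahead, no turning
print(turning_radius(-0.2, 0.2, 0.25))  # equal and opposite speeds: a spin on the spot
```

When the wheels turn at equal and opposite speeds the forward speed is zero, so the robot rotates about its own centre without travelling anywhere.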

Originally, Roombas operated using a ‘random walk’, zigzagging across the room repeatedly until the sensors indicated that the area had been covered thoroughly and there was no more dirt to collect. They did so with algorithms originally developed during the Second World War for submarine hunting patrols. The latest Roomba is smarter than that. It maps the room with infrared cameras to figure out where it has been and where it needs to go next. The result is that it cleans in straight lines, as a human with a vacuum cleaner would.
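To get a feel for why a random walk works, but slowly, consider a toy simulation. The Python sketch below is a generic ‘drive straight, then pick a new heading at the wall’ model on a grid; it is not the wartime search algorithm or iRobot’s code, and the grid size, headings and function name are all assumptions chosen for illustration.

```python
import random


def random_bounce_coverage(width, height, steps, seed=0):
    """Fraction of grid cells visited by a robot that drives straight
    until it meets a wall, then picks a new heading at random."""
    rng = random.Random(seed)
    headings = [(1, 0), (-1, 0), (0, 1), (0, -1),
                (1, 1), (1, -1), (-1, 1), (-1, -1)]
    x, y = width // 2, height // 2
    dx, dy = rng.choice(headings)
    visited = {(x, y)}
    for _ in range(steps):
        nx, ny = x + dx, y + dy
        if 0 <= nx < width and 0 <= ny < height:
            x, y = nx, ny
            visited.add((x, y))
        else:
            # Blocked by a wall: choose a fresh heading at random.
            dx, dy = rng.choice(headings)
    return len(visited) / (width * height)


# Coverage after a short run and after a much longer one on a 10 x 10 room.
print(random_bounce_coverage(10, 10, 50))
print(random_bounce_coverage(10, 10, 5000))
```

A robot mowing the same grid in neat stripes would need only one move per cell, which is why a mapping Roomba that cleans in straight lines finishes sooner than one that wanders.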

When Roomba’s battery gets low, it makes its way to a recharging point, which it locates via an infrared beacon. Once recharged, it goes back to cleaning until the job is done. Roombas are typically programmed to clean while their owners are out so they do not get in the way, though their sensors mean that they can work safely around people and pets – you can see how cats and dogs react to Roomba on YouTube.

This robot has obvious limitations. It cannot move furniture or clutter, neither can it clean under cushions or negotiate stairs. This means it cannot do as thorough a job as a human and Roomba owners need to do some vacuuming themselves. Despite these drawbacks, the Roomba is active in millions of people’s homes – proof that the machine is no longer a novelty, but rather an invaluable aid on the domestic landscape.

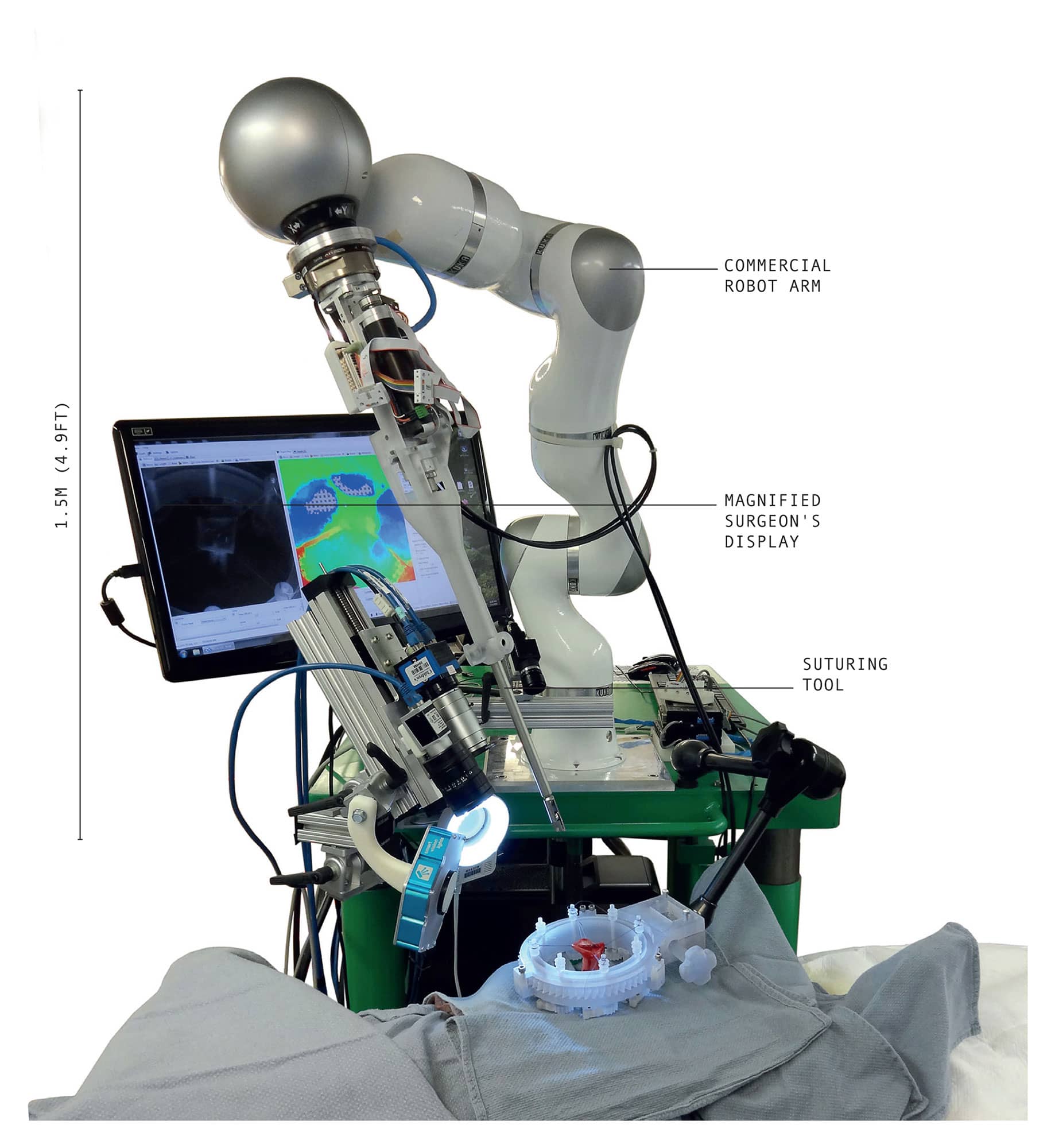

DA VINCI® SURGICAL SYSTEM

| Specification | Value |
| --- | --- |
| Height | 1.5m (4.9ft) |
| Weight | 544kg (1200lb) |
| Year | 2000 |
| Construction material | Steel |
| Main processor | Commercial processors |
| Power source | External mains electricity |

Adding a robotic touch to routine laparoscopy, the da Vinci is the world’s leading surgical system. Made by US company Intuitive Surgical, Inc., some three thousand da Vinci units are currently installed in hospitals globally. Between them, they have performed over three million operations to date.

The da Vinci is named after Leonardo da Vinci and, specifically, in honour of his creation of what Intuitive Surgical describes as ‘the world’s first robot’. Theirs is not an autonomous system, but a remote-controlled device that allows a surgeon to operate with greater precision and dexterity than when working manually.

Traditional laparoscopy, or ‘keyhole surgery’, has been around since the early 1900s, but it only became common in the 1980s. It is a minimally invasive technique, in which operations are carried out deep inside the body via a tiny incision, often less than 1cm (0.4in) long. The surgeon views the site of the operation via a laparoscope – a camera on the end of a flexible fibre-optic cable – and uses special long-handled surgical instruments to reach the site.

Compared to traditional surgical approaches, laparoscopy means a smaller incision, less blood loss, reduced pain and shorter hospital stays. But it requires considerable skill and dexterity, as the long instruments have a reduced range of motion. The instruments enter through a narrow hole that acts as a pivot point, so their tips move in the opposite direction to the surgeon’s hands. This ‘fulcrum effect’ makes laparoscopic surgery challenging to master.
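The fulcrum effect is simple lever arithmetic. As a rough sketch, treat the instrument as a rigid rod pivoting at the incision and consider small sideways movements only; the function name and the example lengths below are assumptions for illustration, not clinical figures.

```python
def tip_displacement(hand_mm, length_outside_mm, length_inside_mm):
    """Sideways movement of the instrument tip for a given hand movement.

    The rod pivots at the incision, so the tip moves the opposite way,
    scaled by the ratio of the length inside the body to the length outside.
    """
    return -hand_mm * length_inside_mm / length_outside_mm


# Hand moves 10 mm to the right with 200 mm of instrument outside the body
# and 100 mm inside: the tip moves 5 mm to the left.
print(tip_displacement(10, 200, 100))
```

The surgeon therefore has to contend with both a reversed direction and a scaling that changes as the instrument is pushed further in or pulled back.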

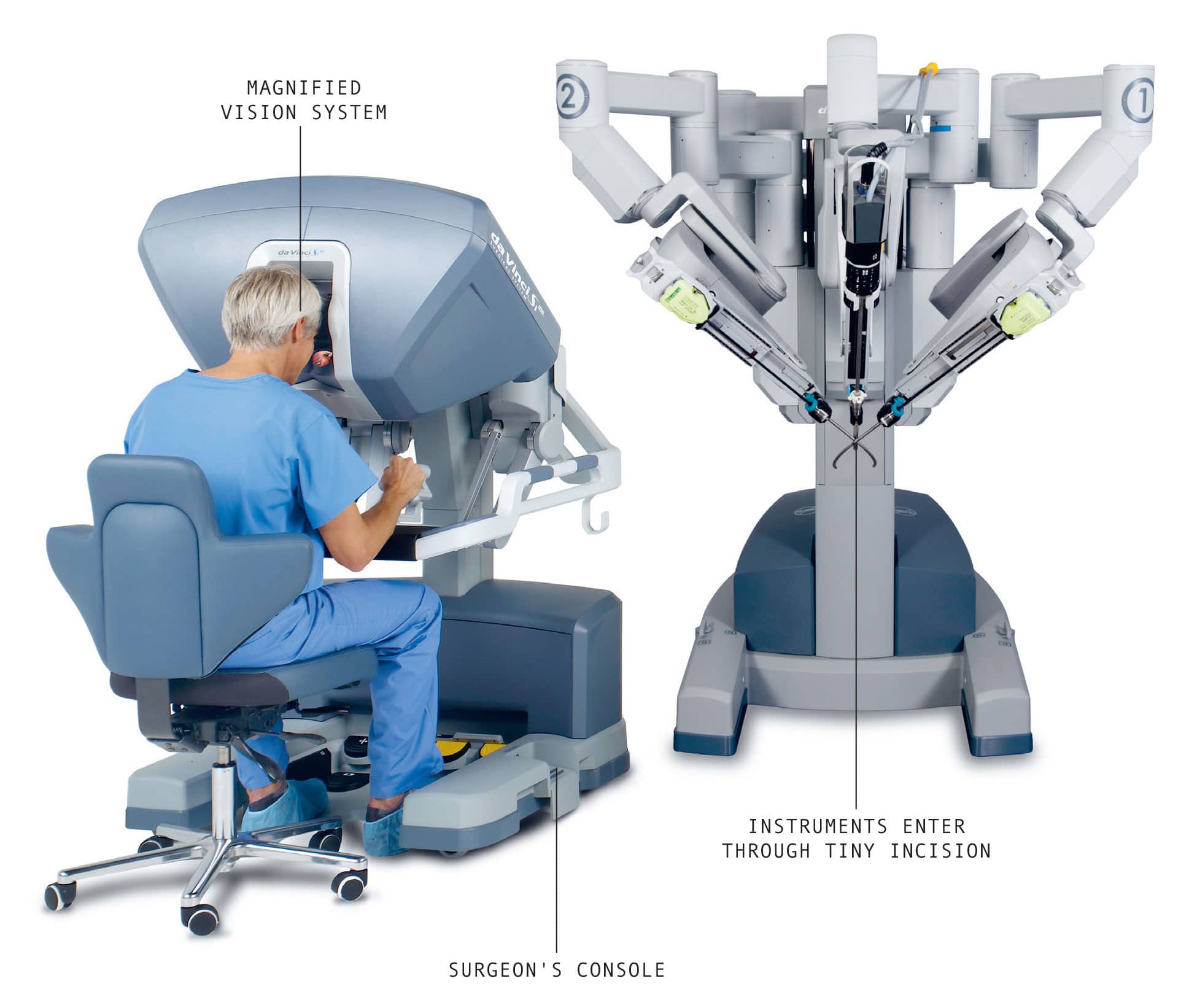

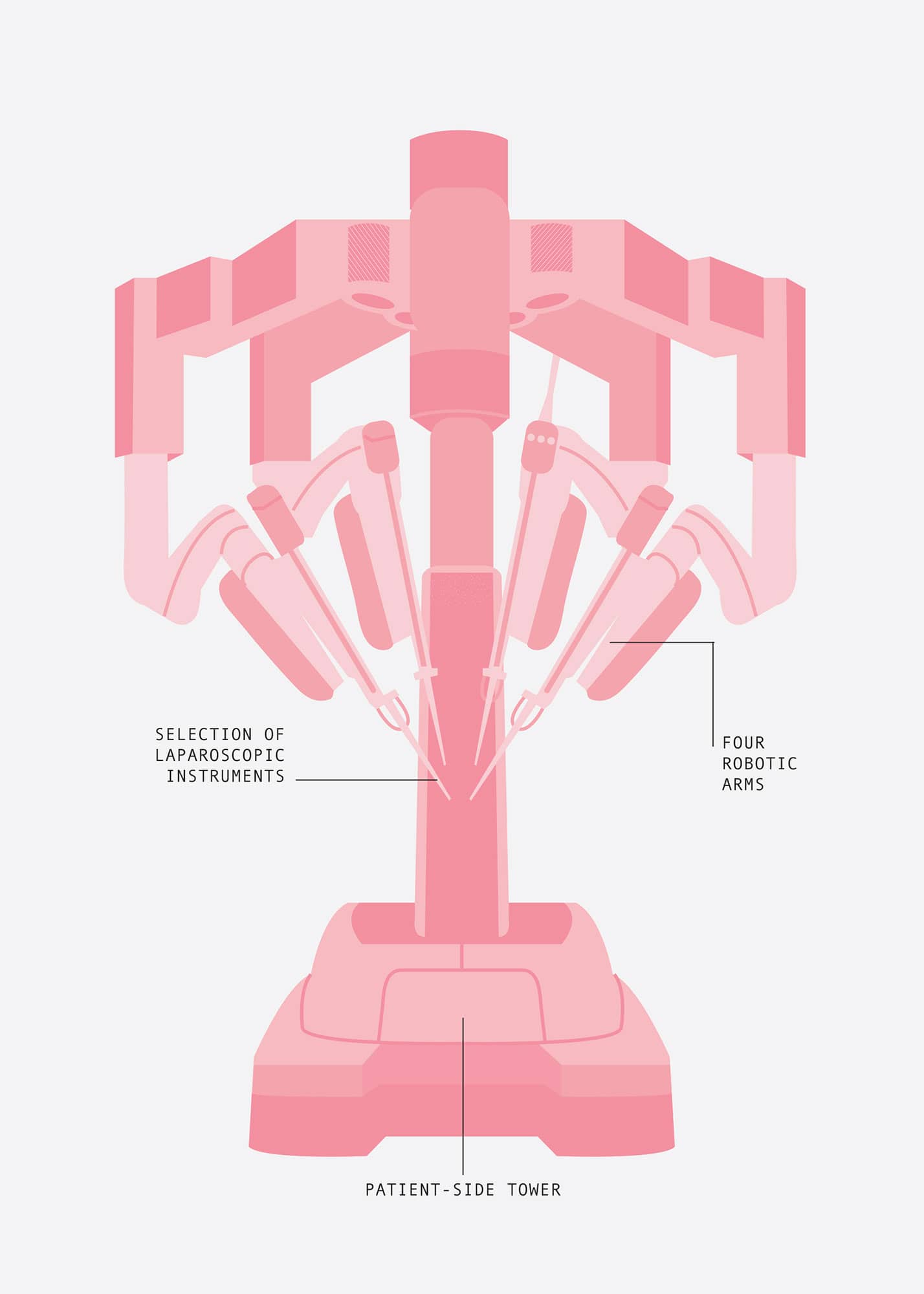

The da Vinci approach, introduced in 2000, makes such surgery simpler with miniature robotic arms. There is a patient-side cart or tower with four robotic arms, and a surgeon’s console, usually in the same room as the patient. The magnified vision system resembles those used for normal laparoscopy, but gives a 3D stereoscopic view.

The screen keeps the surgery in front of the surgeon rather than off to one side, and instead of standing, the surgeon sits at the screen with two hand controllers. The robot arms, equipped with a variety of instruments, follow the surgeon’s hand movements, automatically smoothing out any hand tremors.
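One common way to smooth a jittery signal is a low-pass filter. The sketch below uses an exponential moving average as a stand-in; Intuitive Surgical has not been quoted here on how the da Vinci actually filters tremor, so the method, the function name and the smoothing factor are all assumptions.

```python
def smooth(samples, alpha=0.2):
    """Exponential moving average: each output leans mostly on the
    previous estimate, so rapid back-and-forth jitter is damped."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    estimate = None
    for sample in samples:
        if estimate is None:
            estimate = sample
        else:
            estimate = alpha * sample + (1 - alpha) * estimate
        smoothed.append(estimate)
    return smoothed


# A hand held near position 10 with a tremor of +/- 1 on alternate samples.
shaky = [10 + (1 if i % 2 == 0 else -1) for i in range(50)]
steady = smooth(shaky)
print(max(shaky[-10:]) - min(shaky[-10:]))    # spread of the raw signal
print(max(steady[-10:]) - min(steady[-10:]))  # much smaller spread after filtering
```

A smaller `alpha` removes more tremor but makes the instrument slower to follow deliberate movements, so any real system has to balance the two.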

The robot’s wrists have seven degrees of freedom, allowing them to be moved into any position inside the incision. This eliminates the fulcrum effect, making the technique much easier to master. Surgeons can carry out complex tasks, such as stitching and tying knots, more easily inside confined spaces. This reduces the risk of accidents and complications.

The da Vinci system has become the standard for prostatectomy, removal of the prostate gland, and is also increasingly used for cardiac valve repair. It makes difficult operations routine, allowing them to be carried out more quickly and easily.

Perhaps the biggest limitation of the system is its cost; at around $2m, da Vinci is out of the reach of many hospitals. The cost gets passed on, making robot surgery expensive. This makes the system almost unique as, generally, robots are only employed where they are cheaper than humans working alone.

To ease patients’ fears of robots running amok, Intuitive Surgical emphasises that ‘Your surgeon is 100 per cent in control of the da Vinci Surgical System at all times’. There is also the US FDA (Food and Drug Administration) licensing process to consider; getting the machine certified as safe would be far more difficult were it autonomous.

The da Vinci Surgical System represents a partnership between humans and robots, much like FANUC’s collaborative robots (see here). In this case, machines provide the stability and fine control, while humans supply skill and expertise. And the results are better than either could produce working alone.

AUTOMOWER® 450X

| Specification | Value |
| --- | --- |
| Height | 31cm (12.2in) |
| Weight | 14kg (31lb) |
| Year | 1995–2015 |
| Construction material | Composite |
| Main processor | Commercial processors |
| Power source | Battery |

Mowing the lawn became a far less strenuous task with the advent of powered mowers, but it still required endless walking up and down behind the machine. No wonder that inventors started working on robot mowers from the 1960s, literally as soon as the electronics became available.

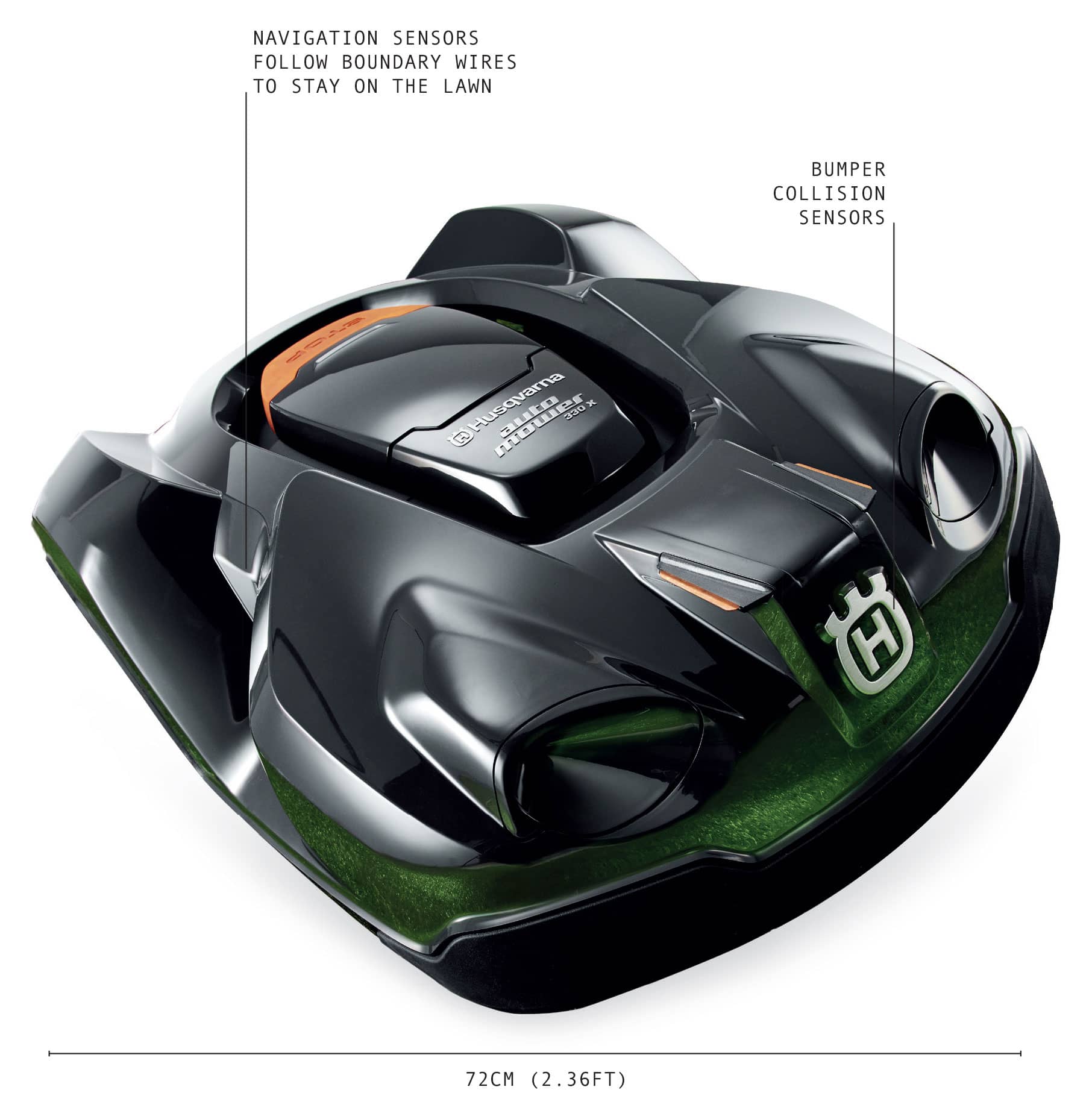

The Swedish company Husqvarna entered the world of robot lawnmowers in 1995 and has dominated the market ever since, selling its millionth robot in April 2017. Its robots are not big, noisy machines with dangerous blades that mow in one single pass. Rather, its Automower takes a gentler approach that works to the robot’s strengths.

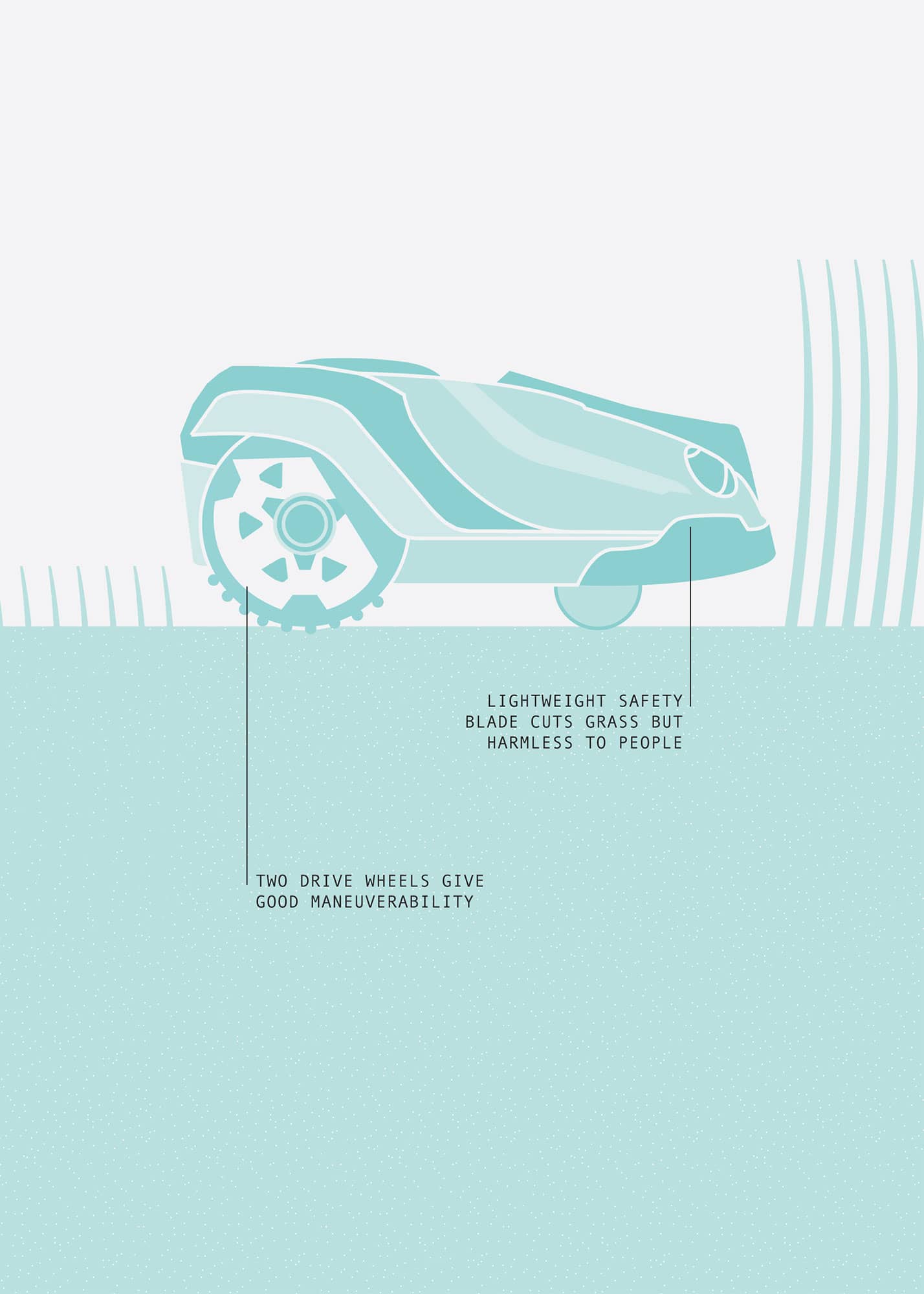

An Automower mows continuously in a random pattern rather than following the regular up-and-down lines of a human, so the same area may be covered several times. In place of the usual massive blades, an Automower has lightweight safety blades. These exert little force and pivot so that, if they strike anything heavier than grass, they just fold away. This makes the robot safe around people. The idea is to ‘shave’ the lawn, taking off just a few millimetres at a time and mowing daily to keep the lawn at a set height, rather than once a week. Grass cuttings are left on the lawn as natural fertiliser. A sensor detects whether the grass in any one spot is below the height for cutting, so it can tell when the job is done.

The current top-end model is the Automower 450X, which can handle a lawn of up to 0.5ha (1.25 acres).

Like Husqvarna’s other machines, the Automower is fully automated. The area in which it works is defined by a boundary wire, usually installed by the lawnmower supplier and laid a few centimetres underground by a special cable-laying machine. As the robot approaches this wire, its sensor detects a signal in the wire and the robot steers away from it. As well as marking the perimeter of the lawn, the wire can mark out islands within it – flowerbeds, ponds or other areas that should not be mowed. The Automower also has a collision sensor for detecting trees, garden furniture and other obstacles. Should the robot collide with something, it stops and changes direction.

The Automower runs on two large wheels, each powered by an independent electric motor. It is highly manoeuvrable and can turn on the spot. Unlike other mowers, it is designed to work in rain – according to Husqvarna, this does not affect its cutting performance. The robot is also designed to be quiet, so it can mow at night without disturbing its owner’s sleep. One of the big selling points is that the robot gives you more time to enjoy your garden.

The original 1995 Automower was solar powered, but this proved impractical and current models run on batteries. When the battery gets low – after around eighty minutes – the Automower docks with its charging station, either directed by a radio signal or by following the boundary wire or a guide wire. Once set up, an Automower manages the lawn without any human supervision. This means a lawn stays in good shape while owners are away. The operator can also set a cutting schedule via a smartphone app so that the robot only mows during the night or while they are at work.
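The mow, recharge and wait cycle described above can be pictured as a small state machine. The Python sketch below is a guess at the general shape of such logic, not Husqvarna’s firmware: the three state names, the battery thresholds and the `mowing_hours` parameter are all hypothetical.

```python
def next_state(state, battery_pct, hour, mowing_hours, low=20, full=95):
    """Decide what the mower does next.

    state: "mowing", "charging" or "parked" (hypothetical labels).
    mowing_hours: the hours of the day in which mowing is allowed.
    low / full: assumed battery thresholds, in per cent.
    """
    allowed = hour in mowing_hours
    if state == "mowing":
        if battery_pct <= low:
            return "charging"  # head back to the charging station
        return "mowing" if allowed else "parked"
    if state == "charging":
        if battery_pct < full:
            return "charging"
        return "mowing" if allowed else "parked"
    if state == "parked":
        if not allowed:
            return "parked"
        return "mowing" if battery_pct > low else "charging"
    raise ValueError(f"unknown state: {state!r}")


night_shift = range(0, 6)  # mow between midnight and 6am only
print(next_state("mowing", 15, 2, night_shift))     # battery low: go and charge
print(next_state("charging", 100, 3, night_shift))  # recharged in time: resume mowing
print(next_state("mowing", 80, 9, night_shift))     # outside the schedule: park
```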

Being an outdoor robot, the Automower has an additional feature – an antitheft alarm system. The alarm sounds when the mower is picked up and cannot be turned off without a PIN. The same PIN is required to reprogram the robot. The 450X also has GPS and geofencing; should it be removed from its designated area, it calls its owner to report itself stolen.
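Geofencing amounts to measuring how far a GPS fix lies from a home position and raising the alarm beyond a set distance. The sketch below uses the standard haversine formula for distance over the Earth’s surface; the coordinates, the 200m radius and the function names are assumptions for illustration and say nothing about how the 450X implements the feature.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean radius of the Earth in metres


def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two GPS fixes (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def outside_geofence(home, position, radius_m):
    """True when the mower is further from home than the permitted radius."""
    return distance_m(*home, *position) > radius_m


home = (59.0, 18.0)          # hypothetical garden
on_lawn = (59.0001, 18.0)    # roughly 11 m away
in_a_van = (59.01, 18.0)     # roughly 1.1 km away
print(outside_geofence(home, on_lawn, 200))
print(outside_geofence(home, in_a_van, 200))
```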

As with many robots, the commercial success of robot mowers has a lot to do with pricing and their ability to compete with cheap human labour. This could explain why, so far, they have been more popular in Europe than in the United States and are generally uncommon at that. However, the continuing success of Husqvarna suggests that, just like grass, the numbers of robot mowers will keep on growing.

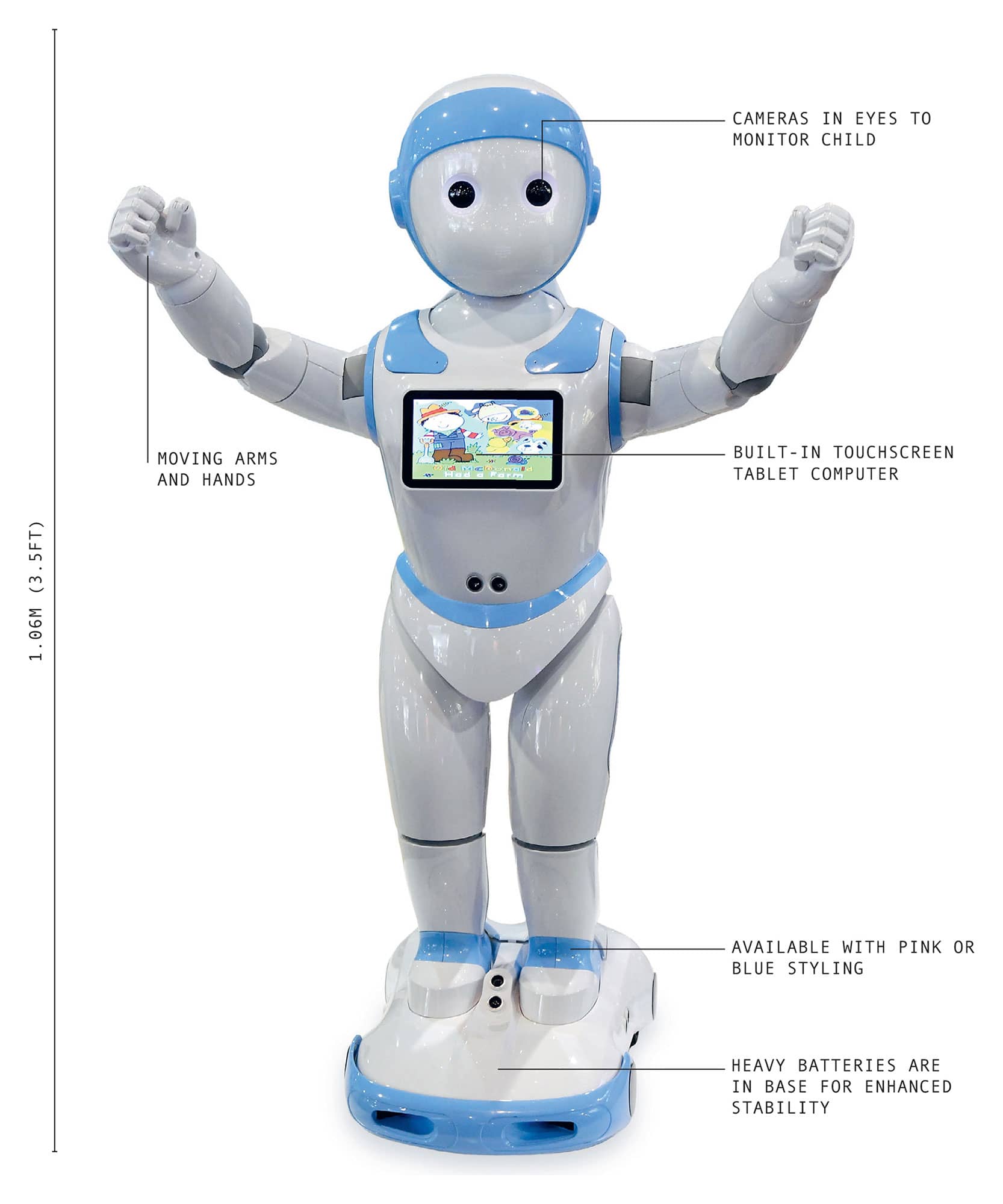

IPAL™

| Specification | Value |
| --- | --- |
| Height | 1.06m (3.5ft) |
| Weight | 13kg (29lb) |
| Year | 2016 |
| Construction material | Composite |
| Main processor | Commercial processors |
| Power source | Battery |

Tablet computers, which did not exist as consumer items until the introduction of the iPad in 2010, are now firmly established as a part of modern childhood. As of 2017, an estimated one in three children under the age of three in the UK had a tablet, and the average child spends several hours a day in front of a screen. AvatarMind, a Chinese/Silicon Valley startup founded in 2014, wants to use a tablet-based approach to help look after children and encourage them to be more active.

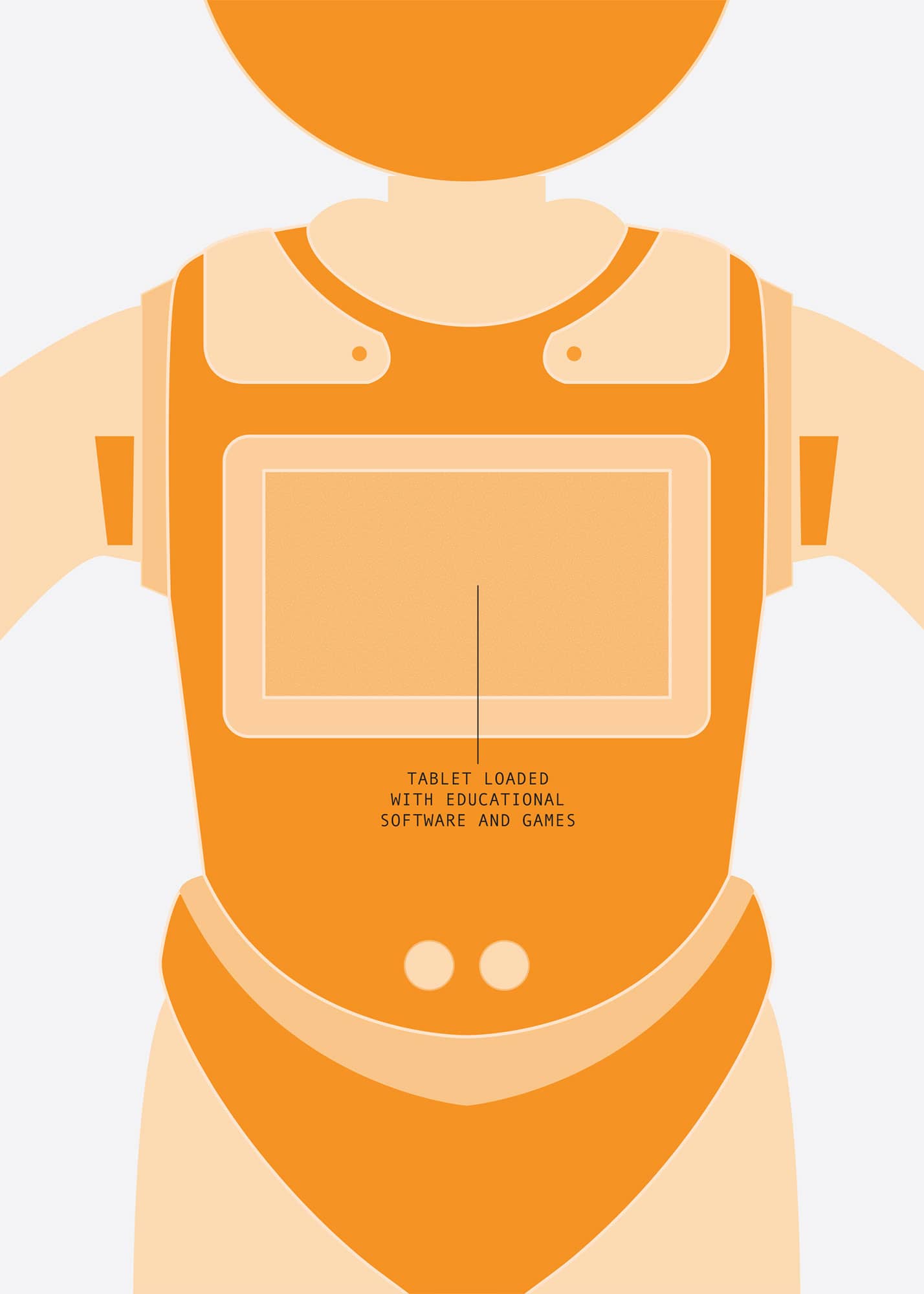

The iPal is a 1m (3.3ft) tall robot with big, appealing eyes and a touchscreen tablet in its chest. Built-in sensors allow parents to see and hear their child – each eye houses a 1.3-megapixel camera – and to monitor what a child is doing on the screen. The robot is loaded with educational software; the iPal is so inherently appealing that children simply want to use it. It teaches maths, science and languages, a range that will extend rapidly. It can also keep a continuous record of the child’s development, taking pictures or recording video every day.

An iPal runs on four concealed wheels rather than legs, for the usual reasons of economy. Batteries located towards its base give it a low centre of gravity and prevent it toppling over. Collision-avoidance sensors and software keep it from running into things.

The iPal has arms and hands that move, but they are essentially decorative – they cannot grasp or pick up objects, as this was not seen as a useful capability for a child’s robot. It can, however, play rock, paper, scissors. The iPal speaks with a squeaky child’s voice, and can sing and dance and play games. It has a conversational interface, and the makers say that it can answer a wide range of questions, such as ‘why is the sun hot?’. Unlike a human, it never gets tired of answering a stream of questions, or irritated by the same question being repeated, or bored of telling a favourite story again and again.

Not only does the iPal recognise faces, its makers claim that it can pick up on, and respond to, emotions. It also apparently learns the ‘preferences and habits’ of its host family. Both abilities will improve as the software becomes more sophisticated.

Experts have already raised several concerns about iPal. One is that the robot brings its own agenda. While the makers may not have deliberately programmed the machine to deliver propaganda, it may – like any teacher or nanny – introduce subtle values that are different to those of a child’s parents. For example, it may display a tendency to use certain websites or software, or teach creationism rather than evolution.

More seriously, robots like iPal may work too well: children may prefer interaction with the machine to that with other children or parents. Because it spends so much time in their company, this type of robot may actually know more about the child – and, on some level, understand them better – than anyone else. This may lead to ‘attachment disorders’ in which a child has trouble building relationships in later life.

There is little doubt that such a robot could cause emotional problems if it is used as a substitute for parenting, and the makers are not suggesting that iPal could be a full-time nanny. But robots might be a useful supplement when the parent is working or otherwise unavailable. Plenty of parents buy themselves time with stacks of DVDs or games for iPads; an iPal, or its descendants, seems a more benign alternative, especially if its use is limited to a few hours a day.

Exactly how far such robots could, and should, go remains an open question. Parents would rather teach their own children to swim, or ride a bike, or make pancakes. But parents cannot always be there, especially lone parents. And if a child wants to learn origami, or Swahili, or line dancing, then a patient, seemingly omniscient robot friend such as iPal may give him or her opportunities that might otherwise pass them by.

WAYMO

| Specification | Value |
| --- | --- |
| Height | 2.1m (6.9ft) |
| Weight | 2.9 tonnes (3.2 tons) |
| Year | 2016 |
| Construction material | Steel |
| Main processor | Commercial processors |
| Power source | Hybrid car: battery/petrol |

In 2015, in Austin, Texas, Steve Mahan went for a drive that made history. Mahan is legally blind; but although he was the only person in the car, he was not driving it. Waymo, the self-driving car, had carried its first passenger on a public road.

This was a triumph considering the fiasco eleven years earlier, when the Pentagon’s Defense Advanced Research Projects Agency (DARPA) staged a Grand Challenge for self-driving vehicles. DARPA offered a million-dollar cash prize for the first robot to complete a course through 100 miles (160km) of empty desert. Not one of the fifteen robot contenders finished the course; the best made it no further than 8 miles (13km) before getting stuck. However, robot sensors, processors and software were advancing rapidly. A year later, in the 2005 Grand Challenge, five driverless vehicles made it to the finish. ‘Stanley’, the winner, was developed by a team at Stanford University led by Sebastian Thrun.

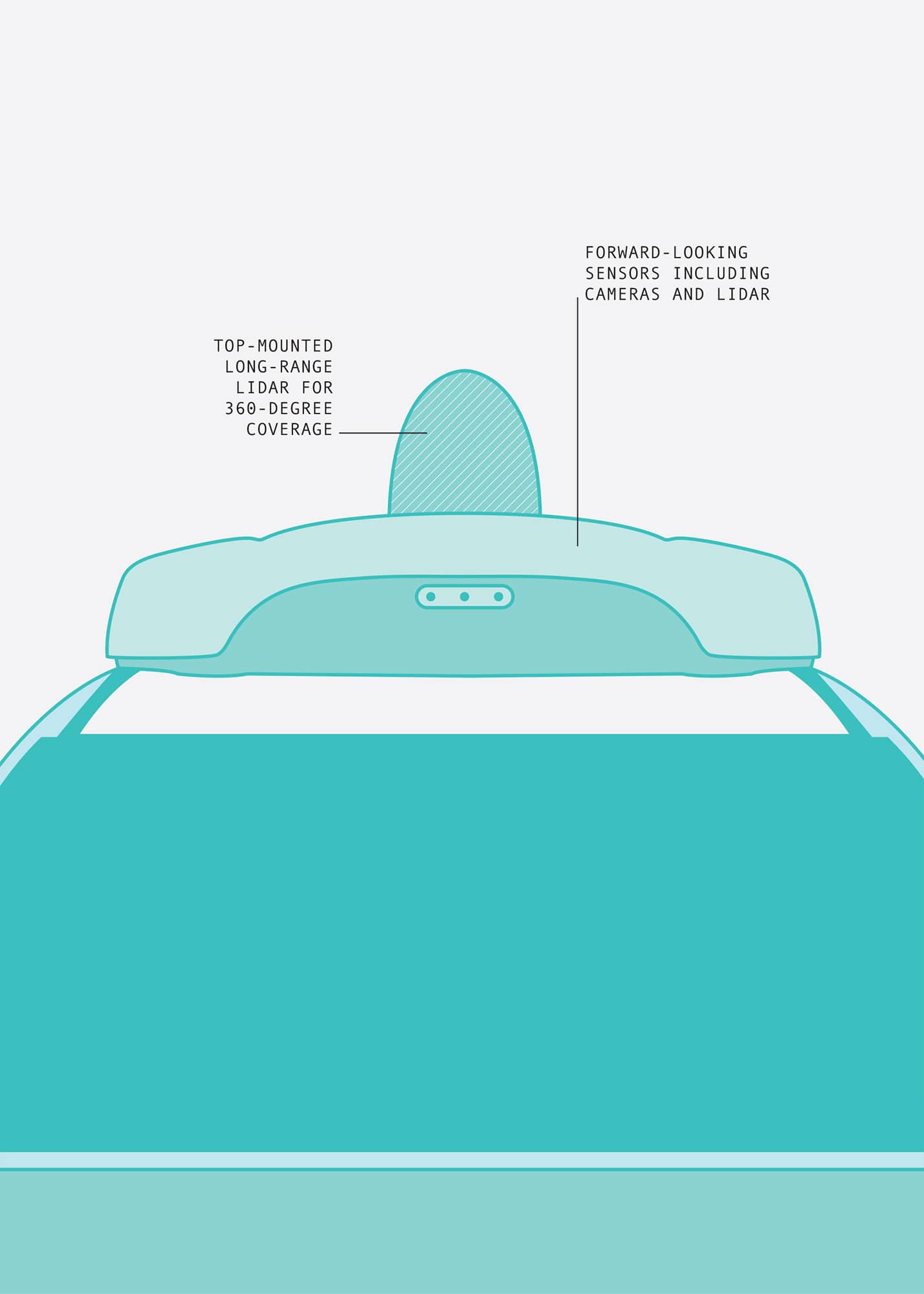

Thrun went on to lead what started as Google’s driverless car project, now known as Waymo (both Google and Waymo are subsidiaries of the same parent group, Alphabet Inc.). Stanley had many sensors, but the one that has been most crucial to the development of Waymo is the LIDAR mounted on its roof. LIDAR is a laser-based radar that can be used to build a detailed 3D map of the surrounding environment (see here). Google is a data company. One of its key products is Google Maps, in which cities are surveyed and photographed in unprecedented detail. By matching with data from Google Maps, the LIDAR can figure out its exact position and plot its route. In the original Google vehicle, the Velodyne LIDAR accounted for approximately half the $140,000 cost. Google now appears to have developed its own low-cost LIDAR.
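The idea of matching a LIDAR scan against a stored map can be shown with a deliberately tiny example. The sketch below is a brute-force scan match on a flat grid, assuming the vehicle’s heading is already known; it is not Waymo’s method, and the map, the scan offsets and the `localise` function are invented for illustration.

```python
def localise(occupied, scan, candidates):
    """Return the candidate position that best explains the scan.

    occupied: set of (x, y) map cells known to contain obstacles.
    scan: obstacle positions seen by the LIDAR, as (dx, dy) offsets
          from the vehicle.
    candidates: positions where the vehicle might be.
    """
    def score(position):
        x, y = position
        # Count how many scan points would land on a mapped obstacle.
        return sum((x + dx, y + dy) in occupied for dx, dy in scan)

    return max(candidates, key=score)


# A small made-up map and the scan a vehicle at (2, 2) would record.
street_map = {(0, 0), (0, 1), (0, 2), (5, 2), (3, 5)}
scan = [(-2, 0), (3, 0), (1, 3)]
candidates = [(x, y) for x in range(1, 5) for y in range(1, 5)]
print(localise(street_map, scan, candidates))
```

Only one candidate position lines up all three scan points with mapped obstacles, which is how a detailed map turns range readings into a position fix.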

Waymo’s software is as important as its sensors. The car has to see, understand and react appropriately to everything around it in real time. While most situations require little thought, odd or unexpected events have the potential to cause accidents: unmarked roadworks, a broken-down vehicle or an animal straying into the road.

During its development, Google carried out thousands of hours of testing, with a human ‘driver’ who kept their hands on the wheel and ensured the robot was driving safely. Google cars were in at least eighteen accidents, all but one of which resulted from human error. In the one exception, the robot, which was pulling out slowly to avoid sandbags placed around a storm drain, was hit by a bus. There were no casualties and only slight damage.

Inside sources suggest that the Waymo car will be electric, but Waymo has not yet revealed what the body of its car will look like. There may even be several versions. Google’s test vehicles included the Toyota Prius, Audi TT and Lexus RX450h. Google also had a custom vehicle assembled by Roush, a small Detroit-based company that previously worked on projects ranging from aircraft to amusement-park rides. Companies developing rival vehicles include traditional car manufacturers, such as Ford, and tech giants such as Apple. So far, Google’s deep reserves and commitment to self-driving vehicles are keeping it ahead.

Robot vehicles could transform city centres, which are largely designed around cars and car parking. Car parks will cease to be so important when vehicles can park themselves a convenient distance away. Many commentators suspect that the new technology will see car ownership fall off; a driverless car is effectively a robot taxi, so owning one may be less of an issue. Alphabet Inc. has already announced a deal with online taxi company Lyft.

Most cars are only used for an hour or two a day; shared driverless vehicles would mean more efficient usage, making car travel cheaper and reducing the number of parked cars clogging city streets. And when you consider that some ninety per cent of car accidents are caused by driver error, often exacerbated by carelessness, fatigue or alcohol, self-driving cars are ultimately likely to be much safer too.

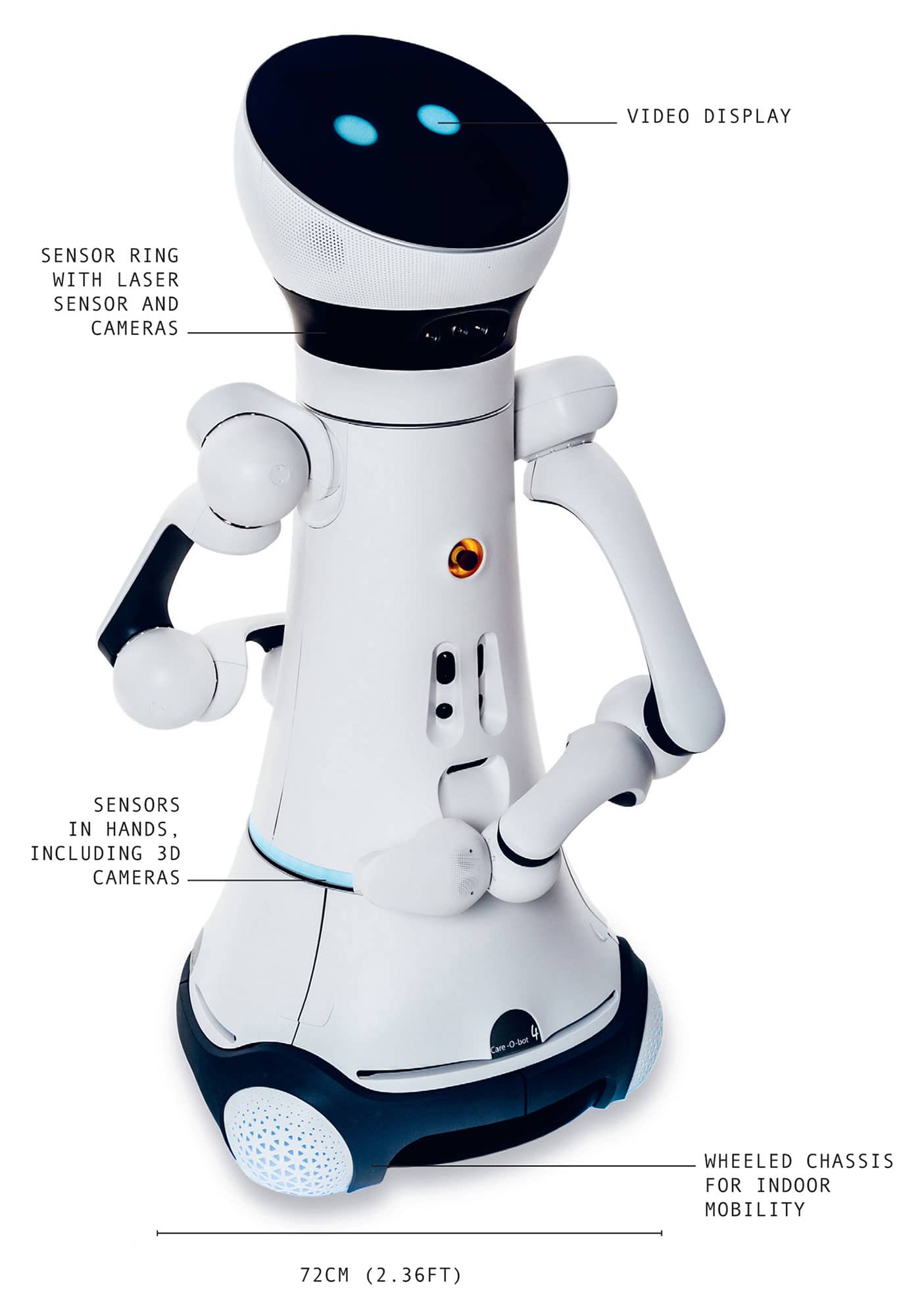

CARE-O-BOT 4

| Specification | Value |
| --- | --- |
| Height | 1.58m (5.18ft) |
| Weight | 140kg (309lb) |
| Year | 2014 |
| Construction material | Composite |
| Main processor | 4–6 Intel NUC i5 256GB, 8GB RAM |
| Power source | Lithium ion battery |

A demographic time bomb is ticking: in the coming decades, there will be more elderly people needing care and fewer young people to care for them. German company Fraunhofer IPA want machines like their Care-O-bot 4 to take some of the load.

Japan has already started promoting elder-care robots, initially aimed at providing interaction and stimulation rather than practical care. PARO is a therapeutic robot that looks like a baby seal, developed for dementia patients; it reduces stress and improves the interaction between patients and human caregivers. Robots like PARO can do some good, as can computers that remind patients about appointments, ensure they take their medication and generally check that they are well and going about their usual activities. But in order to assist human carers more effectively, robots need to be able to help with daily life.

The Care-O-bot 4 is the fourth generation of robots developed by Fraunhofer. It is a mobile, modular testbed for researchers developing caring robots. Its software is ‘open source’, which means it is easy for users to program it themselves and developers to create their own software.

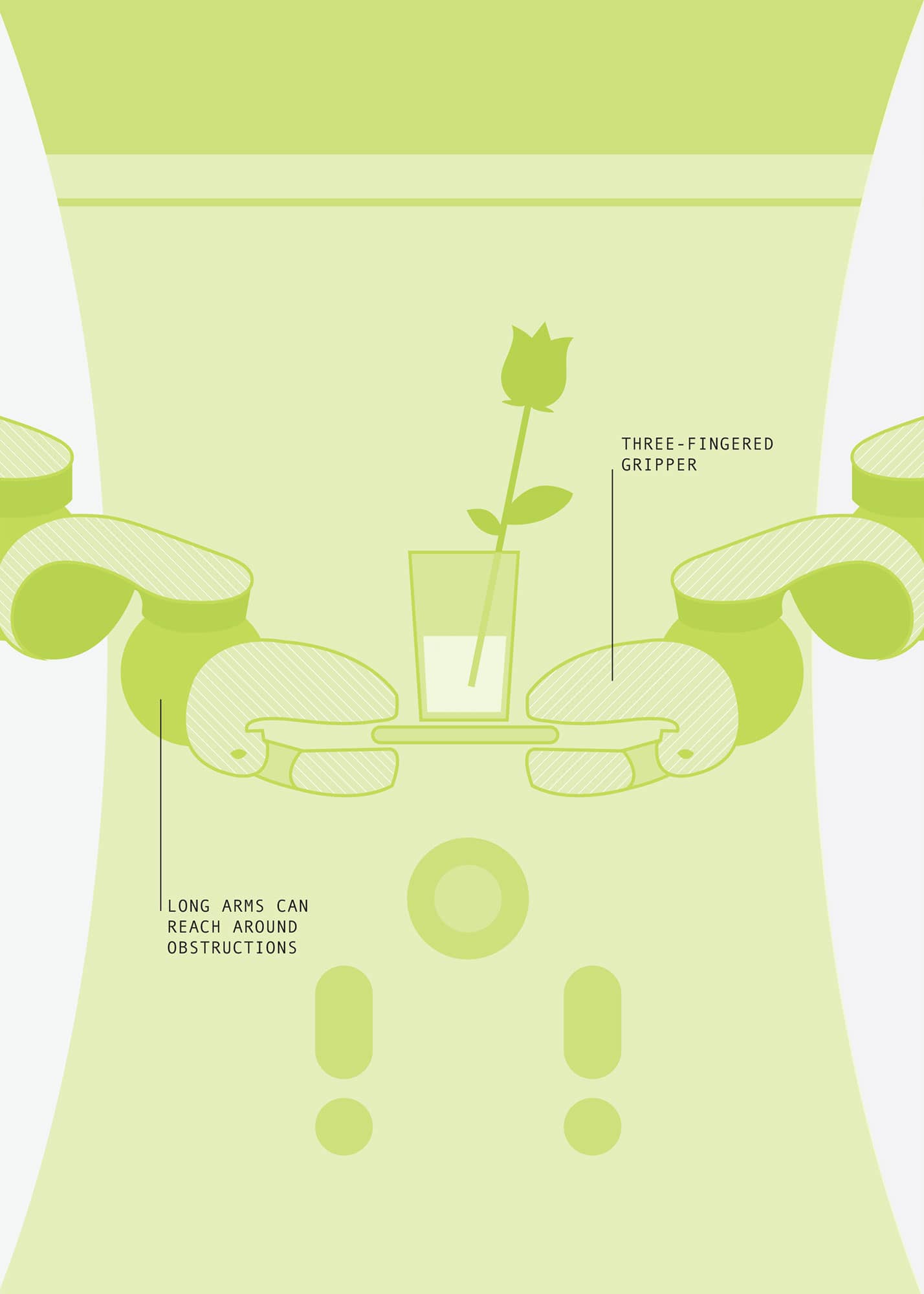

The simplest variant of the Care-O-bot is a mobile serving trolley that trundles around on four independently steered wheels. A sensor ring has a laser distance sensor and stereo cameras. The robot combines data from the sensors to create a 3D colour picture of its surroundings, enabling it to plan a route and navigate safely, avoiding what the manufacturer calls ‘dynamic obstacles’ – moving people.

Microphones, speakers, cameras and a video screen enable the robot to function as a ‘telepresence platform’, a communications terminal that a patient can use to talk to carers, doctors or others without having to master a tablet or other new technology. And of course, carers can use the robot to check on their patient’s health.

The Care-O-bot becomes more capable with the addition of one or two arms equipped with spherical joints. Each arm has its own 3D camera, light and laser pointer, and a three-fingered gripper with touch sensors. These allow the robot to adjust its grasping force to pick up objects securely without damaging them. The arm can reach down to pick up objects from the floor or up to high shelves, and it can reach around obstacles without knocking them over.
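Adjusting grasping force from touch feedback can be sketched as a loop that squeezes a little harder until the object stops slipping. The Python below is a simplified illustration rather than Fraunhofer’s control software: `slips_at` stands in for a hypothetical touch-sensor check, and the force values are arbitrary units chosen for the example.

```python
def settle_grip(slips_at, start=1.0, step=0.5, limit=10.0):
    """Raise grip force in small steps until the object stops slipping.

    slips_at(force) is a hypothetical sensor check that returns True
    while the object is still slipping at the given force.
    Raises RuntimeError rather than exceed the force limit, so a
    fragile object is never crushed.
    """
    force = start
    while slips_at(force):
        force += step
        if force > limit:
            raise RuntimeError("object cannot be held within the force limit")
    return force


# A glass that stops slipping once the grip reaches 3 units of force.
print(settle_grip(lambda force: force < 3.0))
```

Starting gently and stopping at the first secure grip is what lets the same gripper handle both a hairbrush and a vase.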

The Care-O-bot has an adaptive object-recognition system to identify new objects. Once an item is placed in its gripper, the robot rotates it to take pictures from all angles and identifies ‘feature points’ for recognition and correct orientation. Having been shown an object, the robot can obey requests, such as ‘fetch my hairbrush’ or ‘put the vase on the table’.

Key to the robot’s acceptance among elderly users was finding ways in which they could interact with it in a natural, intuitive way. A complicated interface with drop-down menus was not going to work and so the Care-O-bot has voice and gesture recognition. In addition, its torso has two flexible joints that allow it to make body gestures, and a head display to indicate ‘mood’. These features make the robot more of a person and less of a thing.

To start with, the Care-O-bot will act as a general butler and domestic helper, able to help with food preparation and serving drinks; ultimately it may do much more. Just like earlier caring robots, it will have a social function as well as a practical one, providing company and assistance.

There is some resistance to the idea of robot carers, owing to the risk that they could increase social isolation. A carer is not just a pair of helping hands, but provides the human touch – empathy, warmth and someone to talk to. The risk is that robots will offer a cheap and easy means to look after elderly people in isolation, leaving them without human contact.

Ideally however, by taking on chores such as cooking and tidying, robots like the Care-O-bot 4 will free up time for human carers who can then concentrate on the caring part of their job. And by providing assistance – and a means of connecting to the Internet and to the world – they will improve the quality of life of elderly people.

FLEX® ROBOTIC SYSTEM

| Specification | Value |
| --- | --- |
| Height | 136cm (4.5ft) |
| Weight | 196kg (430lb) |
| Year | 2014 |
| Construction material | Steel |
| Main processor | Commercial processors |
| Power source | External mains electricity |

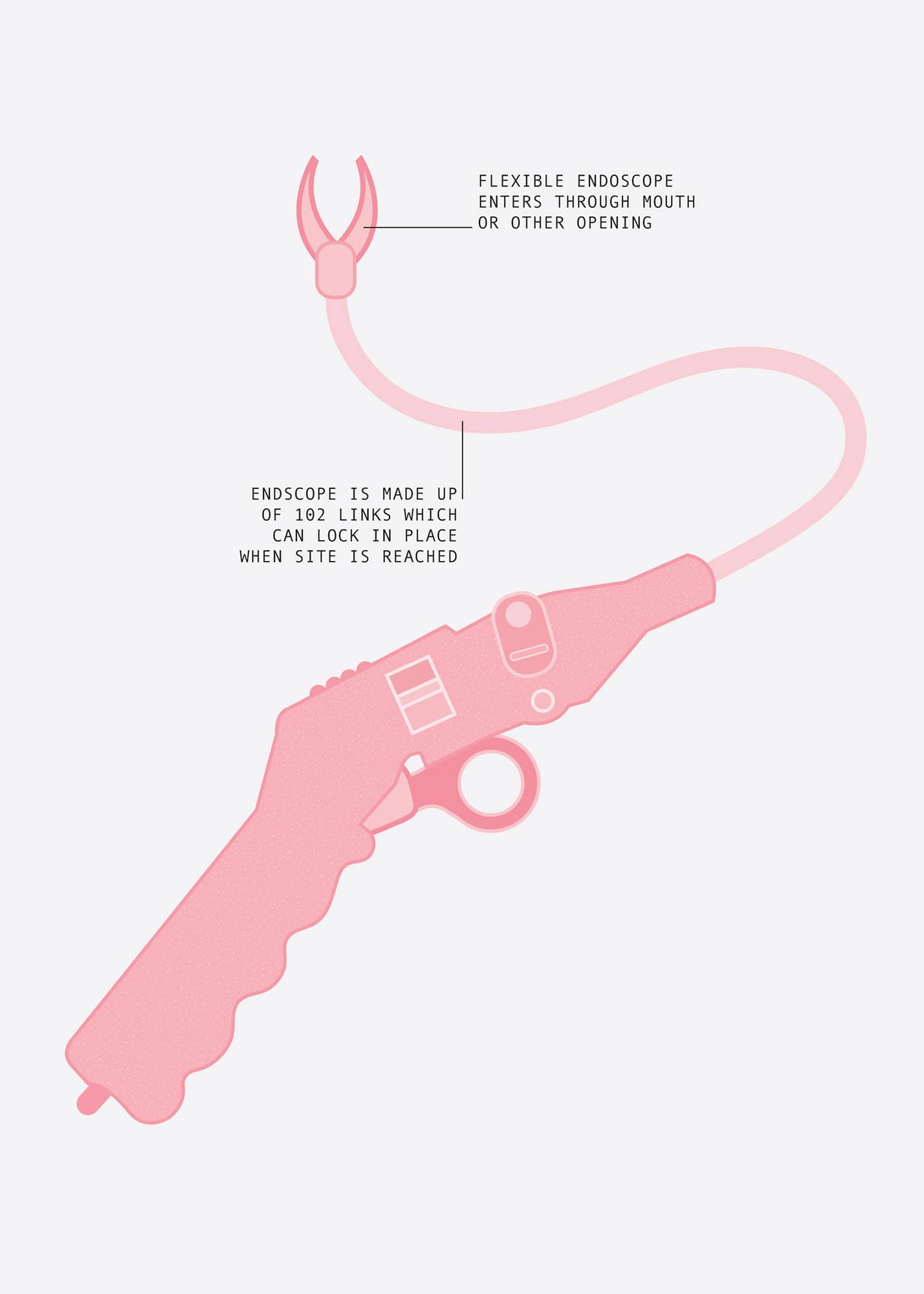

Robots such as the da Vinci Surgical System (see here) still enter the body via a surgical incision, even if the incision is as small as possible. But there is another way: accessing the interior through natural orifices. This technique, called endoscopy, is well established for imaging. The science of robotics is extending this approach further, with devices that can crawl into the human body and carry out operations.

Philipp Bozzini invented the first rigid endoscope in 1806. His Lichtleiter, or ‘light conductor’, allowed him to look into patients’ ears, nasal cavities and rectums without a need for surgery. Later physicians developed flexible endoscopes that could travel further into the body. They then added a needle for taking tissue samples and there is now a growing range of surgical tools that can be fitted to an endoscope.

When Professor Howie Choset of the Robotics Institute at Carnegie Mellon University developed the Flex Robotic System, he took endoscopy to the next level. Medrobotics Corp of Raynham in Massachusetts launched Choset’s machine in 2014. As with da Vinci, the system has two components: the robot itself, which is positioned beside the patient, and a surgeon’s control console, normally in the same room.

A flexible robot travels down a patient’s throat, under the control of a surgeon using a joystick, in turn guided by the magnified image from a video camera in the robot’s nose. The robot is a mechanical snake, consisting of a series of articulated links capable of flexing around 180 degrees. It is powered by four motors, and its many joints give Flex an impressive 102 degrees of freedom.

When the surgeon reaches the site of interest, the articulated sections are locked in place and the robot becomes a stable operating platform. Two small tubes inside the endoscope allow surgical instruments to be fed to the site. These include scalpels and scissors, grippers and a needle able to stitch up wounds. A surgeon using the video camera can use grippers to hold a piece of tissue and then cut it with the scalpel.

The makers of Flex say that the robot’s high level of manoeuvrability within the body allows surgeons to carry out operations in places that would previously have been difficult or impossible to reach without an incision. In initial US trials in 2016, Flex had a ninety-four per cent success rate in reaching the site of a tumour, with fifty-eight per cent of those sites being classified as ‘difficult to reach’ by conventional methods.

The Flex represents a new level of minimally invasive surgery and, like the da Vinci, it is very much a remote-controlled system rather than an autonomous one. The US FDA licensing process required that a surgeon be in control at all times, otherwise the machine would not have been certified as safe to use. Safety features include a controller to ensure that there are no accidental movements. This prevents the robot from progressing unless the surgeon has a foot on a pedal. The only truly autonomous feature is retraction: following an operation, the Flex automatically withdraws the same way it came.
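The two safety behaviours described above, a pedal that gates every advance and a retraction that retraces the inbound route, can be sketched in a few lines of Python. This is an illustrative toy and not Medrobotics’ control software: the class name `SnakeRobot`, its methods and the idea of storing each step as a (bend in degrees, distance in millimetres) pair are all assumptions made for the example.

```python
class SnakeRobot:
    """Toy model of a pedal-gated advance and an automatic retrace (hypothetical)."""

    def __init__(self):
        self.path = []  # steps taken so far, oldest first

    def advance(self, step, pedal_pressed):
        """Record a step only while the surgeon holds the pedal down."""
        if not pedal_pressed:
            return False  # interlock: joystick input is ignored without the pedal
        self.path.append(step)
        return True

    def retract(self):
        """Return the recorded steps newest first, emptying the stored path."""
        steps_to_undo = []
        while self.path:
            steps_to_undo.append(self.path.pop())
        return steps_to_undo


robot = SnakeRobot()
robot.advance((10, 5), pedal_pressed=True)
robot.advance((-20, 8), pedal_pressed=False)  # rejected: pedal is up
robot.advance((15, 4), pedal_pressed=True)
print(robot.retract())  # the two accepted steps, in reverse order
```

Keeping the path as a simple last-in, first-out list is what makes the withdrawal predictable: the robot never has to plan a new route out, only undo the one it has already taken.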

Professor Choset sees his Flex robot as the start of a new type of healthcare. Unlike the da Vinci, which tends to be confined to elite medical institutions, the Flex is highly affordable. Minimally invasive surgery that is carried out inside the patient does not necessarily require a hospital stay – or even a hospital. For routine operations, Choset believes that surgical procedures might be delegated to non-surgeons. Such a combination might lead to what Choset calls the ‘democratisation’ of surgery, with operations being carried out far more quickly and easily, with shorter waiting lists and far fewer patients even visiting a hospital.

With the cost and complexity of medical treatment steadily spiralling upwards, robots such as Flex may start to reverse the trend. They may not be welcomed by everyone in the medical industry, especially well-paid surgeons who stand to lose out. But if flexible robots can safely provide better outcomes at lower costs, there is no reason why they should not become more widely used as surgical tools in time.

AMAZON PRIME AIR

| Specification | Detail |
| --- | --- |
| Height | 30cm (12in) estimated |
| Weight | 6kg (13.2lb) estimated |
| Year | 2013 |
| Construction material | Composite |
| Main processor | Commercial processors |
| Power source | Battery |

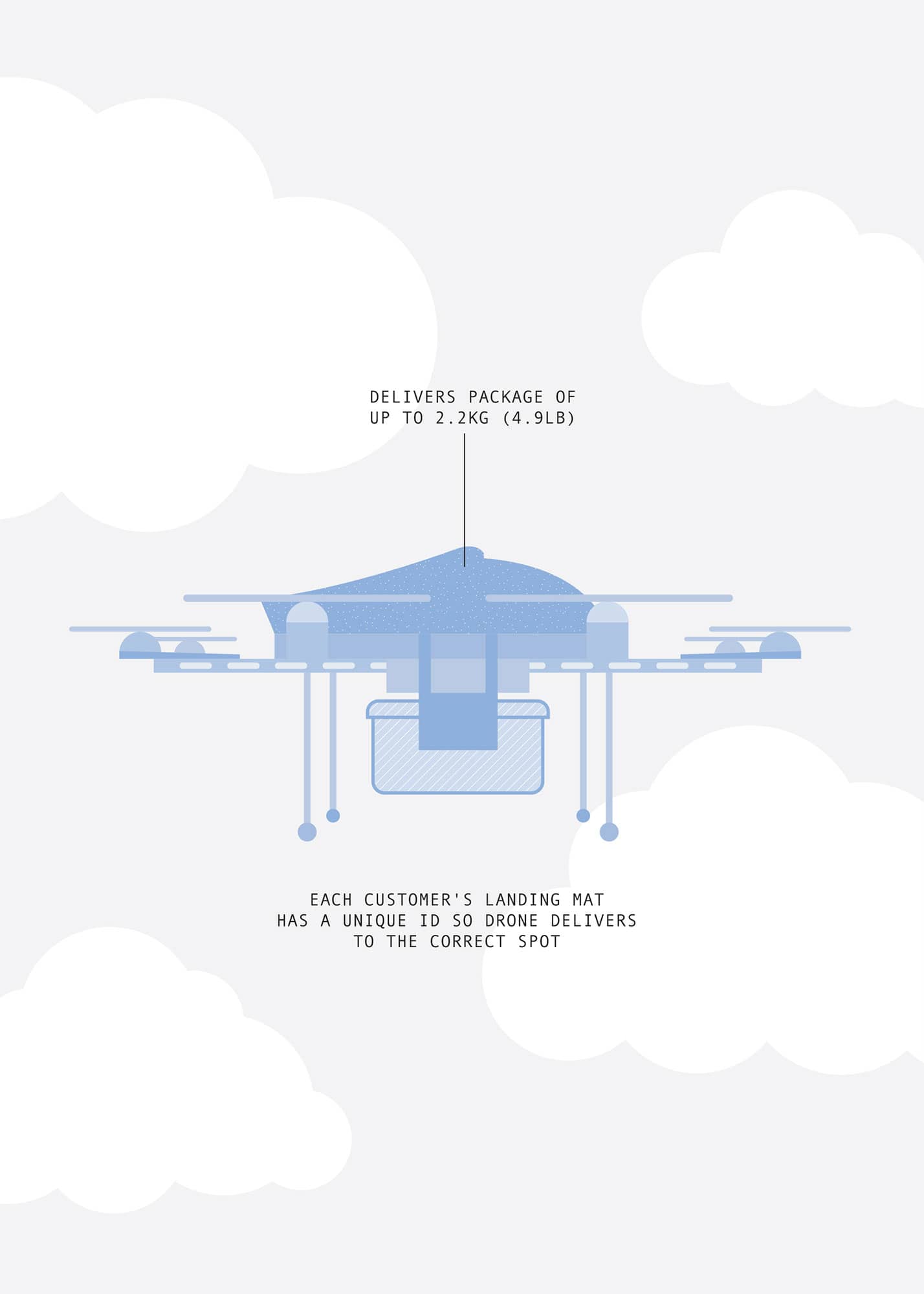

Amazon is by far the world’s most successful online retailer. Customers can order from more than one hundred million items, and a giant warehouse-cum-delivery centre usually ensures that goods are delivered the next day. The company’s growth has been fuelled by aggressive innovation – using Kiva robots for ‘picking and packing’, for example (see here). But when the company’s Christmas 2013 advert featured a delivery drone, it looked more like a publicity stunt than a real plan.

Initial, frivolous demonstrations had shown that it was technically possible for a quadcopter to deliver pizza, beer or related items, but the idea had never been presented as a serious business proposition. While the Amazon advert seemed to be an amusing attention-grabber, the company did indeed hire teams of drone developers, set up testing sites, and lobby for changes in the air regulations to permit commercial drone deliveries.

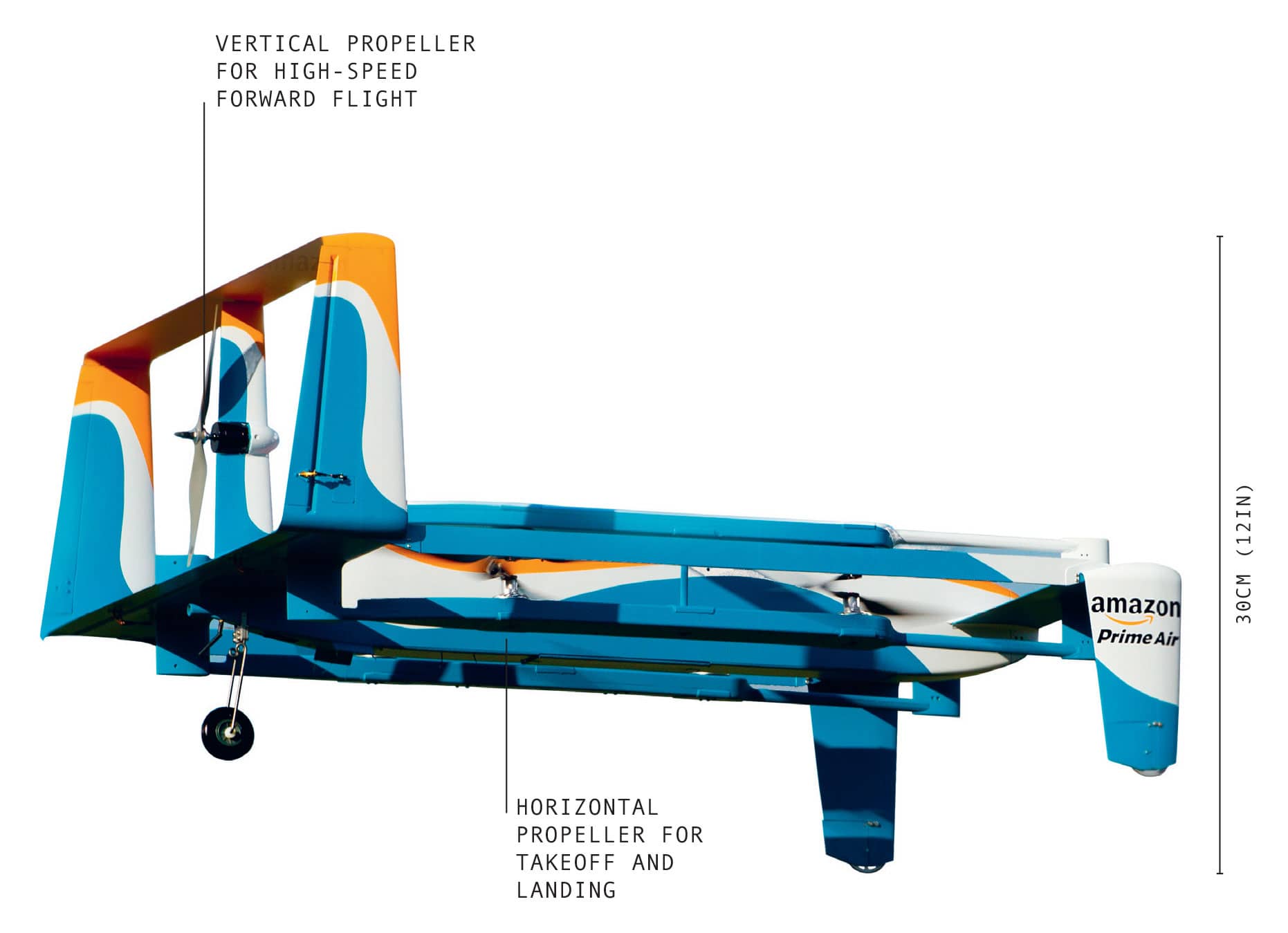

Not yet up and running, Amazon Prime Air will not fulfil all of Amazon’s orders. It will be a premium service for items needed urgently, delivering packages of less than 2.2kg (4.8lb) within 30 minutes. This will require an automated setup in which drones fly themselves without human input.

The 2013 advert gave some idea of Amazon’s vision. The drone is battery-driven, as are consumer quadcopters, but is of a conversion type. This means it takes off and lands vertically, like a helicopter with rotors, but in the air, it is driven forwards horizontally by a propeller, like an aeroplane. This allows the drone to fly at up to 60mph (96kmh) for a distance of 8 miles (13km), and gives it an essential vertical-landing capability. In the video, the customer lays out a landing mat, and the drone gently sets down the delivery upon it. Landing pads may have a unique identifier, such as a barcode, so the drone can pick out the right one when there are several landing mats in a small area.

The first ‘real’ Amazon drone delivery was carried out in December 2016 in the UK, where a small-scale trial of the service is underway. The order consisted of a TV streaming stick and a bag of popcorn; again, it looked like a publicity stunt. The drone itself was very different to the 2013 version, being a quadcopter with rotors protected by a plastic guard so they are not a safety hazard.

The big issue with this sort of delivery is that drones must be able to fly safely over densely populated urban areas. At present, drones are not allowed to fly out of sight without human control. Amazon is working on a sense-and-avoid system using cameras and other sensors to spot obstacles including trees, buildings, wires, birds and other drones. Amazon’s plan is that its drones will operate in the airspace below 400ft (120m), keeping them out of the way of manned aircraft. Aviation authorities are still considering how to regulate such activities: drones will not only need to be safe, they will need to be proven safe. Even a drone weighing a few kilos could cause severe injury at 60mph (96kmh).
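To put a rough number on that last point, the energy of a moving object is half its mass multiplied by the square of its speed. The short Python sketch below applies that textbook formula to the estimated 6kg weight quoted for the 2013 concept drone, flying at its stated top speed of 60mph. The function name is invented for the example, and the result says nothing about how an impact would actually be absorbed; it is only the energy the drone carries.

```python
MPH_TO_METRES_PER_SECOND = 0.44704  # exact conversion factor


def kinetic_energy_joules(mass_kg, speed_mph):
    """Kinetic energy E = 1/2 * m * v^2, with the speed converted to m/s."""
    speed_ms = speed_mph * MPH_TO_METRES_PER_SECOND
    return 0.5 * mass_kg * speed_ms ** 2


# Assumed figures: 6kg estimated weight, 60mph top speed.
energy = kinetic_energy_joules(6, 60)
print(f"{energy:.0f} joules")
```

The answer comes out at a little over 2,000 joules. Because energy rises with the square of speed, halving the speed to 30mph would cut that figure to a quarter.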

The United States is likely to be one of the toughest areas to get drone deliveries approved, so Amazon has pilot projects in other places. A working system in one country may help pave the way for regulations elsewhere.

In the meantime, Amazon has registered a steady stream of patents relating to Amazon Prime Air, covering ideas as diverse as airships acting as drone base stations, recharging stations on skyscrapers and orders delivered by parachute. The drones themselves may look different, but the drive towards a drone-based service seems unwavering.

Many other companies are working on similar concepts. These range from Matternet, who intend to set up a drone network to deliver drugs and medical supplies to remote villages in developing countries, to traditional delivery companies such as DHL and UPS experimenting with drones flying from delivery trucks. Internet giant Google has a rival delivery drone project known as ‘Project Wing’.

Such a broad overall level of investment so far suggests a strong belief in delivery drones. Amazon may be the first to get their network going, but within a few years drone deliveries could be as commonplace as emails.

BEBIONIC HAND

| Specification | Detail |
| --- | --- |
| Diameter | 5cm (2in) diameter at wrist |
| Weight | 598g (1.3lb) |
| Year | 2010 |
| Construction material | Aluminium alloy |
| Main processor | Commercial processors |
| Power source | Battery |

The most direct application of Leonardo da Vinci’s concept of machines imitating human articulation lies in prosthetics. Replacement limbs have evolved from simple hooks or lifelike, but useless, wooden models to sophisticated robotic devices able to match the actions and movements of a human hand.

UK company RSL Steeper claims that the bebionic hand it developed is the most advanced prosthetic hand in the world. Wearers can carry out a wide range of activities that the rest of us take for granted, but that can be challenging for amputees: picking up a glass, turning a key in a lock or carrying a bag, for example.

The term bionic is a combination of ‘bio’ (living) and ‘ic’ (like, or having the qualities of) and was popularised by the TV series, The Six Million Dollar Man, about a test pilot with limbs replaced with super-powered prosthetics.

The bebionic hand is battery-powered, and controlled directly by the user’s own muscles. Each of its five digits has its own independent motor. An electronic sensor detects changes in the skin conductivity over control muscles in the wearer’s wrist, and by working these muscles the wearer activates a grip. This ‘myoelectric’ control has been used for some years, but what distinguishes bebionic is the level of control that it provides with the different grip options it offers. There are fourteen basic hand positions or grip patterns for different tasks.

One set of grip patterns is available directly, and users can access another set by pressing a switch on the back of the hand. A user selects their own primary and secondary grip patterns based on their preferences and needs. The manufacturers say that operating the hand becomes instinctive after some training and practice.

What makes the hand genuinely robotic is that it has its own intelligence: embedded microprocessors monitor the position of each finger for precise, reliable control. An ‘auto grip’ function senses when something is slipping out of the hand’s grasp and adjusts the grip to keep hold of it. For some grips, it is necessary to shift the thumb manually between the opposed and non-opposed position. The opposed position is used in grips such as the ‘tripod’ – for holding things like pens – in which the index and middle fingers meet the thumb. An opposed thumb is also used for the ‘power’ grip where all four fingers close towards the thumb – this is the grip you use to pick up a ball or a piece of fruit, or cylindrical objects such as bottles, glasses or kitchen and garden tools.

Non-opposed hand positions include the ‘finger point’, where the middle, ring, and little fingers are folded into the palm and the thumb rests on the middle finger, with the index finger partially extended. This is the position used for typing – using just the index finger on each hand – or pressing buttons. The ‘mouse’ grip, as the name suggests, is handy for operating a computer mouse, while ‘open palm’ is useful for carrying large objects, such as trays or boxes. The hand can carry weights of up to 45kg (99lb) and is strong enough to crush a can. Proportional speed control ensures the right level of force; as well as crushing cans the user can pick up something as delicate as an egg without breaking it.

The bare hand looks robotic, but is covered in a removable silicone glove, which comes in nineteen different shades to match a user’s skin tone, complete with customised silicone nails. Users may tend to reject prosthetics for failing to feel like a part of themselves, but bebionic has overcome the resistance of many with its strong combination of looks and functionality.

It may not provide super-strength – a notable feature of the original Bionic Man – but the bebionic hand is improving the daily lives of its users in ways never seen before.

MAVIC PRO

| Specification | Detail |
| --- | --- |
| Height | 8.3cm (3.25in) |
| Weight | 743g (1.63lb) |
| Year | 2016 |
| Construction material | Plastic |
| Main processor | Proprietary processors |
| Power source | Lithium ion battery |

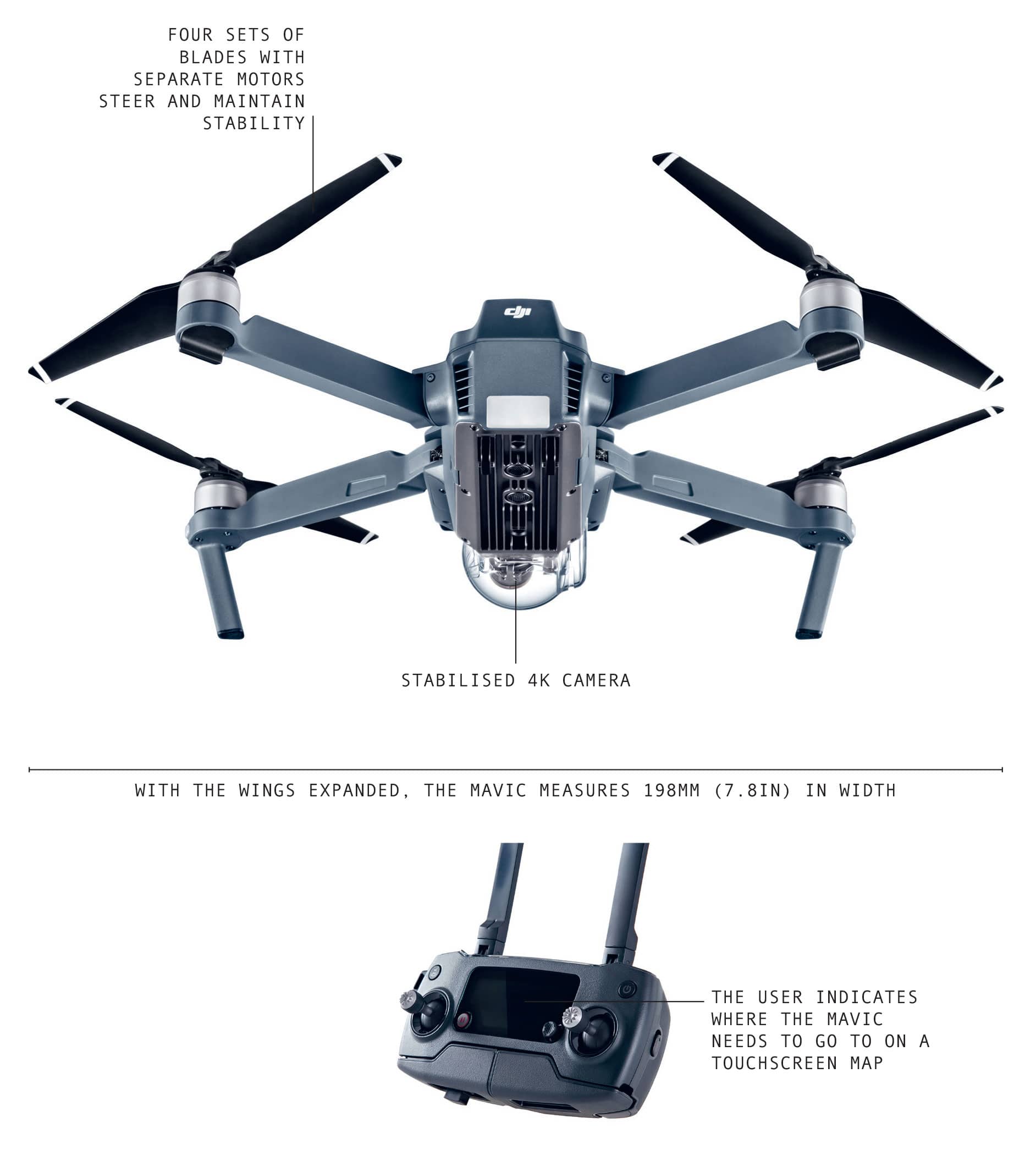

Miniature quadcopters, such as the Mavic Pro, are an increasingly common sight. Millions of enthusiasts use them to shoot spectacular aerial videos, and their popularity has made Chinese makers Da-Jiang Innovations (DJI) the most successful drone manufacturer in the world. While the Mavic Pro may look like a toy, closer inspection reveals a highly sophisticated autonomous flying robot.

Multicopters can be traced back to US engineer Mike Dammarm, who developed his first battery-powered quadcopter in the early 1990s. Standard helicopters have a horizontal rotor blade for lift and a vertical rotor for stability. They steer by changing the angle or pitch of their blades, which requires an elaborate mechanical arrangement. The quadrotor is mechanically far simpler. Four sets of blades, each with separate motors, steer and maintain stability by speeding up or slowing down different rotors. This would be impossible for a human pilot to control, but electronics handle the task with ease. The Mavic Pro can remain perfectly stationary in the air, even in changing wind and weather conditions. It can also tilt to fly forward at high speed.
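The idea of steering purely by speeding up or slowing down rotors can be shown with a simple ‘mixer’, the piece of logic that turns a pilot’s commands into four motor speeds. The Python sketch below is a generic textbook arrangement, not DJI’s flight software. The function name `mix`, the 0 to 1 speed scale and the sign conventions (positive roll tilts right, positive pitch lifts the nose, diagonal rotor pairs spin in the same direction) are all assumptions chosen for the example.

```python
def mix(throttle, roll, pitch, yaw):
    """Turn four commands into speeds for an X-shaped quadcopter.

    Returns (front_left, front_right, rear_left, rear_right), each
    clamped to the range 0.0 to 1.0. Sign conventions are assumed.
    """
    front_left = throttle + roll + pitch - yaw
    front_right = throttle - roll + pitch + yaw
    rear_left = throttle + roll - pitch + yaw
    rear_right = throttle - roll - pitch - yaw
    speeds = (front_left, front_right, rear_left, rear_right)
    return tuple(max(0.0, min(1.0, s)) for s in speeds)


print(mix(0.5, 0.0, 0.0, 0.0))  # hover: all four rotors equal
print(mix(0.5, 0.1, 0.0, 0.0))  # roll right: left rotors faster than right
print(mix(0.5, 0.0, 0.0, 0.1))  # yaw: one diagonal pair faster than the other
```

In each case the speeds added to some rotors are taken away from others, so the total lift stays roughly the same while the craft tilts or turns. A real flight controller repeats a calculation like this hundreds of times a second, using its sensors to decide what the roll, pitch and yaw commands should be.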

Dammarm’s invention hit the big time in 2010, when French company Parrot introduced the AR.Drone, a quadcopter that sent back video in real time via Wi-Fi. The AR.Drone was a bestseller, but could only fly for a few minutes at short distances, and the camera had poor photo resolution.

Frank Wang, DJI’s CEO, saw the potential of small drones as platforms for aerial photography, and set about developing the technology – batteries, motors, cameras, sensors and computer brain – to realise that potential. In 2013, the company launched the Phantom, which could take high-quality video for twenty minutes at a stretch, and could be flown from 1 mile (1.6km) away. The Phantom was priced for the mass market and DJI sold them by the million.

The new Mavic Pro is smaller than the Phantom series, weighing just over 700g (1.6lb) and priced at less than £1,000, but is packed with advanced electronics. The manufacturer claims that it is simple enough that a beginner can unpack the machine and start flying it at once. The Mavic Pro flies at 40mph (65kmh), shooting ‘movie-quality’ 4K video for up to twenty-five minutes with a stabilised camera. It can be controlled from 5 miles (8km) away.

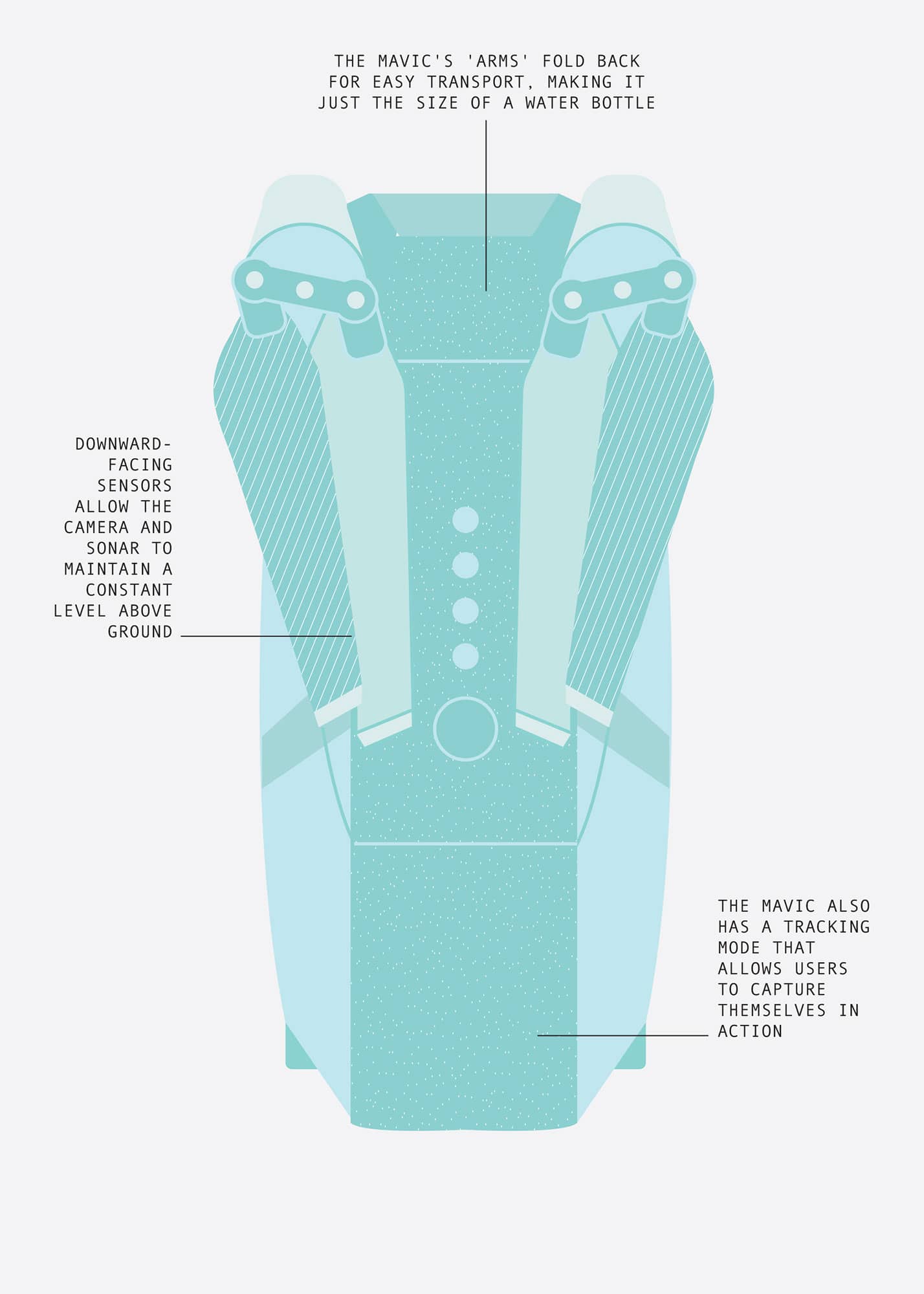

Despite its small size, the Mavic copes with winds of over 20mph (32kmh). Downward-facing sensors – cameras and sonar – allow it to hover as steady as a rock, and to maintain a constant level above ground as it mounts a slope. Portability is a key selling point. The Mavic Pro’s arms fold away, making it the size of a water bottle, so climbers or hikers can pack it safely and easily unfold it for action.

What makes the Mavic Pro a robot is the high level of built-in automatic control. It can fly itself, and avoids obstacles with the aid of sonar and cameras. The user indicates a destination on a touchscreen map, and the drone navigates the route itself via GPS. The Mavic Pro can always return to its takeoff point at the touch of a button. A clever optical navigation system takes pictures of the ground below at takeoff and uses these to recognise the launch point, enabling it to return and land automatically a few centimetres from where it started.

The Mavic also has a tracking mode: the camera locks on to a person or vehicle and follows them. This means that skiers, skateboarders and others can capture video of themselves in action. Gesture mode is another way of directing the drone without a radio-controlled unit – the drone follows hand signals given by the operator, taking pictures when requested.

The Mavic Pro’s larger cousins have revolutionised professional film-making. You no longer need a helicopter to get aerial shots of a car chase, to swoop into a canyon or over a skyscraper, or to fly with eagles. Machines like Airobotics’ Optimus drone-in-a-box (see here) are moving into industry and agriculture. But, on a domestic level, the robot that most people are likely to own and enjoy will be a Mavic Pro.

SOFT ROBOTIC EXOSUIT

| Specification | Detail |
| --- | --- |
| Height | 1m (3.3ft) approx. |
| Weight | 3.5kg (7.7lb) |
| Year | 2014 |
| Construction material | Composite |
| Main processor | Commercial processors |
| Power source | Battery |

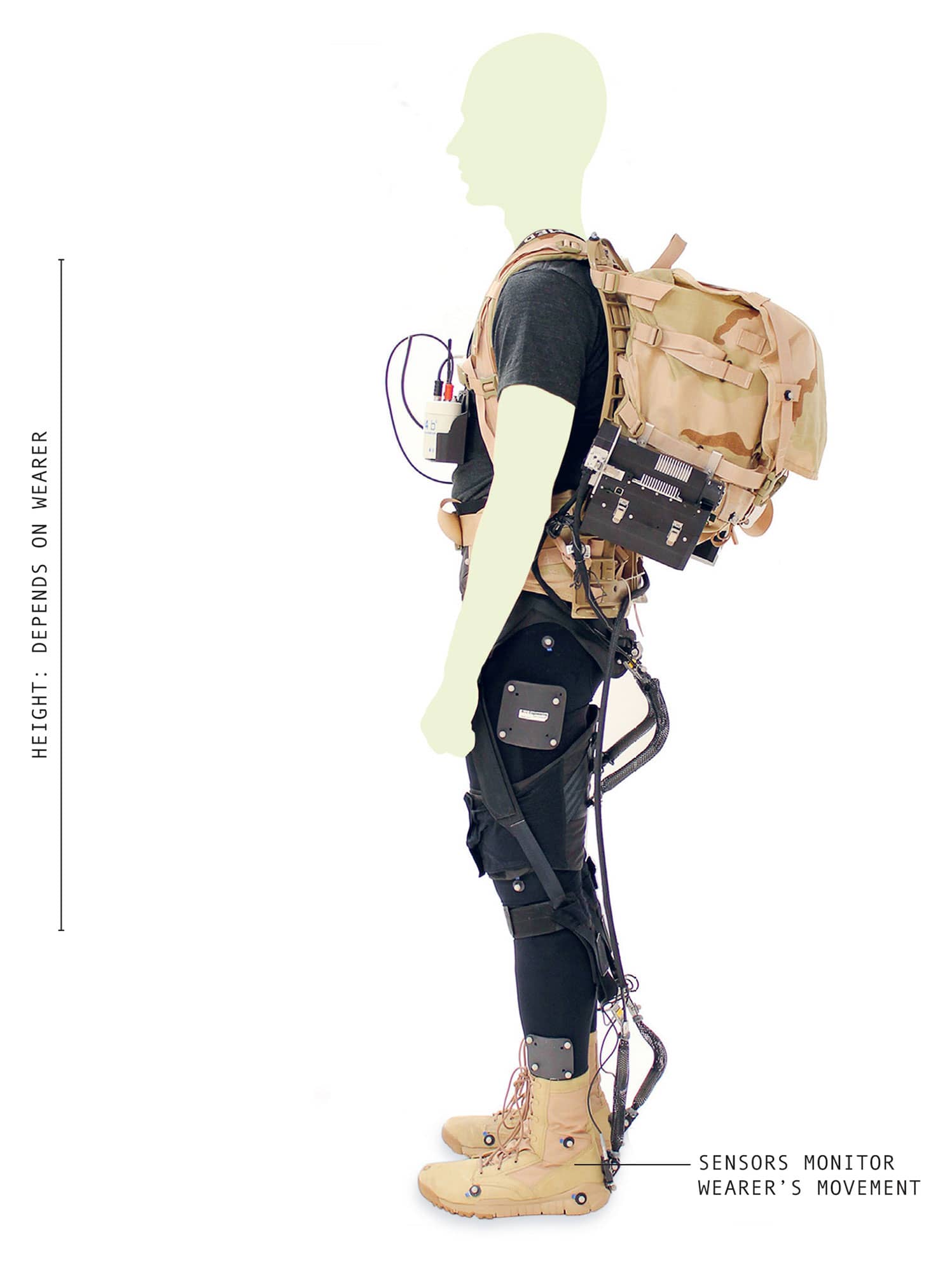

An exoskeleton is a wearable robot that matches its wearer’s movements and gives them additional strength. While the initial motivation for this type of work came from the military’s desire for armoured suits resembling Iron Man, with superhuman strength (see XOS 2, here), researchers are now looking at a different type of exoskeleton for use on a domestic level.

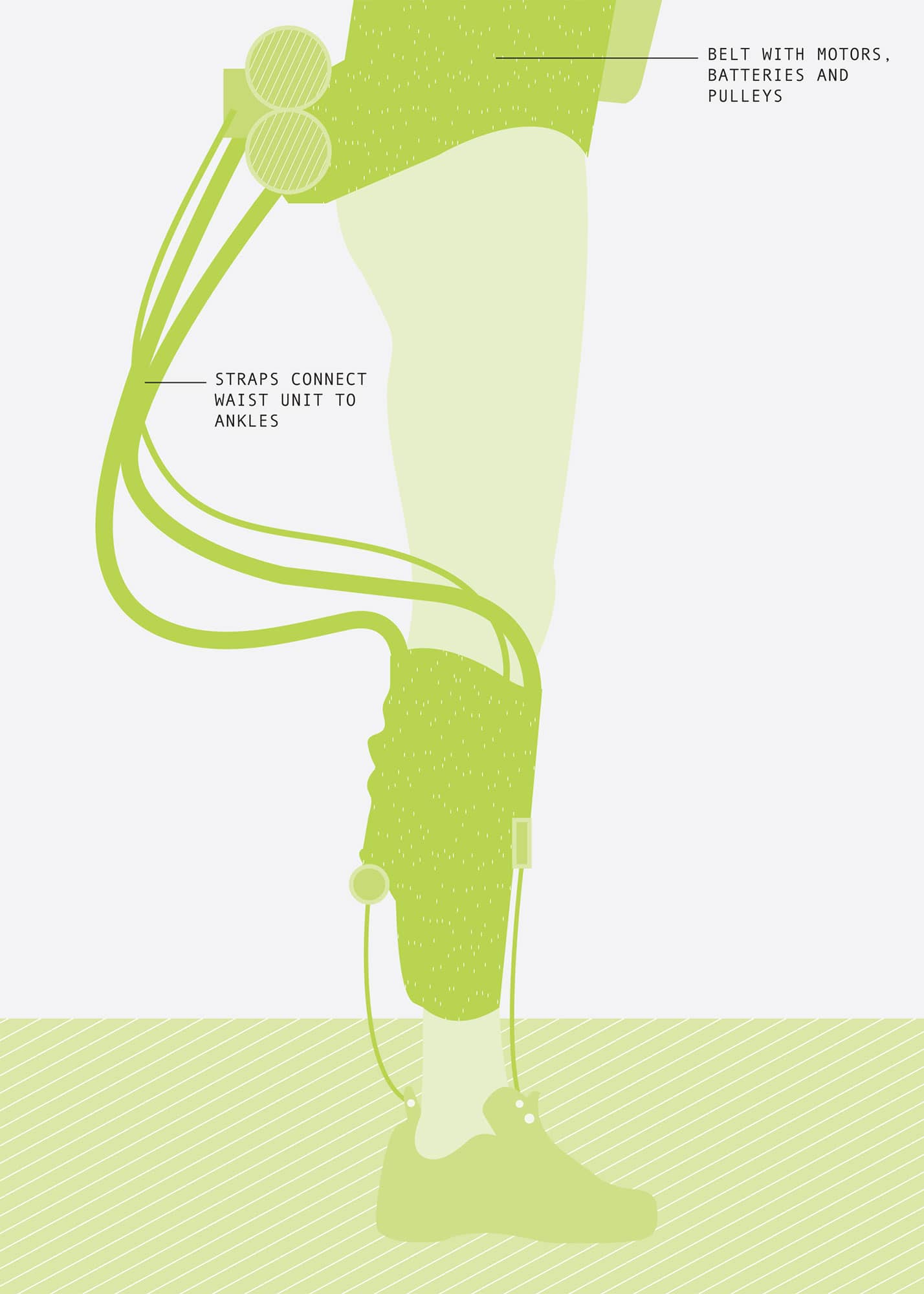

Soft exoskeletons do not form a rigid shell, but act as an additional set of muscles. Conor Walsh leads a team at Harvard University’s Wyss Institute for Biologically Inspired Engineering, developing a range of devices based on this concept. They provide mobility assistance to patients weakened by strokes or other conditions, and can make it easier for people to carry heavy loads or walk long distances. Like Leonardo da Vinci, the researchers have had to look closely at how human muscles and tendons work.

Walsh’s ‘lower-extremity robotic exosuit’ fits over the wearer’s legs, with a waist belt carrying motors, pulleys and a battery pack. Two vertical straps on each leg connect the waist unit to the ankles. Sensors monitor the wearer’s movements and a computer system determines the right moment to activate each of the motors. The exosuit provides assistance precisely when help is needed to take a step, lift a foot, push off the ground or place a foot down, adjusting itself seamlessly to match the wearer’s gait. The result of such sophisticated control is that the exosuit does not seem to be doing anything; the wearer only notices that it is easier for them to walk.

Instead of relying on electric motors, the exosuit uses a type of artificial muscle known as a McKibben actuator. These are pneumatic, powered by compressed gas, and behave like real muscle. Unlike electric motors, muscles are not consistent in the amount of force they exert, which depends on how far they are extended. To work in concert with human muscles, the artificial version needs to have a similar pattern of strength, otherwise it would push too hard at the beginning and end of each movement with too little in the middle, interfering with, rather than assisting, movement. When deactivated, the soft exoskeleton is just a heavy additional garment; the overall weight is significant at 3.5kg (7.7lb).

Stroke patients have been among the first to use exosuits. Eighty per cent of stroke survivors lose some function in one limb, and an exosuit can help them to regain their walking ability. Typically, stroke patients change the way they walk, lifting their hips or swinging a foot around in a circular motion to prevent it dragging on the ground. Such measures solve the immediate problem, but limit mobility. An exosuit helps them walk normally and easily, preventing them from developing a poor gait during rehabilitation.

Walsh’s team has also developed a soft robotic glove for patients who have lost strength in their fingers. Like the lower-limb exosuit, this works with the wearer’s body and effectively amplifies their remaining strength. In the future, electromyography sensors could detect nerve signals when a wearer attempts to carry out a movement such as grasping, and the glove will function even if the patient is not strong enough to move their fingers. This could be extremely useful for patients not able to carry out basic tasks, such as picking up a cup or using a fork, without assistance.

Another project is a lower-limb exosuit for hiking for extended periods carrying heavy loads. The military are obvious customers. Tests have shown that the soft exosuit decreases the ‘metabolic cost’ of jogging and other activity. So, for example, it takes less effort to walk 1 mile (1.6km) even with the added weight of the exosuit.

Soft robotic exoskeletons are being trialled for commercial use. In the United States, retailer Lowe’s has experimented with shelf-stackers wearing soft exoskeletons to reduce the risk of back injury.

Soft exoskeletons are still in the development stage and, going forward, it will be a matter of improving them in order to increase the overall benefit. But as the technology develops they may become increasingly common, allowing people to enjoy travel and outdoor activities long into their later years.

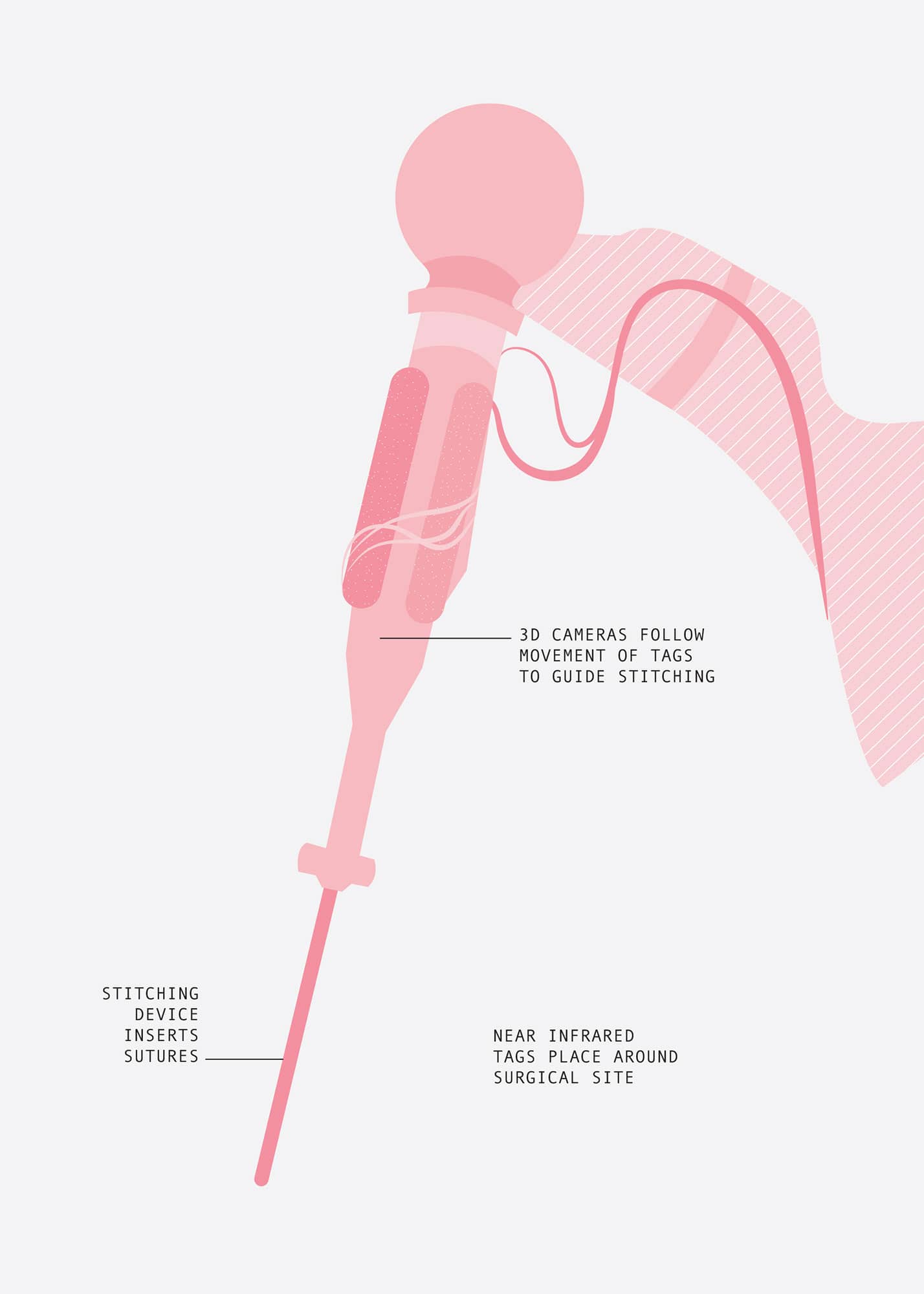

SMART TISSUE AUTONOMOUS ROBOT

| Specification | Detail |
| --- | --- |
| Height | 1.5m (4.9ft) approx. |
| Weight | 30kg (66lb) |
| Year | 2016 |
| Construction material | Steel |
| Main processor | Commercial processors |
| Power source | External mains electricity |

Robots such as the da Vinci Surgical System and the Flex Robotic System (see here and here) have to be controlled by a surgeon because of the requirements of soft-tissue surgery. Automating the process calls for a new type of robot altogether.

If the subject stays in a fixed position, an industrial robot can do the job of the surgeon – it is just a matter of drilling in a certain place, attaching a screw, and so on. For knee operations and laser eye surgery, this type of automation is routine, though it is rarely described as ‘robotic’ surgery, possibly for the patient’s peace of mind.

Soft-tissue surgery is more difficult. The squishy stuff that makes up most of our bodies is apt to move around during a surgical process, so a robot cannot simply be programmed to carry out the sort of fixed moves used in bone surgery. Peter Kim and colleagues at the Sheikh Zayed Institute for Paediatric Surgical Innovation in Washington DC designed the Smart Tissue Anastomosis Robot (STAR) to tackle this challenge. STAR’s special skill is tracking the movement of tissue in three dimensions.

Kim borrowed technology from the movie business. ‘Motion capture’ is a technique for filming an actor’s movements in 3D so they can be overlaid onscreen with computer-generated imagery – for example, Andy Serkis’ performance as the grotesque Gollum in the Hobbit series. During motion capture, a series of fluorescent dots are positioned on the actor’s bodysuit at key locations – in particular the joints – to show the relative movement of hands and elbows, knees and feet. Multiple cameras track the movements of these dots from different angles. A computer then creates a wireframe animation of the action based on the dots, which forms the scaffolding for the CGI. STAR uses a similar technique. Before surgery, a series of Near Infrared Fluorescent (NIRF) tags are placed around the operation site. A set of 3D cameras follows the movement of the tags, tracking the precise position of the tissue.
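The principle of following tissue by watching markers can be illustrated with a deliberately simplified Python sketch. It assumes the tissue shifts as a single rigid block, so that the average movement of the tags tells the robot how far to move its planned stitch point. Real soft tissue stretches and deforms, and STAR’s actual tracking software is not described here; the function names and the sample coordinates are invented for the example.

```python
def mean_shift(before, after):
    """Average 3D displacement of the markers between two camera frames."""
    count = len(before)
    return tuple(
        sum(a[axis] - b[axis] for b, a in zip(before, after)) / count
        for axis in range(3)
    )


def follow_tissue(target, before, after):
    """Move a planned stitch point by the markers' average displacement."""
    shift = mean_shift(before, after)
    return tuple(t + s for t, s in zip(target, shift))


# Hypothetical tag positions in millimetres, before and after the tissue moves.
tags_before = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
tags_after = [(1.0, 2.0, 0.0), (11.0, 2.0, 0.0), (1.0, 12.0, 0.0)]

print(follow_tissue((5.0, 5.0, 0.0), tags_before, tags_after))
```

Here every tag has moved 1mm along one axis and 2mm along another, so the planned stitch point is moved by the same amount. A practical system would have to go further, working out rotation and stretching from the way the tags move relative to one another.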

STAR was initially set up to stitch or suture a wound. A robotic arm with seven degrees of freedom, made by German company Kuka AG, is fitted with a special stitching device. This has a curved needle that arcs round, putting in the stitches. A sensor detects the level of force being applied by the needle to ensure it pushes hard enough to go through the skin, but not so hard as to cause tears.

STAR has been tested on dead tissue and on animals, and it has performed well – better than human surgeons, according to its developers. They also claim that it can stitch faster, with more consistent results. The stitching leaked less, and fewer stitches needed to be redone, than with a typical human surgeon. At the moment STAR only works under close supervision, but in principle it should be possible for a doctor to mark out a line that needs stitching, tap a button and have STAR do the work.

STAR is not a prototype, but a proof-of-principle of what robots are now capable of. One surgeon described this type of suturing as a ‘grand challenge’, the type of task that needs to be mastered before robots can progress. Suturing is a small but important start. If it can be fully mastered and shown to work safely, then the universe of soft-tissue surgery could open up for STAR. In principle, machines such as the da Vinci Surgical System and the Flex Robotic System could be upgraded to become fully autonomous robot surgeons.

Initially, this technology may be adopted in situations where no human surgeon is available, for example in space missions. Such missions usually include a doctor, but sometimes the doctor is also a patient. In 1961, Leonid Rogozov, the doctor at a Russian Antarctic base, removed his own appendix with the aid of a mirror, anaesthetic and several unqualified assistants.

Looking further forward, surgical robots are likely to become increasingly capable. Ultimately, they may even be able to carry out operations of a delicacy and complexity that currently prove impossible for human surgeons, such as some forms of neurosurgery.

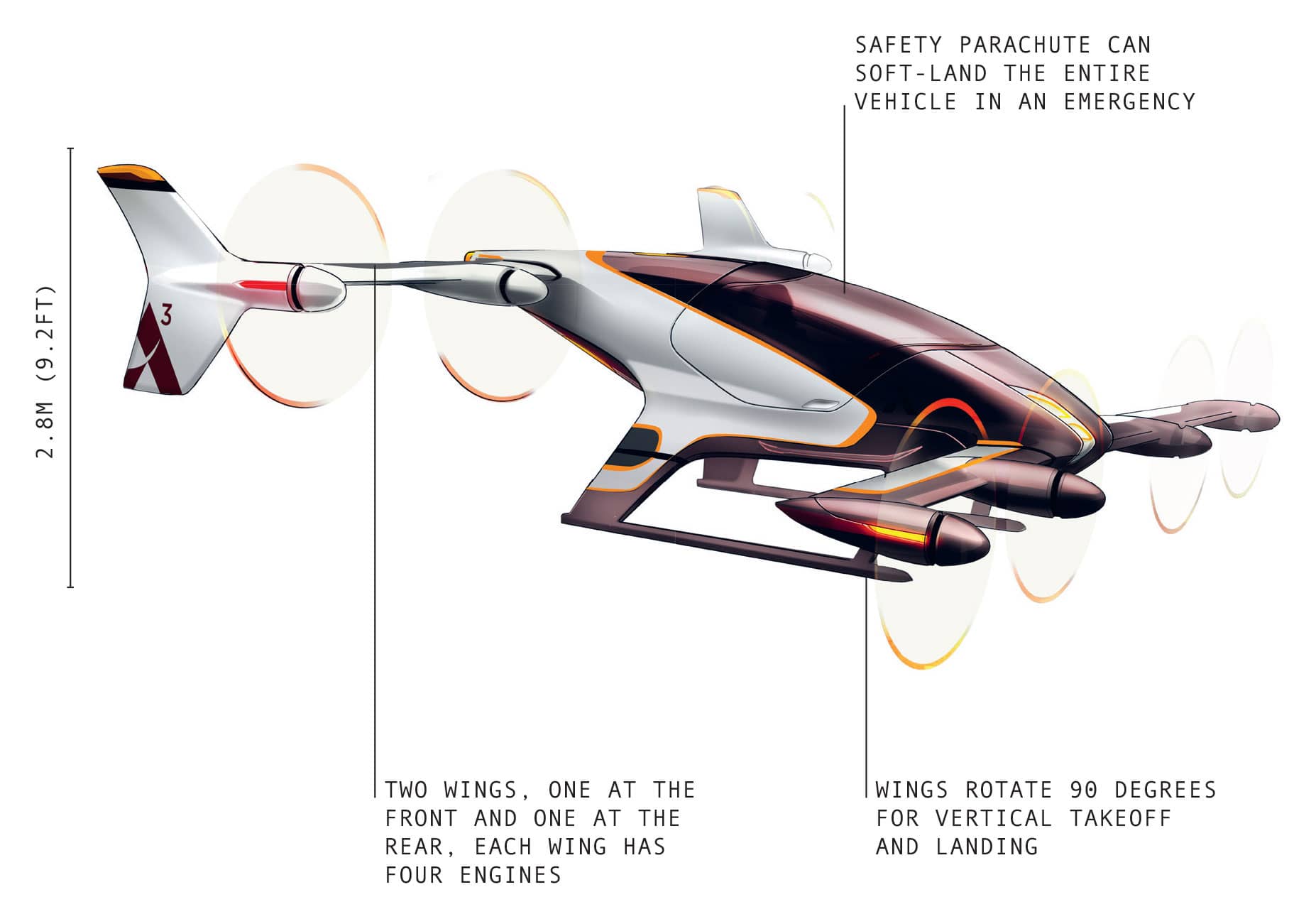

VAHANA

| Specification | Detail |
| --- | --- |
| Height | 2.8m (9.2ft) approx. |
| Weight | 726kg (1,600lb) |
| Year | 2018 |
| Construction material | Composite |
| Main processor | Commercial processors |
| Power source | Battery |

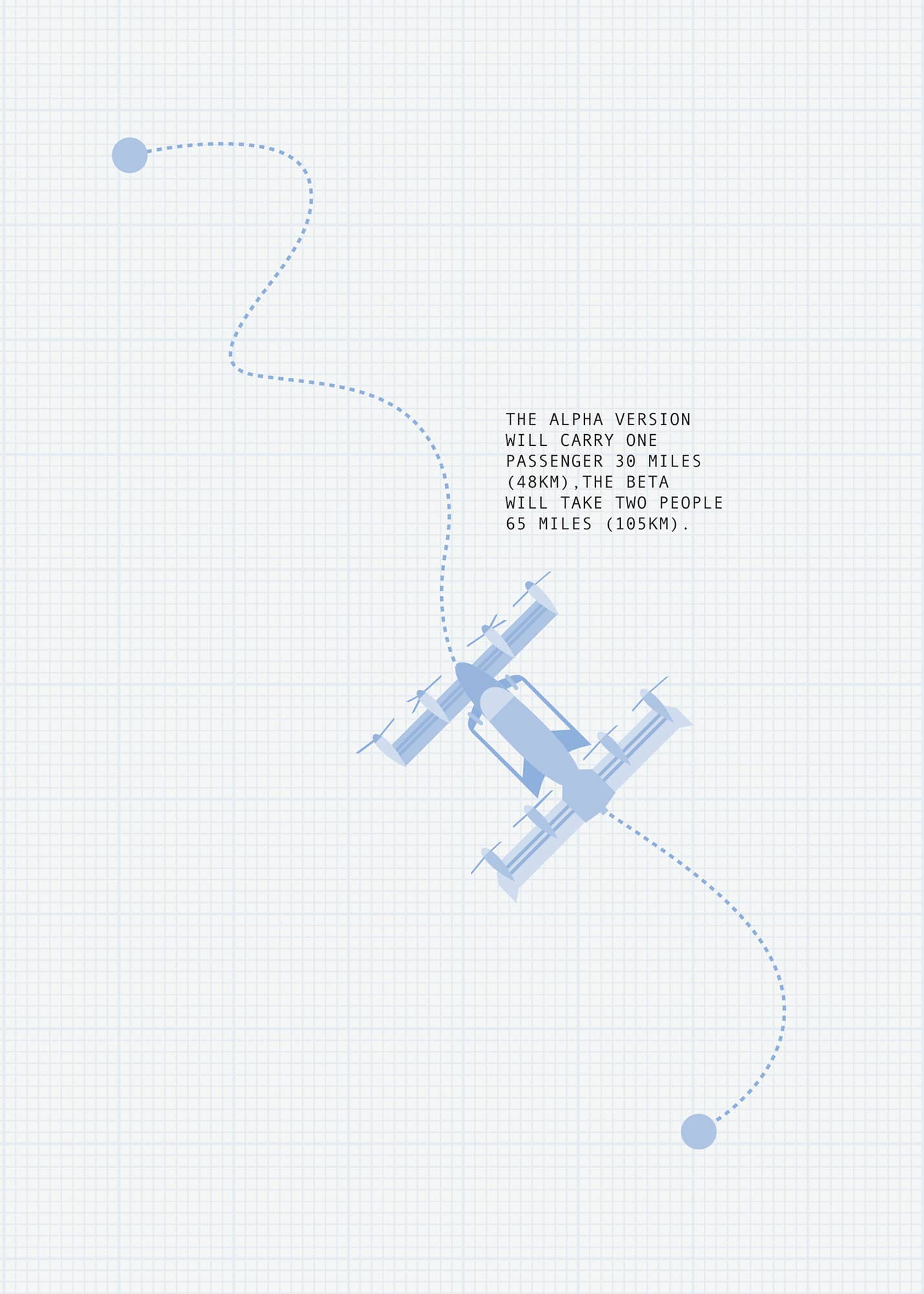

The Vahana is one of many planned ‘flying cars’ that have more in common with consumer drones than automobiles. Like a small drone, Vahana has several engines driven by electricity, and is self-piloted. It is not so much a flying car as an aerial robotic taxi. The name Vahana, incidentally, comes from a Sanskrit word meaning ‘that which carries’.

Flying cars have been technically feasible, if expensive, for some time. Two basic problems have held them back. One is that few people have the necessary pilot’s licence; the other is that air-traffic control could not cope with thousands of aircraft flying over cities. Taking humans out of the equation solves both problems: you do not need to be a pilot to use Vahana, and the machine will infallibly obey rules, stick to its flightpath and keep out of the way of other vehicles – just like the fleets of delivery drones that plan to share the same airspace.

While Vahana might appear to be a flight of fantasy, its developer, A³, is part of Airbus, a company with decades of experience in the aviation market. Electric power makes Vahana cheap and reliable, but limits its range because batteries only provide about one-tenth of the energy that a typical gasoline-based system can. Vahana will be used for short hops rather than long-haul international flights, and that suits Airbus perfectly. The machine could offer a quick way for people to get from cities to airports and vice versa.

The initial Alpha version, currently undergoing flight testing, will carry one passenger. Range, speed and passenger capacity will increase with the Beta version: Alpha will fly up to 30 miles (48km) at 125mph (200kmh), Beta 65 miles (104km) at 145mph (233kmh). Safety is a high priority. In addition to other measures, Vahana will carry an emergency parachute so the entire aircraft can descend for a soft landing if problems arise.
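The range and speed figures quoted for the two versions give a feel for how short these hops would be. The Python sketch below simply divides distance by speed; it assumes the aircraft cruises at its quoted speed for the whole of its quoted range, ignoring take-off, landing and any battery reserve, so real journey times would be somewhat longer. The function name is invented for the example.

```python
def flight_minutes(range_miles, speed_mph):
    """Time to cover the full range at a constant speed, in minutes."""
    return range_miles / speed_mph * 60


# Figures quoted for the Alpha and Beta versions.
for name, range_miles, speed_mph in [("Alpha", 30, 125), ("Beta", 65, 145)]:
    minutes = flight_minutes(range_miles, speed_mph)
    print(f"{name}: about {minutes:.1f} minutes at full range")
```

On these figures, Alpha would exhaust its range in under a quarter of an hour and Beta in under half an hour, which fits the picture of Vahana as a vehicle for city-to-airport trips rather than long journeys.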

Unlike the traditional idea of a flying car, Vahana will not take off from a driveway. Instead, the passenger will likely get a taxi – presumably a driverless one, such as Waymo (see here) – to the nearest helipad, where scheduling software will have arranged a Vahana pickup.

Vahana has a tilting wing design. It takes off vertically like a helicopter, then the two wings, each with four engines, rotate through ninety degrees, after which Vahana flies horizontally, like an aeroplane. Vahana has a sophisticated sense-and-avoid system using radar, cameras and other sensors to avoid other air users. As with delivery drones, its introduction will need a change in existing regulations governing unmanned aircraft flying without human control.

Whether the vehicle is commercially viable will depend on price, which should be far lower than existing air services thanks to the cheap electric propulsion and the absence of a pilot. Vahana suggests it will work out to around £1–£2 per mile (1.6km), similar to a road taxi, but will be two to four times faster – and without the risk of delays caused by traffic or roadworks.

If this pricing is accurate, early adopters may not just be VIPs looking for a quick trip to the airport. Being able to fly in urban areas, Vahanas are likely to be popular as a cheap commuting alternative. More practically, Vahana would be an ideal air ambulance, with its low cost, quiet operation and ability to land on a smaller site than a traditional helicopter. Police may find it similarly useful.

There is no doubt that a flying taxi could be a great convenience, but only for as long as supply outstrips demand. In the coming decades, what if travellers find themselves standing in a long queue at the heliport because of a major conference, and face a one-hour wait for a five-minute journey?