This example webbot relies on a library called LIB_download_images, which is available from this book’s website. This library contains the following functions:

- download_binary_file(), which safely downloads image files
- mkpath(), which makes directory structures on your hard drive
- download_images_for_page(), which downloads all the images on a page

For clarity, I will break down this library into highlights and accompanying explanations.

The first script (Example 9-2) shows the main webbot used in Example 9-1 and Figure 9-1.

Example 9-2. Executing the image-capturing webbot

include("LIB_download_images.php");

$target="http://www.nasa.gov/mission_pages/viking/index.html";

download_images_for_page($target);

This short webbot script loads the LIB_download_images library, defines a target web page, and calls the download_images_for_page() function, which downloads the page's images and stores them locally in a directory structure that mirrors the one on the server.

Note

Please be aware that the scripts in this chapter, which are available at http://www.WebbotsSpidersScreenScrapers.com, are created for demonstration purposes only. Although they should work in most cases, they aren’t production ready. You may find long or complicated directory structures, odd filenames, or unusual file formats that will cause these scripts to crash.

Our image-grabbing webbot uses the function download_binary_file(), which is designed to download binary files, like images. Other binary files you may encounter include executable files, compressed files, and system files. Up to this point, the only file downloads discussed have been ASCII (text) files, like web pages. The distinction between binary and ASCII downloads is important because binary files may contain any byte value, and a routine written for text may treat some of those bytes as special characters. For example, random byte combinations in binary files may be misinterpreted as end-of-file markers in ASCII file downloads. If you download a binary file with a script designed for ASCII files, you stand a good chance of downloading a partial or corrupt file.
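To make the risk concrete, consider the eight-byte signature that begins every PNG image: 0x89, "PNG", a carriage return, a line feed, the byte 0x1A (Ctrl-Z, which DOS treated as an end-of-file marker), and another line feed. The minimal PHP sketch below, which uses an in-memory stream and a hypothetical helper named memory_stream(), reads those bytes twice: once with the line-oriented fgets(), which stops at the first newline, and once with stream_get_contents(), which returns every byte unchanged.

```php
<?php
// The 8-byte PNG signature: 0x89 'P' 'N' 'G' CR LF 0x1A LF
$png_signature = "\x89PNG\r\n\x1a\n";

// Hypothetical helper: wrap bytes in an in-memory stream so no real file is needed
function memory_stream(string $bytes)
{
    $fp = fopen("php://memory", "w+b");
    fwrite($fp, $bytes);
    rewind($fp);
    return $fp;
}

// Line-oriented (text-style) read: fgets() stops after the first "\n"
$fp = memory_stream($png_signature);
$as_text = fgets($fp);
fclose($fp);

// Binary-safe read: return every remaining byte, whatever its value
$fp = memory_stream($png_signature);
$as_binary = stream_get_contents($fp);
fclose($fp);

echo strlen($as_text), " bytes vs ", strlen($as_binary), " bytes\n"; // 6 bytes vs 8 bytes
```

The text-style read returns only the first six bytes, which is exactly the kind of partial file described above.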

Even though PHP has its own built-in, binary-safe download functions, this webbot uses a custom download script that relies on PHP/CURL to download images from SSL sites (when the protocol is HTTPS), follow HTTP redirects, and send referer information to the server.
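For comparison, here is a minimal sketch of the built-in route, assuming the standard http stream wrapper (and, for HTTPS, the openssl extension). The function name download_binary_file_builtin() is hypothetical; it sends a Referer header and follows up to four redirects through stream-context options passed to the binary-safe file_get_contents().

```php
<?php
// Hypothetical alternative to download_binary_file(): use PHP's built-in,
// binary-safe file_get_contents() with an HTTP stream context instead of PHP/CURL.
function download_binary_file_builtin(string $file, string $referer)
{
    $context = stream_context_create([
        "http" => [
            "header"          => "Referer: " . $referer . "\r\n", // Referer value
            "follow_location" => 1,                                 // Follow redirects
            "max_redirects"   => 4,                                 // Limit redirections
        ],
    ]);
    // Returns the raw bytes as a string, or FALSE on failure
    return file_get_contents($file, false, $context);
}
```

It is called the same way as the library function, for example download_binary_file_builtin($image_url, $target). One difference from Example 9-3: since PHP 5.6 the stream wrapper verifies SSL certificates by default, whereas Example 9-3 explicitly turns certificate verification off.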

Sending proper referer information is crucial because many websites block other websites from "borrowing" images. Borrowing images (displaying them on your own pages while they remain hosted on someone else's server) is bad etiquette and is commonly called hijacking, or hotlinking. If your webbot doesn't include a proper referer value, its activity could be confused with that of a website that is hijacking images. Example 9-3 shows the file download script used by this webbot.
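The check a server performs can be as simple as the hypothetical sketch below, which serves an image only when the Referer header names an approved host. Real sites vary (some also accept requests that carry no referer at all), so rejecting an empty referer here is an assumption made for illustration. It shows why a webbot that sends no referer, or the wrong one, may be refused.

```php
<?php
// Hypothetical server-side anti-hijacking check: allow the image request only
// when the Referer header points to one of the site's own hosts.
function referer_is_allowed(string $referer, array $allowed_hosts): bool
{
    $host = parse_url($referer, PHP_URL_HOST);
    if (!is_string($host)) {
        return false;              // Missing or malformed referer (assumed policy)
    }
    return in_array(strtolower($host), $allowed_hosts, true);
}

$allowed = ["www.nasa.gov"];

var_dump(referer_is_allowed("http://www.nasa.gov/mission_pages/viking/index.html", $allowed)); // bool(true)
var_dump(referer_is_allowed("http://hijacker.example/page.html", $allowed));                  // bool(false)
var_dump(referer_is_allowed("", $allowed));                                                   // bool(false)
```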

Example 9-3. A binary-safe file download routine, optimized for webbot use

function download_binary_file($file, $referer)

{

# Open a PHP/CURL session

$s = curl_init();

# Configure the PHP/CURL command

curl_setopt($s, CURLOPT_URL, $file); // Define target site

curl_setopt($s, CURLOPT_RETURNTRANSFER, TRUE); // Return file contents in a string

curl_setopt($s, CURLOPT_BINARYTRANSFER, TRUE); // Indicate binary transfer (has no effect since PHP 5.1.3)

curl_setopt($s, CURLOPT_REFERER, $referer); // Referer value

curl_setopt($s, CURLOPT_SSL_VERIFYPEER, FALSE); // Don't verify the server's SSL certificate (insecure)

curl_setopt($s, CURLOPT_FOLLOWLOCATION, TRUE); // Follow redirects

curl_setopt($s, CURLOPT_MAXREDIRS, 4); // Limit redirections to four

# Execute the PHP/CURL command (send contents of target web page to string)

$downloaded_page = curl_exec($s);

# Close PHP/CURL session and return the file

curl_close($s);

return $downloaded_page;

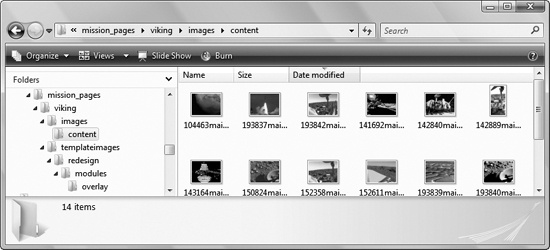

}

The script that creates directories (shown in Figure 9-1) is derived from a user-contributed routine found on the PHP website (http://www.php.net). Users commonly submit scripts like this one when they want to share something with the PHP community. In this case, it's a function that extends mkdir() so that it can create a complete directory structure, with multiple nested directories, in a single call. I modified the function slightly for our purposes. This function, shown in Example 9-4, creates any part of a file path that doesn't already exist on the hard drive, creating multiple directories for a single file path if needed. For example, if the image's file path is images/templates/November, this function will create all three directories (images, templates, and November) to satisfy the entire file path.

Example 9-4. Re-creating file paths for downloaded images

function mkpath($path)

{

# Make sure that the slashes are all single and lean the correct way

$path=preg_replace('/(\/){2,}|(\\\){1,}/','/',$path);

# Make an array of all the directories in path

$dirs=explode("/",$path);

# Verify that each directory in path exists and create if necessary

$path="";

foreach ($dirs as $element)

{

$path.=$element."/";

if(!is_dir($path)) // Directory verified here

mkdir($path); // Created if it doesn't exist

}

}

The script in Example 9-4 splits the path into an array of directory names and then rebuilds the path on the local filesystem one directory at a time. Only directories that don't already exist are created.
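On PHP 5.0 and later, mkdir() itself accepts a third, $recursive argument that creates every missing parent directory, so the same effect can be had without a loop. The sketch below is an alternative to mkpath(), not the library's code; the name mkpath_builtin() and the 0755 permissions are assumptions.

```php
<?php
// Hypothetical alternative to mkpath(): let mkdir() create every missing directory
function mkpath_builtin(string $path): bool
{
    // Collapse doubled slashes and turn backslashes into slashes, as mkpath() does
    $path = preg_replace('#/{2,}|\\\\+#', '/', $path);
    clearstatcache();                  // Get an accurate directory status
    if (is_dir($path)) {
        return true;                   // Path already exists: nothing to do
    }
    return mkdir($path, 0755, true);   // true = create parent directories as needed
}
```

With it, mkpath_builtin("images/templates/November") creates all three directories in one call, and calling it again on an existing path simply returns true.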

The main function for this webbot, download_images_for_page(), is broken down into highlights and explained below. As mentioned earlier, this function—and the entire LIB_download_images library—is available at this book’s website.

Since $target is used later to resolve the web addresses of the images, its value must be checked after the web page is downloaded, because the server may have redirected the webbot to an updated web page. That updated URL is the actual URL of the target page, and it's the one that all relative file addresses are resolved from in the next step. The script in Example 9-5 updates $target to the URL that was actually downloaded, which differs from the original value whenever a redirection occurred.

Example 9-5. Downloading the target web page and responding to page redirection

function download_images_for_page($target)

{

echo "target = $target\n";

# Download the web page

$web_page = http_get($target, $referer="");

# Update the target in case there was a redirection

$target = $web_page['STATUS']['url'];

Much like the <base> HTML tag, the webbot uses $page_base to define the directory address of the target web page. This address becomes the reference for all images with relative addresses. For example, if $target is http://www.schrenk.com/april/index.php, then $page_base becomes http://www.schrenk.com/april.

This task, shown in Example 9-6, is performed by the function get_base_page_address(), which is defined in LIB_resolve_address and included by LIB_download_images.

Example 9-6. Creating the page base for the target web page

# Strip filename off target for use as page base
$page_base=get_base_page_address($target);
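The code of get_base_page_address() isn't shown here. As a rough illustration of the idea only, the hypothetical page_base_sketch() below keeps the protocol, host, optional port, and directory part of an absolute URL and drops the filename, along with any query string or fragment.

```php
<?php
// Hypothetical stand-in for get_base_page_address(): strip the filename
// (and any query string or fragment) from an absolute URL, keeping its directory.
function page_base_sketch(string $url): string
{
    $parts  = parse_url($url);
    $origin = $parts["scheme"] . "://" . $parts["host"];
    if (isset($parts["port"])) {
        $origin .= ":" . $parts["port"];
    }
    $path = $parts["path"] ?? "";
    // Keep everything before the last slash of the path
    $last_slash = strrpos($path, "/");
    $directory  = ($last_slash === false) ? "" : substr($path, 0, $last_slash);
    return $origin . $directory;
}

echo page_base_sketch("http://www.schrenk.com/april/index.php"), "\n"; // http://www.schrenk.com/april
```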

As an example, if the webbot finds an image with the relative address 14/logo.gif, and the page base is http://www.schrenk.com/april, the webbot will use the page base to derive the fully resolved address for the image. In this case, the fully resolved address is http://www.schrenk.com/april/14/logo.gif. In contrast, if the image's file path is /march/14/logo.gif, the leading slash means the path starts at the root of the domain rather than at the page base, so the address resolves to http://www.schrenk.com/march/14/logo.gif.
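The three cases in this paragraph (an absolute URL, a path relative to the page base, and a path that starts at the domain root) can be sketched as follows. resolve_address_sketch() is a hypothetical helper, not the function from LIB_resolve_address, and it deliberately ignores ../ and ./ segments and protocol-relative (//host/...) links, which a real resolver must handle.

```php
<?php
// Hypothetical resolver for three cases only: absolute URLs, root-relative
// paths, and paths relative to the page base. Not a full RFC 3986 resolver.
function resolve_address_sketch(string $link, string $page_base): string
{
    // Already fully resolved: it has a protocol identifier such as http://
    if (preg_match('#^[a-z][a-z0-9+.-]*://#i', $link)) {
        return $link;
    }
    // Root-relative: attach to the protocol and domain of the page base
    if (substr($link, 0, 1) === "/") {
        $parts  = parse_url($page_base);
        $origin = $parts["scheme"] . "://" . $parts["host"]
                . (isset($parts["port"]) ? ":" . $parts["port"] : "");
        return $origin . $link;
    }
    // Relative: attach to the page base directory
    return rtrim($page_base, "/") . "/" . $link;
}

$page_base = "http://www.schrenk.com/april";
echo resolve_address_sketch("14/logo.gif", $page_base), "\n";        // http://www.schrenk.com/april/14/logo.gif
echo resolve_address_sketch("/march/14/logo.gif", $page_base), "\n"; // http://www.schrenk.com/march/14/logo.gif
```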

Since this webbot may download images from a number of domains, it creates a directory structure for each (see Example 9-7). The root directory of each imported file structure is based on the page base.
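Example 9-7 isn't reproduced here, so its exact naming scheme isn't shown. As a hypothetical illustration only, the sketch below derives a per-domain local root directory from a page base by dropping the protocol identifier and replacing characters that are awkward in filenames; the top-level folder name saved_images is an assumption, and the sketch does not guard against ../ segments in the URL.

```php
<?php
// Hypothetical: map a page base to a local root directory, one per domain,
// e.g. "http://www.schrenk.com/april" -> "saved_images/www.schrenk.com/april".
function local_root_sketch(string $page_base, string $top = "saved_images"): string
{
    // Drop the protocol identifier ("http://", "https://", ...)
    $no_protocol = preg_replace('#^[a-z][a-z0-9+.-]*://#i', '', $page_base);
    // Replace characters that are awkward in file names (":" before a port, "?", etc.)
    $safe = preg_replace('#[^A-Za-z0-9._/-]#', '_', $no_protocol);
    return $top . "/" . trim($safe, "/");
}

echo local_root_sketch("http://www.schrenk.com/april"), "\n"; // saved_images/www.schrenk.com/april
```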

The webbot uses techniques described in Chapter 4 to parse the image tags from the target web page and put them into an array for easy processing. This is shown in Example 9-8. The webbot stops if the target web page contains no images.
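The Chapter 4 parsing routines aren't reproduced here. As a swapped-in stand-in, the sketch below uses PHP's preg_match_all() to collect the image tags into an array and then pulls the src value out of each one; regular-expression parsing of HTML is approximate, and the function names are hypothetical.

```php
<?php
// Stand-in for the Chapter 4 parsing routines: collect every <img ...> tag
function parse_image_tags_sketch(string $web_page): array
{
    preg_match_all('/<img\b[^>]*>/i', $web_page, $matches);
    return $matches[0];            // Full text of each image tag
}

// Pull the src value out of one image tag (quoted or unquoted)
function get_image_src_sketch(string $tag): ?string
{
    if (preg_match('/(?<![\w-])src\s*=\s*(["\'])(.*?)\1/i', $tag, $m)) {
        return $m[2];
    }
    if (preg_match('/(?<![\w-])src\s*=\s*([^\s>]+)/i', $tag, $m)) {
        return $m[1];
    }
    return null;                   // Tag has no src attribute
}

$web_page = '<p><img src="14/logo.gif" alt="logo"><IMG SRC=/march/14/logo.gif></p>';
$img_tags = parse_image_tags_sketch($web_page);

if (count($img_tags) === 0) {
    echo "No images found\n";      // Like the webbot, do nothing more without images
} else {
    foreach ($img_tags as $tag) {
        echo get_image_src_sketch($tag), "\n"; // 14/logo.gif, then /march/14/logo.gif
    }
}
```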

The webbot then loops through the array, processing each image tag individually. For each image tag, the webbot parses the image file's source address and creates a fully resolved URL (see Example 9-9). Creating a fully resolved URL is important because the webbot cannot download an image without its complete URL: the HTTP protocol identifier, the domain, the image's file path, and the image's filename.

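Next, the webbot verifies that the image's file path exists in the local file structure. If the directory doesn't exist, the webbot creates the directory path, as shown in Example 9-10. Note that Example 9-10 does this only for images hosted on the same domain as the page base.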

Example 9-10. Creating the local directory structure for each image file

if(get_base_domain_address($page_base) == get_base_domain_address($image_url))

{

# Make image storage directory for image, if one doesn't exist

$directory = substr($image_path, 0, strrpos($image_path, "/"));

clearstatcache(); // Clear cache to get accurate directory status

if(!is_dir($save_image_directory."/".$directory)) // See if dir exists

mkpath($save_image_directory."/".$directory); // Create if it doesn't

Once the path is verified or created, the image is downloaded (using its fully resolved URL) and stored in the local file structure (see Example 9-11).

Example 9-11. Downloading and storing images

# Download the image and report image size
$this_image_file = download_binary_file($image_url, $referer=$target);
echo " size: ".strlen($this_image_file);
# Save the image ("wb" = write in binary mode, for portability)
$fp = fopen($save_image_directory."/".$image_path, "wb");
fputs($fp, $this_image_file);
fclose($fp);
echo "\n";

The webbot repeats this process for each image parsed from the target web page.
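Example 9-11 writes whatever download_binary_file() returned, even when the download failed (curl_exec() returns FALSE on failure). A minimal sketch of a more defensive save step, using a hypothetical helper name, skips failed or empty downloads, creates any missing directory, and writes the bytes with the binary-safe file_put_contents().

```php
<?php
// Hypothetical helper: store downloaded image bytes; returns bytes written (0 if skipped).
function save_image_sketch(string $save_path, $image_bytes): int
{
    if ($image_bytes === false || $image_bytes === "") {
        return 0;                            // Download failed or empty: write nothing
    }
    $directory = dirname($save_path);
    if (!is_dir($directory)) {
        mkdir($directory, 0755, true);       // Create missing directories
    }
    // file_put_contents() writes the string byte for byte
    $written = file_put_contents($save_path, $image_bytes);
    return ($written === false) ? 0 : $written;
}
```

Inside the loop, a call such as save_image_sketch($save_image_directory."/".$image_path, $this_image_file) would take the place of the fopen()/fputs()/fclose() sequence.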