CHAPTER 9

EVALUATING YOUR EXPERIMENTS

DRINKING BEER IS DIFFERENT than evaluating it. Evaluating a beer is how you find out objectively if it meets your expectations, has any flaws, changed as you intended … or if it’s even a damn good beer. Whether you’re evaluating a recipe change, a comparison of ingredients, or just trying a new beer, there are some basic steps you need to follow.

First you’ll need to recruit some tasters. Sure, you can do it yourself, but in this chapter we’ll show you why you can’t always trust your own impressions. You’ll also need to know the basics of how to taste and be able to explain it to your tasters. You’ll need a bit of equipment, such as glasses, paper, and writing tools. And you’ll need to decide on your method of analysis. Sure, your tasters will need to think about what they’re sensing and how to describe it. But it’s a fun job, and we’ve never had anyone refuse a beer tasting!

HOW TO TASTE A BEER

Your beer is ready. It’s a new recipe or a new variation of a classic. It’s fermented, packaged, aged properly, and chilled to just the right temperature. It’s time to break out the classic beer judge evaluation technique. What follows is based on what we both learned from the Beer Judge Certification Program (BJCP), a process used weekly by many of the 7,500 officially certified beer judges in their blind evaluation of beer.

Before you crack the first bottle, prepare to take notes. A mechanical pencil, pen, or even a computer works well. But trust us, you don’t want cedar aroma on your fingers from a wooden pencil. If you want to go a step further, head to the BJCP website (www.bjcp.org) and download the official BJCP score sheet for your notes. Also, it helps if you’re in a relatively quiet and odor-free place, so your senses won’t wander. And don’t go smoking a big ol’ cigar when you’re trying to evaluate.

Step 1: Pour about 4–6 ounces of beer into a clean, clear glass.

You weren’t going to drink it from the bottle, were you? Pour vigorously enough to get a moderate head on the beer, but not so much as to interfere with drinking it.

Step 2: Swirl and smell the beer. Write your notes on the aroma.

Gently swirl the glass to release some CO2. The carbonation helps carry aromas to your nose. Sit back and relax.

Start with three drive-bys: pass the glass under your nose and take a short, sharp sniff of the aroma. Think about the smell. Do that twice more. Write down your impressions. Did it smell sweet? Fruity? Pungent? Dirty? Whatever it smells like, think of it as a description, not a judgment. Don’t try sounding fancy with phrases like tones of a warm Belgian lawn. However, if the beer smells like fruit punch with grass in it, then write it down. Your notes are for helping you remember, not for impressing the crowd with your sagacity.

Note: If you’re having trouble finding the aroma of the beer, try an old trick to help cleanse your sense of smell. Before you sniff the beer, smell your shirt sleeve. No, not your armpit! It’s amazing how plain cotton can neutralize your sense of smell and let the aroma of the beer come through.

Step 3: Examine the visual nature of the beer. Observe the color, clarity, and head.

Take a good look at the beer, paying special attention to its color and clarity. Hold it up to a light or shine a flashlight through it to perform a thorough inspection. Examine the head on the beer. Is it tight or loose? Are there big bubbles or small bubbles? What color is it? Does the head last or does it dissipate quickly? Again, jot down your impressions.

Step 4: Smell the beer again.

Go back and do another couple of drive-bys. As the beer warms and CO2 escapes from solution, aromas change. You’ll want to see if you smell anything that you didn’t smell earlier. Sometimes a particularly strong aroma on your first passes dissipates by the time you revisit the beer.

Step 5: Taste the beer.

Okay, now the good part: taste the beer. Take a small sip, maybe an ounce. Swirl the beer around your mouth so that it coats your gums, the roof of your mouth, and all parts of your tongue. Swallow the beer and immediately exhale through your nose. That’s called retronasal stimulation (ooh, science!) and will really bring out the aroma and flavors.

Think about what you taste. Is it sweet, malty, sour, bitter, fruity, or alcoholic? What’s the mouthfeel like? Is it full-bodied and mouth coating? Is it thin and digestible? Is it highly carbonated and spritzy or is it smooth and mellow? What’s the balance like? Is it malty or hoppy? Is the finish dry or sweet?

Drew: You may be asking yourself if this is all a bit too wine snob–like. Yes, yes it is. But the reality is that for just a moment, you need to focus all of your organoleptic sensors on gleaning every last bit of data from the beer. Your experiment’s impact can be surprisingly subtle, and you’ll never glean the effect if you sit and drink without intention. What you learn based on the tasting can result in changed, corrected, and improved beer.

THE SCIENCE OF TASTING OBJECTIVITY

The most difficult part of evaluating a beer is remaining objective. You brewed the beer, you know what went into it, you know how hard you worked on it and what you expected it to turn out like. But you have to be sure you aren’t fooling yourself when you taste it. It’s the difference between mounting an investigation and proving a hypothesis. To prove a hypothesis, you assume something is true and try to verify that. When you do an investigation, you’re looking for answers without a notion of what you may find. Each approach has its place, but by mounting an investigation, you won’t have preconceived ideas getting in the way of objective evaluation. There have been a number of experiments done to show how preconception can influence perception. Let’s take a look at a few of them.

One of the most interesting experiments was chronicled in the Journal of the Institute of Brewing (Vol 8, No. 1, 2002). Charles Bamforth and J. E. Smythe conducted tastings in three different countries. There were twelve tasters from Ireland, all untrained; twelve from Finland, trained in sensory evaluation; and fourteen from Belgium, rigorously trained and highly tested. In the first test, tasters were told that one beer was produced using traditional techniques and took 15 days to produce, while the other beer used new technology and yeasts that allowed it to be produced in only 10 hours. For the other test, tasters were told that one beer was produced strictly according to the Reinheitsgebot (using only malt, hops, water, and yeast), while the other beer got 30 percent of its fermentables from sugar. What the tasters didn’t know was that all of the beers were poured from the same bottle!

The more experienced the tasters were, the fewer preferences they described, but all tasters showed some preference for what they thought were the more traditionally brewed beers. This indicates that just the suggestion of a beer’s history can play a large role in the perception of tasters. This is why brewers continue to push romantic stories of farmhand beers or IPAs on ocean journeys. They create a potent predisposition in the drinker’s mind.

Another very interesting example, albeit with wine rather than beer, came from Frédéric Brochet in a 2001 experiment at the University of Bordeaux. He assembled a panel of fifty-four experienced wine tasters for evaluation of what they thought were four different wines. In the first test, they were given two glasses of wine, one white and one red. However, the red wine was actually the same white wine as was in the other glass with red food coloring. Nearly every taster described the red wine in terms ordinarily used to describe red wine, including words like jammy and crushed red fruit—terms that are seldom, if ever, used to describe white wines.

But that’s wine, right? That could never impact us in the beer world, where the ingredients we use to color the beer carry distinctive qualities! Who would ever confuse pilsner malt and chocolate malt? Well …

On a Norwegian beer blog called Larsblog, Lars Marius posted about a blind tasting conducted in 2008 during a judge training class he attended. There were some very experienced tasters involved, including three RateBeer users who had a total of eight thousand ratings, a man educated as a wine sommelier, and two commercial microbrewers.

The participants were given three unidentified black beers and asked to write notes about them before attempting to guess the beer styles. All the tasters’ notes identified flavors like roasty or caramel. All the tasters were shocked to find out that of the three beers, only one was an actual dark beer. The other two? Ringnes Pils (a super common pils in Norway) and Erdinger Hefeweizen with black coloring added to them! Every one of the ten tasters claimed to taste roastiness in the beers, and not a single taster had any idea that a beer might actually be a pilsner or a hefe. It appears that their senses of taste and aroma were completely fooled by the color of the beer.

Looking back to Brochet, in another test he took two bottles of wine, one a grand cru and the other an ordinary vin de table, poured them out, and filled both bottles with a mid-level Bordeaux. Tasters then described exactly the same wine in almost completely opposite terms. The wine in the grand cru bottle was described as agreeable, woody, complex, balanced, and rounded, while the supposed vin de table was weak, short, light, flat, and faulty. Brochet’s conclusion was that the perception of the wine was often more important than what was actually in the glass. Kind of makes you wonder about your own tasting skills and susceptibility to labels, doesn’t it?

Finally, in 2008, Avenue Vine published an article about how actual physiological changes took place in tasters’ brains based on their perception of the price of the wine they were tasting. They found that people who paid more for wine actually enjoyed it more! Brain scans of people who were told they were drinking a more expensive wine confirmed that their pleasure centers were activated much more than people who were told that they were drinking a less expensive wine. They found changes in a part of the brain called the medial orbito-frontal cortex, which plays a main role in many types of pleasure. The cortex became more activated by the supposed expensive wines than the cheap ones.

So, what do we as homebrewers need to do to evaluate our experiments and be reasonably certain they’re objectively assessed? First, we must gather our tasters!

FINDING GUINEA PIGS … ER … TASTING PANELISTS

The problem with trying to evaluate your own beer is that you know how you made it, and you know what you’re looking for. That makes it extremely difficult to evaluate your own beer objectively. You need a tasting panel!

Does that mean you need beer experts or supertasters? Not necessarily. If your goal is to find out if a change in process or ingredients produces a beer that people prefer, the tasters only need to like beer and have some idea of what it is they like. However, if you’re hoping to compare two beers with different sulfate levels, the tasters need to be experienced and sophisticated enough to pick out and describe qualities of bitterness and dryness as well as potentially elevated mineral levels.

It just so happens there’s a place you can find both types of tasters: your homebrew club! Clubs have a wide range of beer lovers, from those who just like beer to BJCP judges. It’s not uncommon to find professional brewers in the ranks of club members as well. Keep in mind that even for more serious tastings, it can be valuable to have a range of experience and ability in your panel to give you a balanced impression of the beers. Sometimes a novice taster will pick out something that a more experienced person has overlooked. Here’s why we love science: to us someone is a novice taster, but to a scientist that person is a seminaïve assessor.

Additionally, experienced tasters may have built-in biases that may not reflect general taste preferences. For example, in 2006 Cook’s Illustrated magazine did a taste test of olive oil where they ranked inexpensive Spanish and Greek olive oils over the vaunted and expensive Italian oils. Alexandra Kicenik Devarenne, an olive oil expert, convened a panel of trained tasters to sample the same oils, and their results were almost reversed. Who’s right? The panel of cooks and regular Joes or the trained tasters who obsess over oil? The trained group asserted that Cook’s Illustrated got it wrong, because they were looking for flavors familiar to the American palate that are considered defects by the olive oil community. Cook’s Illustrated said they’re right because the oils tasted better for the applications called for in an American kitchen. In sensory science, this is the battle and difference between “consumer panels” (Cook’s Illustrated) and “descriptive panels” (Devarenne’s group).

We see this battle all the time in the beer world. Take a look at a beer-rating site like Beer Advocate. In their top twenty-five beers, they have, as of this writing, only two beers that are below 7 percent alcohol, and one of those is a decidedly nonaverage Cantillon Lambic. Lists like this, compiled by the insanely passionate, end up dominated by bold styles like Double IPA, Imperial Stout, Belgian Quad, and so on. Now go look at what sells the best. In recent years, amongst the self-selected crowd of craft beer drinkers, the top sellers have been Seasonals, Pale Ales, and IPAs. That’s your expert-versus-common-taster dilemma in two lists.

Note: Over time, make sure you learn the biases and perception skills of your judges. If your test centers on Belgian beers, you probably don’t want to invite the guy who thinks all Belgian beers taste like shoes soaked in alcohol. Also, be aware if anyone in your group is a supertaster (see above) and watch closely for their evaluations.

ONE IS THE LONELIEST NUMBER: THE BLIND SIDE

If, for some reason, you’re unable to find people to evaluate beer for free, your course of action is a little less clear. First, you clearly need better friends and acquaintances. Second, if you have a spouse, a minister, a helpful bartender, anyone other than you, you can have them serve as your blind.

The blind is how scientists ensure objectivity. Everything you as a tester can remove to avoid bias, you remove. That means: Use the same cups, the same level of cleanliness, the same lighting, the same aromas in the setting, and so on. Ideally, your evaluators have no differences except the key one, under test, to add potential bias to their evaluation. You can thank the French for this concept, which, shockingly, was first recorded in 1784.

Since you’ll be the one with all the knowledge about what’s being tested, you’ll be acting as the blind in your panel tastings. Think of the classic soda wars style taste-off. The guy pouring the soda knows which is Coca-Cola and which is Pepsi, but none of the tasters do. In the case of our tasting panels, you’ll usually want to run two sets of tastings. The first tasting is where the tasters are blind to what the difference is (for example, whether a certain process creates more hop aroma). After seeing if there’s a perceivable difference, the second pass can have the tasters focus on questions like “what do you notice about the hop aroma?”

So, if it’s to be a tasting panel of one (you), make sure that you have someone else pouring your samples. Not having someone else pour the unmarked sample means your awareness of the samples makes your evaluations subject to all the biases you bring.

To be truly hardcore, you can take it a step further. Since it turns out that the guy running that cola taste-off could be subconsciously giving signals to the tasters as to which cola is his preferred taste, it can be a good idea to go double blind. Beyond signaling, in observational tests, an observer with knowledge may let that knowledge about the samples affect what’s recorded. (For example: “Aha! I think Denny winced a little when he tried sample B, which is the doctored version, so that’s a positive hit,” when in reality Denny was wincing because Drew put on the Grateful Dead in the background.)

A double blind study moves the chain of knowledge—which sample is which, what part of a group someone is in, and so on—back away from the folks running the experiment and to the ultimate researchers. You’ll be hard pressed to double-blind a tasting of one, but for your regular panels, double-blinding is as easy as turning the sample pouring and observation recording over to someone who doesn’t know what you’re testing.

TYPES OF TESTING

TRIANGLE TASTING: THE GATEWAY TO VALIDITY

The best way to evaluate a beer objectively is to use what’s known as blind triangle tasting. In a nutshell, have someone else pour two samples of one of the beers you want to evaluate and one sample of another. The objective is to pick out the sample that’s different. If you can’t do that, then you know that whatever you’re testing for doesn’t really matter. If you can pick out the different sample, then you can go ahead and use the qualitative tests discussed later in this chapter to evaluate it.

Triangle testing is a cornerstone of beer evaluation. In the world of sensory science it is considered a difference test. The taster is given no guidance about what’s different. He or she is just handed three samples and asked which two are the same.

A triangle test can help determine if a difference exists at all. Think about the major debates like: Does decoction mashing make a difference in the flavor of the beer? If it does, the tasters will detect it. If not, then the test tentatively indicates that the variable you changed (decoction mashing versus infusion) makes no perceptual difference.

It can also serve as a test of panelist quality. (For example, a better, more trustworthy panelist will spot the difference.) You can use this during your panels to select judges for further tastings (“Great! Now tell us about the character differences you perceive and evaluate sample B fully”) or to weight their feedback above those who missed the difference.

Keep in mind that this technique doesn’t work for vastly different beers, like a pilsner and a stout. The beers need to be the same beer with a slight difference. Look at the sample process above. The two beers are pilsners with the only difference being the mashing method. It could as easily be two Scottish ales with concentrated kettle runnings in one and the other just plain. Maybe the same wort with two different yeast strains, and so on.

If you want to get all super science-y when you’re using a tasting panel, you may want to use weighting to determine how many panelists are likely to choose the odd beer simply by chance. There are resources available online to help you with weighting.
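The chance calculation mentioned above can be sketched in a few lines of Python. With three glasses, a taster who is purely guessing picks the odd beer one time in three, so the number of lucky picks follows a binomial distribution. The function names and the 0.05 cutoff are our own illustrative choices, not part of any official protocol.

```python
from math import comb

def chance_of_at_least(correct, tasters, p_guess=1 / 3):
    """Probability that `correct` or more tasters pick the odd beer by guessing alone."""
    return sum(
        comb(tasters, k) * p_guess**k * (1 - p_guess) ** (tasters - k)
        for k in range(correct, tasters + 1)
    )

def minimum_correct(tasters, alpha=0.05):
    """Smallest number of correct picks that guessing produces less than `alpha` of the time."""
    for correct in range(tasters + 1):
        if chance_of_at_least(correct, tasters) < alpha:
            return correct
    return None  # the panel is too small to ever reach this cutoff

for panel_size in (6, 12, 18, 24):
    print(panel_size, "tasters ->", minimum_correct(panel_size), "correct picks needed")
```

Under these assumptions a panel of 12 needs 8 correct picks and a panel of 18 needs 10 before you can reasonably rule out luck. Smaller panels need a much higher hit rate, which is one more argument for recruiting the whole club.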

Cathy Haddock is a sensory specialist at Sierra Nevada Brewing Company in Chico, California. Part of her job is to conduct blind triangle tastings of the beers produced by Sierra Nevada. Sometimes it’s to evaluate new recipes being considered for production, and sometimes it’s for quality assurance, in order to make certain a batch of beer meets Sierra Nevada’s high standards and is consistent with previous batches. Cathy has a few tips for homebrewers who want to conduct a blind triangle tasting:

Proper protocol needs to be followed in order to trust your results. Proper protocol includes following procedures in which a taster’s response is not biased or influenced due to any psychological factors or environmental conditions. Those psychological/environmental factors that can influence a taster’s response when doing a triangle test are numerous, but I will sound off on a few that I feel are most important:

1. We will not tell the tasters anything about the samples they are tasting in a triangle test other than the brand so that they do not have any information to bias their response.

2. We serve the samples in a frosted glass to help eliminate visual cues biasing a taster’s response.

3. All samples are poured the same amount of beer, with careful attention as to not have one beer more foamy than the others.

If that is not enough, I also ask that they not even look at the samples, just simply grab the glass, noting its three-digit code, and evaluate the sample. This way, we can have confidence the tasters are not biased by any visual cues.

We also run the triangle tests in a random balance order to help eliminate First Order effect, which is where the first sample evaluated is perceived stronger—whether negatively or positively—and therefore may be chosen as the odd sample out. This type of presentation format employs that the odd sample out is evenly tasted in the first, second, and third position in a three-sample triangle set. I also allow tasters to retaste if necessary.

Other external controls we employ to help offset bias is that other tasters in the tasting area do not verbalize, whether through speech or body language, any opinions on the samples they are tasting in triangle test.
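A balanced, randomized serving plan like the one Cathy describes can be sketched in Python as follows. This is only an illustration under our own assumptions: the function name `triangle_servings`, the labels "A" and "B" for the two beers, and the way the three-digit codes are drawn are all hypothetical, not Sierra Nevada’s actual procedure.

```python
import random
from itertools import permutations

def triangle_servings(tasters, seed=None):
    """Return one list of (three-digit code, beer) glasses per taster."""
    rng = random.Random(seed)
    # Six possible orders: the odd beer sits in each position twice,
    # and each beer takes a turn as the odd one out three times.
    orders = sorted(set(permutations("AAB")) | set(permutations("ABB")))
    plan = []
    for i in range(tasters):
        if i % len(orders) == 0:
            rng.shuffle(orders)  # reshuffle at the start of every block of six tasters
        order = orders[i % len(orders)]
        codes = rng.sample(range(100, 1000), 3)  # three distinct codes for this taster
        plan.append(list(zip(codes, order)))
    return plan

for taster, glasses in enumerate(triangle_servings(6, seed=1), start=1):
    print(taster, glasses)
```

Recruiting tasters in multiples of six keeps the plan fully balanced. Whoever pours keeps the printed key; the tasters only ever see the codes.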

An alternate version of the classic triangle test is a duo-trio test. In this type of test, you still work with three samples, but one sample is designated to the tasters as the reference. The tasters then must choose which of the remaining two beers matches the reference sample. The primary effect is allowing a different set of tasters on to the second level of evaluation.

QUALITATIVE DIFFERENCE TESTING

With the question of “Can we notice a difference?” out of the way thanks to the triangle test, there are other things that you’re probably concerned about including the all-important: What is the difference between these beers? Since there’s no right or wrong answer, the important part is to watch and observe the reactions of your tasters. Try to keep the participants from talking to each other, though, since you don’t want a lot of chatter influencing their opinions.

Hedonistic Testing

“All things pleasurable are good” is the core of hedonism as a philosophy. In evaluation, hedonism is simply the measurement of your enjoyment of a sample. A taster is given a set of samples, with or without information about differences, and asked to rate the samples on a scale of 1 to 10.

The evaluators can rank the sample anywhere they want; there’s no need for unique scores. If an evaluator feels that all of their samples are worth a 10 rating, then that’s their call. These ratings can show you the general preference of tasters. Whereas a number of these other tests focus on discerning single changes, a hedonistic test shows how a person actually trying the beer feels about it in general.

Rating Difference Testing

A rating difference test is like the hedonistic test in that it allows evaluators to rate a beer and assign a score. Unlike the hedonistic test, it focuses on a particular characteristic. Instead of asking, “Do you like this beer?” you ask evaluators something like, “Rate this beer’s hoppiness.” Tasters then assign a score according to the characteristic you ask about on a scale of 1 to 10. Using a rating difference test allows you to hone a taster’s responses and detect patterns that might otherwise be obscured.

RANK TESTING

Sometimes you’re faced with a set of samples that need to be sorted into discrete positions. For instance, maybe you have five samples of different levels of dry hopping. You then ask your evaluators to rank the five samples in terms of absolute dry hopping character. No two samples can have the same ordinal value.

You can test other characteristics here as well. For example, by asking evaluators to do things like put two beers in order of color from light to dark, you can determine any color difference contributed by a process like wort reduction or late extract additions. If the evaluators don’t show consistent rankings, you can safely ascribe no discernable difference to the processes involved.
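One way to put a number on “consistent rankings” is Kendall’s coefficient of concordance (W), a standard agreement statistic that this chapter does not itself call for. It runs from 0 (the tasters’ rankings cancel each other out) to 1 (everyone ranked the samples identically). The sketch below assumes every taster ranks every sample with no ties, and the rankings shown are made up for illustration.

```python
def kendalls_w(rankings):
    """Kendall's W for a list of rankings, one list of ranks (1..n) per taster."""
    m = len(rankings)        # number of tasters
    n = len(rankings[0])     # number of samples
    rank_sums = [sum(taster[j] for taster in rankings) for j in range(n)]
    mean_sum = m * (n + 1) / 2
    s = sum((total - mean_sum) ** 2 for total in rank_sums)
    return 12 * s / (m**2 * (n**3 - n))

# Hypothetical panel: four tasters ranking five dry-hopped samples.
panel = [
    [1, 2, 3, 4, 5],
    [1, 3, 2, 4, 5],
    [2, 1, 3, 5, 4],
    [1, 2, 4, 3, 5],
]
print(f"Agreement (W) = {kendalls_w(panel):.2f}")
```

A value near 1 suggests the tasters really are perceiving an ordering; a value near 0 is what you would expect if the samples were indistinguishable.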

PAIRED COMPARISON TESTING

Sometimes you’ll want a focused response from your tasters. With a simple question like “Which of these two beers is hoppier?” you can use your evaluators to determine if there’s a pointed directional difference between two samples. If enough evaluators choose the same sample, you know that difference is significant. However, if there isn’t a clear tendency toward one of the samples, then you know the change between the two versions isn’t overt and can be considered a wash.

The samples don’t always have to be different, either. Some panels use a comparison test to weed out evaluators. They do this by presenting the same sample twice to a taster. An accurate taster should note that there’s no difference.
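How many evaluators is “enough” in a paired comparison? A rough answer comes from the same coin-flip logic: if the two beers were indistinguishable, each taster would be equally likely to pick either one. The sketch below is our own illustration of that idea (a two-sided binomial check); the function name is hypothetical, and tasters who report no difference are simply left out of the counts.

```python
from math import comb

def paired_comparison_p(votes_for_a, votes_for_b):
    """Chance of a split at least this lopsided if tasters were picking at random."""
    n = votes_for_a + votes_for_b
    k = max(votes_for_a, votes_for_b)
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2**n
    return min(1.0, 2 * tail)  # doubled because either beer could have "won"

for a, b in [(9, 1), (7, 3), (5, 5)]:
    print(f"{a} vs {b}: p = {paired_comparison_p(a, b):.3f}")
```

A small value means the panel’s lean toward one beer is unlikely to be luck. A value near 1 is the “wash” described above.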

TESTING IN ACTION

You’ve run your testers through the gauntlet. Data has been recorded. The results are in. But do they mean anything? There are whole courses of statistical analysis to determine the meaning of your results.

To start with, math is good but can be scary—so we’ll try and keep this simple. For tests like the ranking tests and hedonistic tests, you can glean a lot of information from the simple average of each beer sample. When you lay out the scores, you’ll see clear preferences.
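As a minimal sketch of that first step, here is how the averages might be tallied in Python. The beers and scores are invented for illustration. The standard deviation is included only as a rough gauge of how much the tasters disagreed.

```python
from statistics import mean, stdev

# Hypothetical 1-to-10 scores from five tasters.
scores = {
    "Beer A": [7, 8, 6, 9, 7],
    "Beer B": [5, 6, 6, 4, 7],
    "Beer C": [8, 8, 9, 7, 9],
}

summary = {beer: (mean(vals), stdev(vals)) for beer, vals in scores.items()}

# List the beers from highest to lowest average score.
for beer, (avg, spread) in sorted(summary.items(), key=lambda item: item[1][0], reverse=True):
    print(f"{beer}: average {avg:.1f}, spread {spread:.1f}")
```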

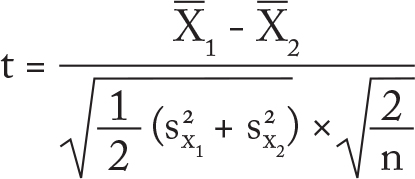

One of the classic statistical tests was invented at the Guinness Brewery and is called the “Student’s T-test.” William Sealy Gosset, a Guinness chemist, created the test in 1908 when he wanted to figure out how to verify statistical significance when you only have a small number of evaluators. His pseudonym was “Student,” hence Student’s T-test. He used a pen name because Guinness didn’t want their competitors to catch on that they were making better beer using math! Is there anything beer math can’t do?

Here’s what Student’s T-test looks like for an equal set of evaluations like what we’re running.
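The standard form for two equally sized groups of scores, which matches the description here, is usually written as follows. The symbol names are our own labelling: the x-bars are the average scores for beer 1 and beer 2, the s terms are their standard deviations, and n is the number of scores per beer.

```latex
t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_1^2 + s_2^2}{n}}},
\qquad \text{degrees of freedom} = 2n - 2
```

This form assumes both sets of scores have roughly the same spread, which is a reasonable starting point when the same panel scores both beers.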

In the stats world this is a simple equation. What it basically says is this: Take the difference of the average scores of the two evaluations (beer1 and beer2) and divide it by a term built from the standard deviations (the s variables) and the sample size (n). This t value is then used to calculate the probability (p) of seeing a difference at least this large if the null hypothesis (your change didn’t matter) is true. This p value ranges from 0 to 1 and is compared to a standard value (usually 0.10, 0.05, or 0.01). If the p value is less than the chosen value, your difference is significant.

In the modern world of computers, don’t fret the equation. There are plenty of websites and spreadsheets that will do the calculation for you. Excel, for instance, has a function called “T.TEST” that you feed two groups of cells (with your score data), the number of tails (2), and a type (2 for two samples with equal variance). It returns the final p value.

STUDENT’S T-TEST EXAMPLE 1: TWO BEERS SCORED

| Beer 1 Scores | Beer 2 Scores |
|---|---|
| 35 | 20 |
| 34 | 21 |
| 38 | 22 |
| 23 | 33 |
| 42 | 28 |

In example 1 we have two beers that have been scored in a BJCP-like fashion. Beer 1 scored much higher with the tasting panel than Beer 2. Running the scores through a simple two-tailed Student’s T-test, we get a returned p value of 0.044, which is under the 0.10 and 0.05 thresholds. This means that whatever change this test was checking is significant. Given how most judges use the BJCP scoring system (almost all scores fall in the 20–40 range), it seems fairly standard to assume anything below 0.1 is significant when tested this way.

STUDENT’S T-TEST EXAMPLE 2: TWO CLOSER BEERS SCORED

| Beer 1 Scores | Beer 2 Scores |
|---|---|
| 34 | 22 |
| 33 | 23 |
| 37 | 24 |
| 22 | 35 |
| 41 | 30 |

This second test shows a minor change in scoring; the tasters liked Beer 1 exactly one point less than before and liked Beer 2 two points more than before. The resulting p value? 0.14, which is above even the loosest threshold of 0.10. We can’t reject the null hypothesis, which indicates that our change isn’t as significant as we thought.
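If you’d rather check the two examples yourself than trust a spreadsheet, here is a minimal Python sketch that uses only the standard library. It assumes the equal-sample-size, equal-variance form of the test, and the function names `t_statistic` and `two_tailed_p` are our own. The p value comes from numerically integrating the t distribution, so treat the last digits as approximate.

```python
import math
from statistics import mean, variance

def t_statistic(a, b):
    """Student's t for two equally sized groups of scores."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes the same number of scores per beer")
    n = len(a)
    return (mean(a) - mean(b)) / math.sqrt((variance(a) + variance(b)) / n)

def two_tailed_p(t, df, steps=2000):
    """Two-tailed p value via Simpson's-rule integration of the t density."""
    coeff = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def density(x):
        return coeff * (1 + x * x / df) ** (-(df + 1) / 2)

    upper = abs(t)
    h = upper / steps  # steps must be even for Simpson's rule
    total = density(0) + density(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * density(i * h)
    return 1 - 2 * (total * h / 3)  # area outside the range -|t| .. +|t|

examples = {
    "Example 1": ([35, 34, 38, 23, 42], [20, 21, 22, 33, 28]),
    "Example 2": ([34, 33, 37, 22, 41], [22, 23, 24, 35, 30]),
}
results = {}
for name, (beer1, beer2) in examples.items():
    t = t_statistic(beer1, beer2)
    p = two_tailed_p(t, df=len(beer1) + len(beer2) - 2)
    results[name] = (t, p)
    print(f"{name}: t = {t:.2f}, p = {p:.3f}")
```

Run as written, the sketch should land close to the 0.044 and 0.14 quoted for the two examples.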

EXAMPLE EVALUATION WITH DENNY

Here’s an example of an experiment I performed a few years ago and evaluated with a blind triangle test. The objective was to compare a beer that received first wort hops (FWH) only to a beer that received an equal amount of hops as a single 60-minute addition. I told the tasters nothing about the beers or the purpose of the tasting. Each taster received three identical glasses labeled A, B, and C: two glasses of one beer and one glass of the other. I didn’t hide the color of the beers, since they were two 5-gallon batches made from the same batch of wort. The tasters were first asked a set of questions about which beers were the same. In the standard procedure, only tasters who correctly identify the different beer go on to a second set of questions asking them to describe the beers. I let all of the tasters answer the second set, even if they were incorrect in identifying the different beer, because I was interested in their perceptions and knew I could filter out the wrong answers later if I wanted to. The tasters received questionnaires with the following questions.

Part 1 (Triangle Test)

1) These three samples are:

• The same

• One is different from the other two.

• All three are different from each other.

2) If two or more beers are the same, list which they are.

3) If you detected a difference, describe what was detected for each sample.

4) Did you prefer one of the samples?

A B C no preference

5) If you had a preference, what was it about the sample that you preferred?

Part 2 (Qualitative Testing)

At this point, identify the two different samples and relabel them as 1 and 2.

1) Thinking of bitterness, did one sample seem more bitter? (Rank Testing)

1 2 no preference

2) Subjectively describe your impression of the bitterness of each sample. (Hedonistic Testing)

3) Thinking of hop flavor, did one sample seem to have more hop flavor? (Rank Testing)

1 2 no preference

4) Subjectively describe your impression of the hop flavor of each sample. (Hedonistic Testing)

I also sent the beers to two different laboratories to have the bitterness analyzed. (Thanks to Scott Bruslind at Analysis Laboratory and to Bob Smith at S. S. Steiner.) The result surprised me, but it was educational to see that even though the FWH beers had a slightly higher analyzed bitterness, a number of tasters (including myself) actually found them to taste less bitter.

GAS CHROMATOGRAPHY RESULTS

| Beer | IBU |
|---|---|
| A (FWH) | 31 |
| B (60) | 28.7 |

HPLC (HIGH PRESSURE LIQUID CHROMATOGRAPHY) RESULTS

| Beer | Iso-alpha-acids | Alpha-acids | Humulinones |
|---|---|---|---|
| A (FWH) | 24.8 | 3.5 | 1.9 |
| B (60) | 21.8 | 4.7 | 1.8 |

The alpha-acids are not bitter, though they contribute to the measured bitterness units value. The humulinones are oxidized alpha-acids and are slightly bitter.

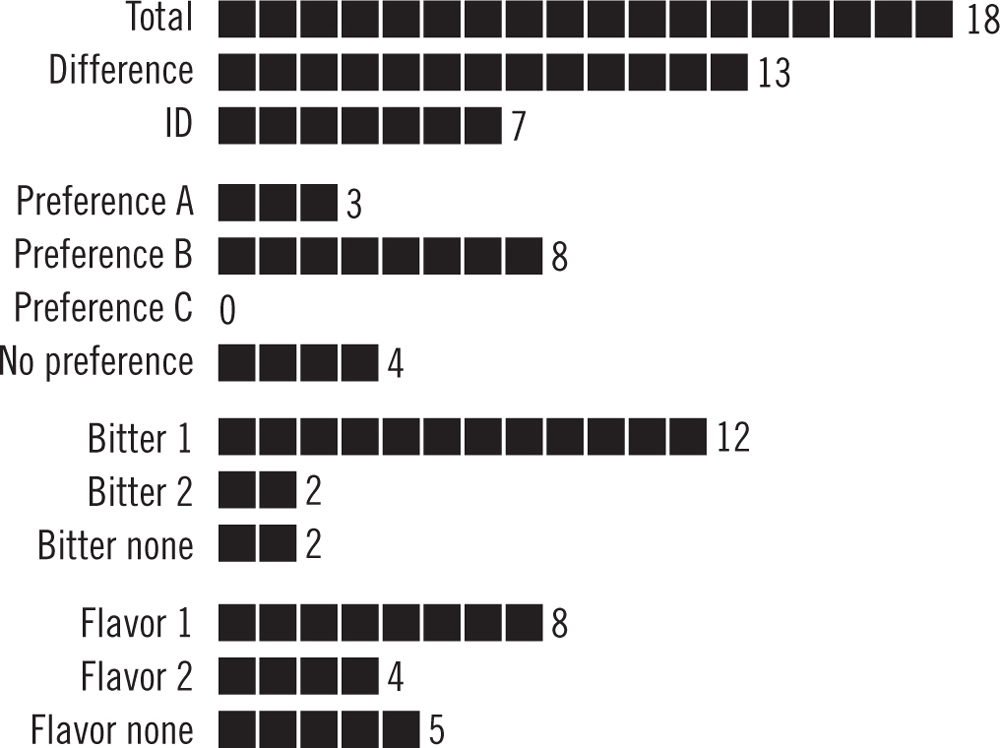

Interpreting the Results

The results of the tasting are summarized in the graph that follows. Quickly summarized, you can see there were eighteen total tasters. Thirteen said they found a difference, but only seven correctly identified which beer was different. Of those seven, five preferred beer B, which was the FWH beer. As you can see, there was no clear consensus. So, what do you do in a case like that? Trust yourself! Even though the experiment didn’t point in any one direction, I feel that I can taste the difference that FWH makes, and I continue to use the procedure extensively to this day. Sure, it may be counterintuitive, but it’s my beer, and I like it!
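To see why seven correct picks out of eighteen tasters doesn’t amount to a consensus, it helps to compare the result with pure guessing. The short sketch below is our own back-of-the-envelope check, not part of the original experiment. It assumes each taster had a one-in-three chance of picking the odd beer by luck.

```python
from math import comb

tasters, correct = 18, 7
p_guess = 1 / 3  # chance of picking the odd glass out of three by luck

# Probability that 7 or more of 18 guessers would pick the odd beer.
p_value = sum(
    comb(tasters, k) * p_guess**k * (1 - p_guess) ** (tasters - k)
    for k in range(correct, tasters + 1)
)

print(f"Correct picks expected from guessing: {tasters * p_guess:.0f}")
print(f"Chance of {correct} or more correct by guessing: {p_value:.2f}")
```

Guessing alone would produce about six correct picks, and seven or more would turn up roughly 39 percent of the time, so the panel’s result is well within what luck can explain.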

CONCLUSION

In the end, after all the science and objective tasting, there’s one final criterion to consider: Do you like the beer? Would you have another pint? That’s what determines if a beer is “moreish.” Do you want another one? Or two?

Both of us will readily admit that there are times we’ve gone in favor of blind, stupid decision making in spite of all the experimental evidence in front of us. It happens. We’re human and assume you are, too. Just be sure that if you do that you are aware of the ramifications of your decision. It might mean you spend extra time and effort doing a decoction mash because you love the process even if you don’t think it really makes a better beer. Or it may mean that you make an ingredient choice that you love but that everyone else who tries your beer hates. Wait a minute … maybe that’s not a bad thing!