LTE-M performance

Abstract

This chapter presents LTE-M performance in terms of coverage, data rate, latency, and system capacity, based on the functionality described in Chapter 5. The performance evaluations largely follow the International Mobile Telecommunications-2020 (IMT-2020) and 5G evaluation frameworks defined by the International Telecommunication Union Radiocommunication Sector (ITU-R) and 3GPP, respectively. LTE-M is shown to meet, in all aspects, the massive machine-type communications (mMTC) part of the requirements defined by ITU-R and 3GPP. The reduction in device complexity achieved by LTE-M compared to higher LTE device categories is also presented. Although LTE-M has been specified for half-duplex frequency-division duplexing (HD-FDD), full-duplex FDD (FD-FDD), and time-division duplexing (TDD) operation, this chapter focuses on the performance achievable with LTE-M HD-FDD.

Keywords

6.1. Performance objectives

- Coverage corresponding to a maximum coupling loss (MCL) of 164 dB should be supported.

- A sustainable data rate of at least 160 bits per second should be supported at the 164 dB MCL.

- A small data transmission latency of no more than 10 seconds should be supported at the 164 dB MCL.

- A battery-powered device should support small, infrequent data transmissions for at least 10 years at the 164 dB MCL.
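
The four objectives above can be read as a set of thresholds that any evaluation result has to satisfy. The following is a minimal sketch of such a check; the `EvaluationResult` container and the `unmet_objectives` helper are hypothetical names introduced for illustration only, and the sample values in the usage line are made up rather than taken from this chapter.

```python
from dataclasses import dataclass
from typing import List

# Thresholds copied from the objectives listed above.
TARGET_MCL_DB = 164.0
MIN_DATA_RATE_BPS = 160.0
MAX_LATENCY_S = 10.0
MIN_BATTERY_LIFE_YEARS = 10.0


@dataclass
class EvaluationResult:
    """Hypothetical container for results evaluated at a given MCL."""
    mcl_db: float
    data_rate_bps: float
    latency_s: float
    battery_life_years: float


def unmet_objectives(result: EvaluationResult) -> List[str]:
    """Return the names of the objectives that the result does not meet."""
    failures = []
    if result.mcl_db < TARGET_MCL_DB:
        failures.append("coverage")
    if result.data_rate_bps < MIN_DATA_RATE_BPS:
        failures.append("data rate")
    if result.latency_s > MAX_LATENCY_S:
        failures.append("latency")
    if result.battery_life_years < MIN_BATTERY_LIFE_YEARS:
        failures.append("battery life")
    return failures


# Illustrative values only, not results from this chapter.
sample = EvaluationResult(mcl_db=164.0, data_rate_bps=200.0,
                          latency_s=8.0, battery_life_years=11.0)
print(unmet_objectives(sample))
```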

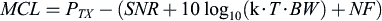

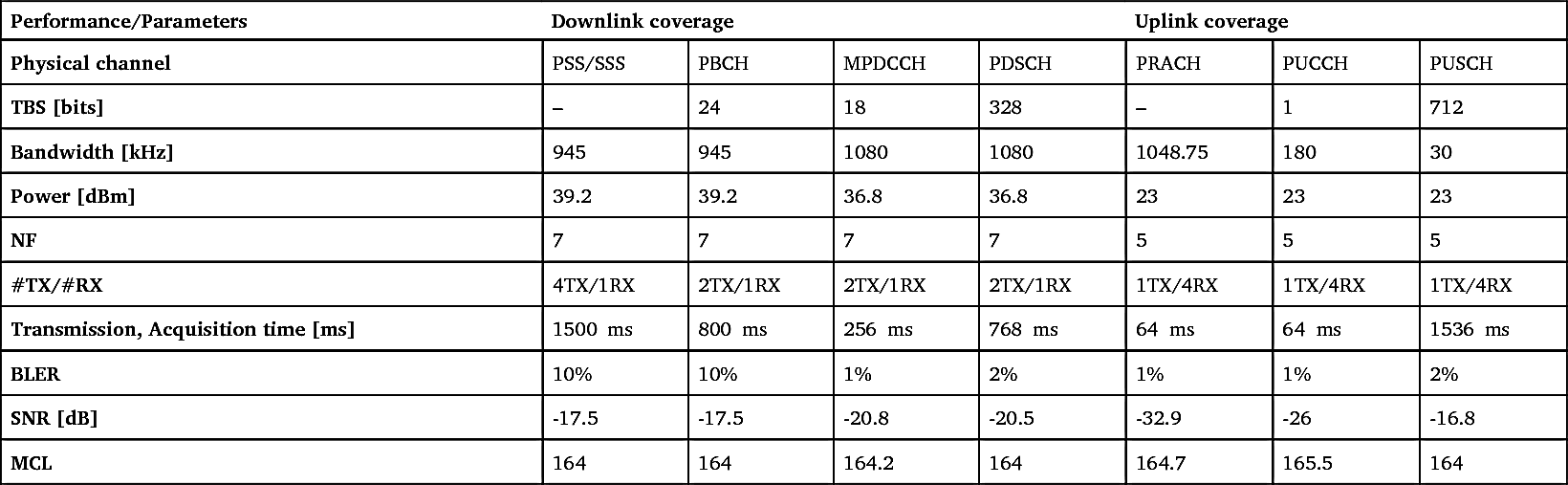

6.2. Coverage

(6.1)


Table 6.1

| Parameter | Value |
|---|---|
| Physical channels and signals | DL: PSS/SSS, PBCH, MPDCCH, PDSCH; UL: PUCCH Format 1a, PRACH Format 0, PUSCH |
| Frequency band | 700 MHz |
| TDL channel model | TDL-iii |
| Fading | Rayleigh |
| Doppler spread | 2 Hz |
| Device NF | 7 dB |
| Device antenna configuration | 1 TX and 1 RX |
| Device power class | 23 dBm |
| Base station NF | 5 dB |
| Base station antenna configuration | 2 or 4 TX and 4 RX |
| Base station power level | 29 dBm per PRB; 3 dB power boosting on PSS, SSS, and PBCH |
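
To illustrate how the parameters in Table 6.1 relate to the 164 dB MCL target, the sketch below applies the standard link budget relation, MCL = transmit power minus receiver sensitivity, where sensitivity is the thermal noise floor plus noise figure plus required SNR. This is a generic textbook relation and is not claimed to be the chapter's Equation (6.1). The thermal noise density of -174 dBm/Hz and the 180 kHz PRB bandwidth are assumptions not stated in the table, and the function names are hypothetical.

```python
import math

# Assumption: thermal noise density kT at roughly 290 K.
THERMAL_NOISE_DBM_PER_HZ = -174.0
# Assumption: one LTE PRB spans 12 subcarriers of 15 kHz.
PRB_BANDWIDTH_HZ = 180e3


def noise_floor_dbm(bandwidth_hz: float, noise_figure_db: float) -> float:
    """Receiver noise floor over the given bandwidth, including the NF."""
    return (THERMAL_NOISE_DBM_PER_HZ
            + 10.0 * math.log10(bandwidth_hz)
            + noise_figure_db)


def mcl_db(tx_power_dbm: float, bandwidth_hz: float,
           noise_figure_db: float, required_snr_db: float) -> float:
    """Coupling loss at which the received SNR equals the required SNR."""
    sensitivity = noise_floor_dbm(bandwidth_hz, noise_figure_db) + required_snr_db
    return tx_power_dbm - sensitivity


def required_snr_db(tx_power_dbm: float, bandwidth_hz: float,
                    noise_figure_db: float, target_mcl_db: float) -> float:
    """SNR a channel must operate at to reach the target coupling loss."""
    return (tx_power_dbm - target_mcl_db
            - noise_floor_dbm(bandwidth_hz, noise_figure_db))


# Downlink, per PRB: 29 dBm base station power and 7 dB device NF (Table 6.1).
dl_snr = required_snr_db(29.0, PRB_BANDWIDTH_HZ, 7.0, 164.0)
print(f"DL SNR needed per PRB at 164 dB MCL: {dl_snr:.1f} dB")
```

Under these assumptions, a downlink channel transmitted at the per-PRB power level has to be decodable at an SNR of roughly -20 dB to reach the target, which is why repetition-based coverage enhancement is needed.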

Table 6.2

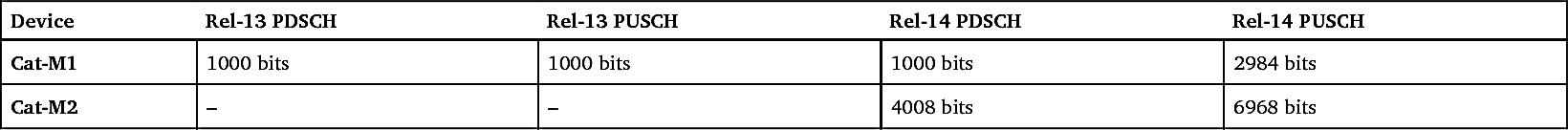

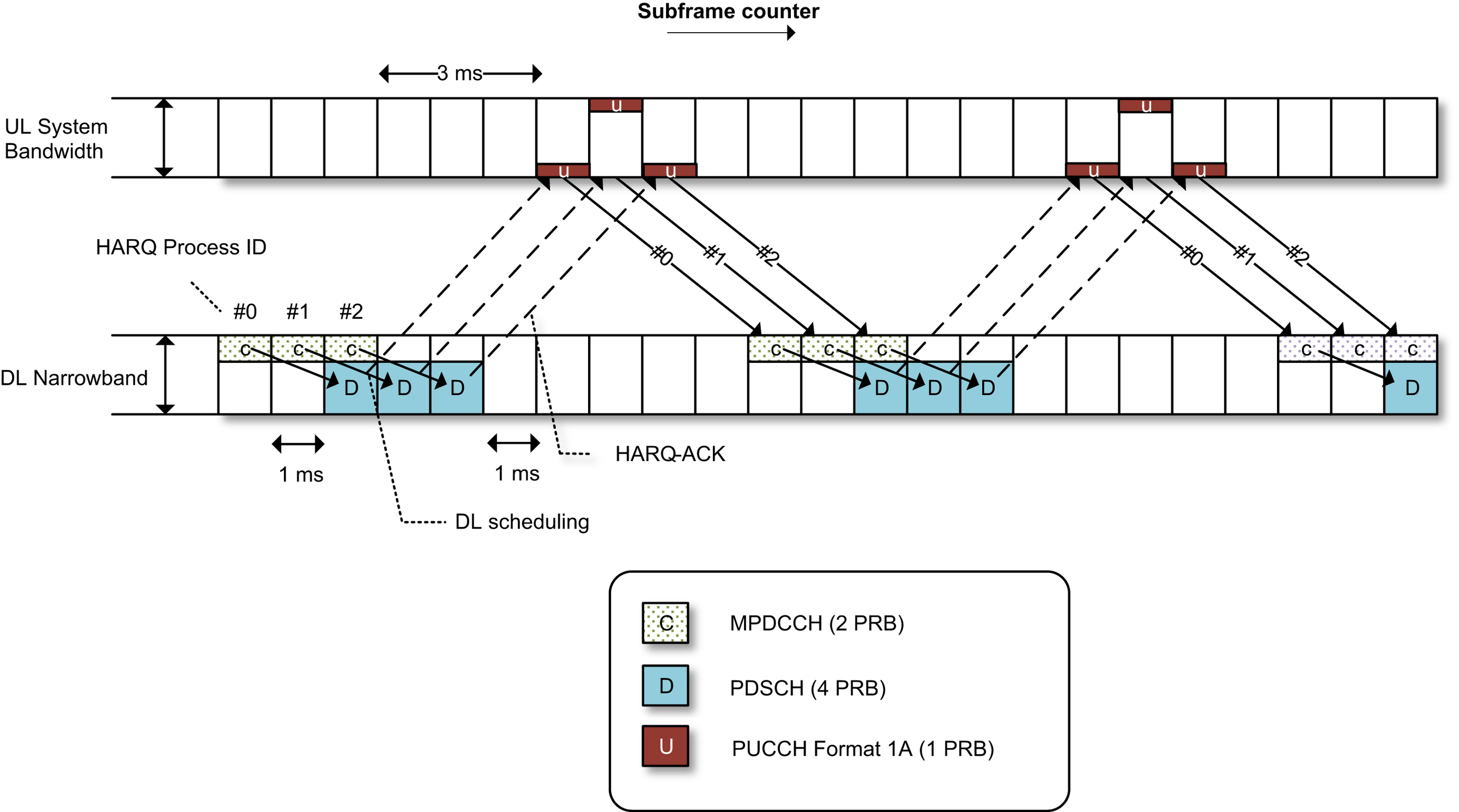

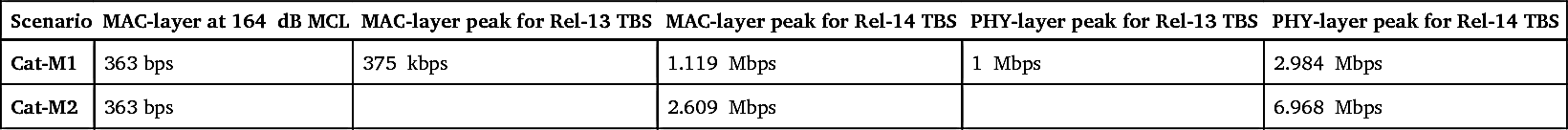

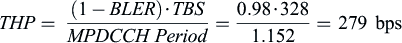

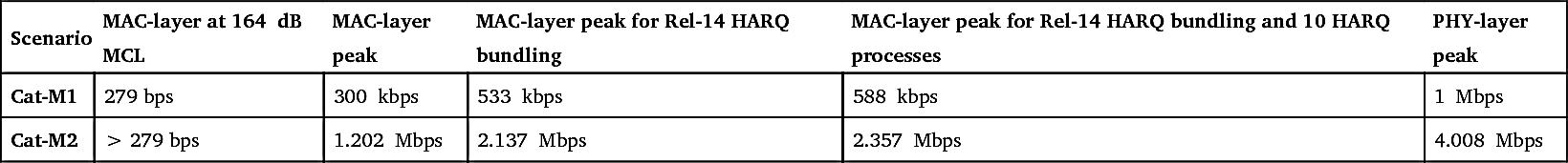

6.3. Data rate

6.3.1. Downlink data rate

(6.2)


Table 6.4

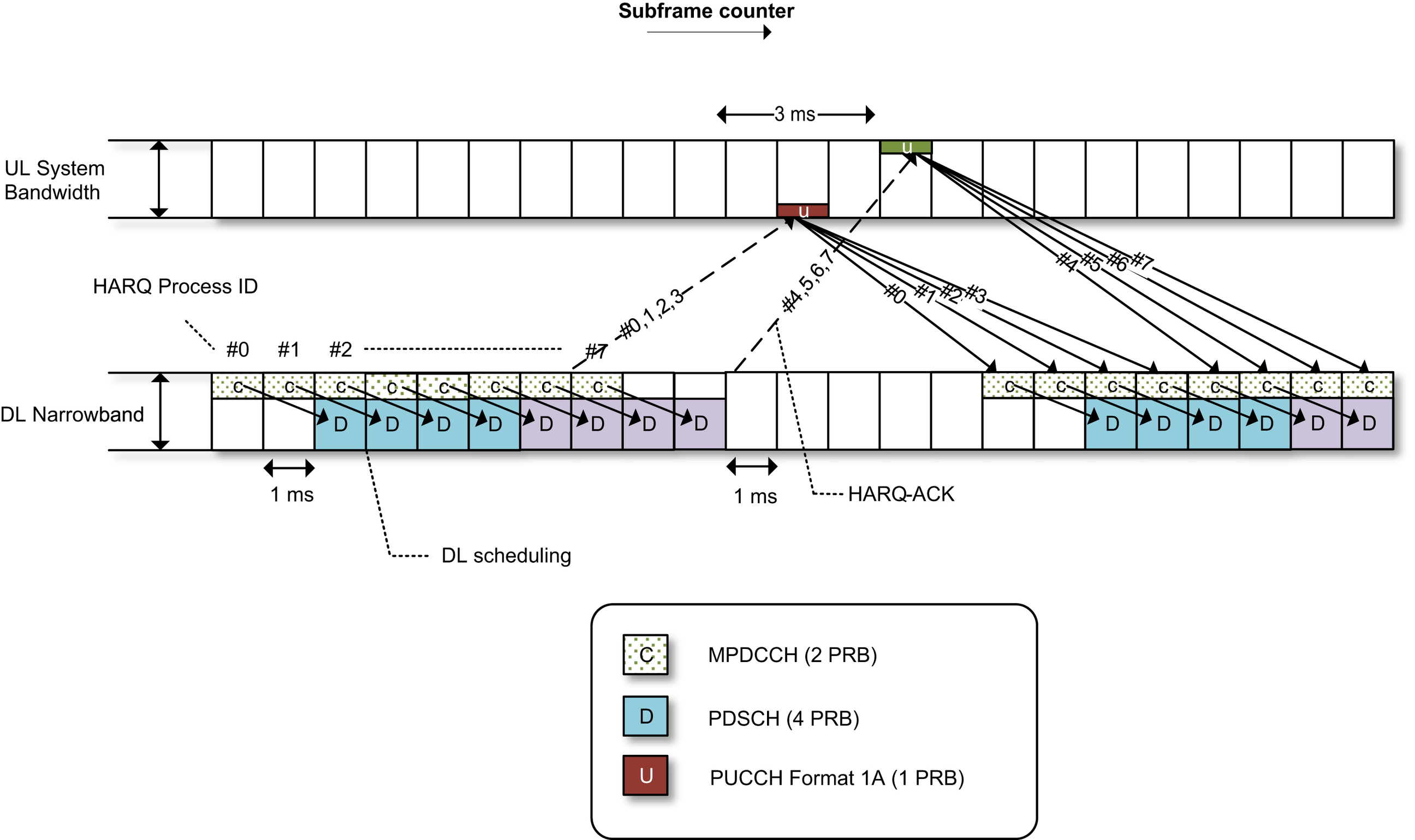

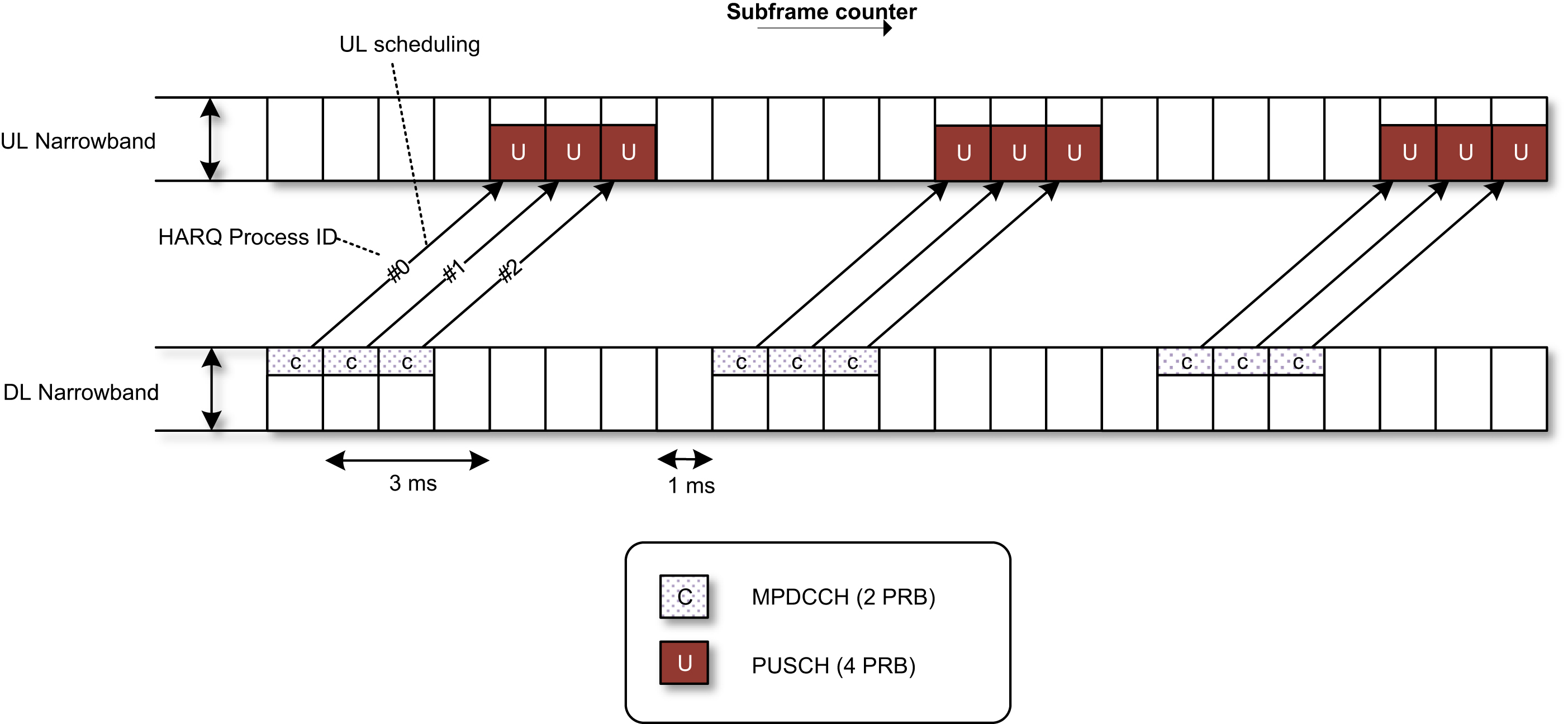

6.3.2. Uplink data rate

(6.3)


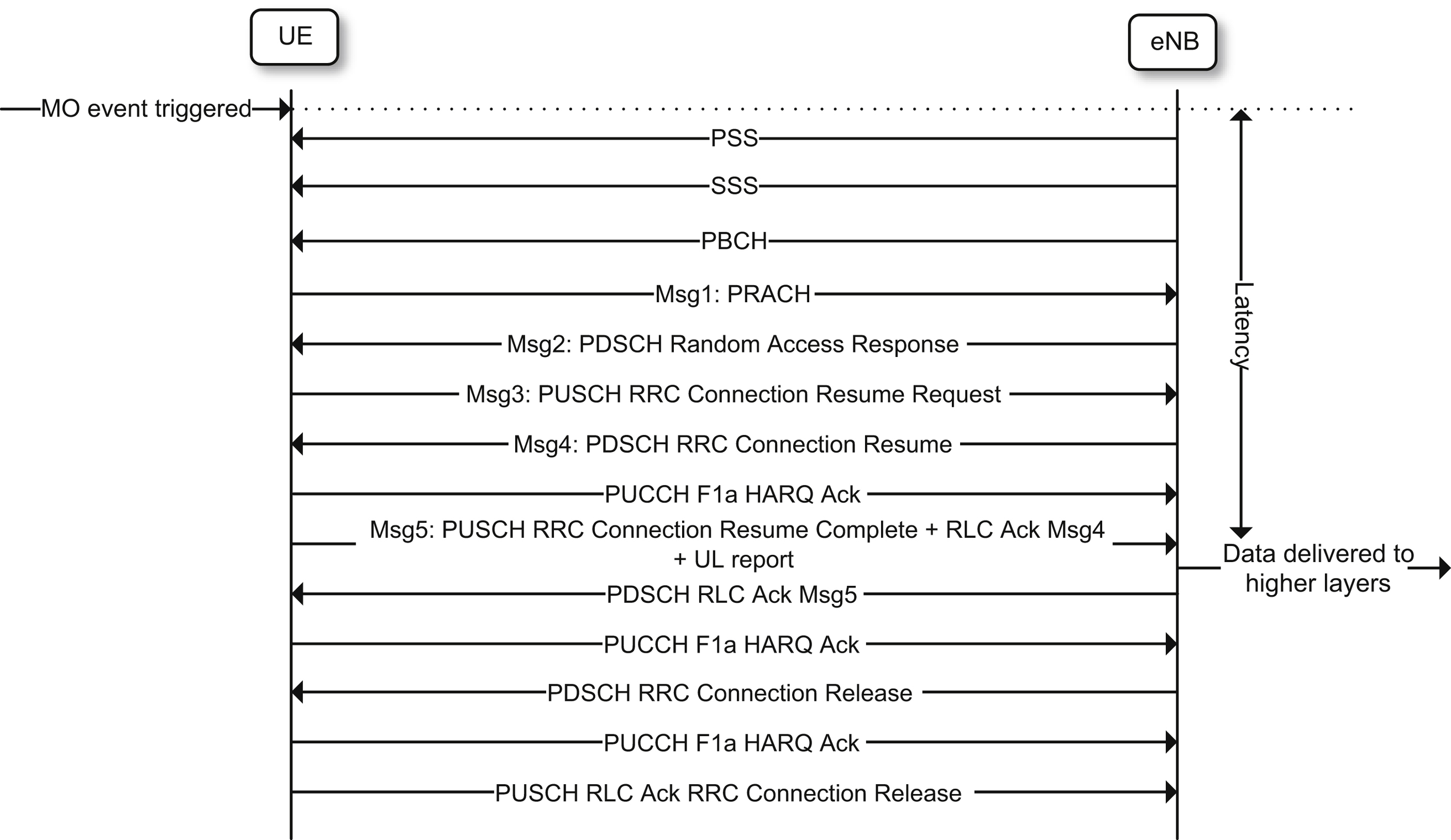

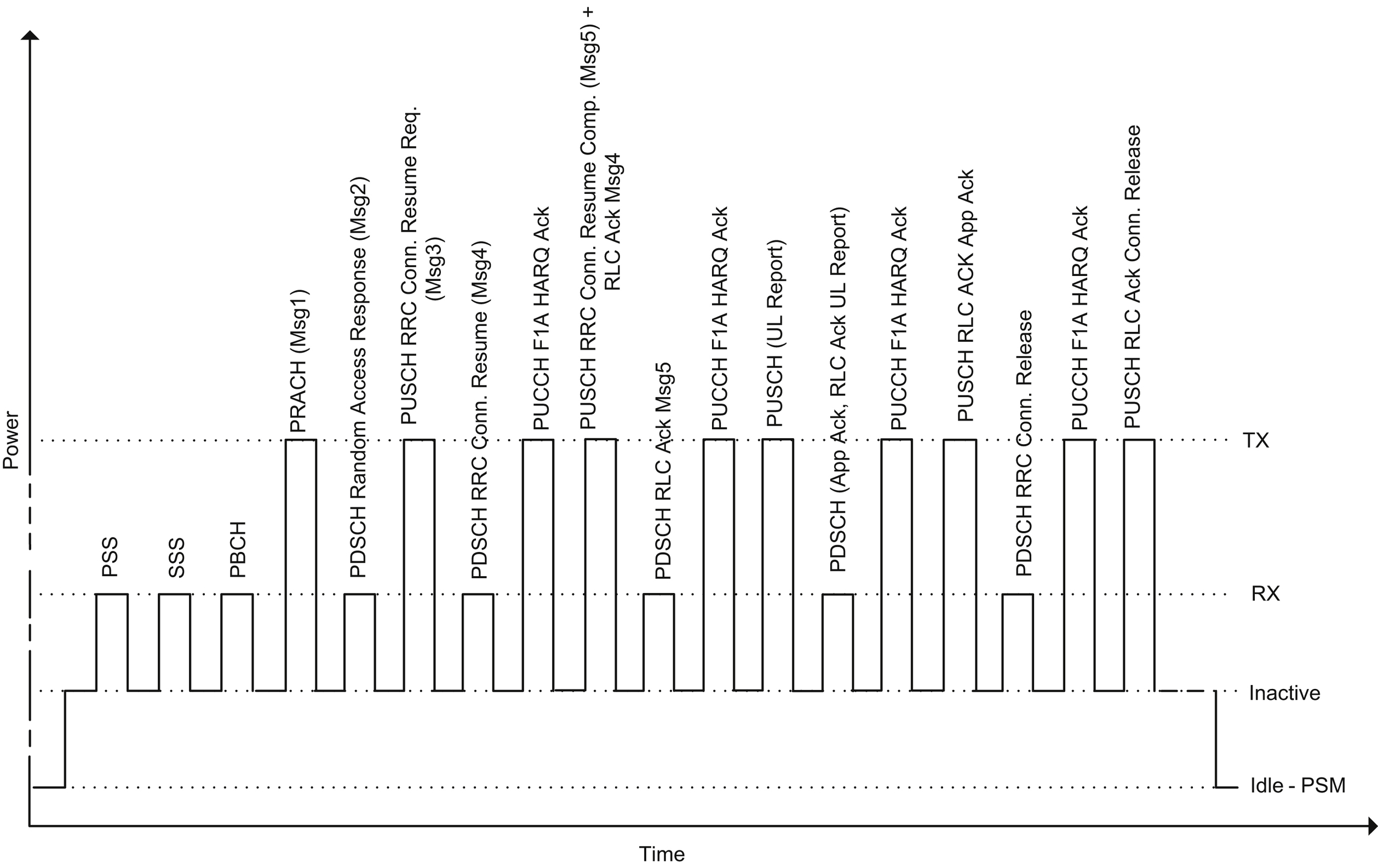

6.4. Latency

(6.4)


- $t_{\mathrm{SSPB}}$: The PSS/SSS synchronization signals and the PBCH master information block can be acquired in a single radio frame, i.e., within 10 ms.

- $t_{\mathrm{PRACH}}$: The LTE-M PRACH is highly configurable, and a realistic assumption is that a PRACH resource is available at least once every 10 ms.

- $t_{\mathrm{RAR,wait}}$: The random access response window starts 3 ms after a PRACH transmission.

- $t_{\mathrm{RAR}}$: The random access response transmission, including the MPDCCH and PDSCH transmission times and the cross-subframe scheduling delays, requires 3 ms.

- $t_{\mathrm{Msg3}}$: The Message 3 transmission may start 6 ms after the RAR and requires a 1-ms transmission time, i.e., 7 ms in total.
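
As a worked example, the component delays listed above can be added up. The sketch assumes that the components are sequential and simply sum, and that each one takes the upper bound quoted in its bullet; whether Equation (6.4) contains further terms cannot be confirmed from the list alone. The dictionary keys are illustrative names for the symbols.

```python
# Upper-bound delay of each component in milliseconds, from the list above.
LATENCY_COMPONENTS_MS = {
    "t_SSPB": 10,      # PSS/SSS and PBCH acquisition within one radio frame
    "t_PRACH": 10,     # wait for the next PRACH resource
    "t_RAR_wait": 3,   # gap before the random access response window opens
    "t_RAR": 3,        # MPDCCH + PDSCH transmission and scheduling delay
    "t_Msg3": 7,       # 6 ms delay after the RAR plus 1 ms transmission
}


def total_latency_ms(components: dict) -> int:
    """Sum the component delays, assuming they occur back to back."""
    return sum(components.values())


print(total_latency_ms(LATENCY_COMPONENTS_MS))  # prints 33
```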

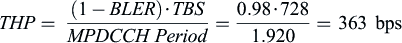

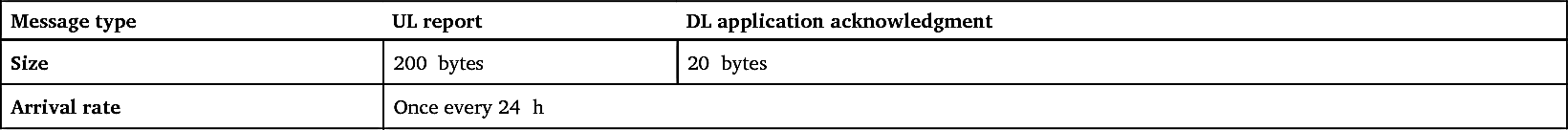

6.5. Battery life

Table 6.7

| Message type | UL report | DL application acknowledgment |
|---|---|---|
| Size | 200 bytes | 20 bytes |
| Arrival rate | Once every 24 h | |

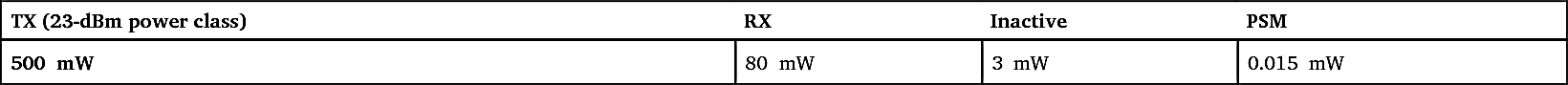

Table 6.8

| TX (23-dBm power class) | RX | Inactive | PSM |
|---|---|---|---|
| 500 mW | 80 mW | 3 mW | 0.015 mW |
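
The power levels in Table 6.8 can be turned into a battery life estimate once the time spent in each state per day is known. The sketch below shows the arithmetic only. The 5 Wh battery capacity and the per-day durations in the usage example are hypothetical placeholders, not values from this chapter, and the model ignores battery self-discharge and leakage.

```python
# Power consumption per state in watts, from Table 6.8.
POWER_W = {
    "tx": 0.500,
    "rx": 0.080,
    "inactive": 0.003,
    "psm": 0.000015,
}

SECONDS_PER_DAY = 24 * 3600


def daily_energy_j(tx_s: float, rx_s: float, inactive_s: float) -> float:
    """Energy used per day; time not in TX/RX/inactive is spent in PSM."""
    psm_s = SECONDS_PER_DAY - (tx_s + rx_s + inactive_s)
    return (POWER_W["tx"] * tx_s
            + POWER_W["rx"] * rx_s
            + POWER_W["inactive"] * inactive_s
            + POWER_W["psm"] * psm_s)


def battery_life_years(battery_wh: float, tx_s: float,
                       rx_s: float, inactive_s: float) -> float:
    """Years until the battery is drained at a constant daily energy use."""
    battery_j = battery_wh * 3600.0
    return battery_j / daily_energy_j(tx_s, rx_s, inactive_s) / 365.0


# Hypothetical inputs: 5 Wh battery, one daily report costing
# 2 s of TX, 5 s of RX, and 20 s in the inactive state.
years = battery_life_years(battery_wh=5.0, tx_s=2.0, rx_s=5.0, inactive_s=20.0)
print(f"Estimated battery life: {years:.1f} years")
```

Because the PSM power is so low, the result is dominated by the transmit time, which grows with the number of repetitions needed at high coupling loss.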

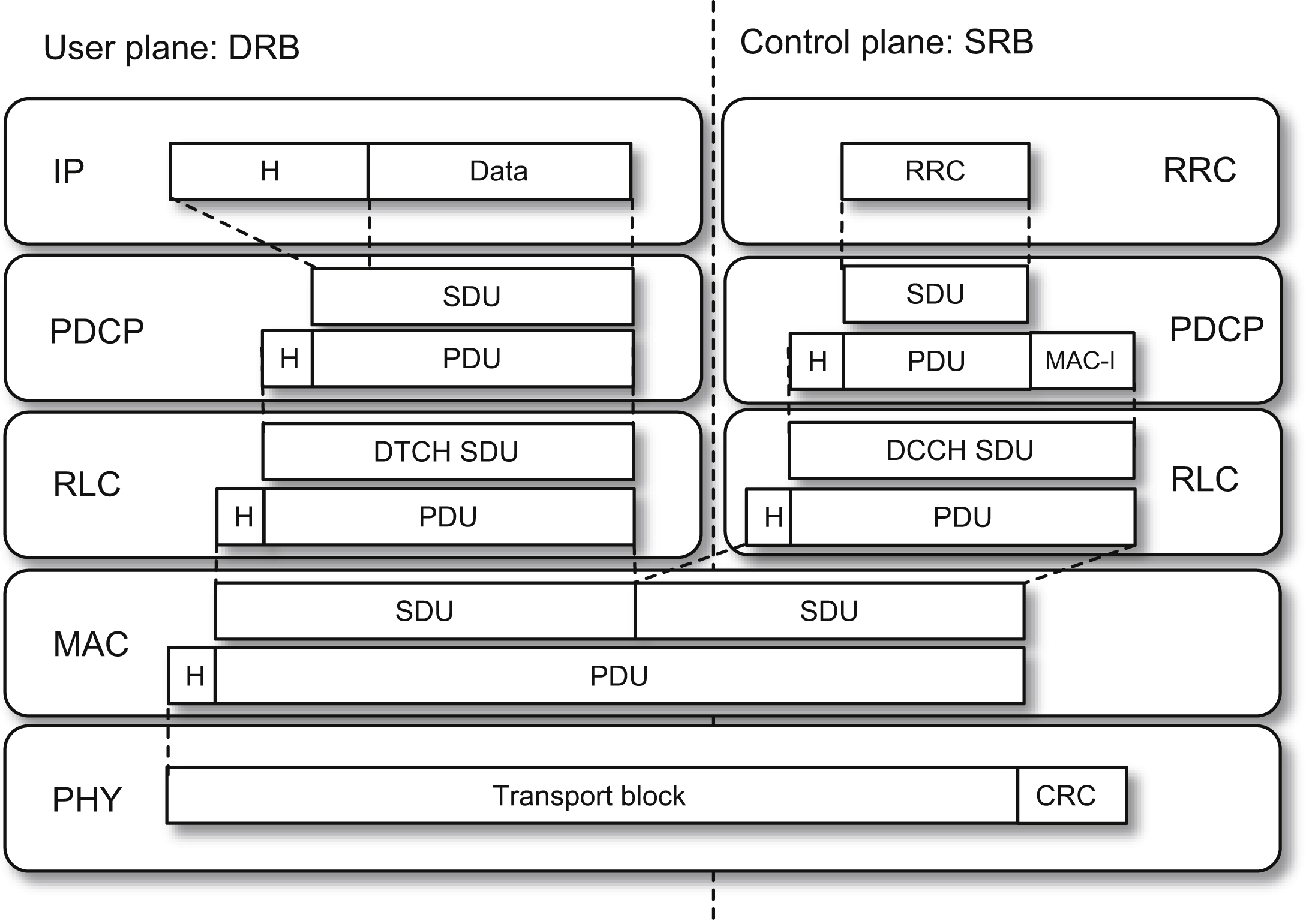

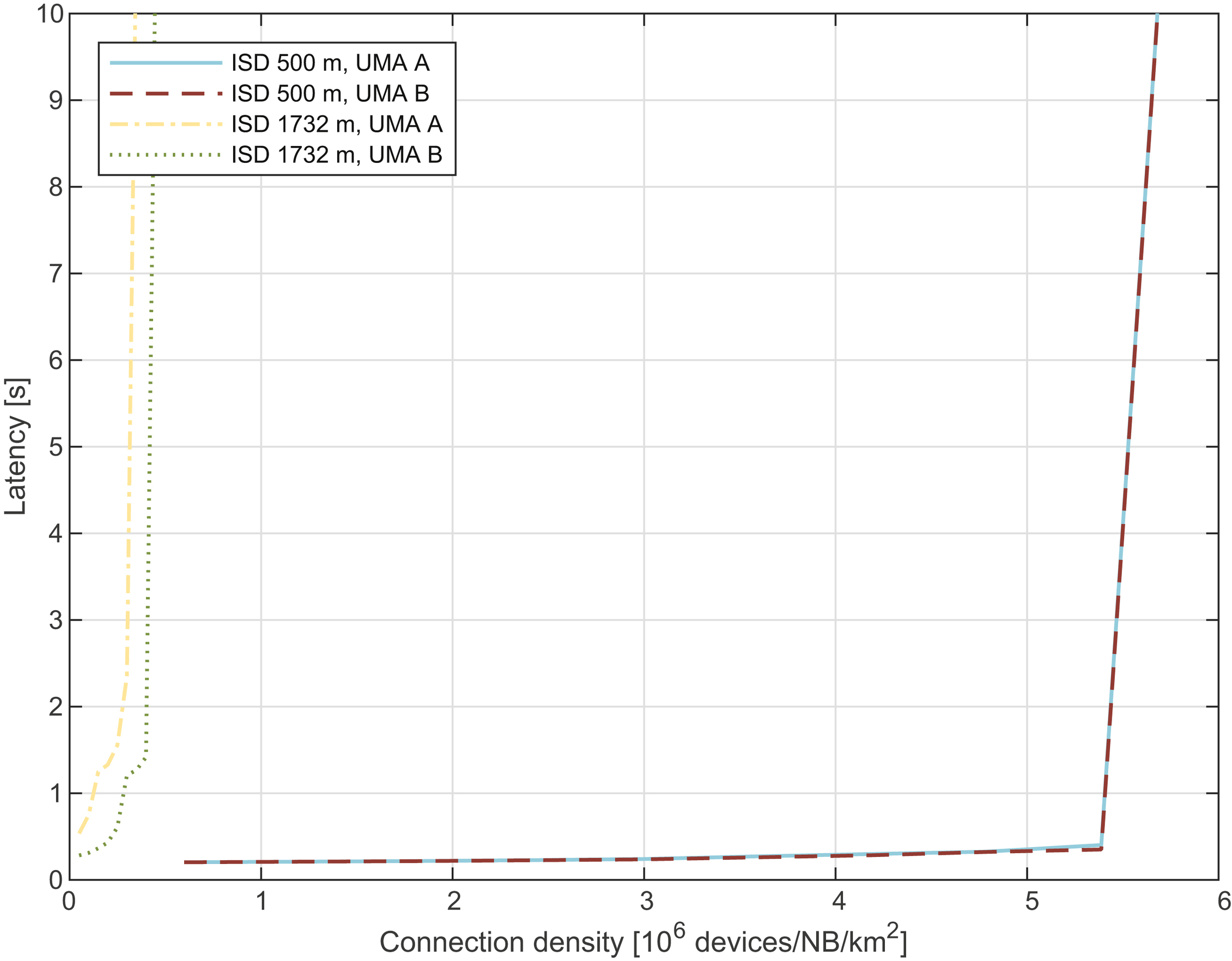

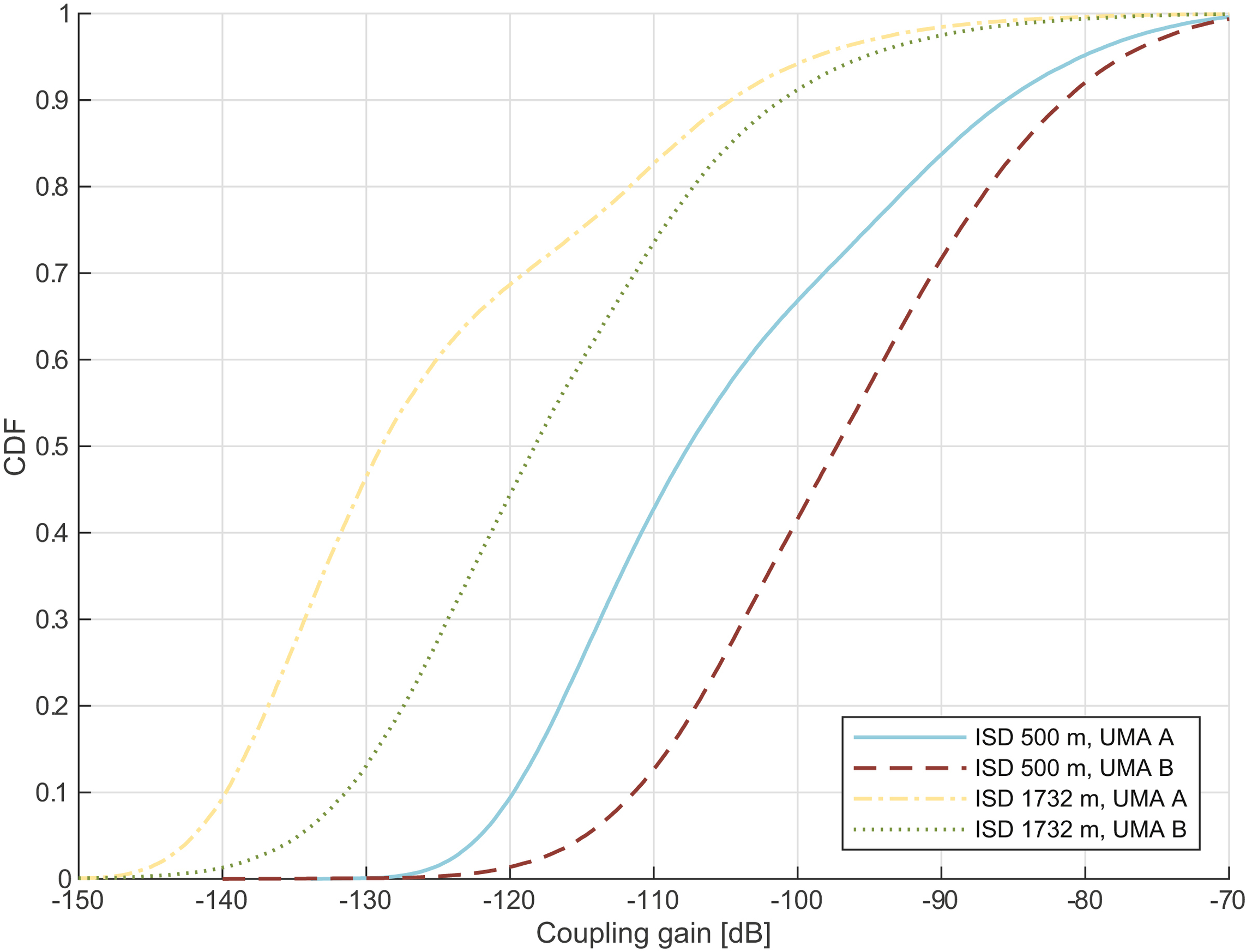

6.6. Capacity

Table 6.9

Table 6.10

| Scenario | Connection density | Resources to support 1,000,000 connections per km² |
|---|---|---|
| ISD 500 m, UMA A | 5,680,000 devices/NB | 1 NB + 2 PRBs |
| ISD 500 m, UMA B | 5,680,000 devices/NB | 1 NB + 2 PRBs |
| ISD 1732 m, UMA A | 342,000 devices/NB | 3 NBs + 2 PRBs |
| ISD 1732 m, UMA B | 445,000 devices/NB | 3 NBs + 2 PRBs |
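
The narrowband counts in the last column of Table 6.10 are consistent with dividing the target of 1,000,000 connections per km² by the per-narrowband connection density and rounding up. The sketch below reproduces that arithmetic. It assumes that "devices/NB" means connections per km² supported per narrowband, and it does not model the additional 2 PRBs listed in the table; the function name is hypothetical.

```python
TARGET_CONNECTIONS_PER_KM2 = 1_000_000

# Connection density per narrowband (NB), from Table 6.10.
CONNECTION_DENSITY_PER_NB = {
    "ISD 500 m, UMA A": 5_680_000,
    "ISD 500 m, UMA B": 5_680_000,
    "ISD 1732 m, UMA A": 342_000,
    "ISD 1732 m, UMA B": 445_000,
}


def narrowbands_needed(target: int, density_per_nb: int) -> int:
    """Smallest whole number of narrowbands whose capacity covers the target."""
    return -(-target // density_per_nb)  # integer ceiling division


for scenario, density in CONNECTION_DENSITY_PER_NB.items():
    count = narrowbands_needed(TARGET_CONNECTIONS_PER_KM2, density)
    print(f"{scenario}: {count} NB")
```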

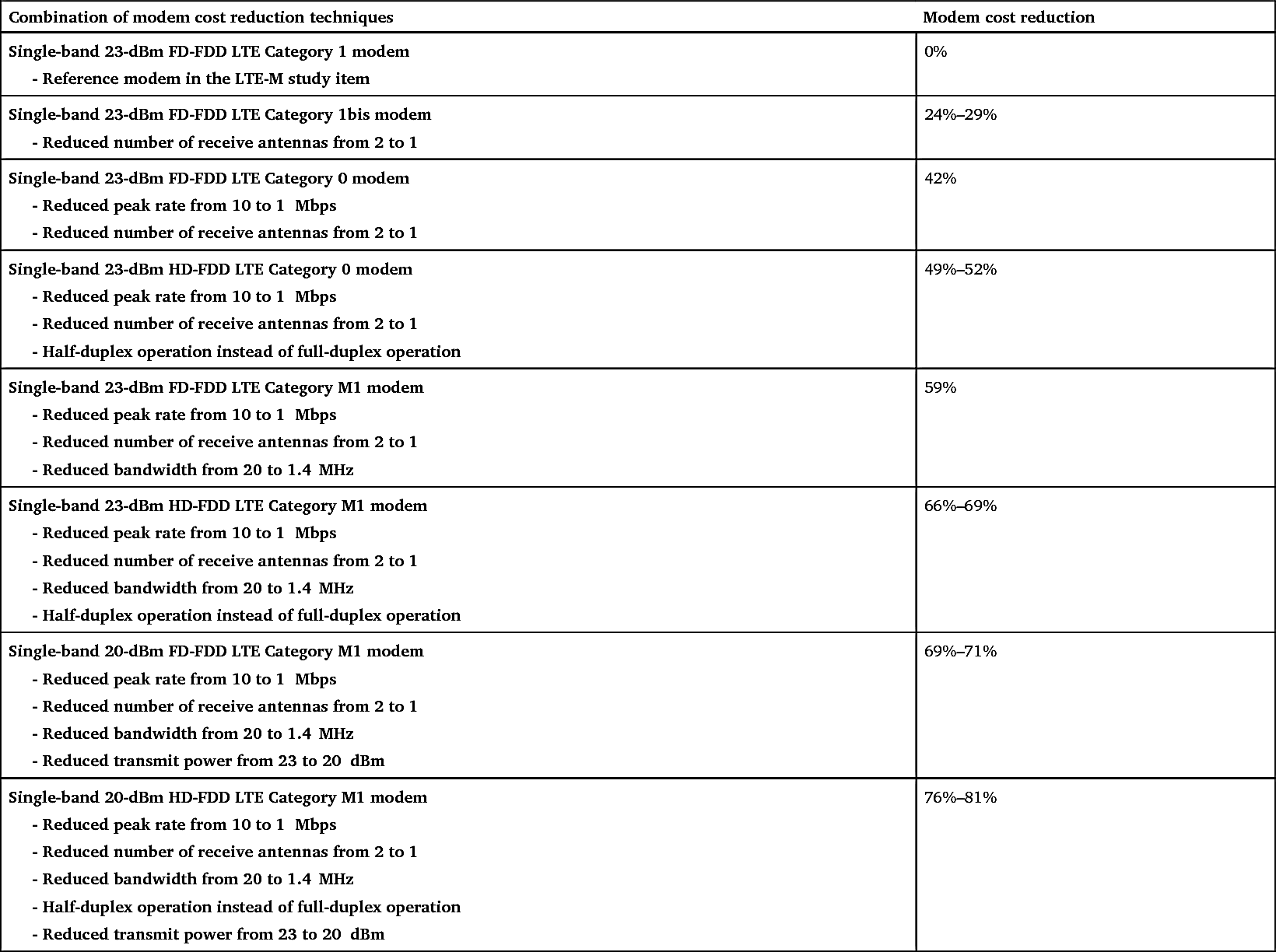

6.7. Device complexity

Table 6.11

Table 6.12

| Combination of modem cost reduction techniques | Modem cost reduction |
|---|---|
| Single-band 23-dBm FD-FDD LTE Category 1 modem: reference modem in the LTE-M study item | 0% |
| Single-band 23-dBm FD-FDD LTE Category 1bis modem: reduced number of receive antennas from 2 to 1 | 24%–29% |
| Single-band 23-dBm FD-FDD LTE Category 0 modem: reduced peak rate from 10 to 1 Mbps; reduced number of receive antennas from 2 to 1 | 42% |
| Single-band 23-dBm HD-FDD LTE Category 0 modem: reduced peak rate from 10 to 1 Mbps; reduced number of receive antennas from 2 to 1; half-duplex operation instead of full-duplex operation | 49%–52% |
| Single-band 23-dBm FD-FDD LTE Category M1 modem: reduced peak rate from 10 to 1 Mbps; reduced number of receive antennas from 2 to 1; reduced bandwidth from 20 to 1.4 MHz | 59% |
| Single-band 23-dBm HD-FDD LTE Category M1 modem: reduced peak rate from 10 to 1 Mbps; reduced number of receive antennas from 2 to 1; reduced bandwidth from 20 to 1.4 MHz; half-duplex operation instead of full-duplex operation | 66%–69% |
| Single-band 20-dBm FD-FDD LTE Category M1 modem: reduced peak rate from 10 to 1 Mbps; reduced number of receive antennas from 2 to 1; reduced bandwidth from 20 to 1.4 MHz; reduced transmit power from 23 to 20 dBm | 69%–71% |
| Single-band 20-dBm HD-FDD LTE Category M1 modem: reduced peak rate from 10 to 1 Mbps; reduced number of receive antennas from 2 to 1; reduced bandwidth from 20 to 1.4 MHz; half-duplex operation instead of full-duplex operation; reduced transmit power from 23 to 20 dBm | 76%–81% |