6.1 Hurst exponent analysis on real traffic

The following section presents the case study of an analysis of the Hurst exponent. It was done both to demonstrate the results of research and to highlight the value of the graphical estimators for time series with large horizons. The three previous presented graphical estimators are used: domain rescaling, time–dispersion diagram, and the periodogram. In order to implement these methods for determining the Hurst parameter, the MATLAB software package was used, which offers the user a powerful command interpreter as well as a series of predefined functions optimized for numerical computations.

The traffic to be analyzed was captured using the tcpdump utility (Brenner 1997). It spanned over approximately 5 h from a busy time interval of the day. The data capture was done between 11:38 and 16:47 on October 12, 2005 on the premises of the AccessNet, Bucharest (Rothenberg 2006). The network architecture comprises around 20 network stations connected to a router. The captured traffic was split into five parts of an hour each. Every sequence contains 36,000 measurements, and every measurement represents the number of packages sent through the network at every 100 ms. Note that, for time series of such a large horizon, the graphical estimators (domain rescaling and dispersion–time diagram) become quite powerful, thus rendering the self-similarity degree quite precise.

The tcpdump was used for capturing packet-level traffic. The utility attaches to the network socket of the Linux kernel and copies each package that arrives at the network interface. More precisely, it allows for the network packages to be logged. This utility was used to obtain for each particular frame

• Time of arrival to kernel

• Source and destination addresses and port numbers

• Frame size (only user data are reported; in order to obtain real frame sizes, the header of each protocol should be added)

• Type of traffic (tcp, udp, icmp, etc.)

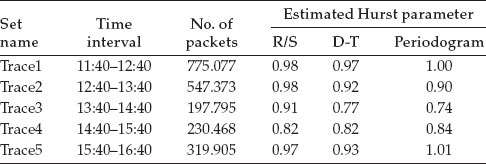

Table 6.1 The Hurst parameter estimated with the three estimator types

The time of arrival is practically the time when the kernel sees the packages and not the time of arrival at the network interface.

Note that for the first and last sets, the estimated Hurst parameter is close to 1, thus highlighting the strong self-similarity of the network traffic. For the other three sets, the estimated parameter is smaller, but different from 0.5. Judging by the time interval and the number of packets, one can conclude that the self-similarity increases when network usage is higher. In the lunch break (12–14 h) it is expected for the human activity to decrease, thus decreasing the traffic self-similarity (Table 6.1).

6.1.2 Graphical estimators representation

Hurst parameter estimation was performed only for time series composed of the number of packets that arrive in the 100 ms time interval. This chapter does not take into account the case of time series formed of the number of bytes transferred in the time unit. Anyway, estimation methods are identical in both cases.

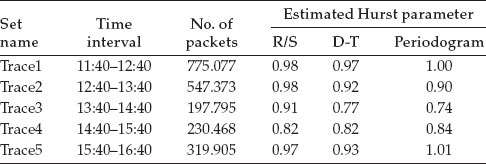

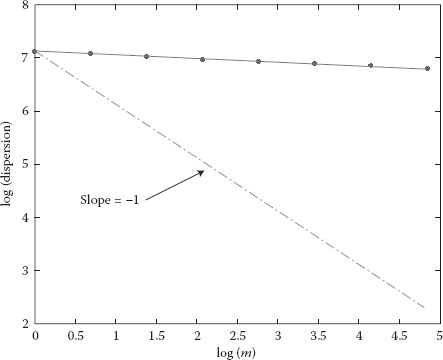

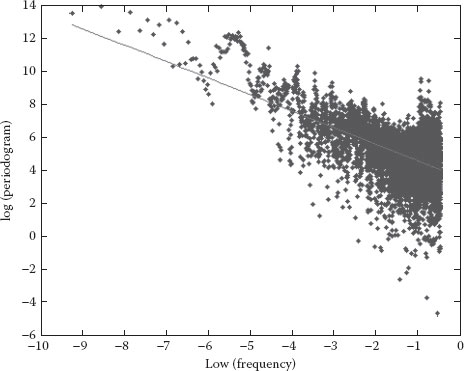

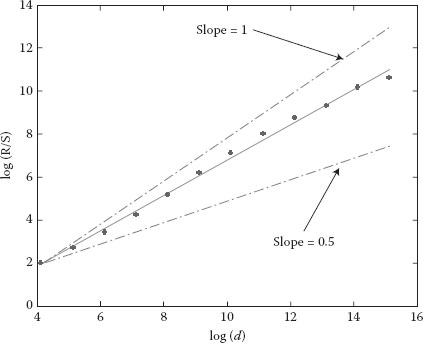

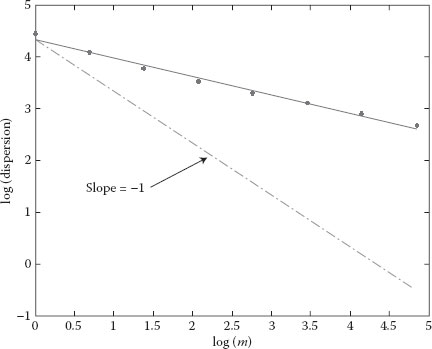

Graphical estimator was used for graphical representation, that is, domain rescaling, dispersion–time diagram, and periodogram. Only six diagrams are provided (Figures 6.1 through 6.6), three for Trace1 and another three for Trace4. Each diagram is represented on a fully logarithmic scale (both axes) and possesses a sequence of reference slopes. Thus, slopes corresponding to 0.5 and 1 values for the Hurst parameter have been drawn on the rescaled domain diagram. On the dispersion–time diagram, a line with −1 slope has been drawn, corresponding to a Hurst parameter of 0.5. The periodogram has no additional lines.

6.2 Inferring statistical characteristics as an indirect measure of the quality of service

6.2.1 Defining an inference model

In order to create a network model of a distributed environment such as the Internet, it is necessary to integrate the data collected from several nodes. Thus, a complete image of the network is obtained for the traffic visualization level. For network management, it is essential to know the dynamic traffic characteristics, for example, for error detection (anomalies, congestion), for monitoring QoS or for selecting servers. In spite of all these, because of the very large size and of the access rights, it is costly and sometimes impossible to measure such characteristics directly. In order to tackle this problem, several methods and instruments can be used for inferring the unseen performance characteristics of the network. In other words, it is necessary to identify and develop indirect traffic measuring techniques, with estimations based on direct measurements in accessible points, in order to define the global behavior in the Internet. Because of the similarities between the inference characteristics of a network and those of a medical tomography, the concept of network tomography was conceived. The method implies the usage of a statistic inference scheme for the unseen variables based on the simultaneous measurement of other, more easy to observe, characteristics from another (sub)network. Thus, the network tomography can be seen as an inverse statistic problem that includes two types of inference: (1) inference of the internal network characteristics based on an end-to-end way (Bestavros et al. 2000) and (2) inference of the aggregated internal flows on an end-to-end way (Cao et al. 2000).

Figure 6.1 Rescaled domain diagram (R/S) for the set Trace1.

Figure 6.2 Dispersion–time diagram for the set Trace1.

Figure 6.3 Periodogram for the set Trace1.

Figure 6.4 Rescaled domain diagram (R/S) for the set Trace4.

Figure 6.5 Dispersion–time diagram for the set Trace4.

Figure 6.6 Periodogram for the set Trace4.

Without reducing the general level of the problem, the information network examples to be provided will refer to the Internet. The fractal nature of the Internet traffic has been determined through several measurements and statistic studies. This work presents and analyses a stochastic mono-fractal process defined as a limit of the integrated superposition of diffusion process with a differential and linear generator, which indeed is a characteristic of the fractal nature of the network traffic. Technically, the Internet is centered on the IP that supports multiple applications, whose characteristics are mixed with the resources of the network, that is, the network management packets are sent on the same channels as the data packets (there is no separate signaling network). Additionally, there are also flow control packets of the transport protocol (TCP). Thus, traffic analysis is a complex process that must satisfy the following minimal requirement:

• User traffic request evaluation, especially when having different service classes

• Network resource sizing: router processing capacity, datalink transmission flow, and interface buffer sizing

• Checking the QoS offered by the network: packet loss rate, end-toend packet transfer delay for real-time applications, and defining the useful transport flow

• Testing the fitness level of performance models designed through analytical calculations or through simulation.

Network traffic measurements contain two categories: passive and active. For the majority of the following examples, the samples are obtained from passive probes, specifically adapted to provide traffic descriptive parameters.

The most common procedure for inferring traffic characteristics is the one using point to point links, based on the data collected from several routers via SNMP (Sikdar and Vastola 2001). Nevertheless, measuring static characteristics of aggregated flows still represents a problem open for research. The network traffic presents correlations between behaviors at different moments and different places, for various motives. It is already established that in the Internet one can observe daily self-similar patterns. Previous studies also demonstrated self-similarities that depend on the place of implementation (Wolman et al. 1999), nature of services (Lan and Heidemann 2001), and type of transiting data (Floyd and Paxson 2001).

The present approach is based on a dual modeling structure proposed in Lan and Heidemann (2002). In its simplest form, it is assumed that the network traffic (T) can be modeled as a function of three parameter sets: number of users (N), user behavior (U), and application-specific parameters (A). In other words, T = f (N, U, A). User behavior parameters can be packet arrival distribution probability or the number of requested pages, while application-specific parameters can be object size distributions or the number of objects in a Web page. Experimental results show that behavior parameter distributions (U) display a correlation trend in the same network. This statement suggests that the user behavior parameter distributions at time t2 can be modeled based on the ones at time t1 on the same network. In addition, it is already demonstrated that the application-specific parameter distributions (A) of two networks with similar user populations are susceptible of being correlated. More precisely, it is observed that the application-specific parameter distributions of two similar networks tend to be correlated when the traffic is aggregated on a higher level. On a lower traffic level, the application-specific parameter distributions are still correlated but only in their cores. Because a higher level of aggregate traffic can be generated either by a greater number of users or by a longer measurement horizon, we can conclude that similar user populations tend to have similar behaviors in similar applications. On the contrary, for a lower aggregation level, it is more probable for some “dominant” flows to cause large variations in the distributions. Moreover, the traffic in similar networks has the same distribution model every day regarding the number of active users. In other words, the number of active users is also correlated for two similar networks in different moments of the day. The last two statements suggest that, by using a spatial correlation between two similar networks, the application-specific parameter distributions and the number of active users can be inferred based on the measurements performed on the other network.

Starting from the previous statements, the authors propose the following approaches for inferring the traffic in network n1 based on the measurements of n2, considering the two networks n1 and n2 as having similar user populations:

• The first step is to collect the traffic from both networks n1 and n2 over a certain period. Based on these initial measurements, the three traffic parameter sets can be established (N, U, A) for n1 and n2. Afterward, the derived statistics can be compared in order to determine if there exists a spatial correlation between n1 and n2.

• Once the similarity between n1 and n2 has been confirmed and the spatial correlations between them have been calculated, we can establish a model for each of the three traffic parameter sets (N, U, A) for n1 at a given future time based only on measurements done on n2. In particular, the number of active users (N) and the application-specific parameter distributions (A) of network n1 can be inferred based on the measurements performed on network n2 in the same time interval, while the application-specific parameter distributions (U) from n1 can be inferred using the statistics derived from the initial measurements (done at step 1). Note that the first step must be performed only once.

Figure 6.7 illustrates this concept: the colored squares indicate the collected data, while in the striped squares have the inferred data. Based on the initial measurement from time t1 that confirms the similarity between n1 and n2, further measurement in n2 can be used to predict the traffic in n1 at times t2 and t3.

6.2.2 Highlighting network similarity

Intuitively, two networks are “similar” if they have user populations with similar characteristics. For example, the traffic generated in two laboratories of the Faculty of Automatic Control and Computer Science may be similar because the students display common behavior when using the applications. In other words, it can be considered that two networks are similar when there are correlations at the application level in the traffic. However, a formal procedure is needed in order to accomplish traffic parameters inference. First of all, the two networks will be checked for traffic similarities. Then, the distributions for user behavior and application-specific parameter will be calculated. Lastly, the statistical data of the traffic from both networks will be compared both qualitatively and quantitatively, in order to establish if the two are similar.

Figure 6.7 Traffic inference using network similarity.

In order to compare the traffic quantitatively, the graphic of the cumulative distribution function (CDF) is analyzed for the distributions derived from the two networks. For a quantitative comparison, the two distributions are normalized. Three types of tests have been used in order to determine if the two distributions are significantly different regarding the average, variance, and shape. Practically, the Student t-test was used for comparing average, the F test for evaluating variance, and the Kolmogorov–Smirnov test for testing the shape. It is considered that the two distributions are strictly similar if all the three tests output a 95% trust level. Note that all the mentioned tests are exact, and thus hard to apply. In order to relax the testing procedure, approximate similarity is given by the function s that results as a linear combination of the average, variance, and shape differences: s = w1 ⋅ m + w2 ⋅ v + w3 ⋅ D,

where

and

In the previous formulas, μ denotes the average, v denotes the data variance, and D is the value of the Kolmogorov–Smirnov test that represents the largest absolute value between the two data sets. N1 and N2 are data samples taken from the two networks, while w1, w2, and w3 are weights with positive values that allow the user to prioritize certain metrics. The possible values for m, V, and D are between 0 and 1. Intuitively, the two networks are more similar if the value for the function s is closer to 0.

It is clear that the application-specific parameter distributions of two networks are more susceptible of being correlated when the traffic is more intensely aggregated. In spite of this, one can observe that for a lower traffic aggregation level, there are some dominant flows that can cause large variations in the distributions tail. Such variations suggest the necessity of separately modeling the kernel and the tail, especially for traffic distributions with long distribution tails. While these dominant flows account for only a small number of the Internet flows, they consume a significant percent of bandwidth. In order to formally describe the aggregation level (G), it is defined as the product of the quantity of traffic generated by the sources (S) and the length of the measuring period (T), G = S × T. Moreover, S can be described as a function of the number of users and the traffic volume generated by each user. Such a definition implies that the effect of dominant flows in the traffic tail can be reduced, if the user population is larger or if the data is collected over a longer time interval. Network similarity suggests the possibility of inferring traffic data in places where direct data acquisition is otherwise impossible. In other words, user behavior parameter distributions tend to be correlated in the same way as the application-specific parameter distributions defined denoted T = f (N, U, A). By using such a correlation, the behavior parameter distribution can be modeled at t2 based on measurement done at t1 in the same network . The inference procedure obtained from Figure 6.7 can be refined as follows:

• The first step is to collect data traffic from both networks n1 and n2 for a certain period of time (from t0). The three parameter sets (number of active users (N), user behavior parameter distributions (U), and application-specific parameter distributions (A)) can be calculated from traffic traces.

• The similarity between n1 and n2 suggests that it is highly probable for the number of users from network n1 to be proportional to the number of users from n2 at any moment (Nn1 = α × Nn2 with α being a constant). By using traces collected at time t0, the scale factor α can be calculated. Moreover, the similarity between the networks n1 and n2 implies the existence of some functions g1, g2, etc., named correlation functions, so that and . After determining from the initial measurements of the spatial correlations between n1 and n2, the traffic from network n1 can be predicted using only measurements from network n2.

• Lastly, if the user behavior parameters are time correlated, they can be calculated for every moment in time in network n1 based on the statistical data calculated initially at moment t0. Now the relationship can be written as: .

6.2.3 Case study: Interdomain characteristic interference

The methodology described earlier was illustrated in a case study that wants to determine by inference the QoS inside the domain based on the measurements done at the end of one communication way. The network tomography technique is based on the assumption that end-to-end QoS time series data, denoted as Qe–e are observable characteristics on every end in the domain. Additional to the Qe–e there can be spatial QoS data series denoted Qspi, i = 1,…, n that are measured on the same route in the interdomain and can be linked to Qe–e for certain time intervals that are of interest for the estimation. The problem of determining the intermediate unobservable characteristics from the interdomain (hidden network) is thus formulated as follows: given time series Qe–e and space series Qspi—the hidden space data series can be calculated.

The scenario for a network tomography is as follows (Figure 6.8):

1. A trust interval (μ1 − μ2) is chosen, which represents the difference between the two averages and contains all the values that will not be rejected in neither one of the two test hypothesis: H0: μ1 − μ2 = 0 and H1: μ1 − μ2 ≠ 0.

2. The measured values that contributed to estimating the average are adjusted, in case they are quasi-independent (correlated, the self-correlation function, ACF(i) ≪ 1 for any i > 0).

3. For normal or highly correlated distributions, the measured values are kept.

The experiments have been done on an emulation environment requiring only one PC running a Linux operating system configured as a router to create a wide variety of networks and also critical end-to-end performance conditions for different voice over IP (VoIP) traffic classes. Because there was no additional traffic, maximum and minimum values of the average are almost identical. Time series estimated for the interdomain are given in Figure 6.9.

In case the load of the network is high, spatial QoS values can be ignored, while estimating the interdomain delay with the help of the model that reflects such a delay based on an end-to-end pattern. The estimated end-to-end delay is presented in Figure 6.10.

Figure 6.8 Block diagram of the network tomography.

Figure 6.9 Interdomain delay estimation.

Figure 6.10 “Hidden” delay estimation in high traffic environments.

6.3 Modeling nonlinear phenomena in complex networks and detecting traffic anomalies

The potential of fractal analysis for evaluating traffic characteristics, especially detecting traffic anomalies, is demonstrated in this chapter by simulation in a virtual environment. To this end, some original methods are proposed: an Internet traffic model based on graphs of scale-free networks, one method for determining self-similarity in generated traffic, a method that compares the behavior of two subnetworks and a powerful experimental testing method (using a parallel computing platform) running the pdns (parallel/distributed network simulator) on different subnetworks with a scale-free topology.

While the number of nodes, protocols, used ports, and new applications (e.g., Internet multimedia applications) keep increasing, it is more evident for the network administrators that there are not enough instruments for measuring and monitoring traffic and topology in high-speed networks. Even the simulation of such networks is becoming a challenge. Presently, there is no instrument to precisely simulate the Internet traffic.

There are two type of network monitoring: active and passive. During active monitoring, the routers of a domain are polled periodically in order to collect statistics about the general state of the system. This intense data volume is stored so that to extract useful information for monitoring. In the case of passive monitoring, the network is analyzed only on its contour edges. The parameters are determined by applying mathematical formulas on the set of collected data. In this case, the major problems are related to storing and processing the traffic that passes through the nodes on the contour.

Numerous network management applications have traffic processing methods for packets or bytes, differentiate according to the header fields in classes that depend on the application requirements, such as service development, security applications, system engineering, consumer billing, etc.

While for active measuring it is necessary to inject probes in the network traffic and then to extrapolate the network performance based on the performance of the injected traffic, the passive systems are based on observing and logging the network traffic. One characteristic of passive measuring systems is that they generate a large volume of measured data, obtained through inference techniques that exploit traffic self-similarity.

The network tomography (notion coined in (Vardi 1996)) tackles inference problems. It comprises of statistic inference schemes of unobservable characteristics from a network by simultaneously measuring other observable ones. The network tomography includes two typical forms: (1) inference of internal network characteristics based on end-to-end measurements (Coates and Nowak 2001; Pfeiffenberger et al. 2003) and (2) inference of the traffic behavior on the end-to-end path based on aggregated internal flows such as traffic intensity estimation at sender–receiver level based on analyzing link-level traffic measurements (Cao et al. 2000; Coates et al. 2002).

Fractal analysis seems to be suitable for both methods: direct and indirect measurement of network characteristics and anomalies. Previous work (Dobrescu et al. 2004a) is focalized on using fractal techniques in video traffic analysis. In this framework, two analytical methods have been used: self-similarity (Dobrescu et al. 2004b) and multifractal analysis (Dobrescu and Rothenberg 2005a).

The goal of this chapter is to demonstrate, through virtual environment simulations, the potential of fractal analysis techniques for measuring, analyzing, and synthesizing network information in order to determine the normal network behavior, to be used later for detecting traffic anomalies. To this end, the authors propose a model of Internet traffic based on graphs of scale-free networks where one can apply an inference based on self-similarity and fractal characteristics. This model allows for splitting a large network in several networks with quasi-similar behavior and then for applying fractal analysis for detecting traffic anomalies in the network.

The current state of rapid development of advanced technology has led to the emergence and spread of ubiquitous networks and packet data, which gradually began to force the system switching network and at the same time increase the relevance of the traffic self-similarity. The fractal analysis can solve several problems, such as the calculation of the burst for a given flow, the development of algorithms and mechanisms providing quality of service in terms of self-similar traffic and the determination of parameters and indicators of quality of information distribution.

6.3.2 Self-similarity characteristic of the informational traffic in networks

6.3.2.1 SS: Self-similar processes

Mandelbrot introduces an analogy between self-similar and fractal processes (Mandelbrot and Van Ness 1968). For incremental processes, Xs,t = Xt − Xs, the self-similarity is defined by the equation:

Mandelbrot builds its own self-similar process (fBm—fractional Brownian movement) starting from two properties of the Brownian movement (Bm): it has independent increments and it is self-similar with a Hurst parameter H = 0.5.

Denoting Bm as B(t) and fBm as BH(t), below is given a simplified version of the Mandelbrot fBm:

A self-similar process is one with long-range dependence (LRD) if α ∈ (0,1) and C > 0 exist so that:

where ρ(k) is the kth order self-correlation.

When presented in logarithmic coordinates, Equation 6.3 is called the correlogram of the process and has an asymptote at –α. Note that self-similar processes exist that are not LRD, while LRD processes also exist that are not self-similar. Nevertheless, fBm with H > 0,5 is both self-similar and LRD.

In a paper from 1993, Leland et al. report discovering the self-similar nature of local-area network (LAN) traffic in general, and of Ethernet traffic, to be more precise. Note that all methods used in (Leland et al. 1993) (and in numerous previous works) detect and estimate LRD rather than self-similarity. The only proof given by self-similarity is the visual inspection of the data series at different time scales. Since then, self-similarity (LRD) has been reported for different kinds of traffic: LAN, WAN, variable byte rate video, SS7, HTTP, etc.

The lack of access in high-speed, highly aggregated links, as well as the lack of devices capable of measuring traffic parameters have hindered until now the study of Internet informational traffic. In principle, in this case, the traffic can differ quantitatively from the types enumerated earlier, because of factors like: too high aggregation level, end-to-end traffic policing and shaping, and a RTT (round trip time) too large for TCP sessions. Currently, some researchers stated that the Internet traffic aggregation causes a convergence toward a Poisson limit. For reasons previously presented and based on several remarks on a rather short timescale, the authors do not agree on the following: on a short timescale, the effects of network transport protocols are believed to dominate the traffic correlations; and on a long timescale, nonstationary effects such as daily load graphs become significant.

6.3.2.1.1 Self-similarity in network operation In almost all fields of network communications, it is important that the traffic characteristics are understood. In a packet exchange environment, there are no reserved resources for a connection, which would allow for the packets to reach a node with a higher rate than the processing one.

Although, in general, there can be many possible operations, they are split in just two classes: serialized (sending bits outside the link) and internal processing (all the processing is done inside the node: classifying, routing tables parsing, etc). The serialization delays are determined by the speed of the output line. Internal processing delays are random, but data structures and advanced algorithms are employed in order to obtain deterministic performances. As a consequence, the truly random part of the delay is the time a packet spends waiting. The internal processing of a node is relatively well known (through analysis or direct measurements). In contrast, incoming traffic flows are neither fully known nor controllable. Thus, it is of great importance to accurately model these flows and to predict the impact they may have upon the network performance.

It is already demonstrated that a high variability associated with LRD or SS processes can strongly deteriorate network performance, generating queue delays higher than the ones predicted with traditional models. Figure 6.11 illustrates this aspect. The average length of a queue obtained through queue simulation is very different from the one obtained through a simulation model (M/D/1).

In the case of the simple node model, the “D” (determinist) and “1” (no parallel service) hypotheses are feasible, so we would like to identify “M” (Markovian, Poisson exponential process) as one discrepancy cause. In the following section, the aim is to demonstrate that there is a “fBm/s/1” queue distribution model.

6.3.2.1.2 Fractal analysis of Internet traffic When the measurements have fractal properties then this characteristic is expressed through some observations. Self-similarity is a statistic property. Suppose there are (very long) data series, with finite average and variance (covariance of the stationary statistic processes). Self-similarity implies a fractal behavior: it retains its aspect over several scales.

The main characteristics, deduced from the self-similarity, are long-range dependency (LRD), slowly decreasing dispersion. The dispersion–time graph shows a slow decrease. Moreover, the self-correlation function for aggregate processes cannot be identified against the original processes.

Consider that there is available a set of data expressed as a time series. In order to find long-range dependencies, data obtained from the simulation model must be evaluated on different timescales. The same number of cells sample versus time must be cut in lags. Cuts should be performed several times, varying, in the meantime, the number and the cut length. The length of n lags falls in the scope future observations as well as the number of observations in a lag. For each n, a number of lags is randomly selected. This lag number must be identical for all values of n. For the selected lags, two parameters are calculated:

Figure 6.11 Representation limit for simulating a queue with a classical model.

• that is the lag sample range

• S(n) – dispersion of {X1 + X2 + … + Xn} of a lag

For the short-range dependence sets, the expected value for E[R(n)/S(n)] is approximately equal to c0n1/2. On the contrary, for the sets with long-range dependency, E[R(n)/S(n)] is approximately c0nH with 0,5 < H < 1. H is the Hurst parameter and c0 is a constant of relative minor importance.

Hurst designed a normalized, dimensionless measure for characterizing variability, named the rescaled domain (R/S). For an observation data set X = {Xn, n ∈ Z+} with a sample average , sample dispersion S2(n), and domain R(n), the adjusted rescaled domain or the R/S statistics are given by

where

For many natural phenomena, E[R(n)/S(n)] ~ cnH when n → ∞, c being a positive constant, independent of n. By introducing logarithm on both sides, the result is log{E[R(n)/S(n)]} ~ H log(n) + log(c) for n → ∞. Thus, the value of H can be estimated by graphically representing log{E[R(n)/S(n)]} as a function of log(n) (POX diagram), and approximating the resulted points with a straight line of slope H.

The R/S method is not precise; it does only estimate the self-similarity level of a time series.

Figure 6.12 contains an example of a POX diagram. H is the Hurst parameter. If there is no fractal behavior, then H is close to 0.5, while a value higher than 0.5 suggests self-similarity and fractal character.

6.3.3 Using similarity in network management

6.3.3.1 Related work on anomalies detection methods

One of the main goals of fractal traffic analysis is to show the potential to apply signal processing techniques to the problem of network anomaly detection. Application of such techniques will provide better insight for improving existing detection tools as well as provide benchmarks to the detection schemes employed by these tools. Rigorous statistical data analysis makes it possible to quantify network behavior and, therefore, more accurately describe network anomalies. In this context, network behavior analysis (NBA) methods are necessary. Information about a single flow does not usually provide sufficient information to determinate its maliciousness. These methods have to evaluate the flow in the context of the flow set. There are several different approaches that can be classified into groups depending on the field they utilize for the detection.

Figure 6.12 POX diagram example.

6.3.3.1.1 Statistical-based techniques These techniques are widely used for network detection. Flow data represent the behavior of the network by statistical data, like a traffic rate, packet, and flow count for different protocols and ports. Statistical methods typically operate on the basis of a model and keep one traffic profile representing the normal or learned network traffic and a second one representing the current traffic. The anomaly detection is based on comparison of the current profile to the learned one, significant deviation of these two profiles means network anomaly and a possible attack (Garcia-Teodoro et al. 2009).

6.3.3.1.2 Knowledge-based techniques This category includes so-called expert system approach. Expert systems classify the network data according to a set of rules, which are deduced based on the training data. Specification-based anomaly methods use a model, which is manually constructed by a human expert, in terms of a set of rules (the specifications) that determine the normal system behavior.

6.3.3.1.3 Machine learning-based techniques Several tools can be included in this category, the most representative being the following.

Bayesian networks, which are probabilistic graphical models that represent a set of random variables and their conditional dependencies in a form of directed acyclic graph. The Bayesian network learns the casual relations between attributes and class labels from the training data set before it can classify unknown data.

Markov models include two different approaches: Markov chains and hidden Markov models. A Markov chain is a set of interconnected through certain transition probabilities, which determine the topology and the capabilities of the model. The probability of transition is determined during training phase and reflects the normal behavior of the system. Anomalies are detected by comparing the probability of observed sequences with a fixed threshold. The second hidden Markov model assumes that the system of interest is a Markov process with hidden transitions and states. Only the so-called productions are observable.

Decision tree, which can efficiently classify anomalous data. The root of a decision tree is the first attribute with test conditions that split input data toward one of the internal nodes depending on the characteristics of the data. The decision tree has to be first trained with known data before it can be used to classify unknown data.

Fuzzy logic techniques, which are derived from fuzzy set theory. Fuzzy methods can be used as an extension to other approaches like in case of the intrusion detection using fuzzy hidden Markov models. The main disadvantage is high resource requirements.

Clustering and outer detection, which are methods used to separate a set of data into groups which members are similar or close to each other according to given distance metric.

The abovementioned methods for anomaly detection in computer networks have been studied by many researchers.

One of the first surveys about anomaly detection was presented in Coates et al. (2002), which proposed a taxonomy in order to classify anomaly detection systems. On the other hand, it focused on classifying commercial products related to behavioral analysis and proposed a system that collects data from SNMP objects and organizes them as a time series. An autoregressive process is used to model those time series. Then, the deviations are detected by a hypothesis test based in the method generalized likelihood ratio (GLR). The behavior deviations that were detected in each SNMP object are correlated later according to the objects’ characteristics. The first component of the algorithm applies wavelets to detect abrupt changes in the levels of ingress and egress packet counts. The second one searches for correlations in the structures of ingress and egress packets, based on the premise of traffic symmetry in normal scenarios. The last component uses a Bayes network in order to combine the first two components, generating alarms.

In a notable paper (Li et al. 2008), the authors analyzed the traffic flow data using the technique known as principal component analysis (PCA). It separates the measurements into two disjoint subspaces: the normal subspace and the anomalous subspace, allowing the anomaly detection for characterizing the normal network-wide traffic using a spatial hidden Markov model (SHMM), combined with topology information. The CUSUM algorithm (Cumulative Sum) was applied to detect the anomalies. This work proposes the application of simple parameterized algorithms and heuristics in order to detect anomalies in network devices, building a lightweight solution. Besides, the system can configure its own parameters, meeting network administrator’s requirements and decreasing the need for human intervention in management.

Brutlag (2000) used a forecasting model to capture the history of the network traffic variations and to predict the future traffic rate in the form of a confidence band. When the variance of the network traffic continues to fall outside of the confidence band, an alarm is raised. Barford et al. (2002) employed a wavelet-based signal analysis of flow traffic to characterize single-link byte anomalies. Network anomalies are detected by applying a threshold to a deviation score computed from the analysis. Thottan and Ji take management information base (MIB) data collected from routers as time series data and use an auto-regressive process to model the process. Network anomalies are detected by inspecting abrupt changes in the statistics of the data (Thottan and Ji 2001). Wang et al. (2002) take the difference in the number of SYNs (beginning messages) and FINs (end messages) collected within one sampling period as time series data and use a nonparametric cumulative sum method to detect SYN flooding by detecting the change point of the time series. While these methods can detect anomalies, which cause unpredicted changes in the network traffic, they may be deceived by attacks that increase their traffic slowly.

Let us look at other studies related to the use of the machine learning techniques to detect outliers in data sets from a variety of fields. Ma and Perkins present an algorithm using support vector regression to perform online anomaly detection on time-series data (Ma and Perkins 2003). Ihler et al. present an adaptive anomaly detection algorithm that is based on a Markov-modulated Poisson process model, and use Markov chain Monte Carlo methods in a Bayesian approach to learn the model parameters (Ihler et al. 2006).

6.3.3.2 Anomaly detection using statistical analysis of SNMP–MIB

Detecting anomalies is the main reason for monitoring the network. Anomalies represent deviations from the normal behavior. For a basic definition, anomalies can be characterized by correlating changes in measured data during abnormal events (Thottan and Ji 2003). The term “transient changes” refer to abrupt modifications of measured data. Anomalies can be classified into two categories. The first category is related to performance problems and network failure. Typical examples of performance anomalies are file server defects and congestions. In certain cases, software problems can manifest as network anomalies, such as protocol implementation errors that determine increases or decreases of the traffic load characteristic. The second category of anomalies is related to security problems (Wang et al. 2002).

Probing instruments facilitate an instantaneous measurement of the network behavior. Network test samples are obtained with specialized instruments such as ping or traceroute that can used to obtain specific parameters such as packet loss or end-to-end delays.

These methods do not necessitate cooperation between network services providers, but imply a symmetrical route between source and destination. On the Internet, this assumption cannot be guaranteed and thus data obtained through the probing mechanism can be limited in the case of anomaly detection. Other methods have been used for determining the traffic behavior: data routing protocols (Aukia et al. 2000), packet filtering (Cleveland et al. 2000), and data from network management protocols (Stallings 1994). This last category includes simple network management protocol (SNMP) that assures the communication mechanism between a server and hundreds of SNMP agents in the network equipment. The SNMP server holds certain variables, management information base (MIB) ones, in a database. Because of the accuracy provided by the SNMP, it is considered an ideal data source for detecting network anomalies. The most used anomaly detection methods are the rule-based approach (Franceschi et al. 1996), finite state machine models (Lazar et al. 1992), pattern matching (Clegg 2004), and statistical analysis (Duffield 2004). Among these methods, statistical analysis is the only one capable of continually following the network behavior and does not need significant recalibration. For this reason, the proposed anomaly detection method employs statistical analysis based on correlation and similarity in SNMP MIB variable data series.

Network management protocols provide information about network traffic statistics. These protocols support variables that correspond to traffic counts at the device level. This information from the network devices can be passively monitored. The information obtained may not directly provide a traffic performance metric but could be used to characterize network behavior and, therefore can be used for network anomaly detection. In the following will be presented the details of the method for anomaly detection proposed by Thottan and Ji (2003).

Using this type of information requires the cooperation of the service provider’s network management software. However, these protocols provide a wealth of information that is available at very fine granularity. The following subsections will describe this data source in greater detail.

6.3.3.2.1 Simple Network Management Protocol (SNMP) SNMP works in a client–server paradigm. The protocol provides a mechanism to communicate between the manager and the agent. A single SNMP manager can monitor hundreds of SNMP agents that are located on the network devices. SNMP is implemented at the application layer and runs over the UDP. The SNMP manager has the ability to collect management data that is provided by the SNMP agent but does not have the ability to process this data. The SNMP server maintains a database of management variables called the management information base (MIB) variables. These variables contain information pertaining to the different functions performed by the network devices. Although this is a valuable resource for network management, we are only beginning to understand how this information can be used in problems such as failure and anomaly detection.

Every network device has a set of MIB variables that are specific to its functionality. MIB variables are defined based on the type of device as well as on the protocol level at which it operates. For example, bridges that are data link-layer devices contain variables that measure link-level traffic information. Routers that are network-layer devices contain variables that provide network-layer information. The advantage of using SNMP is that it is a widely deployed protocol and has been standardized for all different network devices. Due to the fine-grained data available from SNMP, it is an ideal data source for network anomaly detection.

6.3.3.2.2 SNMP–MIB Variables The MIB variables fall into the following groups: system, interfaces (if), address translation (at), Internet protocol (ip), Internet control message protocol (icmp), transmission control protocol (tcp), user datagram protocol (udp), exterior gateway protocol (egp), and simple network management protocol (snmp). Each group of variables describes the functionality of a specific protocol of the network device.

Depending on the type of node monitored, an appropriate group of variables can be considered. If the node being monitored is a router, then the ip group of variables are investigated. The ip variables describe the traffic characteristics at the network layer. MIB variables are implemented as counters. Time-series data for each MIB variable is obtained by differencing the MIB variables at two subsequent time instances called the polling interval.

There is no single MIB variable that is capable of capturing all network anomalies or all manifestations of the same network anomaly. Therefore, the choice of MIB variables depends on the perspective from which the anomalies are detected. For example, in the case of a router, the ip group of MIB is chosen, whereas for a bridge, the if group is used.

As the network evolves, each of the methods for statistical analysis described above requires significant recalibration or retraining. However, using online learning and statistical approaches, it is possible to continuously track the behavior of the network. Statistical analysis has been used to detect both anomalies corresponding to network failures, as well as network intrusions. Interestingly, both of these cases make use of the standard sequential change point detection approach.

Abrupt changes in time-series data can be modeled using an autoregressive (AR) process. The assumption here is that abrupt changes are correlated in time, yet are short-range dependent. In our approach, we use an AR process of order to model the data in a 5-min window. Intuitively, in the event of an anomaly, these abrupt changes should propagate through the network, and they can be traced as correlated events among the different MIB variables. This correlation property helps distinguish the abrupt changes intrinsic to anomalous situations from the random changes of the variables that are related to the network’s normal function. Therefore, network anomalies can be defined by their effect on network traffic as follows: Network anomalies are characterized by traffic-related MIB variables undergoing abrupt changes in a correlated fashion. Abrupt changes in the generated traffic are detected by comparing the variance of the residues obtained from two sequential data windows: learning and testing (Figure 6.13). Residues are obtained by imposing an AR model on the time series in each window.

It can be seen that in the learning windows there is a change, while in the test one the correlogram remains similar.

6.3.3.3 Subnetwork similarity testing

It can be said that two networks are similar if there are correlations at the application level of the traffic. In order to demonstrate similarity, the method proposed by Lan and Heidemann (2005) is used, which employs a global weight function s to determine if two distributions are significantly similar regarding average, dispersion, and shape: s = w1 · A + w2 · B + w3 · C,

Figure 6.13 Detecting abrupt changes.

where

where μ is the average, σ is the dispersion, and C is the Kolmogorov–Smirnov distance (the greatest absolute difference between the cumulative distributions of the two data sets).

N1 and N2 are samples that come from two different networks, and w1, w2, and w3 are positive, user-defined weights that allow he or she to prioritize some particular metrics (average, dispersion, or shape). Intuitively, two networks are similar if s is close to zero. This method was tested considering the Web traffic simulated on an SFN Internet model (Ulrich and Rothenberg 2005). After generating a large network model, a federalization is performed and the similarities are checked in pairs of subnetworks.

6.3.4 Test platform and processing procedure for traffic analysis

Traffic generation is an essential part of the simulation. Randomly, one to three simultaneous traffic connections are initiated from the client networks, and, for simplicity, ftp sessions are used to randomly select destination servers. It is decided that the links connecting routers must have speed superior to that of the link connecting clients to routers, for example, server-router 1 Gbps, client-router 10 Mbps, router-router 10, 100 Mbps or 1 Gbps depending on the router type. The code generated respecting these two conditions is added to the network description file in order to be processed by the simulator.

A modular solution is used, which permits reusing various components from different parts of the simulator. The same network type generated by the initial script can be used for both single-CPU and distributed simulation, thus offering a comparison between the two simulation setups.

NS2 is a network simulator based on discrete events, discussed already in Sections 4.3.2.3 and 5.1. The current version of NS (version 2) is written in C++ and OTcl (Chung 2011). Multi CPU simulations used the pdns extension of NS2, which has a syntax close to NS2. The main difference is in the number of extensions necessary for parallelizing so that the various instances of pdns to be able to communicate one to another and create the global image of the network to be simulated. All simulation scenarios run for 40 s.

In order to obtain a parallel/distributed environment, it was necessary to build a cluster running Linux. The cluster can run applications using a virtualization environment. Parallel virtual machine (PVM) was used for developing and testing applications. It is a framework of software packages that facilitate the creation of a machine running on multiple CPUs by using available network connections and specific libraries (Grama et al. 2003). The cluster comprises of a main machines and a total of six cluster nodes. The main machine provides all necessary services for the cluster nodes, that is, IP addresses, boot, NFS for data storage, tools for updating, managing, and controlling the images used by the cluster nodes, as well as the access to the cluster. The main computer provides an image of the operating system to be loaded on each of the cluster nodes, because they do not have their own storage. Because the images reside in the memory of each cluster, some special steps are necessary to reduce the dimension of the image and to provide as much free memory as possible for running simulation processes. The application partition is read-only, while the data partition is read-write and accessible to users on all machines, in a similar manner, providing transparent access to user data. In order to access the cluster, the user must connect to a virtual server on the main machine that can behave like a cluster node when it is not needed as an extra computer.

6.3.4.3 Network federalization

In order to use the pdns simulator, the network must be subdivided in quasi-independent subnetworks (Wilkinson and Pearson 2005). Each pdns instance addresses a specific subnetwork, thus the dependencies between them are minimal; there must be as few links as possible between nodes from different subnetworks.

The approach of simulating on a federalized network is used. A federalization algorithm was devised and implemented in order to separate the starting network in several smaller subnetworks. The algorithm generates n components. The pdns script takes as input the generated network and the federalization. Depending on the network connectivity, they are assigned different roles—router or end-user—and corresponding traffic scenarios are associated. Also, a different approach of partitioning a NS script in several pdns is employed, using autopart (Riley et al. 2004), a tool for simulating the partitioning based on the METIS partitioning package (METIS). Autopart takes a NS2 script and creates a number of pdns scripts that are ready to run in parallel on several machines.

6.3.5 Discussion on experimental results of case studies

6.3.5.1 Implementing and testing an SFN model for Internet

A scale-free network (SFN) is a model where the inference based on self-similarity and fractal characteristics can be applied. Scale-free networks are complex networks, in which a few nodes are heavily connected while the majority of the nodes have a very small number of connections. Scale-free networks have a degree distribution of P(k) = k(−λ) where λ can vary from 2 to 3 for the majority of real networks.

Numerous networks display a scale-free behavior including the Internet.

An algorithm was designed and implemented for generating those subsets of scale-free networks that are close to real ones, such as the Internet. This application is able to handle a very large collection of nodes, to control the generation of network cycles and the number of isolated nodes. It was written in Python, thus making it portable. The algorithm starts with a manually created network of a few nodes. By using increase and preferential attachment algorithms new ones are added. An original component is introduced calculating ahead a number of nodes for each level. Preferential attachment is followed by applying the restriction of having the optimal number of nodes per degree.

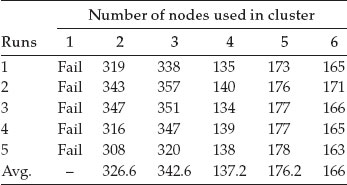

Table 6.2 Simulations time for a network model of 100,000 nodes and high traffic

For tests, it was decided to run 40 s traffic simulations for a scale-free networks model with 10,000 nodes. On such a scale, one-node processing is not possible, because the cluster node runs out of memory. In order to obtain valid results, the simulation was run on a different, more stronger machine, with more memory and virtual memory. Table 6.2 shows the time used for simulations(s) for a network model of 100,000 nodes and high traffic.

This scenario was simulated for five times in similar load conditions. During this simulation, it was noted that by adding more nodes (in our case, more than 4), the simulation process is slower. Another remark is that the 2-CPU simulation is swifter than the 3-CPU one, although the optimal number of nodes is not 2.

6.3.5.2 Detecting network anomalies

The statistical technique presented was applied for detecting anomalies for SNMP MIB. A summary of the results obtained through statistical techniques is presented in Table 6.3.

The types of observed anomalies are the following: server disfunctionalities (errors, failures), protocol implementation errors, network access problems. For example, in the case of a server error, the defect was predicted almost 7 min before incidence. In order to detect the anomalies from the point of view of a router, only the IP level is used. Figure 6.14 shows an anomaly of the MIB variable called ipIR (representing the total number of datagrams received for all router interfaces). The defect period is indicated by the dotted vertical line. Note the existence of a drop in the average traffic level reflected in the ipIR variable right after the failure.

Table 6.3 Summary of results obtained through statistical techniques

Type of anomaly |

Mean time of prediction (min) |

Mean time of detection (min) |

Mean time between false alarms (min) |

Server failures |

26 |

30 |

455 |

Access problems |

26 |

23 |

260 |

Protocol error |

15 |

– |

– |

Uncontrollable process |

1 |

– |

235 |

Figure 6.14 MIB anomaly of type ipIR.

Predicting an anomaly implies two situations: (a) In the case of server failures or network access problems, prediction is possible by analyzing the MIB data. (b) In the case of unpredictable errors such as protocol implementation error, early detection is possible as compared to the existing mechanisms such as syslog and defect reports. Any anomaly declaration that does not correspond to a label is declared to be false. The quantities used in the study are shown in Figure 6.15 (after Thottan and Ji 2003). The persistence criterion implies that the alarms need to remain for consecutive lags for an anomaly to be declared.

In the experiments, some example of defects were detected, coming from two networks: an enterprise and a campus network. Both networks have been actively monitored and were designed for the needs of the user. The types of anomaly that were observed were: server errors, protocol implementation errors, and access problems. The majority of these are caused by an abnormal usage activity, with the exception of the protocol implementation errors. Thus, all these cases affect the MIB data characteristic.

Although high-speed traffic on Internet links has an asymptotic self-similarity, its correlation structure at small timescales allows for modeling it as a self-similar processes (as fBm). Based on simulations done on SFN Internet model, one can conclude that the Internet traffic has self-similar properties even when it is heavily aggregated. Experiments revealed the following results:

Figure 6.15 Quantities used in performance analysis.

1. Self-similarity is adaptable to the network traffic and it is not based on a heavy tail transfer distribution.

2. The region of scaling the traffic self-similarity is divided into two timescale regions: short-range dependencies (SRD), determined by the bandwidth, the size of the TCP window and the packet size, and a long-range dependence (LRD), determined by the statistics with a long tail characteristic.

3. In LRD, an increase in the bandwidth does not increase the performance.

4. There is a significant advantage in using fractal analysis methods for solving anomaly detection problems.

An accurate estimation of the Hurst parameter for MIB variables offer a valuable anomaly indicator obtained from the ipIR variable. So, by increasing the prediction capability, it is possible to reduce the time prediction time and to increase the network availability. Regarding the parallel processing methods, it is very important for the network model to be correctly federalized, because there is an optimum point between separating and balancing the federalization—the more separate the networks are, the more unbalanced they become.

The method of testing self-similarity between networks suggests the possibility of inferring traffic data in places where measurements are inaccessible. Considering the results of the discussed method, it has been found to have competitive performance for network traffic analysis, similar with those claimed by Breunig et al. (2000) when using LOF algorithm for computation of similarity between a pair of flows that contain a combination of categorical and numerical features. In comparison, our technique enables meaningful calculation without being computationally intensive, because it directly integrates data sets of previously detected incidents.

One can conclude that the anomaly detection algorithm is also effective in detecting behavior anomalies on a network, which typically translates into malicious activities such as DoS traffic, worms, policy violations, and inside abuse.

6.3.6 Recent trends in traffic self-similarity assessment for Cloud data modeling

Existing modern Cloud data storage, like Cloud data warehousing, do not use its potential capabilities functionality due mainly to the complexity in behavior of network traffic. The quality of service can be increased by building a converged multiservice network. Such a network ought to provide an unlimited range of services to provide flexibility for the management and creation of new services.

The current state of rapid development of high technology has led to the emergence and spread of ubiquitous networks, packet data, which gradually began to force the system switching network and at the same time increase the relevance of the traffic self-similarity. The fractal analysis can solve several problems, such as the calculation of the burst for a given flow, the development of algorithms and mechanisms providing quality of service in terms of self-similar traffic, and the determination of parameters and indicators of quality of information distribution.

6.3.6.1 Anomalies detection in Cloud

Deploying high-assurance services in the Cloud increases security concerns. To address these concerns, a range of security measures must be put in place. An important measure is the ability to detect when a Cloud infrastructure, and the services it hosts, is under attack via the network, for example, from a distributed denial of service (DDoS) attack. A number of approaches to network attack detection exist, based on the detection of anomalies in relation to normal network behavior. An essential characteristic of Cloud computing is the use of virtualization technology (Mell and Grance 2011).

Virtualization supports the ability to migrate services between different underlying physical infrastructure. In some cases, a service must be migrated between geographically and topologically distinct data centers (process known as wide-area migration). When a service is migrated within a data centre, the process is defined as local-area migration. In both cases, there are multiple approaches to ensure the network traffic that is destined for a migrated service is forwarded to the correct location.

Importantly for anomaly detection, service migration may result in observable effects in network traffic—this depends on where network traffic that is to be analyzed is collected in the data center topology, and the type of migration that is carried out. For example, if a local-area migration is executed, the change in traffic could be observable at top-of-rack (ToR) and aggregate switches, but not at the gateway to the data center. Since it is common practice to analyze traffic at the edge of a data center, local-area migration is opaque at this location. However, for wide-area migration, traffic destined to the migrated service will be forwarded to a different data centre, and will stop being received at the origin after the migration process has finished. This will result in potentially observable effects in traffic collected at the edge of a data center, and hamper anomaly detection techniques, so it is important to explore the effect that wide-area service migration has on anomaly detection techniques when network traffic that is used for analysis is collected at the data center edge.

An anomaly detection tool (ADL) that analyses the traffic data for observing similar patterns, along with the injected anomalies and migration is described in Adamova et al. (2014). For the experiments, the authors used network flow data that was collected from the Swiss National Research Network—SWITCH—a medium-sized backbone network that provides Internet connectivity to several universities, research labs, and governmental institutions. The ADL has three inputs: an anomaly vector, which specifies at what times anomalies were injected, and two time-series files—a baseline file and an evaluation file. A time-series file contains entries that summarize each 5 min period of the traffic and is created by extracting the traffic features from a set of preselected services and anomalies. The traffic features are volume-based (the flow, packet, and byte count) and distribution-based (the Shannon entropy of source and destination IP and port distributions). These features are commonly used in flow-based anomaly detection. The baseline time series are created for training data; these are free from anomalies and migration. Conversely, evaluation time series contain anomalies and may contain migration, depending on the experiments we wish to conduct.

Figure 6.16 illustrates the tool chain used for testing the performance of the anomaly detection tool.

It can be seen that using this tool chain one can create evaluation scenarios that include a range of attack behavior, and service migration activity of different magnitudes. These scenarios can then be provided as input to different flow-based anomaly detection techniques. Among them, two are based on traffic self-similarity: spectral analysis, based on PCA, and clustering using expectation-maximization (EM). When using PCA, anomalies are detected based on the difference of the baseline and evaluation profiles. Detection of anomalous behavior based on clustering is realized by assigning data points that have similar features to cluster structures. Items that do not belong to a cluster, or are part of a cluster that is labeled as containing attacks, are considered anomalous.

Figure 6.16 The tool chain used in ADL testing.

6.3.6.2 Combining filtering and statistical methods for anomaly detection

Traffic anomalies such as attacks, flash crowds, large file transfers, and outages occur fairly frequently in the Internet today. Despite the recent growth in monitoring technology and in intrusion detection systems, correctly detecting anomalies in a timely fashion remains a challenging task.

A new approach for anomaly detection in large-scale networks is based on the analysis of traffic patterns using the traffic matrix (Soule et al. 2005). In the first step, a Kalman filter is used to filter out the “normal” traffic. This is done by comparing future predictions of the traffic matrix state to an inference of the actual traffic matrix that is made using more recent measurement data than those used for prediction. In the second step, the residual filtered process is then examined for anomalies. Since any flow will traverse multiple links along its path, it is intuitive that a flow carrying an anomaly will appear in multiple links, thus increasing the evidence to detect it.

A traffic matrix is a representation of the network-wide traffic demands. Each traffic matrix entry describes the average volume of traffic, in a given time interval, that originates at a given source node and is headed toward a particular destination node. Since a traffic matrix is a representation of traffic volume, the types of anomalies, which can be detected via analysis of the traffic matrix, are volume anomalies (Lakhina et al. 2004). Examples of events that create volume anomalies are denial-of-service attacks (DOS), flash crowds, and alpha events (e.g., nonmalicious large file transfers), as well as outages (e.g., coming from equipment failures).

Obtaining traffic matrices was originally viewed as a challenging task since it is believed that directly measuring them is extremely costly as it requires the deployment of monitoring infrastructure everywhere, the collection of fine granularity data at the flow level, and then the processing of large amounts of data. However, in the last few years many inference-based techniques have been developed that can estimate traffic matrices reasonably well given only per-link data such as SNMP data (that is widely available). Let note that usually these techniques focus on estimation and not prediction (Zhang et al. 2003).

A better approach for traffic matrix estimation seems to be that based on predictions of future values of the traffic matrix. A traffic matrix is a dynamic entity that continually evolves over time, thus estimates of a traffic matrix are usually provided for each time interval (most previous techniques focus on 5-min intervals), and the same interval for the prediction step of the traffic matrix will be considered in the following. The procedure consists in two stages. In the first stage, 5 minutes after the prediction is made, one obtain new link-level SNMP measurements, and then what the actual traffic matrix should be is estimated. In the second stage, one examines the difference between the last prediction (made without the most recent link-level measurements) and the estimation (made using the most recent measurements). If the estimates and predictor are good, then this difference should be close to zero. When the difference is necessary to analyze this residual further to determine whether or not an anomaly alert should be generated. In this connection, we recommend to use a generalized likelihood ratio test to identify the moment an anomaly starts, by identifying a change in mean rate of the residual signal. This method is a particular application of known statistical techniques to the anomaly detection domain and will be detailed in the following.

The traffic matrix (TM) that we discuss represents an interdomain view of traffic, and it expresses the total volume of traffic exchanged between pairs of backbone nodes, both edge and internal. Despite the massive growth of the Internet, its global structure is still heavily hierarchical, with a core made of a reduced number of large autonomous systems (ASs), among them some huge public Clouds (Casas et al. 2010). Our contribution regards first TM modeling and estimation, for which a new statistical model and a new estimation method to analyze the origin–destination (OD) flows of a large-scale TM from easily available link traffic measurements, and then regards the detection and localization of volume anomalies in the TM.

The traffic matrix estimation (TME) problem can be stated as follows: assuming a network with m OD flows and r links, Xt = [xt(1), …, xt(m)]T represents the TM organized as a vector, where xt(k), k = 1 … m stands for the volume of traffic of each OD flow at time t. The routing matrix indicates which links are traversed by each OD-flow. The element Rij = 1 if OD flow j takes link i and 0 otherwise. Finally, the vector ϒt = [yt(1), …, yt(r)]T, where yt(i), i = 1 … r represents the total aggregated volume of traffic from those OD-flows that traverse link at time t represents the SNMP measurements vector:

The TME problem consists in the estimation the value of Xt from R and ϒt.

Different methods have been proposed in the last few years to solve the TME problem. They can be classified into two groups. In the first group are included methods that rely exclusively upon SNMP measurements and routing information to estimate a TM, whereas in the second group are included mixed methods which additionally consider the availability of partial flow measurements used for calibration purposes and exploit temporal and spatial correlations among the multiple OD-flows of the TM to improve estimation results.

A mixed TME method named recursive traffic matrix estimation (RTME) will be discussed, which not only uses ϒt to estimate Xt, but also takes advantage of the TM temporal correlation, using a set of past SNMP measurements {ϒt−1, ϒt−2,…, ϒ1} to compute an estimate .

Let first consider the model that is assumed in Soule et al. (2005). In this model, authors consider the OD-flows of the TM as the hidden states of a dynamic system. A linear state space model is adopted to capture the temporal evolution of the TM, and the relation between the TM and the SNMP measurements given by Equation 6.4 is used as the observation process:

In Equation 6.5, matrix Xt characterizes the evolution of the TM. Matrix A is the transition matrix that captures the dynamic behavior of the system, and Wt is an uncorrelated zero-mean Gaussian white noise that accounts both for modeling errors and randomness in the traffic flows. Through the routing matrix the observed links traffic ϒt is related to the unobserved state Xt. The measurement noise Vt is also an uncorrelated zero-mean Gaussian white noise process. Given this model, it is possible to recursively derive the least mean-squares linear estimate of Xt given {ϒt−1, ϒt−2,…, ϒ1}, by using the standard Kalman filter (KF) method. The KF is an efficient recursive filter that estimates the state Xt of a linear dynamic system from a series of noisy measurements {Yt−1, ϒt−2, …, ϒ1}. It consists of two distinct phases, iteratively applied, and the update phase.

The prediction phase uses the state estimate from the previous time-step to produce an estimate of the state at the current time-step t + 1, usually known as the “predicted” state , according to the equation:

where Pt|t and Pt+1|t are the covariance matrices of the estimation error , and the prediction error , respectively.

In the update phase, the measurements vector at current time-step ϒt+1 is used to refine the prediction , computing a more accurate state estimate for current time-step t + 1,

where is the optimal Kalman gain, which minimizes the mean-square error: E(||et+1|t+1||2).

Combining Equations 6.6 and 6.7, the recursive Kalman filter-based estimation (RKFE) method recursively computes from

In his doctoral thesis (Hernandez 2010), Casas Hernandez reported some drawbacks of this model, especially in the case where A is a diagonal matrix, because the first equation in Equation 6.5 is valid only for centered data and false if data are not centered.

In order to have a correct state space model for the case of a diagonal state transition matrix A, he proposed a new model, considering the variations of Xt around its average value mX, that is, the system (6.5) becomes

This state space model is correct for centered TM variations, for both static and dynamic mean, but a problem still remains, because the Kalman filter does not converge to the real value of the traffic matrix if noncentered data ϒt is used in the filter. This problem can be easily solved considering a centered observation process , by adding a new deterministic state to the state model. Let us define a new state variable . In this case, Equation 6.9 becomes

where 0 is the null matrix of correct dimensions. This new model has twice the number of states, augmenting the computation time and complexity of the Kalman filter. However, it presents several advantages

• It is not necessary to center the observations ϒt.

• Matrix A can be chosen as a diagonal matrix, which corresponds to the case of modeling the centered OD-flows as autoregressive AR(1) processes. This is clearly much easier and more stable than calibrating a nondiagonal matrix such that (I − A)mX = 0.

• The Kalman filter estimates the mean value of the OD-flows mX, assumed constant in Equation 6.10.

• This model allows to impose a dynamic behavior to mX, improving the estimation properties of the filter.

Using model, Equation 6.10 with the Kalman filtering technique produces quite good estimation results. However, this model presents a major drawback: it assumes that the mean value of the OD-flows mX is constant in time. It can be improved by adopting a dynamic model for mX, in order to allow small variations of the OD-flows mean value:

where mX(t) represents the dynamic mean value of Xt and ζt is a zero-mean white Gaussian noise process with covariance matrix Qζ. In this context, Equation 6.10 becomes

Hernandez (2010) presented experimental results, which confirm that this model provides more accurate and more stable results.

One can conclude that considering a variable mean value mX(t) produces better results, both as regards accuracy and stability. In all evaluations, the stable evolution of the error shows that the underlying model remains valid during several days when considering such a transition matrix. Therefore, if the underlying model remains stable, it is not necessary to conduct periodical recalibrations, dramatically reducing measurement overheads.

Let us now explain how TM, as a tool for statistical analysis, can be used for optimal anomaly detection and localization and consequently for correctly managing the large and unexpected congestion problems caused by volume anomalies. Volume anomalies represent large and sudden variations in OD-flow traffic. These variations arise from unexpected events such as flash crowds, network equipment failures, network attacks, and external routing modifications among others. Besides being a major problem in itself, a volume anomaly may have a significant impact on the overall performance of the affected network. The focus is only on two main aspects of traffic monitoring: (1) the rapid and accurate detection of volume anomalies and (2) the localization of the origins of the detected anomalies.

The first issue corresponds to the anomaly detection field, with a procedure that consists of identifying patterns that deviate from normal traffic behavior. In order to detect abnormal behaviors, accurate and stable traffic models should be used to describe what constitutes an anomaly-free traffic behavior. This is indeed a critical step in the detection of anomalies, because a rough or unstable traffic model may completely spoil the correct detection performance and cause many false alarms. In this chapter, we focus on network-wide volume anomaly detection, analyzing network traffic at the TM level. In particular, we use SNMP measurements to detect volume anomalies in the OD-flows that comprise a TM. The TM is a volume representation of OD-flow traffic, and thus the types of anomalies that we can expect to detect from its analysis are volume anomalies. As each OD-flow typically spans multiple network links, a volume anomaly in one single OD-flow is simultaneously visible on several links. This multiple evidence can be exploited to localize the anomalous OD-flows.

The second addressed issue is the localization of the origins of a detected anomaly. The localization of an anomaly consists in inferring the exact location of the problem from a set of observed anomaly indications. This represents another critical task in network monitoring, given that a correct localization may represent the difference between a successful or a failed countermeasure. Traffic anomalies are exogenous unexpected events (flash crowds, external routing modifications, and external network attacks) that significantly modify the volume of one or multiple OD flows within the monitored network. For this reason, the localization of the anomaly consists in finding the OD-flows that suffer such a variation, referred to from now on as the anomalous OD-flows. The method that we discuss locates the anomalous OD-flows from SNMP measurements, taking advantage of the multiple evidence that these anomalous OD-flows leave through the traversed links.