Conversations about how to make a human often begin at the Villa Diodati on the shore of Lake Geneva in the “wet, ungenial” summer of 1816, where the teenaged Mary Godwin was trying to get to sleep. Some days earlier Lord Byron, the glamorous sovereign of that select little coterie of Romantics, had proposed that they each write a ghost story. And on every day that passed, Mary’s friends had been entreating her: “Have you thought of a story?”

On that fateful evening, Byron and Mary’s lover Percy Bysshe Shelley had discussed “the nature of the principle of life, and whether there was any probability of its ever being discovered and communicated.” Perhaps, they had mused, “a corpse would be re-animated.”

Night fell; Mary retired to bed but could not sleep. And then it came to her:

I saw – with shut eyes, but acute mental vision, – I saw the pale student of unhallowed arts kneeling beside the thing he had put together. I saw the hideous phantasm of a man stretched out, and then, on the workings of some powerful engine, show signs of life, and stir with an uneasy, half vital motion.

This, from the introduction that Mary – now Shelley, and widowed for almost a decade – wrote for the revised 1831 edition of Frankenstein, is as good as the account she gave in the book itself of how Frankenstein made his monster. “He sleeps,” she went on, describing her nocturnal vision, “but he is awakened; he opens his eyes; behold the horrid thing stands at his bedside, opening his curtains, and looking at him with yellow, watery, but speculative eyes.”

The moral of this tale, surely, was clear: “supremely frightful would be the effect of any human endeavour to mock the stupendous mechanism of the Creator of the world.” Frankenstein showed what would happen when man tried to play God.

That is still how Mary Shelley’s extraordinary book is usually interpreted today, and while rather few people now understand the accusation of “playing God” to involve genuine impiety, it serves as a shorthand admonition against the scientific hubris that attaches to attempts to manipulate and perhaps even to create life.

But what is most telling about this narrative is that society, not Shelley, has imposed it on Frankenstein. You might wonder why I say that, if the author herself stated that this is the book’s message. But there’s good reason to think that Shelley’s 1831 introduction was shaped by the way society wanted to read her story, first published anonymously in 1818. In the earlier version, there were fewer references to Victor Frankenstein having tempted or challenged God through his grim work, and none of the original reviews raised that notion. Indeed, Percy Shelley seems much closer to the mark in the account of the book (which he helped to shape and edit1) that he wrote in 1817, published posthumously in The Athenaeum in 1832: “In this the direct moral of the book consists: … Treat a person ill and he will become wicked.” Frankenstein does not, in truth, have a moral that can be put into a sentence – but if we were forced to choose one theme, it ought to be this: we must take responsibility for what we create. For the life we create.

Yet by 1831, the Faustian interpretation of Frankenstein had become firmly established within the public consciousness, not least because many people had come across the tale via the bowdlerized, simplistic and hugely popular stage adaptations that began to appear in the mid-1820s. Mary Shelley, now with a public image to curate (her livelihood depended on it), and perhaps also touched by the conservatism that, alas, often arrives with age, responded by bringing her text into alignment with general opinion. It’s possible too that she wanted to distance herself from the views of her husband’s former physician William Lawrence, who had suffered condemnation from the Royal College of Surgeons for his materialist views of life: that it is “mere matter”, requiring no mysterious animating soul. The influence of Lawrence’s ideas can be discerned in the 1818 text; but his book Lectures on Physiology, Zoology and the Natural History of Man, published a year later, was fiercely denounced as irreligious and immoral. The changes that Mary Shelley wrought in the 1831 edition, making Victor Frankenstein more religious and pruning his scientific background, were, in the view of literary critic Marilyn Butler, “acts of damage-limitation”.

The idea that Frankenstein is the foundational text warning against scientific hubris is, then, largely a twentieth-century view. This doesn’t mean that we have got Frankenstein “wrong” – or at least, not simply that. It means rather that we needed a cautionary tale to deal with our confusions and anxieties about life and how to make and change it – and Frankenstein can be interpreted to fit that bill.

The Faustian moral about over-reaching science might be, and indeed has been, applied to any technology, from nuclear energy to the internet. But Frankenstein is gloriously fleshy; that’s what made it so repugnant to some of its first readers. The creature is created from flesh as it should not be: flesh made monstrous, flesh out of place. The review in the Edinburgh Magazine in 1818 entreated the author and “his” ilk to “rather study the established order of nature as it appears, both in the world of matter and of mind, than continue to revolt our feelings by hazardous innovations in either of these departments.”

Shelley’s novel showed human tissue given new forms, assembled into a frame of “gigantic stature”. It is precisely this disturbing vision that we see revitalized, as it were, by the advent of tissue culture, with its tales of uncontrolled growth of human matter, of brains in jars and organs kept alive by blood controlled by pumps and valves, of chemical babies bred in vats.

These are the outer reaches of our new-found ability to grow and transform cells and tissues. At times, they do sound like what now is commonly invoked by the phrase “Frankenstein science”. But they testify in favour of William Lawrence’s materialist view of living matter, suggesting that the organism is indeed a collaboration of physiological structures, a “great system of organization” with no need of an intangible soul or spirit. We don’t yet know how far the limits of that “organization” can be tested and extended, and neither are we quite sure where in that space of possibilities any concepts of humanness and self are located and corralled. But we are finding out. In doing so, we are not playing God – the phrase dissolves on inspection (theologically as well as logically) into a vague expression of unease and distaste. But we must never lose sight of the duty of care – to ourselves, our creations, and society – that Victor Frankenstein so woefully neglected.

* * *

Stitching or plumbing together tissues and organs seems an odd, clumsy and improbable way of making a human. For William Lawrence, life was intimately bound up with the animal body intact and complete. But by the early twentieth century, it no longer seemed absurd to consider the parts to be imbued with their own vitality. We’ve seen how cell theory was central to that reappraisal: many physiologists and anatomists were content by the turn of the century to regard the cell as an autonomous entity, independently alive. Tissue culturing vindicated that idea, as well as suggesting that flesh as an abstract entity could endure without the body’s organizing influence.

Karel Čapek’s R.U.R. shows how far-reaching these scientific ideas had become. As well as being a parable on the dehumanizing effects of the modern industrial economy, it synthesizes a great deal of the evolving thoughts about the nature of living matter. Yet since God was now officially pronounced dead, Čapek could risk invoking his name in satire. I do not know quite when the phrase “playing God” is first recorded, but it may very well be in R.U.R. Here is the company’s director general Harry Domin discussing the founder’s early work with the idealistic heroine who is visiting the factory to argue that the robots should be granted moral rights:2

Helena: They do say that man was created by God.

Domin: So much the worse for them. God had no idea about modern technology. Would you believe that young Rossum, when he was alive, was playing at God.

It’s perhaps easier to imagine the piecemeal assembly of a person (which is how R.U.R.’s robots are made) happening by degrees – by default, as it were – as body parts are replaced or renewed. This was what Alexis Carrel seemed to envisage for his perfused organs. They would confer a kind of immortality through continual renewal: each time an organ gives out, it is replaced by a new one. Presumably all that needs to be retained for continuity of the individual is the original brain and nervous system. When Carrel and Lindbergh wrote of “the culture of whole organs” in 1935, a newspaper headline insisted that they had taken the human race “one step nearer to immortality”.

Through such constant renewal of parts, a person might become like Theseus’s legendary ship, replaced little by little until no component of the original remains. Is it then still the same person? Edgar Allan Poe’s story “The Man That Was Used Up” depicts a process of that nature, albeit with replacement body parts that are mechanical rather than fleshy. They are used to patch up an old general injured in combat over his career – but as he becomes ever more mechanical, so too do his thought and speech. The scenario is played largely for comic effect, although it betrays a long-standing suspicion that turns out to have some element of scientific truth: the body does indeed affect the operation of the brain, and not just vice versa.

Of course, the brain too suffers wear and tear. Indeed, it may be the life-limiting organ: as average lifespans have increased over the course of the last century, we have become more prone to the debilitating and ultimately fatal neurodegenerative diseases that threaten the ageing brain, such as Alzheimer’s and Parkinson’s. Can the brain itself be replaced?

This was rumoured to be on Carrel and Lindbergh’s agenda. As the two men met on Carrel’s private island of Saint-Gildas, off the coast of Brittany, in 1937, reporters frustrated by lack of access came up with the most fanciful ideas. The Sunday Express claimed that the duo were engaged in “Lonely island experiments with machines that keep a brain alive”.

Perhaps it’s not surprising that such fantasies were common currency just six years after James Whale had shown “Henry” Frankenstein insert a bottled brain into his creature, as portrayed by Boris Karloff in the Hollywood movie. But the media are no less credulous today. Notwithstanding attention-grabbing headlines in 2017 of a successful human “head transplant” by controversial Italian neurosurgeon Sergio Canavero, no such thing has ever been achieved in any meaningful sense of the term, and it is far from clear if it ever could be. Canavero has previously claimed to have transplanted the head of a monkey, but the “patient” never recovered consciousness. Moreover, the surgeon did not reconnect the spinal cord (no one knows how to do that), so the animal would have been paralysed anyway. Canavero’s claims for humans were based on experiments on dead bodies, and were a mere surgical exercise in connecting blood vessels and nerves, with no indication (how could there be?) that this restored function.

Besides, it’s not really correct to call the procedure a “head transplant” even in principle. Imagine (even if it is highly unlikely) that a head could retain not just vitality but memories if separated from the body and kept “alive” by perfusion. And suppose that these memories could indeed be reawakened when the head was attached to a new body. Then it seems reasonable to assert that the brain, not the body, is what defines the patchwork individual so created – in which case this would more properly be called a body transplant.

But even if the thoughts and memories of a human brain can somehow be preserved in the face of the mortality of the flesh (I’ll return to that shortly), we can safely say that, unlike, say, the heart, kidneys or pancreas, there is no current evidence that the whole human brain is in any sense a replaceable organ.

* * *

Visions of a full-grown human body assembled from component parts, from Frankenstein to Čapek and Carrel, remain fixated on the old Cartesian picture of body as mechanism. Sure, the parts are soft and fleshy, but they are otherwise viewed here rather like the cogs and levers of the ingenious automata of the Enlightenment, which were clothed in a mere patina of skin much like the intelligent robots of the Terminator films and Ava from Alex Garland’s 2014 movie Ex Machina. In Carrel’s time there was still an immense scientific and conceptual gap between studies of cells and tissues, which had begun to reveal their mysterious self-assembling autonomy, and the gross anatomy of the human body. We had little idea how one becomes the other.

It’s different now; research on organoids brings that home very plainly. As these methods of cellular self-organization become better understood, it has become feasible to imagine and indeed to conduct experiments that shrink a piecemeal assembly process down to the microscopic scale: to build a human from an artificial aggregate of cells that can be considered a sort of synthetic, “hand-crafted” embryo.

Do we, in fact, need anything more than a single cell? That possibility was recognized when the advent of IVF began to reconfigure the way people thought about conception and embryogenesis. In 1969, Albert Rosenfeld wrote in Life that “there is the distinct possibility of raising people without using sperm or egg at all”:

Could people be grown, for example, in tissue culture? In a full-grown, mature organism, every normal cell has within itself all the genetic data transmitted by the original fertilized egg cell. There appears, therefore, to be no theoretical reason why a means might not be devised to make all of the cell’s genetic data accessible. And when that happens, should it not eventually become possible to grow the individual all over again from any cell taken from anywhere in the body?

What Rosenfeld describes here is more or less identical to the process of turning a somatic cell into an induced pluripotent stem cell, making its full genetic potential “accessible” again.

This is, indeed, what iPSCs now make possible. In 2009, Kristin Baldwin of the Scripps Research Institute in La Jolla, California and her co-workers made full-grown mice from the skin cells (fibroblasts) of other mice. They reprogrammed the cells using the standard mixture of four transcription factors devised by Shinya Yamanaka, and then injected these iPSCs into a mouse blastocyst embryo. The blastocyst was prepared in such a way that its own cells each had four rather than the normal two copies of every chromosome; that’s done by making the cells of a normal two-cell embryo fuse together. Because these cells contain too many chromosomes, such embryos generally can’t develop much beyond the blastocyst stage. But the added iPSCs didn’t have that defect: they were normal cells with two copies of each chromosome. So the mouse embryo and fetus that developed from this blastocyst were derived just from the iPSCs.3 Those fetuses grew to full-term pups which were delivered by caesarean section, and about half of them survived and grew into adult mice with no apparent abnormalities.

Now, not all iPSCs are capable of this: not all appear to be truly pluripotent, able to grow an entire organism. Some are and some aren’t, and it’s not entirely clear what makes the difference or how it can be spotted. But the experiment shows that at least some iPSCs are capable of becoming whole new organisms. Although this work was done with mice, there’s no obvious reason why the conclusions would not apply equally to human cells.

Do you see what this means? It may be that pretty much every cell in your body can be grown into another human being. Had the iPSCs from which my mini-brain was grown been placed instead inside a human blastocyst, they could probably have been incorporated into, and perhaps have been entirely responsible for, a human fetus.

At this stage an experiment like that,4 with all the attendant unknowns and health risks, would be deeply unethical, and in some countries illegal. But I’m not recommending it; I’m simply saying that it can be imagined.

You might say that this thought experiment is not, however, quite a matter of growing iPSCs from scratch into a person. And you’d be right. You need the vehicle of a blastocyst to supply the crucial non-embryonic tissues needed for proper in utero development: the placenta and the yolk sac. Can we, though, add those too? Could we assemble clumps of cells and proto-tissues into what we might figuratively (some will say luridly) call “Frankenstein’s embryo”?

No researchers currently feel it is either possible or desirable to actually make a human this way. The purpose of building an “artificial embryo” like this – or at least, a structure roughly resembling an embryo – is for fundamental research. It can supply a means of studying early human development that is not possible, or anyhow not permitted, with real embryos. In this way, we might learn more about what goes wrong – why so many embryos spontaneously abort – as well as what goes right in the in utero growth of humans.

Now that methods exist for culturing embryos at least right up to the 14-day limit legally imposed in several countries, our relative ignorance of what directs embryo growth in the second and third weeks of development has started to look more glaring. “Synthetic embryos” or embryoids offer an alternative way of gathering knowledge about this critical stage of growth that doesn’t conflict with ethical and legal restrictions. An added attraction of embryoids as a tool for basic research is that they might be tailored to suit the problem under investigation: refined, say, to supply a realistic model of how germ cells form or how the gut takes shape. You can keep parts of the picture quite vague and sketchy and just put in the detail you’re interested in.

Embryoids have so far generally been made from embryonic stem (ES) cells rather than iPSCs. It has been known for over a decade that these cells alone can become somewhat embryo-like: in the right kind of culture medium, small clusters of them will spontaneously differentiate to form the three-layer structure that precedes gastrulation: the ectoderm (the progenitor of skin, brain and nerves), mesoderm (blood, heart, kidneys, muscle and other tissues) and endoderm (gut). There, however, the process typically stops: with a simple ball of concentrically layered cell types, endoderm outermost. In a normally developing human embryo, this triple layer goes on to develop the primitive streak and make the gastrula – the first appearance of a genuine body plan. For that to happen, the embryo needs to be implanted in the uterus wall.

In 2012, a team of researchers in Austria showed that implantation can be mimicked in a crude way by letting embryoid bodies made from mouse ES cells settle onto a surface coated with collagen, which can stand proxy for the uterus wall. Once the embryoid bodies are attached, their concentric, layered structure changes to a shape with bilateral symmetry: with a kind of front and back. What’s more, some of the cells start to develop into heart muscle. The embryoid begins to look a little more like a true in utero embryo.

Still, the cells can’t be fooled for long. They won’t continue organizing themselves unless they get all the right signals from their surroundings. Pretty soon they discern that there’s no real uterus wall after all, and no placenta either. So they end up looking like a very crude gastrulated embryo – an infant’s abortive attempt to make a figure out of clay, the features barely recognizable.

Here is where it pays to do a bit of assembly by hand: to add the tissues that the embryoids require if they are going to develop further.

The simplest recipe involves just two types of cell: pluripotent ES cells, and the cells that give rise to placenta, called trophoblast cells. It’s these pre-placental cells that deliver key signals to ES cells in utero inducing them to take on the shape of a pre-gastrulated embryo. In 2017, Magdalena Zernicka-Goetz and her colleagues in Cambridge used this two-component recipe to create a mouse embryoid. Normal in utero embryos have a third type of cell too: the primitive endoderm cells, which form the yolk sac and supply signalling molecules needed to trigger the formation of the central nervous system. But as a first step, Zernicka-Goetz and her colleagues figured that the gel they used as the culture medium, infused with nutrients, might act as a crude substitute for the primitive endoderm: a kind of scaffold that would hold the embryoid in place as the trophoblasts did their job. They found that a blob of ES and trophoblast cells organize themselves into a kind of peanut shape, one lobe composed of a placenta-like mass and the other being the embryo proper. After a few days, the embryonic cells developed into a hollow shape, the central void mimicking the amniotic cavity that forms in a normal embryo.

Zernicka-Goetz and her colleagues found that the patterning in these embryoids is dictated by the same genes that are responsible in actual embryos: for example, the morphogen called Nodal, which creates a kind of body axis around which the pseudo-amniotic cavity opens up, and the morphogen BMP, which triggers the emergence of the primordial-germ-cell-like cells. It’s just like real embryogenesis: a progressive unfolding in which each step of the patterning process literally builds on the last. Get one stage of the shaping right, and you produce the conditions to turn on another set of morphogen genes, creating the chemical gradients for the next step of the process.

What’s more, the researchers found that some of the ES cells in the embryoid start to differentiate towards particular tissues – not just the mesoderm that forms many of the internal organs but also cells that look rather like primordial germ cells, which go on to form sperm or eggs.5 That’s particularly exciting, because it raises the prospect of being able to make artificial gametes not by painstaking reprogramming of isolated stem cells but by housing them in a synthetic embryo-like body, which might be expected to make the process easier and more like that in normal embryos. Making fully functional germ cells this way, however, is still a speculative idea.

There’s a limit to how far you can get this way. Sooner or later, the embryoid is going to figure out that it’s not in a real womb but just a blob of gel. At that point, it will metaphorically stamp its foot and say, I’m stopping right here.

No one knows how long you can postpone this moment of protest. But it can be delayed by supplying the missing primitive endoderm cells themselves as part of the recipe, rather than just mimicking them crudely with the gel matrix. Zernicka-Goetz and her colleagues find that such three-component embryoids, which they make as clumps of the respective cells floating freely in a nutrient solution, truly resemble embryos entering the process of gastrulation. One of the key signatures of that process is that the layers of cells around the “amniotic” cavity turn into so-called mesenchymal stem cells, which in a normal embryo will move about and form the mesoderm: the progenitor cells of the internal organs. The three-component embryoids show these characteristic steps of a transition to mesenchymal cells and formation of mesoderm.

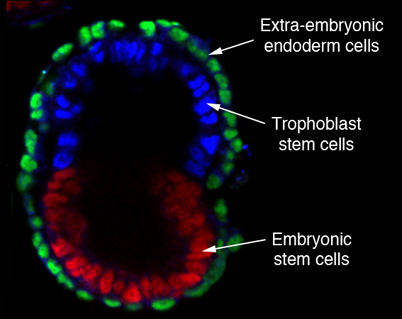

A mouse embryoid made by assembling embryonic stem cells with two types of extra-embryonic cell: the trophoblasts that would form a placenta, and extra-embryonic endoderm to make a yolk sac. The cells organize themselves into an embryo-like structure with an internal cavity.

There’s no obvious reason why a patchwork embryoid of this sort, given all the right cells and signals, should not keep on growing and reshaping itself further. If we could prolong the deception, as it were, that an embryoid is in utero, could it get to something like a fetal stage? Might we, alternatively, assemble cells from scratch into an embryoid that looks enough like a pre-implantation blastocyst to be implanted and grown in a uterus, making a person not only without sexual intercourse but without even sperm or egg?

Well, bear in mind that most of the embryoid work I’ve described so far used mouse cells. Many of the basic processes in early mouse embryogenesis are the same as those in humans, but development becomes very different even at the stage of gastrulation: the mouse gastrula looks quite unlike the human one. Yet it’s not obvious that there should be any fundamental obstacle to making human embryoids of at least this level of complexity. Human trophoblast cells – the vital ingredient for getting a placenta-like signal – aren’t easy to make, but that was achieved in 2018. What’s more, human trophoblasts have been grown into organoids that mimic a real placenta, raising the possibility of an in vitro tissue that can nurture embryoids as a stand-in for the maternal environment. And a team led by Jianping Fu at the University of Michigan has already grown human embryonic stem cells by themselves into embryoids that can recapitulate some of the stages involved in the formation of the amniotic sac.

We saw earlier that mere clumps of human ES cells can, in the right conditions and given the right biochemical signals, develop layered structures reminiscent of those in a pre-gastrulated embryo. With a bit more help, they can get even further. Ali Brivanlou of the Rockefeller University and his collaborators have shown that, if these rather crude human embryoids are exposed to a morphogen protein called Wnt, first identified in fruit-fly embryology (the W stands for “wingless”, describing a mutation it can cause), they can grow into a shape resembling a primitive streak, marking the onset of gastrulation.

That’s very striking. We saw that the development of a primitive streak in the in utero human embryo is taken as a rough-and-ready signature of “personhood” for the purposes of regulation: it’s the basis of the 14-day rule. Does a feature like this in a “synthetic” embryoid qualify it for any comparable status? But there’s more: Brivanlou and colleagues found that a combination of Wnt and another protein called Activin takes matters still further: it can induce in the embryoid the appearance of a group of “organizer” cells, of the kind that define the key axes for body formation. To show that this is genuinely an organizer, the researchers grafted the human embryoids onto chick embryos, and saw that the embryoid influences the growth of the chick embryo by introducing a new axis of development and triggering the appearance of progenitor cells to neurons – just as one would expect for an organizer that orchestrates the formation of the central nervous system.6 It really does seem, then, as though these manipulations of human embryonic stem cells are reproducing some of the key early stages in the emergence of the body plan. Such results lend credence to the claim by stem-cell biologist Martin Pera of the Jackson Laboratory in Maine that “there is no reason to believe that there are any insurmountable barriers to the creation of cell culture entities that resemble the human post-implantation embryo in vitro.”

Perhaps the barriers are not technical but conceptual. We need to ask: what sort of beings are these?

* * *

Embryoids are not exactly synthetic versions of the equivalent structures in normal embryogenesis. Their circumstances are different, and therefore so are some of their fine details. Like my mini-brain, they are both like and unlike the “real thing”. None yet has the slightest potential to continue its growth in vitro towards a baby animal. They are a class of living things in their own right. Anticipating that the very rudimentary embryoid structures so far created with human cells will evolve towards the more advanced forms made from mouse cells, synthetic biologist George Church of Harvard University has proposed that we call this family of existing and prospective living objects “synthetic human entities with embryo-like features”, or SHEEFs.

It may or may not catch on, but the rather cumbersome term summarizes what matters. The trajectory of this book has been precisely towards a conjunction of those first three words. These structures are synthetic because nature alone would not produce them. They are human to the extent that all the cells have human DNA. And they are entities because … well, because it is hard to find another word to lodge between the cell and the fully developed organism, granting individuality while remaining agnostic about selfhood.

It’s true, albeit a rather banal truth, that SHEEFs sound like something out of Amazing Stories. Yet their possibility has been staring us in the face ever since we first granted cells the status of autonomous living things. We humans are a particular arrangement that those living things can adopt. A very special arrangement, to be sure, because it is the one evolution has conferred on the totipotent human cell in its normal environment of division and growth. But there is no reason to think it is unique. And embryoids don’t have to fully recapitulate normal development, or adopt the same shapes as normal embryos, in order to be useful research tools.

Most researchers in the field recommend a ban on the use of embryo-like entities made from stem cells for reproductive purposes. But even if this were not to mean “growing a human”, research on ordinary human embryos is much more tightly constrained than that – in particular by the 14-day rule observed in many countries. It’s not clear, though, how or if that might apply to embryoids, given that they have no obligation to follow a natural developmental pathway. The 14-day limit is dictated by the appearance of the primitive streak, which will eventually become the central nervous system. But an embryoid might be tailored so that it reproduces some aspect of development normally evident only later in embryogenesis, without having to make a primitive streak first. It might never develop a primitive streak. But if so, how can we decide on a time limit for its growth?

These entities alter our view of the entire developmental process. Previously, it was seen as a single highway, with checkpoints all travellers must pass. But as Church and his colleagues have argued, SHEEFs change the highway to a network of many paths. We can take new routes, perhaps avoiding the conventional route markers altogether, and perhaps reaching new destinations. No one knows quite what is possible.

Partly for that reason, there is no consensus on how to legislate research on embryoids and SHEEFs. It’s not just that their status is ambiguous; there is no standard form for an embryoid, any more than there is for an aeroplane or a television. They are put together however we want them, and the cells work with what they are given. “It is unclear at which point a partial model [of the embryo] contains enough material to ethically represent the whole,” said a group of experts in this field at the end of 2018.

Throw into this pot the option of genome editing – which I will come to shortly – and the possibilities become dizzying, some might say terrifying. What if we found a way to make a human embryoid lacking the genes to make a proper brain, but which could nevertheless develop into a fetal body containing nascent organs that might be used for transplantation? Or, supposing some low-level brain functions to be needed for effective maintenance of the body, maybe we could engineer an embryoid with a “minimal” brain, lacking the regions and functions needed for pain or sentience? These are fanciful visions, but they are not obviously absurd. More to the point, they illustrate how hard it might become to develop clear moral and ethical reasoning about embryoids and SHEEFs. We can probably agree that SHEEFs capable of pain or sentience cross some moral boundary. But if they lack that capacity, are they really moral beings at all, even if made of human cells and vaguely humanoid in shape? I can’t articulate a logical argument for why it would be morally wrong to create such entities, but the idea makes me uneasy to say the least. Would it make things any better if they were designed not to look troublingly humanoid? There’s an urgent need to start the discussion now, because the science is likely to catch up sooner than we might imagine.

* * *

Even some of the more accessible and, from some angles, rather innocuous reproductive technologies that IVF has made possible are unsettling traditional notions of what is entailed in making humans. Take mitochondrial replacement. Mitochondria, we saw earlier, are organelles in our cells that generate the energy-rich molecules used in most enzymatic processes – they are, if you like, the furnaces of metabolism. Uniquely among the cell’s compartments, they have their own dedicated genes, separate from those on the 23 chromosomes; that’s one of the reasons to believe that the mitochondria are relict forms of what were once separate single-celled organisms (see here). Some mutant forms of the mitochondrial genes can give rise to so-called mitochondrial diseases, which can be highly debilitating and even life-threatening.7 As mitochondria are inherited only from the mother, diseases arising from defective mitochondrial genes can be passed on down the maternal line.

Mitochondrial replacement therapies aim to avoid inheritance of these kinds of mitochondrial disease. There are various approaches, but all involve transplanting the chromosomes of an affected egg – perhaps after being fertilized by the biological father – into an egg donated by a woman with healthy mitochondria from which the chromosomes have been removed. If this zygote then develops into an embryo, its genes all come from the biological mother and father except for those in the mitochondria, which come from the egg donor. Insofar as it involves the transfer of chromosomes into a “de-chromosomed” egg, the technique uses the same kind of manipulation of cells as in cloning by nuclear transfer (see here).

Mitochondrial replacement has become possible thanks to IVF: the manipulations, including fertilization, all happen in a petri dish. Alison Murdoch at Newcastle University, whose group has pioneered the technique, says that “if we had not had the prior experience in [in vitro] human embryology … we would probably never have been able to achieve this.”

The treatment has been highly controversial, partly because of questions about long-term safety that are almost impossible to answer with certainty for such a new medical technique. Replacing the mitochondria also amounts to a genetic alteration of the “germ line”: if the embryo is female then this genetic change will be transmitted to any future female offspring. Even though it’s hard to see what objection there could be to eliminating a thoroughly nasty disease throughout the subsequent generations, there are good reasons for scientists to proceed very cautiously with any genetic alteration that affects the germ line. All the same, the method was approved for use in the UK by the Human Fertilisation and Embryology Authority (HFEA) at the end of 2016, and in early 2018 the authority granted a licence to a Newcastle hospital for use of the method in IVF for two women with mitochondrial disease mutations. An apparently healthy child has already been born from the technique in a clinic in Mexico, supervised by a doctor from a fertility clinic in New York.

Some of the opposition to mitochondrial replacement has been driven not by concerns about safety or the wisdom of germ line alteration but by accusations of “unnaturalness”. Because the genetic material in babies conceived this way would have a small component – the 37 genes in the mitochondria – that comes from the egg donor rather than the biological mother and father, such infants have been dubbed “three-parent babies”. Everything that feels unsettling about new reproductive technologies is crystallized in that term: it suggests a runaway can-do science that has far outstripped our conventional moral boundaries and categories, permitting biological permutations that our instincts tell us should not be possible. By permitting such creations, critics say, we aren’t just playing God but exceeding the bounds of nature, tinkering with the natural order “just because we can”.

It’s a perfect illustration of the use of narrative to frame – here in both senses – the science. “Parent” joins a list of words like “sex”, “person” and “life” that have strong cultural resonance while being rather hazy in any scientific sense. All the emotive power of “parenthood” is here being channelled onto a handful of genes, in order to embed a biological procedure within a particular story, for a particular agenda.

For the fact is that when parenthood, with all the associations of kinship, responsibility and nurture, becomes reduced to a tiny strand of DNA that orchestrates some of the biochemistry within a specific cell organelle, anyone who has ever parented a child8 has cause to feel affronted. So too do the families in which there are already effectively three or more parents, whether through step-parenting, same-sex relationships, adoption or whatever. Like the “test-tube baby”, the “three-parent baby” is not a neutral description but a label designed to convey a particular moral message and invite a particular response. It’s up to us whether we accept it.

* * *

Which brings us to perhaps the most notorious bogey, the Huxleyan worry-doll, of the age of IVF: the designer baby. As the technology was taking off in the late 1960s, Time’s medical writer David Rorvik described the future according to reproductive biologist E. S. E. Hafez:

[He] foresees the day, perhaps only ten or fifteen years hence, when a wife can stroll through a special kind of market and select her baby from a wide selection of one-day-old frozen embryos, guaranteed free of all genetic defects and described, as to sex, eye colour, probable IQ, and so on, in details on the label. A colour picture of what the grown-up product is likely to resemble, he says, could also be included on the outside of the packet.

As ever, a glance back puts our present-day fears in perspective. It’s remarkable both how little the popular, commercially inflected image of designer babies has changed over the past four decades and how little real progress there has been towards it.

Here’s the premise. Since the 1970s, it has become possible to edit genomes: to excise or insert genes at will, sometimes from different species entirely. What then is to stop us from creating an IVF embryo and tampering with its genes to alter and enhance the traits of the resulting child? Might we tailor her (for we can certainly select the sex) to have flame-red hair and green eyes, to be smart and athletic, full of grace and musical ability? (The stereotypical wishlist for discussing designer babies is generally along lines like these.)

Let’s first have a look at what is possible.

Genome editing9 has become rather routine in biotechnology. Organisms modified this way – especially bacteria – are used industrially for producing useful chemicals and drugs. In one especially striking (though not very commercially important) example, goats have been genetically modified with the genes responsible for making spider silk so that they secrete the silk proteins in their milk.

In bacteria it’s not necessary to modify the cell’s genome; one can simply inject additional DNA with the requisite gene, which the cell’s transcription machinery will process in the usual manner to produce the desired protein product. But editing the genomes of organisms like us to change or restore the function of a gene is generally a cut-and-paste job: excising one gene, or part thereof, and inserting another. This demands tools for snipping the DNA in the right place, removing the gene fragment, and splicing in the new piece of DNA.

Molecular scissors and splicers that work on DNA have existed for decades – there are natural enzymes capable of these things that can be adapted for the job. But a technique called CRISPR, developed in 2012 largely by biochemists Emmanuelle Charpentier, Jennifer Doudna and Feng Zhang, has transformed the field because of the accuracy with which it can target and edit the genome. CRISPR10 exploits a family of natural DNA-snipping enzymes in bacteria called Cas proteins – usually one denoted Cas9, but others are finding specialized uses too – to target and edit genes. Bacteria have evolved these enzymes as a defence against pathogenic viruses: the enzymes can recognize and remove foreign DNA inserted by the viruses into bacterial genomes. The targeted section of DNA is recognized by a “guide RNA” molecule carried alongside Cas9: the sequence of base pairs on the DNA complements that of the RNA. In other words, the RNA programmes the DNA-snipping Cas9 enzyme to cut out a particular sequence – any sequence you choose to write into the RNA.
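The matching rule between guide RNA and DNA can be sketched in a few lines of Python. This is only a toy illustration of base-pair complementarity, not a model of real CRISPR: the function names and the ten-letter sequences are invented for the example, and it ignores everything else the Cas9 enzyme needs in order to bind and cut.

```python
# Watson-Crick pairing between an RNA base and the DNA base it binds to.
RNA_TO_DNA_PAIR = {"A": "T", "U": "A", "G": "C", "C": "G"}


def dna_target_for(guide_rna: str) -> str:
    """Return the DNA stretch (read left to right) that pairs with the guide RNA."""
    # Paired strands run in opposite directions, so complement each base
    # and reverse the order.
    return "".join(RNA_TO_DNA_PAIR[base] for base in reversed(guide_rna))


def find_target_sites(dna_strand: str, guide_rna: str) -> list[int]:
    """List every position on the DNA strand where the guide would pair up."""
    target = dna_target_for(guide_rna)
    sites = []
    start = dna_strand.find(target)
    while start != -1:
        sites.append(start)
        start = dna_strand.find(target, start + 1)
    return sites


# Hypothetical sequences, made up purely for illustration.
guide = "GACUUCAGGA"
strand = "TTACGTCCTGAAGTCAAGG"

print(dna_target_for(guide))             # TCCTGAAGTC
print(find_target_sites(strand, guide))  # [5]
```

Changing the letters in `guide` changes which stretch of `strand` is found, which is the sense in which the RNA "programmes" the enzyme.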

CRISPR is far more accurate, as well as cheaper, than previous gene-editing techniques. If it proves to be safe for use in humans, then gene therapies – techniques for eliminating and replacing mutant genes that cause some severe, mostly very rare diseases – might finally become possible after decades of rather fruitless effort. The method could potentially supply a powerful way to cure diseases that are caused by mutations of one or a few specific genes, such as muscular dystrophy and thalassemia. Clinical trials are now underway.

Gene-modified embryos and babies are another matter. Gene therapies aim to alter genes in somatic cells, but any changes to genes made in an embryo at the outset will be incorporated into the germ line and passed down future generations. As we saw earlier, scientists are rightly hesitant about introducing changes to the germ line. What’s more, if the editing process makes any other inadvertent alterations to the genome at this early stage in development, those changes will be spread throughout the body as the embryo grows.

CRISPR has been used to genetically modify human embryos, purely to see if it is possible in principle. The first results, obtained in 2015 by a team in China, were mixed: editing worked, but not with total reliability or accuracy. The following year, Kathy Niakan of the Francis Crick Institute in London became the first (and so far the only) person to be granted a licence by the Human Fertilisation and Embryology Authority in the UK to use CRISPR on human embryos. Her group had no intention to modify embryos for reproductive purposes, which remains illegal in the UK. Rather, they studied embryos just a few days old to find out more about genetic problems in these early stages of development that can lead to miscarriage and other reproductive problems.

Then in 2017, an international team led by reproductive biologist Shoukhrat Mitalipov at the Oregon Health and Science University reported the use of the CRISPR-Cas9 system to snip out a disease-related mutant form of a gene called MYBPC3, which causes a heart muscle disorder, in single-celled human zygotes, and replace it with the healthy form. They fertilized eggs with sperm carrying the mutant gene, injected the CRISPR system into the zygotes to replace the mutant gene with the properly functional form, and analysed the resulting embryos at the four or eight-cell stage to see how well the gene transplant had succeeded. In most cases, the embryos had only the “healthy” gene variant.11 Unlike the Chinese experiments, these ones involved embryos that could in principle continue to grow if implanted in a uterus, although that was never part of the plan.

Indeed, the use of CRISPR for human reproduction is forbidden in all countries that legislate on it, and is almost wholly rejected in principle by the medical research community. Even research on genetic modification of human embryos that does not have reproductive goals cannot legally receive federal funding in the United States, although privately funded research (like Mitalipov’s work) is not banned. And in 2015, a team of scientists warned in Nature that genetic manipulation of the germ line by methods like CRISPR, even if focused initially on improving health, “could start us down a path towards non-therapeutic genetic enhancement”.

Until the end of 2018, most researchers in this field felt confident that there was no prospect of genome-edited babies any time soon, given the risks and uncertainties of CRISPR genome editing in human reproduction and the almost unanimous view among researchers that it should not be tried until much more was understood, if at all. They were shocked and dismayed, then, when a Chinese biologist named He Jiankui, affiliated to the Southern University of Science and Technology in Shenzhen, announced at a press conference in Hong Kong in November that he had used the method to modify IVF embryos and implanted them in women, one of whom had already given birth to twins.

The story was mysterious, bizarre and disturbing. He said that he had used CRISPR to alter a gene called CCR5, which is involved in infection of cells by the AIDS virus HIV. If the gene is inactivated, that could hinder the virus from entering cells. His aim, he said, was to produce babies resistant to HIV, so that couples who carry the virus might have children without fear that AIDS would be transmitted to them. (After a history of denial, China now recognizes that AIDS is a problem of epidemic proportions.)

He Jiankui offered few details, but it seemed he had added the molecular machinery of the CRISPR-Cas9 system to the embryos after fertilization, and had removed cells from them a few days later for genetic testing to see if the CCR5 gene had been successfully modified. If so, the couples were offered the choice of using either edited or unedited embryos for implantation. He said that a total of 11 embryos were used for six implantations before a (non-identical twin) pregnancy was achieved. In the press conference, he merely stated that “society will decide what to do next”.

He Jiankui provided no documented evidence to back up his claims, nor did he say where the work was done or with what funding. But although stem-cell and embryological research has been plagued with fraudulent claims, this one seemed genuine. He was an academic with a respectable pedigree, having studied in the United States at top universities. His adviser at Rice University in Houston, American bioengineer Michael Deem, admitted to collaborating on the work, though it was done in China.

The work was almost universally condemned as deeply unethical and unwise by specialists around the world. Over one hundred Chinese scientists put out a statement denouncing it and saying that it would damage China’s reputation for responsible research in this field (a reputation that, contrary to what is sometimes asserted, is largely justified). “The experiment was heedless,” wrote cardiologist Eric Topol of the Scripps Research Institute in California. “It had no scientific basis and must be considered unethical when balanced against the known and unknown risks.” Jennifer Doudna, one of the inventors of the CRISPR technique, issued a statement saying, “It is imperative that the scientists responsible for this work fully explain their break from the global consensus that application of CRISPR-Cas9 for human germline editing should not proceed at the present time.” He’s university denied knowledge of the work (although it now seems possible that he used state funding to conduct it) and has now fired him, while authorities at Rice began an investigation into Deem’s involvement. He’s work is now under investigation by the Chinese authorities, and he could eventually face criminal charges if he is found to have subverted ethical rules or compromised the babies’ health. Several leading scientists in the field have now called for “a global moratorium on all clinical uses of human germline editing”.

In her statement, Doudna added that there was an “urgent need to confine the use of gene editing in human embryos to cases where a clear unmet medical need exists, and where no other medical approach is a viable option.” That was not the case here: the embryos that He Jiankui treated had no known intrinsic genetic defect, but were modified in anticipation of HIV infection. It wasn’t clear that the strategy would be effective anyway: one of the twins in the pregnancy apparently acquired only one copy of the altered CCR5 gene, and so would not get complete resistance to HIV. Besides, there are other ways of treating HIV infection. What’s more, knocking out the CCR5 gene brings dangers in itself: a lack of the functioning gene increases susceptibility to some other viral infections. All in all, it was a barely explicable choice for a first use of CRISPR in human reproduction, quite aside from the unknown risks. To make matters worse, the work seemed to have been done shoddily: as more details emerged, it became clear that there had been some off-target genome modification, with unknown consequences.

The eyes of the world will be on these two children (both girls). They are the proverbial guinea pigs, facing an uncertain future due to the recklessness of maverick researchers.

As Doudna and others realized, this headline-grabbing and irresponsible act would only damage the prospects for potentially valuable uses of the technology. For there is no obvious reason why genome editing for human reproduction should be forever ruled out of court. In those relatively rare cases where a debilitating disease is caused by a single gene, and the consequences of replacing a faulty with a healthy version can be reliably predicted, there may be a place for it eventually in reproductive medicine – although it is far from clear that it would be a better option than alternative strategies for tackling the medical problems it would seek to address.

After all, as with mitochondrial diseases, eliminating such diseases from the germ line seems an unqualified good. Why would you not want to ensure that not only a person grown from the modified embryo but also their offspring are free of the disease? Some of the qualms arise from the irreversible nature of the change – what if it turned out that the excised gene mutant, as well as creating a disease risk, had some beneficial value, in the way that the gene variant that causes sickle-cell disease when on both chromosomes can confer some resistance to malaria when on just one? But in the unlikely event of a gene that causes some serious disease turning out to have unforeseen benefits too, genome editing is not irreversible: a mutant gene can be restored as well as removed. In February 2019 the World Health Organization established a committee to draw up guidelines for human genome editing, which will doubtless wrestle with questions like these.

The new techniques of cell reprogramming and repurposing expand the possibilities for genome editing. “Pretty much any genetic modification could be introduced in iPSC lines derived from somatic cells using CRISPR,” says stem-cell scientist Werner Neuhausser. The generation of “artificial gametes” or even embryos from somatic cells would open doors in reproductive technologies because they could make the safety margins wider. If those methods created a ready supply of human eggs for IVF, one could carry out the editing procedure on many eggs or embryos at once and pick out instances where it worked well – there would be slack in the system to accommodate a bit of inefficiency and mistargeting. At any rate, bioethicist Ronald Green believes that human genome editing “will become one of the central foci of our social debates later in this century and in the century beyond.” For better or worse, the headline-grabbing antics of He Jiankui may well have launched us on that trajectory sooner than anyone expected.

* * *

Would embryos genome-edited to prevent disease be “designer babies”? That would seem a perverse use of the pejorative label. It is supposed to convey a sense that the babies are luxury items – accessories that feed our vanity. But disease prevention is no luxury.

More often, the term is invoked in discussions about what we might call the positive, non-medical selection of traits – not eliminating undesirable genes that create health risks, but selecting ones that will make a person smarter, better looking and better performing.

But predicting traits like these from genes is far more complicated than is often assumed. Talk of “IQ genes”, “gay genes” and “musical genes” has led to a widespread perception that there is a straightforward one-to-one relationship between our genes and our traits. In general, it’s anything but. As we saw earlier, most of our traits, including aspects of personality and health, emerge from complex and still largely inscrutable interactions between many genes, most of which have negligible impact on that trait individually. Intelligence, as measured by current metrics such as IQ (and we can argue elsewhere about their merits), is significantly heritable, meaning that it has genetic roots. Typically around 50 to 70 per cent of a person’s “intelligence” is thought to be due to genetic factors. But there are no “intelligence” genes in any meaningful sense; the many genes that influence intelligence doubtless serve other roles (often connected to brain development).

Scientists have got much better in recent years at being able to make predictions about intelligence from a person’s genome sequence, largely because of the availability of more data from many thousands of individuals. Given enough data, it becomes possible to spot even very small correlations between genes and intelligence. But these predictions are and always will be probabilistic. They are along the lines of “your genome suggests that you’re likely to be in the top 10 per cent of the population for IQ/school achievement/exam results”. But environment has an influence too: if a person with a “good genetic profile” has suffered extreme neglect or abuse, or has had a serious head injury, their IQ might be far lower than their genes predict. And even without considering the influence of environment, a given genetic profile for intelligence will have a wide range of outcomes because it only biases, and does not totally prescribe, the way the brain wires up. There is some random “noise” in the developmental programme.

It’s the same for other behavioural traits – creativity, say, or perseverance, or propensity for violence. This goes for many of the more common diseases or medical predispositions with a genetic component too. While there are thousands of mostly rare and nasty genetic diseases that can be pinpointed to a specific gene mutation, most of the common ones, like diabetes, heart disease or certain types of cancer, are linked to several or even many genes, can’t be predicted with any certainty, and depend also on environmental factors such as diet.

So the genetic basis of attributes like intelligence and musicality is too thinly spread throughout the genome to make them amenable to design by editing. You would need to tinker with hundreds, perhaps thousands of genes. Quite apart from the cost and practicality, such extensive rewriting of a genome would be likely to introduce many errors. And because all those genes have other roles, you couldn’t be sure what other traits your putative little genius would end up with: he might be insufferable, sociopathic, lazy. “The creation of designer babies is not limited by technology, but by biology,” says epidemiologist Cecile Janssens at Emory University in Atlanta. “The origins of common traits and diseases are too complex and intertwined to modify the DNA without introducing unwanted effects.” And it would all be for the sake of a probabilistic outcome that might still turn out to be disappointing: the tail end of the bell curve for those with “top 10 per cent IQ” genes reaches well into the realm of mediocrity.

Unfortunately, it is the same with those “many-gene” diseases. Genome editing might help to eliminate a nasty single-gene disease like cystic fibrosis, but probably won’t help much with an inherited propensity to heart disease.

Is the designer baby off the menu then? Not quite.

* * *

Genome editing would be a difficult, expensive and uncertain way to achieve what can mostly be achieved already in other ways. For the fact is that we are already in effect genetically modifying the germ line of babies – not by changing their genomes, but by selecting embryos with particular genes in preference to others.

At present, this is mostly done for what most would agree are good reasons: to avoid serious disease. Couples in which both partners know they are carriers of a gene variant that causes disease – that’s to say, they have just one copy of the gene on their pairs of chromosomes12 – may elect to have a child using the procedure called pre-implantation genetic diagnosis (PGD), which can spot if an embryo inherits the “bad gene variant” from both parents and so will develop the disease. (There is a one in four chance of that: of the four possible combinations of gene variants from the two parents, only one corresponds to a double copy of the “disease” variant.) In PGD, embryos produced by IVF are genetically screened by removing a cell at around the four or eight-cell stage and sequencing its genome. Only recently has it become possible to do this cheaply and quickly enough to make the technique possible. The screening process shows which embryos can be safely implanted.
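The one-in-four figure can be checked by listing the combinations directly. The Python sketch below is a minimal illustration under the textbook assumption that each parent passes on one of their two variants with equal chance; the labels `A` (ordinary variant) and `a` (disease variant) and the function name are invented for the example.

```python
from fractions import Fraction
from itertools import product


def offspring_odds(mother: str, father: str) -> dict[str, Fraction]:
    """Chance of each genotype when each parent passes on one variant at random."""
    # Every pairing of one variant from the mother with one from the father.
    # Sorting the pair makes "aA" and "Aa" count as the same genotype.
    combos = ["".join(sorted(pair)) for pair in product(mother, father)]
    return {
        genotype: Fraction(combos.count(genotype), len(combos))
        for genotype in sorted(set(combos))
    }


# Both parents are carriers: one ordinary copy (A), one disease copy (a).
odds = offspring_odds("Aa", "Aa")
for genotype, chance in odds.items():
    print(genotype, chance)
# AA 1/4
# Aa 1/2
# aa 1/4
```

Only the `aa` embryos inherit the disease variant from both parents; on this simple model the rest are either carriers like their parents or free of the variant altogether.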

PGD is already used in around 5 per cent of IVF cycles in the USA, and in the UK it is performed under licence from the HFEA to screen for around 250 genetic diseases – those in which a single gene is known to cause or present a high risk of incurring the disease, which include thalassemia, early-onset Alzheimer’s and cystic fibrosis.

PGD is not generally thought of as “germ-line genome editing”. But that’s really just because no one elects to call it that. If you want to have a randomly selected grab-bag of sweets with no green ones in, you could either take a single bag and replace the green ones by hand or take a hundred bags and look for ones with no green sweets in them. The end result is the same.

Putting it another way: we already seem to accept that there is nothing wrong in principle with specifying some genetic aspects of the germ line. The discussion is really about what those aspects may and may not be.

For one thing, what counts as a disease? What would selection against genetic disabilities do for those people who have them? “They have a lot to be worried about here,” says Hank Greely, “in terms of ‘Society thinks I should never have been born’, but also in terms of how much medical research there is into their disease, how well understood it is for practitioners and how much social support there is.”

In the UK and some other countries, PGD remains strictly forbidden for any purposes other than avoidance of specified diseases like those listed on the HFEA’s register. It would not be permitted, say, for selecting the sex or hair colour of a child. But around 16 countries, including the United States, do permit sex selection. In these cases, there seems to be no preference for either a boy or a girl. Still the practice is controversial, not least because there are good reasons to think that some cultures would express a strong preference (for boys). Because of traditional attitudes towards gender, abandonment, mistreatment and even infanticide of baby girls have seriously skewed the male-to-female ratios in China and India, despite efforts of governments to promote the equal value of both sexes. The worst affected regions in these countries now face the prospect of social unrest due to large numbers of young men with no marriage opportunities.

Where prejudices about the gender of a child do not seem to exist, however, sex selection for “family balancing” doesn’t seem obviously abhorrent. One might argue that gender should be irrelevant to how a child is valued; but is it so unreasonable for the parents of three boys to wish for a girl next time? Advocates point out that, if any of the folk beliefs about how best to conceive through ordinary intercourse in order to get a boy or girl turned out to actually work, one could hardly justify governments banning them from a couple’s sex life. On the other hand, one might wonder if a child selected for gender would be burdened by an even greater degree of stereotypical expectations than we so often (despite our best intentions) impose on our children. It’s complicated. The possibilities opened up by new medical techniques often are.

At any rate, once embryo selection beyond avoidance of genetic disease becomes an option, as it is already in some countries (the practice is not nationally regulated in the United States), the ethical and legal aspects are a minefield. When is it proper for governments to coerce people into, or prohibit them from, particular choices, such as not selecting for a disability?13 How can one balance individual freedoms and social consequences?

* * *

If there is going to be anything even vaguely resembling the popular designer-baby fantasy, it will come first from embryo selection, not genetic manipulation. “Almost everything you can accomplish by gene editing, you can accomplish by embryo selection,” says Greely.

But “designing” your baby by PGD looks currently unattractive, as well as rather ineffectual. Egg harvesting is painful and invasive, entailing a course of hormones to stimulate egg production and then a very uncomfortable surgical procedure to extract them. And it doesn’t yield many eggs anyway: a typical IVF cycle might produce perhaps 6 to 15, of which around half might become fertilized in vitro to give embryos that look good enough for potential implantation (generally only one or two are returned to the uterus). There’s not a lot of choice. And the success rate for implanted embryos is still typically just 30 per cent or so at best. The procedure is gruelling both physically and emotionally, and expensive too, typically costing £3,000 to £5,000 per cycle in the UK in 2018.

That’s why no one currently chooses IVF unless they have to; it’s not by any stretch of the imagination a fun way to make a human being. But some think that this may change. What if IVF was reliable, cheap and relatively painless? And what if it could allow you to decide which baby you wanted?

That choice would look more meaningful if there were many more options. Even if the outcomes were somewhat uncertain and probabilistic, might people not feel tempted by the prospect of having, say, a hundred embryos to select from, rather than simply accepting whatever fate provides? PGD on such a scale looks ever more possible as genetic screening becomes more affordable. Since the completion of the Human Genome Project, the cost of human whole-genome sequencing has plummeted. In 2009, it cost around $50,000; in 2017, it was more like $1,500, which is why several private companies can now offer this service. In a few decades, it could cost just a few dollars per genome. Then it becomes feasible to think of conducting PGD on industrial-scale batches of embryos.

That’s an uncomfortable conjunction of words, but an apt one if there were some way of obtaining and fertilizing many eggs at once. “The more eggs you can get, the more attractive PGD becomes,” Greely says. One possibility is a once-for-all medical intervention that extracts a slice of a woman’s ovary and freezes it for future ripening and harvesting of eggs. It sounds drastic but would not really be so much worse than current egg-extraction and embryo-implantation methods. And it could give access to thousands of eggs for future use.

But we saw earlier that there could one day be another option that requires no significant surgery at all: the manufacture of eggs in vitro by reprogramming somatic cells into gametes. Greely believes that a confluence of these two new technologies – making artificial gametes from the induced pluripotent stem cells of the prospective parents, and fast, cheap PGD of embryos to identify the “best” – could make what he calls Easy PGD the preferable option for procreating in the near future. He foresees “the end of sex” – not for recreational purposes but as a means of having babies. “The science for safe and effective Easy PGD is likely to exist some time in the next 20 to 40 years,” he says – and he figures that on a similar timescale reproductive sex “will largely disappear, or at least decrease markedly.”

So here are your hundred or thousand embryos, made from in vitro-generated eggs and each with a genetic profile obtained by PGD. What sort of child would you like, madam?

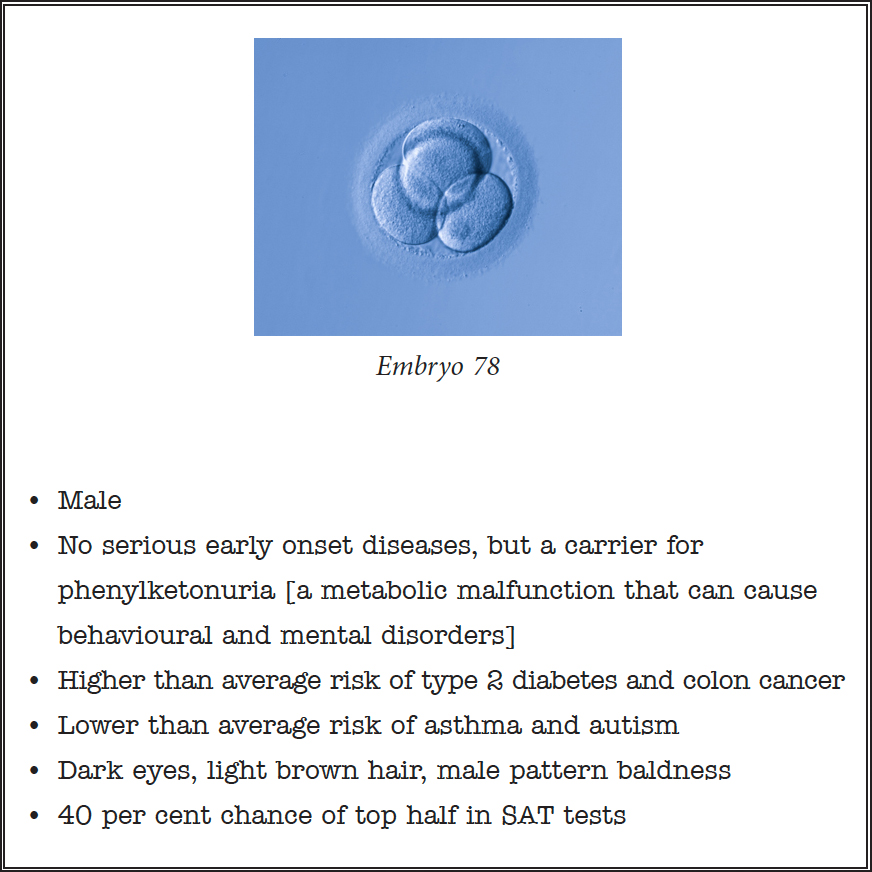

The catch is that these genetic menus will be phenomenally hard to assess and interpret. Some of the outcomes predicted from an embryo’s genome will be definite, some likely, some just vaguely statistical. It might look something like this:

Let’s say you have 200 of these to choose from. Beyond avoiding serious diseases, how on earth do you make that choice? How do you weigh up the pros and cons and balance them against analogous outcomes for another ten, or a hundred, embryos?

You can’t. This is another example of the emptiness of the free-market mantra of choice.

For such reasons, some experts are sceptical of Greely’s forecast of widespread use of PGD, even if it became “easy”. “Where there is a serious problem, such as a deadly condition, or an existing obstacle, such as infertility, I would not be surprised to see people take advantage of technologies such as embryo selection,” says bioethicist Alta Charo. “But we already have evidence that people do not flock to technologies when they can conceive without assistance.” The low take-up rate for sperm banks offering “superior” sperm, she says, already shows that. For most women, “the emotional significance of reproduction outweighed any notion of ‘optimization’.” Charo feels that “our ability to love one another with all our imperfections and foibles outweighs any notion of ‘improving’ our children through genetics.”

Not everyone is so confident. In the next 40 to 50 years, says Ronald Green, “we’ll start seeing the use of gene editing and reproductive technologies for enhancement: blonde hair and blue eyes, improved athletic abilities, enhanced reading skills or numeracy, and so on.” The allegedly HIV-resistant “CRISPR babies” made by He Jiankui are arguably already examples of this.

One factor that might help to spread something like Greely’s Easy PGD is peer pressure. Goaded by the distorted agendas of current education policies, we are being encouraged to regard child-rearing as a competitive horse race in which we are made to feel irresponsible if we don’t seize every advantage. If you’ll invest your savings to move to a new house within the catchment area of a good school, or pay eye-watering private fees, why not a thousand or so more for a spot of genetic tailoring at the outset – even if the benefits are small at best?

Already the bullying rhetoric from gene-analysis companies has begun: “Genetic testing is a responsibility if you’re having children,” Anne Wojcicki, CEO of the US-based genome-sequencing company 23andMe, has asserted. Greely anticipates the kind of sloganeering that will seek to capitalize on these pressures: “You want the best for your kids, so why not have the best kids you can?”

Despite all the uncertainties, ambiguities and conflicting signals of a PGD analysis, it’s not hard to see the temptations. Imagine that you are presented with just two embryos that have been screened by PGD, and you’re told that one has a genetic profile matching the top 10 per cent for intelligence and the other the bottom 10 per cent. All else being relatively equal, which are you going to choose?

This is not a hypothetical scenario. It is already happening. In late 2018, an American company called Genomic Prediction announced that it would offer screening for IVF embryos to identify those whose genetic profiles suggest that a resulting child would have an IQ low enough to be classed as a mental disability. That in itself does not seem obviously any more objectionable than screening for disease or for Down’s syndrome (which lowers IQ). But because it involves measuring the “polygenic” score (the summed effect of a great many genes) for intelligence, the test could just as readily be used to identify potentially high-IQ embryos. Genomic Prediction says it will not permit such uses – but even the company’s co-founder, physicist Stephen Hsu, agrees that the demand will exist and that other companies in the unregulated United States are likely to satisfy it. Some experts objected that the science isn’t (yet) up to supporting such claims – but do you think that will deter customers?
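To make the phrase “summed effect” concrete, here is a minimal sketch in Python of how a polygenic score might be tallied. Everything in it is an assumption for illustration: the variant names, the weights and the two embryos are invented, and nothing here reflects Genomic Prediction’s actual test. Real scores draw on thousands of variants with statistically estimated weights, and the result is a probabilistic indication, not a prediction.

```python
# Illustrative only: these variant names and effect weights are made up.
# They are not taken from any real study or commercial test.
HYPOTHETICAL_WEIGHTS = {"variant_a": 0.25, "variant_b": -0.5, "variant_c": 0.125}


def polygenic_score(genotype, weights=HYPOTHETICAL_WEIGHTS):
    """Add up each variant's weight times the number of copies carried (0, 1 or 2)."""
    return sum(weight * genotype.get(variant, 0) for variant, weight in weights.items())


# Two hypothetical embryos, each described by how many copies of each variant it carries.
embryos = {
    "embryo_1": {"variant_a": 2, "variant_b": 0, "variant_c": 1},
    "embryo_2": {"variant_a": 0, "variant_b": 2, "variant_c": 0},
}

scores = {name: polygenic_score(genotype) for name, genotype in embryos.items()}

# The same list of scores serves either purpose: read it from the bottom to flag
# low scores, or from the top to pick out high ones.
ranked_high_to_low = sorted(scores, key=scores.get, reverse=True)
```

The final line is the crux of the worry: once the scores exist, ranking embryos from the top is no harder than flagging those at the bottom.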

Beyond traits like intelligence, it may well be feasible to select embryos for all-round health. Greely thinks that a 10 to 20 per cent improvement in health via PGD is perfectly feasible. If so, isn’t it reasonable to seek that for your child? Should a government be able to obstruct that choice?

But there are plenty of reasons, beyond safety considerations, to feel wary of permitting this kind of selection. The siren allure of perfection via Easy PGD could drive expectations to pathological extremes. What if the child genetically selected for athletic ability or artistic talent fails to deliver – as some inevitably will, given that such predictions are only probabilistic? You can hear the outrage of the parents: “Do you know what that treatment cost?!”

Some will argue that unequal availability of choice to different socioeconomic sectors of the population could seriously disturb social stability, leading to a “genetic divide” of the kind portrayed in the 1997 movie Gattaca. Greely warns that PGD to boost health, added to the comparable advantage that wealth already brings, could lead to a widening of the health gap between rich and poor, both within a society and between nations.

Yet others will say that prohibition of the reproductive choices that PGD (especially an “Easy” variant) offers is an infringement of rights. Might it not, indeed, be crazy or even immoral, if we have the means to improve the general intelligence of the population by making this choice available to all, to forego that chance?

Yes, it’s complicated. I have my views, and I daresay you will too, but I think it is fair to say that the answers to these questions are not obvious. They demand that we find a balance between personal choice, liberty and morality versus the role of government: how states forbid, regulate or promote technologies. That of course is nothing more than the age-old question of democratic politics – except that now we are talking about how it might impinge on our capacity to shape human nature itself. “For better or worse, human beings will not forego the opportunity to take their evolution into their own hands,” says Green. “Will that make our lives happier and better? I’m far from sure.”

* * *

If you’re seeking to conceive a child with intelligence or good looks, why bother with the lottery of sexual recombination of genomes in the hope of getting the right genes? Why not just make a copy of a smart or attractive person who you know already to have the requisite genetic make-up?

We saw earlier how cloning entails moving a fully formed set of chromosomes from one cell – which can be an adult somatic cell (in somatic-cell nuclear transfer or SCNT) – into an egg that has had its own nucleus removed. The egg is then stimulated in some way – chemical or electrical triggers will do the trick – to develop into an embryo under the guidance of the new chromosomes.

Cloning of animals did not start with Dolly the sheep. As we saw, Hans Spemann achieved it in the 1920s, first by dividing a salamander embryo in half with a fine noose, then using SCNT. Briggs and King did it for frogs in 1955. Sheep were first cloned that way in 1984; the big deal about Dolly was that the transplanted nucleus came from an adult somatic cell. In 2005, Woo Suk Hwang and his team in South Korea were the first to clone a dog, which they called Snuppy.14 Hwang fell from grace soon after, when his claim to have cloned human embryos to generate stem cells was shown to be based on fraudulent data.

We don’t know if cloning is possible for reproductive purposes in humans. The only way to find out for sure is to try it, and that is banned in most countries;15 a declaration by the United Nations in 2005 called on all states to prohibit it as “incompatible with human dignity and the protection of human life”. Yet a kind of human cloning was already conducted back in 1993, when scientists at the George Washington University Medical Center in Washington DC artificially divided human embryos produced by IVF: a kind of induced twinning of the sort that generates identical twins.16 The cells grew into early-stage embryos but didn’t reach the point where they could implant in a uterus. The work caused much controversy, because it wasn’t clear if it had received proper ethical clearance.

Human cloning by somatic-cell nuclear transfer is another matter. The best reason we have so far to think it might be possible is a demonstration in 2017 that the technique works for other primates. Mu-ming Poo and his co-workers at the Institute of Neuroscience in Shanghai made two macaque monkey clones by SCNT, which they christened Hua Hua and Zhong Zhong. The donor cells came from macaque fetuses, not adult monkeys, but the researchers are confident that the latter will eventually work too. Primates have been particularly difficult mammals to clone, for reasons not fully understood, and so the result represented a significant step towards human cloning. The work was conducted not as an end in itself but to create a strain of genetically identical monkeys that could aid research into the genetic roots of Alzheimer’s disease.

Quite aside from the wider ethical issues, safety concerns would make it deeply unwise to attempt human cloning yet. Hua Hua and Zhong Zhong were the only live births from six pregnancies, resulting from the implantation of 79 cloned embryos into 21 surrogate mothers. Two baby macaques were in fact born from embryos cloned from adult cells, but both died – one from impaired body development, the other from respiratory failure.

To construct a scenario where cloning seems a worthwhile option for human reproduction takes a lot of ingenuity anyway. One might perhaps imagine a heterosexual couple who want a biological child, but one of them will necessarily pass on some complex genetic disorder that can’t be edited or screened out – so they opt to clone the “healthy” parent. But there’s more than a hint of narcissism about such a hypothetical choice.

Of course, it’s not hard to think up bad reasons for human cloning – most obviously, the vanity of imagining that one is somehow creating a “copy” of oneself and thereby prolonging one’s life by proxy. That would not only be obnoxious but deluded. Even the idea that a clone will be a “perfect” reproduction of the person who supplies the DNA is mistaken. As we’ve seen, the “genetic programme” of a zygote is filtered and interpreted by the chance and contingency of development, with results that are not entirely predictable. Dolly the sheep was not the spitting image of the ewe from which she was cloned in 1997, and four ewes cloned by the same team at the Roslin Institute in Scotland two years previously from embryonic donor cells were, according to the researchers, “very different in size and temperament”. A clone of Einstein would by no means be a genius of equal measure.

It will take some effort to dislodge the naïve genetic determinism that surrounds talk of cloning, though – and not just because of absurd fantasies like the cloning of Hitler in Ira Levin’s 1976 novel The Boys from Brazil. Scientists will have to mind their language – to desist from calling the genome a “blueprint that makes us who we are”, or (as Francis Collins, the head of the US National Institutes of Health, recently said apropos the CRISPR editing of human embryos) “the very essence of humanity”. That kind of talk is now dangerously misleading.

Claims from mavericks and cults notwithstanding, no human being has been cloned to date. But my feeling is that this will happen eventually. It’s not a prospect I welcome, because (unlike IVF) there does not seem to be any sound justification for it, motivated solely by the mitigation of suffering and the welfare of a person created this way. All the same, if it comes to pass then we should anticipate another “Louise Brown moment”, where we struggle to reconcile a sense of unease at an unfamiliar process of making humans with what is likely to be the evidence that it makes people just like us.

Ronald Green may well be right in principle, if not in terms of timescale, to have suggested in 2001 that within one or two decades “around the world a modest number of children (several hundred to several thousand) will be born each year as a result of somatic cell nuclear transfer cloning.” It is quite possible that, as Green says, within several decades “cloning will have come to be looked on as just one more available technique of assisted reproduction among the many in use.” I would rather see that being done in an open and regulated manner, with proper safeguards, than in remote locations by mavericks and profit-hungry companies who care little for the motives or perhaps even the welfare of their clients. Just because we might be wary of human cloning, that does not mean there is the slightest reason to be wary of a human made this way.

* * *

The possibilities for growing and shaping human beings provided by our new technologies for manipulating cells might seem dramatic, even alarming, but they are rather conservative compared to what some scientists in the early days of the field foresaw.