As my mini-brain in a dish took shape, I found myself recalling the fledgling novel that, many years ago and quite wisely, I buried in a drawer and forgot. It contained a scene satirizing the Grand Guignol of Amazing Stories, in which a crazed doctor is building a gigantic brain, sustained by perfusion of blood, in the basement of a hospital by merging the organs he removes from hapless patients. Ultimately, he plans to house it in an immense, grotesquely designed synthetic head with multiple pairs of eyes, no nose, that sort of thing.

Fear not: this hideous progeny is not about to re-emerge from my bottom drawer. But while Selina Wray does not exactly fit the persona of my deranged Dr Zoback, what she and Chris Lovejoy (oh yes, there was a lunatic henchman too …) had created in the incubators of UCL was in some ways far stranger, and certainly more wonderful, than anything my juvenile mind conjured up. All I was doing back then was toying with an old and hackneyed trope. We find it, for example, in a story by M. M. Hasta called “The Talking Brain” from a 1926 issue of Amazing Stories, which was directly inspired by Alexis Carrel’s work. “If a heart could be kept beating in a bottle for years at a time,” asks Hasta’s obsessed scientist Professor Murtha, “why should not a brain be kept thinking in a bottle forever?”

The reader can, of course, immediately think of several good reasons why not. But such considerations don’t stop Murtha from removing the brain of a student who was severely injured in a car crash and placing it in a wax head. Murtha wires up the perfused brain so that it can communicate using Morse code, and … well, you can guess, can’t you? It says:

This place is more terrible than you can know. Set me free. Kill me or let him [Murtha] kill me. Now. Now. Now. Now. Now. Now. Now.

I don’t know if Roald Dahl ever read Hasta’s story, but he was of course perfectly capable of thinking up that fiendish scenario on his own. It appears in Dahl’s short story “William and Mary”, published in 1959 and later used for an episode of the TV series Roald Dahl’s Tales of the Unexpected. William is a philosopher who knows he will shortly die of cancer. He is approached by a doctor who offers to preserve his brain after death, hooked up to an artificial heart and to one eye, like a nightmare version of Carrel’s experiments. William agrees to this proposal, and all goes ahead, William’s wife Mary having agreed to look after the disembodied assembly of organs her husband has become. But Mary has plans. After a lifetime of boorish domination by her husband, who forbade her to smoke or to get a television, she now intends to do those very things with the helpless brain looking on. The lone eye stares at her, somehow registering fury; she calmly blows smoke in it. “From now on, my pet, you’re going to do just exactly what Mary tells you,” she purrs. “Do you understand that?”

But there are more uses for a “brain in a jar” than making the skin crawl. The image has long tantalized philosophers, who weave it into scenarios through which to examine belief and scepticism. They used to regard this as a mere (forgive me) thought experiment. But thought experiments have a habit of turning into real ones.

* * *

Nothing about the brain quite makes sense to our intuition. Here is a surgeon holding in her hands a human brain freshly removed from its cranium, and it seems like so much offal from a butcher’s slab, a labyrinthine blancmange with bloodied crannies. (Not until the formalin preservative gets to work does the tissue attain the rubbery consistency of the specimen jar.) The mere pressure of a finger will dent the juddering tissue.

And yet in that bland mass was once a universe and a lifetime. Everything that person knew and felt and experienced – the sound of breakers on a tropical beach, the taste of roast chestnuts, the pain of a mother’s death – was locked within those membranes, coded in electrical patterns travelling within a substance about as different from our typical image of an information-processing device as it could be. You can’t tell me there is not something mysterious about this.

The brain conjures up worlds with the skill of a master illusionist. I can tell myself that the keyboard I see before me, the street outside the window, are the objective world – but their colours, their distinctness and perspective, every aspect of what I perceive them to be, are constructed inside my head. I could never convince myself to fully accept that truth. I can’t even talk about it coherently; here I am creating some little homunculus that, like the Beano’s Numskulls, lives in my brain and is fed sensory data by the blobs of grey matter. The brain is the ultimate philosophical puzzle. This is the seat of personhood, yet it’s not clear who is to be found there, or where they are.

We can’t help imagining that within the soft clefts of the human brain somehow resides the person themselves – or at least clues to what made them who they were. Albert Einstein’s brain, removed by pathologist Thomas Stoltz Harvey after the physicist’s death in Princeton in 1955, was cut into slices and preserved. Harvey himself hoarded some of those fragments. Others have now found their way into museums, where they have become macabre emblems of genius akin to the alleged somatic relics of saints. Claims abound, some coming from serious neuropathologists, about why Einstein’s brain was anatomically “special”. But the truth is that everyone’s brain is likely to show some deviations from the norm, and attributing specific abilities to this or that variation in shape, size and cellular structure of brain tissue can be a hazardous enterprise. We don’t know what made Einstein intellectually exceptional, but it’s not obvious that answers can be found by rummaging around in his preserved grey matter.

But even if you can’t make deductions about character or genius from the general topology of the brain, still there is some truth in the notion that our brain makes us what we are. The brain gets hard-wired as we develop, guided (but not prescribed) by our genes, and from someone’s genetic profile you can make meaningful predictions about likely character traits. But as we saw earlier, these are probabilities, not destinies. Brain development is contingent on the sensitivities of this complex genetic machinery to random noise and accidents of growth – you can’t tell exactly how it will turn out.

The brain is a modular organ: certain behaviours can be linked to physical features of different brain regions. That’s why damage to specific areas can have very particular consequences for cognitive function. There are clear, albeit minor, anatomical differences between male and female brains, although what that might imply for putative behavioural differences is still debated. And atrophy of specific brain regions can result in highly specific symptoms. Dementia, for example, is not just about generalized loss of memory; there are many types, with distinct symptoms linked to the parts of the brain that are affected. Primary progressive aphasia (PPA), produced by deterioration of the frontotemporal lobes, affects aspects of semantic processing: how we label concepts, or our ability to retrieve those linguistic tags. For some people living with PPA this manifests as a struggle to connect words to sound production. But if the neurodegeneration of PPA happens a little further back in the part of the brain (the temporal lobes) where language is processed, what gets impaired is not the forming of words but accessing their semantic content.

In contrast, posterior cortical atrophy (PCA), a variant of Alzheimer’s disease, commonly affects spatial awareness and can lead to disorientation, visual illusions and coordination problems. The experience can be akin to that moment when we’re unable to parse what we see: is that a face, or just the folds in a piece of fabric? Is that object near or far? One man with PCA who I spoke to during the Created Out of Mind project that spawned my “brain in a dish” told me how the piano keyboard he was playing seemed on one occasion to rise up a couple of feet higher. Such experiences might be best viewed not as a “distortion of reality” but as a reminder of how much of a mental construct all perception is.

The brain is shaped by experience. Musicians who began their training at a young age show enlargement of the region called the corpus callosum, which connects and integrates the processing of the separate hemispheres. The part of the cortex used to process musical pitch also shows boosted development in musicians. Brain scans of London taxi drivers show that they have enhanced development of the rear of the hippocampus, a region associated with memory and navigation. This enlargement happens in proportion to the amount of training that the drivers have had in learning the city’s street layout – so it seems to be a genuine response to, and not a cause of, their skill at finding their way around. In so many ways, our brains, like our bodies, are not a given fleshy mechanism by means of which we navigate the world, but an adaptive and responsive record of our personal histories.

* * *

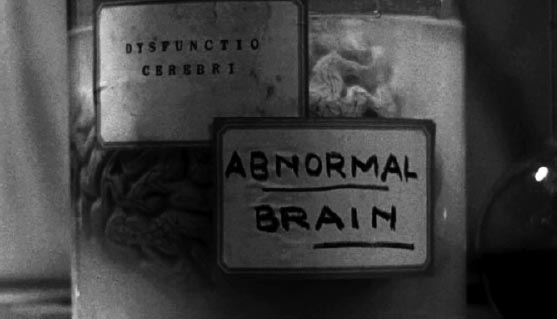

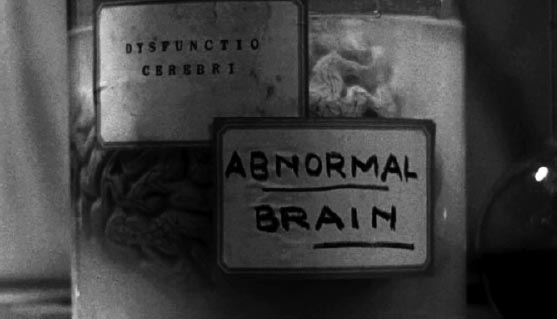

Who can forget Frankenstein’s crazed assistant Fritz giving him the “abnormal” brain of a criminal in James Whale’s 1931 movie of Mary Shelley’s story?1 This, we are supposed to infer, accounts for the monster’s murderous tendencies, totally undermining Shelley’s message that the creature is turned that way by Frankenstein’s failure to give his creation care and love.

No, not that one, Fritz: a still from Frankenstein (1931).

The preserved brain in a jar was no Hollywood invention, though. The organs were often stored this way for medical research, to be analysed for visible features that might be linked to behaviour patterns. Anatomical dissection seems now a rather crude approach to understanding such a phenomenally complex organ, but it was once the only way to find out about associations of form and function in the body.

There are exceptions to that respectable tradition of tissue preservation far more grotesque and horrific than anything you find in Gothic horror films. In the 1970s, shelves of jars containing the preserved brains of hundreds of children were found to have been kept in the basement of the Otto-Wagner Hospital in Vienna, where attendants had unquestioningly been topping up the preserving fluid for decades. These brains, it transpired, had been removed from children kept in a special ward and murdered as “mental defectives” by command of the Nazi doctor Heinrich Gross. Gross apparently intended to study the anatomical causes of such “defects”. The remains of the child victims were buried in a ceremony in 2002.

Some people want their brain to end up in a jar by choice – not for the benefit of medical research, but because they figure they might one day need it again. Or at least what’s allegedly stored in it. Brain freezing is big business: many hundreds of people have paid for their bodies (or just their heads – the budget option) to be cryogenically preserved after death in the hope that science will one day enable the brain to be revived and the person in effect brought back to life. You won’t necessarily need or want your original body, especially if you died from some fatal accident or illness. According to Alcor Life Extension Foundation in Scottsdale, Arizona, one of the leading companies to offer this service, “When carried out under favorable conditions, both current evidence and current theory support the conclusion that cryonics has a reasonable chance of working.” (It’s not clear quite what this “current evidence and theory” are.) The company adds that:

Survival of your memories and personality depend on the extent of survival of brain structures that store your memories and other identity-critical information … It cannot be reliably known with present scientific knowledge how a given degree of preservation would translate to a given degree of memory retention after extensive repair, but sophisticated future recovery techniques using advanced technology might allow for memory recovery even after damage that today might make many think there was little room for hope.

Well, it’s your choice – at a minimum cost of $80,000 per capita (never has that Latin term been more literal). Some critics accuse human-cryopreservation companies of profiting from false hopes peddled to vulnerable people. Experts point out that today’s cryogenic techniques inevitably cause damage to tissues, and that thawing would damage them more, so there is no prospect that a frozen brain could be revived.

Respectable cryonics companies like Alcor have tried to distance themselves from the more far-fetched claims of the brain-freezing immortalists, but nonetheless such technologies are vaunted as offering a glimmer of hope that death can one day be cheated. The practice of post-mortem brain-freezing became especially controversial when, in 2016, the British High Court ruled to respect the expressed wish of a 14-year-old girl who died from cancer to have her body cryogenically frozen by an American company so that she might one day have a chance of being “woken up”.2

Immortalists might take some comfort, however ghoulish, from controversial experiments by researchers at Yale University in 2018 in which decapitated pigs’ heads taken from an abattoir shortly after slaughter were reportedly kept alive in some sense for 36 hours by perfusion with an oxygenated fluid. “Alive” here meant merely that some of the cells showed signs of surviving during the perfusion; the heads did not – mercifully, but unsurprisingly – regain consciousness.

Sustaining cell activity in a head recently removed from the body is a long way from reviving memories in a head deep-frozen for many years. But some advocates think it is not a vain hope that we might one day have the technical means to do that. “If you can bridge the gap (it’s only a few decades),” writes computer scientist Ralph Merkle, “then you’ve got it made. All you have to do is freeze your system state if a crash occurs and wait for the crash recovery technology to be developed … you can be suspended until you can be uploaded.”

Wait – a crash? Uploaded? You can see where this is going: towards the idea that the brain is just a kind of computer and can be described using the terminology of the laptop.

To Merkle it’s just a matter of physics. Your brain is made of material, and so is governed by the laws of physics; those laws can be simulated on a computer, and therefore your brain can be too. You don’t even need squishy, fragile grey matter – it’s all just a question of bits and bytes. The network of neural connections in the brain is astronomically complex, but all the same we can put an upper limit on how many bits should be needed to encode it. Merkle calculates that uploading a brain will need a computer memory of about a million trillion bits, performing around ten thousand trillion logic operations a second. That’s at least imaginable with the current rate of technological advance. According to this transhumanist vision, we will soon be able to live on, inside computer hardware: the brain in a jar becomes the brain on a chip.

Whether a cryogenically frozen brain can preserve all the data responsible for your experiences and mental states during life is another matter (even setting aside the problem of freeze-induced damage). How signalling neurons conspire to create consciousness is one of the big scientific unknowns, and this number-crunching of bit counts is a little like describing the global economy simply as the net sum of its monetary value, or by counting up the trading agents involved. Some cognitive scientists dispute the idea that the human brain is merely a sophisticated kind of computer that can be mapped onto any other substrate if it has enough circuitry. They say that consciousness might be a property of very specific kinds of processing networks, and not necessarily compatible with silicon or digital hardware. To neuroscientist Christof Koch of the Allen Institute for Brain Science in Seattle, the idea that conscious thought is just a kind of computation is “the dominant myth of our age”.

What such heady visions of brain downloads also ignore is that the brain is not the hardware of the self, but an organ of the body. Several experts in both artificial intelligence and cognitive science now argue that embodiment is central to experience and brain function. At the physiological level, the brain doesn’t just control the rest of the body but engages in many-channelled discourse with its sensory experience, for example via hormones in the bloodstream. And embodiment is central to thought itself, according to artificial-intelligence expert Murray Shanahan of Imperial College London. Shanahan says that cognition is largely about figuring out the possible consequences of physical actions we might make in the world: a process of “inner rehearsal” of imaginary future scenarios. The brain doesn’t just conduct such imagery in the abstract: during cognition, we see activation of the very same brain regions, such as those controlling motor function, that we would use if we were to carry out the imagined actions for real.

That fits with the suggestion of cognitive scientists Anil Seth and Manos Tsakiris that the function of the brain is not to compute sensory information in some abstract sense, but to use it to construct a model of the world that is consistent with our bodily experience of it. In other words, we don’t create some bottom-up representation from basic sensory data, but start top-down with our sense of “being a body” and figure out what kind of world is consistent with that. We infer (subconsciously) that objects have an opposite side we can’t see, not because we have learnt that “objects have hidden sides” but because we deduce that we’ll see it if we walk around in the physical space.

It’s for this reason, say Seth and Tsakiris, that we don’t perceive ourselves as a Numskull-like homunculus looking out of a body-machine from the window of the eyes. Instead, we take the body for granted as an aspect of our mental world-map: it is woven tightly into our sense of self. Any aspect of our physiology that affects the integrity and function of our body – our immune system, say, or the microbes in our guts – then contributes to this sense of selfhood. In short, there can be no self without the somatic component: without a body.

In this view, the “brain in a jar” is not a feasible avatar of the entire human. One could argue that the transhumanist idea of a brain-on-a-chip could be coupled to a robotic body that allows such physical interaction with the surroundings, or even to just a simulation of a virtual environment. But the somatic view of selfhood raises questions about whether there is any purely mental “essence of you” that can be bottled and downloaded in the first place, independent of its embodiment in an environment.

The more interesting and relevant version of transhumanist self-realization, then, considers what happens to mind when body is extended and altered, given new senses or new capabilities by new interfaces between human and machine. Donna Haraway’s classic 1985 essay “A Cyborg Manifesto” argued that we are at this point already. The boundaries between natural body and artificial mechanism (as well as between human and animal), she said, became increasingly blurred throughout the twentieth century. Like many transhumanists, she welcomed this development as a liberation from the “antagonistic dualisms”, for example in gender and race, that society constructs. For some, this notion of the human increasingly embedded within the machine is unsettling: science-fiction writer Bruce Sterling, who pioneered the cyberpunk genre, imagines a wretched being who is as trapped and constrained as the vat-minds of The Matrix: “old, weak, vulnerable, pitifully limited, possibly senile”. But it is precisely because we are “profoundly embodied agents”, not “minds in bodies”, says philosopher Andy Clark, that by extending embodiment by whatever means available – computational, genetic, chemical, mechanical – we will necessarily extend the self. We are, says Clark, “agents whose boundaries and components are forever negotiable, and for whom body, thinking, and sensing are woven flexibly from the whole cloth of situated, intentional action.” The technologies of cell transformation have added a new and powerful axis of negotiation – and as we have seen, they recognize no distinction between the brain and body. All are contingent tissues, ripe for change. Clark’s comment serves as a reminder that as we alter the body, we should expect the mind to change too.

* * *

The brain in a vat is a familiar image to philosophers, who have long used it as a vehicle for exploring the epistemological question of how we can develop reliable notions of truth about the world. How can you be sure, they ask, that you’re not just a brain in a vat, being fed stimuli that merely simulate a world? How can you know that all your beliefs about the world are not falsehoods shaped by illusory data?

The question goes back to the sceptical enquiry of René Descartes in the seventeenth century. Descartes argued that, since we can only develop a picture of the objective world from sensory impressions, we might just be being systematically deceived by an evil demon. In his 1641 Meditations on First Philosophy, he imagined that a being of “utmost power and cunning has employed all his energies in order to deceive me.” In that event, he said,

I shall think that the sky, the air, the earth, colours, shapes, sounds and all external things are merely the delusions of dreams which he has devised to ensnare my judgement. I shall consider myself as not having hands or eyes, or flesh, or blood or senses, but as falsely believing that I have all these things.

For Descartes this was not so much a disconcerting possibility that he had to argue his way out of as a reason to doubt his convictions. It was through such scepticism that he devised his famous, reductive formula for the one thing of which he could be sure: I think, therefore I am.

Demons often did the job of constructing counterfactuals and conundrums for thinkers from the Enlightenment onwards. Pierre-Simon Laplace had one (which foretold the future of the universe from a complete knowledge of its present state), and so did James Clerk Maxwell (which undermined the second law of thermodynamics). In the twentieth century, scientific scenarios were deemed a more proper thinking tool than supernatural ones. Thus in 1973, the American philosopher Gilbert Harman recast Descartes’s problem in the form of a medical scenario:

It might be suggested that you have not the slightest reason to believe that you are in the surroundings you suppose you are in … various hypotheses could explain how things look and feel. You might be sound asleep and dreaming or a playful brain surgeon might be giving you these experiences by stimulating your cortex in a special way. You might really be stretched out on a table in his laboratory with wires running into your head from a large computer. Perhaps you have always been on that table. Perhaps you are quite a different person from what you seem.

Here the legacy of the brain in a vat becomes apparent. For what is this but the underlying premise of the Matrix movies? By the time the Wachowski brothers (now sisters) began riffing on it, “brain in a vat” scepticism about what we take to be reality had a thorough philosophical pedigree. The most celebrated critic of the idea was American philosopher Hilary Putnam, who argued in 1981 that the proposition that you might be a mere brain in a vat fails because, in effect, it dissolves into contradiction. Words used by a brain in a vat can’t be meaningfully applied to real objects outside of the brain’s experience, Putnam said. Even if there are actual trees in the world containing the vat that are simulated for the brain, the concept “tree” can’t be said to refer to them from the brain’s point of view. Philosopher Lance Hickey encapsulates Putnam’s argument with what I suspect is an unintended whiff of absurdist humour:

Take this slowly. Or alternatively, take Anthony Brueckner’s neat précis of the argument in the title of his 1992 paper on the issue: “If I Am a Brain in a Vat, Then I Am Not a Brain in a Vat.”

Not everyone is persuaded by Putnam’s rather elusive reasoning. Philosopher Thomas Nagel adds to the impression that philosophers seem here to be attempting to escape, Houdini-like, from the sealed glass jar of their own minds with the somersaulting logic of his riposte to Putnam:

If I accept the argument, I must conclude that a brain in a vat can’t think truly that it is a brain in a vat, even though others can think this about it. What follows? Only that I cannot express my skepticism by saying “Perhaps I am a brain in a vat.” Instead I must say “Perhaps I can’t even think the truth about what I am, because I lack the necessary concepts and my circumstances make it impossible for me to acquire them!” If this doesn’t qualify as skepticism, I don’t know what does.

No wonder Neo just decided to shoot his way out of the problem.

Feel free to decide for yourself how convincing you find these arguments, for there is still no real consensus about whether we must just accept the world as we find it or can be sure we aren’t the dupes of Descartes’s demon. Technological advances now offer new variants of the same basic conundrum. Might we be virtual agents in the computer simulations of some advanced intelligence: one of countless simulated worlds, perhaps, created as an experiment in social science? According to one argument, it is overwhelmingly likely that we are just that, for if such simulations are possible (and some people think we are not so far off from being able to build a primitive degree of cognition into the avatars of our own virtual worlds) then there will be many more of them than the unique “real world”.

Some philosophers and scientists have even considered how we might spot fingerprints of a simulated character in what we take to be the physical world, like the glitches that feature in The Matrix. But I can’t help wondering what, to a simulated avatar, any notion of a “world outside” could mean – as if once again there exists within the experiential self some homunculus that can climb outside it. I wonder too at the readiness to make these supposed super-advanced intelligences merely smarter versions of ourselves: cosmic doyens of computer gaming. We have lost none of Descartes’s solipsism; perhaps that’s the human condition.

The “brain in a vat” might sound like one of those reductio ad absurdum scenarios for which philosophers enjoy notoriety, but some think it is already a reality. Anthropologist Hélène Mialet used precisely that expression to describe British physicist Stephen Hawking on the occasion of his seventy-first birthday in 2013. Hawking was famously confined to a wheelchair for decades by motor neurone (or Lou Gehrig’s) disease, in his final years his only volitional movements confined to twitching muscles in his cheek. A computer interface allowed Hawking to use those movements to communicate and interact with the world. In the popular consciousness, he became an archetypally brilliant brain trapped in a non-functioning body. Mialet argued that he was in effect a brain hooked up to machinery: like Darth Vader, she said, he had become “more machine than man”, that effect enhanced by Hawking’s choice to retain his trademark retro “automated” voice.

Mialet’s description drew intense criticism and condemnation, among others from the UK Motor Neurone Disease Association. But she didn’t intend it as any kind of judgement, far less as insult. She wanted to encourage us to consider if we aren’t all now part-machine, networked to our technologies and inhabiting worlds in which the real and the virtual bleed into one another. Because Hawking was at the same time so powerful in terms of the directive force of his thoughts – the twitch of a cheek was enough to activate his army of attendants and collaborators – and so powerless in terms of his physical agency, he embodied (so to speak) an extreme case, barely any different from the Machine Man of Ardathia or Olaf Stapledon’s Fourth Men:

his entire body and even his entire identity have become the property of a collective human-machine network. He is what I call a distributed centered-subject: a brain in a vat, living through the world outside the vat.

Comparing Hawking’s situation with that of other individuals whose wishes are expressed and exerted through social and technological networks, Mialet concluded that “Someone who is powerful is a collective, and the more collective s/he becomes, the more singular they seem.” The machinery of command becomes an extension of body and mind. Or to put it another way, the personhood of a “brain in a vat” might depend on its degree of agency.

Perhaps. But there is another, not incompatible, way to read Hawking’s situation. The society in which he lived never, I think, quite came to terms with this man. We insisted on attributing to his fine scientific mind an almost preternatural genius, we heaped praise on his pleasantly wry but somewhat prosaic wit, we elected to sanctify – as much as secular society will sanction – a person of immense fortitude but of somewhat traditional, even conservative mien. In the end, excepting the small group of people close to him, Stephen Hawking’s machine existence made us lose sight of the human – and to see only the legendary brain.