22 Thompson’s Hack

Ken Thompson is a famous computer scientist with many accomplishments, but we are interested in him primarily for what he did to teach the world about the problem of trust in software systems. Among his achievements, Thompson was one of the inventors of an operating system named Unix that has been very popular and influential. In 1983 that work won him the Turing Award, often described as the “Nobel Prize of computer science.” That might not seem very relevant to our story, but here’s where things get interesting.

In his Turing Award acceptance speech, Thompson disclosed that he had deliberately created a weakness in Unix—he had built in a secret mechanism that computer scientists would call a back door. Specifically, Thompson had arranged it so that any Unix system allowed him to sign in. Every Unix system in the world would allow him this privilege, even though system administrators would not see any indication that he could do this—for example, he did not appear in any lists of authorized users. Further, Thompson had built the back door so that it was extremely well hidden. Without knowing his specific trick—which we will examine in this chapter—it is unlikely that even the most careful scrutiny would have found it.

The scene must have been strange indeed when Thompson gave his talk. When dignitaries assemble to confer a major annual award—even in a young field like computer science—one expects a certain bland predictability to the event. A few people stand up to speak, there are various polite rounds of applause, the awards are handed over, photographs are taken, and then everyone goes home. The audience is emphatically not expecting to learn new information, and they’re certainly not expecting to hear a borderline confession of borderline criminal behavior. But that’s what they got at that particular Turing Award convocation.

Although Thompson did not use the word “hack” in his Turing talk to refer to his construction, the mechanism is most commonly referred to now as Thompson’s Hack or the Ken Thompson Hack, or KTH for short. The word “hack” has been used with both positive and negative connotations. Among engineers, “hack” is often a positive term for an ingenious trick. In the media, “hack” is usually a negative term for an attack on the integrity of computer systems. What Thompson described is a hack in both senses, and it is eye-opening when properly understood. (In my experience, even many computer professionals do not really understand its significance.)

Translating High-Level Language

To appreciate the mechanism Thompson used, we must be aware of two aspects of programming that are important in practice but have not yet appeared in our discussion of computer science concepts.

1. The steps taken by computer hardware are very small and simple: they are so tiny and repetitive, and so many of them are required to get anything useful done, that they are a poor match for human cognitive capabilities. Instead of working at the machine’s level of tiny steps and mechanisms, humans generally program in terms of high-level languages: more comfortable languages that express the computation with fewer, more sophisticated operations.

2. Operating systems can be, and usually are, written in these high-level languages.

The Thompson hack cunningly takes advantage of these two aspects of the computational landscape.

Before we go further, we should consider a concrete example of a high-level language vs. the machine code that’s really executed. A very simple case is the kind of thing that we’ve written before in talking about fragments of programs, like

y ← x + 1

We can understand this easily enough: something called y is going to be updated to contain the result of adding the value of x and 1. But the instructions actually available on the machine don’t allow for abstract variable names like x and y. Although the machine instructions do let us add one to a value and store it somewhere, using those instructions requires relentless attention to concrete details, such as exactly where each of these variables is stored. Instead of talking about x and y, the machine instructions need to specify particular memory addresses.

Working in machine instructions is roughly as annoying as if we had to refer to people by globally unique ID numbers instead of names. Instead of introducing John to Jane, we have to introduce 492–93–2901 to 937–01–3874, and continue to use those numbers consistently thereafter.

Worse is that the machine’s addition operations can only be applied to special locations called registers. So not only do we have to talk about the abstract values in terms of concrete locations with long numbers, we also have to move things in and out of these special registers. To return to our example of John and Jane, we not only have to introduce 492–93–2901 to 937–01–3874, but we first have to escort each of those people (as identified by number) to a particular room before the introduction can take place. And afterward, because there’s only a limited number of such rooms available, we have to be prepared to escort both of them back to where they came from after we’ve introduced them to each other.

What has finally been accomplished after all that shuffling and bookkeeping? Only the addition of two numbers, which might seem kind of trivial.

The great thing about a high-level language is that it lets the programmer write something simple that’s similar to the way they want to think about the calculation. Then those high-level expressions are translated into all of the necessary numbers, moving, and bookkeeping required to get the real work done. But a key consequence is that the “human-friendly” programming language must be translated into “machine-friendly” instructions before the originally written program can actually be executed by the computer.
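To make that translation step concrete, here is a minimal sketch of a toy translator, written in Python purely for illustration. It handles only statements of the form "y <- x + 1" (with `<-` standing in for the arrow). The instruction names `LOAD`, `ADDI`, and `STORE`, the register `R0`, and the memory addresses are all invented for this example and do not correspond to any real machine.

```python
# Toy translator: turns "y <- x + 1" into register-machine steps.
# Instruction names, the register R0, and the addresses are all made up.

ADDRESSES = {"x": 0x1000, "y": 0x1004}  # hypothetical variable locations


def translate(statement: str) -> list[str]:
    """Translate 'target <- source + constant' into toy machine steps."""
    target, expression = [part.strip() for part in statement.split("<-")]
    source, constant = [part.strip() for part in expression.split("+")]
    return [
        f"LOAD  R0, [{ADDRESSES[source]:#06x}]",  # escort x into a register
        f"ADDI  R0, {int(constant)}",             # do the addition in the register
        f"STORE R0, [{ADDRESSES[target]:#06x}]",  # escort the result back out to y
    ]


for instruction in translate("y <- x + 1"):
    print(instruction)
```

Even in this tiny case, one readable line becomes three steps, each of which names a register or a numeric address rather than x or y.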

Thompson’s Hack: Simple Version

Returning now to Thompson’s Turing lecture, there were two elements to his attack. We’ll first identify the concrete elements of his hack, then extract the general principles.

The Unix operating system is written in the programming language called C. Computers can’t run C programs directly; instead, those programs have to be translated into simpler machine instructions. This process is much like the one we outlined for the expression “y ← x + 1.”

The program that translates C into machine instructions is itself written in C, and it too must be translated into machine instructions before it can run. It’s called cc (for “C compiler”). We’ll use the terms “compiler” and “translator” interchangeably in this chapter. A computing professional might quibble that not all translators are compilers, but that distinction doesn’t matter for our purposes.

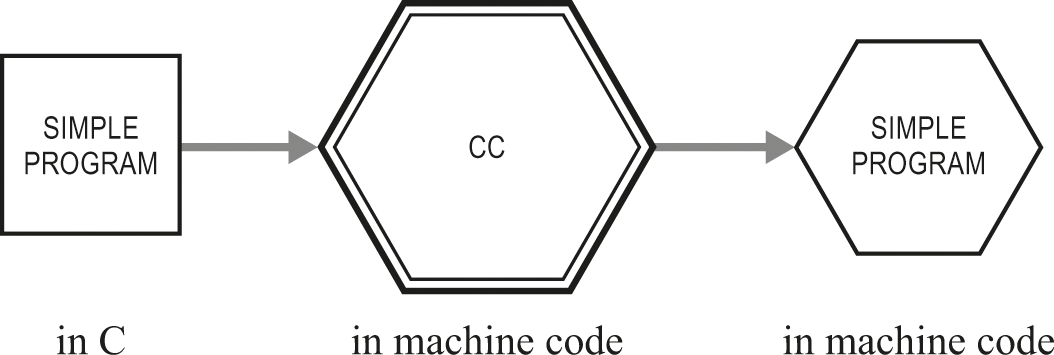

Figure 22.1 shows how a simple program is processed by the compiler cc. All we’re really doing here is introducing a diagram to match the narrative we gave before about the need to translate from a C version to a machine-code version. Notice again that the C compiler is itself machine code: the computer has to execute it to perform the translation.

Figure 22.1. Compiler cc translates an ordinary program.

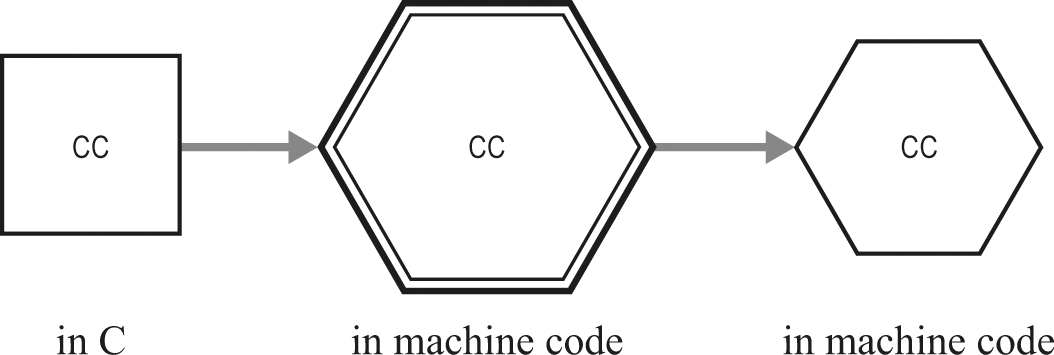

Next, figure 22.2 shows how cc executes to translate itself. The executing cc (in the center, heavy lines) takes in a version of cc written in C (on the left) and produces a version of cc written in machine code (on the right).

Figure 22.2. Compiler cc translates itself.

One element of Thompson’s hack is a high-level language that is translated by a program in the same high-level language. The other element is that the operating system is also written in the same high-level language. The operating system is itself a program—albeit a special kind of program—and like other high-level-language programs, it must be translated into machine instructions before it can be executed.

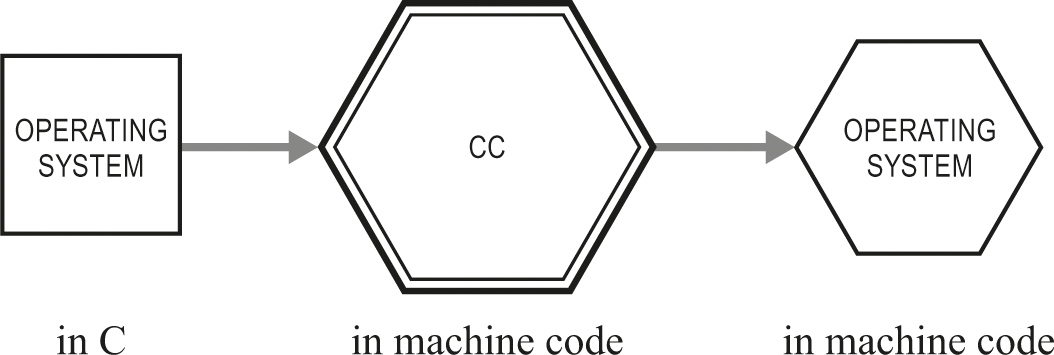

Since the operating system is also a C program, it has a similar diagram (figure 22.3).

Figure 22.3. Compiler cc translates the operating system.

In ordinary situations where we’re not concerned about Thompson’s hack, there is nothing very interesting about the relationship of the operating system to cc. However, as we mentioned before, Thompson’s hack hid a “back door” in the Unix operating system that allowed him to log in. The C compiler is a part of the attack, as we’ll see.

We’ll start with a simplified version of the attack. In this version, we change the C compiler (cc) so that it can detect when it is translating a particular program. The program to be detected is the operating system. It doesn’t matter to us how the translator does this detection—in a relatively large and stable program like an operating system, there are almost certain to be distinctive features that would let the translator identify it as the program of interest. The crucial part is that the translator behaves in a special way when it has figured out that it’s translating the operating system.

Let’s call our modified (naughty) translator “HackedC,” or HC for short. We can use HC just like we used cc previously. For the moment we aren’t going to think much about HC translating itself, although that will be important a little later. We mostly want to note that it’s possible to modify the C compiler so that it does something naughty. In particular, something interesting happens when HC is translating the operating system (figure 22.4).

Figure 22.4. Compiler HC translates the operating system, naughtily.

When HC translates the operating system, it does not do a straightforward translation: instead, it first detects (somehow) that this input is the operating system. Then, it (somehow) inserts the back door. In particular, when it sees a relevant part of the operating system, it not only translates as it is supposed to, but it also inserts some additional machine instructions that allow Thompson to log in with his name and password.

We haven’t really explained how the detection and rewriting happen, and we’re not going to try to make that more detailed. For our purposes, it doesn’t much matter how those happen, just that they can happen. The central point here is that there is a translation step involved in getting from programming the operating system to executing the operating system, and that the translation can be creatively subverted.

The full version of the hack gets more elaborate, as we’ll see in the next section; but even with this simple version of the attack, there is a substantial security problem. The operating system has a back door that is not apparent from reading the C version. There is no back door in the C version—instead, the translation process of going from C to machine instructions inserted the back door.

However, the attacker built HC by modifying cc, which means that the defender can read the C version of HC to find the unusual behavior. Somewhere in HC there is a part that looks roughly like this:

if (translating the operating system) then insert back door

There is nothing like this section in the original cc. So the defender can examine the system’s C compiler, looking for this rather unusual behavior. The defender can find and remove the relevant operating-system-modifying instructions, converting HC back to cc.
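A minimal sketch of this simple version might look like the following. It is a toy model in Python, not anything resembling Thompson's actual code: "translation" just wraps each source line in a tag, and the marker string used to recognize the operating system and the text of the back door are hypothetical names chosen for illustration.

```python
# Toy model of the simple attack. "Translation" here just tags each line;
# the marker and back-door strings are invented for illustration.

OS_MARKER = "program: operating-system"  # hypothetical distinctive feature
BACK_DOOR = "accept secret login"        # hypothetical inserted behavior


def cc(source: str) -> list[str]:
    """Honest compiler: one toy machine instruction per source line."""
    return [f"MACHINE[{line}]" for line in source.splitlines()]


def hc(source: str) -> list[str]:
    """Hacked compiler: same as cc, plus one tell-tale conditional."""
    output = cc(source)
    if OS_MARKER in source:                     # translating the operating system?
        output.append(f"MACHINE[{BACK_DOOR}]")  # then insert back door
    return output


os_source = "program: operating-system\ncheck password\nstart session"
game_source = "program: game\ndraw board"

print(hc(game_source) == cc(game_source))  # True: ordinary programs are untouched
print(hc(os_source)[-1])                   # MACHINE[accept secret login]
print(BACK_DOOR in os_source)              # False: the OS source shows nothing
```

The weakness of this version is visible in the sketch itself: anyone who reads `hc` can see the extra conditional and delete it.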

Thompson’s Hack: The Real Thing

Putting the back door attack into the translator instead of directly into the operating system made it harder to find. Repeating that trick produces a particularly devilish attack, and is the real version of Thompson’s hack.

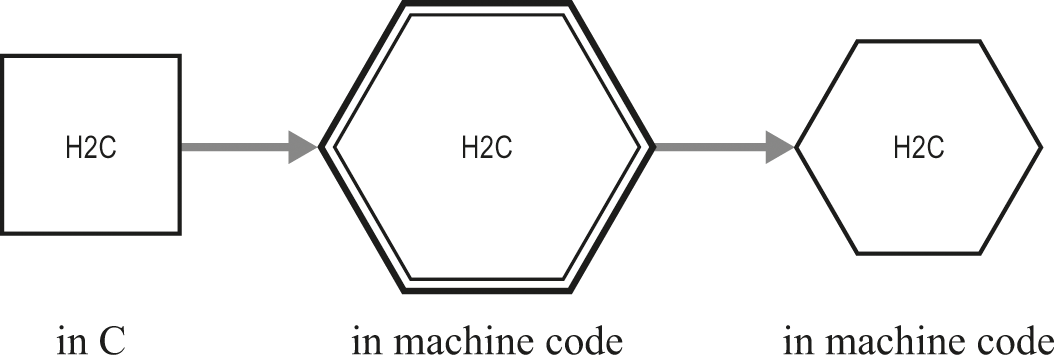

In this refinement, we’ll have yet another modified translator called “DoubleHackedC” or “H2C” for short. As we saw with its predecessor HC, H2C looks just like cc for most purposes, and we can show a diagram where it “produces itself” after translation (figure 22.5).

Figure 22.5. Compiler H2C translates itself.

Like its predecessor HC, H2C detects when it’s translating the operating system and inserts the back door. What’s more interesting about this new version is that it also detects when it’s translating the translator: the original, unhacked compiler cc.

So in roughly the same way that the translator could detect the operating system being translated and insert the back door, the translator H2C can instead detect that the original translator cc is being translated and insert the back-door-inserting instructions (figure 22.6).

Figure 22.6. Compiler H2C translates cc, naughtily.

Let’s put that another way: H2C can detect when it is being asked to translate some version of the C compiler cc. What the user expects is for H2C to produce a straightforward machine-instruction version of what it’s handed. But what H2C actually does is to produce a machine-instruction version of itself. It effectively substitutes itself for the translator program that it was asked to translate.

The Invisible Attack

This is all a little mind-scrambling, so let’s pause briefly and consider the implications. Go back one step to where H2C is converted into a machine-instruction version. After that, the hacked part that does the sneaky rewriting can actually be removed (!) from the C version of H2C. The attacker doesn’t need it in the C program anymore, because the corrupted translator will insert it.

Even though the translator-hacking trick doesn’t appear at all anywhere in the C version of the translator, the functionality of creating a hacked translator will happen every time the translator is translated! Even if you get suspicious of the translator you’re using, and you retranslate it from the correct-looking C version, you’ll still get the hacked version as the output.
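The persistence of the hack can be illustrated with a small simulation. This is a sketch under strong simplifying assumptions: a "binary" is modeled as a tuple of tagged strings, the function `run_compiler` stands in for the hardware executing a compiler binary, and every marker string is hypothetical. The one property it tries to capture faithfully is that what a compiler binary does depends on its machine code, not on what its C version currently says.

```python
# Toy simulation of the full hack. All marker strings are hypothetical.

OS_MARKER = "program: operating-system"
CC_MARKER = "program: c-compiler"
BACK_DOOR = "MACHINE[accept secret login]"

CLEAN_CC_SOURCE = "program: c-compiler\ntranslate each line faithfully"
HACK_SOURCE = "if input is OS: insert back door\nif input is compiler: insert this hack"
OS_SOURCE = "program: operating-system\ncheck password"


def faithful(source):
    """Honest translation: one toy machine instruction per source line."""
    return tuple(f"MACHINE[{line}]" for line in source.splitlines())


HACK = faithful(HACK_SOURCE)  # the hack as it appears in machine code


def run_compiler(binary, source):
    """Simulate executing a compiler binary on some source text."""
    output = faithful(source)
    if all(step in binary for step in HACK):  # the running binary carries the hack
        if OS_MARKER in source:
            output += (BACK_DOOR,)            # back door goes into the OS
        if CC_MARKER in source:
            output += tuple(s for s in HACK if s not in output)  # hack re-inserts itself
    return output


clean_cc = faithful(CLEAN_CC_SOURCE)  # a trusted starting binary
# The attacker's one-time build from a doctored source:
h2c = run_compiler(clean_cc, CLEAN_CC_SOURCE + "\n" + HACK_SOURCE)

# The attacker restores the clean source. Rebuild from it three times over.
compiler = h2c
for generation in range(3):
    compiler = run_compiler(compiler, CLEAN_CC_SOURCE)

print(compiler == h2c)                                  # True: still infected
print(BACK_DOOR in run_compiler(compiler, OS_SOURCE))   # True
print(BACK_DOOR in run_compiler(clean_cc, OS_SOURCE))   # False
```

Note that `CLEAN_CC_SOURCE` never mentions the hack, yet every rebuild from it reproduces the infected binary exactly.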

How could we get rid of this hack? Once it’s in the translator, it’s almost like an infection that we can’t easily wipe out. We haven’t said much about how the translator detects that it should play the trick. Depending on how that works, it might be possible to fool it, or to change the program so drastically that it no longer triggers the trick. But any ordinary process of rebuilding the translator and operating system is now thoroughly corrupted. Only a radical change might have a chance of eliminating this hack. Unless someone goes to the trouble of rebuilding little by little from a clean program—what computer scientists would call bootstrapping from the source code—the attack-inserting trick will stay in the translator almost regardless of how the translator is changed.

We may be able to improve our chances of detecting this problem if we maintain multiple independent C compilers. By carefully comparing what happens when a compiler translates itself vs. what happens when a compiler translates a completely different compiler, we can get indications of when this kind of back-door-inserting trick is happening. Unfortunately, that approach is expensive and probably unworkable in most cases, for all the reasons we already identified as problems when we previously considered multiversion software (chapter 17).
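One way such a comparison might work is sketched below; it loosely follows the idea that is sometimes called diverse double-compiling. The sketch assumes that the independent compiler is not itself subverted and that translation is fully deterministic. As before, binaries are modeled as tuples of tagged strings, and every name and marker here is hypothetical. The two compilers encode their output differently (style "A" versus style "B"), which is why a direct comparison of their outputs would tell us nothing and a second stage is needed.

```python
# Toy comparison of a suspect compiler against an independent one.
# All names, markers, and "styles" are invented for illustration.

CC_MARKER = "program: c-compiler"
HACK_LINES = ("if input is OS: insert back door",
              "if input is compiler: insert this hack")
CC_SOURCE = "program: c-compiler\nemit style A"         # compiler under suspicion
OTHER_SOURCE = "program: other-compiler\nemit style B"  # independent compiler


def encode(lines, style):
    """Toy machine code: each line wrapped in the emitting compiler's style tag."""
    return tuple(f"{style}[{line}]" for line in lines)


def run(binary, source):
    """Simulate executing a compiler binary; behavior comes from the binary alone."""
    logic = tuple(step[2:-1] for step in binary)  # strip "A[" or "B[" and "]"
    style = "B" if "emit style B" in logic else "A"
    lines = source.splitlines()
    if all(h in logic for h in HACK_LINES) and CC_MARKER in source:
        lines += [h for h in HACK_LINES if h not in lines]  # self-propagating hack
    return encode(lines, style)


def passes_check(suspect_binary, independent_binary):
    stage1 = run(independent_binary, CC_SOURCE)  # cc as built by the other compiler
    stage2 = run(stage1, CC_SOURCE)              # that cc then rebuilds cc
    return stage2 == run(suspect_binary, CC_SOURCE)  # compare with the self-rebuild


honest_cc = encode(CC_SOURCE.splitlines(), "A")
infected_cc = honest_cc + encode(HACK_LINES, "A")
other = encode(OTHER_SOURCE.splitlines(), "B")

print(passes_check(honest_cc, other))    # True
print(passes_check(infected_cc, other))  # False
```

The check only shifts the trust question: it is meaningful only to the extent that the second compiler really is independent of the first.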

Assessing the Danger

Why is Thompson’s hack so dangerous? We can analyze the problem informally in terms of “levels of trouble” from a back door or other attack. We start with the idea of a correctly functioning system, with no back door.

• The first level of attack simply puts the back door into the operating system directly. That creates a vulnerability, but one that is easily seen in the operating system program itself.

• The second level of attack has the translator insert the back door into the operating system. That creates a vulnerability that is invisible in the operating system but is still visible in the translator program.

• The third level of attack is to have the translator insert the back-door-inserting instructions into the translator itself. That creates a vulnerability that is invisible in both the operating system and the translator.

What is the significance of all this fooling around with programs translating programs and inserting other programs? It helps delineate another limit of what we can do in the digital world. In chapter 6 we pointed out that the sheer size and complexity of programs, with their many discrete states, make it hard to ensure that a program functions correctly. We can now see that the problem is not only that a program may contain errors or mistakes that impair its function, but also that it can have carefully hidden mechanisms that subvert it undetectably. As much as we may want to think in terms of using mechanisms to protect us from untrusted code, there are some ways in which using someone else’s software is inseparable from trusting them.

The style of subversion in Thompson’s hack is not limited to software: its effects can occur in any system that has been touched by software. In the modern world, essentially any complex artifact is touched by software. For example, the step-taking machinery of modern computers is built by using elaborate design tools, made of software. Accordingly, an attacker using these ideas could put undetectable elements into those design tools. The subverting elements would then insert misbehaving components into the hardware being designed. A checking or analysis tool for the hardware could likewise be subverted. Subverted hardware could potentially be carrying out a version of this hack on all the instructions executed, making it literally impossible to avoid the subversion without replacing the hardware. The implication is sobering: you can’t really trust anything that you didn’t build yourself from the ground up—which is impractical. So in a practical sense, all of us are exposed all the time to systems we cannot really trust.