Within a few hours of Albert Einstein’s death in 1955, the great scientist’s brain had been surgically removed from his skull and placed in formalin. The autopsy and the events surrounding it were shrouded in secrecy and marked by contradictory claims. The brain was extracted by a hospital pathologist named Thomas Harvey in Princeton, New Jersey, where Einstein lived during the final years of his life.1 After the autopsy was completed, officials at Princeton University asked Harvey to turn over the brain to the university, but he refused. The pathologist claimed, but could not prove, that Einstein’s family had given him permission to keep the brain indefinitely. Thomas Harvey was determined to keep Einstein’s brain for himself.

For a long time, the whereabouts of Einstein’s brain remained a closely guarded secret known only to a select few. To the larger public, the iconic brain seemed to have vanished, probably forever. The mystery had been nearly forgotten when, in 1978, a journalist named Steven Levy tracked down Thomas Harvey in Wichita, Kansas. Levy was determined to get some answers. After relentless questioning, Harvey “sighed deeply and pulled from a cardboard box two glass jars with sectioned pieces of Einstein’s brain.”2 At long last, Einstein’s brain had been recovered.

Levy subsequently published a magazine article entitled “My Search for Einstein’s Brain,” which put the scientific community on notice that sections of the brain might become available for research. The obvious question, intuitive to neuroscientists and laypeople alike, was whether Einstein’s brain was unusual in any way. Could his prodigious intellect be correlated with any distinctive features of the brain’s anatomy? The answer was not obvious. Superficially, Einstein’s brain appeared to be quite average in size and structure, but more detailed analysis showed that the brain did have distinguishing features after all. One of the first scientists to receive a sample of Einstein’s brain was UC Berkeley neuroscientist Marian Diamond. Diamond found that the brain sample had far more glial cells than is typical.3 Glial cells do not directly participate in the brain’s signaling, but rather provide neurons with nutritional support and maintenance. Einstein’s brain cells appear to have been “well fed.” Other research showed that the brain’s cerebral cortex, while not particularly thick, had a high density of neurons. This finding led investigators to speculate that “an increase in neuronal density might be advantageous in decreasing inter-neuronal conduction time,” thereby increasing the brain’s efficiency.4 In other words, because the neurons were tightly packed, they could presumably carry information efficiently and with exceptional speed.

Further analysis showed that Einstein’s brain had an unusually large parietal lobe, a region responsible for mathematical cognition as well as mental imagery.5 The enlarged parietal lobe seems consistent with Einstein’s own accounts of how he constructed the special theory of relativity. His thought experiments included imagining how objects traveling at the speed of light would be affected. Visualization gave him insight into the problem. Einstein envisioned how a light beam would appear if he were traveling alongside the beam at the same speed.6 Perhaps his enlarged parietal lobe helped him to integrate mental images with mathematical abstractions.

The case of Einstein’s brain illustrates the sorts of questions posed by some neuroscientists. These concern the relationship between brain structure and function. Among the most basic questions is whether a larger brain is advantageous. Evidence from the study of human evolution strongly suggests that having a larger brain helps tremendously in adapting to, and surviving in, a hostile environment. During the past three million years, the average human brain increased in size threefold, from the modest 500-gram brain of the australopithecines to the robust 1,500-gram brain of Homo sapiens.7 However, this is a comparison between two different species—modern humans and their evolutionary ancestors. If we consider only the effects of brain size within Homo sapiens, the variation from person to person is not as clearly predictive of survival value or the ability to adapt. Einstein’s brain was not particularly large, which tells us that if there is a positive correlation between brain size and intelligence, it can only be approximate.

On average, a bigger brain is a more intelligent brain. In more than 50 studies dating back to 1906, measures of head size, such as length, perimeter, and volume, have been at least weakly predictive of higher IQ scores, with a correlation of about r = .20.8 Many early studies, lacking brain-imaging technologies, could only approximate brain size by measuring head size. With the invention of brain-imaging technologies, such as CT and MRI scanning, it became possible to gather precise data on brain volume and compare those measurements to IQ. The more precise correlations between brain size and IQ vary quite a bit, but yield an average across research studies of r = .38, much higher than the correlations between head size and IQ.9 The correlations hold with equal strength in males and females.
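One common way to read correlations of this size is to square them: for a simple linear relationship, r squared gives the share of variation in one measure that is statistically accounted for by the other. The short Python sketch below applies that rule to the two average correlations quoted above. The function name `variance_explained` is chosen here purely for illustration and does not come from the studies cited.

```python
# Average correlations quoted in the text.
correlations = {
    "head size": 0.20,              # external head measurements vs. IQ
    "brain volume (CT/MRI)": 0.38,  # imaged brain volume vs. IQ
}


def variance_explained(r: float) -> float:
    """Share of variance accounted for by a linear correlation r (r squared)."""
    return r ** 2


for measure, r in correlations.items():
    share = variance_explained(r)
    print(f"{measure}: r = {r:.2f}, variance explained = {share:.1%}")

# head size: r = 0.20, variance explained = 4.0%
# brain volume (CT/MRI): r = 0.38, variance explained = 14.4%
```

Read this way, head size accounts for about 4% of the variation in IQ scores and imaged brain volume for about 14%, which leaves most of the variation to factors other than size.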

Changes in brain size over the life span may help explain how various forms of intelligence change with age. Recall that fluid intelligence typically declines as we get older. After early adulthood, people normally lose some of their ability to adapt to novel problems, which is the essence of fluid intelligence. On the other hand, crystallized intelligence generally continues to climb until very late in life. The connection to brain size is that total brain volume correlates positively with fluid intelligence but not with crystallized intelligence. Brain size decreases somewhat as we age, which might contribute to the decline in fluid intelligence that is common during middle age and the later years. Crystallized intelligence seems to be unaffected by the decline in total brain size, which could explain why it remains stable or increases throughout life.10

On a strictly structural level, the correspondence between brain size and intelligence should not be surprising. Larger brains have, in nearly direct proportion, greater numbers of neurons. More neurons mean greater computational power in the service of adaptation and survival. The intelligent brain in any species must somehow generate a model of the environment, a perceptual world, to which an animal can adapt. In reptiles, the brain constructs this internal world primarily through the sense of vision and its associated neurons.11 The more developed brains of mammals tend to support the sensory construction of the world through hearing, as well as vision and olfaction. In primates, high visual acuity acquires special importance in representing the external world. While larger brains imply a greater ability to adapt to the environment, we should not ignore the possibility of causal influence in the reverse direction, from environment to anatomy. Certainly this is the case on an evolutionary timescale, but even at the level of individual development, it is possible that intellectually demanding events lead to a larger brain volume.12

The correlation between intelligence and brain size is far from perfect, as Einstein’s brain vividly illustrates. Clearly, the relationship between brain size and IQ falls short of a complete account of how the brain relates to intelligence. If we want to understand how intelligence is related to the brain, we must consider factors besides sheer size. At minimum, we need to examine the basic structure of the brain and ask how that gross structure relates to function. Fortunately, we have quite a bit of research to draw upon. We know, for example, that the outer layer of the brain, the cortex, is especially important to intelligent thought. The cortex is the structural basis for complex reasoning, perception, and language—indeed, for virtually all forms of higher-order cognition. Tellingly, the cortex is especially large in the human brain. Yet the human cerebral cortex is also surprisingly thin, only about 2 to 4 millimeters thick. In three dimensions, though, the cortex achieves considerable volume because it is massively convoluted. More than two-thirds of its area is tucked away into folds. Folding allows for a large cortical volume, and a large volume gives the human brain an enhanced potential for higher-order intellective functioning.

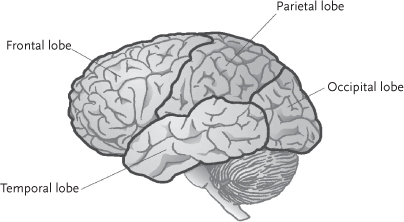

Aside from recognizing the special importance of the cerebral cortex, anatomists have also noted the division of the brain into distinct lobes. Those lobes are shown in Figure 4.1.

Figure 4.1

Four Lobes of the Human Brain. Martinez, Michael E., Learning and Cognition: The Design of the Mind, ©2010. Printed and Electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey.

Intelligence cannot be traced to a single region but is distributed through the brain.13 All four lobes—frontal, parietal, temporal, and occipital—have important relationships to intelligence. More precisely, variation within each lobe is correlated with IQ variation.14 Different brain regions show considerable variation from one person to another. This suggests a structural basis for different profiles of intellectual strengths and weaknesses even when overall IQ is similar.

Although the entire brain underlies the exercise of intelligent thought, one particular lobe, the frontal lobe, has a special role: It is responsible for planning, monitoring, and problem solving. Size matters here, too. The volume of gray matter in the brain’s frontal lobe is predictive of IQ, even when total brain volume is statistically held constant.15 As its name suggests, the frontal lobe is located at the front of the brain, just behind the forehead. Neuroscientists have known about the special functions of the frontal lobe for a very long time. The earliest evidence for those functions came from clinical studies on the effects of damage to the frontal lobe. Some cases were so significant that they became historical milestones in our understanding of the brain. In the history of clinical neuroscience, one such case centers on a 25-year-old railway worker named Phineas Gage.16

In 1848, America was coming of age. At that time, Abraham Lincoln was a relatively unknown junior congressman from Illinois, and gold had just been discovered in the foothills of the Sierra Nevada mountains of California. Across the United States, the railroad infrastructure was expanding rapidly. Late in the afternoon of September 13, Phineas Gage and a group of his fellow workers were preparing the track bed of a new railroad in rural Vermont. Mr. Gage was packing down explosive powder into a hillside when an errant spark ignited the powder, sending a three-foot tamping bar at high speed through his left cheek, behind his left eye, and out the top of his skull, landing some distance away. Gage was knocked to the ground like a rag doll. Remarkably, he soon got up and walked away from the accident. He remained conscious throughout, and although the tamping bar had completely penetrated the frontal lobe of Gage’s brain, he was able to speak.

Phineas Gage’s short-term resilience was remarkable. Nevertheless, it soon became apparent that Gage’s personality had been radically transformed by the accident. Previously a foreman, Gage could no longer marshal the interpersonal skills necessary to manage a crew of workers. He became easily angered and used obscene language, expressing behavior that was previously uncharacteristic and now problematic for his role as a railway worker. Within a few months of returning to work, Phineas Gage left his railroad job and joined P.T. Barnum’s Museum in New York as a human curiosity. Later, he traveled to South America and drove a stagecoach in Chile before moving to San Francisco with his mother and sister. Over the years, his health and coping abilities worsened. In 1860, Gage died of seizures related to the brain injury suffered 12 years previously on the Vermont railroad.

The story of Phineas Gage spotlights the profound effects of targeted disruption of brain tissue. Gage’s abilities to relate to other people and to manage his emotions were deeply compromised. By all accounts, the loss of function was directly associated with damage to his frontal lobe. For neuroscientists, the theoretical upshot was clear: Social competence, to a significant degree, seemed to have an anatomical home in the brain. The brain’s frontal lobe is also deeply implicated in the executive control of information in working memory as well as in planning functions. When working memory is taxed by holding information while performing a complex task, certain areas of the frontal cortex are highly metabolically active. Overlapping regions of the frontal lobe are also active when a person separates relevant from irrelevant information to focus on the task at hand.17 These associations tell us that the frontal lobe has unique importance in the exercise of intelligent thought. The volume of brain tissue in the frontal lobe is correlated with IQ scores more strongly than the volume of any other brain region.18 To the degree that intelligence is an inherited trait, the genetic control of intelligence is probably exerted largely by variation in gray matter in the brain’s frontal lobe.19

With this research in hand, we can identify with some precision the structural correspondences between brain anatomy and the two most important psychometric factors, fluid and crystallized intelligence. The brain’s frontal lobe is strongly identified with the planning and control functions that are associated with fluid intelligence, whereas all other brain lobes that lie posterior to the frontal lobe—parietal, temporal, and occipital—are more closely associated with the accumulated knowledge that we call crystallized intelligence.20 The border between the frontal lobe and the other brain lobes is marked by a large groove, the central sulcus, which runs from one side of the head to the other. This groove effectively divides the brain into two functional areas. Brain images show that the parietal lobe, situated at the top of the brain and just behind the central sulcus, is especially correlated with differences in crystallized intelligence.21 The functional role of the parietal lobe may include the consolidation of knowledge through enhanced neuronal connectivity. The parietal lobe might also serve as a relay center connecting the brain’s posterior regions with the executive planning activity that is localized in the frontal lobes.22 Thus, the brain’s central sulcus is akin to an international border, dividing the brain into the “nations” of fluid intelligence and crystallized intelligence.

The forward-most region of the frontal lobe, known as the prefrontal cortex, has special importance across the range of mental activities measured by intelligence tests. So important is the prefrontal cortex that, in some studies, it is the single brain area consistently activated by a broad array of intelligence tests.23 If we had to name the anatomical epicenter of intelligence, it would be the prefrontal cortex. Phylogenetically, it was one of the most recent evolutionary developments in humans and apes. The prefrontal cortex has distinct developmental qualities that align with its role in regulating complex thought. Its primary role is to control attention and resist distraction, both key features of working memory and absolutely vital to intelligent behavior. The prefrontal cortex develops slowly in comparison to other brain regions and shows a surprising degree of plasticity in response to experience.24 It is one of the last areas of the brain to mature completely, with synapse formation and restructuring extending far past childhood into early adulthood. The protracted period of synaptic structuring and restructuring is use-dependent, implying that it is specially attuned to higher-order cognitive demands.

The case of Phineas Gage was forever instructive to neuroscientists about the functional role of the brain’s frontal lobe. In 1861, a year after Gage died, a French physician named Paul Pierre Broca documented a different correspondence between localized brain injury and specific compromises to intellectual functioning. Broca found that injury to the brain’s left temporal lobe, located just above the ear, produces specific and profound speech disruption, called aphasia. The localization of speech to the left hemisphere hints at a more sweeping pattern: the separation of function by the brain’s left and right hemispheres.

Like two halves of an intact walnut, the brain exhibits bilateral symmetry. That structural separation, it turns out, also has counterpart functional separations: The two hemispheres exhibit somewhat different ways of processing information.25 We noted, for example, that the left hemisphere tends to be more proficient at language processing; the right hemisphere, by contrast, specializes more directly in processing spatial and musical information. This separation of function exists not only in the temporal lobes but also in the frontal lobe. The left side of the frontal lobe is dedicated to the processing of verbal information, whereas the right side is active when spatial information is processed.26 The bilateral separation of function must be qualified, however. It holds up well for right-handed people, but less so for left-handers: About 40% of left-handers have language centers in their right hemispheres. The brain’s hemispheres also differentiate in a second way. Whereas the left hemisphere tends to process information analytically by attending to details, the right hemisphere interprets information more holistically by seeing the big picture. The two processes are, of course, complementary. Every brain needs to operate both analytically and holistically in order to interpret the environment in an intelligent way.

The brain’s hemispheres display other differences of function. The most basic difference is that each hemisphere exercises muscular control over the opposite side of the body. The left hand, for example, is controlled by the right hemisphere. Partial paralysis following a stroke on one side of the brain is manifest on the opposite, or contralateral, side. Sensory information, too, is at least partly processed by the opposite hemisphere. The retina of each eye divides incoming light sensation into the right and left visual fields, and the signals from each field are shunted to the opposite brain hemisphere. Hearing is mostly contralateral, but not completely. Auditory data from each ear is sent to both hemispheres, but most of the auditory processing occurs in the hemisphere opposite to the ear that received the input.

The independent functions of the cerebral hemispheres were explored more fully in the 1960s, when in some epilepsy patients the neural bridge connecting the two hemispheres was surgically cut. The main neural structure connecting the brain’s left and right hemispheres is the corpus callosum, the sheet of neurons that runs beneath the two hemispheres. In “split-brain” patients, the corpus callosum was severed to alleviate symptoms of epilepsy. The surgery resulted in the functional isolation of the hemispheres, whose psychological consequences were revealed by a series of fascinating studies.27 For example, when common objects such as scissors were presented to the right visual field, the patient had no difficulty naming the object because information was projected to the language centers in the left hemisphere. However, when objects were shown to the left visual field, the information was shunted to the brain’s right hemisphere. Because the right hemisphere ordinarily has a much-reduced capacity to use language, the patient was frequently unable to name the object.

Split-brain patients sometimes displayed quite remarkable behavior showing that the left and right hemispheres could have different, and even contradictory, knowledge. Keep in mind that each brain hemisphere communicates directly only with the contralateral side of the body. Now consider what happened when conflicting instructions were presented to the brain’s right and left hemispheres. Through spoken instructions to the left ear, the right hemisphere was told to pick up a paper clip; instructions to the right ear told the left hemisphere to pick up an eraser. If the left hand correctly picked up the paper clip, this action was consistent with information given to the controlling right hemisphere, but it contradicted the request given to the left hemisphere. In split-brain patients, the left hemisphere would sometimes respond by misnaming the object, calling it an eraser. The two hemispheres could even hold contradictory wishes. This was illustrated when a 15-year-old boy was asked about his ideal future job. The question was posed independently to his left and right hemispheres. The answer given by his left hemisphere was “draftsman,” but his right hemisphere responded “automobile racer.”28

Differences in function between the brain’s right and left hemispheres are fascinating, but have sometimes been overextended or misapplied. For example, some people have interpreted the separation of function within the individual brain as a way to understand differences between people. That’s why we sometimes hear people describe themselves or others as “left-brained” or as “right-brained.” “Left-brained” people tend to process information more linearly and rationally; they are analytic, detail-oriented, and not at all averse to splitting technical hairs. By contrast, “right-brained” people prefer to consider the larger picture. Less concerned with dissecting a problem or situation, their propensity is to understand and appreciate sweeping patterns, including their aesthetic qualities.

Separating people into left-brained and right-brained is deeply problematic for two reasons. First, it fails to recognize that the separation of function in the individual brain is only partial. The relative specialization of the left side to analytical processing and the right to holistic processing is only a tendency, a partial division of labor, and certainly not a strict assignment. After all, the hemispheres are normally cross-wired by the corpus callosum, which ensures that information is communicated between the two halves of the brain. Second, and more importantly, the analytic-holistic distinction that holds up when describing the individual brain does not automatically map onto descriptions of people. For such descriptions as “left-brained” and “right-brained” to be validated, an entirely separate research track must test and verify that individuals really do differ significantly and consistently in their preferences for processing information. The binary categorization, either left or right, makes such a clean distinction unlikely. Such differences may turn out to be a continuum rather than categories, but this is speculation. Such a hypothetical dimension for understanding cognitive preferences has yet to be backed by data. These missteps and misconstruals tell us that the study of brain structure and function provides plenty of grist for speculation, but the resulting enthusiasm should be tempered with caution against errors of application.29

As neuroanatomists continue to employ sophisticated imaging technologies to map brain anatomy to specific cognitive functions, the resulting “cartography” becomes more precise and, at the same time, less simplistic. As research continues, the emerging field of cognitive neuroscience becomes progressively more complex. Now we know that the mapping of brain function to brain anatomy does not follow a pattern of one-to-one correspondence. Instead, as a rule, every complex cognitive function is supported by several brain locations acting in a coordinated fashion. Thus, while the frontal lobe and Broca’s area have specific functions, they never work alone. Every act of intelligence requires the activation of multiple brain sites, or circuits, working in concert.30 Reading engages its own characteristic brain circuitry;31 mathematics activates a different circuit.32 Brain circuits display both unity and diversity, another version of the “one and many” logic that we found describes the organization of intelligence as viewed through the lens of psychometrics.

Let’s acknowledge, then, that complex cognition invokes multiple areas of the brain as coordinated circuits. This does not mean that the entire brain is activated. In fact, when neuroscientists identify multisite circuits through scans showing areas of high metabolic activity, other areas of the brain are not particularly active—at least not above baseline levels. This fact raises a question: Does the average person use all of the brain’s potential capacity, or does most of that potential lie dormant? Let’s ask the question in a more familiar form: Does the average person use only 10% of his or her brain’s capacity? The answer is important. If the answer is yes, it means that the brain’s potential is largely untapped, implying a lamentable waste. It also hints at something exciting—the possibility of far greater brainpower in the average person if only some of that unused 90% were put into play. If we use only 10% of our brain capacity, as is widely believed, then perhaps each of us has tremendous reserves of intellectual capacity. Is the idea valid?

To cut straight to the answer, the claim that we use no more than 10% of our brain is a myth, plain and simple. We have no evidence that 90% of the brain is held in unused reserve. How, then, did this widely believed myth arise? The claim has sometimes been attributed to Albert Einstein, though there is no evidence that he ever said anything about the topic. More likely, it traces to the great 19th-century American psychologist William James. Along with his scholarly manuscripts, Professor James wrote popular articles in which he expressed his belief that people “make use of only a small part of our mental and physical resources.”33 In 1936, this statement was paraphrased in the preface to Dale Carnegie’s classic self-help book How to Win Friends and Influence People. The writer of the preface, journalist Lowell Thomas, attributed to James the belief that “the average man develops only ten percent of his latent mental ability.”34 The attribution to William James gave the statement instant, but undeserved, credibility.

Other explanations for the 10% myth arise directly from the known structure and function of the brain itself. Early studies of the brain identified large regions of cortex that served no identifiable function. Originally, these expanses were referred to as “silent areas,” implying unused potential. Later, the same regions were called “association areas,” which recognized their function as sites of neural connections associated with learning and development. Pioneering studies by the great neuroscientist Karl Lashley further recognized the brain’s remarkable plasticity of function in response to trauma or to specialized demands.35 The brain’s impressive ability to adapt may have been mistaken for untapped potential and so indirectly corroborated the 10% myth. Whatever its origin, it remains a myth—an inaccurate statement about the way brain anatomy relates to function. Though false, the 10% myth does contain a germ of truth—or perhaps more accurately, a credible hypothesis—that the mind is capable of much more than is typically realized. We have yet to understand what the human brain can achieve when developed to its full capacity.

The gross anatomy of the brain can take us only so far in understanding the biological basis for intelligence. To go further, we must examine the structure and function of the brain at a microscopic level—at the level of specialized cells called neurons.36 Here we are considering a biological scale roughly 1,000 times smaller than the level of detail revealed by brain-imaging technologies. At this level of detail, cells are the basic structural elements. We learned in our basic biology courses that cells are the building blocks of nearly all life forms—animals, plants, and microbes. The human body is composed of trillions of cells, including muscle cells, heart cells, and red and white blood cells. Most human cells are tiny sacs of fluid wrapped in a thin membrane. Inside the cell is its nucleus, which contains the body’s genetic blueprint, coded in DNA. The genetic code gives cells their ability to replicate as well as to differentiate into their particular cell type. As living entities, cells also have the ability to metabolize food and to get rid of waste products.

Neurons are cells that carry signals in the body, much like copper wires carry signals in electrical circuits. Because neurons function something like wires, they can be very long: The longest neuron in the human body reaches from the base of the spine to the toes. We can think of neurons as serving three functions underlying intelligent behavior. First, neurons relay information from the senses. It’s impossible to interact effectively with the external world unless we have some idea of what’s going on outside. Our senses provide that information by carrying sensations detected by our eyes, ears, and other sense organs to the brain. In order to behave intelligently, we also need to activate muscles to control movement, whether walking, talking, or engaging in other forms of muscle control. Accordingly, a second function of neurons is to enable us to act on the environment through precisely articulated muscle activation. A third neuronal function is most relevant to our quest to understand intelligence. Although neurons are distributed through our bodies, they are concentrated in two locations, the brain and the spinal cord. In the brain especially, amassed neurons are the basis for the spectrum of information-processing activity we call “thinking.” These three functions—sensory input, muscular output, and computation—are all components of intelligent behavior.

Because neurons are the anatomical basis for intelligence at the microscopic level, we need to probe more deeply into how they work. If neurons are the equivalent of wiring in a complex electrical system, say a computer, then to a first approximation a large number of neurons would seem to be advantageous. The human brain is particularly large in comparison to the brains of most other species, with about a trillion (1,000,000,000,000) neurons. For any individual, this number stays fairly constant through the life span, a fact that led some scientists to infer that adults lose the ability to generate new neurons.37 As children, some of us were warned against reckless behavior because, it was assumed, brain cells could never be regenerated. We now know that this is not the case. Researchers have found that the adult brain can generate new neurons in the hippocampus, a brain structure that plays a key role in memory formation.38

One trillion neurons is such a huge number that it is nearly unfathomable. To gain some perspective, it equates to roughly 100 times the human population of the earth. That’s impressive, yet it’s not so much the number of neurons that expresses the intricacy of the human brain, but rather the number of connections between neurons that shows just how complex the brain is. After all, the number of neurons in the brain is irrelevant if those neurons are not connected properly. Each neuron typically connects to a thousand or so other neurons. This means that the total number of neuron-to-neuron connections in a single brain is a far greater number—approximately a quadrillion (1,000,000,000,000,000). This number is roughly equal to the number of ants living on the entire earth. The brain’s dazzling intricacy has inspired some neuroscientists to claim that it is the most complex object in the universe.
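The arithmetic behind these figures is simple enough to check directly. The Python sketch below multiplies the chapter’s round numbers for neurons and connections per neuron, and compares the neuron count with the world’s population. The population value of 8 billion is an assumption introduced here for illustration; it is not a figure taken from the text.

```python
NEURONS = 10**12                # "about a trillion", as stated in the text
CONNECTIONS_PER_NEURON = 1_000  # "a thousand or so", as stated in the text
WORLD_POPULATION = 8 * 10**9    # assumption: roughly 8 billion people

# Total neuron-to-neuron connections implied by the two round numbers.
total_connections = NEURONS * CONNECTIONS_PER_NEURON

# How many neurons there are for every person on earth.
neurons_per_person = NEURONS / WORLD_POPULATION

print(f"{total_connections:,}")  # 1,000,000,000,000,000
print(neurons_per_person)        # 125.0
```

With these inputs the product is a quadrillion, and the ratio to the assumed population comes out at 125, the same order of magnitude as the “roughly 100 times” quoted above.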

The connections between neurons have a special name—synapses. A diagram showing a hypothetical neuron and close-up of a synapse connecting two neurons is shown in Figure 4.2. Notice that the synapse connecting the two neurons has a surprising feature. It is not a physical connection at all. Instead, the synapse is actually a very narrow gap separating the communicating neurons. This gap implies that the form of signaling between neurons is different from the way a signal is carried along the length of a neuron. Within a single neuron, and especially along the cable-like section called an axon, the signal is electrical. A rapidly changing voltage is propagated from one end of the neuron to the other. At the synapse, though, the signal is mediated solely through tiny messenger chemicals called neurotransmitters. In the diagram, the tiny particles shown floating across the synaptic gap from left to right symbolize this chemical signal. If enough neurotransmitters cross the gap, then the next neuron in line will “fire,” continuing the signal down the line to the neuron after it.

Figure 4.2

Neural Activity at the Synapse. Martinez, Michael E., Learning and Cognition: The Design of the Mind, ©2010. Printed and Electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey.

The picture is starting to look complicated. Human intelligence is somehow based on wild profusions of neuronal connections, which are established through a quadrillion microscopically small connections (actually gaps) bridged by neurotransmitters. Even if we take in this complexity and assume that this picture gets even more complicated (and it does!), we can still identify a few simple ideas that give order to concepts that might otherwise seem bewildering. One idea that lends clarity is the association between learning (a mental event) and the formation of new synapses (an anatomical event). This conceptual bridge links what the mind does and how the brain reacts by restructuring itself. Because each life path is uniquely personal, our individual patterns of neuronal connections are likewise uniquely constructed. Far more complex than a fingerprint, the intricate pattern of synapses makes every brain one of a kind, distinguishing even between identical twins, who share the same DNA code. The 1,000,000,000,000,000 or so neuronal connections that compose each brain’s structure form its unique signature, unprecedented and unrepeatable.

The synaptic connections in the brain form our best model for learning and intelligent functioning. Whenever learning occurs, synapses change.39 Learning can involve the formation of entirely new synapses or the modification of existing synapses. It’s possible that people differ significantly in how efficiently their neurons adapt to environmental input. According to one theory, variation in IQ arises directly from how efficiently brains respond to stimulation.40 Brains primed to respond to environmental stimulation, quite possibly through being genetically predisposed to form new connections, are, for that reason, more intelligent. Less adaptable brains are not as capable of forming and maintaining new neuronal connections. This theory of brain adaptability, though somewhat speculative, has the attractive feature of merging important facts from theories of intelligence and theories of brain development.

Research on laboratory rats has provided our best understanding of how learning leads to synapse formation. In one study, rats were divided into groups and raised in one of two conditions.41 One group was raised in cages arranged to encourage play and exploration; another group was raised in barren cages. Rats raised in the more complex environment developed healthier, more capable brains. They had larger cerebral cortices and more extensive brain vascularization. At the microscopic level, their brains also had a greater density of synapses.42 These differences were expressed in the behavior of the animals: Rats raised in more complex environments were better at solving problems, such as how to find their way through a maze.

Applied to humans, the upshot of this research is that the connection between brain microanatomy and learning is direct. The brain’s experience alters its structure. This is true not only at the level of lobes and hemispheres, but also at the microscopic and molecular levels, especially at synapses. We must therefore appreciate that the “intelligence” of a laboratory rat is not the result of a unidirectional causal force from biology to behavior. The reverse direction is also operative. Differences in behavioral experience can “echo back” to modify the organism’s biological structure as manifest in modifications to its neurons.43 The enriched environment of some cages is what produced a greater profusion of synapses and vascularization. This general responsiveness is referred to as the brain’s plasticity. Neuronal structures, whether in rodents or in humans, respond to experience in ways that are sometimes surprising. In our quest to explore the potential of experience—the “nurture” term of the nature/nurture equation—the phenomenon of brain plasticity is a key discovery.44

In human populations, we observe clear examples of brain plasticity among professionals whose work places intense demands on focused kinds of cognition. A professional violinist, for example, relies on extraordinary finger dexterity in her left hand. Much less dexterity is required in the fingers of her right hand, which is responsible for bowing. Fine coordination between sensory inputs and muscular control is handled in the somatosensory cortex, a band of brain tissue that runs crosswise just behind the frontal cortex. Brain scans of the somatosensory cortex in violinists reveal an unusually large region devoted to the fingers of the left hand—much larger than the region that supports finger movement in the right hand.45 The asymmetry shows that the brain has responded to the demands placed upon it. The professional violinist “needs” extraordinary finger control in the left hand, and the brain adapts by recruiting neurons to help support that function. Each finger is allocated relatively more neural “real estate” than would normally be the case. This expansion of function to adjacent neurons is beneficial—to a point. In some very experienced violinists, the recruitment of additional neurons can expand to such a degree that areas of cortex that control individual fingers begin to overlap. This results in a decline in finger dexterity, a condition known as focal dystonia. A similar pattern of degradation has been identified among Braille readers. Regions of brain tissue needed to read the elevated dots composing Braille symbols initially expand in such a way that increases sensitivity and reading efficiency. When those same brain areas expand into areas that support adjacent fingers, sensitivity and efficiency can decline.46

The brain’s responsiveness to the cognitive demands of work has likewise been documented in a study of London taxi drivers. Unlike the grid pattern common to many capital cities, London streets run helter-skelter, often intersect at obtuse angles, and generally present drivers with a layout that is quite challenging to master. To become licensed, prospective taxi drivers must demonstrate a thorough knowledge of the London streets and traffic patterns so that they can efficiently navigate between any two points. In this study, the demands on long-term memory as well as spatial reasoning were particularly significant because the research was conducted in the 1990s, prior to widespread use of GPS navigation devices. Perhaps not too surprising, brain scans of the 16 London taxi drivers showed a particularly large posterior hippocampus, a region of the brain that supports two-dimensional spatial processing. The more intriguing finding is that, over time, the drivers’ brains appeared to respond to the memory demands: The posterior hippocampus was largest in taxi drivers with more than 40 years of experience navigating the streets of London.47

Violinists and taxi drivers typically devote thousands of hours to their professions. Given such prolonged experience, the brain’s adaptation to long-term demands may not be too surprising. Yet brain adaptation seems to accompany short-term learning and skill development as well. One study used the skill of juggling to investigate this possibility. The point was to determine whether learning to juggle would result in measurable changes to the brain. Researchers divided a group of 24 nonjugglers into two groups, asking 12 participants to independently learn how to juggle using the basic three-ball cascade routine. No new demands were placed on the other 12 participants.

After three months, all 12 in the experimental group were successful in learning to juggle for at least 60 seconds. When their brains’ morphologies were compared before and after learning to juggle, the scans showed changes in regions specific to visualization in the temporal lobes. The total volume of those areas increased an average of 3%—a small but measurable difference.48 No such structural changes were detected in the brains of the nonjuggling group. A third scan was conducted three months later. In the intervening period, none of the study participants practiced juggling. The third scan showed that the structural changes had reversed partially and now showed only a 2% structural expansion over baseline levels. The study’s authors interpreted the findings as showing that the human brain’s macrostructure can change in direct response to training. The specific mechanisms underlying the structural change were not completely clear—local expansion of gray matter might be the result of changes in existing neurons or, possibly, production of new neurons. Whatever the full explanation, the brains of jugglers adapted structurally, presumably in response to, at most, a few dozen hours of practice.

The research on brain adaptation tells an important story: The brain responds to the specific demands placed upon it; in particular, it exhibits structural changes as it adapts. Those changes vary in intensity and scale: Some alterations amount to no more than heightened sensitivity of the connections between neurons. A more significant form of adaptation is the formation of new synapses, which supports longer-term knowledge and skill development. The most radical structural change entails rewiring large sections of the cortex. Although we are used to thinking about learning along the timeline of minutes or hours, wholesale brain rewiring can occur over the course of many years.49 We see manifestations of significant neurological “remodeling” in the neural organizations of professional violinists and taxi drivers. Such remodeling might even include brain enlargement. Some scholars have advanced the intriguing hypothesis that correlations between brain size and IQ might arise, at least in part, from the behavior of individuals. Those who seek more intellectually challenging environments might experience a small but significant gain in total brain volume.50

The research evidence is quite clear: The human brain exhibits amazing flexibility. We saw evidence of this flexibility in the brain’s adaptations to the professional demands on violinists and taxi drivers. Dramatic manifestations of functional flexibility are also apparent when large sections of the brain are injured or must be removed surgically. In some instances, trauma results in very little reduction of cognitive function; in other cases, those cognitive functions that are compromised can be substantially relearned. Sometimes this means that functions originally supported by one brain region are regained when the brain “reassigns” those functions to new areas. So while the brain’s anatomy maps to specific cognitive functions, the brain can deviate from those mappings when its plasticity is what the organism needs.

Studies of brain plasticity tell us a lot about the long-term effects of the environment on brain structure. Brain adaptations often occur over very long spans of time—years in the case of many vital forms of cognitive development, such as becoming literate, growing the capacity for abstract thought, or building the knowledge base constituent to expertise. But anyone who has been startled by a loud sound knows that the brain can also respond very rapidly to stimuli. A complete stimulus-response event can take place in mere fractions of a second. These very rapid adaptations are surprisingly informative. They, too, can tell us quite a bit about how minds differ and why. We now shift our attention to those rapid and evanescent changes in neural activity commonly known as brain waves.

Functionally, the brain is an electrochemical organ that transmits information over biological circuits. Electrical activity in the brain can be monitored by means of electrodes attached to the scalp. This is the basis of electroencephalographic (EEG) research. As in IQ measurement, individual differences make EEG research possible as well as interesting. People differ in the patterns of brain waves that are picked up in an EEG study. One way to explore those differences is by studying so-called event-related potentials (ERPs). Here, an “event” is simply the presentation of some stimulus, such as a sound or a flash of light, and “potential” refers to voltage changes at the scalp. So, quite simply, ERP research involves studying the voltage changes on the scalp when the brain reacts to a new stimulus. Brains respond quickly to unexpected stimuli, so important voltage changes occur within the time span of a single second. This means that changes in wave forms are described in milliseconds (1/1000 of a second), depending on how quickly they occur after the stimulus “event.”

The question we want answered is whether variations in EEG patterns relate to differences in IQ. Many decades of research have confirmed regular associations between IQ and evoked potentials. People respond differently depending on whether the stimulus is novel or familiar. Normally, we are alert to new stimuli—think of how a police siren gets our attention. We are instantly alert, but as the seconds pass we become less focused on the siren and expand our attention to the other objects and events in our immediate environment. Even though this pattern is common, it varies somewhat depending on the person. Precise measurements of brain waves show that high-IQ subjects respond with greater intensity to novel stimuli and with less intensity to familiar stimuli.51 In other words, more intelligent brains are hyperalert to novelty, but then adjust rapidly to the new information and thereafter pay less attention to what is by now familiar. A comparable pattern has been found in infants: “Smarter” babies adapt rapidly. Infants who quickly shift attention away from familiar patterns and toward new patterns tend to have higher IQ scores years later.52

Other aspects of brain waves are amplitude (height) and complexity. Researchers have sometimes found associations between these wave parameters and IQ, but the associations are inconsistent. Findings from one research study are often unconfirmed in another. There is one exception, however. Among the various wave forms, one has shown consistent results—the p300. This is the positive (p) spike that occurs approximately 300 milliseconds after the stimulus. A graph showing the p300 is depicted in Figure 4.3.

To interpret the graph, note that positive voltages, or “spikes,” extend downward. As its name p300 suggests, the time delay of the positive spike is typically 300 milliseconds, but it can occur as long as 600 milliseconds after the stimulus. The graph shows a p300 spike at about 400 milliseconds. Longer delays, called latencies, are associated with unfamiliar or more complex stimuli, but latencies also vary from person to person. Researchers not only measure the delay of the p300, they also measure its height, or amplitude. Both parameters—wave height (or amplitude) and time delay (latency)—correlate with IQ scores.

The p300 is the brain’s signature response to recognition, revealing a sense of “I know what that is.” Higher IQs are associated with higher amplitudes and shorter latencies—in everyday language, with reactions that are intense and fast. The associations with IQ have been most consistent with latency. Because shorter latencies accompany higher IQs, the correlations between p300 latency and IQ are negative, in the range of r = -.30 to r = -.50. Shorter latencies imply that intelligence is associated with rapid recognition, which, in turn, implies efficient information processing. Even though the differences in the p300 latency are measured in fractions of a second, the marginal advantages in efficiency may accumulate over time. A small advantage can, in aggregate, lead to superiority in rendering effective decisions, learning abstract information, and solving complex problems.
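To make the two p300 parameters concrete, here is a minimal sketch of how latency and amplitude might be read off a waveform. Everything in it is hypothetical: the waveform is an invented bell-shaped bump rather than a real recording, the function names are made up for illustration, and the 250 to 600 millisecond search window is an assumption loosely based on the range described above. Real ERP analysis involves averaging many trials and filtering out noise, which this sketch omits.

```python
import math

def synthetic_erp(peak_ms=400.0, peak_uv=8.0, width_ms=50.0, duration_ms=800):
    """Return (times, volts) for an invented waveform with one positive bump.

    One sample per millisecond; voltages are in made-up microvolt units.
    """
    times = list(range(duration_ms + 1))
    volts = [
        peak_uv * math.exp(-((t - peak_ms) ** 2) / (2 * width_ms ** 2))
        for t in times
    ]
    return times, volts

def p300_peak(times, volts, window=(250, 600)):
    """Return (latency_ms, amplitude) of the largest positive value in the window.

    The window bounds are an assumption, not a standard from the text.
    """
    start, end = window
    candidates = [(v, t) for t, v in zip(times, volts) if start <= t <= end]
    amplitude, latency = max(candidates)
    return latency, amplitude

times, volts = synthetic_erp()
latency_ms, amplitude_uv = p300_peak(times, volts)
print(f"latency: {latency_ms} ms, amplitude: {amplitude_uv:.1f}")
```

In this toy waveform the peak sits wherever `peak_ms` places it, so changing that argument is a simple way to see how a longer or shorter latency would register.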

Figure 4.3

Example of an Evoked Potential Illustrating the P300 Spike.

Another way to understand the relationship of intelligence to the brain is to explore its basis in brain metabolism. To do so, we cannot rely on measures of voltage fluctuations at the scalp. Instead, we need tools that can more directly measure brain activity internally by capitalizing on sophisticated imaging technologies, such as CT and PET scanners. CT, PET, and other imaging technologies measure somewhat different aspects of brain activity or metabolism, but all give us insight into brain activity at a greater level of precision than that afforded by clinical studies of brain damage or by external recordings of electrical activity (EEGs). To illustrate, let’s consider what PET scans tell us about intelligent brains.

PET stands for positron emission tomography. PET scans require injecting a small amount of radioactive glucose into the bloodstream. Glucose serves as the basic fuel for the body’s cells, so it is absorbed and metabolized throughout the body. However, glucose is absorbed fastest by the most active cells. This means that regions of the brain that are most active will absorb comparatively more of the tracer glucose. Differential uptake is important, but so is decay of the radioactive portion. An unstable fluorine atom on the glucose molecule releases a positively charged electron, called a positron. The released positrons scatter in all directions, and so the function of the PET scanner is to determine the points in the brain from which the positrons are emanating. By showing which brain areas produce relatively more positron emissions, the PET device can infer quite precisely which areas are most metabolically active. Cognitive neuroscientists extend the chain of inferences one link further: They reason that the most active brain areas mediate the cognitive functions in play while scanning occurs.

Through a chain of reasoning, PET technology can say something about the level and nature of thinking that occurs during the scan. With that as background, let’s now examine more directly the connections between brain activity and intelligence. In particular, let’s consider a study of how brain metabolism is associated with a classic test of intelligence, Raven’s Matrices. The most basic question we can ask is whether the brain is more active when engaged in Raven’s Matrices than in a less demanding task. Research confirmed that those who worked on a repetitive task consumed less glucose than those who worked on Raven’s Matrices. This is exactly what we would expect: As the brain works harder, it consumes fuel faster.

The surprise came when comparing the scores on Raven’s to the glucose consumption rates. Brain metabolism and Raven’s scores were negatively correlated, indicating that those who had the highest scores had the lowest rates of glucose consumption.53 Apparently, higher-IQ participants solved the puzzles most efficiently. Not only was their cognitive performance higher, their brains were working less hard to achieve a superior result. This pattern has since been replicated using a variety of IQ measures, with correlations between IQs and brain metabolisms averaging about r = -.50.54 One possible explanation for the negative correlations is that higher-IQ people have relatively more gray matter in regions correlated with scores on intelligence tests. The larger volumes of gray matter would require less average metabolic activity in the relevant brain region.55 Another possibility is that more intelligent brains simply operate more efficiently regardless of size or composition.
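For readers unfamiliar with the statistic, the sketch below shows how a correlation coefficient such as r is computed and why it comes out negative when one measure falls as the other rises. The numbers are invented purely for illustration and are not data from the studies cited; the variable names are likewise hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations from the means (unnormalized covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations (unnormalized variances).
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Invented values for six hypothetical participants; not real study data.
test_scores = [12, 15, 19, 22, 26, 30]           # higher means better performance
glucose_rate = [9.1, 8.7, 8.9, 7.8, 8.0, 7.2]    # arbitrary metabolic units

r = pearson_r(test_scores, glucose_rate)
print(f"r = {r:.2f}")
```

Because the higher scorers in this made-up sample tend to have the lower glucose rates, the coefficient is below zero, which is the pattern the negative correlations in the text describe.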

The brain’s capacity for intelligent thought is partly attributable to its ability to learn from experience. Memory is therefore one aspect of the intelligent mind, though it is far from the only, or even the most important, component of intelligence. Still, given that memory plays a role in the intellectual toolkit, we need to consider how the brain supports the formation, storage, and retrieval of memories.

Many years ago, neuroscientists thought that the brain might store complete and accurate memories from the entire life experience. If true, then every single event would be recorded in the brain, potentially available for playback if the right cue were presented to trigger that particular memory. This hypothesis was famously set forth by the great Canadian neurosurgeon Wilder Penfield based on his experiences performing brain surgery on epileptic patients. While preparing to remove brain structures to alleviate the symptoms of epilepsy, Penfield stimulated the surface of the brain to create a “map” of the patient’s brain to improve surgical accuracy. The patients were awake during the procedure through conscious sedation.

When their brains were probed, some of Penfield’s patients reported “hearing” sounds or “seeing” flashing lights. None of these reports were unusual; sensations of light and sound had previously been reported by patients undergoing brain surgery. But when Penfield stimulated the temporal lobes on either side of the brain, something unusual occurred: Some patients immediately described vivid personal memories, such as a familiar song or the voice of an old friend. The patients seemed to be reliving events from their past. In some cases, restimulating the same spot caused an identical memory to be reexperienced. Penfield interpreted these findings by hypothesizing that stimulation applied to the brain surface “unlocks the experience of bygone days.”56 To Penfield, these results “came as a complete surprise.” He summed up his findings by saying that “there is within the brain a … record of past experience which preserves the individual’s current perceptions in astonishing detail.” The view of human memory as complete and accurate—much like a video camera—came to be widely believed. Is it true? Does the brain store a complete record of every life experience? For better or worse, the answer is no.

Penfield’s hypothesis, though fascinating in the extreme, was incorrect. Instead of being complete and accurate, human memory is highly selective and vulnerable to distortion. As every lawyer knows, any two eyewitnesses to an accident or crime scene can remember events quite differently, even though both feel confident in the accuracy of their memories. Conflicting memories of events are in fact a recurring challenge to the practice of trial law.57 That’s precisely because memory does not function like a video camera. Instead, long-term memory is an interpretation of experience filtered through the mind’s preexisting categories, values, and expectations. What we remember may have little to do with what actually occurred.

Penfield’s interpretation of memory as complete and accurate was challenged in two ways. First, only about 10% of Penfield’s patients reported reliving life experiences when their brains were probed. Second, and more significant, patients’ recollections during surgery were never confirmed for accuracy. Electrical stimulation might well have evoked personal memories, but those could have been exactly the sort of incomplete and highly processed remembrances that we hear whenever a person tries to recount an event from the past. Dr. Penfield eventually backed down from his belief that the brain stores a memory of every life experience, but he never renounced the hypothesis completely. Despite the lack of support for Penfield’s original hypothesis, the myth of complete and accurate memory storage lives on. This widely held myth proves that the workings of the human mind and its underlying brain mechanisms are not obvious, and that we can easily acquire false ideas about how they operate.

Even if we set aside Penfield’s hypothesis as a poor generalization of how memory works, we know that some rare individuals do in fact have highly accurate memories. Commonly called photographic memory, the ability to remember past events—especially factual information—in great detail is known by psychologists as eidetic memory. The most celebrated “eidetiker” was the Russian journalist Solomon Shereshevskii. While working as a newspaper reporter, Shereshevskii frustrated his editor because the reporter never took notes while working on an assignment. Notes were superfluous: Shereshevskii could remember the details of any story—who, what, when, and so on—indefinitely and with perfect accuracy.

The case of the young reporter was fascinating to the Russian psychologist Alexander Luria, who studied Shereshevskii and, to preserve anonymity, gave him the code name “S.” Luria confirmed that S’s memory was indeed extraordinary, noting that

his memory had no distinct limits… there was no limit either to the capacity of S’s memory or to the durability of the traces he retained…. He had no difficulty reproducing any lengthy series of words whatever, even though these originally had been presented to him a week, a month, a year, or many years earlier.58

While documenting the case of S, Luria discovered many aspects of his psychology that were less than impressive and, in some cases, alarming. First, S had only average intelligence. This fact alone should prompt us to question whether having a highly accurate memory is necessarily a contributor to intelligent functioning. Of course, intelligence is manifest not only on IQ tests but also in behavior. Yet in practical ways as well, S was not the picture of a psychologically integrated human being.

According to Luria, S seemed to owe his extraordinary memory to a habit of forming profuse and unusual connections. He thought of numerals, for example, not simply as symbols but as characters with imputed personalities—7 was a man with a mustache, and 8 was a very stout woman! Normally, the ability to form connections between ideas is a functional advantage to cognition, but in S the connections were so extensive that they often resulted in confusion. S could remember details, but at the cost of forgetting context, such as whether a particular conversation took place last week or last year. Eventually, his mind came to resemble “a junk heap of impressions” more than an orderly database.59 These drawbacks began to take a serious toll. S could no longer carry out his professional responsibilities or live a normal life. Eventually, Solomon Shereshevskii was committed to an asylum for mental pathology. The story of S lives on in the annals of psychology as a vivid reminder not to confuse an extraordinary memory with either high levels of intelligence or an effective and satisfying life.

A different variety of extraordinary memory was reported in 2006 by neuroscientists at UCLA and the University of California, Irvine.60 The case involved a 40-year-old woman, “AJ,” who had an uncanny ability to recall the events of her life over a span of several decades. Given any calendar date from 1974 forward, AJ could instantly recall the day’s events. Her extraordinary memory was focused exclusively on personal information—the events of her life. In that way it differed from the heightened memory capabilities of S and, indeed, all other known cases of exceptional memory. AJ’s mental habits seemed to bolster her autobiographical memories. Interviews revealed that she had an uncontrollable tendency to constantly mentally “relive” events from her past. She described her daily conscious experience as viewing two movies simultaneously—one focused on her present experience and the other a rerun of past events.

AJ’s memory for autobiographical events was striking, but it did not confer the advantages we normally associate with high intelligence. Indeed, AJ’s measured IQ was a modest 93, within the range of normal but slightly below average, although on memory subtests she scored somewhat higher than average. Academically, AJ was not a standout student: She received mostly Cs, with a smattering of Bs and an occasional A. Although AJ earned a bachelor’s degree, she admitted that learning course material was not easy. Somewhat reminiscent of the Russian mnemonist S, AJ reported suffering from recurrent anxiety and depression, which over her adult years were treated with medication and psychotherapy. What can we learn from the cases of AJ, S, and other rare individuals who exhibit extraordinary memory? A fair conclusion is that a photographic or eidetic memory is not necessarily the boon that most people would believe. An extraordinary memory does not lead in any predictable way to academic or career success, nor to a satisfying life.

Before leaving the fascinating topic of exceptional memory, let us explore unusual modes of perception that, in some cases, may enhance a person’s ability to remember information. Solomon Shereshevskii, for example, routinely forged connections between new information and the extant knowledge in his long-term memory. Those connections included interpreting digits as having personalities. As in the case of S, the ability to see more than the stark information in everyday experience is found among people who have the capacity for synesthesia, the rare phenomenon in which sensory input in one modality is experienced in a second modality.61 One of the most extraordinary forms of synesthesia involves the ability to see sounds as color. For such synesthetes, hearing a piece of music produces a continuously varying experience of color in addition to the normal perceptions of sound variation in pitch, tone, timbre, and rhythm. Streams of color drift through the synesthete’s field of view, with hue varying by pitch and brightness increasing or decreasing in correspondence with the musical dynamics.

The most common form of synesthesia is grapheme-color synesthesia. Its defining characteristic is that letters of the alphabet are perceived as having colors. The letter “A,” though printed in black ink, will commonly appear to the synesthete as red. Other letters also appear as having consistent mappings to specific colors. Neuroscientists have found objective evidence for the synesthete’s subjective experience: Brain scans reveal that the perceptions of letters activate color-processing areas in the brains of grapheme-color synesthetes. Those same regions are inactive in the scans of nonsynesthetes.

A synesthete may assume that everyone else perceives the alphabet as differentiated by color, producing quite a shock when she discovers that her perceptions are unusual. One synesthete, Lynn Duffy, remembered vividly when at age 16 she realized that her perceptions were not like everyone else’s. During a conversation with her father, Duffy described what happened when she changed a “P” into an “R.” She said to him:

I realized that to make an R all I had to do was first to write a P and then draw a line down from its loop. And I was so surprised that I could turn a yellow letter into an orange letter just by adding a line.62

Duffy’s father was startled to hear Lynn’s statement. He had no idea that his daughter perceived the alphabet in distinct colors.

A fascinating variety of synesthesia is the lexical-gustatory form in which the synesthete experiences sounds as distinct tastes. One synesthete reported, for example, that the sound of the letter “K” evoked the taste of an egg. Such odd cross-sensory experiences are uncommon: Only about 1 person in 200 experiences synesthesia. Though rare, the forms of synesthesia are quite varied—more than 100 varieties have been identified.

The experiential reality of synesthesia is well documented through highly consistent self-reports over time as well as through corroborating data from brain-imaging studies. Even so, the precise neurological basis of synesthesia is unclear. One quite intuitive theory postulates neurological cross-wiring. This explanation is credible given that brain regions that process written symbols and the regions that process color are anatomically quite close. In the brain of the synesthete, neurons may cross into adjacent regions that are normally neurologically distinct. A related hypothesis is that the inhibitory neurotransmitters in synesthetes may not effectively isolate neuron activity, and so excitatory signals might spill over into adjacent brain regions.

Though neurologically abnormal, synesthesia is not typically disadvantageous. Unlike S, most people who experience synesthesia are not predisposed to psychopathology, and in fact may be cognitively advantaged in some ways. Synesthetes may experience superior memory for new information because it is encoded in multiple forms, as in the case of S. Synesthetes might also be advantaged in creative pursuits as the firsthand experience of cross-modality sensation may provoke metaphors that can be expressed artistically. Subjectively, the experience of synesthesia is often reported as either neutral or pleasant.

Both eidetic memory and synesthesia are statistically very rare. Neither should be considered to be necessarily advantageous to the affected person. If a “photographic” memory or cross-sensory experiences are helpful at all, they will most likely be so in narrower pursuits. Neither has the kind of sweeping power that is normally meant by the word intelligence.

Brain-imaging technologies give us exciting insights into how neurological functioning relates to intelligence. Those technologies—PET, CT, and other methods—connect to a far older “technology” of tests and test scores. The classical approach to studying human intelligence is the psychometric paradigm, which rests firmly on correlations among test scores and the search for underlying factors. Starting with Spearman and Thurstone, research on intelligence relied on psychometric techniques, especially factor analysis, to reveal patterns among diverse tests and, ultimately, to give us insight into the nature of human intelligence.

The psychometric paradigm, though powerful, is not without limitations. One downside of the psychometric approach is that it often treats cognitive factors as “black boxes” whose inner workings remain largely mysterious. The term “black box” implies that such factors as fluid intelligence, verbal ability, and spatial ability tend to be accepted at face value, which forestalls probing their nature more analytically. Insufficient attention is paid to what forms of thinking occur when people exercise these abilities. We need to ask: How do intelligent people represent knowledge and reason through problems? Above all, we want to know: How does thinking differ between people who register high in cognitive ability and those who are less able? Understanding the thought processes that underlie cognitive factors is not really the province of psychometrics. To obtain a more detailed picture of the kinds of thinking that underlie intelligent performance, we must draw upon a different paradigm—cognitive theory.63

The word cognitive means thinking, so we can readily appreciate that the basic goal of cognitive theory is to understand the nature of thought. Superficially, such a quest might seem impossible. The mind’s products—those varied and monumental achievements of civilizations—can be startlingly complex. How can such great achievements—writing a novel, solving a complex mathematical problem, and designing skyscrapers—be possible given the capacity limitations, distorted perceptions, and error-prone reasoning of even the world’s greatest minds? Ultimately, our theories must explain not only how such marvelous feats are possible, but also why people differ so markedly in their ability to achieve them.64 It’s easy to assume that the mind must be unfathomably complex and forever opaque to scientific analysis. Fortunately, this is not so. The basic structure of the mind is surprisingly simple.

We can gain insight into the nature of human cognition by drawing on a very helpful metaphor, the digital computer. Although human minds and computers are quite different in many ways, they do evince some key similarities.65 Both, for example, have multiple forms of memory. Whenever you purchase a new computer, you are likely to be interested in the information storage capacity for various models. Clearly, human cognition also depends quite fundamentally on memory and, like various models of computers, human beings differ in their memory capacity. Human minds and computers are also alike in that both function not simply by storing information, but also by transforming information to make it more useful. In other words, both human minds and computers are information processors. So even though we must be wary of stretching the computer metaphor too far, we can at minimum use the comparison to gain a foothold on understanding how the human mind works. With that in mind, let us explore what cognitive theory has to offer.

Consider as a starting point that the cognitive perspective recognizes two basic forms of memory—short-term and long-term memory—as well as the flow of information between those two fundamental structures.66 Even though you may hear the term “short-term memory” in everyday conversations, it is often used incorrectly. In casual usage, short-term memory refers to memory of recent experiences, such as where you left your keys. More accurately, though, it refers to the content of current thought. Whatever you are thinking about at this very moment—whether it’s the ideas presented on this page or daydreams about your next vacation—that is the content of your short-term memory. Understood this way, the content of your short-term memory corresponds to your immediate attention and awareness. If you are thinking, then short-term memory is “where” that thinking takes place.67

Now let’s consider long-term memory. As the name suggests, this memory holds the vast array of knowledge that each of us acquires over the course of life. Long-term memory stores the totality of our knowledge of words, facts, people, life experiences, skills, and sensory perceptions. To maintain such a vast array of knowledge, long-term memory must be enormous in capacity as well as accommodating to diverse kinds of knowledge. Just how big is long-term memory? Unlike the capacity of your computer’s hard drive, the capacity of long-term memory seems to be limitless. Indeed, the growth of knowledge over time seems to function something like a positive feedback loop in which any knowledge gained becomes the foundation for yet further learning. Layer upon layer, knowledge accumulates over a lifetime, with new knowledge always building on what is already known.

Clearly, short-term and long-term memory are quite different. Whereas long-term memory has virtually boundless capacity, the capacity of short-term memory is very restricted. You can’t possibly think about 20 different ideas at once. At most, your momentary awareness consists of only a few ideas. This defining feature of cognition—the small capacity of short-term memory—is quite important to understanding how the mind works. The human mind can actively hold only a very limited amount of information—you can entertain only a small number of discrete ideas at any given moment.

To illustrate, consider what happens when you try to memorize a new phone number. Memorizing seven digits is not too difficult, but trying to memorize 10 digits might stretch your mind’s ability to remember every digit in the proper order. You might well let a digit slip from memory or reverse the order of two digits. This simple difference—between seven digits and 10—tells us something important. It begins to parameterize short-term memory by pinning a number on its capacity. That number is somewhere around seven pieces of information.68 It also implies that this processing constraint directly affects our ability to handle complexity. We often face decisions or tasks that are extremely data-rich, so much so that they can easily overwhelm our short-term memory. We simply cannot hold every bit of relevant information in conscious awareness. If we cannot fully wrap our minds around a problem, some important pieces of information may slip our memory and, subsequently, performance suffers. In this way, the restricted capacity of short-term memory places limits on our ability to think and act intelligently. This example illustrates how the design of the mind—the cognitive architecture—predicts and explains how the mind works or, in some cases, fails to work. That architecture is the structural basis for all human cognition, including intelligent thought.69

Our understanding of short-term memory has evolved over time. Psychologists eventually came to appreciate that this form of memory not only holds information, it also works on that information. It’s one thing to remember the numbers 10 and 11, but another to multiply them together to get 110. Because the mind actively works on information, we must recognize a related memory function—working memory.70 Short-term memory and working memory are highly correlated but somewhat different in meaning and in how they are measured.71 A typical test of short-term memory is digit span. If I tell you a series of random digits—say, 5, 8, 3, 2, 9—your digit span would be the maximum number of digits you could repeat back accurately. Measuring working memory, though, means that you would have to transform the information in some way, such as by saying the digits in reverse order—9, 2, 3, 8, 5. This is the backward digit span task, a classic measure of working memory. Another working memory task is to read a series of sentences and answer comprehension questions, and to later recall the last words of each sentence.72 Here, again, memory is combined with mental work.
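For readers who like to see a procedure spelled out, the difference between the two digit span tasks can be sketched in a few lines of Python. This is only an illustration, not a standardized test: the function names `is_correct` and `span_score` are hypothetical, and the scoring rule (the longest sequence reproduced correctly) is a simplifying assumption.

```python
def is_correct(presented, response, backward=False):
    """Check one trial.

    Forward span: the response must repeat the digits as presented.
    Backward span: the response must give the digits in reverse order.
    """
    target = list(reversed(presented)) if backward else list(presented)
    return list(response) == target


def span_score(trials, backward=False):
    """Return the length of the longest sequence reproduced correctly.

    `trials` is a list of (presented, response) pairs. This scoring
    rule is an assumption made for illustration only.
    """
    correct_lengths = [
        len(presented)
        for presented, response in trials
        if is_correct(presented, response, backward)
    ]
    return max(correct_lengths, default=0)


# The example from the text: 5, 8, 3, 2, 9 repeated in reverse order.
digits = [5, 8, 3, 2, 9]
print(is_correct(digits, [5, 8, 3, 2, 9]))                 # forward trial
print(is_correct(digits, [9, 2, 3, 8, 5], backward=True))  # backward trial
```

The only difference between the two tasks is the reversal step, which is what turns a test of holding information into a test of holding and transforming it.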

Two additional facts about working memory are important. First, people vary in their ability to hold and transform information in working memory. People with larger working memory capacities have a key advantage because they can solve problems and complete tasks that are more complex. That being so, it should be no surprise that working memory capacity and IQ are highly correlated.73 In some research that correlation is as high as 0.8 or 0.9.74 Working memory also correlates with many other expressions of higher-order cognition, including reading comprehension, writing, following directions, reasoning, and complex learning. All these correlations make sense: As the immediate capacity for processing information increases, the mind can handle more complex information at any given moment, and this advantage ought to apply to virtually any intellectual task. Working memory preserves information even when facing potential distraction by competing or irrelevant information.75 A person with a larger working memory capacity is better equipped to comprehend problems with many elements, think through problems without being overwhelmed, and generate complete and accurate responses. Through exercising control over attention, the mind is primed to operate intelligently. By virtue of data-linked correlations with intelligent thinking, working memory capacity is a fundamental parameter of the mind’s ability to process information, akin to the basic operating parameters of an engine, such as torque or horsepower. Working memory is so important to the mind’s operations that it has been called “psychology’s best hope … to understand intelligence.”76

Now consider this: What if it were possible to increase working memory capacity? For many years, the conventional wisdom among psychologists was that working memory is impervious to deliberate change. Although working memory varied from person to person, within individuals it appeared to be a stable parameter whose precise capacity was presumably an inherited function of the individual’s nervous system. People whose working memory capacity was larger benefited from a superior ability to actively process more information, an advantage that translated to higher scores on cognitive tests, including tests of intelligence. But, like fingerprints, working memory capacity seemed to be dictated by genetics or other factors beyond control. The assumption of fixed capacity now appears to be false; recent research shows that working memory can in fact be expanded. How is that possible?

At one time, it was common to obtain computer memory upgrades by adding memory cards to open slots or by swapping low-capacity memory cards with high-capacity ones. In the human mind, the effect is achieved by the mental equivalent of exercise. Working memory is responsive to tasks that stretch it to its limits. In the psychology laboratory, one task that has achieved this result is the “n-back” procedure.77 This is a computer-based task in which objects are presented on a computer screen. The point is to remember the position of objects in previously presented screens. The key screen might be one back from the present screen, two back, and so on. Difficulty goes up with the complexity of the information on the computer screen as well as how many screens must be kept in memory to answer correctly.
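The logic of the n-back procedure can also be sketched in Python. The sketch rests on assumptions that go beyond the description above: each screen is reduced to a single position label, the participant gives a yes-or-no answer on every screen, and the function names `n_back_targets` and `n_back_accuracy` are hypothetical. Laboratory versions of the task differ in their details.

```python
def n_back_targets(stimuli, n):
    """For each screen, report whether it matches the screen n steps back.

    The first n screens can never be matches, because there is nothing
    n steps before them to compare against.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    return [
        i >= n and stimuli[i] == stimuli[i - n]
        for i in range(len(stimuli))
    ]


def n_back_accuracy(stimuli, responses, n):
    """Fraction of scorable screens where the response was right.

    `responses` holds one True/False answer per screen. The first n
    screens are excluded from scoring (an illustrative assumption).
    """
    targets = n_back_targets(stimuli, n)
    scored = list(zip(targets, responses))[n:]
    if not scored:
        return 0.0
    return sum(t == r for t, r in scored) / len(scored)


# Hypothetical sequence of object positions, scored as a 2-back task.
positions = ["top-left", "center", "top-left", "center",
             "bottom-right", "center"]
answers = [False, False, True, False, False, True]
print(n_back_targets(positions, 2))
print(n_back_accuracy(positions, answers, 2))
```

Raising `n` makes the task harder in exactly the sense described above: more screens must be held in mind at once to answer correctly.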

Presumably, other tasks that gradually and consistently stretch working memory to its limits can produce a similar effect. With lots of practice, for example, you may be able to increase the capacity of your backward digit span. But increasing your backward digit span is, by itself, rather pointless. After all, the point is not to increase your ability to repeat a string of digits backward, but something more significant: To increase your working memory capacity for all kinds of stimuli on a wide variety of tasks. That sort of memory augmentation would be truly advantageous. The true test of whether memory enhancement works is whether or not we find evidence of performance gains on different tasks—tasks that also challenge working memory but in ways not previously trained or tested. In the language of psychology, the question is whether apparent gains in working memory capacity transfer to new tasks.78 Recent research shows that gains in working memory capacity do, in fact, transfer.79 Working memory, a central component of the intelligent mind, can be expanded with benefits extending beyond the original training materials. For those interested in the elevation of human intelligence, this is welcome news.