CHAPTER 11

Low Tech: Social Engineering and Physical Security

In this chapter you will

• Define social engineering

• Describe different types of social engineering techniques and attacks

• Describe identity theft

• List social engineering countermeasures

• Describe physical security measures

As the story goes, a large truck was barreling down a highway one day carrying equipment needed to complete a major public safety project. The deadline was tight, and the project would be doomed to failure if the parts were delayed for too long. As it journeyed down the road, the truck came to a tunnel and was forced to a stop—the overhead clearance was just inches too short, not allowing the truck to pass through, and there was no way around the tunnel. Immediately calls were made to try to solve this problem.

Committees of engineers were quickly formed and solutions drawn up, with no idea too outlandish and no expense spared. Tiger teams of geologists were summoned to gauge the structural integrity of the aging tunnel in preparation for blasting the roof higher for the truck to pass. The U.S. Air Force was consulted on the possibility of airlifting the entire truck over the mountain via helicopter. And, while all this was going on, hundreds gathered at the blocked entrance to the tunnel, everyone postulating their own theory.

A little girl wandered out of the crowd and walked up to the lead engineer, who was standing beside the truck scratching his head and wondering what to do. She asked, “Why is the truck blocking the road?” The man answered, “Because it’s just too tall to get through the tunnel.” She then asked, “And why are all these people here looking at it?” The man calmly answered, “Well, we’re all trying to figure out how to get it through to the other side without blowing up the mountain.” The little girl looked at the truck, gazed up at the man, and said, “Can’t you just let some air out of the tires and roll it through?”

Sometimes we try to overcomplicate things, especially in this technology-charged career field we’re in. We look for answers that make us feel more intelligent, to make us appear smarter to our peers. We seem to want the complicated way—to have to learn some vicious code listing that takes six servers churning away in our basement to break past our target’s defenses. We look for the tough way to break in when it’s sometimes just as easy as asking someone for a key. Want to be a successful ethical hacker? Learn to take pride in, and master, the simple things. Sometimes the easy answer isn’t just one way to do it—it’s the best way. This chapter is all about the nontechnical things you may not even think about as a “hacker.” Checking the simple stuff first, targeting the human element and the physical attributes of a system, is not only a good idea, it’s critical to your overall success.

When it comes to your exam, social engineering and physical security aren’t covered heavily. In fact, outside of phishing, many of you won’t see much of this at all on your exam. That does not mean it’s not important—in my humble opinion, social engineering is as important as many of the technical efforts you’ll use on your job. I’m not saying you’ll always be able to talk your way into a hardened facility or gather connectivity credentials just by smiling and talking nicely, but I am saying it’s a very important part of successful hacking and pen testing. And isn’t that what this is all supposed to be about anyway?

Social Engineering

Every major study on technical vulnerabilities and hacking will say the same two things. First, the users themselves are the weakest security link. Whether on purpose or by mistake, users, and their actions, represent a giant security hole that simply can’t ever be completely plugged. Second, an inside attacker poses the most serious threat to overall security. Although most people agree with both statements, they rarely take them in tandem to consider the most powerful—and scariest—flaw in security: what if the inside attacker isn’t even aware she is one? Welcome to the nightmare that is social engineering.

Show of hands, class: how many of you have held the door open for someone racing up behind you, with his arms filled with bags? How many of you have slowed down to let someone out in traffic, allowed the guy with one item in line to cut in front of you, or carried something upstairs for the elderly lady in your building? I, of course, can’t see the hands raised, but I bet most of you have performed these, or similar, acts on more than one occasion. This is because most of you see yourselves as good, solid, trustworthy people, and given the opportunity, most of us will come through to help our fellow man or woman in times of need.

For the most part, people naturally trust one another—especially when authority of some sort is injected into the mix—and they will generally perform good deeds for one another. It’s part of what some might say is human nature, however that may be defined. It’s what separates us from the animal kingdom, and the knowledge that most people are good at heart is one of the things that makes life a joy for a lot of folks. Unfortunately, it also represents a glaring weakness in security that attackers gleefully, and successfully, take advantage of.

Social engineering is the art of manipulating a person, or a group of people, into providing information or a service they otherwise would never have given. Social engineers prey on people’s natural desire to help one another, their tendency to listen to authority, and their trust of offices and entities. For example, I bet the overwhelming majority of users will say, if asked directly, that they would never share their password with anyone. However, I bet out of that same group a pretty decent percentage of them will gladly hand over their password (or provide an easy means of getting it) if they’re asked nicely by someone posing as a help desk employee or network administrator. I’ve seen it too many times to doubt it. Put that request in an official-looking e-mail, and the success rate can go up even higher.

Social engineering is a nontechnical method of attacking systems, which means it’s not limited to people with technical know-how. Whereas “technically minded” people might attack firewalls, servers, and desktops, social engineers attack the help desk, the receptionist, and the problem user down the hall everyone is tired of working with. It’s simple, easy, effective, and darn near impossible to contain. And I’d bet dollars to doughnuts the social engineer will often get just as far down the road in successful penetration testing in the same amount of time as the “technical” folks.

And why do these attacks work? Well, EC-Council defines five main reasons and four factors that allow them to happen. The following are all reasons people fall victim to social engineering attacks:

• Human nature (trusting in others)

• Ignorance of social engineering efforts

• Fear (of consequences of not providing requested information)

• Greed (promised gain for providing the requested information)

• A sense of moral obligation

As for the factors that allow these attacks to succeed, insufficient training, unregulated information (or physical) access, complex organizational structure, and lack of security policies all play roles. Regardless, you’re probably more interested in the “how” of social engineering as opposed to the “why it works,” so let’s take a look at how these attacks are actually carried out.

Human-Based Attacks

All social engineering attacks fall into one of three categories: human based, computer based, or mobile based. Human-based social engineering uses interaction in conversation or other circumstances between people to gather useful information. This can be as blatant as simply asking someone for their password or as elegantly wicked as getting the target to call you with the information (after a carefully crafted setup, of course). The art of human interaction for information gathering has many faces, and there are innumerable attack vectors to consider. We won’t consider them all, because this book is probably already too long and ECC doesn’t care about most of them, so we’ll just stick to what’s on your exam.

Dumpster diving is what it sounds like: a dive into a trash can of some sort to look for useful information. However, the truth of real-world dumpster diving is a horrible thing to witness or be a part of. Dumpster diving is the traditional name given to what some people affectionately call “TRASHINT,” or trash intelligence. Sure, rifling through the dumpsters, paper-recycling bins, and office trash cans can provide a wealth of information (like written-down passwords, sensitive documents, access lists, PII, and other goodies), but you’re just as likely to find hypodermic needles, rotten food, and generally the vilest things you can imagine. Oh, and here’s a free tip for you: make sure you do this outside. Pulling trash typically requires a large area, where the overall smell of what you retrieve won’t infect the building in which you’re operating. Febreze, thick gloves, a mask, and a strong stomach are mandatory. To put this mildly, Internet Tough Guys are often no match for the downright nastiness of dumpster diving, and if you must resort to it, good luck. Dumpster diving isn’t as “en vogue” as it used to be, but in specific situations it may still prove valuable. Although technically a physical security issue, dumpster diving is covered as a social engineering topic per EC-Council.

Probably the most common form of social engineering, impersonation is the name given to a huge swath of attack vectors. Basically the social engineer pretends to be someone or something he or she is not, and that someone or something—like, say, an employee, a valid user, a repairman, an executive, a help desk person, an IT security expert…heck, even an FBI agent—is someone or something the target either respects, fears, or trusts. Pretending to be someone you’re not can result in physical access to restricted areas (providing further opportunities for attacks), not to mention any sensitive information (including credentials) your target feels you have a need and right to know. Pretending to be a person of authority introduces intimidation and fear into the mix, which sometimes works well on “lower-level” employees, convincing them to assist in gaining access to a system or, really, anything you want. Just keep in mind the familiar refrain we’ve kept throughout this book and be careful—you might think pretending to be an FBI agent will get a password out of someone, but you need to be aware the FBI will not find that humorous at all. Impersonation of law enforcement, military officers, or government employees is a federal crime, and sometimes impersonating another company can get you in all sorts of hot water. So, again, be careful.

Of course, as an attacker, if you’re going to impersonate someone, why not impersonate a tech support person? Calling a user as a technical support person and warning him of an attack on his account almost always results in good information.

Tech support professionals are trained to be helpful to customers—it’s their goal to solve problems and get users back online as quickly as possible. Knowing this, an attacker can call up posing as a user and request a password reset. The help desk person, believing they’re helping a stranded customer, unwittingly resets a password to something the attacker knows, thus granting him access the easy way. Another version of this attack is known as authority support.

Shoulder surfing and eavesdropping are other valuable human-based social engineering methods. Assuming you already have physical access, it’s amazing how much information you can gather just by keeping your eyes open. An attacker taking part in shoulder surfing simply looks over the shoulder of a user and watches them log in, access sensitive data, or provide valuable steps in authentication. Believe it or not, shoulder surfing can also be done “long distance,” using vision-enhancing devices such as telescopes and binoculars. And don’t discount eavesdropping as a valuable social engineering effort. While standing around waiting for an opportunity, an attacker may be able to discern valuable information by simply overhearing conversations. You’d be amazed what people talk about openly when they feel they’re in a safe space.

Tailgating is something you probably already know about, but piggybacking is a rather ridiculous term associated with it that you’ll need to remember, even though many of us use the terms interchangeably. Believe it or not, there is a semantic difference between them on the exam, at least sometimes. Tailgating occurs when an attacker has a fake badge and simply follows an authorized person through the opened security door. Piggybacking is a little different in that the attacker doesn’t have a badge but asks for someone to let her in anyway. She may say she’s left her badge on her desk or at home. In either case, an authorized user holds the door open for her even though she has no badge visible.

Another access card attack that’s worth mentioning here may not be on your exam, but it should be (and probably will at some point in the near future). Suppose you’re minding your own business, wandering around to get some air on a nice, sunny afternoon at work. A guy with a backpack accidentally bumps into you and, after several “I’m sorry—didn’t see you man!” apologies, he wanders off. Once back in his happy little abode he duplicates the RFID signal from your access card and—voilà—your physical security access card is now his.

RFID identity theft (sometimes called RFID skimming) is usually discussed regarding credit cards, but assuming the bad guy has the proper equipment (easy enough to obtain) and a willingness to ignore the FCC, it’s a huge concern regarding your favorite proximity/security card. Again, this isn’t in the official study material that I can find, so I’m not sure there is a specific name given to the attack by ECC, but the principle is something you need to be aware of—both as a security professional looking to protect assets and as an ethical hacker looking to get into a building.

Another really devious social engineering impersonation attack, known as reverse social engineering, involves getting the target to call you with the information. The attacker will pose as some form of authority or technical support and set up a scenario whereby the user feels he must dial in for support. And, like seemingly everything involved in this certification exam, specific steps are taken in the attack: advertisement, sabotage, and support. First, the attacker advertises or markets his position as “technical support” of some kind. In the second step, the attacker performs some sort of sabotage, whether a sophisticated DoS attack or simply pulling cables. In any case, the damage is such that the user feels they need to call technical support, which leads to the third step: the attacker attempts to “help” by asking for login credentials, thus gaining access to the system.

For example, suppose a social engineer has sent an e-mail to a group of users warning them of “network issues tomorrow” and has provided a phone number for the “help desk” if they are affected. The next day, the attacker performs a simple DoS on a user’s machine, and the user calls in, complaining of a problem. The attacker then simply says, “Certainly I can help you. Just give me your ID and password, and we’ll get you on your way.”

Regardless of the “human-based” attack you choose, remember that presentation is everything. The “halo effect” is a well-known and well-studied phenomenon of human nature, whereby a single trait influences the perception of other traits. If, for example, a person is attractive, studies show that people will assume they are more intelligent and will also be more apt to provide them with assistance. Humor, great personality, and a “smile while you talk” voice can take you far in social engineering. Remember, people want to help and assist you (most of us are hardwired that way), especially if you’re pleasant.

Social Engineering Grows Up

Seems there’s a certification for everything of import in IT. Everything from the manual build and maintenance of systems up to ethical hacking and data forensics is covered with some kind of official, vetted, sponsored, industry-standard and recognized certification. Heck, we even certify IT managers. I suppose, then, it was only a matter of time before social engineering jumped into the fray.

Social engineering certifications aren’t as popular as many of the others right now, but their popularity, acceptance, and availability are growing. CompTIA offers a certification called the CompTIA Social Media Security Professional (https://certification.comptia.org/certifications/social-media-security), centered mainly on using social media as an attack measure. They hail it as “the industry’s first social media security certification... validating knowledge and skills in assessing, managing and mitigating the security risks of social media,” and they may be right. Other training opportunities include Mitnick Security’s Security Awareness Training (https://www.mitnicksecurity.com/security/kevin-mitnick-security-awareness-training), which specializes in “making sure employees understand the mechanisms of spam, phishing, spear-phishing, malware and social engineering, and are able to apply this knowledge in their day-to-day job,” and several others found with a quick Internet search.

The Social Engineering Pentest Professional (SEPP) certification (https://www.social-engineer.com/certified-training/), offered by Social-Engineer.com founder Chris Hadnagy, is definitely one to note. Mr. Hadnagy created the courseware and certification along with Robin Dreeke, the head of the Behavioral Analysis Program at the FBI, and it has become highly sought-after training. In fact, it was featured at Black Hat in Las Vegas (July 2016) and is endorsed by companies and organizations worldwide.

SANS has also gotten into the game, offering the Social Engineering for Penetration Testers (SEC567) certification (https://www.sans.org/course/social-engineering-for-penetration-testers). Much like SEPP, Social Engineering for Penetration Testers is designed to teach the “how to” of social engineering, utilizing psychological principles and technical techniques to measure success and manage risk. According to the website, “SEC567 covers the principles of persuasion and the psychology foundations required to craft effective attacks and bolsters this with many examples of what works from both cyber criminals and the author’s experience in engagements.”

Social engineering has definitely come of age. I think it, and physical security, are often overlooked in security strategy, but perhaps the education efforts of the community, over time, will change that. Security conferences like Black Hat and Defcon routinely have live social engineering challenges, and videos of social engineering techniques and successes are virtually everywhere now. Either our employees become better educated on the subject, or we’ll find out how bad it can be firsthand.

Finally, this portion of our chapter can’t be complete without a quick discussion on what EC-Council has determined to be the single biggest threat to your security—the insider attack. I mean, after all, they’re already inside your defenses. You trust them and have provided them with the access, credentials, information, and resources to do their job. If one of them goes rogue or decides for whatever reason they want to inflict damage, there’s not a whole lot you can do about it. What if they decide to spy for the competition, to bring home a little extra money from time to time? And if that’s not bad enough, suppose you add anger, frustration, and disrespect to the situation. Might an angry, disgruntled employee go the extra step beyond self-gratification and just try to burn the whole thing down? You better believe they will.

Disgruntled employees get that way for a variety of reasons. Maybe they’re just angry at the organization itself because of some policy, action, or political involvement. Maybe they’re angry at a real or perceived slight: sometimes it’s seeing someone else take credit for their work, and sometimes it’s as simple as not hearing “thank you for doing a good job” enough. And sometimes they’re just mad at the people they work with on a day-to-day basis, whether peers or supervisors. Interpersonal relationships in the workplace are oftentimes the razor’s edge. A disgruntled employee (someone who is angry at the circumstances and situations surrounding his duties, the organization itself, or even the people he works with) has the potential to do some serious harm to the bottom line.

And there’s more to it than just the obvious. While you may instantly be picturing an angry employee “hacking” his way around inside the network to exact revenge on the company, suppose the “attack” isn’t technical in nature at all. Suppose the employee just takes the knowledge and secrets in his head and provides them to the competition over lunch at Applebee’s? For added fun, also consider that the disgruntled employee doesn’t even need to still be employed at your organization to cause problems. A recently fired angry employee potentially holds a lot of secrets and information that can harm the organization, and he won’t need to be asked nicely to provide it. It’s enough to make you toss your papers in the air and take off for the woods. Certainly you can enforce security policies and pursue legal action as a deterrent, and you can practice separation of duties, least privilege, and controlled access all you want, but at some point you must trust the individuals who work in the organization. Your best efforts may be in vetting the employees in the first place, ensuring you do your absolute best to provide everything needed for them to succeed at work, and making sure you have really good disaster recovery and continuity of operations procedures in place.

Finally, in this disgruntled employee/internal user discussion, there’s one other horrifying idea to consider. We’ve discussed before in this book how a hacker always has the advantage of time, so what happens if an attacker is really dedicated to the task and just applies for a job in your organization? We’ve said all along that insider risks far outweigh external ones: the insider is already trusted, so a lot of your defenses won’t come into play. And if that’s the case, what’s to stop a dedicated hacker from applying for a job and working a couple of months to set things up?

Just how hard could it be to generate a good resume and find a working position in the company? I know from experience how difficult it is sometimes to find truly talented employees in the IT sector, and it’s nothing unusual for an HR department to see an IT resume with multiple short-term job listings on it. Hiring managers, over time, can even get desperate to find the right person for a given need, and that’s a gold mine for a smart hacker. The prospect of a bad guy simply walking into the organization with a badge and access I gave him is frightening to me, and it should concern you and your organization as well. Just remember that hackers aren’t the pimply-faced teenage kids sitting in a dark room anymore. They’re highly intelligent, outgoing folks, and they oftentimes have one heck of a good resume.

Computer-Based Attacks

Prepare for a shock: computer-based attacks are those attacks carried out with the use of a…computer. ECC lists several of these attack types, although there are probably more we could find if we really thought about it. Attacks include specially crafted pop-up windows, hoax e-mails, chain letters, instant messaging, spam, and phishing. Add social networking to the mix, and things can get crazy in a hurry. A quick jaunt around Facebook, Twitter, and LinkedIn can provide all the information an attacker needs to profile, and eventually attack, a target. Lastly, although it may be a little more involved, why not just spoof an entire website or set up a rogue wireless access point? These may be on the fuzzy edge of social engineering, but they are a gold mine for hackers.

Social networking has provided one of the best means for people to communicate with one another and to build relationships to help further personal and professional goals. Unfortunately, this also provides hackers with plenty of information on which to build an attack profile. For example, consider a basic Facebook profile: date of birth, address, education information, employment background, and relationships with other people are all laid out for the picking. LinkedIn provides that and more—showing exactly what specialties and skills the person holds, as well as peers they know and work with.

Information such as date of birth seems like legitimate information to mine from social media, but is the rest of that fluff really all that important? Should we really spend time reading others’ Facebook walls? I mean, seriously, what can you do with all those arguments, posted videos of cats, and selfies? Well, consider the following as a small, oversimplified, but very easy to pull off social media attack structure: Suppose you’re a bad guy (or an ethical hacker hired to portray one) and want to gain access to Oinking Pig Computing (a company I just made up, because the little pig toy I have on my desk is begging to be a part of this book). You spend a little time researching OPC and find an employee named Julie Nocab, who is active on Facebook a lot. Julie posts about everything: where she goes, who she hangs out with, pictures of the food she eats, and what projects at work really stink. By reading through these posts, you discover she works for a guy named Bob Krop. You also discover she loves red wine, kayaking, and hanging out with her friends, including somebody named Joe Egasuas, who also works in her department.

You crack your virtual fingers and start thinking about what you can do with this information. You could craft an e-mail to Julie from Bob, asking her about one of the projects she was working on and telling her to open this Excel spreadsheet attachment to update the status. You might also send her a message from Joe about one of their favorite hangouts, alerting her that it was going to close. All she needs to do is click the website link to read the story. Pretty simple example, but you get the drift. The filler for these types of messages comes from the stuff people share on social media without even thinking about it, and a little specific personalization goes a long way toward getting someone to open your message and unwittingly install your access.

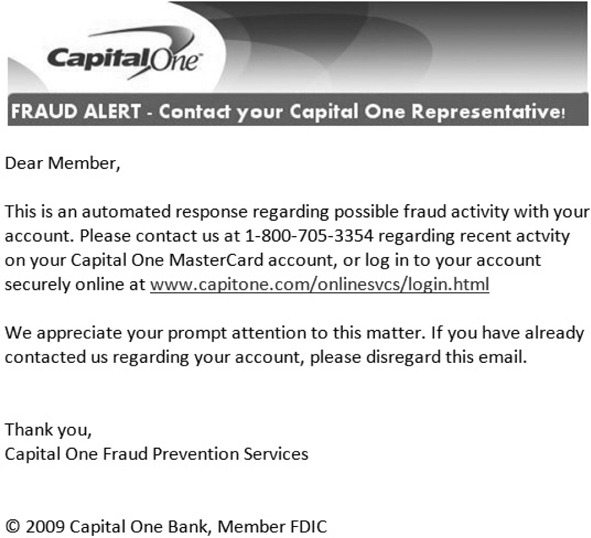

Speaking of the e-mail examples we just talked about, probably the simplest and most common method of computer-based social engineering is known as phishing. A phishing attack involves crafting an e-mail that appears legitimate but in fact contains links to fake websites or to malicious downloads. The e-mail can appear to come from a bank, credit card company, utility company, or any number of legitimate business interests a person might work with. The links contained within the e-mail lead the user to a fake web form in which the information entered is saved for the hacker’s use.

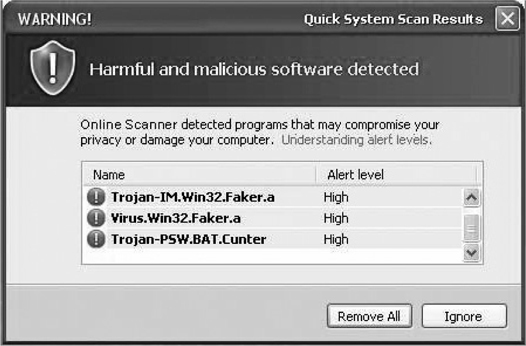

Phishing e-mails can be very deceptive, and even a seasoned user can fall prey to them. Although some phishing e-mails can be prevented with good perimeter e-mail filters, it’s impossible to prevent them all. The best way to defend against phishing is to educate users on methods to spot a bad e-mail and hope for the best. Figure 11-1 shows an actual e-mail I received a long while ago, with some highlights pointed out for you. Although it was a pretty good effort, it still screamed “Don’t call them!” to me. Note the implied urgency and the official-looking logos all over the place. After all, it just has to be real, because nobody could cut and paste logos into an e-mail…could they?

Figure 11-1 Phishing example

The following list contains items that may indicate a phishing e-mail—items that can be checked to verify legitimacy:

• Beware unknown, unexpected, or suspicious originators As a general rule, if you don’t know the person or entity sending the e-mail, it should probably raise your antenna. Even if the e-mail is from someone you know but the content seems out of place or unsolicited, it’s still something to be cautious about. In the case of Figure 11-1, not only was this an unsolicited e-mail from a known business, but the address in the “From” line was cap1fraud@prodigy.net, a far cry from the real Capital One and a big indicator this was destined for the trash bin. Ensure the originator is actually the originator you expect: cap1fraud@capital-one-fraud.com looks really official, but it’s just as fraudulent as a plug nickel.

• Beware whom the e-mail is addressed to We’re all cautioned to watch where an e-mail’s from, but an indicator of phishing can also be the “To” line itself, along with the opening e-mail greeting. Companies just don’t send messages out to all users asking for information. They’ll generally address you, personally, in the greeting instead of providing a blanket description: “Dear Mr. Walker” vs. “Dear Member.” This isn’t necessarily an “a-ha!” moment, but if you receive an e-mail from a legitimate business that doesn’t address you by name, you may want to show caution. Besides, it’s just rude.

• Verify phone numbers Just because an official-looking 800 number is provided does not mean it is legitimate. There are hundreds of sites on the Internet to validate the 800 number provided. Be safe, check it out, and know the friendly person on the other end actually works for the company you’re doing business with. And as a quick note for the real world: professional attackers will always have someone manning a fake 800 number to answer whatever phish they’re trying (they’re also usually the supervisor of someone who might have physically broken in).

• Beware bad spelling or grammar Granted, a lot of us can’t spell very well, and I’m sure e-mails you receive from your friends and family have had some “creative” grammar in them. However, e-mails from MasterCard, Visa, and American Express aren’t going to have misspelled words in them, and they will almost never use verbs out of tense. Note in Figure 11-1 that the word activity is misspelled.

• Always check links Many phishing e-mails point to bogus sites. Simply changing a letter or two in the link, adding or removing a letter, changing the letter o to a zero, or changing a letter l to a one completely changes the DNS lookup for the click. For example, www.capitalone.com will take you to Capital One’s website for your online banking and credit cards. However, www.capita1one.com will take you to a fake website that looks a lot like it but won’t do anything other than give your user ID and password to the bad guys. Additionally, even if the text reads www.capitalone.com, hovering the mouse pointer over it will show where the link really intends to send you.
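Most of the checks in this list are eyeball work, but two of them (whether the sender’s domain belongs to the company, and where a link really points compared with what its text shows) are mechanical enough to sketch in code. The following Python sketch is illustrative only, not a production phishing filter. It assumes you already know the legitimate domain, it uses only the 0-for-o and 1-for-l swaps described above, and the names `audit_email`, `is_lookalike`, and `HOMOGLYPHS` are hypothetical, made up for this example.

```python
from __future__ import annotations

from email.utils import parseaddr
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical substitution table covering only the swaps named in the text:
# the digit zero for the letter o, and the digit one for the letter l.
HOMOGLYPHS = str.maketrans({"0": "o", "1": "l"})


def host_of(url: str) -> str:
    """Return the lowercase host of a URL, tolerating a missing scheme."""
    if "://" not in url:
        url = "http://" + url
    return (urlparse(url).hostname or "").lower()


def belongs_to(host: str, domain: str) -> bool:
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


def is_lookalike(host: str, trusted: str) -> bool:
    """True if host is NOT the trusted domain but matches it once swaps are undone."""
    if belongs_to(host, trusted):
        return False
    return belongs_to(host.translate(HOMOGLYPHS), trusted.translate(HOMOGLYPHS))


class LinkCollector(HTMLParser):
    """Collects (href, visible text) pairs for every <a> tag in an HTML body."""

    def __init__(self) -> None:
        super().__init__()
        self.links = []   # list of (href, text) tuples
        self._href = None  # href of the <a> tag currently open, if any
        self._text = []   # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def audit_email(from_header: str, html_body: str, trusted: str) -> list[str]:
    """Return human-readable warnings; an empty list means no indicator fired."""
    warnings = []
    # Check 1: does the sender's domain actually belong to the trusted company?
    _, address = parseaddr(from_header)
    sender_domain = address.rpartition("@")[2].lower()
    if not belongs_to(sender_domain, trusted):
        warnings.append(f"sender domain {sender_domain!r} is not {trusted!r}")
    # Check 2: for each link, compare the real destination with the text shown.
    parser = LinkCollector()
    parser.feed(html_body)
    parser.close()
    for href, text in parser.links:
        real = host_of(href)
        if is_lookalike(real, trusted):
            warnings.append(f"lookalike link host {real!r}")
        # Only treat the link text as a URL if it looks like one (a dot, no spaces).
        if "." in text and " " not in text:
            shown = host_of(text)
            if shown != real:
                warnings.append(f"link text shows {shown!r} but goes to {real!r}")
    return warnings
```

Fed the sender from Figure 11-1 (cap1fraud@prodigy.net) and a link whose text reads www.capitalone.com but whose href points at www.capita1one.com, this sketch raises three warnings: a foreign sender domain, a lookalike host, and a text/destination mismatch (the same thing hovering the mouse would show you). It does not catch added or removed letters, Unicode lookalike characters, or URL shorteners, which is exactly why user education stays the primary defense.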

Another version of this attack is still phishing (in other words, it involves the use of fake e-mails to elicit a response), but its targeting makes it different. While a phishing attack usually involves a mass-mailing of a crafted e-mail in hopes of snagging some unsuspecting reader, spear phishing is a targeted attack against an individual or a small group of individuals within an organization. Spear phishing usually is the result of a little reconnaissance work that has churned up some useful information. For example, an attacker may discover the names and contact info for all the executives within an organization and decide to craft an e-mail just for that group. And don’t forget spear phishing can be used against a single target as well. Suppose, for example, you discovered the contact information for a shipping and receiving clerk inside the organization. Perhaps crafting an e-mail to look like a bill of lading or something similar might be worthwhile?

And one final note on spear phishing: perhaps not so surprisingly, spear phishing is very effective, even more so than regular phishing. The reasoning for this comes down to your audience. If the audience is smaller and shares a specific interest or set of duties, it’s easier for the attacker to craft an e-mail the recipients will actually want to read. In fact, because it is so successful, spear phishing is the number-one social engineering attack in today’s world, with too many government organizations and business entities falling prey to list here.

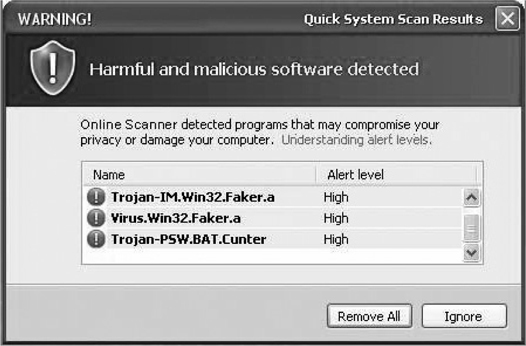

Although phishing is probably the most prevalent computer-based attack you’ll see, there are plenty of others. Many attackers make use of code to create pop-up windows users will unknowingly click, as shown in Figure 11-2. These pop-ups take the user to malicious websites where all sorts of badness is downloaded to their machines, or users are prompted for credentials at a realistic-looking web front. A common implementation is fake antivirus (AV) software that takes advantage of outdated Java installations. Usually hidden in ad streams on legitimate sites, a Java applet is downloaded that, in effect, takes over the entire system, preventing the user from starting any new executables. All that said, modern browsers have developed a near hatred for Java due to all this nonsense, so it’s getting harder and harder to pull off these attacks.

Figure 11-2 Fake AV pop-up

Another successful computer-based social engineering attack involves the use of chat or messenger channels. Attackers not only use chat channels to find out personal information to employ in future attacks, but they make use of the channels to spread malicious code and install software. In fact, Internet Relay Chat (IRC) is one of the primary ways zombies (computers that have been compromised by malicious code and are part of a “botnet”) are manipulated by their malicious code masters.

And, finally, we couldn’t have a discussion on social engineering attacks without at least a cursory mention of how to prevent them. Setting up multiple layers of defense, including change-management procedures and strong authentication measures, is a good start, and promoting policies and procedures is also a good idea. Other physical and technical controls can also be set up, but the only real defense against social engineering is user education. Training users—especially those in technical-support positions—how to recognize and prevent social engineering is the best countermeasure available.

In the real world, though, defense against a very skilled social engineer may be nearly impossible. Social engineering preys on the very things that make us human, and a successful attack really comes down to the right person for the right situation. Male, female, old, young, sexy, ugly, muscular, or thin, it all matters, and it matters differently in different situations. The true social engineering master can figure out what they need to be in a matter of seconds, and before you know it, the attacker who is a pure alpha male in real life turns into a floor-staring introvert in order to achieve the goal. Recognizing what is needed (what role to play, what people in the room will respond to, and so on) is the hard part and is what separates the very successful from the also-rans.

“That’ll Never Happen to Me”

Identity theft is a real, nonstop, ever-present threat in our information age, and no one—not even you, my highly educated and security-minded Dear Reader—is immune. It’s amazing to me that every time someone hears a story about identity theft or scams, they always have the same reaction regarding the victim: “Those poor uneducated buffoons, how could they fall for something that obvious?” But if this were a Jeopardy episode and the preceding title was revealed for the Daily Double, I’d hit my buzzer and respond with “What’s something every victim of identity theft says before they become a victim?”

Since the first edition of this book, I’ve been revisiting statistics and sources, and identity theft was no exception. When I pulled up statistics on ID theft, I had some expectations, based on recent history and such. I thought I’d see declines in overall numbers and maybe some definitive indicators of those most vulnerable. After all, we’re smarter now, right? There are all sorts of ads, TV shows, and movies talking about ID theft. Heck, there are multiple companies who do nothing but ID theft prevention and recovery. So of course it’ll be better now. Right? Man, was I wrong.

According to the U.S. Department of Justice (http://www.bjs.gov/content/pub/pdf/vit14.pdf), statistics on ID theft show it’s still not just the naive among us falling victim, it’s everyone, and it’s happening more now than it used to. Approximately 17.6 million Americans have their identities used fraudulently each year (up from 15 million in 2013), with each reported instance averaging approximately $1343 in losses. And just which groups fall victim most often? How about where you live? Gender? Marital status? Race? Preference between Xbox and PS4? Proclivity to eat fish fried (as God intended) versus grilled?

It seems to have nothing to do with sex or age, Xbox versus PlayStation, and grilled fish eaters, sadly, weren’t called out in the study. Men and women were victimized at nearly the same rate, although slightly more women than men fell victim to ID theft. As for age group, it turns out that’s not a definitive indicator either. Fewer than 1 percent of 16- and 17-year-olds experienced ID theft, and just 1 percent of 18- to 24-year-olds were targeted. Every other age group is a statistical dead heat, with 50–64-year-olds taking the slight lead (mainly due to medical record theft).

The only real statistical differences between groups come down to race, income, and, surprisingly, where you live. Whites were almost three times more likely to be victimized than any other race, and income levels of $75,000 and above blow away lower-income brackets when it comes to ID theft. Interestingly, the catch in these groups doesn’t seem to be the income or race itself (ID thieves don’t necessarily have any idea what income level the target is at). It seems to be more about how that income is used. High-income earners, surprisingly enough, tend to spend more and use their credit cards and IDs much more frequently than other groups. This provides more of a target-rich environment for the bad guys, so, not surprisingly, higher-income groups tend to fall victim more frequently. As for where you live, Florida, Georgia, California, Michigan, and Nevada were by far the worst states to live in for ID theft, while residents of North Dakota, South Dakota, Hawaii, Maine, and Iowa report far fewer incidents.

In any case, it’s worth noting that statistics can be misleading. It may well be that people in higher income brackets simply report ID theft at a higher rate in hopes of criminal prosecution and recovery of losses; somebody stealing $20 isn’t as likely to get you as outraged as someone stealing $20,000. And geography may have more to do with population numbers than any real threat to your identity. ID theft occurs across every demographic, however you try to categorize people, and the methods to pull it off are easy and oftentimes silent. Any attacker can rifle through the trash to find telephone or utility bills and use them at certain DMV offices to garner a new driver’s license in another’s name, and the educated, computer-literate 40-year-old man wouldn’t even know it was going on.

What’s truly concerning in all the ID theft statistics is this sobering note: the overwhelming majority of ID theft victims did not even know they were being victimized. They discovered the ID theft only when the criminal’s activity caused a roadblock in their life, such as a declined credit card or a bad credit rating discovered while trying to buy a car or a home. A smart attacker may do things such as paying the minimum on credit cards opened in the victim’s name to keep things running. In that case, it could take months, sometimes even years, for the victim to learn the extent of the damage. It’s rare that the victim can point to any specific instance where their ID was stolen, so it’s very difficult to pinpoint the vulnerable access points for ID theft.

So what’s the answer to all this? How do you prevent ID theft when oftentimes you don’t even know it’s going on? There isn’t just one way to mitigate ID theft; there are several. You can take steps to prevent ID theft by shredding your documents, signing up for various protection services, keeping watch over your credit, and visiting the FTC’s site on ID theft (a list of the top, most recent scams can be found here: https://www.consumer.ftc.gov/scam-alerts). Stay vigilant with your records and keep an eye out for anything weird. Much like many medical conditions, catching it early is key.

Mobile-Based Attacks

Generally speaking, I despise made-up memorization terms solely for exam purposes, and I used to look at this section in the same way. But recently my thoughts on the matter have changed, since mobile computing, and subsequently mobile attacks, have become so ubiquitous in our lives. Don’t get me wrong, I’m still no fan of memorization terms. But there’s no ignoring the fact that social engineering works on mobile devices, and one could argue mobile is becoming one of its primary attack vectors. For example, consider the “fool-proof” two-factor authentication measures banks and other sites use now: log in on the PC, then have a code texted to you to complete the process. With most of our security eyeballs trained on desktop security, doesn’t the mobile side of it become the logical target?

For example, consider ZitMo (ZeuS-in-the-Mobile), a piece of malware that turned up on Android phones all over the place. Attackers knew two-factor authentication was taking place, so ZitMo was designed to capture the phone itself, ensuring the one-time passwords also belonged to the bad guys. The target would log on to their bank account and see a message telling them to download an application on their phone in order to receive security messages. Thinking they were installing security, victims instead were installing a means for the attacker to have access to their user credentials (sending the second authentication factor to both victim and attacker via text).

Other malware types silently sent SMS messages from the victim’s phone to request premium-rate services. The malware would then delete any return SMS messages acknowledging the charges, ensuring the victim had no idea this was going on until a giant cell phone bill arrived in the mail. Tweak that just a tad to send messages to everyone in the user’s contact list and cha-ching: now several phones are unknowingly installing the malware and racking up charges for the attacker’s services.

Mobile social engineering attacks are those that take advantage of mobile devices—applications or services in mobile devices—in order to carry out their end goal. While phishing and pop-ups fall under computer-based attacks, mobile-based attacks show up as an app or SMS issue. EC-Council defines four categories of mobile-based social engineering attacks:

• Publishing malicious apps An attacker creates an app that looks like, acts like, and is named similarly to a legitimate application.

• Repackaging legitimate apps An attacker takes a legitimate app from an app store and modifies it to contain malware, posting it on a third-party app store for download. For example, recently a version of Angry Birds was repackaged to contain all sorts of malware badness.

• Fake security applications This one actually starts with a victimized PC: the attacker infects a PC with malware and then uploads a malicious app to an app store. When the user logs in to their bank account from the infected PC, a malware pop-up advises them to download bank security software to their phone. The user complies, thus infecting their mobile device.

• SMS An attacker sends SMS text messages crafted to appear as legitimate security notifications, with a phone number provided. The user unwittingly calls the number and provides sensitive data in response. Per EC-Council, this is known as “smishing.”
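The first two categories, lookalike apps and repackaged apps, leave a trail you can check. An Android app has to be signed before it will install. Whoever repackages someone else’s app can’t sign it with the original developer’s private key, so the signing certificate changes. The Python sketch below assumes two things. First, you’ve already extracted the signing certificate bytes from the package with some other tool. Second, `TRUSTED_APPS` is a hypothetical allowlist that maps app names to the SHA-256 fingerprints of their publishers’ certificates. The fingerprint shown is computed from placeholder bytes, not a real certificate, and the 0.8 name-similarity threshold is an arbitrary illustrative choice.

```python
import difflib
import hashlib

# Hypothetical allowlist: app name -> SHA-256 fingerprint of the publisher's signing certificate.
# Computed from placeholder bytes purely for illustration.
TRUSTED_APPS = {
    "Angry Birds": hashlib.sha256(b"placeholder-publisher-cert").hexdigest(),
}


def cert_fingerprint(cert_bytes: bytes) -> str:
    """SHA-256 fingerprint of a signing certificate (already extracted elsewhere)."""
    return hashlib.sha256(cert_bytes).hexdigest()


def assess_app(name: str, cert_bytes: bytes, threshold: float = 0.8) -> str:
    fp = cert_fingerprint(cert_bytes)
    for trusted_name, trusted_fp in TRUSTED_APPS.items():
        # Name similarity in [0, 1]; catches "Angry Birdz"-style lookalikes.
        similarity = difflib.SequenceMatcher(None, name.lower(), trusted_name.lower()).ratio()
        if similarity < threshold:
            continue
        if fp == trusted_fp:
            return "matches trusted publisher"
        if name.lower() == trusted_name.lower():
            # Same name, different signer: someone else re-signed the app.
            return "possible repackaged app (same name, different signer)"
        return "possible lookalike app (similar name, different signer)"
    return "no match in allowlist"


print(assess_app("Angry Birds", b"attacker-cert"))
print(assess_app("Angry Birdz", b"attacker-cert"))
```

Checking the signer rather than the name matters because the name, icon, and behavior are all under the attacker’s control. The original publisher’s private key is not.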

I know you’re thinking that this was a very short section and, surely, I must have left something out. While I could go on and on with mobile attack stories and malware examples from Internet searches, I’ve scoured the ECC official courseware and, I promise you, this is all you need for mobile social engineering. As often repeated throughout this book, you need to keep abreast of this topic as each day goes by. Research mobile vulnerabilities and threats just as you would desktop and network ones, and give mobile security the care and concern it deserves.

Physical Security

Physical security is perhaps one of the most overlooked areas in an overall security program. For the most part, all the NIDS, HIDS, firewalls, honeypots, and security policies you put into place are pointless if you give an attacker physical access to the machines. And you can kiss your job goodbye if that access reaches into the network closet, where the routers and switches sit.

From a penetration test perspective, it’s no joyride either. Generally speaking, physical security penetration is much more of a “high-risk” activity for the penetration tester than many of the virtual methods we’re discussing. Think about it: if you’re sitting in a basement somewhere firing binary bullets at a target, it’s much harder for them to actually figure out where you are, much less to lay hands on you. Pass through a held-open door and wander around the campus without a badge, and someone, eventually, will catch you. And sometimes that someone is carrying a gun—and pointing it at you. I’ve even heard of a certain tech-editing pen test lead who has literally had the dogs called out on him. When strong IT security measures are in place, though, determined testers will move to the physical attacks to accomplish the goal.

And one final note on physical security as a whole, before we dive into what you’ll need for your exam: as a practical matter, and probably one we can argue from the perspective of Maslow’s Hierarchy of Needs, physical security penetration is often seen as far more personal than cyber-penetration. For example, a bad guy can tell Company X that he has remotely taken their plans and owns their servers, and the company will react with, “Ah, that’s too bad. We’ll have to address that.” But if he calls and says he broke into the office at night, sat in the CEO’s chair, and installed a keylogger on the machine, you’ll often see an apoplectic meltdown. Hacking is far more about people than it is technology, and that’s never truer than when using physical methods to enable cyber-activities.

Physical Security 101

Physical security includes the plans, procedures, and steps taken to protect your assets from deliberate or accidental events that could cause damage or loss. Normally people in our particular subset of IT tend to think of locks and gates in physical security, but it also encompasses a whole lot more. You can’t simply install good locks on your doors and ensure the wiring closet is sealed off to claim victory in physical security; you’re also called to think about those events and circumstances that may not be so obvious. These physical circumstances you need to protect against can be natural, such as earthquakes and floods, or manmade, ranging from vandalism and theft to outright terrorism. The entire physical security system needs to take it all into account and provide measures to reduce or eliminate the risks involved.

Furthermore, physical security measures come down to three major components: physical, technical, and operational. Physical measures include all the things you can touch, taste, smell, or get shocked by. Concerned about someone accidentally (or purposefully) ramming their vehicle through the front door? You may want to consider installing bollards across the front to prevent attackers from taking advantage of the actual layout of the building and parking/driveways. Other examples of physical controls include lighting, locks, fences, and guards with Tasers or accompanied by angry German Shepherds. Technical measures are a little more complicated. These are measures taken with technology in mind to protect explicitly at the physical level. For example, authentication and permissions may not come across as physical measures. But think about them within the context of smartcards and biometrics, and it’s easy to see how they serve as technical measures for physical security. Operational measures are the policies and procedures you set up to enforce a security-minded operation. For example, background checks on employees, risk assessments on devices, and policies regarding key management and storage would all be considered operational measures.

To get you thinking about a physical security system and the measures you’ll need to take to implement it, it’s probably helpful to start from the inside out and draw up ideas along the way. For example, apply the thought process to this virtual room we’re standing in. Look over there at the server room, and the wiring closet just outside. Aren’t there any number of physical measures we’ll need to control for both? You bet there are.

Power concerns, the temperature of the room, static electricity, and the air quality itself are just a few examples of things to think about. Dust can be a killer, believe me, and humidity is really important, considering static electricity can be absolutely deadly to systems. Anti-static mats and wrist straps should be implemented if folks will be working on the systems; along with humidity-control systems and grounding, they’ll help in combatting static electricity. Along that line of thinking, maybe the ducts carrying air in and out need special attention. Positive pressure means keeping the air pressure inside the room higher than the pressure outside it. It might mess up a few hairstyles, but air then leaks out through gaps instead of pulling dust in, which greatly reduces the number of contaminants allowed in. And while we’re on the subject, what about the power to all this? Do you have backup generators for all these systems? Is your air conditioning unit susceptible? Someone knocking out your AC system could effect an easy denial of service on your entire network, couldn’t they? What if they attack and trip the water sensors for the cooling systems under the raised floor in your computer lab?

How about some technical measures to consider? Did you have to use a PIN and a proximity badge to even get into the room? What about the authentication of the server and network devices themselves? If you allow remote access to them, what kind of authentication measures are in place? Are passwords used appropriately? Is there virtual separation (that is, a DMZ they reside in) to protect against unauthorized access? Granted, these aren’t physical measures in their own right (authentication might cut the mustard, but location on a subnet sure doesn’t). They’re included here simply to continue the thought process of examining the physical room.

Continuing our example here, let’s move around the room together and look at other physical security concerns. What about the entryway itself? Is the door locked? If so, what is needed to gain access to the room? Perhaps a key? If so, what kind of key and how hard is it to replicate? In demonstrating a new physical security measure to consider—an operational one, this time—who controls the keys, where are they located, and how are they managed? And what if you’re using an RFID access card that processes all sorts of magic on the back side—like auto-unlocking doors and such? Doing anything to protect against that being skimmed and used against you? We’ve already covered enough information to employ at least two government bureaucrats and we’re not even outside the room yet. You can see here, though, how the three categories work together within an overall system.

Another term you’ll need to be aware of is access controls. Access controls are physical measures designed to prevent access to controlled areas. They include biometric controls, identification/entry cards, door locks, and mantraps. Each of these is interesting in its own right.

Biometrics includes the measures taken for authentication that come from the “something you are” concept. We’ve hit on these before, and I won’t belabor them much here, but I just want to restate the basics in regard to physical security. Biometrics can include fingerprint readers, face scanners, retina scanners, and voice recognition (see Figure 11-3). The great thing about using biometrics to control access, whether physical or virtual, is that it’s difficult to fake a biometric signature such as a fingerprint. The bad side, though, is a related concept: because biometric matching is so specific, it’s easy for the system to produce false negatives and reject a legitimate user’s access request.

Figure 11-3 Biometrics

Death of the Password?

I’m probably safe in saying that almost everyone reading this book hates passwords. If you’re like me, you have dozens of them, and on occasion you either forget one or lose it, prompting a day’s worth of work ensuring everything is safely changed and backed up. Passwords just don’t work; they create a false sense of security and seemingly cause more aggravation than a sense of peace. A recent study showed that the 1000 most common passwords found are used on more than 91 percent of all systems tested (http://www.passwordrandom.com/most-popular-passwords). Want to know something even more disturbing? Almost 70 percent of those studied use the same password on multiple sites.

Biometrics was supposed to be a new dawn in authentication, freeing us from password insanity. The idea of “something you are” sounded fantastic, right up until the costs involved made it prohibitive to use in day-to-day operation. Not to mention, the technology just isn’t reliable enough for the average guy to use on his home PC. For example, I have a nice little fingerprint scanner right here on my laptop that I never use because it’s entirely unreliable and unpredictable. So, where do we turn for the one true weapon that will kill off the password? If “something I know” and “something I am” won’t work, what’s left?

One possible answer for password death may come in the form of “something you have,” and one getting a lot of buzz lately has a really weird-sounding name. The YubiKey (www.yubico.com) is a basic two-factor authentication token that works right over a standard USB port. The idea is brilliant: every time it’s used, it generates a one-time password that renders all before it useless. So long as the user has the token and knows their own access code, every login is fresh and secure. However, it doesn’t necessarily cure all ills. What happens if the token is stolen or lost? What happens if the user forgets their code to access the key? Even worse, what if the user logs in and then leaves the token in the machine?
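A short sketch shows what “renders all before it useless” looks like in practice. YubiKeys can be configured for several OTP modes, including Yubico’s own OTP format and the standard HMAC-based one-time password (HOTP) algorithm from RFC 4226. The Python sketch below implements plain HOTP, not Yubico’s format. Token and server share a secret and a counter, and each code is derived from the next counter value. Once the server accepts a counter, it never accepts that counter, or any earlier one, again. `HotpVerifier` and its look-ahead window are illustrative names and choices, not any vendor’s API.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password as specified in RFC 4226."""
    msg = struct.pack(">Q", counter)                    # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                          # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


class HotpVerifier:
    """Server side: each counter value is accepted at most once, so replayed codes fail."""

    def __init__(self, secret: bytes, look_ahead: int = 5):
        self.secret = secret
        self.next_counter = 0
        self.look_ahead = look_ahead

    def verify(self, code: str) -> bool:
        for c in range(self.next_counter, self.next_counter + self.look_ahead):
            if hmac.compare_digest(hotp(self.secret, c), code):
                self.next_counter = c + 1  # every code at or before c is now dead
                return True
        return False


secret = b"12345678901234567890"  # RFC 4226 test secret, not a real key
server = HotpVerifier(secret)
first = hotp(secret, 0)
print(server.verify(first))  # first use is accepted
print(server.verify(first))  # the same code replayed is rejected
```

The look-ahead window exists because the token’s counter can advance without the server seeing it (for example, a button press that never reached a login). The server resynchronizes by searching a few values ahead.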

We could go on and on, but the point is made: we’re still stuck with passwords. Biometrics and tokens are making headway, but we’re still a long way off. The idea of one-time passwords isn’t new and is making new strides, but it’s not time to start celebrating the password’s death just yet. Between accessing the system itself and then figuring out how to pass authentication credentials to the multiple and varied resources we try to access on a daily basis, the death of the password may indeed be greatly exaggerated.

When it comes to measuring the effectiveness of a biometric authentication system, the FRR, FAR, and CER are key areas of importance. False rejection rate (FRR) is the percentage of time a biometric reader will deny access to a legitimate user. The percentage of time that an unauthorized user is granted access by the system, known as false acceptance rate (FAR), is the second major factor. These are usually graphed against the system’s sensitivity setting. The point where the two curves intersect, known as the crossover error rate (CER), becomes a ranking method to determine how well the system functions overall. For example, if one fingerprint scanner had a CER of 4 percent and a second one had a CER of 2 percent, the second scanner would be the better, more accurate solution.
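The crossover point can be found numerically. In this Python sketch, a reader produces a match score for each attempt and accepts anything at or above a threshold. FRR is the fraction of genuine attempts scoring below the threshold. FAR is the fraction of impostor attempts scoring at or above it. The sketch sweeps candidate thresholds and reports where the two rates are closest. The score lists are made-up sample data, not measurements from any real device.

```python
# Hypothetical match scores (0.0-1.0) from testing one fingerprint reader.
genuine = [0.62, 0.71, 0.78, 0.83, 0.88, 0.91, 0.94, 0.96, 0.98, 0.99]   # legitimate users
impostor = [0.05, 0.12, 0.20, 0.33, 0.41, 0.48, 0.55, 0.64, 0.70, 0.75]  # unauthorized users


def rates(genuine, impostor, threshold):
    """Return (FAR, FRR) when scores >= threshold are accepted."""
    far = sum(s >= threshold for s in impostor) / len(impostor)  # impostors let in
    frr = sum(s < threshold for s in genuine) / len(genuine)     # legitimate users rejected
    return far, frr


def crossover_error_rate(genuine, impostor):
    """Sweep thresholds; return (threshold, rate) where FAR and FRR are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = rates(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, (far + frr) / 2)
    return best[1], best[2]


print(crossover_error_rate(genuine, impostor))  # (0.71, 0.1): a 10 percent CER
```

Raising the threshold trades more false rejections for fewer false acceptances, and lowering it does the reverse. That trade-off is why a single crossover number is handy for comparing devices. Run the same sweep on two scanners’ test data, and the one with the lower CER is the more accurate overall.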

From the “something you have” authentication factor, identification and entry cards can be anything from a simple photo ID to smartcards and magnetic swipe cards. Also, tokens can be used to provide access remotely. Smartcards have a chip inside that can hold tons of information, including identification certificates from a PKI system, to identify the user. Additionally, they may also have RFID features to “broadcast” portions of the information for “near swipe” readers. Tokens generally ensure at least a two-factor authentication method because you need the token itself and a PIN you memorize to go along with it.

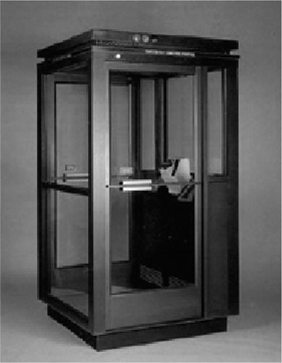

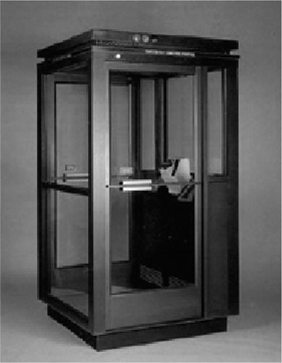

The mantrap, designed as a pure physical access control, provides additional control and screening at the door or access hallway to the controlled area. In the mantrap, two doors are used to create a small space to hold a person until appropriate authentication has occurred. The user enters through the first door, which must shut and lock before the second door can be cleared. Once inside the enclosed room, which normally has clear walls, the user must authenticate through some means (biometric, token with PIN, password, and so on) to open the second door (Figure 11-4 shows one example from Hirsch Electronics). If authentication fails, the person is trapped in the holding area until security arrives to sort things out.

Figure 11-4 Mantrap

Usually mantraps are monitored with video surveillance or guards, and from experience I can tell you they can be quite intimidating. If you’re claustrophobic at all, there’s a certain amount of palpable terror when the first door hisses shut behind you, and a mistyped PIN, failed fingerprint recognition, or—in the case of the last one I was trapped in—a bad ID card chip will really get your heart hammering. Add in a guard or two aiming a gun in your direction, and the ambiance jumps to an entirely new level of terror.
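The interlock rule is that the inner door can’t be released until the outer door has shut and locked and the person has authenticated. That rule is essentially a small state machine. Here’s a toy Python model of it, built on simplifying assumptions. It is not how Hirsch or any other vendor actually implements a controller. Authentication is reduced to a pass/fail flag, a single failure traps the person as described above, and `guard_release` is a hypothetical stand-in for security arriving to deal with the situation.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()      # both doors closed and locked, vestibule empty
    ENTERING = auto()  # outer door open
    HOLDING = auto()   # outer door shut and locked; waiting for authentication
    EXITING = auto()   # inner door released
    TRAPPED = auto()   # authentication failed; both doors stay locked


class Mantrap:
    def __init__(self):
        self.state = State.IDLE

    def open_outer(self):
        if self.state is not State.IDLE:
            raise PermissionError("outer door stays locked while the vestibule is occupied")
        self.state = State.ENTERING

    def close_outer(self):
        if self.state is State.ENTERING:
            self.state = State.HOLDING  # door shuts and locks behind the person

    def authenticate(self, passed: bool):
        # Interlock: the inner door can only be released from HOLDING,
        # i.e., never while the outer door is still open.
        if self.state is not State.HOLDING:
            raise PermissionError("authentication only accepted with the outer door locked")
        self.state = State.EXITING if passed else State.TRAPPED

    def close_inner(self):
        if self.state is State.EXITING:
            self.state = State.IDLE  # ready for the next person

    def guard_release(self):
        if self.state is State.TRAPPED:
            self.state = State.IDLE  # security has escorted the person out


trap = Mantrap()
trap.open_outer()
trap.close_outer()
trap.authenticate(passed=False)
print(trap.state)  # State.TRAPPED
```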

A few final thoughts on setting up a physical security program are warranted here. The first is a concept I believe anyone who has opened a book on security in the past 20 years is already familiar with—layered defense. The “defense in depth” or “layered security” thought process involves not relying on any single method of defense but, rather, stacking several layers between the asset and the attacker. In the physical security realm, these are fairly easy to see: if your data and servers are inside a building, stack layers to prevent the bad guys from getting in. Guards at an exterior gate checking badges and a swipe card entry for the front door are two protections in place before the bad guys are even in the building. Providing access control at each door with a swipe card, or biometric measures, adds an additional layer. Once an attacker is inside the room, technical controls can be used to prevent local logon. In short, layer your physical security defenses just as you would your virtual ones—you may get some angry users along the way, huffing and puffing about all they have to do just to get to work, but it’ll pay off in the long run.

Another thought to consider, as mentioned earlier, is that physical security should also be concerned with those things you can’t really do much to prevent. No matter what protections and defenses are in place, an F5 tornado doesn’t need an access card to get past the gate. Hurricanes, floods, fires, and earthquakes are all natural events that could bring your system to its knees. Protection against these types of events usually comes down to good planning and operational controls. You can certainly build a strong building and install fire-suppression systems; however, they’re not going to prevent the event itself. In the event something catastrophic does happen, you’ll be better off with solid disaster-recovery and contingency plans.

From a hacker’s perspective, the steps taken to defend against natural disasters aren’t necessarily anything that will prevent or enhance a penetration test, but they are helpful to know. For example, a fire-suppression system turning on or off isn’t necessarily going to assist in your attack. However, knowing the systems are backed up daily and offline storage is at a poorly secured warehouse across town could become useful. And if the fire alarm system results in everyone leaving the building for an extended period of time, well....

Finally, there’s one more thought we should cover (more for your real-world career than for your exam) that applies whether we’re discussing physical security or trying to educate a client manager on prevention of social engineering. There are few truisms in life, but one is absolute: hackers do not care that your company has a policy. Many a pen tester has stood there listening to the client say, “That scenario simply won’t (or shouldn’t or couldn’t) happen because we have a policy against it.” Two minutes later, after a server with a six-character password left on a utility account has been hacked, it is evident the policy requiring 10-character passwords didn’t scare off the attacker at all, and the client is left to wonder what happened to the policy. Policies are great, and they should be in place. Just don’t count on them to actually prevent anything on their own. After all, the attacker doesn’t work for you and couldn’t care less what you think.

Physical Security Hacks

Believe it or not, hacking is not restricted to computers, networking, and the virtual world—there are physical security hacks you can learn, too. For example, most elevators have an express mode that lets you override the selections of all the previous passengers, allowing you to go straight to the floor you’re going to. By pressing the Door Close button and the button for your destination floor at the same time, you’ll rocket right to your floor while all the other passengers wonder what happened.

Others are more practical for the ethical hacker. Ever hear of the bump key, for instance? A specially crafted bump key will work on all locks of the same type by providing a split second of time to turn the cylinder. See, when the proper key is inserted into the lock, all of the key pins and driver pins align along the “shear line,” allowing the cylinder to turn. When a lock is “bumped,” a sharp impact on the key drives the bottom (key) pins up into the driver pins, transferring the force so the driver pins jump above the shear line while the key pins stay in place. This separation only lasts a split second, but if you keep a slight turning force applied, the cylinder will turn during that brief gap between the key and driver pins, and the lock can be opened.

Other examples are easy to find. Some Master-brand locks can be picked using a simple bobby pin and an electronic flosser, believe it or not. Combination locks can be easily picked by looking for “sticking points” (apply a little pressure and turn the dial slowly—you’ll find them) and mapping them out on charts you can find on the Internet. Heck, last I heard free lock pick kits were being given away at Defcon, so there may not even be a lot of research necessary on lock picking anymore.

What about physical security hacks in the organizational target? Maybe you can consider raised floors and drop ceilings as an attack vector. If the walls between rooms aren’t properly sealed (that is, they don’t go all the way to the ceiling and floor), you can bypass all security in the building just by crawling a little. And don’t overlook the beauty of an open lobby manned by a busy or distracted receptionist. Many times you can just walk right in.

I could go on and on here, but you get the point. Sadly, many organizations do not, and they overlook physical security in their overall protection schemes. As a matter of fact, it seems even standards organizations and certification providers are falling into this trap. ISC2 proved this out by recently taking physical security from its place of honor, with its own domain in the CISSP material, and downgrading it to just a portion of another domain. Personally, I think organizations, security professionals, and, yes, pen testers who ignore or belittle its place in security are doomed to failure. Whichever side you’re on, it’s in your best interest to give physical security its proper place.

Chapter Review

Social engineering is the art of manipulating a person, or a group of people, into providing information or a service they otherwise would never have given. Social engineers prey on people’s natural desire to help one another, their tendency to listen to authority, and their trust of offices and entities. EC-Council defines four phases of successful social engineering:

1. Research (dumpster dive, visit websites, tour the company, and so on).

2. Select the victim (identify frustrated employees or other promising targets).

3. Develop a relationship.

4. Exploit the relationship (collect sensitive information).

Social engineering is a nontechnical method of attacking systems, which means it’s not limited to people with technical know-how. EC-Council defines five main reasons and four factors that allow social engineering to happen. Human nature (to trust others), ignorance of social engineering efforts, fear (of consequences of not providing the requested information), greed (promised gain for providing requested information), and a sense of moral obligation are all reasons people fall victim to social engineering attacks. As for the factors that allow these attacks to succeed, insufficient training, unregulated information (or physical) access, complex organizational structure, and lack of security policies all play roles.

All social engineering attacks fall into one of three categories: human based, computer based, or mobile based. Human-based social engineering uses interaction in conversation or other circumstances between people to gather useful information.

Dumpster diving is digging through the trash for useful information. Although technically a physical security issue, dumpster diving is covered as a social engineering topic per EC-Council. Impersonation is a name given to a huge swath of attack vectors. Basically the social engineer pretends to be someone or something he or she is not, and that someone or something—like, say, an employee, a valid user, a repairman, an executive, a help desk person, or an IT security expert—is someone or something the target either respects, fears, or trusts. Pretending to be someone you’re not can result in physical access to restricted areas (providing further opportunities for attacks), not to mention any sensitive information (including the credentials) your target feels you have a need and right to know. Using a phone during a social engineering effort is known as “vishing.”

Shoulder surfing and eavesdropping are other valuable human-based social engineering methods. An attacker taking part in shoulder surfing simply looks over the shoulder of a user and watches them log in, access sensitive data, or provide valuable steps in authentication. This can also be done “long distance,” using vision-enhancing devices like telescopes and binoculars.

Tailgating occurs when an attacker with a fake badge simply follows an authorized person through an opened security door. Piggybacking is a little different in that the attacker doesn’t have a badge but asks someone to let her in anyway. If you see an exam question listing both tailgating and piggybacking, the difference between the two comes down to the presence of a fake ID badge (tailgaters have them, piggybackers don’t). On questions where only one of the two appears as an answer choice, the terms are used interchangeably.

Reverse social engineering is when the attacker poses as some form of authority or technical support and sets up a scenario whereby the user feels he must dial in for support. Potential targets for social engineering are referred to as “Rebecca” or “Jessica.” When you’re communicating with other attackers, the terms can provide information on whom to target—for example, “Rebecca, the receptionist, was very pleasant and easy to work with.” Disgruntled employees and insider attacks present the greatest risk to an organization.

Computer-based attacks are those attacks carried out with the use of a computer. Attacks include specially crafted pop-up windows, hoax e-mails, chain letters, instant messaging, spam, and phishing. Social networking and spoofing sites or access points also belong in the mix.

Most likely the simplest and most common method of computer-based social engineering is known as phishing. A phishing attack involves crafting an e-mail that appears legitimate but in fact contains links to fake websites or to malicious downloads. Another version of this attack is known as spear phishing. While a phishing attack usually involves mass-mailing a crafted e-mail in hopes of snagging some unsuspecting reader, spear phishing is a targeted attack against an individual or a small group of individuals within an organization. Spear phishing usually results from a little reconnaissance work that has churned up some useful information. Options that can help mitigate phishing include the Netcraft Toolbar and the PhishTank Toolbar.
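Checking where a link really goes, rather than where it claims to go, is one of the simplest ways to catch the fake-website links used in phishing. The following Python sketch is an illustrative heuristic, not a feature of the Netcraft or PhishTank toolbars: using only the standard library (`html.parser` and `urllib.parse`), it pulls every link out of an HTML e-mail body and flags those whose visible text looks like one web address while the underlying `href` points to a different host. The names `LinkCollector` and `find_mismatched_links`, and the sample message, are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collects (href, visible_text) pairs from <a> tags in an HTML e-mail body."""

    def __init__(self):
        super().__init__()
        self.links = []    # list of (href, text) tuples
        self._href = None  # href of the <a> tag currently open, if any
        self._text = []    # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href", "")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def host_of(url):
    """Return the lowercase host name of a URL, adding a scheme if it is missing."""
    if "://" not in url:
        url = "http://" + url
    return (urlparse(url).hostname or "").lower()


def find_mismatched_links(html_body):
    """Flag links whose visible text names a different host than the real target."""
    collector = LinkCollector()
    collector.feed(html_body)
    collector.close()  # flush any buffered text
    suspicious = []
    for href, text in collector.links:
        # Only compare when the visible text itself looks like a web address.
        if "." not in text or " " in text:
            continue
        if host_of(text) != host_of(href):
            suspicious.append((text, href))
    return suspicious


if __name__ == "__main__":
    # Hypothetical message: the text shows the bank's name, the href goes to a bare IP.
    email = ('<p>Your account is locked.</p>'
             '<a href="http://203.0.113.7/login">www.example-bank.com</a> '
             '<a href="https://www.example-bank.com/help">Help</a>')
    print(find_mismatched_links(email))
```

A mismatch isn’t proof of phishing (legitimate mailers often route links through tracking domains), and a matching link isn’t proof of safety, so treat a check like this as one layer alongside user education.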

Setting up multiple layers of defense, including change-management procedures and strong authentication measures, is a good start in social engineering mitigation. Other physical and technical controls can also be set up, but the only real defense against social engineering is user education.

Mobile social engineering attacks are those that take advantage of mobile devices—that is, applications or services in mobile devices—in order to carry out their end goal. ZitMo (ZeuS-in-the-Mobile) is a piece of malware for Android phones that exploits an already-owned PC to take control of a phone in order to steal credentials and two-factor codes. EC-Council defines four categories of mobile-based social engineering attacks: publishing malicious apps, repackaging legitimate apps, fake security applications, and SMS (per EC-Council, this is known as “smishing”).

Physical security is perhaps one of the most overlooked areas in an overall security program. It includes the plans, procedures, and steps taken to protect your assets from deliberate or accidental events that could cause damage or loss. Physical security measures come down to three major components: physical, technical, and operational. Physical measures include all the things you can touch, taste, smell, or get shocked by. Technical measures use technology to provide protection explicitly at the physical level. Operational measures are the policies and procedures you set up to enforce a security-minded operation.

Access controls are physical measures designed to prevent unauthorized access to controlled areas. They include biometric controls, identification/entry cards, door locks, and mantraps.

FRR, FAR, and CER are important biometric measurements. False rejection rate (FRR) is the percentage of time a biometric reader denies access to a legitimate user. False acceptance rate (FAR), the second major factor, is the percentage of time the system grants access to an unauthorized user. The two rates move in opposite directions as the reader’s sensitivity is adjusted (tightening it lowers FAR but raises FRR), so they are usually graphed on the same chart. The point where the two curves intersect, known as the crossover error rate (CER), becomes a ranking method to determine how well the system functions overall: the lower the CER, the more accurate the system.
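To see how FRR, FAR, and CER relate, here’s a minimal Python sketch. It assumes a hypothetical reader that produces a match score between 0 and 1 and accepts anyone scoring at or above an adjustable threshold; the score lists are made-up sample data, not measurements from any real device. Sweeping the threshold shows the trade-off, and the threshold where the two rates meet approximates the CER.

```python
def error_rates(genuine, impostor, threshold):
    """Return (FRR, FAR) as percentages for a reader that accepts scores >= threshold."""
    # FRR: share of legitimate users whose score falls below the threshold (wrongly rejected).
    frr = 100.0 * sum(s < threshold for s in genuine) / len(genuine)
    # FAR: share of unauthorized users whose score reaches the threshold (wrongly accepted).
    far = 100.0 * sum(s >= threshold for s in impostor) / len(impostor)
    return frr, far


def approximate_cer(genuine, impostor, thresholds):
    """Sweep thresholds; return (threshold, FRR, FAR) where FRR and FAR are closest."""
    best = None
    for t in thresholds:
        frr, far = error_rates(genuine, impostor, t)
        if best is None or abs(frr - far) < abs(best[1] - best[2]):
            best = (t, frr, far)
    return best


# Made-up match scores for illustration only.
GENUINE = [0.91, 0.85, 0.78, 0.88, 0.62, 0.95, 0.70, 0.83, 0.59, 0.90]
IMPOSTOR = [0.20, 0.35, 0.41, 0.15, 0.66, 0.30, 0.52, 0.25, 0.72, 0.10]

if __name__ == "__main__":
    thresholds = [i / 20 for i in range(21)]  # 0.00, 0.05, ..., 1.00
    t, frr, far = approximate_cer(GENUINE, IMPOSTOR, thresholds)
    print(f"threshold={t:.2f} FRR={frr:.1f}% FAR={far:.1f}%")
```

With this sample data the rates cross at a threshold of 0.65, where FRR and FAR both come out to 20 percent, so this imaginary reader’s CER would be about 20 percent. Real CER figures come from testing against far larger populations.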

The mantrap, designed as a pure physical access control, provides additional control and screening at the door or access hallway to the controlled area. In the mantrap, two doors are used to create a small space to hold a person until appropriate authentication has occurred. The user enters through the first door, which must shut and lock before the second door can be opened. Once inside the enclosed room, which normally has clear walls, the user must authenticate through some means (biometric, token with PIN, password, and so on) to open the second door.
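The interlock rule at the heart of a mantrap (the two doors are never open at once, and the inner door opens only after the outer door is shut and the occupant has authenticated) is easy to express as a small state machine. The Python sketch below is a hypothetical model for illustration, not how any particular access control product works; the `Mantrap` class, its method names, and the PIN check passed in as `check_credential` are all assumptions, and it ignores real-world concerns such as occupancy sensors and emergency egress.

```python
class Mantrap:
    """Hypothetical interlock model: two doors that may never be open at the same time."""

    def __init__(self, check_credential):
        self.check_credential = check_credential  # callable(credential) -> bool
        self.outer_open = False  # a closed outer door is treated as shut and locked
        self.inner_open = False
        self.authenticated = False

    def open_outer(self):
        # Entry is allowed only while the inner door is shut.
        if self.inner_open:
            return False
        self.outer_open = True
        self.authenticated = False  # every new entrant must authenticate again
        return True

    def close_outer(self):
        self.outer_open = False

    def authenticate(self, credential):
        # Authentication only counts once the person is sealed inside the trap.
        if self.outer_open or self.inner_open:
            return False
        self.authenticated = bool(self.check_credential(credential))
        return self.authenticated

    def open_inner(self):
        # The inner door requires a shut outer door AND a successful authentication.
        if self.outer_open or not self.authenticated:
            return False
        self.inner_open = True
        return True

    def close_inner(self):
        self.inner_open = False
        self.authenticated = False  # reset for the next person


if __name__ == "__main__":
    trap = Mantrap(lambda pin: pin == "4821")  # hypothetical PIN check
    print(trap.open_outer())          # True: user steps in
    print(trap.open_inner())          # False: outer door still open
    trap.close_outer()
    print(trap.authenticate("0000"))  # False: wrong PIN
    print(trap.authenticate("4821"))  # True
    print(trap.open_inner())          # True: user proceeds
    print(trap.open_outer())          # False: inner door is open
```

Because `open_outer` refuses while the inner door is open and `open_inner` refuses while the outer door is open, an authorized user can never hold both doors open for someone following behind.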

Questions

1. An attacker creates a fake ID badge and waits next to an entry door to a secured facility. An authorized user swipes a key card and opens the door. The attacker follows the user inside. Which social engineering attack is in play here?

A. Piggybacking

B. Tailgating

C. Phishing

D. Shoulder surfing

2. An attacker has physical access to a building and wants to obtain access credentials to the network using nontechnical means. Which of the following social engineering attacks is the best option?

A. Tailgating

B. Piggybacking

C. Shoulder surfing

D. Sniffing

3. Bob decides to employ social engineering during part of his pen test. He sends an unsolicited e-mail to several users on the network advising them of potential network problems and provides a phone number to call. Later that day, Bob performs a DoS on a network segment and then receives phone calls from users asking for assistance. Which social engineering practice is in play here?

A. Phishing

B. Impersonation

C. Technical support

D. Reverse social engineering

4. Phishing, pop-ups, and IRC channel use are all examples of which type of social engineering attack?

A. Human based

B. Computer based

C. Technical

D. Physical

5. An attacker performs a Whois search against a target organization and discovers the technical point of contact (POC) and site ownership e-mail addresses. He then crafts an e-mail to the owner from the technical POC, with instructions to click a link to see web statistics for the site. Instead, the link goes to a fake site where credentials are stolen. Which attack has taken place?

A. Phishing

B. Man in the middle

C. Spear phishing

D. Human based

6. Which threat presents the highest risk to a target network or resource?

A. Script kiddies

B. Phishing

C. A disgruntled employee

D. A white-hat attacker

7. Which of the following is not a method used to control or mitigate against static electricity in a computer room?

A. Positive pressure

B. Proper electrical grounding

C. Anti-static wrist straps

D. A humidity control system

8. Phishing e-mail attacks have caused severe harm to a company. The security office decides to provide training to all users in phishing prevention. Which of the following are true statements regarding identification of phishing attempts? (Choose all that apply.)

A. Ensure e-mail is from a trusted, legitimate e-mail address source.

B. Verify that spelling and grammar are correct.

C. Verify all links before clicking them.

D. Ensure the last line includes a known salutation and copyright entry (if required).

9. Lighting, locks, fences, and guards are all examples of __________ measures within physical security.

A. physical

B. technical

C. operational

D. exterior

10. A man receives a text message on his phone purporting to be from Technical Services. The text advises of a security breach and provides a web link and phone number to follow up on. When the man calls the number, he turns over sensitive information. Which social engineering attack was this?

A. Phishing

B. Vishing

C. Smishing

D. Man in the middle

11. Background checks on employees, risk assessments on devices, and policies regarding key management and storage are examples of __________ measures within physical security.

A. physical

B. technical

C. operational

D. None of the above

12. Your organization installs mantraps in the entranceway. Which of the following attacks is it attempting to protect against?

A. Shoulder surfing

B. Tailgating

C. Dumpster diving

D. Eavesdropping

Answers

1. B. In tailgating, the attacker holds a fake entry badge of some sort and follows an authorized user inside.

2. C. Because he is already inside (thus rendering tailgating and piggybacking pointless), the attacker could employ shoulder surfing to gain the access credentials of a user.

3. D. Reverse social engineering occurs when the attacker uses marketing, sabotage, and support to gain access credentials and other information.

4. B. Computer-based social engineering attacks include any measures using computers and technology.

5. C. Spear phishing occurs when the e-mail is being sent to a specific audience, even if that audience is one person. In this example, the attacker used recon information to craft an e-mail designed to be more realistic to the intended victim and therefore more successful.

6. C. Everyone recognizes insider threats as the worst type of threat, and a disgruntled employee on the inside is the single biggest threat for security professionals to plan for and deal with.

7. A. Positive pressure will do wonderful things to keep dust and other contaminants out of the room, but on its own it does nothing against static electricity.

8. A, B, C. Phishing e-mails can be spotted by who they are from, who they are addressed to, spelling and grammar errors, and unknown or malicious embedded links.

9. A. Physical security controls fall into three categories: physical, technical, and operational. Physical measures include lighting, fences, and guards.

10. C. The term smishing refers to the use of text messages to socially engineer mobile device users. By definition it is a mobile-based social engineering attack. As an aside, it also sounds like something a five-year-old would say about killing a bug.

11. C. Operational measures are the policies and procedures you set up to enforce a security-minded operation.

12. B. Mantraps are specifically designed to prevent tailgating.