Section 7.2 describes two little programs for estimating the time and space consumed by various primitive operations. This appendix shows how those can grow into useful programs for generating one-page time and space estimates. The complete source code for both programs can be found at this book’s web site.

The program spacemod.cpp produces a model of the space consumed by various constructs in C++. The first part of the program uses a sequence of statements like

cout << "sizeof(char)=" << sizeof(char);

cout << " sizeof(short)=" << sizeof(short);

to give precise measurements of primitive objects:

sizeof(char)=l sizeof(short)=2 sizeof(int)=4

sizeof(float)=4 sizeof(struct *)=4 sizeof(long)=4

sizeof(double)=8

The program also defines a dozen structures, using the simple naming convention illustrated in these examples:

struct structc { char c; };

struct structic { int i; char c; };

struct structip { int i; structip *p; };

struct structdc { double d; char c; };

struct structcl2 { char c[12]; };

The program uses a macro to report the sizeof the structure, and then to estimate the number of bytes that new allocates in a report like this:

structc 1 48 48 48 48 48 48 48 48 48 48

structic 8 48 48 48 48 48 48 48 48 48 48

structip 8 48 48 48 48 48 48 48 48 48 48

structdc 16 64 64 64 64 64 64 64 64 64 64

structcd 16 64 64 64 64 64 64 64 64 64 64

structcdc 24 -3744 4096 64 64 64 64 64 64 64 64

structiii 12 48 48 48 48 48 48 48 48 48 48

The first number is given by sizeof and the next ten numbers report the differences between successive pointers returned by new. This output is typical: most of the numbers are quite consistent, but the allocator skips around every now and then.

This macro makes one line of the report:

#define MEASURE(T, text) { \

cout << text << "\t"; \

cout << sizeof(T) << "\t"; \

int lastp = 0; \

for (int i = 0; i < 11; i++) { \

T *p = new T; \

int thisp = (int) p; \

if (lastp != 0) \

cout << " " << thisp - lastp; \

lastp = thisp; \

} \

cout << "\n"; \

}

The macro is called with the structure name followed by the same name in quotes, so it can be printed:

MEASURE(structc, "structc") ;

(My first draft used a C++ template parameterized by structure type, but measurements were led wildly astray by artifacts of the C++ implementation.)

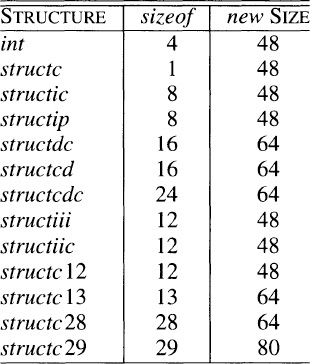

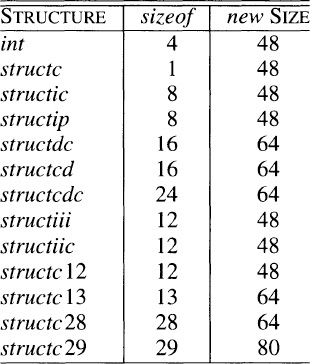

This table summarizes the output of the program on my machine:

The left column of numbers helps us to estimate the sizeof structures. We start by summing the sizeof the types; that explains the 8 bytes for structip. We must also account for the alignment; although its components sum to 10 bytes (two chars and a double), structcdc consumes 24 bytes.

The right column gives us insight into the space overhead of the new operator. It appears that any structure with a sizeof 12 bytes or less will consume 48 bytes. Structures with 13 through 28 bytes consume 64 bytes. In general, the allocated block size will be a multiple of 16, with 36 to 47 bytes of overhead. This is surprisingly expensive; other systems that I use consume just 8 bytes of overhead to represent an 8-byte record.

Section 7.2 also describes a little program for estimating the cost of one particular C operation. We can generalize that to the timemod.c program that produces a one-page cost model for a set of C operations. (Brian Kernighan, Chris Van Wyk and I built a predecessor of this program in 1991.) The main function of that program consists of a sequence of a T (for title) lines followed by M lines to measure the cost of operations:

T("Integer Arithmetic");

M({});

M(k++);

M(k = i + j);

M(k = i – j);

...

Those lines (and a few more like them) produce this output:

Integer Arithmetic (n=5000)

{} 250 261 250 250 251 10

k++ 471 460 471 461 460 19

k = i + j 491 491 500 491 491 20

k = i – j 440 441 441 440 441 18

k = i * j 491 490 491 491 490 20

k = i / j 2414 2433 2424 2423 2414 97

k = i % j 2423 2414 2423 2414 2423 97

k = i & j 491 491 480 491 491 20

k = i | j 440 441 441 440 441 18

The first column gives the operation that is executed inside the loop

for i = [1, n]

for j = [1, n]

op

The next five columns show the raw time in clock clicks (milliseconds on this system) for five executions of that loop (these times are all consistent; inconsistent numbers help to identify suspicious runs). The final column gives the average cost in nanoseconds per operation. The first row of the table says that it takes about ten nanoseconds to execute a loop that contains the null operation. The next row says that incrementing the variable k consumes about 9 additional nanoseconds. All of the arithmetic and logical operations have about the same cost, with the exception of the division and remainder operators, which are an order-of-magnitude more expensive.

This approach gives rough estimates for my machine, and they must not be over-interpreted. I conducted all experiments with optimization disabled. When I enabled that option, the optimizer removed the timing loops and all times were zero.

The work is done by the M macro, which can be sketched in pseudocode as:

#define M(op)

print op as a string

timesum = 0

for trial = [0, trials)

start = clock()

for i = [1, n]

fi = i

for j = [1, n]

op

t = clock()-start

print t

timesum += t

print 1e9*timesum / (n*n * trials * CLOCKS_PER_SEC)

The complete code for this cost model can be found at this book’s web site.

We’ll now survey the output of the program on my particular system. Because the clock clicks are all consistent, we will omit them and report only the average time in nanoseconds.

Floating Point Arithmetic (n=5000)

fj=j; 18

fj=j; fk = fi + fj 26

fj=j; fk = fi – fj 27

fj=j; fk = fi * fj 24

fj=j; fk = fi / fj 78

Array Operations (n=5000)

k = i + j 17

k = x[i] + j 18

k = i + x[j] 24

k = x[i] + x[j] 27

The floating point operations originally assign the integer j to the floating-point fi (in about 8 nanoseconds); the outer loop assigns i to the floating-point fi. The floating operations themselves cost about as much as their integer counterparts, and the array operations are equally inexpensive.

The next tests give us insight into control flow in general and some sorting operations in particular:

Comparisons (n=5000)

if (i < j) k++ 20

if (x[i] < x[j]) k++ 25

Array Comparisons and Swaps (n=5000)

k = (x[i]<x[k]) ? –1:1 34

k = intcmp(x+i, x+j) 52

swapmac(i, j) 41

swapfunc(i, j) 65

The function versions of comparing and swapping each cost about 20 nanoseconds more than their inline counterparts. Section 9.2 compares the cost of computing the maximum of two values with functions, macros and inline code:

Max Function, Macro and Inline (n=5000)

k = (i > j) ? i : j 26

k = maxmac(i, j) 26

k = maxfunc(i, j) 54

The rand function is relatively inexpensive (though recall that the bigrand function makes two calls to rand), square root is an order of magnitude greater than basic arithmetic operations (though only twice the cost of a division), simple trigonometric operations cost twice that, and advanced trigonometric operations run into microseconds.

Math Functions (n=1000)

k = rand() 40

fk = j+fi 20

fk = sqrt(j+fi) 188

fk = sin(j+fi) 344

fk = sinh(j+fi) 2229

fk = asin(j+fi) 973

fk = cos(j+fi) 353

fk = tan(j+fi) 465

Because those are so pricey, we shrunk the value of n. Memory allocation is more expensive yet, and merits an even smaller n:

Memory Allocation (n=500)

free(malloc(16)) 2484

free(malloc(100)) 3044

free(malloc(2000)) 4959