1

AI for Good, Bad, and Ugly

Imagine a world with no elephants. This fiction could become fact in a mere decade, as poachers kill an elephant every fifteen minutes.1 Rhinos, tigers, gorillas, and other large mammals also face extinction due to illegal poaching across national parks in Africa and Southeast Asia.

The conservation community considers AI to be one of the most important game changers in doing good.2 According to a 2021 report from WildLabs, a central hub for conservation technology, AI can reduce the physical labor that goes into collecting vital conservation data.3 AI can pinpoint an animal call from hours of field recordings in mere seconds and identify rare species from thousands of images online. Where humans fail, AI may be able to step in and save our planet.

But what makes AI “good”? Is it sufficient to have noble intent when designing AI-enabled tools? Are they tackling the most important problems? Do AI stakeholders agree on what needs fixing? What is the nature of the data feed that informs policy and practice?

Garbage in means garbage out. Many experts today are attending to digital rights, data justice, and AI ethics to correct AI biases in the Global North. When shifting contexts to the Global South, however, tech philanthropists, AI evangelists, and utopian gurus lead the way. AI for Good initiatives are the latest manifestations of their supposed altruistic efforts.

In the ping-pong game of good and evil there is little room for nuanced values. With grand ideologies, the mundane gets left behind—the stuff that dictates our digital and social lives. To stay on track to make positive social impacts using AI, reliance on morality needs to give way to relational approaches to tech innovations. Taking a deep dive into the culture on the ground in the Global South and according fair weight to people’s lived realities there will help AI find a home in the hearts and minds of those most affected.

When AI Needs the Pope’s Blessings

The Vatican hosted an unusual guest in February 2019: Microsoft president Brad Smith. Pope Francis, the Roman Catholic leader who some years earlier remarked that he was a “disaster” when it came to technology, joined Brad Smith to discuss AI for the common good.4 In the last decade, AI for Good has grown as a field, as an ideology, and as a mission to save the world. With no country on target to meet the Sustainable Development Goals (SDGs) by 2030, the UN has doubled down on AI to accelerate progress.

Doing good has always been big business. With AI, it is on steroids. Fei-Fei Li, former chief scientist at Google AI, placed her bets on AI when she declared, “I believe AI and its benefits have no borders. . . . It has the potential to make everyone’s life better for the entire world.”5 She is not alone in this exhausting clarity on AI-enabled solutionism. The breeding season of AI for Good nonprofits kicked off a decade ago. For instance, in 2015, the AI for Good nonprofit was established to find solutions to advance the UN’s sustainability mission.6 Its headquarters is, unsurprisingly, in Berkeley, California, close to Silicon Valley, where others innovate for good in the West in the hope that it will trickle down to the rest. The usual tech suspects have joined in, propelling a new generation of lab geeks toward this mission. Google has channeled $25 million to support the AI for the Global Goals project.7

What happens in Silicon Valley does not stay in Silicon Valley. There is a cascading effect on the usage of limited resources, energy, and drive for AI in countries like Nigeria and India. Tejumade Afonja, a twenty-four-year-old Nigerian with engineering training, believes, “We [Nigerians] could compete. . . . AI is something Africa could do along with everyone else.”8 Africa is touted as AI’s future, given it is the world’s youngest continent. Its population has a median age of twenty and 60 percent of residents are under the age of twenty-five.9

In the meantime, Indian tech gurus are busy Indianizing AI. The “prophet of inclusive innovation” R. A. Mashelkar calls it “Gandhian engineering.”10 AI can bring to fruition Gandhi’s thinking on science where “there is enough for everyone’s needs, but not for everyone’s greed.” He proposes the acronym ASSURED to illustrate seven key factors to take it the Indian way: affordable, scalable, sustainable, universal, rapid, excellent, and distinctive. These are the new converts to the missionary zeal for AI.

With God in the picture, the devil is not far behind. Chris Nodder, author of Evil by Design, paints a dire scenario of how design can lead us to the dark side.11 Our seven deadly sins get a tech makeover. Pride translates to products pandering to user values. Sloth builds a pathway of least resistance, as with the infinite and mind-numbing scroll. Gluttony taps into customers’ fear of missing out to keep them connected. Anger finds its home in anonymity, channeling the world’s angst through digital taunts and trolls. Envy is all about status feeding into user aspirations. Lust nudges users to trust emotion over rationality, and greed reinforces customers’ behaviors to keep them perennially engaged.

Faith drives the way we imagine tech innovations. When God speaks in the tech cathedral, the bazaar is forced to listen.

When Good Was Bad

News outlets are increasingly covering issues of algorithmic discrimination, predictive analytics, and automation, and exploring how they infiltrate welfare communities, policing, health care, and surveillance of public spaces. Public officials face some soul-searching today as they straddle AI-enabled efficiency and social justice. Aid agencies and state actors stand on a graveyard of failures of global innovations that claimed good intent. Consider the last fifty years of trickle-down economics, which justified tax cuts for the rich purportedly to benefit the poor.12

Few, particularly in Latin America, can forget the crusader Jeffrey Sachs with his “disaster capitalism” in the 1980s.13 He justified administering “shock therapy” to the world’s economies outside the West as a means to unparalleled economic prosperity for all. Today, tech saviors offer their AI-enabled medicine packaged as goodness for all to swallow. AI is meant to aid in curing economic recessions, geopolitical wars, and planetary degradation.

Doing good comes with a toxic legacy of oppression in the name of uplift. The centuries-long colonial project was the most ambitious in its agenda for “doing good.” The English language was one of the most potent instruments to shape “good subjects.” Under British rule in India, Lord Macaulay, then a member of the Governor-General’s Council, became infamous for “Macaulay’s Minute,” written in 1835, in which he focused on the deficiencies of the Natives’ cultures and languages and proposed the English language as a solution. He argued that it was an “indisputable fact” that Asian sciences and languages were insufficient for advancing knowledge. Only English could truly give us the “enlightenment of our minds” and unravel the “richest treasures of modern thought and knowledge.”14

“Macaulay’s Minute” was an “English for Good” moment of its day. English continues to enjoy a privileged place in the former British colonies and is equated with progress, mobility, and modernity.15 Historically, what qualified as good was often bad, as it translated to subservience, oppression, and taming “subjects” to the existing social order. Even today “Uncle Tom” is a slur among African American communities as it has come to mean a “good slave” who complies with his master at his own expense.16

A “good woman” of virtue and honor remains a live template in patriarchal societies, though many continue to resist. A case in point is the Iranian women’s uprising, in which women defied the morality police by burning their hijabs. A “good subject” for governance under colonial rule was racialized and legitimized by philosophers who are still revered and often uncritically taught in our institutions. Philosopher Immanuel Kant’s hierarchy of “good subjects” was based on supposedly innate traits of entire groups. For instance, he asserted that Indigenous Americans, as a race, were “incapable of any culture” as they were too weak for hard labor and industrious work.17 Old intellectual habits die hard.

Moral awakenings do happen, however. They have served as land mines in ideologies of goodness, blowing up atomized and culturally myopic constructs. Today’s intelligentsia has come quite a distance from celebrating the nineteenth-century poet-novelist Rudyard Kipling to condemning him. His work fostered empathy for the white man for the “burden” he supposedly had to carry to transform colonial Natives from “half devil and half child” to “good subjects” worthy of compassion.18

Today, tech companies hungry to capture the markets of the next billion users frame their presence in the Global South in altruistic terms. AI for Good projects driven by tech philanthropy spread the narrative that tech can solve problems of “the natives” by tapping into their aspirational yearnings for freedom, stability, and inclusion. History does not die; it is reborn. Sometimes in the best of ways, through human reckoning or resuscitation of beautiful ideas of the past. Sometimes in the worst of ways, through a nostalgia for the “good old days” and even a fervent zeal to reestablish moral clarity with a binary logic of good and bad. To ensure that computational cultures automate the good and track the bad, we need to heed the diversity of contexts and peoples they strive to impact.

The Bretton Woods of AI

In October 2019, the Rockefeller Foundation brought together a group of technologists, philosophers, economists, lawyers, artists, and philanthropists at their Bellagio Center in Italy to explore how AI can “create a better future for humanity.”19 They called it “The Bretton Woods for AI: Ensuring Benefits for Everyone.”20

Seventy-five years earlier, the Bretton Woods Conference laid the foundation for a global economic order. Nobody wanted another world war. The summit called for a radical reimagining of the financial system, trade, and markets at a global scale. Institutional invention led to the birth of the International Monetary Fund (IMF) and the World Bank. The vision went beyond the economic paradigm with the birth of the UN, attending to how social and cultural factors shape global governance.

While these efforts contributed to decades of peace and prosperity in many parts of the world, they also led to rising inequality among nations.21 Governance, over the decades, mirrored colonial relations. Europe and the United States shaped the leadership of these global organizations. They fixed the agendas and laid out the priorities for others to follow. Times have changed today as postcolonial nations have newfound confidence. In this data economy, the Global South demands data sovereignty. Global South countries have the world’s majority of online users and data as well as indigenous companies that can build infrastructures to protect and preserve their nations from foreign extraction.22

The Belt and Road Initiative (BRI) financed by China has built infrastructures and alliances between China and East Asia, Africa, and Europe. This geopolitical pact is already having a significant impact on AI-driven alliances and agendas, leaving the old guard from the United States and Europe outside the decision-making.23 French finance minister Bruno Le Maire admits, “The Bretton Woods order as we know it has reached its limits.”24 He makes an urgent case to reinvent Bretton Woods by acknowledging shifts in geopolitical power, else the “Chinese standards, on state aid, on access to public procurements, on intellectual property, could become the new global standards.”25

The Rockefeller event was one such effort to reinvent Bretton Woods. I was invited to be part of this group alongside thought leaders like Rumman Chowdhury, then director for the Machine Learning Ethics, Transparency and Accountability (META) team at Twitter (now called X); Joy Buolamwini, computer scientist and founder of the Algorithmic Justice League; Tim O’Reilly, founder of O’Reilly Media; economist Mariana Mazzucato; Ronaldo Lemos, director of the Institute of Technology and Society in Rio de Janeiro, and others. Many in this group, while diverse in their leadership, expertise, and organizational affiliations, were pegged to the Anglo-Saxon context.

While others spoke about the data deluge, data glut, and the need for data privacy, Ronaldo Lemos and I spoke about data black holes, data deficits, and the urgency of data visibility. In an age where visibility generates engagement, what does not exist online does not exist in our social imagination. Informal settlements from Chad to Brazil, where more than a billion people live in precarious arrangements, show up as blank areas in location-based apps. Data deficits create and amplify marginalization for millions of people, as in the case of Brazil’s favelas, where 1.5 million people live in informal housing. Ronaldo Lemos explains that by being unmapped, people living in the favelas have limited access to public services. “They don’t have an address, so they don’t get mail at the post office. . . . You don’t get garbage collection, you don’t get electricity. That’s the reality.”26

Demand for Data Presence

Digital absence negates the lives of billions of people already at the margins. Object recognition algorithms perform significantly worse at identifying household items from low-income countries compared to items from high-income countries.27 Researchers at Meta’s AI Lab tested some common household items like soap, shoes, and sofas with image recognition algorithms by Microsoft Azure, Clarifai, Google Cloud Vision, Amazon Rekognition, and IBM Watson. They found the algorithms were 15–20 percent better at identifying household objects from the United States compared to items from low-income households in Burkina Faso and Somalia.

In less wealthy regions, a bar of soap is a typical image for “soap,” while a container of liquid is the default image in wealthier ones. Data feeds for “global datasets” for basic commodities come primarily from Europe and North America. Africa, India, China, and Southeast Asia are the most under-sampled for visual training data.28 The fact is that some groups enjoy more empathy than others due to long-standing efforts to make them visible and understandable. The prototype user for the designs of our everyday lives has long been “WEIRD”: Western, Educated, Industrialized, Rich, and Democratic.29 The rest of the world has had to contend with centuries of colonial and patriarchal baggage, which have resulted in cultural erasures and historical fictions in mainstream narratives.

In The Next Billion Users, I explored how the “rest of the world,” predominantly young, low-income, and living outside the West, fall into caricatured tropes.30 Biased framings lead to biased datasets. Aid agencies have typically defined the next billion users as the “virtuous poor”—users who are utility-driven and leapfrog their way out of constrained contexts through innovative technology. Businesses have framed the global poor in recent years as natural “entrepreneurs from below,” ready to hack their way out of poverty and innovate in scarcity. Education policymakers view them as “self-organized learners” who can teach themselves with EdTech tools and liberate themselves from the schooling institution.

These framings generate skewed datasets, which in turn legitimize these framings. The next billion users are very much like you and me: they seek self-actualization and social well-being through pleasure, play, romance, sociality, and intimacy. These youth need the state and the market to step up and keep their end of the deal, to provide a security net and fair opportunities. Disregarding our common humanity contributes to a failure-by-design approach and a graveyard of apps.

Male Universality

The gender dimension amplifies this already biased approach. Feminist activist Caroline Criado Perez argues that we live in a world where the default user is male, reinforcing the myth of “male universality.” She points out how the lives of men have become standard representations for those of all humans, disfiguring our databases with a woman-shaped “absent presence.”31 She calls for breaking data silences by diversifying our media diet. Films, news, literature, and the arts could all benefit from how the rest of the world tells its stories.

This male-oriented culture feeds a gender data gap and influences how we design our systems, tools, and services.32 Those who are cisgender and male, able-bodied, and middle-class serve as “unmarked users,” while the rest of us who do not fit the mold are marked as fringe, despite constituting the majority.33 Crash test dummies correspond to the height, weight, and body structure of an average man. Planners measure grocery aisles by the average height of a man, making the top shelves inaccessible to many women. Smartphones are too large for the palms of typical women. Office air conditioners are set to lower temperatures that favor men’s instead of women’s metabolic comfort, negatively impacting the latter’s productivity. It does not take much to scratch the surface of any industry, urban space, or product design to discover the gender bias underlying the logic of its design.

These biases gain a new lease on life as they seep into training data for algorithms. We have become alarmingly familiar with the impacts of these biased algorithms on access to welfare, jobs, education, health care, and other essential services. Joy Buolamwini came to prominence with her article for the New York Times on racial and gender biases in facial-recognition software.34 In an experiment at the MIT Media Lab, she used a white mask to show how poorly facial recognition tools performed in identifying Black women.

In recent years, female data scientists have doubled down as digital activists, taking on a disproportionate burden as antidiscrimination watchdogs when building AI systems. These commendable efforts, however, concentrate around racial and gender bias in the United States and Europe. What we need is an equivalent army of Global South advocates, activists, and thought leaders to care for the rest of the world and ensure an inclusive internet. It matters not just whose stories we tell, but what stories we tell. History is written by the victors, as the cliché goes, but what of the vast majority who yearn for their versions of the story, about their ancestral resilience, heroism, creativity, and accomplishment? Sometimes the story can be as humble as one that humanizes.

The Story of Poaching

Poaching strikes a chord with celebrities and public personalities. Prince William spoke about wildlife in his first speech as Prince of Wales in October 2022 to honor the legacy of his grandmother, Queen Elizabeth II. In line with the royal tradition, he championed the protection of endangered species in a rare emotional plea to the global community. He talked about the shocking loss of 70 percent of Africa’s elephant population in the last three decades, saying, “At this rate, children born this year, like my daughter Charlotte, will see the last wild elephants and rhinos die before their 25th birthdays.”35

Prince William shares a crowded space with celebrities such as Jackie Chan and Leonardo DiCaprio as they campaign for tigers, polar bears, and elephants. Ironically, COVID-19 improved the plight of less majestic wildlife such as the bat, snake, and pangolin. COVID-19 also drew the world’s attention to the lucrative wet market trade in Asia, with Wuhan acting as ground zero for this campaign. Just after the pandemic broke in 2020, China issued a temporary ban on wildlife trade and consumption and kick-started the removal of pangolins from the official list of traditional medicine treatments.36

Nevertheless, conservationists face an uphill battle to curtail this illicit sector. Wildlife poaching is a highly profitable criminal enterprise, slightly less lucrative than narcotics, human trafficking, and arms smuggling. A rhino horn costs more than a pound of gold or cocaine in the market.37 The United Nations estimates that this trade is worth $7–23 billion a year.38 This multibillion-dollar illegal industry comes with sophisticated high-tech logistics, global infrastructures, and organizational processes, much like any successful multinational business. In 2012 the World Wildlife Fund (WWF) partnered with TRAFFIC, a global wildlife trade specialist, to combat the industry. Their strategy entails four pillars in the global wildlife trade chain: stop poaching, stop trafficking, stop buying, and inform and influence international policy.39 Bottom-up forces like community involvement and protection work alongside top-down global policy initiatives such as banning sales of wildlife items and running public campaigns to shift consumption patterns.

In recent years the global supply chain of this illicit marketplace has become increasingly digitized and automated. Wildlife conservation organizations have reached out to the tech industry to combat this formidable problem, fighting fire with fire. The cliché “crisis breeds opportunity” comes to life with Intel’s TrailGuard AI project, a tech solution from the AI for Social Good initiative at Intel Corporation. The head of the lab, Anna Bethke, explains how by pairing tech with human decision makers, “we can solve some of our greatest challenges, including illegal poaching of endangered animals.”40

TrailGuard AI’s cameras alert park rangers in real time when they identify suspected poachers entering conservation areas. These computational networks can process significant amounts of incoming security data at high speed and accuracy to detect intruders and alert rangers at the national reserves. This project is one among many in the AI for Good movement, including the Microsoft-supported Elephant Listening Project, Google’s Wildlife Insights AI, and Alibaba’s cloud computing for conservation.

Multiple tech companies, research institutes, and nonprofits are now utilizing machine learning in their conservation efforts. Drones, infrared cameras, satellite images, audio recordings, and sensors are sourcing the data needed from the field to create more AI-enabled, targeted interventions to combat poaching, trafficking, and sales. The question remains whether AI lives up to its reputation as a savior in the field of conservation.

Shoot to Kill

I teach a class called “AI and Social Design” in which I introduce students to current AI for Good projects. Students critically examine these projects and make their recommendations. In one session, we discussed Intel’s TrailGuard AI project. I divided the class into small groups and within minutes the students were buzzing with ideas. After the break, I brought the teams together to share their thoughts.

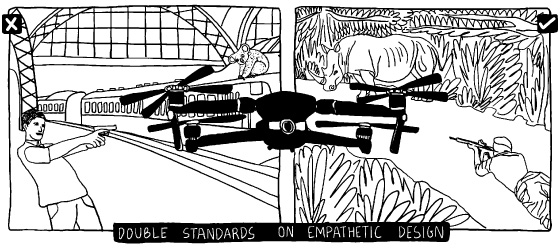

All groups expressed concerns about the digital divide: how to sustain AI in low-bandwidth environments with limited connectivity, where communities have only basic digital literacy skills. Students questioned whether rangers could reach the scene in time to prevent the killing of endangered species. The class offered a radical solution: Intel should pair its AI-based cameras in African national reserves with drones that can shoot to kill poachers on the spot. This solution was efficient, albeit morally problematic. A perfect learning opportunity, I thought, and shifted the context.

I then asked my students to picture a man ready to kill an endangered koala at the Amsterdam train station, which has a police drone with the capacity to kill. Should the drone go ahead and shoot the man to save the animal? Students responded with an immediate and vocal “no.” We have a rule of law, they said, adding that we cannot take a human life without due process, and not for an animal, even if endangered. I then asked them what made the two contexts different. Total silence.

This incident reveals that empathy is not an intrinsic quality; it is a learned process. It is a core part of intelligence that nurtures our ability to relate to another person within their frame of reference. We need to expand our frames of reference to break out of our default modes and include the world’s majority in our emotional repository.

The Good Life

We know little of poachers’ communities, their motivations, and their aspirations. They are abstract, which makes them easier to demonize. In 2020, Africa Geographic embarked on a “reality check” on poachers to reveal the people behind the statistics—capturing poachers’ voices to understand why they do what they do. The revelations were at times heartbreaking and unsurprising: the need to escape poverty, hope for respect, aspirations for their loved ones, and opportunities for prosperous futures.41 One poacher expressed his desire to send his firstborn child to school and give him “the opportunity which I was denied as a child.” Another poacher shared his attraction to poaching as it promised a “good life”—a nicer home, purchasing power, stability. He was tired of feeling like the poor one in the community. “The way they [peers] were behaving made me look like I am not man enough because I couldn’t afford what they could. I was turned into a laughingstock in my community,” he exclaimed. Several others shared that they faced tough times due to the poor economy and had to resort to “desperate measures.” The majority of the poachers interviewed perceived this line of work as low risk and high reward given the easy access to these “commodities” and their lucrative value. Poaching was a smart career choice given high unemployment rates, low legitimacy of the state to deliver decent job opportunities, and deep and persistent inequality.

Neuroscientists have confirmed that emotional and cognitive intelligence share several neural systems and produce deep interdependencies, which impact how we make decisions and behave.42 In the age of AI, there is momentum among programmers to see if and how they can code empathy into machines. Computer scientists Michael Bennett and Yoshihiro Maruyama make the case that empathetic AI is explainable AI.43 If we can explain how AI operates—its intentions, its logics, and its patterns—we can address underlying issues of bias and redesign accordingly. To be explainable is to be accountable.

Users have lost patience with the “black box” treatment of not knowing how their digital tools make decisions that affect their everyday lives. Companies are listening to pleas for data diversity. For tech organizations, data diversity entails expanding the communicative repertoire through data capture of facial expression, voice, and gesture as proxies for empathy.44 Emotions, however, have little meaning without their context. Diversifying our sources of learning remains a significant challenge.

Technology, by itself, cannot solve the confounding problems of unemployment, poverty, state corruption, and social injustice that poachers face. AI has a humbler task to fulfill. The premise of most AI-enabled antipoaching tools is a basic one: intelligence gathering. It should assist institutions on the ground. These tools gather specific kinds of data from the field, tracking species and poachers for detection and deterrence. This intelligence, however, needs to connect with the whole spectrum of intelligence from the field if the systems we build are to matter.

Intelligence on the Ground

Notions of humanity and humankind are trapped in a Western bubble. In 2020 I supervised a project on how conservationists use AI in Africa. A young media scholar, Natasha Rusch, took on the study of AI-enabled antipoaching tools in different conservation areas in Africa. Our report was part of a larger initiative by Digital Asia Hub and Konrad-Adenauer-Stiftung (KAS) on AI trends in the Global South, launched at the onset of the pandemic.45 Born and raised in Zimbabwe, Natasha spent time in Zambia and South Africa, as her family members were well connected to the conservation industry. Their networks helped open doors for us to connect with rangers to learn about their ground realities, including the use of AI-enabled tools. We engaged with NGOs, conservationists, technology researchers, and companies working in different conservation sites in Africa, and asked them questions on motivations, missions, financial backing, strategies, community involvement, intelligence-gathering tools, partnerships, and the local politics that surround such projects. We wanted to know what the different beliefs about wildlife poaching were and why, the rangers’ biggest challenges and their strategies to counter poaching, and how they used AI in their everyday tactics.

We found that everyone agreed that intelligence plays a vital role in the war against poaching. But what is critical data in this war of survival? Intel gathering is risky business. Rangers shared their stories of patrolling on foot in difficult terrain over massive areas. They face poachers who are armed militia; trained sniffer dogs are useful but exorbitantly expensive. Going by foot in this game reserve is not optimal, as “you have one in a million chance of bumping into poachers unless you have intelligence,” admits a ranger from the Malilangwe Trust, a Zimbabwean NGO.46 These facts make for a good case to use AI-driven patrolling sensors. Patrolling, however, demands smart tactics. According to a ranger from Painted Dog Conservation, another Zimbabwean NGO, it pays to be unpredictable and keep poachers on their toes. They mix up their routines, change their hours of inspection, and shift their patrol pathways and times of operation. Typically, AI-enabled antipoaching tools predict poachers’ tactics from their past movements across these regions. Can machine learning match the dynamism of these ground-level tactics?

Intelligence Leakage

Tech companies are experimenting busily in this area. Predictive analytics of poaching activities uses machine learning and game theory. Microsoft’s PAWS project, for example, provides real-time heat maps of likely poaching activity so that rangers can see which locations carry higher risk than others and adjust their patrolling strategies. The real intelligence, however, lies in how rangers develop tactics to make themselves unpredictable. These human intermediaries transform data into intelligence as they leverage their deep experience, informant networks, shifts in animal movements, and cultural rootedness.

Reliable informant networks are critical to such intermediaries. One of the biggest problems is “intelligence leakage”—rangers becoming informants for poachers. A ranger from Malilangwe Trust explains:

Like Kruger National Park, they brought in all this fancy equipment, tracking equipment. They’ve put in transmitters into rhino horns. And the very people you look—are looking after them, are using that to actually kill the rhinos.47

Relationships of trust dictate the quality of intelligence. A ranger from the Panda Masuie Project (an NGO) explains that 90–95 percent of poaching takes place on designated conservation property. Rangers and poachers are often from the same villages, sometimes related to one another. To go against your peer network is no easy feat. The informant networks can swing in either direction based on how you treat your rangers. On that front, the picture is dismal.

While tech companies work hard making AI for rangers, rangers are hard at work trying to get their basic needs met. A 2018 World Wildlife Fund report surveyed 7,110 public-sector patrol rangers at hundreds of sites across twenty-eight countries. The report revealed that 82 percent of rangers had faced life-threatening situations while on duty.48 Despite these high risks, rangers were inadequately armed and had limited access to vehicles and training to combat organized crime. Other grievances included insufficient boots, shelter, and clean water supplies. Their pay was low and intermittent, work conditions were poor, and jobs came with limited or no health and disability insurance. Intelligence on poachers was not their key problem. Investing in rangers’ well-being would have paid more dividends.

The reality on the ground is that most rangers have rotary phones and limited connectivity and electricity. They resort to paper-based recordkeeping. The more computationally dynamic AI tools promise to be, the more investment-hungry they become, since they demand quality digital and data infrastructures. The competition for funding is fierce. AI wins out over salary hikes for rangers, better roads and vehicles, training and arming rangers, and investments in wildlife tourism and other alternative livelihoods. Even though AI wins the battle, it loses the war. A Painted Dog Conservation ranger points out why AI-enabled tools will not work unless other factors on the ground are addressed. The ranger describes how bad the roads are in the Kalahari area. For AI-based alerts to translate into action, organizations first need to invest in basic transport infrastructure:

Imagine you receive a phone call, or a radio: there’s been an incursion; there are poachers in the area and you need to pull from Main Camp; it is going to take four hours before you get to Makomo. And I don’t know how many more hours to get to where the actual problem is.49

Moreover, Nico Jacobs, cofounder of Rhino 911, suggests we need to get off our high horse about high tech. AI should work in tandem with technology better suited to conditions on the ground:

With a proper placing of radar, a helicopter with unlimited flying time, monitored fences. . . . Better electrified fences, thermal cameras on the fences, radar systems and a four-pilot team that works eight-hour rotating shifts. I’ll decrease it [poaching] for you by 80 percent.50

If we step back, our questions get tougher, even existential. Is there a point to detecting poachers if rangers cannot get there on time? Why place all bets on new tech when old tech can still be powerful? How will users perceive AI tools if they go against their own interests? What value does AI have when detached from social intelligence on the ground? This is not tech nihilism or Luddism; it is social realism. Social solutions can help solve technological problems. Human and technical intermediaries come as a package. We need to zoom out to see the full complexity of humanity.

From Morality to Relationality

There is a reason why the tech industry leads the way on AI for Good projects. Tech leaders miss being the good guys, as they were in the early days of the internet. Today, Google's "Don't Be Evil" motto reads like satire. In 2018 the phrase was quietly removed from the preface of Google's code of conduct. Similarly, the popular HBO series Silicon Valley, which parodied the bro culture of California's tech industry from 2014 to 2019, stopped being a laughing matter for its targets. The show's writer and producer, Clay Tarver, remarked, "I've been told that, at some of the big companies, the P.R. departments have ordered their employees to stop saying 'We're making the world a better place,' specifically because we have made fun of that phrase so mercilessly."51

The tech industry's credibility is at rock bottom. It has a proven record of generating new problems with old tech and promising to solve those problems with newer tech. This pattern guarantees a cycle of technocentrism. Think of the cringeworthy aha! moments in Netflix's docudrama The Social Dilemma, as the geeks who built the platforms reflect on how the tech industry controls, manipulates, and polarizes its billions of users. The inventors of the hashtag, infinite scroll, and other such digital addictions now promise, by way of redemption, to save the world again. But tech creators need to stop trying to be messiahs in this game. They need to break away from this legacy of moral certainty and binaries of good and bad.

Designers need to move away from morality-driven design with its grandiose visions of doing good. Instead, they should strive for relationship-driven design that focuses on the relationships between people, contexts, and policies. As in any relationship, it is the process that needs nurturing. Designers, programmers, and funders can benefit from attending to, listening to, and reflecting on what users at the margins say and do about AI intervening in their social lives. Policymakers should bury the terms "AI for Good" and "AI for Evil." Words shape design, so regulators can establish transparency by reframing AI in relation to the values of diversity and representation, authenticity and trustworthiness, and explainability and responsibility. The media can stop debating whether tech can be an autonomous solution to the world's problems.

All tech is innately assistive, and investing in the human is investing in the machine. AI does not generate contextual intelligence; we humans do. We need to learn to live without our binary instincts. This pursuit demands a messy but essential relational solution.