10

Case Studies

In this chapter, we present case studies to demonstrate the methods and ideas described in the book. Any company, institution or business can benefit from monetising their data, so the case studies cover a range of different applications. We do not include the more common examples, which are readily available on the internet. Note also that many of the ideas are widely applicable and the aim of the book is to enable the reader to make use of them in their specific business.

The case studies illustrate how the ideas in Chapters 7– 9 pan out in real life. They show different ways of uplifting the business. In some of the case studies the benefit from monetising data is obvious; in others it is more hidden and the benefits will arise from improved processes, such as algorithms replacing manual work or greater streamlining because more use is made of forecasts. Examples of this are found in planning, purchasing accuracy and in all aspects of supply chain management. The benefits can also arise from reduction of time and costs, especially by replacing the more routine work carried out by experts with algorithms, thereby freeing up the experts for more creative thinking and innovation.

The case studies cover different types of data and different sectors and sizes of business. One of the main barriers to successful monetisation is poor data readiness. Data preparation can absorb a lot of time and be very frustrating. All of the case studies have arisen in our practice and each emphasises key learning points.

Each case study has the following sections:

- Background and Content

- Methods

- Monetisation

- Key Learning Points and Caveats

- Skills and Knowledge Needed

- Cascading, wider relevance

The case studies are summarised in Table 10.1.

Table 10.1 Summary of case studies.

| Number | Case study | Sector | Monetisation opportunity | Exchange | Further comments |

| 1 | Job scheduling in utilities | Utilities | Efficiency | Own use data | |

| 2 | Shipping | Shipping | New services | Own use data | |

| 3 | On line sales or mail order | Retail: mail order, over the counter, health products | Predictive targeting | B2C selling goods and services | Online |

| 4 | Intelligent profiling with loyalty card schemes | Retail: mail order, over the counter, health products | Segmentation, individual offers | B2C selling goods and services | Online |

| 5 | Social media | All | Connected world | Social network | Using public data with a subtle theme |

| 6 | Making a business out of boring statistics | All | Increase market intelligence | Advisors selling knowledge | |

| 7 | Social media and web intelligence services | All | Business improvement and selling information | Advisors selling knowledge | Using official statistics and public data |

| 8 | Service provider example | All | Emailing newsletters | Service providers | Public and provider’s own data |

| 9 | Data source | All | Providing addresses | Service providers | Public and customer’s own data |

| 10 | Industry 4.0: metamodelling | Manufacturing | Mass customisation | Advisors selling knowledge | |

| 11 | Industry 4.0: modelling | Manufacturing | Supply chain management | Own use data | |

| 12 | Monetising in an SME | Health and well being | Data analytics to generate insight | Own use data | Selling insight |

| 13 | Making sense of public finance and other data | Finance | Business improvement and selling information | Open data | Using privately owned data and integrating it with public data |

| 14 | Benchmarking | Brands | Learn to decide how good or bad company performs | Market research data enriched with public data | |

| 15 | Shopping habits | Retail | Improved customer relations, efficient and product handling | B2C selling goods and services | |

| 16 | Shopping habits | Retail | Improved customer relations, efficient and product handling | B2C selling goods and services | |

| 17 | Shopping habits | Retail | Improved customer relations, efficient and product handling | B2C selling goods and services | |

| 18 | Monetising data arising from IoT | Service providers, households and facility management | Business improvement, better targeting and selling information | ||

| 19 | Monetising data arising from IoT | Insurance, healthcare and risk management | Business improvement, better targeting and selling information | ||

| 20 | Monetising data arising from IoT | Mobility and connected cars | Business improvement, better targeting and selling information | ||

| 21 | Monetising data arising from IoT | Production and automation | Business improvement, better targeting and selling information |

Case studies 15–17 and 18–21 encapsulate distinctly different aspects of shopping habits and the internet of things. As these application areas are of great importance they are given as separate case studies. Most of the text in these case studies is specific to the specific aspect being described, but some of the text is repeated for the sake of completeness. Therefore, these case studies can be read as complete entities.

The case studies are introductions to the fields rather than prescriptive. They are not complete solutions but give an overview and indication of how to deal with the main issues. This is a necessary way to approach the case studies because each could merit a whole book in its own right.

10.1 Job Scheduling in Utilities

10.1.1 Background and Content

The utilities sector is highly regulated and there are strict guidelines as to response rates to be achieved by service providers when attending to faults and failures. Customers with problems contact the company and expect solutions. For example, if your phone or internet, electricity, water or gas are not working, you expect the utility company to come and repair your system. Although from the customer point of view all jobs are important, from the company point of view some jobs are more important, urgent or risky than others and need a higher priority.

Service‐level agreements are usually set to ensure that the company gives an agreed level of service. In utilities, jobs with a higher level of risk are prioritised. Risk is determined by a combination of likelihood and consequence and usually a risk‐based points score is applied. There is a time consideration, in that the highest‐risk jobs must be completed immediately, or at least within 24 hours, and others can be dealt with at a later date.

Consider a service‐level agreement that states all highest‐risk jobs must be attended immediately and overall at least 60% of jobs arising in a month must be completed within 24 hours. The difficult issue is that jobs of different risk levels arise in a random manner and the timing and seriousness of the problems varies with the seasons. As the month progresses, which jobs should be tackled so that 60% is achieved over the whole year but is not exceeded by too much? For example, if all the jobs in one month are tackled, this will be an excessive response rate from the company point of view if only 20% were at the highest risk level. If only the highest‐risk jobs are tackled, only 20% of jobs will be carried out and there is a shortfall under the 60% service‐level agreement.

The business issue is to conform to the service‐level agreement in the face of the random arrival of problems. The company needs to avoid excessive overwork that can arise from a fear of missing the target. This situation is reminiscent of the classic manufacturing dilemma of having to fill containers to a certain level or weight, with regulatory penalties for underfill and financial implications for overfill. In the case of weights and measures, filling may be expected to be subject to natural variation following an approximate normal distribution, so that reasonable estimates can be made of the percentage over‐ and underfilled. In the utilities jobs scenario, the important measure is risk score rather than fill level, and the distribution of risk scores is likely to be highly non‐linear.

Companies hold details of all the jobs that arise and are attended; a periodic review of risk and response rates will help to address the scheduling problem and bring financial gains in terms of improved scheduling.

It is interesting that data analytics has evolved from its early applications in manufacturing and retail, and now extends to all spheres of work including workforce planning and performance management. A summary of this evolution is shown in Figure 10.1.

Figure 10.1 The evolution of data analytics

The company needs to predict the risk cutoff score that they can apply throughout the month to ensure they meet their obligations but do not excessively overrun. The current position is to adopt a conservative cutoff but this can be improved upon by analysing the data in greater detail. More sensitive application of cutoffs leads to savings in job scheduling, increased efficiency and potentially a reduction in costs, which can be passed on to customers.

10.1.2 Methods

The distribution of risk scores tends to be non‐linear because it is ‘man‐made’ and has lumps of risk allocated to jobs on an ad‐hoc basis. For example, the risk associated with a job to fix a fault affecting a small area may be 1 and for a fault affecting a large area it may be 10. This risk component is then multiplied by the risk raised by the usage of the faulty component. Low usage has a value of 1 and high usage has a value of 5. Hence, it can be seen that in this simple case, the possible outcomes are 1, 5, 10, 50. This is shown in Table 10.2.

Table 10.2 Risk scores in a simple case.

| Risk scores | Usage | |

| Affected people | Low | High |

| Low risk (few) | 1 | 5 |

| High risk (many) | 10 | 50 |

The arrival time of jobs is random and the distribution of jobs with risk scores 1, 5, 10 and 50 is not normal. In addition, the distribution of risk scores varies in different seasons and in different locations. For example, certain problems may be more common in summer than in winter; some types of property may need jobs more often than others. An example of a distribution of risk scores is shown in Table 10.3.

Table 10.3 Distribution of risk scores in different seasons.

| Risk score (RS) | 1 | 5 | 10 | 50 |

| Percentage in summer | 55 | 10 | 30 | 5 |

| Cumulative percentage of jobs with risk score less than or equal to RS in summer | 55 | 65 | 95 | 100 |

| Percentage of jobs with risk score greater than or equal to RS in summer | 100 | 45 | 35 | 5 |

| Percentage in winter | 25 | 30 | 20 | 25 |

| Cumulative percentage of jobs with risk score less than or equal to RS in winter | 25 | 55 | 75 | 100 |

| Percentage of jobs with RS greater than or equal to RS in winter | 100 | 75 | 45 | 25 |

In this simple example, suppose that the service‐level agreement is that all jobs with risk score greater than 10 must be tackled immediately, and that even if there are fewer than 60% of jobs with risk score over 10, an overall level of 60% of jobs must be dealt with in 24 hours. So the question is how to set the cutoff risk score for action.

The cumulative distribution functions (CDFs) are shown in Figure 10.2. Deciding to prioritise the most risky 60% of jobs requires a risk score cutoff corresponding to 40%. This is 1 in summer and 5 in winter, to ensure that at least the worst 60% of jobs are tackled. Because of the low granulation of the risk scores, these cutoffs lead to wastage because 100% of summer jobs will be dealt with and 75% of winter jobs, whereas only 60% of jobs need to be completed. However, it also shows that if a cutoff of 1 is chosen for both summer and winter, there is a lost opportunity because actually the cutoff could be 5 in winter and still meet the service level requirements.

Figure 10.2 Cumulative distribution of risk scores.

In the real‐life situation, the percentage cutoff each month depends on the job mix and the CDFs differ each month. This presents an opportunity for setting a more finely tuned cutoff.

Data from even a small number of years, for example five years, may be enough to identify a pattern in the data if it is a strong, well‐defined pattern, but a weaker pattern will require more data before we can be sure enough to make recommendations.

Simulation can be used to explore the stability of the patterns and any rules based on the data distributions. Repeated random samples of data from the dataset can be examined and the variation in the CDF, percentile cutoff risk scores and percentage above a specified risk score can be observed, leading to 95% tolerance intervals.

In addition to proposing a solution to the scheduling problem it is important to set up a method of ongoing assessment. The differences between observed and expected results should be examined on a monthly basis and if the discrepancy is too large then action should be taken. The natural control limits for the discrepancies can be estimated from data in a set‐up period, and then as time goes on, any discrepancy that exceeds the control limits will trigger an action.

10.1.3 Monetisation

It is important to keep strategic goals in mind and realise that even a small discovery can be beneficial. For example, merely identifying that the risk score distributions are different in different months gives an advantage. If this leads to a saving of 10% of a month’s jobs, the extra cost of dealing with prioritised jobs enables the savings to be calculated. For example, if the extra cost is £1,000 per job and there are 10,000 jobs per month, the savings are 10% × 10,000 × £1,000 which is £1 million per month. These savings can be used for other actions within the company, such as increased customer‐focussed activities with the potential to raise customer satisfaction and loyalty.

Unfortunately, establishing the veracity of the differences between the monthly cumulative risk score distributions is not easy, because we need to have replication to be confident. Several years’ worth of data are required to determine if the pattern is an enduring feature of the risk scores or whether it is just a random fluctuation. The replication data needs to be similar in salient features and this is difficult to ensure because there may be changes in the business, the evaluation of risk scores or in the range of properties within the catchment area. Even with satisfactory replication, it is not easy to be confident about the similarity of patterns, which may themselves be rather vague and subtle.

10.1.4 Key Learning Points and Caveats

Collating the dataset ready for analysis can be a major undertaking. Operational data is often not in a format suitable for analysis for a different purpose. It is a key learning point for a company to be aware that their data is valuable and that it may have to underpin all sorts of what‐if scenarios; for each investigation, the data needs to be readily accessible and reliable.

Data investigation is likely to raise issues and queries with the data, for example the exact operational definitions and lists of quality checks undertaken. Good effective communication channels need to be established early on in the project so that there can be fast exchange of information and explanations throughout the investigation.

The importance of the investigation needs to be clear to all stakeholders to ensure their commitment to the project. There needs to be clarification of aims, timescales and communication channels. Official statistics can be used as a back‐up to the operational data. This will provide evidence of the distribution of properties and population characteristics in the catchment area and also the trends taking place over time.

The balance of penalties and savings needs to be examined. The uplift compared to doing nothing needs to be noted and also the potential cost of faulty guidance due to failed assumptions.

It may be impossible to establish a reliable pattern. In this case it is a business decision whether to act on the suggestions evident from the historic data or to retain current practice. Patterns, even if established, may change over time and there must be ongoing checks and balances to ensure that targets are still being met and regulations are being followed.

The analytical skills needed for this sort of investigation are significant. It is important to provide support to the analyst so that they do not have to spend all their time on the technical‐administrative tasks associated with accessing and rationalising the data.

10.1.5 Skills and Knowledge Needed

An investigation of the sort described in this case study is a classic application of data science. The analyst needs to understand the business need, have excellent communication skills and ability to keep on task to deliver a viable solution. In addition, there is likely to be considerable data manipulation and so IT skills are vital. The statistical skills needed include an understanding of natural and systematic variation, CDFs, percentiles and pattern recognition techniques. There needs to be an awareness of the possibilities and consequences of errors arising from underestimating and overestimating the levels of risk likely to be encountered in the next accounting period. Statistical process control methods are needed to ensure that the process stays on track and that control limits will alert to the need for actions, such as re‐analysis, in good time.

10.1.6 Cascading, Wider Relevance

The scenario in this case study applies to any situation where there are competing demands dictating how things are done. The competing forces can be financial, regulatory, health and safety or customer‐related. Utility companies and IT or infrastructure service providers must look after their expenditure and demonstrate value for money as well as reaching well‐defined service‐level agreements. Due to restrictions in expenditure, such institutions are limited in the number of experts they can employ, hence the importance of learning from each investigation and embedding data monetisation skills in the company ready for the next opportunity to use them.

10.2 Shipping

10.2.1 Background and Content

The shipping industry is extremely important. It deals with 90% of global trade and is responsible for around 3% of global carbon emissions. There has been rapid expansion of the use of sensors and a massive amount of shipping data is now collected routinely. Shipping data arises from different sources and in different formats (see Figure 10.3); big data is a topical issue. Big data analytics can help to determine hidden patterns and trends that can be very useful for performance management, maintenance planning and emissions monitoring, thus providing decision support for the ship operator.

Figure 10.3 Data sources in the shipping industry.

However, as in many industry sectors, less use is made of the data beyond its immediate operational value. The different sources of data are often owned by different stakeholders, making it difficult to get the full benefit. Ships’ logs contain valuable details of sailing conditions, sea states, routes and time taken, as well as cargo and staffing information. These are company owned data, are confidential, guarded from competitors and not usually accessible. However, in conjunction with publicly available data on weather and tidal conditions, this data can give great insight into the efficient running of the business. Producing case studies showing the benefits of analysing shipping data will encourage a greater sharing of data, take‐up of the methods and nurturing of the skills needed.

If appropriate equipment is fitted, sensors return minute‐by‐minute fuel consumption figures and GPS locations. The data is used for navigation, timing and fuelling purposes. The data can also help to compare ship management strategies, including economic speed of travel (see Figure 10.4) and maintenance planning. Case studies listed in the bibliography show how ships’ data is analysed to give valuable insights and monitor shipping performance.

Figure 10.4 Optimum speed recommendation.

Shipping is a cost‐effective mode of transportation for global trade. With increases in fuel prices and increasing environmental legislation, fleet operators are keen to save fuel and keep a tight control on emissions. Increasing shipping efficiency, reducing emissions and operational costs is environmentally sound and brings competitive advantage to the ship operators.

Analysis of data on a ship’s location and fuel consumption is the main focus of this case study. Three additional facilities were developed for use by shipping management: automatic mode detection, identification of an economic speed and a methodology for calculating emissions. Further work is underway on monitoring ship performance.

10.2.2 Methods

The data need to be time stamped, amalgamated and interpreted ready for analysis. One of the first realisations when studying shipping data is the need to identify different modes of operation for the voyage. Offshore vessels will spend periods of time in port, in transit and holding their position at a site; tugs will spend time in port, in transit and in action; ferries will spend time in port and in transit, often having to modify their speed and course to negotiate other vessels in busy shipping lanes. Before fuel consumption data can be interpreted each journey needs to be separated into its separate modes of operation.

Identifying modes of operation involves a thorough analysis of historical measures of fuel consumption, speed and location with known modes so that mode thresholds can be set. On some vessels, the crew set the mode manually, but this can be inaccurate due to operational distractions. The automatic detection of different modes is more reliable.

Fuel consumption and speed are related by a power law. A statistically designed experiment was used to generate comparable data from which to establish the relationship between power and fuel consumption for a specific vessel travelling under calm conditions. Replicated journeys were carried out at different power levels according to a statistical design. The data for power and speed are analysed by fitting a regression model based on a cubic curve. Using this model, the fuel consumption for a journey of a set number of nautical miles was calculated for different speeds. The speed giving the minimum cost was identified and recommended as the eco‐speed. Using this relationship, a dashboard display shows the time for the journey, the expected fuel consumption and the expected excess cost if a different speed is used. The expected emissions are also calculated based on the specified type of fuel being used. Thus the economic speed recommendation is explained and justified.

Sensors to detect emissions are expensive. An alternative is to use statistical calculations to estimate emissions from the speed, fuel consumption and fuel type. These estimates are added to the eco speed dashboard to provide a sound management tool.

Data for successive journeys can be analysed to produce a regression model predicting fuel consumption under specified conditions. The predicted fuel consumption can be compared with observed fuel consumption and the discrepancy monitored using statistical process control. Large discrepancies alert the ship’s management to possible issues of equipment malfunction, wear or damage.

10.2.3 Monetisation

Analysing data beyond its immediate operational use gives information on the estimated fuel consumption, duration and harmful gas emissions for the upcoming journey. There are monetary savings in terms of optimised fuel consumption and better prediction of shipping times. Current and upcoming regulations regarding emissions can be accommodated. Automatic mode detection is fundamental to managing performance and reduces costs caused by errors introduced by manual recording of mode information. These uses of available data give a competitive advantage and increase revenue.

10.2.4 Key Learning Points and Caveats

Shipping sensor data can be used to optimise economic factors and increase business. It requires use of sensor equipment, familiarity with open data sources and access to operational data. There has to be documentation and strict management to ensure that the agreed recommendations are adhered to.

There are a wide range of influences on fuel consumption in shipping. Experiments on active ships are more appropriate than carrying out tests in a workshop or using simulations. They need to cover a wide range of weather conditions, which is clearly difficult to achieve; the costs of experiments are considerable as the vessel has to be taken temporarily out of service with the resultant cost in fuel, lost trading and staff time. Although this is an important limitation, the experimental results are nevertheless valuable as a first guideline and further refinement can be carried out according to the likely benefits. Insight can be obtained from observational data that covers a wide range of conditions but this data includes a lot of noise so that a large quantity is needed to detect the signals within.

10.2.5 Skills and Knowledge Needed

Sensor data from ships needs to be captured and analysed. Data needs to be cleaned and verified. There needs to be knowledge of relationships between the many factors influencing shipping costs. Baseline data also needs to be analysed to detect patterns and trends and determine thresholds. Experiments are required to set up baseline measurements upon which to build models. The experiments must be designed to maximise information for minimum cost. Engineering knowledge of emissions calculations is needed. Statistical skills are required to analyse correlations and construct regression models for prediction. Business knowledge is vital to determine the key areas for improvement and ensure that data analysis is focussed on providing competitive advantage.

10.2.6 Cascading, Wider Relevance

Similar use of sensor data can be made in all industries to save energy and costs, and conserve essential raw materials. Other industries such as production and manufacturing already use their data in quality improvement projects, but there is less emphasis on energy saving. These industries are starting from a strong base by having statisticians employed and knowledge of how to use data. Such usage is less well developed in shipping so there are a lot of opportunities.

10.3 Online Sales or Mail Order

10.3.1 Background and Content

Examples from online sales and mail order houses are well known for data monetisation and are the most commonly cited cases in this area. Mail order businesses are not new, having been in existence long before the digital age. They are distinguished in that they do not have face‐to‐face contact with their customers. However, from the beginning, they have made good use of their data to generate profit and to compensate for having no salesperson who can read the expression of the customer.

Because they do not meet the customer in person, all the decisions to do with pricing, offers and products depend on the information gathered from customers. This information is not just from sales data but also the way customers navigate the website, the way they pay, their response to advertisements and so on.

Mail order houses and online shops use the full range of data mining methods. These include predictive modelling, forecasting and targeting, behavioural pricing, credit risk forecasting, testing sensitivity to prices and intelligent segments. In addition, association and sequence analysis lead to cross‐ and upselling and planned product placement. Forecasting on the level of prices leads to profit optimisation and cash flow management. Forecasting on the level of advertising leads to cost savings by using just as much advertising as is needed to meet given goals. Most of the time, these dimensions are linked, for example in targeting and advertising optimisation.

Even though they have been in existence for a long time, they are often ahead of the curve in using their data because it is the only way to improve the business. They are also interesting because their methods need to be opaque, so as not to deter customers or make them aware that their behaviour is being observed and analysed.

This case study is based on a mail order house selling over‐the‐counter products. They want to individualise their offers to the health needs of each customer. Currently they are targeting their customers in gross segments, but they intend to refine these segments so that their advertising can be more precise. Everything has to be handled to minimise the cost and avoid extra expense through the individualised offerings.

10.3.2 Methods

Out of the universe of methods that these companies use as part of their business culture, this case study focuses only on monetisation by behavioural‐based offerings. The first step focuses on gathering the customer data from past interaction with the company, including dates of sales, timing and location of touchpoints with the company, complaints, advertising received and responded to, products bought, returned and commented upon. The data needs to be collated by customer ID and typically will have many variables; there might be several thousand variables of different types, including binary, continuous and ordinal. It is important to realise that demographics form only a small part of this set and most of the variables relate to individual behaviour.

A predictive model is generated for each product. For product A, the target variable might be whether or not the customer bought product A in the last 14 days. The potential explanatory variables are all the other variables, with their values as they were before the 14‐day period. Decision tree analysis is used to identify important explanatory variables and create a model that makes it possible to determine the individual buying likelihood, a value between 0 and 1. The model creation must follow the data mining process. It is created with a learning dataset extracted from the whole dataset, a test procedure and validation to make sure that the model is reliable. So, for example, there might be several hundred important explanatory variables. A decision tree may have many layers (around 50 is not uncommon) and a logistic regression model may have many coefficients (around 200 is not uncommon). A pruned example of a decision tree is given in Figure 10.5.

Figure 10.5 Pruned decision tree.

An important explanatory variable might be whether the individual bought product A two orders before. Reading the decision tree in Figure 10.6 from bottom to top, an individual who bought product A two orders before is likely to buy with likelihood of 22.7%, whereas an individual who did not buy product A two orders before is likely to buy with likelihood of 32.1%. Results are listed for training and validation samples. Likelihood of purchase depends on which leaf of the decision tree the customer fits.

Figure 10.6 Detail from decision tree

Models are built for all of the products of interest and each model may have a different collection of explanatory variables. Each model gives a likelihood of purchase for each customer. For each customer, we compare the likelihoods to determine which products are the most likely to be bought. We choose the top few and include these products in individualised advertising.

The next step is to decide on the channel for the marketing for each customer. This time the predictive models have as their target variable whether or not the product that the person bought in the last 14 days was as a result of each type of marketing campaign. Each product purchase has a different identifying code depending on the type of marketing in which it was featured. A model is generated for each potential channel. In this case, the channels are phone, mail, catalogue, email and website banners. Again, the channel with the highest likelihood is chosen. The result is presented in a customised communication with the customer. Each customer has their own version; an example is given in Figure 10.7.

Figure 10.7 Customised communication.

If the number of products and channels is large, creating a model for each product/channel combination runs the risk of rather small datasets and a large number of models. In this case, it makes sense that the products are treated together rather than separately by each channel. Thus the number of models is one for each product plus one for each channel.

An associated issue is that we need to be creative to think of ways to combine advertising for different sets of products. For example, Dirk may be most likely to buy products B, A and D in that order of likelihood whereas the ordering for Chris may be products E, B and C. These products need to be presented in an appealing and appropriate manner. For example, the opening sentence may need to differ depending on which product is the most likely purchase. One solution is to create a modular framework for the advertising which can be filled automatically corresponding to the model. The diverse communications give an example of the subtle differences generated by behavioural targeting.

10.3.3 Monetisation

Using predictive modelling is expected to produce better responses without spending more money on marketing. There is also a reduced amount of marketing effort, which could include saving on printing and posting, which can be considerable. For example, a coloured two‐page letter featuring the chosen products and postage can be as much as €0.80 and may be sent to 250,000 customers. If you can reduce the number of recipients to 180,000 because 70,000 are likely to respond better to an email, then the saving is €56,000 in one campaign without reducing revenue.

Behavioural targeting also reduces potential annoyance due to over contact with the customer with the wrong product. The cost is in gathering the data and carrying out the analysis. However, the data is generally available and can be used for multiple purposes. The company has to invest in IT infrastructure and experts. The models have to be reassessed periodically. The payback is that with every marketing activity the company saves costs; every outgoing action is carefully tuned and only used when there is evidence of it being useful and the return on investment is expected to be good.

As well as identifying products and channels, predictive models can also be created around pricing strategies, thereby avoiding offering unnecessarily low prices. This has potential to increase revenue. Figure 10.8 shows different versions of individualised communications with different products and prices offered.

Figure 10.8 Individualised communication.

10.3.4 Key Learning Points and Caveats

The value of the behavioural marketing depends on the reliability of the models and the stability of the population. A bad model could be worse than doing nothing. The data mining process has to be followed carefully to ensure reliable models are created. Data must be of high quality. The briefing from the business side must be clear and understood so that all agree which are the products of interest and which are the potential channels, to avoid having to repeat the analysis. The models should be built so that they can be developed and used again and/or used in an automated environment. This is particularly important when real‐time prediction is required to offer behaviour based offers on the website or customised phone calls.

Figure 10.9 shows a cut‐down version of the complexity of the steps carried out in this case study in an automated environment. In this environment, there are also metadata relating to the application of the models, such as date of applying the model and the group of customers on which the models are applied.

Figure 10.9 Complexity of data mining steps.

The lines spreading into the bottom node represent the passage of possible errors from the data preparation steps, for example summarising sales data for each consumer in terms of variables such as whether product A was bought in the last 14 days. The data preparation is typically carried out every week. Note that the values of the variables change each week as the time window changes.

The lines starting top centre span out from the control node and represent the flow of prepared data into 103 different models, one for each product and type of communication channel. The mid‐central span represents metadata for each model. The lower right‐hand‐side node represents the flow of information into the recommendation algorithm that receives and sorts the likelihoods and formulates the recommendation.

It may be that a suitable model cannot be found for one or more products. In this case, the product can be ignored or a random choice made. This situation may occur with newer products, where there is less data and no comparable products. It can also occur with products that are less easily predicted because they occur more randomly, for example different types of presents. For these products, the model is unlikely to produce a high likelihood of purchasing the product based on the explanatory variables.

Even if the problem seems to be very complex, it can be seen that the problem can be tackled in pieces. An alternative is to use association rules or cluster analysis to see which products go together, but this does not include the people aspect and is less precise and less useful for re‐use. The predictive modelling procedure has the advantage that the models can be used in different ways, which saves time and money.

10.3.5 Skills and Knowledge Needed

Communication skills are necessary to ensure that the business briefing is effective. Knowledge to translate a business case into an analytical task is important, for example in identifying that optimum product combinations are needed. Clear ability in data mining is a fundamental requirement, as is creativity to set up the data environment, building on the data available in the data warehouse and additional sources of data if required. There also needs to be data awareness to create a range of variables that could be good explanatory variables, for example whether product A was purchased two orders ago, or whether the customer complained or whether they only purchase when there is a ‘buy one get one free’ offer, and so on.

10.3.6 Cascading, Wider Relevance

The ideas in this case study are widely applicable to all kinds of businesses dealing directly with customers. It is vital to be able to identify each customer and to capture their purchasing behaviour and all interactions with the company. The ideas can be used by companies having loyalty cards, or for on line portals for travel and other sales opportunities. We just need to be able to identify customers and track their behaviour.

10.4 Intelligent Profiling with Loyalty Card Schemes

10.4.1 Background and Content

This case study focuses on loyalty card schemes and their enormous benefits for companies in the travel sector. In contrast to online or mail order houses, most companies, including those in retail, travel, hotels and car rental, have the disadvantage that customer interactions may not include customer details so that it is difficult, if not impossible, to link purchases and monitor reactions to advertising. Even if customer details are available, without a proper scheme the details may not be shared between different branches, so a complete picture is not available. Loyalty cards have advantages in addition to a straightforward gathering of data because they facilitate a focus on marketing activities and enhancing customer loyalty by giving bespoke gifts and advantages to their members. Customers are also given special coupons and benefits in other business areas that have teamed up with the scheme, giving added value. For example, airline and hotel loyalty schemes are commonly linked, providing a start‐to‐finish potential for better service. Nearly all loyalty schemes include a benefit around extra comfort, such as entry to airline lounges, and extra advantages that come with the scheme but are not easy to buy as stand‐alone benefits, such as invitations to special launches.

Loyalty card schemes provide the underlying structure to develop promotional strategies and guide customers through their customer journey, ensuring that the customer stays loyal, comes back and hopefully becomes an advocate.

10.4.2 Methods

We focus in this case study on using the data arising from the loyalty cards for intelligent profiling. The data from the customer journey gives us more or less complete profiles. Not all parts of the data will be available so the methods have to cope with incomplete data.

There has never been so much data from prospective customers and actual customers, from so many possible sources, as today. In addition to the inventory data that every company stores in its customer database (name, gender, address, customer ID number, orders, purchases, requests, customer service inquiries, payment history, and so on) we can, and do, amass much more information. For example, we collect data about the online activities of customers and prospects in social media, their registrations in online forms, their usage data from websites and apps, their click behaviour and interactions, their completed or aborted purchase transactions and their preferred devices and browsers.

Offline data also arise from visits to the retail location, from responses to offline campaigns, and from regional peculiarities and market environments, to name just a few. Offline campaigns are marked with codes. Most point‐of‐sale systems measure activities there, while mobile devices provide other geo‐location data. Online and social media marketing activities get unique URLs or cookies to help track the origin of user actions. Information about consumers’ preferred communication and information channels are obtained. It does make a difference whether visitors have found their way to the company’s website via the Facebook fan page, the company’s profile on Xing or LinkedIn, a specific mobile ad, the company’s e‐newsletter, the corporate video on YouTube or the QR code in a magazine display ad.

Through monitoring tools and services, as well as application interfaces (API interfaces to web or social services, which can be used to read personal data), we can gain other valuable information about consumers’ education, lifestyle, peer group information, preferred brands, exposure to areas of interest and more.

So data is collected at various stages of interaction with the company. The loyalty card is a convenient way to summarise all this information. Typical data is shown in Figure 10.10.

Figure 10.10 Data in the customer journey.

Two crucial points should be kept in mind:

- Legal requirements A company has to respect national laws. In many countries an active declaration of agreement by consumers – both prospects and customers – is required for storage and usage of their data for marketing and targeting purposes. This can be a communication challenge, but it seems to be getting easier. People have become more willing to pay for information or entertainment with their data.

- Data matching The more sophisticated point is the issue of data matching and how to add data from different sources to a single person’s record. As a rule of thumb, in early stages of the consumer lifecycle, matching points are sparse and may not lead back to a single person. But they may lead back to a group of people who have something in common. As the relationship grows, more contact data and behavioural data become available for identification and matching. The goal is to try to collect these data as soon as possible. A loyalty scheme is an excellent assistance in this.

10.4.2.1 Creating profiles

Using descriptive, exploratory analysis, unsupervised learning techniques and predictive modelling, we can create individual, precise and informative profiles from consumer data. These profiles consist of social, self‐reported and observed behaviours, blended with classical CRM database information, which allow insights into how individuals act and connect in real life.

A profile, as we use it, is a single record (which may include thousands of variables) for an individual or an artificial representative of a small group of similar people. Such a profile is the result of a complex compiling process where data from different levels of individualisation and sources are brought together to give a good view of the individual or small group. A profile should be updated at regular intervals, with the timing based on how often changes happen. This individual information (raw data) must be stored in a big data solution or at least in a data warehouse. Ideally the raw data is kept for a certain time period, depending on the kind of information, so that it is possible to calculate the profiles for past periods as well. This allows learning from past campaigns and simulations of potential behaviour using earlier data to forecast planned campaigns.

10.4.2.2 Identifying segments

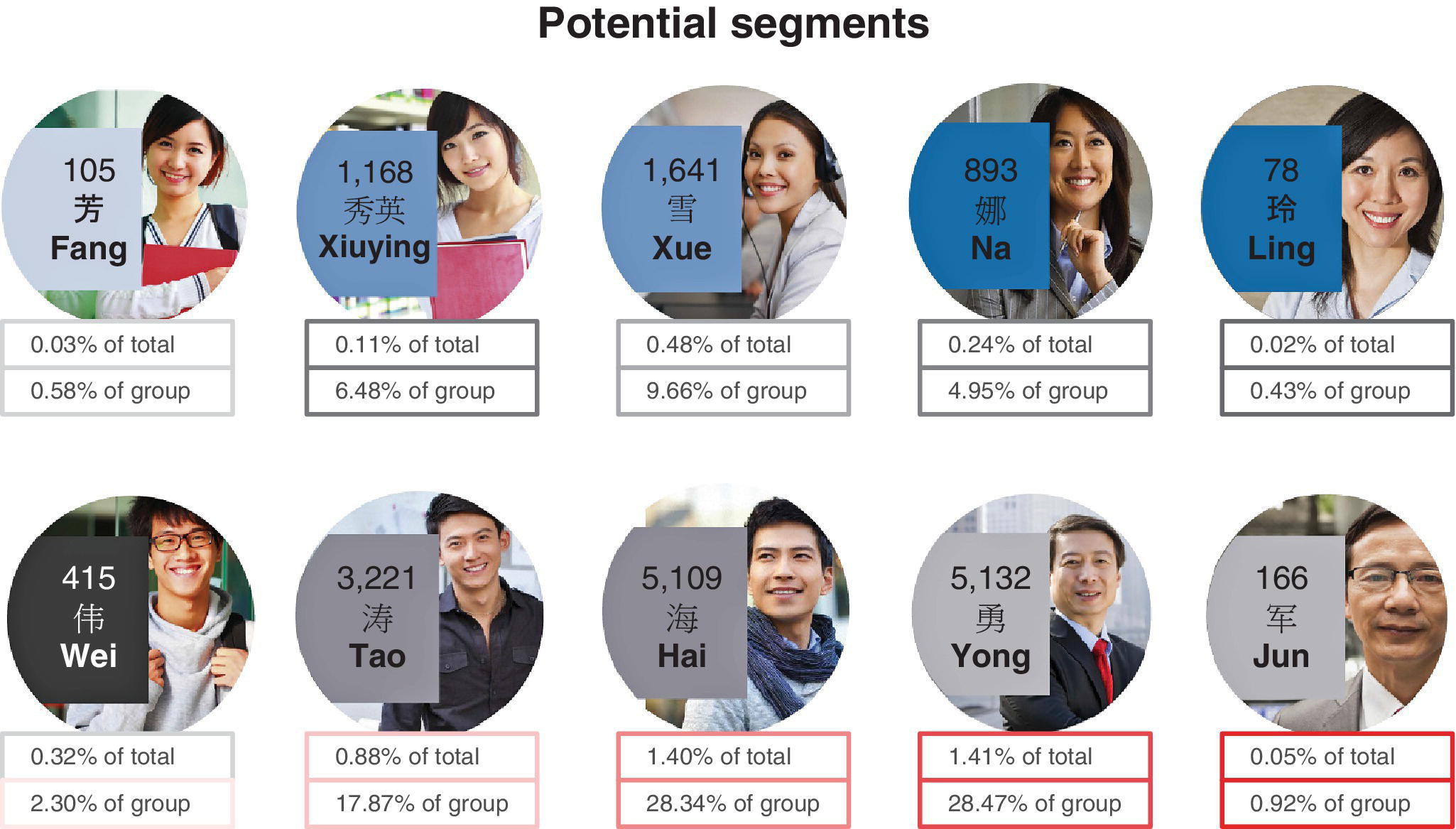

Segments are the dynamic aggregation of homogeneous profiles. Among other things, cluster analysis and predictive models are used to calculate segments. K‐means cluster analysis is preferable due to the size of the data. The number of clusters is taken as 15 in this case study, based on domain pre‐knowledge reinforced by hierarchical clustering on a sample of data. A problem with cluster analysis is that cluster sizes vary enormously. In this case study, there are a number of rather small but very homogeneous clusters, a couple of middle‐sized clusters and one large cluster containing nearly a third of the customer profiles. The small clusters contain customers with particular behaviours; often they are very active with special interests or habits. If there are clusters that contain very few people, it may be better to note these customers and their special needs but leave them out of the cluster analysis.

There is a choice of ways of evaluating the distances between customers based on their data as discussed in the theory chapters above. In this case study, Ward’s distance is used because it gives a reliable and stable clustering.

Segments help to operationalise communication and interaction, taking into account data from various available sources, such as social network data, existing master data management and CRM system data, behavioural data, transactional data and so on. This makes personalized mass communication more flexible, without being overly complex. One communication extreme is mass communication, where everyone in the selection gets the same communication. The other extreme is completely customised, individual communication, for example based on individual profiles. Segments provide an in‐between strategy. Several profiles can belong to a single segment or, if no specific profiles are built, all customers may be divided into several segments. So you might have all 15 segments in a campaign, with segment‐specific creative or tonality for each one. It is especially important that the segments are actionable and customised, and are always kept up to date.

10.4.2.3 Imputation

The next step is to use the segments and profiles to help enhance and update customer data records using information that is found in the records of other profile members, but missing from a particular customer belonging to the same profile.

No database is perfectly accurate and we will always have bad data in our files, whether imputed or not. There is a risk involved in imputing data, but there is also a risk when we do not try to repair the data. The advantage of estimation based on segments or profiles is that the risk of error is lower than with estimates based on the full population.

10.4.2.4 How Profiles and Segments become Intelligent

Profiling and segmentation are not new, although the quantity of data we can use has grown exponentially, so our accuracy has improved. But these practices become really exciting when they are combined with predictive analytics and other techniques as a basis for further analysis. Used in this way, they help in identifying the potential for cross‐selling and upselling, better exhausting geographic opportunities, finding multipliers, influencers and brand ambassadors, optimising communication channels’ mix and content, discovering trends early, reducing waste, counteracting churn risk and more.

Figure 10.11 illustrates how an intelligent profile represents a group of real people (with sparse datasets indicated by empty circles) and how several profiles will build a segment (with more complete data indicated by filled‐in circles). If you decide that each customer will have their own profile, you will need layers. The decision ultimately depends on the type of business, the data collected and the number of customers in the database.

Figure 10.11 Intelligent profiles and segments in B2C.

Cluster analysis results in a segment membership variable being added to each customer in the database. However, new customers are added all the time and existing customers may change their behaviour (and hence their segment). Therefore it is important to generate rules for segment membership. This can be done by predictive modelling. For each segment, the target variable is binary, indicating membership or not of that segment. In this case study, logistic regression is used to establish a model that gives a likelihood for each customer of belonging to that segment. Almost as a by‐product, logistic regression modelling identifies variables that are important in distinguishing segment memberships. These variables are of interest to marketing to help them understand the different segments and to create customised activities.

10.4.3 Monetisation

All the loyalty card information attached to a person is like any other resource; until it is filtered, cleaned, analysed and aggregated, it cannot provide its full effect as a driver for business. Technologies and analyses are therefore not ends in themselves. They are used to provide the best added‐value for customers and businesses.

Customers rightly expect businesses to communicate and interact with them as individually as possible. They expect relevant offers and quickly become irritated by irrelevant advertising that offers no particular benefit. Only those organisations that know their customers and prospects well can build and maintain a mutually profitable relationship.

Individual profiles allow marketing and sales to communicate to customers and prospects one‐to‐one at several touchpoints (see Figure 10.12). Every part of the campaign can be completely personalised to what the customer wants, to where they are in the buying cycle, and more. This, of course, requires an automated framework to compile a modular campaign or the installation of an alert system for trigger‐based campaigns. This level of individualisation can be difficult and expensive, so most companies decide to use segments.

Figure 10.12 Personalised journey.

10.4.4 Key Learning Points and Caveats

Data protection issues need to be understood when working on data‐focused activities such as behavioural profiling. Data protection laws may be more or less restrictive in different countries; some industries function under tight regulations, and some clients are very sensitive about privacy issues. It is true that the world of accessible data has changed and grown to a universe, but usage of parts of that universe is restricted. In particular, whilst it may be acceptable to use personalised data after an agreement has been signed, data collected before such agreement may be restricted.

Regardless of whether customers are addressed individually or in segments, they will be happier and stay longer and the business can reap the benefits in terms of optimised marketing costs. The company invests effort appropriately depending on the customer segments. Note that the segmentation discussed in this case study is about behaviour and interests and the segments will not necessarily align to customer value as defined by customer lifetime analysis or easier concepts such as RFM (recency, frequency and monetary value). The aim is always to lift people to the next loyalty level or avoid them dropping down a level; appropriate behavioural profiling will help.

It is useful to name the segments to help communicate their meaning to management and marketing and the predictive modelling of segment membership yields important variables for this purpose. Note that important segment‐defining variables are also often identified during the cluster analysis process. These variables could differ from those given by predictive modelling and may not be easy to extract from the results given by the data mining software. Important variables can also be explored by observation. For example, if the histograms of age for each segment differ markedly, age is a defining variable.

In this travel case study, the main segments found and the numbers in each segment are:

- active and trendy (12,564)

- gourmet (9, 472)

- one‐time customers (942,909)

- loyal business (1,260)

- regular business (13,209)

- potential business (1,105,325)

- high‐earning regulars (16,338).

Segments could perhaps be recognised by the intelligent observer, but our statistical analysis is able to detect less obvious but nevertheless important segments, such as the gourmet segment and the high‐earning regulars. It also gives much greater detail. For example, regular business customers are characterised by arriving alone, spending one or two days, reserving less than a day before arrival, disproportionately on a Monday, staying for only 80% of the reservations, averaging four stays per year, paying regular rather than discount rates for the room, not spending much at the front desk and spending additionally on food and drinks at about 25% of room rate. This contrasts with loyal business customers who have some similar characteristics but who differ, for example in that they stay eleven days instead of four days per year but only spend 20% of room rate on food and drink.

10.4.5 Skills and Knowledge Needed

Data gathering from many sources requires skills in cleaning, reshaping and storing data and dealing with data ownership issues. Good communication with workers in different parts of the business is needed to access data and then to explain the meaning of the segments. There need to be clear strategic aims for the work, and skill in identifying and defining these is paramount. Knowledge of cluster analysis techniques is needed, as are marketing skills.

10.4.6 Cascading, Wider Relevance

The learning from this case study can cascade to any business that has a loyalty scheme and plans to adapt it for behavioural profiling, with all its benefits.

10.5 Social Media: a Mechanism to Collect and Use Contributor Data

10.5.1 Background and Content

Social media emerged in the early 2000s and more and more players are entering the market every day. It is a highly volatile market with many players also exiting the market. Social media is a generic concept, which is characterised by exchange of self‐created content via the web, including text, music and images in such a way that the social media provider is concerned with creating a platform rather than creating content. The content is sometimes shared between a defined audience, and sometimes spread throughout a wider audience. In most social media, the user decides what personal information is contained in their profile and what parts of their profile are shared by which subsets of the whole community. Creating networks is a major feature, with the implication that the wider your network the more influential you are. Large networks give greater access because they attract more people and so your chances of meeting people you know are higher.

Figure 10.13 illustrates that this kind of network reaches across the world.

Figure 10.13 The reach of social media.

Social media differs from traditional media in terms of its connectivity. Whereas traditional media is broadcast from one point to many people, social media has ‘n to m’ exchange; that is, many people communicating with many people, so that everyone has the opportunity to be both broadcaster and receiver. The advantage of these mobile and web applications is that things happen in near real time. The disadvantage is that there is vigorous feedback both in terms of instant response and lack of quality control. In principle, anyone can say or share anything, although this possibility is limited by state and provider censorship. In addition, the fear of repercussions is an issue.

The time people spend on social media, both reading and interacting, is enormous and is increasing enormously. Most of the social media providers offer a basic free membership and the payback for the provider is obtained through mechanisms such as advertising and selling insight. The user stands to gain in terms of career, reputation and esteem. Company users also gain from reputation as well as brand awareness, recruitment and in some cases from selling products.

This case study concentrates on the social media provider and how they use the data to generate profit.

10.5.2 Methods

In general, the methods are quite straightforward. The social media provider has legal access to all the data generated by the consenting membership. Personal data, such as gender, age, affiliation, education, and contact details, is collected from user profiles. Further data is obtained by tracking users’ activities and learning from their connections and behaviour in subgroups from their user logs. The data needs to be cleaned and standardised. Missing data has to be handled carefully, and most providers will impute additional data based on a combination of the user’s activities and the profiles and activities of their networks. This imputed information is not shown to the user; most users are unaware that this imputation is done. The implications of the imputation are that more detailed profiles of the user are created and can be shared and monetised, for example in advertising.

The captured and imputed information needs to be summarised, categorised and interpreted. Methods for doing this include cluster analysis, which can highlight groupings, and predictive analytics, which enables targeted actions that have a good chance of being successful.

There is a high potential for false information or non‐human followers (robots) and providers must have algorithms in place to predict potential fraud and fakes. These algorithms may include basic checks such as comparing the country of residence of followers to the expected distribution of the item of interest. For example, a local politician would be expected to have followers nearby or related in some way to the locality. So if a politician from the north‐eastern UK has a majority of followers in Asia, the provider should check why this is and if it is reasonable. If there is no obvious connection there will be a suspicion of paid followers and data analysis of these people may give misleading predictions. More complex fraud detection algorithms may also be necessary.

10.5.3 Monetisation

For social media providers there are real financial gains to be made from advertising, especially targeted advertising, and selling insight. Social media users have the opportunity to develop their networks and create business prospects and further their careers, as well as having free entertainment in exchange for their privacy (see Figure 10.14).

Figure 10.14 The power of social media.

Compared to the traditional methods of word of mouth and family and peer group recommendations, social media tries to simulate this function in the digital world using the fact that the social media persona, often in the form of a ‘blog’, takes the place of the real person. The blog clearly cannot give the full picture and may be out of date, incorrect or exaggerated. People writing the blogs often make a living out of promoting products and this is not always obvious to their readers or followers.

The extraordinary reach of social media makes its influence extremely powerful. The power arises from the fabulous combination of extensive range and precise targeting. This precision is only possible because the social media provider can capitalise on the personal data from the user profile as well as the details of their activities and those of their networks. Even if a person does not complete their personal profile, the social network provider will use information from their peer group to estimate values to fill in those that are missing. This is visualised in Figure 10.15.

Figure 10.15 Using peer group behaviour.

Social media providers are some of the few who realised very early on that ‘data is the new oil’, a basic money‐making material. They have great opportunities to capitalise on their data and to develop new products out of it. Their knowledge about their audience enables them to develop a more or less comfortable, all‐encompassing and entertaining environment that keeps the user on their platforms. It also allows them to track their behaviour outside the network by offering a single sign‐on technology, where their social media login works as a key to access other websites and services. Previously the biggest marketing budget went to classic methods of advertisement such TV, billboards and newspapers. Social media data and the subsequent targeting opportunities have a major influence and are instrumental in the shift from taking advertising (and the associated money) from these wider‐reaching but less precise media to the more targeted advertisements possible with social media, which still have the benefit of wide reach. These targeted advertisements are typically customised in terms of content and style, with particular colours, words, and images used that have been identified as appropriate, influential or meaningful for particular individuals. For example, someone classified as homely may be sent images that have been analysed (predicted) to be attractive and to fit the mindset of homely people, for example beautiful garden furniture. The targeted advertisement need not be used exclusively and is usually combined with all other types of marketing.

Another opportunity is for social media to be a starting point for viral marketing campaigns. In these ways, analysing social media data with statistics methods shifts millions of advertising money from classical broadcast and billboard owners to the social media providers who are the owners of the social media networks.

10.5.4 Key Learning Points and Caveats

Social media providers have a self‐selected user base. They have to be aware that their user base is not representative of the whole population. The user profile data may not be up to date; employment, education and interests may change over time and not all users regularly refresh their profiles. It is well known that there is a major potential for fake identities and fake followers: people who are paid to follow particular users. There can also be fake recommendations, criticisms and reviews. Serious social media providers must take account of these issues and have algorithms in place to predict potential fraud and fakes. For example, if 100,000 followers of a certain person join one day, but they are all from a location without any obvious relationship to the person, then this might merit suspicion. The social media providers are very vulnerable to changes in this fast moving market and are well advised to keep a good lookout for impending changes and disruptive advances ahead.

Too much targeting can inhibit innovation. Targeting is essentially based on old data (even if it is only a millisecond old) and can therefore only build on the current situation. It cannot include upcoming ideas or new users with new patterns of behaviour. One way to counteract this problem and to enhance innovation is to send out non‐targeted advertising to a random selection of users from time to time and see what the response is. This can also be used to recalibrate predictive models periodically as appropriate for the application.

10.5.5 Skills and Knowledge Needed

Skills in data handling, including data preparation, are necessary. There also needs to be good communication with business experts to decide on the best ways to detect fraud. Social media providers have to be aware of the limitations of their data and carry out validity checks: for incompleteness, non‐representativeness and bias. Advanced predictive analytics can be carried out on the data but the analysis is only as effective as the quality of the data upon which it is based.

10.5.6 Cascading, Wider Relevance

Other industries can learn from social media how valuable it is to collect and track customer data and the importance of caring about the quality and accuracy of the data. They can also learn from the sea change of advertising focus that has happened in this area and that solid business rules can change in a decade.

10.6 Making a Business out of Boring Statistics

10.6.1 Background and Content

Data visualisation, including infographics, intelligent maps and responsive data manipulation, is one of the great explosions of data usage in recent times and a means of drawing more people into the world of data science. Companies providing these services are satisfying the need for fast, accessible information. They help people navigate through the mass of options for reporting structured data. Data from official statistics, company reports and research are assembled and regrouped for wider purposes. The company typically accesses available data, uses their business acumen to perceive a need and identifies an application. They rearrange the data, annotate it, add associated interesting information, and construct a versatile, accessible and presentable resource from which insight is readily available.

This case study is aimed at showcasing how a business can be formed around published data. There is clearly an opportunity to create a business and make money. Some companies are a more general data access site, and others focus on a particular area such as house purchases and real estate, or job vacancies and the labour market, or the stock exchange. More are emerging all the time. The common key drivers are: saving time, selling insight, taking in data and giving the user a comfortable ride.

10.6.2 Methods

The business opportunity needs to be clearly thought through as it affects not only the data required but also the level of detail required. Once the business opportunity has been identified, the main components of this type of activity are:

- accessible, open, high‐quality, up‐to‐date data from reliable sources

- web‐based platforms where people can interact with the data

- a monetisation mechanism.

After deciding whether to be general or specialised, you need to search for data sources, note their frequency, detail level and reliability, and establish the data‐transfer mechanism. For example, oil prices data can be accessed through national statistics institutes (NSIs) such as Office for National Statistics in the UK, DeStatis – Statistiches Bundesamt in Germany, or Eurostat, which collates statistics from NSIs across the whole of Europe. Figure 10.16 shows the six‐monthly oil prices from January 2000 to July 2017 for petrol, diesel, heating oil and crude oil, prepared by DeStatis – Statistiches Bundesamt.

Figure 10.16 National statistics oil prices.

Other sources are well known private companies with which one has to form partnerships and which one must pay for the information. Published business reports from big companies can also be used. These sources are very reliable and the producers spend a lot of money ensuring high quality. A web search for data related to the specific business area of interest yields a wide variety of information providers.

Businesses make use of these publicly available data and customise them for different purposes in the form of interactive graphics, annotated tables and reports. For example, a search for oil prices reveals amongst others, a company called Statista. A search within Statista shows the list of reports available, some of which are shown in Figure 10.17. Added usability is included by allowing different download options and formats.

Figure 10.17 Example of reports portal

The accessibility of each data source at a suitably detailed level needs to be checked and negotiated. Most official statistics sources release data in aggregated format, for example, mean values for different age groups. This may not be sufficient, but access to the individualised data is usually protected for all but well specified, approved research purposes. This is the reason why people often need to collect their own primary data rather than using secondary sources.

It is vital that the data is easily accessible for clients. As illustrated in Figure 10.18 the data needs to be combined and presented in a meaningful way that is immediately understandable and appealing.

Figure 10.18 Making a business out of boring statistics.

Normally the access would be web‐based and could be in the form of apps or interactive services. The method of monetisation must be decided before devising the platform and its technical specification. For example, if the information is free then it must be possible to lodge advertisements and to capture their effectiveness to earn money from them, for example by click through. If the information is to be paid for this could be by subscription models to different levels of access for corporate or individual users. Alternatively, it could be set up as a bespoke research payment.

10.6.3 Monetisation

Money‐paying customers have to be attracted somehow. Some companies offer a free taster or start off for free to persuade people of the value of the product and create a pool of potential customers who see the comfort and advantages of using the business system. Later these companies start charging. Companies must be creative and innovative to attract customers. Marketing ideas and a sound budget are vital to generate the necessary penetration and reach.

The service provider has the opportunity to collect information from the users to offer targeted advertising to third parties.

10.6.4 Key Learning Points and Caveats

Data and statistical analysis give added value if they are combined and summarised and transferred to a level of complexity that is valuable to users. This added value is the key success factor. The service provider has to be careful because different sources of data and information might have different quality levels in terms of coverage, completeness, currency, the way the data is gathered and how they deal with outliers; unlike official statistics sources, many data sources do not proclaim a level of quality or spend resources to ensure quality.

10.6.5 Skills and Knowledge Needed

The service provider needs IT skills to manipulate and store data and ensure it is current. They need business awareness to know what is likely to be of interest. They need to know how to reach the right people and what level of complexity is appropriate for the presentation of the digested content. Marketing and advertising skills are needed to show why it is worth paying for something the customer can also get for free (albeit with some extra work).

10.6.6 Cascading, Wider Relevance

Offering a summarising service using any kind of official statistics and reports is clearly highly relevant in any sector. Providing graphs, tables and information in a flexible format that is easy to use and can be readily incorporated into further reports will encourage greater use of data.

10.7 Social Media and Web Intelligence Services

10.7.1 Background and Content

The concept explored in this case study is that of generating revenue from publicly available web data, especially social media data. There are two points of view:

- that of businesses obtaining social media information and using it to improve their business, for example by learning what kind of customers are interested in their products and what the customers think of them

- that of a business based on using the information in social media, summarising it into usable statistics and selling it on to customers.

The first viewpoint represents commonsense, to use all possible ways of gaining insight about prospects and customers; the second viewpoint aligns with what has been called ‘web mining’ services. The case study focuses on the second viewpoint, of creating revenue by providing services, as this is part of the new world of big data. The concept of the first viewpoint is a continuation of database marketing and market research and is fundamental to sound business practice.

Note that there are free, open‐source solutions to web scraping and related activities and that SMEs and companies less willing to pay for services are likely to use these. Therefore, any service you develop must have a unique selling point so that third parties are willing to pay for it. They must be confident of getting extra value over and above what they can do themselves.

Generally, there are three main service areas that your web mining based business needs to offer:

- measuring and understanding performance

- providing tools to measure and manage advertising content

- providing tools to measure and manage audiences.

All these areas aim towards enabling your customers to monetise and optimise their return on investment in social media engagement and their web based presence. This includes them being able to monitor and benchmark themselves against their competitors, and detect market opportunities and identify obstacles to their well‐being.

The requirements of your business therefore include:

- delivering up‐to‐date, near real‐time results

- providing early warnings of trends and challenges in the marketplace, including detecting up‐coming issues and crises

- being flexible enough to incorporate customer feedback and new findings and ideas (see Figure 10.19).

Figure 10.19 Right place, right time.

A public health example, is a web based mining service to help understand teenage pregnancy and thereby reduce its prevalence. It does this by capturing social media output including certain words in a local area. It was found that there was a good association between key words ‘pregnancy’ and the names of various music bands. The public health body could try to access teenagers through their attraction to this specific music and disseminate information that would be helpful to them.

10.7.2 Methods

Setting up the business, we need to decide what areas we are interested in and the depth required, so that we know how we are going to handle and obtain permissions. Accessing limited detail from social media feeds is possible through an ordinary personal social media account. We can use R or other programming languages to capture comments being made in real time. You have to use specific application interfaces (APIs), such as those made available by the social media company. These APIs are typically described in developer and service provider guidelines. Different social media sites are more appropriate for different subjects.

It requires considerable effort to extract meaning from comments. The first step is a thematic analysis of what information is important. A business appraisal needs to be carried out, for example to determine key words and sentiments of interest. The comments then need to be dismantled so that the thematic words can be identified. The relevance and effectiveness of the capture mechanism needs to be regularly validated using an agreed set of example comments. This ensures that the right information is being extracted. Buyers of the information will only be impressed if they feel that the current mood and trends of their customers are being successfully secured.

To access more detail of the user profiles, including their demographics and social connectivity, an application has to be made to the social media provider. For example, if we want to know ages and gender of users, we must apply to the social media provider for access to the profiles. Only those users who have indicated willingness to be known will appear and rules and access differ between social networks.

You can also study your customer’s surfing behaviour if you have been able to use cookies to track their behaviour, or access their log files if you have been given permission. Another opportunity is to use robots. These are programs that read through all kinds of websites, including social media, personal and company websites and blogs. Simple robots search for key words or search terms; complex robots use more developed text mining technologies to look, for example, for sentiments.

The social media data has to be summarised and clearly presented, and made available in an accessible way and be easily interrogated. Dashboards are a convenient way of presenting diverse summary data. An example is shown in Figure 10.20. The bars illustrate the different numbers of interactions (postings, likes, shares, and so on) relating to two specific alternative brands on a particular social media platform in five consecutive weeks. It can be seen the number of interactions for the left‐hand column is increasing compared with the right‐hand column. However, the scaled numbers per thousand followers is greater for the right‐hand side showing that these followers are more active and engaged. So both the graphic and the numbers are informative. Such a dashboard should not only give gross numbers but also standardised numbers.

Figure 10.20 Social media information summarised.

It must be possible to generate reports tailored to the customer and in the customer’s corporate style. As well as gathering information about chosen keywords, the system should be capable of detecting emerging associated words and changes in known correlations between terms. More advanced services include dealing with ambiguous terms and enriching data with product and/or location details, creating networks of users, brands and products, and carrying out data analytics.

10.7.3 Monetisation