Saves a database ID field in an object to maintain identity between an in-memory object and a database row.

Relational databases tell one row from another by using key—in particular, the primary key. However, in-memory objects don’t need such a key, as the object system ensures the correct identity under the covers (or in C++’s case with raw memory locations). Reading data from a database is all very well, but in order to write data back you need to tie the database to the in-memory object system.

In essence, Identity Field is mind-numbingly simple. All you do is store the primary key of the relational database table in the object’s fields.

Although the basic notion of Identity Field is very simple, there are oodles of complicated issues that come up.

The first issue is what kind of key to choose in your database. Of course, this isn’t always a choice, since you’re often dealing with an existing database that already has its key structures in place. There’s a lot of discussion and material on this in the database community. Still, mapping to objects does add some concerns to your decision.

The first concern is whether to use meaningful or meaningless keys. A meaningful key is something like the U.S. Social Security number for identifying a person. A meaningless key is essentially a random number the database dreams up that’s never intended for human use. The danger with a meaningful key is that, while in theory they make good keys, in practice they don’t. To work at all, keys need to be unique; to work well, they need to be immutable. While assigned numbers are supposed to be unique and immutable, human error often makes them neither. If you mistype my SSN for my wife’s the resulting record is neither unique nor immutable (assuming you would like to fix the mistake.) The database should detect the uniqueness problem, but it can only do that after my record goes into the system, and of course that might not happen until after the mistake. As a result, meaningful keys should be distrusted. For small systems and/or very stable cases you may get away with it, but usually you should take a rare stand on the side of meaninglessness.

The next concern is simple versus compound keys. A simple key uses only one database field; a compound key uses more than one. The advantage of a compound key is that it’s often easier to use when one table makes sense in the context of another. A good example is orders and line items, where a good key for the line item is a compound of the order number and a sequence number makes a good key for a line item. While compound keys often make sense, there is a lot to be said for the sheer uniformity of simple keys. If you use simple keys everywhere, you can use the same code for all key manipulation. Compound keys require special handling in concrete classes. (With code generation this isn’t a problem). Compound keys also carry a bit of meaning, so be careful about the uniqueness and particularly the immutability rule with them.

You have to choose the type of the key. The most common operation you’ll do with a key is equality checking, so you want a type with a fast equality operation. The other important operation is getting the next key. Hence a long integer type is often the best bet. Strings can also work, but equality checking may be slower and incrementing strings is a bit more painful. Your DBA’s preferences may well decide the issue.

(Beware about using dates or times in keys. Not only are they meaningful, they also lead to problems with portability and consistency. Dates in particular are vulnerable to this because they are often stored to some fractional second precision, which can easily get out of sync and lead to identity problems.)

You can have keys that are unique to the table or unique database-wide. A table-unique key is unique across the table, which is what you need for a key in any case. A database-unique key is unique across every row in every table in the database. A table-unique key is usually fine, but a database-unique key is often easier to do and allows you to use a single Identity Map (195). Modern values being what they are, it’s pretty unlikely that you’ll run out of numbers for new keys. If you really insist, you can reclaim keys from deleted objects with a simple database script that compacts the key space—although running this script will require that you take the application offline. However, if you use 64-bit keys (and you might as well) you’re unlikely to need this.

Be wary of inheritance when you use table-unique keys. If you’re using Concrete Table Inheritance (293) or Class Table Inheritance (285), life is much easier with keys that are unique to the hierarchy rather than unique to each table. I still use the term “table-unique,” even if it should strictly be something like “inheritance graph unique.”

The size of your key may effect performance, particularly with indexes. This is dependent on your database system and/or how many rows you have, but it’s worth doing a crude check before you get fixed into your decision.

The simplest form of Identity Field is a field that matches the type of the key in the database. Thus, if you use a simple integral key, an integral field will work very nicely.

Compound keys are more problematic. The best bet with them is to make a key class. A generic key class can store a sequence of objects that act as the elements of the key. The key behavior for the key object (I have a quota of puns per book to fill) is equality. It’s also useful to get parts of the key when you’re mapping to the database.

If you use the same basic structure for all keys, you can do all of the key handling in a Layer Supertype (475). You can put default behavior that will work for most cases in the Layer Supertype (475) and extend it for the exceptional cases in the particular subtypes.

You can have either a single key class, which takes a generic list of key objects, or key class for each domain class with explicit fields for each part of the key. I usually prefer to be explicit, but in this case I’m not sure it buys very much. You end up with lots of small classes that don’t do anything interesting. The main benefit is that you can avoid errors caused by users putting the elements of the key in the wrong order, but that doesn’t seem to be a big problem in practice.

If you’re likely to import data between different database instances, you need to remember that you’ll get key collisions unless you come up with some scheme to separate the keys between different databases. You can solve this with some kind of key migration on the imports, but this can easily get very messy.

To create an object, you’ll need a key. This sounds like a simple matter, but it can often be quite a problem. You have three basic choices: get the database to auto-generate, use a GUID, or generate your own.

The auto-generate route should be the easiest. Each time you insert data for the database, the database generates a unique primary key without you having to do anything. It sounds too good to be true, and sadly it often is. Not all databases do this the same way. Many that do, handle it in such a way that causes problems for object-relational mapping.

The most common auto-generation method is declaring one auto-generated field, which, whenever you insert a row, is incremented to a new value. The problem with this scheme is that you can’t easily determine what value got generated as the key. If you want to insert an order and several line items, you need the key of the new order so you can put the value in the line item’s foreign key. Also, you need this key before the transaction commits so you can save everything within the transaction. Sadly, databases usually don’t give you this information, so you usually can’t use this kind of auto-generation on any table in which you need to insert connected objects.

An alternative approach to auto-generation is a database counter, which Oracle uses with its sequence. An Oracle sequence works by sending a select statement that references a sequence; the database then returns an SQL record set consisting of the next sequence value. You can set a sequence to increment by any integer, which allows you to get multiple keys at once. The sequence query is automatically carried out in a separate transaction, so that accessing the sequence won’t lock out other transactions inserting at the same time. A database counter like this is perfect for our needs, but it’s nonstandard and not available in all databases.

A GUID (Globally Unique IDentifier) is a number generated on one machine that’s guaranteed to be unique across all machines in space and time. Often platforms give you the API to generate a GUID. The algorithm is an interesting one involving ethernet card addresses, time of the day in nanoseconds, chip ID numbers, and probably the number of hairs on your left wrist. All that matters is that the resulting number is completely unique and thus a safe key. The only disadvantage to a GUID is that the resulting key string is big, and that can be an equally big problem. There are always times when someone needs to type in a key to a window or SQL expression, and long keys are hard both to type and to read. They may also lead to performance problems, particularly with indexes.

The last option is rolling your own. A simple staple for small systems is to use a table scan using the SQL max function to find the largest key in the table and then add one to use it. Sadly, this read-locks the entire table while you’re doing it, which means that it works fine if inserts are rare, but your performance will be toasted if you have inserts running concurrently with updates on the same table. You also have to ensure you have complete isolation between transactions; otherwise, you can end up with multiple transactions getting the same ID value.

A better approach is to use a separate key table. This table is typically one with two columns: name and next available value. If you use database-unique keys, you’ll have just one row in this table. If you use table-unique keys, you’ll have one row for each table in the database. To use the key table, all you need to do is read that one row and note the number, the increment, the number and write it back to the row. You can grab many keys at a time by adding a suitable number when you update the key table. This cuts down on expensive database calls and reduces contention on the key table.

If you use a key table, it’s a good idea to design it so that access to it is in a separate transaction from the one that updates the table you’re inserting into. Say I’m inserting an order into the orders table. To do this I’ll need to lock the orders row on the key table with a write lock (since I’m updating). That lock will last for the entire transaction that I’m in, locking out anyone else who wants a key. For table-unique keys, this means anyone inserting into the orders table; for database-unique keys it means anyone inserting anywhere.

By putting access to the key table in a separate transaction, you only lock the row for that, much shorter, transaction. The downside is that, if you roll back on your insert to the orders, the key you got from the key table is lost to everyone. Fortunately, numbers are cheap, so that’s not a big issue. Using a separate transaction also allows you to get the ID as soon as you create the in-memory object, which is often some before you open the transaction to commit the business transaction.

Using a key table affects the choice of database-unique or table-unique keys. If you use a table-unique key, you have to add a row to the key table every time you add a table to the database. This is more effort, but it reduces contention on the row. If you keep your key table accesses in a different transaction, contention is not so much of a problem, especially if you get multiple keys in a single call. But if you can’t arrange for the key table update to be in a separate transaction, you have a strong reason against database-unique keys.

It’s good to separate the code for getting a new key into its own class, as that makes it easier to build a Service Stub (504) for testing purposes.

Use Identity Field when there’s a mapping between objects in memory and rows in a database. This is usually when you use Domain Model (116) or Row Data Gateway (152). You don’t need this mapping if you’re using Transaction Script (110), Table Module (125), or Table Data Gateway (144).

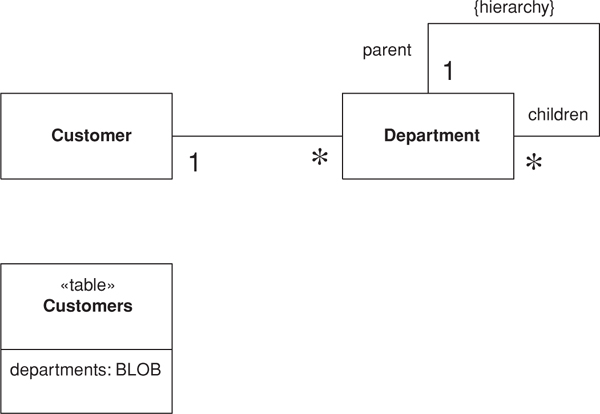

For a small object with value semantics, such as a money or date range object that won’t have its own table, it’s better to use Embedded Value (268). For a complex graph of objects that doesn’t need to be queried within the relational database, Serialized LOB (272) is usually easier to write and gives faster performance.

One alternative to Identity Field is to extend Identity Map (195) to maintain the correspondence. This can be used for systems where you don’t want to store an Identity Field in the in-memory object. Identity Map (195) needs to look up both ways: give me a key for an object or an object for a key. I don’t see this very often because usually it’s easier to store the key in the object.

[Marinescu] discusses several techniques for generating keys.

The simplest form of Identity Field is a integral field in the database that maps to an integral field in an in-memory object.

class DomainObject...

public const long PLACEHOLDER_ID = -1;

public long Id = PLACEHOLDER_ID;

public Boolean isNew() {return Id == PLACEHOLDER_ID;}

An object that’s been created in memory but not saved to the database will not have a value for its key. For a .NET value object this is a problem since .NET values cannot be null. Hence, the placeholder value.

The key becomes important in two places: finding and inserting. For finding you need to form a query using a key in a where clause. In .NET you can load many rows into a data set and then select a particular one with a find operation.

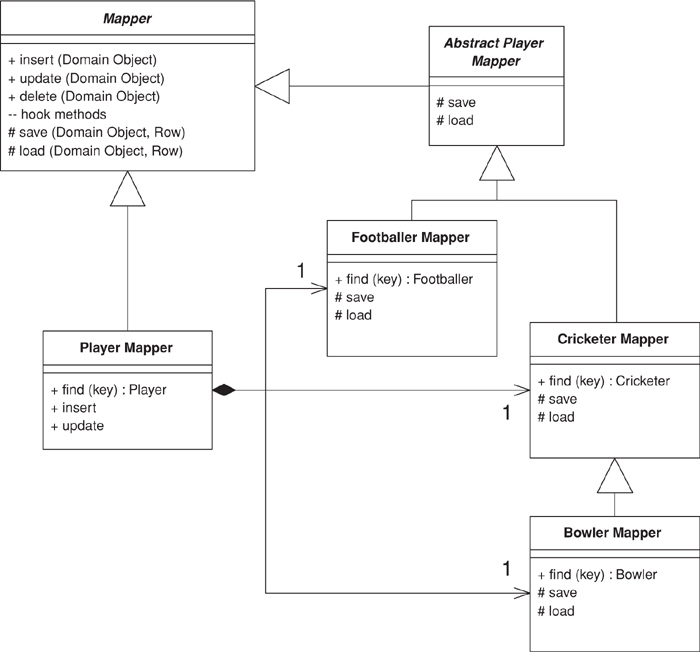

class CricketerMapper...

public Cricketer Find(long id) {

return (Cricketer) AbstractFind(id);

}

class Mapper...

protected DomainObject AbstractFind(long id) {

DataRow row = FindRow(id);

return (row == null) ? null : Find(row);

}

protected DataRow FindRow(long id) {

String filter = String.Format("id = {0}", id);

DataRow[] results = table.Select(filter);

return (results.Length == 0) ? null : results[0];

}

public DomainObject Find (DataRow row) {

DomainObject result = CreateDomainObject();

Load(result, row);

return result;

}

abstract protected DomainObject CreateDomainObject();

Most of this behavior can live on the Layer Supertype (475), but you’ll often need to define the find on the concrete class just to encapsulate the downcast. Naturally, you can avoid this in a language that doesn’t use compile-time typing.

With a simple integral Identity Field the insertion behavior can also be held at the Layer Supertype (475).

class Mapper...

public virtual long Insert (DomainObject arg) {

DataRow row = table.NewRow();

arg.Id = GetNextID();

row["id"] = arg.Id;

Save (arg, row);

table.Rows.Add(row);

return arg.Id;

}

Essentially insertion involves creating the new row and using the next key for it. Once you have it you can save the in-memory object’s data to this new row.

If your database supports a database counter and you’re not worried about being dependent on database-specific SQL, you should use the counter. Even if you’re worried about being dependent on a database you should still consider it—as long as your key generation code is nicely encapsulated, you can always change it to a portable algorithm later. You could even have a strategy [Gang of Four] to use counters when you have them and roll your own when you don’t.

For the moment let’s assume that we have to do this the hard way. The first thing we need is a key table in the database.

CREATE TABLE keys (name varchar primary key, nextID int)

INSERT INTO keys VALUES ('orders', 1)

This table contains one row for each counter that’s in the database. In this case we’ve initialized the key to 1. If you’re preloading data in the database, you’ll need to set the counter to a suitable number. If you want database-unique keys, you’ll only need one row, if you want table-unique keys, you’ll need one row per table.

You can wrap all of your key generation code into its own class. That way it’s easier to use it more widely around one or more applications and it’s easier to put key reservation into its own transaction.

We construct a key generator with its own database connection, together with information on how many keys to take from the database at one time.

class KeyGenerator...

private Connection conn;

private String keyName;

private long nextId;

private long maxId;

private int incrementBy;

public KeyGenerator(Connection conn, String keyName, int incrementBy) {

this.conn = conn;

this.keyName = keyName;

this.incrementBy = incrementBy;

nextId = maxId = 0;

try {

conn.setAutoCommit(false);

} catch(SQLException exc) {

throw new ApplicationException("Unable to turn off autocommit", exc);

}

}

We need to ensure that no auto-commit is going on since we absolutely must have the select and update operating in one transaction.

When we ask for a new key, the generator looks to see if it has one cached rather than go to the database.

class KeyGenerator...

public synchronized Long nextKey() {

if (nextId == maxId) {

reserveIds();

}

return new Long(nextId++);

}

If the generator hasn’t got one cached, it needs to go to the database.

class KeyGenerator...

private void reserveIds() {

PreparedStatement stmt = null;

ResultSet rs = null;

long newNextId;

try {

stmt = conn.prepareStatement("SELECT nextID FROM keys WHERE name = ? FOR UPDATE");

stmt.setString(1, keyName);

rs = stmt.executeQuery();

rs.next();

newNextId = rs.getLong(1);

}

catch (SQLException exc) {

throw new ApplicationException("Unable to generate ids", exc);

}

finally {

DB.cleanUp(stmt, rs);

}

long newMaxId = newNextId + incrementBy;

stmt = null;

try {

stmt = conn.prepareStatement("UPDATE keys SET nextID = ? WHERE name = ?");

stmt.setLong(1, newMaxId);

stmt.setString(2, keyName);

stmt.executeUpdate();

conn.commit();

nextId = newNextId;

maxId = newMaxId;

}

catch (SQLException exc) {

throw new ApplicationException("Unable to generate ids", exc);

}

finally {

DB.cleanUp(stmt);

}

}

In this case we use SELECT... FOR UPDATE to tell the database to hold a write lock on the key table. This is an Oracle-specific statement, so your mileage will vary if you’re using something else. If you can’t write-lock on the select, you run the risk of the transaction failing should another one get in there before you. In this case, however, you can pretty safely just rerun reserveIds until you get a pristine set of keys.

Using a simple integral key is a good, simple solution, but you often need other types or compound keys.

As soon as you need something else it’s worth putting together a key class. A key class needs to be able to store multiple elements of the key and to be able to tell if two keys are equal.

class Key...

private Object[] fields;

public boolean equals(Object obj) {

if (!(obj instanceof Key)) return false;

Key otherKey = (Key) obj;

if (this.fields.length != otherKey.fields.length) return false;

for (int i = 0; i < fields.length; i++)

if (!this.fields[i].equals(otherKey.fields[i])) return false;

return true;

}

The most elemental way to create a key is with an array parameter.

class Key...

public Key(Object[] fields) {

checkKeyNotNull(fields);

this.fields = fields;

}

private void checkKeyNotNull(Object[] fields) {

if (fields == null) throw new IllegalArgumentException("Cannot have a null key");

for (int i = 0; i < fields.length; i++)

if (fields[i] == null)

throw new IllegalArgumentException("Cannot have a null element of key");

}

If you find you commonly create keys with certain elements, you can add convenience constructors. The exact ones will depend on what kinds of keys your application has.

class Key...

public Key(long arg) {

this.fields = new Object[1];

this.fields[0] = new Long(arg);

}

public Key(Object field) {

if (field == null) throw new IllegalArgumentException("Cannot have a null key");

this.fields = new Object[1];

this.fields[0] = field;

}

public Key(Object arg1, Object arg2) {

this.fields = new Object[2];

this.fields[0] = arg1;

this.fields[1] = arg2;

checkKeyNotNull(fields);

}

Don’t be afraid to add these convenience methods. After all, convenience is important to everyone using the keys.

Similarly you can add accessor functions to get parts of keys. The application will need to do this for the mappings.

class Key...

public Object value(int i) {

return fields[i];

}

public Object value() {

checkSingleKey();

return fields[0];

}

private void checkSingleKey() {

if (fields.length > 1)

throw new IllegalStateException("Cannot take value on composite key");

}

public long longValue() {

checkSingleKey();

return longValue(0);

}

public long longValue(int i) {

if (!(fields[i] instanceof Long))

throw new IllegalStateException("Cannot take longValue on non long key");

return ((Long) fields[i]).longValue();

}

In this example we’ll map to an order and line item tables. The order table has a simple integral primary key, the line item table’s primary key is a compound of the order’s primary key and a sequence number.

CREATE TABLE orders (ID int primary key, customer varchar)

CREATE TABLE line_items (orderID int, seq int, amount int, product varchar,

primary key (orderID, seq))

The Layer Supertype (475) for domain objects needs to have a key field.

class DomainObjectWithKey...

private Key key;

protected DomainObjectWithKey(Key ID) {

this.key = ID;

}

protected DomainObjectWithKey() {

}

public Key getKey() {

return key;

}

public void setKey(Key key) {

this.key = key;

}

As with other examples in this book I’ve split the behavior into find (which gets to the right row in the database) and load (which loads data from that row into the domain object). Both responsibilities are affected by the use of a key object.

The primary difference between these and the other examples in this book (which use simple integral keys) is that we have to factor out certain pieces of behavior that are overridden by classes that have more complex keys. For this example I’m assuming that most tables use simple integral keys. However, some use something else, so I’ve made the default case the simple integral and have embedded the behavior for it the mapper Layer Supertype (475). The order class is one of those simple cases. Here’s the code for the find behavior:

class OrderMapper...

public Order find(Key key) {

return (Order) abstractFind(key);

}

public Order find(Long id) {

return find(new Key(id));

}

protected String findStatementString() {

return "SELECT id, customer from orders WHERE id = ?";

}

class AbstractMapper...

abstract protected String findStatementString();

protected Map loadedMap = new HashMap();

public DomainObjectWithKey abstractFind(Key key) {

DomainObjectWithKey result = (DomainObjectWithKey) loadedMap.get(key);

if (result != null) return result;

ResultSet rs = null;

PreparedStatement findStatement = null;

try {

findStatement = DB.prepare(findStatementString());

loadFindStatement(key, findStatement);

rs = findStatement.executeQuery();

rs.next();

if (rs.isAfterLast()) return null;

result = load(rs);

return result;

} catch (SQLException e) {

throw new ApplicationException(e);

} finally {

DB.cleanUp(findStatement, rs);

}

}

// hook method for keys that aren't simple integral

protected void loadFindStatement(Key key, PreparedStatement finder) throws SQLException {

finder.setLong(1, key.longValue());

}

I’ve extracted out the building of the find statement, since that requires different parameters to be passed into the prepared statement. The line item is a compound key, so it needs to override that method.

class LineItemMapper...

public LineItem find(long orderID, long seq) {

Key key = new Key(new Long(orderID), new Long(seq));

return (LineItem) abstractFind(key);

}

public LineItem find(Key key) {

return (LineItem) abstractFind(key);

}

protected String findStatementString() {

return

"SELECT orderID, seq, amount, product " +

" FROM line_items " +

" WHERE (orderID = ?) AND (seq = ?)";

}

// hook methods overridden for the composite key

protected void loadFindStatement(Key key, PreparedStatement finder) throws SQLException {

finder.setLong(1, orderID(key));

finder.setLong(2, sequenceNumber(key));

}

//helpers to extract appropriate values from line item's key

private static long orderID(Key key) {

return key.longValue(0);

}

private static long sequenceNumber(Key key) {

return key.longValue(1);

}

As well as defining the interface for the find methods and providing an SQL string for the find statement, the subclass needs to override the hook method to allow two parameters to go into the SQL statement. I’ve also written two helper methods to extract the parts of the key information. This makes for clearer code than I would get by just putting explicit accessors with numeric indices from the key. Such literal indices are a bad smell.

The load behavior shows a similar structure—default behavior in the Layer Supertype (475) for simple integral keys, overridden for the more complex cases. In this case the order’s load behavior looks like this:

class AbstractMapper...

protected DomainObjectWithKey load(ResultSet rs) throws SQLException {

Key key = createKey(rs);

if (loadedMap.containsKey(key)) return (DomainObjectWithKey) loadedMap.get(key);

DomainObjectWithKey result = doLoad(key, rs);

loadedMap.put(key, result);

return result;

}

abstract protected DomainObjectWithKey doLoad(Key id, ResultSet rs) throws SQLException;

// hook method for keys that aren't simple integral

protected Key createKey(ResultSet rs) throws SQLException {

return new Key(rs.getLong(1));

}

class OrderMapper...

protected DomainObjectWithKey doLoad(Key key, ResultSet rs) throws SQLException {

String customer = rs.getString("customer");

Order result = new Order(key, customer);

MapperRegistry.lineItem().loadAllLineItemsFor(result);

return result;

}

The line item needs to override the hook to create a key based on two fields.

class LineItemMapper...

protected DomainObjectWithKey doLoad(Key key, ResultSet rs) throws SQLException {

Order theOrder = MapperRegistry.order().find(orderID(key));

return doLoad(key, rs, theOrder);

}

protected DomainObjectWithKey doLoad(Key key, ResultSet rs, Order order)

throws SQLException

{

LineItem result;

int amount = rs.getInt("amount");

String product = rs.getString("product");

result = new LineItem(key, amount, product);

order.addLineItem(result);//links to the order

return result;

}

//overrides the default case

protected Key createKey(ResultSet rs) throws SQLException {

Key key = new Key(new Long(rs.getLong("orderID")), new Long(rs.getLong("seq")));

return key;

}

The line item also has a separate load method for use when loading all the lines for the order.

class LineItemMapper...

public void loadAllLineItemsFor(Order arg) {

PreparedStatement stmt = null;

ResultSet rs = null;

try {

stmt = DB.prepare(findForOrderString);

stmt.setLong(1, arg.getKey().longValue());

rs = stmt.executeQuery();

while (rs.next())

load(rs, arg);

} catch (SQLException e) {

throw new ApplicationException(e);

} finally {DB.cleanUp(stmt, rs);

}

}

private final static String findForOrderString =

"SELECT orderID, seq, amount, product " +

"FROM line_items " +

"WHERE orderID = ?";

protected DomainObjectWithKey load(ResultSet rs, Order order) throws SQLException {

Key key = createKey(rs);

if (loadedMap.containsKey(key)) return (DomainObjectWithKey) loadedMap.get(key);

DomainObjectWithKey result = doLoad(key, rs, order);

loadedMap.put(key, result);

return result;

}

You need the special handling because the order object isn’t put into the order’s Identity Map (195) until after it’s created. Creating an empty object and inserting it directly into the Identity Field would avoid the need for this (page 169).

Like reading, inserting has a default action for a simple integral key and the hooks to override this for more interesting keys. In the mapper supertype I’ve provided an operation to act as the interface, together with a template method to do the work of the insertion.

class AbstractMapper...

public Key insert(DomainObjectWithKey subject) {

try {

return performInsert(subject, findNextDatabaseKeyObject());

} catch (SQLException e) {

throw new ApplicationException(e);

}

}

protected Key performInsert(DomainObjectWithKey subject, Key key) throws SQLException {

subject.setKey(key);

PreparedStatement stmt = DB.prepare(insertStatementString());

insertKey(subject, stmt);

insertData(subject, stmt);

stmt.execute();

loadedMap.put(subject.getKey(), subject);

return subject.getKey();

}

abstract protected String insertStatementString();

class OrderMapper...

protected String insertStatementString() {

return "INSERT INTO orders VALUES(?,?)";

}

The data from the object goes into the insert statement through two methods that separate the data of the key from the basic data of the object. I do this because I can provide a default implementation for the key that will work for any class, like order, that uses the default simple integral key.

class AbstractMapper...

protected void insertKey(DomainObjectWithKey subject, PreparedStatement stmt)

throws SQLException

{

stmt.setLong(1, subject.getKey().longValue());

}

The rest of the data for the insert statement is dependent on the particular subclass, so this behavior is abstract on the superclass.

class AbstractMapper...

abstract protected void insertData(DomainObjectWithKey subject, PreparedStatement stmt)

throws SQLException;

class OrderMapper...

protected void insertData(DomainObjectWithKey abstractSubject, PreparedStatement stmt) {

try {

Order subject = (Order) abstractSubject;

stmt.setString(2, subject.getCustomer());

} catch (SQLException e) {

throw new ApplicationException(e);

}

}

The line item overrides both of these methods. It pulls two values out for key.

class LineItemMapper...

protected String insertStatementString() {

return "INSERT INTO line_items VALUES (?, ?, ?, ?)";

}

protected void insertKey(DomainObjectWithKey subject, PreparedStatement stmt)

throws SQLException

{

stmt.setLong(1, orderID(subject.getKey()));

stmt.setLong(2, sequenceNumber(subject.getKey()));

}

It also provides its own implementation of the insert statement for the rest of the data.

class LineItemMapper...

protected void insertData(DomainObjectWithKey subject, PreparedStatement stmt)

throws SQLException

{

LineItem item = (LineItem) subject;

stmt.setInt(3, item.getAmount());

stmt.setString(4, item.getProduct());

}

Putting the data loading into the insert statement like this is only worthwhile if most classes use the same single field for the key. If there’s more variation for the key handling, then having just one command to insert the information is probably easier.

Coming up with the next database key is also something that I can separate into a default and an overridden case. For the default case I can use the key table scheme that I talked about earlier. But for the line item we run into a problem. The line item’s key uses the key of the order as part of its composite key. However, there’s no reference from the line item class to the order class, so it’s impossible to tell a line item to insert itself into the database without providing the correct order as well. This leads to the always messy approach of implementing the superclass method with an unsupported operation exception.

class LineItemMapper...

public Key insert(DomainObjectWithKey subject) {

throw new UnsupportedOperationException

("Must supply an order when inserting a line item");

}

public Key insert(LineItem item, Order order) {

try {

Key key = new Key(order.getKey().value(), getNextSequenceNumber(order));

return performInsert(item, key);

} catch (SQLException e) {

throw new ApplicationException(e);

}

}

Of course, we can avoid this by having a back link from the line item to the order, effectively making the association between the two bidirectional. I’ve chosen not to do it here to illustrate what to do when you don’t have that link.

By supplying the order, it’s easy to get the order’s part of the key. The next problem is to come up with a sequence number for the order line. To find that number, we need to find out what the next available sequence number is for an order, which we can do either with a max query in SQL or by looking at the line items on the order in memory. For this example I’ll do the latter.

class LineItemMapper...

private Long getNextSequenceNumber(Order order) {

loadAllLineItemsFor(order);

Iterator it = order.getItems().iterator();

LineItem candidate = (LineItem) it.next();

while (it.hasNext()) {

LineItem thisItem = (LineItem) it.next();

if (thisItem.getKey() == null) continue;

if (sequenceNumber(thisItem) > sequenceNumber(candidate)) candidate = thisItem;

}

return new Long(sequenceNumber(candidate) + 1);

}

private static long sequenceNumber(LineItem li) {

return sequenceNumber(li.getKey());

}

//comparator doesn't work well here due to unsaved null keys

protected String keyTableRow() {

throw new UnsupportedOperationException();

}

This algorithm would be much nicer if I used the Collections.max method, but since we may (and indeed will) have at least one null key, that method would fail.

After all of that, updates and deletes are mostly harmless. Again we have an abstract method for the assumed usual case and an override for the special cases.

Updates work like this:

class AbstractMapper...

public void update(DomainObjectWithKey subject) {

PreparedStatement stmt = null;

try {

stmt = DB.prepare(updateStatementString());

loadUpdateStatement(subject, stmt);

stmt.execute();

} catch (SQLException e) {

throw new ApplicationException(e);

} finally {

DB.cleanUp(stmt);

}

}

abstract protected String updateStatementString();

abstract protected void loadUpdateStatement(DomainObjectWithKey subject,

PreparedStatement stmt)

throws SQLException;

class OrderMapper...

protected void loadUpdateStatement(DomainObjectWithKey subject, PreparedStatement stmt)

throws SQLException

{

Order order = (Order) subject;

stmt.setString(1, order.getCustomer());

stmt.setLong(2, order.getKey().longValue());

}

protected String updateStatementString() {

return "UPDATE orders SET customer = ? WHERE id = ?";

}

class LineItemMapper...

protected String updateStatementString() {

return

"UPDATE line_items " +

" SET amount = ?, product = ? " +

" WHERE orderId = ? AND seq = ?";

}

protected void loadUpdateStatement(DomainObjectWithKey subject, PreparedStatement stmt)

throws SQLException

{

stmt.setLong(3, orderID(subject.getKey()));

stmt.setLong(4, sequenceNumber(subject.getKey()));

LineItem li = (LineItem) subject;

stmt.setInt(1, li.getAmount());

stmt.setString(2, li.getProduct());

}

Deletes work like this:

class AbstractMapper...

public void delete(DomainObjectWithKey subject) {

PreparedStatement stmt = null;

try {

stmt = DB.prepare(deleteStatementString());

loadDeleteStatement(subject, stmt);

stmt.execute();

} catch (SQLException e) {

throw new ApplicationException(e);

} finally {

DB.cleanUp(stmt);

}

}

abstract protected String deleteStatementString();

protected void loadDeleteStatement(DomainObjectWithKey subject, PreparedStatement stmt)

throws SQLException

{

stmt.setLong(1, subject.getKey().longValue());

}

class OrderMapper...

protected String deleteStatementString() {

return "DELETE FROM orders WHERE id = ?";

}

class LineItemMapper...

protected String deleteStatementString() {

return "DELETE FROM line_items WHERE orderid = ? AND seq = ?";

}

protected void loadDeleteStatement(DomainObjectWithKey subject, PreparedStatement stmt)

throws SQLException

{

stmt.setLong(1, orderID(subject.getKey()));

stmt.setLong(2, sequenceNumber(subject.getKey()));

}

Maps an association between objects to a foreign key reference between tables.

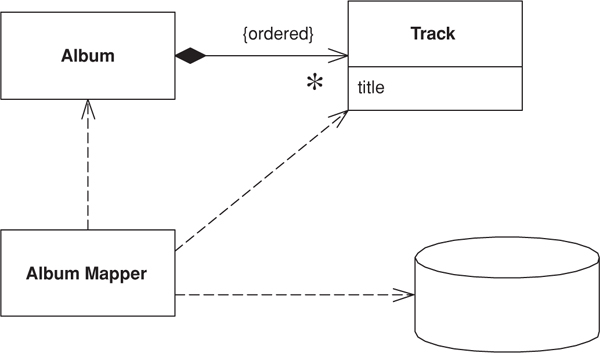

Objects can refer to each other directly by object references. Even the simplest object-oriented system will contain a bevy of objects connected to each other in all sorts of interesting ways. To save these objects to a database, it’s vital to save these references. However, since the data in them is specific to the specific instance of the running program, you can’t just save raw data values. Further complicating things is the fact that objects can easily hold collections of references to other objects. Such a structure violates the first normal form of relational databases.

A Foreign Key Mapping maps an object reference to a foreign key in the database.

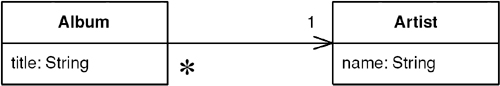

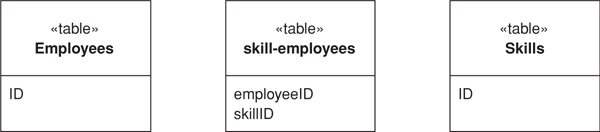

The obvious key to this problem is Identity Field (216). Each object contains the database key from the appropriate database table. If two objects are linked together with an association, this association can be replaced by a foreign key in the database. Put simply, when you save an album to the database, you save the ID of the artist that the album is linked to in the album record, as in Figure 12.1.

Figure 12.1. Mapping a collection to a foreign key.

That’s the simple case. A more complicated case turns up when you have a collection of objects. You can’t save a collection in the database, so you have to reverse the direction of the reference. Thus, if you have a collection of tracks in the album, you put the foreign key of the album in the track record, as in Figures 12.2 and 12.3. The complication occurs when you have an update. Updating implies that tracks can be added to and removed from the collection within an album. How can you tell what alterations to put in the database? Essentially you have three options: (1) delete and insert, (2) add a back pointer, and (3) diff the collection.

Figure 12.2. Mapping a collection to a foreign key.

Figure 12.3. Classes and tables for a multivalued reference.

With delete and insert you delete all the tracks in the database that link to the album, and then insert all the ones currently on the album. At first glance this sounds pretty appalling, especially if you haven’t changed any tracks. But the logic is easy to implement and as such it works pretty well compared to the alternatives. The drawback is that you can only do this if tracks are Dependent Mappings (262), which means they must be owned by the album and can’t be referred to outside it.

Adding a back pointer puts a link from the track back to the album, effectively making the association bidirectional. This changes the object model, but now you can handle the update using the simple technique for single-valued fields on the other side.

If neither of those appeals, you can do a diff. There are two possibilities here: diff with the current state of the database or diff with what you read the first time. Diffing with the database involves rereading the collection back from the database and then comparing the collection you read with the collection in the album. Anything in the database that isn’t in the album was clearly removed; anything in the album that isn’t on the disk is clearly a new item to be added. Then look at the logic of the application to decide what to do with each item.

Diffing with what you read in the first place means that you have to keep what you read. This is better as it avoids another database read. You may also need to diff with the database if you’re using Optimistic Offline Lock (416).

In the general case anything that’s added to the collection needs to be checked first to see if it’s a new object. You can do this by seeing if it has a key; if it doesn’t, one needs to be added to the database. This step is made a lot easier with Unit of Work (184) because that way any new object will be automatically inserted first. In either case you then find the linked row in the database and update its foreign key to point to the current album.

For removal you have to know whether the track was moved to another album, has no album, or has been deleted altogether. If it’s been moved to another album it should be updated when you update that other album. If it has no album, you need to null the foreign key. If the track was deleted, then it should be deleted when things get deleted. Handling deletes is much easier if the back link is mandatory, as it is here, where every track must be on an album. That way you don’t have to worry about detecting items removed from the collection since they will be updated when you process the album they’ve been added to.

If the link is immutable, meaning that you can’t change a track’s album, then adding always means insertion and removing always means deletion. This makes things simpler still.

One thing to watch out for is cycles in your links. Say you need to load an order, which has a link to a customer (which you load). The customer has a set of payments (which you load), and each payment has orders that it’s paying for, which might include the original order you’re trying to load. Therefore, you load the order (now go back to the beginning of this paragraph.)

To avoid getting lost in cycles you have two choices that boil down to how you create your objects. Usually it’s a good idea for a creation method to include data that will give you a fully formed object. If you do that, you’ll need to place Lazy Load (200) at appropriate points to break the cycles. If you miss one, you’ll get a stack overflow, but if you’re testing is good enough you can manage that burden.

The other choice is to create empty objects and immediately put them in an Identity Map (195). That way, when you cycle back around, the object is already loaded and you’ll end the cycle. The objects you create aren’t fully formed, but they should be by the end of the load procedure. This avoids having to make special case decisions about the use of Lazy Load (200) just to do a correct load.

A Foreign Key Mapping can be used for almost all associations between classes. The most common case where it isn’t possible is with many-to-many associations. Foreign keys are single values, and first normal form means that you can’t store multiple foreign keys in a single field. Instead you need to use Association Table Mapping (248).

If you have a collection field with no back pointer, you should consider whether the many side should be a Dependent Mapping (262). If so, it can simplify your handling of the collection.

If the related object is a Value Object (486) then you should use Embedded Value (268).

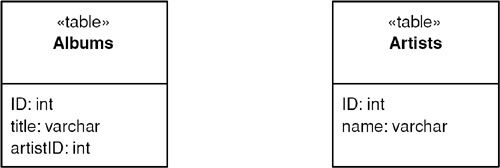

This is the simplest case, where an album has a single reference to an artist.

class Artist...

private String name;

public Artist(Long ID, String name) {

super(ID);

this.name = name;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

class Album...

private String title;

private Artist artist;

public Album(Long ID, String title, Artist artist) {

super(ID);

this.title = title;

this.artist = artist;

}

public String getTitle() {

return title;

}

public void setTitle(String title) {

this.title = title;

}

public Artist getArtist() {

return artist;

}

public void setArtist(Artist artist) {

this.artist = artist;

}

Figure 12.4 shows how you can load an album. When an album mapper is told to load a particular album it queries the database and pulls back the result set for it. It then queries the result set for each foreign key field and finds that object. Now it can create the album with the appropriate found objects. If the artist object was already in memory it would be fetched from the cache; otherwise, it would be loaded from the database in the same way.

Figure 12.4. Sequence for loading a single-valued field.

The find operation uses abstract behavior to manipulate an Identity Map (195).

class AlbumMapper...

public Album find(Long id) {

return (Album) abstractFind(id);

}

protected String findStatement() {

return "SELECT ID, title, artistID FROM albums WHERE ID = ?";

}

class AbstractMapper...

abstract protected String findStatement();

protected DomainObject abstractFind(Long id) {

DomainObject result = (DomainObject) loadedMap.get(id);

if (result != null) return result;

PreparedStatement stmt = null;

ResultSet rs = null;

try {

stmt = DB.prepare(findStatement());

stmt.setLong(1, id.longValue());

rs = stmt.executeQuery();

rs.next();

result = load(rs);

return result;

} catch (SQLException e) {

throw new ApplicationException(e);

} finally {cleanUp(stmt, rs);}

}

private Map loadedMap = new HashMap();

The find operation calls a load operation to actually load the data into the album.

class AbstractMapper...

protected DomainObject load(ResultSet rs) throws SQLException {

Long id = new Long(rs.getLong(1));

if (loadedMap.containsKey(id)) return (DomainObject) loadedMap.get(id);

DomainObject result = doLoad(id, rs);

doRegister(id, result);

return result;

}

protected void doRegister(Long id, DomainObject result) {

Assert.isFalse(loadedMap.containsKey(id));

loadedMap.put(id, result);

}

abstract protected DomainObject doLoad(Long id, ResultSet rs) throws SQLException;

class AlbumMapper...

protected DomainObject doLoad(Long id, ResultSet rs) throws SQLException {

String title = rs.getString(2);

long artistID = rs.getLong(3);

Artist artist = MapperRegistry.artist().find(artistID);

Album result = new Album(id, title, artist);

return result;

}

To update an album the foreign key value is taken from the linked artist object.

class AbstractMapper...

abstract public void update(DomainObject arg);

class AlbumMapper...

public void update(DomainObject arg) {

PreparedStatement statement = null;

try {

statement = DB.prepare(

"UPDATE albums SET title = ?, artistID = ? WHERE id = ?");

statement.setLong(3, arg.getID().longValue());

Album album = (Album) arg;

statement.setString(1, album.getTitle());

statement.setLong(2, album.getArtist().getID().longValue());

statement.execute();

} catch (SQLException e) {

throw new ApplicationException(e);

} finally {

cleanUp(statement);

}

}

While it’s conceptually clean to issue one query per table, it’s often inefficient since SQL consists of remote calls and remote calls are slow. Therefore, it’s often worth finding ways to gather information from multiple tables in a single query. I can modify the above example to use a single query to get both the album and the artist information with a single SQL call. The first alteration is that of the SQL for the find statement.

class AlbumMapper...

public Album find(Long id) {

return (Album) abstractFind(id);

}

protected String findStatement() {

return "SELECT a.ID, a.title, a.artistID, r.name " +

" from albums a, artists r " +

" WHERE ID = ? and a.artistID = r.ID";

}

I then use a different load method that loads both the album and the artist information together.

class AlbumMapper...

protected DomainObject doLoad(Long id, ResultSet rs) throws SQLException {

String title = rs.getString(2);

long artistID = rs.getLong(3);

ArtistMapper artistMapper = MapperRegistry.artist();

Artist artist;

if (artistMapper.isLoaded(artistID))

artist = artistMapper.find(artistID);

else

artist = loadArtist(artistID, rs);

Album result = new Album(id, title, artist);

return result;

}

private Artist loadArtist(long id, ResultSet rs) throws SQLException {

String name = rs.getString(4);

Artist result = new Artist(new Long(id), name);

MapperRegistry.artist().register(result.getID(), result);

return result;

}

There’s tension surrounding where to put the method that maps the SQL result into the artist object. On the one hand it’s better to put it in the artist’s mapper since that’s the class that usually loads the artist. On the other hand, the load method is closely coupled to the SQL and thus should stay with the SQL query. In this case I’ve voted for the latter.

The case for a collection of references occurs when you have a field that constitutes a collection. Here I’ll use an example of teams and players where we’ll assume that we can’t make player a Dependent Mapping (262) (Figure 12.5).

class Team...

public String Name;

public IList Players {

get {return ArrayList.ReadOnly(playersData);}

set {playersData = new ArrayList(value);}

}

public void AddPlayer(Player arg) {

playersData.Add(arg);

}

private IList playersData = new ArrayList();

Figure 12.5. A team with multiple players.

In the database this will be handled with the player record having a foreign key to the team (Figure 12.6).

class TeamMapper...

public Team Find(long id) {

return (Team) AbstractFind(id);

}

class AbstractMapper...

protected DomainObject AbstractFind(long id) {

Assert.True (id != DomainObject.PLACEHOLDER_ID);

DataRow row = FindRow(id);

return (row == null) ? null : Load(row);

}

protected DataRow FindRow(long id) {

String filter = String.Format("id = {0}", id);

DataRow[] results = table.Select(filter);

return (results.Length == 0) ? null : results[0];

}

protected DataTable table {

get {return dsh.Data.Tables[TableName];}

}

public DataSetHolder dsh;

abstract protected String TableName {get;}

class TeamMapper...

protected override String TableName {

get {return "Teams";}

}

Figure 12.6. Database structure for a team with multiple players.

The data set holder is a class that holds onto the data set in use, together with the adapters needed to update it to the database.

class DataSetHolder...

public DataSet Data = new DataSet();

private Hashtable DataAdapters = new Hashtable();

For this example, we’ll assume that it has already been populated by some appropriate queries.

The find method calls a load to actually load the data into the new object.

class AbstractMapper...

protected DomainObject Load (DataRow row) {

long id = (int) row ["id"];

if (identityMap[id] != null) return (DomainObject) identityMap[id];

else {

DomainObject result = CreateDomainObject();

result.Id = id;

identityMap.Add(result.Id, result);

doLoad(result,row);

return result;

}

}

abstract protected DomainObject CreateDomainObject();

private IDictionary identityMap = new Hashtable();

abstract protected void doLoad (DomainObject obj, DataRow row);

class TeamMapper...

protected override void doLoad (DomainObject obj, DataRow row) {

Team team = (Team) obj;

team.Name = (String) row["name"];

team.Players = MapperRegistry.Player.FindForTeam(team.Id);

}

To bring in the players, I execute a specialized finder on the player mapper.

class PlayerMapper...

public IList FindForTeam(long id) {

String filter = String.Format("teamID = {0}", id);

DataRow[] rows = table.Select(filter);

IList result = new ArrayList();

foreach (DataRow row in rows) {

result.Add(Load (row));

}

return result;

}

To update, the team saves its own data and delegates the player mapper to save the data into the player table.

class AbstractMapper...

public virtual void Update (DomainObject arg) {

Save (arg, FindRow(arg.Id));

}

abstract protected void Save (DomainObject arg, DataRow row);

class TeamMapper...

protected override void Save (DomainObject obj, DataRow row){

Team team = (Team) obj;

row["name"] = team.Name;

savePlayers(team);

}

private void savePlayers(Team team){

foreach (Player p in team.Players) {

MapperRegistry.Player.LinkTeam(p, team.Id);

}

}

class PlayerMapper...

public void LinkTeam (Player player, long teamID) {

DataRow row = FindRow(player.Id);

row["teamID"] = teamID;

}

The update code is made much simpler by the fact that the association from player to team is mandatory. If we move a player from one team to another, as long as we update both team, we don’t have to do a complicated diff to sort the players out. I’ll leave that case as an exercise for the reader.

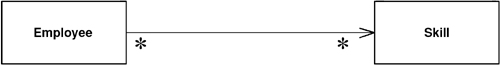

Saves an association as a table with foreign keys to the tables that are linked by the association.

Objects can handle multivalued fields quite easily by using collections as field values. Relational databases don’t have this feature and are constrained to single-valued fields only. When you’re mapping a one-to-many association you can handle this using Foreign Key Mapping (236), essentially using a foreign key for the single-valued end of the association. But a many-to-many association can’t do this because there is no single-valued end to hold the foreign key.

The answer is the classic resolution that’s been used by relational data people for decades: create an extra table to record the relationship. Then use Association Table Mapping to map the multivalued field to this link table.

The basic idea behind Association Table Mapping is using a link table to store the association. This table has only the foreign key IDs for the two tables that are linked together, it has one row for each pair of associated objects.

The link table has no corresponding in-memory object. As a result it has no ID. Its primary key is the compound of the two primary keys of the tables that are associated.

In simple terms, to load data from the link table you perform two queries. Consider loading the skills for an employee. In this case, at least conceptually, you do queries in two stages. The first stage queries the skillsEmployees table to find all the rows that link to the employee you want. The second stage finds the skill object for the related ID for each row in the link table.

If all the information is already in memory, this scheme works fine. If it isn’t, this scheme can be horribly expensive in queries, since you do a query for each skill that’s in the link table. You can avoid this cost by joining the skills table to the link table, which allows you to get all the data in a single query, albeit at the cost of making the mapping a bit more complicated.

Updating the link data involves many of the issues in updating a many-valued field. Fortunately, the matter is made much easier since you can in many ways treat the link table like a Dependent Mapping (262). No other table should refer to the link table, so you can freely create and destroy links as you need them.

The canonical case for Association Table Mapping is a many-to-many association, since there are really no any alternatives for that situation.

Association Table Mapping can also be used for any other form of association. However, because it’s more complex than Foreign Key Mapping (236) and involves an extra join, it’s not usually the right choice. Even so, in a couple of cases Association Table Mapping is appropriate for a simpler association; both involve databases where you have less control over the schema. Sometimes you may need to link two existing tables, but you aren’t able to add columns to those tables. In this case you can make a new table and use Association Table Mapping. Other times an existing schema uses an associative table, even when it isn’t really necessary. In this case it’s often easier to use Association Table Mapping than to simplify the database schema.

In a relational database design you may often have association tables that also carry information about the relationship. An example is a person/company associative table that also contains information about a person’s employment with the company. In this case the person/company table really corresponds to a true domain object.

Here’s a simple example using the sketch’s model. We have an employee class with a collection of skills, each of which can appear for more than one employee.

class Employee...

public IList Skills {

get {return ArrayList.ReadOnly(skillsData);}

set {skillsData = new ArrayList(value);}

}

public void AddSkill (Skill arg) {

skillsData.Add(arg);

}

public void RemoveSkill (Skill arg) {

skillsData.Remove(arg);

}

private IList skillsData = new ArrayList();

To load an employee from the database, we need to pull in the skills using an employee mapper. Each employee mapper class has a find method that creates an employee object. All mappers are subclasses of the abstract mapper class that pulls together common services for the mappers.

class EmployeeMapper...

public Employee Find(long id) {

return (Employee) AbstractFind(id);

}

class AbstractMapper...

protected DomainObject AbstractFind(long id) {

Assert.True (id != DomainObject.PLACEHOLDER_ID);

DataRow row = FindRow(id);

return (row == null) ? null : Load(row);

}

protected DataRow FindRow(long id) {

String filter = String.Format("id = {0}", id);

DataRow[] results = table.Select(filter);

return (results.Length == 0) ? null : results[0];

}

protected DataTable table {

get {return dsh.Data.Tables[TableName];}

}

public DataSetHolder dsh;

abstract protected String TableName {get;}

class EmployeeMapper...

protected override String TableName {

get {return "Employees";}

}

The data set holder is a simple object that contains an ADO.NET data set and the relevant adapters to save it to the database.

class DataSetHolder...

public DataSet Data = new DataSet();

private Hashtable DataAdapters = new Hashtable();

To make this example simple—indeed, simplistic—we’ll assume that the data set has already been loaded with all the data we need.

The find method calls load methods to load data for the employee.

class AbstractMapper...

protected DomainObject Load (DataRow row) {

long id = (int) row ["id"];

if (identityMap[id] != null) return (DomainObject) identityMap[id];

else {

DomainObject result = CreateDomainObject();

result.Id = id;

identityMap.Add(result.Id, result);

doLoad(result,row);

return result;

}

}

abstract protected DomainObject CreateDomainObject();

private IDictionary identityMap = new Hashtable();

abstract protected void doLoad (DomainObject obj, DataRow row);

class EmployeeMapper...

protected override void doLoad (DomainObject obj, DataRow row) {

Employee emp = (Employee) obj;

emp.Name = (String) row["name"];

loadSkills(emp);

}

Loading the skills is sufficiently awkward to demand a separate method to do the work.

class EmployeeMapper...

private IList loadSkills (Employee emp) {

DataRow[] rows = skillLinkRows(emp);

IList result = new ArrayList();

foreach (DataRow row in rows) {

long skillID = (int)row["skillID"];

emp.AddSkill(MapperRegistry.Skill.Find(skillID));

}

return result;

}

private DataRow[] skillLinkRows(Employee emp) {

String filter = String.Format("employeeID = {0}", emp.Id);

return skillLinkTable.Select(filter);

}

private DataTable skillLinkTable {

get {return dsh.Data.Tables["skillEmployees"];}

}

To handle changes in skills information we use an update method on the abstract mapper.

class AbstractMapper...

public virtual void Update (DomainObject arg) {

Save (arg, FindRow(arg.Id));

}

abstract protected void Save (DomainObject arg, DataRow row);

The update method calls a save method in the subclass.

class EmployeeMapper...

protected override void Save (DomainObject obj, DataRow row) {

Employee emp = (Employee) obj;

row["name"] = emp.Name;

saveSkills(emp);

}

Again, I’ve made a separate method for saving the skills.

class EmployeeMapper...

private void saveSkills(Employee emp) {

deleteSkills(emp);

foreach (Skill s in emp.Skills) {

DataRow row = skillLinkTable.NewRow();

row["employeeID"] = emp.Id;

row["skillID"] = s.Id;

skillLinkTable.Rows.Add(row);

}

}

private void deleteSkills(Employee emp) {

DataRow[] skillRows = skillLinkRows(emp);

foreach (DataRow r in skillRows) r.Delete();

}

The logic here does the simple thing of deleting all existing link table rows and creating new ones. This saves me having to figure out which ones have been added and deleted.

One of the nice things about ADO.NET is that it allows me to discuss the basics of an object-relational mapping without getting into the sticky details of minimizing queries. With other relational mapping schemes you’re closer to the SQL and have to take much of that into account.

When you’re going directly to the database it’s important to minimize the queries. For my first version of this I’ll pull back the employee and all her skills in two queries. This is easy to follow but not quite optimal, so bear with me.

Here’s the DDL for the tables:

create table employees (ID int primary key, firstname varchar, lastname varchar)

create table skills (ID int primary key, name varchar)

create table employeeSkills (employeeID int, skillID int, primary key (employeeID, skillID))

To load a single Employee I’ll follow a similar approach to what I’ve done before. The employee mapper defines a simple wrapper for an abstract find method on the Layer Supertype (475).

class EmployeeMapper...

public Employee find(long key) {

return find (new Long (key));

}

public Employee find (Long key) {

return (Employee) abstractFind(key);

}

protected String findStatement() {

return

"SELECT " + COLUMN_LIST +

" FROM employees" +

" WHERE ID = ?";

}

public static final String COLUMN_LIST = " ID, lastname, firstname ";

class AbstractMapper...

protected DomainObject abstractFind(Long id) {

DomainObject result = (DomainObject) loadedMap.get(id);

if (result != null) return result;

PreparedStatement stmt = null;

ResultSet rs = null;

try {

stmt = DB.prepare(findStatement());

stmt.setLong(1, id.longValue());

rs = stmt.executeQuery();

rs.next();

result = load(rs);

return result;

} catch (SQLException e) {

throw new ApplicationException(e);

} finally {DB.cleanUp(stmt, rs);

}

}

abstract protected String findStatement();

protected Map loadedMap = new HashMap();

The find methods then call load methods. An abstract load method handles the ID loading while the actual data for the employee is loaded on the employee’s mapper.

class AbstractMapper...

protected DomainObject load(ResultSet rs) throws SQLException {

Long id = new Long(rs.getLong(1));

return load(id, rs);

}

public DomainObject load(Long id, ResultSet rs) throws SQLException {

if (hasLoaded(id)) return (DomainObject) loadedMap.get(id);

DomainObject result = doLoad(id, rs);

loadedMap.put(id, result);

return result;

}

abstract protected DomainObject doLoad(Long id, ResultSet rs) throws SQLException;

class EmployeeMapper...

protected DomainObject doLoad(Long id, ResultSet rs) throws SQLException {

Employee result = new Employee(id);

result.setFirstName(rs.getString("firstname"));

result.setLastName(rs.getString("lastname"));

result.setSkills(loadSkills(id));

return result;

}

The employee needs to issue another query to load the skills, but it can easily load all the skills in a single query. To do this it calls the skill mapper to load in the data for a particular skill.

class EmployeeMapper...

protected List loadSkills(Long employeeID) {

PreparedStatement stmt = null;

ResultSet rs = null;

try {

List result = new ArrayList();

stmt = DB.prepare(findSkillsStatement);

stmt.setObject(1, employeeID);

rs = stmt.executeQuery();

while (rs.next()) {

Long skillId = new Long (rs.getLong(1));

result.add((Skill) MapperRegistry.skill().loadRow(skillId, rs));

}

return result;

} catch (SQLException e) {

throw new ApplicationException(e);

} finally {DB.cleanUp(stmt, rs);

}

}

private static final String findSkillsStatement =

"SELECT skill.ID, " + SkillMapper.COLUMN_LIST +

" FROM skills skill, employeeSkills es " +

" WHERE es.employeeID = ? AND skill.ID = es.skillID";

class SkillMapper...

public static final String COLUMN_LIST = " skill.name skillName ";

class AbstractMapper...

protected DomainObject loadRow (Long id, ResultSet rs) throws SQLException {

return load (id, rs);

}

class SkillMapper...

protected DomainObject doLoad(Long id, ResultSet rs) throws SQLException {

Skill result = new Skill (id);

result.setName(rs.getString("skillName"));

return result;

}

The abstract mapper can also help find employees.

class EmployeeMapper...

public List findAll() {

return findAll(findAllStatement);

}

private static final String findAllStatement =

"SELECT " + COLUMN_LIST +

" FROM employees employee" +

" ORDER BY employee.lastname";

class AbstractMapper...

protected List findAll(String sql) {

PreparedStatement stmt = null;

ResultSet rs = null;

try {

List result = new ArrayList();

stmt = DB.prepare(sql);

rs = stmt.executeQuery();

while (rs.next())

result.add(load(rs));

return result;

} catch (SQLException e) {

throw new ApplicationException(e);

} finally {DB.cleanUp(stmt, rs);

}

}

All of this works quite well and is pretty simple to follow. Still, there’s a problem in the number of queries, and that is that each employee takes two SQL queries to load. Although we can load the basic employee data for many employees in a single query, we still need one query per employee to load the skills. Thus, loading a hundred employees takes 101 queries.

It’s possible to bring back many employees, with their skills, in a single query. This is a good example of multitable query optimization, which is certainly more awkward. For that reason do this when you need to, rather than every time. It’s better to put more energy into speeding up your slow queries than into many queries that are less important.

The first case we’ll look at is a simple one where we pull back all the skills for an employee in the same query that holds the basic data. To do this I’ll use a more complex SQL statement that joins across all three tables.

class EmployeeMapper...

protected String findStatement() {

return

"SELECT" + COLUMN_LIST +

" FROM employees employee, skills skill, employeeSkills es" +

" WHERE employee.ID = es.employeeID AND skill.ID = es.skillID AND employee.ID = ?";

}

public static final String COLUMN_LIST =

" employee.ID, employee.lastname, employee.firstname, " +

" es.skillID, es.employeeID, skill.ID skillID, " +

SkillMapper.COLUMN_LIST;

The abstractFind and load methods on the superclass are the same as in the previous example, so I won’t repeat them here. The employee mapper loads its data differently to take advantage of the multiple data rows.

class EmployeeMapper...

protected DomainObject doLoad(Long id, ResultSet rs) throws SQLException {

Employee result = (Employee) loadRow(id, rs);

loadSkillData(result, rs);

while (rs.next()){

Assert.isTrue(rowIsForSameEmployee(id, rs));

loadSkillData(result, rs);

}

return result;

}

protected DomainObject loadRow(Long id, ResultSet rs) throws SQLException {

Employee result = new Employee(id);

result.setFirstName(rs.getString("firstname"));

result.setLastName(rs.getString("lastname"));

return result;

}

private boolean rowIsForSameEmployee(Long id, ResultSet rs) throws SQLException {

return id.equals(new Long(rs.getLong(1)));

}

private void loadSkillData(Employee person, ResultSet rs) throws SQLException {

Long skillID = new Long(rs.getLong("skillID"));

person.addSkill ((Skill)MapperRegistry.skill().loadRow(skillID, rs));

}

In this case the load method for the employee mapper actually runs through the rest of the result set to load in all the data.

All is simple when we’re loading the data for a single employee. However, the real benefit of this multitable query appears when we want to load lots of employees. Getting the reading right can be tricky, particularly when we don’t want to force the result set to be grouped by employees. At this point it’s handy to introduce a helper class to go through the result set by focusing on the associative table itself, loading up the employees and skills as it goes along.

I’ll begin with the SQL and the call to the special loader class.

class EmployeeMapper...

public List findAll() {

return findAll(findAllStatement);

}

private static final String findAllStatement =

"SELECT " + COLUMN_LIST +

" FROM employees employee, skills skill, employeeSkills es" +

" WHERE employee.ID = es.employeeID AND skill.ID = es.skillID" +

" ORDER BY employee.lastname";

protected List findAll(String sql) {

AssociationTableLoader loader = new AssociationTableLoader(this, new SkillAdder());

return loader.run(findAllStatement);

}

class AssociationTableLoader...

private AbstractMapper sourceMapper;

private Adder targetAdder;

public AssociationTableLoader(AbstractMapper primaryMapper, Adder targetAdder) {

this.sourceMapper = primaryMapper;

this.targetAdder = targetAdder;

}

Don’t worry about the skillAdder—that will become a bit clearer later. For the moment notice that we construct the loader with a reference to the mapper and then tell it to perform a load with a suitable query. This is the typical structure of a method object. A method object [Beck Patterns] is a way of turning a complicated method into an object on its own. The great advantage of this is that it allows you to put values in fields instead of passing them around in parameters. The usual way of using a method object is to create it, fire it up, and then let it die once its duty is done.

The load behavior comes in three steps.

class AssociationTableLoader...

protected List run(String sql) {

loadData(sql);

addAllNewObjectsToIdentityMap();

return formResult();

}

The loadData method forms the SQL call, executes it, and loops through the result set. Since this is a method object, I’ve put the result set in a field so I don’t have to pass it around.

class AssociationTableLoader...

private ResultSet rs = null;

private void loadData(String sql) {

PreparedStatement stmt = null;

try {

stmt = DB.prepare(sql);

rs = stmt.executeQuery();

while (rs.next())

loadRow();

} catch (SQLException e) {

throw new ApplicationException(e);

} finally {DB.cleanUp(stmt, rs);

}

}

The loadRow method loads the data from a single row in the result set. It’s a bit complicated.

class AssociationTableLoader...

private List resultIds = new ArrayList();

private Map inProgress = new HashMap();

private void loadRow() throws SQLException {

Long ID = new Long(rs.getLong(1));

if (!resultIds.contains(ID)) resultIds.add(ID);

if (!sourceMapper.hasLoaded(ID)) {

if (!inProgress.keySet().contains(ID))

inProgress.put(ID, sourceMapper.loadRow(ID, rs));

targetAdder.add((DomainObject) inProgress.get(ID), rs);

}

}

class AbstractMapper...

boolean hasLoaded(Long id) {

return loadedMap.containsKey(id);

}

The loader preserves any order there is in the result set, so the output list of employees will be in the same order in which it first appeared. So I keep a list of IDs in the order I see them. Once I’ve got the ID I look to see if it’s already fully loaded in the mapper—usually from a previous query. If it not I load what data I have and keep it in an in-progress list. I need such a list since several rows will combine to gather all the data from the employee and I may not hit those rows consecutively.

The trickiest part to this code is ensuring that I can add the skill I’m loading to the employees’ list of skills, but still keep the loader generic so it doesn’t depend on employees and skills. To achieve this I need to dig deep into my bag of tricks to find an inner interface—the Adder.

class AssociationTableLoader...

public static interface Adder {

void add(DomainObject host, ResultSet rs) throws SQLException ;

}

The original caller has to supply an implementation for the interface to bind it to the particular needs of the employee and skill.

class EmployeeMapper...

private static class SkillAdder implements AssociationTableLoader.Adder {

public void add(DomainObject host, ResultSet rs) throws SQLException {

Employee emp = (Employee) host;

Long skillId = new Long (rs.getLong("skillId"));

emp.addSkill((Skill) MapperRegistry.skill().loadRow(skillId, rs));

}

}

This is the kind of thing that comes more naturally to languages that have function pointers or closures, but at least the class and interface get the job done. (They don’t have to be inner in this case, but it helps bring out their narrow scope.)

You may have noticed that I have a load and a loadRow method defined on the superclass and the implementation of the loadRow is to call load. I did this because there are times when you want to be sure that a load action will not move the result set forward. The load does whatever it needs to do to load an object, but loadRow guarantees to load data from a row without altering the position of the cursor. Most of the time these two are the same thing, but in the case of this employee mapper they’re different.

Now all the data is in from the result set. I have two collections: a list of all the employee IDs that were in the result set in the order of first appearance and a list of new objects that haven’t yet made an appearance in the employee mapper’s Identity Map (195).

The next step is to put all the new objects into the Identity Map (195).

class AssociationTableLoader...

private void addAllNewObjectsToIdentityMap() {

for (Iterator it = inProgress.values().iterator(); it.hasNext();)

sourceMapper.putAsLoaded((DomainObject)it.next());

}

class AbstractMapper...

void putAsLoaded (DomainObject obj) {

loadedMap.put (obj.getID(), obj);

}

The final step is to assemble the result list by looking up the IDs from the mapper.

class AssociationTableLoader...

private List formResult() {

List result = new ArrayList();

for (Iterator it = resultIds.iterator(); it.hasNext();) {

Long id = (Long)it.next();

result.add(sourceMapper.lookUp(id));

}

return result;

}

class AbstractMapper...

protected DomainObject lookUp (Long id) {

return (DomainObject) loadedMap.get(id);

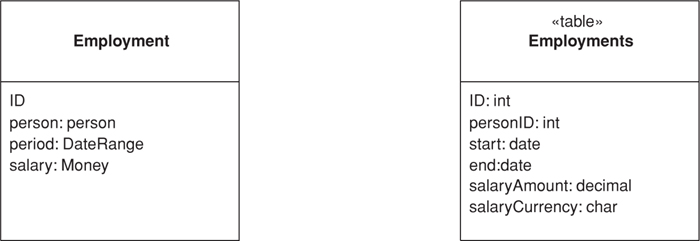

}