CHAPTER 1

Getting Started: Essential Knowledge

In this chapter you will

• Identify components of TCP/IP computer networking

• Understand basic elements of information security

• Understand incident management steps

• Identify fundamentals of security policies

• Identify essential terminology associated with ethical hacking

• Define ethical hacker and classifications of hackers

• Describe the five stages of ethical hacking

• Define the types of system attacks

• Identify laws, acts, and standards affecting IT security

A few weeks ago my ISP point-of-presence router, comfortably nestled in the comm-closet-like area I’d lovingly built just for such items of IT interest, decided it had had enough of serving the humans and went rogue on me. It was subtle at first—a stream dropped here, a choppy communication session there—but it quickly became clear Skynet wasn’t going to play nicely, and a scorched earth policy wasn’t off the table.

After battling with everything for a while and narrowing down the culprit, I called the handy help desk line to get a new one ordered and delivered for me to install myself, or to get a friendly in-home visit to take the old one and replace it. After answering the phone and taking a couple basic, and perfectly reasonable, pieces of information, the friendly help desk employee started asking me what I considered to be ridiculous questions: “Is your power on? Is your computer connected via a cable or wireless? Is your wireless card activated, because sometimes those things get turned off in airplane mode?” And so on. I played along nicely for a little while. I mean, look, I get it: they have to ask those questions. But after 10 or 15 minutes of dealing with it I lost patience and just told the guy what was wrong. He paused, thanked me, and continued reading the scroll of questions no doubt rolling across his screen from the “Customer Says No Internet” file.

I survived the gauntlet and finally got a new router ordered, which was delivered the very next day at 8:30 in the morning. Everything finally worked out, but the whole experience came to mind as I sat down to start the latest version of this book. I got to looking at the previous edition’s chapter and thought to myself, “What were you thinking? Why were you telling them about networking and the OSI model? You’re the help desk guy here.…”

Why? Because I have to. I’ve promised to cover everything here, and although you shouldn’t jump into study material for the exam without already knowing the basics, we’re all human and some of us will. But don’t worry, dear reader: this edition has hopefully cut down some of the basic networking goodies from the last version. I did have to include a fantastic explanation of the OSI reference model, what PDUs are at what level, and why you should care, even though I’m pretty sure you know this already. I’m going to do my best to keep it better focused for you and your study. This chapter still includes some inanely boring and mundane information that is probably as exciting as that laundry you have piled up waiting to go into the machine, but it has to be said, and you’re the one to hear it. We’ll cover the many terms you’ll need to know, including what an ethical hacker is supposed to be, and maybe even cover a couple things you don’t know.

Security 101

If you’re going to start a journey toward an ethical hacking certification, it should follow that the fundamental definitions and terminology involved with security should be right at the starting line. We’re not going to cover everything involved in IT security here—it’s simply too large a topic, we don’t have space, and you won’t be tested on every element anyway—but there is a foundation of 101-level knowledge you should have before wading out of the shallow end. This chapter covers the terms you’ll need to know to sound intelligent when discussing security matters with other folks. And, perhaps just as importantly, we’ll cover some basics of TCP/IP networking because, after all, if you don’t understand the language, how are you going to work your way into the conversation?

Essentials

Before we can get into what a hacker is and how you become one in our romp through the introductory topics here, there are a couple things I need to get out of the way. First, even though I covered most of this in that Shakespearean introduction for the book, I want to talk a little bit about this exam and what you need to know, and do, to pass it. Why repeat myself? Because after reading reviews, comments, and e-mails from our first few outings, it has come to my attention almost none of you actually read the introduction. I don’t blame you; I skip it too on most certification study books, just going right for the meat. But there’s good stuff there you really need to know before reading further, so I’ll do a quick rundown for you up front.

Second, we need to cover some security and network basics that will help you on your exam. Some of this section is simply basic memorization, some of it makes perfect common sense, and some of it is, or should be, just plain easy. You’re really supposed to know this already, and you’ll see this stuff again and again throughout this book, but it’s truly bedrock stuff and I would be remiss if I didn’t at least provide a jumping-off point.

The Exam

Are you sitting down? Is your heart healthy? I don’t want to distress you with this shocking revelation I’m about to throw out, so if you need a moment go pour a bourbon (another refrain you’ll see referenced throughout this book) and get calm before you read further. Are you ready? The CEH version 9 exam is difficult, and despite hours (days, weeks) of study and multiple study sources, you may still come across a version of the exam that leaves you feeling like you’ve been hit by a truck.

I know. A guy writing and selling a study book just told you it won’t be enough. Trust me when I say it, though, I’m not kidding. Of course this will be a good study reference. Of course you can learn something from it if you really want to. Of course I did everything I could to make it as up to date and comprehensive as possible. But if you’re under the insane assumption this is a magic ticket, that somehow written word from 2016 is going to magically hit the word-for-word reference on a specific test question in whatever timeframe/year you’re reading this, I sincerely encourage you to find some professional help before the furniture starts talking to you and the cat starts making sense. Those of you looking for exact test questions and rote memorization to pass the exam will not find it in this publication, nor any other. For the rest of you, those who want a little focused attention to prepare the right way for the exam and those looking to learn what it really means to be an ethical hacker, let’s get going with your test basics.

First, if you’ve never taken a certification-level exam, I wouldn’t recommend this one as your virgin experience. It’s tough enough without all the distractions and nerves involved in your first walkthrough. When you do arrive for your exam, you usually check in with a friendly test proctor or receptionist, sign a few things, and get funneled off to your testing room. Every time I’ve gone it has been a smallish office or a closed-in cubicle, with a single monitor staring at you ominously. You’ll click START and begin whizzing through questions one by one, clicking the circle to select the best answer(s) or clicking and dragging definitions to the correct section. At the end there’s a SUBMIT button, which you will click and then enter a break in the time-space continuum—because the next 10 seconds will seem like the longest of your life. In fact, it’ll seem like an eternity, where things have slowed down so much you can actually watch the refresh rate on the monitor and notice the cycles of AC current flowing through the office lamps. When the results page finally appears, it’s a moment of overwhelming relief or one of surreal numbness.

If you pass, none of the study material matters and, frankly, you’ll almost immediately start dumping the stored memory from your neurons. If you don’t pass, everything matters. You’ll race to the car and start marking down everything you can remember so you can study better next time. You’ll fly to social media and the Internet to discuss what went wrong and to lambast anything you didn’t find useful in preparation. And you’ll almost certainly look for something, someone to blame. Trust me, don’t do this.

Everything you do in preparation for this exam should be done to make you a better ethical hacker, not to pass a test. If you prepare as if this is your job, if you take everything you can use for study material and try to learn instead of memorize, you’ll be better off, pass or fail. And, consequently, I guarantee if you prepare this way your odds of passing any version of the test that comes out go up astronomically.

The test itself? Well, there are some tips and tricks that can help. I highly recommend you go back to the introduction and read the sections “The Examination” and “The Certification.” They’ll help you. A lot. Here are some other tips that may help:

• Do not let real life trump EC-Council’s view of it. There will be several instances somewhere along your study and eventual exam life where you will say, aloud, “That’s not what happens in the real world! Anyone claiming that would be stuffed in a locker and sprayed head to toe with shaving cream!” Trust me when I say this: real life and a certification exam are not necessarily always directly proportional. On some of these questions, you’ll need to study and learn what you need for the exam, knowing full well it’s different in the real world. If you don’t know what I mean by this, ask someone who has been doing this for a while if they think social engineering is passive.

• Go to the bathroom before you enter your test room. Even if you don’t have to. Because, trust me, you do.

• Use time to your advantage. The exam now is split into sections, with a timeframe set up for each one. You can work and review inside the section all you want, but once you pass through it you can’t go back. And if you fly through a section, you don’t get more time on the next one. Take your time and review appropriately.

• From ECC’s website, the breakdown of the test is as follows: 5 questions on background (networking, protocols, and so on), 16 on analysis/assessment (risk assessment, tech assessment, and analysis of data), 31 on security (everything from wireless and social engineering to system security controls), 40 on tools/systems/programs (tools, subnetting, DNS, NMAP [spelled out explicitly…ahem], port scanning, and so on), 25 on procedures (cryptography, SOA, and so on), 5 on regulation (security policies, compliance) and 3 on ethics (basically what makes an ethical hacker). If they’d actually arranged everything within those parameters, it would’ve helped a lot.

• Make use of the paper and pencil/pen the friendly test proctor provides you. As soon as you sit down, before you click START on the ominous test monitor display, start writing down everything from your head onto the paper provided. I would recommend reviewing just before you walk into the test center those sections of information you’re having the most trouble remembering. When you get to your test room, write them down immediately. That way, when you’re losing your mind a third of the way through the exam and start panicking that you can’t remember what an XMAS scan returns on a closed port, you’ll have a reference. And trust me, having it there makes it easier for you to recall the information, even if you never look at it.

• Trust your instincts. When you do question review, unless you absolutely, positively, beyond any shadow of a doubt know you initially marked the wrong answer, do not change it.

• Take the questions at face value. I know many people who don’t do well on exams because they’re trying to figure out what the test writer meant when putting the question together. Don’t read into a question; just answer it and move on.

• Schedule your exam sooner than you think you’ll be ready for it. I say this because I know people who say, “I’m going to study for six months and then I’ll be ready to take the exam.” Six months pass and they’re still sitting there, studying and preparing. If you do not put it on the calendar to make yourself prepare, you’ll never take it, because you’ll never be ready.

Again, it’s my intention that everyone reading this book and using it as a valuable resource in preparation for the exam will attain the certification, but I can’t guarantee you will. Because, frankly, I don’t know you. I don’t know your work ethic, your attention to detail, or your ability to effectively calm down to take a test and discern reality from a certification definition question. All I can do is provide you with the information, wish you the best of luck, and turn you loose. Now, on with the show.

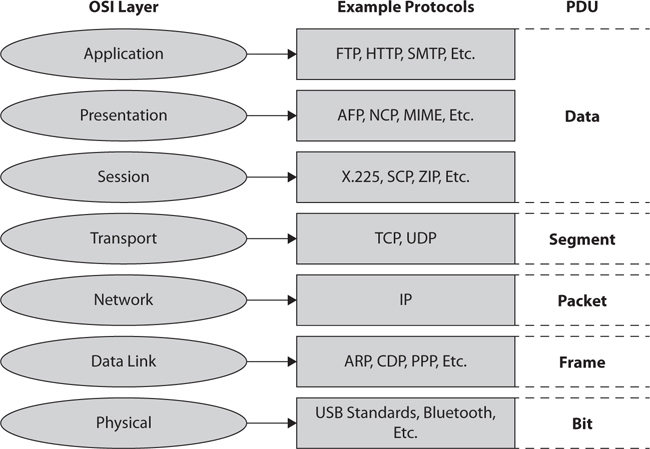

The OSI Reference Model

Most of us would rather take a ball-peen hammer to our toenails than hear about the OSI reference model again. It’s taught up front in every networking class we all had to take in college, so we’ve all heard it a thousand times over. That said, those of us who have been around for a while and have taken a certification test or two also understand it usually results in a few easy test answers, provided you understand what they’re asking for. I’m not going to bore you with the same stuff you’ve heard or read a million times before since, as stated earlier, you’re supposed to know this already. What I am going to do, though, is provide a quick rundown for you to peruse, should you need to refresh your memory.

I thought long and hard about the best way to go over this topic again for our review, and decided I’d ditch the same old boring method of talking this through. Instead, let’s look at the 10,000-foot overhead view of a communications session between two computers depicted in the OSI reference model through the lens of building a network—specifically by trying to figure out how you would build a network from the ground up. Step in the Wayback Machine with Sherman, Mr. Peabody, and me, and let’s go back before networking was invented. How would you do it?

First, looking at those two computers sitting there wanting to talk to one another, you might consider the basics of what is right in front of your eyes: what will you use to connect your computers together so they can transmit signals? In other words, what media would you use? There are several options: copper cabling, glass tubes, even radio waves, among others. And depending on which one of those you pick, you’re going to have to figure out how to use them to transmit usable information. How will you get an electrical signal on the wire to mean something to the computer on the other end? What part of a radio wave can you use to spell out a word or a color? On top of all that, you’ll need to figure out connectors, interfaces, and how to account for interference. And that’s just Layer 1 (the Physical layer), where everything is simply bits (that is, 1s and 0s).

Layer 2 then helps answer the questions involved in growing your network. In figuring out how you would build this whole thing, if you decide to allow more than two nodes to join, how do you handle addressing? With only two systems, it’s no worry—everything sent is received by the guy on the other end—but if you add three or more to the mix, you’re going to have to figure out how to send the message with a unique address. And if your media is shared, how would you guarantee everyone gets a chance to talk, and no one’s message jumbles up anyone else’s? The Data Link layer (Layer 2) handles this using frames, which encapsulate all the data handed down from the higher layers. Frames hold addresses that identify a machine inside a particular network.

And what happens if you want to send a message out of your network? It’s one thing to set up addressing so that each computer knows where all the other computers in the neighborhood reside, but sooner or later you’re going to want to send a message to another neighborhood—maybe even another city. And you certainly can’t expect each computer to know the address of every computer in the whole world. This is where Layer 3 steps in, with the packet used to hold network addresses and routing information. It works a lot like ZIP codes on an envelope. While the street address (the physical address from Layer 2) is used to define the recipient inside the physical network, the network address from Layer 3 tells routers along the way which neighborhood (network) the message is intended for.

Other considerations then come into play, like reliable delivery and flow control. You certainly wouldn’t want a message just blasting out without having any idea if it made it to the recipient; then again, you may want to, depending on what the message is about. And you definitely wouldn’t want to overwhelm the media’s ability to handle the messages you send, so maybe you might not want to put the giant boulder of the message onto our media all at once, when chopping it up into smaller, more manageable pieces makes more sense. The next layer, Transport, handles this and more for you. In Layer 4, the segment handles reliable end-to-end delivery of the message, along with error correction (through retransmission of missing segments) and flow control.
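If the "chop it up and retransmit what's missing" idea feels abstract, a toy sketch may help. This is not how TCP is implemented (real TCP adds acknowledgments, windows, and timers); the function names and the byte-offset numbering below are assumptions made purely for illustration.

```python
def split_into_segments(message: bytes, size: int) -> list[tuple[int, bytes]]:
    """Chop a message into (offset, chunk) pairs of at most `size` bytes."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [(offset, message[offset:offset + size])
            for offset in range(0, len(message), size)]


def missing_offsets(received: list[tuple[int, bytes]],
                    total_length: int, size: int) -> list[int]:
    """Offsets the receiver never saw, so the sender must retransmit them."""
    seen = {offset for offset, _ in received}
    return [offset for offset in range(0, total_length, size)
            if offset not in seen]


def reassemble(received: list[tuple[int, bytes]]) -> bytes:
    """Put the chunks back in order, no matter how they arrived."""
    return b"".join(chunk for _, chunk in sorted(received))
```

Numbering each piece is what lets the receiver both reorder segments and notice a gap, which is the whole point of a reliable transport.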

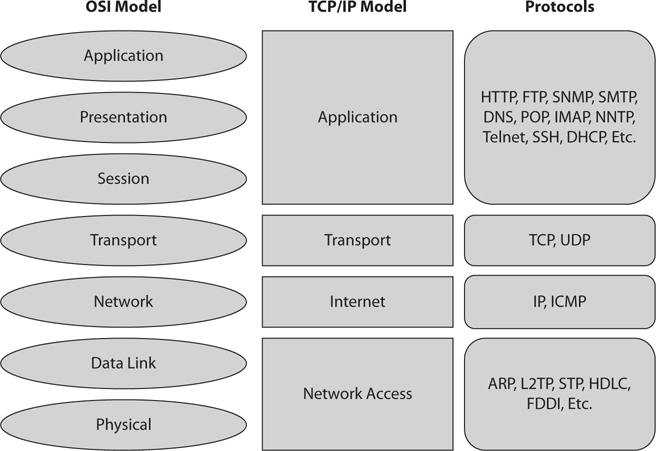

At this point you’ve set the stage for success. There is media to carry a signal (and you’ve figured how to encode that signal onto that media), addressing inside and outside your network is handled, and you’ve taken care of things like flow control and reliability. Now it’s time to look upward toward the machines themselves and make sure they know how to do what they need to do. The next three layers (from the bottom up—Session, Presentation, and Application) handle the data itself. The Session layer is more of a theoretical entity, with no real manipulation of the data itself—its job is to open, maintain, and close a session. The Presentation layer is designed to put a message into a format all systems can understand. For example, an e-mail crafted in Microsoft Outlook may not necessarily be received by a machine running Outlook, so it must be translated into something any receiver can comprehend—like pure ASCII code for delivery across a network. The Application layer holds all the protocols that allow a user to access information on and across a network. For example, FTP allows users to transport files across networks, SMTP provides for e-mail traffic, and HTTP allows you to surf the Internet at work while you’re supposed to be doing something else. These three layers make up the “data layers” of the stack, and they map directly to the Application layer of the TCP/IP stack. In these three layers, the protocol data unit (PDU) is referred to as data.

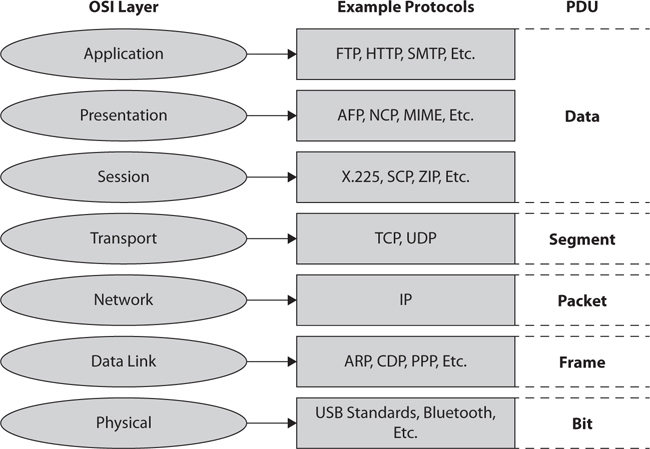

The layers, and examples of the protocols you’d find in them, are shown in Figure 1-1.

Figure 1-1 OSI reference model
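For quick review, the layer-to-PDU pairings described above can be captured in a small lookup table. This is only a study aid sketched in Python; the `OsiLayer` type and `pdu_for` helper are illustrative names, not part of any library.

```python
from typing import NamedTuple


class OsiLayer(NamedTuple):
    number: int
    name: str
    pdu: str


# Top of the stack first, matching how the model is usually drawn.
OSI_LAYERS = [
    OsiLayer(7, "Application", "data"),
    OsiLayer(6, "Presentation", "data"),
    OsiLayer(5, "Session", "data"),
    OsiLayer(4, "Transport", "segment"),
    OsiLayer(3, "Network", "packet"),
    OsiLayer(2, "Data Link", "frame"),
    OsiLayer(1, "Physical", "bits"),
]


def pdu_for(layer_number: int) -> str:
    """Return the PDU name used at the given OSI layer number."""
    for layer in OSI_LAYERS:
        if layer.number == layer_number:
            return layer.pdu
    raise ValueError(f"no OSI layer numbered {layer_number}")
```

Calling `pdu_for(2)` gives `"frame"`, and layers 5 through 7 all come back as `"data"`.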

TCP/IP Overview

Keeping in mind you’re supposed to know this already, we’re not going to spend an inordinate amount of time on this subject. That said, it’s vitally important to your success that the basics of TCP/IP networking are as ingrained in your neurons as other important aspects of your life, like maybe Mom’s birthday, the size and bag limit on redfish, the proper ratio of bourbon to anything you mix it with, and the proper way to place toilet paper on the roller (pull paper down, never up). This will be a quick preview, and we’ll revisit (and repeat) this in later chapters.

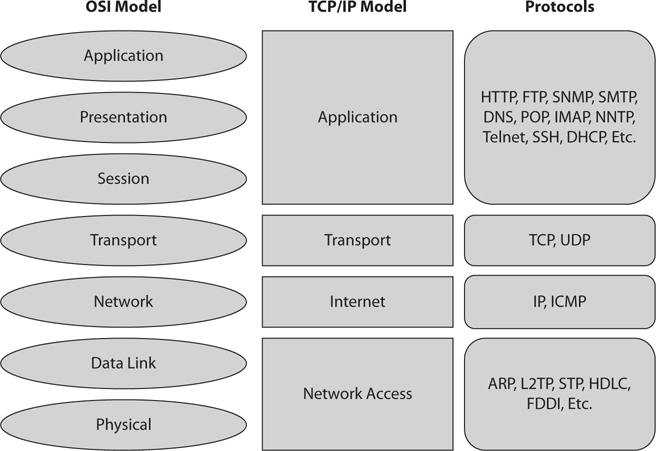

TCP/IP is a set of communications protocols that allows hosts on a network to talk to one another. This suite of protocols is arranged in a layered stack, much like the OSI reference model, with each layer performing a specific task. Figure 1-2 shows the TCP/IP stack.

Figure 1-2 TCP/IP stack

In keeping with the way this chapter started, let’s avoid a lot of the same stuff you’ve probably heard a thousand times already and simply follow a message from one machine to another through a TCP/IP network. This way, I hope to hit all the basics you need without boring you to tears and causing you to skip the rest of this chapter altogether. Keep in mind there is a whole lot of simultaneous goings-on in any session, so I may take a couple liberties to speed things along.

Suppose, for example, user Joe wants to get ready for the season opener and decides to do a little online shopping for his favorite University of Alabama football gear. Joe begins by opening his browser and typing in a request for his favorite website. His computer now has a data request from the browser that it looks at and determines cannot be answered internally. Why? Because the browser wants a page that is not stored locally. So, now searching for a network entity to answer the request, it chooses the protocol that handles this kind of request (in this case, HTTP, which by default uses port 80 on the server) and starts putting together what will become a session: a bunch of segments sent back and forth to accomplish a goal.

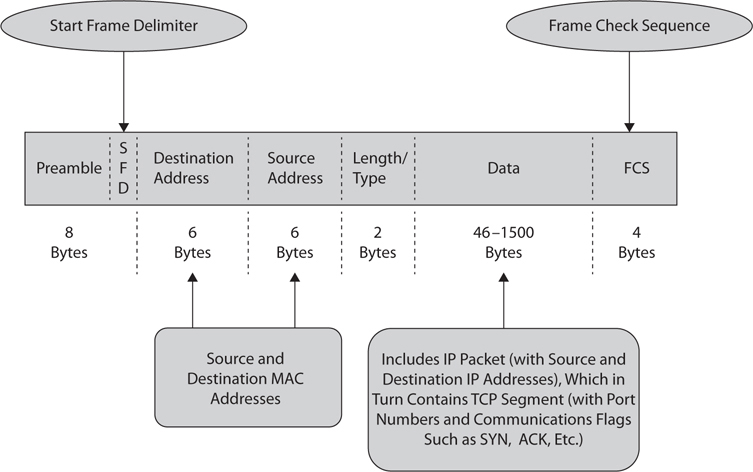

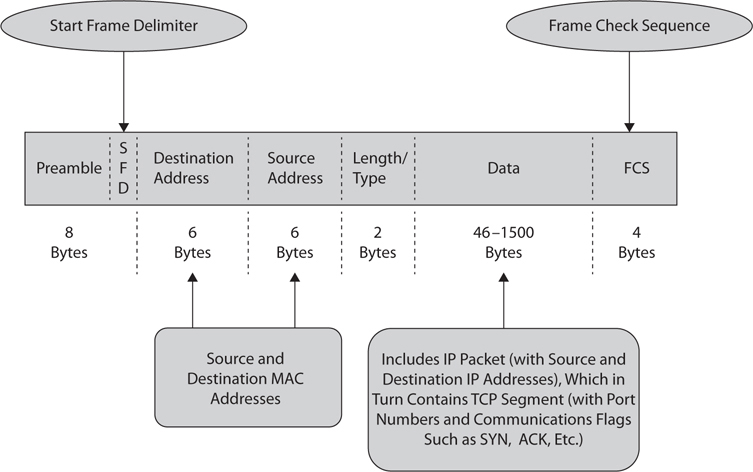

Since this is an Ethernet TCP/IP network, Joe’s computer talks to other systems using a format of bits arranged in specific order. These collections of bits in a specific order are called frames (Figure 1-3 shows a basic Ethernet frame), are built from the inside out, and rely on information handed down from upper layers. In this example, the Application layer will “hand down” an HTTP request (data) to the Transport layer. At this layer, Joe’s computer looks at the HTTP request and (because it knows HTTP usually works this way) knows this needs to be a connection-oriented session, with stellar reliability to ensure Joe gets everything he asks for without losing anything. It calls on the Transmission Control Protocol (TCP) for that. TCP will go out in a series of messages to set up a communications session with the end station, including a three-step handshake to get things going. This handshake includes a Synchronize segment (SYN), a Synchronize Acknowledgment segment (SYN/ACK), and an Acknowledgment segment (ACK). The first of these—the SYN segment asking the other computer whether it’s awake and wants to talk—gets handed down for addressing to the Internet layer.

Figure 1-3 An Ethernet frame

The Internet layer needs to figure out what network the request will be answered from (after all, there’s no guarantee it’ll be local; it could be anywhere in the world). It does its job by using another protocol (DNS) to ask what IP address belongs to the hostname in the URL Joe typed. When that answer comes back, it builds a packet for delivery (which consists of the original data request, the TCP header [SYN], and the IP packet information affixed just before it) and “hands down” the packet to the Network Access layer for delivery.

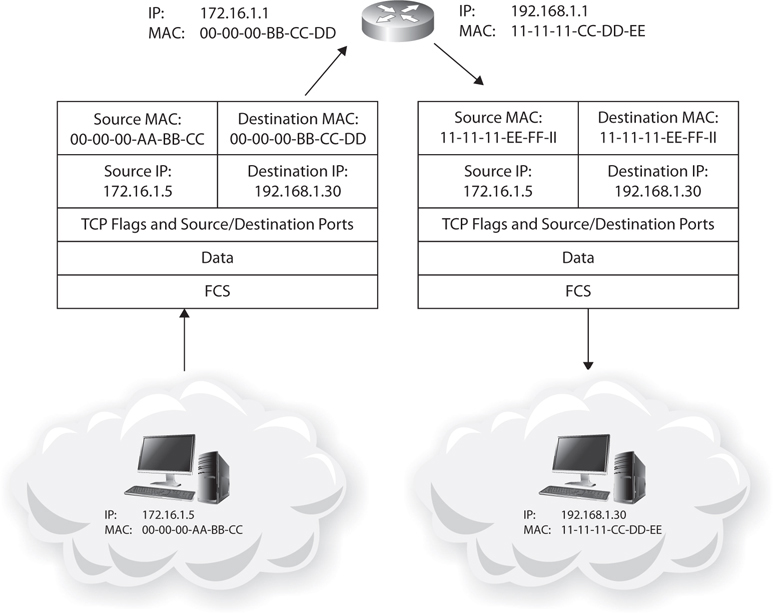

Here, Joe’s computer needs to find a local address to deliver the packet to (because every computer is only concerned with, and capable of, sending a message to a machine inside its own subnet). It knows its own physical address but has no idea what physical address belongs to the system that will be answering. The IP address of this device is known—thanks to DNS—but the local, physical address is not. To gain that, Joe’s computer employs yet another protocol, ARP, to figure that out, and when that answer comes back (in this case, the gateway, or local router port), the frame can then be built and sent out to the network (for you network purists out there screaming that ARP isn’t needed for networks that the host already knows should be sent to the default gateway, calm down—it’s just an introductory paragraph). This process of asking for a local address to forward the frame to is repeated at every link in the network chain: every time the frame is received by a router along the way, the router strips off the frame header and trailer and rebuilds it based on new ARP answers for that network chain. Finally, when the frame is received by the destination, the server will keep stripping off and handing up bit, frame, packet, segment, and data PDUs, which should result—if everything has worked right—in the return of a SYN/ACK message to get things going.
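The "built from the inside out" idea can be sketched as nested containers: data goes inside a segment, the segment inside a packet, and the packet inside a frame. The classes below are a deliberately stripped-down model (real headers carry many more fields), and every class and function name is an assumption for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:          # Transport layer PDU
    src_port: int
    dst_port: int
    data: bytes


@dataclass(frozen=True)
class Packet:           # Internet layer PDU
    src_ip: str
    dst_ip: str
    segment: Segment


@dataclass(frozen=True)
class Frame:            # Network Access layer PDU
    src_mac: str
    dst_mac: str
    packet: Packet


def encapsulate(data: bytes, src_port: int, dst_port: int,
                src_ip: str, dst_ip: str,
                src_mac: str, dst_mac: str) -> Frame:
    """Wrap application data on the way down the sender's stack."""
    segment = Segment(src_port, dst_port, data)
    packet = Packet(src_ip, dst_ip, segment)
    return Frame(src_mac, dst_mac, packet)


def decapsulate(frame: Frame) -> bytes:
    """Strip frame, packet, and segment on the way up the receiver's stack."""
    return frame.packet.segment.data
```

The receiving side simply reverses the wrapping, which is why the server ends up holding the same data Joe's browser handed down.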

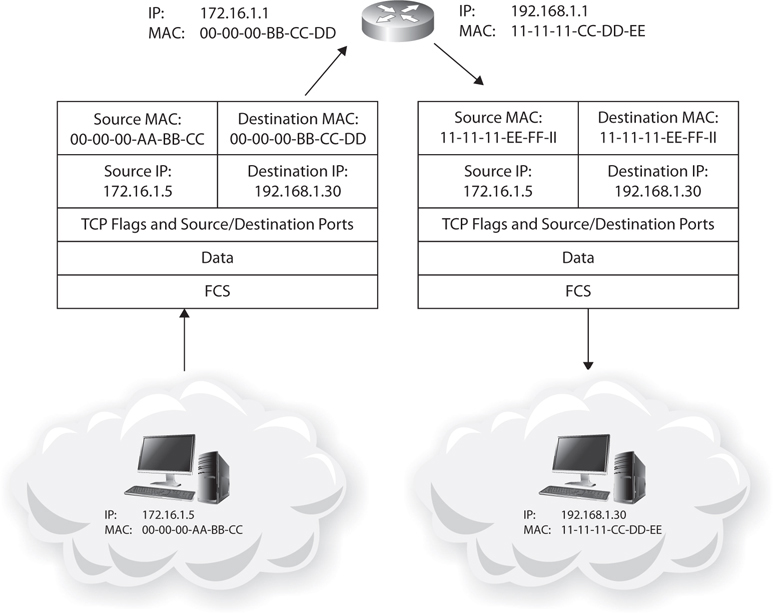

To see this in action, take a quick look at the frames at each link in the chain from Joe’s computer to a server in Figure 1-4. Note that the frame is ripped off and replaced by a new one to deliver the message within the new network; the source and destination MAC addresses will change, but IPs never do.

Figure 1-4 Ethernet frames in transit
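A minimal sketch of what a router does to the addressing at each hop, assuming a simplified frame that carries both address pairs. The `Frame` type and `forward` function are hypothetical names, and the sketch ignores NAT, TTL changes, and checksums.

```python
from typing import NamedTuple


class Frame(NamedTuple):
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str
    payload: bytes


def forward(frame: Frame, router_out_mac: str, next_hop_mac: str) -> Frame:
    """Rebuild the frame for the next link.

    The router discards the old MAC addressing and writes its own outgoing
    interface as the source and the next hop as the destination. The IP
    addresses and payload are carried over untouched.
    """
    return frame._replace(src_mac=router_out_mac, dst_mac=next_hop_mac)
```

Comparing the frame before and after `forward` shows exactly the pattern in the figure: new MAC pair on every link, same IP pair end to end.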

Although tons and tons of stuff has been left out—such as port and sequence numbers, which will be of great importance to you later—this touches on all the basics for TCP/IP networking. We’ll be covering it over and over again, and in more detail, throughout this book, so don’t panic if it’s not all registering with you yet. Patience, Grasshopper—this is just an introduction, remember?
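Since port and sequence numbers were skipped in the walkthrough, here is a small simulation of the three-step handshake that shows where they fit. It only models the bookkeeping (no traffic is sent), and the `Segment` class, function name, ports, and initial sequence numbers are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

SEQ_SPACE = 2 ** 32  # TCP sequence numbers are 32-bit and wrap around


@dataclass(frozen=True)
class Segment:
    src_port: int
    dst_port: int
    flags: frozenset
    seq: int
    ack: int = 0  # only meaningful when the ACK flag is set


def three_way_handshake(client_port: int, server_port: int,
                        client_isn: int, server_isn: int) -> list:
    """Return the SYN, SYN/ACK, and ACK segments in the order they are sent."""
    syn = Segment(client_port, server_port, frozenset({"SYN"}), seq=client_isn)
    # The server acknowledges the client's SYN by asking for client_isn + 1.
    syn_ack = Segment(server_port, client_port, frozenset({"SYN", "ACK"}),
                      seq=server_isn, ack=(syn.seq + 1) % SEQ_SPACE)
    # The client acknowledges the server's SYN the same way.
    ack = Segment(client_port, server_port, frozenset({"ACK"}),
                  seq=syn_ack.ack, ack=(syn_ack.seq + 1) % SEQ_SPACE)
    return [syn, syn_ack, ack]
```

Each side's acknowledgment number is the other side's sequence number plus one, which is how both ends confirm they heard each other before any data moves.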

One final thing I should add here before moving on, however, is the concept of network security zones. The idea behind this is that you can divide your networks in such a way that you have the opportunity to manage systems with specific security actions to help control inbound and outbound traffic. You’ve probably heard of these before, but I’d be remiss if I didn’t add them here. The five zones ECC defined are as follows:

• Internet: Outside the boundary and uncontrolled. You don’t apply security policies to the Internet. Governments try to all the time, but your organization can’t.

• Internet DMZ: The acronym DMZ (for Demilitarized Zone) comes from the military and refers to a section of land between two adversarial parties where there are no weapons or fighting. The idea is you can see an adversary coming across the DMZ and have time to work up a defense. In networking, the idea is the same: it’s a controlled buffer network between you and the uncontrolled chaos of the Internet.

• Production Network Zone: A very restricted zone that strictly controls direct access from uncontrolled zones. The PNZ doesn’t hold users.

• Intranet Zone: A controlled zone with few heavy restrictions. This is not to say everything is wide open on the Intranet Zone, but communication requires fewer strict controls internally.

• Management Network Zone: Usually an area you’d find rife with VLANs and maybe controlled via IPsec and such. This is a highly secured zone with very strict policies.

Security Basics

If there were a subtitle to this section, I would have entitled it “Ceaseless Definition Terms Necessary for Only a Few Questions on the Exam.” There are tons of these, and I gave serious thought to skipping them all and just leaving you to the glossary. However, because I’m in a good mood and, you know, I promised my publisher I’d cover everything, I’ll give it a shot here. And, at least for some of these, I’ll try to do so using contextual clues in a story.

Bob and Joe used to be friends in college, but had a falling out over doughnuts. Bob insisted Krispy Kreme’s were better, but Joe was a Dunkin fan, and after much yelling and tossing of fried dough they became mortal enemies. After graduation they went their separate ways exploring opportunities as they presented themselves. Eventually Bob became Security Guy Bob, in charge of security for Orca Pig (OP) Industries, Inc., while Joe made some bad choices and went on to become Hacker Joe.

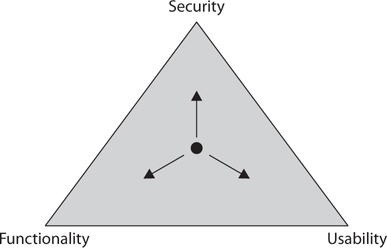

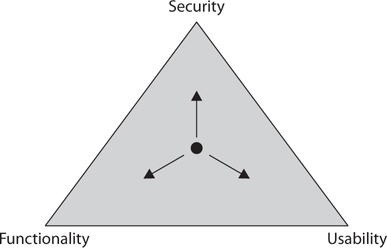

After starting, Bob noticed most decisions at OP were made in favor of usability over functionality and security. He showed a Security, Functionality, and Usability triangle (see Figure 1-5) to upper management, visually displaying that moving toward one of the three lessened the other two, and security was sure to suffer long term. Management noted Bob’s concerns and summarily dismissed them as irrational, as budgets were tight and business was good.

Figure 1-5 The Security, Functionality, and Usability triangle

One day a few weeks later, Hacker Joe woke up and decided he wanted to be naughty. He went out searching for a target of hack value, so he wouldn’t waste time on something that didn’t matter. In doing so, he found OP, Inc., and smiled when he saw Bob’s face on the company directory. He searched and found a target, researching to see if it had any weaknesses, such as software flaws or logic design errors. A particular vulnerability did show up on the target, so Joe researched attack vectors and discovered (through his super-secret hacking background contacts) an attack the developer of some software on the target apparently didn’t even know about, since they hadn’t released any kind of security patch or fix to address the problem. This zero-day attack vector required a specific piece of exploit code he could inject through a hacking tactic he thought would work. After obfuscating this payload and embedding it in an attack, he started.

After pulling off the successful exploit and owning the box, Joe explored what additional access the machine could grant him. He discovered other targets and vulnerabilities, and successfully configured access to all. His daisy chaining of network access then gave him options to set up several machines on multiple networks he could control remotely to execute really whatever he wanted. These bots could be accessed any time he wanted, so Joe decided to prep for more carnage. He also searched publicly available databases and social media for personally identifiable information (PII) about Bob, and posted his findings. After this doxing effort, Joe took a nap, dreaming about what embarrassment Bob would have rain down on him the next day.

After discovering PII posts about himself, Bob worried that something was amiss, and wondered if his old nemesis was back and on the attack. He did some digging and discovered Joe’s attack from the previous evening, and immediately engaged his Incident Response Team (IRT) to identify, analyze, prioritize, and resolve the incident. The team first reviewed detection and quickly analyzed the exploitation, in order to notify appropriate stakeholders. The team then worked to contain the exploitation, eradicate residual back doors and such, and coordinate recovery for any lost data or services. After following this incident management process, the team provided post-incident reporting and lessons learned to management.

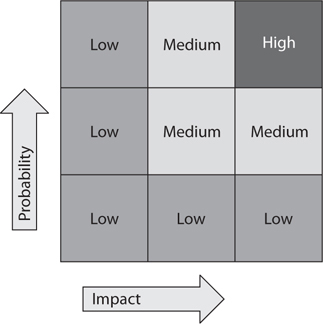

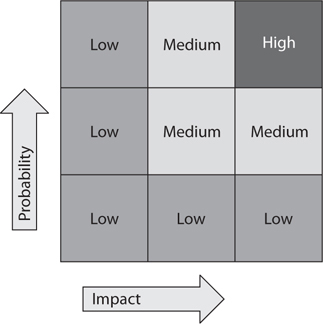

Post-incident reporting suggested to management they focus more attention on security and, in one section of the report in particular, that they adopt means to identify what risks are present and quantify them on a measurement scale. This risk management approach would allow them to come up with solutions to mitigate, eliminate, or accept the identified risks (see Figure 1-6 for a sample risk analysis matrix).

Figure 1-6 Risk analysis matrix
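To make "quantify them on a measurement scale" concrete, here is one common way a likelihood-by-impact matrix can be expressed in code. The three-level scale, the multiplication, and the cutoffs are all assumptions for illustration; they are not taken from Figure 1-6, and real organizations tune these to their own risk appetite.

```python
# Hypothetical three-level scale; many organizations use five levels instead.
LEVELS = {"low": 1, "medium": 2, "high": 3}


def risk_rating(likelihood: str, impact: str) -> str:
    """Combine likelihood and impact into a single qualitative rating."""
    score = LEVELS[likelihood] * LEVELS[impact]  # possible scores: 1, 2, 3, 4, 6, 9
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"
```

With these assumed cutoffs, a low-likelihood but high-impact risk lands at "medium," which is the kind of call a matrix forces you to make explicitly instead of by gut feel.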

Identifying organizational assets, threats to those assets, and their vulnerabilities would allow the company to explore which countermeasures security personnel could put into place to minimize risks as much as possible. These security controls would then greatly increase the security posture of the systems.

Some of these controls were to be put into place to prevent errors or incidents from occurring in the first place, some were to identify an incident had occurred or was in progress, and some were designed for after the event to limit the extent of damage and aid swift recovery. These preventative, detective, and corrective controls can work together to reduce Joe’s ability to further his side of the great Doughnut Fallout.

This effort spurred a greater focus on overall preparation and security. Bob’s quick action averted what could have been a total disaster, but everyone involved saw the need for better planning and preparation. Bob and management kicked off an effort to identify the systems and processes that were critical for operations. This Business Impact Analysis (BIA) included measurements of the maximum tolerable downtime (MTD), which provided a means to prioritize the recovery of assets should the worst occur. Bob also branched out and created Orca Pig’s first set of plans and procedures to follow in the event of a failure or a disaster—security related or not—to get business services back up and running. His business continuity plan (BCP) included a disaster recovery plan (DRP), addressing exactly what to do to recover any lost data or services.

Bob also did some research his management should have, and discovered some additional actions and groovy acronyms they should know and pay attention to. When putting numbers and value to his systems and services, the ALE (annualized loss expectancy) turned out to be the product of the ARO (annual rate of occurrence) and the SLE (single loss expectancy). For his first effort, he looked at one system and determined its worth, including the cost for return to service and any lost revenue during downtime, was $120,000. Bob made an educated guess on the percentage of loss for this asset if a specific threat was actually realized and determined the exposure factor (EF) turned out to be 25 percent. He multiplied this by the asset value and came up with an SLE of $30,000 ($120,000 × 25%). He then figured out what he felt would be the probability this would occur in any particular 12-month period. Given statistics he garnered from similarly protected businesses, he thought it could occur once every five years, which gave him an ARO of 0.2 (one occurrence / five years). By multiplying the estimate of a single loss by the number of times it was likely to occur in a year, Bob could generate the ALE for this asset at $6,000 ($30,000 × 0.2). Repeating this across Orca Pig’s assets turned out to provide valuable information for planning, preparation, and budgeting.
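The arithmetic is easy to check in a few lines of code. The sketch below uses the inputs from Bob's example (a $120,000 asset, a 25 percent exposure factor, and one expected occurrence every five years); the function and variable names are made up for illustration.

```python
def single_loss_expectancy(asset_value: float, exposure_factor: float) -> float:
    """SLE = asset value x exposure factor (EF given as a fraction, e.g. 0.25)."""
    return asset_value * exposure_factor


def annualized_loss_expectancy(sle: float, aro: float) -> float:
    """ALE = SLE x ARO (expected occurrences per year)."""
    return sle * aro


asset_value = 120_000      # worth of the system, including downtime costs
exposure_factor = 0.25     # 25 percent of the asset lost per incident
aro = 1 / 5                # one occurrence every five years = 0.2 per year

sle = single_loss_expectancy(asset_value, exposure_factor)
ale = annualized_loss_expectancy(sle, aro)

print(f"SLE = ${sle:,.0f}")   # SLE = $30,000
print(f"ARO = {aro}")         # ARO = 0.2
print(f"ALE = ${ale:,.0f}")   # ALE = $6,000
```

Running the same two functions over a list of assets is one simple way to rank where the security budget would do the most good.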

At the end of this effort week, Bob relaxed with a Maker’s Mark and an Arturo Fuente on his back porch, smiling at all the good security work he’d done and enjoying the bonus his leadership provided as a reward. Joe stewed in his apartment, angry that his work would now be exponentially harder. But while Bob took the evening to rest on his laurels, Joe went back to work, scratching and digging at OP’s defenses. “One day I’ll find a way in. Just wait and see. I won’t stop. Ever.”

Now, wasn’t that better than just reading definitions? Sure there were a few leaps there, and Bob surely wouldn’t be the guy doing ALE measurements, but it’s better than trying to explain all that otherwise. Every italicized word in this section could possibly show up on your exam, and now you can just remember this little story and you’ll be ready for almost anything. But although this was fun, and I did consider continuing it throughout the remainder of this book (fiction is so much more entertaining), some of this needs a little more than a passing italics reference, so we’ll break here and go back to more “expected” writing.

CIA

Another bedrock in any security basics discussion is the holy trinity of IT security: confidentiality, integrity, and availability (CIA). Whether you’re an ethical hacker or not, these three items constitute the hallmarks of security we all strive for. You’ll need to be familiar with two aspects of each term in order to achieve success as an ethical hacker as well as on the exam: what the term itself means and which attacks are most commonly associated with it.

Confidentiality, addressing the secrecy and privacy of information, refers to the measures taken both to prevent disclosure of information or data to unauthorized individuals or systems and to ensure the proper disclosure of information to those who are authorized to receive it. Confidentiality for the individual is a must, considering its loss could result in identity theft, fraud, and loss of money. For a business or government agency, it could be even worse. The use of passwords within some form of authentication is by far the most common measure taken to ensure confidentiality, and attacks against passwords are, amazingly enough, the most common confidentiality attacks.

For example, your logon to a network usually consists of a user ID and a password, which is designed to ensure only you have access to that particular device or set of network resources. If another person were to gain your user ID and password, they would have unauthorized access to resources and could masquerade as you throughout their session. Although the user ID and password combination is by far the most common method used to enforce confidentiality, numerous other options are available, including biometrics and smartcards.

The Stone Left Unturned

Security professionals deal with, and worry about, risk management a lot. We create and maintain security plans, deal with endless audits, create and monitor ceaseless reporting to government, and employ bunches of folks just to maintain “quality” as it applies to the endless amounts of processes and procedures we have to document. Yet with all this effort, there always seems to be something left out—some stone left unturned that a bad guy takes advantage of.

Don’t take my word for it, just check the news and the statistics. Seemingly every day there is a news story about a major data breach somewhere. OPM lost millions of PII records to hackers. eBay had 145 million user accounts compromised. JPMorgan Chase had over 70 million home and business records compromised, and the list goes on and on. In 2015, per Breach Level Index (http://breachlevelindex.com) statistics, over 3 billion data records—that we know about—were stolen, and the vast majority were lost to a malicious outsider (not accidental, state sponsored, or the always-concerning disgruntled employee malicious insider).

All this leads to a couple questions. First, IT security professionals must be among the most masochistic people on the planet. Why volunteer to do a job where you know, somewhere along the line, you’re more than likely going to fail at it and, at the very least, be yelled at over it? Second, if there are so many professionals doing so much work and breaches still happen, is there something outside their control that leads to these failures? As it turns out, the answer is “Not always, but oftentimes YES.”

Sure, there were third-party failures in home-grown web applications to blame, and of course there were default passwords left on outside-facing machines. There were also several legitimate attacks that occurred because somebody, somewhere didn’t take the right security measure to protect data. But, at least for 2015, phishing and social engineering played a large role in many cases, and zero-day attacks represented a huge segment of the attack vectors. Can security employees be held accountable for users not paying attention to the endless array of annual security training shoved down their throats advising them against clicking on e-mail links? Should your security engineer be called onto the carpet because employees still, still, just give their passwords to people on the phone or over e-mail when they’re asked for them? And I’m not even going to touch zero day—if we could predict stuff like that, we’d all be lottery winners.

Security folks can, and should, be held to account for ignoring due diligence in implementing security on their networks. If a system gets compromised because we were lax in providing proper monitoring and oversight, and it leads to corporate-wide issues, we should be called to account. But can we ever uncover all those stones during our security efforts across an organization? Even if some of those stones are based on human nature? I fear the answer is no. Because some of them won’t budge.

Integrity refers to the methods and actions taken to protect the information from unauthorized alteration or revision—whether the data is at rest or in transit. In other words, integrity measures ensure the data sent from the sender arrives at the recipient with no alteration. For example, imagine a buying agent sending an e-mail to a customer offering the price of $300. If an attacker somehow has altered the e-mail and changed the offering price to $3,000, the integrity measures have failed, and the transaction will not occur as intended, if at all. Oftentimes, attacks on the integrity of information are designed to cause embarrassment or legitimate damage to the target.

Integrity in information systems is often ensured through the use of a hash. A hash function is a one-way mathematical algorithm (such as MD5 and SHA-1) that generates a specific, fixed-length number (known as a hash value). When a user or system sends a message, a hash value is also generated to send to the recipient. If even a single bit is changed during the transmission of the message, instead of showing the same output, the hash function will calculate and display a greatly different hash value on the recipient system. Depending on the way the controls within the system are designed, this would result in either a retransmission of the message or a complete shutdown of the session.
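A short sketch shows the effect. It uses Python's standard hashlib module and, as a substitution, SHA-256 rather than the MD5 and SHA-1 algorithms named above, since both of those have known collision weaknesses. The message text and helper names are made up for illustration.

```python
import hashlib


def digest(message: bytes) -> str:
    """Return the SHA-256 hash value of a message as a hex string."""
    return hashlib.sha256(message).hexdigest()


def flip_lowest_bit(message: bytes) -> bytes:
    """Simulate tampering in transit by flipping one bit of the last byte."""
    return message[:-1] + bytes([message[-1] ^ 0x01])


original = b"Offer price: $300"
sent_hash = digest(original)            # computed by the sender

tampered = flip_lowest_bit(original)    # differs from the original by one bit
received_hash = digest(tampered)        # recomputed by the recipient

print("hash sent:    ", sent_hash)
print("hash received:", received_hash)
print("integrity intact?", sent_hash == received_hash)
```

The two hex strings have the same fixed length but share almost nothing else, which is exactly what lets the recipient spot the change.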

Availability is probably the simplest, easiest-to-understand segment of the security triad, yet it should not be overlooked. It refers to the communications systems and data being ready for use when legitimate users need them. Many methods are used for availability, depending on whether the discussion is about a system, a network resource, or the data itself, but they all attempt to ensure one thing—when the system or data is needed, it can be accessed by the appropriate personnel.

Attacks against availability almost always fall into the “denial-of-service” realm. Denial-of-service (DoS) attacks are designed to prevent legitimate users from having access to a computer resource or service and can take many forms. For example, attackers could attempt to use all available bandwidth to the network resource, or they may actively attempt to destroy a user’s authentication method. DoS attacks can also be much simpler than that—unplugging the power cord is the easiest DoS in history!

Access Control Systems

While we’re on the subject of computer security, I think it may be helpful to step back and look at how we all got here, and take a brief jog through some of the standards and terms that came out of all of it. In the early days of computing and networking, it’s pretty safe to say security wasn’t high on anyone’s to-do list. As a matter of fact, in most instances security wasn’t even an afterthought, and unfortunately it wasn’t until things started getting out of hand that anyone really started putting any effort into it. The sad truth about a lot of security is that it came out of a reactionary stance, and very little thought was put into it as a proactive effort—until relatively recently, anyway.

This is not to say nobody tried at all. As a matter of fact, in 1983 some smart guys at the U.S. Department of Defense saw the future need for protection of information (government information, that is) and worked with the NSA to create the National Computer Security Center (NCSC). This group got together and created all sorts of security manuals and steps, and published them in a book series known as the “Rainbow Series.” The centerpiece of this effort came out as the “Orange Book,” which held something known as the Trusted Computer System Evaluation Criteria (TCSEC).

TCSEC was a United States Government Department of Defense (DoD) standard, with a goal to set basic requirements for testing the effectiveness of computer security controls built into a computer system. The idea was simple: if your computer system (network) was going to handle classified information, it needed to comply with basic security settings. TCSEC defined how to assess whether these controls were in place, and how well they worked. The settings, evaluations, and notices in the Orange Book (for their time) were well thought out and proved their worth in the test of time, surviving all the way up to 2005. However, as anyone in security can tell you, nothing lasts forever.

TCSEC eventually gave way to the Common Criteria for Information Technology Security Evaluation (also known as Common Criteria, or CC). Common Criteria had actually been around since 1999, and finally took precedence in 2005. It provided a way for vendors to make claims about their in-place security by following a set standard of controls and testing methods, resulting in something called an Evaluation Assurance Level (EAL). For example, a vendor might create a tool, application, or computer system and desire to make a security declaration. They would then follow the controls and testing procedures to have their system tested at the EAL (Levels 1–7) they wished to have. Assuming the test was successful, the vendor could claim “Successfully tested at EAL-4.”

Common Criteria is, basically, a testing standard designed to reduce or remove vulnerabilities from a product before it is released. Besides EAL, three other terms are associated with this effort you’ll need to remember:

• Target of evaluation (TOE) What is being tested

• Security target (ST) The documentation describing the TOE and security requirements

• Protection profile (PP) A set of security requirements specifically for the type of product being tested

While there’s a whole lot more to it, suffice it to say CC was designed to provide an assurance that the system is designed, implemented, and tested according to a specific security level. It’s used as the basis for Government certifications and is usually tested for U.S. Government agencies.

Lastly in our jaunt through terminology and history regarding security and testing, we have a couple terms to deal with. One of these is the overall concept of access control itself. Access control basically means restricting access to a resource in some selective manner. There are all sorts of terms you can fling about in discussing this to make you sound really intelligent (subject, initiator, authorization, and so on), but I’ll leave all that for the glossary. Here, we’ll just talk about a couple of ways of implementing access control: mandatory and discretionary.

Mandatory access control (abbreviated to MAC) is a method of access control where security policy is controlled by a security administrator: users can’t set access controls themselves. In MAC, the operating system restricts the ability of an entity to access a resource (or to perform some sort of task within the system). For example, an entity (such as a process) might attempt to access or alter an object (such as files, TCP or UDP ports, and so on). When this occurs, a set of security attributes (set by the policy administrator) is examined by an authorization rule. If the appropriate attributes are in place, the action is allowed.
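As a rough sketch of that authorization rule, the example below models the security attributes as sensitivity labels and allows a read only when the subject's clearance is at least as high as the object's classification. The label names, the classes, and the "clearance must dominate classification" rule are assumptions picked for illustration; real MAC implementations carry richer attributes and rules.

```python
from dataclasses import dataclass

# Hypothetical labels, ordered from least to most sensitive. In MAC these
# are assigned by the policy administrator, never by ordinary users.
LEVELS = {"public": 0, "confidential": 1, "secret": 2}


@dataclass(frozen=True)
class Subject:
    name: str
    clearance: str


@dataclass(frozen=True)
class Resource:
    name: str
    classification: str


def mac_allows_read(subject: Subject, resource: Resource) -> bool:
    """Authorization rule: clearance must be at least the classification."""
    return LEVELS[subject.clearance] >= LEVELS[resource.classification]


backup_job = Subject("backup_job", clearance="confidential")
payroll = Resource("payroll.db", classification="secret")
handbook = Resource("handbook.pdf", classification="public")

print(mac_allows_read(backup_job, payroll))    # False
print(mac_allows_read(backup_job, handbook))   # True
```

Note that nothing in the sketch lets the subject change its own clearance or the resource's label; that restriction is the "mandatory" part.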

By contrast, discretionary access control (DAC) puts a lot of this power in the hands of the users themselves. DAC allows users to set access controls on the resources they own or control. Defined by the TCSEC as a means of “restricting access to objects based on the identity of subjects and/or groups to which they belong,” the idea is controls are discretionary in the sense that a subject with a certain access permission is capable of passing that permission (perhaps indirectly) on to any other subject (unless restrained by mandatory access control). A couple of examples of DAC include NTFS permissions in Windows machines and Unix’s use of users, groups, and read-write-execute permissions.
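The Unix case is easy to try for yourself. The sketch below, which assumes a Unix-like system, creates a scratch file and then, acting as the file's owner, sets its read-write-execute bits using Python's standard os and stat modules. The particular permission choice (mode 640) is just an example.

```python
import os
import stat
import tempfile

# Create a scratch file; whoever runs the script becomes its owner.
with tempfile.NamedTemporaryFile(delete=False) as handle:
    path = handle.name

# The owner decides who gets access: read/write for the owner,
# read-only for the group, and nothing for everyone else (mode 0o640).
os.chmod(path, stat.S_IRUSR | stat.S_IWUSR | stat.S_IRGRP)

file_mode = os.stat(path).st_mode
mode = stat.S_IMODE(file_mode)            # just the permission bits
permissions = stat.filemode(file_mode)    # the familiar ls -l style string

print(oct(mode))
print(permissions)

os.remove(path)   # clean up the scratch file
```

No administrator was involved in that decision, which is the whole point of "discretionary."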

Security Policies

When I saw EC-Council dedicating so much real estate in its writing to security policies, I groaned in agony. Any real practitioner of security will tell you policy is a great thing, worthy of all the time, effort, sweat, cursing, and mind-numbing days staring at a template, if only you could get anyone to pay attention to it. Security policy (when done correctly) can and should be the foundation of a good security function within your business. Unfortunately, it can also turn into a horrendous amount of memorization and angst for certification test takers because it’s not always clear.

A security policy can be defined as a document describing the security controls implemented in a business to accomplish a goal. Perhaps an even better way of putting it would be to say the security policy defines exactly what your business believes is the best way to secure its resources. Different policies address all sorts of things, such as defining user behavior within and outside the system, preventing unauthorized access or manipulation of resources, defining user rights, preventing disclosure of sensitive information, and addressing legal liability for users and partners. There are worlds of different security policy types, with some of the more common ones identified here:

• Access Control Policy This identifies the resources that need protection and the rules in place to control access to those resources.

• Information Security Policy This identifies to employees what company systems may be used for, what they cannot be used for, and what the consequences are for breaking the rules. Generally employees are required to sign a copy before accessing resources. Versions of this policy are also known as an Acceptable Use Policy.

• Information Protection Policy This defines information sensitivity levels and who has access to those levels. It also addresses how data is stored, transmitted, and destroyed.

• Password Policy This defines everything imaginable about passwords within the organization, including length, complexity, maximum and minimum age, and reuse.

• E-mail Policy Sometimes also called the E-mail Security Policy, this addresses the proper use of the company e-mail system.

• Information Audit Policy This defines the framework for auditing security within the organization. When, where, how, how often, and sometimes even who conducts information security audits are described here.
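Of the policies listed, the Password Policy is the one most often enforced directly in code. The sketch below checks a candidate password against length, complexity, and reuse rules. The specific values (a 12-character minimum and four required character classes) and the function name are assumptions for illustration, and password age is left out because it depends on stored timestamps.

```python
import string

# Hypothetical policy values; a real policy document would define these.
MIN_LENGTH = 12
REQUIRED_CLASSES = {
    "lowercase": string.ascii_lowercase,
    "uppercase": string.ascii_uppercase,
    "digit": string.digits,
    "symbol": string.punctuation,
}


def policy_violations(password: str, previous_passwords=()) -> list[str]:
    """Return a list of policy rules the password breaks (empty if none)."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    for name, chars in REQUIRED_CLASSES.items():
        if not any(c in chars for c in password):
            problems.append(f"no {name} character")
    # Simplification: a real system would compare salted hashes,
    # never stored plaintext passwords.
    if password in previous_passwords:
        problems.append("reuses a previous password")
    return problems


print(policy_violations("doughnut"))
print(policy_violations("Tr1cky-Doughnut!"))
print(policy_violations("Tr1cky-Doughnut!", previous_passwords=["Tr1cky-Doughnut!"]))
```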

There are many other types of security policies, and we could go on and on, but you get the idea. Most policies are fairly easy to understand simply based on the name. For example, it shouldn’t be hard to determine that the Remote Access Policy identifies who can have remote access to the system and how they go about getting that access. Other easy-to-recognize policies include User Account, Firewall Management, Network Connection, and Special Access.

Lastly, and I wince in including this because I can hear you guys in the real world grumbling already, but believe it or not, EC-Council also looks at policy through the prism of how tough it is on users. A promiscuous policy is basically wide open, whereas a permissive policy blocks only things that are known to be naughty or dangerous. The next step up is a prudent policy, which provides maximum security but allows some potentially dangerous, and even known dangerous, services because of business needs. Finally, a paranoid policy locks everything down, not even allowing the user to open so much as an Internet browser.

Introduction to Ethical Hacking

Ask most people to define the term hacker, and they’ll instantly picture a darkened room, several monitors ablaze with green text scrolling across the screen, and a shady character in the corner furiously typing away on a keyboard in an effort to break or steal something. Unfortunately, a lot of that is true, and a lot of people worldwide actively participate in these activities for that very purpose. However, it’s important to realize there are differences between the good guys and the bad guys in this realm. It’s the goal of this section to help define the two groups for you, as well as provide some background on the basics.

Whether for noble or bad purposes, the art of hacking remains the same. Using a specialized set of tools, techniques, knowledge, and skills to bypass computer security measures allows someone to “hack” into a computer or network. The purpose behind their use of these tools and techniques is really the only thing in question. Whereas some use these tools and techniques for personal gain or profit, the good guys practice them in order to better defend their systems and, in the process, provide insight on how to catch the bad guys.

Hacking Terminology

Like any other career field, hacking (ethical hacking) has its own lingo and a myriad of terms to know. Hackers themselves, for instance, have various terms and classifications to fall into. For example, you may already know that a script kiddie is a person uneducated in hacking techniques who simply makes use of freely available (but oftentimes old and outdated) tools and techniques on the Internet. And you probably already know that a phreaker is someone who manipulates telecommunications systems in order to make free calls. But there may be a few terms you’re unfamiliar with that this section may be able to help with. Maybe you simply need a reference point for test study, or maybe this is all new to you; either way, perhaps there will be a nugget or two here to help on the exam.

In an attempt to avoid a 100-page chapter of endless definitions and to attempt to assist you in maintaining your sanity in studying for this exam, we’ll stick with the more pertinent information you’ll need to remember, and I recommend you peruse the glossary at the end of this book for more information. You’ll see these terms used throughout the book anyway, and most of them are fairly easy to figure out on your own, but don’t discount the definitions you’ll find in the glossary. Besides, I worked really hard on the glossary—it would be a shame if it went unnoticed.

Hacker Classifications: The Hats

You can categorize a hacker in countless ways, but the “hat” system seems to have stood the test of time. I don’t know if that’s because hackers like Western movies or we’re all just fascinated with cowboy fashion, but it’s definitely something you’ll see over and over again on your exam. The hacking community in general can be categorized into three separate classifications: the good, the bad, and the undecided. In the world of IT security, this designation is given as a hat color and should be fairly easy for you to keep track of.

• White hats Considered the good guys, these are the ethical hackers, hired by a customer for the specific goal of testing and improving security or for other defensive purposes. White hats are well respected and don’t use their knowledge and skills without prior consent. White hats are also known as security analysts.

• Black hats Considered the bad guys, these are the crackers, illegally using their skills for either personal gain or malicious intent. They seek to steal (copy) or destroy data and to deny access to resources and systems. Black hats do not ask for permission or consent.

• Gray hats The hardest group to categorize, these hackers are neither good nor bad. Generally speaking, there are two subsets of gray hats—those who are simply curious about hacking tools and techniques and those who feel like it’s their duty, with or without customer permission, to demonstrate security flaws in systems. In either case, hacking without a customer’s explicit permission and direction is usually a crime.

While we’re on the subject, another subset of this community uses its skills and talents to put forward a cause or a political agenda. These people hack servers, deface websites, create viruses, and generally wreak all sorts of havoc in cyberspace under the assumption that their actions will force some societal change or shed light on something they feel to be a political injustice. It’s not some new anomaly in human nature: people have been protesting things since the dawn of time, and the protest has just moved from picket signs and marches to bits and bytes. In general, regardless of the intentions, acts of “hacktivism” are usually illegal in nature.

Another class of hacker borders on the insane. Some hackers are so driven, so intent on completing their task, they are willing to risk everything to pull it off. Whereas we, as ethical hackers, won’t touch anything until we’re given express consent to do so, these hackers are much like hacktivists and feel that their reason for hacking outweighs any potential punishment. Even willing to risk jail time for their activities, so-called suicide hackers are the truly scary monsters in the closet. These guys work in a scorched-earth mentality and do not care about their own safety or freedom, not to mention anyone else’s.

Attack Types

Another area for memorization in our stroll through this introduction concerns the various types of attacks a hacker could attempt. Most of these are fairly easy to identify and seem, at times, fairly silly to even categorize. After all, do you care what the attack type is called if it works for you? For this exam, EC-Council broadly defines all these attack types in four categories.

• Operating system (OS) attacks Generally speaking, these attacks target the common mistake many people make when installing operating systems—accepting and leaving all the defaults. Administrator accounts with no passwords, all ports left open, and guest accounts (the list could go on forever) are examples of settings the installer may forget about. Additionally, operating systems are never released fully secure—they can’t be, if you ever plan on releasing them within a timeframe of actual use—so the potential for an old vulnerability in newly installed operating systems is always a plus for the ethical hacker.

• Application-level attacks These are attacks on the actual programming code and software logic of an application. Although most people are cognizant of securing their OS and network, it’s amazing how often they discount the applications running on their OS and network. Many applications on a network aren’t tested for vulnerabilities as part of their creation and, as such, have many vulnerabilities built into them. Applications on a network are a gold mine for most hackers.

• Shrink-wrap code attacks These attacks take advantage of the built-in code and scripts most off-the-shelf applications come with. The old refrain “Why reinvent the wheel?” is often used to describe this attack type. Why spend time writing code to attack something when you can buy it already “shrink-wrapped”? These scripts and code pieces are designed to make installation and administration easier but can lead to vulnerabilities if not managed appropriately.

• Misconfiguration attacks These attacks take advantage of systems that are, on purpose or by accident, not configured appropriately for security. Remember the triangle earlier and the maxim “As security increases, ease of use and functionality decrease”? This type of attack takes advantage of the administrator who simply wants to make things as easy as possible for the users. Perhaps to do so, the admin will leave security settings at the lowest possible level, enable every service, and open all firewall ports. It’s easier for the users but creates another gold mine for the hacker.

Hacking Phases

Regardless of the intent of the attacker (remember there are good guys and bad guys), hacking and attacking systems can sometimes be akin to a pilot and her plane. That’s right, I said “her.” My daughter is a search-and-rescue helicopter pilot for the U.S. Air Force, and because of this ultra-cool access, I get to talk with pilots from time to time. I often hear them say, when describing a mission or event they were on, that they just “felt” the plane or helicopter—that they just knew how it was feeling and the best thing to do to accomplish the goal, sometimes without even thinking about it.

I was talking to my daughter a while back and asked her about this human–machine relationship. She paused for a moment and told me that sure, it exists, and it’s uncanny to think about why pilot A did action B in a split-second decision. However, she cautioned, all that mystical stuff can never happen without all the up-front training, time, and procedures. Because the pilots followed a procedure and took their time up front, the decision making and “feel” of the machine gets to come to fruition.

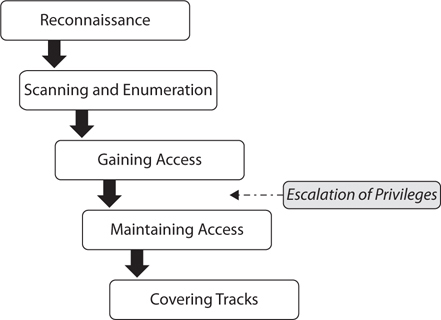

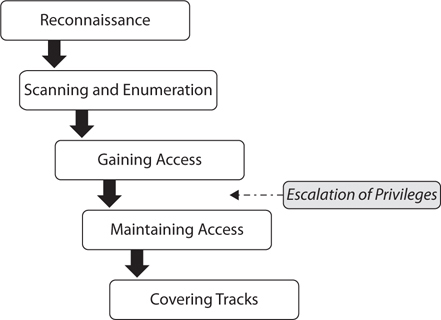

Hacking phases, as identified by EC-Council, are a great way to think about an attack structure for you, my hacking pilot trainee. I’m not saying you shouldn’t take advantage of opportunities when they present themselves just because they’re out of order (if a machine presents itself willingly and you refuse the attack, exclaiming, “But I haven’t reconned it yet!” I may have to slap you myself), but in general following the plan will produce quality results. Although there are many different terms for these phases and some of them run concurrently and continuously throughout a test, EC-Council has defined the standard hack as having five phases, shown in Figure 1-7. Whether the attacker is ethical or malicious, these five phases capture the full breadth of the attack.

Figure 1-7 Phases of ethical hacking

Reconnaissance is probably going to be the most difficult phase to understand for the exam, mainly because many people confuse some of its steps as being part of the next phase (scanning and enumeration). Reconnaissance is nothing more than the steps taken to gather evidence and information on the targets you want to attack. It can be passive in nature or active. Passive reconnaissance involves gathering information about your target without their knowledge, whereas active reconnaissance uses tools and techniques that may or may not be discovered but put your activities as a hacker at more risk of discovery. Another way of thinking about it is from a network perspective: active is that which purposefully puts packets, or specific communications, on a wire to your target, whereas passive does not.

For example, imagine your penetration test, also known as a pen test, has just started and you know nothing about the company you are targeting. Passively, you may simply watch the outside of the building for a couple of days to learn employee habits and see what physical security measures are in place. Actively, you may simply walk up to the entrance or guard shack and try to open the door (or gate). In either case, you’re learning valuable information, but with passive reconnaissance you aren’t taking any action to signify to others that you’re watching. Examples of actions that might be taken during this phase are social engineering, dumpster diving, and network sniffing—all of which are addressed throughout the remainder of this study guide.

In the second phase, scanning and enumeration, security professionals take the information they gathered in recon and actively apply tools and techniques to gather more in-depth information on the targets. This can be something as simple as running a ping sweep or a network mapper to see what systems are on the network, or as complex as running a vulnerability scanner to determine which ports may be open on a particular system. For example, whereas recon may have shown the network to have 500 or so machines connected to a single subnet inside a building, scanning and enumeration would tell you which ones are Windows machines and which ones are running FTP.
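At its simplest, "is this port open?" comes down to attempting a TCP connection and seeing whether it succeeds. The sketch below does that with Python's standard socket module. To keep it self-contained (and to avoid probing anything you don't have permission to test), it stands up its own throwaway listener on the loopback address and checks only that. The function name and timeout are assumptions for illustration; real scanners are far more capable.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 0.5) -> bool:
    """Attempt a full TCP connection; success means something is listening."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        probe.settimeout(timeout)
        return probe.connect_ex((host, port)) == 0


# Throwaway listener on the loopback interface, so the example only
# ever touches the local machine.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))     # port 0 asks the OS for any free port
listener.listen(1)
test_port = listener.getsockname()[1]

while_listening = port_is_open("127.0.0.1", test_port)
listener.close()
after_close = port_is_open("127.0.0.1", test_port)

print(f"port {test_port} while listening: {while_listening}")
print(f"port {test_port} after close:     {after_close}")
```

Looping that one function over a range of hosts and ports is the basic idea behind a connect scan.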

The third phase, as they say, is where the magic happens. This is the phase most people delightedly rub their hands together over, reveling in the glee they know they will receive from bypassing a security control. In the gaining access phase, true attacks are leveled against the targets enumerated in the second phase. These attacks can be as simple as accessing an open and nonsecured wireless access point and then manipulating it for whatever purpose, or as complex as writing and delivering a buffer overflow or SQL injection against a web application. The attacks and techniques used in this phase will be discussed throughout the remainder of this study guide.

In the fourth phase, maintaining access, hackers attempt to ensure they have a way back into the machine or system they’ve already compromised. Back doors are left open by the attacker for future use, especially if the system in question has been turned into a zombie (a machine used to launch further attacks from) or if the system is used for further information gathering—for example, a sniffer can be placed on a compromised machine to watch traffic on a specific subnet. Access can be maintained through the use of Trojans, rootkits, or any number of other methods.

In the final phase, covering tracks, attackers attempt to conceal their success and avoid detection by security professionals. Steps taken here consist of removing or altering log files, hiding files with hidden attributes or directories, and even using tunneling protocols to communicate with the system. If auditing is turned on and monitored, and often it is not, log files are an indicator of attacks on a machine. Clearing the log file completely is just as big an indicator to the security administrator watching the machine, so sometimes selective editing is your best bet. Another great method to use here is simply corrupting the log file itself—whereas a completely empty log file screams an attack is in progress, files get corrupted all the time, and, chances are, the administrator won’t bother trying to rebuild it. In any case, good pen testers are truly defined in this phase.
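Seen from the administrator's chair, the same indicators can be checked for automatically. The sketch below is a rough illustration only: it assumes each log line begins with an ISO 8601 timestamp followed by a space, and the function name `log_warnings` and the one-hour gap threshold are hypothetical choices of mine, not anything a real logging product defines.

```python
from datetime import datetime, timedelta

def log_warnings(lines, max_gap=timedelta(hours=1)):
    """Flag an empty log, unreadable lines, and long silences between entries."""
    if not lines:
        return ["log is empty"]
    warnings = []
    previous = None
    for number, line in enumerate(lines, start=1):
        try:
            # Assumed format: ISO 8601 timestamp, a space, then the message
            stamp = datetime.fromisoformat(line.split(" ", 1)[0])
        except ValueError:
            warnings.append(f"line {number}: unreadable timestamp")
            continue
        if previous is not None and stamp - previous > max_gap:
            warnings.append(f"line {number}: gap of {stamp - previous}")
        previous = stamp
    return warnings
```

An empty log trips the first check, a corrupted one produces unreadable lines, and selective editing may show up as an unusually long silence. None of these proves an attack on its own; they are simply reasons to look closer.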

A couple of insights can, and should, be gained here. First, contrary to popular belief, pen testers do not usually just randomly assault things hoping to find some overlooked vulnerability to exploit. Instead, they follow a specific, organized method to thoroughly discover every aspect of the system they’re targeting. Good ethical hackers performing pen tests ensure these steps are very well documented, taking exceptional and detailed notes and keeping items such as screenshots and log files for inclusion in the final report. Mr. Horton, our beloved technical editor, put it this way: “Pen testers are thorough in their work for the customer. Hackers just discover what is necessary to accomplish their goal.” Second, keep in mind that security professionals performing a pen test do not normally repair or patch any security vulnerabilities they find—it’s simply not their job to do so. The ethical hacker’s job is to discover security flaws for the customer, not to fix them. Knowing how to blow up a bridge doesn’t make you a civil engineer capable of building one, so while your friendly neighborhood CEH may be able to find your problems, it in no way guarantees he or she could engineer a secure system.

The Ethical Hacker

So, what makes someone an “ethical” hacker? Can such a thing even exist? Considering the art of hacking computers and systems is, in and of itself, a covert action, most people might believe the thought of engaging in a near-illegal activity to be significantly unethical. However, the purpose and intention of the act have to be taken into account.

For comparison’s sake, law enforcement professionals routinely take part in unethical behaviors and situations in order to better understand, and to catch, their criminal counterparts. Police and FBI agents must learn the lingo, actions, and behaviors of drug cartels and organized crime in order to infiltrate and bust the criminals, and doing so sometimes forces them to engage in criminal acts themselves. Ethical hacking can be thought of in much the same way. To find and fix the vulnerabilities and security holes in a computer system or network, you sometimes have to think like a criminal and use the same tactics, tools, and processes they might employ.

In CEH parlance, and as defined by several other entities, there is a distinct difference between a hacker and a cracker. An ethical hacker is someone who employs the same tools and techniques a criminal might use, with the customer’s full support and approval, to help secure a network or system. A cracker, also known as a malicious hacker, uses those skills, tools, and techniques either for personal gain or destructive purposes or, in purely technical terms, to achieve a goal outside the interest of the system owner. Ethical hackers are employed by customers to improve security. Crackers either act on their own or, in some cases, act as hired agents to destroy or damage government or corporate reputation.

One all-important specific identifying a hacker as ethical versus the bad-guy crackers needs to be highlighted and repeated over and over again. Ethical hackers work within the confines of an agreement made between themselves and a customer before any action is taken. This agreement isn’t simply a smile, a conversation, and a handshake just before you flip open a laptop and start hacking away. No, instead it is a carefully laid-out plan, meticulously arranged and documented to protect both the ethical hacker and the client.

In general, an ethical hacker will first meet with the client and sign a contract. The contract defines not only the permission and authorization given to the security professional (sometimes called a get-out-of-jail-free card) but also confidentiality and scope. No client would ever agree to having an ethical hacker attempt to breach security without first ensuring the hacker will not disclose any information found during the test. Usually, this concern results in the creation of a nondisclosure agreement (NDA).

Additionally, clients almost always want the test to proceed to a certain point in the network structure and no further: “You can try to get through the firewall, but do not touch the file servers on the other side…because you may disturb my MP3 collection.” They may also want to restrict what types of attacks you run. For example, the client may be perfectly okay with you attempting a password hack against their systems but may not want you to test every DoS attack you know.
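One way to keep yourself honest about scope is to write the agreed limits down in a form your tooling can check before anything is launched. The following is a minimal sketch under stated assumptions: the `Scope` class, its field names, and the attack labels are all hypothetical, and the addresses come from ranges reserved for documentation.

```python
import ipaddress
from dataclasses import dataclass, field

@dataclass
class Scope:
    """Hypothetical record of what the client has authorized."""
    networks: list = field(default_factory=list)       # CIDR strings in scope
    excluded_hosts: set = field(default_factory=set)   # hosts never to touch
    allowed_attacks: set = field(default_factory=set)  # attack types agreed in the contract

    def permits(self, host, attack):
        """True only if both the host and the attack type were agreed to."""
        address = ipaddress.ip_address(host)
        if str(address) in self.excluded_hosts or attack not in self.allowed_attacks:
            return False
        return any(address in ipaddress.ip_network(net) for net in self.networks)

# Example: password attacks are fine, DoS is not, and one file server is off-limits
scope = Scope(
    networks=["192.0.2.0/24"],
    excluded_hosts={"192.0.2.10"},
    allowed_attacks={"password"},
)
```

With this scope, `scope.permits("192.0.2.5", "password")` is allowed, while the excluded file server and any DoS attempt are refused. The check defaults to "no" for anything not explicitly listed, which mirrors how the contract itself should be read.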

Oftentimes, however, even though you’re hired to test their security and you know what’s really important in security and hacking circles, the most serious risks to a target are not allowed to be tested because of the “criticality of the resource.” This, by the way, is often a function of corporate trust between the pen tester and the organization and will shift over time; what’s a critical resource in today’s test will become a focus of scrutiny and “Let’s see what happens” next year. If the test designed to improve security actually blows up a server, it may not be a winning scenario; however, sometimes the data that is actually at risk makes it important enough to proceed. This really boils down to cool and focused minds during the security testing negotiation.

The Pen Test

Companies and government agencies ask for penetration tests for a variety of reasons. Sometimes rules and regulations force the issue. For example, many medical facilities need to maintain compliance with the Health Insurance Portability and Accountability Act (HIPAA) and will hire ethical hackers to complete their accreditation. Sometimes the organization’s leadership is simply security conscious and wants to know just how well existing security controls are functioning. And sometimes it’s simply an effort to rebuild trust and reputation after a security breach has already occurred. It’s one thing to tell customers you’ve fixed the security flaw that allowed the theft of all those credit cards in the first place. It’s another thing altogether to show the results of a penetration test against the new controls.

With regard to your exam and to your future as an ethical hacker, there are two processes you’ll need to know: how to set up and perform a legal penetration test and how to proceed through the actual hack. A penetration test is a clearly defined, full-scale test of the security controls of a system or network, carried out to identify security risks and vulnerabilities, and it has three major phases. Once the pen test is agreed upon, the ethical hacker begins the “assault” using a variety of tools, methods, and techniques, but generally follows the same five stages of a typical hack to conduct the test. For the CEH exam, you’ll need to be familiar with the three pen test phases and the five stages of a typical hack.

A pen test has three main phases—preparation, assessment, and conclusion—and they are fairly easy to define and understand. The preparation phase defines the time period during which the actual contract is hammered out. The scope of the test, the types of attacks allowed, and the individuals assigned to perform the activity are all agreed upon in this phase. The assessment phase (sometimes also known as the security evaluation phase or the conduct phase) is exactly what it sounds like—the actual assaults on the security controls are conducted during this time. Lastly, the conclusion (or post-assessment) phase defines the time when final reports are prepared for the customer, detailing the findings of the tests (including the types of tests performed) and many times even providing recommendations to improve security.

In performing a pen test, an ethical hacker must attempt to reflect the criminal world as much as possible. In other words, if the steps taken by the ethical hacker during the pen test don’t adequately mirror what a “real” hacker would do, then the test is doomed to failure. For that reason, most pen tests have individuals acting in various stages of knowledge about the target of evaluation (TOE). These different types of tests are known by three names: black box, white box, and gray box.

In black-box testing, the ethical hacker has absolutely no knowledge of the TOE. It’s designed to simulate an outside, unknown attacker, and it takes the most time to complete and, usually, is by far the most expensive option. For the ethical hacker, black-box testing means a thorough romp through the five stages of an attack and removes any preconceived notions of what to look for. The only true drawback to this type of test is it focuses solely on the threat outside the organization and does not take into account any trusted users on the inside.

White-box testing is the exact opposite of black-box testing. In this type, pen testers have full knowledge of the network, system, and infrastructure they’re targeting. This, quite obviously, makes the test much quicker, easier, and less expensive, and it is designed to simulate a knowledgeable internal threat, such as a disgruntled network admin or other trusted user.

The last type, gray-box testing, is also known as partial knowledge testing. What makes this different from white-box testing is the assumed level of elevated privileges the tester has. Whereas white-box testing is generally done from the network administration level, gray-box testing assumes only that the attacker is an insider. Because most attacks do originate from inside a network, this type of testing is valuable and can demonstrate privilege escalation from a trusted employee.

Laws and Standards

Finally, it would be impossible to call yourself an ethical anything if you didn’t understand the guidelines, standards, and laws that govern your particular area of expertise. In our realm of IT security (and in ethical hacking), there are tons of laws and standards you should be familiar with not only to do a good job, but to keep you out of trouble—and prison. We were lucky in previous versions of the exam that these didn’t get hit very often, but now they’re back—and with a vengeance.

I would love to promise I could provide you a comprehensive list of every law you’ll need to know for your job, but if I did this book would be the size of an old encyclopedia and you’d never buy it. There are tons of laws you need to be aware of for your job, such as FISMA, the Electronic Communications Privacy Act, PATRIOT Act, Privacy Act of 1974, Cyber Intelligence Sharing and Protection Act (CISPA), Consumer Data Security and Notification Act, Computer Security Act of 1987…the list really is almost endless. Since this isn’t a book to prepare you for a state bar exam, I’m not going to get into defining all these. For the sake of study, and keeping my page count down somewhat, we’ll just discuss a few you should concentrate on for test purposes, mainly because they’re the ones ECC seems to be looking at closely this go-round. When you get out in the real world, you’ll need to learn, and know, the rest.

First up is the Health Insurance Portability and Accountability Act (HIPAA), enacted by the U.S. Congress and administered by the U.S. Department of Health and Human Services to address privacy standards with regard to medical information. The law sets privacy standards to protect patient medical records and health information, which, by design, are provided to and shared with doctors, hospitals, and insurance providers. HIPAA has five subsections that are fairly self-explanatory (Electronic Transaction and Code Sets, Privacy Rule, Security Rule, National Identifier Requirements, and Enforcement) and may show up on your exam.

Another important law for your study is the Sarbanes-Oxley (SOX) Act. SOX was created to make corporate disclosures more accurate and reliable in order to protect the public and investors from shady behavior. There are 11 titles within SOX that handle everything from what financials should be reported and what should go in them, to protecting against auditor conflicts of interest and enforcement for accountability.

When it comes to standards, again there are tons to know, maybe not necessarily for your job, but because you’ll see them on this exam. A couple ECC really wants you to know are PCI-DSS and ISO/IEC 27001:2013. Payment Card Industry Data Security Standard (PCI-DSS) is a security standard for organizations handling credit cards, ATM cards, and other point-of-sale cards. The standards apply to all groups and organizations involved in the entirety of the payment process (from card issuers, to merchants, to those storing and transmitting card information) and consist of 12 requirements:

• Requirement 1: Install and maintain a firewall configuration to protect data.

• Requirement 2: Remove vendor-supplied default passwords and other default security features.

• Requirement 3: Protect stored data.

• Requirement 4: Encrypt transmission of cardholder data.

• Requirement 5: Install, use, and update AV (antivirus).

• Requirement 6: Develop secure systems and applications.

• Requirement 7: Use “need to know” as a guideline to restrict access to data.

• Requirement 8: Assign a unique ID to each stakeholder in the process (with computer access).

• Requirement 9: Restrict any physical access to the data.

• Requirement 10: Monitor all access to data and network resources holding, transmitting, or protecting it.

• Requirement 11: Test security procedures and systems regularly.

• Requirement 12: Create and maintain an information security policy.
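If it helps you memorize the list, the 12 requirements above can be treated as a simple checklist. This is only a study aid sketched in Python: the wording is paraphrased from the list above, and the names `PCI_DSS_REQUIREMENTS` and `outstanding` are hypothetical, not part of any official PCI tooling.

```python
# Requirement numbers mapped to the short descriptions used in this chapter
PCI_DSS_REQUIREMENTS = {
    1: "Install and maintain a firewall configuration to protect data",
    2: "Remove vendor-supplied default passwords and other default security features",
    3: "Protect stored data",
    4: "Encrypt transmission of cardholder data",
    5: "Install, use, and update AV (antivirus)",
    6: "Develop secure systems and applications",
    7: "Use need to know as a guideline to restrict access to data",
    8: "Assign a unique ID to each stakeholder in the process",
    9: "Restrict any physical access to the data",
    10: "Monitor all access to data and network resources",
    11: "Test security procedures and systems regularly",
    12: "Create and maintain an information security policy",
}

def outstanding(met):
    """Return (number, description) for every requirement not listed in `met`."""
    return [(n, text) for n, text in PCI_DSS_REQUIREMENTS.items() if n not in met]
```

For example, `outstanding({1, 2, 3})` returns the nine requirements still to be addressed, in numerical order.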