Chapter 20. Continuous Integration (CI)

As our site grows, it takes longer and longer to run all of our functional tests. If this continues, the danger is that we’re going to stop bothering.

Rather than let that happen, we can automate the running of functional tests by setting up a “Continuous Integration” or CI server. That way, in day-to-day development, we can just run the FT that we’re working on at that time, and rely on the CI server to run all the tests automatically, and let us know if we’ve broken anything accidentally. The unit tests should stay fast enough that we can keep running them every few seconds.

The CI server of choice these days is called Jenkins. It’s a bit Java, a bit crashy, a bit ugly, but it’s what everyone uses, and it has a great plugin ecosystem, so let’s get it up and running.

Installing Jenkins

There are several hosted-CI services out there that essentially provide you with a Jenkins server, ready to go. I’ve come across Sauce Labs, Travis, Circle-CI, ShiningPanda, and there are probably lots more. But I’m going to assume we’re installing everything on a server we control.

Note

It’s not a good idea to install Jenkins on the same server as our staging or production servers. Apart from anything else, we may want Jenkins to be able to reboot the staging server!

We’ll install the latest version from the official Jenkins apt repo, because the Ubuntu default still has a few annoying bugs with locale/unicode support, and it also doesn’t set itself up to listen on the public Internet by default:

# instructions taken from jenkins site
user@server:$ wget -q -O - http://pkg.jenkins-ci.org/debian/jenkins-ci.org.key |\
    sudo apt-key add -
user@server:$ echo deb http://pkg.jenkins-ci.org/debian binary/ | sudo tee \
    /etc/apt/sources.list.d/jenkins.list
user@server:$ sudo apt-get update
user@server:$ sudo apt-get install jenkins

While we’re at it, we’ll install a few other dependencies:

user@server:$ sudo apt-get install git firefox python3 python-virtualenv xvfb

Warning

At the time of writing, the shiningpanda plugin was incompatible with Python 3.4. It works fine with Python 3.3, so I recommend using a slightly older distro, so that the default Python 3 is slightly older. Ubuntu Saucy (13.10) is OK, for example, but Trusty isn’t.

You should then be able to visit Jenkins at the URL for your server on port 8080, as in Figure 20-1.
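If the page does not load, it can help to check whether anything is listening on port 8080 at all before digging into the Jenkins configuration. The following is a minimal sketch using only the standard library; the function name `port_is_open` is made up for this illustration, and the host you pass in is whatever address your own server has.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection raises OSError (or a subclass) on refusal/timeout
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example call (the hostname is a placeholder, not a real server):
#     port_is_open("ci.example.com", 8080)
```

A `False` result only tells you the port is unreachable from where you ran the check; a firewall and a stopped Jenkins service look the same from the outside.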

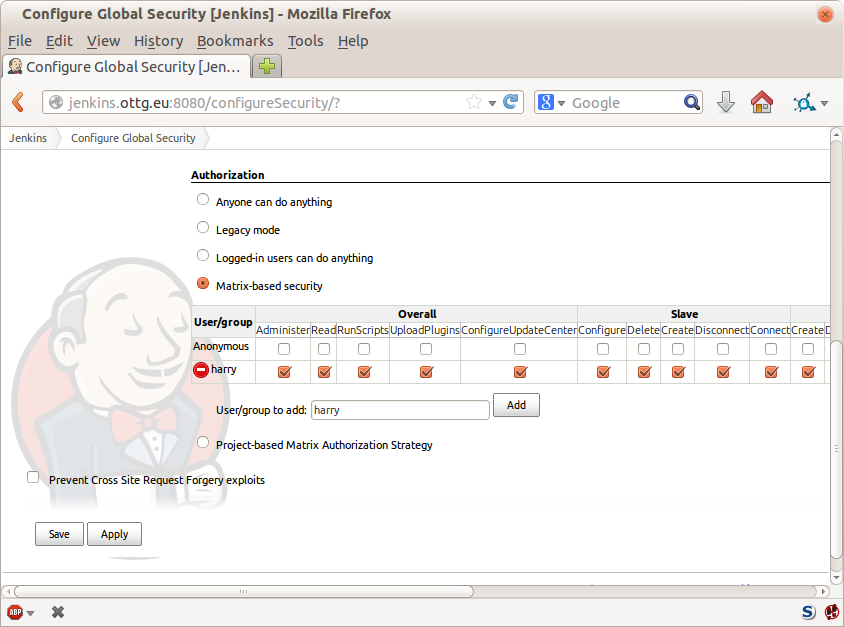

Configuring Jenkins Security

The first thing we’ll do is set up some authentication, since our server is available on the public Internet:

- Manage Jenkins → Configure Global Security → Enable security.

- Choose “Jenkins’ own user database”, “Matrix-based security”.

- Disable all permissions for Anonymous.

- And add a user for yourself; give it all the permissions (Figure 20-2).

- The next screen offers you the option to create an account that matches that username, and set a password.[32]

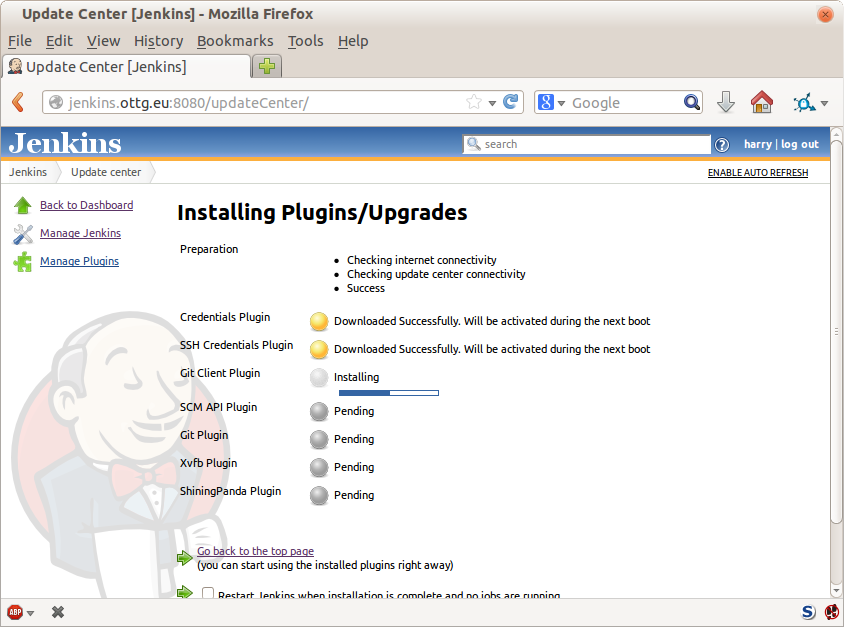

Adding Required Plugins

Next we install a few plugins, to help us work with Git, Python, and virtual displays; see Figure 20-3:

- Manage Jenkins → Manage Plugins → Available

We’ll want the plugins for:

- Git

- ShiningPanda

- Xvfb

Restart afterwards using either the tick-box on that last screen, or from the command line with sudo service jenkins restart.

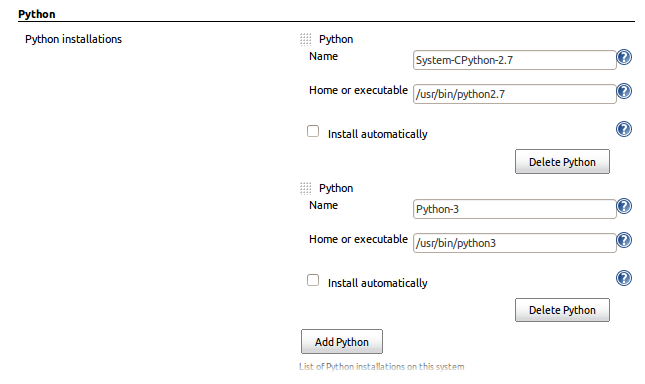

Telling Jenkins where to find Python 3 and Xvfb

We need to tell the ShiningPanda plugin where Python 3 is installed (usually /usr/bin/python3, but you can check with which python3):

- Manage Jenkins → Configure System.

- Python → Python installations → Add Python (Figure 20-4).

- Xvfb installation → Add Xvfb installation; enter /usr/bin as the installation directory.

Setting Up Our Project

Now we’ve got the basic Jenkins configured, let’s set up our project:

- New Job → Build a free-style software project.

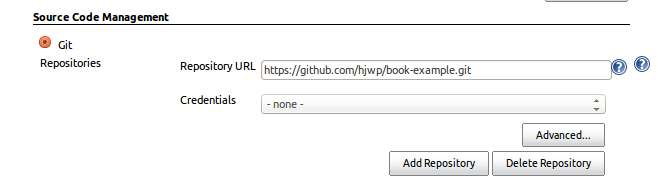

- Add the Git repo, as in Figure 20-5.

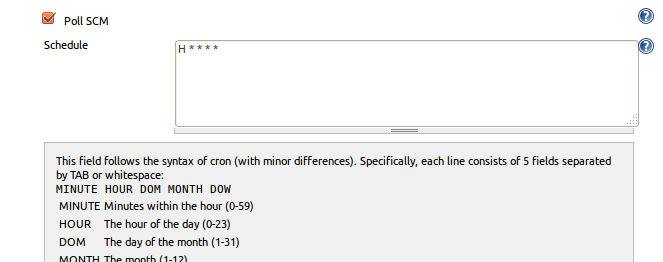

- Set it to poll every hour (Figure 20-6) (check out the help text here—there are many other options for ways of triggering builds).

- Run the tests inside a Python 3 virtualenv.

- Run the unit tests and functional tests separately. See Figure 20-7.

First Build!

Hit “Build Now!”, then go and take a look at the “Console Output”. You should see something like this:

Started by user harry
Building in workspace /var/lib/jenkins/jobs/Superlists/workspace
Fetching changes from the remote Git repository
Fetching upstream changes from https://github.com/hjwp/book-example.git
Checking out Revision d515acebf7e173f165ce713b30295a4a6ee17c07 (origin/master)
[workspace] $ /bin/sh -xe /tmp/shiningpanda7260707941304155464.sh
+ pip install -r requirements.txt
Requirement already satisfied (use --upgrade to upgrade): Django==1.7 in /var/lib/jenkins/shiningpanda/jobs/ddc1aed1/virtualenvs/d41d8cd9/lib/python3.3/site-packages (from -r requirements.txt (line 1))
Downloading/unpacking South==0.8.2 (from -r requirements.txt (line 2))
Running setup.py egg_info for package South
Requirement already satisfied (use --upgrade to upgrade): gunicorn==17.5 in /var/lib/jenkins/shiningpanda/jobs/ddc1aed1/virtualenvs/d41d8cd9/lib/python3.3/site-packages (from -r requirements.txt (line 3))
Downloading/unpacking requests==2.0.0 (from -r requirements.txt (line 4))
Running setup.py egg_info for package requests
Installing collected packages: South, requests
Running setup.py install for South
Running setup.py install for requests
Successfully installed South requests
Cleaning up...
+ python manage.py test lists accounts
...................................................
---------------------------------------------------------------------
Ran 51 tests in 0.323s

OK
Creating test database for alias 'default'...
Destroying test database for alias 'default'...
+ python manage.py test functional_tests
ImportError: No module named 'selenium'
Build step 'Virtualenv Builder' marked build as failure

Ah. We need Selenium in our virtualenv.

Let’s add a manual installation of Selenium to our build steps:[33]

pip install -r requirements.txt

pip install selenium==2.39

python manage.py test accounts lists

python manage.py test functional_tests

Tip

Some people like to use a file called test-requirements.txt to specify packages that are needed for the tests, but not the main app.

Now what?

File "/var/lib/jenkins/shiningpanda/jobs/ddc1aed1/virtualenvs/d41d8cd9/lib/python3.
line 100, in _wait_until_connectable
self._get_firefox_output())
selenium.common.exceptions.WebDriverException: Message: 'The browser appears to have exited before we could connect. The output was: b"\\n(process:19757): GLib-CRITICAL **: g_slice_set_config: assertion \'sys_page_size == 0\' failed\\nError: no display specified\\n"'

Setting Up a Virtual Display so the FTs Can Run Headless

As you can see from the traceback, Firefox is unable to start because the server doesn’t have a display.

There are two ways to deal with this problem. The first is to switch to using a headless browser, like PhantomJS or SlimerJS. Those tools definitely have their place—they’re faster, for one thing—but they also have disadvantages. The first is that they’re not “real” web browsers, so you can’t be sure you’re going to catch all the strange quirks and behaviours of the actual browsers your users use. The second is that they behave quite differently inside Selenium, and will require substantial amounts of rewriting of FT code.

Tip

I would look into using headless browsers as a “dev-only” tool, to speed up the running of FTs on the developer’s machine, while the tests on the CI server use actual browsers.
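One way to arrange that split is to let an environment variable decide which browser the FTs start. The sketch below only picks a browser name; `FT_BROWSER` and `choose_browser_name` are names invented for this illustration, not a Selenium or Jenkins convention, and wiring the result into `setUp` is left out because it depends on your Selenium version.

```python
import os

# FT_BROWSER is a hypothetical variable name chosen for this sketch.
SUPPORTED_BROWSERS = ("firefox", "phantomjs")


def choose_browser_name(environ=None):
    """Pick the browser for the FT run, defaulting to a real browser."""
    environ = os.environ if environ is None else environ
    name = environ.get("FT_BROWSER", "firefox").lower()
    if name not in SUPPORTED_BROWSERS:
        raise ValueError("unsupported FT_BROWSER value: {!r}".format(name))
    return name


# With nothing set (as on the CI server), the real browser is used.
print(choose_browser_name({}))
# A developer could opt in to the headless browser locally.
print(choose_browser_name({"FT_BROWSER": "phantomjs"}))
```

Defaulting to Firefox means the CI server needs no extra configuration, and only developers who want the faster headless run have to set anything.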

The alternative is to set up a virtual display: we get the server to pretend it has a screen attached to it, so Firefox runs happily. There are a few tools out there to do this; we’ll use one called “Xvfb” (X Virtual Framebuffer)[34] because it’s easy to install and use, and because it has a convenient Jenkins plugin.

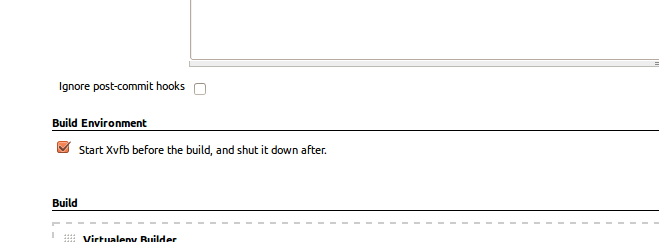

We go back to our project and hit “Configure” again, then find the section called “Build Environment”. Using the virtual display is as simple as ticking the box marked “Start Xvfb before the build, and shut it down after,” as in Figure 20-8.
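To make the tick-box a little less magical, here is a rough sketch of the same idea in plain Python: start Xvfb, point the `DISPLAY` environment variable at it, and stop it afterwards. This is not the plugin's actual implementation. The helper names `xvfb_command` and `virtual_display` are invented here, the default display number 99 is arbitrary, and the screen geometry simply mirrors the `1024x768x24` that Jenkins reports in its console output.

```python
import os
import subprocess
from contextlib import contextmanager


def xvfb_command(display_number, width=1024, height=768, depth=24,
                 executable="/usr/bin/Xvfb"):
    """Build the argument list for starting Xvfb on the given display."""
    return [
        executable,
        ":{}".format(display_number),
        "-screen", "0",
        "{}x{}x{}".format(width, height, depth),
    ]


@contextmanager
def virtual_display(display_number=99):
    """Run Xvfb for the duration of the with-block, then shut it down."""
    process = subprocess.Popen(xvfb_command(display_number))
    old_display = os.environ.get("DISPLAY")
    os.environ["DISPLAY"] = ":{}".format(display_number)
    try:
        yield
    finally:
        # Restore the previous DISPLAY setting and stop the server.
        if old_display is None:
            os.environ.pop("DISPLAY", None)
        else:
            os.environ["DISPLAY"] = old_display
        process.terminate()
        process.wait()


print(" ".join(xvfb_command(2)))
```

The sketch does not wait for Xvfb to finish starting before handing control back, which a more careful version would need to do; the Jenkins plugin (or pyvirtualdisplay) takes care of details like that.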

The build does much better now:

[...]
Xvfb starting$ /usr/bin/Xvfb :2 -screen 0 1024x768x24 -fbdir /var/lib/jenkins/2013-11-04_03-27-221510012427739470928xvfb
[...]
+ python manage.py test lists accounts
...................................................
---------------------------------------------------------------------
Ran 51 tests in 0.410s

OK
Creating test database for alias 'default'...
Destroying test database for alias 'default'...
+ pip install selenium
Requirement already satisfied (use --upgrade to upgrade): selenium in /var/lib/jenkins/shiningpanda/jobs/ddc1aed1/virtualenvs/d41d8cd9/lib/python3.3/site-packages
Cleaning up...
+ python manage.py test functional_tests
.....F.
======================================================================
FAIL: test_logged_in_users_lists_are_saved_as_my_lists (functional_tests.test_my_lists.MyListsTest)
---------------------------------------------------------------------
Traceback (most recent call last):
File "/var/lib/jenkins/jobs/Superlists/workspace/functional_tests/test_my_lists.py", line 44, in test_logged_in_users_lists_are_saved_as_my_lists
self.assertEqual(self.browser.current_url, first_list_url)
AssertionError: 'http://localhost:8081/accounts/edith@example.com/' != 'http://localhost:8081/lists/1/'
- http://localhost:8081/accounts/edith@example.com/
+ http://localhost:8081/lists/1/
---------------------------------------------------------------------
Ran 7 tests in 89.275s

FAILED (errors=1)
Creating test database for alias 'default'...
[{'secure': False, 'domain': 'localhost', 'name': 'sessionid', 'expiry': 1920011311, 'path': '/', 'value': 'a8d8bbde33nreq6gihw8a7r1cc8bf02k'}]
Destroying test database for alias 'default'...
Build step 'Virtualenv Builder' marked build as failure
Xvfb stopping
Finished: FAILURE

Pretty close! To debug that failure, we’ll need screenshots though.

Taking Screenshots

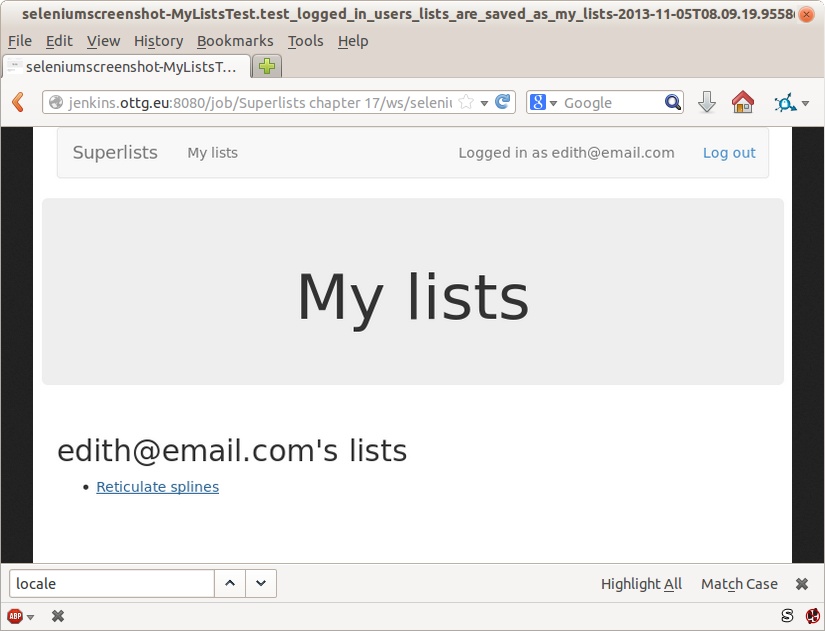

To be able to debug unexpected failures that happen on a remote PC, it would be good to see a picture of the screen at the moment of the failure, and maybe also a dump of the HTML of the page. We can do that using some custom logic in our FT class tearDown. We have to do a bit of introspection of unittest internals, a private attribute called _outcomeForDoCleanups (_outcome in Python 3.4), but this will work:

functional_tests/base.py (ch20l006).

import os
from datetime import datetime
SCREEN_DUMP_LOCATION = os.path.abspath(
    os.path.join(os.path.dirname(__file__), 'screendumps')
)
[...]

    def tearDown(self):
        if self._test_has_failed():
            if not os.path.exists(SCREEN_DUMP_LOCATION):
                os.makedirs(SCREEN_DUMP_LOCATION)
            for ix, handle in enumerate(self.browser.window_handles):
                self._windowid = ix
                self.browser.switch_to_window(handle)
                self.take_screenshot()
                self.dump_html()
        self.browser.quit()
        super().tearDown()

    def _test_has_failed(self):
        # for 3.4. In 3.3, can just use self._outcomeForDoCleanups.success:
        for method, error in self._outcome.errors:
            if error:
                return True
        return False

We first create a directory for our screenshots if necessary. Then we iterate through all the open browser tabs and pages, and use some Selenium methods, get_screenshot_as_file and browser.page_source, for our image and HTML dumps:

functional_tests/base.py (ch20l007).

    def take_screenshot(self):
        filename = self._get_filename() + '.png'
        print('screenshotting to', filename)
        self.browser.get_screenshot_as_file(filename)

    def dump_html(self):
        filename = self._get_filename() + '.html'
        print('dumping page HTML to', filename)
        with open(filename, 'w') as f:
            f.write(self.browser.page_source)

And finally here’s a way of generating a unique filename identifier, which includes the name of the test and its class, as well as a timestamp:

functional_tests/base.py (ch20l008).

    def _get_filename(self):
        timestamp = datetime.now().isoformat().replace(':', '.')[:19]
        return '{folder}/{classname}.{method}-window{windowid}-{timestamp}'.format(
            folder=SCREEN_DUMP_LOCATION,
            classname=self.__class__.__name__,
            method=self._testMethodName,
            windowid=self._windowid,
            timestamp=timestamp
        )
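To see what that timestamp manipulation produces without running a browser, here is the same formatting logic pulled out into a standalone function. The function name `dump_filename` and the example folder are made up for this sketch; the format string is the one from the listing.

```python
from datetime import datetime


def dump_filename(folder, classname, method, windowid, now):
    """Standalone version of the filename logic, with the time passed in."""
    # isoformat() gives e.g. 2014-03-09T11:19:12.654321; colons are awkward
    # in filenames, and [:19] trims off the microseconds.
    timestamp = now.isoformat().replace(':', '.')[:19]
    return '{folder}/{classname}.{method}-window{windowid}-{timestamp}'.format(
        folder=folder,
        classname=classname,
        method=method,
        windowid=windowid,
        timestamp=timestamp,
    )


example = dump_filename(
    folder='/tmp/screendumps',  # placeholder folder for the example
    classname='MyListsTest',
    method='test_logged_in_users_lists_are_saved_as_my_lists',
    windowid=0,
    now=datetime(2014, 3, 9, 11, 19, 12, 654321),
)
print(example)
```

Passing the current time in as an argument, rather than calling `datetime.now()` inside, is only done here to make the output predictable.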

You can test this first locally by deliberately breaking one of the tests, with a self.fail() for example, and you’ll see something like this:

[...]
screenshotting to /workspace/superlists/functional_tests/screendumps/MyListsTest.test_logged_in_users_lists_are_saved_as_my_lists-window0-2014-03-09T11.19.12.png
dumping page HTML to /workspace/superlists/functional_tests/screendumps/MyListsTest.test_logged_in_users_lists_are_saved_as_my_lists-window0-2014-03-09T11.19.12.html

Revert the self.fail(), then commit and push:

$ git diff  # changes in base.py
$ echo "functional_tests/screendumps" >> .gitignore
$ git commit -am "add screenshot on failure to FT runner"
$ git push

And when we rerun the build on Jenkins, we see something like this:

screenshotting to /var/lib/jenkins/jobs/Superlists/workspace/functional_tests/screendumps/LoginTest.test_login_with_persona-window0-2014-01-22T17.45.12.png
dumping page HTML to /var/lib/jenkins/jobs/Superlists/workspace/functional_tests/screendumps/LoginTest.test_login_with_persona-window0-2014-01-22T17.45.12.html

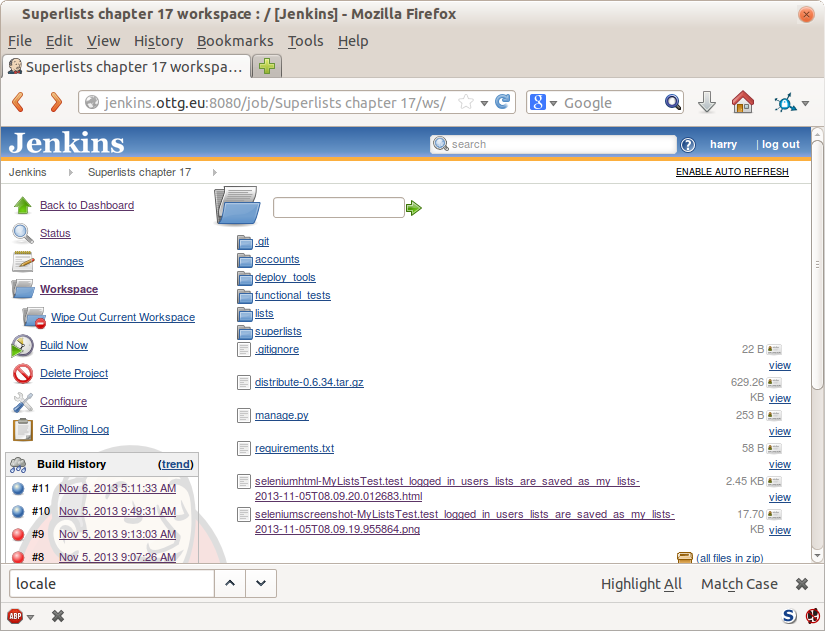

We can go and visit these in the “workspace”, which is the folder which Jenkins uses to store our source code and run the tests in, as in Figure 20-9.

And then we look at the screenshot, as shown in Figure 20-10.

A Common Selenium Problem: Race Conditions

Whenever you see an inexplicable failure in a Selenium test, one of the most likely explanations is a hidden race condition. Let’s look at the line that failed:

functional_tests/test_my_lists.py.

        # She sees that her list is in there, named according to its
        # first list item
        self.browser.find_element_by_link_text('Reticulate splines').click()
        self.assertEqual(self.browser.current_url, first_list_url)

Immediately after we click the “Reticulate splines” link, we ask Selenium to check whether the current URL matches the URL for our first list. But it doesn’t:

AssertionError: 'http://localhost:8081/accounts/edith@example.com/' != 'http://localhost:8081/lists/1/'

It looks like the current URL is still the URL of the “My Lists” page. What’s going on?

Do you remember that we set an implicitly_wait on the browser, way back in Chapter 2? Do you remember I mentioned it was unreliable?

implicitly_wait works reasonably well for any calls to any of the Selenium find_element_ calls, but it doesn’t apply to browser.current_url. Selenium doesn’t “wait” after you tell it to click an element, so what’s happened is that the browser hasn’t finished loading the new page yet, so current_url is still the old page. We need to use some more wait code, like we did for the various Persona pages.
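The shape of the problem is easy to reproduce without Selenium. In the toy model below, `FakeBrowser` is an invented stand-in (not a Selenium class) whose "click" returns immediately while the "page load" finishes a little later on another thread, so reading the URL straight after the click gives the old value.

```python
import threading
import time


class FakeBrowser:
    """Toy stand-in for a browser whose page loads take time. Not Selenium."""

    def __init__(self, url, load_time=0.2):
        self.current_url = url
        self._load_time = load_time
        self._loader = None

    def click_link(self, target_url):
        # Returns straight away, like a click; the URL changes only once
        # the simulated page load has finished.
        def finish_loading():
            time.sleep(self._load_time)
            self.current_url = target_url

        self._loader = threading.Thread(target=finish_loading)
        self._loader.start()

    def wait_until_loaded(self):
        self._loader.join()


browser = FakeBrowser('http://localhost:8081/accounts/edith@example.com/')
browser.click_link('http://localhost:8081/lists/1/')
url_right_after_click = browser.current_url   # still the old page
browser.wait_until_loaded()
url_after_load = browser.current_url          # now the new page

print('right after click:', url_right_after_click)
print('after load:       ', url_after_load)
```

On a fast developer machine the real page load often wins the race, which is why a failure like this can show up on the CI server first.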

At this point it’s time for a “wait for” helper function. To see how this is going to work, it helps to see how I expect to use it (outside-in!):

functional_tests/test_my_lists.py (ch20l012).

        # She sees that her list is in there, named according to its
        # first list item
        self.browser.find_element_by_link_text('Reticulate splines').click()
        self.wait_for(
            lambda: self.assertEqual(self.browser.current_url, first_list_url)
        )

We’re going to take our assertEqual call and turn it into a lambda function, then pass it into our wait_for helper.

functional_tests/base.py (ch20l013).

import time
from selenium.common.exceptions import WebDriverException
[...]

    def wait_for(self, function_with_assertion, timeout=DEFAULT_WAIT):
        start_time = time.time()
        while time.time() - start_time < timeout:
            try:
                return function_with_assertion()
            except (AssertionError, WebDriverException):
                time.sleep(0.1)
        # one more try, which will raise any errors if they are outstanding
        return function_with_assertion()

wait_for then tries to execute that function, but instead of letting the test fail if the assertion fails, it catches the AssertionError that assertEqual would ordinarily raise, waits for a brief moment, and then loops around retrying it. The while loop lasts until a given timeout. It also catches any WebDriverException that might happen if, say, an element hasn’t appeared on the page yet. It tries one last time after the timeout has expired, this time without the try/except, so that if there really is still an AssertionError, the test will fail appropriately.
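The retry behaviour can be tried out in isolation. This standalone sketch drops the `self` argument and catches only `AssertionError`, so that it runs without Selenium installed; `FlakyCheck` is a made-up helper that fails a fixed number of times before succeeding.

```python
import time


def wait_for(function_with_assertion, timeout=5):
    """Retry the function until it stops raising, or the timeout expires."""
    start_time = time.time()
    while time.time() - start_time < timeout:
        try:
            return function_with_assertion()
        except AssertionError:
            time.sleep(0.1)
    # one more try, which will raise any errors if they are outstanding
    return function_with_assertion()


class FlakyCheck:
    """Raises AssertionError a set number of times, then succeeds."""

    def __init__(self, failures_before_success):
        self.remaining = failures_before_success
        self.calls = 0

    def __call__(self):
        self.calls += 1
        if self.remaining > 0:
            self.remaining -= 1
            raise AssertionError('not ready yet')
        return 'ready'


check = FlakyCheck(failures_before_success=2)
print(wait_for(check), 'after', check.calls, 'calls')
```

A check that never passes still fails, just later: the final call outside the loop lets the last `AssertionError` propagate with its normal message.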

Note

We’ve seen that Selenium provides WebDriverWait as a tool for doing waits, but it’s a little restrictive. This hand-rolled version lets us pass a function that does a unittest assertion, with all the benefits of the readable error messages that it gives us.

I’ve added the timeout there as an optional argument, and I’m basing it on a constant we’ll add to base.py. We’ll also use it in our original implicitly_wait:

functional_tests/base.py (ch20l014).

[...]
DEFAULT_WAIT = 5
SCREEN_DUMP_LOCATION = os.path.abspath(
    os.path.join(os.path.dirname(__file__), 'screendumps')
)


class FunctionalTest(StaticLiveServerCase):

    [...]

    def setUp(self):
        self.browser = webdriver.Firefox()
        self.browser.implicitly_wait(DEFAULT_WAIT)

Now we can rerun the test to confirm it still works locally:

$ python3 manage.py test functional_tests.test_my_lists

[...]

.

Ran 1 test in 9.594s

OK

And, just to be sure, we’ll deliberately break our test to see it fail too:

functional_tests/test_my_lists.py (ch20l015).

        self.wait_for(
            lambda: self.assertEqual(self.browser.current_url, 'barf')
        )

Sure enough, that gives:

$ python3 manage.py test functional_tests.test_my_lists

[...]

AssertionError: 'http://localhost:8081/lists/1/' != 'barf'

And we see it pause on the page for the length of the timeout (DEFAULT_WAIT, five seconds here) before failing. Let’s revert that last change, and then commit our changes:

$ git diff  # base.py, test_my_lists.py
$ git commit -am "use wait_for function for URL checks in my_lists"
$ git push

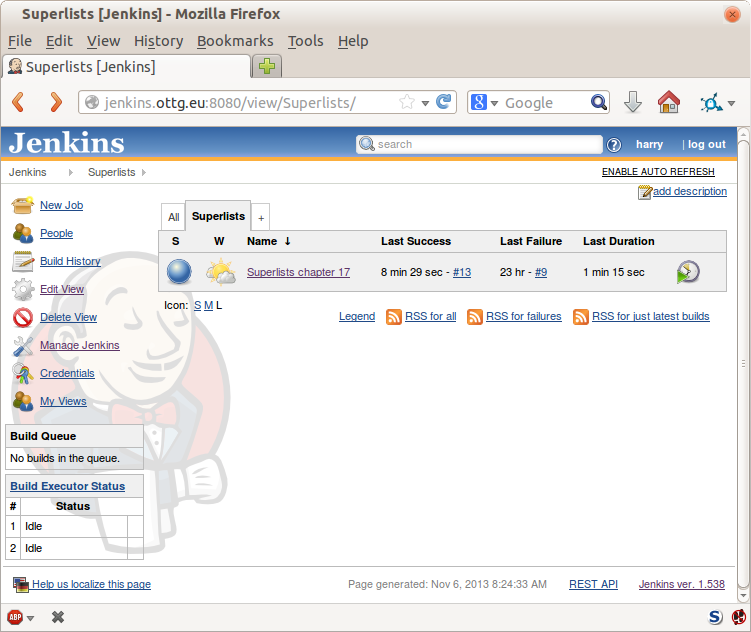

Then we can rerun the build on Jenkins using “Build now”, and confirm it now works, as in Figure 20-11.

Jenkins uses blue to indicate passing builds rather than green, which is a bit disappointing, but look at the sun peeking through the clouds: that’s cheery! It’s an indicator of a moving average ratio of passing builds to failing builds. Things are looking up!
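As a rough illustration of what such an indicator measures, the sketch below computes the fraction of passing builds over the most recent few. The function name `recent_pass_ratio` and the window of five builds are assumptions made for this example; how Jenkins maps the ratio onto its weather icons is not reproduced here.

```python
def recent_pass_ratio(results, window=5):
    """Fraction of passing builds among the last `window` results.

    `results` is a list of booleans, oldest first; True means the build
    passed. Returns None when there are no builds yet.
    """
    recent = results[-window:]
    if not recent:
        return None
    return sum(1 for passed in recent if passed) / len(recent)


# Two early failures followed by five passes: the failures have dropped
# out of the window, so the ratio is back to 1.0.
history = [False, False, True, True, True, True, True]
print(recent_pass_ratio(history))
```

Because only recent builds count, a run of green (or rather blue) builds clears the clouds even if the project's early history was stormy.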

Running Our QUnit JavaScript Tests in Jenkins with PhantomJS

There’s a set of tests we almost forgot—the JavaScript tests. Currently our “test runner” is an actual web browser. To get Jenkins to run them, we need a command-line test runner. Here’s a chance to use PhantomJS.

Installing node

It’s time to stop pretending we’re not in the JavaScript game. We’re doing web development. That means we do JavaScript. That means we’re going to end up with node.js on our computers. It’s just the way it has to be.

Follow the instructions on the node.js download page. There are installers for Windows and Mac, and repositories for popular Linux distros.[35]

Once we have node, we can install phantom:

$ npm install -g phantomjs  # the -g means "system-wide". May need sudo.

Next we pull down a QUnit/PhantomJS test runner. There are several out there (I even wrote a basic one to be able to test the QUnit listings in this book), but the best one to get is probably the one that’s linked from the QUnit plugins page. At the time of writing, its repo was at https://github.com/jonkemp/qunit-phantomjs-runner. The only file you need is runner.js.

You should end up with this:

$ tree superlists/static/tests/
superlists/static/tests/
├── qunit.css
├── qunit.js
├── runner.js
└── sinon.js

0 directories, 4 files

Let’s try it out:

$ phantomjs superlists/static/tests/runner.js lists/static/tests/tests.html
Took 24ms to run 2 tests. 2 passed, 0 failed.
$ phantomjs superlists/static/tests/runner.js accounts/static/tests/tests.html
Took 29ms to run 11 tests. 11 passed, 0 failed.
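If you ever want to collect these results from a script, the summary line is regular enough to parse. The sketch below assumes the summary always has the wording shown in the output above; that is an inference from this one runner's output and may not hold for other runners or versions. The names `SUMMARY_PATTERN` and `parse_summary` are invented for the example.

```python
import re

# Pattern inferred from the runner output shown in this chapter; other
# runner versions may word their summary differently.
SUMMARY_PATTERN = re.compile(
    r'Took (\d+)ms to run (\d+) tests\. (\d+) passed, (\d+) failed\.'
)


def parse_summary(output):
    """Extract the counts from a runner summary line, or None if absent."""
    match = SUMMARY_PATTERN.search(output)
    if match is None:
        return None
    duration_ms, total, passed, failed = (int(g) for g in match.groups())
    return {
        'duration_ms': duration_ms,
        'total': total,
        'passed': passed,
        'failed': failed,
    }


print(parse_summary('Took 24ms to run 2 tests. 2 passed, 0 failed.'))
print(parse_summary('Took 29ms to run 11 tests. 11 passed, 0 failed.'))
```

For the Jenkins build itself this is unnecessary as long as the runner exits with a non-zero status on failure, since Jenkins marks a shell build step as failed in that case.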

Just to be sure, let’s deliberately break something:

lists/static/list.js (ch20l019).

$('input').on('keypress', function () {
    //$('.has-error').hide();
});

Sure enough:

$ phantomjs superlists/static/tests/runner.js lists/static/tests/tests.html
Test failed: undefined: errors should be hidden on keypress
Failed assertion: expected: false, but was: true
at file:///workspace/superlists/superlists/static/tests/qunit.js:556
at file:///workspace/superlists/lists/static/tests/tests.html:26
at file:///workspace/superlists/superlists/static/tests/qunit.js:203
at file:///workspace/superlists/superlists/static/tests/qunit.js:361
at process (file:///workspace/superlists/superlists/static/tests/qunit.js:1453)
at file:///workspace/superlists/superlists/static/tests/qunit.js:479
Took 27ms to run 2 tests. 1 passed, 1 failed.

All right! Let’s unbreak that, commit and push the runner, and then add it to our Jenkins build:

$ git checkout lists/static/list.js
$ git add superlists/static/tests/runner.js
$ git commit -m "Add phantomjs test runner for javascript tests"
$ git push

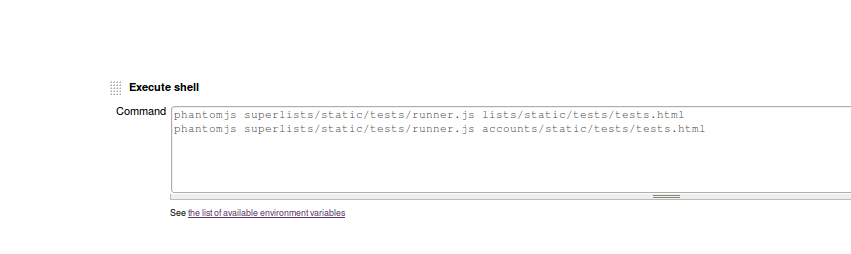

Adding the Build Steps to Jenkins

Edit the project configuration again, and add a step for each set of JavaScript tests, as per Figure 20-12.

You’ll also need to install PhantomJS on the server:

elspeth@server:$ sudo add-apt-repository -y ppa:chris-lea/node.js
elspeth@server:$ sudo apt-get update
elspeth@server:$ sudo apt-get install nodejs
elspeth@server:$ sudo npm install -g phantomjs

And there we are! A complete CI build featuring all of our tests!

Started by user harry
Building in workspace /var/lib/jenkins/jobs/Superlists/workspace
Fetching changes from the remote Git repository
Fetching upstream changes from https://github.com/hjwp/book-example.git
Checking out Revision 936a484038194b289312ff62f10d24e6a054fb29 (origin/chapter_1
Xvfb starting$ /usr/bin/Xvfb :1 -screen 0 1024x768x24 -fbdir /var/lib/jenkins/20
[workspace] $ /bin/sh -xe /tmp/shiningpanda7092102504259037999.sh
+ pip install -r requirements.txt
[...]

+ python manage.py test lists
.................................
---------------------------------------------------------------------
Ran 33 tests in 0.229s

OK
Creating test database for alias 'default'...
Destroying test database for alias 'default'...

+ python manage.py test accounts
..................
---------------------------------------------------------------------
Ran 18 tests in 0.078s

OK
Creating test database for alias 'default'...
Destroying test database for alias 'default'...

[workspace] $ /bin/sh -xe /tmp/hudson2967478575201471277.sh
+ phantomjs superlists/static/tests/runner.js lists/static/tests/tests.html
Took 32ms to run 2 tests. 2 passed, 0 failed.
+ phantomjs superlists/static/tests/runner.js accounts/static/tests/tests.html
Took 47ms to run 11 tests. 11 passed, 0 failed.

[workspace] $ /bin/sh -xe /tmp/shiningpanda7526089957247195819.sh
+ pip install selenium
Requirement already satisfied (use --upgrade to upgrade): selenium in /var/lib/
Cleaning up...

[workspace] $ /bin/sh -xe /tmp/shiningpanda2420240268202055029.sh
+ python manage.py test functional_tests
.......
---------------------------------------------------------------------
Ran 7 tests in 76.804s

OK

Nice to know that, no matter how lazy I get about running the full test suite on my own machine, the CI server will catch me. Another one of the Testing Goat’s agents in cyberspace, watching over us…

More Things to Do with a CI Server

I’ve only scratched the surface of what you can do with Jenkins and CI servers. For example, you can make it much smarter about how it monitors your repo for new commits.

Perhaps more interestingly, you can use your CI server to automate your staging tests as well as your normal functional tests. If all the FTs pass, you can add a build step that deploys the code to staging, and then reruns the FTs against that—automating one more step of the process, and ensuring that your staging server is automatically kept up to date with the latest code.
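The logic of such a pipeline is simply "run each step in order and stop at the first failure". Jenkins build steps already behave this way, but a small sketch makes the gating explicit. Everything here is illustrative: `run_steps` is an invented helper, and the step commands are harmless placeholders standing in for your real test, deploy, and staging-test commands.

```python
import subprocess
import sys


def run_steps(steps):
    """Run (name, command) pairs in order.

    Returns the name of the first step whose command exits non-zero,
    or None if every step succeeded. Later steps are not run after a
    failure, so a deploy step never runs if the tests before it failed.
    """
    for name, command in steps:
        print('running step:', name)
        if subprocess.call(command) != 0:
            return name
    return None


if __name__ == '__main__':
    # Placeholder commands: each just runs a trivial Python one-liner.
    placeholder = [sys.executable, '-c', 'pass']
    failed_step = run_steps([
        ('functional tests', placeholder),
        ('deploy to staging', placeholder),
        ('functional tests against staging', placeholder),
    ])
    print('failed step:', failed_step)
```

Putting the deploy after the local FTs and before the staging FTs means a broken commit is stopped before it ever reaches the staging server.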

Some people even use a CI server as the way of deploying their production releases!

[32] If you miss that screen, you can still hit “signup”, and as long as you use the same username you specified earlier, you’ll have an account set up.

[33] At the time of writing, the latest Selenium (2.41) was causing me some trouble, so that’s why I’m pinning it to 2.39 here. By all means experiment with newer versions!

[34] Check out pyvirtualdisplay as a way of controlling virtual displays from Python.

[35] Make sure you get the latest version. On Ubuntu, use the PPA rather than the default package.