In Chapter 1, we asked you to rate your ethics in comparison to others. We have asked groups of executives attending negotiation classes to answer similar questions, such as whether they are less honest than their peers, just as honest as their peers, or more honest than their peers. As you would now expect, an overwhelming majority tell us they believe they are more honest than most others in their class.

Now consider a recent survey of high school students.1 Nearly two-thirds of teens surveyed reported cheating on a test during the past year. More than a third admitted to plagiarizing off the Internet, nearly a third admitted to stealing from a store in the past year, and more than 80 percent said they had lied to a parent about something significant. Yet 93 percent of these high school students said they were satisfied with their ethical character.

As behavioral ethics research would predict, some of the most spectacular ethical scandals of recent years have involved people who insisted they were more ethical than their alleged actions suggested. Kenneth Lay, CEO of Enron, repeatedly insisted he had done nothing wrong during his tenure at the disgraced corporation. Bill Clinton told the American public—and perhaps rationalized to himself—that he didn’t have sexual relations with Monica Lewinsky. And after Rod Blagojevich, the former governor of Illinois, was accused of trying to sell Barack Obama’s vacated Senate seat to the highest bidder, he insisted he was innocent in the face of mounting evidence.

Several explanations might account for such claims of ethicality and denials of wrongdoing in the face of clearly dishonest behavior. First, it may be that the person truly is innocent; if only we had access to all the information that he does, we would agree with his assessment of his ethical character. Second, it is possible that the person doesn’t actually believe he behaved ethically, but rather claims to be ethical to reduce the damages associated with his unethical actions. The third—and, we argue, most likely—explanation is also the most troubling in terms of improving one’s behavior. It is possible that the person inherently believes in his own ethicality, despite the evidence to the contrary.

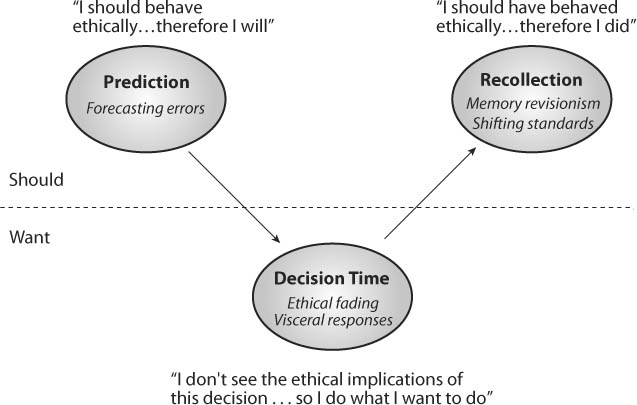

You may never have been accused of setting up fictitious corporate partnerships in order to steal money from investors, having relations with interns, or selling a Senate seat. Yet the chances are good that you, like Lay, Clinton, and Blagojevich (and like us), also believe you are more ethical than you really are and than others judge you to be. Behavioral ethics research suggests that biases in our thought processes make these illusions about our ethicality possible. Along with our colleagues Kristina Diekmann and Kimberly Wade-Benzoni, we argue that these biases occur at several stages of the decision-making process.2 Prior to being faced with an ethical dilemma, people predict that they will make an ethical choice. When actually faced with an ethical dilemma, they make an unethical choice. Yet when reflecting back on that decision, they believe they are still ethical people. Together, this culminating set of biases leads to erroneously positive perceptions of our own ethicality. Worse yet, it prevents us from seeing the need to improve our ethicality, and so the pattern repeats itself.

In this chapter, we focus on the psychological processes that behavioral ethicists have identified as preventing people from making ethical decisions at these three stages—before, during, and after a moral decision.

Imagine that a young female college student is seeking on-campus employment to supplement her living expenses. She sees a help-wanted ad posted on campus for a research assistant. The hours and pay are just what she’s looking for, so she immediately applies for the position. She is called in for an interview and meets with a man who appears to be in his early thirties. During the course of the interview, he asks her a number of standard interview questions, as well as the following three questions:

Do you have a boyfriend?

Do people find you desirable?

Do you think it is appropriate for women to wear bras to work?

What do you think the young woman would do in this situation? If you think she would feel outraged and confront the interviewer about his inappropriate questions, you are not alone. A research study examined this exact situation.3 When asked to predict how they would behave in such an interview, 62 percent of female college students said they would ask the interviewer why he was asking these questions or tell him that the questions were inappropriate, and 68 percent said they would refuse to answer the questions.

These students’ predictions may be unsurprising, yet they aren’t accurate. In the same study, the researchers put female college students in the same interview situation described above. A thirty-two-year-old male interviewer actually asked them the offensive questions. What happened? None of the students refused to answer the questions. A minority, not a majority, did ask the interviewer why the questions had been asked, but they did so politely and usually at the end of the interview.

The human tendency to make inaccurate predictions about our own behavior is well documented by behavioral ethics and other research.4 We firmly believe we will behave a certain way in a given situation. When actually faced with that situation, however, we behave differently. Examples of such “behavioral forecasting errors” abound. We aren’t very good at predicting how often we will go to the dentist. We are lousy at estimating how long it will take us to complete a particular task at work or a project at home. We underestimate the extent to which we’ll be influenced by pressure from a boss or a peer. New Year’s resolutions are the epitome of behavioral forecasting errors. At the beginning of the year, we set expectations of ourselves for certain behaviors, including those in the moral domain. We even expend resources to “make sure” we meet our goals. We join health clubs, hire personal trainers, or buy clothes that are too small for us. We vow to be more patient, do volunteer work in our free time, or find ways to conserve energy. We believe that in the coming year, we will be a “new” person. Come December 31, we find that little has changed—yet we make the same predictions about our behavior for the following year.

Now consider the fact that when patients are diagnosed with an illness, they are sometimes presented with the choice to participate in a clinical trial. Clinical trials are used to evaluate the effectiveness of treatments for a particular disease or to assess the safety of medications. Patients involved in a clinical trial are divided into groups, and each group receives a different treatment. At the end of the trial, the effectiveness of each treatment is measured, including its impact on the disease and whether it came with side effects. Patients and their families are often presented with the question, “Do you want to participate in a clinical trial?”

The decision to participate in clinical trials is often a social dilemma. As we explained in Chapter 3, social dilemmas are situations in which a group’s interests conflict with the interests of the group’s individual members. For example, people who believe in the societal benefits of conserving fuel may nonetheless choose to drive rather than walk, using the justification that “my car’s emissions won’t really make a difference.” Social dilemmas lie at the heart of many intractable problems, including environmental conservation, nuclear disarmament, and even group projects at work. In social dilemmas, the easiest individual strategy is to “defect”—to harvest fish that are near extinction, consume fuel, maintain a nuclear stockpile, or slack in your efforts. Yet when individual members defect, the group goal—whether preserving certain species, creating environmental improvements, making a safer world, or completing a project—is often sacrificed. In these cases, if everyone cooperated just a little bit, a lot could be achieved for the broader group. Yet for the individual, pursuing one’s self-interest appears to be the most rational goal.

The decision of whether to participate in a clinical trial is a type of social dilemma because it requires individuals to cooperate in order to help others in the future. Participation does not necessarily improve patients’ outcomes; patients may receive a treatment in a clinical trial that is not only unproven, but possibly not as good as currently available treatments. Many clinical trials are most likely to benefit people who will receive new and better treatments in the future, rather than those who are sick in the present. Moreover, the benefits and costs to the current patient are typically unclear and very hard to assess. The ultimate goal of clinical trials is to improve the quality of medicine so that everyone will eventually receive better treatments and a better prognosis.

Suppose that as you think about the decision to participate in a clinical trial and predict how you would behave, you come to the conclusion that everyone who is qualified should participate in clinical trials when offered the chance. You believe that the “right” choice to make is to contribute to the greater good of advances in medicine and that everyone, including yourself, should do the same. As a result of such thinking, you predict that you would certainly choose to engage yourself or a family member in a clinical trial if the occasion ever arose.

Now fast-forward a number of years and imagine that your child has been diagnosed with a life-threatening illness. Depending on how your child responds to the latest treatment, the prognosis for your child’s five-year survival is between 75 and 95 percent. You have researched your child’s disease to some extent and know that the newest approved treatment has demonstrated significantly positive results.

As you are discussing your child’s disease, the doctor asks whether you would agree to place your child in a clinical trial in which a computer would determine which treatment your child will receive. When you ask the doctor about the comparative efficacy of the two treatments, she tells you that the new treatment is too early in its development for her to be able to answer the question. Are you willing to give up the known, approved treatment for a risky option in which the likely outcome is unknown? Your answer is quick and unwavering: No!

All of us can relate to this type of dramatic about-face. When a decision about your child’s health is purely theoretical, you have the luxury of carefully deliberating and making the decision that is most compatible with your ethics. But if such a decision ever becomes a reality, your ethical considerations related to the greater good are likely to go out the window. Now all that matters is your own child and what is best for her. In the next section, we explain why such behavioral forecasting errors occur.

Decision Time: The Want Self Rears Its Head

Social scientists have long argued that we often experience conflict within ourselves. The most common form of such conflict occurs between the “want self” and the “should self.”5 The want self describes the side of you that’s emotional, affective, impulsive, and hot-headed. In contrast, your should self is rational, cognitive, thoughtful, and cool-headed. The should self encompasses our ethical intentions and the belief that we should behave according to our ethical values and principles. By contrast, the want self reflects our actual behavior, which is typically characterized by self-interest and a relative disregard for ethical considerations.

Our research suggests that whether the want self or the should self dominates varies across time. The should self dominates before and after we make a decision, but the want self often wins at the moment of decision. Thus, when approaching a decision, we predict that we will make the decision we think we should make. We think we should confront a sexually harassing interviewer; therefore, we predict we will stand up to one during an interview. We think we should go to the dentist, do our share of the work at home or at the office, stand up to peer pressure, exercise, and eat healthy foods. We think we should cooperate in social dilemmas, even at a personal cost, for the sake of the greater good. In sum, we predict we will make “should decisions,” or those based on our principles and ethical ideals. But at launch time, when we actually make the decision, something entirely different happens.

Figure 5. A temporal perspective on the battle between our “want” and “should” selves

When it comes time to make a decision, our minds are dominated by thoughts of how we want to behave; thoughts of how we should behave disappear. A study of movie rental preferences vividly demonstrates the dominance of the want self at the time of a decision.6 Consider that we tend to categorize movies we haven’t seen into two basic types: educational or artistic movies that we think we should watch, such as 90 Degrees South: With Scott to the Antarctic, and movies we actually want to watch, such as Kill Bill 2. In Max’s study with Katy Milkman and Todd Rogers, people returned “want” movies to an online DVD rental company significantly earlier than they returned “should” movies, suggesting that the “should” DVDs sat unwatched on coffee tables longer than the “want” movies did. At the time study participants actually decided which movie to watch, the “want” self beat the “should” self.

When ordering movies to watch later, we are in the prediction phase of decision making, forecasting which movies we think we will watch. At this time, we are preoccupied by thoughts of what we should watch. An internal dialogue might go something like this: “If I’m going to sit in front of the screen doing nothing, the very least I can do is watch something educational.” The should self dominates, and you order an educational, “should watch” movie along with more entertaining fare. At the moment you actually decide which movie to watch, however, the thought of educating yourself is farthest from your mind. Your pragmatic, hot-headed, self-interested want self overwhelms the rational, cool-headed should self, and you decide to veg out in front of Kill Bill 2 (or a mindless comedy, if Kill Bill 2 isn’t your taste).

How does this reasoning apply to ethical decisions? When considering the behavior of those involved in recent scandals, such as Bernard Madoff or Rod Blagojevich, most of us firmly believe we never would have engaged in such behaviors, would not have supported such behaviors if told to do so, and would have reported any wrongdoing we saw. We believe we would behave as we think we should behave—according to our morals, ideals, and principles. Yet too often, behavioral ethics research shows that when presented with a decision with an ethical dimension, we behave differently than we predicted we would. Our want self wins out, and unethical behavior ensues.

Why do we predict we will behave one way and then behave another way, over and over again throughout our lives? Social scientists have discovered that we think about a decision quite differently when we are predicting how we will behave than when we have to act, a difference that is driven both by different motivations at these two points in time and by the process of ethical fading. When we think about our future behavior, it is difficult to anticipate the actual situation we will face. General principles and attitudes drive our predictions; we see the forest but not the trees. As the situation approaches, however, we begin to see the trees, and the forest disappears. Our behavior is driven by details, not abstract principles.

Consider a study of charitable contributions to the American Cancer Society’s “Daffodil Days.” More than 80 percent of participants who were asked if they would buy a daffodil to make a contribution predicted they would do so.7 In fact, when actually faced with this decision, only about half of study participants who said they would buy a daffodil actually did. Clearly, they thought about the general benefits of supporting a good cause and their motivation to do so, without thinking about what might influence them at the time they were asked to donate, such as time and money constraints or distractions. At the time of the actual decision, they may have found themselves faced with pragmatic issues, such as the need to buy lunch and a limited amount of money in their pockets, details that did not cross their minds when they were predicting how they would behave. They weighed buying a daffodil against buying lunch, and lunch won.

One of the reasons we think differently about a situation when we are predicting our behavior than when we are making an actual decision is that our motivations aren’t the same at these two points in time. In a study of negotiation behavior, negotiators predicted that if they were faced with a competitive opponent, they would fight fire with fire and behave competitively themselves.8 When actually faced with a competitive opponent, however, negotiators became less aggressive, not more. This difference between predicted and actual behavior was traced to differences in motivations at these two points in time. When thinking about how they would behave when faced with a competitive opponent, negotiators were motivated to “win” and to prevent someone from taking advantage of them. When actually negotiating, negotiators were instead motivated simply to get a deal and avoid walking away with nothing. Similarly, the average person’s motivation when predicting whether to enroll one’s child in a clinical trial centers on benefiting society; at the time of the decision, however, the motivation focuses on one’s own child.

When decisions have an ethical dimension, Ann’s research with David Messick shows that ethical fading may also be a key factor driving the difference between how we think we will behave and how we actually behave.9 In the prediction phase, we may clearly see the ethical aspect of a given decision. Our moral values are evoked, and we believe we will behave according to those values. As discussed in Chapter 2, models of ethical decision making derived by philosophers, in fact, often predict that moral awareness will prompt moral behavior.10 However, at the time of the decision, ethical fading occurs, and we no longer see the ethical dimension of a decision. Instead, we might be preoccupied with making the best business or legal decision. Ethical principles don’t appear to be relevant, so they don’t enter into our decision, and we behave unethically as a result.

The story of the Ford Pinto, an infamous case from the 1970s, illustrates how ethical fading at the time of the decision can lead to disastrous results. In the case of rear-end collisions, the Pinto’s gas tank was found to explode at an unacceptable frequency. And because the car’s doors jammed up during accidents, numerous people died in Pinto accidents in the 1970s.

In the aftermath of the scandal, the decision process that led to the Pinto’s faulty design was scrutinized. Under intense competition from Volkswagen, Ford had rushed the Pinto into production in a significantly shorter time period than was usually the case. The potential danger of ruptured fuel tanks was discovered in preproduction crash tests, but with the assembly line ready to go, the decision was made to manufacture the car anyway. This decision was based on a cost-benefit analysis that weighed the minimal cost of repairing the flaw (about $11 per vehicle at the time) against the cost of paying off potential lawsuits following accidents. Ford deemed it would be cheaper to pay off lawsuits than to make the repair. The Pinto was manufactured with its faulty design for eight more years.

We suspect that none of the Ford executives who were involved in this now-notorious decision would have predicted in advance that they would make such an unethical choice. Nonetheless, they made a choice that maimed and killed many people. Why? It appears that, at the time of the decision, they viewed it as a “business decision” rather than an “ethical decision.” Taking an approach heralded as “rational” in most business-school curriculums, the executives conducted a cost-benefit analysis that provided a lens through which they viewed and made the decision. Ethics faded from the decision; the moral dimensions of injuries and deaths were not part of the equation. Because the calculations suggested that producing the car without redesign was the best business decision, the dangerous fuel tank remained.

What causes ethical fading? Our body’s innate needs may be partly to blame. Visceral responses dominate at the time we make decisions.11 Such mechanisms are hardwired into our brains to increase our chances of survival. Hunger, for example, is a message that our body needs nourishment. Pain signals that we may be facing danger in our environment. Our behavior in such situations becomes automatic, geared toward addressing the messages from our brain. Our responses to such influences take over at the time of the decision to ensure our chances of survival.

Such mechanisms obviously provide valuable information that can guide us toward necessary behaviors, such as eating to nourish ourselves or fleeing danger. But our visceral responses can also be counterproductive in other domains. Suppose you decide to wake up early tomorrow to get a head start on cutting down your to-do list. You set your alarm for 5:30 a.m. with every intention of waking up early. When the alarm goes off, however, the desire to get more sleep overwhelms all other considerations. You turn off the alarm and go back to sleep. At the time of the decision, visceral responses lead to an inward focus dominated by short-term gains. We direct our attention toward satisfying our innate needs, which are driven by self-preservation. Other goals, such as concern for others’ interests and even our own long-term interests, vanish. In the case of the Pinto fuel tank decision, the pressures of competition likely produced feelings akin to the survival instinct: Avoiding market loss, getting a big bonus, and “looking good” within the company became the sole goals of the Ford executives at the time of the decision. Ethical considerations faded away.

And, as noted earlier, when we face ethical dilemmas, our actions often precede reasoning. In other words, we make quick decisions based on fleeting feelings rather than on carefully calculated reasoning. Our visceral responses are so dominant at the time of the decision that they overshadow all other considerations. We want to help our company maintain its market share. We want to earn profits and bonuses. As a result, want wins, and should loses. It is only later, behavioral ethics researchers argue, that we engage in any type of moral reasoning. The purpose of this moral reasoning is not to arrive at a decision—it’s too late for that—but to justify the decisions we have already made.

Postdecision: Recollection Biases

As we gain distance from our visceral responses to an ethical dilemma, the ethical implications of our choices come back into full color. We are faced with a contradiction between our beliefs about ourselves as ethical people and our unethical actions. This type of discrepancy is unsettling, to say the least, and we are likely to be motivated to reduce the dissonance that results. So strong is the need to do so that researchers found in one study that offering people an opportunity to wash their hands after behaving immorally reduced their need to compensate for an immoral action (for example, by volunteering to help someone).12 In this study, the opportunity to cleanse oneself of an immoral action—in this case, physically—was sufficient to restore one’s self-image; no other action was needed.

Individuals can also restore their self-image through psychological cleansing.13 Psychological cleansing is an aspect of moral disengagement, a process that allows us to selectively turn our usual ethical standards on and off at will. For example, Neeru Paharia and Rohit Deshpandé have found that consumers who desire an article of clothing that they know was produced with child labor reconcile their push-pull attraction to the purchase by reducing the degree to which they view child labor as a societal problem.14 Similarly, Max’s work with Lisa Shu and Francesca Gino shows that when people are in environments that allow them to cheat, they reduce the degree to which they view cheating as morally problematic.15 The process of moral disengagement allows us to behave contrary to our personal code of ethics, while still maintaining the belief that we are ethical people.

Psychological cleansing can take different forms. Just as our predictions of how we will respond to an ethical dilemma diverge from how we behave in the heat of the moment, our recollections of our behavior don’t match our thoughts at the actual time of the decision. Our memory is selective; specifically, we remember behaviors that support our self-image and conveniently forget those that do not. We rationalize unethical behavior, change our definition of ethical behavior, and, over time, become desensitized to our own unethical behavior.

Reflecting back on their high school or college days, most people remember an easygoing lifestyle, laughter, fun, and excitement. They may not remember specific conversations or what they did on a daily basis, but their recollections are probably vaguely positive. Most likely, they had very different perceptions of their lives when they were actually attending high school or college. They probably have forgotten the specifics, such as getting up early for an 8:00 a.m. class, suffering through four finals in two days, or obsessing about a boyfriend or girlfriend who failed to call. Similarly, when we reflect on the ethicality of our past behavior, we focus on abstract principles, not the small details of our actions—the forest, not the trees. Instead of thinking about a particular lie that you told or a particular misstatement in your finances, you are likely to think abstractly about your general behavior and to conclude from this perspective that you generally act according to your ethical principles.

Our inflated view of our own ethicality is also enabled by our tendency to become “revisionist historians.” After making the decision not to enroll your sick child in a clinical trial but instead to make sure your child receives the best treatment available, you quickly reformulate that decision as an example of your competence and diligence in examining and assessing the available medical options. Self-serving biases are responsible for such revisionist impulses. As we discussed in Chapter 3, two people can look at the same situation very differently, reflecting on what is advantageous to themselves and forgetting or never “coding” that which is not. When we recall our past behavior, these self-serving biases help to hide our unethical actions. The implicit goal is not to arrive at an accurate picture of ourselves, but rather to create a picture that fits with our desired self-view.

If we do remember details, we focus on times when we told the truth or stood up for our principles; meanwhile, we forget the lies we told or the times when we bowed under pressure. Upon looking back at a particular job negotiation, for instance, an applicant might remember that she told the interviewer the truth about where she wanted to live and whether she would be willing to relocate, but conveniently forget that she lied about how much she was currently earning. Because we are motivated by a desire to see ourselves as ethical people, we remember the actions and decisions that were ethical and forget, or never even process, those that were not, thereby leaving intact our image of ourselves as ethical.

But our self-serving biases are not completely foolproof. Occasionally, we might actually “see” that, yes, perhaps we did behave unethically in a given situation. Typically, however, we find ways to internally “spin” this behavior, whether by rationalizing our role, changing our definition of what’s ethical, or casting unethical actions in a more positive light. Bill Clinton argued that he didn’t have “sexual relations” with Monica Lewinsky, a lie that he might have justified by changing the standard definition of “sexual relations” in his mind. Similarly, accountants might decide that they engaged in “creative accounting” rather than broke the law.

We are also experts at deflecting blame. Psychologists have long known that we like to blame other people and other things for our failures and take personal credit for our successes. We are able to maintain a positive self-image when we blame problems on influences outside of our control—whether the economy, a boss, or a family member—and take personal credit for all that has gone well thanks to our intelligence, intuition, or personality. A used-car salesman can view himself as ethical, despite selling someone a car that leaks oil, by noting that the potential buyer didn’t ask the right questions. Guards responsible for carrying out the death penalty rationalize their actions by placing responsibility on the legal system: “I’m just following the law.” And when caught engaging in unethical but legal acts, many people working in business environments are quick to note that the law permits their behavior and that they are maximizing shareholder value.

The hierarchies found in most organizations provide a built-in source of blame: one’s boss. Do any of these phrases sound familiar? “I’m just doing my job.” “Ask the boss, not me.” “I just follow orders.” The reverse is also true; bosses face strong temptations to blame their employees for unethical behavior and claim personal innocence. Kenneth Lay rationalized his unethical actions at Enron by blaming Andrew Fastow, the firm’s chief financial officer. Lay argued that Fastow misled him and Enron’s board of directors about the off-the-book partnerships that eventually led to the company’s demise. While admitting that the actions were wrong, Lay minimized his role in them. He preserved his ethical image, at least in his own mind.

Ethical spinning is also inherent in the cliché “Everybody’s doing it.” We all cheat on our taxes, don’t we? So powerful is this rationalization through blanket blame that it was Ben Johnson’s defense for steroid use, which cost him his 1988 Olympic Gold Medal. This rationalization itself may be subject to bias. Ann has found that the more tempted we are to behave unethically, the more common—and thus acceptable—we perceive the unethical action to be.16 That is, the bigger the deduction you’ll get for cheating on your taxes, the more likely you will be to believe that others are cheating as well.

If you can’t manage to spin your ethical behavior to your advantage, you can always change your ethical standards. In professions that require employees to bill their hours, such as consulting or the law, new employees may strongly believe they would never bill hours they hadn’t accrued. As time goes on, however, an employee might one day find herself short an hour of the “standard” billable hours. To make up this shortage, she adds fifteen minutes each to four projects. What’s the big deal about rounding up? The big deal is what has happened psychologically. The ethical standard to which the employee held herself has shifted. The line between what is ethical and what is unethical has changed.

Once someone has adjusted her ethical standards, the power of her moral principles diminishes. There may no longer be a line she won’t cross. This process occurs so gradually and incrementally that she won’t discern each step she takes. A month after fudging her time by an hour, the consultant may find she has a two-hour deficit. Adding an additional hour for the week becomes the “new normal,” and she doesn’t even code it as being unethical anymore. Over time, the consultant may find herself overbilling by ten hours a week. Previously, she would never have found that amount of cheating to be acceptable under any circumstance, but the decision never involved ten hours of cheating; rather, she made a series of ten one-hour decisions—and a small adjustment to her ethical standard each time. To make matters worse, we can become desensitized as our exposure to unethical behavior increases. For the consultant, as ethical numbness sets in, each one-hour lie becomes less ethically painful. And to take a more dramatic example, prison counselors on “execution support teams,” who work with the families of inmates and their victims, tend to become more and more morally disengaged the more executions they witness.17

Accounts of Bernard Madoff’s Ponzi scheme suggest just how slippery a slope can become. Madoff’s scheme involved paying certain investors with the money from other investors, a practice that allegedly began when Madoff lost money on trades and needed a little extra cash to cover the losses from those investments. Over time, the amount of cash Madoff needed to cover his losses grew—and so did the extent of his deception. So incremental was the scam that it went unnoticed by regulators for at least thirty years. Why didn’t Madoff’s auditors notice his transgressions? Having analyzed how our own unethical decisions come about, in the next chapter we will consider the related question of how we so often fail to fully notice and act on the unethical behavior of others.