An organization’s quality management system (QMS) focuses on the strategies and tactics necessary to allow that organization to successfully achieve its quality goals and objectives and to provide high-quality products and services. As part of the QMS, methods and techniques need to exist that:

-

Monitor the effectiveness of those strategies and tactics (cost of quality and return on investment)

-

Continually improve those strategies and tactics (process improvement models)

-

Deal with problems identified during the implementation and performance of those strategies and tactics (corrective action procedures)

-

Prevent problems from occurring during the implementation and performance of those strategies and tactics (defect prevention)

Analyze COQ categories (prevention, appraisal, internal failure, external failure) and return on investment (ROI) metrics in relation to products and processes. (Analyze)

BODY OF KNOWLEDGE II.B.1

Cost of Quality (COQ)

Cost of quality, also called cost of poor quality, is a technique used by organizations to attach a dollar figure to the costs of producing and/or not producing high-quality products and services. In other words, the cost of quality is the cost of preventing, finding, and correcting defects (nonconformances to the requirements or intended use). The costs of quality represent the money that would not need to be spent if the products could be developed or the services could be provided perfectly the first time, every time. According to Krasner (1998), “cost of software quality is an accounting technique that is useful to enable our understanding of the economic trade-offs involved in delivering good quality software.” The costs of building the product or providing the service perfectly the first time do not count as costs of quality. Therefore, the costs of performing software development and of perfective and adaptive maintenance activities do not count as costs of quality, including the costs of:

-

Requirements elicitation and specification

-

Architectural and detailed design

-

Coding

-

Creating the initial build and subsequent builds to implement additional requirements

-

Shipping and installing the initial release and subsequent feature releases of a product into operations

There are four major categories of cost of quality: prevention, appraisal, internal failure, and external failure costs. The total cost of quality is the sum of the costs spent on these four categories.

-

Prevention cost of quality is the total cost of all activities used to prevent poor quality and defects from getting into products or services. Examples of prevention cost of quality include the costs of:

-

Quality training and education

-

Quality planning

-

Supplier qualification and supplier quality planning

-

Process capability evaluations including quality system audits

-

Quality improvement activities including quality improvement team meetings and activities

-

Process definition and process improvement

-

Appraisal cost of quality is the total cost of analyzing the products and services to identify any defects that do make it into those products and services. Examples of appraisal cost of quality include the costs of:

-

Peer reviews and other technical reviews focused on defect detection of new or enhanced software

-

Testing of new or enhanced software

-

Analysis, review and testing tools, databases, and test beds

-

Qualification of supplier's products, including software tools

-

Process, product, and service audits

-

Other verification and validation (V&V) activities

-

Measuring product quality

-

Internal failure cost of quality is the total cost of handling and correcting failures that were found internally before the product or service was delivered to the customer and/or users. Examples of internal failure cost of quality include the costs of:

-

Scrap—the costs of software that was created but never used

-

Recording failure reports and tracking them to resolution

-

Debugging the failure to identify the defect

-

Correcting the defect

-

Rebuilding the software to include the correction

-

Re-peer reviewing the product or service after the correction is made

-

Testing the correction and regression testing other parts of the product or service

-

External failure cost of quality is the total cost of handling and correcting failures that were found after the product or service has been made available externally to the customer and/or users. Examples of external failure cost of quality include many of the same correction costs that were included in the internal failure costs of quality:

-

Recording failure reports and tracking them to resolution

-

Debugging the failure to identify the defect

-

Correcting the defect

-

Rebuilding the software to include the correction

-

Re-peer reviewing the product or service after the correction is made

-

Testing the correction and regression testing other parts of the product or service

In addition, external failure costs of quality include the costs of the failure’s occurrence in operations. Examples of these costs include:

-

Warranties, service level agreements, performance penalties, and litigation

-

Losses incurred by the customer, users and/or other stakeholders because of lost productivity or revenues due to product or service downtime

-

Product recalls

-

Corrective releases and installation of those corrective releases

-

Technical support services, including help desks and field service

-

Loss of reputation or goodwill

-

Customer dissatisfaction and lost sales
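To make the roll-up concrete, the following Python sketch sums cost records by the four categories described above, then reports each category's share of the total and the total cost of quality as a percentage of development cost. The category names follow the text; the record format, the dollar figures, and the function name `summarize_coq` are hypothetical, chosen only for illustration.

```python
from collections import defaultdict

# The four cost of quality categories described in the text.
CATEGORIES = ("prevention", "appraisal", "internal_failure", "external_failure")


def summarize_coq(records, development_cost):
    """Roll (category, dollars) records up into a cost of quality summary.

    records: iterable of (category, amount) pairs (a hypothetical format).
    development_cost: total development cost, used as the denominator.
    """
    totals = defaultdict(float)
    for category, amount in records:
        if category not in CATEGORIES:
            raise ValueError(f"unknown COQ category: {category}")
        totals[category] += amount

    total_coq = sum(totals[c] for c in CATEGORIES)
    shares = {
        c: (totals[c] / total_coq * 100 if total_coq else 0.0) for c in CATEGORIES
    }
    return {
        "by_category": {c: totals[c] for c in CATEGORIES},
        "share_percent": shares,
        "total_coq": total_coq,
        "percent_of_development": total_coq / development_cost * 100,
    }


# Hypothetical figures for one project.
records = [
    ("prevention", 20_000),        # quality training, process definition
    ("appraisal", 45_000),         # peer reviews and testing
    ("internal_failure", 60_000),  # debugging and rework before release
    ("external_failure", 75_000),  # help desk, corrective releases
]
summary = summarize_coq(records, development_cost=500_000)
print(summary["total_coq"])               # 200000.0
print(summary["percent_of_development"])  # 40.0
```

Tracking the same summary release after release is what makes the comparisons with historic or benchmarked values, described later in this section, possible.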

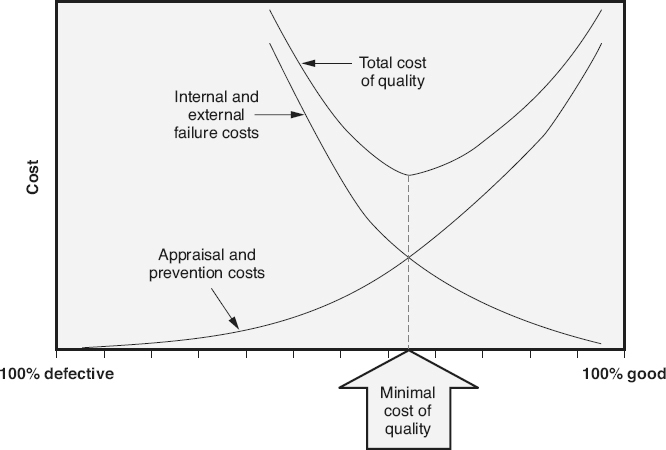

In order to reduce the costs of internal and external failures, an organization must typically spend more on prevention and appraisal. As illustrated in Figure 7.1, the classic view of cost of quality states that there is, theoretically, an optimal balance, where the total cost of quality is at its lowest point. However, this point may be very hard to determine because many of the external failure costs, such as the cost of stakeholder dissatisfaction or lost sales, can be extremely hard to measure or predict.

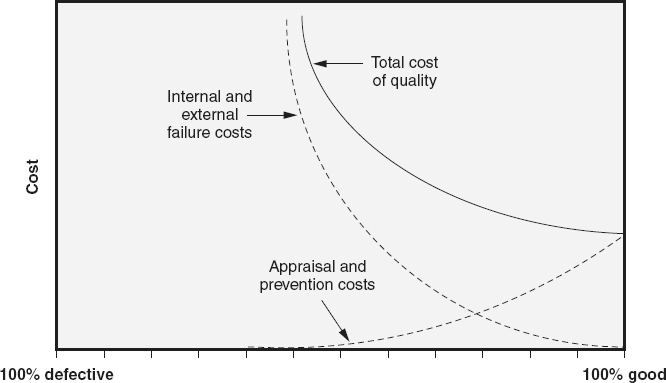

Figure 7.2 illustrates a more modern model of the optimal cost of quality. This view reflects the growing empirical evidence that process improvement activities and prevention techniques are subject to increasing cost-effectiveness. This evidence seems to indicate that near-perfection can be reached for a finite cost (Campanella 1990). For example, Krasner (1998) quotes a study of 15 projects over three years at Raytheon Electronic Systems (RES) as they implemented the Capability Maturity Model (CMM). At maturity level 1, the total cost of software quality ranged from 55 to 67 percent of the total development costs. As maturity level 3 was reached, the total cost of software quality dropped to an average of 40 percent of the total development costs. After three years, the total cost of software quality had dropped to approximately 15 percent of the total development costs, with a significant portion of that being prevention cost of quality.

Figure 7.1

Classic model of optimal cost of quality balance (based on Campanella [1990]).

Figure 7.2

Modern model of optimal cost of quality (based on Campanella [1990]).

Cost of quality information can be collected for the current implementation of a project and/or process, and then compared with historic, baselined, or benchmarked values, or trended over time and considered with other quality data to:

-

Identify process improvement opportunities by identifying areas of inefficiency, ineffectiveness, and waste.

-

Evaluate the impacts of process improvement activities.

-

Provide information for future risk-based trade-off decisions between costs and product integrity requirements.

Return on Investment (ROI)

Return on investment is a financial performance measure used to evaluate the benefit of an investment or to compare the benefits of different investments. “ROI has become popular in the last few decades as a general purpose metric for evaluating capital acquisitions, projects, programs, and initiatives, as well as traditional financial investments in stock shares or the use of venture capital” (business-case-analysis.com).

There are two primary equations used to calculate ROI:

-

ROI = (Cumulative Income/Cumulative Cost) x 100% (Westcott 2006). This ROI equation shows the percentage of the investment returned to date. When this ROI calculation reaches 100 percent, the investment has been “paid back”; values above 100 percent indicate a positive return on the investment.

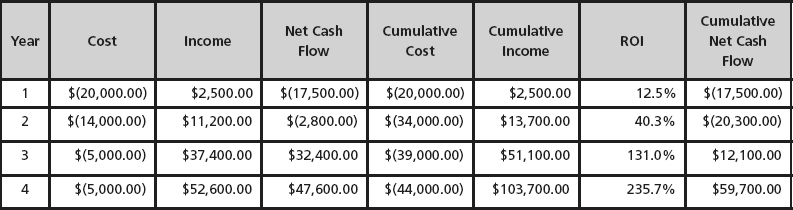

An example of the use of this equation is illustrated in Table 7.1. At the end of year one in this example, only 12.5 percent of the investment to date has been returned. At the end of year two, 40.3 percent of the investment to date has been returned. At the end of year three, 131.0 percent of the investment to date has been returned and the investment to date has been “paid back” with a profit. At the end of year four, 235.7 percent of the investment to date has been returned, or approximately 2.36 times the investment.

-

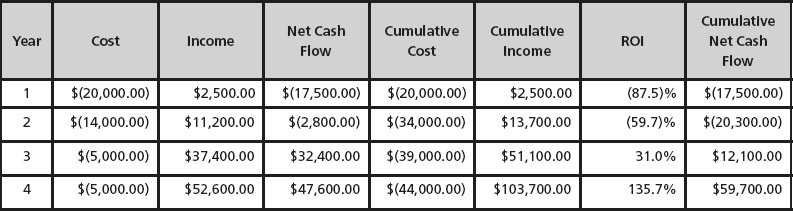

ROI = ((Cumulative Income - Cumulative Cost)/Cumulative Cost) x 100% (based on Juran 1999). This ROI equation shows the percentage profit on the investment to date. When this ROI calculation reaches zero, the investment has been “paid back”; values above zero indicate a positive return on the investment. An example of the use of this equation is illustrated in Table 7.2, which shows the same cost and income as Table 7.1 but uses this second equation to calculate ROI. At the end of year one in this example, there is a negative profitability of 87.5 percent to date. At the end of year two, there is a negative profitability of 59.7 percent to date. At the end of year three, the investment has been paid back and there is a positive profitability of 31.0 percent to date. At the end of year four, there is a positive profitability of 135.7 percent to date.
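Both ROI equations are simple enough to express directly in code. The following Python sketch uses hypothetical cumulative cost and income figures (not the values behind Tables 7.1 and 7.2) and illustrates that the two equations always differ by exactly 100 percentage points, so they agree on when an investment has been paid back.

```python
def roi_benefit(cumulative_income, cumulative_cost):
    """First equation: percentage of the investment returned to date."""
    return cumulative_income / cumulative_cost * 100


def roi_profit(cumulative_income, cumulative_cost):
    """Second equation: percentage profit on the investment to date."""
    return (cumulative_income - cumulative_cost) / cumulative_cost * 100


# Hypothetical cumulative totals at the end of each year: (year, cost, income).
history = [
    (1, 100_000, 10_000),
    (2, 150_000, 60_000),
    (3, 175_000, 230_000),
]
for year, cost, income in history:
    benefit = roi_benefit(income, cost)
    profit = roi_profit(income, cost)
    # Paid back when the first equation reaches 100% (second reaches 0%).
    status = "paid back" if benefit >= 100 else "not yet paid back"
    print(f"Year {year}: {benefit:.1f}% returned, {profit:+.1f}% profit ({status})")
```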

It should be noted that ROI is a very simple method for evaluating an investment or comparing multiple investments. Neither of the two ROI equations takes into consideration the present value of an amount received in the future, as a net present value (NPV) analysis would. In other words, ROI ignores the time value of money. In fact, time is not taken into consideration at all in ROI. For example, which of the following is the better investment? Investment A has a cumulative income of $75,000.00. Investment B has a cumulative income of $60,000.00. Both required a total cost of $50,000.00. If the ROI is calculated as ((Income - Cost)/Cost) x 100%, investment A has an ROI of 50 percent and investment B has an ROI of 20 percent. From just this information, investment A looks like the better investment. However, consider the time factor. Investment A took five years to see the 50 percent ROI, so it returned an average of 10 percent per year. Investment B took only one year to see the 20 percent ROI. Now which is the better investment?
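To make the time factor concrete, the sketch below re-evaluates investments A and B in two ways: as a compound annual rate of return and as a net present value at an assumed discount rate. It assumes, for illustration only, that the full cost is paid up front and that the cumulative income arrives as a single amount at the end of the period; real cash flows are usually spread over time, and the 5 and 8 percent discount rates are arbitrary choices.

```python
def compound_annual_return(income, cost, years):
    """Annual rate (percent) that would grow `cost` into `income` over `years`."""
    return ((income / cost) ** (1 / years) - 1) * 100


def npv_lump_sum(income, cost, years, discount_rate):
    """NPV assuming cost is paid now and income arrives as one amount after `years`."""
    return income / (1 + discount_rate) ** years - cost


# (cumulative income, total cost, years) for the two investments in the text.
investments = {"A": (75_000, 50_000, 5), "B": (60_000, 50_000, 1)}

for name, (income, cost, years) in investments.items():
    simple_average = (income - cost) / cost * 100 / years
    print(
        f"{name}: simple average {simple_average:.1f}%/yr, "
        f"compound {compound_annual_return(income, cost, years):.2f}%/yr, "
        f"NPV at 5% = ${npv_lump_sum(income, cost, years, 0.05):,.0f}, "
        f"NPV at 8% = ${npv_lump_sum(income, cost, years, 0.08):,.0f}"
    )
```

Under these assumptions, A has the higher NPV at a 5 percent discount rate, while B has the higher NPV at 8 percent. That dependence on the cost of money is exactly the information a bare ROI percentage hides.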

Table 7.1

ROI as benefit to the investor.

Table 7.2

ROI as percentage profit.

Another issue is that there are no established standards for how organizations measure income and cost as inputs into ROI equations. For example, items like overhead, infrastructure, training, and ongoing technical support might or might not be counted as costs. Some organizations may consider only revenues as income, while others may assign a monetary value to factors like improved reputation in the marketplace or stakeholder goodwill and include them as income. “This flexibility, then, reveals another limitation of using ROI, as ROI calculations can be easily manipulated to suit the user’s purposes, and the results can be expressed in many different ways” (Investopedia.com 2016).

Care must be taken when using ROI as a financial performance measure to make certain that the same equation is used, that incomes and costs are measured consistently, and that similar time intervals are used. This is especially true when making comparisons between investments.

Define and describe elements of benchmarking, lean processes, the six sigma methodology, and use the Define, Measure, Analyze, Improve, Control (DMAIC) model and the plan-do-check-act (PDCA) model for process improvement. (Apply)

BODY OF KNOWLEDGE II.B.2

Benchmarking

Benchmarking

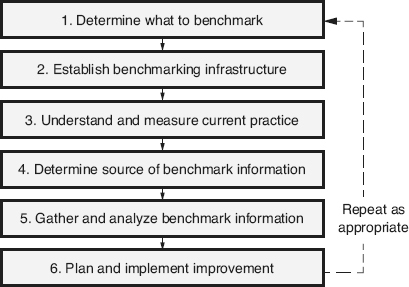

is the process used by an organization to identify, understand, adapt, and adopt outstanding practices and processes from others, anywhere in the world, to help that organization improve the performance of its processes, projects, products, and/or services. Benchmarking can provide management with the assurance that quality and improvement goals and objectives are aligned with the good practices of other teams or organizations. At the same time, benchmarking helps make certain that those goals and objectives are attainable, because others have attained them. The use of benchmarking can help an organization “think outside the box,” and can result in breakthrough, evolutionary improvements. Figure 7.3

illustrates the steps in the benchmarking process.

Step 1:

The first step is to determine what to benchmark, that is, which process, project, product, or service the organization wants to analyze and improve. This step involves assessing the effectiveness, efficiency, strengths, and weaknesses of the organization’s current practices, identifying areas that require improvement, prioritizing those areas, and selecting the area to benchmark first. The Certified Manager of Quality/Organizational Excellence Handbook (Westcott 2006) says, “examples of how to select what to benchmark include systems, processes, or practices that:

-

Incur the highest costs

-

Have a major impact on stakeholder satisfaction, quality, or cycle time

-

Strategically impact the business

-

Have the potential of high impact on competitive position in the marketplace

-

Present the most significant area for improvement

-

Have the highest probability of support and resources if selected for improvement”
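One common way to carry out the prioritization in step 1 is a weighted scoring matrix. The following Python sketch is a hypothetical illustration: the candidate areas, the 1 to 5 ratings, and the weights are all assumptions, and the criteria loosely map to a subset of those listed above.

```python
# Hypothetical weights for a subset of the selection criteria (they sum to 1.0).
WEIGHTS = {
    "cost": 0.25,                   # incurs the highest costs
    "stakeholder_impact": 0.30,     # impact on satisfaction, quality, cycle time
    "strategic_impact": 0.20,       # strategic or competitive impact
    "improvement_potential": 0.15,  # most significant area for improvement
    "support_likelihood": 0.10,     # probability of support and resources
}


def weighted_score(ratings, weights=WEIGHTS):
    """Combine 1-5 ratings for each criterion into a single weighted score."""
    return sum(weight * ratings[criterion] for criterion, weight in weights.items())


def prioritize(candidates, weights=WEIGHTS):
    """Return candidate areas ordered from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name], weights),
        reverse=True,
    )


candidates = {
    "system testing": {"cost": 5, "stakeholder_impact": 4, "strategic_impact": 3,
                       "improvement_potential": 4, "support_likelihood": 4},
    "requirements elicitation": {"cost": 3, "stakeholder_impact": 5, "strategic_impact": 4,
                                 "improvement_potential": 5, "support_likelihood": 3},
    "release management": {"cost": 2, "stakeholder_impact": 3, "strategic_impact": 2,
                           "improvement_potential": 3, "support_likelihood": 5},
}
print(prioritize(candidates))  # the first entry is the area to benchmark first
```

The ranked list also serves as the prioritized list that the text suggests revisiting for future benchmarking studies.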

Step 2:

The second step in the benchmarking process is to establish the infrastructure to do the benchmarking study. This includes identifying a sponsor to provide the necessary resources and to champion the benchmarking activities within the organization. This also includes identifying the members of the benchmarking team who will actually perform the benchmarking activities. Members of this team should include individuals who are knowledgeable and involved in the area being benchmarked, and individuals who are familiar with benchmarking practices.

Figure 7.3

Steps in the benchmarking process.

Step 3:

In order to do an accurate comparison, the benchmarking team must obtain a thorough, in-depth understanding of the current practices in the selected area. Key performance factors for the area being benchmarked are identified, and the current values of those key factors are measured. Current practices for the selected area are studied, mapped as necessary, and analyzed.

Step 4:

Determine the source of benchmarking good practice information. Notice that the information-gathering steps of benchmarking focus on good practice. There are many good practices in the software industry. Good practices become best practices when they are adopted and adapted to meet the exact requirements, culture, infrastructure, systems, and products of the organization performing the benchmarking. During this fourth step, a search and analysis is performed to determine the good practice leaders in the selected area of study. There are several choices that can be considered, including:

-

Internal benchmarking: Looks at other teams, projects, functional areas, or departments within the organization for good practice information.

-

Competitive benchmarking: Looks at direct competitors, either locally or internationally, for good practice information. This information may be harder to obtain than internal information, but industry standards, trade journals, competitors’ marketing materials, and other sources can provide useful data.

-

Functional benchmarking: Looks at other organizations performing the same functions or practices, but outside the industry. For example, an information technology (IT) team might look for good practices in other IT organizations in other industries. IEEE, ISO, IEC and other standards, and the Capability Maturity Model Integration (CMMI) models are likely sources of information, in addition to talking directly to individual organizations.

-

Generic benchmarking: Looks outside the box. For example, an organization that wants to improve:

-

On-time delivery practices might look to FedEx

-

Just-in-time, lean inventory practices might look to Wal-Mart or Toyota

-

Its product’s graphical user interface (GUI) might look to Google or Amazon’s web pages

It does not matter if the organization is not in any of these fields.

Step 5:

Benchmarking good practices information is gathered and analyzed. There are many mechanisms for performing this step, including site visits to targeted benchmark organizations, partnerships where the benchmark organization provides coaching and mentoring, research studies of industry standards or literature, evaluations of good practice databases, Internet searches, attending trade shows, hiring consultants, stakeholder surveys, and other activities. The objective of this step is to:

-

Collect information and data on the performance of the identified benchmark leader and/or on good practices

-

Evaluate and compare the organization’s current practices with the benchmark information and data

-

Identify performance gaps between the organization’s current practices, and the benchmark information and data, in order to identify areas for potential improvement and lessons learned

This comparison is used to determine where the benchmark is better and by how much. The analysis then determines why the benchmark is better. What specific practices, actions, or methods result in the superior performance?
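The gap analysis in step 5 can be sketched as a comparison of key performance factors. In the hypothetical Python example below, the factor names and values are invented for illustration; each factor is marked as higher-is-better or lower-is-better, and the gaps are ranked by their size relative to the benchmark value. Explaining *why* the benchmark performs better still requires studying its practices.

```python
def performance_gaps(current, benchmark, higher_is_better):
    """Return (factor, gap, percent_gap) tuples, largest relative gap first.

    A positive gap means the benchmark outperforms the organization;
    factors where the organization matches or beats the benchmark are omitted.
    """
    gaps = []
    for factor, bench_value in benchmark.items():
        ours = current[factor]
        if higher_is_better[factor]:
            gap = bench_value - ours
        else:
            gap = ours - bench_value
        if gap > 0:
            percent = gap / abs(bench_value) * 100 if bench_value else float("inf")
            gaps.append((factor, gap, percent))
    return sorted(gaps, key=lambda item: item[2], reverse=True)


# Hypothetical key performance factors: step 3 baseline vs. step 5 benchmark data.
current = {"defect_detection_pct": 70, "cycle_time_days": 30,
           "escaped_defects_per_kloc": 0.8, "review_coverage_pct": 95}
benchmark = {"defect_detection_pct": 90, "cycle_time_days": 20,
             "escaped_defects_per_kloc": 0.2, "review_coverage_pct": 90}
higher_is_better = {"defect_detection_pct": True, "cycle_time_days": False,
                    "escaped_defects_per_kloc": False, "review_coverage_pct": True}

gaps = performance_gaps(current, benchmark, higher_is_better)
for factor, gap, percent in gaps:
    print(f"{factor}: gap {gap:g} ({percent:.0f}% of benchmark value)")
```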

Step 6:

For benchmarking to be useful, the lessons learned from the good practice analysis must be used to actually improve the organization’s current practices. To complete the final step in the benchmarking process:

-

Obtain management buy-in and acceptance of the findings from the benchmarking study

-

Incorporate the benchmarked findings into business analysis and decision-making

-

Create a plan of specific actions and assignments, to adapt (tailor) and adopt the identified good practices, and to turn them into best practices for the organization by filling the performance gaps

-

Pilot those improvement actions, and measure the results against the initial values of the key factors identified in step 3, to monitor the effectiveness of the improvement activities

-

If the piloting was successful, propagate those improvements throughout the organization. For unsuccessful pilots or propagations, appropriate corrective action must be taken

Lessons learned from the benchmarking activities can be leveraged into the improvement of future benchmarking activities. The prioritized list created in the first step of the benchmarking process can be used to consider improvements in other areas, and of course this list should be updated as additional information is obtained over time. Benchmarking must be a continuous process that not only looks at current performance but also continues to monitor key performance indicators into the future as industry practices change and improve.

Plan-Do-Check-Act (PDCA) Model

There are many different models that describe the steps to process improvement. One of the simplest models is the classic plan-do-check-act (PDCA) model, also called the Deming Circle or the Shewhart Cycle. Figure 7.4 illustrates the PDCA model, which includes the following steps:

-

The plan step includes studying the current state of the practice and determining what opportunities and/or needs exist for process improvements. Priorities are established, and one or more process improvements are selected for implementation. For each selected improvement, a plan is created to define the specific objectives, tasks/activities, assignments, resources, budgets, and schedule needed to implement that improvement.

-

The do step implements the plan. This includes:

-

Identifying and involving relevant stakeholders

-

Identifying root causes of problems or nonconformances, investigating alternative solutions and selecting a solution

-

Implementing and testing the selected solution by creating and/or updating systems, policies, standards, processes, work instructions, products/services and/or metrics, as needed

-

Developing and/or providing training as needed

-

Communicating about the change and its status to its stakeholders

-

The check step, also called the study step (plan-do-study-act model), monitors and/or measures the outcomes of the improvement process, and analyzes the resulting process after the plan is implemented to determine if the objectives were met, if the expected improvements actually occurred, and if any new problems were created. In other words, did the plan and its implementation work?

-

During the act step, the knowledge gained during the check step is acted upon. If the plan and implementation worked, action is taken and controls are put in place to institutionalize the process improvement throughout the organization, and the cycle is started over by repeating the plan step for the next improvement. If the plan and implementation did not result in the desired improvement, or if other problems were created, the act step identifies the root causes of the resulting issues and determines the needed corrective actions. In this case, the cycle is started over by repeating the plan step to plan the implementation of those corrective actions. The act step can also involve abandoning the change.
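PDCA is a management cycle rather than an algorithm, but its control flow can be sketched in code. The Python sketch below is a hypothetical illustration: `do` and `measure` are caller-supplied stand-ins for the real implementation and measurement work, `meets_objective` encodes the objectives set in the plan step, and the three-cycle limit before abandoning the change is an arbitrary assumption.

```python
def pdca(name, meets_objective, do, measure, max_cycles=3):
    """Run plan-do-check-act cycles for one improvement.

    Returns (succeeded, history), where history records each step taken.
    """
    history = []
    plan = f"initial plan for {name}"
    for cycle in range(1, max_cycles + 1):
        history.append(f"cycle {cycle} plan: {plan}")       # Plan
        do(plan)                                             # Do
        outcome = measure()                                  # Check
        if meets_objective(outcome):                         # Act: it worked
            history.append(f"cycle {cycle}: institutionalize")
            return True, history
        history.append(f"cycle {cycle}: corrective action")  # Act: replan
        plan = f"corrective action plan {cycle} for {name}"
    history.append("abandon the change")
    return False, history


# Hypothetical defects per release observed after each cycle; objective: fewer than 10.
outcomes = iter([12, 7])
ok, history = pdca(
    "peer review checklist",
    meets_objective=lambda defects: defects < 10,
    do=lambda plan: None,  # stand-in for actually implementing the plan
    measure=lambda: next(outcomes),
)
print(ok, history[-1])
```

Here the first cycle misses its objective, so the act step feeds a corrective action plan into the second cycle, which succeeds and is institutionalized.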

Figure 7.4

Plan-do-check-act model.

Six Sigma

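The Greek letter sigma (σ) is the statistical symbol for standard deviation. As illustrated in Figure 7.5, assuming a normal distribution, plus or minus six standard deviations from the mean (average) would include approximately 99.9999998 percent of all items in the population. This leads to the origin of the concept of a six sigma measure of quality, which is a near-perfection goal of no more than 3.4 defects per million opportunities. (The 3.4 figure reflects the common Six Sigma convention of allowing the process mean to drift by up to 1.5 standard deviations over the long term, which leaves 4.5 standard deviations between the mean and the nearer specification limit.)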
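The arithmetic behind these figures can be checked with the standard normal distribution. The following Python sketch uses only the standard library's `math.erf` and `math.erfc`; the function names are hypothetical, and the 1.5-sigma shift is the Six Sigma convention rather than a property of the normal distribution itself.

```python
import math


def normal_upper_tail(z):
    """P(Z > z) for a standard normal random variable."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def coverage_within(k):
    """Fraction of a normal population within +/- k standard deviations."""
    return math.erf(k / math.sqrt(2))


def dpmo_for_sigma_level(sigma_level, shift=1.5):
    """Long-term DPMO for a sigma level, applying the conventional 1.5-sigma
    mean shift and counting only the nearer specification limit."""
    return normal_upper_tail(sigma_level - shift) * 1_000_000


def dpmo_from_counts(defects, units, opportunities_per_unit):
    """Observed defects per million opportunities."""
    return defects / (units * opportunities_per_unit) * 1_000_000


print(f"{coverage_within(6) * 100:.7f}%")     # 99.9999998%
print(f"{dpmo_for_sigma_level(6):.1f} DPMO")  # 3.4 DPMO
```

Without the shift, the two tails beyond plus or minus six sigma together hold only about 0.002 defects per million, which is why the 3.4 figure and the 99.9999998 percent coverage are both quoted in Six Sigma literature.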

More broadly than that, Six Sigma is a:

-

Philosophy that “views all work as processes that can be defined, measured, analyzed, improved and controlled” (Kubiak 2009)

-

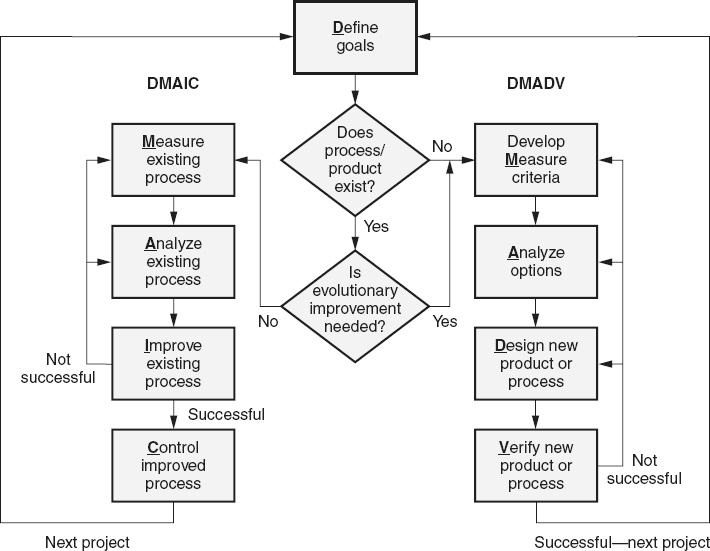

Fact-based, data-driven methodology for eliminating defects in processes by focusing on understanding stakeholder needs, prevention, continual improvement of processes, and reduction of the variation in those processes through the implementation of a rigorous step-by-step approach (DMAIC and DMADV, as illustrated in Figure 7.6)

-

Business management strategy that has evolved into “a comprehensive and flexible system for achieving, sustaining, and maximizing business success” (Pande 2000)

-

Collection of qualitative and quantitative techniques and tools that can be used by organizations and individual practitioners to drive process improvement

Figure 7.5

Standard deviation versus area under a normal distribution curve.

DMAIC Model

The Six Sigma DMAIC model (define, measure, analyze, improve, control) is used to improve existing processes that are not performing at the required level, as measured against critical to x (CTx) requirements (where x is a critical customer/stakeholder requirement such as quality, cost, process, safety, or delivery), through incremental improvement.

-

The define step in the DMAIC model identifies the stakeholders, defines the problem, determines the requirements, and sets goals for process improvement that are consistent with stakeholder needs (“voice of the customer”) and the organization’s strategy. An improvement project is chartered and planned. A team is formed that is committed to the improvement project, and provided with management support (a champion) and the required resources.

-

During the measure step in the DMAIC model, the current process is mapped (if a process map does not already exist). The CTx characteristics of the process being improved are determined. Metrics to measure those CTx characteristics are selected, designed, and agreed upon. A data collection plan is defined, and data are collected from the current process to determine the baselines and levels of variation for each selected metric. This information is used to determine the current process capability and to define the benchmark performance levels of the current process.

-

During the analyze step in the DMAIC model, statistical tools are used to analyze the data from the measure step to fully understand the influences that each input variable has on the process and its resulting outputs. Gap analysis is performed to identify the differences between the current performance of the process and the required performance. Based on these evaluations, the root cause(s) of the problem and/or variation in the process are determined and validated. The objective of the analyze step is to understand the process well enough that it is possible to identify alternative improvement actions during the improve step.

-

During the improve step in the DMAIC model, alternative approaches (improvement actions) to solving the problem and/or reducing the process variations are considered. The team then assesses the costs and benefits, impacts, and risks of each alternative and performs trade-off studies. The team comes to consensus on the best approach and creates a plan to implement the improvements. The plan contains the appropriate actions needed to meet the stakeholders’ requirements. Appropriate approvals for the implementation plan are obtained. A pilot is conducted to test the solution, and measures against the CTx requirements from that pilot are collected and analyzed. If the pilot is successful, the solution is propagated to the entire organization, and measures against the CTx requirements are again collected and analyzed. If the pilot is not successful, appropriate DMAIC steps are repeated as necessary.

-

During the control step in the DMAIC model, the newly improved process is standardized and institutionalized. Controls are put in place to make certain that the improvement gains are sustained into the future. This includes selecting, defining, and implementing key metrics to monitor the process and/or product to identify any future “out of control” conditions. The team develops a strategy for project hand-off to the process owners. This strategy includes propagating lessons learned, and creating documented procedures, training materials, and any other mechanisms necessary to guarantee ongoing maintenance of the improvement solution. The current Six Sigma project is closed, and the team identifies next steps for future process improvement opportunities.
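The measure and control steps both rely on basic statistics. As a sketch under stated assumptions, the Python code below computes the standard process capability indices Cp and Cpk for a CTx metric with lower and upper specification limits, then derives individuals (X) chart limits to flag future out-of-control points. Sigma is estimated from the average moving range divided by the constant d2 = 1.128. The baseline data and specification limits are hypothetical, and a real project would also confirm that the process is stable and approximately normal before trusting these indices.

```python
import statistics

D2 = 1.128  # bias-correction constant for moving ranges of two consecutive points


def sigma_within(data):
    """Estimate short-term sigma from the average moving range."""
    moving_ranges = [abs(b - a) for a, b in zip(data, data[1:])]
    return statistics.mean(moving_ranges) / D2


def process_capability(data, lsl, usl):
    """Cp and Cpk for a metric with lower/upper specification limits."""
    mean = statistics.mean(data)
    sigma = sigma_within(data)
    cp = (usl - lsl) / (6 * sigma)                   # potential capability
    cpk = min(usl - mean, mean - lsl) / (3 * sigma)  # also accounts for centering
    return cp, cpk


def control_limits(data):
    """Lower limit, center line, and upper limit for an individuals (X) chart."""
    center = statistics.mean(data)
    sigma = sigma_within(data)
    return center - 3 * sigma, center, center + 3 * sigma


def out_of_control(points, limits):
    """Points beyond the control limits (the simplest out-of-control rule)."""
    lcl, _, ucl = limits
    return [x for x in points if x < lcl or x > ucl]


# Measure step: hypothetical baseline values of a CTx metric.
baseline = [10, 12, 11, 9, 13, 10, 11, 12]
cp, cpk = process_capability(baseline, lsl=5, usl=17)
limits = control_limits(baseline)
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}")

# Control step: check new observations after the improvement is in place.
print(out_of_control([11, 18, 10], limits))  # [18]
```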

DMADV Model

The Six Sigma DMADV model (define, measure, analyze, design, verify), also known as Design for Six Sigma (DFSS), is used to define new processes and products at Six Sigma quality levels. The DMADV model is also used when the existing process or product has been optimized but still does not meet required quality levels. In other words, the DMADV model is used when revolutionary change (a radical redesign), instead of incremental change, is needed. Figure 7.6 illustrates the differences between the DMAIC and DMADV models.

-

During the define step in the DMADV model, the goals of the design activity are determined based on stakeholder needs and aligned with the organizational strategy. This step mirrors the define step of the DMAIC model.

-

During the measure step in the DMADV model, CTx characteristics of the new product or process are determined. Metrics to measure those CTx characteristics are then selected, designed, and agreed upon. A data collection plan is defined for each selected metric.

-

During the analyze step in the DMADV model, alternative approaches to designing the new product or process are considered. The team then assesses the costs and benefits, impacts, and risks of each alternative, and performs trade-off studies. The team comes to consensus on the best approach.

-

During the design step in the DMADV model, high-level and detailed designs are developed and those designs are implemented and optimized. Plans are also developed to verify the design.

-

During the verify step in the DMADV model, the new process/product is verified to make sure it meets the stakeholder requirements. This may include simulations, pilots, or tests. The new process or product design is then implemented into operations. The team develops a strategy for project handoff to the process owners. The current Six Sigma project is closed and the team identifies next steps for future projects.

Figure 7.6

DMAIC versus DMADV Six Sigma models.

Lean Techniques

While lean principles originated in the continuous improvement of manufacturing processes, these lean techniques have now been applied to software development. The Poppendiecks adapted the seven lean principles to software as follows (Poppendieck 2007):

-

Eliminate waste

-

Build quality in

-

Create knowledge

-

Defer commitment

-

Deliver fast

-

Respect people

-

Optimize the whole

Waste is anything that does not add value, or gets in the way of adding value, as perceived by the stakeholders. Organizations must evaluate the software development and maintenance timelines and reduce those timelines by removing non-value-added wastes. Examples of wastes in software development include:

-

Incomplete work: Work left in various stages of partial completion results in waste. If a task is worth starting, it should be completed before moving on to other work.

-

Extra processes: The process steps should be optimized to eliminate any unnecessary work, bureaucracy, or extra non-value-added activities.

-

Extra features or code: It is a fundamental software quality engineering principle that one should avoid “gold plating,” that is, avoid adding extra features or “nice to have” functionality that is not in the current development plan/cycle. Everything extra adds cost, both direct and hidden, due to increased testing, complexity, difficulty in making changes, potential failure points, and even obsolescence.

-

Task switching: Belonging to multiple teams causes productivity losses due to task switching and other forms of interruption (DeMarco 2001).

-

Waiting: Any step in a process that results in delays, or that causes personnel at the next step to wait, should be reevaluated. Anything interfering with progress is waste since it delays the early realization of value by the stakeholders.

-

Unnecessary motion (to find answers, information): Additional non-value-added steps or unnecessary approval cycles interrupt concentration and cause extra effort and waste. It is important to determine just how much effort is truly required to learn just enough useful information to move ahead with a project.

-

Defects: Another fundamental software quality engineering principle is that rework to correct defects is waste. The goal is to prevent as many defects as possible. If defects do get into the product, the goal is to find those defects as early as possible, when they are the least expensive to correct.

In order to eliminate waste, one technique to use is value stream mapping, which traces a product from raw materials to use. The current value stream of a product or service is mapped by identifying all of the inputs, steps, and information flows required to develop and deliver that product or service. This current value stream is analyzed to determine areas where wastes can be eliminated. Value stream maps are then drawn for the optimized process and that new process is implemented.
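A current value stream is often summarized by its process cycle efficiency: value-added time divided by total lead time. The Python sketch below applies that idea to a hypothetical software change-request flow. The step names and hours are invented, and the rule that flags a step as a waste candidate (non-value-added, or waiting longer than it is worked on) is an illustrative assumption rather than part of the Poppendiecks' method.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    work_hours: float  # time spent actively working on the item in this step
    wait_hours: float  # time the item waits in a queue before this step
    value_added: bool  # would the stakeholder consider this step valuable?


def analyze_value_stream(steps):
    """Summarize lead time, value-added time, and waste candidates."""
    lead_time = sum(s.work_hours + s.wait_hours for s in steps)
    value_added_time = sum(s.work_hours for s in steps if s.value_added)
    waste_candidates = [
        s.name for s in steps if not s.value_added or s.wait_hours > s.work_hours
    ]
    return {
        "lead_time": lead_time,
        "value_added_time": value_added_time,
        "process_cycle_efficiency_pct": value_added_time / lead_time * 100,
        "waste_candidates": waste_candidates,
    }


# Hypothetical current-state value stream for a software change request.
current_state = [
    Step("write change request", 2, 0, True),
    Step("approval board", 1, 40, False),
    Step("implement change", 8, 16, True),
    Step("peer review", 2, 24, True),
    Step("test", 6, 8, True),
]
result = analyze_value_stream(current_state)
print(f"Lead time: {result['lead_time']} h, "
      f"efficiency: {result['process_cycle_efficiency_pct']:.1f}%")
print("Waste candidates:", result["waste_candidates"])
```

Re-running the same analysis on the proposed future-state map gives a simple before-and-after comparison for the optimized process.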

Building quality in requires that quality be built into the software rather than tested in later (which never works). This means that developers focus on:

-

Perceived integrity: Involves how the whole experience with a product affects a stakeholder both now and as time passes.

-

Conceptual integrity: Comes from system concepts working together as a smooth, cohesive whole.

-

Refactoring: Involves adopting the attitude that internal structure will require continual improvement as the system evolves.

-

Testing: Becomes even more critical after a product goes into operations because 50 percent or more of product change occurs when the product is in operations. Having a healthy test suite helps maintain product integrity and helps document the system.

Knowledge is created and learning is amplified through providing feedback mechanisms. For example, developing a large product using short iterations allows multiple feedback cycles as iterations are reviewed with the customer, users, and other stakeholders. The continuous use of metrics and reflections/retrospective reviews throughout the project and process implementation creates additional opportunities for feedback. The team should be taught to use the scientific method to establish hypotheses, conduct rapid experiments, and implement the best alternatives.

Deferring commitment means making irrevocable decisions as late as possible. This helps address the difficulties that can result from making those decisions when uncertainty is present. Of course, “first and foremost, we should try to make most decisions reversible, so they can be made and then easily changed” (Poppendieck 2007). Making decisions at the “last responsible moment” means delaying a decision until the point when failing to make it would eliminate an important alternative and cause decisions to be made by default. Gathering as much information as possible, as the process progresses, allows better, more informed decisions to be made.

Deliver fast means getting the product to the customer/user as fast as possible. The sooner the product is delivered, the sooner feedback from the customer and/or users can be obtained. Fast delivery also means the stakeholders have less time to change their minds before delivery, which can help eliminate the waste of rework caused by requirements volatility.

Organizations and leaders must respect people in order to improve systems. This means creating teams of engaged, thinking, technically competent people who are motivated to design their own work rather than waiting for others to tell them what to do. This requires strong, entrepreneurial leaders who provide people with a clear, compelling, achievable purpose; give those people access to the stakeholders; and allow them to make their own commitments. Leaders provide the vision and resources to the team and help them when needed without taking over.

Optimize the whole is all about systems thinking. Winning is not about being ahead at every stage (optimizing/measuring every task). It is possible to optimize an individual process and actually sub-optimize the entire system. All decisions and changes are made with consideration of their impacts on the entire system, as well as their alignment with organizational goals and critical customer/stakeholder requirements.

Evaluate corrective action procedures related to software defects, process nonconformances, and other quality system deficiencies. (Evaluate)

BODY OF KNOWLEDGE II.B.3

Corrective actions are those actions taken to eliminate the root cause of a problem (nonconformance, noncompliance, defect, or other issue) in order to prevent its recurrence. One of the generic goals of the Capability Maturity Model Integration (CMMI) models is to monitor and control each process, which involves “measuring appropriate attributes of the process or work products produced by the process…” and taking appropriate “…corrective action when requirements and objectives are not being satisfied, when issues are identified, or when progress differs significantly from the plan for performing the process” (SEI 2010, SEI 2010a, SEI 2010b). The plan-do-check-act, Six Sigma, and Lean improvement models discussed previously in this chapter are all examples of models that can be used for corrective action as well as for process improvement.

Corrective action begins once the problem has been reported. However, corrective action is about more than just taking the remedial action necessary to fix the problem. Corrective action also involves:

-

Researching and analyzing one or more problems to determine their root causes

-

Exploring alternatives and developing a plan to eliminate those root causes

-

Implementing that plan to improve the software products and/or processes to prevent the recurrence of the problem

-

Verifying the implementation

-

Verifying the effectiveness of the improvement

-

Analyzing the effects of the problem on the products produced while the problem was occurring, and determining whether these products can be used "as is," if they need to be reworked/corrected, or potentially even be recalled if they have already been released into operations

-

Closure including the retention of quality records for future analysis and management review

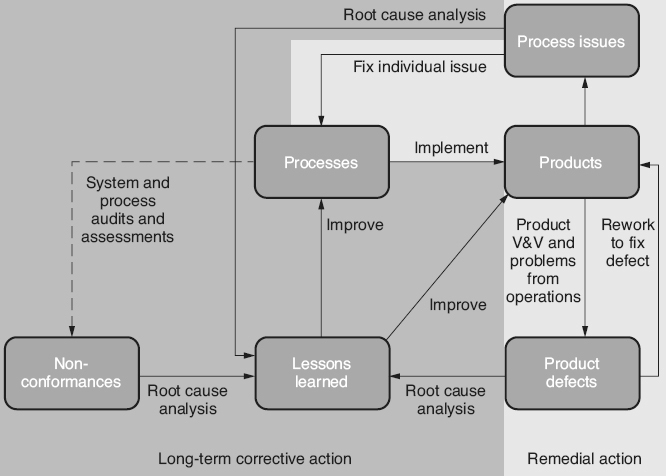

As illustrated in the example in Figure 7.7

, the corrective action process starts with the identification of a problem. Problems in current products, processes or systems can be identified through a variety of sources. For example, they can be identified:

-

As nonconformances or other negative observations during audits

-

Through suggestion systems

-

By quality action teams

-

Through lessons learned during project, process, or system implementation

-

Through the performance of root cause analysis on one or more product problems

-

Through the identification of unstable trends or out-of-control states using metrics

-

Through product verification and validation activities (for example, peer/technical reviews or testing)

The second step in the corrective action process is to assign a champion and/or sponsor for the corrective action and assemble a corrective action planning team. This team determines if remedial action is necessary to stop the problem from affecting the quality of the organization’s products and services until a longer-term solution can be implemented. If so, a remedial action team is assigned to make the necessary corrections. For example, if the source code module being produced does not meet the coding standard, a remedial action might be for team leads to review all newly written or modified code against the coding standard prior to it being baselined. While this is not a permanent solution, and may in fact cause a bottleneck in the process, it helps prevent any more occurrences until a long-term solution can be reached. If remedial action is needed, it is implemented as appropriate. For a software product problem, remedial action is typically the

correction of the immediate underlying defect(s) that is the direct cause of the problem. Remedial action has an immediate impact on the quality of the software process or product by eliminating the defect. Typical remedial actions include:

Figure 7.7

Corrective action process—example.

-

Fixing the product defect, testing the correction, and regression testing the product

-

If the product problem was reported from operations:

  - Including the fix in a corrective release of the product

  - Including the fix in the next planned feature release of the product

-

Rewriting/correcting the process or product documentation

-

Rewriting/correcting the process or product training materials

-

Training or retraining employees or other stakeholders

All corrective actions should be commensurate with the risk and impact of the problem. Therefore, it may be determined that it is riskier to fix the problem than to leave it uncorrected, in which case the problem is resolved with no action taken and the corrective action process is closed.

The third step in the corrective action process is the initiation of a long-term correction. If the problem is determined to be an isolated incident with minimal impact, remedial action may be all that is required. However, this may simply eliminate the symptoms of the problem and allow the problem to recur in the future. For multiple occurrences of a problem, a set of problems or problems with higher impact, more extensive analysis is required to implement long-term corrective actions in order to reduce the possibility of additional occurrences of the problem in the future. If the corrective action planning team determines that long-term corrective action is needed, that team researches the problem and identifies its root cause through the use of statistical techniques, data gathering metrics, analysis tools and/or other means. For the coding standard example, the root cause might be that:

-

The coding standard is out of date because a newer coding language is now in use

-

New hires have not been trained on the coding standard

-

Management has not enforced the coding standard and therefore the engineers consider it optional

Once the root cause is identified, the team develops alternative approaches to solving the identified root cause based on their research. The team analyzes the cost and benefits, risks, and impacts of each alternative solution and performs trade-off studies to come to a consensus on the best approach. The corrective action plan addresses improvements to the control systems that will avoid potential recurrence of the problem. If the team determines that the root cause is lack of training, not only must the current staff be trained, but controls must be put in place to make certain that all future staff members (new hires, transfers, outsourced personnel) receive the appropriate training in the coding standard as well.
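A weighted scoring matrix is one common way to organize the trade-off study described above. The Python sketch below is illustrative only: the criteria weights, the three training alternatives, and their 1-to-5 scores are all hypothetical, and the ranking it produces is an input to the team's consensus rather than a substitute for it.

```python
# Hypothetical criteria weights (summing to 1.0). Every score is "higher is better":
# for cost and risk, 5 means lowest cost or lowest risk; for impact, least disruption.
WEIGHTS = {"benefit": 0.4, "cost": 0.3, "risk": 0.2, "impact": 0.1}

# Hypothetical 1-5 scores agreed by the corrective action planning team.
alternatives = {
    "Classroom training": {"benefit": 4, "cost": 2, "risk": 4, "impact": 2},
    "Self-paced e-learning module": {"benefit": 3, "cost": 4, "risk": 3, "impact": 4},
    "Mentoring by team leads": {"benefit": 5, "cost": 2, "risk": 4, "impact": 3},
}

def weighted_score(scores: dict[str, int]) -> float:
    """Combine one alternative's criterion scores using the agreed weights."""
    return sum(WEIGHTS[criterion] * scores[criterion] for criterion in WEIGHTS)

# Rank alternatives from highest to lowest weighted score.
ranked = sorted(alternatives, key=lambda a: weighted_score(alternatives[a]), reverse=True)
for name in ranked:
    print(f"{weighted_score(alternatives[name]):.2f}  {name}")
```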

The corrective action team must also analyze the effects that the problem had on past products. Are there any similar software products that may have similar product problems? Does the organization need to take any action to correct the products/services created while a process problem existed? For example, assuming that training was the root cause of not following the coding standard, all of the modules written by the untrained coders need to be reviewed against the coding standard to understand the extent of the problem. Then a decision needs to be made about whether to correct those modules or simply accept them with a waiver.

The output of this step is one or more corrective action plans, developed by the corrective action planning team, that:

-

Define specific actions to be taken

-

Assign the individual responsible for performing each action

-

Assign an individual responsible for verifying that each action is performed

-

Estimate effort and cost for each action

-

Determine due dates for completion of each action

-

Select mechanisms or measures to determine if desired results are achieved
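Capturing each plan as a structured record makes it easy to spot an incomplete plan before it goes for approval. In the Python sketch below, the class, field, and function names are hypothetical and simply mirror the six plan elements listed above; the rule that a verifier should differ from the performer is an added assumption, not a requirement stated here.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class PlannedAction:
    description: str      # specific action to be taken
    performer: str        # individual responsible for performing the action
    verifier: str         # individual responsible for verifying it was performed
    effort_hours: float   # estimated effort
    cost: float           # estimated cost
    due: date             # due date for completion
    success_measure: str  # mechanism or measure showing the desired result was achieved

def plan_problems(actions: list[PlannedAction]) -> list[str]:
    """Return gaps to fix before the plan is submitted for approval."""
    problems = []
    if not actions:
        problems.append("plan contains no actions")
    for i, a in enumerate(actions, start=1):
        if not a.performer or not a.verifier:
            problems.append(f"action {i}: performer and verifier must both be assigned")
        elif a.performer == a.verifier:
            # Assumption: verification should be independent of the performer.
            problems.append(f"action {i}: verifier should not be the performer")
        if a.effort_hours <= 0 or a.cost < 0:
            problems.append(f"action {i}: missing or invalid estimate")
        if not a.success_measure:
            problems.append(f"action {i}: no measure of success defined")
    return problems

# Hypothetical plan for the coding-standard training example.
plan = [
    PlannedAction("Train current staff on the coding standard",
                  "Development manager", "QA lead", 24, 3000.0,
                  date(2025, 3, 1), "Coding-standard findings per review drop by half"),
    PlannedAction("Add coding-standard training to onboarding for all new staff",
                  "Training coordinator", "Training coordinator", 8, 500.0,
                  date(2025, 3, 15), ""),
]
for problem in plan_problems(plan):
    print(problem)
```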

Those corrective action plans are then reviewed and approved by the approval authority, as defined in the quality management system processes. For example, if changes are recommended to the organization’s quality management system (QMS), approval for those changes may need to come from senior management. If individual organizational-level processes or work instructions need to be changed, the approval body may be an engineering process group (EPG) or process change control board (PCCB) made up of process owners. On the other hand, if the action plans recommend training, the managers of the individuals needing that training may need to approve those plans. For product changes, the approval may come from a Configuration Control Board (CCB) or other change authority, as defined in the configuration management processes. For corrective actions that result from nonconformances found during an audit, the lead auditor may also need to review the corrective action plan.
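The approval routing in this example can be expressed as a small lookup table, sketched below in Python. The change-type labels are hypothetical, and the table holds only the authorities named in this example; an organization would populate its own from its quality management system and configuration management processes.

```python
# Hypothetical change-type labels mapped to the approval authorities named in the text.
APPROVERS = {
    "qms": ["Senior management"],
    "process": ["EPG or PCCB"],
    "training": ["Managers of trainees"],
    "product": ["CCB or other change authority"],
}

def required_reviews(change_types: set[str], from_audit: bool = False) -> list[str]:
    """List who must approve (or review) a corrective action plan."""
    reviewers = []
    for change in sorted(change_types):  # sorted for a stable, repeatable order
        if change not in APPROVERS:
            raise ValueError(f"no approval authority defined for {change!r}")
        reviewers.extend(APPROVERS[change])
    if from_audit:
        # Plans answering an audit nonconformance may also need the lead auditor's review.
        reviewers.append("Lead auditor")
    return reviewers

print(required_reviews({"process", "training"}, from_audit=True))
```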

During this plan approval step, any affected stakeholders are informed of the plans and given an opportunity to provide input into their impact analysis and approval. The approval/disapproval decisions from the approval authority are also communicated to impacted stakeholders. If the corrective action plans are not approved, the corrective action planning team determines what appropriate future actions to take (replanning).

In the implement corrective action step, the corrective action implementation team executes the corrective action plan. Depending on the actions to be taken, this implementation team may or may not include members from the original corrective action planning team. If appropriate, the implementation of the corrective action may also include a pilot project to test whether the corrective action plan works in actual application. If a pilot is held, the implementation team analyzes the results of that pilot to determine the success of the implementation. If a pilot is not needed, or if it is successful, the implementation is propagated to the entire organization and institutionalized. Results of that propagation are analyzed, and any issues are reported to the corrective action planning team. If the implementation and/or pilot did not correct the problem or resulted in new problems, then these results may be sent back to the corrective action planning team to determine what appropriate future actions (replanning) to take.

Successful propagation and institutionalization may close the corrective action, depending on the source of the original problem (for example, if it was a process improvement suggestion or problem identified by the owners of the process). However, at some point in the future, auditors, assessors, or other individuals should perform an evaluation of the completeness, effectiveness, and efficiency of that implementation to verify its continued success. If the corrective action was the result of a nonconformance identified during an audit, the lead auditor would have to be provided evidence that the nonconformance was resolved and agree with that resolution before the corrective action is closed.
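The flow of the corrective action process, including its replanning loops and the audit closure rule, can be summarized as a simple state machine. This Python sketch is a simplification under stated assumptions: the state and event names are hypothetical labels, remedial action is not modeled separately, and later verification of continued success by auditors or assessors is treated as a follow-up outside the machine.

```python
from enum import Enum, auto

class State(Enum):
    IDENTIFIED = auto()
    PLANNING = auto()     # root cause analysis, alternatives, plan writing
    APPROVAL = auto()
    IMPLEMENTING = auto()
    PILOT = auto()
    PROPAGATING = auto()  # rollout to the organization and institutionalization
    CLOSED = auto()

# (current state, event) -> next state. Event names are hypothetical labels.
TRANSITIONS = {
    (State.IDENTIFIED, "team_assigned"): State.PLANNING,
    (State.PLANNING, "plan_submitted"): State.APPROVAL,
    (State.PLANNING, "no_action_warranted"): State.CLOSED,  # fixing is riskier than leaving it
    (State.APPROVAL, "approved"): State.IMPLEMENTING,
    (State.APPROVAL, "rejected"): State.PLANNING,           # replanning
    (State.IMPLEMENTING, "pilot_started"): State.PILOT,
    (State.IMPLEMENTING, "no_pilot_needed"): State.PROPAGATING,
    (State.PILOT, "pilot_succeeded"): State.PROPAGATING,
    (State.PILOT, "pilot_failed"): State.PLANNING,          # replanning
    (State.PROPAGATING, "issues_found"): State.PLANNING,    # replanning
    (State.PROPAGATING, "institutionalized"): State.CLOSED,
}

def advance(state: State, event: str, *, from_audit: bool = False,
            auditor_agrees: bool = False) -> State:
    """Return the next state, or raise if the event is not valid in this state."""
    nxt = TRANSITIONS.get((state, event))
    if nxt is None:
        raise ValueError(f"{event!r} is not valid in state {state.name}")
    # Audit nonconformances close only after the lead auditor accepts the evidence.
    if nxt is State.CLOSED and from_audit and not auditor_agrees:
        raise ValueError("lead auditor has not agreed that the nonconformance is resolved")
    return nxt

state = State.IDENTIFIED
for event in ["team_assigned", "plan_submitted", "rejected", "plan_submitted",
              "approved", "pilot_started", "pilot_succeeded", "institutionalized"]:
    state = advance(state, event)
print(state.name)  # CLOSED
```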

Evaluating the success of the corrective action implementation or verifying its continued success over time requires the determination of the CTx characteristics of the process being corrected (as discussed in the Six Sigma DMAIC process earlier in this chapter). Metrics to measure those CTx characteristics are selected, designed, and agreed upon as part of the corrective action plans. Examples of CTxs that might be evaluated as part of corrective action include:

-

Positive impacts on total cost of quality, cost of development, or overall cost of ownership

-

Positive impacts on development and/or delivery schedules or cycle times

-

Positive impacts on product functionality, performance, reliability, maintainability, technical leadership, or other quality attributes

-

Positive impacts on team knowledge, skills, or abilities

-

Positive impacts on stakeholder satisfaction or success

When evaluating the effectiveness of the product corrective action process, examples of factors to consider include:

-

How many problem reports were returned to the originator because not enough information was included to replicate or identify the associated defect

-

How many problem reports were not actual defects in the product (for example, operator errors, works as designed, could not duplicate)

-

What is the fix-on-fix ratio, where future V&V activities or operations determine that all or part of the problem was not corrected

-

Are identical problems being identified later in other products or other versions of the same product

-

Are unauthorized corrections being made to baselined configuration items

-

Are individuals involved in the process adequately trained in and following the process
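Several of these factors reduce to simple ratios over problem report records. The Python sketch below assumes a hypothetical record layout and resolution codes, and computes the share of reports returned for more information, the share that were not product defects, and the fix-on-fix ratio, taken here as the fraction of fixed reports later reopened because the problem was not fully corrected.

```python
from dataclasses import dataclass

# Hypothetical resolution codes for reports that turned out not to be product defects.
NOT_A_DEFECT = {"operator error", "works as designed", "could not duplicate"}

@dataclass
class ProblemReport:
    report_id: str
    returned_for_info: bool = False  # sent back to the originator for more information
    resolution: str = "fixed"        # "fixed" or one of NOT_A_DEFECT
    reopened: bool = False           # later V&V or operations found the fix incomplete

def effectiveness(reports: list[ProblemReport]) -> dict[str, float]:
    if not reports:
        raise ValueError("no problem reports to evaluate")
    total = len(reports)
    fixed = [r for r in reports if r.resolution == "fixed"]
    return {
        "returned_rate": sum(r.returned_for_info for r in reports) / total,
        "not_defect_rate": sum(r.resolution in NOT_A_DEFECT for r in reports) / total,
        # Share of fixes later found not to have fully corrected the problem.
        "fix_on_fix_ratio": sum(r.reopened for r in fixed) / len(fixed) if fixed else 0.0,
    }

reports = [
    ProblemReport("PR-1"),
    ProblemReport("PR-2", returned_for_info=True),
    ProblemReport("PR-3", resolution="works as designed"),
    ProblemReport("PR-4", reopened=True),
    ProblemReport("PR-5", returned_for_info=True, resolution="could not duplicate"),
]
print(effectiveness(reports))
```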

When evaluating the efficiency of the corrective action process itself, examples of factors to consider include:

-

What are the cycle times for the entire process or individual steps in the process? Are problems being corrected in a timely manner?

-

Are there any bottlenecks or excessive wait times in the process?

-

Are there any wastes in the process? Are problems being corrected in a cost-effective manner?
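Cycle times and bottlenecks can be derived from the dates recorded as each corrective action moves through the process. This Python sketch assumes a hypothetical log of step start and end dates; it averages the days spent in each step, reports end-to-end elapsed days per corrective action, and flags the slowest step.

```python
from collections import defaultdict
from datetime import date

# Hypothetical log: (corrective action id, step name, step start, step end)
log = [
    ("CA-1", "Plan", date(2024, 1, 2), date(2024, 1, 9)),
    ("CA-1", "Approve", date(2024, 1, 9), date(2024, 1, 30)),
    ("CA-1", "Implement", date(2024, 1, 30), date(2024, 2, 6)),
    ("CA-2", "Plan", date(2024, 1, 15), date(2024, 1, 19)),
    ("CA-2", "Approve", date(2024, 1, 19), date(2024, 2, 16)),
    ("CA-2", "Implement", date(2024, 2, 16), date(2024, 2, 27)),
]

def average_step_days(entries) -> dict[str, float]:
    """Average elapsed days spent in each process step."""
    days = defaultdict(list)
    for _ca, step, start, end in entries:
        days[step].append((end - start).days)
    return {step: sum(d) / len(d) for step, d in days.items()}

def total_cycle_days(entries) -> dict[str, int]:
    """End-to-end elapsed days per corrective action (first start to last end)."""
    spans = {}
    for ca, _step, start, end in entries:
        lo, hi = spans.get(ca, (start, end))
        spans[ca] = (min(lo, start), max(hi, end))
    return {ca: (hi - lo).days for ca, (lo, hi) in spans.items()}

averages = average_step_days(log)
bottleneck = max(averages, key=averages.get)  # step with the longest average duration
print(averages, total_cycle_days(log), "bottleneck:", bottleneck)
```

In this invented data the approval step takes far longer than the others, which would prompt a closer look at approval wait times.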

So where does corrective action fit in with the other software processes? As illustrated in Figure 7.8, every organization has formal and/or informal software processes that are implemented to produce software products. These software products can include both the final products that are used by the customers/users (for example, executable software, user documentation) and interim products that are used internally to the organization (for example, requirements, designs, source/object code, plans, test cases). Product and process corrective action fit in as follows:

-

Product fix/correction: During software development, various V&V processes are used to provide confidence in the quality of those products, and to identify any defects in those products. Once the product has been released into operations, additional problems may be reported including software operational failures, usability issues, stakeholder complaints, and other issues. Analysis of these field-reported problems may uncover additional software defects. Remedial corrective action activities take the form of rework to fix/correct these identified defects in the software products.

-

Product corrective action: Root cause analysis can be performed on one or more of the identified product defects. Based on the lessons learned from this analysis, one or more products or processes are improved. An example of a product improvement would be redesigning the software architecture to provide more decoupling if the problems resulted from changes to one part of the software that adversely impacted other parts of the software. An example of a process improvement would be improving the unit testing processes because too many logic and data initialization defects are escaping into later testing cycles or into operations.

-

Process fix/correction: During software development, various process problems may also be identified as those processes are implemented to produce the products. For example, extra, non-value-added process steps might be identified, areas where a process is ineffective or inefficient might be identified, or defects in the process documentation might be found. System and process audits and assessments may also identify nonconformances in the processes. Remedial action activities fix/correct these individual, identified problems in the software processes.

-

Process corrective action: Root cause analysis can be performed on one or more process defects. Based on the lessons learned from this analysis, long-term corrective action may be implemented for one or more products or processes.

Figure 7.8

Remedial action (fix/correct) versus long-term corrective action for problems.

Design and use defect prevention processes such as technical reviews, software tools and technology, special training. (Evaluate)

BODY OF KNOWLEDGE II.B.4

Unlike corrective action, which is intended to eliminate future repetition of problems that have already occurred, preventive actions are taken to prevent problems that have not yet occurred.

For example, an organization’s supplier, Acme, experienced a problem because they were not made aware of the organization’s changing requirements. As a result, the organization established a supplier liaison whose responsibility it is to communicate all future requirements changes to Acme in a timely manner. This is corrective action, because the problem had already occurred. However, the organization also established supplier liaisons for all of its other key vendors. This is preventive action because those suppliers had not yet experienced any problems. The CMMI for Development specifically addresses corrective and preventive actions in its Causal Analysis and Resolution process area (SEI 2010).

Preventive action is proactive in nature. Establishing standardized processes based on industry good practice, as defined in standards such as ISO 9001 and the IEEE software engineering standards or in models such as the Capability Maturity Model Integration (CMMI), can help prevent defects by propagating known best practices throughout the organization (see chapters 3 and 6). A standardized process approach strives to control and improve organizational results, including product quality, and process efficiencies and effectiveness. Creating an organizational culture that focuses on employee involvement, creating stakeholder value, and continuous improvement at all levels of the organization can help make sure that people not only do their work, but think about how they do their work and how to do it better.

Techniques such as risk management, failure mode and effects analysis (FMEA), statistical process control, data analysis (for example, analysis of data trending in a problematic direction), and audits/assessments can be used to identify potential problems and address them before those problems actually occur. Once a potential problem is identified, the preventive action process is very similar to the corrective action process discussed above. The primary difference is that instead of identifying and analyzing the root cause of an existing problem, the action team researches potential causes of a potential problem. Industry standards, process improvement models, good practice, and industry studies all stress the benefits of preventing defects over detecting and correcting them.

Since software is knowledge work, one of the most effective forms of preventive action is to provide practitioners with the knowledge and skills they need to perform their work with fewer mistakes. If people do not have the knowledge and skill to catch their own mistakes, those mistakes can lead to defects in the work products. Training and on-the-job mentoring/coaching are very effective techniques for propagating necessary knowledge and skills. This includes training or mentoring/coaching in the following areas:

-

Customer/user’s business domain: So that requirements are less likely to be missed or defined incorrectly, or misinterpreted after they are specified

-

Design techniques and modeling standards: So that defects are less likely to be inserted into the architecture and design

-

Coding language, coding standards, and naming conventions: So that defects are less likely to be inserted into the source code module

-

Tool set: So that misuse of tools and techniques does not cause the inadvertent insertion of defects

-

Quality management system, processes, and work instructions: So that practitioners know what to do and how to do it, resulting in fewer mistakes

Technical reviews, including peer reviews and inspections, are used to identify defects, but they can also be used as a mechanism for promoting the common understanding of the work product under review. For example:

-

If designers and testers participate in the peer review of requirements, they may obtain a more complete understanding of the requirements, preventing misinterpretations from propagating into future design, code and test problems and rework

-

If a defect is identified and discussed in a peer review, the attendees of that peer review are less likely to make similar mistakes when developing similar work products

-

Inexperienced practitioners who attend the technical reviews of expert practitioners’ work products also have the benefit of seeing what high-quality work products look like, and can use that reviewed work product as an example/template when developing their own work products later

Other examples of problem prevention techniques include:

-

Incremental and iterative development methods, agile methods, and prototyping all create feedback loops, which help prevent mistakes by verifying a consistent and complete understanding of the stakeholder requirements.

-

Good design techniques including decoupling, cohesion, and information hiding, which help prevent future defects when the software is modified and/or maintained.

-

Good V&V practices, throughout the life cycle, which prevent defects from propagating forward into other work products. For example, a single defect, found during a requirements review and corrected, could have potentially become multiple design, code and test case defects if it had not been found.

-

Software tools and technologies also help prevent problems. For example, modern build tools help prevent problems by automatically initializing memory to zero so that missing variable initialization code does not cause product problems.

-

Mistake-proofing processes by using well-defined, repeatable processes, standardized templates and checklists can prevent problems. When practitioners understand exactly what to do, when to do it, how to do it, and who is supposed to do it, they are less likely to make mistakes. Checklists can prevent problems that might arise from missed steps, activities, or items for consideration.
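As a small illustration of checklist-based mistake-proofing, the Python sketch below refuses a hand-off until every checklist item has been confirmed. The checklist items and function names are hypothetical examples rather than a recommended checklist.

```python
# Hypothetical checklist for handing a source module to peer review.
READY_FOR_REVIEW = (
    "Code compiles without warnings",
    "Unit tests added or updated and passing",
    "Coding standard and naming conventions followed",
    "All variables initialized before use",
    "Change linked to its requirement or problem report",
)

def missing_items(checklist: tuple[str, ...], confirmed: set[str]) -> list[str]:
    """Return checklist items not yet confirmed, in checklist order."""
    return [item for item in checklist if item not in confirmed]

def ready_for_handoff(checklist: tuple[str, ...], confirmed: set[str]) -> bool:
    """Report any unconfirmed items; allow the hand-off only when none remain."""
    missing = missing_items(checklist, confirmed)
    for item in missing:
        print("Not confirmed:", item)
    return not missing

done = {
    "Code compiles without warnings",
    "Unit tests added or updated and passing",
    "Coding standard and naming conventions followed",
    "Change linked to its requirement or problem report",
}
print(ready_for_handoff(READY_FOR_REVIEW, done))
```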

Prevention also comes from benchmarking and identifying good practices within the organization and in other organizations, and adapting and adopting them into improved practices within the organization. This includes both benchmarking and holding lessons learned sessions, also called post-project reviews, retrospectives, or reflections. These techniques allow the organization and its teams to learn lessons from the problems encountered by others without having to make the mistakes and solve the problems themselves.

Evaluating the success of a preventive action implementation, or verifying its continued success over time, requires the determination of the CTx characteristics of the process being improved. Metrics to measure those CTx characteristics are selected, designed, and agreed upon as part of the preventive action plans. Examples of CTx characteristics that might be evaluated as part of preventive action include:

-

Positive trends showing decreases in total cost of quality, cost of development, or overall cost of ownership

-

Positive trends showing decreases in development and/or delivery cycle times

-

Positive trends showing increased first-pass yields (work products making it through development without needing correction because of defects) and reductions in defect density

-

Positive impacts on team knowledge, skills, or abilities

-

Positive impacts on stakeholder satisfaction
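The first-pass yield and defect density trends above can be computed from per-release data. This Python sketch assumes hypothetical release records, defines first-pass yield as the share of work products that needed no defect correction, and uses defects per thousand lines of code (KLOC) as the density measure; other size measures could be substituted.

```python
from dataclasses import dataclass

@dataclass
class Release:
    name: str
    work_products: int      # work products that went through review/testing
    passed_first_time: int  # of those, how many needed no defect correction
    defects: int            # defects found in the release
    kloc: float             # size in thousands of lines of code

def first_pass_yield(r: Release) -> float:
    return r.passed_first_time / r.work_products

def defect_density(r: Release) -> float:
    return r.defects / r.kloc

def improving(releases: list[Release]) -> bool:
    """True if yield never drops and density never rises from one release to the next."""
    pairs = zip(releases, releases[1:])
    return all(first_pass_yield(b) >= first_pass_yield(a) and
               defect_density(b) <= defect_density(a) for a, b in pairs)

# Hypothetical release history.
history = [
    Release("R1", 120, 84, 96, 40.0),
    Release("R2", 110, 83, 70, 35.0),
    Release("R3", 130, 104, 60, 40.0),
]
for r in history:
    print(f"{r.name}: FPY {first_pass_yield(r):.0%}, density {defect_density(r):.2f}/KLOC")
print("improving:", improving(history))
```

With only a few data points, one pair of releases moving the wrong way may be noise, so a check like this is a prompt for analysis rather than a verdict.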