S

oftware metrics and analysis provide the data and information that allows an organization’s quality management system to be based on a solid foundation of facts. The objective is to drive continual improvement in all quality parameters through a goal-oriented measurement and analysis system.

Software metrics programs should be designed to provide the specific information necessary to manage software projects and improve software engineering processes, products, and services. The foundation of this approach is aimed at making practitioners ask not “what should I measure?” but “why am I measuring?” or “what business needs does the organization wish its measurement initiative to address?” (Goodman 1993).

Measuring is a powerful way to track progress toward project, process, and product goals. As Grady (1992) states, “Without such measures for managing software, it is difficult for any organization to understand whether it is successful, and it is difficult to resist frequent changes of strategy.”

According to Humphrey (1989), there are four major roles (reasons) for collecting data and implementing software metrics:

-

To understand: Metrics can be gathered to learn about software projects, processes, products, and services, and their capabilities. The resulting information can be used to:

-

Establish baselines, standards, and goals

-

Derive models of the software processes

-

Examine relationships between process parameters

-

Target process, product, and service improvement efforts

-

Better estimate project effort, costs, and schedules

-

-

To evaluate: Metrics can be examined and analyzed as part of the decision-making process to study projects, products, processes, or services in order to establish baselines, to perform cost/benefit analysis, and to determine if established standards, goals, and entry/exit/acceptance criteria are being met.

-

To control: Metrics can be used to control projects, processes, products, and services by providing triggers (red flags) based on trends, variances, thresholds, control limits, standards and/or performance requirements.

-

To predict: Metrics can be used to predict the values of attributes in the future (for example, budgets, schedules, staffing, resources, risks, quality, and reliability).

Effective software metrics provide the objective data and information necessary to help an organization, its management, its teams, and its individuals:

-

Make day-to-day decisions

-

Identify project, process, product and service issues

-

Correct existing problems

-

Identify, analyze, and manage risks

-

Evaluate performance and capability levels

-

Assess the impact of changes

-

Accurately estimate and track effort, costs, and schedules

The bottom line is that effective metrics help improve software projects, products, processes, and services.

Define and describe metrics and measurement terms such as reliability, internal and external validity, explicit and derived measures, and variation. (Understand)

BODY OF KNOWLEDGE V.A.1

Metrics Defined

The term metrics

means different things to different people. When someone buys a book or picks up an article on software metrics, the topic can vary from project cost, and effort prediction and modeling, to defect tracking and root cause analysis, to a specific test coverage metric, to computer performance modeling, or even to the application of statistical process control charts to software.

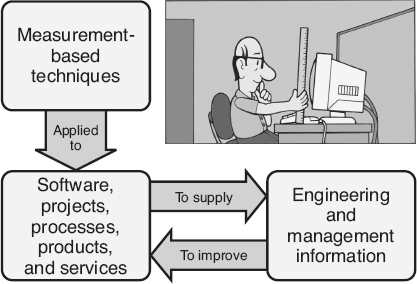

Goodman (2004) defines software metrics as “The continuous application of measurement-based techniques to the software development process and its products to supply meaningful and timely management information, together with the use of those techniques to improve that process and its products.”

As illustrated in Figure 18.1

, Goodman’s definition can be expanded to include software projects and services. Examples of software services include:

-

Responding with fixes to problems reported from operations

-

Providing training courses for the software

-

Installing the software

-

Providing the users with technical assistance

Goodman’s definition can also be expanded to include engineering, as well as management information. In fact, measurement is one of the key required elements to move the software from a craft to an engineering discipline.

Figure 18.1

Metrics defined.

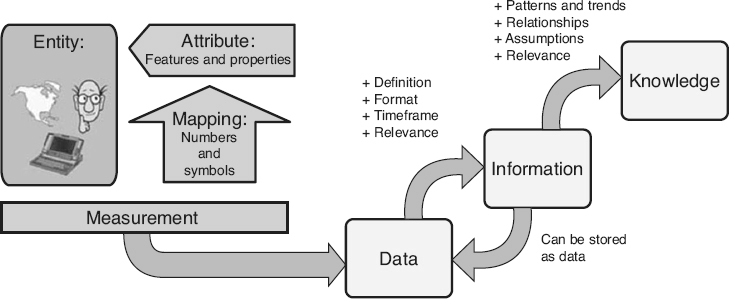

Figure 18.2

Converting measurement data into information and knowledge.

Software metrics are standardized ways of measuring the attributes of software processes, products, and services in order to provide the information needed to improve those processes, products, and services. The same metrics can then be used to monitor the impacts of those improvements, thus providing the feedback loop required for continual improvement.

Measurement Defined

The use of measurement is common. People use measurements in everyday life to weigh themselves in the morning, or when they glance at the clock or at the odometer in their car. Measurements are used extensively in most areas of operations and manufacturing to estimate costs, calibrate equipment, and monitor inventories. Science and engineering disciplines depend on the rigor that measurements provide. What does measurement really mean?

According to Fenton (1997), “measurement

is the process by which numbers or symbols are assigned to attributes of entities in the real world in such a way as to describe them according to clearly defined rules.” The left hand side of Figure 18.2

illustrates this definition of measurement.

Entities

are nouns, for example, a person, place, thing, event, or time period. An attribute is a feature or property of an entity. To measure, the entity being measured must first be determined. For example, a car could be selected as the entity. Once the entity is selected, the attributes of that entity that need to be described must be chosen. According to IEEE (1998d), an attribute

is a measurable physical or abstract property of an entity. Attributes for a car include its speed, its fuel efficiency, and the air pressure in its tires.

Finally, a mapping system,

also called the measurement method

or counting criteria,

must be defined and accepted. It is meaningless to say that the car’s speed is 65 or its tire pressure is 32 unless people know that they are talking about miles per hour or pounds per square inch. So what is a mapping system?

In ancient times there were no real standard measurements. This caused a certain level of havoc with commerce. Was it better to buy cloth from merchant A or merchant B? What were their prices per length? In England they solved this problem by standardizing the “yard” as the distance between King Henry I’s nose and his fingertips. The “inch” was the distance between the first and second knuckle of the king’s finger and the “foot” was literally the length of his foot.

To a certain extent, the software industry is still in those ancient times. As software practitioners try to implement software metrics, they quickly discover that very few standardized mapping systems exist for their measurements. Even for a seemingly simple metric such as the severity of a software defect, no standard mapping system has been widely accepted. Examples from different organizations include:

-

Outage, service-affecting, non-service-affecting

-

Critical, major, minor

-

C1, C2, S1, S2, NS

-

1, 2, 3, 4 (with some organizations using 1 as the highest severity and other organizations using 1 as the lowest)

An important element of a successful metrics program is the selection, definition, and consistent use of mapping systems for selected metrics. The software industry as a whole may not be able to solve this problem, but each organization must solve it to have a successful metrics program.

Data to Information to Knowledge

Once the measurement process is performed, the result is one or more numbers or symbols. These are data items. Data items

are simply “facts” that have been collected in some storable, transferable, or expressible format.

However, if the data are going to be useful, they must be transformed into information products that can be interpreted by people and transformed into knowledge, so that it can be used to make better, fact-based decisions. According to the Guide to Data Management Body of Knowledge

(DAMA 2009), “information

is data in context.” The raw material of information is data. By adding the context, collected data starts to have meaning. For example, a data item stored as the number 14 does not by itself provide any usable information. If the data item’s context is known, that data item is converted to information. For example, when the data item has:

-

A definition: Such as “the number of newly detected defects”

-

A timeframe: Such as “last week”

-

Relevance: Such as “while system testing software product ABC”

Once one or more data items are converted to information, the resulting information can also be stored as additional data items.

Information in and of itself is not useful until human intelligence is applied to convert it to knowledge

through the identification of patterns and trends, relationships, assumptions, and relevance. Going back to the data item example, if the information regarding the 14 newly-detected defects found last week during the system testing of software product ABC is simply put in a report that no one reads or uses, then the information is never

converted to knowledge. However, if the project manager determines that this is a higher defect arrival rate (trend

) than was found during the previous three weeks (relationship

) and determines that corrective action is needed (assumption

) resulting in the shifting of an additional software engineer onto problem correction (relevance

),

that information becomes knowledge. Of course the project manager can also decide that everything is under control and that no action is necessary. If this is the case, the information has still been converted to knowledge. The right hand side of Figure 18.2

illustrates this transformation of measurement data into information and then knowledge.

The moral is that the goal should never be “To put metrics (or measurements) in place.” That goal can result in an organization becoming DRIP

(Data Rich—Information Poor). The goal should be to provide people with the knowledge they need to make better, fact-based decisions. Metrics are simply the tools to make certain that standards exist for measuring in a consistent, repeatable manner in order to create reliable and valid data. For example, by establishing “what” is being measured through well-defined and understood entities and attributes, and “how” it is being measured through standardizing the mapping system and the conditions under which measures are done. Metrics provide a standardized definition of how to turn collected data into information, and how to interpret that information to create knowledge.

Reliability and Validity

Metric reliability is a function of consistency or repeatability of the measure. A metric is reliable

if different people (or the same person multiple times) can use that metric over and over to measure an identical entity and obtain the same results each time. For example, if two people count the number of lines of code in a source code module, they would both have the same, consistent count (within an acceptable level of measurement error, as defined later in this chapter). Or, if one person measured the cyclomatic complexity of a detailed design element today and then measures the same design element tomorrow, that person would get the same, consistent measure (within an acceptable level of measurement error).

A metric is valid

if it accurately measures what it is expected to measure, that is, if it captures the real value of the attribute it is intended to describe. IEEE (1998d) describes validity in terms of the following criteria:

-

Correlation: Whether there is a sufficiently strong linear association between the attribute being measured and the metric

-

Tracking: Whether a metric is capable of tracking changes in attributes over the life cycle

-

Consistency: Whether the metric can accurately rank, by attribute, a set of products or processes

-

Predictability: Whether the metric is capable of predicting an attribute with the required accuracy

-

Discriminative power: Whether the metric is capable of separating a set of high-quality software components from a set of low-quality software components

This definition of validity actually refers to the internal validity

of the metric or its validity in a narrow sense—does it measure the actual attribute? External validity, also called

predictive validity,

refers to the ability to generalize or transfer the metric results to other populations or conditions. For a metric to be externally valid, it must be internally valid. It must also be able to be used as part of a prediction system, estimation process, or planning model. For example, can the mean time to fix a defect, measured for one project,

be generalized to predict the mean time to fix for other projects? If so, then the mean-time-to-fix metric is considered externally valid. External validity is verified empirically through comparison of the predicted results to the subsequently observed actual results.

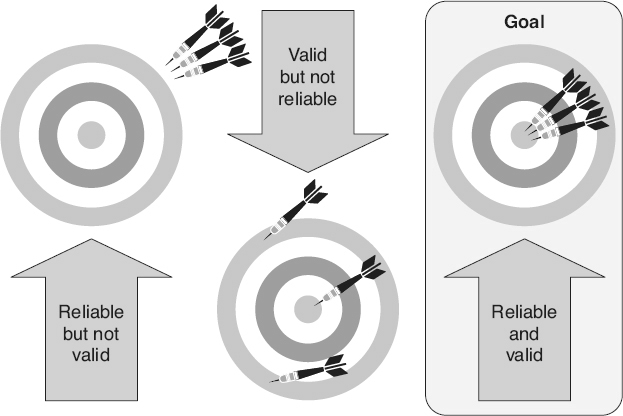

A metric that is reliable but not valid can be consistently measured, but that measurement does not reflect the actual attribute. An example of a metric that is reliable but not valid is using cyclomatic complexity for measuring reliability. Cyclomatic complexity can be consistently measured because it is well-defined and has a consistent mapping system. However, it is internally invalid as a measure of reliability because it is a measure of complexity, not of reliability.

A metric is internally valid but not reliable if it measures what it is supposed to measure, but can not be measured consistently. For example, if criteria are not clearly defined on how to assign numbers to the severity of a reported problem, resulting in different people assigning different severities to the same problem, the severity metric may be valid but not reliable. As illustrated in Figure 18.3

, the goal is to have metrics that are both reliable and internally valid. In fact, IEEE (1998d) actually includes reliability as one of the criteria for validity. For predictive-type metrics, external validity is also required.

To have metrics that are both reliable and valid, there must be agreement on standard definitions for the entity and its attributes that are being measured. Software practitioners may use different terms to mean the same thing. For example, the terms defect

report, problem report, incident report, fault report, anomaly report, issues report or call report may be used by various organizations or teams for the same item. But unfortunately, they may also refer to different items. One organization may use “user call reports” for a user complaint and “problem reports” as the description of a problem in the software. Their customer may use “problem reports” for the initial complaint and “defect reports” for the problem in the software.

Different interpretations of terminology can be a major barrier to correct interpretation and understanding of metrics. For example, a metric was created to report the “trend of open software problems” for a software development manager. The manager was very pleased with the report because she could quickly pull information that had previously been difficult to get from the antiquated problem-tracking tool. One day the manager brought this report to a product review meeting, so she could discuss the progress her team had made in resolving the problem backlog. The trend showed a significant decrease, from over 50 open problems six weeks ago, to only three open problems currently. When she put the graph up on the overhead, the customer service manager exploded. “What is going on here? Those numbers are completely wrong! I know for a fact that my customers are calling me every day to complain about the over 20 open field problems!” The problem was not with the numbers, but with the interpretation of the word “open.” To the software development manager, the problem was no longer open when they had fixed it, checked the source into the configuration management library, and handed it off for system testing. But to the customer service manager, the problem was still open until his customers in the field had their fix.

Figure 18.3

Reliability and validity.

As the above examples illustrate, the software industry has very few standardized definitions for software entities and their attributes. Everyone has an opinion, and the debate will probably continue for many years. An organization’s metrics program can not wait that long. The suggested approach is to adopt standard definitions within an organization and then apply them consistently. Glossaries such as the ISO/IEC/IEEE Systems and Software Engineering—Vocabulary

(ISO/IEC/IEEE 2010) or the glossary in this book can be used as a starting point. An organization can then pick and choose the definitions that correspond with their objectives, or use them as a basis for tailoring their own definitions. It can also be extremely beneficial to include these standard definitions as an appendix to each metrics report (or add definition pop-ups to online reports) so that

everyone who receives the report understands what definitions are being used.

Explicit Measures

Data items can be either explicit or derived measures. Explicit measures,

also called base measures, metrics primitives,

or direct metrics,

are measured directly. The Capability Maturity Model Integration

(CMMI

) for Development

(SEI 2010) defines a base measure as a “measure defined in terms of an attribute and the method for quantifying it.” For example, explicit measures for a code inspection would include the number of:

-

Lines of code inspected (loc_insp)

-

Engineering hours spent preparing for the inspection (prep_hrs)

-

Engineering hours spent in the inspection meeting (insp_hrs)

-

Defects (defects) identified during the inspection (defects)

For explicit measures, the mapping system used to collect the data for each measure must be defined and understood. Some mapping systems are established by using standardized units of measure (for example, dollars, hours, days). Other mapping systems define the counting criteria used to determine what does and does not get counted when performing the measurement. For example, if the metric is the “problem report arrival rate per month,” the counting criteria could be as simple as counting all of the problem reports in the problem-reporting database that had an open date during each month. However, if the measure was “defect counts” instead, the counting criteria might exclude all the problem reports in the database that did not result from a product defect (for example, those defined as works-as-designed, operator error, or withdrawn).

Some counting criteria are very complex, like the counting

criteria for measuring function points, or the criteria for counting effort on a project. In the latter example, the units of effort might be defined in terms of staff hours, months, or years, depending on the size of the project. Other counting criteria decisions would include:

-

Whose time counts: Does the systems analyst’s time count? Does the programmer’s or engineer’s time count? How about the project manager? The program manager? Upper management? The lawyer? The auditor?

-

When does the project start or end: Does the time spent doing the cost / benefit analysis count? Does the time releasing, replicating, delivering, and installing the product in operations count?

-

What activities count: If the programmer’s time counts, does the time they are coding count? How about the time they spend fixing problems on a previous release? Or the time they spend in training?

-

Does overtime count: Does it count if it is unpaid overtime?

Of course, many organizations solve this problem by simply stating that if the time is charged to the project account number, then it counts. But they still must have counting criteria established that define the rules for what to charge to those account numbers.

Having a clearly defined and communicated mapping system helps everyone interpret the measures the same way. The metrics mapping system and, if applicable, data needed based on the associated counting criteria, define the first level of data that needs to be collected in order to implement the metric.

Derived Measures

According to the CMMI for Development

(2010), a derived measure,

also called complex metrics,

is a “measure that is defined as a function

of two or more values of explicit measures,” that is, the mathematical combinations of explicit measures or other derived metrics. For a code inspection, examples of derived metrics would include:

-

Preparation rate: The number of lines of code inspected, divided by the hours spent preparing for the inspection (loc_insp/insp_hrs)

-

Defect detection rate: The number of defects found during the inspection, divided by the sum of the hours spent preparing for the inspections and the hours spent inspecting the work product (defects/ [prep_hrs + insp_hrs])

Most measurement functions include an element of simplification. When creating a function, an organization needs to be pragmatic. If an attempt is made to include all of the elements that affect the attribute or characterize the entity, the functions can become so complicated that the metric is useless. Being pragmatic means not trying to create the perfect function. Pick the explicit measures that are the most important. The ideal measurement function is simple enough to be easy to use, and at the same time provides enough information to help people make better, more informed decisions. Remember that the function can always be modified in the future to include additional levels of detail as the organization gets closer to its goal. The people designing a function should ask themselves:

-

Does the measurement provide more information than is available now?

-

Is that information of practical benefit?

-

Does it tell the individuals performing the measure what they want to know?

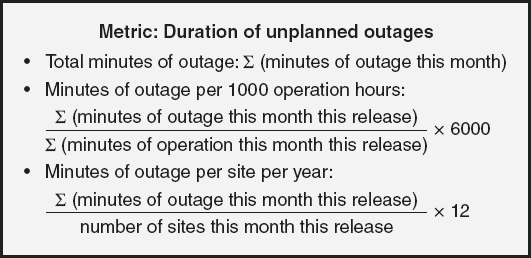

Measurement functions should be selected and tailored to the organization’s information needs. As illustrated in Figure 18.4

, to demonstrate the selection of a tailored function, consider a metric that calculates the duration of unplanned system outages.

Figure 18.4

Different functions for the same metric—examples.

-

If a software system, installed at a single site and running 24/7, is being measured, a simple function such as the sum of all of the minutes of outage for the calendar month may be sufficient

-

If the software system, installed at a single site, runs a varying number of operational hours each month, or if different software releases are installed on a varying number of sites each month, this might lead to the selection of a function such as minutes of outage per 1000 operation hours per release

-

If the focus is on the impact to the customers, this might leadto the selection of a function such as minutes of outage per siteper year

Measurement Scales

There are four different measurement scales

that define the type of data that is being collected. The measurement scale is important because it defines the mathematics, or kinds of statistics, that can be done using the collected data.

The nominal scale

is the simplest form of measurement scale. In nominal scale measurements, items are assigned to a classification (one-to-one mapping), where that classification categorizes the attribute of the entity. Examples of nominal scale measurements include:

-

Development method (waterfall, V, spiral, other)

-

Root cause (logic error, data initialization error, data definition error, other)

-

Document author (Linda, Bob, Susan, Tom, other)

The categories in a nominal scale measurement must be jointly exhaustive and cover all possibilities. This means that every measurement must be assigned a classification. Many nominal scale measures include a category of “other,” so everything fits somewhere. The categories must also be mutually exclusive. Each item must fit one and only one category. If an attribute is classified in one category, it can not be classified in any of the other categories.

The nominal scale does not make any assumptions about order or sequence. The only math that can be done on nominal scale measures is to count the number of items in each category and look at their distributions.

The ordinal scale

classifies the attribute of the entity by order. However, there are no assumptions made about the magnitude of the differences between categories (a defect with a critical severity is not twice as bad as one with a major severity). Examples of ordinal scale measurements include:

-

Defect severity (critical, major, minor)

-

Requirement priority (high, medium, or low)

-

SEI CMM level (1 = initial, 2 = repeatable, 3 = defined,4 = managed, 5 = optimized)

-

Process effectiveness (1 = very ineffective, 2 = moderately ineffective, 3 = nominally effective, 4 = moderately effective,5 = very effective)

Since there is order, the transitive property

is valid for ordinal scale measures, that is if data item A > data item B and data item B > data item C, then data item A > data item C. However, without an assumption of magnitude, mathematical operations of addition, subtraction, multiplication, and division can not be used on ordinal scale measurement values.

For interval scale

measurements, the exact distance between the data items is known. This allows the mathematical operations of addition and subtraction to be applied to interval scale measurement values. However, there is no absolute or non-arbitrary zero point in the interval scale, so multiplication and division do not apply. The classic example of an interval scale measure is calendar time. For example, it is valid to say, “May 1st

plus 10 days is May 11th

,” but saying “May 1st

times May 11th

” is invalid. Interval scale measurements require well-established units of measure that can be agreed upon by everyone using the measurement. Either the mode or the median can be used as the central tendency statistic for interval scale metrics.

In the ratio scale,

not only is the exact distance between the scales known, but there is an absolute or non-arbitrary zero point. All mathematical operations can be applied to ratio scale measurement values, including multiplication and division. Examples of ratio scale measurement include:

-

Defect counts

-

Defect density (defects per size)

-

Minutes of outage

-

Hours of effort

-

Cycle times

-

Rates (for example arrival rates and fix rates)

Note that since derived measures are mathematical combinations of two or more explicit measures or other derived measures, those explicit and derived measures must be at a minimum interval scale measurements (if only addition and subtraction are used in the measurement function) and they are typically ratio scale measurements.

Variation

To start the discussion of variation, consider an exercise from Shewhart’s book, Economic Control of Quality of Manufactured Product

(Shewhart 1980). This exercise begins by having a person write the letter “a” on a piece of paper. The person is then asked to write another “a” identical to the first one; then another just like it, then another, until the person has 10 a’s on the paper. When all of the letters are compared, are they all perfectly identical or are there variations between them? The instructions were to make all of the a’s identical, but as hard as the person may have tried, that person can not make them all identical. But, why can a person not write ten identical a’s? Most people could probably think of multiple reasons why there are variations between the letters. Examples might include:

-

The ink flow in the pen is not uniform

-

The paper is not perfectly smooth

-

The person’s hand placement was not the same each time

People accept the fact that there are many reasons for the

variation, and that it is impractical, and most likely impossible, to try to remove them all. If two people were asked to do this same exercise, there would probably be even more variation between one person’s a’s and another person’s a’s., because different people doing the same task adds another cause of variation.

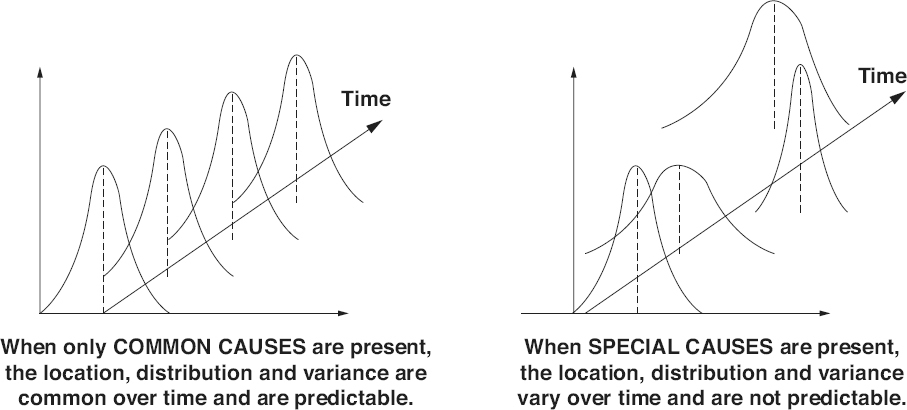

Quality practitioners talk about two distinct sources for variation. The first of these sources is called common causes,

also known as random causes

or chance causes

of variation. Common causes of variation are the normal or usual causes of variation that are inherent in a consistent, repeatable process. A process is said to be in statistical control

when it only exhibits common causes of variation. As long as the process does not change, these common causes of variation will not change. Therefore, based on historic data or on a sample set of data from the current process, practitioners can quantify common causes of variation in order to predict the amount of variation that will occur in the future.

Common cause variation comes from normal, expected fluctuations in various factors, typically classified as influences from:

-

People (worker) influences

-

Machinery influences

-

Environmental factors

-

Material (input) influences

-

Measurement influences

-

Method influences

The only way to change or reduce the common causes of variation is to change the process itself (for example, through process improvement). It should be noted that there is always some level of variation in every process, and that not all common causes of variation can be or should be eliminated. There are engineering trade-offs to be made. For example, eliminating common causes of

variation in one part of the process may cause problems in another part of the process, or it may not be economically feasible to eliminate a source of common cause variation.

The second source of variation is special causes,

also called assignable causes,

which are outside the normal “noise” of the process and result in abnormal or unexpected variation. Special causes of variation include:

-

One-time perturbations: For example, someone bumps the person’s elbow while he/ she is writing an a.

-

Things that cause trends: For example, the person’s hand starts getting tired after written the thousandth a.

-

Shifts and jumps: For example, making process improvement changes is a special cause that will typically result in a shift in the amount of variation in the process. Another example of a shift or jump may be caused by changing the person performing the task.

-

Unexpected variation in quantity or quality of the inputs to process: Such as raw materials, components, or subassemblies. For example, the pen’s ink gets clogged or starts skipping.

If special causes of variation exist in a process, that process is not performing at its best and is considered out of control.

In this case, the process is not sufficiently stable enough to use historic data or samples from the current process to predict the amount of variation that will occur in the future. That means that statistical quality control cannot be applied to processes that are out of control. Special causes of variation can usually be detected, and actions to eliminate these causes can typically be economically justified.

Statistics and Statistical Process Control

Statistics

is defined as the science of the collection, organization, analysis, and interpretation of data. Statistical quality control

refers to the use of measurement and statistical methods in the monitoring and maintaining of the quality of products and services. Faced with a large amount of data, it may seem daunting to try to turn that data into information that can be used to create knowledge. However, there are some basic descriptive statistics that can be used to characterize a set of data items in order to provide needed information about a data set.

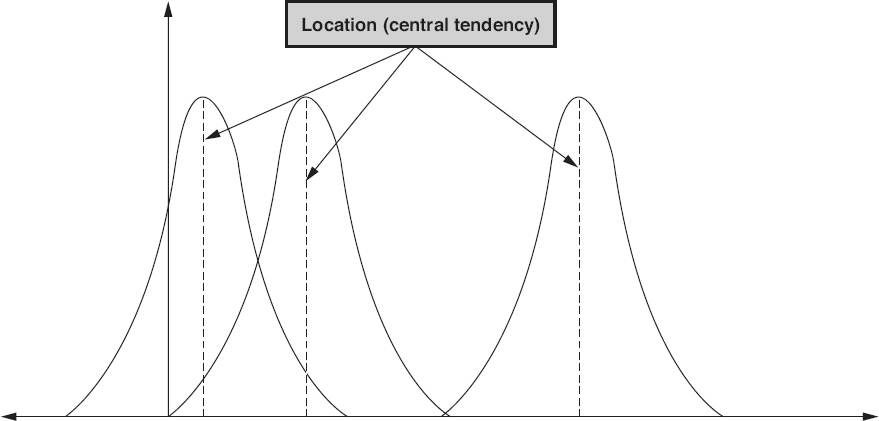

The location

of the data set refers to the typical value or central tendency that is exhibited by most data sets. The location refers to the way in which data items tend to cluster around one or more values in that data set. Figure 18.5

shows examples of three data sets, each with the same variance and shape, but with three different locations.

Three statistics are typically used to represent the location of a data set:

-

The mean is the arithmetic average of the numbers in the data set. The mean is “used for symmetric or near-symmetric distributions or for distributions that lack a clear, dominant single peak” (Juran 1999). The mean is calculated by summing the data values and dividing by the number of data items. For example, if the data items were 3, 5, 10, 15, 19, 21, 25, the mean would be (3 + 5 + 10 + 15 + 19 + 21 + 25)/7 = 14. The mean is probably the most used statistic in the quality field. It is used to report and/or predict “expected values” (for example, average size, average yield, average percent defective, mean time to failure, and mean time to fix). However, since division is involved in calculating the mean, it can only be used on ratio scale measurement data.

-

The median is the middle value when the numbers are arranged according to size. The median is “used for reducing the effects of extreme values or for data that can be ranked but are not economically measurable (shades of color, visual appearance, odors)” (Juran 1999). For example, if the data items were 3, 5, 10, 15, 19, 21, 102, the median would be 15. If there is an even number of items in the data set, then the median is calculated by adding the two middle values and dividing by two. For example, if the data items were 3, 5, 10, 15, 19, 21, 46, 102, the middle two items are 15 and 19, so the median would be calculated by (15 + 19)/2 = 17. Since the median requires the data items to be sorted in order, it can not be used on nominal scale measurement data.

-

The mode is the value that occurs most often in the data. The mode is “used for severely skewed distributions, describing irregular situations where two peaks are found, or for eliminating the effects of extreme values” (Juran 1999). For example, if the data items were, 1, 1, 1, 1, 1, 3, 3, 5, 10, 21, 96, the mode would be 1 (the most frequently occurring value). For the data set 1, 2, 3, 4, 5, there are 5 modes because all 5 data items have the same number of occurrences. The mode is also used for nominal scale measurement data.

Figure 18.5

Data sets with different locations—examples.

In most data sets, the individual data items tend to cluster around the location and then spread or scatter out from there toward the extreme or extremes of the data set. The extent of this scattering is the variance,

also called the spread

or dispersion,

of the data set. The variance is the amount by which smaller values in the data set differ from larger values. Figure 18.6

shows an example of three data sets, each with the same location and shape, but with three different variances.

The simplest measure of variance is the range of the data set. The range

is the difference between the maximum value and the minimum value in the data set. The more variance there is in the data set, the larger the value of the range will be. For example, if the data items were 3, 5, 10, 15, 19, 21, 25, the range would be equal to 25 − 3 = 22.

The most important measure of variance from the perspective of statistical quality control is standard deviation. Standard deviation

is a measure of how much variation there is from the mean of the data set. A low value for standard deviation indicates that the data values are tightly clustered around the mean. The

larger the standard deviation, the more spread out the data values are from the mean. The Greek letter sigma (σ) is used to represent the standard deviation of the entire population, and the letter “s” is used to represent the standard deviation for a sample from that population.

Figure 18.6

Data sets with different variances—examples.

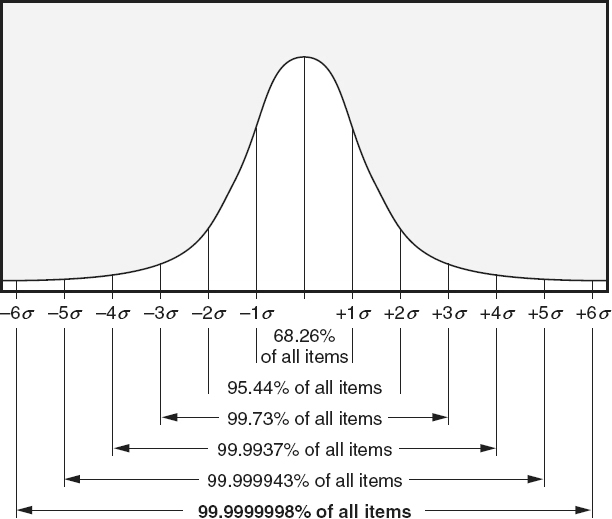

In statistics, the distribution

describes the shape of a set of data. More specifically, the probability distribution

describes the range of possible values for the data in the set and the probability that any given random value selected from that population is within a measurable subset of that range. Figure 18.7

illustrates a normal distribution,

also called a Gaussian distribution

or bell curve.

A normal distribution

is a continuous distribution where there is a concentration of observations around the mean, and the shape is a symmetrical bell-like shape. In a normal distribution, the three location statistics of mean, median, and mode are all equal. Figure 18.7

also illustrates the percentage of data items under a normal distribution curve as plus and minus various standard deviations.

For example, 68.26 percent of all data items in a normal distribution fall within ±1 standard deviation under the curve. At ±3 standard deviations, 99.73 percent of all data items in a normal distribution fall under the curve. Calculations for the standard deviation are dependent on the distribution of the data set. The normal distribution is the most well-known and used probability distribution. Data sets for many quality characteristics can be estimated and/ or described using a normal distribution. As discussed in Chapter 19

, normal distributions are also used as the basis for creating statistical process control charts.

A normal distribution is just one type of distribution or shape that a data set can have. There are also other types of distributions, grouped into two major classifications:

-

The shape of a set of variable data is described by a continuous distribution. A normal distribution is one example of a continuous distribution. Uniform exponential, triangular, quadratic, cosine, and u-shaped are other common continuous distributions.

-

The shape of a set of attribute data is described by a discrete distribution. Common discrete distributions include, uniform, Poisson, binomial, and hyper geometric distributions.

Figure 18.7

Normal distribution curve with standard deviations.

Figure 18.8

Common cause and special cause impacts on statistics—examples.

When only common cause variation exists in a process, the location, distribution, and variance statistics of the data collected from that process will be stable and predictable over time, as illustrated on the left side of Figure 18.8

. This means that statistics for a data set collected from the process today will be the same as for the data set collected next week, and the week after that, and the week after that and so on.

Whenever special causes of variation are introduced into the process, the location, distribution, and variance statistics of data sets collected from that process will vary over time, and will no longer be predictable, as illustrated on the right side of Figure 18.8

.

Choose appropriate metrics to assess various software attributes (e.g., size, complexity, the amount of test coverage needed, requirements volatility, and overall system

performance). (Apply)

BODY OF KNOWLEDGE V.A.2

Metric Customers

With all of the possible software entities and attributes, it is easy to see that a huge number of possible metrics could be implemented. So how does an organization or team decide which metrics to use? The first step is to identify the customer. The customer of the metrics is the person or team who will be making decisions or taking action based on the metrics. The customer is the person who needs the information supplied by the metrics.

If a metric does not have a customer—someone who will make a decision based on that metric (even if the decision is “everything is fine—no action is necessary”)—then stop producing that metric. Remember that collecting data and generating metrics is expensive, and if the metrics are not being used, it is a waste of people’s time and the organization’s money.

There are many different types of customers for a metrics program. This adds complexity because each customer may have different information requirements. It should be remembered that metrics do not solve problems—people solve problems. Metrics can only provide information so that those people can make informed decisions based on facts rather than “gut feelings.” Customers of metrics may include:

-

Functional managers: Are interested in applying greater control to the software development process, reducing risk, and maximizing return on investment.

-

Project managers: Are interested in being able to accurately predict and control project size, effort, resources, budgets, and schedules. They are also interested in controlling the projects they are in charge of and communicating facts to management.

-

Individual software practitioners: Are interested in making informed decisions about their work and work products. They will also be responsible for generating and collecting a significant amount of the data required for the metrics program.

-

Specialists: The individuals performing specialized functions (marketing, software quality assurance, process engineering, configuration management, audits and assessments, customer technical assistance). Specialists are interested in quantitative information on which they can base their decisions, findings, and recommendations.

-

Customers and users: Are interested in on-time delivery of high-quality, useful software products and in reducing the overall cost of ownership.

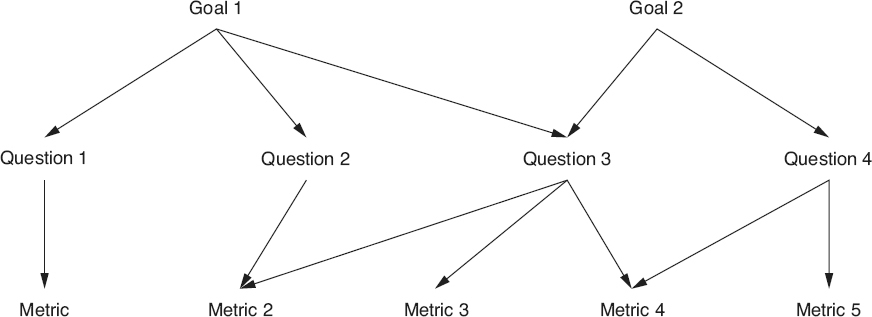

Selecting Metrics

Basili and his colleagues defined a goal/question/metric

paradigm, which provides an excellent mechanism for defining a goal-based measurement program (Grady 1992). The goal/question/metric paradigm is illustrated in Figure 18.9

. The first step to implementing the goal/question/metric paradigm is to select one or more measurable goals for the customer of the metrics:

-

At the organizational level, these are typically high-level strategic goals. For example, being the low-cost provider, maintaining a high level of customer satisfaction, or meeting projected revenue or profit margin targets.

-

At the project level, the goals emphasize project management and control issues, or project-level requirements and objectives. These goals typically reflect the project success factors such as on-time delivery, finishing the project within budget, delivering software with the required level of quality or performance, or effectively and efficiently utilizing people and other resources.

-

At the specific task level, goals emphasize task success factors. Many times these are expressed in terms of satisfying the entry and exit criteria for the task.

Figure 18.9

Goal/question/metric paradigm.

The second step is to determine the questions that need to be answered in order to determine whether each goal is being met or if progress is being made in the right direction. For example, if the goal is to maintain a high level of customer satisfaction, questions might include:

-

What is our current level of customer satisfaction?

-

What attributes of our products and services are most important to our customers?

-

How do we compare with our competition?

-

How do problems with our software affect our customers?

Finally, metrics are selected that provide the information needed to answer each question. When selecting metrics, be practical, realistic, and pragmatic. Metrics customers are turned off by

metrics that they see as too theoretical. They need information that they can interpret and utilize easily. Avoid the “ivory tower” perspective that is completely removed from the existing software engineering environment (currently available data, processes being used, and tools). Customers will also be turned off by metrics that require a great deal of work to collect new or additional data. Start with what is possible within the current process. Once a few successes are achieved, the metrics customers will be open to more radical ideas—and may even come up with a few metrics ideas of their own.

Again, with all of the possible software entities and attributes, it is easy to see that a huge number of possible metrics could be implemented. This chapter touches on only a few of those metrics as examples of the types of metrics used in the software industry. The recommended method is to use the goal/question/metric paradigm, or some other mechanism, to select appropriate metrics that meet the information needs of an organization and its teams, managers, and engineers. It should also be noted that these information needs will change over time. The metrics that are needed during requirements activities will be different than the metrics needed during testing, or once the software is being used in operations. Some metrics are collected and reported on a periodic basis (daily, weekly, monthly), others are needed on an event-driven basis (when an activity or phase is started or stopped), and others are used only once (during a specific study or investigation). The Software Capability Maturity Module Integration (CMMI) for Development, Service and Acquisition (SEI 2010; SEI 2010a; SEI 2010b) includes a Measurement and Analysis process area which provides a road map for developing and sustaining a measurement program.

As discussed previously, metrics must define the entity and the attributes of that entity that are being measured. Software product entities

are the outputs of software development, operations, and

maintenance processes. These include all of the artifacts, deliverables, and documents that are produced. Examples of software product entities include:

-

Requirements documentation

-

Software design specifications and models (entity diagrams, data flow diagrams)

-

Code (source, object, and executable)

-

Test plans, specifications, cases, procedures, automation scripts, test data, and test reports

-

Project plans, budgets, schedules, and status reports

-

Customer call reports, change requests and problem reports

-

Quality records and metrics

Examples of attributes associated with software product entities include size, complexity, number of defects, test coverage, volatility, reliability, availability, performance, usability and maintainability.

Size—Lines of Code

Lines of code

(LOC) counts are one of the most used and most often misused of all the software metrics. Some estimation methods are based on KLOC

(thousands of lines of code). The LOC metric may also be used in other derived metrics to normalize measures so that releases, projects, or products of different sizes can be compared (for example, defect density or productivity). The problems, variations, and anomalies of using lines of code are well documented (Jones 1986). Some of these include:

-

Problems in counting LOC for systems using multiple languages

-

Difficulty in estimating LOC early in the software life cycle

-

Productivity paradox—if productivity is measured in LOC per staff month, productivity appears to drop when higher-level languages are used, even though higher-level languages are inherently more productive

No industry-accepted standards exist for counting LOC. Therefore, it is critical that specific criteria for counting LOC be adopted for the organization. Considerations include:

-

Are physical or logical lines of code counted?

-

Are comments, data definitions, or job control language counted?

-

Are macros expanded before counting? Are macros counted only once?

-

How are products that include different languages counted?

-

Are only new and changed lines counted, or are all lines of code counted?

The Software Engineering Institute (SEI) has created a technical report specifically to present guidelines for defining, recording, and reporting software size in terms of physical and logical source statements (CMU/SEI 1992). This report includes check sheets for documenting criteria selected for inclusion or exclusion in LOC counting for both physical and logical LOC.

Size—Function Points

Function points

is a size metric that is intended to measure the size of the software without considering the language it is written in. The function point counting procedure has five steps:

Step 1:

Decide what to count (total number of function points, or just new or changed function points).

Step 2:

Define the boundaries of the software being measured.

Step 3:

Count the raw function points, which are determined by adding together the weighted counts for each of the following five function types, as illustrated in Figure 18.10

:

-

External input: The weighted count of the number of unique data or control input types that cross the external boundary of the application system and cause processing to happen within it

-

External output: The weighted count of the number of unique data or control output types that leave the application system, crossing the boundary to the external world and going to any external application or element

-

External inquiry: The weighted count of the number of unique input/output combinations for which an input causes and generates an immediate output

-

Internal logical file: The weighted count of the number of logical groupings of data and control information that are to be stored within the system

-

External interface file: The weighted count of the number of unique files or databases that are shared among or between separate applications

Within each function type, the counts are weighted based on complexity and other contribution factors. Raw function point counts have clearly defined measurement methods, as established by the International Function Point Users Group (IFPUG).

Step 4:

Assign a degree of influence to each of the 14 defined by IFPUG. These factors are used to measure the complexity of the software. Each factor is measured on a scale of zero to five (five being the highest). These adjustment factors include:

-

Data communications

-

Distributed data or processing

-

Performance objectives

-

Heavily used configuration

-

Transaction rate

-

Online data entry

-

End user efficiency

-

Online update

-

Complex processing

-

Reusability

-

Conversion and installation ease

-

Operational ease

-

Multiple site use

-

Facilitate change

Figure 18.10

Function points.

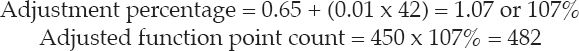

Step 5:

Adjust the raw function point count. To do this, the sum of all 14 degrees of influence to the value adjustment factors is multiplied by 0.01 and added to 0.65. This results in a value from

65 percent to 135 percent. That percentage is then multiplied by the raw function point count to calculate the adjusted function point count. For example, if the sum of the degrees of influence is 42 and the raw function point count is 450, then the adjusted function point count is calculated as:

As with lines of code, function points are used as the input into many project estimation tools, and may also be used in derived metrics to normalize those metrics, so that releases, projects, or products of different sizes can be compared (for example, defect density or productivity).

Other Size Metrics

There are many other metrics that may be used to measure the size of different software products:

-

In object-oriented development, size may be measured in terms of the number of objects, classes, or methods.

-

Requirements size may be measured in terms of the count of unique requirements, or weights may be included in the counts (for example, large requirements might be counted as 5, medium requirements as 3, and small requirements as 1). Other examples of weighted requirements counts include story points used in agile (the estimation of story points is discussed in Chapter 15 ), and use case points (based on the number and complexity of the use cases that describe the software application, and the number and type of the actors in those use cases, adjusted by factors of the technical complexity of the product and the environmental complexity of the project).

-

Design size may be measured in terms of the number of design elements (configuration items, subsystems, or source code modules).

-

Documentation is typically sized in terms of the number of pages or words, but for graphics-rich documents, counts of the number of tables, figures, charts, and graphs may also be valuable as size metrics.

-

The testing effort is often sized in terms of the number of test cases or weighted test cases (for example, large test cases might be counted as 5, medium test cases as 3, and small test cases as 1).

Complexity—Cyclomatic Complexity

McCabe’s cyclomatic complexity

is a measure of the number of linearly independent paths through a module or detailed design element. Cyclomatic complexity can therefore be used in structural testing to determine the minimum number of path tests that must be executed for complete coverage. Empirical data indicates that source code modules with a cyclomatic complexity of 10 or greater are more defect prone and harder to maintain.

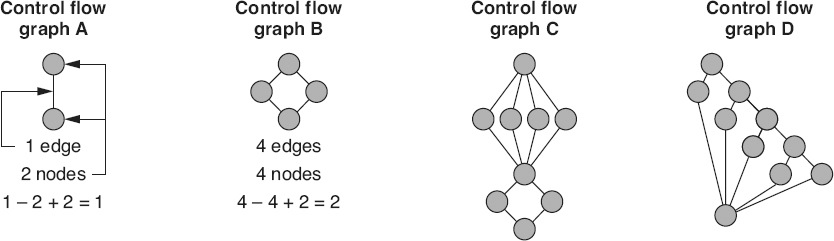

Cyclomatic complexity is calculated from a control flow graph by subtracting the number of nodes from the number of edges, and adding two times the number of unconnected parts of the graph (edges—nodes + 2p). In well-structured code, with one entry point and one exit point, there is only a single part to the graph, so p = 1. Cyclomatic complexity is a measure that is rarely calculated manually. This is a measurement where static analysis tools are extremely useful and efficient for calculate the cyclomatic complexity of the code. This explanation is included here so people understand what the tool is doing when it measures cyclomatic complexity

As illustrated in Figure 18.11

:

-

Straight-line code (control flow graph A) has one edge, two nodes and one part to the graph, so its cyclomatic complexity is 1 − 2 + 2 X 1 = 1

-

Control flow graph B is a basic if-then-else structure and has four edges and four nodes, so its cyclomatic complexity is 4 − 4 + 2 X 1 = 2

-

Control flow graph C is a case statement structure followed by an if-then-else structure. Graph C has 12 edges and nine nodes, so its cyclomatic complexity is 12 − 9 + 2 X 1 = 5.

Another way of measuring the cyclomatic complexity for well-structured software (no two edges cross each other in the control flow graph, and there is one part to the graph) is to count the number of regions in the graph. For example:

-

Control flow graph C divides the space into five regions, four enclosed regions, and one for the outside, so its cyclomatic complexity is again 5

-

Control flow graph A has a single region and a cyclomatic complexity of 1

-

Control flow graph B has two regions and a cyclomatic complexity of 2

-

Control flow graph D is a repeated if-then-else structure and has 13 edges and 10 nodes, so its cyclomatic complexity is 13 − 10 + 2 X 1 = 5 (note that it also has five regions)

Figure 18.11

Cyclomatic complexity—examples.

Just to illustrate an example of a software component where the number of parts is greater than one, assume an old piece of poorly structured legacy code has two entry points and two exit points. For example, assume control flow graphs C and D in Figure 18.11

are both part of the same source code module. That module would have 25 edges (12 from C and 13 from D), 19 nodes (9 from C and 10 from D) and 2 parts (part C and part D that are not connected). The cyclomatic complexity would be 25 − 19 + (2*2) = 10. This makes sense because both control flow graphs C and D each had a cyclomatic complexity of 5, so together they should have a cyclomatic complexity of 10.

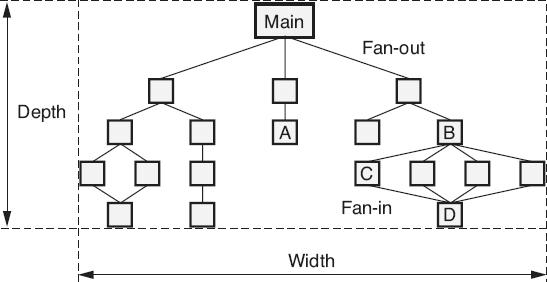

Complexity—Structural Complexity

While cyclomatic complexity is looking at the internal complexity of an individual design element or source code module, structural complexity is looking at the complexity of the interactions between the modules in a calling structure (or in the case of object-oriented development, between the classes in an inheritance tree). Figure 18.12

illustrates four structural complexity metrics. The depth and width metrics focus on the complexity of the entire structure. The fan-in and fan-out metrics focus on the individual elements within that structure.

The depth

metric is the count of the number of levels of control

in the overall structure or of an individual branch in the structure. For example, the branch in Figure 18.12

that starts with the element labeled “Main” and goes to the element labeled “A” has a depth of three. Each of the four branches that starts with the element labeled “Main” and go to the element labeled “D” has a depth of five. The depth for the entire structure is measured by taking the maximum depth of the individual branches. For example, the structure in Figure 18.12

has a depth of five.

The width

metric is the count of the span of control in the overall software system, or of an individual level within the structure. For example, the level that includes the element labeled “Main” in Figure 18.12

has a width of one. The level that includes the elements labeled “A” and “B” has a width of five. The level that includes the element labeled “C” has a width of seven. The width for the entire structure is equal to the maximum width of the individual levels. For example, the structure in Figure 18.12

has a width of seven.

Depth and width metrics can help provide information for making decisions about the integration and integration testing of the product and the amount of effort required.

Fan-out

is a measure of the number of modules that are directly called by another module (or that inherit from a class). For example, the fan-out of module Main in Figure 18.12

is three. The fan-out of module A is zero, the fan-out of module B is four, and the fan-out of module C is one.

Figure 18.12

Structural complexity—examples.

Fan-in

is the count of the number of modules that directly call a module (or that the class inherits from). For example, in Figure 18.12

, the fan-in of module A is one, the fan-in of module D is four, and the fan-in of module Main is zero.

Fan-in and fan-out metrics provide information for making decisions about the integration and integration testing of the product. These metrics can also be useful in evaluating the impact of a change and the amount of regression testing needed after the implementation of a change

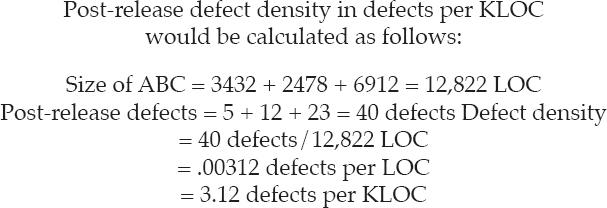

Quality—Defect Density

Defect density is a measure of the total known defects divided by the size of the software entity being measured (Number of known defects/Size). The number of known defects is the count of total defects identified against a particular software entity during a particular time period. Examples include:

-

Defects to date since the creation of the module

-

Defects found in a work product during an inspection

-

Defects to date since the shipment of a release to the customer

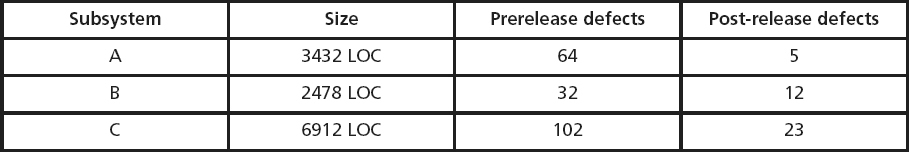

For defect density, size is used as a normalizer to allow comparisons between different software entities (for example, source code modules, releases, products) of different sizes. To demonstrate the calculation of defect density, Table 18.1

illustrates the size of the three major subsystems that make up the ABC software system and the number of prerelease and post-release defects discovered in each subsystem.

Defect density is used to compare the relative number of defects in various software components. This helps identify candidates for additional inspection or testing, or for possible reengineering or replacement. Identifying defect-prone components allows the concentration of limited resources into areas with the highest potential return on the investment. Typically this is done using a Pareto diagram as illustrated in Figure 19.16

.

Another use for defect density is to compare subsequent releases of a product to track the impact of defect reduction and quality improvement activities as illustrated in Figure 18.13

. Normalizing defect arrival rates by size allows releases of varying size to be compared. Differences between products or product lines can also be compared in this manner.

Table 18.1

Defect density inputs—example.

Figure 18.13

Post-release defect density—example.

Quality—Arrival Rates

Arrival rates

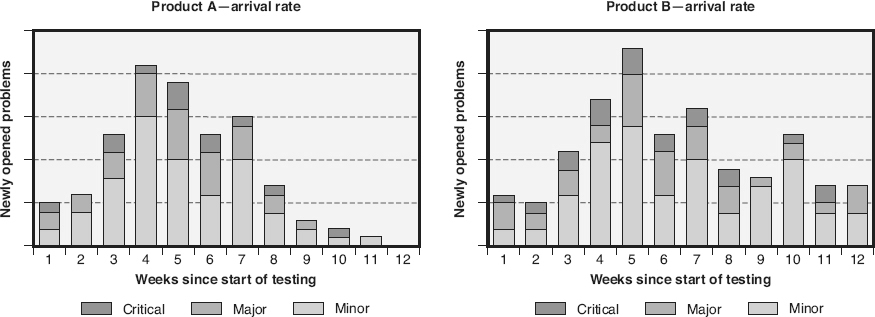

graph the trends over time of problems newly opened against a product. Note that this metric looks at problems, not defects. Testers, customers, technical support personnel, or other originators report problems because they think there is something wrong with the software. The software developers must then debug the software to determine if there is actually a defect.

In some cases, the problem report may be closed after this analysis as “operator error,” “works as designed,” “can not duplicate,” or some other non-defect-related disposition.

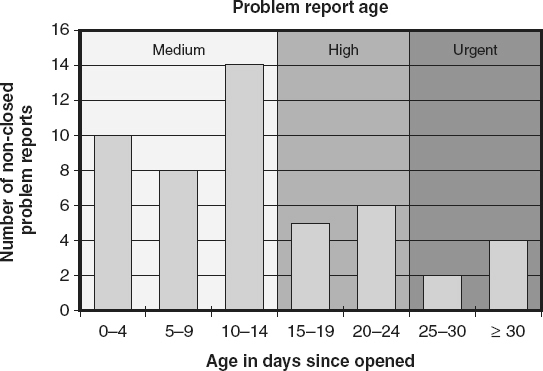

Prior to release, the objective of evaluating the arrival rate trends is to determine if the product is stable or moving toward stability. As illustrated in Figure 18.14

, when testing is first gearing up, arrival rates may be low. During the middle of testing, arrival rates are typically higher with some level of variation. However, as shown for product A, the goal is for the arrival rates to trend downward toward zero or stabilize at very low levels (the time between failures should be far apart) prior to the completion of testing and release of the software. By stacking the arrival rates by severity, additional information can be analyzed. For example, it is one thing to have a few minor problems still being found near the end of testing, but if critical problems are still being found, it might be appropriate to continue testing. Product B in Figure 18.14

does not exhibit this stabilization and therefore indicates that continued testing is appropriate. However, arrival rate trends by themselves are not sufficient to signal the end of testing. This metric could be made to “look good” simply by slowing the level of effort being expended on testing. Therefore, arrival rates should be evaluated in conjunction with other metrics, including effort rates, when evaluating test sufficiency.

Post-release arrival rate trends can also be evaluated. In this case the goal is to determine the effectiveness of the defect detection and removal processes, and process improvement initiatives. As illustrated in Figure 18.13

, post-release defect arrival rates can be normalized by release size in order to compare them over subsequent releases. Note that this metric must wait until after the problem report has been debugged, and counts only defects.

Figure 18.14

Problem report arrival rate—examples.

Figure 18.15

Cumulative problem reports by status—examples.

Quality—Problem Report Backlog

Arrival rates only track the number of problems being identified in the software. Those problems must also be debugged and defects corrected to increase the software product’s quality before that software is ready for release. Therefore, tracking the

problem report backlog

over time provides additional information. The cumulative problem reports by status metric illustrated in Figure 18.15

combines arrival rate information with problem report backlog information. In this example, four problem report statuses are used:

-

Open: The problem has not been debugged and corrected (or closed as not needing correction) by development

-

Fixed: Development has corrected the defect and that correction is awaiting integration testing

-

Resolved: The correction passed integration testing and is awaiting system testing

-

Closed: The correction passed system testing, or the problem was closed because it was not a defect

The objectives for this metric, as testing nears completion, is for the arrival rate trend (the shape of the cumulative curve) to flatten—indicating that the software has stabilized— and for all of the known problems to have a status of closed.

Quality—Data Quality

The ISO/IEC Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—Measurement of Data Quality

standard (ISO/IEC 2015) lists the following quality measures for data quality and provides associated measures:

-

Accuracy: A measure of the level of precision, correctness, and/or freedom from error in the data

-

Completeness: A measure of the extent to which the data fully includes all of the required attribute values for its associated entity

-

Consistency: A measure of extent to which the data is free from contradictions and inconsistencies, including the data’s strict and uniform adherence to prescribed data formats, types, structures, symbols, notations, semantics, and conventions

-

Credibility: A measure of the degree to which the users believe the data truly reflect the actual attribute values of the entities they describe

-

Currentness: A measure of timeliness of the data—is the data up-to-date enough for its intended use

-

Accessibility: A measure of the ease with which the data can be accessed

-

Compliance: A measure of the data’s adherence to required standards, regulations and conventions

-

Confidentiality: A measure of the degree to which only authorized users have access to the data

-

Efficiency: A measure of the degree to which the data’s performance characteristics can be accessed, stored, processed, and provided within the context of specific resources (including, memory, disk space, processor speed, and so on)

-

Precision: A measure of the data’s exactness and discrimination, representing the true values of the attributes for its associated entity

-

Traceability: A measure of the degree to which an audit trail exists of any events or actions that accessed or changed the data

-

Understandability: A measure of the degree to which the data can be correctly read and interpreted by the users

-

Availability: A measure of the extent to which the data is available for access and/ or use when needed

-

Portability: A measure of the effort required to migrate the data to a different platform or environment

-

Recoverability: A measure of the effort required to retrieve, refresh, or otherwise recover the data if it is lost, corrupted,or damaged in any way

Amount of Test Coverage Needed

There are a number of metrics that can help predict the amount of test coverage needed. For example, for unit-level white-box tests, metrics for measuring the needed amount of test coverage might include:

-

Number of source code modules tested: At its simplest, coverage is measured to confirm that all source code modules are unit tested

-

Number of lines of code: Coverage is measured to confirm that all statements are tested

-

Cyclomatic complexity: Coverage is measured to confirm that all logically independent paths are tested

-

Number of decisions: Coverage is measured to confirm that all choices out of each decision are tested

-

Number of conditions: Coverage is measured to confirm that all conditions used as a basis for all decisions are tested

For integration testing, metrics for measuring the needed amount of test coverage might include:

-

Fan-in and fan-out: During integration testing, coverage is measured to confirm that all interfaces are tested

-

Integration complexity: Coverage is measured using McCabe’s integration complexity metric to confirm that independent paths through the calling tree are tested

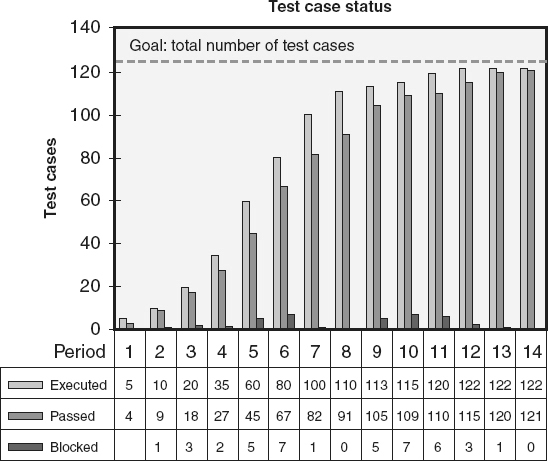

For functional (black box) testing, metrics for measuring the

needed amount of test coverage might include the number of requirements and the historic number of test cases per requirement. By multiplying these two metrics (Number of requirements × Historic number of test cases per requirement), the number of functional test cases for the project can be estimated. A forward-traceability metric can be used to track test case coverage during test design. For example, dividing the number of functional requirements that trace forward to test cases by the total number of functional requirements provides an estimate of the completeness of functional test case coverage. A graph such as the one depicted in Figure 18.16

can then be used to track the completeness of test coverage against the number of planned test cases during test execution.

Figure 18.16

Completeness of test coverage—examples.

Requirements Volatility

Requirements volatility,

also called requirements churn

or scope creep,

is a measure of the amount of change in the requirements once they are baselined. Jones (2008) reports that in the United States, requirements volatility averages range from:

-

0.5 percent monthly for end user software

-

1.0 percent monthly for management information systems and outsourced software

-

2.0 percent monthly for systems and military software

-

3.5 percent monthly for commercial software

-

5.0 percent monthly for Web software

-

10.0 percent monthly for agile projects, however, Jones states that the “high rate of creeping requirements for agile projects is actually a deliberate part of the agile method”

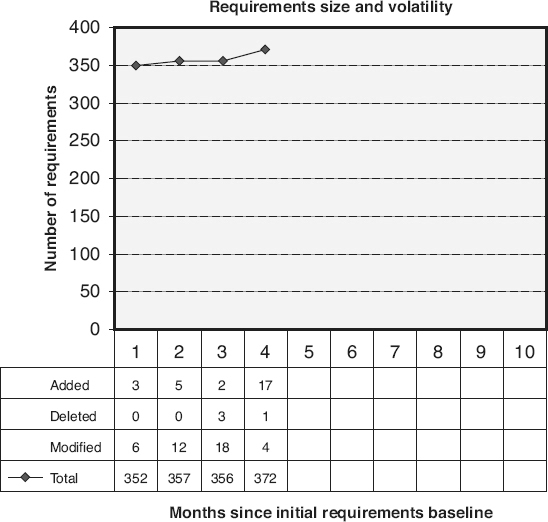

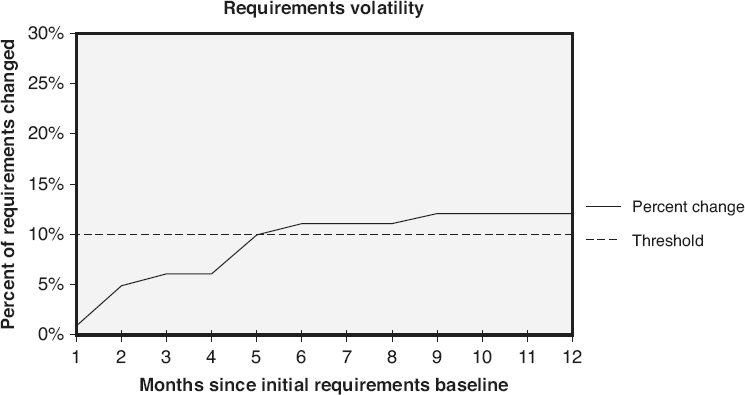

It is not a question of “if the requirements will change” during a project, but “how much will the requirements change?” Since requirements change is inevitable, it must be managed, and to appropriately manage requirements volatility, it must be measured. Figure 18.17

is an example of a graph that tracks requirements volatility as the number of changes to requirements over time. The line on this graph reports the current requirements size (number of requirements or weighted requirements). The data table includes details about the number of requirements added, deleted, and modified. This detail is necessary to understand the true requirements volatility. For example, if five requirements are modified, two new requirements are added, and two other requirements are deleted, the number of requirements remains unchanged even though a significant amount of change has occurred.

Figure 18.17

Requirements volatility: change to requirements size—example.

Another example of a requirements-volatility metric is illustrated in Figure 18.18

. Instead of tracking the number of changes, this graph looks at the percentage of baselined requirements that have changed over time. A good project manager understands that the requirements will change and takes this into consideration when planning project schedules, budgets, and resource needs. The risk is not that requirements will change—

that is a given. The risk is that more requirements change will occur than the project manager estimated when planning the project. In this example, the project manager estimated 10 percent requirements volatility, and then tracked the actual volatility to that threshold. In this example, the number of changed requirements is the cumulative count of all requirements added, deleted, or modified after the initial requirements are baselined and before the time of data extraction. If the same requirement is changed more than once, the number of changed requirements is incremented once for each change. These metrics act as triggers for contingency plans based on this risk.

Other metrics used to measure requirements volatility include:

-

Function point churn: The ratio of the function point changes to the total number of function points baselined

-

Use case point churn: The ratio of the use case point changes to total number of use case points baselined

Reliability

Reliability

is a quality attribute describing the extent to which the software can perform its functions without failure for a specified period of time under specified conditions. The actual reliability of a software product is typically measured in terms of the number of defects in a specific time interval (for example, the number of failures per month), or the time between failures (mean time to failure). Software reliability models are utilized to predict the future reliability or the latent defect count of the software. There are two types of reliability models: static and dynamic.

Static reliability models

use other project or software product attributes (for example, size, complexity, programmer capability) to predict the defect rate or number of defects). Typically, information from previous products and projects is used in these models, and the current project or product is viewed as an

additional data point in the same population.

Figure 18.18

Requirements volatility: percentage requirements change—examples.

Dynamic reliability models

are based on collecting multiple data points from the current product or project. Some dynamic models look at defects gathered over the entire life cycle, while other models concentrate on defects found during the formal testing phases at the end of the life cycle. Examples of dynamic reliability models include:

-

Rayleigh model

-

Jelinski-Moranda (J-M) model

-

Littlewood (LW) models

-

Goel-Okumoto (G-O) imperfect debugging model

-

Goel-Okumoto nonhomogeneous Poisson process (NHPP) model

-

Musa-Okumoto (M-O) logarithmic Poisson execution time model

-

Delayed S and inflection S models

Appropriately implementing a software reliability model requires an understanding of the assumptions underlying that model. For instance, the J-M model’s five assumptions are: (Kan 2003)

-

There are N unknown software faults at the start of testing

-

Failures occur randomly, and times between failures are independent

-

All faults contribute equally to cause a failure

-

Fix time is negligible

-

Fix is perfect for each failure; there are no new faults introduced during correction

Various other models make different assumptions than the J-M model. When selecting a model, consideration must be given to the likelihood that the model’s assumptions will be met by the project’s software environment.

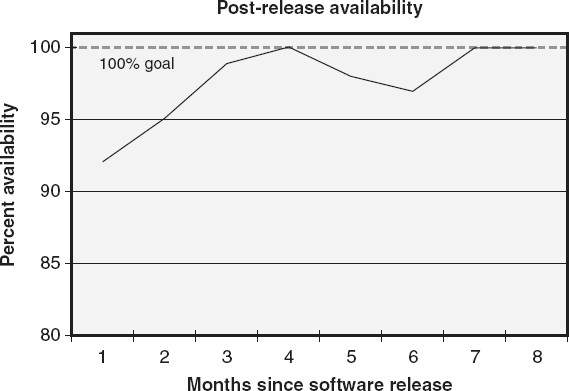

Availability

Availability is a quality attribute that describes the extent to which the software or a service is available for use when needed. Availability is closely related to reliability because unreliable software that fails frequently is unavailable for use because of those failures. Availability is also dependent on the ability to restore the software product to a working state. Therefore, availability is also closely related to maintainability in cases where a software defect caused the failure and that defect must be corrected before the operations can continue.

In the example of an availability metric illustrated in Figure 18.19

, post-release availability is calculated each month by subtracting from one the sum of all of the minutes of outage that

month, divided by the sum of all the minutes of operations that month, and multiplying by 100 percent. Tracking availability helps relate software failures and reliability issues to their impact on the user community.

When defining reliability and availability metric for software, care should be taken not to use formulas intended for calculating hardware reliability and availability to evaluate software. This is because:

-

Unlike hardware, software does not wear out and/or fail over time because of aging

-

Unlike hardware where manufacturing can interject the same error multiple times (bent pins, broken wires, solder splashed), once a defect is found and removed from the software that defect is gone, assuming good configuration management practices do not allow the defective component to be built back into the software by mistake

Figure 18.19

Availability—example.

System Performance

System performance metrics are used to evaluate a number of execution characteristics of the software. These can be measured during testing to evaluate the future performance of the product, or during actual execution. Examples of performance metrics include:

-

Throughput: A measure of the amount of work performed by a software system over a period of time, for example:

-

Mean or maximum number of transactions per time period

-

Percentage of throughputs per period that are compliant with requirements

-

-

Response time: A measure of how quickly the software reacts to a given input or how quickly the software performs a function or activity, for example:

-

Mean or maximum response time

-