Use desk checks, peer reviews, walk-throughs, inspections, etc. to identify defects (Apply)

BODY OF KNOWLEDGE VI.C

The ISO/IEC/IEEE Systems and Software Engineering - Vocabulary (ISO/IEC/IEEE 2010) defines a review as “a process or meeting during which a work product, or set of work products, is presented to project personnel, managers, users, customers, or other interested parties for comment or approval.” Reviews may be conducted as part of software development, maintenance, and acquisition activities.

The IEEE Standard for Software Reviews and Audits (IEEE 2008) defines a management review as “A systematic evaluation of a software product or process performed by or on behalf of management that monitors progress, determines the status of plans and schedules, confirms requirements and their system allocation, or evaluates the effectiveness of management approaches used to achieve fitness for purpose.” Management reviews are used to:

- Evaluate the effectiveness and efficiency of management approaches, strategies, and plans
- Monitor project, program and operational status and progress, against plans and objectives
- Evaluate variance from plans and objectives
- Track issues, anomalies, and risks
- Provide management with visibility into the status of projects, programs and/or operations
- Provide management with visibility into product and process quality
- Make decisions about corrective and/or preventive action
- Implement governance

Typically management reviews evaluate the results of verification and validation (V&V) activities, but are not used directly for V&V purposes. Examples of project and program related management reviews are discussed in Chapter 16.

However, three other major types of reviews are used directly for V&V purposes and will be discussed in this chapter, including:

- Technical reviews
- Pair programming
- Peer reviews:
  - Desk checks
  - Walk-throughs
  - Inspections

V&V Review Objectives

The primary objective of V&V reviews is to identify and remove defects in software work products as early in the software life cycle as possible. It can be very difficult for authors to find defects in their own work product. Most software practitioners have experienced situations where they hunt and hunt for that elusive defect and just cannot find it. When they ask someone else to help, the other person takes a quick look at the work product and spots the defect almost instantly. That is the power of reviews.

Another objective of V&V reviews is to provide confidence that work products meet requirements and stakeholders’ needs. Reviews can be used to validate that the requirements appropriately capture the stakeholders’ needs. Reviews can also be used to verify that all of the functional requirements and quality attributes have been adequately implemented in the design, code, and other software products, and are being effectively evaluated by the tests.

V&V reviews are also used to check the work product for compliance to standards. For example, the design can be peer reviewed to verify that it matches modeling standards and notations, or a source code module can be reviewed to verify that it complies with coding standards and naming conventions.

Finally, V&V reviews can be used to identify areas for improvement. This does not mean “style” issues—if it is a matter of style, the author wins. However, reviewers can identify opportunities to increase the quality of the software. For example:

- When reviewing a source code module, a reviewer might identify a more efficient sorting routine, or a method of removing redundant code, or even identify areas where existing code can be reused.
- During a review, a tester might identify issues with the feasibility or testability of a requirement or design.
- Reviewers might identify maintainability issues. For example, in a code review, inadequate comments, hard-coded variable values, or confusing code indentation might be identified as areas for improvement.

What to Review

Every work product that is created during software development can be reviewed as part of the V&V activities. However, not every work product should be. Before a review is held, practitioners should ask the question, “Will it cost more to perform this review than the benefit of holding it is worth?” Reviews, like any other process activity, should always be value-added or they should not be conducted.

Every work product that is delivered to a customer, user, or other external stakeholder should be considered as a candidate for review. Work product deliverables include responses to requests for proposals, contracts, user manuals, requirements specifications, and, of course, the software and its subcomponents. Every deliverable is an opportunity to make a positive, or negative, quality impression on the organization's external stakeholders. In addition, any work products that are inputs into, or have a major influence on, the creation of these deliverables are also candidates for review. For example, architecture and component designs, interface specifications, or test cases may never get directly delivered, but defects in those work products can have a major impact on the quality of the delivered software.

So what does not get reviewed? Actually, many work products created in the process of developing, acquiring, and maintaining software may not be candidates for reviews. For example, many quality records, such as meeting minutes, status/progress reports, test logs, and defect reports, typically are not candidates for review.

Types of Reviews

—Technical Reviews

The IEEE Standard for Software Reviews and Audits (IEEE 2008) states that the “purpose of a technical review is to evaluate a software product by a team of qualified personnel to determine its suitability for its intended use and identify discrepancies from specifications and standards.” Technical reviews can also be used to evaluate alternatives to the technical solution, to provide engineering analysis, and to make engineering tradeoff decisions. One of the primary differences between technical reviews in general, and peer reviews specifically, is that managers can be active participants in technical reviews while only peers of the author participate in peer reviews.

The IEEE Standard for Software Reviews and Audits (IEEE 2008) defines the following major steps in the technical review process:

- Management preparation: Project management makes certain that the reviews are planned, scheduled, funded, and staffed by appropriately trained and available individuals.
- Planning: The review leader plans the review, which includes identifying the members of the review team, assigning roles and responsibilities, handling scheduling and logistics for the review meeting, distributing the review package, and setting the review schedule.
- Overview: Overviews of the review process and/or the software product being reviewed are presented to the review team as needed.
- Preparation: Each review team member reviews the work product and other inputs included in the review package before the review meeting. These reviewers forward their comments and identified problems to the review leader, who classifies them and forwards them on to the author for disposition. The review leader also verifies that the reviewers are prepared for the meeting and takes the appropriate corrective action if not.
- Examination: Each software work product included in the review is evaluated to:
  - Verify the work product's completeness, suitability for its intended use, and readiness to transition to the next activity
  - Verify that any changes to the work product are appropriately implemented, including dispositions to problems identified during preparation
  - Confirm compliance with applicable regulations, standards, specifications, and plans
  - Identify additional defects and provide other engineering inputs on the reviewed work product and other associated work products

  The results of these evaluations are documented, including a list of action items. The team leader determines if the number or criticality of any identified defects and/or nonconformances warrants the scheduling of an additional review to evaluate their resolutions.
- Rework/follow-up: After the evaluation, the review leader confirms that all assigned action items and identified defects are appropriately resolved.

Types of Reviews

—Pair Programming

Pair programming

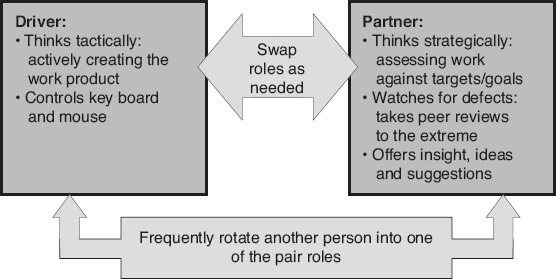

involves two people, one of whom is constantly reviewing what the other is developing. As illustrated in Figure 22.1

, pair programming offers a constant exchange of ideas about how the software can work. If one person hits a mental block, the other person can jump in and try something. Pair programming is a technique that helps people keep up the steady pace that agile methods advocate, through being fully engaged in the work and keeping one another “honest” (consistent about adhering to the project’s practices, standards, and so on). Martin (2003) notes that pair programming interactions can be “intense.” He advocates that “over the course of the iteration, every member of the team should

have worked with every other member of the team and they should have worked on just about everything that was going on in the iteration.” By doing this, everyone on the team comes to understand and appreciate everyone else’s work, as well as develop a deep understanding of the software as a whole. Pair programming is one way to avoid extraneous documentation since everyone shares in the requirements and design knowledge of the project.

Types of Reviews

—Peer Reviews

A peer review is a type of V&V review where one or more of the author’s peers evaluate a work product to identify defects, to obtain a confidence level that the product meets its requirements and intended use, and/or to identify opportunities to improve that work product. The author of a work product is either the person who originally produced that work product or the person who is currently responsible for maintaining it.

Figure 22.1 Pair programming.

Benefits of Peer Reviews

The Capability Maturity Model Integration (CMMI) for Development (SEI 2010) states, “Peer reviews are an important part of verification and are a proven mechanism for effective defect removal.” The benefits of peer reviews, especially formal inspections, are well documented in the industry. For example, more defects are typically found using peer reviews than other V&V methods. Capers Jones (2008) reports, “The defect removal efficiency levels of formal inspections, which can top 85 percent, are about twice those of any form of testing.” Well-run inspections with highly experienced inspectors can obtain 90 percent defect removal effectiveness (Wiegers 2002). “Inspections can be expected to reduce defects found in field use by one or two orders of magnitude” (Gilb 1993).
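The efficiency figures quoted above can be made concrete with a small calculation. The sketch below assumes the common definition of defect removal efficiency: defects removed by an activity divided by the defects that were present when the activity was performed. The function name and the sample counts are illustrative assumptions, not data from the cited studies.

```python
def defect_removal_efficiency(found: int, escaped: int) -> float:
    """Return the fraction of the defects present that the activity removed.

    found   -- defects detected (and removed) by the review or test activity
    escaped -- defects that were present at that time but only found later
    """
    total = found + escaped
    if total == 0:
        raise ValueError("no defects recorded; efficiency is undefined")
    return found / total


# Hypothetical counts chosen only to illustrate the arithmetic.
inspection_dre = defect_removal_efficiency(found=34, escaped=6)
testing_dre = defect_removal_efficiency(found=17, escaped=23)
print(f"inspection: {inspection_dre:.0%}, testing: {testing_dre:.1%}")
# prints "inspection: 85%, testing: 42.5%"
```

Note that the escaped count is only known in hindsight, so a figure like this is normally computed after later V&V activities or field use have exposed the defects that the review missed.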

It typically takes much less time, per defect, to identify defects during peer reviews than it does using any of the other defect detection techniques. For example, Kaplan reports that at IBM’s Santa Teresa laboratory, it took an average of 3.5 labor hours to find a major defect using code inspection, while it took 15 to 25 hours to find a major defect during testing (Wiegers 2002). It also typically takes much less time to fix the defect because the defect is identified directly in the work product, which eliminates the need for potentially time-consuming debugging activities. Peer reviews can be used early in the life cycle, on work products such as requirements and design specifications, to eliminate defects before those defects propagate into other work products and become more expensive to correct.
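To show what the per-defect figures above imply for a whole work product, the following sketch multiplies them out. The hours per defect come from the text; the defect count of 20 and the function name are hypothetical, chosen only for illustration.

```python
INSPECTION_HOURS_PER_DEFECT = 3.5          # average reported for code inspection
TESTING_HOURS_PER_DEFECT = (15.0, 25.0)    # range reported for testing


def detection_effort(major_defects: int) -> dict:
    """Estimate labor hours needed to find the given number of major defects."""
    low, high = TESTING_HOURS_PER_DEFECT
    return {
        "inspection": major_defects * INSPECTION_HOURS_PER_DEFECT,
        "testing_low": major_defects * low,
        "testing_high": major_defects * high,
    }


effort = detection_effort(major_defects=20)  # 20 is a hypothetical count
print(effort)
# prints {'inspection': 70.0, 'testing_low': 300.0, 'testing_high': 500.0}
```

Under these assumptions, finding 20 major defects takes about 70 labor hours by inspection versus 300 to 500 hours by testing, before counting any debugging time needed to locate each defect after a test failure.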

Peer reviews also provide opportunities for cross-training. Less-experienced practitioners can benefit from seeing what a high-quality work product looks like when they help peer review the work of more experienced practitioners. More-experienced practitioners can provide engineering analysis and improvement suggestions, which help transition knowledge, when they review the work of less-experienced practitioners. Peer reviews also help spread product, project, and technical knowledge around the organization. For example, after a peer review, more than one practitioner is familiar with the reviewed work product and can potentially support it if its author is unavailable. Peer reviews of requirements and design documents aid in communications, and help promote a common understanding that is beneficial in future development activities. For example, these peer reviews can help identify and clarify assumptions or ambiguities in the work products being reviewed.

Peer reviews can help establish shared workmanship standards and expectations. They can build a synergistic mind-set, as the work products transition from individual to team ownership with the peer review.

Finally, peer reviews provide data that aid the team in assessing the quality and reliability of the work products. Peer review data can also be used to drive future defect prevention and process improvement efforts.

Selecting Peer Reviewers

Peer reviewers are selected based on the type and nature of the work product being reviewed. Reviewers should be peers of the author and possess enough technical and/or domain knowledge to allow for a thorough evaluation of the work product. For a peer review to be effective, the reviewers must also be available to put in the time and energy necessary to conduct the review. There are a variety of reasons why individuals might be unavailable to participate, including time constraints, the need to focus on higher-priority tasks, or the belief that they have inadequate domain/technical knowledge. If an “unavailable” individual is considered essential to the success of the peer review, these issues need to be dealt with to the satisfaction of that individual, prior to assigning them to participate in the peer review.

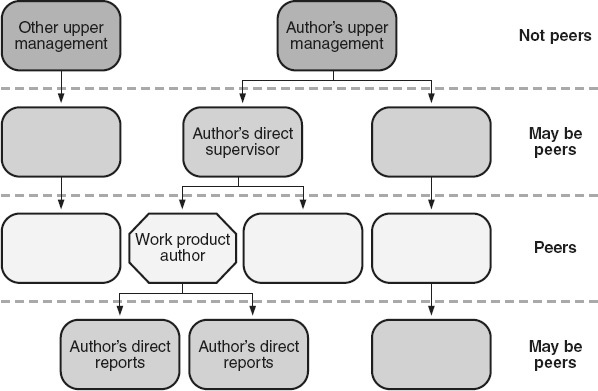

Peers are the people at the same level of authority in the organization as the work product’s author. The general rule for peer reviews is that managers do not participate, because they are not peers. If managers participate in the reviews, then:

- Authors are more reluctant to have their work products peer reviewed because the defects found might reflect badly on the author in the eyes of their management
- Reviewers are more reluctant to report defects in front of managers and make their colleagues look bad, knowing that it might be their turn next

However, it is not quite that simple. It depends a lot on the culture of the organization. In organizations where first-line managers are working managers (active members of the team), or where participative management styles prevail, having the immediate supervisor in the peer review may be a real benefit. They may possess knowledge and skills that are assets to the peer review team and the author. Individuals from one level above or below the author in the organizational hierarchy, as illustrated in Figure 22.2, can be considered as reviewers, but only if the author is comfortable with that selection. In other words, ask the author. If the author is comfortable having their supervisor in the review, other reviewers will probably be comfortable as well.

“Peers” in a peer review does not mean “clones.” For example, in a requirements peer review where the author is a systems analyst, the peer review team should not be limited to other systems analysts. Reviewers with different perspectives increase the probability of finding additional defects and different kinds of defects. The reviewers should be selected to maximize this benefit by choosing diverse participants, including:

- The author of the work product that is the predecessor to the work product that is being reviewed. “The predecessor perspective is important because one … goal is to verify that the work product satisfies its specification” (Wiegers 2002).
- The authors of dependent (successor) work products, and authors of interfacing work products, who have a strong vested interest in the quality of the work product. For example, consider inviting a designer and a tester to the requirements peer review. Not only will they help find defects but they are excellent candidates for looking at issues such as understandability, feasibility, and testability.
- Specialists, who may also be called on when special expertise can add to the effectiveness of the peer review (for example, security experts, safety experts, and human factors experts).

Figure 22.2 Selecting peer reviewers.

This does not mean that others with the same job as the author are excluded from the review. They have the knowledge of the workmanship standards and best practices for the specific product. They are also most familiar with the common defects made in that type of work product. This also adds diversity to the team.

For most peer reviews, the peers are typically other members of the same project team. However, for small projects or projects that have only a single person with a specialized skill set, it may be necessary to ask people from other projects to participate in the peer reviews.

As mentioned above, peer reviews are wonderful training grounds for teaching new people programming techniques, product and domain knowledge, processes, and other valuable information. However, too many trainees can also impact the efficiency and effectiveness of the peer review. There may also be several reasons why individuals may want to observe peer reviews. For example, an auditor may be observing to verify that the peer review is being conducted in accordance with documented processes, or an inspection moderator trainee may be observing an experienced moderator in action. Observers can be distracting to team members, even if they are not participating in the actual review. Limiting the number of trainees and observers in a peer review meeting to no more than one or two per meeting is recommended.

Management’s primary responsibility to the peer review process is to champion that process by emphasizing its value and maintaining the organization’s commitment to the process. To do this, management must understand that while the investment in peer reviews is required early in the project, their true benefits (their return on that investment) will not be seen until the later phases of the life cycle, or even after the product is released. Management can champion peer reviews by:

- Providing adequate scheduling and resources for peer reviews
- Incorporating peer reviews into the project plans
- Making certain that training in the review techniques being used is planned and completed for all software practitioners participating in peer reviews
- Advocating and supporting the usefulness of peer reviews through communications and encouragement
- Appropriately using data and metrics from the peer reviews
- Being brave enough to stay away from the actual peer reviews unless specifically invited to participate

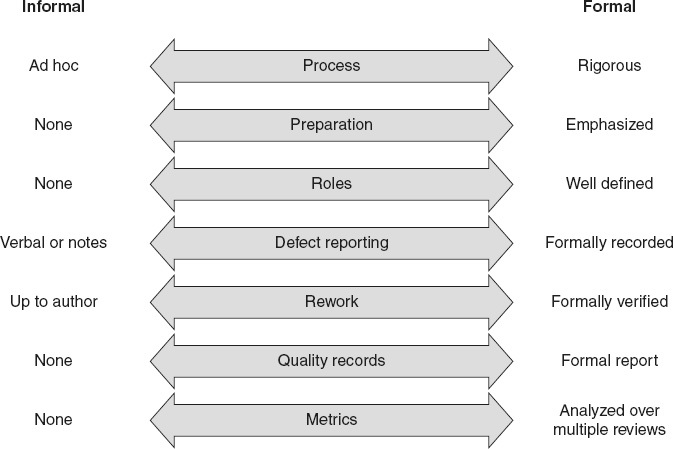

Informal Versus Formal Peer Reviews

Peer reviews can vary greatly in their level of formality, as illustrated in Figure 22.3. At the most informal end of the peer review spectrum, a software practitioner can ask a colleague to, “Please take a look at this for me.” These types of informal peer reviews are performed all the time. It is just good practice to get a second pair of eyes on a work product when the practitioner is having problems, needs a second opinion, or wants to verify their work. These informal reviews are done ad hoc with no formal process, no preparation, no quality records or metrics. Defects are usually reported either verbally or as redlined mark-ups on a draft copy of the work product. Any rework that results from these informal peer reviews is up to the author’s discretion.

On the opposite end of the spectrum is the formal peer review. In formal peer reviews:

- A rigorous process is documented, followed, and continuously improved with feedback from peer reviews as they are being conducted
- Preparation before the peer review meeting is emphasized
- Peer review participants have well-defined roles and responsibilities to fulfill during the review
- Defects are formally recorded, and that list of defects, and a formal peer review report, become quality records for the review
- The author is responsible for the rework required to correct the reported defects, and that rework is formally verified by either re-reviewing the work product or through checking done by another member of the peer review team (for example, the inspection moderator)
- Metrics are collected and used as part of the peer review process, and are also used to analyze multiple reviews over time as a mechanism for process improvement and defect prevention
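The quality records and metrics listed above could be captured in a structure like the one sketched below. This is only an illustration: the class names, the fields, the two-level severity scale, and the defects-per-hour metric are all assumptions, not a record format prescribed by the text or by any standard.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Defect:
    location: str        # where in the work product the defect was found
    description: str
    severity: str        # "major" or "minor" (an assumed two-level scale)
    resolved: bool = False


@dataclass
class PeerReviewRecord:
    work_product: str
    preparation_hours: float    # total across all reviewers
    meeting_hours: float        # total across all participants
    defects: List[Defect] = field(default_factory=list)

    def total_effort(self) -> float:
        return self.preparation_hours + self.meeting_hours

    def defects_per_hour(self) -> float:
        effort = self.total_effort()
        return len(self.defects) / effort if effort else 0.0

    def rework_verified(self) -> bool:
        """True when every logged defect has been marked resolved."""
        return all(d.resolved for d in self.defects)


record = PeerReviewRecord("login module design", 6.0, 4.0)
record.defects.append(Defect("section 3.2", "missing timeout handling", "major"))
record.defects.append(Defect("section 4.1", "ambiguous state name", "minor", resolved=True))
print(record.defects_per_hour())   # prints 0.2
print(record.rework_verified())    # prints False
```

Keeping one such record per review is what makes it possible to compare reviews over time, which is the process improvement use described in the last bullet.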

Figure 22.3 Informal versus formal peer reviews.

Types of Peer Reviews

There are different types of peer reviews, called by many different names in the software industry. Peer reviews go by names such as team reviews, technical reviews, walk-throughs, inspections, pair reviews, pass-arounds, ad hoc reviews, desk checks, and others. However, the author has found that most of these can be classified into one of three major peer review types:

- Desk checks
- Walk-throughs
- Inspections

Table 22.1 compares and contrasts these three major types of peer reviews. Figure 22.4 illustrates that while inspections are always very formal peer reviews, the level of formality in desk checks and walk-throughs varies greatly depending on the needs of the project, the timing of the reviews, and the participants involved.

The type of peer review that should be chosen depends on several factors:

- First, inspections are focused purely on defect detection. If one of the goals of the peer review is to provide engineering analysis and improvement suggestions (for example, reducing unnecessary complexity, suggesting alternative approaches, identifying poor methods or areas that can be made more robust), a desk check or walk-through should be used.
- The maturity of the work product being reviewed should also be considered when selecting the peer review type. Desk checks or walk-throughs can be performed very early in the life of the work product being reviewed (for example, as soon as the source code module has a clean compile or a document has been spell-checked). In fact, walk-throughs can be used just to bounce around very early concepts before there even is a work product. However, inspections are only performed when the author thinks the work product is done and ready to transition into the next phase or activity in development.

Table 22.1 Compare and contrast major peer review types.

| Desk Checks | Walk-Throughs | Inspections |
| --- | --- | --- |
| Individual peer(s) | Team of peers | Team of peers |
| Evaluate product | Evaluate product | Evaluate product |
| First draft or clean code compile | First draft or clean code compile | Product ready for transition |
| Can range from informal to formal | Can range from informal to formal | Always formal |
| Focus on defect detection and engineering analysis | Focus on defect detection and engineering analysis | Focus only on defect detection |
| Find errors as early as possible | Find errors as early as possible | Find errors before transition |
| No preparation | Preparation less emphasized | Preparation emphasized |
| No one presents the work product | Author presents the work product | Separate reader role presents the work product |
| Checklists not required | Checklists not required | Checklists required |
| Might collect metrics | Might collect metrics | Emphasizes use of metrics |

Figure 22.4 Types of peer reviews.

- Staff availability and location can also be a factor. If the peer review team is geographically dispersed, it can be much easier to perform desk checks than walk-throughs or inspections. Of course, modern online meeting tools have minimized this distinction.
- Economic factors such as cost, schedule, and effort should also be considered. Team reviews tend to cost more and take longer than individuals reviewing separately. More-formal peer reviews also tend to cost more and take longer. However, the trade-off is the effectiveness of the reviews. Team peer reviews take advantage of team synergy to find more defects. More-formal reviews are typically more thorough, and therefore more effective at identifying defects.
- Risk is the final factor to consider when choosing which type of peer review to hold. Risk-based peer reviews are discussed below.

Risk-Based Peer Reviews

Risk-based peer reviews are simply a type of risk-based V&V activity. Risk analysis should be performed on each work product that is being considered for peer review to determine the probability that yet-undiscovered, important defects exist in that work product and the potential impact of those defects if they escape the peer review. If there is both a low probability and a low impact of undetected defects, then an informal desk check by a single peer may be appropriate, as illustrated in Figure 22.5. As the probability and impact increase, the type of peer review that is most appropriate moves to a more-formal desk check, to informal walk-through, to more-formal walk-through, and then to formal inspection. For a very high-risk work product, having multiple peer reviews may be appropriate. For example, for high-risk work products like requirements, each set or section of requirements may be desk checked or have a walk-through as it is being developed, and then the entire requirements specification may be inspected late in its development, just before it is transitioned.

The number of people performing the peer review may also vary based on risk. For very low-risk work products, having a single individual perform a desk check may be sufficient. For slightly higher-risk work products, it may be appropriate to have multiple people perform the desk check. For products worthy of an investment in an inspection, less risky work products may be assigned a smaller inspection team of two to four people, and higher-risk products may be assigned an inspection team of five to seven people.
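The escalation described above can be sketched as a simple lookup. The ladder of review types and the inspection team sizes are taken from the text; the three-level rating scale, the additive score, and the function names are illustrative assumptions, since the text does not define how probability and impact are combined.

```python
REVIEW_LADDER = [
    "informal desk check",
    "more-formal desk check",
    "informal walk-through",
    "more-formal walk-through",
    "formal inspection",
]

# Assumed three-level rating scale for both probability and impact.
LEVELS = {"low": 0, "medium": 1, "high": 2}


def select_peer_review(probability: str, impact: str) -> str:
    """Map a probability/impact rating to a review type on the ladder."""
    score = LEVELS[probability] + LEVELS[impact]   # 0 (lowest) to 4 (highest)
    return REVIEW_LADDER[score]


def inspection_team_size(higher_risk: bool) -> range:
    """Return the suggested range of inspection team sizes."""
    return range(5, 8) if higher_risk else range(2, 5)


print(select_peer_review("low", "low"))       # prints "informal desk check"
print(select_peer_review("medium", "high"))   # prints "more-formal walk-through"
print(list(inspection_team_size(True)))       # prints [5, 6, 7]
```

An additive score treats probability and impact as equally important. An organization that weights impact more heavily would replace the score calculation with its own risk matrix.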

Risk-based peer reviews also embrace the law of diminishing returns. When a software work product is first peer reviewed, many of the defects that exist in the product are discovered with less effort. As additional peer reviews are held, the probability of discovering any additional defects decreases. At some point, the return-on-investment to discover those last few defects is outweighed by the cost of additional peer reviews.
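A rough model makes the diminishing-returns argument easier to see. The sketch below assumes that each successive peer review finds a fixed fraction of the defects still remaining, and that each defect found saves a fixed amount of downstream effort. Both are simplifying assumptions, and every parameter value is hypothetical.

```python
def reviews_worth_holding(initial_defects: float,
                          detection_rate: float,
                          value_per_defect: float,
                          cost_per_review: float,
                          max_reviews: int = 10) -> int:
    """Count successive reviews whose expected benefit exceeds their cost."""
    remaining = initial_defects
    held = 0
    for _ in range(max_reviews):
        expected_found = remaining * detection_rate
        if expected_found * value_per_defect <= cost_per_review:
            break   # the next review would cost more than it returns
        remaining -= expected_found
        held += 1
    return held


# 40 latent defects, each review finds 60% of what remains, each defect
# found saves 10 labor hours later, and each review costs 30 labor hours.
print(reviews_worth_holding(40, 0.6, 10, 30))   # prints 3
```

With these numbers the expected finds per review are 24, 9.6, 3.84, and about 1.5 defects. The fourth review would return roughly 15 hours of benefit for 30 hours of cost, so the model stops at three.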

Figure 22.5 Risk-based selection of peer review types.

Soft Skills for Peer Reviews

One of the challenges an author faces during a peer review is to remain “egoless” during the process (especially in the meetings), and not become defensive or argumentative. An egoless approach enables an author to step back and accept improvement suggestions and acknowledge identified defects without viewing them as personal attacks on their professional abilities or their value as an individual. One way of doing this is for the author to think of the work product as transitioning from their personal responsibility, to becoming the team’s product when it is submitted for review. The other peer reviewers should also take an egoless approach and not try to prove how intelligent they are by putting down the work of the author, the comments of other reviewers, or the work product itself. During a peer review, everyone should treat the work product, the other review members, and especially the author with respect.

Throughout the peer review process the focus should be on the product, not on people. One way to accomplish this is to use “I” and “it” messages, avoiding the word “you” during discussions. For example, a reviewer might say, “I did not understand this logic” or “the logic in this section of the work product has a defect” rather than saying “you made a mistake in this logic.” Another method is to word comments as neutral facts, positive critiques, or questions rather than criticisms.

During the peer review meetings, team members should not interrupt each other. They should listen actively to what other team members say and build on the inputs from other reviewers to find more defects. The author should not be forced to acknowledge every defect during a peer review meeting. This can really beat the author down. Assume that the author’s silence indicates their agreement that the defect exists.

Types of Peer Reviews

—Desk Checks

A desk check is the process where one or more peers of a work product’s author review that work product individually. Desk checks can be done to detect defects in the work product and/or to provide engineering analysis. The formality used during the desk check process can vary. Desk checks can be the most informal of the peer review processes or more formal peer review techniques can be applied. A desk check can be a complete peer review process in and of itself, or it can be used as part of the preparation step for a walk-through or inspection. The effectiveness of the desk check process is highly dependent on the skills of the individual reviewers and the amount of care and effort they invest in the review.

An informal desk check begins when the author of a work product asks one or more of their peers to evaluate that work product. Those peer reviewers then desk check the work product independently and feed back their comments to the author either verbally or by providing a copy of the work product that has been annotated (redlined) with their comments. This is typically done on the first draft of a document or after a clean compile of the source code module. However, desk checks can be performed at any time during the life of the work product.

Desk checks can also be done in a more formal manner. For example, when a desk check is done as part of a review and approval cycle, a defined process may be followed with specific assigned roles (for example, software quality engineer, security specialist or tester) and formally recorded defects. These more-formal desk checks may result in quality records being kept, including formal peer review defect logs and/or formal written reports. Formal desk checks can even involve reviewer follow-up by re-reviewing the updated work product to verify that defects were appropriately corrected and that engineering suggestions were incorporated.

During a desk check, the reviewers should not try to find everything in a single pass through the work product. This diffuses their focus, and fewer important defects and issues will be identified. Instead, each reviewer should make a first pass through the work product to get a general overview and understanding without looking for defects. This provides a context for further, more detailed review. Each reviewer then makes a second, detailed pass through the work product, documenting any identified defects. Each reviewer should then select one item from a common defect checklist, or one area of focus, and make a third pass through the work product, concentrating on reviewing just that one item/area. If there is time, each reviewer can select another checklist item or focus area and make a fourth pass, and so on. For example, in a requirements specification review, a reviewer could concentrate on making sure that all the requirements are finite in one pass and focus on evaluating the feasibility of each requirement in another pass. Making multiple passes through the work product is not considered rework (repeating work that is already done) because each pass looks at the work product from a different area of focus or emphasis.
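The multi-pass approach above can be written down as a small planning helper. This is a sketch only: the function name, the wording of the pass descriptions, and the idea of a fixed budget of passes are assumptions made for illustration.

```python
def plan_desk_check_passes(focus_items: list, passes_available: int) -> list:
    """Return an ordered list of pass descriptions for one reviewer.

    Passes 1 and 2 are always the overview pass and the detailed pass.
    Each remaining pass concentrates on a single checklist item or focus area.
    """
    plan = [
        "Pass 1: read for overview and context (no defect hunting)",
        "Pass 2: detailed review, documenting identified defects",
    ]
    focused_slots = max(passes_available - 2, 0)
    for number, item in enumerate(focus_items[:focused_slots], start=3):
        plan.append(f"Pass {number}: focus only on {item}")
    return plan


# Hypothetical focus areas for a requirements specification review.
for line in plan_desk_check_passes(
        ["finite requirements", "feasibility", "testability"],
        passes_available=4):
    print(line)
```

With time for four passes, the plan covers the overview pass, the detailed pass, and one focused pass each for finite requirements and feasibility. Testability is left for a later pass if time allows.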

One of the desk check techniques that reviewers can use on their second, detailed pass through a work product is mental execution. Using the mental execution technique, the reviewer follows the flow of each path or thread through the work product, instead of following the line-by-line order in which it is written. For example, in a design review, the reviewer might follow each logical control flow through the design. In the review of a set of installation instructions, the reviewer might follow the flow of installation steps. A code review example might be to select a set of input data and follow the data flow through the source code module.

In the test case review technique, the reviewer designs a set of test cases and uses those test cases to review the work product. This technique is closely associated with the mental execution technique since the reviewer usually does not have executable software, so they have to mentally execute their test cases. The very act of trying to write test cases can help identify ambiguities in the work product and issues with the product’s testability. By shifting from a “how does the product work” mentality to the “how can I break it” mentality of a tester, the reviewer may also identify areas that are “missing” from the work product (for example, requirements that have not been addressed, process alternatives or exceptions that are not implemented, missing error-handling code, or areas in the code where resources are not released properly). The test case review technique can be used for many different types of work products. For example, the reviewer can write test cases to a requirements specification, the architecture or component design element, source code module, installation instruction, the user manual, and even to test cases themselves.
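As a minimal sketch of the test case review technique, suppose a reviewer is desk checking a single, hypothetical requirement (it is not taken from this chapter): "Orders over $50 ship free; otherwise shipping costs $4.99." The reviewer writes the test cases down as data and marks the expected result as unknown wherever the requirement does not say what should happen. The requirement, the case list, and the function name are all assumptions made for illustration.

```python
# Hypothetical requirement under review (an assumption for this sketch):
#   "Orders over $50 ship free; otherwise shipping costs $4.99."

# Each case: (description, order_total, expected_fee).
# expected_fee is None when the requirement does not state the answer.
REVIEW_CASES = [
    ("typical small order", 20.00, 4.99),
    ("typical large order", 80.00, 0.00),
    ("exactly at the threshold", 50.00, 4.99),  # "over $50" excludes 50
    ("zero-value order", 0.00, None),
    ("negative total (refund?)", -5.00, None),
]


def open_questions(cases):
    """Return the descriptions of cases whose expected result is unspecified."""
    return [description for description, _total, expected in cases
            if expected is None]


if __name__ == "__main__":
    for question in open_questions(REVIEW_CASES):
        print("Ask the author about:", question)
```

The cases with no expected result are the ones that exposed a gap in the requirement, so they become questions or potential defects to report to the author.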

One of the real advantages of having a second person look at a work product is that person can see things that the author did not consider. However, it is much harder to review what is missing from the work product than to review what is in the work product. Checklists and brainstorming are two techniques that can aid in identifying things missing from work products. Checklists simply remind the reviewer to look for specific items, based on historic common problems in similar work products. If checklists do not exist, the reviewer(s) can brainstorm a list of things that might be missing based on the type of work product being reviewed. For example, for a requirements specification this list might include items such as:

- Missing requirements
- Assumptions that are not documented
- Users or other stakeholders that are not being considered
- Failure modes or error conditions that are not being handled
- Special circumstances, alternatives, or exceptions that exist but are not specified

The goal of any peer review is to find as many important defects as possible within the existing time and resource constraints. It is typically easier to find small, insignificant defects such as typos and grammar problems than it is to find the major problems in the work product. In fact, if the reviewer starts finding many little defects, it is easy to get distracted by them. The reviewer should focus on finding important defects. If they notice minor defects or typos they should document them but then return their focus to looking for the “big stuff.” If the reviewers just cannot resist, they should make one pass through the work product just to look for the small stuff and be done with it. This is why many organizations insist that all work products be spell/grammar-checked and/or run through a code analysis tool (for example, a compiler) to make sure that they are as clean as possible so reviewers can avoid wasting time finding defects that could be more efficiently found with a tool.

It is the computer age, and reviewers can take advantage of the tools that computers provide them. One of the tools that can be used in a desk check is the search function. If the reviewer can get an electronic copy of the work product, they can search for keywords. For example, words such as “all,” “usually,” “sometimes,” or “every” in a requirements specification may indicate areas that need further investigation for finiteness. After a defect is identified, the search function can also be used to help identify other occurrences of that same defect. For example, when this chapter was being written, one of the reviewers pointed out that the term “recorder” was being used in some places and the term “scribe” in others, to refer to the same inspection role. Since this might add a level of confusion, the search function was used to find all occurrences of the word “scribe” and change them to “recorder.” The moral here is do not search manually for things that the computer can find much more quickly and accurately.
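The keyword search described above can be sketched in a few lines of Python. This is only an illustration: the word list comes from the examples in the text, while the function name and the sample requirements are hypothetical.

```python
import re

# Words the text flags as possible signs of a requirement that is not finite.
# A real checklist would be tuned to the organization's own defect history.
SUSPECT_WORDS = ("all", "usually", "sometimes", "every")


def find_suspect_words(lines, words=SUSPECT_WORDS):
    """Return (line_number, word, line_text) for each whole-word match."""
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(word) for word in words) + r")\b",
        re.IGNORECASE,
    )
    hits = []
    for number, text in enumerate(lines, start=1):
        for match in pattern.finditer(text):
            hits.append((number, match.group(1).lower(), text.strip()))
    return hits


if __name__ == "__main__":
    sample = [
        "The system shall log every failed login attempt.",
        "Reports are usually generated within 5 seconds.",
        "The user shall be able to reset a password.",
    ]
    for number, word, text in find_suspect_words(sample):
        print(f"line {number}: '{word}' -> {text}")
```

Matching on word boundaries keeps words such as "overall" from being reported as "all". A hit is only a prompt for the reviewer to look more closely, not a defect in itself.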

During desk checking, the work product should be compared against its predecessor for completeness and consistency. A work product’s predecessor is the previous work product used as the basis for the creation of the work product being peer reviewed. Table 22.2 shows examples of work products and their predecessors.

Table 22.2. Work product predecessor examples.

| Work product being peer reviewed | Predecessor examples |
| --- | --- |
| Software requirements specification | System requirements specification, stakeholder requirements (user stories, use cases, stakeholder requirements specification), business requirements document, or marketing specification |
| Architectural (high-level) design | Software requirements specification |
| Component (detailed or low-level) design | Architectural (high-level) design |
| New source code module | Component (detailed or low-level) design |
| Modified source code module | Defect report, enhancement request, or modified component (detailed or low-level) design |
| Unit test cases | Source code module or component (detailed or low-level) design |
| System test cases | Software requirements specification |
| Software quality assurance (SQA) plan | Software quality assurance (SQA) standard processes, or required SQA plan template |
| Work instructions | Standard process documentation |
| Response to request for proposal | Request for proposal |
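One way to picture the completeness half of a predecessor comparison is as a set difference. The sketch below assumes that each item in the predecessor carries an identifier and that the work product records which identifiers it addresses. The identifiers and the function name are hypothetical, and a real comparison also needs a human judgment of consistency that no such check can supply.

```python
def compare_with_predecessor(predecessor_ids, traced_ids):
    """Compare predecessor identifiers with those a work product traces to.

    Returns a (missing, unexpected) pair of sorted lists:
      missing    - predecessor items the work product never addresses
      unexpected - items the work product cites that the predecessor lacks
    """
    predecessor = set(predecessor_ids)
    traced = set(traced_ids)
    return sorted(predecessor - traced), sorted(traced - predecessor)


if __name__ == "__main__":
    # Hypothetical identifiers for illustration only.
    srs_requirements = ["REQ-1", "REQ-2", "REQ-3"]
    design_traces = ["REQ-1", "REQ-3", "REQ-9"]
    missing, unexpected = compare_with_predecessor(srs_requirements, design_traces)
    print("Not addressed by the design:", missing)
    print("Not found in the predecessor:", unexpected)
```

Items in the first list point to possible omissions in the work product, and items in the second point to possible defects in either document.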

Types of Peer Reviews: Walk-Throughs

A walk-through is the process where one or more peers of a work product’s author meet with that author to review the work product as a team. A walk-through can be done to detect defects in the work product and/or to provide engineering analysis. Preparation before the walk-through meeting is less emphasized than it is in inspections. The effectiveness of the walk-through process depends not only on the skills of the individual reviewers and the amount of care and effort they invest in the review, but also on the synergy of the review team.

The formality used during the walk-through process can vary. An example of a very informal walk-through might be an author holding an impromptu “white-board” walk-through of an algorithm or other design element. In an informal walk-through there may be little or no preparation.

As illustrated in Figure 22.6, more-formal peer review techniques can also be applied to walk-throughs. In a more formal walk-through, preparation is done prior to the team meeting, typically through the use of desk checking. Preparation is usually left to the discretion of the individual reviewer and may range from little or no preparation to an in-depth study of the work product under review. During the walk-through meeting, the author presents the work product one section at a time and explains each section to the reviewers. The reviewers ask questions, make suggestions (engineering analysis), or report defects found. The author answers the questions about the work product. The recorder keeps a record of the discussion and any suggestions, open issues or defects identified. After the walk-through meeting, the recorder produces the minutes from the meeting and the author makes any required changes to the work product to incorporate suggestions, resolve issues and to correct defects.

While walk-throughs and inspections are both team-oriented peer review processes, there are significant differences between the two, as illustrated in Table 22.1. However, many of the tools and techniques that will be discussed in the inspection section can also be applied to walk-throughs depending on the amount of formality selected for the walk-through.

Figure 22.6. Formal walk-through process (example).

Figure 22.7. Inspection process steps.

Types of Peer Reviews: Inspections

An inspection is a very formal method of peer review where a team of peers, including the author, performs detailed preparation and then meets to examine a work product. The work product is inspected when the author thinks it is complete and ready for transition to the next phase or activity. The only focus of an inspection is on defect identification. Individual preparation using checklists and assigned roles is emphasized. Metrics are collected and used to determine compliance to the entry criteria for the inspection meeting, as well as for input into product or process improvement efforts. The inspection process consists of several distinct activities, as illustrated in Figure 22.7.

Inspection: Process and Roles

In an inspection, team members are assigned specific roles:

- The author is the original creator of the work product or the individual responsible for its maintenance. The author is responsible for initiating the inspection and works closely with the moderator throughout the inspection process. During the inspection meeting, the author acts as an inspector with the additional responsibility of answering questions about the work product. After the inspection meeting, the author works with other team members and/or stakeholders to close all open issues. The author is responsible for all required rework to the work product based on the inspection findings.
- The moderator, also called the inspection leader, is the coordinator and leader of the inspection, and is considered the “owner” of the process during the inspection. The moderator keeps the inspection team focused on the product and makes certain that the inspection remains “egoless.” The moderator must possess strong facilitation, coordination, and meeting management skills. The moderator should receive training on how to perform their duties, and on the details of the inspection process. The moderator has the ultimate responsibility for making sure that inspection metrics are collected and recorded in the inspection metrics database. The moderator handles inspection meeting logistics such as making reservations for meeting rooms and handling any special arrangements, for example, if a projector is needed for displaying sections of the work product or if the recorder needs access to a computer to record the meeting information directly into an inspection database.
- During the inspection meeting, the reader, also called the presenter, describes one section or part of the work product at a time to the other inspection team members and then opens that section up for discussion. This section-by-section paraphrasing has the added benefit of allowing the author to hear someone else’s interpretation of their work. Many times this interpretation leads to the author identifying additional defects during the meeting, when the reader’s interpretation differs from the author’s intended meaning. The reader’s “interpretation often reveals ambiguities, hidden assumptions, inadequate documentation, or style problems that hamper communications or are outright errors” (Wiegers 2002). The reader must have the appropriate mixture of good presentation skills, product technical familiarity, and strong organizational skills.
- The recorder, also called the scribe, is responsible for acting as the team’s official record keeper. This includes recording the preparation and meeting metrics, and all defects and open issues identified during the inspection meeting. Since the recorder is also acting as an inspector at the meeting, this person must be able to “multitask” and should have solid writing skills.
- All of the members of the inspection team, including the author, moderator, reader, and recorder, are inspectors. The primary responsibility of the inspectors is to identify defects in the work product being inspected. During the preparation phase, the inspectors use desk check techniques to review the work product. They identify and note defects, questions, and other comments. During the inspection meeting, the inspectors work as a synergistic team. They actively listen to the reader paraphrasing each part of the work product to determine if their personal interpretation differs from the reader’s. They ask questions, discuss issues, and report defects. Inspectors should continue to search for additional defects that were not logged prior to the meeting but that are discovered through the synergy of the team interactions.
- The observer role is optional in an inspection. The observer does not take an active role in the inspection but acts as a passive observer of the inspection activities. This may be done to learn about the work product or about the inspection process. It may also be done as part of an audit or other evaluation.

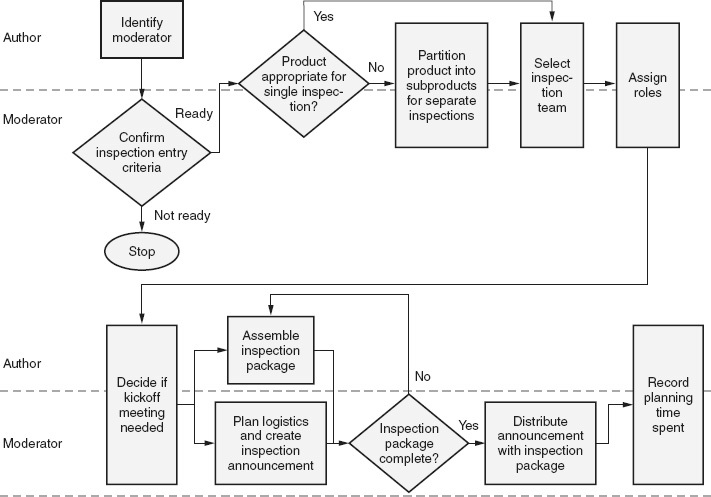

Inspection: Planning Step

When the author completes a work product and determines that it is ready for inspection, the author initiates the inspection process by identifying a moderator for the inspection. There is typically a group of trained moderators available to each project from which the author can choose. The moderator works with the author to plan the inspection. The detailed process for the planning step is illustrated in Figure 22.8.

During the planning step, the moderator verifies that the inspection entry criteria, as defined in the inspection process, are met before the inspection is officially started, and verifies that the work product is ready to be inspected. This may include doing a desk check of a sample of the work product to verify that it is of appropriate quality to continue. Any fundamental deficiencies should be removed from the work product before the inspection process begins.

Inspection meetings are limited to no more than two hours in length. If the work product is too large to be inspected in a two-hour meeting, the author and moderator partition the product into two or more sub-products and schedule separate inspections for each partition. The same inspection team should be used for each of these meetings, and a single kickoff meeting may be appropriate.

The moderator and author select appropriate individuals to participate in the inspection, and then assign roles. The inspection teams should be kept as small as possible and still meet the inspection objectives, including having the diversity needed to find different types of defects. A general rule of thumb is to have no more than seven inspectors per meeting.

Figure 22.8. Inspection planning step process.

The author and moderator determine whether or not a kickoff meeting is necessary. A kickoff meeting should be held, prior to the preparation step, if the inspectors need information about the important features, assumptions, background, and/or context of the work product to effectively prepare for the inspection, or if they need training on the inspection process. An alternative to holding a kickoff meeting is to include product overview information as part of the inspection package.

Decisions about when and where to hold the kickoff and inspection meetings should take the availability and location of the participants into consideration. No more than two inspection meetings should be scheduled per day for the same inspectors, with a long break between those two meetings. The inspection announcement should be delivered with the inspection package to all inspection team members far enough in advance of the meeting to allow for adequate preparation (typically a minimum of one day before the kickoff meeting and two to three days before the inspection meeting).
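The planning limits mentioned in this section (two-hour meetings, about seven inspectors, two meetings per day, and a package sent at least two days ahead) can be collected into a simple check that a moderator might run over a draft plan. This is a sketch under stated assumptions: the class, field, and function names are hypothetical, and the two-day lead time is the lower end of the "two to three days" given in the text.

```python
from dataclasses import dataclass
from datetime import date

MAX_MEETING_HOURS = 2.0    # inspection meetings are limited to two hours
MAX_INSPECTORS = 7         # rule of thumb for team size
MAX_MEETINGS_PER_DAY = 2   # for the same inspectors
MIN_LEAD_DAYS = 2          # lower end of "two to three days"


@dataclass
class InspectionPlan:
    meeting_hours: float
    inspectors: int
    meetings_that_day: int  # this team's inspection meetings on the meeting date
    package_sent: date
    meeting_date: date


def planning_concerns(plan):
    """Return a list of rule-of-thumb concerns for the moderator to consider."""
    concerns = []
    if plan.meeting_hours > MAX_MEETING_HOURS:
        concerns.append("meeting longer than two hours: partition the work product")
    if plan.inspectors > MAX_INSPECTORS:
        concerns.append("more than seven inspectors: consider a smaller team")
    if plan.meetings_that_day > MAX_MEETINGS_PER_DAY:
        concerns.append("more than two inspection meetings that day for this team")
    lead_days = (plan.meeting_date - plan.package_sent).days
    if lead_days < MIN_LEAD_DAYS:
        concerns.append("package sent less than two days before the meeting")
    return concerns


if __name__ == "__main__":
    draft = InspectionPlan(3.0, 8, 1, date(2024, 3, 4), date(2024, 3, 5))
    for concern in planning_concerns(draft):
        print("-", concern)
```

The check returns concerns rather than rejecting the plan, since most of these limits are rules of thumb that the moderator and author may adjust to suit the organization's defined inspection process.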

The primary deliverable from inspection planning is the inspection package, which is sent with the inspection announcement. This package, which can be sent in hard copy or electronically, includes all of the materials needed by the inspection team members to adequately prepare for the inspection, including:

- The work product being inspected
- Inspection forms (for recording defects, questions, and issues)
- Copies of or pointers to related work products, for example:
  - Predecessor work product(s)
  - Related standard(s)
  - Work product checklist(s)
  - Associated traceability matrix
- Product overview information, if a kickoff meeting is not being scheduled

Inspection: Kickoff Meeting Step

The primary objective of the kickoff meeting, also called the overview meeting, is education. Some members of the inspection team may not be familiar with the work product being inspected or how it fits into the overall software product or customer requirements. First, the author gives a brief overview of the work product being inspected and answers any questions from the other team members. This product briefing section of the kickoff meeting is used to bring the team members up to speed on the scope, purpose, important functions, context, assumptions, and background of the work product being inspected.

In addition to having each inspector evaluate the work product in general from their own perspective, it may be valuable to ask individual inspectors to focus on special areas or characteristics of the work product. For example, if security is an important aspect of the work product, an inspector could be assigned to specifically investigate that characteristic of the product. Assigning special focus areas has the benefit of making sure that someone is covering that area while at the same time removing potential redundancy from the preparation process. The author is typically the best person to identify whether special areas of focus are appropriate for the work product under inspection. Inspectors may also be assigned to specific checklist items as areas of focus. This is done to make certain that at least one inspector looks at each item on the checklist. These areas of focus and checklist items, as well as the reader and recorder roles, are assigned during the kickoff meeting.

Finally, if there are members of the inspection team who are not familiar with the inspection process, the kickoff meeting can also be used to provide remedial inspection process training to those individuals to make certain that all the team members understand what is expected from them. In this case, the moderator conducts an inspection process briefing as part of the kickoff meeting to confirm that all of the inspection team members have the same understanding of the inspection process, roles, and assignments.

Inspection: Individual Preparation Step

The real work of finding defects and issues in the work product begins with the preparation step. Utilizing the information supplied in the inspection package, each inspector evaluates the work product independently using desk check techniques, with the intent of identifying and documenting as many defects, issues, and questions as possible. Checklists are used during preparation to make certain that important items are investigated. However, inspectors should look outside the checklists for other defects as well. If an inspector was assigned one or more specific areas of focus or checklist items, they should evaluate the work product from that point of view, in addition to doing their general inspection preparation. If an inspector has questions that are critical enough to impact that inspector’s ability to prepare for the inspection, the author should be contacted. Otherwise, questions should be documented and brought to the inspection meeting.

Preparation is also essential for the reader, who must plan and lay out the schedule for conducting the meeting, including how the work product will be separated into individual, small sections for presentation and discussion. The reader must determine how to paraphrase each section of the work product, make notes to facilitate that paraphrasing during the meeting, and then practice their paraphrasing so that it can be accomplished smoothly and quickly during the inspection meeting. Since this preparation is time-consuming, the reader is not assigned any additional areas of focus.

A rule of thumb for preparation time is that the inspectors should spend from one to one and a half times as much time getting ready for the inspection meeting as the inspection meeting is expected to last. For example, if the meeting is planned to be two hours in duration, the inspectors should spend two to three hours preparing for that meeting.
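The rule of thumb above is simple arithmetic, and a small helper makes the range explicit. The function name is hypothetical, and the 1.0 and 1.5 multipliers are the ones stated in the text.

```python
def preparation_hours(meeting_hours):
    """Return the (low, high) preparation range for a planned meeting length.

    Applies the rule of thumb of 1 to 1.5 times the expected meeting length.
    """
    if meeting_hours <= 0:
        raise ValueError("meeting_hours must be positive")
    return meeting_hours * 1.0, meeting_hours * 1.5


if __name__ == "__main__":
    low, high = preparation_hours(2)
    print(f"Plan on {low:g} to {high:g} hours of preparation per inspector")
```

For a two-hour meeting this gives the two to three hours quoted in the example.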

Inspection: Inspection Meeting Step

The goal of the inspection meeting is to find and classify as many important defects as possible in the time allotted. As illustrated in Figure 22.9, the inspection meeting starts with the moderator requesting the preparation time, counts of defects by severity, and counts of questions/issues from each inspector, and the recorder records these items. The moderator uses this information to determine whether or not all of the members of the inspection team are ready to proceed. If one or more inspectors are unprepared for the meeting, the moderator reschedules the meeting for a later time or date, and the inspection process returns to the preparation step.

The moderator also uses this information to determine how to proceed with the inspection meeting. If there are a large number of major defects, questions, and issues to cover, the moderator may decide that only those major items will be brought up in the first round of discussion. If there is time, a second pass through the work product can be made to discuss minor defects. Remember, the goal is to find as many important defects as possible in the time allotted. If there are numerous major issues, the work product will probably require re-inspection anyway.

Figure 22.9. Inspection meeting step process.

After the moderator has reviewed any pertinent meeting procedures, the meeting is turned over to the reader. The moderator then assumes the role of an inspector, but with an eye toward moderating the meeting to make sure that the inspection process is followed, and that the inspection stays focused on the work product and not on the people. The reader begins with a call for global issues with the work product. Any issues discovered in the predecessor work products, standards, or checklists can also be reported at this time. The recorder should record these issues. After the meeting, the author should inform the appropriate responsible individuals of any identified defects in predecessor work products, standards, or checklists so they can be corrected. However, these defects are not recorded in the inspection log nor are they counted in the inspection defect count metrics.

The reader then paraphrases one small section of the work product at a time and calls for specific issues for that part of the work product. The inspectors ask questions, and report issues and potential defects. The author answers questions about the work product. Team discussion on each question, issue, or potential defect should be limited to answering the questions, discussing the issue, and identifying actual defects, and should not include discussion of possible solutions. The intent of the discussion is for the team to come to a consensus on the classification (nonissue, major or minor defect, or open issue) of each question, issue, or potential defect. If consensus cannot be reached on the classification within a reasonable period of time (less than two minutes), it is classified as an open issue. The recorder documents all open issues and the identified defects with their severities and types.
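The classification rule in the preceding paragraph can be sketched as a small function. This is one reading of the rule, not a prescribed implementation: the names are hypothetical, and the 120-second limit is taken from the "less than two minutes" guideline in the text.

```python
from enum import Enum


class Classification(Enum):
    NONISSUE = "nonissue"
    MAJOR_DEFECT = "major defect"
    MINOR_DEFECT = "minor defect"
    OPEN_ISSUE = "open issue"


CONSENSUS_LIMIT_SECONDS = 120  # "less than two minutes" in the text


def classify(consensus, discussion_seconds, limit=CONSENSUS_LIMIT_SECONDS):
    """Return the classification the recorder should log for one item.

    consensus is the Classification the team agreed on, or None if the
    team did not agree. Items without timely consensus become open issues.
    """
    if consensus is None or discussion_seconds >= limit:
        return Classification.OPEN_ISSUE
    return consensus


if __name__ == "__main__":
    print(classify(Classification.MAJOR_DEFECT, 45).value)
    print(classify(None, 120).value)
```

Logging an item as an open issue keeps the meeting moving; the item is then resolved during rework rather than debated in the meeting.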

After the last section of the work product has been discussed, the moderator resumes control of the meeting. If the meeting time has expired but all of the sections of the work product have not been covered, the inspection team schedules a second meeting to complete the inspection. If a second continuation meeting is needed, the moderator coordinates the logistics of that meeting. The recorder then reviews all of the defects and open issues. This is a verification step to confirm that everything has been recorded, and that the description of each item is adequately documented.

The inspection team then comes to consensus on the disposition of the work product. The work product dispositions include:

- Pass: If no major defects were identified, this disposition allows the author to make minor changes to correct minor issues without any required follow-up.
- Fail, author rework: The author performs the rework as necessary and that rework is verified by the moderator. (Note: based on the technical knowledge of the moderator, other members of the inspection team may be assigned to verify part or all of the rework.)
- Fail, re-inspect: There are enough important defects or open issues that the work product should be re-inspected after the rework is complete.
- Fail, reject: The work product has so many important defects or open issues that it is a candidate for reengineering.

The defect-logging portion of the meeting is now complete. The recorder notes the end time of the inspection meeting and the total effort spent (meeting duration × number of inspectors). As a final step in the inspection meeting process, the team spends the last few minutes of the meeting discussing suggestions for improving the inspection process. This step can provide useful information that can help continuously improve the inspection process. Examples of lessons learned might include:

- Improvement to inspection forms
- Improvement to the contents of the inspection package
- Items that should be added to the checklist or standards
- Defect types that need to be added or changed
- Effective techniques used during inspection that should be propagated to other inspections
- Ineffective techniques that need to be avoided in future inspections
- Systemic defect types found across multiple inspections that should be investigated
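The total-effort figure that the recorder notes at the end of the meeting (meeting duration multiplied by the number of inspectors) can be computed directly from the recorded start and end times. The function name and the sample times below are hypothetical.

```python
from datetime import datetime


def meeting_effort_hours(start, end, inspectors):
    """Return total meeting effort in person-hours.

    Computed as meeting duration multiplied by the number of inspectors.
    """
    if end < start:
        raise ValueError("end time is before start time")
    duration_hours = (end - start).total_seconds() / 3600
    return duration_hours * inspectors


if __name__ == "__main__":
    start = datetime(2024, 1, 8, 10, 0)
    end = datetime(2024, 1, 8, 11, 30)
    print(meeting_effort_hours(start, end, 5), "person-hours")
```

A 90-minute meeting with five inspectors therefore represents 7.5 person-hours of meeting effort, before any preparation time is added.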

Inspection: Post-Meeting Steps

There are three inspection post-meeting steps:

- Document minutes: After the meeting, the recorder documents and distributes the inspection meeting minutes.
- Rework: The payback for all the time spent in the other inspection activities comes during the rework phase when the quality of the work product is improved through the correction of all of the identified defects. The author is responsible for resolving all defects and documenting the corrective actions taken. The author also has primary responsibility for making certain that all open issues are handled during rework. The author, working with other inspection team member(s) and/or stakeholders as appropriate, either closes each open issue as resolved (if it is determined that no defect exists) or translates the open issue into one or more defect(s) and resolves those defects.
- Follow-up: The purpose of the follow-up step is to verify defect resolution and to confirm that other defects were not introduced during the correction process. Follow-up also acts as a final check to make certain that all open issues have been appropriately resolved. Depending on the work product disposition decided upon by the inspection team, the moderator may perform the follow-up step or a re-inspection may be required.