Aylish Wood

Where Shapes Come From (2016) is a recent work by Semiconductor, the artist duo Ruth Jarman and Joe Gerhardt. Using 3D-computer-generated (CG) animation software, they create the abstract shapes of lattice diagrams and animate the motion of atoms arrayed within these three-dimensional crystalline lattice structures.1 The CG elements sit against live-action footage filmed during a residency at the Mineral Sciences Laboratory at the Smithsonian Institution. The whole is accompanied by a soundtrack composed of three elements: a voice-over, ambient sound from the lab and seismic data of earthquake tremors converted into sound. As Semiconductor (2017) explain their work: ‘They depict interpretations of visual scientific forms associated with atomic structures, and the technologies which capture them [ … ] By combining these scientific processes, languages and products associated with matter formation in the context of the everyday, they become fantastical and strange encouraging us to consider how science translates nature and question our experiences of the physical world’. Semiconductor’s strategy for asking their audience to question our experiences of the physical world draws on experimental animation techniques. By combining 3D-animation of abstract shapes with live-action footage, and juxtaposing the animated events with a voice-over, they add layers of competing imagery to the live-action footage. Semiconductor thereby reveal gaps between artistic and scientific languages, avoiding any seamless integration of the two.

My interest in Where Shapes Come From lies in what it also reveals about digital materiality. Although an intangible thing, digital materiality is part and parcel of our everyday life. Any device that operates using digital technologies, Amazon’s Alexa for instance, has the capacity to alter the way we live in our spaces. With a voice-activated functionality relying on software, Alexa changes the active dimensions of a space so that touch and movement are no longer needed to switch things on or search for information; speech is enough. Physically, a location looks the same, but it now has a digital materiality founded on computation that facilitates the ways in which many users engage with Alexa. As digital devices become increasingly pervasive, and artists experiment with what they offer, there is much more to say about the digital materiality of their work. In design theory, Johanna Drucker has introduced the concept of performative digital materiality to explain digital objects by paying heed to their associations and connections with people, processes and cultural domains (Drucker 2013). In this essay, I take abstraction, a familiar term from discussions of experimental animation, and expand it via the idea of performative digital materiality. My aim is to offer an analytic tool connecting algorithms used by artists (and other kinds of image producers) to the technological and cultural systems within which they work.

Underpinning any computational work, whether experimental or not, is a process of abstraction. To illustrate, I begin by connecting the idea of abstraction in animation to its usage in computer science, and then establish a link to Drucker’s conceptualisation of performative digital materiality. With these connections in place, I draw comparisons between Where Shapes Come From and Category 4 Hurricane Matthew on October 2, 2016 (2017), a visualisation of Hurricane Matthew created by the Goddard Scientific Visualization Studio, part of NASA. Using 3D-animation, the visualisation depicts the complex cloud formation, winds and rain patterns associated with 48 hours of Hurricane Matthew as it traversed the Caribbean. Through this comparison I show that an artwork or visualisation’s performative digital materiality is context specific. Where Shapes Come From and Category 4 Hurricane Matthew each use data – seismic data in the case of the former and precipitation data for the latter – to drive the motion of CG elements in the animations. Consequently, while both pieces are overtly concerned with events taking place in physical environments, they also rely on algorithms to facilitate motion. Though they share this technique, tracing out their different associations and connections shows their very different performative materialities. The algorithm facilitating motion in Category 4 Hurricane Matthew is linked to consensus building across the different components behind data gathering and processing, whereas that used for Where Shapes Come From maintains the interrogative gap essential to the experimental animation’s engagement with scientific discourse.

Opening up abstraction

The first step of this essay is to move from abstraction to performative digital materiality. Both Where Shapes Come From and Category 4 Hurricane Matthew use visual abstractions as a mode of depiction. In Semiconductor’s work, the crystal lattices are abstract configurations of atoms, and in NASA’s animation colour coding is used to map rather than show rain density. Taken broadly, abstraction can mean various things, whether in relation to artistic movements, grammar, ways of thinking, mathematics and also computing. Despite the differences in detail across these domains, the word abstract denotes a similar condition, which is to do with idealised as opposed to concrete qualities. In animation studies, definitions of abstraction can be found in the work of both Maureen Furniss and Paul Wells. For Furniss, ‘abstraction describes the use of pure forms – a suggestion of a concept rather than an attempt to explicate it in real life terms’ (Furniss 1998, 5). This definition mobilises a distinction between an idealised version of something versus a particular version of that thing. Although referring to rhythm and movement, Paul Wells relies on a similar distinction when he states: ‘Abstract films are more concerned with rhythm and movement in their own right as opposed to the rhythm and movement of a particular character’ (Wells 1998, 43). Abstraction in these definitions is both a descriptor of a type of visual imagery and a conceptual process.

These definitions of abstraction offer avenues for thinking about imagery on the screen, but they do not say much about digital matters in any obvious way. The same distinction, between an idealised and concrete version of something, is also, however, relevant to computing. At first sight a computational definition of abstraction might seem to offer little to our interpretation of images, but once linked to performative materiality it becomes a conceptual model for thinking through how artists connect with processes and cultural domains through the technologies of animation software. Turning first to computer science, abstraction is understood as a process of filtering out, a setting aside of the characteristics of patterns not essential or relevant to the computational solution of a problem. Computer scientists Peter Denning and Craig Martell draw parallels between abstraction in computing and abstraction as a mode of thought: ‘Abstraction is one of the fundamental powers of the human brain. By bringing out the essence and suppressing detail, an abstraction offers a simple set of operations that apply to all cases’ (Denning and Martell 2015, 208). The process of defining something through its essential characteristics often involves rule building that necessarily includes and excludes characteristics based on whether they are general rules. Denning and Martell add a further and very useful distinction when they compare the explanatory abstraction of classical science with abstraction in computing: ‘Abstractions in classical science are mostly explanatory – they define fundamental laws and describe how things work. Abstractions in computing do more: not only do they define computational objects, they also perform actions’ (208). Touch-sensitive screens, for instance, rely on abstractions. Rather than having to provide input as variable parameters, such as position, we simply touch the screen and an algorithm carries out an action.

There are two important points to open out further here: abstraction as rule building and as a performance of actions, with the latter especially helpful to thinking through performative digital materiality. Rule building, whether in complex or simple algorithms, relies on a series of simplifications, informed choices and assumptions about what is or is not an essential characteristic, and these are where cultural, social and political influences have the potential to come into play. When the process of rule-making is described as a pulling back from concrete examples, details about the wider situation in which a digital object operates or exists are left out. A statement such as ‘the essence of abstractions is preserving information that is relevant in a given context, and forgetting information that is irrelevant in that context’ (Guttag 2013, 43) suggests that while rule-making is embedded in technological circumstances, it remains aside from cultural ones. But, as software studies scholars point out, coding is not neutral (Mackenzie 2006; Chun 2011). Similarly, the implementation of code is not neutral either, but happens via interactions with systems, people and objects. The claim that code lacks neutrality is unlikely to surprise animation scholars; we are after all used to thinking through questions around the simplifications, choices and assumptions, or rule building, which inform and underlie the politics of representation when it comes to depictions of people and things on the screen. It is probably fair to say, though, that how we translate those kinds of insight into the world of coding and its implementation becomes less obvious when faced with the simulated rain patterns of Category 4 Hurricane Matthew or the crystal structures of Where Shapes Come From. Johanna Drucker’s approach to digital materiality as performative offers a conceptual scaffold to connect ideas about rule building with the images on-screen.
Through Drucker, adding back detail to the abstractions of algorithms means taking notice of the associations and connections active in an image-making process. When thought of in this way, algorithms remain connected to their cultural operations as well as their computational ones.

The important move made through performative digital materiality is that digital objects and their materiality are linked to what these objects do, or how they perform actions, in the world. Rather than only thinking in terms of what an algorithm is (a line of code, say), we can pay attention to the difference an algorithm makes to a situation. There is a useful conceptual echo between Denning and Martell’s view of computational abstractions performing an action and algorithms making a difference to a situation. The latter places actions in a wider context, in which a performed action is grasped and given meaning through a range of associations. For instance, when changing an input into an output, a computation can be understood as performing an action. When framing this act in terms of its digital materiality, the action is not limited to a specific set of operations. So, rather than simply saying an algorithm drives the motion of an animation, the wider context in which that algorithm is working becomes important too – how it makes a difference within a large network of collaborators working with high-end technologies or a duo of artists critiquing science from their perspective as experimental animators. Any performed action ripples outwards since software or an algorithm is part of a wider situation in which something takes place. An algorithm which drives motion performs the further actions of enabling consensus across a network or maintaining an artistic challenge. This perspective is informed in part by Paul M. Leonardi’s description of digital things: ‘When [ … ] researchers describe digital artifacts as having “material” properties, aspects, or features, we might safely say that what makes them “material” is that they provide capabilities that afford or constrain action’ (Leonardi 2010). Leonardi takes digital objects not only to be coded entities, but digital artefacts or objects which make a difference to activities in the world.
Their actions can facilitate activities or obstruct them; they are mediators in the sense meant by Bruno Latour (2007, 72). This perspective is also informed by Drucker, who likewise argues that digital materiality be associated with what something does. Introducing the additional word ‘performative’ to signal the doing of digital objects, Drucker says: ‘Performative materiality suggests that what something is has to be understood in terms of what it does, how it works within machinic, systemic, and cultural domains’ (emphases in original) (Drucker 2013). The final link between abstraction and algorithms is that algorithms are where abstractions become active in any given situation. As Ed Finn puts it: ‘The algorithm deploys concepts from the idealised space of computation in messy reality, implementing them in what I call “culture machines”: complex assemblages of abstractions, processes, and people’ (Finn 2017, 2).

In this section I have moved from familiar ideas about abstraction and experimental animation, to computational abstraction and performative digital materiality. At the heart of these different ways of thinking is the same distinction between an idealised and a concrete version of something. Where algorithms and their abstract thinking are often placed outside social, political and cultural concerns, performative digital materiality puts algorithms right back in the middle of things. Drawing out the performative digital materiality of Where Shapes Come From and Category 4 Hurricane Matthew involves tracing out the associations and connections surrounding the production of both pieces: through a focus on the ‘precipitation algorithm’ in the case of Category 4 Hurricane Matthew and, for Where Shapes Come From, on how Semiconductor use Autodesk 3ds Max. I should say upfront that what follows makes no attempt to go into the detail of the algorithms. Instead, I draw out how they perform as an active element within the creative process of each piece of work, part of the complex assemblage of abstractions, processes and people co-participating in a production.

Cloudy with digital materiality

Category 4 Hurricane Matthew is an animated visualisation showing the rainfall and wind generated by Hurricane Matthew on 2 October 2016 as it moved in from the Atlantic towards the Caribbean Islands before making landfall in South Carolina. It combines several kinds of visual depiction to create an apparently coherent whole. A proof of concept work for a larger scale piece, the visualisation can be broadly taken as an experiment. Even so, because its many elements are coherently combined, it does not share the same traditions as the experimental animation techniques discussed later for Where Shapes Come From. Comparing the two allows me to show how the differing contexts of the animations generate distinct performative materialities.

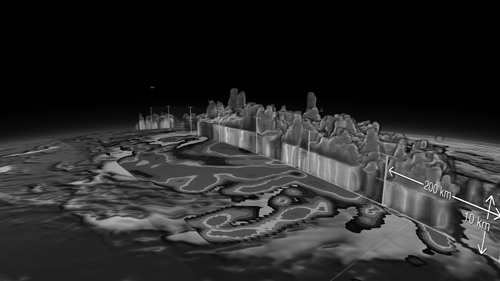

Starting with a satellite-type view of a hurricane swirl, Category 4 Hurricane Matthew transitions to a visualisation of the internal precipitation structure of the storm, and finally a static view of wind fields (as described in the text accompanying the visualisation: https://svs.gsfc.nasa.gov/4548). The internal precipitation is first shown as a 2D rain density map beneath the cloud mass of the hurricane, which then reconfigures into a 3D slice revealing the precipitation structure of the storm as a ‘rainfall curtain’ (see Figure 11.1). The 2D and 3D elements draw on abstract and diagrammatic conventions of colour coding to show rain density (reproduced here in greyscale gradations), whereas at first sight the hurricane cloud looks to be a copy of a natural event. Seeing the hurricane for what it is, a simulation of a complex entity crafted from a multiplicity of perspectives, opens up a place to introduce the digital materiality of the animation. The precipitation algorithm, which computed the data used in depicting the rainfall, is central to the visualisation’s digital materiality, and I approach the algorithm by looking at how it performs within a wider set of associations triggered by imagery of hurricanes.

FIGURE 11.1 The 2D rainfall pattern is in the foreground with the 3D rain curtain in the background of the image. Also visible are height and distance markers, which show the rain curtain to be over 10 km in height. Image: NASA’s Scientific Visualization Studio. Data provided by the joint NASA/JAXA GPM mission.

The image of the storm which opens Category 4 Hurricane Matthew is not an optical record, though as the animation begins it has the appearance of one. Since 1961, when the first images of hurricanes were recorded by television cameras on board Tiros III (Bandeen et al. 1964), the top-down perspective of satellite photos of hurricanes has entered into popular currency. The animation leverages that currency to visually establish the phenomenon, while also placing it in a specific geographical location. With the pattern of the hurricane secured through its recognisable shape and location, the animation shifts perspective by moving from the top-down view associated with the high altitude of a satellite, to a more parallel view. As the imagery of the rain curtain is drawn back, the 2D colour scale pattern of rainfall becomes visible. Once this happens, the image of Hurricane Matthew is clearly a simulation of the hurricane tracking across from the Atlantic Ocean to the Caribbean created using Autodesk Maya. With the digital status of the cloud obvious we can begin to think through the ways in which the imagery is, as Martin Kemp suggests, ‘both consistent and inconsistent with our normal ways of viewing things’ (Kemp 2006, 63). In Category 4 Hurricane Matthew, the consistency lies in our everyday familiarity with clouds as well as the swirl patterns of hurricanes. The inconsistency comes from the use of a 2D convention of colour scale to depict rainfall, and the more novel colour scaling of the 3D simulation of the rain curtain. By using the word inconsistent, Kemp is calling attention to how data, when used to generate the imagery, embodies features we would not normally see. Writing specifically about Landsat mapping, Kemp suggests soil moisture and the vigour of vegetation growth as things we would not normally see. 
For Category 4 Hurricane Matthew, equivalent features would encompass the amount of snow at the top of a cloud stack, rainfall on a wide area of ground and the wind patterns as they occur in and under the rainfall curtain.2 Given what I have said about digital materiality, we can go further than features only found in physical reality and consider how the visualisation embodies a performative digital materiality too.

First, I will briefly outline the monitoring system used to gather the data that drives the visualisation, giving insight into the assemblage of people and processes involved. As materials on NASA’s website, publicity information and papers published in science journals describe, rainfall data is captured by the Global Precipitation Measurement (GPM) Core Observatory, whose instruments collect observations that allow ‘scientists to better “see” inside clouds’.3 The GPM Core Observatory, part of a constellation of satellites, is described on a factsheet as follows:

The GPM Core Observatory will measure precipitation using two science instruments: the GPM Microwave Imager (GMI) and the Dual-frequency Precipitation Radar (DPR). The GMI will supply information on cloud structure and on the type (i.e., liquid or ice) of cloud particles. Data from the DPR will provide insights into the three-dimensional structure of precipitation, along with layer-by-layer estimates of the sizes of raindrops or snowflakes, within and below the cloud. Together these two instruments will provide a database of measurements against which other partner satellites’ microwave observations can be meaningfully compared and combined to make a global precipitation dataset.

(GPM factsheet)

There are many places to begin interrogating this system, and my focus here is on how the global precipitation data set referred to in the quotation is generated. Undertaking the comparisons and combinations necessary to make a data set entails integrating observations across the satellite network with ground-based data gathering, and converting numbers registered on observational devices into data about precipitation. Central to this process is the precipitation algorithm (Kidd et al. 2012). George Huffman, head of the multi-satellite product team for GPM, describes working with algorithms designed to relate the numbers generated by the satellite instruments to the rain, snow and other precipitation within the cloud and on the ground. He states: ‘Algorithms are mathematical processes used by computer programs developed by the science team that relate what the satellite instruments measure to rain, snow and other precipitation observed throughout the cloud and actually felt on the ground’ (Space Daily Writing Team 2014). Information is gathered from current precipitation satellites, routine ground validation efforts, and focused field campaigns to capture the variety of rainfall and snowfall in different parts of the world. Turning this data into information relies on the precipitation algorithm, whose role is to: ‘Intercalibrate, merge, and interpolate “all” satellite microwave precipitation estimates, together with microwave-calibrated infrared (IR) satellite estimates, precipitation gauge analyses, and potentially other precipitation estimators at fine time and space scales for the TRMM and GPM eras over the entire globe’ (Huffman 2015). Huffman’s detailing of the algorithm, with its reliance on a large and varied group of supporting personnel and a network of satellite and land-based sensors, makes evident that the simulation comprises a multiplicity of perspectives.
From this description, we can see how the precipitation algorithm sits at an intersection of spaces, and through exploring further the associations relayed via those intersections I show it has a performative materiality based around generating consensus.

The precipitation algorithm performs this materiality in complex ways. Enabling the cultural system of meteorological visualisations, it participates in reconfiguring global precipitation data into scales comparable to human perception and cognition. It also generates the data used to drive the simulation of the rainfall curtain and the colour coded precipitation patterns created in the 3D-animation software Autodesk Maya. The hurricane, neither copy nor optical record, is an explanation realised in a simulation. As Sherry Turkle notes: ‘Simulation makes itself easy to love and difficult to doubt. It translates the concrete materials of science, engineering, and design into compelling virtual objects that engage the body as well as the mind’ (Turkle 2009, 7). At the same time, by thinking in terms of abstraction and performative materiality, simulations are revealed as mathematical explanations. In her research into computer-generated visualisation practices in biological sciences, Annamaria Carusi argues: ‘Briefly we can say that if the visual images used in visualisations represent data, they do that by modelling rather than copying it, and what they represent is meant to be the mathematical ‘explanation’ of data rather than the appearance of data’ (Carusi 2011, 308). Outlining what is meant by a mathematical explanation, Carusi makes a distinction between modelling an explanation and enabling something to appear. I want to suggest that performative materiality emerges in the gap between explanation and appearance, complicating both terms so that neither explanations nor appearances occur without being weighted by associations of some kind. To explain, generating an explanation from raw data requires a series of manipulations in which the data is in some way translated, and that comes with both losses and gains in the available information. 
George Huffman, whom I quoted above explaining that the precipitation algorithm intercalibrates, merges and interpolates data from various sensors in the observatory network, gives a more everyday account of the algorithm in an interview for Space Daily. Riffing on the idea of raw data, he uses a metaphor of cooking soup, with vegetables standing in for data:

“They’re all vegetables, and so you have to wash them, peel them, take out the bad spots – that’s a really important step, since you don’t want your soup to taste bad,” Huffman said. “Maybe you sauté some things, and you boil other things, and you put it all together. When you get done you have to taste-test it to make sure you have the seasoning right. So each of those steps, in a mathematical sense, is what we have to do to take all the diverse sources of information and end up with a unified product, which the user finds to be useful.”

(Space Daily Writing Team 2014)

Huffman’s soup analogy effectively indicates the degree to which data is smoothed, edited and manipulated before it becomes the basis for a simulation, which entails running the data through another set of complex algorithms. For the latter, GPM data is processed to create the data swathe geometry, volumetric brick maps and textures used in the Autodesk Maya/Renderman pipeline of a 3D-visualisation process (email conversation with Alex Kekesi, 2017).

Across the varied processes described above, we can see the more abstract and idealised spaces of mathematical explanations and computation coming into contact with the practicalities of available computational power, conventions of good or bad data, perceptions of what constitutes an artefact, styles of visualisations, and the storytelling needs of the visualisation. Here we see algorithms as Ed Finn’s culture machines, as complex assemblages of abstraction, processes and people, a perspective resonating with Drucker’s performative digital materiality. Both descriptions understand digital entities – in this case, algorithms – as material things operating as part of a series of relations with people and systems. No longer only rule-based and theoretical descriptions of the essence of a problem, algorithms are a material-cultural entity where abstraction gets down and dirty and returns to the fold. We can go further: the computations of the precipitation algorithm perform not only by generating data within this assemblage, but also by generating consensus. Writing again about bioscience and visualisation, Annamaria Carusi claims: ‘Visualizations of computational simulations are common currency among the members of successful collaborations being used for analyses, in the context of workshops, and in publications’ (Carusi 2011, 308). As common currency amongst a successful collaboration, visualisations are based on consensus, a series of agreements operating across all levels of the project: the multinational team agreeing the terms of the project, the cross-disciplinary negotiations around conventions and visualisation practices, the calibrations of data, and agreements between data.
The performative materiality of the precipitation algorithm, through its associations and connections with the animation software, the people on the visualisation and geophysics team, and all the conventions of visualisation practices, emerges as it gives the appearance of a seamless integration based on creating a consensus of both people and data.

Between a rock and software

Exploring Where Shapes Come From reveals a different performative digital materiality. The work of Semiconductor has always been to shake up the kind of consensus seen around Category 4 Hurricane Matthew, to destabilise the certainty of the metaphors used when nature is constructed through scientific language. With Autodesk 3ds Max they create 3D-animated crystalline structures whose spatio-temporal scales sit in dialogue with the mineral science discourse of the live-action elements of the work. This interplay of spatio-temporal scales can be described as asymptotic: they are in dialogue but never converge.4 The performative digital materiality of Semiconductor’s use of 3ds Max intersects with and makes possible this asymptotic logic.

Instead of allowing language, whether that of words, pictures or moving images, to readily stand in for an accurate description of natural phenomena, Semiconductor disrupt scientific conventions by suggesting alternative possibilities. In the following combined quotation, Semiconductor say: [Joe Gerhardt:] ‘we try not to create metaphors, we are trying to actually allow you to feel things [ … ] [Ruth Jarman:] about the material, it’s always about the material whatever we’re doing’ (Semiconductor, in interview with the author). Semiconductor use animation to restage encounters with nature through an interpretive reimagining of scientific discourse, exposing how nature is mediated. When talking about what they mean by mediation, Gerhardt commented:

We were interested in how science educates our idea of what nature is and, of course, it’s one step away from the thing itself. And the science is then a mediation of nature itself and a lot of what we take for granted as being reality is actually an interpretation in scientific language.

(Semiconductor, in interview with the author)

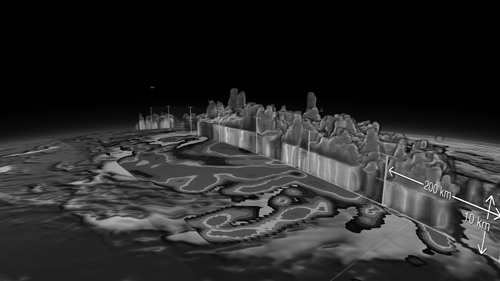

Gerhardt’s use of the word language here crosses between spoken language, the language associated with techniques of measurement, and visualisations of the results of those measurements. In Where Shapes Come From, these different facets of language are present and in conversation with Semiconductor’s interpretative approach. The spoken language of a mineralogist (Jeffrey Post) is heard on the soundtrack explaining crystal formation. The location for the live-action footage, the Smithsonian Mineralogy Laboratory, is full of pieces of equipment, and the image lingers for a while on the abstracted language of geological mapping. The animation of the crystals draws on diffraction patterns generated using the technique of X-ray crystallography (see Figure 11.2). Combined with a second soundtrack based on seismic data, the animation plays off these different languages. The artwork not only resists the kind of consensus found in Category 4 Hurricane Matthew, but actively challenges it too. This challenge is present in much of Semiconductor’s work, leading Lilly Husbands to describe it as a philosophical critique of scientific representation (Husbands 2013).

FIGURE 11.2 Still: Where Shapes Come From (Semiconductor, 2016). The atoms of a crystal are arrayed in a lattice structure based on a typical diffraction pattern generated using the technique of X-ray crystallography. Courtesy of Semiconductor.

When talking about Semiconductor’s aim to create an experience of nature, Jarman says:

We are always trying to get as close to the material as possible and that’s why we’ve ended up questioning science so much. We’re so interested in matter and how we experience matter. When you look at an amazing picture from outer space the Hubble space telescope has taken, outer space doesn’t look like that. The image is hundreds of images put together and loads of colour put on it, and everything is still quite inaccessible in terms of how you experience something. When it comes down to our work, we’re trying to create experiences of nature, but there’s always science getting in the way of the thing you are looking at.

(Semiconductor, interview with the author)

One of the ways Semiconductor create an experience of nature is by exploring the possibilities of expanding spatio-temporal scales through animation. They aim to make the invisible visible, which ‘sometimes requires a transformation in scale or another shift in the realms of human perception’ (Hinterwäldner 2014, 16). Commenting in an interview about their earlier film Magnetic Movie (2005), Semiconductor remark on the capacity of animation to enable their transformations of scale: ‘Animation was a useful tool for the way we wanted to explore man’s relationship to an experience of the natural environment, allowing us to manipulate and control time beyond human experience and scales larger and smaller than us’ (Selby 2009, 171). In Magnetic Movie Semiconductor created animations of magnetic waves and composited them within still photos of lab spaces, using CG animation to bring these invisible waves into visibility. Recently Paul Dourish has argued that ‘the material arrangements of information – how it is represented and how that shapes how it can be put to work – matters significantly for our experience of information and information systems’ (2017, 4). In Where Shapes Come From Semiconductor use Autodesk 3ds Max to generate 3D-digital crystals. How these shapes are put to work in challenging the conventions of scientific representation lies not only in how they look but also in how they enter into a dialogue with the other elements of scientific discourse in the animation. Through this process a version of materiality emerges, one which is experienced by the viewers of the artwork. The materiality that emerges is, however, as much digital as it is physical.

Semiconductor have consistently, though not exclusively, used the 3D-animation software 3ds Max to model CG shapes and create movement (for Earthworks (2016), Semiconductor worked with Houdini). Autodesk 3ds Max was originally released in 1990 as 3D Studio. In a 2010 interview with CGPress, Tom Hudson, one of the early developers of the software, says that he ‘wanted software that [he] could make movies with’ (Baker et al. 2010). This design aim has been privileged and built into the software, with toolsets for rendering and lighting continuing to evolve and improve at delivering photorealistic results. In the context of Semiconductor’s experimental work, the software design of 3ds Max is flexible enough to enable associations and connections other than those seen in animation for commercial sectors. Speaking of their early work with 3ds Max, Jarman recalls how they actively engaged in breaking down the conventional materiality of the then new software:

When we first went into 3ds Studio Max we would spend all the time turning things off. It was developed as a tool to make things look realistic, so we’d go in turning shininess off, everything off. Because we were doing experiments in the early stages, we were trying to understand the material nature of the software and how we could develop our own language [ … ] As we’ve progressed, I guess that hasn’t remained so important. It was a way of developing our own language, stripping everything back and we could build on it from there.

(Semiconductor, interview with the author)

As Jarman describes, in experiments such as A-Z of Noise (1999), Semiconductor set themselves against the primary visual language of the software by designing experiments to explore and expose both the limits and possibilities. This kind of experimentation connects Semiconductor to a growing body of artists, including Alan Warburton and Claudia Hart, who engage with a range of software to create images and challenge conventional usage. In his work Primitives (2016), Warburton explores the possibilities and limits of crowd simulation software, putting into practice a ‘close reading of software and its attendant aesthetic and political biases’ (Warburton 2016). Hart, in her mixed media installation The Dolls House (2016), combines 3D models with algorithmically generated patterns. Adapting the forms and the software normally used to create 3D shooter games, Hart describes how she challenges existing conventions: ‘By creating virtual images that are sensual but not pornographic within mechanised, clockwork depictions of the natural, I try to subvert earlier dichotomies of woman and nature pitted against a civilised, “scientific” and masculine world of technology’ (Claudia Hart, artist statement). Like Semiconductor, these experimental animators are artists who interrogate the language of the software not only to generate images but to also provoke a deeper understanding of what software contributes to creative practice.

In their early experiments, Semiconductor created associations and connections that generated their own language. The animation software has a performative materiality in this process too: it makes a difference, performing actions via the controllers designed as part of the software’s functionality and the possibilities for manipulation they offer to artists such as Semiconductor. The performance continues to emerge in both Semiconductor’s imagery of atoms and crystals and the audio-control of molecular motion. Gerhardt says one of the reasons Semiconductor continue to work with 3ds Max is its capacity to let them both expand the scales of the world they are exposing and build their story in the ways they want:

One of the reasons we work with 3ds Max still, and one of the first reasons we came to it as well, is you could technically make anything in it. You can build a world on any scale in it. When we first started out as artists and couldn’t have afforded a studio that was hugely beneficial situation. But you can also build the ways that you can tell your story. Like using certain connections of controllers and inputs. And that’s allowed us to develop a kind of signature in a way.

(Semiconductor, interview with the author)

This combination of scalar expansion and story building is seen in the modelling and movements of the crystals. With Where Shapes Come From, Semiconductor use the software not simply to depict 3D animated versions of crystal lattice structures based on the abstracted visual language of X-Ray patterns, but also to craft a relational sense of atoms coming into being as they move through that structure. Gerhardt observes that the relationality of the atoms is informed by ideas in quantum mechanics:

We play with the nature of animation in our work and so what is also happening is that these things that look like atoms are more like fields and the shapes are moving through the fields of atoms. It’s like quantum mechanics where a particle has a place and a vector at the same time. Things don’t come into existence until they have a relationship with something else. These shapes are actually moving through something that is there but is also not fixed. It is a play with those relationships.

(Semiconductor, interview with the author)

As 3ds Max is used to animate atoms, some of which seed to crystals and then grow, the spatial and temporal scales of the work proliferate. Jeff Post’s live-action audio uses the language of scientific information to talk about an atomic scale and a timescale of thousands of years, and the video shows a man grinding a stone, an old piece of matter in this timescale. All the while, CG atoms oscillate and transition across the fields, at times coalescing through relations that precipitate into crystalline forms. As someone used to looking for patterns and degrees of coherency, a facet readily recognisable in Category 4 Hurricane Matthew, I find it reasonable to try to see these digital transformations of scale as a new ordering, an imagined spatio-temporal congruence where the microscopic has its own seamless system set against the macro-scale of a lab. But that is not the case, as neither the atomic oscillations nor the growing crystals are of the same order of scaled space or time. Consequently, CG adds competing scales to the flow of images in Where Shapes Come From. As a visualization, Category 4 Hurricane Matthew presented a consensus around multiple perspectives. In contrast, Semiconductor bring multiple perspectives into proximity through different audio-visual elements, and then maintain a gap between them. The relations across the audio-visual elements are asymptotic, coming close enough for dialogue but never converging to a singular point or perspective.

The spatio-temporal complexity of imagery in Where Shapes Come From always holds a gap in place, and the way Semiconductor utilise seismic data brings another dimension into the (audio) mix. Semiconductor have used seismic data in several of their artworks, including Worlds in the Making (2011), The Shaping Grows (2012), and Earthworks (2016), and describe sound as a sculptural tool through which to add time and motion to microscopic worlds (Semiconductor, artists’ statement). Of using seismic data Jarman says: ‘when we first started working with seismic data we saw it as a tool for revealing events that took place over geological time frames long time ago’. Gerhardt adds: ‘When you listen to the seismic data you feel what’s actually happening in the earth. It sounds like what it is. It’s not a metaphor of something else’ (Semiconductor, interview with the author). In Where Shapes Come From, the audio track created using seismic data from the Mariana Trench sounds like the earth creaking and cracking as it subtly shifts deep beneath our feet. It is tempting to hear this audio track as only bringing a more direct experience of nature into the work, when in fact it also introduces another dimension of digital materiality. When used as procedural software, 3ds Max can be programmed through its controllers, essentially mathematically defined algorithms that allow the timing of movements in an animation to be automated. Seismic sound, converted back into a waveform, can be input into an audio controller that adds motion to specific parameters in the models of lattices and crystals: the motion of a particular atom, for instance. Through this process, the audio and the visual become inseparable since sound sculpts motion. As Gerhardt explains, without further adjustment to the motion, this would quickly look rather repetitious and so a noise controller is used to add randomness to the motion:

A lot of the time, say with matrices and lattices, if everything just moved up and down in the z-axis, then it wouldn’t be very interesting. We have to then transfer that one-dimensional or rather two-dimensional wave, you know time and axis, into movement of noise so that the sound then goes into a noise controller that would then affect all the different points in different random ways so that the amplification of the noise is what’s happening. It allows variation, everything is moving in a different way.

(Semiconductor, interview with the author)

The underlying motion remains based on the seismic waveform, but the detail of the motion for different points within a model is randomised, creating a wave-like motion punctuated by jumps and rotations.
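The process Gerhardt describes can be loosely illustrated outside 3ds Max. The following Python sketch is an assumption-laden toy, not Semiconductor's setup and not the 3ds Max controller API: the 'seismic' trace is synthetic, the lattice is a small cubic grid, and the names synthetic_waveform, make_lattice, animate and strength are hypothetical. It shows only the general idea that one shared waveform can set the size of the motion while a per-point random direction stops every point moving up and down together.

```python
import math
import random


def synthetic_waveform(n_samples, seed=0):
    """Stand-in for a seismic trace (assumption): a decaying wobble plus jitter."""
    rng = random.Random(seed)
    return [
        math.exp(-t / n_samples) * math.sin(2 * math.pi * 3 * t / n_samples)
        + 0.1 * rng.uniform(-1.0, 1.0)
        for t in range(n_samples)
    ]


def make_lattice(size):
    """Points of a simple cubic lattice, used here as a toy crystal model."""
    return [(x, y, z) for x in range(size) for y in range(size) for z in range(size)]


def random_unit_vector(rng):
    """A random direction in 3D (a normalised Gaussian sample)."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        length = math.sqrt(sum(c * c for c in v))
        if length > 1e-9:
            return [c / length for c in v]


def animate(lattice, waveform, strength=0.25, seed=1):
    """Return one list of displaced points per waveform sample.

    Every point shares the same driving amplitude (the waveform sample),
    but each point is pushed along its own random direction, so the
    lattice does not simply bob along a single axis.
    """
    rng = random.Random(seed)
    directions = [random_unit_vector(rng) for _ in lattice]
    frames = []
    for amplitude in waveform:
        frame = [
            tuple(p[i] + strength * amplitude * d[i] for i in range(3))
            for p, d in zip(lattice, directions)
        ]
        frames.append(frame)
    return frames


# 27 lattice points animated over 48 waveform samples.
frames = animate(make_lattice(3), synthetic_waveform(48))
```

In this toy version the waveform decides how far each point moves at a given moment, and the random directions decide where it goes, which is one simple way of reading the division of labour between the audio input and the added randomness.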

The complexity of the mixed materiality of physical sound and algorithmically generated noise is intriguing, and another place where performative digital materiality emerges. Seismic sound is used to sculpt motion, suggesting an experience closer to a natural phenomenon. At the same time, a digital element literally comes into the picture through the noise controller. The algorithm of the noise controller in 3ds Max creates Perlin noise, designed to reduce the unnatural smoothness of CG elements by imitating the appearance of texture and motion in nature. By creating random movement at points partly described by the seismic waveform, another asymptotic relationship becomes active in the animation. The gap runs through the materiality of the artwork, and via this gap performative materiality emerges. Every point of motion in an atomic array or a growing crystal has the possibility of being a combination of seismic waveform and a randomly defined point. In the flow of the animation, the origin of motion cannot be said to be either physical or computational; it is both. Like the counterpoint between Jeff Post’s audio track and the different spatio-temporal scales introduced by the CG animated atoms and crystals, these two drivers of motion can never coincide. Through Where Shapes Come From, Semiconductor challenge their audience to rethink their understanding of the world and its material nature. They do this not only by posing questions about how nature is mediated by scientific language, but also through the emergent performative digital materiality of 3ds Max and the Perlin noise algorithm.
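For readers unfamiliar with Perlin noise, the general idea of gradient noise can be sketched in a few lines of Python. This is a minimal one-dimensional illustration under stated assumptions, not the implementation inside 3ds Max: the function names fade, make_gradients and gradient_noise are hypothetical, and the random slopes are generated with Python's standard library rather than Perlin's permutation table. Each whole-number position is given a random slope, and values in between are blended with a smoothing curve, which yields variation that is random yet continuous.

```python
import math
import random


def fade(t):
    """Smoothing curve 6t^5 - 15t^4 + 10t^3, which eases in and out of each cell."""
    return t * t * t * (t * (t * 6 - 15) + 10)


def make_gradients(count, seed=0):
    """One pseudo-random slope in [-1, 1] per integer grid point (assumption)."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(count)]


def gradient_noise(x, gradients):
    """1D gradient noise: blend the contributions of the two nearest grid points."""
    i0 = math.floor(x)
    t = x - i0
    g0 = gradients[i0 % len(gradients)]
    g1 = gradients[(i0 + 1) % len(gradients)]
    left = g0 * t          # contribution of the grid point to the left
    right = g1 * (t - 1)   # contribution of the grid point to the right
    return left + fade(t) * (right - left)


# Sample the noise at small steps to obtain a smooth but irregular curve.
gradients = make_gradients(16)
samples = [gradient_noise(k * 0.1, gradients) for k in range(50)]
```

Neighbouring samples stay close to one another, unlike independent random numbers, which is why noise of this kind is commonly used to imitate texture and motion in nature.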

Conclusion

With digital techniques increasingly accessible, artists are able to create experimental work using software, and in doing so they often explore and push against the materiality it offers. Since the materiality of software is intangible, expanding with any depth on this idea has not been an easy matter. In this essay, I propose a way forward. Using two case studies, one an experimental animation and the other a proof of concept visualisation, I have taken Johanna Drucker’s idea of performative digital materiality and linked it to the work of algorithms via the idea of abstraction. This linkage relies on excavating the complex assemblage of abstractions, processes and the people involved in making animations. By comparing Where Shapes Come From and Category 4 Hurricane Matthew I have shown performative digital materialities to be concrete: they emerge in specific situations. Placing software and algorithms in their situation allows us to see two things: how the material arrangements of information alter our experience of information, and how algorithms are active in the cultural politics in which those arrangements evolve. Finally, returning to the title of this paper, where do shapes come from? Shapes happen at the intersections of complex assemblages, in the relations between the materials and technologies with which they are conceived, such as CG software, the histories and conventions of a discipline (say, animation, geometry or art history), in arenas of production or debate, and through the imaginations of people. As some or all of these happen together, the things we call shapes emerge.

Notes

1 Atoms in a crystalline structure form a regular repeating pattern known as a crystalline lattice. A lattice diagram depicts the repeating pattern in 2D or 3D. Semiconductor create 3D lattice diagrams.

2 A cloud stack is a term used to describe storm clouds that rise to dramatic heights.

3 GPM: ‘Global Precipitation Measurement,’ accessed 11 July 2017, https://eospso.nasa.gov/missions/global-precipitation-measurement-core-observatory.

4 An asymptote is a mathematical term used to describe a straight line that continually approaches a given curve but does not meet it at any finite distance. I use it here more metaphorically to describe visual and audio imageries that come close but never resolve into a single coherent whole.

References

Baker, Dave, Neil Blevins, Pablo Hadis, and Scott Kirvan. ‘The History of 3D Studio.’ CGPress (2010). Accessed July 11 2017, http://cgpress.org/archives/cgarticles/the_history_of_3d_studio.

Bandeen, W.R., V. Kunde, W. Nordberg, and H.P. Thompson. ‘Tiros III Meteorological Satellite Radiation Observations of a Tropical Hurricane.’ Tellus XVI (1964): 481–502.

Carusi, Annamaria. ‘Scientific Visualization and Aesthetic Grounds for Trust.’ Ethics and Information Technology 10 (2008): 243–254.

Carusi, Annamaria. ‘Computational Biology and the Limits of Shared Vision.’ Perspectives on Science 19, no. 3 (2011): 300–336.

Chun, Wendy H.K. Programmed Visions: Software and Memory. Cambridge, Massachusetts: The MIT Press, 2011.

Denning, Peter J., and Craig H. Martell. Great Principles of Computing. Cambridge, Massachusetts: The MIT Press, 2015.

Dourish, Paul. The Stuff of Bits: an Essay on the Materialities of Information. Cambridge, Massachusetts: The MIT Press, 2017.

Drucker, Johanna. ‘Performative Materiality and Theoretical Approaches to the Interface.’ Digital Humanities Quarterly 7, no.1 (2013). Accessed July 11 2017, www.digitalhumanities.org/dhq/vol/7/1/000143/000143.html.

Finn, Ed. What Algorithms Want: Imagination in the Age of Computing. Cambridge, Massachusetts: The MIT Press, 2017.

Furniss, Maureen. Art in Motion: Animation Aesthetics. Barnet: John Libbey Publishing, 1998.

Goddard Science Visualization Studio. Category 4 Hurricane Matthew on October 2, 2016. US, 2017. Video.

GPM: Global Precipitation Measurement. Accessed July 11 2017, https://eospso.nasa.gov/missions/global-precipitation-measurement-core-observatory.

Guttag, John V. Introduction to Computation and Programming Using Python. Cambridge, Massachusetts: The MIT Press, 2013.

Hart, Claudia. The Dolls House. US, 2016. Film.

Hinterwäldner, Inge. ‘Semiconductor’s Landscapes as Sound-Sculptured Time-Based Visualizations.’ Technoetic Arts: A Journal of Speculative Research 12, no.1 (2014): 15–38.

Huffman, George. ‘NASA Global Precipitation Measurement (GPM) Integrated Multi satellite Retrievals for GPM (IMERG).’ NASA (2015). Accessed July 2017, https://pmm.nasa.gov/sites/default/files/document_files/IMERG_ATBD_V4.5.pdf.

Husbands, Lilly. ‘The Meta-Physics of Data: Philosophical Science in Semiconductor’s Animated Videos.’ Moving Image Review and Art Journal 2, no. 2 (2013): 198–212.

Kemp, Martin. Seen|Unseen: Art, Science, and Intuition from Leonardo to the Hubble Telescope. Oxford: Oxford University Press, 2006.

Kidd, Chris, Erin Dawkins, and George Huffman. ‘Comparison of Precipitation Derived from ECMWF Operation Forecast Model and Satellite Precipitation Datasets.’ Journal of Hydrometeorology 14 (2012): 1463–1482.

Latour, Bruno. Reassembling the Social: An Introduction to Actor Network Theory. Oxford: Oxford University Press, 2007.

Leonardi, Paul M. ‘Digital Materiality? How Artefacts without Matter, Matter.’ First Monday 15, no. 6 (2010). Accessed July 11 2017, http://journals.uic.edu/ojs/index.php/fm/article/view/3036/2567.

Mackenzie, Adrian. Cutting Code: Software and Sociality. New York: Peter Lang, 2006.

Semiconductor. A-Z of Noise. UK, 1999. Film.

_____. Magnetic Movie. UK, 2005. Film.

_____. ‘Magnetic Movie.’ In Animation in Process. Edited by Andrew Selby, London: Laurence King Publishing, 2009, 170–175.

_____. Worlds in the Making. UK, 2011. Film.

_____. The Shaping Grows. UK, 2012. Film.

_____. Earthworks. UK, 2016. Film.

_____. Where Shapes Come From. UK, 2016. Film.

Space Daily Writing Team. ‘GPM Mission’s How to Guide for Making Global Rain Maps.’ Space Daily (2014). Accessed July 11 2017, www.spacedaily.com/reports/GPM_Missions_How_to_Guide_for_Making_Global_Rain_Maps_999.html.

Turkle, Sherry. Simulation and Its Discontents. Cambridge, Massachusetts: The MIT Press, 2009.

Warburton, Alan. Primitives. UK, 2016. Film.

Warburton, Alan. ‘Spectacle, Speculation, Spam.’ Video essay (2016). Accessed January 3 2018, http://alanwarburton.co.uk/spectacle-speculation-spam/.

Wells, Paul. Understanding Animation. London: Routledge, 1998.