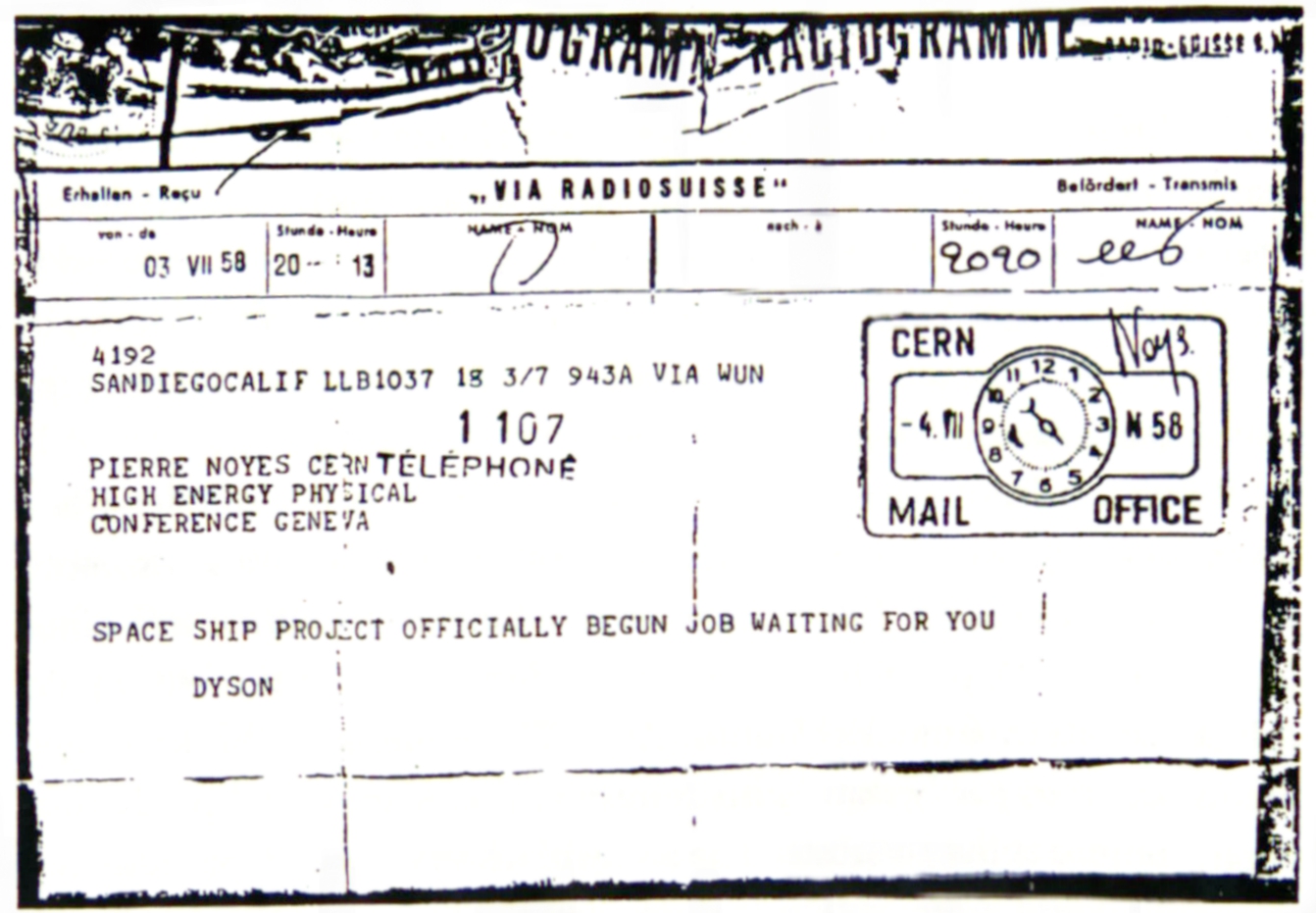

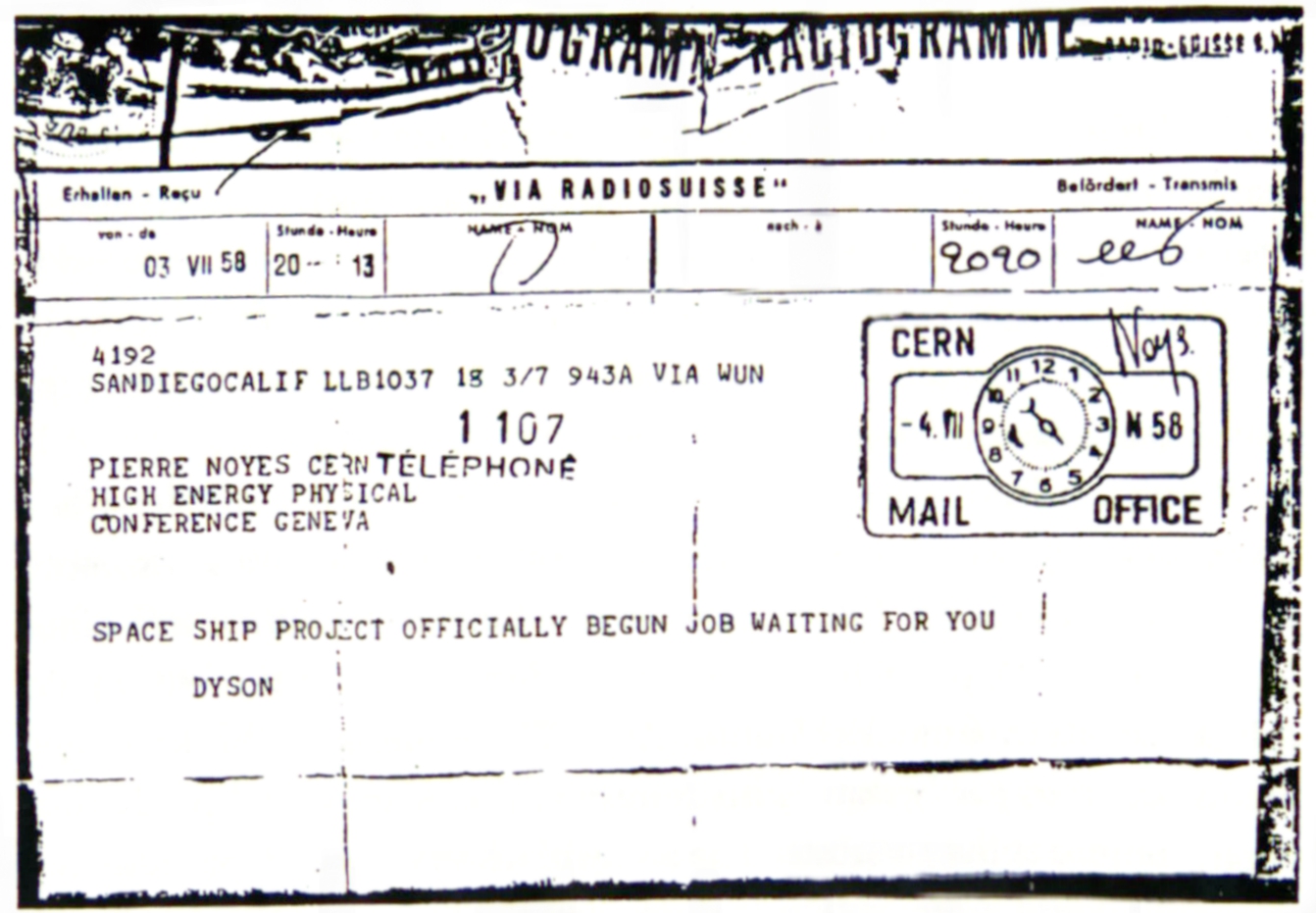

Telegram from Freeman Dyson to Pierre Noyes, July 3, 1958.

"These atomic bombs which science burst upon the world that night were strange even to the men who used them," wrote H. G. Wells in The World Set Free, a prophetic novel appearing at the dawn of World War I. Wells envisioned a future transformed by atomic energy, but feared that the lack of a requisite transformation of human nature would lead to the "Last War"—the one we still refer to, hoping that we have avoided it, as World War III. "The Central European bombs were the same, except that they were larger," Wells explained. "Nothing could have been more obvious to the people of the early twentieth century than the rapidity with which war was becoming impossible. And as certainly they did not see it. They did not see it until the atomic bombs burst in their fumbling hands.... Before the last war began it was a matter of common knowledge that a man could carry about in a handbag an amount of latent energy sufficient to wreck half a city... and yet the world still, as the Americans used to phrase it, 'fooled around' with the paraphernalia and pretensions of war."[14]

Nuclear fission was unknown in 1914. Wells's atomic bombs, releasing the energy known to fuel the sun, were closer to fusion (hydrogen) than to fission (uranium or plutonium) bombs. Orion would have been powered by small but otherwise conventional fission bombs. Before leaving General Atomic in September 1959, Freeman Dyson did give an informal talk exploring the limits of Orion, taking as an example a hydrogen-bomb driven interstellar ship that "could get a colony of several thousand people to Alpha Centauri, about 4 light years away, in about 150 years." Only one problem: it would require 25 million hydrogen bombs to get there—and 25 million more bombs if you wanted to stop.[15]

Project Orion's dreams of large-scale transport of passengers and freight around the solar system were, however, fueled by the expectation that small, clean, fission-free or extremely low-fission bombs would become available by the time fleets of bomb-propelled spaceships began to fly. Although small fusion bombs never materialized, the origins and development of Orion were intimately related to the origins and development of the hydrogen bomb. Fusion was in the air in 1956. Early that spring, my father and I were walking home from his office at the Institute for Advanced Study in Princeton, New Jersey, when I found a broken fan belt lying in the road. I asked him what it was. "It's a piece of the sun," he said.

It is not surprising that my father, who had come to America in 1947 as a student of Hans Bethe, would view a fan belt not as a remnant of an automobile but as a remnant of the nearest star. It was Bethe, in 1938, who elucidated the carbon cycle that produces energy via the fusion of hydrogen into helium within the interior of stars. Other reactions in older stars produce the heavier elements resulting in the entirety of our material existence, from Earth's iron core to fan belts thrown by the side of a New Jersey road. "Stars have a life cycle much like animals," Bethe explained when accepting the Nobel Prize. "They get born, they grow, they go through a definite internal development, and finally they die, to give back the material of which they are made so that new stars may live."[16]

"When Bethe's fundamental paper on the carbon cycle nuclear reactions appeared in 1939," explained Stan Ulam, "few, if any, could have guessed or imagined that within a very few years such reactions would be produced on Earth." At least three years before the first fission bombs were exploded, it was recognized that this would produce, for an instant, temperatures and pressures more extreme than those within the interior of the sun. If suitable nuclear fuel were subjected to these temperatures and pressures, a very small sun might be brought into existence, which in the next instant, without the gravity that holds the sun together, would blow itself cataclysmically apart. Splitting the nuclei of heavy elements like uranium (Hiroshima) or plutonium (Nagasaki) releases tremendous energy, but fusing the nuclei of light elements like hydrogen or helium might release a thousand times as much energy or more. Both Moscow and Washington were afraid they might be the next target on the list.[17]

Such a thermonuclear or "hydrogen" bomb could burn deuterium, a stable and easily separated isotope of hydrogen that constitutes the cheapest fuel available on Earth. The actual cost of deuterium was classified until 1955. "The basic built-in characteristic of all existing weapons," noted Freeman in 1960, "is that it is relatively much cheaper to make a big bang than a small one." In 1950, the cost of adding a kiloton's worth of deuterium to a hydrogen bomb was about sixty cents.[18]
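To put "a kiloton's worth of deuterium" in perspective, here is a back-of-envelope sketch. It assumes complete, ideal burning of pure deuterium through the D-D chain, whose net result is commonly summarized as 6 D → 2 ⁴He + 2 p + 2 n + 43.2 MeV, and it uses the conventional definition of one kiloton of TNT as 4.184 × 10¹² joules. Real devices burn only a fraction of their fuel, so this is an idealized illustration, not a design figure.

```python
# Back-of-envelope: mass of deuterium whose complete fusion equals 1 kiloton of TNT.
# Assumes an ideal, complete D-D burn (net 6 D -> 2 He-4 + 2 p + 2 n + 43.2 MeV).

MEV_TO_J = 1.602176634e-13              # joules per MeV
ATOMIC_MASS_UNIT_KG = 1.66053906660e-27 # kilograms per atomic mass unit
DEUTERIUM_MASS_U = 2.014101778          # atomic mass of deuterium, in u
KILOTON_J = 4.184e12                    # conventional TNT-equivalent definition

def deuterium_grams_per_kiloton() -> float:
    energy_per_cycle_j = 43.2 * MEV_TO_J
    fuel_mass_per_cycle_kg = 6 * DEUTERIUM_MASS_U * ATOMIC_MASS_UNIT_KG
    energy_per_kg = energy_per_cycle_j / fuel_mass_per_cycle_kg  # roughly 3.4e14 J/kg
    return KILOTON_J / energy_per_kg * 1000.0                   # convert kg to g

if __name__ == "__main__":
    print(f"{deuterium_grams_per_kiloton():.1f} g of deuterium per kiloton")
```

Under these ideal assumptions the answer comes out at about a dozen grams, which makes it easier to see why the fuel cost per kiloton could be so small.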

Public debate over Truman's decision to fast-track the development of the hydrogen bomb, against J. Robert Oppenheimer's recommendations as chairman of the General Advisory Committee of the AEC, has eclipsed the preliminaries to these events. The first series of meetings on the prospects for fusion explosions had been held in Oppenheimer's office in Berkeley, early in the summer of 1942. "We were not bound by the known conditions in a given star but we were free within considerable limits to choose our own conditions. We were embarking on astrophysical engineering," remembers Edward Teller. "By the middle of the summer of 1942, we were all convinced that the job could be done and that it would be relatively easy... that the atomic bomb could be easily used for a stepping-stone toward a thermonuclear explosion, which we called a 'Super' bomb."[19]

During the Manhattan Project, a small group of physicists, spearheaded by Teller, kept working on the Super bomb, and according to Carson Mark, Hans Bethe's successor as director of Los Alamos's theoretical division, half the division's effort was devoted to the Super between 1946 and 1949. Members of the General Advisory Committee who at that time voiced opposition to its further development included not only Oppenheimer but also Enrico Fermi, Isidor Rabi, and James Conant. "Its use would involve a decision to slaughter a vast number of civilians," they concluded on October 30, 1949. "In determining not to proceed to develop the Super bomb, we see a unique opportunity of providing by example some limitations on the totality of war and thus of limiting the fear and arousing the hopes of mankind."[20]

When the decisive technical breakthrough, known as the Teller-Ulam invention, appeared in early 1951, the first meeting to discuss its implications, including both Teller and Bethe, was held in Oppenheimer's office in Princeton. Oppenheimer served as director of the Institute for Advanced Study from 1947 until 1966. The genesis of the hydrogen bomb occurred partly during his tenure at Los Alamos and partly during his tenure at the Institute. The irony of history is that Oppenheimer was vilified for his opposition to deploying the hydrogen bomb, after so actively nurturing the circumstances that led to its design. During his security hearings in 1954, even Oppenheimer acknowledged that the Teller-Ulam invention was irresistible because it was "technically so sweet."[21]

The Institute for Advanced Study, in Princeton, New Jersey, but not part of Princeton University, occupies 800 acres of fields and woodlands that until 1933 were still functioning as Olden Farm. Best known as the place where Albert Einstein spent his later years, it is less known for its contributions to digital computing and the hydrogen bomb. This was no coincidence. The Institute was also home to John von Neumann, who in November of 1945 persuaded the Institute trustees to break their rule of supporting only pure science and allow him to build what became the archetype of the modern digital computer, inoculating its 5,000 bytes of high-speed memory with the order codes and subroutines out of which the rudiments of an operating system and the beginnings of the software industry evolved. In 1950 and 1951, even before the machine was fully operational, von Neumann set it to work, once for as many as sixty days nonstop, on a series of calculations that led directly to the first thermonuclear bomb.


In an inconspicuous brick building, paid for by the AEC, at the edge of the Institute woods and directly across Olden Lane from what later became my father's office in Building E, 40,960 bits of high-speed random access memory flickered to life. This was not the superficial flickering of later computers equipped with banks of diagnostic indicator lights. What von Neumann termed the memory organ of the Institute computer consisted of forty cathode-ray "Williams tubes," each storing 1,024 bits of both data and instructions in a 32 × 32 array of charged spots whose state was read, written, and periodically refreshed by an electron beam scanning across its phosphorescent face. The resulting patterns, shifting 100,000 times per second, were the memory, completely unlike the cathode-ray tubes in later computers that merely display the contents of memory residing somewhere else. As the first shakedown runs of the new computer were executed, the digital revolution was ignited, but Teller's "Super" fizzled out.
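The arithmetic of the memory organ can be checked directly: forty tubes of 32 × 32 spots give 40 × 1,024 = 40,960 bits, or 5,120 eight-bit bytes. The toy model below assumes the commonly described IAS arrangement in which each 40-bit word is spread across all forty tubes, one bit per tube at the same spot, so that a whole word is read in parallel. The row-major mapping from address to spot and the names `WilliamsMemory`, `write_word`, and `read_word` are hypothetical illustrations; beam timing, regeneration, and the electronics are not modeled.

```python
# Toy model of a 40-tube Williams-tube memory, assuming bit-parallel storage:
# bit t of every word lives on tube t, at the spot selected by the word's address.

TUBES = 40           # one tube per bit of a 40-bit word
GRID = 32            # each tube holds a 32 x 32 array of charged spots
WORDS = GRID * GRID  # 1,024 addressable words

class WilliamsMemory:
    def __init__(self) -> None:
        # tubes[t][row][col] is the charge state (0 or 1) of one spot on tube t
        self.tubes = [[[0] * GRID for _ in range(GRID)] for _ in range(TUBES)]

    @staticmethod
    def _spot(address: int) -> tuple[int, int]:
        # Hypothetical row-major mapping from an address to a grid position
        if not 0 <= address < WORDS:
            raise IndexError("address out of range")
        return divmod(address, GRID)

    def write_word(self, address: int, word: int) -> None:
        if not 0 <= word < 2 ** TUBES:
            raise ValueError("word must fit in 40 bits")
        row, col = self._spot(address)
        for t in range(TUBES):
            self.tubes[t][row][col] = (word >> t) & 1  # bit t goes to tube t

    def read_word(self, address: int) -> int:
        row, col = self._spot(address)
        # Read the same spot on all forty tubes and reassemble the word
        return sum(self.tubes[t][row][col] << t for t in range(TUBES))

    def total_bits(self) -> int:
        return TUBES * GRID * GRID

if __name__ == "__main__":
    mem = WilliamsMemory()
    mem.write_word(517, 0x12_3456_789A)
    print(hex(mem.read_word(517)), mem.total_bits())
```

Spreading each word across the tubes, rather than filling one tube before moving to the next, is what lets a single scan position deliver all forty bits at once.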

The Super was, as Oppenheimer put it in 1949, "singularly proof against any form of experimental approach." How do you begin to build a hydrogen bomb, when you have no idea whether it is possible or not? You build it numerically, neutron by neutron and nanosecond by nanosecond, in the memory of a computer, first. Ulam, von Neumann, and Nick Metropolis developed the "Monte Carlo Method" of statistical approximation whereby a random sampling of events in an otherwise intractable branching process is followed through a series of representative slices in time, answering the otherwise incalculable question of whether a given configuration would go thermonuclear or not. Computers led to bombs, and bombs led to computers. Although kept largely secret, the push to develop the Super drove the initial development of the digital computers then adapted to other purposes by companies like IBM. Ralph Slutz, who worked with von Neumann on the Institute for Advanced Study computer project, remembers "a couple of people from Los Alamos" showing up as soon as the machine was provisionally operational, "with a program which they were terribly eager to run on the machine... starting at midnight, if we would let them have the time."[22]
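The Monte Carlo idea can be illustrated on a deliberately simple branching process. In the toy sketch below, each neutron in one time slice either causes a fission, with a hypothetical probability `p_fission`, releasing two or three new neutrons, or is lost. Sampling many such histories estimates the average number of offspring per neutron; a value above one means the population grows from slice to slice, and a value below one means it dies out. The parameters are invented, and the real calculations coupled neutron transport to hydrodynamics and radiation in ways this sketch does not attempt to represent.

```python
import random

def estimate_multiplication(p_fission: float, nu_choices=(2, 3),
                            histories: int = 100_000, seed: int = 1) -> float:
    """Average number of next-slice neutrons produced per neutron, by random sampling."""
    rng = random.Random(seed)
    offspring = 0
    for _ in range(histories):
        if rng.random() < p_fission:  # this neutron causes a fission
            offspring += rng.choice(nu_choices)
        # otherwise the neutron is absorbed or escapes and leaves no offspring
    return offspring / histories

def follow_population(p_fission: float, start: int = 1_000, slices: int = 10,
                      nu_choices=(2, 3), seed: int = 2) -> list[int]:
    """Follow one sampled chain of neutron generations through successive time slices."""
    rng = random.Random(seed)
    counts = [start]
    for _ in range(slices):
        current = counts[-1]
        # each neutron in this slice is sampled independently
        nxt = sum(rng.choice(nu_choices) for _ in range(current)
                  if rng.random() < p_fission)
        counts.append(nxt)
        if nxt == 0:  # the chain has died out
            break
    return counts

if __name__ == "__main__":
    for p in (0.3, 0.5):
        k = estimate_multiplication(p)
        print(f"p_fission={p}: k ~ {k:.3f}, chain {follow_population(p)}")
```

With two or three neutrons per fission the exact mean is 2.5 × `p_fission`, so the sampled estimates should land close to 0.75 and 1.25; the point of the method is that the same sampling still works when no such closed form exists.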

"As the results of the von Neumann-Evans calculation on the big electronic Princeton machine started to come in, they confirmed broadly what we had shown," recalls Ulam. "In spite of an initial, hopeful-looking 'flare-up,' the whole assembly started to cool down. Every few days Johnny would call in some results. 'Icicles are forming,' he would say." This evidence that the classical Super was a dead end started Ulam thinking. He soon came up with an alternative approach to achieving thermonuclear ignition whose ingenuity, elaborated by Edward Teller, was chilling to those who feared that an enemy might be thinking up a similar design.[23]

The result was the first full-scale fusion device, "Ivy Mike," exploded at Eniwetok Atoll in the Pacific on November 1, 1952. Mike consisted of an 82-ton tank of liquid deuterium, cooled to minus 250°C (about 23 K) and ignited by a TX-5 fission bomb. It yielded 10.4 megatons—almost one thousand Hiroshimas—and a fireball three miles across. The Teller-Ulam invention removed the entire island of Elugelab from the map. Mike's solid-fuel, room-temperature lithium deuteride successors ("Why buy a cow when powdered milk is so cheap?") were soon weaponized, with the help of the Air Force Special Weapons Center (AFSWC), into deliverable packages that could be carried by conventional bombers and eventually by the Atlas and Titan rockets that were already in the works. In November of 1955 the Soviet Union dropped a hydrogen bomb yielding 1.6 megatons from a Tupolev bomber, and the race for strategic thermonuclear weapons was on. As rockets grew larger and bombs grew smaller, both sides were pushing toward practical Intercontinental Ballistic Missiles, or ICBMs. Inaccurate guidance, limiting the effectiveness of ballistic missiles against hardened military targets, became less of an impediment as the radius of devastation went up. It was the threat of Soviet thermonuclear ICBMs that made the launch of the Soviet Sputniks prompt such an intense response. "Americans design better automobile tail fins, but we design the best intercontinental ballistic missiles," commented one Russian scientist upon the launch of Sputnik I.[24]

Childhood in the 1950s, enthused by dreams of space, was haunted by nightmares about hydrogen bombs. Sometimes my father went to Washington and came home visibly scared. Yet the architects of the H-bomb—people like Stan Ulam, Hans Bethe, Marshall Rosenbluth, and even Edward Teller—were as kind and likable as anyone else. Some, like Teller, were motivated by ideology and others were drawn to the subject simply because, to a physicist, the conditions produced by a thermonuclear explosion were just too interesting to resist. Freeman, a pacifist at the start of World War II, argued during the test-ban debate that "any country which renounces for itself the development of nuclear weapons, without certain knowledge that its adversaries have done the same, is likely to find itself in the position of the Polish Army in 1939, fighting tanks with horses."[25] He had not been involved with the development of atomic weapons, having spent the war as a theoretician at RAF Bomber Command, where he learned what conventional bombing could and could not do—and how a new idea, like radar, could tip the scales. "In 1940 we owed our skins to a small number of men who had persevered with the development of weapons through the years when this kind of work was unpopular," he wrote in 1958.[26]

Other physicists, who had helped to develop the A-bomb, thought the H-bomb was going too far. Leo Szilard, who brought the prospect of atomic weapons to Roosevelt's attention in 1939, summed up his thoughts twenty years later in The Voice of the Dolphins, a novella about disarmament and interspecies communication in which the dolphins succeed where physicists had failed. Oppenheimer, our neighbor in Princeton, did have a ghostlike quality, but this was the ghost of his broken spirit, not the ghost of "Death, the destroyer of worlds." When Freeman was invited to join Ted Taylor at General Atomic, he went to Oppenheimer to request a one-year leave. "He was sympathetic," noted Freeman, "and said he felt a certain nostalgia for the days in 1942."[27]

Despite occasional arguments in favor of first use of nuclear weapons, Los Alamos, Hiroshima, and Nagasaki produced a generation of peaceful weaponeers. Many who worked with large, thermonuclear weapons systems were more afraid of smaller, tactical nuclear weapons, because their limited power was deceptively tempting and more likely to be used. The details of how to build the small bombs that would have powered Orion remain secret, while the designs of large bombs are better known. RAND studies concluded, behind closed doors, that there could be no such thing as "limited" nuclear war. There was both a cold logic behind the doctrine of Mutual Assured Destruction and a hope that out of this madness a sense of shared humanity might emerge. Once you open Pandora's box, as Ted explains it, you have to keep going, because Hope emerges last. Thermonuclear weapons, while threatening Armageddon, may indeed, as their creators hoped, have prevented "the Last War" as envisioned by H. G. Wells in 1914.

Our only hope was fear. Just as we were building up huge stockpiles of tactical and strategic nuclear weapons and setting in place hair-trigger retaliatory mechanisms that appeared to be leading us from brinkmanship to use, along came the hydrogen bomb. Where kiloton-range weapons had plausible applications against military targets, megaton-range weapons are useful for threatening wholesale destruction of population centers and little else. The incinerations of Dresden and Hamburg by conventional bombing in World War II were explained as fortuitous if unfortunate accidents; no one fully understood the fuel requirements and meteorological conditions that caused a firestorm to form. Thermonuclear weapons could deliver these results reliably every time. When the Soviet Union exploded a three-stage bomb yielding nearly 60 megatons on October 30, 1961, it was estimated that, for a moment, the energy flux exceeded 1 percent of the entire output of the sun. Enough was enough. It was this ability to produce temperatures of a hundred million degrees, and more, that kept the cold war cold.
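A rough check shows how brief "for a moment" has to be. Taking the figure of 60 megatons, the conventional 4.184 × 10¹⁵ joules per megaton, and the nominal solar luminosity of about 3.828 × 10²⁶ watts, the sketch below computes the longest release time for which the average power would still exceed 1 percent of the sun's output. It assumes, purely for simplicity, that the energy is released uniformly over that interval.

```python
MEGATON_J = 4.184e15           # joules per megaton of TNT (conventional definition)
SOLAR_LUMINOSITY_W = 3.828e26  # nominal solar luminosity, in watts

def max_release_time_s(yield_megatons: float, fraction_of_sun: float = 0.01) -> float:
    """Longest uniform release time at which mean power still exceeds the given fraction of solar output."""
    energy_j = yield_megatons * MEGATON_J
    threshold_w = fraction_of_sun * SOLAR_LUMINOSITY_W
    return energy_j / threshold_w

if __name__ == "__main__":
    t = max_release_time_s(60)
    print(f"{t * 1e9:.0f} ns")
```

The result is on the order of 66 nanoseconds, so the comparison with the sun holds only for the very briefest instant of the explosion.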

The genesis of Project Orion was both technically and politically interwoven with the development of hydrogen bombs. Practical two-stage thermonuclear devices required compact fission primaries to trigger thermonuclear explosions, producing a renaissance in fission-weapon design. Instead of simply exploding a stockpile weapon and measuring its yield, weaponeers began to study how the energy of a fission explosion could be directed and channeled and how that energy might be transformed. This opened two new fields of theoretical and technical expertise: bomb physics, concerned with what happens immediately; and weapons effects, concerned with what happens next. Project Orion attracted some of weaponeering's most creative minds. Ted Taylor had a gift for designing bombs and gathered around him those with gifts for predicting the results. It was people like Marshall Rosenbluth, Burt Freeman, Charles Loomis, and Harris Mayer, among others, who had the theoretical and computational tools, developed for thermonuclear weapons, to investigate the feasibility of Ted Taylor's plan.

"By the time we started work on Orion," explains Ted, "there were a whole lot of checked-out possibilities for tailoring the effects of the primary by fiddling around with the design so it would change the relative fraction of energy, in neutrons, in penetrating gammas, high-speed debris, and so on. We could pick out what we wanted, which was momentum stretched out over a long enough time so the pressures weren't intolerable but short enough so that the heat transfer wasn't intolerable either. We had a whole array of possible ways of designing the explosives to do that. This was toward what many people called the golden age of nuclear weaponeering. Orion would have gotten nowhere without some deep familiarity, mostly by Burt Freeman and Marshall Rosenbluth and then Bud Pyatt. Without their knowledge of how to do all that, the whole project would not have made sense."
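Ted's trade-off follows from the relation between impulse and force. If a pulse delivers momentum $\Delta p$ to a pusher plate of area $A$ over a time $\tau$, the average force and pressure are given below. The symbols are introduced here only for illustration and are not taken from Orion design documents.

$$
\bar{F} = \frac{\Delta p}{\tau}, \qquad \bar{P} = \frac{\bar{F}}{A} = \frac{\Delta p}{A\,\tau}
$$

Doubling $\tau$ halves the average pressure for the same push, which is why stretching out the momentum helps; the upper limit on $\tau$ comes from the other side of his remark, since a longer exposure gives the heat more time to reach the plate.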

Orion was the answer to the question, "What's next?" The physicists who spent the war at Los Alamos found it the most exciting time of their lives. After the end of the war there was a period of declining excitement, and then along came the hydrogen bomb. And then after the hydrogen bomb had been developed, once again the spirit of excitement of Los Alamos went into decline. "That was a time of exodus from Los Alamos," says Burt Freeman, who left Los Alamos to work on Orion with Ted. "The programs had come to a certain point and a lot of the original people that were involved with the development had left, and there was this feeling of a loss of mission there. I was looking for a new challenge, and reigniting that sense of excitement and team spirit was a big plus. Those were the good old days. And, I must say, I haven't experienced that sort of environment since."

Orion was the Teller-Ulam invention turned inside out. How to use the energy of a nuclear explosion to drive a spaceship has much in common with the problem of how to use the energy of a nuclear explosion to drive a thermonuclear reaction in a hydrogen bomb. The difficulty with the classical Super—detonating a large fission bomb next to a container of deuterium—was that the fuel would both be physically disrupted by the explosion and lose energy through radiation before it could reach the temperatures and pressures required to ignite. This was described as comparable to lighting a lump of coal with a match. Ulam's insight, delivered by Teller, was to channel the radiation produced by the primary into a cavity between a heavy, opaque outer radiation case and an inner cylindrical uranium "pusher" propelled violently inward by the pressure on its outer surface—much like Orion's pusher plate receiving a kick from a bomb. This shock compresses and heats the thermonuclear fuel, including a central "spark plug" of fissionable material, strongly enough to ignite. Since the radiation from the explosion of the primary travels much faster than the hydrodynamic shock wave, the secondary has a chance to go thermonuclear before being blown apart.

This brought the interactions between matter and radiation into a regime that was largely unexplored. What happens at the suddenly shocked surface? How energetically is the pusher propelled as a result? How transparent or how opaque to radiation is the intervening material at the time? These are exactly the questions whose answers would determine whether it is possible to propel a spaceship with nuclear bombs.