The resulting mass delusions may last indefinitely, and they may produce wasteful or even detrimental laws and policies.

—TIMUR KURAN AND CASS SUNSTEIN

SCIENCE AND SOCIETY

To begin to make sense of the conflicting scientific findings and disputes bedeviling issues associated with public health, we need to start by recognizing that discourse about health risks and health benefits generated by biomedicine is deeply embedded in society. In a democracy, scientific research ultimately depends on support of citizen taxpayers and public opinion, which influence political support in the Congress and the budgetary process. There are interactions between the scientific community and the public sphere, and the relationship between science and society is reciprocal and complex, operating on many levels. Different groups—scientists (who themselves fall into different disciplines with different points of view), regulators, health officials, lay advocates, journalists, businessmen, lawyers—are shaped by different backgrounds and motivated by different beliefs and agendas. Depending on the issue at hand, the interests of these parties may conflict or may align and reinforce one another.

There is a busy “traffic” in scientific findings regarding health between the various sectors of society.

Figure 3.1 gives a schematic representation of how the findings of scientific studies are embedded in society and can be viewed as being at the center of a set of concentric circles. The encircling rings represent different “audiences” or communities, each with its own “reading”—or, more often, multiple readings—of the scientific evidence. As one moves outward from the center, the technical information at the center needs to be “translated” and adjusted to a particular audience. Translation for the news media takes one form; translation for the regulatory community takes another. Thus there are effects that radiate outward from one ring to another, as well as effects that skip an intervening ring. The information from individual circles can also combine and have a joint effect. For example, the combination of scientific findings plus media reporting and resulting public concern can set regulatory action in motion. Additionally, the three innermost circles acting in concert can shape the narrative—or competing narratives—that takes hold in the society at large on a particular question. But there are also feedbacks from the outer rings toward the center. Scientists are keenly aware that their work has effects beyond the scientific community, and this awareness can influence what research they undertake and how they interpret their findings. These bidirectional interactions are indicated by the two-headed arrows.

REPORTING OF RISKS IN THE MEDIA

Media reporting of the results of scientific studies bearing on health risks exerts an influence in a class of its own. In recent years there has been an explosion in the number of media channels beyond newspapers, magazines, and television networks. The proliferation of websites, blogs, forums, “feeds,” and television channels provides a constant stream of “content” tailored to the interests and orientations of consumers. This in turn has led to an increasing fragmentation or “cantonization” of the news.

The staples of media reporting are stories that have the appearance of being relevant to our lives. Thus a scientific finding that suggests an association between an exposure alleged to affect the general population and a disease is more likely to gain news coverage than findings from a negative study. Many players in the media see it as their mission, as well as a business necessity, to awaken interest in “new developments.” They can’t afford to devote resources to putting a research finding in context or reporting on the long slog of research on obscure topics, because this does not attract most readers or viewers. Thus much media reporting is the antithesis of a critical assessment of what the reported finding may actually mean. The implicit justification for these news items is that they provide information that will be of interest and of use to the public. But many, if not most, of these reports are simply misleading or wrong and convey “information” that is of no conceivable use.

It is not that there is no high-quality reporting in the area of health and health risks. On the contrary, there are many outstanding sources of informed and critical reporting on these issues. However, there are two problems. First, this more solid and more thoughtful journalism exists on a different plane from the much more salient reports that make headlines, and it cannot compete with the latter. Second, those who avail themselves of these more informed sources of information do so because they are looking for reliable information on complex and difficult questions. Thus this type of journalism is, to a large extent, preaching to the converted.

When a health-related issue is catapulted into the spotlight, it takes on a special status. The French sociologist of science Bruno Latour has taken an extreme and controversial view of “scientific facts” that is useful by virtue of its emphasis on the interpenetration of science and society.1 Latour refers to objects of scientific study as hybrids and argues that there is no such thing as a pure scientific fact that can be dissociated from its social context and the technology used to study it. For example, according to Latour, a natural phenomenon, such as a virus, cannot be dissociated from the observations, concepts, and apparatus used to measure and characterize it. Beyond this, he argues that the way in which “facts” are disseminated into the public sphere is inseparable from the many associations that accrete around them. Whatever one ultimately thinks of Latour’s view of science, his concept of “hybrids” is useful and apt in reference to the way in which scientific findings concerning public health risks get shaped in the public arena.

For whatever reasons, we tend to underestimate the power that such ideas have when they take hold in the wider society. To give just one example of what I mean, consider the widespread concern about the dangers of low-frequency electromagnetic fields (EMFs) that arose in the late 1970s and reached its apogee in the mid-1990s but still persists today. These fields are produced by electric current carried by power lines and generated by electrical appliances and motors, and as such are virtually ubiquitous in modern societies. However, the energy from these fields is extremely weak and falls off rapidly with increasing distance from the source. Given the a priori implausibility of these weak fields having dramatic effects on human health,2 the way in which the issue was often dramatized for television audiences is not without significance. Typically, when a scientist appeared on the nightly news to discuss findings from the latest study, he would invariably be filmed against the backdrop of ominous-looking 735-kilovolt transmission lines. Whatever the validity of the reported findings—and these studies have been recognized as having serious flaws—the clear and effective message conveyed to the viewer was that there was a threat. The intense concern and uncertainty surrounding the question of the effects of these fields on health led to hundreds of scientific studies and to lawsuits seeking to force electric utilities to relocate power lines. Although the scientific and regulatory communities have largely dismissed a risk from EMFs encountered in everyday life, many people, including some scientists, continue to this day to believe that there is a health risk.3

KEY FACTORS AFFECTING THE INTERPRETATION OF SCIENTIFIC STUDIES

Textbooks of epidemiology routinely discuss a range of basic concepts relating to the conduct and interpretation of epidemiologic studies, such as measurement of disease occurrence, risk factors, measures of association, study design, the role of chance, statistical significance, confounding, bias, and sample size, and how these factors can affect the validity of the results. Understanding these concepts as well as the statistical methods used in analyzing epidemiologic studies is part of the competence that epidemiologists acquire in the course of their training. However, other factors that can influence the conduct and interpretation of epidemiologic studies—and biomedical studies generally—tend to get less attention because they have less to do with technical matters and more to do with “softer” issues, including how the data are interpreted, what limitations are acknowledged, how the findings are placed in the context of a larger narrative, what other studies are cited, what contrary evidence is discussed, and even less tangible factors, like philosophical and political orientation. Some considerations are so general and applicable to any scientific endeavor that they escape mention, even though there is ample evidence that they represent cognitive pitfalls and need to be taken seriously (e.g., the danger of becoming wedded to one’s hypothesis; favoring certain findings and disregarding others that don’t fit with one’s position; having an investment in a particular result; allowing a political or ideological position to color one’s interpretation of the data; ignoring countervailing evidence).

In this section I describe some of the features of the landscape in which research concerning health risks is interpreted in order to give a more realistic picture of the tools scientists have at their disposal, the limitations of the kinds of studies that are done, and the principles, concepts, and distinctions that come into play when interpreting research results and communicating them to the wider public. This will help us to understand how bias and subjective motivations can creep into the presentation of research findings and their dissemination to the society at large. The remainder of the chapter will explore how a distorted account of the scientific evidence can be amplified by cognitive biases operating in the wider society, resulting in costly scares and ill-conceived policies and regulations.

We have seen that the associations reported in epidemiologic and environmental studies are not always what they appear to be at first glance, and that the identification of a new association is only the beginning of a long process requiring better measurements and improved study designs to verify the linkages that underlie the association. Most initial associations do not stand up to this process. If the interpretation of associations is challenging and treacherous for scientists, once news of an association emerges into the public arena, the association can be transformed into something very different from what the scientific evidence supports and can take on a life of its own.

ASSOCIATION VERSUS CAUSATION

As we saw in the previous chapter, we depend on observational studies to identify risk factors or protective factors associated with disease in humans. The associations reported in epidemiologic studies are simply correlations, and it is an axiom in epidemiology, as well as in philosophy going back to David Hume, that “association does not prove causation.” Many phenomena are correlated and tend to occur together without one causing the other. In addition, an observed association could be due to chance, confounding, or bias in the design of the study. These are things that students of epidemiology learn at the outset and that textbooks emphasize. However, something strange can happen when researchers publish a scientific paper examining the association of exposure X with health condition Y. In spite of the acknowledged limitations of the data and the awareness that one is examining associations, the message can be conveyed, either more or less overtly, that the data suggest a causal association. It appears to be difficult for researchers to study something that has to do with health and disease without going beyond what the data warrant.4 If researchers can slip into this way of interpreting and presenting the results of their studies, it becomes easier to understand how journalists, regulators, activists of various stripes, self-appointed health gurus, promoters of health-related foods and products, and the public can make the unwarranted leap that the study being reported provides evidence of a causal relationship and therefore is worthy of our interest.

DOSE, TIMING, AND THE PROPERTIES OF THE AGENT

Another axiom, which is the cornerstone of toxicology, is that “the dose makes the poison.” The magnitude of one’s exposure matters. This is true of micronutrients, such as iron, copper, selenium, and zinc, which our bodies need in minute quantities to make red blood cells and to manufacture enzymes, but which, taken in large amounts, are highly toxic. It is also true of lifestyle and personal exposures, such as cigarette smoking and consuming alcoholic beverages, and medications, as well as pollutants in the environment. Many toxins and carcinogens exhibit a dose-response relationship: that is, as the exposure increases, so too does the observed toxic or carcinogenic effect. Even compounds and foods we think of as healthy can be lethal when consumed in excess. For example, we need beta-carotene obtained from carrots and other vegetables and fruits for our bodies to make vitamin A. However, consuming carrots in excess can lead to the skin turning orange from hypercarotenosis and eventually to death. Similarly, consumption of excessive amounts of water can lead to an electrolyte imbalance and heart failure. Some compounds are much more toxic than others, and even minute amounts can have lethal effects—for example, polonium-210, ricin, and methyl mercury. In addition to the dose, different compounds have vastly different potencies, as I will discuss in relation to “endocrine disrupting chemicals.” All this, of course, needs to be taken into account when evaluating an exposure. Nevertheless, what is noteworthy and disturbing is how frequently scientists examining an exposure of concern to the public (which, in many cases, was initiated in the first place by a scientific study) can ignore the issues of dose and potency or imply that they are seeing an effect of an exposure at a dose that is so low as to make the claimed effect questionable. 
Although modern technology gives us the ability to measure the amount of a compound in a sample down to parts per billion, this does not necessarily mean that trace exposures are having detectable health effects.
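To make the point about dose concrete, here is a minimal arithmetic sketch in Python. It assumes that, for a dilute solution in water, 1 part per billion corresponds to roughly 1 microgram per liter. The concentration, daily water intake, body weight, and comparison threshold are all hypothetical numbers chosen for illustration, not values taken from any study or regulation discussed in this chapter.

```python
def daily_dose_ug_per_kg(conc_ppb: float, liters_per_day: float,
                         body_weight_kg: float) -> float:
    """Daily intake in micrograms per kilogram of body weight.

    Assumes a dilute aqueous solution, where 1 ppb by mass is
    approximately 1 microgram per liter.
    """
    micrograms_per_day = conc_ppb * liters_per_day
    return micrograms_per_day / body_weight_kg


# Hypothetical scenario: a compound detected at 2 ppb in drinking water,
# an adult weighing 70 kg who drinks 2 liters per day.
dose = daily_dose_ug_per_kg(conc_ppb=2.0, liters_per_day=2.0,
                            body_weight_kg=70.0)

# Hypothetical threshold (micrograms per kg per day) below which no
# effect is assumed; this number is invented for the example.
hypothetical_threshold = 50.0
margin = hypothetical_threshold / dose

print(f"Daily dose: {dose:.4f} micrograms per kg per day")
print(f"Threshold is {margin:.0f} times the estimated dose")
```

Under these invented numbers the estimated dose is several hundred times below the threshold, which illustrates why the ability to detect a compound says little by itself about whether the exposure matters.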

It should also be mentioned that the same compound can have very different effects depending on the timing of exposure. For example, it is now clear that estrogen given to women close to the onset of menopause increases the risk of breast cancer, whereas when given to women who are five or more years beyond menopause, estrogen decreases the risk of breast cancer.5

Finally, the nature of the agent being studied also needs to be taken into account. Radiofrequency energy and microwaves are many orders of magnitude weaker in energy than X-rays or ultraviolet radiation, both of which can damage DNA. This doesn’t mean that radiofrequency and microwave energy do not merit study in terms of their potential health effects. But it does mean that, in studying them, one needs to be aware of the fact that one is likely to be dealing with more subtle and difficult-to-detect effects. Similar considerations apply to the study of “endocrine disrupting chemicals” in the environment. For example, the chemical BPA can act as a weak estrogen, but its potency is many orders of magnitude less than that of the natural estrogen estradiol. Again, it is striking how often these considerations are overlooked in scientific studies of the health effects of such agents.

FALSE POSITIVE RESULTS

It is fair to say that researchers are motivated to identify relationships that play an important role in health and disease. Few researchers are likely to devote years to demonstrating that some factor does not play an important role in disease. Furthermore, if a study is suggestive of a weak association, or if a subgroup within the study population shows an association, there is an understandable tendency to not want to discount what could be an indication of a real risk. A small risk applied to a large population can translate into a substantial number of cases of disease. However, at the same time, an emphasis on blips in the data can mean giving undue weight to what only appears to be a positive result. Marcia Angell, a former editor of the New England Journal of Medicine, has said that “authors and investigators are worried that there’s a bias against negative studies. And so they’ll try very hard to convert what is essentially a negative study into a positive study by hanging on to very, very small risks or seizing on one positive aspect of a study which is by and large negative.”6

One of the consistent findings of the studies conducted by Ioannidis and colleagues is that there are many more statistically significant positive findings reported in the medical literature than would be expected based on the ability to detect true findings. Nevertheless, the issue of false positives has been a topic of heated debate among researchers.7

What is beyond dispute is that positive results spur other researchers to attempt to confirm an association, and this process can lead to cascades of research findings that may be weakly suggestive of an association but largely spurious. What is also beyond dispute is that such false positive findings are likely to get attention from the media and, in some cases, the regulatory community. And this can lead to “availability cascades” in the wider society.8

POSITIVE FINDINGS GET MORE ATTENTION THAN FINDINGS OF NO RELATIONSHIP

Positive findings linking an exposure to a disease are a valuable commodity and are of interest to other researchers, health and regulatory agencies, the media, and the general public. Where such findings are confirmed and become established as important influences on health (e.g., smoking and lung cancer; human papillomavirus and cervical cancer; obesity and diabetes), they have enormous importance for public health and may provide the means for reducing the toll of disease and death. However, research routinely generates positive findings that have a very different status. Many are weak or inconsistent findings that may conflict with other lines of evidence. Yet, in the researcher’s eye, the preliminary positive finding may point to a new and important risk.

It is simply a fact of human nature that positive findings get more attention than findings of no relationship obtained in studies of comparable quality. And this applies to researchers, regulators, the media, and the general public. Positive associations appear to be more psychologically satisfying than studies that show no effect. They give us something to hold on to; they give us the illusion of knowledge on issues where we are eager for clear-cut information. Perhaps for these reasons, positive findings seem more credible—they feel truer than findings of no effect. Thus there is an important asymmetry between reports showing an effect and those showing no effect. This asymmetry is synonymous with bias.
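Why a lone positive finding deserves caution can be sketched with Bayes' rule. The short Python example below computes the probability that a statistically significant result reflects a true association, given the share of tested hypotheses that are actually true. The function name, the prior probabilities, the statistical power of 0.8, and the significance threshold of 0.05 are illustrative assumptions, not estimates taken from the studies cited in this chapter.

```python
def positive_predictive_value(prior: float, power: float, alpha: float) -> float:
    """Probability that a statistically significant finding is true.

    prior: assumed fraction of tested hypotheses that are really true
    power: chance a study detects a true association
    alpha: chance a study flags an association that is not there
    """
    true_positives = power * prior
    false_positives = alpha * (1.0 - prior)
    return true_positives / (true_positives + false_positives)


# Illustrative priors: a well-supported hypothesis, a speculative one,
# and a long shot.
for prior in (0.5, 0.1, 0.01):
    ppv = positive_predictive_value(prior, power=0.8, alpha=0.05)
    print(f"prior = {prior:<4}  ->  share of positive findings that are true = {ppv:.2f}")
```

With these assumed inputs the share of true findings among the positives falls from about 0.94 to 0.64 to 0.14 as the hypotheses become more speculative. The sketch ignores bias and selective reporting, which would lower these figures further.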

“HAZARD” VERSUS “RISK”

A crucial distinction lurking in the background in the assessment of health risks but rarely made explicit is that between hazard and risk. A hazard is a potential source of harm or adverse health effects. In contrast, risk is the likelihood that exposure to a hazard causes harm or some adverse effect.9 The drain cleaner underneath one’s sink is a hazard, but it will pose a risk only if one drinks it or gets it on one’s hands. Thus a hazard’s potential to pose a risk of detectable effects is realized only under specific circumstances, namely, sufficiently frequent exposure to a sufficient dose for a sufficient period of time. The confusion between hazard and risk afflicts many scientific publications and, particularly, regulatory “risk assessments.” The most glaring example of this is the International Agency for Research on Cancer (IARC), an arm of the World Health Organization that publishes influential assessments of carcinogens in its series “IARC Monographs on the Evaluation of Carcinogenic Risks to Humans.” In spite of the reference to “risk” in the title, IARC actually is concerned only with hazard and not with risk.10 In other words, any evidence—whether from animal experiments, studies in humans, or mechanistic studies in the laboratory—that a compound or agent might be carcinogenic is emphasized in IARC’s assessment, no matter what the magnitude of risk involved or the relevance to actual human exposure. This explains how in October 2015 the agency was able to classify processed meats, such as bacon, sausage, and salami, as “known human carcinogens,” in the same category as tobacco, asbestos, and arsenic.11

PUBLICATION BIAS

Publication bias refers to the fact that studies finding no evidence of an association between an exposure and a disease are less likely to be submitted for publication and, if submitted, are less likely to be published. Scientific and medical journals have a bias in favor of publishing positive and “interesting” findings. This is a well-documented phenomenon. Publication bias is simply the result of positive findings being more likely to be published. If carefully executed studies that produce “null” results (i.e., no support for the examined association) are not published, this distorts the scientific record and produces a skewed picture. Recently, the importance of publishing well-executed studies that report “null” findings has been gaining attention at the highest levels of the science establishment.12

THE “MAGNIFYING GLASS EFFECT” OR BLINKERING EFFECT

Research and regulation tend to address risks one at a time, rather than placing a putative risk in the context of other risks that may be more important or considering countervailing effects of other exposures that may offset them. In this way, the risk that is focused on becomes the whole world and can blot out consideration of other, potentially more important risk factors that should be taken into account. A good example of this is the case with regard to “endocrine disrupting chemicals,” where much of the research has had a narrow focus and has ignored factors, such as the recent increase in obesity, that could be expected to dwarf any effect of the exposure under study.

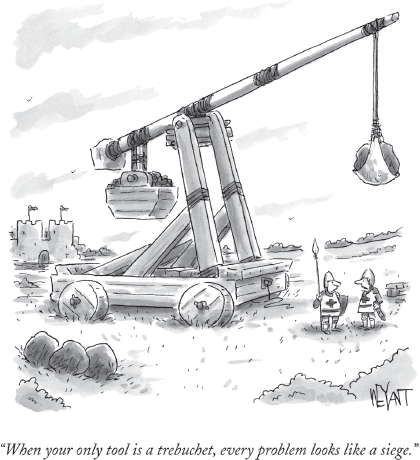

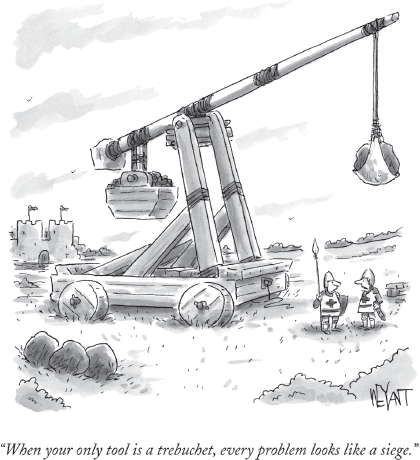

13 By focusing on risks one at time, isolated from their relevant context, one forgoes the opportunity to put them in perspective and make sense of them. This bias has been popularized in the adage, “When your only tool is a hammer, everything looks like a nail.” A

New Yorker cartoon (

fig. 3.2) has cleverly refreshed this insight by transposing it to the Middle Ages. Another name for this blinkering effect is confirmation bias.

THE PUBLIC IS SENSITIZED TO CERTAIN KINDS OF THREATS

We are predisposed to want to identify external causes rather than focusing on things closer to home—personal behaviors that we might be able to do more about. Studies by the psychologist Paul Slovic and others have shown that certain types of risks have much more salience for the public than others.14 In general, threats that are invisible and not under our control (such as ionizing radiation or trace amounts of chemicals in food and water) tend to elicit a strong reaction from the public. Strikingly, other exposures that are much more important at the population level, such as cigarette smoking, weight gain, excessive alcohol consumption, and excessive sun exposure, do not elicit anywhere near the same reaction. This may be because we have the illusion that they are under our control but also because they are widespread and familiar—they have been “domesticated,” so to speak. Thus news reports based on scientific studies implying that we and our children are being poisoned by chemicals in our water and food or being threatened by “radiation” from power lines and cell phone masts strike an exposed nerve.

THE EXISTENCE OF AN ALARMED AND SENSITIZED PUBLIC IS USEFUL TO SCIENTISTS AND REGULATORS

When a question arises about the potential contribution of some environmental exposure to human health, the ability to obtain funds to conduct research depends in theory on the strength of the scientific case one can make for the value of the proposed study. Obtaining funding from the National Institutes of Health—by far the largest source of grant support for scientific research on health—is extremely competitive, and only a small percentage of submitted proposals are successful. However, if the media has picked up a question and made it a focus of public concern, this can influence the perception of the value of the proposed research. Special programs can be set up within federal agencies to focus attention on the topic, and in some cases special funds are even earmarked for research on it. The creation of a program within an agency, or supported by several agencies, gives the topic a special status. Furthermore, elevating a potential risk in this way sends a message to the public, since if something is being studied in this way, “it must be important.” This is what happened with DDT/PCBs and breast cancer and with electromagnetic fields and breast cancer in the 1990s, and something similar happened with “endocrine disruption” in the 2000s. Testifying at a meeting of the California Air Resources Board regarding proposed regulation of diesel emissions, the University of California at Irvine air pollution researcher Robert Phalen commented, “It benefits us personally to have the public be afraid, even if these risks are trivial.”15

At the same time, it is important to recognize that, in some circumstances, agencies have set up programs to support high-quality research to address a high-profile issue. This is what happened at the Food and Drug Administration and the Environmental Protection Agency in funding studies to elucidate population exposure to BPA as well as the pharmacokinetics of the chemical in animals and humans.

ADVOCACY AND POLITICAL CORRECTNESS

As the role of science has grown in the sphere of public policy, advocacy for a specific policy position has become increasingly prevalent among scientists. Whether the issue is exposure to trace amounts of contaminants in the environment, smokers’ access to potentially less harmful products that can replace cigarettes, concern about the role of certain foods and beverages in obesity, or something else, an adherence to a particular policy position can color one’s reading of the science. This poses a serious threat to science, which must be independent of the personal beliefs and predilections of scientists.

On certain topics scientists, advocates, and members of the public can invoke the moral high ground by claiming that particular findings are beneficial for human health and society and that anyone who questions the solidity of the scientific evidence for a claim can be characterized as not having the public’s best interests at heart. This is a form of political correctness and has been referred to as “white hat bias.”16 Mark Cope and David Allison described the use of this strategy to assert that intake of nutritively sweetened beverages disposed toward obesity and that breastfeeding provided protection against obesity, claims that, according to them, are not supported by the evidence. The authors documented instances in which results that are “politically correct” were more likely to be accepted by scientists and that findings “that do not agree with prevailing opinion may not be published.” According to the authors, the bias was also reflected in inaccurate descriptions of results of studies to make them conform to the desired view.

THE PEER-REVIEW SYSTEM

The peer-review system is supposed to serve as a bulwark against the publication of flawed and misleading research. However, all that stands between the publication of a poor piece of work and the public are journal editors and the peer reviewers, who agree to donate their time to evaluate a paper for publication. As is true of any system dependent on human beings, peer review is imperfect. It is only as good as the critical acumen that editors and reviewers bring to the task, and there are inevitable failures, namely, papers that get published that should never have been published.

The quality of review is variable. Often reviewers are insufficiently critical, and this can lead to the acceptance of papers that should have been rejected outright or accepted only after further evidence was provided in support of the conclusions or a fuller discussion of the limitations was provided. The work of John Ioannidis and others has underscored the poor quality of much of what gets published, even in highly respected medical journals.

And the sad truth is that virtually anything—no matter how bad—can get published somewhere. When a paper deals with a topic that has received widespread publicity and caused public alarm, this may have the effect of lowering the threshold for publication. In other words, to the extent that the reviewers and editors were aware that the paper was weak and its results questionable, they may have overridden these reservations on the grounds that the paper was on a topic of great interest and would stimulate further research. Finally, the standards for what is publishable and the level of scientific rigor and overall quality may be lower in certain areas, such as environmental health, where there is great public and media interest, as opposed to other research areas that do not evoke the same level of interest.17

In 1998 the British journal Lancet published a paper by the surgeon Andrew Wakefield purporting to show that children who had received the measles, mumps, and rubella (MMR) vaccine developed intestinal problems and autism. The paper’s publication represents a staggering failure of peer review. It was reviewed by six peer reviewers and the editor, none of whom saw any reason not to publish it. In retrospect, it is hard to understand how anyone could think that any conclusion could be drawn from a series of twelve cases. Nevertheless, it took twelve years for the journal to retract the paper, and it was only due to the persistence of an investigative reporter that the fraud perpetrated by Wakefield was uncovered.18 The disastrous effects of this paper are still very much with us. While the Wakefield case represents an extreme example, much that is published has not been held to a very high standard.

Today, when one submits a paper to a biomedical journal, one is routinely asked to declare any potential “conflicts of interest.” This practice began in the 1990s owing to an increasing awareness that the reporting of results might be distorted due to the influence of the sponsor of the research. At the outset this concern applied first and foremost to studies funded by industry, including the pharmaceutical, chemical, and tobacco industries. There is no question that there is the potential for abuse when the research findings may conflict with the interests of the commercial sponsor. In 1993, however, the epidemiologist Ken Rothman pointed out that financial conflicts of interest are only the most obvious, and therefore the easiest to spot, instances of factors that could distort the research process.19 Rothman argued that political, ideological, and professional motives could also affect the reporting of results. Since it is impossible to assess all the psychological and ideological factors that could influence the presentation of one’s results, he proposed that a piece of work should be evaluated on its merits rather than on extrinsic factors. More recently, others have argued that nonfinancial conflicts of interest may be more important than financial ones.20 Still, alleged financial conflicts of interest as well as ad hominem arguments are routinely used to counter opponents in controversies involving human health.

Ironically, the emphasis on financial relationships has created a situation in which advocates with strong partisan views who are aligned with a cause routinely declare “no conflict of interest” because it does not fit the prevailing narrow definition. This state of affairs underscores how deeply subjective factors and attitudes are intertwined with the reporting of results of scientific studies.

THE DANGER OF BELIEVING ONE’S HYPOTHESIS

Science is propelled by new ideas, or hypotheses, that represent attempts to answer important questions by building on what is known, collecting new data, and making new connections. Once a worthwhile hypothesis is formulated, all that matters is to collect the most informative data possible to either support or refute it. Progress can be made only by bringing the best data to bear on the hypothesis. The new data may provide unqualified support for the hypothesis, require modification of the hypothesis, or flat out contradict the hypothesis. Progress depends on the researcher’s maintaining a tension between attachment to his or her idea and willingness to be ruthlessly critical in evaluating the data that bear on it. One has to consider the evidence that goes counter to the hypothesis as well as that which supports it.21 A strong hypothesis is one that stands up to strong tests to disprove it. The UCLA epidemiologist Sander Greenland has given a penetrating formulation of this tension: “There is nothing sinful about going out and getting evidence, like asking people how much do you drink and checking breast cancer records. There’s nothing sinful about seeing if the evidence correlates. There’s nothing sinful about checking for confounding variables. The sin comes in in believing a causal hypothesis is true because your study came up with a positive result, or believing the opposite because your study was negative.”22

We are all susceptible to being fooled by ideas that appear to offer an explanation for a mysterious phenomenon we are eager to understand. That is why we require science (the careful, painstaking work of excluding alternative explanations and verifying each link in the causal chain) as a safeguard against error. If scientists are susceptible to eliding these distinctions and believing in their hypotheses, it is hardly surprising that the media and the public are susceptible to the same slippage.

THE PRECAUTIONARY PRINCIPLE

In the past few decades it has become routine for the so-called precautionary principle to be invoked whenever a question arises regarding the impact of a potential threat to health or the environment. The precautionary principle or precautionary approach to risk management states that if an action or policy has a suspected risk of causing harm to the public or the environment, in the absence of scientific consensus that the action or policy is not harmful, the burden of proof that it is not harmful falls on those taking the action.23 The principle implies that there is a social responsibility to protect the public from exposure to harm, when scientific investigation has found a plausible risk. Since the 1980s the precautionary principle has been adopted by the United Nations (World Charter for Nature, 1982) and the European Commission of the European Union (2000) and has been incorporated into many national legal systems.

In spite of its prominence in discussions of novel exposures and their effects on health and the environment, as the extensive literature on the topic makes clear, there are numerous problems with this principle, which sounds so reasonable and commonsensical. First, the precautionary principle is difficult to define, and there are numerous interpretations that are incompatible with one another.24 Second, it does not provide a clear guide to action, and it encourages taking a narrow view of risks, rather than considering them in context, together with alternatives, mitigation, trade-offs, and costs versus benefits.25 Third, where there is disagreement among scientists and regulatory bodies regarding the existence of a risk to the public, those who believe the evidence pointing to a risk are apt to invoke the precautionary principle as a means of mobilizing public opinion. Finally, application of the principle tends to provoke public anxiety and can end up causing harm.

It is hard to quarrel with the maxim that “it’s better to be safe than sorry.” For our purposes, however, what is crucial is how invocation of the precautionary principle has influenced the assessment of the available scientific evidence on highly visible questions regarding health and the environment. Statements justifying use of the principle imply that the available scientific evidence bearing on a question will be evaluated in a rigorous and unbiased manner. In practice, a reflex emphasis on precaution can often favor poor science that appears to point to a risk and ignore higher-quality science that, if appreciated, would allay fears. Too often invocation of the precautionary principle avoids the challenge of critically evaluating the scientific evidence, making judgments about the magnitude and probability of a risk, and balancing costs and benefits. Too often it amounts to a rhetorical device, which is used to arouse the public on a particular issue. The assumption that the science will be judged on its merits is hard to maintain when there are numerous examples of influential organizations issuing assessments that favor positive findings over null findings and ignore the distinction between “hazard” and “risk” (see above).26

CONTROVERSIES CONCERNING ENVIRONMENTAL HEALTH

Scientists routinely disagree in their assessment of the evidence on a given question, and questions pertaining to environmental, lifestyle, and dietary factors that may affect health are particularly subject to controversy. As we have seen, studies on these questions tend to have serious limitations, and scientists can have a strong investment in their work that goes beyond the science. As a result, what often characterizes these disputes is the intense attachment of some researchers to a given position—often based on favoring the evidence from particular studies that appear to show positive results. But it is important to recognize that the two parties in disputes are not necessarily equal in standing. In some cases, scientists on one side of the issue will make a concerted effort to evaluate all the relevant evidence in order to make sense of anomalous findings and come to a reasoned assessment based on the totality of the evidence. We will see examples of this when we discuss cell phone radiation and “endocrine disrupting chemicals.”

In other cases, controversy on a topic of public health importance may be implicit, rather than being overtly acknowledged. For example, a recent evaluation of the controversy concerning salt intake and health documented a “strong polarization” in published studies.27 Published reports on either side of the hypothesis (that salt reduction leads to public health benefits) were less likely to cite papers that contradicted their own position. Most striking was that, rather than acknowledging the existence of conflicting studies, the two camps tended to ignore each other and to be “divided into two silos.” The tendency to ignore contradictory studies represents an abdication, since it is only by critically evaluating the relative merits of different studies and identifying the reasons for the conflicting conclusions that progress can be made. The authors pointed out that this pattern of researchers being divided into different camps, or “silos,” characterizes other controversies, such as that concerning electronic cigarettes.

Needless to say, it would be very hard for the uninitiated to penetrate what is going on and to sort out the science from the rhetoric. And so disputes of this kind further confuse the public’s understanding of the science.

CONSENSUS

Compounding the many vexed issues surrounding questions relating to health and the environment is the simplistic notion that the “consensus among scientists” is always correct. This is a widely invoked criterion or shortcut for determining who is “right” in a scientific controversy. However, the results of a scientific study should not be expected to line up on one side or the other of a neat yes/no dichotomy. Unfortunately, the science is not always clear-cut, and the consensus on a particular question at any given moment may not be correct. Until the 1980s the consensus was that stress or eating spicy foods caused stomach ulcers. For roughly a decade, virologists believed that herpes simplex virus was the cause of cervical cancer. For more than three decades, the medical community believed that the use of hormone therapy by postmenopausal women protected against heart disease. The history of medical science is littered with long-held dogmas that, when confronted by better evidence, turned out to be wrong. We have to realize that appeals to the consensus are motivated by politics and have little to do with science. All it takes is for one or more scientists to come up with a better hypothesis and do the right experiment or make the right observation to overturn the reigning consensus.

* * *

This inventory of biases and tendencies affecting the interpretation and reporting of research findings is not exhaustive. Nevertheless, one can see that many of these tendencies can be at work simultaneously and can reinforce each other. This makes it easier to see how information cascades and bandwagon processes can get started. What is most surprising is that, as was said earlier, the items listed above are either axioms of research or pitfalls that are tacitly assumed to be guarded against by individual investigators and the scientific process as a whole. Thus it seems that there is a collective blind spot, and that in order to continue to do these kinds of studies there is an unquestioned assumption that somehow, in spite of the well-known “threats to validity,” informative research can still be carried out. Since we have examples of robust, transformative research findings, a crucial question is: how does one distinguish between research that is on a productive track and research that is focused on a problem that is unlikely to yield strong results or that, worse, is stuck in a blind alley? Why does work on certain questions get traction and make undreamed-of strides, whereas work on other questions remains stalled?

Beyond the kinds of factors, principles, and tendencies described above that pertain to studies of health risks, to understand how certain interpretations and messages get imposed on the science, we need to step back and put science in the area of health studies in a framework that takes account of the psychological factors, professional circumstances, societal influences, incentives, pressures, fears, and agendas affecting what research gets done and how it gets interpreted and disseminated. For this purpose, work in the area of psychology and behavioral economics over the past four decades provides a crucial pillar of such a framework. Scholars in this area have produced seminal studies on errors in judgment, the perception of risk, and the mechanisms by which unfounded health scares can evolve and gain widespread acceptance, leading to misguided policies and counterproductive regulation.

COGNITIVE SHORTCUTS AND BIASES: TWO SYSTEMS OF THOUGHT

In his book Thinking, Fast and Slow (2011), the psychologist Daniel Kahneman summarized decades of work with his colleague Amos Tversky on cognitive biases and pitfalls that affect judgment and to which we are all susceptible.28 Kahneman distinguished between two different faculties of thought, which function according to very different rules. He referred to the two faculties as System I and System II. System I involves our automatic response to our environment and enables us to navigate our surroundings and to recognize many situations on the basis of stored experience without having to consciously analyze what is going on. It is System I that endowed our ancestors in the African savannah with the capacity to survive against omnipresent threats. This system is attuned to any possible danger and mobilizes the fight-or-flight response. If a hunter-gatherer heard a rustling in the grass, he had to decide instantaneously whether the sound signified the presence of a lion or merely the wind. In this situation, it made sense to react as if the rustling pointed to the presence of a lion, since the cost of being wrong was likely to be fatal. Even though the environment we live in is dramatically different from that of our forebears, System I has remained intact and is an integral part of our apparatus for interpreting the world. It is constantly at work, responding to cues from our surroundings, making split-second decisions, rendering judgments, which are generated below the level of our awareness. Most of the time System I serves us well and allows us to pay conscious attention to certain things, while carrying out many routine operations without consciously thinking about them. However, because it involves fast thinking, at times it can cause us to miss crucial information and make mistakes. System I is characterized by what feels right and natural. A compelling story, which engages us and confirms our expectations, will appeal to System I. It may be wrong, it may not be logical, and it may conflict with other things that we know, but if it is powerful and vivid and conforms to our deepest beliefs, it is likely to have a strong influence on our judgment.

In contrast to System I, the operation of System II, which acts on the inputs from System I, involves slow thinking. It can either endorse or rationalize the immediate thoughts and actions of System I or it can pause to take a slower, deliberate look at a situation. This latter course does not come naturally but rather involves reasoning, the weighing of possible outcomes, and judgments about future utility. Unlike the reflex responses produced by System I, Kahneman stresses that for System II to be able to correct errors made by System I requires continual vigilance and effort. And reasoning is an arduous process, in which our spontaneous reactions and feelings are of little help. All that matters is taking into account the relevant aspects of a choice or a situation and trying to decide what the outcome will be. Since System II requires effort and energy, we have a tendency to resist its demands and to slip back into accepting the judgments of System I. A key observation of Kahneman’s is that, when we are confronted by a difficult question that demands serious thought, we often tend to substitute for it a simpler question to which we have a ready-made answer.

In a series of “thought experiments” carried out over many years, Kahneman and Tversky compiled an extensive inventory of cognitive errors that has transformed our understanding of everyday judgment and decision making. Such errors are the result of mental shortcuts, or “heuristics” (that is, rules of thumb) governed by System I, which work reasonably well in everyday life. However, especially when we are dealing with matters that are removed from our firsthand experience, they can lead to cognitive errors and biases. This is because our judgment is easily influenced by extraneous factors—our mood at a given moment, conspicuous information, vivid events that make an impression on us. All this happens below the level of our awareness.

The most fundamental shortcut that interacts with many other shortcuts to influence judgment is the availability heuristic, which is simply a mental shortcut or error whereby we judge the likelihood of an event by how easily we summon up instances of that event. The more “available” it is in our consciousness, the greater the importance we assign to it. For example, a recent, highly publicized crash of an airliner may affect our feelings about flying, even though we know that flying is far safer than other modes of transportation. Thus how easily we can summon up examples of a given event may bear little relation to its actual importance.

An appreciation of cognitive biases helps in understanding how distorted information regarding health risks can gain currency. The public relies on specialists to provide the interpretation of highly technical research findings. However, as we have seen, many results that are published are either tentative or wrong, and, furthermore, there is a strong bias toward positive results, even though these are likely to be false. Scientists are human, and their judgment in these difficult matters can be influenced by a variety of factors that have nothing to do with the strength of the science. (Kahneman points out that even statisticians are susceptible to these kinds of errors.) Thus the difficulties and biases inherent in the conduct of studies in the area of health risks are embedded within an even more fundamental set of biases inherent in human behavior and society.

Although Ioannidis and Kahneman approach the question of sound judgment from very different perspectives—the one via statistical analysis, the other via the analysis of psychological processes operating at the most elementary cognitive level—these two bodies of work dovetail in unexpected and remarkable ways and provide a framework for understanding the confusion surrounding health risks. In both domains, there is a strong preference for positive results and coming up with a clear-cut and psychologically satisfying answer to a difficult question.

AVAILABILITY CASCADES

Under the influence of the kinds of biases and shortcuts documented by behavioral psychologists, findings of scientific studies regarding threats to our health can gather momentum, becoming what Timur Kuran and Cass Sunstein have termed an availability cascade, or information cascade, or simply a bandwagon process.29 This phenomenon is mediated by the availability heuristic, which interacts with social mechanisms to generate cascades “through which expressed perceptions trigger chains of individual responses that make these perceptions appear increasingly plausible through their rising availability in public discourse.”30 According to Kuran and Sunstein, this process comes into play in many social movements, as increasingly people respond to information from sources that appear to have some degree of authority. Information cascades can result in the mobilization of public opinion for positive ends, as in the civil rights movement and the spread of affirmative action. At other times, however, they can be triggered by “availability errors” (i.e., false information that gains prominence), and such cascades involving the mobilization of specific groups and the population at large can result in misguided policies or ill-conceived regulation.

Kuran and Sunstein’s prime example of an availability cascade is the Love Canal incident of the mid-1970s, in which news reports concerning the contamination of a residential neighborhood in upstate New York by industrial chemicals snowballed into a national story, leading ultimately to federal legislation regarding Superfund sites. In this case, preliminary tests seemed to point to the existence of an imminent threat, and this alarming information was widely accepted and led to the evacuation of the neighborhood and compensation of homeowners. Only later, after years of more careful study by many scientists and agencies, did it turn out that, in fact, there was no evidence of any abnormal exposure among residents or any ill effects.31

The authors use the term availability entrepreneurs to refer to individuals or groups that play a major role in publicizing a risk or an issue and generating an availability cascade. In the Love Canal incident, an extremely vocal housewife played a key role in organizing homeowners and drawing media attention to the urgency of the health threat to the community. Responding to the community’s concern, the New York State Department of Health declared a public health emergency, characterizing Love Canal as a “great and imminent peril.”32

According to Kuran and Sunstein, availability entrepreneurs may believe what they are saying, or, more likely, they may be tailoring their public pronouncements to further a personal agenda, whether ideological, professional, philosophical, or moral. In the latter case, they are practicing what the authors term “preference falsification”33 and others might call bad faith. If the results of a scientific study regarding a potential risk appear to conform to prevailing views in society, people will tend to accept the cited scientific findings as a result of this framing, even if the study reporting the result is weak. Those who question the solidity of the result or its importance on scientific grounds may be characterized as being “anti-environment” and “pro-industry,” even when their skepticism is restricted to the evidence at hand. The concept of the availability heuristic helps explain how, as individuals and groups seek information about a given risk, the formulation of the issue by those with special knowledge can “cascade” through different groups and become solidified, becoming a widely accepted truth. One can easily see how these processes can have the effect of reinforcing the claim that the science is clear-cut and that a given risk is indeed a serious threat that we should pay attention to.

While a discussion of the role of scientists in the initiation and amplification of informational cascades is beyond the scope of their article, much of what Kuran and Sunstein say about availability entrepreneurs applies to scientists as well. Any account of the formation of public opinion on scientific questions must recognize that scientists are human and are social beings, as well as scientists. Their judgments about the importance of a given question can be influenced by factors that are extraneous to the science, including professional standing, the need to obtain funding, and moral and political beliefs. In other words, scientists themselves can be biased in their assessments and especially their public pronouncements regarding a particular hazard. This can lead to an emphasis on studies that show a positive association and ignoring studies that do not support the existence of a hazard. Scientists who act as availability entrepreneurs can count on the public’s disposition to perceive their statements regarding an environmental threat as more trustworthy than statements that question the existence of a hazard.

SCIENCE THAT APPEALS TO THE PUBLIC VERSUS SCIENCE THAT FOCUSES ON THE NEXT EXPERIMENT OR OBSERVATION

In this and the previous chapter, we have seen that findings from observational studies linking exposures to disease are only indications for further study and are not to be taken at face value. We have been badly misled by many intriguing findings that turned out to not be replicated when more careful studies were done. For this reason, the results of the “latest study” that get reported by the media have little claim to providing solid knowledge that is apt to make a difference in our life. To begin to be meaningful, the latest study needs to be seen in the context of all relevant work bearing on the question of interest. Most published findings turn out to be either wrong or overstated. Furthermore, the fact that a question is being studied is no guarantee that it is important or that a new hazard that has surfaced is something we need to worry about. Thus the assumptions implicit in much of the media reporting of studies in the area of health and disease are often directly at odds with the essence of the scientific process.

However, owing to the intense interest in anything that is possibly associated with our health, the perception of risks in the larger society can feed back on the science by giving undeserved support to certain lines of research and reinforcing certain fears or hopes.

* * *

Science that deals with factors that affect our health takes place in a different context from other fields of science. This is because we are all eager for tangible progress in preventing and curing disease—a promise that is constantly reinforced by the media, medical journals, health and regulatory agencies, the pharmaceutical industry, and the health foods/health supplements industry. Until recently, medicine could do very little to treat or prevent most diseases, and people had a fatalistic attitude toward illness and death. But, with the enormous advances in biomedicine in the past fifty years, our desire for knowledge that will enable us to combat or stave off disease has become a distinguishing characteristic of modern society.

There are many urgent questions on which, in spite of an enormous investment of research funds and public interest, little progress has been made. We still do not understand what causes cancers of the breast, prostate, colorectum, pancreas, and brain, leukemia and lymphoma, Alzheimer’s disease, Parkinson’s disease, amyotrophic lateral sclerosis (ALS), scleroderma, autism, or many other conditions. Although we have learned a great deal about breast cancer over the past forty years, we still cannot predict who will develop the disease and who will not, and this applies to most other diseases. In contrast, there are other areas where dramatic progress has been made. These include the transformation of AIDS from a fatal disease to a manageable chronic condition; advances in the treatment of heart disease and breast cancer; the development of vaccines against human papillomavirus and hepatitis B virus—two major causes of cancer worldwide—and the identification of the bacterium that is responsible for most cases of stomach ulcer and stomach cancer. What this scorecard tells us is just how difficult it is to gain an understanding of these complex, multifactorial chronic diseases. The difficulty of making progress in answering urgent questions highlights the importance of identifying real issues and real problems by formulating new hypotheses and excluding possibilities by rigorous experimentation and observation.

* * *

In the following four chapters I examine two instances in which science has made dramatic progress in uncovering new knowledge that has translated into the ability to save lives and improve health (chapters 6 and 7) and two instances in which, in spite of abundant public attention, little progress has been made and little relevance to health has been demonstrated (chapters 4 and 5). By examining these two sharply contrasting outcomes of the research that attempts to identify factors that affect health, I hope to shed light on how, simultaneously, we expect too much and too little of science. Both sets of stories convey just how challenging it is to come up with good ideas and to make inroads into solving these problems.

Chapters 4 and 5 alert us to the waste and confusion that result from poorly specified hypotheses that generate bandwagon effects by promoting an unproductive line of research. Not all questions that are studied by scientists and receive both media coverage and funding are well-formulated or are based on strong prior evidence. And it is therefore not always surprising that, in spite of the hype, they do not lead to productive lines of discovery. (This does not mean that they may not merit study.) In fact, it is a reasonable working hypothesis that when a question is weak on purely scientific grounds but has salience for other reasons (such as its ability to inspire fear, or because it is associated with a political, ideological, or moral cause), this can compensate for its scientific weakness and can attract scientists, funding, and the interest of regulatory agencies and the public.

In contrast to such high-profile issues, there are other lines of investigation that have little resonance with the public but which, for purely scientific reasons, become the focus of sustained and rigorous, collaborative work that, over time, can yield results that could never have been foreseen at the outset. These represent productive veins of research, which prompt the development of new methods, the confirmation of results, and a deepening of understanding that can lead incrementally to important new knowledge. Where some instances of poorly defined research questions attract a huge amount of public attention, these other lines of research often do not relate to the common categories that evoke public interest and therefore tend to receive little media attention and to be confined to academic journals and professional meetings. They tend to get funded based on their scientific merit rather than on their appeal to the public. We can summarize this dichotomy by reversing Leo Tolstoy’s formula about happy families in the opening sentence of Anna Karenina: “all poorly justified areas of study are alike; each truly important area of study is important in its own way.”

This provides further support for the existence of a disjunction between “newsworthiness” and scientific value. It is easy to see why the latter stories don’t have the same visceral appeal as the stories alerting us to a threat. Here we are talking about incremental steps, which may, in time, lead to dramatic advances or even a breakthrough, but which don’t stand out as starkly against the continuum of everyday life as the fears that erupt into the headlines.

* * *

We can now see why certain questions that grab headlines and generate public concern have such enormous power and can take on a life of their own, casting a shadow over people’s lives—remaining present in people’s consciousness for a long period of time and, like a latent virus, being periodically reactivated by new stories that appear to point to the existence of a hazard. These scares appeal to our deep-seated instincts that react to a threat. Because the fear comes first—before a reasoned assessment of the evidence, which it bypasses—there is a sameness to the news reports that present evidence on the question. For this reason, the work that has addressed certain questions over a period of decades can appear essentially static. It never really gains traction, deepens, or evolves. Now this could be because the phenomenon under study is so weak as to be unimportant, and the hypothesis regarding its role in health or disease is wrong. Or it may be that the phenomenon is actually important, but researchers have not yet uncovered some crucial aspect of how it operates. In either case, the cause is not advanced by appeals to the public via the media, press releases, and health advisories. Progress can come only from the hard work of excluding alternative hypotheses and forging strong links in the chain of causation.

The demands of identifying and pursuing a productive line of research—one that eventually yields important new knowledge—leave no room for self-indulgent appeals to the public and solemn, self-serving warnings about the potential relevance of some unconfirmed, weak, and questionable finding to the public at large. As we will see in the two final case studies, the trajectory leading to major discoveries appears simple and straightforward only in hindsight. In reality, it is typically fraught with methodological obstacles, disputes between rival groups, efforts to improve methods, and uncertainty that the whole undertaking is really going to lead somewhere and not fall apart. For these reasons, in contrast to questions that invoke the specter of an insidious and imminent threat to our well-being, the stories that follow the tortuous “long and winding road” leading to a major discovery are not simple and have little visceral, emotional appeal.

My reason for contrasting two sets of stories with very different trajectories and very different outcomes is this. If we have in mind a model of what a true advance in the area of public health looks like, this might provide a much-needed reference point for judging the many sensationalized findings that get so much attention.