The Evangelization of Peoples

In December 1952, Reader’s Digest published an article titled “Cancer by the Carton,” which presented the growing evidence of a link between cigarette smoking and lung cancer.1 The article pulled no punches: it asserted that deaths from lung cancer had increased by a factor of 10 from 1920 to 1948 and that the risk of lung cancer in smokers older than forty-five increased in direct proportion to the number of cigarettes smoked. It quoted a medical researcher who speculated that lung cancer would soon become the most common form of human cancer—precisely because of the “enormous increase” in smoking rates per capita in the United States and elsewhere. Perhaps most important of all—at least from the perspective of the tobacco industry—the article called the increase in lung cancer “preventable” and suggested that the public needed to be warned of the dangers of smoking.

The article was a public relations doomsday scenario for the tobacco industry. At the time, Reader’s Digest had a circulation of tens of millions of copies and was one of the most widely read publications in the world.2 The dramatic headline, clear and concise prose, and unambiguous assessment of the scientific evidence made a greater impact than any public health campaign could have done. The article left no uncertainty: smokers were slowly killing themselves.

Soon more evidence came in. During the summer of 1953, a group of doctors at Sloan Kettering Memorial Hospital completed a study in which they painted mice with cigarette tar. The mice reliably developed malignant carcinomas.3 Their paper provided a direct and visceral causal link between a known by-product of smoking and fatal cancer, where previous studies had shown only statistical relationships. It produced a media frenzy, with articles appearing in national and international newspapers and magazines. (Time magazine ran the story under the title “Beyond Any Doubt.”)4 That December, four more studies bolstering the case were presented at a research meeting in New York; one doctor told the New York Times that “the male population of the United States will be decimated by cancer of the lung in another fifty years if cigarette smoking increases as it has in the past.”5

The bad press had immediate consequences. The day after it reported on the December research meeting, the Times ran an article contending that a massive sell-off in tobacco stocks could be traced to the recent coverage. The industry saw three consecutive quarters of decline in cigarette purchases, beginning shortly after the Reader’s Digest article.6 (This decline had followed nineteen consecutive quarters of record sales.) As National Institutes of Health statistician Harold Dorn would write in 1954, “Two years ago cancer of the lung was an unfamiliar and little discussed disease outside the pages of medical journals. Today it is a common topic of discussion, apparently throughout the entire world.”7

The tobacco industry panicked. Recognizing an existential threat, the major US firms banded together to launch a public relations campaign to counteract the growing—correct—perception that their product was killing their customers. Over the two weeks following the market sell-off, tobacco executives held a series of meetings at New York’s Plaza Hotel with John Hill, cofounder of the famed public relations outfit Hill & Knowlton, to develop a media strategy that could counter a steady march of hard facts and scientific results.

As Oreskes and Conway document in Merchants of Doubt, the key idea behind the revolutionary new strategy—which they call the “Tobacco Strategy”—was that the best way to fight science was with more science.8

Of course, smoking does cause lung cancer, along with cancers of the mouth and throat, heart disease, emphysema, and dozens of other serious illnesses. It would be impossible, using any legitimate scientific method, to generate a robust and convincing body of evidence demonstrating that smoking is safe. But that was not the goal. The goal was rather to create the appearance of uncertainty: to find, fund, and promote research that muddied the waters, made the existing evidence seem less definitive, and gave policy makers and tobacco users just enough cover to ignore the scientific consensus. As a tobacco company executive put it in an unsigned memo fifteen years later: “Doubt is our product since it is the best means of competing with the ‘body of fact’ that exists in the mind of the public.”9

At the core of the new strategy was the Tobacco Industry Research Committee (TIRC), ostensibly formed to support and promote research on the health effects of tobacco. In fact it was a propaganda machine. One of its first actions was to produce, in January 1954, a document titled “A Frank Statement to Cigarette Smokers.”10 Signed by the presidents and chairmen of fourteen tobacco companies, the “Frank Statement” ran as an advertisement in four hundred newspapers across the United States. It responded to general allegations that tobacco was unsafe—and explicitly commented on the Sloan Kettering report that tobacco tar caused cancer in mice. The executives asserted that this widely reported study was “not regarded as conclusive in the field of cancer research” and that “there is no proof that cigarette smoking is one of the causes” of lung cancer. But they also claimed to “accept an interest in people’s health as a basic responsibility, paramount to every other consideration” in their business. The new committee would provide “aid and assistance to the research efforts into all phases of tobacco use and health.”

The TIRC did support research into the health effects of tobacco, but its activities were highly misleading. Its main goal was to promote scientific research that contradicted the growing consensus that smoking kills.11 The TIRC sought out and publicized the research of scientists whose work was likely to be useful to them—for instance, those studying the links between lung cancer and other environmental factors, such as asbestos.12 It produced pamphlets such as “Smoking and Health,” which in 1957 was distributed to hundreds of thousands of doctors and dentists and which described a very biased sample of the available research on smoking. It consistently pointed to its own research as evidence of an ongoing controversy over the health effects of tobacco and used that putative controversy to demand equal time and attention for the industry’s views in media coverage.

This strategy meant that even as the scientific community reached consensus on the relationship between cigarettes and cancer—including, as early as 1953, the tobacco industry’s own scientists—public opinion remained torn.13 After significant drops in 1953 and into 1954, cigarette sales began rising again and did so steadily for more than two decades—until long after the science on the health risks of tobacco was completely settled.14

In other words, the Tobacco Strategy worked.

The term “propaganda” originated in the early seventeenth century, when Pope Gregory XV established the Sacra Congregatio de Propaganda Fide—the Sacred Congregation for the Propagation of the Faith. The Congregation was charged with spreading Roman Catholicism through missionary work across the world and, closer to home, in heavily Protestant regions of Europe. (Today the same body is called the Congregation for the Evangelization of Peoples.) Politics and religion were deeply intertwined in seventeenth-century Europe, with major alliances and even empires structured around theological divides between Catholics and Protestants.15 The Congregation’s activities within Europe were more than religious evangelization: they amounted to political subversion, promoting the interests of France, Spain, and the southern states of the Holy Roman Empire in the Protestant strongholds of northern Europe and Great Britain.

It was this political aspect of the Catholic Church’s activities that led to the current meaning of propaganda as the systematic, often biased, spread of information for political ends. This was the sense in which Marx and Engels used the term in the Communist Manifesto, when they said that the founders of socialism and communism sought to create class consciousness “by our propaganda.”16 Joseph Goebbels’s title as “propaganda minister” under the German Third Reich also invokes this meaning. The Cold War battles between the United States and the Soviet Union over “hearts and minds” are aptly described as propaganda wars.

Many of the methods of modern propaganda were developed by the United States during World War I. From April 1917 until August 1919, the Committee on Public Information (CPI) conducted a systematic campaign to sell US participation in the war to the American public.17 The CPI produced films, posters, and printed publications, and it had offices in ten countries, including the United States. In some cases it fed newspapers outright lies about American activities in Europe—and occasionally got caught, leading the New York Times to run an editorial calling it the Committee on Public Misinformation. One member of the group later described its activities as “psychological warfare.”18

After the war, the weapons of psychological warfare were turned on US and Western European consumers. In a series of books in the 1920s, including Crystallizing Public Opinion (1923) and Propaganda (1928), CPI veteran Edward Bernays synthesized results from the social sciences and psychology to develop a general theory of mass manipulation of public opinion—for political purposes, but also for commerce.

Bernays’s postwar work scarcely distinguished between the political and commercial. One of his most famous campaigns was to rebrand cigarettes as “torches of freedom,” a symbol of women’s liberation, with the goal of breaking down social taboos against women’s smoking and thus doubling the market for tobacco products. In 1929, under contract with the American Tobacco Company, makers of Lucky Strike cigarettes, he paid women to smoke while marching in the Easter Sunday Parade in New York.

The idea that industry—including tobacco, sugar, corn, healthcare, energy, pest control, firearms, and many others—is engaged in propaganda, far beyond advertising and including influence and information campaigns addressed at manipulating scientific research, legislation, political discourse, and public understanding, can be startling and deeply troubling. Yet the consequences of these activities are all around us.

Did (or do) you believe that fat is unhealthy—and the main contributor to obesity and heart disease? The sugar industry invested heavily in supporting and promoting research on the health risks of fat, to deflect attention from the greater risks of sugar.19 Who is behind the long-term resistance to legalizing marijuana for recreational use? Many interests are involved, but alcohol trade groups have taken a particularly strong and effective stand.20 There are many examples of such industry-sponsored beliefs, from the notion that opioids prescribed for acute pain are not addictive to the idea that gun owners are safer than people who do not own guns.21

Bernays himself took a rosy view of the role that propaganda, understood to include commercial and industrial information campaigns, could play in a democratic society. In his eyes it was a tool for beneficial social change: a way of promoting a more free, equal, and just society. He particularly focused on how propaganda could aid the causes of racial and gender equality and education reform. Its usefulness for lining the pockets of Bernays and his clients was simply another point in its favor. After all, he was writing in the United States at the peak of the Roaring Twenties; he had no reason to shy away from capitalism. Propaganda was the key to a successful democracy.

Today it is hard not to read Bernays’s books through the lens of their own recommendations, as the work of a public relations spokesman for the public relations industry. And despite his reassurances, a darker side lurks in his pages. He writes, for instance, that “those who manipulate this unseen mechanism of society constitute an invisible government which is the true ruling power of our country. We are governed, our minds molded, our tastes formed, our ideas suggested, largely by men we have never heard of.”22 This might sound like the ramblings of a conspiracy theorist, but in fact it is far more nefarious: it is an invitation to the conspiracy, drafted by one of its founding fathers, and targeted to would-be titans of industry who would like to have a seat on his shadow council of thought leaders.

Perhaps Bernays overstated his case, but his ideas have deeply troubling consequences. If he is right, then the very idea of a democratic society is a chimera: the will of the people is something to be shaped by hidden powers, making representative government meaningless. Our only hope is to identify the tools by which our beliefs, opinions, and preferences are shaped, and look for ways to re-exert control—but the success of the Tobacco Strategy shows just how difficult this will be.

The Tobacco Strategy was wildly successful in slowing regulation and obscuring the health risks of smoking. Despite strong evidence of the link between smoking and cancer by the early 1950s, the Surgeon General did not issue a statement linking smoking to health risks until 1964—a decade after the TIRC was formed.23 The following year, Congress passed a bill requiring a health warning on tobacco products. But it was not until 1970 that cigarette advertising was curtailed at the federal level, and not until 1992 that the sale of tobacco products to minors was prohibited.24

All of this shows that the industry had clear goals, that it adopted a well-thought-out strategy to accomplish them, and that the goals were ultimately reached. What is much harder to establish by looking at the history alone is the degree to which the Tobacco Strategy contributed to those goals. Did industry propaganda make a difference? If so, which aspects of its strategy were most effective?

There were many reasons why individuals and policy makers might prefer to delay regulation and disregard the evidence about links between cancer and smoking. Was anyone ever as cool as Humphrey Bogart with a cigarette hanging from his lips? Or Audrey Hepburn with an arm-length cigarette holder? Smoking was culturally ubiquitous during the 1950s and 1960s, and it was difficult to imagine changing this aspect of American society by government order. Worse, many would-be regulators of the tobacco industry were smokers themselves. Clear conflicts of interest arise, independently of any industry intervention, when the users of an addictive product attempt to regulate the industry that produces it. And in addition to funding research, the tobacco industry poured millions of dollars into funding political campaigns and lobbying efforts.

Subtle sociological factors can also influence smoking habits in ways that are largely independent of the Tobacco Strategy. In a remarkable 2008 study, Harvard public health expert Nicholas Christakis and UC San Diego political scientist James Fowler looked at a social network of several thousand subjects to see how social ties influenced their smoking behavior.25 They found that smokers often cluster socially: those with smoking friends were more likely to be smokers and vice versa. They also found that individuals who stopped smoking had a big effect on their friends, on friends of friends, and even on friends of friends of friends. Clusters of individuals tended to stop smoking together. Of course, the converse is that groups who keep smoking tend to do so together as well.26 When the cancer risks of cigarettes were first becoming clear, roughly 45 percent of US adults were smokers. Who wants to be the first to stop?27

To explore in more detail how propagandists can manipulate public belief, we turn once again to the models we looked at in the last chapter. We can adapt them to ask: Should we expect the Tobacco Strategy and similar propaganda efforts to make a significant difference in public opinion? Which features of the Tobacco Strategy are most effective, and why do they work? How can propaganda combat an overwhelming body of scientific work? Working with Justin Bruner, a philosopher and political scientist at the Australian National University, and building on his work with philosopher of science Bennett Holman (which we discuss later), we have developed a model that addresses these questions.28

We begin with the basic Bala-Goyal model we described in the last chapter. This, remember, involves a group of scientists who communicate with those in their social network. They are all trying to figure out whether one of two actions—A or B—will yield better results on average. And while they know exactly how often A leads to good outcomes, they are unsure about whether B is better or worse than A. The scientists who think A is better perform that action, while those who lean toward B test it out. They use Bayes’ rule to update their beliefs on the basis of the experiments they and their colleagues perform. As we saw, the most common outcome in this basic model is that the scientific community converges on the better theory, but the desire to conform and the mistrust of those with different beliefs can disrupt this optimistic picture.
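The updating step can be made concrete with a minimal sketch. It assumes, as a simplification not spelled out above, that action B succeeds with probability either 0.5 + epsilon or 0.5 - epsilon, and that a scientist's credence is the probability they assign to the first possibility. The function name `update_credence` and the value epsilon = 0.1 are illustrative choices, not part of the model as described.

```python
def update_credence(credence, successes, trials, epsilon=0.1):
    """Apply Bayes' rule to the credence that action B is better than A.

    Assumption (illustrative): B succeeds with probability 0.5 + epsilon
    if it is better than A, and 0.5 - epsilon if it is worse.
    """
    p_if_better = 0.5 + epsilon
    p_if_worse = 0.5 - epsilon
    failures = trials - successes

    # Likelihood of the observed data under each hypothesis. The binomial
    # coefficient is the same in both and cancels, so it is omitted.
    like_better = p_if_better**successes * (1 - p_if_better)**failures
    like_worse = p_if_worse**successes * (1 - p_if_worse)**failures

    numerator = credence * like_better
    return numerator / (numerator + (1 - credence) * like_worse)


if __name__ == "__main__":
    # A scientist who starts undecided and sees 7 successes in 10 tests of B.
    print(update_credence(0.5, successes=7, trials=10))
```

Under these assumptions, a result with more successes than failures raises the credence that B is better, a result with more failures lowers it, and an even split leaves it unchanged.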

In the last chapter, we used this model and variations on it to understand social effects in scientific communities. But we can vary the model to examine how ideas and evidence can flow from a scientific community to a community of nonscientists, such as policy makers or the public, and how tobacco strategists can interfere with this process.

We do this by adding a new group of agents to the model, whom we call policy makers. Like scientists, policy makers have beliefs, and they use Bayes’ rule to update them in light of the evidence they see. But unlike scientists, they do not produce evidence themselves and so must depend on the scientific network to learn about the world. Some policy makers might listen to just one scientist, others to all of them or to some number in between.

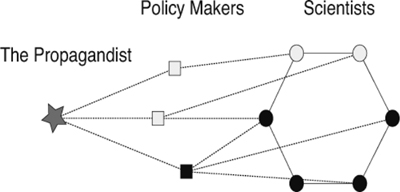

Figure 8 shows this addition to the model. On the right we have our community of scientists as before, this time arranged in a cycle. As before, some of them favor theory A (the light nodes) and others B (the dark ones). On the left, we add policy makers (squares instead of circles), each with their own belief about whether theory B is better than A. The dotted lines indicate that while they have connections with scientists, these are one-sided. This figure shows one policy maker who listens to a single scientist, one who listens to two, and one who listens to three.

With just this modification to the framework, we find that policy makers’ beliefs generally track scientific consensus. Where scientists come to favor action B, policy makers do as well. This occurs even if the policy makers are initially skeptical, in the sense that they start with credences that favor A (that is, credences below .5 that B is better). When policy makers are connected to only a small number of scientists, they may approach the true belief more slowly, but they always get there eventually (as long as the scientists do).

Figure 8. An epistemic network with policy makers and scientists. Although both groups have beliefs about whether action A (light nodes) or action B (dark nodes) is better, only scientists actually test these actions. Policy makers observe results from some set of scientists and update their beliefs. Dotted lines between scientists and policy makers reflect this one-sided relationship.

Now consider what happens when we add a propagandist to the mix. The propagandist is another agent who, like the scientists, can share results with the policy makers. But unlike the scientists, this agent is not interested in identifying the better of two actions. This agent aims only to persuade the policy makers that action A is preferable—even though, in fact, action B is. Figure 9 shows the model with this agent. Propagandists do not update their beliefs, and they communicate with every policy maker.

The Tobacco Strategy was many faceted, but there are a handful of specific ways in which the TIRC and similar groups used science to fight science.29 The first is a tactic that we call “biased production.” This strategy, which may seem obvious, involves directly funding, and in some cases performing, industry-sponsored research. If industrial forces control the production of research, they can select what gets published and what gets discarded or ignored. The result is a stream of results that are biased in the industry’s favor.

Figure 9. An epistemic network with scientists, policy makers, and a propagandist. The propagandist does not hold beliefs of their own. Instead, their goal is to communicate only misleading results to all the policy makers. Light nodes represent individuals who prefer action A, and dark nodes, B.

The tobacco companies invested heavily in such research following the establishment of the TIRC. By 1986, according to their own estimates, they had spent more than $130 million on sponsored research, resulting in twenty-six hundred published articles.30 Awards were granted to researchers and projects that the tobacco industry expected to produce results beneficial to them. This research was then shared, along with selected independent research, in industry newsletters and pamphlets; in press releases sent to journalists, politicians, and medical professionals; and even in testimony to Congress.

Funding research benefited the industry in several ways. It provided concrete (if misleading) support for tobacco executives’ claims that they cared about smokers’ health. It gave the industry access to doctors who could then appear in legal proceedings or as industry-friendly experts for journalists to consult. And it produced data that could be used to fight regulatory efforts.

It is hard to know what sorts of pressure the tobacco industry placed on the researchers it funded. Certainly the promise of future funding was an incentive for those researchers to generate work that would please the TIRC. But there is strong evidence that the tobacco industry itself produced research showing a strong link between smoking and lung cancer that it did not publish. Indeed, as we noted, the industry’s own scientists appear to have been convinced that smoking causes cancer as early as the 1950s—and yet the results of those studies remained hidden for decades, until they were revealed through legal action in the 1990s. In other words, industry scientists were not only producing studies showing that smoking was safe, but when their studies linked tobacco and cancer, they buried them.

Let us add this sort of biased production to our model. In each round, we suppose the propagandist always performs action B but then shares only those outcomes that happen to suggest action A is better. Suppose that in each study, the propagandist takes action B ten times. Whenever this action is successful four times or fewer, they share the results. Otherwise not. This makes it look like action B is, on average, worse than A (which tends to work five times out of ten). The policy makers then update their beliefs on this evidence using Bayes’ rule. (The policy makers also update their beliefs on any results shared by the scientists they are connected to, just as before.)31
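A rough simulation can illustrate this setup for a single policy maker. It is a sketch under several assumptions that go beyond the description above: B truly succeeds with probability 0.5 + epsilon, with epsilon = 0.1; the connected scientists always test B and share every result (in the full model only scientists who favor B test it); and beliefs are tracked as log-odds, which for two symmetric hypotheses is equivalent to applying Bayes' rule to the credence directly. All function and parameter names are hypothetical.

```python
import math
import random


def sigmoid(log_odds):
    """Convert log-odds that B is better into a credence between 0 and 1."""
    if log_odds >= 0:
        return 1.0 / (1.0 + math.exp(-log_odds))
    e = math.exp(log_odds)
    return e / (1.0 + e)


def run_study(p_success, trials, rng):
    """Number of successes in `trials` independent tests of action B."""
    return sum(rng.random() < p_success for _ in range(trials))


def simulate_policy_maker(rounds=200, scientists=1, propagandist_studies=5,
                          trials=10, epsilon=0.1, seed=0):
    """Final credence that B is better, for one policy maker."""
    rng = random.Random(seed)
    p_b = 0.5 + epsilon  # assumption: B really is the better action
    # Each success shifts the log-odds up by `step`, each failure down by it.
    step = math.log((0.5 + epsilon) / (0.5 - epsilon))
    log_odds = 0.0  # start undecided (credence 0.5)

    for _ in range(rounds):
        # Scientists share every result, whatever it shows.
        for _ in range(scientists):
            k = run_study(p_b, trials, rng)
            log_odds += (2 * k - trials) * step
        # The propagandist runs real studies of B but shares only those in
        # which B succeeded less than half the time (4 or fewer out of 10).
        for _ in range(propagandist_studies):
            k = run_study(p_b, trials, rng)
            if 2 * k < trials:
                log_odds += (2 * k - trials) * step
    return sigmoid(log_odds)


if __name__ == "__main__":
    print("no propagandist:  ", simulate_policy_maker(propagandist_studies=0))
    print("with propagandist:", simulate_policy_maker(propagandist_studies=10))
```

In this sketch every study the propagandist shares pushes the policy maker away from B, so a sufficiently well-funded propagandist can outweigh the evidence from a small number of scientists.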

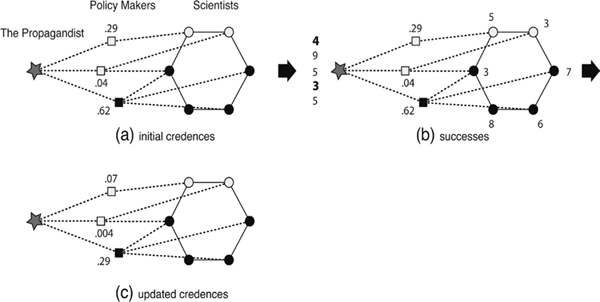

Figure 10 gives an example of what this might look like. In (a) we see that the policy makers have different credences (scientist credences are omitted from the figure for simplicity’s sake). In (b) both the propagandist and the scientists test their beliefs. Scientists “flip the coin” ten times each. The propagandist, in this example, has enough funding to run five studies, each with ten test subjects, and so sees five results. Then, the scientists share their results, and the propagandist shares just the two bolded results, that is, the ones in which B was not very successful. In (c) the policy makers have updated their beliefs.

Figure 10. An example of policy-maker belief updating in a model in which a propagandist engages in biased production. In (a) we see the initial credences of the policy makers. In (b) the scientists test their beliefs, and the propagandist tests theory B. The propagandist chooses to share only those trials (bolded) that spuriously support A as the better theory. In (c) we see how policy makers update their credences in light of this evidence. Light nodes represent individuals who prefer action A, and dark nodes, B.

We find that this strategy can drastically influence policy makers’ beliefs. Often, in fact, as the community of scientists reaches consensus on the correct action, the policy makers approach certainty that the wrong action is better. Their credence goes in precisely the wrong direction. Worse, this behavior is often stable, in the sense that no matter how much evidence the scientific community produces, as long as the propagandist remains active, the policy makers will never be convinced of the truth.

Notice that in this model, the propagandist does not fabricate any data. They are performing real science, at least in the sense that they actually perform the experiments they report, and they do so using the same standards and methods as the scientists. They just publish the results selectively.

Even if it is not explicit fraud, this sort of selective publication certainly seems fishy, as it should, since it is designed to mislead. But it is important to emphasize that selective publication is common in science even without industrial interference. Experiments that do not yield exciting results often go unpublished, or are relegated to minor journals where they are rarely read.32 Results that are ambiguous or unclear get left out of papers altogether. The upshot is that what gets published is never a perfect reflection of the experiments that were done. (This practice is sometimes referred to as “publication bias” or the “file drawer effect,” and it causes its own problems for scientific understanding.)33 This observation is not meant to excuse the motivated cherry-picking at work in the biased production strategy. Rather, it is to emphasize that, for better or worse, biased production is continuous with ordinary scientific practice.34

One way to think about what is happening in these models is that there is a tug-of-war between scientists and the propagandist for the hearts and minds of policy makers. Over time, the scientists’ evidence will tend to recommend the true belief: more studies will support action B because it yields better results on average. So the results the scientists share will, on average, lead the policy makers to the true belief.

On the other hand, since the propagandist shares only those results that support the worse theory, their influence will always push policy makers’ beliefs the other way. This effect always slows the policy makers’ march toward truth, and if the propagandist manages to pull hard enough, they can reverse the direction in which the policy makers’ credences move. Which side can pull harder depends on the details of the scientific community and the problem that scientists tackle.

For instance, it is perhaps unsurprising that industrial propagandists are less effective when policy makers are otherwise well-informed. The more scientists the policy makers are connected to, the greater the chance that they get enough evidence to lead them to the true theory. If we imagine a community of doctors who scour the medical literature for the dangers of tobacco smoke, we might expect them to be relatively unmoved by the TIRC’s work. On the other hand, when policy makers have few independent connections to the scientific community, they are highly vulnerable to outside influence.

Likewise, the propagandist does better if they have more funding. More funding means that they can run more experiments, which are likely to generate more spurious results that the propagandist can then report.

Perhaps less obvious is that given some fixed amount of funding, how the propagandist chooses to allocate the funds to individual studies can affect their success. Suppose the propagandist has enough money to gather sixty data points—say, to test sixty smokers for cancer. They might allocate these resources to running one study with sixty subjects. Or they might run six studies with ten subjects each, or thirty studies, each with only two data points. Surprisingly, the propagandist will be most effective if they run and publicize the most studies with as few data points as possible.

Why would this be the case? Imagine flipping a coin that comes up heads 70 percent of the time, and you want to figure out whether it is weighted toward heads or toward tails. (This, of course, is analogous to the problem faced by scientists in our models.) If you flip this coin sixty times, the chances are very high that there will be more heads overall. Your study is quite likely to point you in the right direction. But if you flip the coin just once, there is a 30 percent chance that your study will mislead you into thinking the coin is weighted toward tails. In other words, the more data points you gather, the higher the chances that they will reflect the true effect.
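The coin example can be checked with an exact binomial calculation. The sketch below counts a study as misleading when it shows strictly more tails than heads; that threshold and the function name `prob_misleading` are assumptions made for illustration.

```python
from math import comb


def prob_misleading(n_flips, p_heads=0.7):
    """Probability that n_flips of the coin show strictly more tails than heads."""
    total = 0.0
    for heads in range(n_flips + 1):
        tails = n_flips - heads
        if tails > heads:
            total += comb(n_flips, heads) * p_heads**heads * (1 - p_heads)**tails
    return total


if __name__ == "__main__":
    for n in (1, 10, 60):
        print(n, prob_misleading(n))
```

For a single flip this reproduces the 30 percent chance mentioned above, and the probability shrinks as the number of flips grows.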

Figure 11. Breaking one large study into many smaller ones can provide fodder for propagandists. On the left we see a trial with sixty data points, which reflects the underlying superiority of action B (dark). On the right, we see the same data points separated into six trials. Three of these spuriously support action A (light). A propagandist can share only these studies and mislead policy makers, which would not be possible with the larger study.

Figure 11 shows an example of this. It represents possible outcomes for one study with sixty subjects and for six studies with ten subjects each. In both cases, we assume that the samples exactly represent the real distribution of results for action B, meaning that the action worked 70 percent of the time. In other words, the results are the same, but they are broken up differently. While the sixty-subject study clearly points toward the efficacy of B, three of the smaller studies point toward A. The propagandist can share just these three and make it look as if the total data collected involved nineteen failures of B and only eleven successes.

Likewise, in an extreme case the propagandist could use their money to run sixty studies, each with only one data point. They would then have the option to report all and only the studies in which action B failed, without indicating how many times they flipped the coin and got the other result. An observer applying Bayes’ rule with just this biased sample of the data would come away thinking that action A is much better, even though it is actually worse. In other words, the fewer data points each study contains, the better the chances that its result is spurious, and so the more misleading results the propagandist has to share.
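The effect of splitting a fixed budget can be estimated in the same way. The sketch below assumes that action B works 70 percent of the time, that the propagandist shares exactly those studies with strictly more failures than successes, and that the strength of the shared evidence is measured by the expected surplus of failures over successes across the shared studies. The function name and this measure are illustrative choices rather than the model's own definitions.

```python
from math import comb


def expected_shared_surplus(total_points, study_size, p_success=0.7):
    """Expected (failures - successes) summed over the studies that get shared.

    A study is shared only if it shows strictly more failures than successes.
    """
    n_studies = total_points // study_size
    per_study = 0.0
    for successes in range(study_size + 1):
        failures = study_size - successes
        if failures > successes:
            prob = (comb(study_size, successes)
                    * p_success**successes
                    * (1 - p_success)**failures)
            per_study += prob * (failures - successes)
    return n_studies * per_study


if __name__ == "__main__":
    # Sixty data points split into studies of different sizes.
    for size in (60, 10, 2, 1):
        print(size, expected_shared_surplus(60, size))
```

With sixty single-point studies, the expected surplus is simply the expected number of failures, 60 × 0.3 = 18, all of which can be reported. Larger studies leave the propagandist with far less to share.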

Biased production is in many ways a crude tool—less crude, perhaps, than outright fraud, but not by much. It is also risky. A strategy like this, if exposed, makes it look as if the propagandist has something to hide. (And indeed, they do—all the studies tucked away in their file drawers.) But it turns out that the propagandist can use more subtle tools that are both cheaper and, all things considered, more effective. One is what we call “selective sharing.” Selective sharing involves searching for and promoting research that is conducted by independent scientists, with no direct intervention by the propagandist, that happens to support the propagandist’s interests.

As Oreskes and Conway show, selective sharing was a crucial component of the Tobacco Strategy. During the 1950s, when a growing number of studies had begun to link smoking with lung cancer, a group called the Tobacco Institute published a regular newsletter called Tobacco and Health that presented research suggesting there was no link.35 This newsletter often reported independent results, but in a misleadingly selective way—with the express purpose of undermining other widely discussed results in the scientific literature.

For instance, in response to the Sloan Kettering study showing that cigarette tar produced skin cancer in mice, Tobacco and Health pointed to later studies by the same group that yielded lower cancer incidences, implying that the first study was flawed but not giving a complete account of the available data. The newsletter ran headlines such as “Five Tobacco-Animal Studies Report No Cancers Induced” without mentioning how many studies did report induced cancers. This strategy makes use of a fundamental public misunderstanding of how science works. Many people think of individual scientific studies as providing proof, or confirmation, of a hypothesis. But the probabilistic nature of evidence means that real science is far from this ideal. Any one study can go wrong, a fact Big Tobacco used to its advantage.

Tobacco and Health also reported on links between lung cancer and other substances, such as asbestos, automobile exhaust, coal smoke, and even early marriage, implying that the recent decades’ rise in lung cancer rates could have been caused by any or all of these other factors.

A closely related strategy involved extracting and publishing quotations from research papers and books that, on their face, seemed to express uncertainty or caution about the results. Scientists are sometimes modest about the significance of their studies, even when their research demonstrates strong links. For instance, Richard Doll, a British epidemiologist who conducted one of the earliest studies establishing that smoking causes cancer, was quoted in Tobacco and Health as writing, “Experiments in which animals were exposed to the tar or smoke from tobacco have uniformly failed to produce any pulmonary tumors comparable to the bronchial carcinoma of man.”36 The newsletter did not mention that these experiments had shown that animals exposed to tar and smoke would get carcinomas elsewhere. Doll was in fact drawing precise distinctions, but industry painted him as expressing uncertainty.37

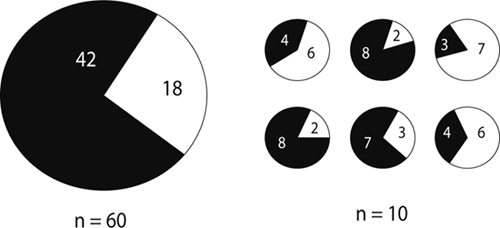

In our model, the propagandist implements selective sharing by searching through the results produced by the scientific community and then passing along all and only those that happen to support their agenda. In many ways this ends up looking like biased production, in that the propagandist is sharing only favorable results, but with a big difference. The only results that get shared in this model are produced by independent researchers. The propagandist does not do science. They just take advantage of the fact that the data produced by scientists have a statistical distribution, and there will generally be some results suggesting that the wrong action is better.
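The effect of this filtering on an observer who applies Bayes' rule can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration rather than a detail of the model: the observer weighs only two hypotheses (B succeeds 70 percent of the time, or 30 percent), action A is taken to succeed half the time, each study has ten trials, and the list of study results is hypothetical.

```python
def update(credence, successes, trials, p_good=0.7, p_bad=0.3):
    """Bayes' rule: credence that B succeeds at rate p_good rather than p_bad."""
    failures = trials - successes
    like_good = p_good**successes * (1 - p_good)**failures
    like_bad = p_bad**successes * (1 - p_bad)**failures
    return credence * like_good / (credence * like_good + (1 - credence) * like_bad)


def credence_after(results, prior=0.5, trials=10):
    """Update on each study in turn, starting from the prior."""
    credence = prior
    for successes in results:
        credence = update(credence, successes, trials)
    return credence


# Hypothetical successes out of 10 trials of B, one entry per independent study.
studies = [8, 7, 4, 6, 9, 4, 7, 8]

# The propagandist forwards only the studies in which B did worse than
# the assumed 50 percent success rate of A.
forwarded = [s for s in studies if s < 5]

full_record = credence_after(studies)
propaganda_only = credence_after(forwarded)

print(f"credence in B after all studies:      {full_record:.4f}")
print(f"credence in B after forwarded studies: {propaganda_only:.4f}")
```

An observer who sees the whole record ends up nearly certain that B is better, while one who sees only the forwarded studies ends up strongly favoring A, even though every study in both lists is genuine.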

Figure 12 shows an example of what happens to policy-maker beliefs under selective sharing by the propagandist.38 (We reduce the number of scientists and policy makers in this figure to keep things legible.) Notice here that the propagandist is now observing the beliefs of all the scientists as well as communicating with the policy makers. In (a) we see their initial credences. In (b) the scientists test only their preferred actions, with three of them trying B. Two of these scientists happen to observe only four successes each, results that make B look worse than A. The propagandist shares only these two results. In (c) we can see that, as a result, the policy makers now have less accurate beliefs.

In this strategy, the propagandist does absolutely nothing to interfere with the scientific process. They do not buy off scientists or fund their own research. They simply take real studies, produced independently, that by chance suggest the wrong answer. And they forward these and only these studies to policy makers.

It turns out that selective sharing can be extremely effective. As with biased production, we find that in a large range of cases, a propagandist using only selective sharing can lead policy makers to converge to the false belief even as the scientific community converges to the true one. This may occur even though the policy makers are also updating their beliefs in light of evidence shared by the scientists themselves.

The basic mechanism behind selective sharing is similar to that behind biased production: there is a tug-of-war. Results shared by scientists tend to pull in the direction of the true belief, and results shared by the propagandist pull in the other direction. The difference is that how hard the propagandist pulls no longer depends on how much money they can devote to running their own studies, but only on the rate at which spurious results appear in the scientific community.

Figure 12. An example of policy-maker belief updating under selective sharing by a propagandist. In (a) we see the initial credences of the policy makers. In (b) scientists test their preferred actions. (Light nodes represent individuals taking action A, dark ones, B.) Some of these tests happen to spuriously support theory A, and the propagandist chooses only these (bolded) to share with policy makers. In (c) we see that policy makers have updated their beliefs on the basis of both the evidence directly from scientists and the spurious results from the propagandist.

For this reason, the effectiveness of selective sharing depends on the details of the problem in question. If scientists are gathering data on something where the evidence is equivocal—say, a disease in which patients’ symptoms vary widely—there will tend to be more results suggesting that the wrong action is better. And the more misleading studies are available, the more material the propagandist has to publicize. If every person who smoked had gotten lung cancer, the Tobacco Strategy would have gone nowhere. But because the connections between lung cancer and smoking are murkier, tobacco companies had something to work with. (Something similar is true in the biased production case: generally, to produce the same number of spurious results, the propagandist needs to perform more studies—that is, spend more money—as evidence gets less equivocal.)

The practices of the scientific community can also influence how effective selective sharing will be—even if industry in no way interferes with the scientific process. The propagandist does especially well when scientists produce many studies, each with relatively little data. How much data is needed to publish a paper varies dramatically from field to field. Some fields, such as particle physics, demand extremely high thresholds of data quantity and quality for publication of experimental results, while other fields, such as neuroscience and psychology, have been criticized for having lower standards.39

Why would sparse data gathering help the propagandist? The answer is closely connected to why studies with fewer participants are better for the propagandist in the biased production strategy. If every scientist “flips their coin” one hundred times for each study, the propagandist will have very few studies to publicize, compared with a situation in which each scientist flips their coin, say, five times. The lower the scientific community’s standards, the easier it is for the propagandist in the tug-of-war for public opinion.40

Of course, the more data you demand, the more expensive each study becomes, and this sometimes makes doing studies with more data prohibitive. But consider the difference between a case in which one scientist flips the coin one hundred times, and the propagandist can choose whether to share the result or not; and a case in which twenty scientists flip the coin five times each, and the propagandist can pick and choose among the studies. In the latter case, the propagandist’s odds of finding something to share are much higher—even though the entire group of scientists in both cases performed the same total number of flips. Mathematically, this is the same as what we saw in figure 11, but for an independent community.
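The comparison can be worked through with the binomial distribution. This is a rough sketch under assumptions not fixed by the text: B is taken to succeed 70 percent of the time, A is taken to succeed half the time, and a study counts as "spurious" when B's observed success rate falls below one half. The function names are hypothetical.

```python
from math import comb


def prob_spurious(n_flips, p_success=0.7, baseline=0.5):
    """Chance that a study of n_flips trials of B shows a success rate below baseline."""
    return sum(
        comb(n_flips, k) * p_success**k * (1 - p_success) ** (n_flips - k)
        for k in range(n_flips + 1)
        if k / n_flips < baseline
    )


def prob_at_least_one(n_studies, n_flips, p_success=0.7):
    """Chance that at least one of n_studies independent studies is spurious."""
    q = prob_spurious(n_flips, p_success)
    return 1 - (1 - q) ** n_studies


one_big = prob_at_least_one(1, 100)   # one scientist, one hundred flips
many_small = prob_at_least_one(20, 5)  # twenty scientists, five flips each

print(f"one study of 100 flips:     {one_big:.6f}")
print(f"twenty studies of 5 flips:  {many_small:.6f}")
```

Under these assumptions the single large study almost never hands the propagandist anything to share, while the twenty small studies almost always contain at least one usable result, although both designs use one hundred flips in total.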

This observation leads to a surprising lesson for how we should fund and report science. You might think it is generally better to have more scientists work on a problem, as this will generate more ideas and greater independence. But under real-world circumstances, where a funding agency has a fixed pot of money to devote to a scientific field, funding more scientists is not always best. Our models suggest that it is better to give large pots of money to a few groups, which can use the money to run studies with more data, than to give small pots of money to many people who can each gather only a few data points. The latter distribution is much more likely to generate spurious results for the propagandist.

Of course, this reasoning can go too far. Funding just one scientist raises the risk of choosing someone with an ultimately incorrect hypothesis. Furthermore, there are many benefits to a more democratically structured scientific community, including the presence of a diversity of opinions, beliefs, and methodologies. The point here is that simply adding more scientists to a problem can have downsides when a propagandist is at work. Perhaps the best option is to fund many scientists, but to publish their work only in aggregation, along with an assessment of the total body of evidence.

Most disciplines recognize the importance of studies in which more data are gathered. (All else being equal, studies with more data are said to have higher statistical power, which is widely recognized as essential to rigorous science.)41 Despite this, low-powered studies seem to be a continuing problem.42 The prevalence of such studies is related to the so-called replication crisis facing the behavioral and medical sciences. In a widely reported 2015 study, a group of psychologists were able to reproduce only thirty-six of one hundred published results from their field.43 In a 2016 poll run by the journal Nature, 70 percent of scientists across disciplines said they had failed to reproduce another scientist’s result (and 50 percent said they had failed to reproduce a result of their own).44 Since replicability is supposed to be a hallmark of science, these failures to replicate are alarming.

Part of the problem is that papers showing a novel effect are easier to publish than those showing no effect. Thus there are strong personal incentives to adopt standards that sometimes lead to spurious, but surprising, results. Worse, since, as discussed, studies that show no effect often never get published at all, it can be difficult to recognize which published results are spurious. Another part of the problem is that many journals accept underpowered studies in which spurious results are more likely to arise. This is why an interdisciplinary research team has recently advocated tighter minimal standards of publishability.45

Given this background, the possibility that studies with less data are fodder for the Tobacco Strategy is worrying. It goes without saying that we want our scientific communities to follow the practices most likely to generate accurate conclusions. That demanding experimental studies with high statistical power makes life difficult for propagandists only adds to the argument for more rigorous standards.

The success of selective sharing is striking because it is such a minimal intervention into the scientific process that arguably it is not an intervention at all. In fact, in some ways it is even more effective than biased production, for two reasons. One is that it is much cheaper: the industry does not need to fund science, just publicize it. It is also less risky, because propagandists who share selectively do not hide or suppress any results. Furthermore, the rate at which the community of scientists will produce spurious results will tend to scale with the size of the community, which means that the more scientists work on a problem, the more spurious results they will produce, even if they generally produce more evidence for the true belief. Biased production, on the other hand, quickly becomes prohibitively expensive as more scientists join the fray.

Given the advantages to selective sharing, why does industry bother funding researchers at all? It turns out that funding science can have more subtle effects that shift the balance within the scientific community, and ultimately make selective sharing more effective as well.

In the summer of 2003, the American Medical Association (AMA) was scheduled to vote on a resolution, drafted by Jane Hightower, that called for national action on methylmercury levels in fish. The resolution would have demanded large-scale tests of mercury levels and a public relations campaign to communicate the results to the public. But the vote never took place.

As Hightower reports in her book Diagnosis Mercury, the day the resolution was set to be heard, the California delegate responsible for presenting it received word of a “new directive” stating that mercury in fish was not harmful to people. This new directive never actually materialized—but somehow the mere rumor of new evidence was enough to derail the hearing. Rather than vote on the resolution, the committee responsible passed it along to the AMA’s Council for Scientific Affairs for further investigation, meaning at least a year’s wait before the resolution could be brought to the floor again.46

A year later, at the AMA’s 2004 meeting, the council reported back. After extensive study, it concurred with the original resolution, recommending that fish be tested for methylmercury and the results publicly reported, and then went further—resolving that the FDA require the results of this testing to be posted wherever fish is sold.

But how did a rumor derail the presentation of the resolution in the first place? Where had the rumor come from?

This mystery is apparently unsolved, but in trying to understand what had happened, Hightower began to dig deeper into the handful of scientific results purporting to show that methylmercury in fish was not harmful after all. One research group in particular stood out. Based at the University of Rochester, this group had run a longitudinal study on a population with high fish consumption in the African nation of Seychelles. The researchers were investigating the possible effects of methylmercury on child development by comparing maternal mercury levels with child development markers.47 They had published several papers showing no effect of methylmercury on the children involved—even as another large longitudinal study in the Faroe Islands reported the opposite result.48

Not long after the AMA meeting at which the resolution was originally scheduled to be discussed, a member of the Rochester group named Philip Davidson gave a presentation on the group’s research. A friend of Hightower’s faxed her a copy of the presentation, noting that the acknowledgments thanked the Electric Power Research Institute (EPRI)—a lobbying entity for the power industry, including the coal power industry responsible for the methylmercury in fish.49

Hightower discovered that the EPRI had given a $486,000 grant to a collaborative research project on methylmercury that included the Seychelles study. The same project had received $10,000 from the National Tuna Foundation and $5,000 from the National Fisheries Institute.50 And while Davidson had thanked the EPRI in his presentation, he and his collaborators had not mentioned this funding in several of their published papers on children and methylmercury.

Hightower writes that she turned this information over to the Natural Resources Defense Council (NRDC) in Washington, D.C. After further investigation, the NRDC wrote to several journals that had published the Seychelles research, noting the authors’ failure to reveal potential conflicts of interest. Gary Myers, another member of the Rochester group, drafted a response arguing that although the EPRI had funded the group, the papers in question were supported by other sources. The EPRI and fisheries interests, he wrote, “played no role in this study nor did they have any influence upon data collection, interpretation, analysis or writing of the manuscript.”51

One might be skeptical that a significant grant from the coal industry would not influence research into whether the by-products of coal power plants affect child development. But we have no reason to think that the Rochester group did not act in good faith. So let us assume that the researchers were, at every stage, able to perform their work exactly as they would have without any industrial influence. Might industry funding have still had an effect? Philosophers of science Bennett Holman and Justin Bruner have recently argued that the answer is “yes”: the mere fact that certain scientists received industry funding can dramatically corrupt the scientific process.

Holman and Bruner contend that industry can influence science without biasing scientists themselves by engaging in what they call “industrial selection.” Imagine a community of scientists working on a single problem where they are trying to decide which of two actions is preferable. (They work in the same Bala-Goyal modeling framework we have already described; once again, assume that action A is worse than action B.) One might expect these scientists, at least initially, to hold different beliefs and hypotheses, and even to perform different sorts of tests on the world. Suppose further that some scientists use methods and hold background beliefs that are more likely to erroneously favor action A over action B.

To study this possibility, Holman and Bruner use a model in which each scientist “flips a coin” with a different level of bias. Most coins correctly favor action B, but some happen to favor A. The idea is that one could adopt methodologies in science that are not particularly well-tuned to the world, even if, on balance, most methods are. (Which methods are best is itself a subtle question in science.) In addition, Holman and Bruner assume that different practices mean that some scientists will be more productive than others. Over time, some scientists leave the network and are replaced—a regular occurrence when scientists retire or move on to other things. And it is more likely, in their models, that the replacement scientists will imitate the methods of the most productive scientists already in the network.

This sort of replacement dynamic is not a feature of the other models we have discussed. But the extra complication makes the models in some ways more realistic. The community of scientists in this model is a bit like a biological population undergoing natural selection: scientists who are more “fit” (in this case, producing more results) are also better at reproducing—that is, replicating themselves in the population by training successful students and influencing early-career researchers.52

Holman and Bruner also add a propagandist to the model. This time, however, the propagandist can do only one thing: dole out research money. The propagandist finds the scientist whose methods are most favorable for the theory they wish to promote and gives that scientist enough money to increase his or her productivity. This does two things. It floods the scientific community with results favorable to action A, changing the minds of many other scientists. And it also makes it more likely that new labs use the methods that are more likely to favor action A, which is better for industry interests. This is because researchers who are receiving lots of funding and producing lots of papers will tend to place more students in positions of influence. Over time, more and more scientists end up favoring action A over action B, even though action B is objectively superior.
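The selection effect can be illustrated with a deliberately simplified sketch. This is not Holman and Bruner's model: it assumes just two kinds of method, replaces the whole community each generation, and lets each kind's share of new labs equal its share of total output. The starting share of 10 percent and the productivity boost of 1.5 are hypothetical numbers.

```python
def share_favoring_a(generations, boost, start=0.1):
    """Share of labs using A-favoring methods after repeated replacement.

    Each generation, new labs copy existing methods in proportion to output.
    """
    share = start
    for _ in range(generations):
        output_a = share * boost      # funded, A-favoring labs produce `boost` times as much
        output_b = (1 - share) * 1.0  # all other labs produce at the baseline rate
        share = output_a / (output_a + output_b)
    return share


unfunded = share_favoring_a(20, boost=1.0)
funded = share_favoring_a(20, boost=1.5)

print(f"share favoring A without industry funding: {unfunded:.3f}")
print(f"share favoring A with industry funding:    {funded:.3f}")
```

Without the boost the A-favoring minority stays a minority; with it, the same minority comes to dominate the community within twenty generations, and no individual lab has changed how it works.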

In this way, industrial groups can exert pressure on the community of scientists to produce more results favorable to industry. And they do it simply by increasing the amount of work produced by well-intentioned scientists who happen to be wrong. This occurs even though the idealized scientists in the Holman-Bruner model are not people but just lines of computer code and so cannot possibly be biased or corrupted by industry lucre.

As Holman and Bruner point out, the normal processes of science then exacerbate this process. Once scientists have produced a set of impressive results, they are more likely to get funding from governmental sources such as the National Science Foundation. (This is an academic version of the “Matthew effect.”)53 If industry is putting a finger on the scales by funding researchers it likes, and those researchers are thus more likely to gain funding from unbiased sources, the result is yet more science favoring industry interests.

Notice also that if industrial propagandists are present and using selective sharing, they will disproportionately share the results of those scientists whose methods favor action A. In this sense, selective sharing and industrial selection can produce a powerful synergy. Industry artificially increases the productivity of researchers who happen to favor A, and then widely shares their results. They do this, again, without fraud or biased production.

The upshot is that when it comes to methylmercury, even though we have no reason to think the researchers from the University of Rochester were corrupted by coal industry funding, the EPRI likely still got its money’s worth. Uncorrupt scientists can still be unwitting participants in a process that subverts science for industry interests.

Holman and Bruner describe another case in which the consequences of industrial selection were even more dire. In 1979, Harvard researcher Bernard Lown proposed the “arrhythmic suppression hypothesis”: the idea that the way to prevent deaths from heart attack was to suppress the heart arrhythmias known to precede heart attacks.54 He pointed out, though, that it was by no means clear that arrhythmia suppression would have the desired effect. For this reason, he advocated studies of medical therapies that would use patient death rates as the tested variable, rather than simply the suppression of arrhythmia.

But not all medical researchers agreed with Lown’s cautious approach. Both the University of Pennsylvania’s Joel Morganroth and Stanford’s Robert Winkle instead used the suppression of arrhythmia as a trial endpoint to test the efficacy of drugs aimed at preventing heart attacks.55 This was a particularly convenient measure, since it would take only a short time to assess whether a new drug was suppressing arrhythmia, compared with the years necessary to test a drug’s efficacy in preventing heart attack deaths. These researchers and others received funding from pharmaceutical companies to study antiarrhythmic drugs, with much success. Their studies formed the basis for a new medical practice of prescribing antiarrhythmic drugs for people at risk of heart attack.

The problem was that far from preventing heart attack death, antiarrhythmics had the opposite effect. It has since been estimated that their usage may have caused hundreds of thousands of premature deaths.56 The Cardiac Arrhythmia Suppression Trial, conducted by the National Heart, Lung, and Blood Institute, began in 1986 and, unlike previous studies, used premature death as the endpoint. The testing of antiarrhythmics in this trial actually had to be discontinued ahead of schedule because of the significant increase in the death rate among participants assigned to take them.57

This case is a situation in which pharmaceutical companies were able to shape medical research to their own ends—the production and sale of antiarrhythmic drugs—without having to bias researchers. Instead, they simply funded the researchers whose methods worked in their favor. When Robert Winkle, who originally favored arrhythmia suppression as a trial endpoint, began to study antiarrhythmic drugs’ effects on heart attack deaths, his funding was cut off.58

Notice that, unlike the Tobacco Strategy, industrial selection does not simply interfere with the public’s understanding of science. Instead, industrial selection disrupts the workings of the scientific community itself. This is especially worrying, because when industry succeeds in this sort of propaganda, there is no bastion of correct belief.

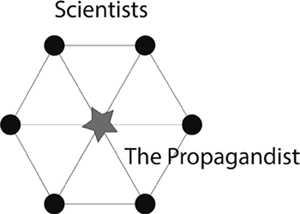

While industrial selection is a particularly subtle and effective way to intervene directly on scientific communities, Holman and Bruner point out in an earlier article that industry can also successfully manipulate beliefs within a scientific community if it manages to buy off researchers who are willing to produce straightforwardly biased science.59 In these models, one member of the scientific network is a propagandist in disguise whose results are themselves biased. For example, the probability that action B succeeds might be .7 for real scientists but only .4 for the propagandist. Figure 13 shows the structure of this sort of community.

An embedded propagandist of this sort can permanently prevent scientists from ever reaching a correct consensus. They do so by taking advantage of precisely the network structure that, as we saw in Chapter 2, can under many circumstances help a community of scientists converge to a true consensus. When the propagandist consistently shares misleading data, they bias the sample that generic scientists in the network update on. Although unbiased scientists’ results favoring B tend to drive their credences up, the propagandist’s results favoring A simultaneously drive them down, leading to indefinite uncertainty about the truth. In a case like this, there is no need for industry to distort the way results are transmitted to the public because scientists themselves remain deeply confused.
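One rough way to see why the uncertainty can persist is to ask which way a scientist's credence is pushed, on average, by each new data point. The sketch below assumes the scientist weighs two hypotheses (B succeeds 70 percent of the time, or 30 percent), that real results succeed at .7 and the propagandist's at .4 as in the example above, and that some fixed share of the data a scientist sees comes from the propagandist. That share, and the function name, are hypothetical.

```python
from math import log


def expected_drift(propagandist_share, p_real=0.7, p_fake=0.4, p_alt=0.3):
    """Average change in log-odds for 'B is better' per observed trial."""
    # Success rate of the pooled data the scientist actually updates on.
    rate = (1 - propagandist_share) * p_real + propagandist_share * p_fake
    # Log-odds change from one success and from one failure under Bayes' rule.
    success_step = log(p_real / p_alt)
    failure_step = log((1 - p_real) / (1 - p_alt))
    return rate * success_step + (1 - rate) * failure_step


for share in (0.0, 1 / 3, 2 / 3, 1.0):
    print(f"propagandist share {share:.2f}: drift {expected_drift(share):+.3f}")
```

With no propagandist the drift is positive and credences climb toward the truth. Under these assumptions, when two-thirds of what a scientist sees comes from the propagandist, the pooled success rate is exactly one half and the drift vanishes, so credences wander instead of converging.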

Figure 13. The structure of a model in which the propagandist directly shares biased research with scientists. Notice that unlike the network structures in figures 9, 10, and 12, the propagandist here does not focus on policy-maker belief, but poses as a scientist to directly sway consensus within the scientific community.

One important issue that Holman and Bruner discuss is how other scientists in the network can come to recognize a propagandist at work. They find that by looking at the distributions of their own results and those of their neighbors, scientists can under some circumstances identify agents whose results are consistently outliers and begin to discount those agents’ results. Unfortunately, this is a difficult and time-consuming process—and it takes for granted that there are not too many propagandists in one’s network. On the other hand, it highlights an important moral. In Chapter 2, we considered a model in which scientists chose whom to trust on the basis of their beliefs, under the assumption that successful scientists would share their own beliefs. But Holman and Bruner’s work suggests that a different approach, though more difficult to implement in practice, might be more effective: namely, choose whom to trust on the basis of the evidence they produce.

The propaganda strategies we have discussed so far all involve the manipulation of evidence. Either a propagandist biases the total evidence on which we make judgments by amplifying and promoting results that support their agenda; or they do so by funding scientists whose methods have been found to produce industry-friendly results—which ultimately amounts to the same thing. In the most extreme case, propagandists can bias the available evidence by producing their own research and then suppressing results that are unfavorable to their position. In all of these cases, the propagandist is effective precisely because they can shape the evidence we use to form our beliefs—and thus manipulate our actions.

It is perhaps surprising how effective these strategies can be. They can succeed without manipulating any individual scientist’s methods or results, by biasing the way evidence is shared with the public, biasing the distribution of scientists in the network, or biasing the evidence seen by scientists. This subtle manipulation works because in cases where the problem we are trying to solve is difficult, individual studies, no matter how well-conducted, tend to support both sides, and it is the overall body of evidence that ultimately matters.

But manipulating the evidence we use is not the only way to manipulate our behavior. For instance, propagandists can play on our emotions, as advertising often does. Poignancy, nostalgia, joy, guilt, and even patriotism are all tools for manipulation that have nothing to do with evidence.

The famous Marlboro Man advertising campaign, for instance, involved dramatic images of cowboys wrangling cattle and staring off into the wide open spaces of the American West. The images make a certain kind of man want to buy Marlboro cigarettes on emotional grounds. The television show Mad Men explored these emotional pleas and the way they created contemporary Western culture. Still, these sorts of tools lie very close to the surface. While it may be hard to avoid emotional manipulation, there is no great mystery to how it works. Rather than discuss these sorts of effects, we want to draw attention to a more insidious set of tools available to the propagandist.

One of Bernays’s principal insights, both in his books and in his own advertising and public relations campaigns, was that trust and authority play crucial roles in shaping consumers’ actions and beliefs. This means that members of society whose positions grant them special authority—scientists, physicians, clergy—can be particularly influential. Bernays argued that one can and should capitalize on this influence.

During the 1920s, Bernays ran a campaign for the Beech-Nut Packing Company, which wanted to increase its sales of bacon. According to Bernays, Americans had tended to eat light breakfasts—coffee, a pastry or roll, perhaps some juice. In seeking to change this, he invented the notion of the “American breakfast” as bacon and eggs. As he writes in Propaganda:

The newer salesmanship, understanding the group structure of society and principles of mass psychology, would first ask: “Who is it that influences the eating habits of the world?” The answer, obviously, is: “The physicians.” The new salesman will then suggest to physicians to say publicly that it is wholesome to eat bacon. He knows as a mathematical certainty, that large numbers of persons will follow the advice of their doctors.60

Bernays reports that he found a physician who was prepared to say that a “hearty” breakfast, including bacon, was healthier than a light breakfast. He persuaded this physician to sign a letter sent to thousands of other physicians, asking them whether they concurred with his judgment. Most did—a fact Bernays then shared with newspapers around the country.

There was no evidence to support the claim that bacon is in fact beneficial—and it is not clear that the survey Bernays conducted was in any way scientific. We do not even know what percentage of the physicians he contacted actually agreed with the assertion. But that was of no concern: what mattered was that the strategy moved rashers. Many tobacco firms ran similar campaigns through the 1940s and into the 1950s, claiming that some cigarettes were healthier than others or that physicians preferred one brand over others. There was no evidence to support these claims either.

Of course, the influence of scientific and medical authority cuts both ways. If the right scientific claims can help sales, the wrong ones can decimate an industry—as we saw earlier when the appearance of the ozone hole soon led to a global ban on CFCs. In such cases, a public relations campaign has little choice but to undermine the authority of scientists or doctors—either by invoking other research, real or imaginary, that creates a sense of controversy or by directly assaulting the scientists via accusations of bias or illegitimacy.

We emphasized in Chapter 1 that the perception of authority is not the right reason to pay attention to the best available science. Ultimately, what we care about is taking action, whether individual or collective, that is informed by the best available evidence and therefore most likely to realize our desired ends. Under ideal circumstances, invoking—or undermining—the authority of science or medicine should not make any difference. What should matter is the evidence.

Of course, our circumstances are nowhere near ideal. Most of us are underinformed and would struggle to understand any given scientific study in full detail. And as we pointed out in the last chapter, there are many cases in which even scientists should evaluate evidence with careful attention to its source. We are forced to rely on experts.

But this role of judgment and authority in evaluating evidence has a dark side. The harder it becomes for us to identify reliable sources of evidence, the more likely we are to form beliefs on spurious grounds. For precisely this reason, the authority of science and the reputations both of individual scientists and of science as an enterprise are prime targets for propagandists.

Roger Revelle was one of the most distinguished oceanographers of the twentieth century.61 During World War II, he served in the Navy, eventually rising to the rank of commander and director of the Office of Naval Research—a scientific arm of the Navy that Revelle helped create. He oversaw the first tests of atomic bombs following the end of World War II, at Bikini Atoll in 1946. In 1950, Revelle became director of the Scripps Institution of Oceanography.

In 1957, he and his Scripps colleague Hans Suess published what was probably the most influential article of their careers.62 It concerned the rate at which carbon dioxide is absorbed into the ocean.

Physicists had recognized since the mid-nineteenth century that carbon dioxide is what we now call a “greenhouse gas”: it absorbs infrared light. This means it can trap heat near the earth’s surface, which in turn raises surface temperatures. You have likely experienced precisely this effect firsthand, if you have ever compared the experience of spending an evening in a dry, desert environment with an evening in a humid environment. In dry places, the temperature drops quickly when the sun goes down, but not in areas of high humidity. Likewise, without greenhouse gases in our atmosphere, the earth would be far colder, with average surface temperatures of about 0 degrees Fahrenheit (or −18 degrees Celsius).

When Revelle and Suess were writing, there had already been half a century of work on the hypothesis—originating with the Swedish Nobel laureate Svante Arrhenius and the American geologist T. C. Chamberlin63—that the amount of carbon dioxide in the atmosphere was directly correlated with global temperature and that variations in atmospheric carbon dioxide explained climatic shifts such as ice ages. A British steam engineer named Guy Callendar had even proposed that carbon dioxide produced by human activity, emitted in large and exponentially growing quantities since the mid-nineteenth century, was contributing to an increase in the earth’s surface temperature.

But in 1957 most scientists were not worried about global warming. It was widely believed that the carbon dioxide introduced by human activity would be absorbed by the ocean, minimizing the change in atmospheric carbon dioxide—and global temperature. It was this claim that Revelle and Suess refuted in their article.

Using new methods for measuring the amounts of different kinds of carbon in different materials, Revelle and Suess estimated how long it took for carbon dioxide to be absorbed by the oceans. They found that the gas would persist in the atmosphere longer than most other scientists had calculated. They also found that as the ocean absorbed more carbon dioxide, its ability to hold the carbon dioxide would degrade, causing it to evaporate out at higher rates. When they combined these results, they realized that carbon dioxide levels would steadily rise over time, even if rates of emissions stayed constant. Things would only get worse if emissions rates continued to increase—as indeed they have done over the sixty years since the Revelle and Suess article appeared.

This work gave scientists good reasons to doubt their complacency about greenhouse gases. But just as important was Revelle’s activism, beginning around the time he wrote the article. He helped create a program on Atmospheric Carbon Dioxide at Scripps and hired a chemist named Charles David Keeling to lead it. Later, Revelle helped Keeling get funding to collect systematic data concerning atmospheric carbon dioxide levels. Keeling showed that average carbon dioxide levels were steadily increasing—just as Revelle and Suess had predicted—and that the rate of increase was strongly correlated with the rate at which carbon dioxide was being released into the atmosphere by human activity.

In 1965, Revelle moved to Harvard. There he encountered a young undergraduate named Al Gore, who took a course from Revelle during his senior year and was inspired to take action on climate change. Gore went on to become a US congressman and later a senator. Following an unsuccessful presidential run in 1988, he wrote a book, Earth in the Balance, in which he attributed to Revelle his conviction that the global climate was deeply sensitive to human activity. The book was published in 1992, a few weeks before Gore accepted the Democratic nomination for vice president.

Gore’s book helped make environmental issues central to the election. And he distinguished himself as an effective and outspoken advocate for better environmental policy. Those who wished to combat Gore’s message could hardly hope to change Gore’s mind, and as a vice presidential candidate, he could not be silenced. Instead, they adopted a different strategy—one that went through Revelle.

In February 1990, Revelle gave a lecture at the annual meeting of the American Association for the Advancement of Science, the world’s largest general scientific society. The session in which he spoke was specifically devoted to policy issues related to climate change, and Revelle’s talk was about how the effects of global warming might be mitigated.64 Afterward, it seems that Fred Singer, whose service on the Acid Rain Review Panel we described in Chapter 1, approached Revelle and asked whether he would be interested in coauthoring an article based on the talk.

The details of what happened next are controversial and have been the subject of numerous contradictory op-eds and articles, and at least one libel suit.65 But this much is clear. In 1991, an article appeared in the inaugural issue of a journal called Cosmos, listing Singer as first author and Revelle as a coauthor. The article asserted (with original emphasis), “We can sum up our conclusions in a simple message: The scientific base for a greenhouse warming is too uncertain to justify drastic action at this time.”66 (If this sounds identical to Singer’s message on acid rain, that is because it was.)

What was much less clear was whether Revelle truly endorsed this claim, which in many ways contradicted his life’s work. (Revelle never had a chance to set the record straight: he died on July 15, 1991, shortly after the article appeared in print.)

It is certainly true that Revelle did not write the quoted sentence. What Cosmos published was an expanded version of a paper Singer had previously published, as sole author, in the journal Environmental Science and Technology; whole sentences and paragraphs of the Cosmos article were reproduced nearly word for word from the earlier piece. Among the passages that were lifted verbatim was the one quoted above.

Singer claimed that Revelle had been a full coauthor, contributing ideas to the final manuscript and endorsing the message. But others disagreed. Both Revelle’s personal secretary and his long-term research assistant claimed that Revelle had been reluctant to be involved and that he contributed almost nothing to the text. And they argued that when the article was finalized, Revelle was weak following a recent heart surgery—implying that Singer had taken advantage of him.67 (Singer sued Revelle’s research assistant, Justin Lancaster, for libel over these statements. The suit was settled in 1994, with Lancaster forced to retract his claim that Revelle was not a coauthor. In 2006, after a ten-year period during which he was not permitted to comment under the settlement, Lancaster retracted his retraction and issued a statement on his personal website in which he “fully rescind[ed] and repudiate[d] [his] 1994 retraction.” Singer told his own version of the story, which disagreed with Lancaster’s in crucial respects, in a 2003 essay titled “The Revelle-Gore Story.”)

Ultimately, though, what Revelle believed did not matter. The fact that his name appeared on the article was enough to undermine Gore’s environmental agenda. In July 1992, New Republic journalist Gregg Easterbrook cited the Cosmos article, writing, “Earth in the Balance does not mention that before his death last year, Revelle published a paper that concludes: ‘The scientific base for a greenhouse warming is too uncertain to justify drastic action at this time.’”68 A few months later, the conservative commentator George Will wrote essentially the same thing in the Washington Post.

It was a devastating objection: it seemed that Revelle, Gore’s own expert of choice, explicitly disavowed Gore’s position.

Admiral James Stockdale—running mate of Reform Party candidate Ross Perot—later took up the issue during the vice presidential debate. “I read where Senator Gore’s mentor had disagreed with some of the scientific data that is in his book. How do you respond to those criticisms of that sort?” he asked Gore.69 Gore tried to respond—first over laughter from the audience, but then, when he claimed Revelle had “had his remarks taken completely out of context just before he died,” to boos and jeers. He was made to look foolish, and his environmental activism naïve.

What happened to Gore was a weaponization of reputation. The real reason to be concerned about greenhouse gases has nothing to do with Roger Revelle or his opinion. One should be concerned because there is strong evidence that carbon dioxide levels are rapidly rising in the atmosphere, because increased carbon dioxide leads to dramatic changes in global climate, and because there will be (indeed there already are) enormous human costs if greenhouse gas emissions continue. There is still uncertainty about the details of what will happen or when—but that uncertainty goes in both directions. The chance is just as good that we have grossly underestimated the costs of global warming as that we have overestimated them. (Recall how scientists underestimated the dangers of CFCs.)

Moreover, although the conclusion of the Cosmos article was regularly quoted, no evidence to support that conclusion was discussed by Easterbrook or Will in their articles. Indeed, the article offered no novel arguments at all. If Revelle had devastating new evidence that led him to change his mind about global warming, surely that should have been presented. But it was not.

But Gore himself had elevated Revelle’s status by basing his environmentalism on Revelle’s authority. This gave Singer—along with Will, Stockdale, and the many others who subsequently quoted the Cosmos article—new grounds for attacking Gore. Indeed, anyone who tended to agree with Gore was particularly vulnerable to this sort of argument, since it is precisely they who would have given special credibility to Revelle’s opinion.

The details of how Singer and others used Revelle’s reputation to amplify their message may seem like a special case. But this extreme case shows most clearly a pattern that has played a persistent role in the history of industrial propaganda in science.70 It shows that how we change our beliefs in light of evidence depends on the reputation of the evidence’s source. The propagandist’s message is most effective when it comes from voices we think we can trust.

Using the reputations of scientists was an essential part of the Tobacco Strategy. Industry executives sought to staff the TIRC with eminent scientists. They hired a distinguished geneticist named C. C. Little to run it, precisely because his scientific credentials gave their activities stature and credibility. Likewise, they established an “independent” board of scientific advisors that included respected experts. These efforts were intended to make the TIRC look respectable and to make its proindustry message more palatable. This is yet another reason why selective sharing can be more effective than biased production—or even industrial selection. The more independence a researcher has from industry, the more authority he or she seems to have.

Even when would-be propagandists are not independent, there are advantages in presenting themselves as if they were. For instance, in 2009 Fred Singer, in collaboration with the Heartland Institute, a conservative think tank, established a group called the Nongovernmental International Panel on Climate Change (NIPCC). The NIPCC is Singer’s answer to the UN’s Intergovernmental Panel on Climate Change (IPCC). In 2007 the IPCC (with Gore) won the Nobel Peace Prize for its work in systematically reviewing the enormous literature on climate change and establishing a concrete consensus assessment of the science.71

The NIPCC produces reports modeled exactly on the IPCC’s reports: the same size and length, the same formatting—and, of course, reaching precisely the opposite conclusions. The IPCC is a distinguished international collaboration that includes the world’s most renowned climate scientists. The NIPCC looks superficially the same, but of course has nothing like the IPCC’s stature. Such efforts surely mislead some people—including journalists who are looking for the “other side” of a story about a politically sensitive topic.

It is not hard to see through something as blatant as the NIPCC. On the other hand, when truly distinguished scientists turn to political advocacy, their reputations give them great power. Recall, for instance, that the founders of the Marshall Institute—mentioned in Chapter 1—included Nierenberg, who had taken over as director of the Scripps Institution after Revelle moved to Harvard; Robert Jastrow, the founding director of NASA’s Goddard Institute for Space Studies; and Frederick Seitz, the former president of both the National Academy of Sciences and Rockefeller University, the premier biomedical research institution in the United States.