Figure 3.1

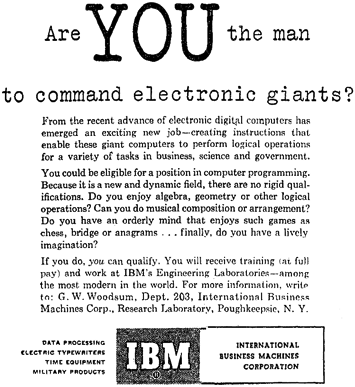

IBM Advertisement, New York Times, May 31, 1969.

3

Chess Players, Music Lovers, and Mathematicians

In one inquiry it was found that a successful team of computer specialists included an ex-farmer, a former tabulating machine operator, an ex-key punch operator, a girl who had done secretarial work, a musician and a graduate in mathematics. The last was considered the least competent.

—Hans Albert Rhee, Office Automation in a Social Perspective, 1968

In Search of “Clever Fellows”

The “Talk of the Town” column in the New Yorker magazine is not generally known for its coverage of science and technology. But in January 1957, the highbrow gossip column provided for its readers an unusual but remarkably prescient glimpse into the future of electronic computing. Already there were more than fifteen hundred of the electronic “giants” scattered around the United States, noted the column editors, with many more expected to be installed in the near future. Each of these computers required between thirty and fifty programmers, the “clever fellows” whose job it was to “figure out the proper form for stating whatever problem a machine is expected to solve.” And as there were currently only fifteen thousand professional computer programmers available worldwide, many more would have to be trained or recruited immediately. After expressing “modest astonishment” over the size of this strange new “profession we’d never heard of,” the “Talk of the Town” went on, in its inimitable breezy style, to accurately describe a problem that industry observers were only just beginning to recognize: namely, that the looming shortage of computer programmers threatened to strangle in its cradle the nascent commercial computer industry.1

The impetus for the “Talk of the Town” vignette was a series of advertisements that the IBM Corporation had recently placed in the New York Times. At first glance the ads read as rather conventional help-wanted fare. Promising the usual “exciting new jobs” in a “new and dynamic field,” they sought out candidates for a series of positions in programming research. That particularly promising candidates might be those who “enjoy algebra, geometry and other logical operations” was also not remarkable, given the context. What caught the eye of the “Talk of the Town” columnists, however, was the curious addition of an appeal to candidates who enjoyed “musical composition and arrangement,” liked “chess, bridge or anagrams,” or simply possessed “a lively imagination.”2 Struck by the incongruity between these seemingly different pools of potential applicants, one technical and the other artistic, the columnists themselves “made bold to apply” to the IBM manager in charge of programmer recruitment. “Not that we wanted a programming job, we told him; we just wondered if anyone else did.”3

The IBM manager they spoke to was Robert W. Bemer, a “fast-talking, sandy-haired man of about thirty-five,” who by virtue of his eight years’ experience was already considered, in the fast-paced world of electronic computing, “an old man with a long beard.” It was from Bemer that they learned of the fifteen thousand existing computer programmers. An experienced programmer himself, Bemer nevertheless confessed astonishment at the sudden, unforeseen emergence of a programming profession, which even to him seemed to have “happened overnight.” And for the immediate future, at least, it appeared inevitable that the demand for programmers would only increase. With obvious enthusiasm, Bemer described a near future in which computers were much more than just scientific instruments, where “every major city in the country will have its community computer,” and where citizens and businesspeople of all sorts—“grocers, doctors, lawyers”—would “all throw problems to the computer and will all have their problems solved.” The key to achieving such a vision, of course, was the availability of diverse and well-written computer programs. Therein lay the rub for recruiters like Bemer: in response to the calls for computer programmers he had circulated in the New York Times, Scientific American, and the Los Angeles Times, he had received exactly seven replies. That IBM considered this an excellent return on its investment highlights the peculiar nature of the emerging programming profession.

Of the seven respondents to IBM’s advertisements, five were experienced programmers lured away from competitors. This kind of poaching occurred regularly in the computer industry, and although this was no doubt a good thing from the point of view of these well-paid and highly mobile employees, it only exacerbated the recruitment and retention challenges faced by their employers. The other two were new trainees, only one of whom proved suitable in the long term. The first was a chess player who was really “interested only in playing chess,” and IBM soon “let him go back to his board.” The second “knew almost nothing about computing,” but allegedly had an IQ of 172, and according to Bemer, “he had the kind of mind we like. . . . [He] taught himself to play the piano when he was ten, working on the assumption that the note F was E. Claims he played that way for years. God knows what his neighbors went through, but you can see that it shows a nice independent talent for the systematic translation of values.”4

Eventually the ad campaign and subsequent New Yorker coverage did net IBM additional promising programmer trainees, including an Oxford-trained crystallographer, an English PhD candidate from Columbia University, an ex-fashion model, a “proto-hippie,” and numerous chess players, including Arthur Bisguier, the U.S. Open Chess champion, Alex Bernstein, a U.S. Collegiate champion, and Sid Noble, the self-proclaimed “chess champion of the French Riviera.”5 The only characteristics that these aspiring programmers appeared to have in common were their top scores on a series of standard puzzle-based aptitude tests, the ability to impress Bemer as being clever, and the chutzpah to respond to vague but intriguing help-wanted ads.

The haphazard manner in which IBM recruited its own top programmers, and the diversity of their characters and backgrounds, reveal much about the state of computer programming at the end of its first decade of existence. On the one hand, computer programming had successfully emerged from the obscurity of its origins as low-status, feminized clerical work to become the nation’s fastest-growing and highest-paid technological occupation.6 The availability of strong programming talent was increasingly recognized as essential to the success of any corporate computerization effort, and individual programmers were able to exert an inordinate amount of control over the course of such efforts.

But at the same time, the “long-haired programming priesthood”—the motley crew of chess players, music lovers, and mathematicians who comprised the programming profession in this period—fit uncomfortably into the traditional power structures of the modern corporate organization.7 The same arcane and idiosyncratic abilities that made them well-paid and highly sought-after individuals also made them slightly suspect. How could the artistic sensibilities and artisanal practices of programmers be reconciled with the rigid demands of corporate rationality? How could corporate managers predict and control the course of computerization efforts when they were so dependent on specific individuals? If good programmers “were born, not made,” as was widely believed, then how could the industry ensure an adequate supply?8

The tension between art and science inherent in contemporary programming practices, unwittingly but ably captured by the “Talk of the Town” gossip columnists, would drive many of the most significant organizational, technological, and professional developments in the history of computing over the course of the next few decades. This chapter will deal with early attempts to use aptitude tests and personality profiles to manage the growing “crisis” of programmer training and recruitment.

The Persistent Personnel Problem

The commercial computer industry came of age in the 1960s. At the beginning of that decade the electronic computer was still a scientific curiosity, its use largely confined to government agencies as well as a few adventurous and technically sophisticated corporations; by the decade’s end, the computer had been successfully reinvented as a mainstream business technology, and companies such as IBM, Remington Rand, and Honeywell were selling them by the thousands.

But each of these new computers, if we are to take Bemer’s reckoning seriously, would require a support staff of at least thirty programmers. Since almost all computer programs in this period were effectively custom developed—the packaged software industry would only begin to emerge in the late 1960s—every purchase of a computer required the corresponding hire of new programming personnel. Even if we were to halve Bemer’s estimates, the predicted industry demand for computer programmers in 1960 would top eighty thousand.

In truth, no one really knew for certain exactly how many programmers would be required. Contemporary estimates ranged from fifty thousand to five hundred thousand.9 What was abundantly clear, however, was that whatever the total demand for programmers might eventually turn out to be, it would be impossible to satisfy using existing training and hiring practices. By the mid-1960s the lack of availability of trained computer programmers threatened to stifle the adoption of computer technology—a grave concern for manufacturers and employers alike. Warnings of a “gap in programming support” caused by the ever-worsening “population problem” pervade the industry literature in this period.10 In 1966, the personnel situation had degraded so badly that Business Week magazine declared it a “software crisis”—the first appearance of the crisis mentality that would soon come to dominate and define the entire industry.11

Wayne State Conference

It did not take long after the invention of the first electronic computers for employers and manufacturers to become aware of the “many educational and manpower problems” associated with computerization. In 1954, leaders in industry, government, and education gathered at Wayne State University for the Conference on Training Personnel for the Computing Machine Field. The goal was to discuss what Elbert Little, of the Wayne State Computational Laboratory, suggested was a “universal feeling” among industry leaders that there was “a definite shortage” of technically trained people in the computer field.12 This shortage, variously described by an all-star cast of scientists and executives from General Motors, IBM, the RAND Corporation, Bell Telephone, Harvard University, MIT, the Census Bureau, and the Office of Naval Research, as “acute,” “unprecedented,” “multiplying dramatically,” and “astounding compared to the [available] facilities,” represented a grave threat to the future of electronic computing. Already it was serious enough to demand a “cooperative effort” on the part of industry, government, and educational institutions to resolve.13

The proceedings of the Conference on Training Personnel for the Computing Machine Field provide the best data available on the state of the labor market in the electronic computer industry during its first decade. Representatives from almost every major computer user or manufacturer were in attendance; those who could not be present were surveyed in advance about their computational requirements and personnel practices.

The most obvious conclusion to be drawn from these data is that the computer industry in this period was growing rapidly, not just in size, but also in scope. The survey of the five hundred largest manufacturing companies in the United States, compiled by Milton Mengel of the Burroughs Corporation, revealed that almost one-fifth were already using electronic computers by 1954, with another fifth engaged in studying their feasibility. The extent of this early and widespread adoption of the computer by large corporations is confirmed by other sources, and is a reflection of the increased availability of low(er)-cost and more reliable technology. By 1954, for example, IBM had already released its first mass-produced computer, the IBM 650, which sold so many units that it became known as the “Model T” of electronic computing. The IBM 650 and its successors were in many ways evolutionary developments, designed specifically to integrate smoothly into already-existing systems and departments of computation.

This increase in the number of installed computers was, in and of itself, enough to cause a serious shortage of experienced computer personnel. Truman Hunter, of the IBM Applied Sciences Division (an entirely separate group from that headed by Bemer), anticipated doubling his programming staff (from fifty to a hundred) by the end of the year.14 Similar rates of growth were reported in the aircraft, automobile, and petroleum industries, with one survey respondent expecting to triple its number of programmers.15 Charles Gregg, of the Air Force Materiel Command, declined even to estimate the demand for trained computer personnel in the U.S. government, suggesting only that “we sure need them badly,” and that as far as training was concerned, “we have a rough row to hoe.”16 If we include in our understanding of computer personnel not just programmers but also keypunch and machine operators, technicians, and supervisory staff, the personnel shortage appears even more dramatic.

Figure 3.2

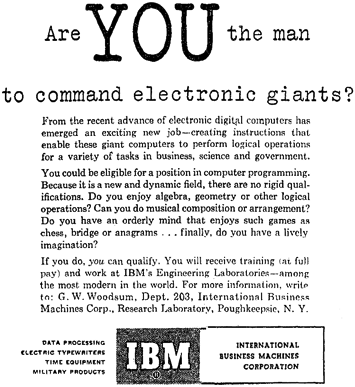

Cartoon from Datamation magazine, 1968.

In the face of this looming crisis, the existing methods for training programmers and other computer personnel were revealed as ludicrously insufficient. At this point, there were no formal academic programs in computer science in existence, and those few courses in computer programming that were offered in universities were at the master’s or PhD level. Computer manufacturers, who had a clear stake in ensuring that their customers could actually use their new machines, provided some training services. But in the fifteen months prior to the 1954 conference, confessed M. Paul Chinitz of Remington Rand Univac (at that point the largest manufacturer of computers in the world), the company had managed to train a total of only 162 programmers.17 He estimated the total training capacity of all of the manufacturers combined at a mere 260 programmers annually. And so the majority of computer users were left to train their own personnel.18 This in-house training was expensive, time-consuming, and generally inadequate.19

Part of the problem, of course, was that computer programming was inherently difficult. As was described in the previous chapter, programming in the 1950s—particularly in the early 1950s—was an inchoate discipline, a jumble of skills and techniques drawn from electrical engineering, mathematics, and symbolic logic. It was also intrinsically local and idiosyncratic: each individual computer installation had its own unique software, practices, tools, and standards. There were no programming languages, no operating systems, no best-practice guidelines, and no textbooks. The problem with the so-called electronic brains, as Truman Hunter of IBM noted, was that they were anything but: computers might be powerful tools, yet they were “completely dependent slaves” to the human mind. The development of these machines was resulting in “even greater recognition of, and paying a greater premium for,” the skilled programmers who transformed their latent potential into real-world applications.20

It was one thing to identify, as Truman Hunter did, the increasing need for “men [programmers] . . . who were above average in training and ability” to accomplish this transformation, but what kind of training, and what kind of abilities?21 Although government laboratories and engineering firms remained the primary consumers of computer technology through the early 1950s, a growing number of machines were being sold to insurance companies, accounting firms, and other, even less technically oriented customers. Not only were these users less technically proficient and less likely to have their own in-house technical specialists but they also used their computers for different and in many ways more complicated types of applications. The Burroughs study, for example, suggested an interesting shift in the way in which computers were being used in this period, and by whom. While the majority of computers (95 percent) currently in service were being used for engineering or scientific purposes, the data on anticipated future purchases indicated a shift toward business applications.22 The next generation of computers, the survey suggested, would be used increasingly (16 percent) for business data processing rather than scientific computation.23

These new business users saw electronic computers as more than mere number crunchers; they saw them as payroll processing devices, data processing machines, and management information systems. This broader vision of an integrated “information machine” demanded of the computer new features and capabilities, many of them software rather than hardware oriented.24

As the computer became more of a tool for business than a scientific instrument, the nature of its use—and its primary user, the computer programmer—changed dramatically. The projects that these business programmers worked on tended to be larger, more highly structured (while at the same time less well defined), less mathematical, and more tightly coupled with other social and technological systems than were their scientific counterparts. Were the programmers who worked on heterogeneous business data processing systems technologists, managers, or accountants? As Charles Gregg of the Air Force Materiel Command jokingly suggested, the people who made the best programmers were “electronics engineers with an advanced degree in business administration.” Such multitalented individuals were obviously in short supply. “If anyone can energize an educational program to produce such people in quantity,” he quickly added, “we would certainly like to be put on their mailing list.” His fellow conference participants no doubt agreed with this assessment: the needs of business demanded a whole new breed of programmers, and plenty of them.25

The 1954 Conference on Training Personnel for the Computing Machine Field was to be the first of many. The “persistent personnel problem,” as it soon became known in the computing community, would only get worse over the course of the next decade.26 It was clear that recruiting programmers a half dozen at a time with cute advertisements in the New York Times was not a sustainable strategy. But what was the alternative? If employers truly believed, as was argued in the previous chapter, that computer programmers formed a unique category of technical specialists—more creative than scientific, artisanal rather than industrial, born and not made—then how could they possibly hope to ensure an adequate supply to meet a burgeoning demand? How did they reconcile contemporary beliefs about the idiosyncratic nature of individual programming ability with the rigid demands of corporate management and control?

Aptitude Tests and Psychological Profiles

So how did companies deal with the need to train and recruit programmers on a large scale? Here the case of the System Development Corporation (SDC) is particularly instructive.

SDC was the RAND Corporation spin-off responsible for developing the software for the U.S. Air Force’s Semi-Automatic Ground Environment (SAGE) air-defense system. SAGE was perhaps the most ambitious and expensive of the early cold war technological boondoggles. Composed of a series of computerized tracking and communications centers, SAGE cost approximately $8 billion to develop and operate, and required the services of over two hundred thousand private contractors and military operators.

The real-time computers required to coordinate its vast, geographically dispersed network of observation and response centers were a major component of the SAGE project. IBM was hired to develop the computers themselves but considered programming them to be too difficult. In 1955 the RAND Corporation took over software development. It was estimated that the software for the SAGE system would require more than one million lines of code to be written. At a time when the largest programming projects had involved at most fifty thousand lines of code, this was a singularly ambitious undertaking.27

Within a year, there were more programmers at RAND than all other employees combined. Overwhelmed, RAND spun off SDC to take over the project. By 1956, SDC employed seven hundred programmers, which at the time represented three-fifths of the available programmers in the entire United States.28 Over the next five years, SDC would hire and train seven thousand more.29 In the space of a few short years the personnel department at SDC had effectively doubled the number of trained programmers in the country. “We trained the industry,” SDC executives were later fond of saying, and in many respects they were correct; for the next decade, at the very least, any programming department of any size was likely to contain at least two or three SDC alumni.30

In order to effectively recruit, train, and manage an unprecedented number of programmers, SDC pursued three interrelated strategies. The first involved the construction of an organizational and managerial structure that reduced its reliance on highly skilled, experienced programmers. The second focused on the development and use of aptitude tests and personality profiles to identify the most promising potential programmers. And finally, SDC invested heavily in internal training and development programs. In a period when the computer manufacturers combined could provide only twenty-five hundred student weeks of instruction annually, SDC devoted more than ten thousand student weeks to teaching its own personnel to program.31

The engineers who founded SDC explicitly rejected what they called the “nostalgic” notion, common in the industry at that time, that programmers were “different,” and “could not work and would not prosper” under the rigid structures of engineering management.32 They organized SDC along the lines of a “software factory” that relied less on skilled workers, and more on centralized planning and control. The principles behind this approach were essentially those that had proven so successful in traditional industrial manufacturing: replaceable parts, simple and repetitive tasks, and a strict division of labor. The assumption was that a complex computer program like the SAGE control system could be neatly broken down into simple, modular components that could be easily understood by any programmer with the appropriate training and experience. Programmers in the software factory were mere machine operators; they had to be trained, but only in the basic mechanisms of implementing someone else’s design. In the SDC hierarchy, managers made all of the important decisions.33

The hierarchical approach to software development was attractive to SDC executives for a number of reasons. To begin with, it was a familiar model for managing government and military subcontractors. Engineering management promised scientific control over the often-unpredictable processes of research and development. It allowed for the orderly production of cutting-edge science and technology.34 In the language used by the managers themselves, it was a solution that “scaled” well, meaning that it could accommodate the rapid and unanticipated growth typical of cold war–era military research. Scientific management techniques and production technologies could be substituted for human resources. It was not a system dependent on individual genius or chance insight. It replaced skilled personnel with superior process. For these and other reasons, it seemed the perfect solution to the problem posed by the mass production of computer programs. (Coincidentally, it was easier to justify billing the government for a large number of mediocre low-wage employees than a smaller number of excellent but expensive contractors.)

It is important to note that the SDC approach did not attempt to solve its programmer personnel problem by reducing the number of programmers it required. On the contrary, the SDC software factory strategy (or as detractors dismissively referred to it, the “Mongolian Horde” approach to software development) probably demanded more programmers than were otherwise necessary. But the programmers that SDC was interested in were not the idiosyncratic “black artists” that most employers were desperately in search of. SDC still expected to hire and train large numbers of programmers, yet it hoped that these programmers would be much easier to identify and recruit. Most of its trainees had little or no experience with computers; in fact, many managers at SDC preferred it that way.35

The solution that SDC ultimately employed to identify and recruit potential programmers was to become standard practice in the industry. Building on techniques pioneered at RAND and MIT’s Lincoln Laboratory in the early 1950s, SDC developed a suite of aptitude tests and psychological profiles that were used to screen large numbers of potential trainees.36 Candidates who scored well on the tests were then interviewed, tested a second time—this time for desirable psychological characteristics—and then, assuming that all went well, offered a position. The aptitude tests were meant to filter for traits thought essential to good programming, such as the capacity for logical thinking and abstract reasoning. The psychological profiles were meant to identify individuals with the appropriate personality for programming work.37

The use of psychometric tools such as aptitude tests and psychological profiles was not unique to computing. Such tests had long been used by the U.S. military in the recruitment of soldiers. The SDC exams, for example, were based on the Thurstone Primary Mental Abilities Test and the Thurstone Temperament Schedule, which had both been in wide use since the 1930s.38 In the period following the end of the Second World War, similar metrics had been enthusiastically adopted by the advocates of scientific personnel research.39 SDC was able to choose from more than thirty available tests when it established its test battery in the late 1950s.40

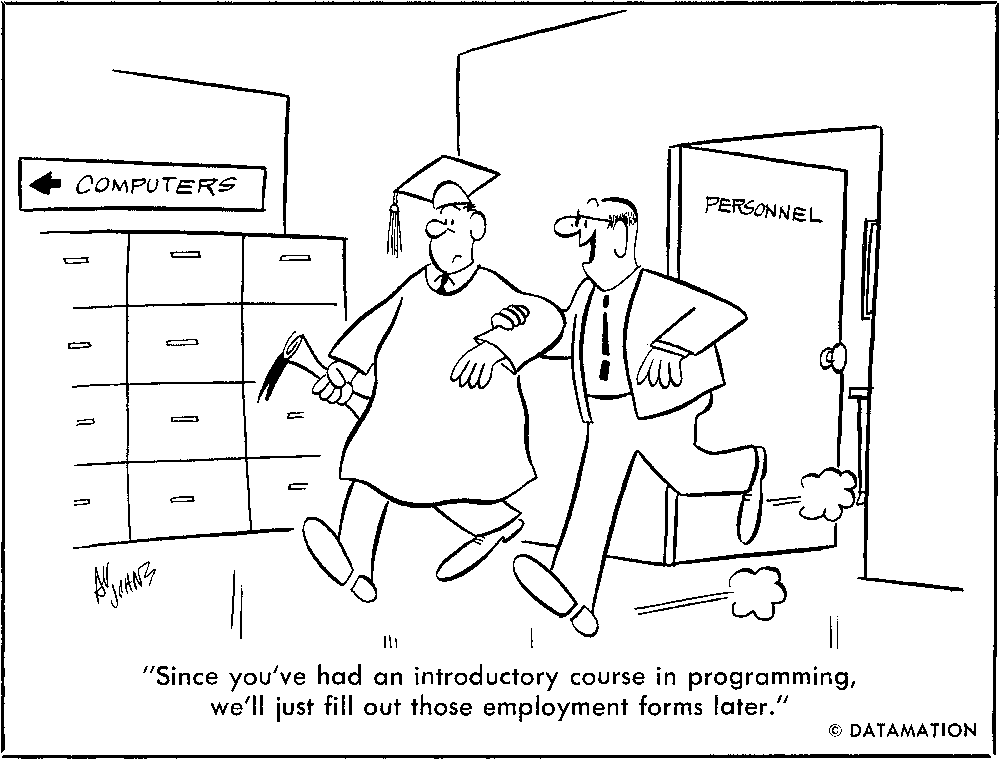

Figure 3.3

Honeywell Corporation Aptitude Test, 1965.

The central assumption of all such aptitude tests was that there was a particular innate characteristic, or set of characteristics, that could be positively correlated with occupational performance. These traits were necessarily innate (otherwise they could simply be taught, rather than only identified) and tended to be cognitive, personality related, or some combination of both. The Thurstone Primary Mental Abilities Test, for example, claimed to evaluate specific skills, such as “verbal meaning” and “reasoning,” as well as more general qualities such as “emotional stability.” The verbal meaning section presented a series of words for which the test taker would have to identify the closest synonym. The reasoning section involved the completion of number series using rules implicit in the given portion of the series. The emotional stability questions purported to measure an amalgam of desirable personality traits, including patience and a willingness to pay close attention to detail.

The scientific validity of aptitude testing was at best equivocal. At an Association for Computing Machinery conference in 1957, SDC’s own psychometrician, Thomas Rowan, presented a paper concluding that “in every case,” the correlation between test scores and subsequent performance reviews “was not significantly different from zero.”41 The best he could say was that scores on the aptitude test did correlate somewhat with grades in the programming course. Nevertheless, SDC continued to use aptitude tests, including those tests that Rowan had identified as unsatisfactory, as the primary basis for its selection procedures until at least the late 1960s.

Why persist in using aptitude testing when it was so obviously inadequate? The simple answer seems to be that SDC had no other option. Having accepted a $20 million contract from the Air Defense Command to develop the SAGE software, SDC necessarily had to expand rapidly. Even had SDC managed to hire away all of the computer programmers then working in the United States, it could still not have adequately staffed its growing programming division. The entire SDC development strategy had been constructed around the notion that complex software systems could be readily broken down into simpler modules that even relatively novice programmers—properly managed—could adequately develop. The SDC software factory was a deliberate attempt to industrialize the programming process, to impose on it the lessons learned from traditional industrial manufacturing. Like all industrial systems, the software factory required not only new organizational forms and production technologies (in this case, automated development and testing utilities) but also new forms of workers. As with the replacement of skilled machinists with unskilled machine operators in the automobile factories of the early twentieth century, these new software workers would require less experience and training than their predecessors, but the availability of large numbers of them was essential. The mass production of computer programs necessitated the mass production of programmers.

As will be discussed further below, it is questionable whether the SDC vision of the software factory was ever truly realized—by SDC itself or any of its many imitators. But for the time being it is enough to say that the aptitude testing methods that SDC originated and then disseminated throughout the industry assumed programming to be a well-defined, largely mechanical process. In the words of Thomas Rowan, the person primarily responsible for the SDC personnel selection process, programming was only “that activity occurring after an explicit statement of the problem had been obtained.”42 Specifically excluded from programming were any of the creative activities of planning or design. In other words, SDC had redefined computer programming as exactly the type of skill that aptitude tests were meant to accurately identify: straightforward, mechanical, and easily isolated. The SDC aptitude tests were not so much an attempt to identify programmer skill and ability as to embody it.

IBM PAT

Despite the seeming inability of the SDC aptitude testing regime to accurately capture the essence of programming ability, similar tests continued to be widely developed and adopted, not only by SDC, but also increasingly by other large employers. Of these second-generation tests, the most significant was the IBM Programmer Aptitude Test (PAT). In 1955, IBM contracted with two psychologists, Walter McNamara and John Hughes, to develop an aptitude test to identify programming talent. The programmer test was based on an earlier exam for card punch operators. Originally called the Aptitude Test for EDPM (Electronic Data Processing Machine) Programmers, it was renamed PAT in 1959.43

Over the next few decades, IBM PAT would become the industry standard instrument for evaluating programming ability. By 1962 an estimated 80 percent of all businesses used some form of aptitude test when hiring programmers, and half of these used IBM PAT.44 Most of the many vocational schools that emerged in this period to train programmers used PAT as a preliminary screening device. In 1967 alone, PAT was administered to more than seven hundred thousand individuals.45 Well into the 1970s, IBM PAT served as the de facto gateway into the programming occupation.

Like the SDC exams, IBM PAT focused primarily on mathematical aptitude, with most of the questions dealing with number series, figure analogies, and arithmetic reasoning. Although several minor variations of PAT were introduced over the course of the next several decades, the overall structure of the exam remained surprisingly consistent. The first section required examinees to identify the underlying rule defining the pattern of a series of numbers. The second section was similar to the first, but involved geometric forms rather than number series. The third and final section posed word problems that could be reduced to algebraic forms, such as “How many apples can you buy for sixty cents at the rate of three for ten cents?”46 Examinees had fifty minutes to answer roughly one hundred questions, and so speed as well as accuracy was required.

Critics of PAT argued that its emphasis on mathematics made it increasingly irrelevant to contemporary programming practices. It might once have been the case, as Gerald Weinberg acknowledged in his acerbic critique of IBM PAT in 1971, that programmers would have to add two or three hexadecimal numbers in order to find an address in a dump of a machine or assembly language program. But even then the arithmetic involved was relatively trivial, and the development of high-level programming languages had largely eliminated the need for such mental mathematics. And as for an aptitude for understanding geometric relationships, Weinberg noted sarcastically, “I’ve never met a programmer who was asked to tell whether two programs were the same if one was rotated 90 degrees.”47 At best such measures of basic mathematical ability were a proxy for more general intelligence; more likely, however, they were worse than useless, a deliberate form of self-deception practiced by desperate employers and the “personnel experts” who preyed on them.48

Weinberg was not alone in his critique of the mathematical focus of PAT and other exams. As early as the late 1950s, a Bureau of Labor report had identified the growing sense of corporate disillusionment with the mathematical approach to computing, contending that “many employers no longer stress a strong background in mathematics for programming of business or other mass data if candidates can demonstrate an aptitude for the work.”49 As more and more computers were used for business data processing rather than scientific computation, the types of problems that programmers were required to solve changed accordingly. The mathematical tricks that were so crucial in trimming valuable processor cycles in scientific and engineering applications had no place in the corporate environment, which privileged legibility and ease of maintenance over performance.50 Not surprisingly, scientific programmers scored better on PAT than business programmers.51

The relevance of mathematical aptitude to programming ability was, and remains, a perennial question in the industry. At least one study of programmers identified no significant difference in performance between those with a background in science or engineering and those who studied humanities or the social sciences.52 Even the authors of IBM PAT concluded that at best, mathematical ability was associated with particular applications and not programming ability in general.53

Some observers went so far as to suggest that by privileging mathematical aptitude, PAT was downright pathological, selecting for “a type of logical mind which . . . is not very often supported by maturity or reasoned thinking ability.”54 As a result, these selection processes tended to segregate individuals whose personality traits made it difficult to cooperate with management and fellow employees. At the very least, the mathematical mind-set frequently precluded the kinds of complex solutions typical of business programming applications.

As will be described in more detail in chapter 5, the emerging discipline of computer science, in its own quest for academic respectability, continued to emphasize mathematics, while industry leaders regularly dismissed mathematical training as irrelevant.55 For the time being, it is enough to note that the continuing controversy over mathematics reflected deeper disagreement, or at least ambiguity, about the true nature of programming ability.

The larger question, of course, was whether or not scores on PAT corresponded with real-world programming performance. On this question the data are ambiguous. Most employers did not even attempt to correlate test scores with objective measures of performance such as supervisor ratings.56 The small percentage that did concluded that there was no relationship between PAT scores and programming performance at all, at least in the context of business programming.57 At best, these studies identified a small correlation between PAT scores and academic success in training programs. Few argued that such correlations translated into accurate indicators of future success in the workplace.58 Even IBM recommended that PAT be used only in the context of a larger personnel screening process.

Over the course of the next decade, there were several attempts to recalibrate the tests to make them more directly relevant to real-world programming. IBM itself created several modifications to its original PAT, including the Revised Programmer Aptitude Test (1959) and the Data Processing Aptitude Test (1964), although neither successfully replaced the popular PAT.59 The Computer Usage Company’s version of a programmer aptitude test required examinees to solve logical problems using the console lights on an IBM 1401 computer.60 The Aptitude Assessment Battery: Programming, developed in 1967 by Jack Wolfe, a prominent critic of IBM PAT, eliminated mathematics and concentrated on an applicant’s ability to focus intensively on complex, multiple-step problems.61 The Programmer Aptitude and Competence System required examinees to develop actual programs using a simplified programming language.62 The Basic Programmer Knowledge Test (1966) tested everything from design and coding to testing and documentation.63

Personality Profiles

Since even their most enthusiastic advocates recognized the limitations of aptitude tests, most particularly their narrow focus on mathematics and logic, many employers also developed personality profiles that they hoped would help isolate the less tangible characteristics that made for a good programmer trainee. Some of these characteristics, such as being task oriented or detail minded, overlapped with the skills measured by more conventional aptitude tests. Many simply reinforced the conventional wisdom captured by the “Talk of the Town” column almost a decade earlier. “Creativity is a major attribute of technically oriented people,” suggested one representative profile. “Look for those who like intellectual challenge rather than interpersonal relations or managerial decision-making. Look for the chess player, the solver of mathematical puzzles.”64 But other profiles emphasized different, less obvious personality traits such as imagination, ingenuity, strong verbal abilities, and a desire to express oneself.65 Still others tested for even more elusive qualities, such as emotional stability.66 Such traits were obviously difficult to capture in a standard, skills-oriented aptitude test. Personality profiles relied instead on a combination of psychological testing, vocational interest surveys, and personal histories to provide a richer, more nuanced set of criteria on which to evaluate programmers.

The idea that particular personality traits might be useful indicators of programming ability was clearly a legacy of the origins of programming in the early 1950s. The central assumption was that programming ability was innate rather than learned, something to be identified rather than instilled. It was believed that good programming depended on uniquely qualified individuals, and that what defined these people was some indescribable, impalpable quality—a “twinkle in the eye,” an “indefinable enthusiasm,” or what one interviewer depicted as “the programming bug that meant . . . we’re going to take a chance on him despite his background.”67 The development of programmer personality profiles seemed to offer empirical evidence for what anecdote had already determined: the best programmers appeared to have been born, not made.

The use of personality profiles to identify programmers began, as with other industry-standard recruiting practices, at SDC. Applicants at SDC were first tested for aptitude, then interviewed in person, and only then profiled for desirable personality characteristics. Like other psychological profiles from this period, the SDC screens identified as valuable only those skills and characteristics that would have been assets in any white-collar occupation: the ability to think logically, work under pressure, and get along with people; a retentive memory and the desire to see a problem through to completion; and careful attention to detail.

By the start of the 1960s, however, SDC psychologists had developed more sophisticated models based on the extensive employment data that the company had collected over the previous decade as well as surveys of members of the Association for Computing Machinery and the Data Processing Management Association. In a series of papers published in serious academic journals such as the Journal of Applied Psychology and Personnel Psychology, SDC psychologists Dallis Perry and William Cannon provided a detailed profile of the “vocational interests of computer programmers.”68 The scientific basis for their profile was the Strong Vocational Interest Blank (SVIB), which had been widely used in vocational testing since the late 1920s.

The basic SVIB in this period consisted of four hundred questions aimed at eliciting an emotional response (“like,” “dislike,” or “indifferent”) to specific occupations, work and recreational activities, types of people, and personality types. By the 1960s, more than fifty statistically significant collections of preferences (“keys”) had been developed for such occupations as artist, mathematician, police officer, and airplane pilot. Perry and Cannon were attempting to develop a similar interest key for programmers. They hoped to use this key to correlate a unique programmer personality profile with self-reported levels of job satisfaction. In the absence of direct measures of job performance, such as supervisors’ evaluations, it was assumed that satisfaction tracked closely with performance. The larger assumption behind the use of the SVIB profiles was that candidates who had interests in common with those individuals who were successful in a given occupation were themselves also likely to achieve similar success.

Many of the traits that Perry and Cannon attributed to successful programmers were unremarkable: for the most part programmers enjoyed their work, disliked routine and regimentation, and were especially interested in problem- and puzzle-solving activities.69 The programmer key that they developed bore some resemblance to the existing keys for engineering and chemistry, but not to those of physics or mathematics, which Perry and Cannon saw as contradicting the traditional focus on mathematics training in programmer recruitment. A slight correlation with the musician key offered “some, but not very strong,” support for “the prevalent belief in a relationship between programming and musical ability.”70 Otherwise, programmers resembled other white-collar professionals in such diverse fields as optometry, public administration, accounting, and personnel management.

In fact, there was only one really “striking characteristic” about programmers that the Perry and Cannon study identified. This was “their disinterest in people.” Compared with other professional men, “programmers dislike activities involving close personal interaction. They prefer to work with things rather than people.”71 In a subsequent study, Perry and Cannon demonstrated this to be true of female programmers as well.72

The idea that computer programmers lacked people skills quickly became part of the lore of the computer industry. The influential industry analyst Richard Brandon suggested that this was in part a reflection of the selection process itself, with its emphasis on mathematics and logic. The “Darwinian selection” mechanism of personnel profiling, Brandon maintained, selected for personality traits that performed well in the artificial isolation of the testing environment, but that proved dysfunctional in the more complex social environment of a corporate development project. Programmers were “excessively independent,” argued Brandon, to the point of mild paranoia. The programmer type is “often egocentric, slightly neurotic, and he borders upon a limited schizophrenia. The incidence of beards, sandals, and other symptoms of rugged individualism or nonconformity are notably greater among this demographic group. Stories about programmers and their attitudes and peculiarities are legion, and do not bear repeating here.”73

Although Brandon’s evidence was strictly anecdotal, his portrayal of the neurotic programmer was convincing enough that the psychologist Theodore Willoughby felt compelled to refute it on scientific grounds in his 1972 article “Are Programmers Paranoid?”74 But whether or not Brandon’s diagnosis of paranoia was, from a strictly medical perspective, accurate is irrelevant. The idea that “detached” individuals made good programmers was embodied, in the form of the psychological profile, in the hiring practices of the industry.75 Possibly this was a legacy of the murky origins of programming as a fringe discipline in the early 1950s; perhaps it was a self-fulfilling prophecy. Nevertheless, the idea of the programmer as being particularly ill equipped for or uninterested in social interaction did become part of the conventional wisdom of the industry. Although the short-term effect of this particular occupational stereotype was negligible, it would come back to haunt the programming community as it attempted to professionalize later in the decade. As we will see in later chapters, the stereotype of the computer programmer as machine-obsessed and antisocial was used to great effect by those who wished to undermine the professional authority of the computer boys.

For the most part, however, the personality profiles that Perry and Cannon as well as others developed simply became one component of a larger set of tools used by employers to evaluate potential programmers.76 According to one survey of Canadian employers, more than two-thirds used a combination of aptitude and general intelligence tests, personality profiles, and interest surveys in their selection processes.77

The Situation Can Only Get Worse

Despite the massive amount of effort that went into developing the science of programmer personnel selection, the labor market in computing only seemed to deteriorate. Many of the technological and demographic trends identified at the Wayne State Conference in 1954 continued to accelerate. By 1961, industry analysts were fretting publicly about a “gap in programming support” that “will get worse in the next several years before it gets better.”78 In 1962, the editors of the powerful industry journal Datamation declared that “first on anyone’s checklist of professional problems is the manpower shortage of both trained and even untrained programmers, operators, logical designers and engineers in a variety of flavors.”79 At a conference held that year at the MIT School of Industrial Management, the “programming bottleneck” was identified as the central dilemma in computer management.80 In 1966, the labor situation had gotten so bad that Business Week declared it a “software crisis.”81 An informal survey of management information systems (MIS) managers in 1967 identified the primary hurdle “handicapping the progress of MIS” as “the shortage of good, experienced people.”82 By the late 1960s, the demand for programmers was increasing by more than 50 percent annually, and it was predicted that “the software man will be in even greater demand in 1970 than he is today.”83 Indeed, estimates of the number of programmers that would be required by 1970 ranged as high as 650,000.84

It would be difficult to overstate the degree to which concern about the software labor crisis dominated the industry in this period. The popular and professional literature during this time was obsessed with the possible effects of the personnel crisis on the future of the industry. “Competition for programmers has driven salaries up so fast,” warned a contemporary article in Fortune magazine, “that programming has become probably the country’s highest paid technological occupation. . . . Even so, some companies can’t find experienced programmers at any price.”85 A study in 1965 by Automatic Data Processing, Inc., then one of the largest employers of programmers, predicted that average salaries in the industry would increase 40 to 50 percent over the next five years.86 The ongoing “shortage of capable programmers,” argued Datamation in 1967, “had profound implications, not only for the computer industry as it is now, but for how it can be in the future.”87 These potentially profound implications included everything from financial collapse to software-related injury or death to the emergence of a packaged software application industry.

Faced with a growing shortage of skilled programmers, employers were forced to expand their recruitment efforts and lower their hiring standards. Although by 1967 IBM alone was training ten thousand programmers annually (at a cost of $90 to $100 million), it was becoming increasingly clear that computer manufacturers alone could not produce trained programmers fast enough.88 As a result, many companies reluctantly assumed the costs of expensive internal training programs, “not because they want to do it, but because they have found it to be an absolute necessary adjunct to the operation of their business.”89 It is difficult to find accurate data on the size of such programs, as many organizations refused to disclose details about them to outsiders, “on the theory that to do so would only invite raiding” from other employers.90 The job market was so competitive in this period that as many as half of all programmer trainees would leave within a year to pursue more lucrative opportunities.91 And since the cost of training or recruiting a new programmer was estimated at almost an entire year’s salary, such high levels of turnover were expensive.92 Many employers were thus extremely secretive about their training and recruitment practices; some even refused to allow their computer personnel to attend professional conferences because of the rampant headhunting that occurred at such gatherings.93 Because of the low salaries that it paid relative to the industry, the U.S. government had a particular problem retaining skilled employees, and so in 1963, Congress passed the Vocational Education Act, which made permanent the provisions of Title VIII of the National Defense Education Act of 1958 for training highly skilled technicians. By 1966, the act had paid for the training of thirty-three thousand computer personnel, requiring in exchange only that they work for a certain time in government agencies.94

Figure 3.4

Cartoon from Datamation magazine, 1962.

In numerous cases, the aptitude tests that many corporations hoped would alleviate their personnel problems had entirely the opposite effect. Whatever small amount of predictive validity the tests had was soon compromised by applicants who cheated or took them multiple times. Since many employers relied on the same basic suite of tests, would-be programmers simply applied for positions at less-desirable firms, mastered the aptitude tests and application process, and then transferred their newfound testing skills to the companies they were truly interested in. Taking the same test repeatedly virtually assured top scores.95 Copies of IBM PAT were also stolen and placed in fraternity files.96 By the late 1960s it appeared that all of the major aptitude tests had been thoroughly compromised. One widely circulated book contained versions of the IBM, UNIVAC, and NCR exams. Updated versions were published almost annually.97

Paradoxically, even as the value of the aptitude tests diminished, their use began to increase. All of the major hardware vendors developed their own versions, such as the National Cash Register Programmer Aptitude Test and the Burroughs Corporation Computer Programmer Aptitude Battery.98 Aptitude testing became the “Hail Mary pass” of the computer industry. Some companies tested all of their employees, including the secretaries, in the hope that hidden talent could be identified.99 A group called the Computer Personnel Development Association was formed to scour local community centers for promising programmer candidates.100 Local YMCAs offered the test for a nominal fee, as did local community colleges.101 In 1968 computer service bureaus in New York City, desperate to fill the demand for more programmers, began testing inmates at the nearby Sing Sing Prison, promising them permanent positions pending their release.102 That same year, Cosmopolitan magazine urged “Cosmo Girls” to go out and become “computer girls” making “$15,000 a year” as programmers. Not only did the widespread personnel problem in computing make it possible for women to break into the industry, but the field was also currently “overrun with males,” making it easy to find desirable dating prospects. Programming was “just like planning a dinner,” the article quoted software pioneer Admiral Grace Hopper as saying. “Women are ‘naturals’ at computer programming.” And in true Cosmopolitan fashion, the article was also accompanied by a quiz: in this case, a mini programmer aptitude test adapted from an exam developed at NCR.103

The influx of new programmer trainees and vocational school graduates into the software labor market only exacerbated an already-dire labor situation. The market was flooded with aspiring programmers with little training and no practical experience. As one study by the Association for Computing Machinery’s (ACM) SIGCPR warned, by 1968 there was a growing oversupply of a certain undesirable species of software specialist. “The ranks of the computer world are being swelled by growing hordes of programmers, systems analysts and related personnel,” the SIGCPR argued. “Educational, performance and professional standards are virtually nonexistent and confusion grows rampant in selecting, training, and assigning people to do jobs.”104

It was not just employers who were frustrated by the confused state of the labor market. “As long as I have been programming, I have heard about this ‘extreme shortage of programmers,’” wrote one Datamation reader, whose husband had unsuccessfully tried to break into the computer business. “How does a person . . . get into programming?”105 “Could you answer for me the question as to what in the eyes of industry constitutes a ‘qualified’ programmer?” pleaded another aspiring job candidate. “What education, experience, etc. are considered to satisfy the ‘qualified’ status?”106 A background in mathematics seemed increasingly irrelevant to programming, particularly in the business world, and even the emerging discipline of computer science appeared to offer no practical solution to the problem of training programmers en masse. In the absence of clear educational standards or functional aptitude exams, would-be programmers and employers alike were preyed on by a growing number of vocational schools that promised to supply both programmer training and trained programmers. During the mid-1960s these schools sprang up all over the country, promising high salaries and dazzling career opportunities, and flooding the market with candidates who were prepared to pass programming aptitude tests but nothing more. Advertisements for these vocational schools, which appeared everywhere from the classified section of newspapers to the back of paper matchbooks, emphasized the desperate demand for programmers and the low barriers to entry into the discipline: “There’s room for everyone. The industry needs people. You’ve got what it takes.”107

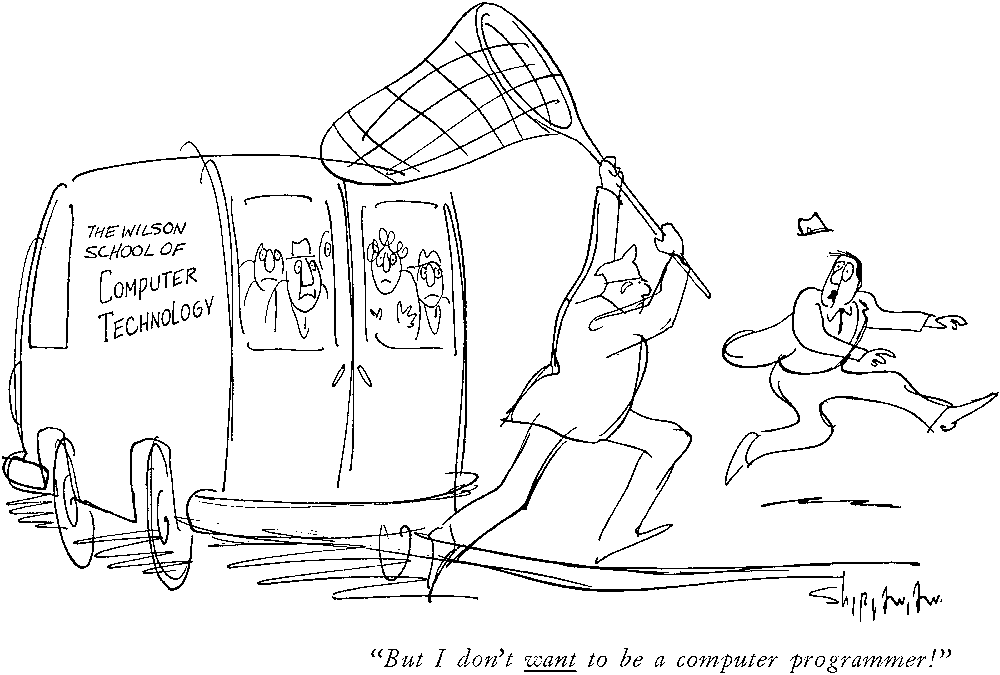

Figure 3.5

Cartoon from New Yorker magazine, May 31, 1969. © Vahan Shirvanian/The New Yorker Collection/www.cartoonbank.com.

The typical vocational school offered between three and nine months of training, and cost between $1,000 and $2,500. Students at these schools would receive four to five hours a day of training in various aspects of electronic data processing, including programming but also more basic tasks such as keypunch and tabulating machine operation. What programming training they did receive focused on the memorization of syntax rather than hands-on problem solving. Because of the high costs associated with renting computer time, the curriculum was often padded with material only tangentially related to computing—such as several days’ worth of review of basic arithmetic. A few schools did lease their own computers, but these were typically the low-end IBM System/360 Model 20, which did not possess its own disk or tape mechanism. At some schools, students could expect to receive as little as one hour of machine time in total, which had to be shared among a class of up to fifteen students.108

These schools were generally profit-oriented enterprises more interested in quantity than quality. The entrance examinations, curriculum, and fee structure of these programs were carefully constructed to comply with the requirements of the GI Bill. Aggressive salespeople promised guaranteed placement and starting salaries of up to $700 per month, at a time when the industry average monthly salary for junior programmers was closer to $400 to $500. Since these salespeople were paid on commission, and could earn as much as $150 for every student who enrolled in a $1,000 course of study, they encouraged almost anyone to apply; for many of the vocational schools, the “only meaningful entrance requirements are a high school diploma, 18 years of age . . . and the ability to pay.”109 Instructors were also compensated on a pay-as-you-go basis, which encouraged them to retain even the least competent of their students. Some of these instructors were working programmers moonlighting for additional cash, but given the overall shortage of experienced programmers in this period, most had little, if any, industry experience. Some had only the training that they had received as students in the very programs in which they were now serving as instructors.

Since these schools had an interest in recruiting as many students as possible, they made wide use of aptitude testing. Most included watered-down versions of IBM PAT in their marketing brochures, although a few offered coupons for independent testing bureaus. The version of PAT that many schools relied on was graded differently from the standardized test. A student could receive a passing grade after answering as few as 50 percent of the questions correctly, and a grade of A required only a score of 70 percent. The scores on these entrance examinations were basically irrelevant, with C and D students frequently receiving admission, but graduating students were required to take the full version of PAT. Only the top-scoring students were passed on to employment agencies, thereby boosting the school’s claims about placement records.110

There were some vocational training programs that were legitimate. The Chicago-based Automation Institute, for example—sponsored by the Council for Economic and Industrial Research (itself largely sponsored by the computer manufacturer Control Data Corporation)—maintained relatively strict standards in its nationwide chain of franchises. In 1967, the Automation Institute became the first EDP school to be accredited by the Accrediting Commission for Business Schools. There were also programs offered by community colleges and junior colleges (and even some high schools) that at least attempted to provide substantial EDP training. The more legitimate schools oriented their curricula toward the requirements of industry. But the requirements of the industry were poorly understood or articulated, and vocational schools suffered from many of the same problems that plagued industry personnel managers: a shortage of experienced instructors, the lack of established standards and curricula, and general uncertainty about what skills and aptitudes made for a qualified programmer. Conditions at most vocational EDP schools were so scandalous that by the end of the decade many companies imposed strict “no EDP school graduate” policies.111 A 1970 report by an ACM ad hoc committee on private EDP schools confirmed this reluctance on the part of employers and concluded that fewer than 60 percent of EDP school graduates were able to land jobs in the EDP field.112

Making Programming Masculine

One unintended consequence of the uncertainty in the labor market for programming personnel, an uncertainty reflected in (and in part created by) the widespread use of aptitude tests and personality profiles by corporate employers and vocational schools, was the continued masculinization of the computing professions. We have already seen how the successful (re)construction of programming in the 1950s as a black art depended, in part, on particularly male notions of mastery, creativity, and autonomy. The increasingly male subculture of computer hacking (an anachronistic term in this period, but appropriately descriptive nevertheless) was reinforced and institutionalized by the hiring practices of the industry.

At first glance, the representation of programming ability as innate, rather than an acquired skill or the product of a particular form of technical education, might be seen as gender neutral or even female friendly. The aptitude tests for programming ability were, after all, widely distributed among female employees, including clerical workers and secretaries. And one 1968 study found that a successful team of computer specialists included an “ex-farmer, a former tabulating machine operator, an ex-key punch operator, a girl who had done secretarial work, a musician and a graduate in mathematics.” The last, the mathematician, “was considered the least competent.”113 As hiring practices went, aptitude testing at least had the virtue of being impersonal and seemingly objective. Being a member of the old boys’ club does not do much for one’s scores on a standardized exam (except to the extent that fraternities and other male social organizations served as clearinghouses for stolen copies of popular aptitude tests such as IBM PAT; such theft and other forms of cheating were rampant in the industry, and taking the test more than once was almost certain to lead to a passing grade).

Yet aptitude tests and personality profiles did embody and privilege masculine characteristics. For instance, despite the growing consensus within the industry (especially in business data processing) that mathematical training was irrelevant to the performance of most commercial programming tasks, popular aptitude tests such as IBM PAT still emphasized mathematical ability.114 Some of the mathematical questions tested only logical thinking and pattern recognition, but others required formal training in mathematics—a fact that even Cosmopolitan recognized as discriminating against women. Still, the kinds of questions that could be easily tested using multiple-choice aptitude tests and mass-administered personality profiles necessarily focused on mathematical trivia, logic puzzles, and word games. The test format simply did not allow for any more nuanced, meaningful, or context-specific problem solving. And in the 1950s and 1960s at least, such questions did privilege the typical male educational experience.

Figure 3.6

According to the original caption for this cartoon, “Programmers are crazy about puzzles, tend to like research applications and risk-taking, and don’t like people.” William M. Cannon and Dallis K. Perry, “A Vocational Interest Scale for Computer Programmers,” Proceedings of the Fourth SIGCPR Conference on Computer Personnel Research (Los Angeles: ACM, 1966), 61–82.

Even more obviously gendered were the personality profiles that reinforced the ideal of the “detached” (read male) programmer. These profiles almost certainly reflected, at best, deeply flawed scientific methodology. It is nearly as certain, however, that they created a gender-biased feedback cycle that ultimately selected for programmers with stereotypically masculine characteristics. The industry’s primary selection mechanism favored antisocial, mathematically inclined males, who were consequently overrepresented in the programmer population. This overrepresentation in turn reinforced the popular perception that programmers ought to be antisocial and mathematically inclined (and therefore male), and so on ad infinitum. Combine this cycle with the often-explicit association of programming personnel with beards, sandals, and scruffiness, and it is no wonder that women felt increasingly excluded from the center of the computing community.

Finally, the explosion of unscrupulous vocational schools in this period may also have contributed to the marginalization of women in computing. Not only were these schools constructed deliberately on the model of the older—and female-oriented—typing academies and business colleges, but they also preyed specifically on those aspirants to the programming professions who most lacked access to traditional occupational and financial assets, such as those without technical educations, college degrees, personal connections, or business experience. It was frequently women who fell into this category. At the very least, by sowing confusion in the programmer labor market through encouraging false expectations, inflating standards, and rigging aptitude tests, the schools made it even more difficult for women and other unconventional candidates to enter the profession.

This bias toward male programmers was not so much deliberate as convenient: a combination of laziness, ambiguity, and traditional male privilege. That lazy screening practices inadvertently excluded large numbers of potential female trainees was simply never considered. But the growing assumption that the average programmer was male did play a key role in the establishment of a highly masculine programming subculture.

The Search for Solutions

Given that aptitude tests were perceived by many within the industry to be inaccurate, irrelevant, and susceptible to widespread cheating, why did so many employers continue to make extensive use of them well into the 1980s? The most obvious reason is that they had few other options. The rapid expansion of the commercial computer industry in the early 1960s demanded the recruitment of large armies of new professional programmers. At the same time, the increasing diversity and complexity of software systems in this period, driven in large part by the shift in focus from scientific to business computing, meant that traditional measures of programming ability (most notably formal training in mathematics or logic) were becoming ever less relevant to the quotidian practice of programming. The general lack of consensus about what constituted relevant knowledge or experience in the computer fields undermined attempts to systematize the production of programmers. Vocational EDP schools were seen as too lax in their standards, and the emerging academic discipline of computer science as too stringent. Neither was believed to be a reliable short-term solution to the burgeoning labor shortage in programming.

In the face of such uncertainty and ambiguity, aptitude testing and personality profiling promised at least the illusion of managerial control. While many of the methods used by employers at this time appear hopelessly naive to modern observers, they represented the cutting edge of personnel research. Since at least the 1920s, personnel managers had been attempting to professionalize along the lines of a scientific discipline.115 The large-scale use of psychometric technologies for personnel selection during the First and Second World Wars had seemed to many to validate their claims to scientific legitimacy.116 In the immediate postwar period, personnel researchers established new scholarly journals, professional societies, and academic programs. It is no coincidence that the heyday of aptitude testing in the software industry corresponded with this period of intense professionalization in the fields that would eventually come to be known collectively as human resources management. The programmer labor crisis of the 1950s provided the perfect opportunity for these emerging experts to practice their craft.

On an even more pragmatic level, however, aptitude testing offered a significant advantage over the available alternatives. To borrow a phrase from contemporary computer industry parlance, aptitude testing was a solution that scaled efficiently. That is to say, because each additional applicant added only a small, fixed cost, the total cost of aptitude testing grew only linearly, and modestly, with the number of applicants. It was possible, in short, to administer aptitude tests quickly and inexpensively to thousands of aspiring programmers. Compared with time-consuming and expensive alternatives such as individual interviews or formal educational requirements, aptitude testing was a cheap and easy solution. And since the contemporary emphasis on individual genius over experience or education meant that a star programmer was as likely to come from the secretarial pool as from the engineering department, the ability to screen large numbers of potential trainees was paramount.

Finally, in addition to its practical economic advantages, large-scale aptitude testing represented for many corporate employers a small but important step toward the eventual goal of mass-producing programmer trainees. Such tests were obviously not intended to evaluate the skills and abilities of experienced programmers; they were instead tools for identifying the lowest common denominator of programmer talent. The explicit goal of testing programs at large employers like SDC was to reduce the overall level of skill required of the programming workforce. By identifying the minimum level of aptitude required to be a competent programmer, SDC could reduce its dependence on individual programmers. It could construct a software factory out of the interchangeable parts produced by the impersonal, industrial processes of its aptitude-testing regime.

It is this last consequence of aptitude testing that is the most interesting and perplexing. Like all of the proposed solutions to the labor shortage in programming, aptitude testing embodied certain assumptions about the nature of the underlying problem. At first glance, the continued emphasis that aptitude tests and personality profiles placed on innate ability and creativity appeared to serve the interests of programming professionals. By reinforcing the contemporary belief that good programmers were born, not made, they gave individual programmers substantial leverage in the job market. Experienced programmers made good money, had numerous opportunities for horizontal mobility within the industry, and were relatively immune to managerial imperatives. On the other hand, aptitude tests and personality profiles also reinforced the negative perception of programmers as idiosyncratic, antisocial, and potentially unreliable. Many computer specialists were keenly aware of the labor crisis and of the tension it was producing in their industry and profession, as well as in their own careers. Although they appreciated the short-term benefits of the ongoing programmer shortage, many believed that the continued crisis threatened the long-term stability and reputation of their industry and profession.

As aptitude tests were increasingly used in a haphazard and irresponsible fashion, their value to both employers and computer specialists diminished considerably. Over the course of the late 1960s, new approaches to solving the personnel crisis emerged, each of which embodied different attitudes toward the nature of programming expertise. Beginning in the early 1960s, aspiring professional societies such as the Data Processing Management Association developed certification programs for specific fields in computer programming, systems analysis, and software design.117 These were certification exams, intended to validate the credentials of society members, not aptitude tests. But they suggested that a new approach to personnel management, the cultivation of professional norms and institutions, might be the solution to the personnel crisis. At the same time, academically minded researchers worked to elaborate a theory of computer science that would place the discipline of programming on a firm scientific foundation. For the time being, however, the preferred solution was technological rather than professional or theoretical. Drawing on traditional industrial approaches to increasing productivity and eliminating human labor, computer manufacturers worked to automate the programming process. For managers and employers in the late 1950s and early 1960s, the development of “automatic programming systems” seemed to offer the perfect solution to the labor crisis in programming.