Figure 4.1

“Susie Meyers Meets PL/1” advertisement, IBM Corporation, 1968.

4

Tower of Babel

Is a language really going to solve this problem? Do we really design languages for use by what we might call professional programmers or are we designing them for use by some sub-human species in order to get around training and having good programmers? Is a language ever going to get around the training and having good programmers?

—RAND Symposium on Programming Languages, 1962

Automatic Programmers

The first commercial electronic digital computers became available in the early 1950s. For a short period, the focus of most manufacturers was on the development of innovative hardware. Most of the users of these early computers were large and technically sophisticated corporations and government agencies. In the middle of the decade, however, users and manufacturers alike became increasingly concerned with the rising cost of software development. By the beginning of the 1960s, the origins of “software turmoil” that would soon become a full-blown software crisis were readily apparent.1

As larger and more ambitious software projects were attempted, and the shortage of experienced programmers became more pronounced, industry managers began to look for ways to reduce costs by simplifying the programming process. A number of potential solutions were proposed: the use of aptitude tests and personnel profiles to identify the truly gifted superprogrammers; updated training standards and computer science curricula; and new management methods that would allow for the use of less-skilled laborers. The most popular and widely adopted solution, however, was the development of automatic programming technologies. These new tools promised to “eliminate the middleman” by allowing users to program their computers directly, without the need for expensive programming talent.2 The computer would program itself.

Despite their associations with deskilling and routinization, automatic programming systems could also work to the benefit of occupational programmers and academic computer scientists. High-level programming promised to reduce the tedium associated with machine coding, and allowed programmers to focus on more system-oriented—and high-status—tasks such as analysis and design. Language design and development served as a focus for productive theoretical research, and helped establish computer science as a legitimate academic discipline. And automatic programming systems never did succeed in eliminating the need for skilled programmers. In many ways, they contributed to the elevation of the profession, rather than the reverse, as was originally intended by some and feared by others.

In order to understand why automatic programming languages were such an appealing solution to the software crisis as well as why they apparently had so little effect on the outcome or severity of the crisis, it is essential to consider these languages as parts of larger social and technological systems. This chapter will describe the emergence of programming languages as a means of managing the complexity of the programming process. It will trace the development of several of the most prominent automatic programming languages, particularly FORTRAN and COBOL, and situate these technologies in their appropriate historical context. Finally, it will explore the significance of these technologies as potential solutions to the ongoing software crisis of the late 1950s and early 1960s.

Assemblers, Compilers, and the Origins of the Subroutine

At the heart of every automatic programming system was the notion that a computer could be used, at least in certain limited situations, to generate the machine code required to run itself or other computers. This was an idea with great practical appeal: although programming was increasingly seen as a legitimate and challenging intellectual activity, the actual coding of a program still involved tedious and painstaking clerical work. For example, the single instruction to “add the short number in memory location 25,” when written out in the machine code understood by most computers, was stored as a binary number such as 111000000000110010. This binary notation was obviously difficult for humans to remember and manipulate. As early as 1948, researchers at Cambridge University began working on a system to represent the same instruction in a more comprehensible format. The same instruction to “add the short number in memory location 25” could be written out as A 25 S, where A stood for “add,” 25 was the decimal address of the memory location, and S indicated that a “short” number was to be used.3 A Cambridge PhD student named David Wheeler wrote a small program called Initial Orders that automatically translated this symbolic notation into the binary machine code required by the computer.
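The following Python sketch makes the idea of symbolic translation concrete. It turns an order written as a letter, an address, and a length flag into a string of bits. It is a toy built on stated assumptions. The 16-bit layout (a 5-bit function code, a 10-bit address, and a 1-bit short/long flag) and the code chosen for A are hypothetical, so the output does not reproduce the bit pattern quoted above or the real word format that Wheeler's Initial Orders produced.

```python
# Hypothetical word layout for illustration only (not the real machine format):
# 5-bit function code | 10-bit address | 1-bit length flag (S = short, L = long)
FUNCTION_CODES = {"A": 0b11100}  # assumed code for "add"
LENGTH_FLAGS = {"S": 0, "L": 1}

def assemble(order: str) -> str:
    """Translate a symbolic order such as 'A 25 S' into a binary string."""
    letter, address, length = order.split()
    addr = int(address)
    if not 0 <= addr < 2 ** 10:
        raise ValueError(f"address {addr} does not fit in 10 bits")
    return f"{FUNCTION_CODES[letter]:05b}{addr:010b}{LENGTH_FLAGS[length]:b}"

print(assemble("A 25 S"))  # prints 1110000000110010
```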

The focus of early attempts to develop automatic programming utilities was on eliminating the more unpleasant aspects of computer coding. Although in theory the actual process of programming was relatively straightforward, in practice it was quite difficult and time-consuming. A single error in any one of a thousand instructions could cause an entire program to fail. It often took hours or days of laborious effort simply to get a program to work properly. The lack of tools made finding errors next to impossible. As Maurice Wilkes, another Cambridge researcher, would later vividly recall, “It had not occurred to me that there was going to be any difficulty about getting programs working. And it was with somewhat of a shock that I realized that for the rest of my life I was going to spend a good deal of my time finding mistakes that I had made in my programs.”4

These errors, or bugs as they soon came to be known, were often introduced in the process of transcribing or reusing code fragments. Wilkes and others quickly realized that there was a great deal of code that was common to different programs—a set of instructions to calculate the sine function, for example. In addition to assigning his student Wheeler to the development of the Initial Orders program, Wilkes set him to the task of assembling a library of such common subroutines. This method of reusing previously existing code became one of the most powerful techniques available for increasing programmer efficiency. The 1951 publication of the first programming textbook, The Preparation of Programs for an Electronic Digital Computer, by Wilkes, Wheeler, and Cambridge colleague Stanley Gill, helped disseminate these ideas throughout the nascent programming community.5

While Wilkes, Wheeler, and Gill were refining their notions of a subroutine library, programmers in the United States were developing their own techniques for eliminating some of the tedium associated with coding. In 1949, John Mauchly of UNIVAC created his Short Order Code for the BINAC computer. The Short Order Code allowed Mauchly to directly enter equations into the BINAC using a fairly conventional algebraic notation. The system did not actually produce program code, however: it was an interpretative system that merely called up predefined subroutines and displayed the result. Nevertheless, the Short Order Code represented a considerable improvement over the standard binary instruction set.

In 1951 Grace Hopper, another UNIVAC employee, wrote the first automatic program compiler. Although Hopper, like many other programmers, had benefited from the development of a subroutine library, she also perceived the limitations connected with its use. In order to be widely applicable, subroutines had to be written as generically as possible. They all started at line 0 and were numbered sequentially from there. They also used a standard set of register addresses. In order to make use of a subroutine, a programmer had to both copy the routine code exactly and make the necessary adjustments to the register addresses by adding an offset appropriate to the particular program at hand. And as Hopper was later fond of asserting, programmers were both “lousy adders” and “lousy copyists.”6 The process of utilizing the subroutine code almost inevitably added to the number of errors that eventually had to be debugged.
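The bookkeeping Hopper objected to fits in a few lines of code. In this hypothetical Python model, each subroutine is written as if it began at line 0. Placing it in a program means adding the program's offset to every address that refers to a line inside the subroutine. Here, relocate performs the adjustment programmers made by hand. The function link repeats that adjustment for each routine in turn, which is the kind of gathering a compiler could take over. The instruction format, the relative/absolute flag, and the sample routines are assumptions for illustration. They do not reconstruct any UNIVAC code.

```python
from typing import List, Tuple

# Hypothetical format: (operation, address, is_relative).
# Relative addresses are written as if the subroutine began at line 0.
Instruction = Tuple[str, int, bool]
Placed = Tuple[str, int]

def relocate(sub: List[Instruction], offset: int) -> List[Placed]:
    """Add `offset` to every relative address; leave absolute addresses alone."""
    return [(op, addr + offset if rel else addr) for op, addr, rel in sub]

def link(subs: List[List[Instruction]], start: int = 0) -> List[Placed]:
    """Place subroutines end to end, relocating each one to where it lands."""
    program: List[Placed] = []
    position = start
    for sub in subs:
        program.extend(relocate(sub, position))
        position += len(sub)
    return program

# Two toy subroutines, each numbered from line 0.
SUB_A = [("LOAD", 100, False), ("JUMP", 2, True), ("STORE", 101, False)]
SUB_B = [("LOAD", 200, False), ("JUMP", 0, True)]

print(link([SUB_A, SUB_B]))
# [('LOAD', 100), ('JUMP', 2), ('STORE', 101), ('LOAD', 200), ('JUMP', 3)]
```

Every addition in relocate is one that a "lousy adder" working by hand could get wrong.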

To avoid the problems associated with manually copying and manipulating subroutine libraries, Hopper developed a system to automatically gather subroutine code and make the appropriate address adjustments. The system then compiled the subroutines into a complete machine program. Her A-0 compiler dramatically reduced the time required to put together a working application. In 1952 she extended the language to include a simpler mnemonic interface. For example, the mathematical statement X + Y = Z could be written as ADD 00X 00Y 00Z. Multiplying Z by T to give W was MUL 00Z 00T 00W. The combination of an algebraic-language interface and a subroutine compiler became the basis for almost all modern programming languages. By the end of 1953 the A-2 compiler, as it was then known, was in use at the Army Map Service, Lawrence Livermore Laboratories, New York University, the Bureau of Ships, and the David Taylor Model Basin. Although it would take some time before automatic programming systems were universally adopted, by the mid-1950s the technology was well on its way to becoming an essential element of programming practice.
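A small interpreter can illustrate the three-address statements quoted above. This Python sketch simplifies heavily. It executes each ADD or MUL line directly against a dictionary of named cells, whereas the A-2 system compiled such statements into machine code by assembling subroutines. The dictionary-based memory and the operation table are hypothetical.

```python
import operator
from typing import Dict

# Assumed operation table; only ADD and MUL appear in the examples above.
OPERATIONS = {"ADD": operator.add, "MUL": operator.mul}

def run(program: str, memory: Dict[str, float]) -> Dict[str, float]:
    """Execute three-address lines of the form 'OP source1 source2 destination'."""
    for line in program.strip().splitlines():
        op, src1, src2, dest = line.split()
        memory[dest] = OPERATIONS[op](memory[src1], memory[src2])
    return memory

memory = run("""
ADD 00X 00Y 00Z
MUL 00Z 00T 00W
""", {"00X": 2, "00Y": 3, "00T": 4})
print(memory["00Z"], memory["00W"])  # prints 5 20
```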

Over the course of the next several decades, more than a thousand code assemblers, programming languages, and other automatic programming systems were developed in the United States alone. Understanding how these systems were used, how and to whom they were marketed, and why there were so many of them is a crucial aspect of the history of the programming professions. Automatic programming languages were the first and perhaps the most popular response to the burgeoning software crisis of the late 1950s and early 1960s. In many ways the entire history of computer programming—both social and technical—has been defined by the search for a silver bullet capable of slaying what Frederick Brooks famously referred to as the werewolf of “missed schedules, blown budgets, and flawed products.”7 The most obvious solution to what was often perceived to be a technical problem was, not surprisingly, the development of better technology.

Automatic programming languages were an appealing solution to the software crisis for a number of reasons. Computer manufacturers were interested in making software development as straightforward and inexpensive as possible. After all, as an early introduction to programming on the UNIVAC pointedly reminded its readers, “The sale and acceptance of these machines is, to some extent, related to the ease with which they can be programmed. As a result, a great deal of research has been done, or is being done, to make programming simpler and more understandable.”8 Advertisements for early automatic programming systems made outrageous and unsubstantiated claims about the ability of their systems to simplify the programming process.9 In many cases, they were specifically marketed as a replacement for human programmers. Fred Gruenberger noted this tendency as early as 1962 in a widely disseminated transcript of a RAND Symposium on Programming Languages: “You know, I’ve never seen a hot dog language come out yet in the last 14 years—beginning with Mrs. Hopper’s A-0 compiler . . . that didn’t have tied to it the claim in its brochure that this one will eliminate all programmers. The last one we got was just three days ago from General Electric (making the same claim for the G-WIZ compiler) that this one will eliminate programmers. Managers can now do their own programming; engineers can do their own programming, etc. As always, the claim seems to be made that programmers are not needed anymore.”10

Advertisements for these new automatic programming technologies, which appeared in management-oriented publications such as Business Week and the Wall Street Journal rather than Datamation or the Communications of the ACM, were clearly aimed at a pressing concern: the rising costs associated with finding and recruiting talented programming personnel. This perceived shortage of programmers was an issue that loomed large in the minds of many industry observers. “First on anyone’s checklist of professional problems,” declared a Datamation editorial in 1962, “is the manpower shortage of both trained and even untrained programmers, operators, logical designers and engineers in a variety of flavors.”11 The so-called programmer problem became an increasingly important feature of contemporary crisis rhetoric. “The number of computers in use in the U.S. is expected to leap from the present 35,000 to 60,000 by 1970 and to 85,000 in 1975,” Fortune magazine ominously predicted in 1967; “The software man will be in even greater demand in 1970 than he is today.”12 Automatic programming systems held an obvious appeal for managers concerned with the rising costs of software development.

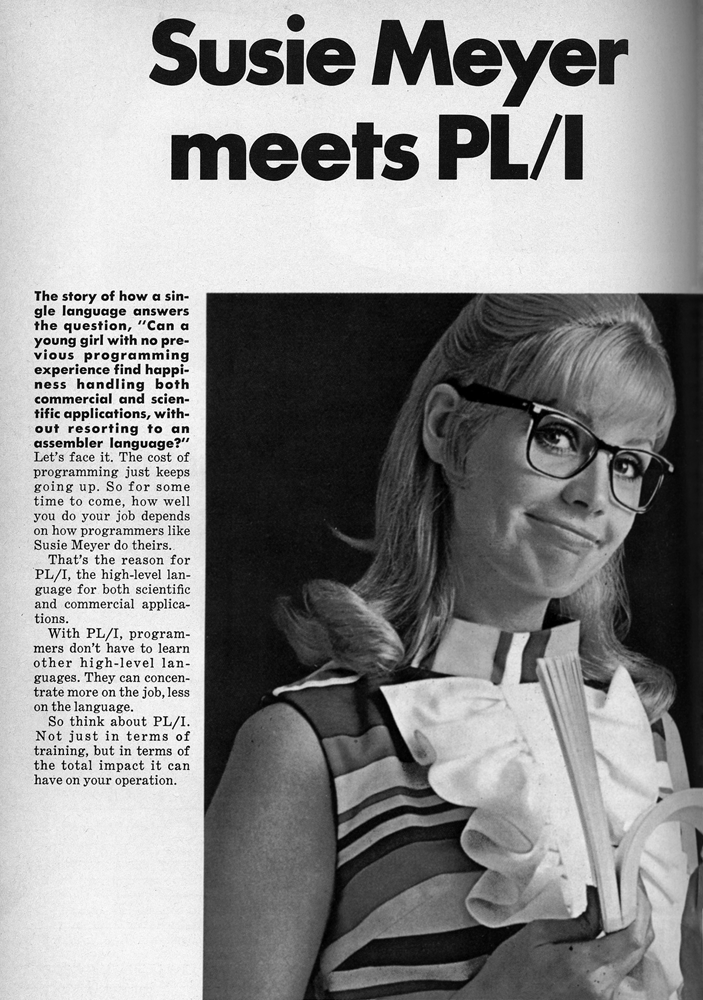

Figure 4.1 shows one of a series of advertisements that presented an unambiguous appeal to gender associations: machines could not only replace their human female equivalents but also improve on them. In its “Meet Susie Meyers” advertisements for its PL/1 programming language, the IBM Corporation asked its users an obviously rhetorical question: “Can a young girl with no previous programming experience find happiness handling both commercial and scientific applications, without resorting to an assembler language?” The answer, of course, was an enthusiastic “yes!” Although the advertisement promised a “brighter future for your programmers” (who would be free to “concentrate more on the job, less on the language”), it also implied a low-cost solution to the labor crisis in software. The subtext of appeals like this was none too subtle: if pretty little Susie Meyers, with her spunky miniskirt and utter lack of programming experience, could develop software effectively in PL/1, so could just about anyone.


It should be noted that the use of women as proxies for low-cost or low-skill labor was not confined to the computer industry. One of the time-honored strategies for dealing with labor “problems” in the United States has been the use of female workers. There is a vast historical literature on this topic; from the origins of the U.S. industrial system, women have been seen as a source of cheap, compliant, and undemanding labor.13 The same dynamic was at work in computer programming. In a 1963 Datamation article lauding the virtues of the female computer programmer, for example, Valerie Rockmael focused specifically on her stability, reliability, and relative docility: “Women are less aggressive and more content in one position. . . . Women consider fringe benefits of more importance than their male peers and are more prone to stay on the job if they are content, regardless of a lack of advancement. They also maintain their original geographic roots and are less willing to travel or change job locations, particularly if they are married or engaged.” In an era in which turnover rates for programmers averaged 20 percent annually, this was a compelling argument for employers, since the substantial initial expenditure on training “pays a greater dividend” when invested in female employees. Note that this was something of a backhanded compliment, aimed more at the needs of employers than at those of female programmers. In fact, the “most undesirable category of programmers,” Rockmael contended, was “the female about 21 years old and unmarried,” because “when she would start thinking about her social commitments for the weekend, her work suffered proportionately.”14

Whatever the motivation behind the development and adoption of any particular automatic programming system, by the mid-1950s, a number of these systems were being proposed by various manufacturers. Two of the most popular and significant were FORTRAN and COBOL, each developed by different groups and intended for different purposes.

FORTRAN

Although Hopper’s A-2 compiler was arguably the first modern automatic programming system, the first widely used and disseminated programming language was FORTRAN, developed in 1954–1957 by a team of researchers at the IBM Corporation. As early as 1953, the mathematician and programmer John Backus had proposed to his IBM employers the development of a new, scientifically oriented programming language. This new system for mathematical FORmula TRANslation would be designed specifically for use with the soon-to-be-released IBM 704 scientific computer. It would “enable the IBM 704 to accept a concise formulation of a problem in terms of a mathematical notation and [would] produce automatically a high-speed 704 program for the solution of the problem.”15 The result would be faster, more reliable, and less expensive software development. FORTRAN would not only “virtually eliminate programming and debugging” but also reduce operation time, double machine output, and provide a means of feasibly investigating complex mathematical models. In January 1954 Backus was given the go-ahead by his IBM superiors, and a completed FORTRAN compiler was released to all 704 installations in April 1957.

From the beginning, development of the FORTRAN language was focused around a single overarching design objective: the creation of efficient machine code. Project leader Backus was highly critical of existing automatic programming systems, which he saw as little more than mnemonic code assemblers or collections of subroutines. He also had little regard for most contemporary human programmers, whom he often derisively insisted on calling coders. When asked about the transformation of the coder into the programmer, for instance, Backus dismissively suggested that “it’s the same reason that janitors are now called ‘custodians.’ ‘Programmer’ was considered a higher class enterprise than ‘coder,’ and things have a tendency to move in that direction.”16

A truly automatic programming language, believed Backus, would allow scientists and engineers to communicate directly with the computer, thus eliminating the need for inefficient and unreliable programmers.17 The only way that such a system would be widely adopted, however, was to ensure that the code it produced would be at least as efficient, in terms of size and performance, as that produced by its human counterparts.18 Indeed, one of the primary objections raised against automatic programming languages in this period was their relative inefficiency: one of the higher-level languages used by SAGE developers produced programs that ran an order of magnitude slower than those hand coded by a top-notch programmer.19 In an era when programming skill was considered to be a uniquely creative and innate ability, and when the state of contemporary hardware made performance considerations paramount, users were understandably skeptical of the value of automatically generated machine code.20

The focus of the FORTRAN developers was therefore on the construction of an efficient compiler, rather than on the design of the language.

In order to ensure that the object code produced by the FORTRAN compiler was as efficient as possible, several design compromises had to be made. FORTRAN was originally intended primarily for use on the IBM 704, and contained several device-specific instructions. Little thought was given to making FORTRAN machine independent, and early implementations varied greatly from computer to computer, even those developed by the same manufacturer. The language was also designed solely for use in numerical computations, and was therefore difficult to use for applications requiring the manipulation of alphanumeric data. The first FORTRAN manual made this focus on mathematical problem solving clear: “The FORTRAN language is intended to be capable of expressing any problem of numerical computation. In particular, it deals easily with problems containing large sets of formulae and many variables and it permits any variable to have up to three independent subscripts. For problems in which machine words have a logical rather than numerical meaning, however, FORTRAN is less satisfactory, and it may fail entirely to express some such problems. Nevertheless many logical operations not directly expressible in the FORTRAN language can be obtained by making use of provisions for incorporating library routines.”21

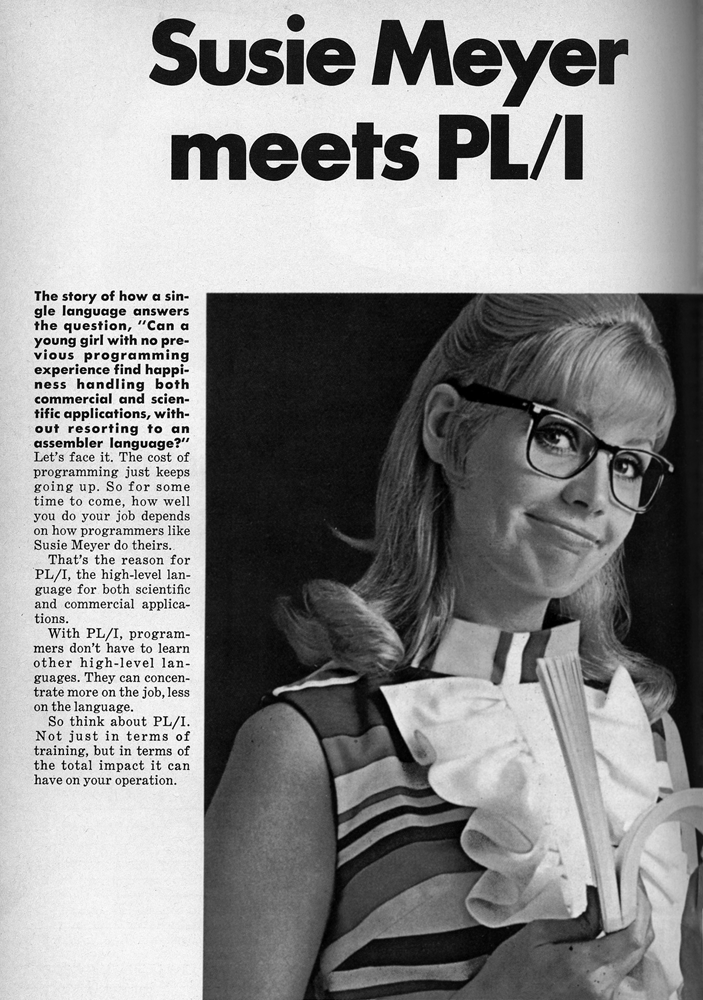

The power of the FORTRAN language for scientific computation can be clearly demonstrated by a simple real-world example. The mathematical expression described by the function

could be written in FORTRAN using the following syntax:

Z(I) = SQRTF(A(I)*X(I)**2 + B(I)*Y(I))

Using such straightforward algorithmic expressions, a programmer could write extremely sophisticated programs with relatively little training and experience.22
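A rough Python counterpart to the FORTRAN statement follows, for readers more familiar with modern languages. It applies the same formula to every index in a single pass. In FORTRAN the statement computes a single element Z(I) and would normally sit inside a DO loop. Two details are worth noting. SQRTF was the square-root function name in early FORTRAN, and math.sqrt stands in for it here. Python lists are also indexed from 0 rather than 1. The function name evaluate and the sample values are hypothetical.

```python
import math
from typing import List, Sequence

def evaluate(a: Sequence[float], b: Sequence[float],
             x: Sequence[float], y: Sequence[float]) -> List[float]:
    """Compute z_i = sqrt(a_i * x_i**2 + b_i * y_i) for every index i.

    math.sqrt raises ValueError if a radicand is negative.
    """
    return [math.sqrt(ai * xi ** 2 + bi * yi) for ai, bi, xi, yi in zip(a, b, x, y)]

print(evaluate([1.0, 2.0], [3.0, 1.0], [4.0, 2.0], [3.0, 1.0]))  # prints [5.0, 3.0]
```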

Although greeted initially with skepticism, the FORTRAN project was enormously successful in the long term. A report on FORTRAN usage written just one year after the first release of the language indicated that “over half [of the 26 installations of the 704] used FORTRAN for more than half of their problems.”23 By the end of 1958, IBM had produced FORTRAN systems for its 709 and 650 machines. As early as January 1961 Remington Rand UNIVAC became the first non-IBM manufacturer to provide FORTRAN, and by 1963 a version of the FORTRAN compiler was available for almost every computer then in existence.24 The language was updated substantially in 1958 and again in 1962. In 1962, work began on making FORTRAN the first programming language to be standardized through the American Standards Association, a process that further established FORTRAN as an industrywide standard.25

The academic community was an early and crucial supporter of FORTRAN, contributing directly to its growing popularity. The FORTRAN designers in general, and Backus in particular, were regular participants in academic forums and conferences. Backus himself had delivered a paper at the seminal Symposium on Automatic Programming for Digital Computers hosted by the Office of Naval Research in 1954. One of his top priorities, after the compilation of the FORTRAN Programmer’s Reference Manual (itself a model of scholarly elegance and simplicity), was to publish an academically oriented article that would introduce the new language to the scientific community.26 Backus would later become widely known throughout the academic community as the codeveloper of the Backus-Naur Form, the notational system used to describe the syntax of most modern programming languages.

FORTRAN was appealing to scientists and other academics for a number of reasons. First of all, it was designed and developed by one of their own. Backus spoke their language, published in their journals, and shared their disdain for coders and other “technicians.” Second, FORTRAN was designed specifically to solve the kinds of problems that interested academics. Its use of algebraic expressions greatly simplified the process of defining mathematical problems in machine-readable syntax. Finally, and perhaps most significantly, FORTRAN provided them with more direct access to the computer. Its introduction “caused a partial revolution in the way in which computer installations were run because it became not only possible but quite practical to have engineers, scientists, and other people actually programming their own problems without the intermediary of a professional programmer.”27 The use of FORTRAN actually became the centerpiece of an ongoing debate about “open” versus “closed” programming “shops.” Closed shops allowed only professional programmers to have access to the computers; open shops made these machines directly available to their users.

The association of FORTRAN with scientific computing was a self-replicating phenomenon. Academics preferred FORTRAN to other languages because they believed it allowed them to do their work more effectively and they therefore made FORTRAN the foundation of their computing curricula. Students learned the language in university courses and were thus more effective at getting their work done in FORTRAN. A positive feedback loop was established between FORTRAN and academia. A survey in 1973 of more than thirty-five thousand students taking college-level computing courses revealed that 70 percent were learning to program using FORTRAN. The next most widely used alternative, BASIC, was used by only 13 percent, and less than 3 percent were exposed to business-oriented languages such as COBOL.28 Throughout the 1960s and 1970s, FORTRAN was clearly the dominant language of scientific computation.

COBOL

On April 8, 1959, a group of computer manufacturers, users, and academics met at the University of Pennsylvania’s Computing Center to discuss a proposal to develop “the specifications for a common business language [CBL] for automatic digital computers.”29 The goal was to develop a programming language specifically aimed at the needs of the business data processing community. This new language would rely on simple Englishlike commands, would be easier to use and understand than existing scientific languages, and would provide machine-independent compatibility: that is, the same program could be run on a wide variety of hardware with little modification.

Although this proposal originated in the ElectroData Division of the Burroughs Corporation, from the beginning it had broad industrial and governmental support. The director of data systems for the U.S. Department of Defense readily agreed to sponsor a formal meeting on the proposal, and his enthusiastic support indicates a widespread contemporary interest in business-oriented programming: “The Department of Defense was pleased to undertake this project: in fact, we were embarrassed that the idea for such a common language had not had its origin in Defense since we would benefit so greatly from such a project.”30

The first meeting to discuss a CBL was held at the Pentagon on May 28–29, 1959. Attending the meeting were fifteen officials from seven government organizations; fifteen representatives of the major computer manufacturers (including Burroughs, GE, Honeywell, IBM, NCR, Philips, RCA, Remington Rand UNIVAC, Sylvania, and ICT); and eleven users and consultants (significantly, only one member of this last group was from a university). Despite the diversity of the participants, the meeting produced both consensus and a tangible plan of action. The group not only decided that CBL was necessary and desirable but also agreed on its basic characteristics: a problem-oriented, Englishlike syntax; a focus on ease of use rather than power or performance; and a machine-independent design. Three committees were established, under the auspices of a single Executive Committee of the Conference on Data Systems Languages (CODASYL), to suggest short-term, intermediate, and long-range solutions, respectively. As it turned out, it was the short-term committee that produced the most lasting and influential proposals.

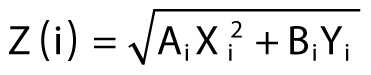

Figure 4.2

NCR, Quickdraw programming language, 1968.

The original purpose of the Short-Range Committee was to evaluate the strengths and weaknesses of existing automatic compilers, and recommend a “short term composite approach (good for the next year or two) to a common business language for programming digital computers.”31 There were three existing compiler systems that the committee was particularly interested in considering: FLOW-MATIC, which had been developed for Remington Rand UNIVAC by Grace Hopper (as an outgrowth of her A-series algebraic and B-series business compilers), and which was actually in use by customers at the time; AIMACO, developed for the Air Force Air Materiel Command; and COMTRAN (soon to be renamed the Commercial Translator), a proposed IBM product that existed only as a specification document. Other languages considered were Autocoder III, SURGE, FORTRAN, RCA 501 Assembler, Report Generator, and APG-1.32 Manufacturers such as Sylvania and RCA were also developing similar languages of their own. Indeed, one of the primary goals of the Short-Range Committee was to “nip these projects in the bud” and provide incentives for manufacturers to standardize on the CBL rather than pursue their own independent agendas. At the first meeting of one of the Short-Range Committee task groups, for example, most of the time was spent getting statements of commitment from the various manufacturers.33

From the start, the process of designing the CBL was characterized by a spirit of pragmatism and compromise. The Short-Range Committee, referred to by insiders as the PDQ (“pretty darn quick”) Committee, took seriously its charge to work quickly to produce an interim solution. Remarkably enough, less than three months later the committee had produced a nearly complete draft of a proposed CBL specification. In doing so, the CBL designers borrowed freely from models provided by Remington Rand UNIVAC’s FLOW-MATIC language and the IBM Commercial Translator. In a September report to the Executive Committee of CODASYL, the Short-Range Committee requested permission to continue development on the CBL specification, to be completed by December 1, 1959. The name COBOL (Common Business Oriented Language) was formally adopted shortly thereafter. Working around the clock for the next several months, the PDQ group was able to produce its finished report just in time for its December deadline. The report was approved by CODASYL, and in January 1960 the official COBOL-60 specification was released by the U.S. Government Printing Office.

The structure of the COBOL-60 specification reveals its mixed origins and commercial orientation. Although from the beginning the COBOL designers were concerned with “business data processing,” there was never any attempt to provide a real definition of that phrase.34 It was clearly intended that the language could be used by novice programmers and read by managers. For example, an instruction to compute an employee’s overtime pay might be written as follows:

MULTIPLY NUMBER-OVTIME-HRS BY OVTIME-PAY-RATE
    GIVING OVTIME-PAY-TOTAL

It was felt that this readability would result from the use of English-language instructions, although no formal criteria or tests for readability were provided. In many cases, compromises were made that allowed for conflicting interpretations of what made for “readable” computer code. Arithmetic formulas, for instance, could either be written using a combination of arithmetic verbs—that is, ADD, SUBTRACT, MULTIPLY, or DIVIDE—or as symbolic formulas. The use of arithmetic verbs was adapted directly from the FLOW-MATIC language, and reflected the belief that business data processing users could not—and should not—be forced to use formulas. The capability to write symbolic formulas was included (after much contentious debate) as a means of providing power and flexibility to more mathematically sophisticated programmers. Traditional mathematical functions such as SINE and COSINE, however, were deliberately excluded as being unnecessary to business data processing applications.
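A toy interpreter for GIVING statements can sketch the difference between the verb style and the formula style. It assumes the operand order of the standard COBOL arithmetic verbs: SUBTRACT A FROM B yields B minus A, and DIVIDE A INTO B yields B divided by A. Python's decimal module stands in for fixed-point business arithmetic. The function name, the field values, and the restriction to the GIVING form are illustrative assumptions. None of this describes an actual COBOL compiler.

```python
from decimal import Decimal
from typing import Callable, Dict, Tuple

# Operand order follows the COBOL verbs: SUBTRACT A FROM B gives B - A,
# and DIVIDE A INTO B gives B / A.
VERBS: Dict[Tuple[str, str], Callable[[Decimal, Decimal], Decimal]] = {
    ("ADD", "TO"): lambda a, b: a + b,
    ("SUBTRACT", "FROM"): lambda a, b: b - a,
    ("MULTIPLY", "BY"): lambda a, b: a * b,
    ("DIVIDE", "INTO"): lambda a, b: b / a,
}

def execute_verb(statement: str, fields: Dict[str, Decimal]) -> None:
    """Run '<VERB> <A> <PREPOSITION> <B> GIVING <C>' against named fields."""
    verb, first, prep, second, giving, target = statement.split()
    if giving != "GIVING":
        raise ValueError("only the GIVING form is supported in this sketch")
    fields[target] = VERBS[(verb, prep)](fields[first], fields[second])

# Hypothetical field values.
fields = {"NUMBER-OVTIME-HRS": Decimal("6"), "OVTIME-PAY-RATE": Decimal("4.50")}
execute_verb("MULTIPLY NUMBER-OVTIME-HRS BY OVTIME-PAY-RATE GIVING OVTIME-PAY-TOTAL", fields)

# The same computation written as a symbolic formula.
formula_total = fields["NUMBER-OVTIME-HRS"] * fields["OVTIME-PAY-RATE"]
print(fields["OVTIME-PAY-TOTAL"], formula_total == fields["OVTIME-PAY-TOTAL"])  # prints 27.00 True
```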

Another concession to the objective of readability was the inclusion of extraneous “noise words.” These were words or phrases that were allowable but not necessary: for example, in the statement

READ file1 RECORD INTO variable1 AT END GO TO procedure2

the words RECORD and AT are syntactically superfluous. The statement would be equally valid written as

READ file1 INTO variable1 END GO TO procedure2

The inclusion of the noise words RECORD and AT was perceived by the designers to enhance readability. Users had the option of including or excluding them according to individual preference or corporate policy.
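Stripping the noise words before comparison shows that they change nothing for the machine. The sketch below assumes only two optional words for this READ format: RECORD directly after the file name, and AT directly before END. It writes the jump with COBOL's two-word GO TO. A real compiler would handle optional words in its grammar rather than with a token filter like this hypothetical normalize_read.

```python
from typing import List

def normalize_read(statement: str) -> List[str]:
    """Drop the optional words RECORD (after the file name) and AT (before END)."""
    tokens = statement.upper().split()
    if not tokens or tokens[0] != "READ":
        raise ValueError("not a READ statement")
    kept = []
    for i, token in enumerate(tokens):
        if token == "RECORD" and i == 2:
            continue  # READ <file> RECORD ...
        if token == "AT" and i + 1 < len(tokens) and tokens[i + 1] == "END":
            continue  # ... AT END ...
        kept.append(token)
    return kept

long_form = "READ file1 RECORD INTO variable1 AT END GO TO procedure2"
short_form = "READ file1 INTO variable1 END GO TO procedure2"
print(normalize_read(long_form) == normalize_read(short_form))  # prints True
```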

In addition to designing COBOL to be Englishlike and readable, the committee was careful to make it as machine-independent as possible. Most contemporary programming systems were tied to a specific processor or product line. If the user wanted to replace or upgrade their computer, or switch to machines from a different manufacturer, they had to completely rewrite their software from scratch, typically an expensive, risky, and time-consuming operation. Users often became bound to outdated and inefficient hardware systems simply because of the enormous costs associated with upgrading their software applications. This was especially true for commercial data processing operations, where computers were generally embedded in large, complex systems of people, procedures, and technology. A truly machine-independent language would allow corporations to reuse application code, thereby reducing the programming and maintenance costs. It would also allow manufacturers to sell or lease more of their most recent (and profitable) computers.

The COBOL language was deliberately organized in such a way as to encourage portability from one machine to another. Every element of a COBOL application was assigned to one of four functional divisions: IDENTIFICATION, ENVIRONMENT, DATA, and PROCEDURE. The IDENTIFICATION division offered a high-level description of the program, including its name, author, and creation date. The ENVIRONMENT division contained information about the specific hardware on which the program was to be compiled and run. The DATA division described the file and record layout of the data used or created by the rest of the application. The PROCEDURE division included the algorithms and procedures that the user wished the computer to follow. Ideally, this rigid separation of functional divisions would allow a user to take a deck of cards from one machine to another without making significant alterations to anything but the ENVIRONMENT description. In reality, this degree of portability was almost impossible to achieve in real-world applications in which performance was a primary consideration. For example, the most efficient method of laying out a file for a twenty-four-bit computer was not necessarily optimal for a thirty-six-bit machine. Nevertheless, machine independence “was a major, if not the major,” design objective of the Short-Range Committee.35 Achieving this objective proved difficult both technically and politically, and greatly influenced both the design of the COBOL specification and its subsequent reception within the computing community.

One of the greatest obstacles to achieving machine independence was the computer manufacturers themselves. Each manufacturer wanted to make sure that COBOL included only features that would run efficiently on their devices. For instance, a number of users wanted the language to include the ability to read a file in reverse order. For those machines that had a basic machine command to read a tape backward this was an easy feature to implement. Even those computers without this explicit capability could achieve the same functionality by backing the tape up two records and then reading forward one. Although this potential READ REVERSE command could therefore be logically implemented by everyone, it significantly penalized those devices without the basic machine capability. It was therefore not included in the final specification.
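The workaround for machines without a read-backward command can be sketched as a small simulation. This is a minimal Python model under stated assumptions, not a historical implementation: ForwardOnlyTape, read_forward, backspace, and read_reverse are hypothetical names, and counting each backspace or read as one “movement” is a simplification that ignores rewind, start-stop, and inter-record-gap timing. It shows why the emulation was costly: each emulated reverse read takes three tape motions, where a drive able to read backward would need roughly one.

```python
class ForwardOnlyTape:
    """Hypothetical tape drive with no read-backward command: it can only
    read the next record (advancing the head) or backspace one record."""

    def __init__(self, records):
        self.records = list(records)
        self.head = 0        # index of the record the next forward read returns
        self.movements = 0   # tape motions performed, a crude cost measure

    def read_forward(self):
        record = self.records[self.head]
        self.head += 1
        self.movements += 1
        return record

    def backspace(self):
        if self.head == 0:
            raise IndexError("already at beginning of tape")
        self.head -= 1
        self.movements += 1


def read_reverse(tape):
    """Emulated READ REVERSE: return the record preceding the one most
    recently read by backing up two records and reading forward one."""
    tape.backspace()
    tape.backspace()
    return tape.read_forward()


tape = ForwardOnlyTape(["A", "B", "C", "D"])
while tape.head < len(tape.records):   # read the whole file forward first
    tape.read_forward()
forward_cost = tape.movements          # 4 motions for 4 records

backward = [read_reverse(tape) for _ in range(3)]
print(backward)                        # ['C', 'B', 'A']
print(tape.movements - forward_cost)   # 9 motions for 3 records
```

The feature was logically possible everywhere, as the text notes; the penalty shows up only in the motion count, which is the kind of performance cost that made manufacturers without the native command resist it.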

Other compromises were also made for the sake of machine independence. In order to maintain compatibility among different machines with different arithmetic capabilities, eighteen decimal digits were chosen as the maximum degree of precision supported. This particular degree of precision was chosen “for the simple reason that it was disadvantageous to every computer thought to be a potential candidate for having a COBOL compiler.”36 No particular manufacturer would thus have an inherent advantage in terms of performance. In a similar manner, provisions were made for the use of binary computers, despite the fact that such machines were generally not considered appropriate for business data processing. The decision to allow only a limited character set in statement definitions, using only those characters that were physically available on almost all data-entry machines, was a self-imposed constraint that had “an enormous influence on the syntax of the language,” but was nevertheless considered essential to widespread industry adoption. The use of such a minimal character set also prevented the designers from using the sophisticated reference-language techniques of the ALGOL 58 specification that had so enamored theoretical computer scientists.

This dedication to the ideal of portability set the Short-Range Committee at odds with some of its fellow members of CODASYL. In October 1959, the Intermediate-Range Committee passed a motion declaring that the FACT programming language, recently released by the Honeywell Corporation, was a better language than that produced by the Short-Range Committee and hence should form the basis of the CBL.37 Although many members of the Short-Range Committee agreed that FACT was indeed a technically advanced and superior language, they rejected any solution that was tied to any particular manufacturer. In order to ensure that the CBL would be a truly common business language, elegance and efficiency had to be compromised for the sake of readability and machine independence. Despite the opposition of the Intermediate-Range Committee (and the Honeywell representatives), the Executive Committee of CODASYL eventually agreed with the design priorities advocated by the PDQ group.

The first COBOL compilers were developed in 1960 by Remington Rand UNIVAC and RCA. In December of that year, the two companies hosted a dramatic demonstration of the cross-platform compatibility of their individual compilers: the same COBOL program, with only the ENVIRONMENT division needing to be modified, was run successfully on machines from both manufacturers. Although this was a compelling demonstration of COBOL’s potential, other manufacturers were slow to develop their own COBOL compilers. Honeywell and IBM, for example, were loath to abandon their own independent business languages. Honeywell’s FACT had been widely praised for its technical excellence, and the IBM Commercial Translator already had an established customer base.38 By the end of 1960, however, the U.S. military had put the full weight of its prestige and purchasing power behind COBOL. The Department of Defense announced that it would not lease or purchase any new computer without a COBOL compiler unless its manufacturer could demonstrate that its performance would not be enhanced by the availability of COBOL.39 No manufacturer ever attempted such a demonstration, and within a year COBOL was well on its way toward becoming an industry standard.

It is difficult to establish empirically how widely COBOL was adopted, but anecdotal evidence suggests that it is by far the most popular and widely used computer language ever.40 A recent study undertaken in response to the perceived Y2K crisis suggests that there are seventy billion lines of COBOL code currently in operation in the United States alone. Despite its obvious popularity, though, COBOL has faced severe criticism and opposition from the beginning, especially from within the computer science community. One programming language textbook from 1977 judged COBOL’s programming features as fair, its implementation-dependent features as poor, and its overall writing as fair to poor. It also noted its “tortuously poor compactness and poor uniformity.”41 The noted computer scientist Edsger Dijkstra wrote that “COBOL cripples the mind,” and another of his colleagues called it “terrible” and “ugly.”42 Several notable textbooks on programming languages from the 1980s did not even include COBOL in the index.

There are a number of reasons why computer scientists have been so harsh in their evaluation of COBOL. Some of these objections are technical in nature, but most are aesthetic, historical, or political. Most of the technical criticisms have to do with COBOL’s verbosity, its inclusion of superfluous noise words, and its lack of certain features (such as protected module variables). Although many of these shortcomings were addressed in subsequent versions of the COBOL specification, the academic world continued to vilify the language. In an article from 1985 titled “The Relationship between COBOL and Computer Science,” the computer scientist Ben Shneiderman identified several explanations for this continued hostility. First of all, no academics were asked to serve on the initial design team. In fact, the COBOL developers apparently had little interest in the academic or scientific aspects of their work. All of the articles included in a May 1962 Communications of the ACM issue devoted to COBOL were written by industry or government practitioners. Only four of the thirteen included even the most basic references to previous and related work; the lack of academic sensibilities was immediately apparent. Also noticeably lacking was any reference to the recently developed Backus-Naur Form notation that had already become popular as a metalanguage for describing other programming languages. No attempt was made to produce a textbook explaining the conceptual foundations of COBOL until 1963. Most significant, however, was the sense that the problem domain addressed by the COBOL designers, business data processing, was not theoretically sophisticated or interesting. One programming language textbook from 1974 portrayed COBOL as having “an orientation toward business data processing . . . in which the problems are . . . relatively simple algorithms coupled with high-volume input-output (e.g., computing the payroll for a large organization).” Although this dismissive account hardly captures the complexities of many large-scale business applications, it does appear to accurately represent a prevailing attitude among computer scientists. COBOL was considered a “trade-school” language rather than a serious intellectual accomplishment.43

Despite these objections, COBOL has proven remarkably successful. Certainly the support of the U.S. government had a great deal to do with its initial widespread adoption. But COBOL was attractive to users—business corporations in particular—for other reasons as well. The belief that Englishlike COBOL code could be read and understood by nonprogrammers was appealing to traditional managers who were worried about the dangers of “letting the ‘computer boys’ take over.”44 It was also hoped that COBOL would achieve true machine independence—arguably the holy grail of language designers—and of all its competitors, COBOL did perhaps come closest to achieving this ideal. Although critics have derided COBOL as the inelegant result of “design by committee,” the broad inclusiveness of CODASYL helped ensure that no one manufacturer’s hardware would be favored. Committee control over the language specification also prevented splintering: whereas numerous competing dialects of FORTRAN and ALGOL were developed, COBOL implementations remained relatively homogeneous. The CODASYL structure also provided a mechanism for ongoing language maintenance with periodic “official” updates and releases.

ALGOL, Pascal, ADA, and Beyond

Although FORTRAN and COBOL were by far the most popular programming languages developed in the United States during this period, they were by no means the only ones to appear. Jean Sammet, editor of one of the first comprehensive treatments of the history of programming languages, has estimated that by 1981, there were at least one thousand programming languages in use nationwide. It would be impossible to even enumerate, much less describe, the history and development of each of these languages. Figure 4.3 contains a “genealogical” listing of some of the more widely used programming languages developed prior to 1970. This section will focus on a few of the more historically significant alternatives to FORTRAN and COBOL.

Figure 4.3

Programming languages, 1952–1970. Based on a chart first developed by Jean Sammet. Reproduced with the permission of the ACM.

More than a year before the Executive Committee of CODASYL convened to discuss the need for a common business-oriented programming language, an ad hoc committee of users, academics, and federal officials met to study the possibility of creating a universal programming language. This committee, which was brought together under the auspices of the ACM, could not have been more different from the group organized by CODASYL. Whereas the fifteen-member Executive Committee had contained only one university representative, the identically sized ACM-sponsored committee was dominated by academics. At its first meeting, this committee decided to follow the model of FORTRAN in designing an algebraic language. FORTRAN itself was not acceptable because of its association with IBM.

The ACM “universal language” project soon expanded into an international initiative. Europeans in particular were deeply interested in a language that would both transcend political boundaries and help avoid the domination of Europe by the IBM Corporation. During an eight-day meeting in Zurich, a rough specification for the new International Algebraic Language (IAL) was hashed out. Actually, three distinct versions of the IAL were created: reference, publication, and hardware. The reference language was the abstract representation of the language as envisioned by the Zurich committee. The publication and hardware languages would be isomorphic implementations of the abstract reference language. Since these specific implementations required careful attention to such messy details as character sets and delimiters (decimal points being standard in the United States and commas being standard in Europe), they were left for a later and unspecified date. The reference language was released in 1958 under the more popular and less pretentious name ALGOL (from ALGOrithmic Language).

In many ways, ALGOL was a remarkable achievement in the nascent discipline of computer science. ALGOL 58 was something of a work in progress; ALGOL 60, which was released shortly thereafter, is widely considered to be a model of completeness and clarity. The ALGOL 60 version of the language was described using an elegant metalanguage known as Backus Normal Form (BNF), developed specifically for that purpose. BNF, which resembles the notation used by linguists and logicians to describe formal languages, has since become the standard technique for representing programming languages. The elegant sophistication of the ALGOL 60 report appealed particularly to computer scientists. In the words of one well-respected admirer, “The language proved to be an object of stunning beauty. . . . Nicely organized, tantalizingly incomplete, slightly ambiguous, difficult to read, consistent in format, and brief, it was a perfect canvas for a language that possessed those same properties. Like the Bible, it was meant not merely to be read, but interpreted.”45 ALGOL 60 soon became the standard by which all subsequent language developments were measured and evaluated.

Despite its intellectual appeal, and the enthusiasm with which it was greeted in academic and European circles, ALGOL was never widely adopted in the United States. Although many Americans recognized that ALGOL was an elegant synthesis, most saw language design as just one step in a lengthy process leading to language acceptance and use. In addition, several strong competitors were already in development in the United States. IBM and its influential users group SHARE supported FORTRAN, and business data processors preferred COBOL. Even those installations that preferred ALGOL often used it only as a starting point for further development, more “as a rich set of guidelines for a language than a standard to be adhered to.”46 Numerous dialects or spin-off languages emerged, most significantly JOVIAL, MAD, and NELIAC, developed at the SDC, the University of Michigan, and the Naval Electronics Laboratory, respectively. Although these languages benefited from ALGOL, they only detracted from its chances of emerging as a standard. With a few notable exceptions (the ACM continued to use it as the language of choice in its publications, for example), ALGOL was generally regarded in the United States as an intellectual curiosity rather than a functional programming language.

The real question of historical interest, of course, is not so much why specific individual programming languages were created but rather why so many were. In the late 1940s and early 1950s there was no real programming community per se, only particular projects being developed at various institutions. Each project necessarily developed its own techniques for facilitating programming. By the mid-1950s, however, there were established mechanisms for communicating new research and development, and there were deliberate attempts to promote industry-wide programming standards. Nevertheless, literally hundreds of languages were developed in the 1950s and 1960s. FORTRAN and COBOL emerged as important standards in the scientific and business communities, respectively, and yet new languages continued to be created, as they still are today.47 What can explain this curious Cambrian explosion in the evolutionary history of programming languages?

Some of the many divergent species of programming languages can be understood by looking at their functional characteristics. Although general-purpose languages such as FORTRAN and COBOL were suitable for a wide variety of problem domains, certain applications required more specialized functions to perform most efficiently. The General-Purpose Simulation System was designed specifically for the simulation of system elements in discrete numerical analysis, for example. APT was commissioned by the Aircraft Industries Association and the U.S. Air Force to be used primarily to control automatic milling machines. Other languages were designed not so much for specialized problem domains as for particular pedagogical purposes—in the case of BASIC, for instance, the teaching of basic computer literacy. Some languages were known for their fast compilation times, and others for the efficiency of their object code. Individual manufacturers produced languages that were optimized for their own hardware, or as part of a larger marketing strategy.

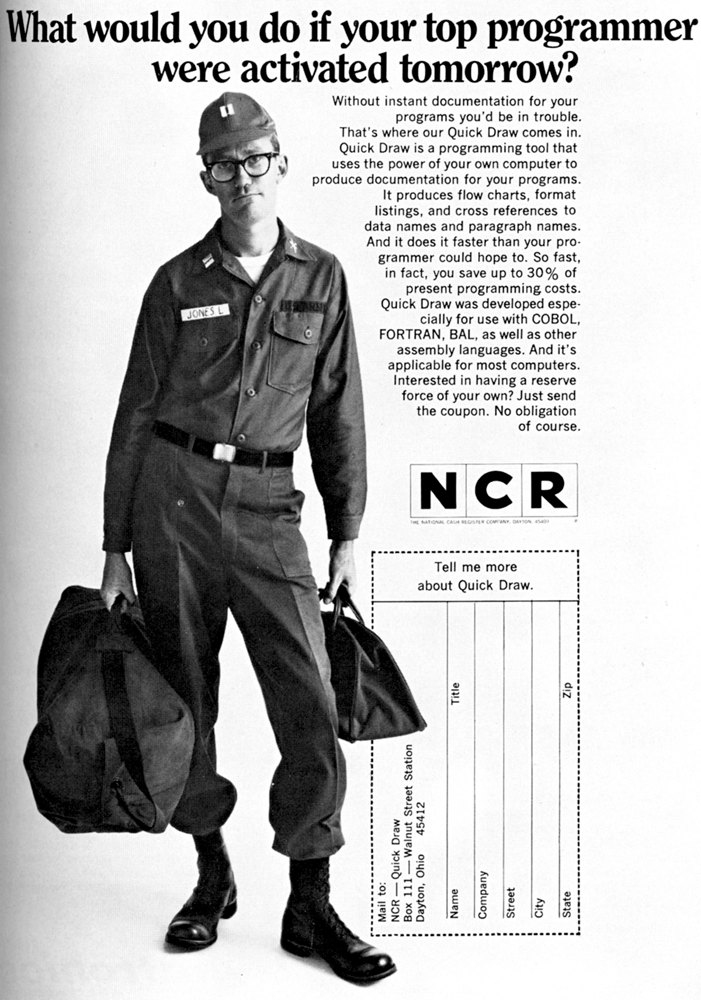

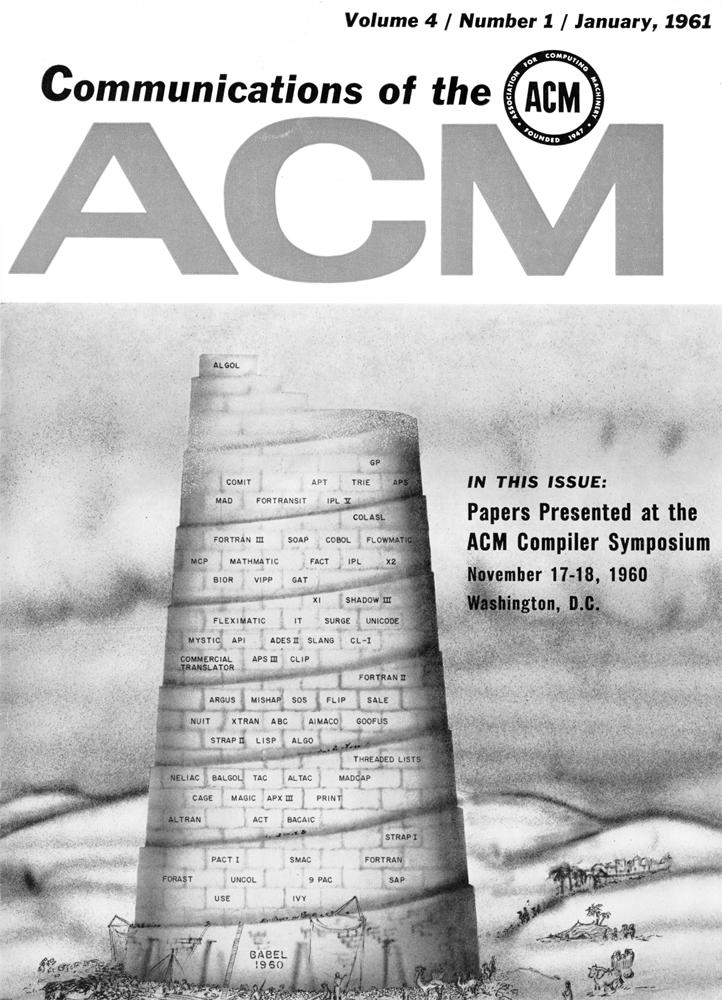

Figure 4.4

This now-famous “Tower of Babel” cover appeared first in the Communications of the ACM, January 1961. Reproduced with permission of the ACM.

Different languages were also developed with different users in mind. In this sense, they embodied the organizational and professional politics of programming in this period. At the RAND Symposium on Programming Languages in 1962, for example, Jack Little, a RAND consultant, lamented the tendency of manufacturers to design languages “for use by some sub-human species in order to get around training and having good programmers.”48 Dick Talmadge and Barry Gordon of IBM admitted to thinking in terms of an imaginary “Joe Accountant” user; the problem that IBM faced, according to Bernard Galler, of the University of Michigan Computing Center, was that “if you can design a language that Joe Accountant can learn easily, then you’re still going to have problems because you’re probably going to have a lousy language.”49 Fred Gruenberger, a staff mathematician at RAND, later summed up the essence of the entire debate: “COBOL, in the hands of a master, is a beautiful tool—a very powerful tool. COBOL, as it’s going to be handled by a low grade clerk somewhere, will be a miserable mess. . . . Some guys are just not as smart as others. They can distort anything.”50

There were also less obviously utilitarian reasons for developing new programming languages, however. Many common objections raised against existing languages were matters of style rather than of substance. The rationale given for creating a new language often boiled down to a declaration that “this new language will be easier to use or better to read or write than any of its predecessors.” Since there were generally no standards for what was meant by “easier to use or better to read or write,” such declarations can only be considered statements of personal preference. As Jean Sammet has suggested, although lengthy arguments have been advanced on all sides of the major programming language controversies, “in the last analysis it almost always boils down to a question of personal style or taste.”51

For the more academically oriented programmers, designing a new language was a relatively easy way to attract grant money and publish articles. There have been numerous languages that have been rigorously described but never implemented. They served only to prove a theoretical point or advance an individual’s career. In addition, many in the academic community seemed to be afflicted with the NIH (“not invented here”) syndrome: any language or technology that was designed by someone else could not possibly be as good as one that you invented yourself, and so a new version needed to be created to fill some ostensible personal or functional need. As Herbert Grosch lamented in 1961, filling these needs was personally satisfying yet ultimately self-serving and divisive: “Pride shades easily into purism, the sin of the mathematicians. To be the leading authority, indeed the only authority, on ALGOL 61B mod 12, the version that permits black letter as well as Hebrew subscripts, is a satisfying thing indeed, and many of us have constructed comfortable private universes to explore.”52

One final and closely related reason for the proliferation of programming languages is that designing programming languages was (and is) fun. The adoption of metalanguages such as BNF allowed for the rapid development and implementation of creative new languages and dialects. If programming was enjoyable, even more so was language design.53

No Silver Bullet

In 1987, Frederick Brooks published an essay describing the major developments in automatic programming technologies that had occurred over the past several decades. As an accomplished academic and experienced industry manager, Brooks was a respected figure within the programming community. In characteristically vivid language, his “No Silver Bullet: Essence and Accidents of Software Engineering” reflected on the inability of these technologies to bring an end to the ongoing software crisis:

Of all the monsters that fill the nightmares of our folklore, none terrify more than werewolves, because they transform unexpectedly from the familiar into horrors. For these, one seeks bullets of silver that can magically lay them to rest.

The familiar software project, at least as seen by the nontechnical manager, has something of this character; it is usually innocent and straightforward, but is capable of becoming a monster of missed schedules, blown budgets, and flawed products. So we hear desperate cries for a silver bullet—something to make software costs drop as rapidly as computer hardware costs do.

But, as we look to the horizon of a decade hence, we see no silver bullet. There is no single development, in either technology or in management technique, that by itself promises even one order-of-magnitude improvement in productivity, in reliability, in simplicity.54

Brooks’s article provoked an immediate reaction, both positive and negative. The object-oriented programming (OOP) advocate Brad Cox insisted, for example, in his aptly titled “There Is a Silver Bullet,” that new techniques in OOP promised to bring about “a software industrial revolution based on reusable and interchangeable parts that will alter the software universe as surely as the industrial revolution changed manufacturing.”55 Whatever they might have believed about the possibility of such a silver bullet being developed in the future, though, most programmers and managers agreed that none existed in the present. In the late 1980s, almost three decades after the first high-level automatic programming systems were introduced, concern about the software crisis was greater than ever. The same year that Brooks published his “No Silver Bullet,” the Department of Defense warned against the real possibility of “software-induced catastrophic failure” disrupting its strategic weapons systems.56 Two years later, Congress released a report titled “Bugs in the Program: Problems in Federal Government Computer Software Development and Regulation,” initiating yet another full-blown attack on the fundamental causes of the software crisis.57 Ironically, the Department of Defense decided that what was needed to deal with this most recent outbreak of crisis was yet another new programming language, in this case ADA, which was trumpeted as a means of “replacing the idiosyncratic ‘artistic’ ethos that has long governed software writing with a more efficient, cost-effective engineering mind-set.”58

Why have automatic programming languages and other technologies thus far failed to resolve, or apparently even mitigate, the seemingly perpetual software crisis? First of all, it is clear that many of these languages and systems were not able to live up to their marketing hype. Even those systems that were more than a “complex, exception-ridden performer of clerical tasks which was difficult to use and inefficient” (as John Backus characterized the programming tools of the early 1950s) could not eliminate the need for careful analysis and skilled programming.59 As Willis Ware portrayed the situation in 1965, “We lament the cost of programming; we regret the time it takes. What we really are unhappy with is the total programming process, not programming (i.e., writing routines) per se. Nonetheless, people generally smear the details into one big blur; and the consequence is, we tend to conclude erroneously that all our problems will vanish if we can improve the language which stands between the machine and the programmer. T’aint necessarily so. All the programming language improvement in the world will not shorten the intellectual activity, thinking, and analysis that is inherent in the programming process. Another name for the programming process is ‘problem solving by machine’; perhaps it suggests more pointedly the inherent intellectual content of preparing large problems for machine handling.”60

Although programming languages could reduce the amount of clerical work associated with programming, and did help eliminate certain types of errors (mostly transcription errors and syntax mistakes), they also introduced new sources of error. In the late 1960s, a heated controversy broke out in the programming community over the use of the “GOTO statement.”61 At the heart of this debate was the question of professionalism: although high-level languages gave the impression that just about anyone could program, many programmers felt this was a misconception disastrous to both their profession and the industry in general.

The designers and advocates of various automatic programming systems never succeeded in addressing the larger issues posed by the difficulties inherent in the programming process. High-level languages were necessary but not sufficient: that is, the use of these languages became an essential component of software development, but could not in themselves ensure a successful development effort. Programming remained a highly skilled occupation, and programmers continued to defy traditional methods of job categorization and management. By the end of the 1960s the search for a silver bullet solution to the software crisis had turned away from programming languages and toward more comprehensive techniques for managing the programming process. Many of these new techniques involved the creation of new automatic programming technologies, but most revolved around more systemic solutions as well as new methods of programmer education, management, and professional development.