Chapter 10. Topic Modeling

In the previous chapter we covered some of the techniques used to extract information from text. These techniques can be complicated to implement and may also be slow. If the application requires the information extracted to be readable by the users, these techniques are great. If we are looking to extract information as part of an intermediate processing step—for instance, building features for a classifier—then we don’t need to extract readable information. As we saw in Chapters 5 and 7, simply using our vocabulary will create an unwieldy number of features. Therefore, we want to reduce the dimensionality of our data. This is where distributional semantics comes in.

Distributional semantics is the study, using statistical distributions, of elements of language to characterize similarities between documents (e.g., email), speech acts (e.g., spoken or written sentences), or elements thereof (e.g., phrases, words). The idea for this field comes from John R. Firth, a linguist in the first half of the 20th century. He noted how semantics was dependent on context and coined the oft-repeated quote, “You shall know a word by the company it keeps.”

The idea is that you can represent a word as a probabilistic distribution over the contexts it appears in. The words will exist in a vector space where the dimensions are associated with these contexts. For example, “doctor” will have a larger value on the medical dimension than in the financial dimension. However, “bank” will have larger values on the financial dimension and the geological dimension. We can’t simply use hand-chosen contexts, though. These too must be learned from the data. Commonly, we learn the contexts by looking at what words occur with each other. This back-and-forth means that most such algorithms iterate until they find the model that best fits the data. These approaches are a kind of clustering. Indeed, we can use more generic clustering algorithms on text data, though they may not work well.

In the following examples, we have a document-term matrix in which the rows are documents, and columns are terms. The values can be binary, representing word presence, or the number of times a term occurs, or they can be TF.IDF values. We will be using TF.IDF values in this chapter. Once we have such a matrix, we will want to map our documents to a space with fewer dimensions. This clusters the documents into topics.

We will be using the Python library scikit-learn to introduce the techniques. This library will allow us to more easily examine the internal data of these models than would Spark’s implementation.

K-Means

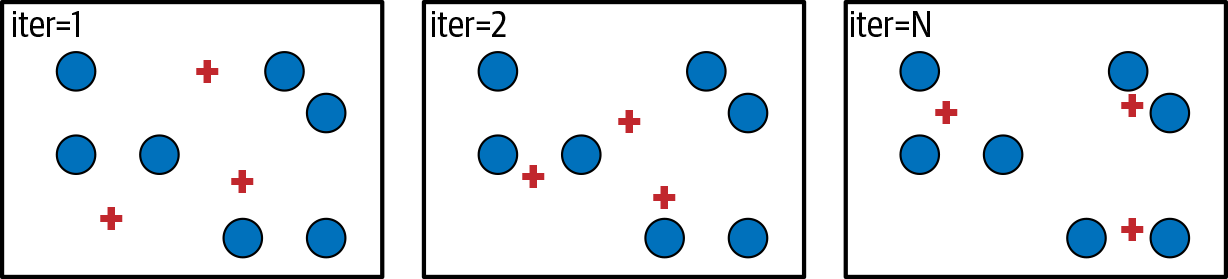

As a first attempt at topic modeling, let’s try a classic clustering technique—K-Means, as shown in Figure 10-1. Let’s say we have a number of data points in a vector space. We can pick K points in the vector space, called centroids, and assign each data point to the closest centroid. We want to find the K points that minimize the distance between the data points and their centroid.

Figure 10-1. Simple visualization of K-Means

In our situation, we have documents in a vector space defined by the TF.IDF values. When we find our K points, we can say that each K represents a topic. Let’s look at an example.

First, let’s build our data set. We will be using the Brown corpus, which is a collection of articles, periodicals, and reports from newspapers, journals, and academic institutions. It is a classic data set that has been used in NLP since the 1960s.

from collections import defaultdict, Counter, OrderedDict

import numpy as np

import pandas as pd

import scipy.sparse as sparse

from wordcloud import WordCloud

import matplotlib.pyplot as plt

%matplotlib inline

from nltk.corpus import stopwords

from nltk.corpus import brown

en_stopwords = set(stopwords.words('english'))

def detokenize(sentence):

text = ''

for token in sentence:

if text and any(c.isalnum() for c in token):

text += ' '

text += token

return text

We will want to remove the punctuation and the stop words so our algorithms use only the “useful” words.

def process(sentence):

terms = []

for term in sentence:

term = term.lower()

if term not in en_stopwords and term.isalnum():

terms.append(term)

return terms

Let’s gather our docs. Our documents will be lists of lists of terms.

docs = OrderedDict()

for fid in brown.fileids():

docs[fid] = brown.sents(fid)

Now we will construct indices. This is similar to what we did when we were exploring information retrieval.

ix2doc = list(docs)

doc2ix = {fid: i for i, fid in enumerate(ix2doc)}

vocabulary = set()

term_counts = defaultdict(Counter)

document_counts = Counter()

for fid, doc in docs.items():

unique_terms = set()

for sentence in doc:

sentence = process(sentence)

term_counts[fid].update(sentence)

unique_terms.update(sentence)

document_counts.update(unique_terms)

vocabulary.update(unique_terms)

ix2term = sorted(list(vocabulary))

term2ix = OrderedDict()

for i, term in enumerate(ix2term):

term2ix[term] = i

Now that we have our indices, let’s construct a matrix for TF and IDF.

term_count_mat = sparse.dok_matrix((len(doc2ix), len(term2ix)))

for fid, i in doc2ix.items():

for term, count in term_counts[fid].items():

j = term2ix[term]

term_count_mat[i, j] = count

term_count_mat = term_count_mat.todense()

doc_count_vec = np.array(

[document_counts[term] for term in term2ix.keys()])

tf = np.log(term_count_mat + 1) idf = len(doc2ix) / (1 + doc_count_vec) tfidf = np.multiply(tf, idf)

tfidf.shape

(500, 40881)

This is a rather large matrix for such a small data set. Apart from space efficiency concerns, having this many dimensions can worsen performance for some algorithms. This is when distributional semantics can help.

Now, we can build our model.

from sklearn.cluster import KMeans

K = 6

clusters = ['cluster#{}'.format(k) for k in range(K)]

model = KMeans(n_clusters=K, random_state=314)

clustered = model.fit_transform(tfidf)

clustered.shape

We can see that we have now clustered our documents using our six centroids. Each of these centroids is a vector over our vocabulary. We can look at which words are most influential on our centroids. We will use word clouds for this, as shown in Figure 10-2.

model.cluster_centers_.shape

(6, 40881)

cluster_term = pd.DataFrame(

model.cluster_centers_.T, index=ix2term, columns=clusters)

cluster_term = np.round(cluster_term, decimals=4)

font = {'weight' : 'bold', 'size' : 22}

fig, axs = plt.subplots(K // 2, 2, figsize=(10, 8))

k = 0

for i in range(len(axs)):

for j in range(len(axs[i])):

wc = WordCloud(colormap='Greys', background_color='white')

im = wc.generate_from_frequencies(cluster_term[clusters[k]])

axs[i][j].imshow(im, interpolation='bilinear')

axs[i][j].axis("off")

axs[i][j].set_title(clusters[k], **font)

k += 1

plt.tight_layout()

plt.show()

Figure 10-2. Word clouds built from the centroids

We can see some recognizable topics here. Cluster #5 seems to be about mathematical topics. Cluster #3 seems to be about food preparation, specifically pasteurization.

K-Means does not make many assumptions about our data—it just tries to find the K. One drawback to K-Means is that it tends to create equally sized clusters. This is an unrealistic expectation for a natural corpus. Additionally, we don’t get much in the way if characterizing the similarity between documents. Let’s try an algorithm that makes some more assumptions but that will give us a way to see the similarities between these documents.

Latent Semantic Indexing

Latent semantic indexing (LSI) is a technique for decomposing the document-term matrix using singular value decomposition (SVD). In SVD we decompose a matrix into three matrices.

Σ is a diagonal matrix of the singular values in descending order. We can take the top K, and this serves as an approximation of the original matrix. The first K columns of U are the representation of the documents in the K dimensional space. The first K columns of V are the representation of the terms in the K dimensional space. This allows us to compare the similarity of documents and terms. It is common to choose a larger number for the components because the more dimensions Σ has, the more dimensions we can use to approximate the original matrix. So we will set K to be the higher number here.

from sklearn.decomposition import TruncatedSVD

K = 100

clusters = ['cluster#{}'.format(k) for k in range(K)]

model = TruncatedSVD(n_components=K)

clustered = model.fit_transform(tfidf)

Let’s look at the K singular values we are keeping.

model.singular_values_

array([3529.39905473, 3244.51395305, 3096.10335704, 3004.8882987 ,

2814.77858204, 2778.96902533, 2754.2942512 , 2714.32865945,

2652.4119094 , 2631.64362227, 2578.41230573, 2496.86392987,

2478.31563312, 2466.82942537, 2465.83674175, 2450.22361278,

2426.99364435, 2417.13989816, 2407.40572992, 2394.21460258,

2379.89976747, 2369.78970648, 2344.36252585, 2337.77090924,

2324.76055049, 2319.07434771, 2308.81232676, 2304.85707171,

2300.6888689 , 2299.08592131, 2292.18931562, 2281.59638332,

2280.80535179, 2276.55977269, 2265.29827699, 2264.49999278,

2259.19162875, 2253.20088136, 2249.34547946, 2239.31921392,

2232.24240145, 2221.95468155, 2217.95110287, 2208.94458997,

2199.75216312, 2195.85509817, 2189.76213831, 2186.64540094,

2178.92705724, 2170.98276352, 2164.19734464, 2159.85021389,

2154.82652164, 2145.5169884 , 2142.3070779 , 2138.06410065,

2132.8723198 , 2125.68060737, 2123.13051755, 2121.25651627,

2119.0925646 , 2113.46585963, 2102.77888039, 2101.07116001,

2094.0766712 , 2090.41516403, 2086.00515811, 2080.55424737,

2075.54071367, 2070.03500007, 2066.78292077, 2064.93112208,

2056.24857815, 2052.96995192, 2048.62550688, 2045.18447518,

2038.27607405, 2032.74196113, 2026.9687453 , 2022.61629887,

2018.05274649, 2011.24594096, 2009.64212589, 2004.15307518,

2000.17006712, 1995.76552783, 1985.15438092, 1981.71380603,

1977.60898352, 1973.78806955, 1966.68359784, 1962.29604116,

1956.62028269, 1951.54510897, 1951.25219946, 1943.75611963,

1939.85749385, 1933.30524488, 1928.57205628, 1919.57447254])

The components_ of the model are the highest K diagonal values of Σ. So now, let’s look at the terms distributed over the components, as shown in Table 10-1.

cluster_term = pd.DataFrame(model.components_.T, index=ix2term, columns=clusters) cluster_term = np.round(cluster_term, decimals=4)

cluster_term.loc[['polynomial', 'cat', 'frankfurter']]

| term | cluster#0 | cluster#1 | ... | cluster#98 | cluster#99 |

|---|---|---|---|---|---|

| polynomial | 0.0003 | 0.0012 | ... | 0.0077 | -0.0182 |

| cat | 0.0002 | 0.0018 | ... | 0.0056 | -0.0026 |

| frankfurter | 0.0004 | 0.0018 | ... | -0.0025 | -0.0025 |

This table represents the distribution that the words have over each cluster.

Since we did not stem our words, let’s see if we can find “polynomials,” from the vector for “polynomial.” We will use cosine similarity for this. Cosine similarity is a technique for looking at the similarity between two vectors. The idea is that we want to look at the angle between two vectors. If they are parallel, the similarity should be 1; if they are orthogonal, the similarity should be 0; and if they are going in opposite directions, the similarity should be –1. So we want to look at the cosine of the angle between them. The dot product of two vectors is equal to the product of the magnitudes of the two vectors times the cosine of the angle between them. So, we can take the dot product divided by the product of the magnitudes.

SciPy has a function for cosine distance, which is one minus the cosine similarity we just defined. We want the similarity, so we have to undo this.

from scipy.spatial.distance import cosine

def cossim(u, v):

return 1 - cosine(u, v)

polynomial_vec = cluster_term.iloc[term2ix['polynomial']]

similarities = cluster_term.apply(

lambda r: cossim(polynomial_vec, r), axis=1)

similarities.sort_values(ascending=False)[:20]

polynomial 1.000000 nilpotent 0.999999 diagonalizable 0.999999 commute 0.999999 polynomials 0.999999 subspace 0.999999 divisible 0.999998 satisfies 0.999998 differentiable 0.999998 monic 0.999998 algebraically 0.999998 primes 0.999996 spanned 0.999996 decomposes 0.999996 scalar 0.999996 commutes 0.999996 algebra 0.999996 integers 0.999991 subspaces 0.999991 exponential 0.999991 dtype: float64

We see that “polynomials” is very close, as are a number of other mathematically themed words. This is the “semantics” that people often use when referring to distributional semantics capturing. We can use these representations as features. The larger and more diverse the corpus, the more generally applicable these representations will be.

Let’s build the word clouds for our LSI model now, as shown in Figure 10-3. We will look only at the first and last three because we have so many more clusters than our K-Means model.

chosen_ix = [0, 97, 1, 98, 2, 99]

fig, axs = plt.subplots(3, 2, figsize=(10, 8))

k = 0

for i in range(len(axs)):

for j in range(len(axs[i])):

wc = WordCloud(colormap='Greys', background_color='white')

im = wc.generate_from_frequencies(cluster_term[clusters[chosen_ix[k]]])

axs[i][j].imshow(im, interpolation='bilinear')

axs[i][j].axis("off")

axs[i][j].set_title(clusters[chosen_ix[k]], **font)

k += 1

plt.tight_layout()

plt.show()

Cluster #2 seems to be related to medical topics. The others don’t appear very informative. This makes sense because this is an approximation of the original matrix, not a clustering of the matrix. This does reduce the dimensions, though, so we could still use this for downstream processing.

The idea that our data is composed of some features relating terms to documents can be directly addressed.

Figure 10-3. Word clouds built from the term distributions

Nonnegative Matrix Factorization

In nonnegative matrix factorization (NMF), we assume that the document-term matrix is the product of two other matrices. The dimension along which these matrices are multiplied will have our clusters. This will give us a document matrix and a term matrix, each with distribution across clusters.

If we have M documents and N terms, and we want K clusters, X is a MxN matrix, D is a MxK matrix, and T is an KxM matrix. In scikit-learn, these matrices are approximated, since there is no closed-form way to calculate these matrices. Let’s try an example on some toy data.

from sklearn.decomposition import NMF

We will create our random matrix and then split into two clusters.

np.random.seed(314) X = np.array(np.random.randint(1, 20, size=21).reshape(7, 3)) m = NMF(n_components=2, init='random', random_state=314) D = m.fit_transform(X) T = m.components_ X

array([[ 9, 14, 10],

[11, 15, 17],

[ 8, 3, 8],

[17, 4, 13],

[ 8, 5, 1],

[ 5, 14, 9],

[17, 16, 10]])

Now, the product of the two matrices should approximate the original matrix.

X_hat = np.dot(D, T) X_hat

array([[ 8.82168512, 13.8817222 , 10.31193979],

[12.94642621, 16.30109226, 13.58360985],

[ 8.89151218, 3.59533529, 6.43588552],

[17.69416104, 4.46299878, 11.78275144],

[ 5.8818731 , 3.5849985 , 4.71678044],

[ 5.32011204, 14.21139378, 8.44192057],

[14.75882004, 14.50332786, 13.93210152]])

This looks close, but let’s look at the absolute percentage error.

100 * np.abs(X - X_hat) / X

array([[ 1.98127639, 0.84484142, 3.11939786],

[ 17.69478376, 8.67394839, 20.09641264],

[ 11.14390221, 19.8445095 , 19.55143095],

[ 4.08330023, 11.57496939, 9.3634505 ],

[ 26.47658629, 28.30002998, 371.67804413],

[ 6.40224086, 1.50995555, 6.20088253],

[ 13.1834115 , 9.35420086, 39.32101525]])

Most of the errors are less than 15%. There are some especially egregious errors, though. As with all these methods, you will need to tune to your needs. There is no one-size-fits-all.

Let’s run this on a TF.IDF matrix.

model = NMF(n_components=100, init='nndsvdar', random_state=314)

# This will take a few minutes D = model.fit_transform(tfidf) T = model.components_

Now let’s get our approximation of the tfidf.

tfidf_hat = np.dot(D, T)

Now we can calculate our absolute percentage error. Since our matrix has zeros, we need to add a fudge factor to avoid division by zero.

abs_pct_error = 100 * np.abs(tfidf - tfidf_hat + 1) / (tfidf + 1)

np.median(np.array(abs_pct_error))

76.86063392886243

This error seems high, but let’s see how it clustered the terms together.

cluster_term = pd.DataFrame(

model.components_.T,

index=ix2term,

columns=clusters)

cluster_term = np.round(cluster_term, decimals=4)

polynomial_vec = cluster_term.iloc[term2ix['polynomial']]

similarities = cluster_term.apply(

lambda r: 1-cosine(polynomial_vec, r), axis=1)

similarities.sort_values(ascending=False)[:20]

satisfies 1.0 polynomial 1.0 polynomials 1.0 spanned 1.0 divisible 1.0 differentiable 1.0 subspace 1.0 scalar 1.0 monic 1.0 commutes 1.0 commute 1.0 nilpotent 1.0 decomposes 1.0 algebraically 1.0 algebra 1.0 primes 1.0 integers 1.0 exponential 1.0 expressible 1.0 subspaces 1.0 dtype: float64

It seems that it has successfully grouped similar words together. Now let’s look at the word clouds, as shown in Figure 10-4.

chosen_ix = [0, 97, 1, 98, 2, 99]

fig, axs = plt.subplots(3, 2, figsize=(10, 8))

k = 0

for i in range(len(axs)):

for j in range(len(axs[i])):

wc = WordCloud(colormap='Greys', background_color='white')

im = wc.generate_from_frequencies(

cluster_term[clusters[chosen_ix[k]]])

axs[i][j].imshow(im, interpolation='bilinear')

axs[i][j].axis("off")

axs[i][j].set_title(clusters[chosen_ix[k]], **font)

k += 1

plt.tight_layout()

plt.show()

Figure 10-4. Word clouds built from the NMF clusters

It looks like Cluster #1 is related to medical terms and Cluster #97 is related to dance.

All of these techniques ultimately rely on finding transformations related to the space defined by the vocabulary. Let’s look at a technique that tries to model the generation of the text.

Latent Dirichlet Allocation

The idea behind latent Dirichlet allocation (LDA) is that documents are generated based on a set of topics. In this process, we assume that each document is distributed over the topics, and each topic is distributed over the terms. Each document, and each word, are generated from sampling these distributions. The LDA learner works backward and tries to identify the distributions where the observed is most probable.

This is a much more complicated set of assumptions than that of our previous technique. This is because it is trying to model the term-generation process instead of just reducing the dimensions in the vector model.

Let’s look at an example.

from sklearn.decomposition import LatentDirichletAllocation

model = LatentDirichletAllocation(

n_components=K,

random_state=314,

max_iter=100)

# this can take a few minutes clustered = model.fit_transform(tfidf)

cluster_term = pd.DataFrame(

model.components_.T,

index=ix2term,

columns=clusters)

cluster_term = np.round(cluster_term, decimals=4)

Let’s see what words are closest to “polynomial,” as we’ve done for other techniques.

polynomial_vec = cluster_term.iloc[term2ix['polynomial']]

similarities = cluster_term.apply(

lambda r: 1-cosine(polynomial_vec, r),

axis=1)

similarities.sort_values(ascending=False)[:20]

polynomial 1.0 secants 1.0 diagonalizable 1.0 hino 1.0 secant 1.0 nilpotent 1.0 invariant 1.0 congruence 1.0 jastrow 1.0 szold 1.0 commute 1.0 polynomials 1.0 involution 1.0 kayabashi 1.0 subspace 1.0 galaxies 1.0 quadric 1.0 jo 1.0 beckett 1.0 tangents 1.0 dtype: float64

This seems less focused than the results from the other techniques. Let’s look at the word clouds, as shown in Figure 10-5.

chosen_ix = [0, 97, 1, 98, 2, 99]

fig, axs = plt.subplots(3, 2, figsize=(10, 8))

k = 0

for i in range(len(axs)):

for j in range(len(axs[i])):

wc = WordCloud(colormap='Greys', background_color='white')

im = wc.generate_from_frequencies(

cluster_term[clusters[chosen_ix[k]]])

axs[i][j].imshow(im, interpolation='bilinear')

axs[i][j].axis("off")

axs[i][j].set_title(clusters[chosen_ix[k]], **font)

k += 1

plt.tight_layout()

plt.show()

These techniques give us ways to cluster documents, reduce the dimensionality of our document vector data, and even group words together. Recently, there has been great progress in distributional semantics using neural networks. In the next chapter, we will cover some of these newer techniques.

Figure 10-5. Word clouds built from topic-term distributions

Exercises

Let’s see how these techniques work on our classification problem from Chapter 9. We will be using Spark’s implementation of LDA for this.

First, let’s load the data.

import os import re import numpy as np import pandas as pd from pyspark.sql.types import * from pyspark.sql.functions import expr from pyspark.sql import Row from pyspark.ml import Pipeline from pyspark.ml.feature import * from pyspark.ml.clustering import LDA from pyspark.ml.classification import LogisticRegression from pyspark.ml.tuning import CrossValidator, ParamGridBuilder from pyspark.ml.evaluation import MulticlassClassificationEvaluator import sparknlp from sparknlp import DocumentAssembler, Finisher from sparknlp.annotator import * %matplotlib inline spark = sparknlp.start()

HEADER_PTN = re.compile(r'^[a-zA-Z-]+:.*')

def remove_header(path_text_pair):

path, text = path_text_pair

lines = text.split('\n')

line_iterator = iter(lines)

while HEADER_PTN.match(next(line_iterator)) is not None:

pass

return path, '\n'.join(line_iterator)

path = os.path.join('data', 'mini_newsgroups', '*')

texts = spark.sparkContext.wholeTextFiles(path).map(remove_header)

schema = StructType([

StructField('path', StringType()),

StructField('text', StringType()),

])

texts = spark.createDataFrame(texts, schema=schema) \

.withColumn('newsgroup', expr('split(path, "/")[7]')) \

.persist()

train, test = texts.randomSplit([0.8, 0.2], seed=123)

Now, let’s build our NLP pipeline.

assembler = DocumentAssembler()\

.setInputCol('text')\

.setOutputCol('document')

sentence = SentenceDetector() \

.setInputCols(["document"]) \

.setOutputCol("sentences")

tokenizer = Tokenizer()\

.setInputCols(['sentences'])\

.setOutputCol('token')

lemmatizer = LemmatizerModel.pretrained()\

.setInputCols(['token'])\

.setOutputCol('lemma')

normalizer = Normalizer()\

.setCleanupPatterns([

'[^a-zA-Z.-]+',

'^[^a-zA-Z]+',

'[^a-zA-Z]+$',

])\

.setInputCols(['lemma'])\

.setOutputCol('normalized')\

.setLowercase(True)

finisher = Finisher()\

.setInputCols(['normalized'])\

.setOutputCols(['normalized'])\

.setOutputAsArray(True)

Let’s remove stop words and use TF.IDF vectors.

stopwords = set(StopWordsRemover.loadDefaultStopWords("english"))

sw_remover = StopWordsRemover() \

.setInputCol("normalized") \

.setOutputCol("filtered") \

.setStopWords(list(stopwords))

count_vectorizer = CountVectorizer(

inputCol='filtered', outputCol='tf', minDF=10)

idf = IDF(inputCol='tf', outputCol='tfidf')

Spark has an implementation of LDA. Let’s use that in combination with logistic regression as our classifier. We will be combining the output of the LDA model with the TF.IDF vectors using the VectorAssembler.

lda = LDA(

featuresCol='tfidf',

seed=123,

maxIter=20,

k=100,

topicDistributionCol='topicDistribution',

)

vec_assembler = VectorAssembler(

inputCols=['tfidf', 'topicDistribution'])

logreg = LogisticRegression(

featuresCol='topicDistribution',

maxIter=100,

regParam=0.0,

elasticNetParam=0.0,

)

Finally, we assemble our pipeline.

label_indexer = StringIndexer(

inputCol='newsgroup', outputCol='label')

pipeline = Pipeline().setStages([

assembler, sentence, tokenizer,

lemmatizer, normalizer, finisher,

sw_remover, count_vectorizer, idf,

lda, vec_assembler,

label_indexer, logreg

])

evaluator = MulticlassClassificationEvaluator(metricName='f1')

model = pipeline.fit(train)

train_predicted = model.transform(train) test_predicted = model.transform(test)

print('f1', evaluator.evaluate(train_predicted))

f1 0.9956621119176594

print('f1', evaluator.evaluate(test_predicted))

f1 0.5957199376998746

This seems to overfit more than before. Try regularization, and try using only the topics.