Chapter 16. Optical Character Recognition

So far, we’ve dealt with writing stored as text data. However, a large portion of written data is stored as images. To use this data, we need to convert it to text. This is different from our other NLP problems, because our knowledge of linguistics won’t be as useful here. This isn’t the same as reading; it’s merely character recognition. It is a much less intentional activity than speaking or listening to speech. Fortunately, writing systems tend to use easily distinguishable characters, especially in print. This means that image recognition techniques should work well on images of printed text.

Optical character recognition (OCR) is the task of taking an image of written language (with characters) and converting it into text data. Modern solutions are neural-network based, and essentially classify sections of an image as containing a character. These classifications are then mapped to a character or string of characters in the text data.
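The mapping step can be illustrated with a minimal sketch. It assumes a model has already produced one score vector per image section; the label set `ALPHABET` and the helper `fake_scores` are hypothetical stand-ins, not part of any real OCR library.

```python
import string

# Hypothetical label set: class index i stands for ALPHABET[i].
ALPHABET = string.ascii_uppercase + " "


def decode_sections(section_scores):
    """Map per-section class scores to text by taking the best class for each section."""
    chars = []
    for scores in section_scores:
        best_class = max(range(len(scores)), key=lambda i: scores[i])
        chars.append(ALPHABET[best_class])
    return "".join(chars)


def fake_scores(char, confidence=0.9):
    """Build a made-up score vector that favors one character (stand-in for a model)."""
    scores = [(1.0 - confidence) / (len(ALPHABET) - 1)] * len(ALPHABET)
    scores[ALPHABET.index(char)] = confidence
    return scores


print(decode_sections([fake_scores(c) for c in "OCR"]))  # prints OCR
```

Real systems usually decode whole lines at once rather than isolated sections, so treat this only as a picture of the classification-to-character step.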

Let’s talk about some of the possible inputs.

Kinds of OCR Tasks

There are several kinds of OCR tasks. The tasks differ in what kind of image is the input, what kind of writing is in the image, and what is the target of the model.

Images of Printed Text and PDFs to Text

Unfortunately, there are many systems that export their documents as images. Some will export as PDFs, but since there is such a wide variety of ways in which a document can be coded into a PDF, PDFs may not be better than images. The good news is that in a PDF the characters are represented very consistently (except for font and size differences) with a high-contrast background. Converting documents like this to text data is the easiest OCR task.

This can be complicated if the images are actually scans of documents, which can introduce the following errors:

- Print errors: the printer had a dirty head and produced blotches, or left lines in the text.

- Paper problems: the paper is old, stained, or has creases. This can reduce the contrast and smudge or distort some parts of the image.

- Scanning problems: the paper is skewed, which means that text is not in lines.

Images of Handwritten Text to Text

This situation still has the high-contrast background, but the consistency of characters is much worse. Additionally, the issue of text not being in lines can be much harder if a document has marginal notes. The well-worn data set of handwritten digits from the MNIST database is an example of this task.

You will need some way to constrain this problem. For example, the MNIST data set is restricted to just 10 characters. Some electronic pen software constrains the problem by learning one person’s handwriting. Trying to solve this problem for everyone’s handwriting would be significantly more difficult. There is so much variety in writing styles that it is not uncommon for humans to be unable to read a stranger’s handwriting. What the model learns in recognizing writing in letters from the American Civil War will be useless in recognizing doctors’ notes or parsing signatures.

Images of Text in Environment to Text

An example of images of text in an environment would be identifying what a sign says in a picture of a street. Generally, such text is printed, but the font and size can vary widely. There can also be distortions similar to the problems in our scanning example. The problem of skewed text in this type of image is more difficult than when scanned. When scanning, one dimension is fixed, so the paper can be assumed to be flat on the scanning bed. In the environment, text can be rotated in any way. For example, if you are building an OCR model for a self-driving car, some of the text will be to the right of the car, and some will be elevated above the car. There will also be text on the road. This means that the shapes of the letters will not be consistent.

This problem is also often constrained. In most jurisdictions, there are some regulations on signage. For example, important instructions are limited and are published. This means that instead of needing to convert images to text, you can recognize particular signs. This changes the problem from OCR to object recognition. Even if you want to recognize location signs—for example, addresses and town names—there are usually specific colors that the signs are printed in. This means that your model can learn in stages.

Is this part of an image...

- a sign?

  - if so, is it a) instructions, or b) a place of interest?

    - if a) classify it

    - if b) convert to text
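The staged decision above can be sketched as plain control flow. This is only an illustration: the four callables (`is_sign`, `sign_kind`, `classify_instruction`, `ocr`) are hypothetical placeholders for separately trained models, and the toy regions are dictionaries rather than image data.

```python
def read_sign(region, is_sign, sign_kind, classify_instruction, ocr):
    """Route an image region through the stages; every callable is a hypothetical model."""
    if not is_sign(region):
        return None
    if sign_kind(region) == "instruction":
        # Regulated signs come from a small published set, so classify them directly.
        return ("instruction", classify_instruction(region))
    # Addresses and place names are open-ended, so they need real OCR.
    return ("place", ocr(region))


# Toy stand-ins so the control flow can be exercised without real models.
regions = [
    {"sign": True, "kind": "instruction", "label": "STOP"},
    {"sign": True, "kind": "place", "text": "Main St"},
    {"sign": False},
]
results = [
    read_sign(
        region,
        is_sign=lambda r: r["sign"],
        sign_kind=lambda r: r["kind"],
        classify_instruction=lambda r: r["label"],
        ocr=lambda r: r["text"],
    )
    for region in regions
]
print(results)
```

Keeping each stage behind its own callable means that any one model can be retrained or replaced without touching the others.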

Images of Text to Target

In some situations, we may want to skip the text altogether. If we are classifying scanned documents, we can do this in two ways. First, we can convert to text and then use our text classification techniques. Second, we can simply do the classification directly on the images. There are a couple of trade-offs.

Image to text to target

- Pro: we can examine the intermediate text to identify problems

- Pro: we can reuse the image-to-text and text-to-target models separately (especially valuable if some inputs are text and some are images)

- Con: when converting to text, we may lose features in the image that could have helped us classify; for example, an image in the letterhead could give us a great signal

Image to target

- Pro: this is simpler—no need to develop and combine two separate models

- Pro: additional features, as mentioned previously

- Con: harder to debug problems, as mentioned previously

- Con: can only be reused on similar image-to-target problems

It is better to start with the two-part approach because this will let you explore your data. Most image-to-text models today are neural nets, so adding some layers and retraining later in the project should not be too difficult.

Note on Different Writing Systems

The difficulty of the task is very much related to the writing system used. If you recall, in Chapter 2 we defined the different families of writing systems. Logographic systems are difficult because they have a much larger number of possible characters, which causes two problems. First, there are more classes to predict and therefore more parameters. Second, logographic systems have many similar-looking characters, since all characters are dots, lines, and curves in a small box. Other complications can also make a writing system difficult. In printed English, each character has a single form, but in cursive English, characters can have up to four forms: isolated, initial, medial, and final. Some writing systems, such as Arabic, have multiple forms even in printed text. Also, if a writing system makes heavy use of diacritics, it can exacerbate problems like smudging and skewing (see Figure 16-1). You will want to be wary of this with most abugidas (for example, Devanagari) and with some alphabets, like Polish and Vietnamese.

Figure 16-1. “Maksannyo” [Tuesday] in Amharic written in Ge’ez

Problem Statement and Constraints

In our example, we will be implementing an ETL pipeline for converting images to text. This tool is quite general in its purpose. One common use for a tool like this is to convert images of text from legacy systems into text data. Our example will use a (fake) electronic medical record. We will use Tesseract from Google for the conversion, and Spark to spread the workload out so we can parallelize the processing. We will also use a pretrained pipeline to process the text before storing it.

- What is the problem we are trying to solve?

We will build a script that will convert the images to text, process the text, and finally store it. We will separate the functionality so that we can potentially augment or improve these steps in the future.

- What constraints are there?

We will be working only with images of printed English text. The documents will have only one column of text. In our fictitious scenario, we also know the content will be medically related, but that will not affect this implementation.

- How do we solve the problem with the constraints?

We want a repeatable way to convert images to text, process the text, and store it.

Implement the Solution

Let’s start by looking at how Tesseract is used. Here is the usage output for the program.

! tesseract -h

Usage:
  tesseract --help | --help-extra | --version
  tesseract --list-langs
  tesseract imagename outputbase [options...] [configfile...]

OCR options:
  -l LANG[+LANG]        Specify language(s) used for OCR.
NOTE: These options must occur before any configfile.

Single options:
  --help                Show this help message.
  --help-extra          Show extra help for advanced users.
  --version             Show version information.
  --list-langs          List available languages for tesseract engine.

It looks like we simply need to pass it an image, imagename, and output name, outputbase. Let’s look at the text that is in the image.

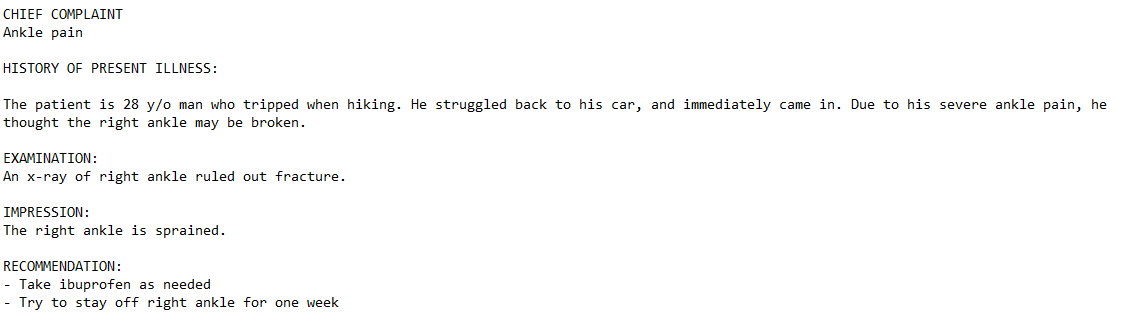

CHIEF COMPLAINT
Ankle pain

HISTORY OF PRESENT ILLNESS:
The patient is 28 y/o man who tripped when hiking. He struggled back to his car, and immediately came in. Due to his severe ankle pain, he thought the right ankle may be broken.

EXAMINATION:
An x-ray of right ankle ruled out fracture.

IMPRESSION:
The right ankle is sprained.

RECOMMENDATION:
- Take ibuprofen as needed
- Try to stay off right ankle for one week

Let’s look at the image we will be experimenting with (see Figure 16-2).

Figure 16-2. EHR image of text

Now, let’s try passing the image through Tesseract.

! tesseract EHR\ example.PNG EHR_example
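The same invocation can also be driven from Python. The sketch below is an assumption-laden illustration, not part of the chapter's pipeline: the helper names `tesseract_command` and `run_tesseract` are made up here, and `lang="eng"` assumes the English language data is installed.

```python
import shutil
import subprocess


def tesseract_command(image_path, output_base, lang="eng"):
    """Build the argument list for `tesseract imagename outputbase -l LANG`."""
    return ["tesseract", image_path, output_base, "-l", lang]


def run_tesseract(image_path, output_base, lang="eng"):
    """Run Tesseract and return the path of the text file it writes."""
    if shutil.which("tesseract") is None:
        raise RuntimeError("tesseract is not on PATH")
    # Passing a list avoids shell quoting problems with names like "EHR example.PNG".
    subprocess.run(tesseract_command(image_path, output_base, lang), check=True)
    # Tesseract appends ".txt" to the output base name.
    return output_base + ".txt"


print(tesseract_command("EHR example.PNG", "EHR_example"))
```

Calling `run_tesseract("EHR example.PNG", "EHR_example")` would then produce the same `EHR_example.txt` file as the command line above, provided Tesseract is installed.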

Now let’s see what Tesseract extracted.

! cat EHR_example.txt

CHIEF COMPLAINT
Ankle pain

HISTORY OF PRESENT ILLNESS:
The patient is 28 y/o man who tripped when hiking. He struggled back to his car, and immediately came in. Due to his severe ankle pain, he thought the right ankle may be broken.

EXAMINATION:
An x-ray of right ankle ruled out fracture.

IMPRESSION:
The right ankle is sprained.

RECOMMENDATION:
- Take ibuprofen as needed
- Try to stay off right ankle for one week

This worked perfectly. Now, let’s put together our conversion script. The script takes two arguments: the type of image, and the actual image encoded as a base64 string. We create a temporary image file and extract the text with Tesseract. This will also create a temporary text file, which we will stream to stdout. We need to replace newlines with a special character, “~”, so that each image produces a single line of output and we can tell which lines come from which input.

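%%writefile img2txt.sh
#!/bin/bash
set -e
# usage: img2txt.sh IMAGE_TYPE BASE64_IMAGE_DATA
type=$1
data=$2
# work in a private temp directory so parallel runs do not overwrite each other
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
file="$workdir/img.$type"
echo "$data" | base64 -d > "$file"
# tesseract appends .txt to the output base name
tesseract "$file" "$workdir/text"
# join lines with "~" so each image yields one line of output
tr '\n' '~' < "$workdir/text.txt"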
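The newline marker is simple, but it is worth seeing where it can go wrong. The following sketch mirrors the `tr` substitution in Python; the function names `encode_lines` and `decode_lines` are hypothetical and exist only for this illustration.

```python
def encode_lines(text, marker="~"):
    """Flatten multi-line text to one line, as `tr '\\n' '~'` does in the script."""
    return text.replace("\n", marker)


def decode_lines(flat, marker="~"):
    """Restore line breaks; any marker present in the original text also becomes a newline."""
    return flat.replace(marker, "\n")


clean = "CHIEF COMPLAINT\nAnkle pain\n"
print(decode_lines(encode_lines(clean)) == clean)    # prints True

tricky = "Dose: ~200 mg\nas needed\n"
print(decode_lines(encode_lines(tricky)) == tricky)  # prints False
```

The round trip is lossless only if the recognized text never contains the marker itself. If your documents might contain "~", a character that cannot appear in the OCR output would be a safer choice.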

Let’s make the script executable and try it out.

! chmod +x img2txt.sh

! ./img2txt.sh "png" $(base64 EHR\ example.PNG | tr -d '\n') | tr '~' '\n'

Tesseract Open Source OCR Engine v4.0.0-beta.1 with Leptonica
CHIEF COMPLAINT
Ankle pain

HISTORY OF PRESENT ILLNESS:
The patient is 28 y/o man who tripped when hiking. He struggled back to his car, and immediately came in. Due to his severe ankle pain, he thought the right ankle may be broken.

EXAMINATION:
An x-ray of right ankle ruled out fracture.

IMPRESSION:
The right ankle is sprained.

RECOMMENDATION:
- Take ibuprofen when needed
- Try to stay off right ankle for one week

Now let’s work on the full processing code. First, we will get a pretrained pipeline.

import base64
import os

import sparknlp
from sparknlp.pretrained import PretrainedPipeline

spark = sparknlp.start()

pipeline = PretrainedPipeline('explain_document_ml')

explain_document_ml download started this may take some time.
Approx size to download 9.4 MB
[OK!]

Now let’s create our test input data. We will copy our image a hundred times into the EHRs folder.

! mkdir EHRs

for i in range(100):
    ! cp EHR\ example.PNG EHRs/EHR{i}.PNG

Now, we will create a DataFrame that contains the filepath, image type, and image data as three string fields.

data = []
for file in os.listdir('EHRs'):
    file = os.path.join('EHRs', file)
    # read the raw bytes and encode them as base64 text
    with open(file, 'rb') as image:
        image_b64 = base64.b64encode(image.read()).decode('utf-8')
    # file extension without the leading dot, e.g. 'PNG'
    extension = os.path.splitext(file)[1][1:]
    record = (file, extension, image_b64)
    data.append(record)

data = spark.createDataFrame(data, ['file', 'type', 'image'])\
    .repartition(4)

Let’s define a function that will take a partition of data, as an iterable, and return a generator of filepaths and text.

import subprocess as sub

def process_partition(partition):
    for file, extension, image_b64 in partition:
        # run the OCR script; it prints the text with newlines encoded as '~'
        text = sub.check_output(['./img2txt.sh', extension, image_b64])\
            .decode('utf-8')
        # str.replace returns a new string, so keep the result
        text = text.replace('~', '\n')
        yield (file, text)

post_ocr = data.rdd.mapPartitions(process_partition)
post_ocr = spark.createDataFrame(post_ocr, ['file', 'text'])
processed = pipeline.transform(post_ocr)
processed.write.mode('overwrite').parquet('example_output.parquet/')

Now let’s put this into a script.

%%writefile process_image_dir.py
#!/usr/bin/env python
import base64
import os
import subprocess as sub
import sys

import sparknlp
from sparknlp.pretrained import PretrainedPipeline

def process_partition(partition):
    for file, extension, image_b64 in partition:
        # run the OCR script; it prints the text with newlines encoded as '~'
        text = sub.check_output(['./img2txt.sh', extension, image_b64])\
            .decode('utf-8')
        # str.replace returns a new string, so keep the result
        text = text.replace('~', '\n')
        yield (file, text)

if __name__ == '__main__':
    spark = sparknlp.start()
    pipeline = PretrainedPipeline('explain_document_ml')
    # usage: process_image_dir.py IMAGE_DIR OUTPUT_PARQUET
    data_dir = sys.argv[1]
    output_file = sys.argv[2]
    data = []
    for file in os.listdir(data_dir):
        file = os.path.join(data_dir, file)
        # read the raw bytes and encode them as base64 text
        with open(file, 'rb') as image:
            image_b64 = base64.b64encode(image.read()).decode('utf-8')
        # file extension without the leading dot, e.g. 'PNG'
        extension = os.path.splitext(file)[1][1:]
        record = (file, extension, image_b64)
        data.append(record)
    data = spark.createDataFrame(data, ['file', 'type', 'image'])\
        .repartition(4)
    post_ocr = data.rdd.map(tuple).mapPartitions(process_partition)
    post_ocr = spark.createDataFrame(post_ocr, ['file', 'text'])
    processed = pipeline.transform(post_ocr)
    processed.write.mode('overwrite').parquet(output_file)

Now we have a script that takes a directory of images, extracts and processes the text, and writes the results to a Parquet dataset.

! python process_image_dir.py EHRs ehr.parquet

Model-Centric Metrics

We can measure the accuracy of an OCR model by character and word accuracy. You can measure the character error rate by calculating the Levenshtein distance between the expected and observed text, then dividing by the length of the expected text.
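As a minimal sketch of this metric, the following pure-Python functions compute the Levenshtein distance with the standard dynamic-programming recurrence and divide by the length of the expected sequence. The names `levenshtein` and `error_rate` are chosen for this illustration; splitting on whitespace to get a word error rate is a simplifying assumption.

```python
def levenshtein(expected, observed):
    """Edit distance between two sequences (strings or lists of words)."""
    previous = list(range(len(observed) + 1))
    for i, exp_item in enumerate(expected, start=1):
        current = [i]
        for j, obs_item in enumerate(observed, start=1):
            substitution = previous[j - 1] + (exp_item != obs_item)
            current.append(min(previous[j] + 1, current[j - 1] + 1, substitution))
        previous = current
    return previous[-1]


def error_rate(expected, observed):
    """Edit distance divided by the length of the expected sequence."""
    if not expected:
        raise ValueError("expected text must not be empty")
    return levenshtein(expected, observed) / len(expected)


expected = "Take ibuprofen as needed"
observed = "Take ibuprofen when needed"
print(error_rate(expected, observed))                  # character error rate
print(error_rate(expected.split(), observed.split()))  # word error rate
```

Because the same function works on strings and on lists of tokens, one implementation covers both the character-level and word-level metrics.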

In addition to monitoring the actual model error rates, you can capture statistics about output. For example, monitoring the distribution of words can potentially diagnose a problem.
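One way to monitor the word distribution is to compare the relative word frequencies of a new batch against a trusted baseline. The sketch below uses total variation distance as the comparison; that choice, the whitespace tokenization, and the function names are assumptions made for illustration, and any alerting threshold would need to be tuned on your own data.

```python
from collections import Counter


def word_distribution(texts):
    """Relative frequency of each lowercased whitespace-delimited token."""
    counts = Counter(word.lower() for text in texts for word in text.split())
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def total_variation(p, q):
    """Half the L1 distance between two distributions: 0 = identical, 1 = disjoint."""
    words = set(p) | set(q)
    return 0.5 * sum(abs(p.get(w, 0.0) - q.get(w, 0.0)) for w in words)


baseline = word_distribution(["the right ankle is sprained"])
same = word_distribution(["the right ankle is sprained"])
garbled = word_distribution(["tbe rigbt ank1e is spra1ned"])
print(total_variation(baseline, same))     # 0.0
print(total_variation(baseline, garbled))  # much larger: most words are unseen
```

A sudden jump in this distance, or in the share of words never seen before, suggests that the OCR step has started producing garbled output, even when no labeled expected text is available.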

Review

When you build an internal service, as an OCR tool may very well be, you will want to review the work with the teams that will need it. Ultimately, the success of your application requires that your users be satisfied with both the technical correctness and the support available. In some organizations, especially larger ones, there can be significant pressure to use in-house tools. If these tools are poorly engineered, under-documented, or unsupported, other teams will rightfully try to avoid them. This can potentially create hard feelings and lead to duplicated work and the siloing of teams. This is why it is a good idea to review the internal products and seek and accept feedback early and often.

Conclusion

In this chapter we looked at an NLP application that is not focused on extracting structured data from unstructured data but is instead focused on converting from one type of data to another. Although this is only tangentially related to linguistics, it is immensely important practically. If you are building an application that uses data from long-established industries, it is very likely you will have to convert images to text.

In this part of the book, we talked about building simple applications that apply some of the techniques we learned in Part II. We also discussed specific and general development practices that can help you succeed in building your NLP application. To revisit a point made previously about Spark NLP, a central philosophical tenet of this library is that there is no one-size-fits-all. You will need to know your data, and know how to build your NLP application. In the next part we will discuss some more general tips and strategies for deploying applications.