Now that we have used a Design Thinking approach to identify problems to be solved and then identified some appropriate potential solutions, we can begin creating technology-based prototypes to aid in the evaluation of these solutions. These technology prototypes can be viewed as early development attempts that might later evolve into full-fledged production-level solutions. At this stage, we need to control the scope of our development efforts and go just far enough to evaluate whether we are on the right track.

In this chapter, we begin by discussing possible prototyping approaches and then discuss the role of user interface prototypes and how they can guide our subsequent software and AI solution development. We next evaluate whether pre-built applications might align with what is needed (with some customization) or whether the solution must be entirely custom-built. As we go through each of these steps, limiting the scope of these efforts will help us keep our stakeholders engaged.

We then explore where the solution might fit in our existing technology architecture and make use of available reference architectures as part of that effort. Finally, we take a deeper look at what takes place during prototype evaluation and describe some reasons why the evaluation might cause us to take another look at the potential solution.

Choosing a prototyping approach

User interface prototypes

Applications vs. custom build

Reference architectures

Prototype and solution evaluation

Summary

Choosing a Prototyping Approach

In Chapter 4, we introduced storyboards and mockups as part of the Design Thinking workshop. They provided a glimpse into what the desired potential solutions might provide and how they might be used. We did this to gain initial validation regarding the value of these solutions. After the Design Thinking workshop successfully concludes, we can begin prototype development using technology components. A prototype involving software will help drive more detailed specifications for full production design and development.

Software development life cycle (SDLC) models for prototypes are generally classified as either rapid prototypes that are thrown away or evolutionary prototypes that change over time and become more aligned with user requirements as those requirements become better known. Our earlier storyboards and mockups might be considered very early-stage throwaway prototypes serving as a prelude to an evolutionary approach incorporating software.

As prototypes are developed in software, our stakeholders and frontline workers will review them and suggest changes (or possibly suggest discarding the prototype entirely and starting anew if it doesn’t align at all with the solution that is needed). After several iterations using this approach, the prototype might be deemed acceptable in delivering the scope of the solution that was envisioned. We can then move beyond this stage.

Some organizations choose to begin by developing a broad line-of-business solution prototype designed to impact all relevant business areas. They later focus on developing additional prototypes that provide line-of-business-specific solutions. Others start by building prototype solutions within individual lines of business, sometimes working on these in parallel. They then create a cross-line-of-business solution prototype by gathering the relevant data and output from the individual lines of business. The best approach depends on who the most important stakeholders are and where one can most quickly deliver prototypes that demonstrate the potential value of the solution.

In all efforts, there is a need to collect relevant data required to demonstrate the prototype. In AI and machine learning projects, having real validated data directly impacts the success of prototype development. As a result, an early evaluation of data cleanliness and completeness is usually necessary in those types of projects.
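An early check of data cleanliness and completeness might be sketched as follows. This is a minimal illustration, not a prescribed method: the record layout, field names, and sample values are hypothetical assumptions, and a real project would apply rules agreed upon with the stakeholders who own the data.

```python
from typing import Any

# Hypothetical sample records; field names and values are illustrative only.
records = [
    {"supplier": "ABC", "part": "P-100", "units": 500, "delivery_date": "2024-03-01"},
    {"supplier": "XYZ", "part": "P-200", "units": None, "delivery_date": "2024-03-05"},
    {"supplier": "", "part": "P-300", "units": 250, "delivery_date": None},
    {"supplier": "ABC", "part": "P-100", "units": -40, "delivery_date": "2024-03-09"},
]


def is_missing(value: Any) -> bool:
    """Treat None and blank strings as missing values."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def completeness(rows, fields):
    """Return the fraction of rows holding a non-missing value for each field."""
    total = len(rows)
    if total == 0:
        return {field: 0.0 for field in fields}
    return {
        field: sum(not is_missing(row.get(field)) for row in rows) / total
        for field in fields
    }


def invalid_units(rows):
    """Return rows whose unit count is present but not a positive number."""
    return [
        row for row in rows
        if not is_missing(row.get("units")) and row["units"] <= 0
    ]


report = completeness(records, ["supplier", "part", "units", "delivery_date"])
bad_rows = invalid_units(records)

for field, fraction in report.items():
    print(f"{field}: {fraction:.0%} complete")
print(f"rows with invalid unit counts: {len(bad_rows)}")
```

A report like this gives the team an early, concrete basis for deciding whether the available data can support the prototype or whether cleansing must come first.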

In typical AI and machine learning projects, algorithms for developing early models are selected, the models are trained and tested, and the results are shared with stakeholders and frontline workers. Upon evaluation, a decision is made either to optimize the process further (as needed) and move on or to repeat the process to improve the accuracy of the envisioned solution.
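The train, test, and evaluate cycle can be illustrated with a deliberately small sketch. It assumes synthetic data, a simple least-squares line as the "model," and a hypothetical accuracy target (`TARGET_MAE`); none of these come from the supply chain example, and a real project would typically use a machine learning framework and validated data.

```python
import random


def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_absolute_error(model, xs, ys):
    """Average absolute difference between predictions and actual values."""
    slope, intercept = model
    return sum(abs(slope * x + intercept - y) for x, y in zip(xs, ys)) / len(xs)


# Synthetic data (an assumption): product orders (x) vs. components consumed (y).
rng = random.Random(42)
data = [(x, 3.0 * x + 10.0 + rng.uniform(-2.0, 2.0)) for x in range(1, 41)]

# Hold out 20 percent of the data for testing.
rng.shuffle(data)
split = int(len(data) * 0.8)
train, test = data[:split], data[split:]

model = fit_line([x for x, _ in train], [y for _, y in train])
test_mae = mean_absolute_error(model, [x for x, _ in test], [y for _, y in test])

# Hypothetical acceptance threshold that would be agreed upon with stakeholders.
TARGET_MAE = 2.5
decision = "move on" if test_mae <= TARGET_MAE else "iterate"
print(f"test MAE = {test_mae:.2f}; decision: {decision}")
```

The point of the sketch is the shape of the loop: the evaluation result on held-out data, compared against a target that stakeholders accept, drives the decision to move on or iterate.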

Cloud-based platforms are often used for prototype development. Benefits include the following:

Faster access to needed software and AI resources

An ability to spin up or spin down additional storage and processing resources with a pay-as-you-go processing model

An ability to support production-level workloads when moving beyond the prototype stage

Well-understood security capabilities that become especially important later when development efforts transition into production

User Interface Prototypes

Prototype development will often begin with a focus on the user interface since stakeholders can more easily relate to the usefulness of the solution when data is presented to them in this form. The data will sometimes come from spreadsheets already being used by frontline workers or other readily available data sources. Where such data is not readily available, datasets simulating real data are sometimes substituted.

Reports and dashboards are prepared that mimic the production-level solutions that will be built later. Automated actions (such as the sending of alerts, emails, and other triggers) might also be developed or simulated and demonstrated to stakeholders.

Key suppliers for products and the product components that they supply

Ratings of suppliers (based on previous orders delivered on time)

Components currently on hand within the plant(s)

Components on order including order status and expected delivery dates

Components in transit from suppliers to the plant(s)

Incoming product orders for the plant(s) with promised delivery dates

Anticipated component shortfalls, surplus, and timeline

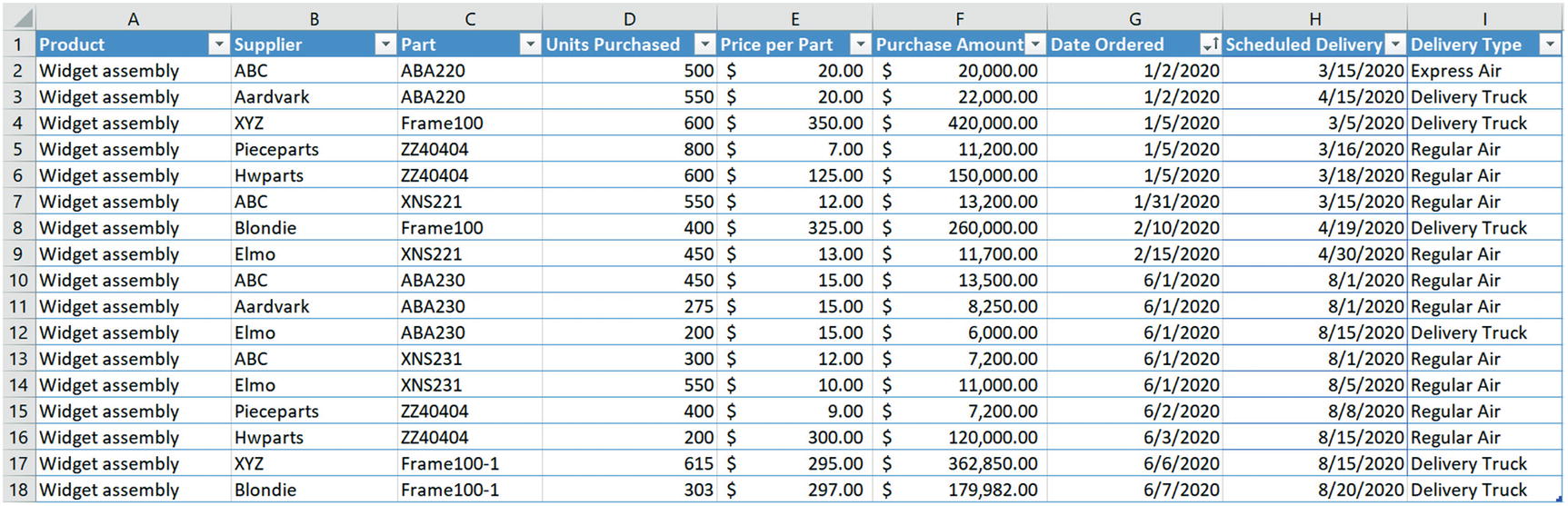

Figure 5-1. Sample supplier, parts, and orders data
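To show how sample data of this kind could feed the "anticipated component shortfalls and surplus" view, here is a minimal sketch. The component names, quantities, and the `ComponentPosition` structure are hypothetical, and the calculation intentionally ignores timing (expected delivery dates versus promised order dates), which a fuller prototype would need to consider.

```python
from dataclasses import dataclass


@dataclass
class ComponentPosition:
    """Hypothetical snapshot of supply and demand for one component."""
    component: str
    on_hand: int     # units currently in the plant
    on_order: int    # units ordered but not yet shipped
    in_transit: int  # units shipped but not yet received
    required: int    # units needed for incoming product orders


def net_position(position: ComponentPosition) -> int:
    """Positive means surplus, negative means shortfall."""
    supply = position.on_hand + position.on_order + position.in_transit
    return supply - position.required


# Illustrative values only.
positions = [
    ComponentPosition("bracket", on_hand=120, on_order=200, in_transit=50, required=400),
    ComponentPosition("motor", on_hand=30, on_order=0, in_transit=10, required=25),
    ComponentPosition("sensor", on_hand=75, on_order=25, in_transit=0, required=100),
]

shortfalls = {p.component: -net_position(p) for p in positions if net_position(p) < 0}
surpluses = {p.component: net_position(p) for p in positions if net_position(p) > 0}

print("shortfalls:", shortfalls)
print("surpluses:", surpluses)
```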

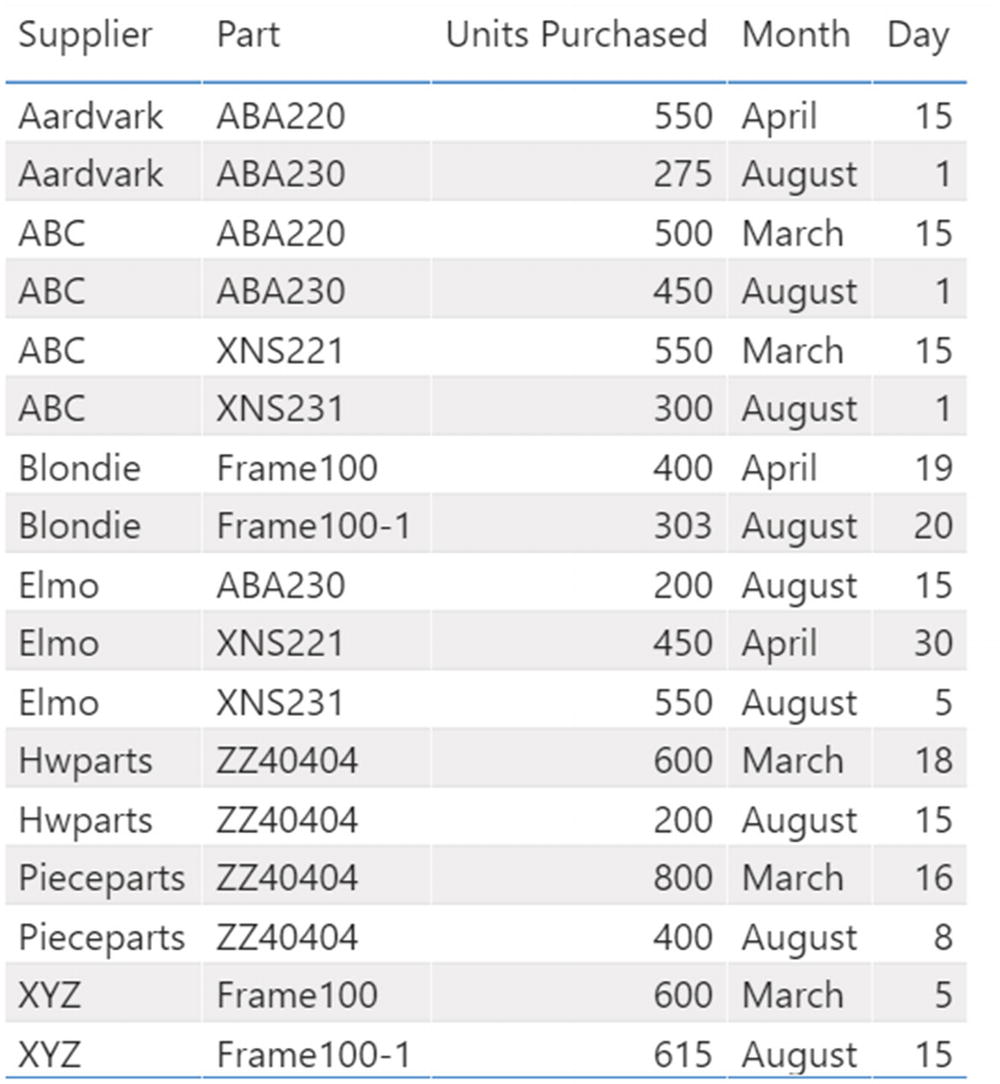

When tabular reports and visualizations are created to serve as user interfaces using sample datasets, they are reviewed with key stakeholders and frontline workers prior to further prototype development. Each group will likely want to focus on certain information that directly impacts their ability to make decisions, especially if it also impacts their ability to meet personal and broader business goals, provides opportunities for career advancement, and drives their compensation.

Figure 5-2. Tabular report showing suppliers, parts, units, and delivery dates

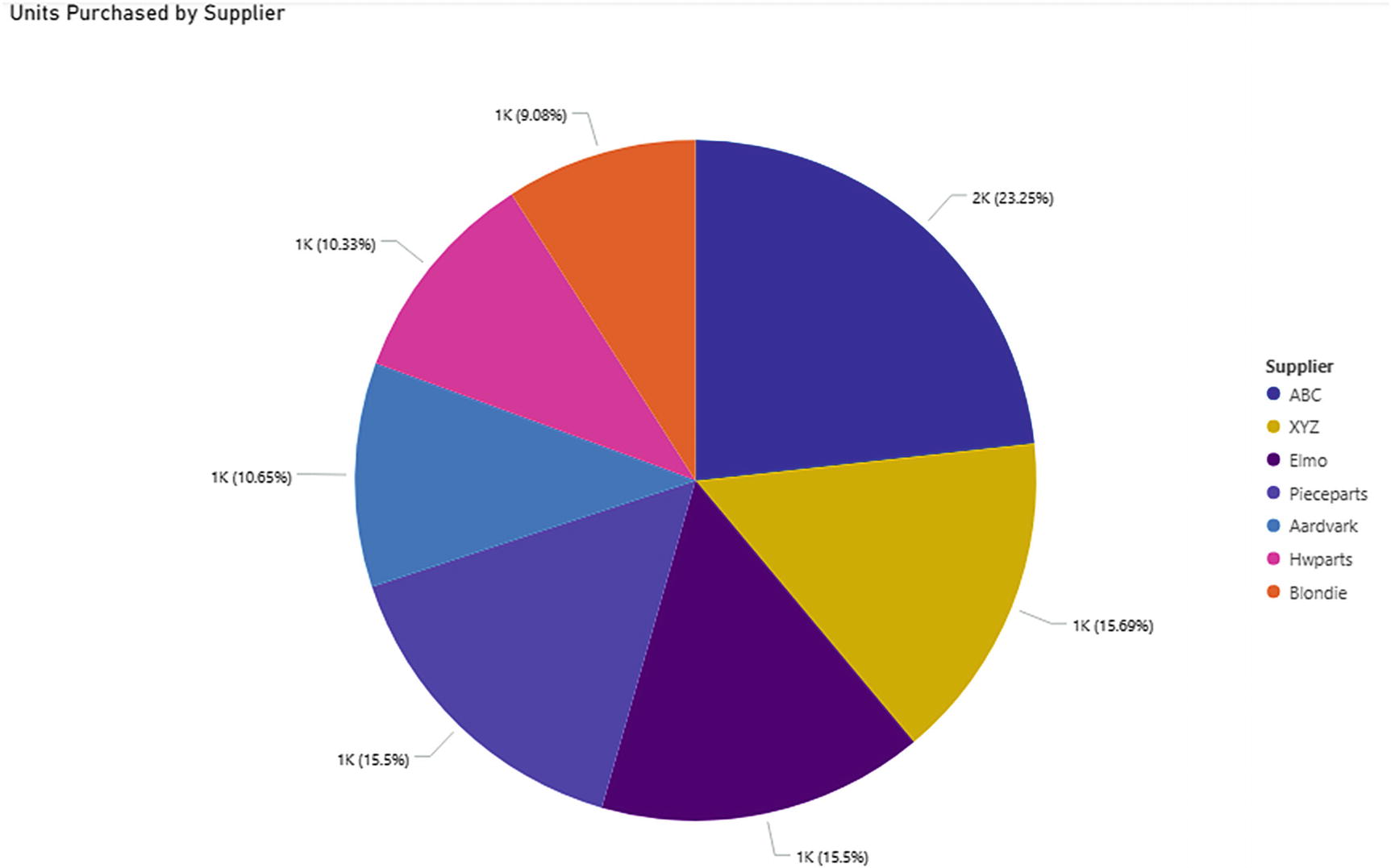

The plant managers and CFO also expressed an interest in seeing the total number of units expected from each supplier to better understand their share of providing critical parts. The pie chart visualization provided by Power BI gives us a nice way to quickly understand the distribution.

As shown in Figure 5-3, we can observe that there is a somewhat even volume distribution of units provided by the suppliers. As viewed here, supplier ABC provides the most parts (23 percent) and Blondie the fewest (9 percent) with others falling in between.

Figure 5-3. Share of number of parts provided by each supplier

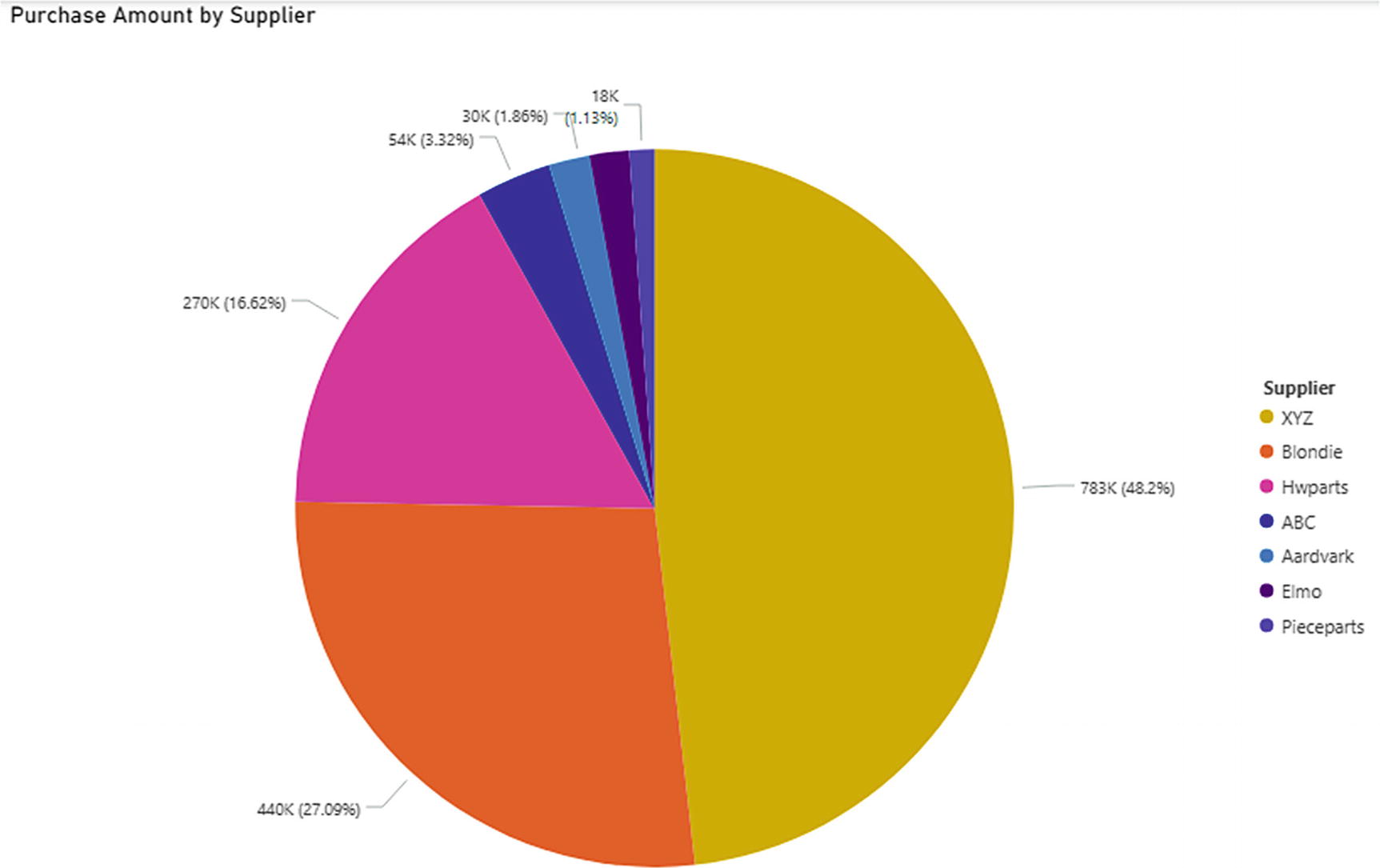

Figure 5-4. Share of spending with each supplier
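The arithmetic behind share-by-supplier charts such as these is straightforward, and a short sketch can make it concrete. The supplier names, unit counts, and prices below are hypothetical and do not reproduce the figures' data. They were chosen to show that a supplier's share of units and its share of spending can differ considerably.

```python
from collections import defaultdict

# Hypothetical order lines: (supplier, units, unit_price).
order_lines = [
    ("Supplier A", 400, 2.0),
    ("Supplier B", 250, 4.0),
    ("Supplier A", 100, 2.0),
    ("Supplier C", 250, 8.0),
]


def percentage_shares(totals):
    """Convert per-supplier totals into percentages of the grand total."""
    grand_total = sum(totals.values())
    return {name: round(100 * value / grand_total, 1) for name, value in totals.items()}


units_by_supplier = defaultdict(float)
spend_by_supplier = defaultdict(float)
for supplier, units, unit_price in order_lines:
    units_by_supplier[supplier] += units
    spend_by_supplier[supplier] += units * unit_price

unit_shares = percentage_shares(units_by_supplier)
spend_shares = percentage_shares(spend_by_supplier)

print("share of units:", unit_shares)
print("share of spending:", spend_shares)
```

In this made-up data, the supplier providing half of the units accounts for only a quarter of the spending, which is the kind of contrast that different stakeholders (plant managers versus the CFO, for instance) may care about.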

After feedback is gathered and the reports and visualizations are modified as needed, they can be published for wider distribution, further review, and use. They can also be inserted into dashboards that include many different reports and visualizations representing output from multiple datasets. Thus, a dashboard can provide a consolidated view of the entire situation, enabling faster decision-making when solving the identified business problem.

Drilling to greater detail

Viewing or visualizing the data in additional ways

Adding more data (e.g., providing a longer history or additional data sources)

Cleansing the data present

Adding functionality (such as providing predictive capabilities or automated alerts)

Deployment to a wider group of users

Given that we are at a prototype stage, adding artificially created data and/or simulating additional functionality is sometimes deemed adequate at this time.

Though user interfaces can excite your audiences and lead them to believe that a production solution is near, it is important to remind them that they are looking at just a prototype. If you are a developer, you likely realize that much of the work is just beginning. But you now should have gained solid direction on what is important to each audience that you are developing for.

Applications vs. Custom Build

At this point, we might believe that the prototype is starting to resemble an off-the-shelf application. When off-the-shelf applications are deployed in a cloud environment, they are referred to as Software-as-a-Service (SaaS) offerings.

SaaS offerings remove significant requirements regarding IT’s involvement in deployment. SaaS providers are responsible for configuring and managing the applications, data, and underlying data management platforms, middleware, operating systems, virtualization, servers and storage, and networking. This can greatly speed time to implementation of these solutions and simplify support.

There are trade-offs that should be evaluated since the available applications may or may not closely align with the envisioned solution and could have limited flexibility in addressing the organization’s unique needs. It is a good idea to perform a gap analysis comparing what is required and what the SaaS application provides. As the analysis is performed, consider currently mandated requirements and possible future or deferred requirements.

Measure shortages and excess inventory within plants.

Predict shortages and excess inventory within plants.

Measure and manage shortages and excess inventory across multiple plants.

Predict shortages and excess inventory across multiple plants.

Alert plant managers as to emerging shortages or excess inventory.

Manage relationships with multiple suppliers.

Measure and rate suppliers based on previous on-time delivery performance.

Alert suppliers as to emerging shortages or excess inventory.

Set production goals within/across plants and measure outcomes.

Evaluate multiple production objectives and plans simultaneously.

Report using data from existing OLTP applications such as those used for demand management, order fulfillment, manufacturing management, supplier relationship management, and/or returns management.

Report using real-time manufacturing data from the plant floor.

Report using real-time supplies location data.

Provide multi-language support for plants/suppliers around the world.

Provide multi-currency support for plants/suppliers around the world.
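A gap analysis over a requirements list like the one above can be sketched as a simple comparison of what is required against what an application provides. The priorities assigned to each requirement and the capabilities attributed to the SaaS application here are hypothetical. In practice, both would come from stakeholder input and vendor evaluation.

```python
# Requirements drawn from the list above, each tagged with a hypothetical priority.
requirements = {
    "measure shortages and excess inventory within plants": "mandated",
    "predict shortages and excess inventory within plants": "mandated",
    "alert plant managers to emerging shortages": "mandated",
    "rate suppliers on on-time delivery": "deferred",
    "multi-currency support": "deferred",
}

# Hypothetical capabilities of a candidate SaaS application.
saas_capabilities = {
    "measure shortages and excess inventory within plants",
    "rate suppliers on on-time delivery",
    "multi-currency support",
}


def gap_analysis(required, provided):
    """Group requirements the application does not provide by priority."""
    gaps = {"mandated": [], "deferred": []}
    for name, priority in required.items():
        if name not in provided:
            gaps[priority].append(name)
    return gaps


gaps = gap_analysis(requirements, saas_capabilities)
covered = len(requirements) - sum(len(names) for names in gaps.values())
coverage = covered / len(requirements)

print(f"coverage: {coverage:.0%}")
for priority, names in gaps.items():
    print(f"{priority} gaps: {names}")
```

Separating mandated gaps from deferred ones helps focus the discussion: a missing mandated capability may rule out the application or force customization, whereas a deferred gap may be acceptable for now.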

Organizations typically involve key stakeholders in performing the gap analysis since they will understand the business implications of how the application operates and the impact of functionality that is missing. Sometimes, as stakeholders better understand the capabilities that an application provides, new areas of interest are uncovered beyond what was defined as the problem and the desired solution in the Design Thinking workshop.

There is a danger of scope creep when evaluating the functionality provided by applications. Controlling the scope of the prototype development effort should remain top of mind. Staying true to the defined problem and solution definition identified in the Design Thinking workshop can help keep the project on track.

Developing and deploying custom solutions removes functionality limitations that could be present in applications, but also requires a more significant effort. When creating custom solutions, many choose to deploy using Platform-as-a-Service (PaaS) offerings from cloud vendors. The cloud vendor is then responsible for configuring and managing the underlying data management platforms, middleware, operating systems, virtualization, servers and storage, and networking. The organization that is building the custom solution assumes ownership of configuring and managing their applications and data.

Yet more flexibility is provided by Infrastructure-as-a-Service (IaaS) cloud-based offerings. Organizations can mix and match components from a wider variety of vendors and deploy them upon a choice of cloud platforms. However, greater configuration, management, and integration ownership is placed upon the IT organization. Though deploying IaaS components was very common in the earlier years of cloud adoption, more recently PaaS has gained significant mindshare by simplifying the role of IT in these efforts.
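The division of responsibility across the three service models can be summarized in a small data structure. The SaaS and PaaS splits follow the descriptions given earlier in this section. The IaaS split (the organization managing everything from the operating system upward) is an assumption based on how that model is commonly described, not something stated in the text.

```python
# Technology layers, listed from the top of the stack to the bottom.
LAYERS = [
    "applications",
    "data",
    "data management platforms",
    "middleware",
    "operating systems",
    "virtualization",
    "servers and storage",
    "networking",
]

# Number of layers (counted from the top) that the organization manages itself.
# The IaaS value is an assumption; the SaaS and PaaS values follow the text.
ORGANIZATION_MANAGED_LAYERS = {"SaaS": 0, "PaaS": 2, "IaaS": 5}


def responsibilities(service_model: str) -> dict:
    """Map each layer to the party that configures and manages it."""
    boundary = ORGANIZATION_MANAGED_LAYERS[service_model]
    return {
        layer: "organization" if index < boundary else "provider"
        for index, layer in enumerate(LAYERS)
    }


for service_model in ORGANIZATION_MANAGED_LAYERS:
    owned = [
        layer for layer, owner in responsibilities(service_model).items()
        if owner == "organization"
    ]
    print(f"{service_model}: organization manages {owned or 'nothing in the stack'}")
```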

What might an architecture look like supporting a software and AI project deployed to the cloud? We explore a couple of common reference architectures in the next section.

Reference Architectures

A reference architecture can provide a technology template for a potential software and AI solution. Adopting a reference architecture can also help an organization adopt a common vocabulary used in describing key components and lead them toward proven best practices routinely used when deploying similar solutions.

There are many reference architectures available. Some align to specific applications and are provided by the applications vendors. Others can be used for completely custom solutions and where a variety of applications exist.

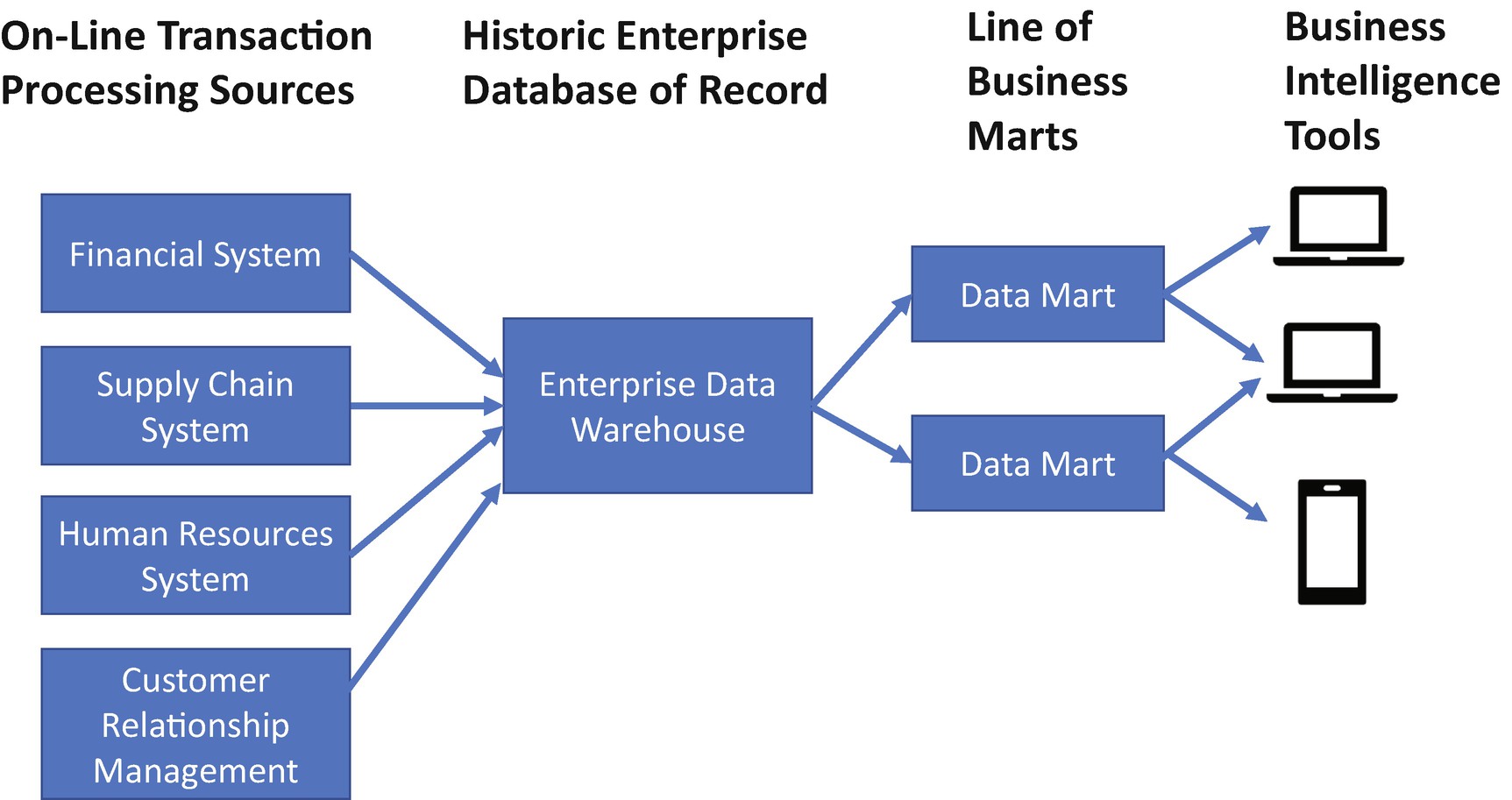

A classic reference architecture that could be relevant in our supply chain optimization example includes online transaction processing (OLTP) applications and a custom data warehouse and data marts. We might determine that we can build a viable solution by gathering data using batch feeds from financial, supply chain, human resources (HR), and customer relationship management (CRM) systems into an enterprise data warehouse. Business intelligence, machine learning, and other AI tools could be used to manipulate the data stored in the enterprise data warehouse or in data marts and deliver the information that we need.

As the data is sourced only from transactional systems in this architecture, all of it resides in tables. Hence, this architecture consists of relational databases for data management, and the data is sourced from OLTP systems using extraction, transformation, and loading (ETL) tools.

Figure 5-5. Typical OLTP applications and data warehouse footprint

In our supply chain optimization example, gathering data from these transactional data sources, building reports and dashboards, and incorporating machine learning and AI to make predictions might be all that is needed to solve the organization’s problems. In that situation, the architecture shown in Figure 5-5 would suffice.
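The batch flow from an OLTP source into a data warehouse can be illustrated with a minimal ETL sketch. It uses two in-memory SQLite databases as stand-ins for the source system and the warehouse. The table names, columns, and sample rows are hypothetical, and production ETL would rely on dedicated tooling rather than hand-written scripts like this one.

```python
import sqlite3

# Stand-in for an OLTP source system (hypothetical schema and data).
oltp = sqlite3.connect(":memory:")
oltp.execute(
    "CREATE TABLE purchase_orders ("
    "id INTEGER PRIMARY KEY, supplier TEXT, part TEXT, units INTEGER)"
)
oltp.executemany(
    "INSERT INTO purchase_orders (supplier, part, units) VALUES (?, ?, ?)",
    [(" abc ", "P-100", 500), ("ABC", "P-200", 300), ("xyz", "P-100", 200)],
)

# Stand-in for the enterprise data warehouse.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE supplier_units (supplier TEXT PRIMARY KEY, total_units INTEGER)"
)


def extract(connection):
    """Pull raw order rows from the source system."""
    query = "SELECT supplier, part, units FROM purchase_orders"
    return connection.execute(query).fetchall()


def transform(rows):
    """Standardize supplier names and aggregate units per supplier."""
    totals = {}
    for supplier, _part, units in rows:
        key = supplier.strip().upper()
        totals[key] = totals.get(key, 0) + units
    return sorted(totals.items())


def load(connection, rows):
    """Write the aggregated rows into the warehouse in one transaction."""
    with connection:
        connection.executemany(
            "INSERT OR REPLACE INTO supplier_units VALUES (?, ?)", rows
        )


load(warehouse, transform(extract(oltp)))
result = warehouse.execute(
    "SELECT supplier, total_units FROM supplier_units ORDER BY supplier"
).fetchall()
print(result)
```

The transform step is where inconsistencies across source systems (here, differently formatted supplier names) are reconciled before the data reaches reports, dashboards, or machine learning models.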

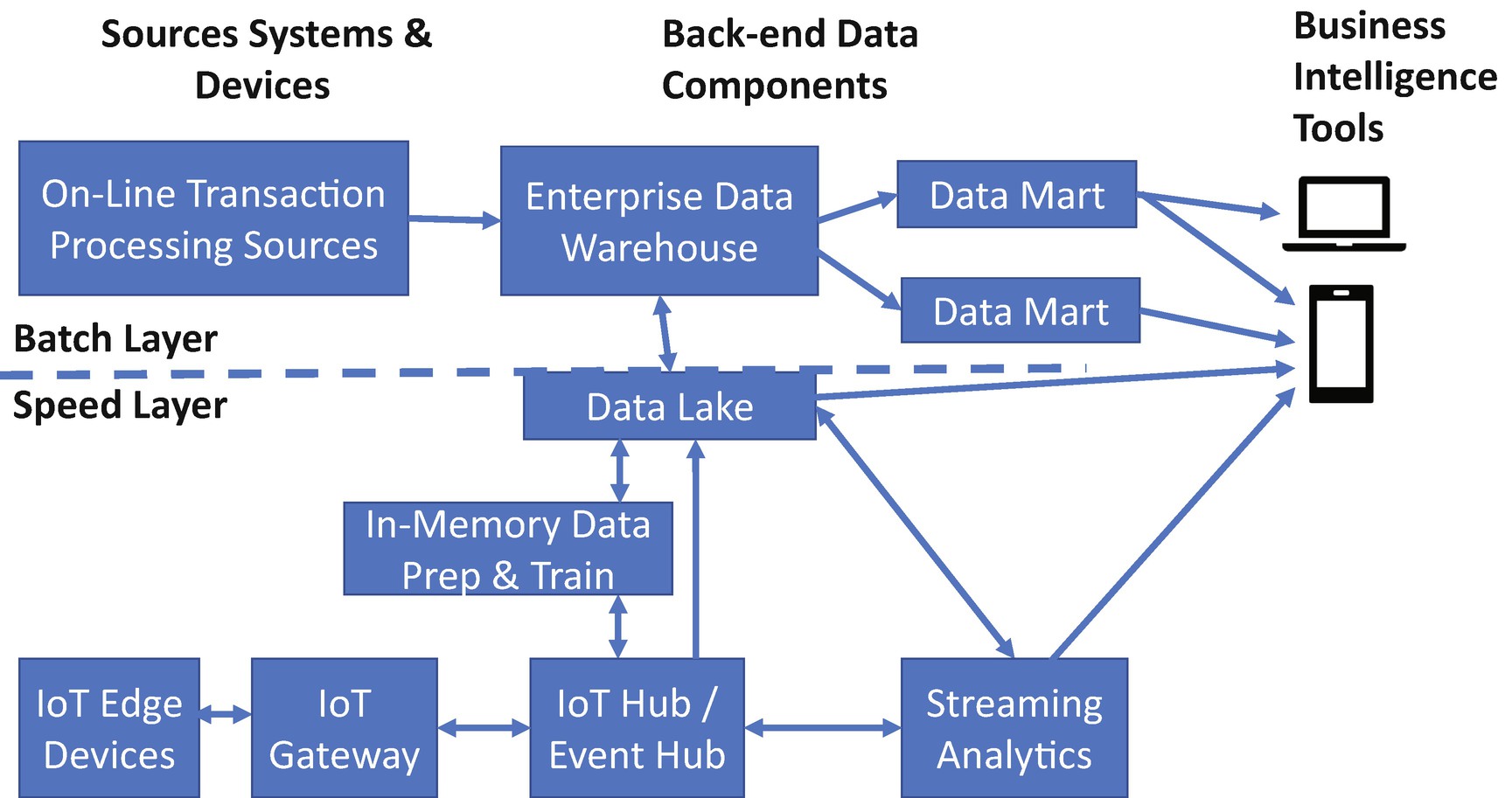

If there is a need to extend the data sources to Internet-of-Things (IoT) devices that produce streaming data and enable near real-time processing of the data, additional components must be introduced into the architecture. These would include components such as the IoT edge devices themselves, IoT gateways, IoT hubs, streaming analytics engines, in-memory data preparation and training tools, and a data lake serving as an added data management system.

Figure 5-6. Typical footprint with IoT devices as streaming data sources

In good architecture design, form follows function. The type of architecture chosen is driven by the data required in the defined solution, the types of data sources and frequency of sourcing, operations that are performed upon the data, and how the data and information must be presented.

In our supply chain optimization example, if we plan to use data from IoT edge devices to report on the location and volume of the supplies and stream the data in near real time, we might choose to analyze this data at the edge (in the devices), along the data path, and/or in a data lake. Thus, the architecture that we would choose would likely resemble that shown in Figure 5-6.
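Analysis along the data path often amounts to computing over a moving window of recent readings rather than over data at rest. The sketch below shows one such computation, a sliding average of reported supply levels that triggers an alert when it drops below a threshold. The readings, window size, and threshold are hypothetical, and a real deployment would use a streaming analytics engine rather than an in-process loop.

```python
from collections import deque


class SlidingAverage:
    """Average over the most recent `size` readings."""

    def __init__(self, size: int):
        self.size = size
        self.window = deque(maxlen=size)  # oldest reading drops off automatically

    def update(self, value: float) -> float:
        self.window.append(value)
        return sum(self.window) / len(self.window)

    def is_full(self) -> bool:
        return len(self.window) == self.size


def low_supply_alerts(readings, window_size, threshold):
    """Return (timestamp, average) pairs where the full-window average is below threshold."""
    average = SlidingAverage(window_size)
    alerts = []
    for timestamp, units in readings:
        current = average.update(units)
        if average.is_full() and current < threshold:
            alerts.append((timestamp, current))
    return alerts


# Hypothetical readings from an IoT device: (timestamp, units detected).
readings = [(1, 100), (2, 90), (3, 80), (4, 60), (5, 40)]
alerts = low_supply_alerts(readings, window_size=3, threshold=75)
print(alerts)
```

Waiting until the window is full before alerting is a design choice that avoids reacting to the first one or two readings; whether that trade-off is appropriate depends on how quickly the plant needs to respond.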

So far, we have approached the reference architecture at a conceptual level. What follows next is a more detailed evaluation of individual components available from cloud providers and other vendors. In many organizations, there are standards established defining many of these components to take advantage of skills already present in the organization and/or previous experience. Sometimes, a project such as this one might cause the organization to reevaluate some or all of the component choices previously made (especially if there is some dissatisfaction with previous technologies selected).

In the supply chain optimization example, the organization has decided that it wants to employ AI to predict future supply chain shortages. When embarking on these types of projects, the authors have found that organizations sometimes already have data scientists using existing machine learning and AI footprints. However, we have also frequently observed that organizations must hire new data scientists to take part in new projects due to limited skills or limited bandwidth available from those already onboard.

If faced with hiring new data science talent, it is useful to understand that there are a variety of deep learning and AI frameworks and toolkits available. Some popular examples include TensorFlow, PyTorch, and cognitive toolkits from the cloud vendors. Data scientists will very often have a strong preference to use one of these offerings based on their training. They usually also have a favorite programming language in mind. Thus, the talent hired can impact the specific components that are defined in the future state architecture.

Prototype and Solution Evaluation

Prototype development, determining alignment of the prototype with the desired solution, and evolving the organization’s architecture are not usually accomplished in a single sequential process. At each step in the incremental building process, a best practice is to have developers, key stakeholders, and frontline workers provide feedback and input into the direction of the effort. These reviews should take place frequently, typically every week or two.

As with the Design Thinking methodology that we covered in previous chapters, our intent in the prototype creation stage is to fail fast by quickly identifying mandated changes and to fail forward by continuing to improve the likelihood of solution success. The ongoing engagement of this group also serves to keep everyone up to date on the progress being made and can help build a feeling of collaborative ownership for the project.

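Reasons why an evaluation might cause us to take another look at the potential solution include the following:

A lack of satisfaction with the prototype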

A growing concern about eventual cost of a solution

A lack of belief in solution business benefits

Changing business conditions and requirements that are placing more emphasis on solving other problems

Turnover in organization sponsorships

A growing concern about the risk of solution success (especially among sponsors)

A realization that implementation and user skills are lacking in the organization

Discovery of major skills gaps in implementation partners determined vital to success

If the problem that the organization needs to focus on changes, it is time to revisit the problems that were identified in the Design Thinking workshop. It is possible that this new, more important problem was also explored there. If not, another Design Thinking workshop should be held to uncover more information about the new problem and define new potential solutions.

If the problem that we focused on is still considered to be the right one to solve but the selected solution no longer appears to be viable, we should revisit the other solutions proposed during the Design Thinking workshop. We might find a better path to follow there.

We might also determine that the solution could be viable but needs an adjustment. This can be especially true if new stakeholders enter the picture, skills gaps are determined, and/or cost containment becomes an overriding concern. Here, the nature and scope of the proposed solution and the envisioned project to build and deploy it should be reevaluated.

Summary

In this chapter, we explored how to use the problem space and solution space information that was gathered in the earlier Design Thinking workshop to create a solution prototype composed of software and/or AI technology components. We emphasized doing just enough during this stage of development to prove the viability of the defined solution.

We covered the following topics:

Approaches used in SDLC prototyping

Steps in rapid building and testing of user interface prototypes

When to consider applications vs. an entirely custom-built solution

How to leverage reference architectures

Evaluating the success of prototypes and possible reasons to revisit the solution

We also described how these techniques and reference architectures could be applied in the supply chain optimization example. These illustrations should help you see how a software and AI project can become a natural outcome of a Design Thinking workshop and help you understand the value that the earlier workshop provides.

In the next chapter, we move beyond prototype development with a focus on what should be done after the prototype proves to be successful. In many organizations, a full-fledged project must be sold to senior executives to gain needed funding. We’ll describe how to do that. We’ll also explore moving the envisioned solution into the production development stage once project approval is gained.