Chapter 9. Chaos and Operations

If chaos engineering were just about surfacing evidence of system weaknesses through Game Days and automated chaos experiments, then life would be less complicated. Less complicated, but also much less safe!

In the case of Game Days, you can achieve a good deal of safety by executing the Game Day against a sandbox environment and ensuring that everyone (participants, observers, and external parties) is aware the Game Day is happening.1

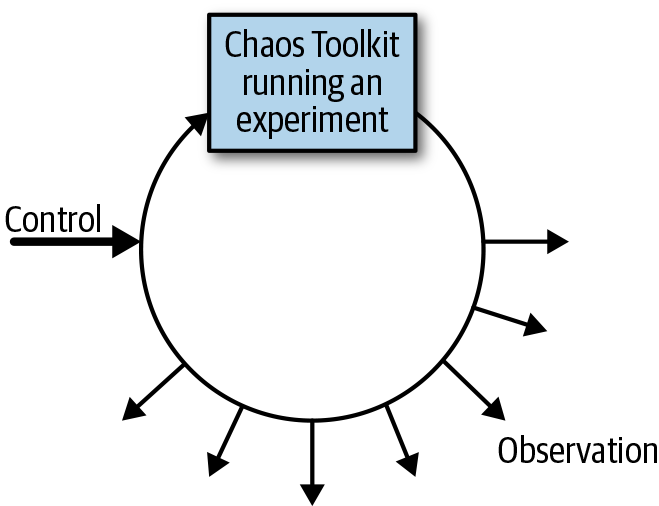

The challenge is harder with automated chaos experiments. Automated experiments could potentially be executed by anyone, at any time, and possibly against any system.2 There are two main categories of operational concern when it comes to your automated chaos experiments (Figure 9-1):

- Control: You or other members of your team may want to seize control of a running experiment. For example, you may want to shut it down immediately, or you may just want to be asked whether a particularly dangerous step in the experiment should be executed or skipped.

- Observation: You want your experiment to be debuggable as it runs in production. You should be able to see which experiments are currently running and which step each has just executed, and then correlate that with what other elements of your system are doing at the same time.

Figure 9-1. The control and observation operational concerns of a running automated chaos experiment

There are many implementations and system integrations necessary to support these two concerns, including dashboards, centralized logging systems, management and monitoring consoles, and distributed tracing systems; you can even surface information into Slack!3 The Chaos Toolkit can meet all these needs by providing both control and observation in one operational API.

Experiment “Controls”

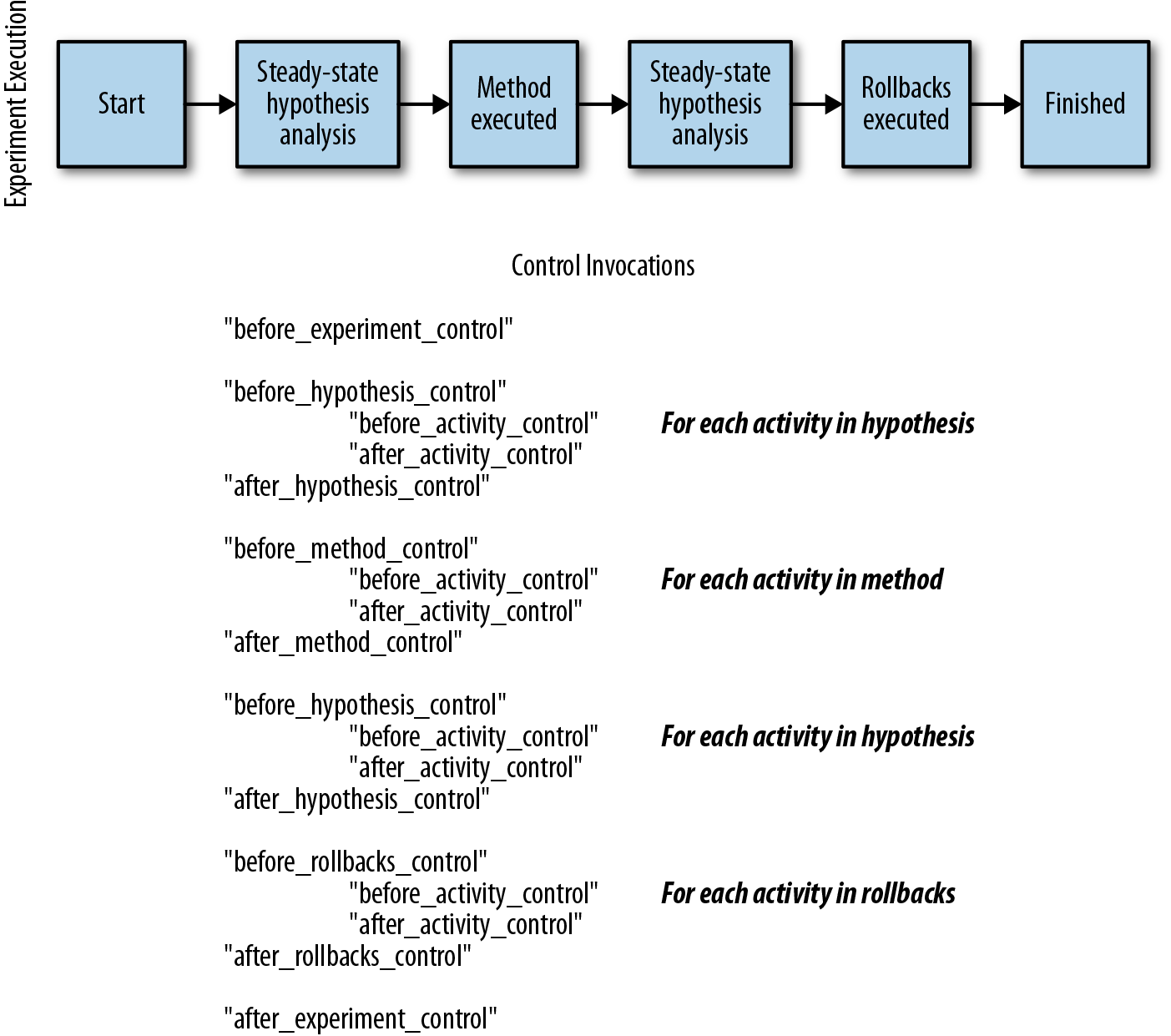

A Chaos Toolkit control listens to the execution of an experiment and, if it decides to do so, has the power to change or skip the execution of an activity such as a probe or an action; it is even powerful enough to abort the whole experiment execution!

The control is able to intercept the control flow of the experiment by implementing a corresponding callback function, and doing any necessary work at those points as it sees fit. When a control is enabled in the Chaos Toolkit, the toolkit will invoke any available callback functions on the control as an experiment executes (see Figure 9-2).

Figure 9-2. A control’s functions, if implemented, are invoked throughout the execution of an experiment
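The "if implemented" part of that invocation can be pictured with a small sketch. This is not the Chaos Toolkit's own dispatch code; `invoke_callback` and `demo_control` are hypothetical names used only to illustrate the idea that a callback is looked up by name and simply skipped when the control does not provide it.

```python
from types import SimpleNamespace
from typing import Any, Dict, List


def invoke_callback(control: Any, name: str, **kwargs: Any) -> bool:
    """Call `name` on `control` if it is implemented; report whether it ran."""
    callback = getattr(control, name, None)
    if not callable(callback):
        return False  # an unimplemented callback is simply skipped
    callback(**kwargs)
    return True


calls: List[str] = []

# A hypothetical control that implements only two of the possible callbacks.
demo_control = SimpleNamespace(
    before_experiment_control=lambda context, **kw: calls.append(
        "before:" + context["title"]
    ),
    after_experiment_control=lambda context, state, **kw: calls.append(
        "after:" + context["title"]
    ),
)

experiment: Dict[str, Any] = {"title": "demo"}
invoke_callback(demo_control, "before_experiment_control", context=experiment)
invoke_callback(demo_control, "before_method_control", context=experiment)  # skipped
invoke_callback(demo_control, "after_experiment_control", context=experiment, state={})
```

After these three calls, `calls` holds one entry for each implemented callback and nothing for the missing `before_method_control`.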

Each callback function is passed any context that is available at that point in the experiment’s execution. For example, the following shows the context that is passed to the after_hypothesis_control function:

    def after_hypothesis_control(context: Hypothesis,
                                 state: Dict[str, Any],
                                 configuration: Configuration = None,
                                 secrets: Secrets = None,
                                 **kwargs):
        ...

In this case, the steady-state hypothesis itself is passed as the context to the after_hypothesis_control function. The control’s callback function can then decide whether to proceed, to do work such as sending a message to some other system, or even to amend the hypothesis if it is useful to do so. This is why a control is so powerful: it can observe and control the execution of an experiment as it is running.
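As a minimal sketch of the "sending a message to some other system" case, the callback below records a one-line notification after the hypothesis has been analyzed. Several things here are assumptions made for illustration: the type aliases stand in for the Chaos Toolkit's own types so the example runs on its own, the `notifications` list is a hypothetical substitute for a real messaging integration, and the `steady_state_met` key is assumed to be present in the state passed to the callback.

```python
from typing import Any, Dict, List

# Stand-ins for the toolkit's types so this sketch is self-contained.
Hypothesis = Dict[str, Any]
Configuration = Dict[str, Any]
Secrets = Dict[str, Any]

# Hypothetical sink; a real control might post to a chat channel instead.
notifications: List[str] = []


def after_hypothesis_control(context: Hypothesis,
                             state: Dict[str, Any],
                             configuration: Configuration = None,
                             secrets: Secrets = None,
                             **kwargs):
    # Assumption: `state` carries a "steady_state_met" flag.
    title = context.get("title", "<untitled>")
    if state.get("steady_state_met"):
        notifications.append(f"Hypothesis '{title}' held")
    else:
        notifications.append(f"Hypothesis '{title}' deviated")


# Simulate the toolkit calling the control twice with different outcomes.
hypothesis = {"title": "Service responds"}
after_hypothesis_control(hypothesis, {"steady_state_met": True, "probes": []})
after_hypothesis_control(hypothesis, {"steady_state_met": False, "probes": []})
```

The control only observes here; it neither changes the hypothesis nor stops the experiment.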

A Chaos Toolkit control is implemented in Python and provides the following full set of callback functions:

    from typing import Any, Dict, List

    from chaoslib.types import Activity, Configuration, Experiment, \
        Hypothesis, Journal, Run, Secrets


    def configure_control(config: Configuration, secrets: Secrets):
        # Triggered before an experiment's execution.
        # Useful for initialization code for the control.
        ...


    def cleanup_control():
        # Triggered at the end of an experiment's run.
        # Useful for cleanup code for the control.
        ...


    def before_experiment_control(context: Experiment,
                                  configuration: Configuration = None,
                                  secrets: Secrets = None, **kwargs):
        # Triggered before an experiment's execution.
        ...


    def after_experiment_control(context: Experiment, state: Journal,
                                 configuration: Configuration = None,
                                 secrets: Secrets = None, **kwargs):
        # Triggered after an experiment's execution.
        ...


    def before_hypothesis_control(context: Hypothesis,
                                  configuration: Configuration = None,
                                  secrets: Secrets = None, **kwargs):
        # Triggered before a hypothesis is analyzed.
        ...


    def after_hypothesis_control(context: Hypothesis, state: Dict[str, Any],
                                 configuration: Configuration = None,
                                 secrets: Secrets = None, **kwargs):
        # Triggered after a hypothesis is analyzed.
        ...


    def before_method_control(context: Experiment,
                              configuration: Configuration = None,
                              secrets: Secrets = None, **kwargs):
        # Triggered before an experiment's method is executed.
        ...


    def after_method_control(context: Experiment, state: List[Run],
                             configuration: Configuration = None,
                             secrets: Secrets = None, **kwargs):
        # Triggered after an experiment's method is executed.
        ...


    def before_rollback_control(context: Experiment,
                                configuration: Configuration = None,
                                secrets: Secrets = None, **kwargs):
        # Triggered before an experiment's rollback's block
        # is executed.
        ...


    def after_rollback_control(context: Experiment, state: List[Run],
                               configuration: Configuration = None,
                               secrets: Secrets = None, **kwargs):
        # Triggered after an experiment's rollback's block
        # is executed.
        ...


    def before_activity_control(context: Activity,
                                configuration: Configuration = None,
                                secrets: Secrets = None, **kwargs):
        # Triggered before any experiment's activity
        # (probes, actions) is executed.
        ...


    def after_activity_control(context: Activity, state: Run,
                               configuration: Configuration = None,
                               secrets: Secrets = None, **kwargs):
        # Triggered after any experiment's activity
        # (probes, actions) is executed.
        ...

Using these callback functions, the Chaos Toolkit can trigger a host of operational concern implementations that could passively listen to the execution of an experiment, and broadcast that information to anyone interested, or even intervene in the experiment’s execution.
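The sketch below combines both styles in one hypothetical control: it passively records each activity as it starts and finishes, and it intervenes when an activity marked as dangerous has not been approved. The names `events`, `confirm`, and `AbortExperiment`, the `dangerous` flag on the activity, and the `status` key on the run are all assumptions made for illustration. In a real control you would use whatever interruption mechanism your version of the toolkit provides rather than the local stand-in exception.

```python
from typing import Any, Callable, Dict, List

Activity = Dict[str, Any]  # stand-in for the toolkit's Activity type
Run = Dict[str, Any]       # stand-in for the toolkit's Run type


class AbortExperiment(Exception):
    """Hypothetical stand-in for the toolkit's own way of stopping a run."""


events: List[str] = []  # passive record of what happened


def confirm(activity_name: str) -> bool:
    """Hypothetical approval hook; always declines in this sketch."""
    return False


def before_activity_control(context: Activity, configuration=None,
                            secrets=None, **kwargs):
    events.append(f"starting {context['type']} '{context['name']}'")
    # Intervene only for activities explicitly flagged as dangerous.
    if context.get("dangerous") and not confirm(context["name"]):
        raise AbortExperiment(f"'{context['name']}' was not approved")


def after_activity_control(context: Activity, state: Run, configuration=None,
                           secrets=None, **kwargs):
    events.append(
        f"finished '{context['name']}' with status {state.get('status')}"
    )


# Simulate a harmless probe followed by an unapproved dangerous action.
probe = {"type": "probe", "name": "check-health"}
before_activity_control(probe)
after_activity_control(probe, {"status": "succeeded"})

try:
    before_activity_control(
        {"type": "action", "name": "kill-db", "dangerous": True}
    )
except AbortExperiment as exc:
    events.append(f"aborted: {exc}")
```

Keeping the approval decision behind a single function such as `confirm` makes it easy to swap a console prompt for a chat-based approval without touching the callbacks themselves.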

Enabling Controls

By default, no controls are enabled when you run an experiment with the Chaos Toolkit. If a control implementation is present, it will not be used unless something enables it. A control can be enabled in a couple of different ways:

- By a declaration somewhere in an experiment definition

- Globally, so that it is applied to any experiment that instance of the Chaos Toolkit runs

Controls Are Optional

Controls are entirely optional as far as the Chaos Toolkit is concerned. If the Chaos Toolkit encounters a controls block somewhere in an experiment, the toolkit will try to enable the declared control, but if that control is absent, this is not considered a reason to abort the experiment. This is by design, as controls are seen as additional and optional.

Enabling a Control Inline in an Experiment

Enabling a control in your experiment definition at the top level of the document is useful when you want to indicate that your experiment ideally should be run with a control enabled:4

    {
        ...
        "controls": [
            {
                "name": "tracing",
                "provider": {
                    "type": "python",
                    "module": "chaostracing.control"
                }
            }
        ],
        ...
    }

You can also specify that a control should be invoked only for the steady-state hypothesis, or even only on a specific activity in your experiment.
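As a rough sketch of what such scoping might look like, the following Python builds an experiment definition in which the same tracing control is declared inside the steady-state hypothesis and inside a single activity, rather than at the top level. It assumes that a `controls` block nested at those levels is honored in the same way as the top-level one. The experiment title, the `stop-service` action, and its provider are hypothetical placeholders.

```python
import json

# The same control declaration used in the top-level example.
tracing_control = {
    "name": "tracing",
    "provider": {"type": "python", "module": "chaostracing.control"},
}

experiment = {
    "title": "Scoped controls (illustrative)",
    "description": "Hypothetical experiment showing nested controls blocks",
    "steady-state-hypothesis": {
        "title": "Service is healthy",
        "controls": [tracing_control],  # assumed: applies to the hypothesis only
        "probes": [],
    },
    "method": [
        {
            "type": "action",
            "name": "stop-service",  # hypothetical activity
            "provider": {
                "type": "process",
                "path": "echo",
                "arguments": "stop",
            },
            "controls": [tracing_control],  # assumed: applies to this activity only
        }
    ],
}

# Serialize to the JSON form an experiment file would contain.
print(json.dumps(experiment, indent=2))
```

Note that this definition has no top-level `controls` key, so under the assumption above the control would not be invoked for the rest of the experiment.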

Summary

In this chapter you’ve learned how a Chaos Toolkit control can be used to implement the operational concerns of control and observation. In the next chapter you’ll look at how to use a Chaos Toolkit control to implement integrations that enable runtime observability of your executing chaos experiments.

2 That is, any system that the user can reach from their own computer.

3 Check out the free ebook Chaos Engineering Observability I wrote for O’Reilly in collaboration with Humio for an explanation of how information from running experiments can be surfaced in Slack using the Chaos Toolkit.

4 “Ideally” because, as explained in the preceding note, controls in the Chaos Toolkit are considered optional.