[T]he mind is in its own nature immortal.

TOWARD THE END OF HIS MEMOIR, Vladimir Nabokov expressed his frustration at “having developed an infinity of sensation and thought within a finite existence.”1 It was a theme he had turned to in the last lines of Lolita: “I am thinking of aurochs and angels, the secret of durable pigments, prophetic sonnets, the refuge of art. And this is the only immortality you and I may share.”2 Nabokov died in 1977 and—like all other forms of organic life—relinquished his conscious sensation of being. But many of his thoughts continue to exist, as they do on this page, and in this way Nabokov transcended his finite existence.

It is a power that almost all humans possess—to transcend their existence as organic beings by communicating thoughts that will endure after they die. The ideas of an individual may be communicated in writing or print or electronic media and, before the invention of writing, by oral tradition. In this way, the seemingly ephemeral thoughts that flow through the brain may endure for centuries or more.

Nonverbal thoughts may be communicated through art and technology. The aurochs mentioned by Nabokov refer to cave paintings of the Upper Paleolithic that were created more than 30,000 years ago.3 The visual imaginings of the individuals who crafted these paintings are as fresh today as the words written by Nabokov several decades ago. The earliest known examples of thoughts expressed in material form are the bifacial stone tools of the Lower Paleolithic, some of which are 1.7 million years old.4 Much of the archaeological record, like the historical written record, is a record of thought.

External Thought

[O]ur world is the product of our thoughts.

The immortality of artifact and art is based on a unique human ability to express complex thoughts outside the brain. Although language comes to mind first as the means by which people articulate their ideas, humans externalize thoughts in a wide variety of media. In addition to spoken and written language, these media include music, painting, dance, gesture, architecture, sculpture, and others. One of the most consequential forms of external thought is technology. The earliest humans used and modified natural objects, as do some other animals. But during the past million years, humans have externalized thoughts in the form of technologies that have become increasingly complex and powerful with far-reaching effects on themselves and their environment.

Other animals can communicate their emotional states and simple bits of information, but only humans can project complex structures of thought, or mental representations, outside the brain.5 Like those of other animals, the human brain receives what cognitive psychologists term natural representations. A natural representation might be the perceived or remembered visual image of a waterfall or the sound of the waterfall. Humans have not yet developed the technology to externalize a natural representation, but they can create and project artificial (or “semantic”) representations, such as a painting or verbal description of the perceived or remembered image of the waterfall.6 From 1.7 million years ago onward, the archaeological record is filled with artificial representations.

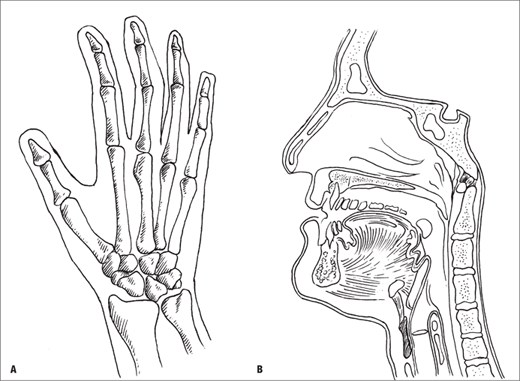

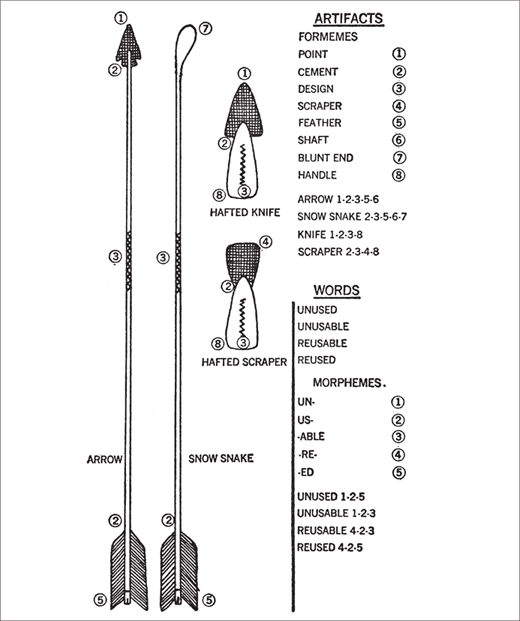

Humans have evolved two specialized organs that are used to communicate or externalize mental representations: the hand and the vocal tract (figure 1.1). The hand apparently evolved first as a means to project thoughts outside the brain, underscoring the seminal role of bipedalism in human origins. Although the primates have had a long history of manipulating objects with their forelimbs, it was upright walking among the earliest humans that freed up the hands to become the highly specialized instruments they are today.7 By roughly 3.5 million years ago, the australopithecine hand exhibits significant divergence from the ape hand—especially with respect to the length of the thumb—and further changes coincide with an increase in brain volume and the earliest known stone tools, at about 2.5 million years ago. The fully modern hand apparently was present at 1.7 to 1.6 million years ago (that is, at the time that the first bifacial tools appear).8

As did the hand, the human vocal tract diverged from the ape pattern. Among modern human adults, the larynx is positioned lower in the neck than it is in apes, and the epiglottis is not in contact with the soft palate. Like the hand, the human vocal tract is a highly specialized instrument for externalizing thought. Reconstructing the evolution of the vocal tract is more difficult, however, than tracing the development of the hand. The vocal tract is composed entirely of soft parts that do not preserve in the fossil record, so it has been reconstructed on the basis of associated bones such as the hyoid. These reconstructions have been controversial, and it remains unclear when the human vocal tract evolved.9 Furthermore, the relationship between the larynx and language is problematic. In theory, a syntactic language may be produced with a much smaller range of sounds than that offered by the human vocal tract.10 The critical question is: When did humans begin to create “artifacts” of sound with the vocal tract?

Figure 1.1 Humans evolved two specialized organs to project artificial or semantic mental representations outside the brain. Under fine motor control, both the hand (A) and the vocal tract (B) can execute a potentially infinite array of subtle and sequentially structured movements to transform information in the brain into material objects or symbolically coded sounds. (Drawn by Ian T. Hoffecker)

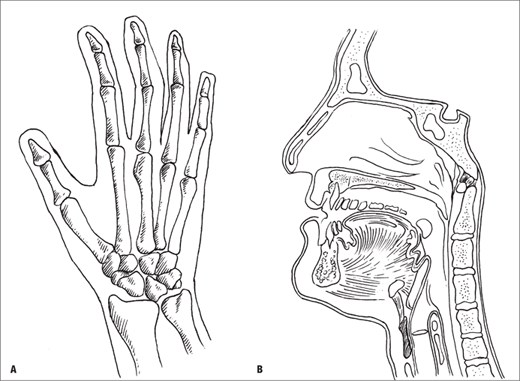

It is the archaeological record, not the human fossil record, that contains the essential clues to the development of external thought. Between about 2.5 and 1.7 million years ago, early forms of the genus Homo (and perhaps the last of the australopithecines) were making stone tools that, while comparatively simple, were beyond the capacity of the living apes.11 Not until about 1.7 million years ago did the first recognizable examples of external thought appear, in the form of bifacial tools that exhibit a mental template imposed on rock (figure 1.2).12 The finished tools bear little resemblance to the original stone fragments from which they were chipped. And it is not until after 500,000 years ago (among both Homo sapiens and Homo neanderthalensis) that more complex representations began to appear, in the form of composite tools and weapons—assembled from three or four components—reflecting a hierarchical structure.13

After 100,000 years ago, evidence for external thought on a scale commensurate with that of living humans finally emerges in the archaeological record: elaborate visual art in both two and three dimensions, remarkably sophisticated musical instruments, personal ornaments, innovative and increasingly complex technology, and other examples.14 Although spoken language leaves no archaeological traces, its properties are manifest in the art and other media that are preserved. These patterns in the archaeological record, which imply the presence of spoken language, are widely interpreted as the advent of modern behavior, often labeled modernity.15

Modernity has been defined as “the same cognitive and communication faculties” as those of living humans.16 This definition has been translated into archaeological terms in at least two ways. The first is a lengthy list of traits that are characteristic of living and recent humans but are not found in the earlier archaeological record. In addition to visual art and ornaments, they include the invasion of previously unoccupied habitats, the production of bone tools, the development of effective hunting techniques, and the organization of domestic space.17 This approach has been criticized as a “shopping list” that suffers from the absence of a theoretical framework regarding the human mind. An alternative archaeological definition of modernity is the “storage of symbols outside the brain” in the form of art, ornamentation, style, and formal spatial patterning.18

Figure 1.2 The earliest known externalized, or phenotypic, thoughts are objects of stone chipped into a three-dimensional ovate shape in accordance with a mental template. Appearing more than 1.5 million years ago, large bifaces (often termed hand axes) persisted with little change for 1 million years. They seem to reflect an earlier form of mind (or proto-mind) that lacked the unlimited creative potential of the modern mind. (Redrawn by Ian T. Hoffecker, from John Wymer, Lower Palaeolithic Archaeology in Britain as Represented by the Thames Valley [London: Baker, 1968], 51, fig. 14)

Both definitions, however, lack a focus on the core property of language and modern behavior in general—the creation of a potentially infinite variety of combinations from a finite set of elements. Living humans externalize thought in a wider range of media than did their predecessors more than 100,000 years ago, but it is the ability to generate an unlimited array of sculptures, paintings, musical compositions, ornaments, tools, and the other media that really sets modern behavior apart from that of earlier humans and all other animals. And it is creativity—so clearly manifest in the archaeological record after 100,000 years ago—that provides the firmest basis for inferring the arrival of language.

The Core Property

To conjure up an internal representation of the future, the brain must have an ability to take certain elements of prior experiences and reconfigure them in a way that in its totality does not correspond to any actual past experience.

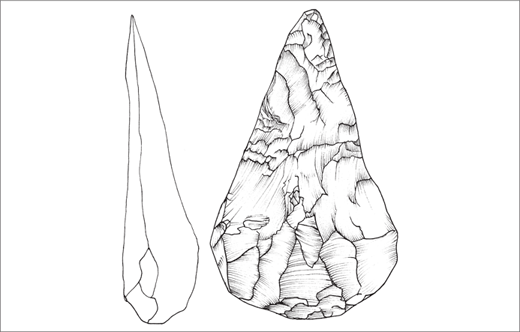

Noam Chomsky described the core property of language as discrete infinity (figure 1.3).19 Galileo apparently was the first to recognize and appreciate the significance of “the capacity to generate an infinite range of expressions from a finite set of elements.”20 In all languages, people use “rules” of syntax21 to construct sentences and longer narratives—all of which are artificial representations—out of words that have, in turn, been constructed from a modest assortment of sounds. There seems to be no limit to the variety of sentences and narratives that may be created by individuals who speak a syntactic language.

An essential feature of discrete infinity is hierarchical structure. A syntactic language allows the speaker to create sentences with hierarchically organized phrases and subordinate clauses. Most words are themselves composed of smaller units. Without this hierarchical structure, language would be severely constrained. Experiments with nonhuman primates (for example, tamarins) have shown that while some animals can combine and recombine a small number of symbols, only humans can generate an immense lexicon of words and a potentially infinite variety of complex sentences through phrase structure grammar.22 The same principle applies to the genetic code, which yields a potentially infinite variety of structures (phenotypes) through hierarchically organized combinations of an even smaller number of elements (DNA base pairs).23

Figure 1.3 The hierarchical structure of a sentence in the English language, illustrated by a tree representation, permits the generation of a potentially unlimited array of expressions. (Redrawn from Noam Chomsky, Language and Mind, 3rd ed. [Cambridge: Cambridge University Press, 2006], 129)

As the evolutionary psychologist Michael Corballis noted several years ago, the core property of language may be applied to all the various media through which humans externalize thoughts, or mental representations. He described the ability to generate a potentially infinite variety of hierarchically organized structures as “the key to the human mind.” This principle of creative recombination of elements is manifest, for example, in music, dance, sculpture, cooking recipes, personal ornamentation, architecture, and painting.24 In most media, creativity may be expressed not only in digital form (that is, using discrete elements such as sounds and words), but also in analogical or continuous form, such as the curve of a Roman arch or the movements of a ballerina.

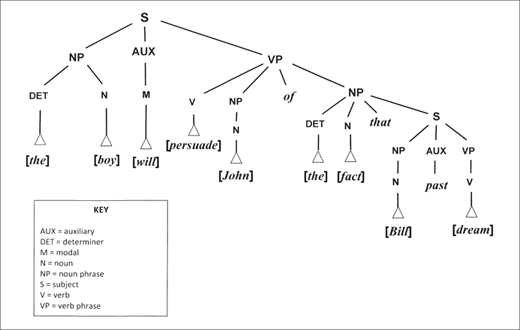

The medium of creative expression with the most profound consequences for humankind is technology. The ability to generate novel kinds of technology—externalized mental representations in the form of instruments or facilities used to manipulate the environment as an extension of the body—has had an enormous impact on individual lives and population histories. Most of human technology exhibits a design—some of it highly complex—based on nongenetic information. Humans have redesigned themselves as organic beings with everything from clothing stitched together from cut pieces of animal hide to eyeglasses to electronic pacemakers. They have also redesigned the environment, both abiotic and biotic, in ways that have completely altered their relationship to it as organic beings.

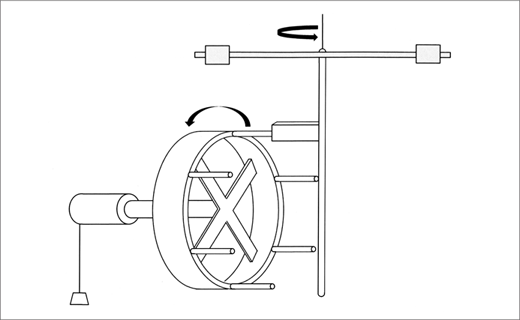

During the Upper Paleolithic (roughly 50,000 to 12,000 years ago), designed technology unfolded on a relatively modest scale with throwing darts, small-mammal traps, fishing nets, baking ovens, and other simple devices for acquiring, processing, and storing food. But widespread domestication (that is, redesigning) of plants and animals in the millennia that followed dramatically altered the relationship between humans and their modified environment. Population density increased during the later phases of the Upper Paleolithic and exploded in the postglacial epoch as villages and cities (and new forms of society) emerged. All these developments were tied to the generation of novel technologies, including those that became increasingly complex. While a spoken sentence composed of a million words is theoretically possible, the constraints of short-term memory and social relationships render it impractical and undesirable. Such biological constraints do not always apply to technology, which may be hierarchically organized on an immense scale like the Internet (composed of billions of elements) (figure 1.4).

By the mid-twentieth century, creativity had become a major topic in psychology, and numerous books and papers were written about it. Psychologists define it in much the same way as linguists define the core property of language: “The forming of associative elements into new combinations which either meet specified requirements or are in some way useful.”25 Beyond the everyday creative use of language, they distinguish various forms of creativity. “Exploratory creativity” entails coming up with a new idea within an established domain of thought, such as sculpture, chess, or clothing design. Another form is “making unfamiliar combinations of familiar ideas,” such as the above-noted comparison between the genetic code and language. A rarer form is “transformational,” which Margaret Boden described as people “thinking something which, with respect to the conceptual spaces in their minds, they couldn’t have thought before.”26 An example would be Darwin’s theory of natural selection.

As with external thought, the archaeological record reveals when and where creativity emerged in the course of human evolution. Unlike externalized mental representations, however, creativity cannot be recognized in a single artifact or feature in that record. It is only by comparison of artifacts and features in space and time that creativity can be observed in archaeological remains. The earliest externalized representations—large bifacial stone tools of the Lower Paleolithic—exhibit an almost total lack of design variation across time and space. But after 500,000 years ago, a limited degree of creativity is evident in the variations of small bifacial tools, core reduction sequences (that is, the succession of steps by which a piece of stone was broken down to smaller pieces to be used as blanks for tools), and, perhaps especially, the composite tools and weapons that appear by 250,000 years ago.27

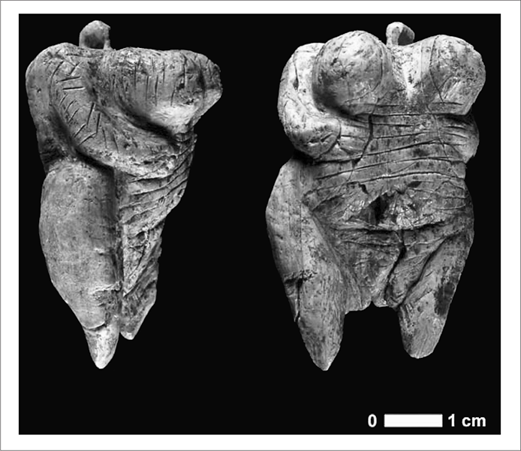

The unlimited creative potential of living humans is not visible in the archaeological record until after the emergence of anatomically modern humans in Africa. And although remains of anatomically modern humans have been dated in Africa to roughly 200,000 years ago, it is not until somewhat later—after 100,000 years ago and perhaps not until after 50,000 years ago—that creativity on a scale commensurate with that of living humans is evident in the archaeological record. The most vivid illustrations are to be found among the examples of Upper Paleolithic visual art that date to between 35,000 and 30,000 years ago. They include two-dimensional polychrome paintings and small three-dimensional sculptures that exhibit a conceivably unlimited recombination of elements within a complex hierarchical framework with embedded components (figure 1.5). Although expressed primarily in analogical rather than digital form, each work of art is like a narrative of words and sentences with the potential for infinite variation.28

Figure 1.4 Humans can create a potentially infinite variety of tools and other technology, structured hierarchically and much of it in analogical, rather than digital, form. Technology is the means by which the mind engages the external world. (Illustration by Eric G. Engstrom, from James Deetz, Invitation to Archaeology [Garden City, N.Y.: Natural History Press, 1967], 91, fig. 16. Reprinted with permission from Patricia Scott Deetz)

The creativity of the modern human mind is manifest in all the media of external thought that are preserved in the archaeological record of this period. The same pattern illustrated by visual art may be inferred from the presence of musical instruments of the early Upper Paleolithic, but in this case the compositions are lost—only the paint brushes have survived.29 In the case of technology, however, the creative recombination of elements—and the increasingly complex hierarchical structure of tools and other means for effecting changes on the world—is readily apparent from 50,000 years ago onward. Novel technologies include sewn clothing, heated artificial shelters, watercraft, and devices for harvesting small game and fish and/or waterfowl. By 25,000 years ago, there is evidence for winter houses, fired ceramics, refrigerated storage, and portable lamps, and during the late Upper Paleolithic (about 20,000 to 12,000 years ago), for domesticated animals (dogs) and mechanical devices.30

Figure 1.5 The earliest known examples of visual art date to the early Upper Paleolithic, more than 40,000 years ago. Each work of art is unique and is hierarchically structured like the representations generated with syntactic language. This female figurine was recently recovered from Hohle Fels Cave (southwestern Germany). (From Nicholas J. Conard, “A Female Figurine from the Basal Aurignacian of Hohle Fels Cave in Southwestern Germany,” Nature 459 [2009]: 248, fig. 1. Reprinted by permission from Macmillan Publishers Ltd. Nature, copyright 2009)

It is creativity in the archaeological record that signals the advent of modernity, not the appearance of symbols (or the “storage of symbols” in artifacts and other material forms). Symbols in the strict sense are not especially common in the Upper Paleolithic record. Although the visual art is sometimes characterized as symbolic, these representations are actually iconic; that is, they have some form relationship to the objects they represent.31 Musical notes are rarely used as symbols. But Upper Paleolithic sites have yielded some engraved and painted signs that probably refer to things to which they lack any obvious form relationship. They include animal bones engraved with sequences of marks that have been interpreted as mnemonic devices (or “artificial memory systems”). Some of these artifacts may have been used as lunar calendars.32

The most symbolic form of external thought—syntactic language—is not directly represented in the archaeological record until the development of writing. And language is almost universally regarded as the centerpiece of modernity. It is the pattern of creativity in the archaeological record of anatomically modern humans after 50,000 years ago that provides the strongest indication of language. The variable structures of Upper Paleolithic art and technology exhibit a complex hierarchical organization with embedded components that resemble the subordinate clauses of phrase structure grammar.

The Phenomenon of Mind

One can hold that the mental is emergent relative to the merely physical without reifying the former. That is, one can maintain that the mind is not a thing composed of lower level things—let alone a thing composed of no things whatever—but a collection of functions and activities of certain neural systems that individual neurons presumably do not possess.

One of the most important technological breakthroughs in human history occurred in the late thirteenth century. In the years leading up to 1300, someone in Europe—whose identity remains a mystery—created the escapement mechanism for a weight-driven clock. The escapement controls the release of energy (in this case, stored in a suspended weight), which is essential to producing the regular beat of a clock. The perceived need for a timepiece more reliable than the water clock or sundial apparently arose from theology and the requirements of ritual—the obligation to offer prayers at specific times during the day. During the fourteenth century, as clocks spread across western Europe, they acquired secular functions as well.33

Clock makers generated a number of major improvements and innovations in the years that followed (for example, the spring drive around 1500) and clock making became the supreme technical art of Europe. The impact of the mechanical clock extended far beyond the making and using of clocks, however, and the historian of technology Lewis Mumford famously designated it the “key machine of the modern industrial age” (figure 1.6). The clock not only influenced other mechanical technologies after the thirteenth century, but became a model and metaphor for the universe—intimately connected to the mechanistic worldview that emerged in the sixteenth through eighteenth centuries. The Europeans began to think of the universe as a gigantic machine and God as a clock maker.34

Figure 1.6 The early weight-driven clocks were the digital computers of the sixteenth century and helped establish the mechanistic worldview of the modern age. (Redrawn from Donald Cardwell, The Norton History of Technology [New York: Norton, 1995], 39, fig. 2.1)

How did humans fit into this brave new world? René Descartes (1596–1650) thought that the human body—with its various organs circulating the blood, digesting food, and so forth—might indeed be regarded as “a machine made by the hand of God,” and he compared it directly with the “moving machines made by human industry.” But he made a fundamental distinction between the body and the mind, with its “freedom peculiar to itself.” Descartes could not explain the seemingly independent workings of the mind—so clearly manifest in the infinite possibilities of language—as part of a mechanical universe, and he placed it in a separate category.35 He was reaffirming a view of the mind compatible with both Greek philosophy and Judeo-Christian theology.

Descartes’s mind–body dualism set the stage for a debate that continued through the years of the Industrial Revolution to the present day.36 For the most part, his views have been subject to intense criticism and even ridicule. His contemporary Thomas Hobbes (1588–1679) believed that the mechanistic model could be applied to the mind: “nothing but motions in some part of an organical body.”37 Most of Descartes’s critics have been appalled at the implication that supernatural forces are at work in the brain—the “ghost in the machine,” wrote Gilbert Ryle (1900–1976). Some philosophers have sidestepped this problem by recasting dualism in terms of properties (rather than substances); they envision the human mind as a material object, but one that operates according to a unique set of principles or properties.

In the mid-nineteenth century, Charles Darwin and Alfred Russel Wallace proposed a “mechanism”—natural selection—to account for the structure and diversity of living organisms and to explain how they have adapted (often in remarkable ways) to the environments they inhabit. Perhaps the most revolutionary aspect of this idea is the recognition that populations of plant and animal species are composed of unique individuals—the variation on which selection acts.38 Once again, the insights offered by technology played a role in formulating new ideas about how the world works: Darwin devoted the first chapter of On the Origin of Species to the practice of plant and animal breeding (that is, artificial selection).39

Subsequent discoveries in genetics were necessary to explain how the variation among individual organisms in a population is produced. Darwin died without knowing the underlying genetic mechanisms of the evolutionary process. This did not preclude him, however, from developing a general explanation of how organic evolution works. The later discoveries in genetics were comfortably incorporated into the natural selection model and became part of the neo-Darwinian synthesis of the twentieth century.40

Evolutionary biology provided a new framework for understanding how humans fit into the universe, and Darwin was confident that the mind as well as the body could be explained as the product of the evolutionary process. He once characterized thought as “a secretion of the brain.” Body and mind alike could be understood as primarily the result of selection for adaptive characteristics in the context of the environments that humans had inhabited over the course of their evolutionary history. Although considered something of a footnote in life-science history, Wallace dissented from this view and endorsed a dualist perspective.41

Today, a Darwinian approach to the mind is aggressively pursued by a group of people who describe themselves as evolutionary psychologists. They reflect the marriage of cognitive psychology (which arose from the ashes of behaviorism in the 1950s and 1960s, in the form of the “cognitive revolution”) and evolutionary biology. But their “computational theory of the mind” may owe as much to the twentieth-century equivalent of the mechanical clock as it does to biological and psychological theory. The evolutionary psychologists view the mind as a “naturally selected neural computer”42 that has acquired an increasingly complex set of information processing functions in response to the demands of navigation, planning, foraging, hunting, toolmaking, communication, and sociality.43 According to Steven Pinker, art, music, and other creations of the mind that contribute little or nothing to individual fitness are “nonadaptive by-products” of the evolutionary process.44

Evolutionary biology provides an essential context for the modern human mind because, despite the curious properties that it eventually acquired (that is, the “freedom peculiar to itself”), the mind is clearly derived from the evolved animal brain. At the same time, an evolutionary context helps identify the point at which the mind departs from the brain and emerges as a unique phenomenon. The story has been pieced together from the fossil record and comparative neurobiology.

The brain evolved more than 500 million years ago among organisms that had become sufficiently complex and mobile that they required information about their environment in order to stay alive and reproduce (that is, continue to evolve). The basic function of the brain or central nervous system is to receive, process, and respond to information concerning changes in the world outside the organism. From the outset, the brain has generated symbols because the external conditions (for example, increased salinity or approach of a predator) have to be converted into information; they can be represented but not reproduced inside the brain. These symbols are created in electro-chemical form by specialized nerve cells that remain the fundamental unit of the brain.45

The brain made complex animal life possible, and its appearance triggered an evolutionary explosion of new taxa that began to lead increasingly complicated lives. Over the course of several hundred million years, some of these taxa—especially the vertebrates—evolved large and complex brains that could receive and process substantial quantities of information from the external world. These brains also could store vast amounts of information (in symbolic form) about the environment, including detailed maps, and they could compute the solutions to simple problems, such as how to climb a particular tree to retrieve an elusive fruit.

From an impressive group of primates, the Old World monkeys and apes, humans inherited a large brain with an exceptional capacity for processing visual information and solving problems. As already described, humans developed the highly unusual ability to externalize information in the brain—probably as an indirect consequence of bipedalism and forelimb specialization related to toolmaking—and this ultimately became the basis for the mind. As their brains expanded far beyond the already large brain-to-body ratio of the higher primates, humans evolved the unique capacity to recombine bits of information in the brain in a manner analogous to the recombination of genes (Richard Dawkins suggested that the bits of information could be labeled memes).46

The human brain began to generate information, rather than merely process it, and to create representations of things that never were but might be. It began to devise not only structures that exhibit characteristics found in living organisms (for example, hierarchical organization), but also structures that are not found in living organisms (for example, geometric design). Moreover, humans had the means—first the hand and later the vocal tract—to reverse the function of the brain: instead of receiving information about the environment, they could imagine something different and create it. Most humans now dwell in a bizarre landscape largely structured by the mind rather than by geomorphology.

The principles that underlie the capacity for creativity—the core property of language and all other media through which humans can imaginatively combine and recombine elements into novel structures—remain an elusive fruit. They have been characterized as emergent properties of the human brain yet to be understood, just as the principles of organic evolution once lay beyond comprehension.47 There is a consensus of sorts among philosophers, psychologists, and neuroscientists (as well as artificial-intelligence researchers) that these properties emerged from the immense complexity of a brain, which has been described as “the most complicated material object in the known universe.” Among psychologists, this view is labeled connectionism. Each individual brain contains an estimated 100 billion neurons and a staggering 1 million billion connections (or synapses) among the neurons.48

Modern humans added yet another layer of complexity to this most complicated object; by externalizing information in symbolic form with language, they created a super-brain. Like a honeybee society, modern humans can share complex representations between two or more individual brains. With language (as well as visual art and other symbolic media), coded information can move from one brain to another in a manner analogous to the transmission of coded information within an individual brain. Each modern human social group, usually defined by a dialect or language, represents a “neocortical Internet” or integrated super-brain. And the archaeological record reveals that it is only with evidence for externalized symbols that traces of creativity, or discrete infinity, emerged roughly 75,000 years ago.

If the principles that underlie creativity continue to elude understanding, other unique aspects of the modern human mind are readily apparent. Because of the human capacity for externalizing thought, the mind transcends not only biological space (the individual brain), but also biological time (the life span of the individual). And because humans can externalize thought in the form of what the psychologist Merlin Donald called “symbolic storage” (for example, notation and art), information may be stored outside the brain altogether.49 Indeed, there is no practical limit to the size and complexity of the human mind because it exists—and has existed for 50,000 years or more—outside the evolved brain of the individual organism. The mind comprises an immense mass of information that has been accumulating and developing since the early Upper Paleolithic.

Until recently, the mind was dispersed across the globe in many local units ultimately derived from the initial dispersal of modern humans within and eventually out of Africa about 50,000 years ago.50 New technologies have begun to effect a global integration, leading back toward what probably was the original state of the mind—a single integrated whole.

Leviathan

Consciousness is, therefore, from the very beginning a social product.

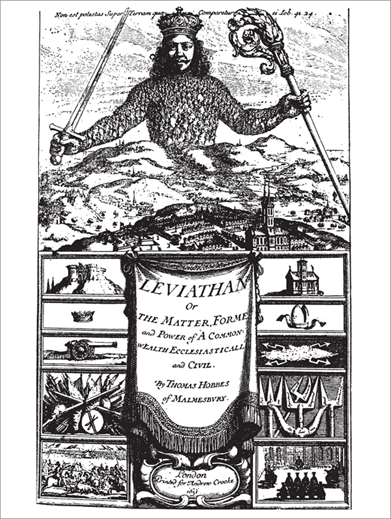

The primary focus of Thomas Hobbes’s attention was not the mind–body problem, but society and government. In 1651, he published his controversial book Leviathan, or the Matter, Forme and Power of a Common-wealth Ecclesiasticall and Civill (figure 1.7). Not long after his death in 1679, Oxford University called for burning copies of it.

Hobbes reversed Descartes’s comparison of the living body to a machine and asked: “[W]hy may we not say that all automata (engines that move themselves by springs and wheeles as doth a watch) have an artificiall life?”51 He promptly extended this notion to human society itself, which he described as “that great Leviathan … which is but an Artificiall Man.” He likened the various aspects of seventeenth-century European society to the functioning parts of the human body—magistrates as joints, the criminal justice system as nerves, counselors as memory, and so forth—joined together by the ability of people to form a collective mind, “a reall unitie of them all, in one and the same person.”

By comparing human society to an organism, Hobbes was invoking a parallel with those well-known “Politicall creatures,” bees and ants, which are today included among the eusocial, or true social, insects. At the beginning of the twentieth century, the entomologist William Morton Wheeler proposed that an ant colony be considered a form of organism, and some years later he introduced the term super-organism to describe it. An ant colony, he noted, functions as a unit, experiences a life cycle of growth and reproduction, and is differentiated into reproductive and nonreproductive components.52 The super-organism concept may also be applied to bees, wasps, and termites. It fell out of favor for some years, but has been revived by Edward O. Wilson and others in recent decades.53

Hobbes seems to have been aware of the differences as well as the parallels between human and insect society. While among ants and bees “the Common good differeth not from the Private,” in human society, “men are continually in competition for Honour and Dignity” and “there ariseth on that ground Envy and Hatred, and finally Warre.” Even worse, while ants and bees accept the administration of their common business without dissent, “amongst men, there are very many, that thinke themselves wiser, and abler to govern the Publique, better than the rest; and these strive to reforme and innovate, one this way, another that way; and thereby bring it into Distraction and Civill warre.”54 In evolutionary biology terms, the differences between eusocial insect and human sociality reflect the contrast between a society based on a super-organism and one based largely on a super-brain.

Figure 1.7 Leviathan: The frontispiece of Thomas Hobbes’s famous book about society and government, published in 1651, depicts a monarch as being composed of all the individuals in his kingdom, which Hobbes described as “an Artificiall Man.” Modern human behavior seems to have emerged with the integration of brains through symbolic language, which allows representations to move from one brain to another analogous to the flow of information in an individual brain.

The key to the insect super-organism is the close genetic relationship among its members. The issue was addressed by Darwin, who—noting that the existence of nonreproductive castes among these species seems to contradict the natural selection model—observed that among social insects, “selection may be applied to the family.”55 The concept was eventually termed inclusive fitness and simply recognizes that cooperative behavior will evolve among close relatives when it increases the frequency of shared genes in the population—in these insects, among sibling workers.56 Reproductive biology is another important factor among eusocial animals; they must be capable of producing many offspring to fill the ranks of their societies.57

Outside the immediate family, of course, modern human society seems to be based more on the sharing of ideas than of genes. As the evolutionary geneticist Richard Lewontin discovered several decades ago, the overwhelming majority of genetic variability (85 percent) lies within rather than between living human populations.58 The perceived kinship of individuals within races and ethnic groups is largely illusory—these are shared mental representations rather than biological units. Even in hunter-gatherer societies, where the family and clan are the basic units of organization, marriage practices (that is, the incest taboo) ensure a comparatively low coefficient of relationship.

While honeybees evolved both a super-brain (sharing representations by means of the “honeybee dance”) and a super-organism, humans—constrained by their reproductive biology—evolved only the former. The result, it seems to me, is that human societies are inherently dynamic and unstable because the shared thoughts or information that create the super-brain are constantly disrupted by the competing goals (“Civill warre”) of their organic constituents (individuals and/or families).

The modern human super-brain nevertheless seems essential to the existence of the mind. As noted earlier, archaeological evidence suggests that creativity—which has been critical to the growth and development of the mind—was possible only with the confluence of individual brains effected by language and other forms of symbolic communication. And the integrative mechanisms of the super-brain (that is, the externalization of representations formed in individual brains) are the means by which the mind exists beyond biological space and time, accumulating non-genetic information (much of it stored outside individual brains) from one generation to the next. Why hasn’t the concept been more central to the social sciences? It must be remembered that while copies of Leviathan are no longer burned, Hobbes’s ideas remain controversial; they seem perfectly compatible with modern totalitarianism. Moreover, both the religious and the political doctrines of the modern age that he helped introduce stress the importance of individual choice and action.

Most people are confident, moreover, that creativity is an individual possession, not a collective phenomenon. Despite some notable collaborations in the arts and sciences, the most impressive acts of creative thought—from Archimedes to Jane Austen—appear to have been the products of individuals (and often isolated and eccentric individuals who reject commonly held beliefs). I think that this perception is something of an illusion, however. It cannot be denied that the primary source of novelty lies in the recombination of information within the individual brain, apparently concentrated in the prefrontal cortex of the frontal lobe.59 But I suspect that as individuals, we could accomplish little in the way of creative thinking outside the context of the super-brain. The heads of Archimedes, Jane Austen, and all the other original thinkers who stretch back into the Middle Stone Age in Africa were stuffed with the thoughts of others from early childhood onward, including the ideas of those long dead or unknown. How could they have created without the collective constructions of mathematics, language, and art?

A few years ago, the anthropologist Robin Dunbar suggested that the pronounced expansion of the human brain before the emergence of modernity was tied to human social behavior. Observing a correlation between the size of the neocortex and the size of the social group among various primates, Dunbar concluded that the enlarged Homo brain is a consequence of widening social networks and that language evolved as a means to maintain exceptionally large networks.60 I would suggest a slightly different version of the “social brain hypothesis.” Modern human sociality is based primarily on the sharing of information—massive quantities of information—which requires considerable memory-storage capacity. I think that humans evolved oversize brains when they began to share very large amounts of information.

The super-brain and the collective mind might explain fundamental aspects of modern human cognition, including what philosophers refer to as intentionality—the ability to think about objects and events outside the immediate experience of an individual—as well as the “sense of self” and even consciousness. I think it is possible that all these phenomena are consequences of a collective mind (even if it has no collective sense of self or consciousness). If we conceive of the super-brain as a cognitive hall of mirrors that permits each individual to reflect the thoughts of others, perhaps it provides the basis for mutual recognition of the thinking individual. Perhaps it is a biological imperative for the constituents of a super-brain—who are not components of a super-organism—to firmly distinguish themselves as individual thinkers within a collective mind.

In recent years, partly as a result of advances in brain-imaging technology, neuroscientists like Gerald Edelman have begun to invade areas once left to philosophers. Edelman draws a fundamental distinction between primary consciousness and higher-order consciousness, and he suggests that the more evolved metazoans like birds and mammals possess primary consciousness: “the ability to generate a mental scene in which a large amount of diverse information is integrated for the purpose of directing present or immediate behavior.”61 Animals with primary consciousness appear to lack a sense of self or a concept of the past or the future. In fact, they seem to lack any ability to think in conceptual or abstract terms.

Only modern humans possess what Edelman refers to as higher-order consciousness, which entails an awareness of self, abstract thinking, and the power to travel mentally to other times and places. This apparently unique form of consciousness is possible only with “semantic or symbolic capabilities.” In order to think about objects and events that are removed from the immediate environment of an organism, symbols such as words and sentences, shared among multiple brains, are necessary. Thus Edelman links higher-order consciousness to language. But the uniquely human form of consciousness is also inextricably tied to social interactions because language is a social phenomenon; the meanings of words and sentences are established by convention (and become a component of the collective mind).62

Philosophie der Technik

Technology is a way of revealing. If we give heed to this, then another whole realm for the essence of technology will open itself up to us. It is the realm of revealing, i.e., of truth.

The earliest formal use of the term “philosophy of technology” is ascribed to a rather obscure German-Texan named Ernst Kapp (1808–1896). Kapp was a “left-wing Hegelian” who sought to develop a materialist version of Hegelian philosophy like his more famous contemporary Karl Marx. Charged with sedition, he fled Germany in the late 1840s and settled in central Texas. Kapp eventually returned to Germany and, in 1877, published Grundlinien einer Philosophie der Technik, which outlines a philosophy of technology—apparently reflecting his experience in the Texas wilderness—based on the notion that tools and weapons are forms of “organ projection.”63

Although the influence of his ideas was limited, Kapp initiated a philosophical tradition in Germany that continued into the twentieth century. It drew on the thinking of major figures in German philosophy, especially Immanuel Kant. Frederick Dessauer (1881–1963) suggested that technology provides the means to engage what Kant had defined as “things-in-themselves” (noumena) as opposed to mere “things-as-they-appear” (phenomena). Dessauer perceived that only the craftsman and inventor encounter noumena, not the factory assembly-line worker and the consumer of mass-produced technologies.64 Like Kapp, Dessauer had to flee Germany for political reasons—in his case, after the Nazis came to power in 1933.

Ironically, perhaps, the clearest philosophical statement on technology may have come from Martin Heidegger (1889–1976), who was both a neo-Kantian and a member of the Nazi Party until 1945. Several years after the end of the war, Heidegger gave a series of lectures in Bremen that included thoughts on Philosophie der Technik. He was sharply critical of what he referred to as the “instrumental” view—that technology is merely “a means and a human activity” analogous to the use of natural objects by some animals. Instead he suggested, apparently building on both Kant and Dessauer, that technology is a “way of revealing” the world—of acquiring knowledge and learning the “truth” (Wahrheit). Technology “makes the demand on us to think in another way.”65

Why wasn’t technology more widely and fully addressed by philosophers in western Europe or other parts of the industrialized world? Given the pivotal role of technology in transforming European society and thought after 1200, the avoidance of a philosophy of technology outside Germany seems more than odd; it borders on the pathological. During the twentieth century, one factor was an acute awareness of the negative effects of many new technologies; between 1914 and 1945, the most striking practical applications of chemistry and physics seemed to be high-tech murder.

There was also a tradition with deeper roots in the Industrial Revolution, expressed primarily through literature and later through film, of regarding technology as a separate and potentially threatening entity. The theme was woven into Mary Shelley’s Frankenstein in the early nineteenth century and taken up decades later—in response to Darwin—by the novelist Samuel Butler, who warned that machines were evolving faster than plants and animals and subordinating humans to their needs.66 The dangers of runaway technology have remained a steady theme in Western popular culture.

But technology is an essential part of the mind, and a philosophy of technology must be incorporated into any theory of mind. Technology stands in relation to the mind—an integrated and hierarchically structured mass of information—as the phenotype of an organism stands in relation to its genotype. Technology is the means by which the mind engages the external world, as opposed to language and art, which are the means by which parts of the mind communicate with each other. The distinction reflects the observation that the world external to the brain is not the same as the world external to the mind, since much of the mind already exists outside the brains of individual humans.

Comparative ethology suggests a lengthy history of animal “technology” in many lineages. It includes the use and modification of natural features and objects by a diverse array of complex animals—for example, the excavation of intricate burrow systems and the construction of nests from many parts. Humans inherited a particularly impressive pattern of tool use from the highly manipulative primates. As Jane Goodall revealed several decades ago, this pattern includes the making of simple tools, such as termite-fishing implements, by chimpanzees in the wild.67

The emergence of the human mind nevertheless transformed technology into a very different phenomenon. With their talent for externalizing mental representations by their hands—first evident among some African hominins by 1.7 million years ago—humans acquired the ability to translate their thoughts into phenotypic form.68 As noted earlier, they reversed the function of sensory perception by converting the electro-chemical symbols in the brain into objects that had no prior existence in the landscape. At first, the structure of these objects was simple, but larger-brained humans who evolved roughly 500,000 years ago began to make more complex, hierarchically structured implements. The creative potential of the modern human mind, evident in the archaeological record after 100,000 years ago, removed all practical limits to the complexity of technology.

From the outset, this new form of technology was a collective enterprise. If each Lower Paleolithic biface was unique, like each individual within a population, the mental template of each biface was the same (or very similar) and clearly was shared among many individual brains in space and time. The emergence of symbolic communication in the form of syntactic language created a super-brain and thus vastly increased, it seems, both the computational and the creative powers of human thought by pooling the intellectual resources of each social group.

The unprecedented size of a few middle Upper Paleolithic settlements indicates that the number of constituent brains within social groups in some places, even if assembled only temporarily, had increased significantly by 25,000 years ago. These groups possessed a larger and presumably more powerful super-brain. More permanent-looking settlements of comparable and even greater size appeared after the Last Glacial Maximum, and some of them anticipated the sedentary villages and farming communities of the postglacial epoch.

Explosive growth in the number of individuals living in residential farming communities, which occurred in some places after 8,000 years ago, triggered a radical reorganization of society and economy along rigid hierarchical lines. The mind of the early civilizations reflected this hierarchical socioeconomic structure. Two trends already evident during the preceding period were greatly expanded: the specialization of technological knowledge and the (hierarchical) organization of collective undertakings in various technologies (for example, irrigation systems and public buildings). New technologies facilitated the communication, manipulation, and storage of information.

The size and organization of the civilized collective mind produced technology on a vastly greater scale of complexity, although the rigid hierarchies of the early civilizations seem to have constrained creativity and suppressed its potential for many centuries. West European societies somehow loosened these constraints after 1200 and began to create an industrial civilization. The complex technologies of late industrial and post-industrial societies reflect the enormous growth of specialized knowledge and the hierarchical organization of technology. Robert Pool described the construction of a complex piece of technology in the modern era: “No single person can comprehend the entire workings of, say, a Boeing 747. Not its pilot, not its maintenance chief, not any of the thousands of engineers who worked on its design. The aircraft contains six million individual parts assembled into hundreds of components and systems…. Understanding how and why just one subassembly works demands years of study.”69

The immense growth of human populations and the rise of complex organizations in the postglacial epoch were consequences of the steady accumulation of knowledge about the world—knowledge about how to manipulate and redesign the landscape to suit human needs and desires. And most of this knowledge was derived from what Heidegger referred to as the “revealing” power of technology. It was not accumulated by individual humans or even by the super-brain, but was incorporated into the collective mind from one generation to the next.

Today most humans inhabit an environment that bears little resemblance to the naturally evolved landscapes their ancestors occupied a million years ago. The processes of neither Earth history nor evolutionary biology yield the geometric patterns of cities, suburbs, and rural agricultural landscapes that now cover much of the land surface of the planet. These patterns are generated in the mind and projected onto the external world. When human societies were uniformly small and the ability to manipulate the world was limited, their impact was modest. But as populations grew and the body of collective external thought accumulated and expanded, entire landscapes were transformed—one of the most striking consequences of human evolution.

The roots of the mathematical patterns of thought lie deep in the evolutionary past of the brain. As the metazoan brain evolved for information processing and directing the responses of other organs, including navigation, selection clearly favored efficiency (for example, calculating and executing a straight-line path to or from an object or another animal). Thinking evolved along mathematical lines that are not characteristic of organic design, which often exhibits symmetry but not fundamental geometric patterns such as triangles and squares. When humans began to translate thought into material objects and features, they imposed new forms on the landscape. Many of these forms reflected the geometry of the mind.

More than a million years ago, humans apparently were externalizing thought only in the form of reshaped pieces of stone and (presumably) wood. The hand axes they produced exhibit a symmetrical oval design in three dimensions that does not necessarily reflect a geometric pattern of thought, and the “spheres” that date to this period seem to represent uniformly battered fragments of rock.70 During the later phases of the Middle Stone Age in Africa (after 100,000 years ago), simple geometric designs in two dimensions were engraved on mineral pigment, and similar designs appear on both engraved and painted surfaces during the early Upper Paleolithic in Eurasia (after 50,000 to 40,000 years ago). Occupation features that might reflect the imposition of simple geometric patterns on the ground include linear arrangements of hearths and circular pits in middle Upper Paleolithic sites (around 25,000 years ago).

It is not, however, until the late Upper Paleolithic (after 18,000 years ago) that unambiguous examples of geometric design appear on the landscape, in the form of square and rectilinear house floors. The art of the period is also rich in paintings, engravings, and carvings of various geometric patterns. In the early postglacial epoch, villages comprising an amalgamation of rectilinear houses were constructed in the Near East, and they eventually were superseded by towns and urban centers with street systems and public monuments and plazas. Villages, towns, and cities were surrounded by agricultural landscapes composed of orthogonal irrigation networks and linear crop rows.71

Most of the human population now resides in a bizarre environment structured by the collective mind. Rock, soil, and water bodies have been altered, and naturally evolved ecosystems have been recreated as lawns, gardens, and planted fields. Many humans spend the entire day within the confines of rectilinear walls, stairways, doors, and sidewalks. Moreover, humans born into this landscape of thought internalize it as they mature, and the process is reversed: the external structure is incorporated into the synaptic pathways of the developing brain.

A Fossil Record of Thought

The archaeological record is constituted of the fossilized results of human behavior, and it is the archaeologist’s business to reconstitute that behavior as far as he can and so to recapture the thoughts that behavior expressed. In so far as he can do that, he becomes a historian.

In the early twenty-first century, there is no field of research more exciting than neuroscience. This is largely a consequence of brain-imaging technologies developed in the 1970s and later. They include computed tomography (CT), which images the structure of the brain, as well as positron-emission tomography (PET) and functional magnetic resonance imaging (fMRI), which provide measures of neural activity. For the first time, researchers can observe brain function—including proxy measures of thought itself—in a manner analogous to the examination of physiological functions like blood circulation and digestion. The increasingly broad application of the new techniques has produced many revelations and surprises,72 and some neuroscientists have begun to address questions once left to philosophers of the mind.

If current developments in neuroscience offer unprecedented insights into the brain and the mind, the field of archaeology would seem to be one of the least promising avenues. Archaeology began as a hobby for collectors of antiquities and emerged during the nineteenth century as a discipline devoted to the documentation of material progress. Human prehistory was initially subdivided into three successive ages: Stone, Bronze, and Iron. The emphasis on progress reflected a European perspective on history and humanity that dominated but did not survive the nineteenth century.73

V. Gordon Childe is often quoted for his observation that the material objects of the archaeological record must be treated “always and exclusively as concrete expressions and embodiments of human thoughts and ideas.”74 Archaeologists have tended to treat them, however, more along the lines of what Martin Heidegger labeled “instruments.”75 The artifacts and features of the archaeological record typically have been viewed as a means to an end—survival and progressive improvement—somewhat analogous to the computational theory of mind. The instrumental approach was on the march in the years following World War II, as many archaeologists adopted a systems ecology perspective.76

In the final decades of the twentieth century, a reaction arose against this approach (often condemned with the ultimate epithet “functionalism”), and the views of Childe were recalled.77 In the early 1980s, the British archaeologist Colin Renfrew urged his colleagues to develop an archaeology of mind, or cognitive archaeology.78 In 1991, the psychologist Merlin Donald published a book about the origin of the mind in which he observed that modern humans store thoughts outside the brain.79 This idea made its way into paleoanthropology and stimulated further reflection and debate among archaeologists. Renfrew and other cognitive archaeologists began to think about the way the mind engages the material world through the body.80

How does the cognitive archaeologist go about collecting and analyzing “human thoughts and ideas” from the archaeological record? If written records or accessible oral traditions are present, they provide an interpretive context for the archaeological materials. In most prehistoric settings, written and oral sources are absent, but other types of symbols are—at least after 75,000 years ago. If they are iconic (for example, a female figurine), their referents are knowable, if not their possible wider meaning (perhaps fertility). But if they are abstract symbols with an arbitrary relationship to their referents, their encoded meanings may be unknowable. And before 75,000 years ago, symbols seem to be absent altogether from the archaeological record.

Instead of trying to recover content and meaning, the cognitive archaeologist can focus his or her attention on the structure of externalized thoughts. This opens a wider and deeper field of inquiry because humans have been externalizing their thoughts in nonsymbolic form for more than 1.5 million years, creating a fossil record of thought that is unique in the history of life. How are these representations organized? What is the structural relationship among the elements—digital and/or analogical—from which they are composed? How many hierarchical levels and embedded components can be identified? How much creative recombination of elements is manifest among different artifacts? Moreover, the symbolic representations that emerge after 75,000 years ago exhibit their own structure.81

In fact, the formal analysis of structure had its origin in the study of language (that is, symbolic representation). It was initially developed by Swiss-born linguist Ferdinand de Saussure (1857–1913), who taught in Geneva during the early years of the twentieth century. Structuralism (structuralisme) was applied in other fields by various French scholars, including the ethnologist Claude Lévi-Strauss (1908–2009), who analyzed the structure of kinship, myth, song, and other aspects of “primitive” culture.82 André Leroi-Gourhan (1911–1986) developed a structuralist approach to Paleolithic archaeology; not surprisingly, perhaps, he saw parallels between language and tool-making. Although he became famous for his study of cave art, his most significant contribution was the chaîne opératoire, or the sequence of steps involved in making an artifact.83 Structuralism later faded in popularity, but the sequential analysis of artifact production became a core element of archaeology. It is an important source of information about the increasingly hierarchical structure of artificial representation in human evolution.84

Much of this book is devoted to the modern mind, which emerged before 50,000 years ago in sub-Saharan Africa and spread across the Earth with anatomically modern humans (Homo sapiens). The advent of the modern mind is discernible only through the fossil record of thought (the modern human cranium had earlier acquired its volume and shape). As already noted, the archaeological record reveals the potentially infinite capacity for creative recombination of elements in various media among Homo sapiens after 50,000 years ago and at a level of structural complexity commensurate with that of living people. And this evidence coincides with archaeological traces of symbolism—the sharing of complex mental representations through icons and digital symbols in the form of art and, by implication, spoken language—which was a prerequisite for the super-brain and collective mind. In fact, there are now indications of the collective mind at least 75,000 years ago in the form of abstract designs incised into mineral pigment. But documenting creativity on a modern scale still requires evidence from a broader span of space and time (that is, the later Middle Stone Age of Africa and the early Upper Paleolithic of Eurasia and Australia).

With the emergence and dispersal of the modern mind, the process of accumulating knowledge in the form of information shared among individual brains and stored in many forms of external thought began in earnest. Much of this knowledge was acquired through the process of technological engagement with the external world (or with “things-in-themselves,” as Kant would say). The information was stored, communicated, and manipulated in many different forms (language, visual art, music, tools, and so on), and at least some of these media were preservable materials. Most of the subsequent growth and development of the modern mind occurred in the absence of written records. Nevertheless, the accumulating mass of information and knowledge is partially observable in the fossil record of thought—the archaeological record.

As human populations in some places experienced explosive growth after the Upper Paleolithic—a consequence of their accumulated knowledge, as well as favorable climate conditions—the mind became immense. Not only were hundreds of thousands of individual brains contributing to the collective mind in places like Mesopotamia and northern China, but the storage of information had reached vast proportions. These societies reorganized themselves, often with violence or threats of violence, along rigid hierarchical political and economic lines. The collective mind also was reorganized into a more hierarchically structured entity with specialized compartments of thought (for example, metallurgy and architecture). Writing was one of several means by which the sprawling mind of civilization was integrated and organized, and it eventually broadened the record of thought in profound ways.

This book also is about the origin of the mind, or what the archaeologist Steven Mithen described as the “prehistory of the mind.”85 The fossil record of thought provides critical data here as well, although in necessary conjunction with the other fossil record: the skeletal remains of earlier humans. This is because developments in human evolutionary biology—including changes in the brain, hand, and vocal tract—over several million years are implicated in the origin of the mind. The fossil record of thought offers a glimpse of the externalized mental representations of early humans, which not only are less structurally complex than our own, but also exhibit little creative variation; most of the latter appears to be random and aimless. I suspect that these early specimens of external thought played a role in the origin of mind and that they are more than a fossil record of early thought or products of what might be referred to as the proto-mind.

The emergence of the mind probably is connected to external thought; the projection of mental representations outside the brain by means of the hand and, later, the vocal tract is related to the expansion of the brain and the development of its unique properties of creativity and consciousness. As a result of bipedalism, the early human brain began to engage directly with the material world not simply by moving the body, but also by externalizing mental representations—imposing their nongenetic structure on the environment with the hand—and thus establishing a feedback relationship between the brain and the external thoughts, as well as among the multiple brains within each human social group. Making an artifact is a way of talking to oneself. As the brain expanded and the synaptic connections grew exponentially, the externalized thoughts became increasingly complex and hierarchical, as well as more creative.

Once humans began to shape natural materials in accordance with mental templates (around 1.7 to 1.5 million years ago), they began to populate their environment with physical objects that reflected the structure of mental representations (specifically, artificial representations). Semantic thoughts acquired a presence outside the brain, and they were visible not only to the individuals who made the artifacts but also to others; they became perceptual representations of unprecedented form (that is, the brain receiving representations of its own creation). The artifacts were talking back. These circumstances created a reciprocal relationship between internal and external thought, as well as a dynamic interaction among individuals projecting and receiving artificial representations.

As the thoughts externalized by humans became increasingly complex (beginning after 500,000 to 300,000 years ago), the synaptic pathways of the brain, where information is stored, contained a growing percentage of received representations generated by other human brains. Before the emergence of language, representations would have been communicated among individuals and across generations in the form of artifacts. With their hands, humans could manipulate and recombine the elements of these external representations. And at some point (perhaps not until after 500,000 years ago), external representations in the form of more efficient implements were almost certainly having a measurable impact on human ecology and individual fitness—another reinforcing feedback loop (that is, natural selection).

The critical transformation from the proto-mind to the mind probably began roughly 300,000 years ago, when brain volume had attained its modern level and the vocal tract was either evolving or had already evolved its modern human form. Changes in the shape and size of the brain probably were important, including relative expansion of the prefrontal cortex and temporal lobes. The archaeological evidence, which includes composite tools and weapons, as well as a variety of small biface forms, indicates the ability to externalize representations in a more complex and hierarchical form with greater variation in design (that is, more creative).86

Among unanswered questions about human evolution, the origin of language is still at the top of the list. In some respects, it is more of a mystery than consciousness itself. But if the immediate causal factors that lie behind the emergence of spoken language remain obscure (perhaps a genetic mutation), the ultimate source most probably is the increasingly creative manipulation of materials with the hands—the ability to recombine the elements of artifacts on multiple hierarchical levels. The same cognitive faculties apparently underlie language and toolmaking. And at some point (perhaps about 100,000 years ago or earlier in Africa), the vocal tract acquired a specialized motor function analogous to that of the hands.

Archaeologists have long speculated about the parallels and possible evolutionary relationship between creating artifacts and constructing sentences.87 The subject received a good deal of attention during the 1960s and 1970s, beginning with Leroi-Gourhan. In 1969, Ralph Holloway—a specialist on the evolution of the human brain—wrote a classic paper on the underlying similarities between language and toolmaking.88 Others who articulated this view included the American archaeologist James Deetz and the South African paleoanthropologist Glynn Isaac.89 In the decades that followed, the parallels were pursued with less vigor, owing perhaps to the rise of the “modular model” of the mind, which implies separate neural structures for language and for toolmaking.90 But in a major paper published in 1991, the psychologist Patricia Greenfield restated the case for a “common neural substrate” for language and “object combination.”91

Brain-imaging research during the past two decades has eroded support for the modular model and yielded new information on possible neural connections between language and tools. In general, brain-imaging studies have revealed a high degree of interconnectivity among the various regions of the brain.92 Some recent PET data indicate activation of many of the same areas for spoken and gestural language, supporting earlier views of neural connections between vocal tract and hand function.93

The turning point—the emergence of the modern mind—was the combined appearance of syntactic language, unlimited creativity, and the super-brain. The fossil record of thought seems to indicate that these developments were more or less coincident, and they are logically interrelated, if not inseparable. Syntactic language created the super-brain; earlier forms of shared thought would seem to have been too limited. At the same time, the creative manipulation of information that language represents was a social phenomenon that could exist only among multiple brains in biological space and time. And the exponential growth in neuronal network complexity engendered by the integration of brains, already oversize, in a human social group may have been the requisite basis for unlimited creativity expressed in language and other media.

The seeming suddenness of these developments has inspired the suggestion among some paleoanthropologists that a genetic mutation may have provided the trigger. Possible mutations have been hypothesized in the areas of speech and working memory.94 Moreover, there have been attempts to research this problem with genetic data from both living and fossil humans.95 It turns out that there is yet another fossil record—composed of DNA rather than mineralized bones or thought—that holds potential for understanding the origin of the mind.