Where there is great power there is great responsibility.

Winston Churchill (1906)

Design computing is just one of several names for the discipline emerging at the intersection of design, computer science, and the built environment. Others, each reflecting a slightly different viewpoint, include architectural computing and computational design, algorithmic design and responsive architecture. In this discipline issues of theoretical computability mix with questions of design cognition, and personal design intuition runs head-on into state-space search. The overlaps have spawned new areas of inquiry and reinvigorated existing ones, such as smart environments, pattern languages, sensors and machine learning, visual programming, and gesture recognition.

The study of design computing is concerned with the way these disciplinary threads interweave and strengthen one another; it is not about learning to use a particular piece of software. Of course, along the way you may acquire skills with several pieces of software, because the best way to understand computing machinery is to work with it, but the goal is to master the concepts that underpin the software, enabling you to attack new problems and new programs with confidence and get up to speed with them quickly.

To begin this study, we’ll look at each of the three major topics on its own, from the inside, and then consider their interaction; but let us begin by setting the broad cultural context—the outside view—for these subjects.

Design Computing: An Uneasy Juxtaposition

Design and computing are both perceived as moderately opaque topics by many people, for somewhat opposite reasons, and those who readily understand one seem especially likely to find the other challenging. Design is often described using poetic metaphors and similes (e.g., Goethe’s “Architecture is frozen music”), while computing is covered with obscure, detail-oriented jargon and techno-speak (e.g., “The Traveling Salesman Problem is NP-complete”). Both vocabularies, while meaningful to the insider, may leave others feeling cut off. Nonetheless, using computing tools effectively in design, or developing new digital tools for design, requires both kinds of knowledge. In this chapter we will explore the relevant parts of both vocabularies in language that most readers will be able to understand and use, setting both against the background of the built environment itself.

In an era heavily influenced by concepts drawn from science, design remains mysterious, often portrayed as producing new and engaging objects and environments from the whole cloth of “inspiration.” Within design professions, especially architecture, those who actually do design usually occupy the top of the power structure, in positions reached after many years of experience. Outside of the design professions there is increasing respect for the economic and social potential of design through innovation, creativity, and thinking “outside the box.” At the same time, more and more actual design work takes place inside the black boxes of our computing tools. Unfortunately, the fit between the mysterious design task and the highly engineered digital tools is poorly defined, with the result that users often revel in newfound expressivity and simultaneously complain about the tool’s rigid constraints.

Design remains mysterious in part because of the way designers are trained. Although design is consistently described in terms of process and iteration, students commonly learn to design by being dropped into the deep end of the pool, told to do design, and then invited to reflect on the activity with a mentor, gradually developing a personal design process (Schön 1984). While that might seem to encourage mere willfulness, design is instead described as reconciling the seemingly irreconcilable, and as resistant to precise definition.

In contrast, computer technology, both hardware and software, is built on an explicit foundation of physics and electronics, rooted in physical laws and absolute predictability. Computers do what we design them to do. Perhaps this means they cannot be creative, as Lady Ada Lovelace and others have avowed (see Chapter 12), but whatever the ultimate answer to that question, systems have become so complex in their operation that they increasingly give the impression of producing intelligent, creative results. In the end, the challenge seems to be how best to use these highly predictable machines to assist an unpredictable process.

Similarities

Though traditional views of design and computer programming display great differences, they both involve detailed development of complex systems. In addition, computer programming is often learned in much the same way as design—through doing and talking—and computer science has even adopted the term pattern language—a term originally coined by architect Christopher Alexander—to describe efforts in software design to name and organize successful strategies.

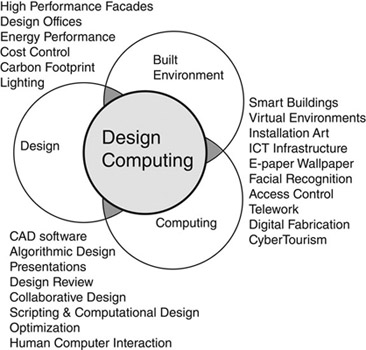

Design computing is concerned with the interaction of design, buildings, and computing. As illustrated in Figure 1.1, this can be broken down into three bi-directional pairings: computing and design, design and building, building and computing. Each contributes to design computing.

Computers are used heavily as a production technology in architectural practice, offering opportunities to the designer in the form of CAD software and digital media, but there is more to it than improved productivity. Digital tools enable entire new categories of shape to be conceived and constructed, but they also invite architects to participate in design of virtual environments for games and online activities. At the same time, the tools influence relationships within offices. Designing with digital tools is changing how we think about routine design and leading to whole new theories of “the digital in architecture” (Oxman and Oxman 2014).

Computing is already an important component of construction planning and execution, used in scheduling and cost estimating, checking for spatial conflicts or clashes, contributing to quality control, robotic fabrication of components, and progress tracking. These applications take advantage of data generated during the design process, and these construction insights can often improve design if incorporated into the process early on. As a result, traditional contractual relationships within the industry are under pressure to change.

Figure 1.1 The three components of design computing.

Less obviously, computing is one component a designer may use in fashioning a smart built environment, where it can contribute to many building systems, influencing shape, security, and energy use. Simulation tools forecast how a design will behave, but sophisticated sensors and building operations software also influence the user experience of the building’s real-time operation. Finally, as occupants of built environments and consumers of digital media, designers themselves are influenced by the changes the technology has already wrought and is in the process of delivering.

The emerging discipline of design computing is broad, spanning the interests outlined above, including the overlaps and influences within each pair of concerns in Figure 1.1, influences that often flow in more than one direction. The investigation begins with a quick look at each of the three components.

The Built Environment

The built environment surrounds most of us most of the time. As a product of human culture, this environment is a slow-motion conversation between designers, builders, and users, involving designed objects from the scale of the doorknob to that of the city. This conversation is made up of personal manifestos, esoteric debate about style and importance, and public policy debate about how we should conduct our lives. Increasingly, these utterances are enabled and influenced by digital technologies, which have become important, if not central, to both design and construction.

In recent years, in response to population growth and climate change, public discourse has focused attention on energy and material resources used in buildings. In 2014, according to the US Energy Information Administration, 41 percent of total US energy consumption went to residential and commercial buildings (USEIA 2015). Burning coal produces 44 percent of that energy (UCS 2016). The CO₂ produced by that combustion is thought to be a significant contributor to climate change. These facts are of interest to the populace in general, as well as to designers, who must respond professionally to this situation. In fact, beginning in the 1970s, concerns about energy efficiency in buildings led to the development of sophisticated light- and energy-simulation software. These tools, in turn, have resulted in more simulation-based estimates of design performance and justification of design choices, in place of older, less precise prescriptive approaches.

At the same time, the built environment is also the frame within which most of our social and work life is played out. Its organization is widely recognized to influence social and economic behavior. Industrial activity, heavily dependent on movement of goods and materials, originally gave rise to time and motion studies and ideas of optimal layout. After World War II the idea that procedures might be more important than individual designers in determining design quality fostered the design methods movement, which was much more sympathetic to applying computational approaches to design. As computers have become more available to design researchers, techniques have grown up around complex computational or statistical approaches. Space Syntax researchers (Hillier 1984) have attempted to identify those qualities of the environment around us that delight, distract, or otherwise influence us as we move about the city or carry out work in an office setting (Peponis et al. 2007).

New technologies, from elevators to cell phones, have had both minor and profound impacts on what people do in a building, how the designers and builders work to deliver it, and how it impacts society at large. Computing plays a two-sided role, as both a tool for designers to work with (e.g., by enabling remote collaboration) and an influencer of that work. Further, as information and communication technologies (ICT) become less and less expensive, it has become possible to use them in new ways. Coffee shops serve as casual offices for many workers because of widespread broadband connectivity and cheap wireless access points. Many houses now include a home office to facilitate telecommuting. Smart buildings may soon recognize us, unlocking our office doors and signing us into the building as we approach. Mechanical systems already take into account weather forecasts and schedules to pre-cool or pre-warm meeting rooms. In the individual office, email and phone calls might be automatically held during periods of intense concentration or during meetings. Advanced packaging or marketing promises to make any surface in your environment an interactive order point for purchasing (Amazon 2015), and for information display as well. It’s a very short step from there to the refrigerator that knows how old the leftovers are and when to order milk. Internet-connected toasters, thermostats, door locks, garage-door openers, energy meters, sprinklers, and lights are just part of the emerging “Internet of Things” (IoT). Provision of supporting infrastructure and selection of system elements for the IoT is very likely to fall into the space between occupant and builder, the space of the architect.

Design

It is widely held that design consists of an iterative cycle of activities in which a design idea is formulated in response to requirements and tested for sufficiency to those requirements. These activities are typically defined as analysis, in which the design requirements are formulated from a study of a problematic situation, synthesis, in which alternative solutions to the problem are conceived and documented, and evaluation, in which the solutions are tested for predicted performance and judged for suitability and optimality.

Clayton et al. 1994, citing Asimow, 1962

Investigation into how best to apply computing to design began in the 1960s, but attention to design process goes back much further. You probably already have at least a passing acquaintance with design process or products. You may have heard the Goethe quote about “frozen music” or the Roman architect Vitruvius’ 2000-year-old assertion that architecture’s goals are “commodity, firmness and delight” (Vitruvius 15 BC). Importantly, a distinction is often made between buildings with these features and what John Ruskin called “mere building” (Ruskin 1863). These aesthetic and poetic descriptions challenge us to deliver our best design, but they do not provide much of an operational or scientific understanding of design. To complicate things a bit more, architects are licensed and legally charged only with protecting public safety in the process of modifying the built environment. Such concerns may seem more appropriate to “mere building,” but even those more prosaic challenges become substantial as systems for occupant comfort and safety grow more complex and consumption of materials, power, and water becomes more problematic.

The challenge of establishing a procedural model of design, while slippery, has been the subject of much attention. Nobel laureate economist and artificial intelligence visionary Herbert Simon, in his book The Sciences of the Artificial, argues that we should carefully study made things (the artificial) as well as natural things, and goes so far as to outline a curriculum for a “Science of Design.” Looking at design through its practice and pedagogy, philosopher and professional education theorist Donald Schön characterizes designers, along with doctors and musicians, as reflective practitioners, educated through a mix of mentoring and task immersion (Schön 1984). And, of course, while the nature of design remains a sometimes-contested subject, designers often exhibit observable behaviors that most professional designers and educators would recognize.

For example, architects often study existing environments and they may travel extensively to do so, at which times they tend to carry cameras and sketchbooks to record their experiences. They also study the behavior of people; they meet with users and owners of proposed buildings; they sketch, they observe, they paint; they draft detailed and carefully scaled plans, sections, and elevations as legal documents; they select colors, materials, and systems; they persuade community groups, bankers, and government agencies; and they consult with a variety of related specialists.

Armed with such observations, academics, software developers, and architects have developed many digital design tools by creating one-to-one digital replacements or assistants for the observed behaviors. While this has given us efficient tools such as word processors and CAD drafting, more revolutionary impacts might come from understanding design activity as a whole, in terms of its goals, cognitive frames, frustrations, and satisfactions. Nonetheless, the word processors and spreadsheets produced by the one-to-one approach now go so far beyond their antecedents that they have significantly disrupted and reconfigured daily office work and power. Digital design tools seem destined to do the same to design processes and offices.

Design Processes

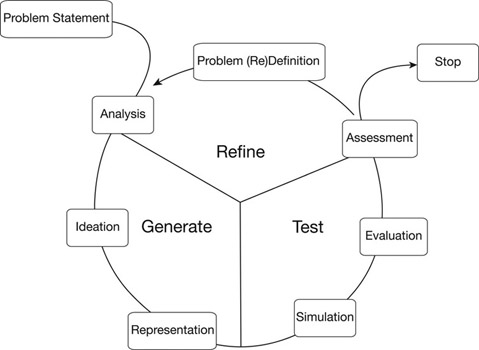

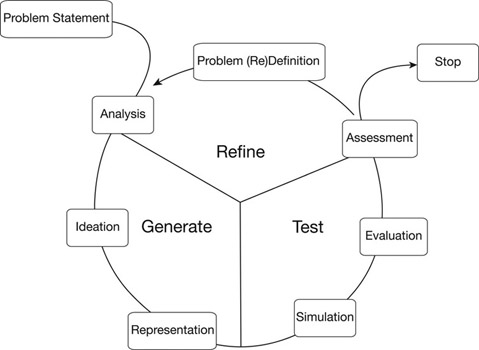

There is little debate that there are known processes for making design happen. They may not be the same for each designer, but they often include some or all of the stages shown in Figure 1.2, moving from a problem statement to analysis of the problem, generation of one or more ideas, representation of the ideas in a suitable medium, simulation of visual and/or other characteristics, evaluation of those results, assessment of the overall design, after which another cycle of refinement or redefinition carries forward until a suitable design is produced and the cycle stops. Design combines elements of both art and science, of spontaneous creativity and labored interaction with the limitations of the real world. While descriptions of art frequently reference inspiration, novelty, creativity, thinking outside the box, divergent thinking, or problem solving through problem subdivision and resolution, design is perhaps best characterized as an iterative process employing cycles of divergent and convergent thinking, aimed at solving a problem through both generation and refinement of ideas. In this process the designer analyzes the problem, establishes constraints and objectives, generates or synthesizes one or more possible solutions that meet some or all of the constraints and objectives, subjects the candidate solutions to assessment, and evaluates the results. The evaluation is used to refine the problem definition, help generate another possible solution, and so on. At some point the evaluation (or the calendar!) suggests that further change is not required or possible and the process stops.

Figure 1.2 A simplified model of the design cycle.
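The cycle in Figure 1.2 can be caricatured in code as a generate-and-evaluate loop. The sketch below is a minimal illustration under stated assumptions, not a model of how designers actually think: the function names, the toy room-width problem, and the random “refinement” step are all hypothetical. Its point is the shape of the loop, including the two ways it stops: a good-enough evaluation, or an exhausted budget standing in for the calendar.

```python
import random

def design_cycle(generate, evaluate, good_enough, max_iterations):
    """Hypothetical generate-and-evaluate loop echoing the cycle in Figure 1.2.

    generate(best) proposes a candidate (best is None on the first pass).
    evaluate(candidate) returns a score; higher is better.
    Stops when good_enough(score) is true or the iteration budget runs out.
    """
    best, best_score = None, float("-inf")
    for iteration in range(1, max_iterations + 1):
        candidate = generate(best)            # synthesis: propose an idea
        score = evaluate(candidate)           # simulation and evaluation
        if score > best_score:                # assessment: keep the better design
            best, best_score = candidate, score
        if good_enough(best_score):           # stop: the design is suitable
            return best, best_score, iteration
    return best, best_score, max_iterations   # stop: out of time ("the calendar!")

# Toy problem (assumed for illustration): choose a room width, in metres,
# so that a room 4 m deep comes close to a 30 m^2 area target.
DEPTH, TARGET_AREA = 4.0, 30.0

def evaluate_width(width):
    # Negated area error, so a perfect fit scores 0.
    return -abs(width * DEPTH - TARGET_AREA)

def make_generator(seed=1):
    rng = random.Random(seed)
    def generate(best):
        if best is None:
            return rng.uniform(2.0, 12.0)     # first idea: any plausible width
        return best + rng.uniform(-0.5, 0.5)  # refinement: a small variation
    return generate

if __name__ == "__main__":
    width, score, iterations = design_cycle(
        make_generator(), evaluate_width, lambda s: s > -0.1, max_iterations=1000)
    print(f"width = {width:.2f} m, area error = {-score:.2f} m^2, after {iterations} cycles")
```

Real design rarely reduces to a single numeric score; much of the difficulty discussed in this chapter lies in what `generate` and `evaluate` would have to mean for an actual building.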

The description above invokes a number of concepts that connect to computing, including representation, transformation, collaboration, solution spaces, generative processes, simulation, evaluation, and optimization. Software exists to do these things with varying levels of ease and success, and more is appearing all the time. We may use the software because we have to or because it helps us do the job better. Our practices and professions may be changed by it: individually, we may benefit from it or be replaced by it, and our own performance may compare favorably or unfavorably with it. Designers may also contribute to the development of new software, drawing on insights from practice to facilitate future practice. Finally, we may deploy software and hardware as part of our designs. To think further about these potentials we need to develop some computing concepts.

Computing

Humans have been interested in mechanical computation for centuries, but as the industrial era got rolling in the nineteenth century, Charles Babbage took on the challenge of actually producing an entirely mechanical computer, which he called the Analytical Engine. Babbage never completed it in his lifetime, but some of his designs (notably his Difference Engine No. 2) have been built in recent decades as working hardware. Babbage’s mechanical calculators are now seen as precursors to the electronic computers of the twentieth century, and his collaborator, Ada Lovelace, is often cited as the first programmer because of her insights into the possibilities of computation.

The Roles of Representation and Algorithm

Babbage’s names for the two main parts of his mechanical computing machine were “Store” and “Mill,” terms that still nicely capture the two main elements of computer systems, but which we now call random access memory (RAM) and central processing unit (CPU). The Store held numbers needed for subsequent calculations as well as finished results. The Mill was where the computational work was done. Information was shifted from Store to Mill, where it was processed, and then shifted back into storage. Since the Mill was capable of performing several different operations on the values from the Store, a list of instructions specifying which operations to perform and in what order was required as well. Today we would recognize that as a program.
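A toy version of Store, Mill, and program fits in a few lines. This sketch assumes a hypothetical three-operation instruction format invented for this example; it is not Babbage’s notation, only an illustration of values moving from Store to Mill and back under the control of an ordered list of instructions.

```python
def run(program, store):
    """Run a list of (operation, source_a, source_b, destination) instructions.

    `store` stands in for Babbage's Store (memory); the arithmetic applied
    inside the loop stands in for the Mill (processor).
    """
    mill = {
        "add": lambda a, b: a + b,
        "sub": lambda a, b: a - b,
        "mul": lambda a, b: a * b,
    }
    for operation, source_a, source_b, destination in program:
        a, b = store[source_a], store[source_b]     # Store -> Mill
        store[destination] = mill[operation](a, b)  # Mill -> Store
    return store

if __name__ == "__main__":
    # Floor area of an L-shaped plan made of two rectangles (metres).
    store = {"w1": 6, "d1": 4, "w2": 3, "d2": 2}
    program = [
        ("mul", "w1", "d1", "area1"),
        ("mul", "w2", "d2", "area2"),
        ("add", "area1", "area2", "total"),
    ]
    print(run(program, store)["total"])   # 6*4 + 3*2 = 30
```

The same Store and Mill can compute something entirely different if handed a different program, which is the sense in which the instruction list, not the machinery, defines the task.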

There is no value in storing information that you aren’t going to use, but the information required to solve a problem may not be immediately obvious. The particular information chosen to “capture the essence” of a problem therefore models, or represents, the problem. The appropriate representation may seem obvious, and it often is, but there are subtle interactions between cognition, representation, problems, and the programs that manipulate the representation.

The programs that control the Mill, or CPU, perform sequences of computations. Different sequences may be possible, and may produce different results or take different amounts of time. Certain commonly occurring and identifiable sequences of computational activity (sorting a list of numbers, for example, or computing the trigonometric sine of an angle) are called algorithms. The computations are both enabled and limited by the information stored in the representation, so there is a relationship between the representation used and the algorithms applied to it that also bears examination.
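Two standard search algorithms show both points at once: different sequences of computation can reach the same result in very different amounts of time, and the representation determines which sequences are possible. In this sketch (function names and data are illustrative), linear search works on any list, while binary search needs far fewer steps but is only correct if the data is stored in sorted order.

```python
def linear_search(values, target):
    """Check items one by one; works on any list. Returns (index, steps)."""
    for steps, value in enumerate(values, start=1):
        if value == target:
            return steps - 1, steps
    return -1, len(values)

def binary_search(sorted_values, target):
    """Halve the search range at each step; correct only for sorted input."""
    low, high, steps = 0, len(sorted_values) - 1, 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_values[mid] == target:
            return mid, steps
        if sorted_values[mid] < target:
            low = mid + 1      # target can only be in the upper half
        else:
            high = mid - 1     # target can only be in the lower half
    return -1, steps

if __name__ == "__main__":
    sorted_areas = list(range(0, 2000, 2))       # 1,000 values, stored in order
    print(linear_search(sorted_areas, 1998))     # same index, 1,000 steps
    print(binary_search(sorted_areas, 1998))     # same index, 10 steps
```

Storing the values in sorted order costs effort up front, but it enables the faster algorithm; on an unsorted list, binary search can simply miss the target.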

Of course, given an existing representation, if the software allows, we may be able to create a new set of operations to perform in order to produce new results. The contemporary interest in scripting in the field of architecture arises from this opportunity to manipulate geometry and related data through algorithms of our own devising, giving rise to terms such as computational design, emergent form, and form finding.
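As a hedged illustration of form generated by a script, the sketch below computes the corner points of a hypothetical twisting tower in which each square floor plate is rotated slightly relative to the one below. The function and its parameters are invented for this example; in practice such a script would usually run inside a modeling environment’s own scripting interface, whose API is not shown here.

```python
import math

def twisting_tower(floors, floor_height, half_width, twist_per_floor_deg):
    """Return one list of (x, y, z) corner points per floor.

    Each floor is a square plate centered on the vertical axis and rotated
    by twist_per_floor_deg more than the floor below it.
    """
    plates = []
    for level in range(floors):
        angle = math.radians(level * twist_per_floor_deg)
        z = level * floor_height
        corners = []
        for cx, cy in [(1, 1), (-1, 1), (-1, -1), (1, -1)]:
            x, y = cx * half_width, cy * half_width
            # Rotate the corner about the tower's vertical axis.
            corners.append((x * math.cos(angle) - y * math.sin(angle),
                            x * math.sin(angle) + y * math.cos(angle),
                            z))
        plates.append(corners)
    return plates

if __name__ == "__main__":
    for corners in twisting_tower(floors=3, floor_height=3.5,
                                  half_width=15.0, twist_per_floor_deg=3.0):
        print([tuple(round(c, 2) for c in point) for point in corners])
```

Changing a single parameter, such as the twist per floor, regenerates the whole form, which is much of the appeal of scripting over drawing each plate by hand.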

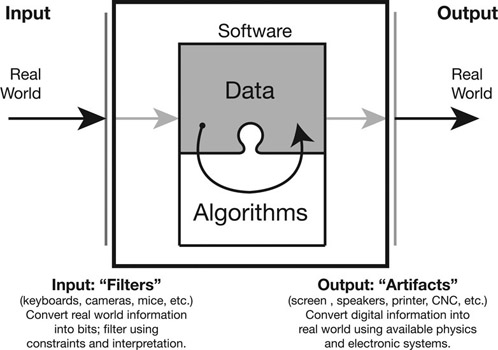

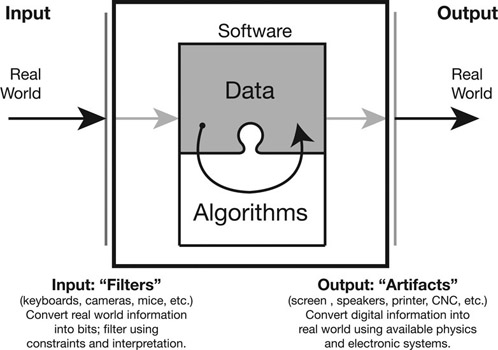

These relationships are illustrated in Figure 1.3. At the center are the interrelated data model and algorithms around which the application is built. The diagram also reminds us that the information the computer works with is not what exists in the real world, but information filtered by input hardware and software. Continuous things (time, shapes, sound) are sliced into pieces. Numbers representing sound, position, color, and even time all have finite range. There is finite storage and processing power. Mice and cameras record information to some finite pixel or step size. Commands—inputs that trigger change or output—too, are drawn from a finite palette of options. At the interface we also see adjustments to the real world—jitter taken out of video, parallax compensation on touch screens, hand-tremor and limited zoom options eliminated with object snaps and abstractions like straight line primitives, characters constrained by fonts and encoding schemes, etc. New information (e.g., action time-stamps, identity of user) may be added to the data at the same time. During output back into the real world, systems exhibit limitations as well. Displays have limited size and brightness for showing rendered scenes; they use pixels which adds “jaggies” to diagonal lines; output color and sound range are limited by the inks and hardware used; digital sound necessarily builds waveforms from square waves; step size on CNC routers limits the smoothness of surfaces, etc. While we overlook and accept many of these unintended artifacts, we ought not to forget that they occur.

Figure 1.3 The relationships between software as data + algorithm and the real world.
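Two of the input-side filters mentioned above, snapping and finite numeric range, can be sketched directly. The functions below are hypothetical and simplified: `snap` rounds a coordinate to a grid, as a drafting program’s grid snap might, and `quantize` maps a continuous value onto a fixed number of integer levels, roughly as an analog-to-digital converter does. Real software adds tolerances, snap priorities, and dithering that are omitted here.

```python
def snap(value, grid):
    """Snap a coordinate to the nearest multiple of `grid`."""
    return round(value / grid) * grid

def quantize(sample, bits, low=-1.0, high=1.0):
    """Map a continuous value in [low, high] to one of 2**bits integer levels."""
    levels = 2 ** bits
    clamped = min(max(sample, low), high)   # finite range: clip out-of-range input
    step = (high - low) / (levels - 1)      # size of one level
    return round((clamped - low) / step)    # nearest level, 0 .. levels - 1

if __name__ == "__main__":
    shaky_click = (3.07, 1.26)                        # imprecise pointer input
    print(tuple(snap(v, 0.25) for v in shaky_click))  # tidied onto a 0.25 grid
    print(quantize(0.5, bits=3))                      # one of only 8 levels
```

Both functions discard information irreversibly: once a click is snapped or a sample quantized, the original value cannot be recovered from the stored data.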

Symbol-Manipulating Machines

A century after Babbage, Alan Turing, World War II code-breaker and one of the founding fathers of computer science, described the more abstract symbol-processing nature of these machines and defined a hypothetical test for machine intelligence called the “Turing Test.” As with a nuts-bolts-gears-and-pulleys machine, a Turing machine is rigorously predictable in its basic operations, but like any very complex mechanism, it isn’t always apparent what the behavior will be after casual (or even careful) inspection. It is a machine, but results can still be surprising, and surprisingly useful, even smart. Turing knew this as well; his Turing Test, which defines artificial intelligence (AI) in terms of our ability to tell a human and a computer apart through communicative interactions, remains one of the standard litmus tests of AI (Turing 1950).
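To make the symbol-processing idea concrete, here is a minimal one-tape Turing machine simulator with a rule table that adds one to a binary number. Both the simulator and the rule table are a sketch written for this example (state names such as `"carry"` are arbitrary). Every step is a mechanical table lookup, yet the table as a whole performs arithmetic.

```python
# Rules for incrementing a binary number:
# (state, symbol read) -> (symbol to write, head move, next state)
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),   # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),   # past the end: step back and start adding
    ("carry", "1"): ("0", -1, "carry"),   # 1 + 1 = 0, carry one to the left
    ("carry", "0"): ("1", -1, "halt"),    # 0 + 1 = 1, done
    ("carry", "_"): ("1", -1, "halt"),    # ran off the left end: new leading 1
}

def run_turing_machine(tape, rules, state="right", blank="_", max_steps=10_000):
    """Apply rules until the machine enters the 'halt' state; return the tape."""
    cells = dict(enumerate(tape))   # sparse tape; unwritten cells read as blank
    head, steps = 0, 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    text = "".join(cells.get(i, blank) for i in range(min(cells), max(cells) + 1))
    return text.strip(blank)

if __name__ == "__main__":
    print(run_turing_machine("1011", INCREMENT))   # binary 11 + 1
```

The machine is completely predictable rule by rule, yet reading the table alone does not make it obvious at a glance that it adds one, a small-scale version of the surprise Turing described.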

Virtual Machines

Computers are both familiar and mysterious for most of us: often used daily but understood only at a superficial level. In their inert, unpowered state they are just simple rectangular objects with a glass panel and buttons on them. But when powered up, modern computers are chameleons, able to shift rapidly from role to role: typewriter, book-keeping ledger, artist’s canvas, designer’s drawing board, movie screen, or video-phone. This is often called virtuality, the quality of “acting like” something well enough that you don’t notice the difference. (Had this usage been popular sooner, the Turing Test for AI might be called a test of virtual intelligence instead.) Virtuality arises from the computer’s ability to store and recall different programs, combined with its ability to present different visual environments on the screen, and our willingness to cognitively remap the (increasingly rare) “virtual” experience to the “non-virtual” one. It depends on both hardware and software features, that is, on our ability to capture, or model, interesting activities in digital form well enough to elicit a suspension of disbelief. We have been so successful at this that some familiar activities, such as word processing, spreadsheet manipulation, and web-browsing, may seem inconceivable without a computer.

Design Computing

The application of computing to design began with high aspirations, but it ultimately proved easier to make progress by harvesting the low-hanging fruit, making one-to-one substitutions for existing tools. Because this work began before graphic displays were widely accessible, the focus was on descriptive and analytical applications rather than drafting. One-to-one replacements appeared for the most straightforward activities, including core business practices such as accounting and text-based communication, in the form of spreadsheets and word processing. Research focused on computationally heavy activities such as thermal and structural analysis, layout optimization, and specifications. While there were few interactive graphics displays, there were plotters, which produced plan drawings, wireframe perspectives, and even animation frames from decks of punch cards. Interactive raster displays brought big changes.

For designers, one of the watershed moments in the history of computing came in the mid-1980s, when graphics moved into the mainstream of computing. Until then computers were largely about text and numbers, not graphics, pictures, or drawings. While a graphic display was part of Ivan Sutherland’s 1963 Sketchpad research, and both vector-refresh and storage-tube displays were available throughout the 1970s, graphic displays didn’t become widely available until the early 1980s. Only with the advent of the Apple Macintosh, in 1984, was the modern graphical user interface (GUI) firmly established, and the full potential of computer-as-simulacrum made visible.

Once interactive graphics appeared, much more attention was paid to drawing or drafting, to the extent that most readers may think about “computer aided design” (CAD) only in terms of drafting software. Several factors contributed to this situation in the 1980s, including the fact that 40 percent of design fees were paid for production of construction documents, two-dimensional CAD software was less demanding on hardware, and it was easy for firm management to understand and implement CAD (i.e., document production) workflows since CAD layers closely mimicked the pin-drafting paradigm in use at the time and much of the work was done by production drafters working from red-lined markups.

We can build a broader understanding of the computational approach to design by paying careful consideration to the other steps involved in the overall description of design and to the traditional strategies employed to address those challenges. These tend to include almost equal amounts of the artistic (creative ideation) and the analytical (numerical assessment). As we will see, purely computational solutions are elusive, so one of the great challenges of design computing is to find the appropriate distribution of responsibility in order to balance human and computer activities in the design process.

Computers can either facilitate or hinder design activities, depending on how they are used within the design process. While we often hear about “virtual” this and that, the truth is that computers are used to change the real world, even if that just means making us feel good about our video-gaming prowess. To accomplish this, they must transform the world (or some part of it) into a digital representation. This process depends on the features and opportunities, or affordances, of the input hardware (Gibson 1986), as well as the requirements of the internal digital model or representation, combined with the software affordances desired. Information will be added or lost in the process (for example, our imprecise hand motions might be corrected to produce perfectly horizontal or curved lines, or our image might be pixelated by our webcam’s image sensor). We might then transform that digital model in small or big ways before outputting it to the real world again, at which point the mechanical and electrical affordances of the output device (screen size, color-space of the printer, router speed or step-size, printer resolution, pen type or color in a plotter, etc.) further influence the result. By learning about these influences, we become computer literate. If we know about the internal representation and related transformations, we can take charge of our workflow.

Design Spaces

Complex representations are built up out of simpler elemental parts, in much the same way that your online gaming profile (which represents you) might contain a screen-name (text), age (number), and avatar picture (raster image). If our goal is to define a unique gameplay identity, it must be possible to represent it, giving it a value for each of the elemental parts. If you think of these as axes in a coordinate system, you can think of each profile as a (name, age, avatar) “coordinate.” The game’s profile representation can be said to offer a “space” of possible identities. The problem of creating, or designing, our unique identity requires “searching the design space” for a suitable representation that hasn’t already been claimed.
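The profile example can be made literal. In this sketch the value lists are tiny and hypothetical; the design space is the Cartesian product of the lists, and “searching the design space” is simply a walk through that product looking for an unclaimed point.

```python
from itertools import product

# A deliberately tiny, hypothetical profile "space": every
# (name, age, avatar) combination is one point in it.
NAMES = ["ada", "babbage", "turing"]
AGES = [18, 30, 45]
AVATARS = ["castle", "robot"]

def design_space():
    """All possible profiles, one per combination of elemental values."""
    return product(NAMES, AGES, AVATARS)

def find_unclaimed(claimed):
    """Search the space in order and return the first profile not yet taken."""
    for profile in design_space():
        if profile not in claimed:
            return profile
    return None   # the whole space is used up

if __name__ == "__main__":
    taken = {("ada", 18, "castle")}
    print(find_unclaimed(taken))
```

Even this toy space has 3 × 3 × 2 = 18 points, and the count multiplies with every component added to the representation.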

Architectural design problems involve a great many elemental components, many of which (e.g., room dimensions) can take on a very wide range of values, creating very large—even vast—design spaces (Woodbury and Burrow 2006). This means there is ample opportunity for creativity, but it complicates the question of automated computation of designs. Unfortunately, really creative human solutions sometimes involve defining entirely new representations, which is something of a problem for computers.
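A worked count shows why such spaces are called vast. Assume, for illustration only, that a design has $k$ independent components and that component $i$ can take any of $n_i$ values. The number of possible designs is then the product

$$|S| = \prod_{i=1}^{k} n_i = n_1 \cdot n_2 \cdots n_k$$

For example, a layout described by 20 room dimensions, each chosen from 100 values (2.0 m to 11.9 m in 10 cm steps), yields

$$|S| = 100^{20} = 10^{40}$$

possible designs before any other property is considered. The numbers are illustrative, not drawn from a real project.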

Interoperability: Data and Programs

Partly because of virtuality, we have to tell the computer what “tool” to be before we do something with it. That means we control what the machine does by selecting both the information we wish to process (the data) and the type of process (the program) we wish to utilize. The two parts fit together like a lock and key.

As a consequence, the data used by one program will often be useless for another, even when they might represent the same building. This characteristic has broken down somewhat in recent years, as developers have struggled to make interoperable representations, but it still remains largely the case, and it may be fundamental to the nature of computing, since alternative algorithms may require different representations and knowledge about that data. This tends to make data proprietary to the program with which it was created. It also makes it difficult to dramatically change the representation without breaking the program.
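The lock-and-key relationship between data and program can be sketched with two hypothetical programs that describe the same wall differently: program A stores a centerline and a thickness, while program B expects a closed outline of corner points. Program A’s data means nothing to program B until someone writes a translation, and the translator must make its own assumptions (here, that the wall is a straight rectangle centered on its line). Neither representation is taken from a real product or from IFC.

```python
import math

# Program A (hypothetical) stores a wall as a centerline plus a thickness.
wall_a = {"start": (0.0, 0.0), "end": (6.0, 0.0), "thickness": 0.3}

def area_program_b(polygon):
    """Program B (hypothetical) expects a closed list of (x, y) corners
    and computes the footprint area with the shoelace formula."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(polygon, polygon[1:] + polygon[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def centerline_to_polygon(wall):
    """Translate program A's representation into program B's.
    Assumes a straight wall of constant thickness centered on its line."""
    (x1, y1), (x2, y2) = wall["start"], wall["end"]
    length = math.hypot(x2 - x1, y2 - y1)
    half = wall["thickness"] / 2
    # Unit normal to the centerline, scaled to half the thickness.
    nx, ny = -(y2 - y1) / length * half, (x2 - x1) / length * half
    return [(x1 + nx, y1 + ny), (x2 + nx, y2 + ny),
            (x2 - nx, y2 - ny), (x1 - nx, y1 - ny)]

if __name__ == "__main__":
    print(area_program_b(centerline_to_polygon(wall_a)))   # about 6 m x 0.3 m
```

The translation also shows how information can be lost or invented in transit: program B’s outline no longer records which line was the centerline, and a curved or tapered wall would need a different converter.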

Exceptions to this general rule occur when data formats are documented and the documentation is made publicly available, so that programs can be written to “Save As…” to the desired format, or when programs are written to accept data in a pre-existing public format, as when Industry Foundation Classes (IFC) or the Initial Graphics Exchange Specification (IGES) is used to import CAD data into a construction scheduling program. Still, programmers acting with the best of intentions may interpret the same documentation differently, which can lead to incompatible implementations and frustrating responses from inflexible machines.

Computational Complexity

Even our super-fast modern computers require some time to compute a sum or compare two numbers, so complex computations can take perceptible amounts of time. Because every operation takes time, and different operations take different amounts of it, each algorithm can be characterized by the time it takes to run. For example, during playback your MP3 player has to read the music data off the disk and perform the appropriate computations to play the next moment of music before the last one has died away. If it takes longer to compute than it does to play, there will be “dropouts” in the audio playback, or “rebuffering” in your streaming video experience.
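The playback constraint can be stated as simple arithmetic: a buffer of audio lasts (samples ÷ sample rate) seconds, and the player must finish computing the next buffer within that time. The sketch assumes the 44,100 Hz sample rate used for CD audio and a 1,024-sample buffer; the buffer size and the compute times are hypothetical.

```python
def buffer_duration_s(buffer_samples: int, sample_rate_hz: int) -> float:
    """How long one buffer of audio takes to play, in seconds."""
    return buffer_samples / sample_rate_hz


def keeps_up(compute_time_s: float, buffer_samples: int, sample_rate_hz: int) -> bool:
    """True if the next buffer is ready before the current one finishes playing."""
    return compute_time_s <= buffer_duration_s(buffer_samples, sample_rate_hz)


deadline = buffer_duration_s(1024, 44_100)  # roughly 23 ms per buffer
print(f"deadline: {deadline * 1000:.1f} ms")
print(keeps_up(0.010, 1024, 44_100))  # 10 ms of work per buffer: keeps up
print(keeps_up(0.030, 1024, 44_100))  # 30 ms of work per buffer: dropout
```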

The representation also has an impact on the time it takes to do something. Storing more data may require bigger files but reduce the complexity of the computations required to play them. Alternatively, more computation done as the music is being recorded may save file space and make the playback computations straightforward.
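The trade between storage and computation also appears in miniature whenever a program precomputes values. The sketch below illustrates that general idea, not how any particular audio codec works: it generates a sine tone either by calling `math.sin` for every sample, or by precomputing one cycle into a table (more memory) and then simply indexing into it (less work per sample). The sample rate and table size are arbitrary assumptions.

```python
import math

SAMPLE_RATE = 8_000  # samples per second (illustrative)
TABLE_SIZE = 1_000   # entries in one precomputed cycle


def tone_direct(freq_hz: float, n: int) -> list[float]:
    """Compute every sample from scratch: no extra storage, one sin() call per sample."""
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


# Precompute one full cycle once: extra storage, but generating samples only needs lookups.
SINE_TABLE = [math.sin(2 * math.pi * k / TABLE_SIZE) for k in range(TABLE_SIZE)]


def tone_table(freq_hz: float, n: int) -> list[float]:
    """Look samples up in the table (nearest lower entry, no interpolation)."""
    step = freq_hz * TABLE_SIZE / SAMPLE_RATE  # table entries advanced per sample
    return [SINE_TABLE[int(i * step) % TABLE_SIZE] for i in range(n)]
```

At 440 Hz the step is a whole number of table entries, so the two functions agree to floating-point precision; at other frequencies the table version accepts a small error in exchange for less computation per sample.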

We can characterize many problems and their solution algorithms according to their complexity. For example, imagine you have to visit each of five cities and wish to find the lowest-cost driving route. One way to do this (one algorithm) is to compute the number of miles required for every possible route and select the route with the smallest value. Sounds simple enough, right? To investigate the complexity of this situation, you need to consider the number of possible routes. If there are five cities on our list, then there are five possible “first cities.” Once a city has been visited, we don’t go back, so there are four possible “second cities.” That makes 20 combinations for cities 1 and 2. There are three “third” cities, for a total of 60 combinations for cities 1, 2, and 3. Selecting one of the two remaining cities makes for 120 combinations. When we choose between the last two cities we indirectly choose the very last one, so the total is 120 = 5 · 4 · 3 · 2 · 1, or five factorial (written as “5!”), combinations. While other factors will contribute to the time required, the total time to compute the shortest route will depend on this “n-factorial” number. As long as we stick with the basic algorithm of “test all possible routes and select the shortest,” we have to generate every route in order to test it, and n-factorial grows large very rapidly. For 20 cities it is about 2.4 × 10¹⁸. Even computing 10,000 routes per second, you would need about 7.7 million years to crunch the numbers. Ouch.
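The “test every route” algorithm translates almost directly into code. The sketch below assumes five hypothetical cities with made-up coordinates, uses straight-line distance as a stand-in for driving miles, and, like the count in the text, treats a route as an open path with no return to the start. `itertools.permutations` generates all n! orderings; the last lines repeat the arithmetic for 20 cities.

```python
import math
from itertools import permutations

# Hypothetical city coordinates (miles on a flat map).
CITIES = {
    "A": (0.0, 0.0),
    "B": (3.0, 4.0),
    "C": (6.0, 0.0),
    "D": (3.0, -4.0),
    "E": (9.0, 4.0),
}


def route_length(route, coords) -> float:
    """Total distance visiting the cities in order (no return to the start)."""
    return sum(math.dist(coords[a], coords[b]) for a, b in zip(route, route[1:]))


def brute_force_shortest(coords):
    """Test every possible ordering, keep the shortest, and count how many were tested."""
    best_route, best_len, tested = None, math.inf, 0
    for route in permutations(coords):
        tested += 1
        length = route_length(route, coords)
        if length < best_len:
            best_route, best_len = route, length
    return best_route, best_len, tested


route, length, tested = brute_force_shortest(CITIES)
print(route, round(length, 2), tested)

# The same count for 20 cities, and the time needed at 10,000 routes per second.
routes_20 = math.factorial(20)
years = routes_20 / 10_000 / (365.25 * 24 * 3600)
print(f"{routes_20:.1e} routes, about {years / 1e6:.1f} million years")
```

For five cities the program tests exactly 120 routes, matching 5!; for 20 cities the printed estimate reproduces the 2.4 × 10¹⁸ routes and roughly 7.7 million years.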

This problem is called the Traveling Salesman Problem and is one of the classics of computer science. The “test every route” solution algorithm is easy to describe and relies on simple computations, but due to the combinatorial explosion the total computation time required for realistic problems renders the approach useless. Unfortunately, in many design problems, as in the Traveling Salesman Problem, designers seek the “best” combination from among a very large number of alternatives. Seeking better rather than best is a more achievable goal, but it raises two questions: How do we improve a given design? And how do we know when to stop?
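One common way to settle for “better” is a greedy heuristic such as nearest neighbor, a standard technique not described in the text: start somewhere and always drive to the closest city not yet visited. It examines on the order of n² distances rather than n! routes, but offers no guarantee of finding the shortest route. The city coordinates are hypothetical, and straight-line distance stands in for driving miles.

```python
import math

# Hypothetical city coordinates (miles on a flat map).
CITIES = {
    "A": (0.0, 0.0),
    "B": (3.0, 4.0),
    "C": (6.0, 0.0),
    "D": (3.0, -4.0),
    "E": (9.0, 4.0),
}


def nearest_neighbor_route(coords, start: str) -> list[str]:
    """Greedy heuristic: from the current city, always go to the closest unvisited one."""
    route = [start]
    unvisited = set(coords) - {start}
    while unvisited:
        here = coords[route[-1]]
        # Ties are broken by city name so the result is deterministic.
        nxt = min(unvisited, key=lambda c: (math.dist(here, coords[c]), c))
        route.append(nxt)
        unvisited.remove(nxt)
    return route


def route_length(route, coords) -> float:
    return sum(math.dist(coords[a], coords[b]) for a, b in zip(route, route[1:]))


# Try each starting city and keep the best greedy route: cheap, often good, not guaranteed best.
candidates = [nearest_neighbor_route(CITIES, s) for s in CITIES]
best = min(candidates, key=lambda r: route_length(r, CITIES))
print(best, round(route_length(best, CITIES), 2))
```

A heuristic like this answers the first question only partly: it produces a reasonable route quickly, but says nothing about whether a better one exists or when to stop looking.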

Fit, Optimization, and Refinement

The task of producing better designs, called optimization, requires that we solve two big problems: picking design moves and assessing design quality.

In calculus we learn that a function’s minima and maxima can be found by differentiating it and solving for the points where the derivative is zero. Given a mathematical expression for the quantity we wish to optimize, a direct mathematical, or analytic, means exists for arriving at the best solution. We can, for example, compute the best depth for a beam carrying a given load. This doesn’t usually work for design, because there is rarely a clear mathematical relationship between the changes you can make and the performance measures you wish to improve. If a cost estimate is too high, for example, you can’t simply make a smaller house: humans, furniture, and appliances don’t come in continuously variable sizes. Instead, design involves trade-offs: Do you want more, smaller rooms or fewer, larger rooms? Smaller rooms or less expensive finishes?
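As a small worked example of the analytic approach (a room rather than the beam in the text, chosen because the algebra is short), suppose a rectangular room must enclose a fixed floor area A and we want to minimize the length of wall around it. The symbols A and w are introduced only for this illustration. With width w the depth is A/w, so:

```latex
P(w) = 2\left(w + \frac{A}{w}\right), \qquad
P'(w) = 2\left(1 - \frac{A}{w^{2}}\right) = 0 \;\Rightarrow\; w = \sqrt{A}, \qquad
P''(w) = \frac{4A}{w^{3}} > 0 .
```

The optimum is a square room of side √A. The derivation works only because wall length is a smooth function of a single continuous variable; most design decisions are neither.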

Choosing the next move is not easy, nor is assessing its impact on the many dimensions of the design. Design quality is often described in terms of the “fit” or “misfit” between problem and proposed solution (Alexander 1964). Where fit can be expressed as a numeric value (cost, energy use, volume, etc.), the function that computes it, sometimes called a fitness, objective, or cost function, provides a means of comparing alternatives, but mostly in narrow domains.
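Where the measures are numeric, one common (if blunt) way to build a single objective function is a weighted sum of normalized criteria. The sketch compares three hypothetical house designs on cost and annual energy use; the numbers, reference values, weights, and the function name `misfit` are all invented for illustration, and choosing the weights is itself a design judgment the function cannot make for you.

```python
from dataclasses import dataclass


@dataclass
class Alternative:
    name: str
    cost: float        # construction cost (hypothetical currency units)
    energy_kwh: float  # predicted annual energy use (hypothetical)


# Hypothetical reference values that put the two measures on a comparable scale.
COST_BUDGET = 400_000.0
ENERGY_TARGET = 10_000.0
WEIGHTS = {"cost": 0.6, "energy": 0.4}  # assumed priorities; a lower score is better


def misfit(alt: Alternative) -> float:
    """Weighted sum of normalized measures: a simple cost (misfit) function."""
    return (WEIGHTS["cost"] * alt.cost / COST_BUDGET
            + WEIGHTS["energy"] * alt.energy_kwh / ENERGY_TARGET)


alternatives = [
    Alternative("compact", 360_000, 9_000),
    Alternative("sprawling", 420_000, 13_000),
    Alternative("high-performance", 440_000, 5_000),
]
ranked = sorted(alternatives, key=misfit)
for alt in ranked:
    print(f"{alt.name:>16}: {misfit(alt):.3f}")
```

With these weights the high-performance design ranks first (0.86 against 0.90 for the compact one); shifting the weights to 0.8 for cost and 0.2 for energy reverses the top two, which is a reminder of how much the single number depends on choices made outside it.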

Lacking a calculus of design and condemned to incremental improvement searches, machines share with human designers the problem of needing a “stopping rule” that indicates when the design is “good enough.”
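An incremental improvement search with an explicit stopping rule can be sketched as a simple hill climber, a generic technique rather than a method from the text. It proposes a small random change, keeps it if the cost drops, and stops when either an iteration budget runs out or a fixed number of consecutive proposals fail to improve the design. Both limits are assumed parameters; picking them is exactly the “good enough” judgment described above. The cost function (wall length of a hypothetical 25 m² rectangular room) is an illustrative stand-in.

```python
import random
from typing import Callable


def hill_climb(
    cost: Callable[[float], float],
    start: float,
    step: float,
    max_iterations: int = 10_000,
    patience: int = 200,
    seed: int = 0,
) -> tuple[float, float, int]:
    """Minimize cost by random local moves.

    Stopping rule: quit after max_iterations proposals, or after `patience`
    consecutive proposals that fail to lower the cost.
    """
    rng = random.Random(seed)
    current, current_cost = start, cost(start)
    failures = 0
    iteration = 0
    for iteration in range(1, max_iterations + 1):
        candidate = current + rng.uniform(-step, step)
        candidate_cost = cost(candidate)
        if candidate_cost < current_cost:
            current, current_cost = candidate, candidate_cost
            failures = 0
        else:
            failures += 1
            if failures >= patience:
                break
    return current, current_cost, iteration


AREA = 25.0  # hypothetical floor area in square meters


def wall_length(w: float) -> float:
    """Perimeter of a rectangle of width w and fixed area; invalid widths cost infinity."""
    if w <= 0:
        return float("inf")
    return 2 * (w + AREA / w)


w, length, used = hill_climb(wall_length, start=2.0, step=0.5)
print(f"width {w:.2f} m, wall length {length:.2f} m after {used} proposals")
```

The search ends close to the 5 m square room that calculus would give directly, but it reaches that answer only approximately and only because the stopping rule happened to be generous enough.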

Fabrication, Automated Construction, and Mass Customization

Prior to the Industrial Revolution, products such as cookware, furniture, and housing were made by hand, and were therefore pretty much unique. The Industrial Revolution ushered in the idea of factory-made objects using interchangeable parts. It also introduced the concepts of off-the-shelf and off-the-rack goods. By standardizing fabrication we benefit from economies of scale in manufacturing that make devices such as tablet computers and smartphones affordable to most people, though they would be impossibly costly as single, handmade products. In the building industry we use standardized bricks, blocks, studs, steel sections, HVAC units, and tract housing. Now, as fabrication processes become both more robotic and more digital, the cost of custom products, like a single page from your laser printer, is declining, delivering mass customization (economical, personal, unique production) at the scale of buildings. While liberating designers to change the rules of design and production, these changes also force designers to take greater responsibility for their work in entirely new ways.

Summary

We have entered a period of rapid change in the character, design, and production of many products, including buildings. Many challenges will be encountered, wrestled with, and perhaps overcome. Designers need to develop or reinforce their understanding of what goes on both inside and outside the black boxes of design and computation. In Chapters 2–5 we will explore the state of the art in design and computing in some depth. Then, in the remaining chapters, we will consider the fundamental questions and challenges that shape and motivate the emerging field of design computing.

Suggested Reading

Alexander, Christopher. 1964. Notes on the synthesis of form. Cambridge, MA: Harvard University Press.

Schön, Donald. 1984. The reflective practitioner: How professionals think in action. New York: Basic Books.

References

Alexander, Christopher. 1964. Notes on the synthesis of form. Cambridge, MA: Harvard University Press.

Amazon. 2015. Amazon dash button. Amazon.com, Inc. www.amazon.com/oc/dash-button

Asimow, M. 1962. Introduction to design. Upper Saddle River, NJ: Prentice-Hall.

Clayton, Mark, John C. Kunz, Martin A. Fischer, and Paul Teicholz. 1994. First drawings, then semantics. Proceedings of ACADIA 1994, 13–26.

Gibson, James J. 1986. The theory of affordances, in The Ecological Approach to Visual Perception, 127–146. Hillsdale, NJ: Lawrence Erlbaum Associates.

Hillier, Bill and J. Hanson. 1984. The social logic of space. New York, NY: Cambridge University Press.

Oxman, Rivka and Robert Oxman. 2014. Theories of the digital in architecture. London: Routledge.

Peponis, John, Sonit Bafna, Ritu Bajaj, Joyce Bromberg, Christine Congdon, Mahbub Rashid, Susan Warmels, Yan Zhang, and Craig Zimring. 2007. Designing space to support knowledge work. Environment and Behavior 39 (6): 815–840.

Ruskin, John. 1863. Selections from the writings of John Ruskin: With a portrait. London: Smith, Elder & Co., 184.

Schön, Donald. 1984. The reflective practitioner: How professionals think in action. New York: Basic Books.

Turing, Alan. 1950. Computing machinery and intelligence. Mind 59: 433–460.

UCS. 2016. Coal generates 44% of our electricity, and is the single biggest air polluter in the U.S. Union of Concerned Scientists. www.ucsusa.org/clean_energy/coalvswind/c01.html#.VWTM0eu24nQ.

USEIA. 2015. How much energy is consumed in residential and commercial buildings in the United States? US Energy Information Administration. http://eia.gov/tools/faqs/faq.cfm?id=86&t=1.

Woodbury, Robert and Andrew L. Burrow. 2006. Whither design space? AI EDAM: Artificial Intelligence for Engineering Design, Analysis, and Manufacturing 20: 63–82.