Challenges and Opportunities

An expert is someone who knows some of the worst mistakes that can be made in his subject, and how to avoid them.

Werner Heisenberg (1971)

The built environment touches almost all domains of human knowledge: history (symbolism and culture), physical sciences (behavior of materials in complex configurations), biology (response to materials and environment), psychology (behavior of people in various settings), engineering (distribution of energy and support), and a host of other disparate fields of study. These are each subjects in which individuals earn doctoral degrees, so it is not surprising that those responsible for designing new environments do not possess all the required expertise. Their expertise lies in design—consulting, integrating, and coordinating the great many details connected to the expertise of others.

In the face of all the knowledge needed to design and construct anything, acting can be a challenge. Reducing expert decision-making to best practices, rules of thumb, prescriptive code guidelines, and some number of advisory consultations enables the designer to see past the trees to the forest and make needed decisions.

Most designers acknowledge that seeking out expert advice early in the design process, and applying it continuously, might well save time and money over solutions based on rules of thumb. They also recognize, as we saw in Chapter 8, that current design and simulation tools are not easily applied at every stage from schematic design through design development and documentation, and that too much detail can distract from and hinder decision-making. Further, the fragmented structure of the industry may require that some decisions be left incompletely specified in order to create price variation in the bidding process.

This chapter will look at the challenges and opportunities that accompany the application of expert knowledge and judgment within the design process. These questions necessarily interact with the topics of cognition (Chapter 7) and representation (Chapter 8).

Architects are Generalists

Architects are generalists; they rely to a great extent on the expertise of others to both design and build projects, and yet they must oversee the myriad decisions that are required during design. They may consult experts for advice on everything from code interpretations to structural systems to fireproofing products to lighting strategies to sustainability and siting. Some questions are simple knowledge questions, but others require expert, numeric analysis of a design proposal. Further, the specificity, or level of abstraction, of the questions can change depending on the phase of the project, so some come up repeatedly as scale and detail change.

When analysis is needed, projects may be evaluated in any of a large number of very different dimensions, including aesthetics, construction and operating cost, structural integrity, energy use, lighting quality (both daylighting and electric lighting), and egress (emergency exiting). Some analyses are mandated by architecture’s core professional commitment to life-safety, while some are mandated by governmental, client, or financing organizations. It is not necessary to evaluate every building in every dimension, and many evaluations (e.g., egress) produce simple binary assessments: the project either “meets code” or it doesn’t. Even where variability is expected (energy use), prescriptive component performance specifications are often used in place of an analysis that responds to the actual building geometry, materials, and site. Finally, analyses done during schematic design may be quite simplified compared to analyses performed during design development or construction document preparation.

The market dominance of a small number of productivity tools (CAD or BIM) may leave the impression that there is a single common representation of built environments. Though this is the direction in which software seems to be evolving, it is still far from true. As discussed in the chapter on representations, the information needed for one analysis is often quite different from that needed for another (e.g., early cost estimates might depend only on floor area and building type, design-development estimates might require precise area takeoffs and material/quality selections, while final construction estimates might add in localized cost data, and perhaps even seasonal weather adjustments). In the past, a separate representation (data set) would often be prepared for each analysis, featuring only the information required for that analysis. As the project advanced, the data set could be edited to reflect design changes and the analysis could be re-run, or a new data set could be generated from the then-current set of design documents.

Different analyses are often performed at different spatial and temporal resolutions. A simple daylight penetration analysis may consider only the noon position of the sun at the extremes of solar position (summer and winter solstice) plus the equinoxes; a mid-level analysis might check the twenty-first of each month; and a detailed analysis might consider hourly solar position in concert with occupant activity schedules, etc. Rough energy analysis might ask only for building area and occupancy. Early structural analysis will consider general structural system, occupancy, and column spacing, while advanced evaluations require precise building geometry, plus local seismic and wind-loading data in addition to soils and code information. However, even the advanced structural analysis will probably ignore the presence of non-load-bearing walls, though these may well define the boundaries of heating system zones, rentable areas, etc. Decisions about what is important rely on expert knowledge in the various consultant subjects.
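The three levels of temporal resolution in the daylight example can be sketched in Python. This is a minimal illustration under stated assumptions, not the method of any particular daylighting tool: it uses Cooper's approximation for solar declination, works in solar time, and ignores refraction and the equation of time. The function names, the level labels, and the choice of the twenty-first as the solstice and equinox dates are hypothetical simplifications.

```python
import math

# Day-of-year offsets for the first of each month in a non-leap year.
DAYS_BEFORE_MONTH = [0, 31, 59, 90, 120, 151, 181, 212, 243, 273, 304, 334]
# Day-of-year numbers for the 21st of each month.
MONTHLY_21ST = [offset + 21 for offset in DAYS_BEFORE_MONTH]
# Approximate equinoxes and solstices: 21 March, June, September, December.
SOLSTICES_AND_EQUINOXES = [MONTHLY_21ST[m] for m in (2, 5, 8, 11)]


def solar_declination_deg(day_of_year):
    """Approximate solar declination in degrees (Cooper's formula)."""
    return 23.45 * math.sin(math.radians(360.0 * (284 + day_of_year) / 365.0))


def solar_altitude_deg(latitude_deg, day_of_year, solar_hour):
    """Solar altitude in degrees for a given solar time (12.0 = solar noon)."""
    lat = math.radians(latitude_deg)
    dec = math.radians(solar_declination_deg(day_of_year))
    hour_angle = math.radians(15.0 * (solar_hour - 12.0))
    sin_alt = (math.sin(lat) * math.sin(dec)
               + math.cos(lat) * math.cos(dec) * math.cos(hour_angle))
    # Clamp to guard against floating-point overshoot before asin.
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_alt))))


def sample_points(level):
    """Return the (day_of_year, solar_hour) samples for one analysis level."""
    if level == "simple":      # noon at the solstices and equinoxes
        return [(day, 12.0) for day in SOLSTICES_AND_EQUINOXES]
    if level == "mid":         # noon on the 21st of every month
        return [(day, 12.0) for day in MONTHLY_21ST]
    if level == "detailed":    # every hour of every day
        return [(day, float(hour))
                for day in range(1, 366) for hour in range(24)]
    raise ValueError(f"unknown analysis level: {level}")


if __name__ == "__main__":
    latitude = 47.6  # illustrative latitude only
    for level in ("simple", "mid", "detailed"):
        points = sample_points(level)
        highest = max(solar_altitude_deg(latitude, d, h) for d, h in points)
        print(level, len(points), round(highest, 1))
```

The point of the sketch is the jump in sample count from a handful of noon positions to 8,760 hourly positions, each of which a detailed analysis would also have to match against occupancy schedules.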

Asking the Right Questions at the Right Time

While serving as generalists, architects must function as the integrators and coordinators of outside expertise provided by collaborators and consultants. They know that a great many questions need to be answered and that getting them addressed in the right order will save time and money. They know that the cyclic nature of design will cause them to revisit decisions, examining them from different frames of reference and at different scales as the project develops. Because decisions interlock and cascade (resonance or synergy between related decisions is often seen as a positive feature of a good concept), they cannot simply be ticked off some checklist. Design is a process of both asking and answering questions, using design propositions to explore and test possible futures and various analysis tools to evaluate the results. The nature of that evaluation is not a given; it depends on the question that has been asked. While it might be ideal to compare current results with earlier ones, testing design moves with “before” and “after” results, the expense in time and money remains high enough that designers rarely work this way. Designers have (or can easily find online) simple rules of thumb for many standard building system elements. When it is necessary to consult an expert, the question asked is less likely to be “How much does this recent move change my performance metric?” It is more likely to be “What’s the annual energy demand of this configuration?” or “What level of daylight autonomy can I achieve with this façade?”

Within the disciplinary silos of design and construction (architecture, materials science, mechanical engineering, structural engineering, daylighting, etc.) there are well-tested analysis and simulation tools able to address different levels of detail and kinds of question. However,

it should be realized that the building industry has characteristics that make the development of a “building product model” a huge undertaking.… Major obstacles are the scale and diversity of the industry and the “service nature” of the partnerships within it.

(Augenbroe 2003)

So, while there are suitable analysis tools and abundant expertise, the scenario in which off-the-shelf digital technology would be as easy to apply as a spell-checker does not emerge. Instead, “building assessment scenarios typically contain simulation tasks that … require skilled modeling and engineering judgment by their performers” (Augenbroe 2003). Automated production of feedback that is appropriate to the current phase of design and the designer’s shifting focus remains a serious challenge.

Drawings are Never Complete

While it may be obvious that “back of the envelope” sketches and even schematic design drawings are incomplete and rely heavily on numerous assumptions about material choice, dimensions, etc., it is also true that finished construction document sets are incomplete in various ways. First, while they may well conform to industry expectations and legal standards, they do not show every aspect of the finished project. Some details are left to the individual trades to sort out, with shop drawings sometimes being generated by the sub-contractor to show the precise configuration proposed. Other details are covered by general industry standards set by trade groups. Still others simply exist as best practices within trades.

Expertise and the Framing Problem

Every consultant sees different critical information in the building. The elevator consultant sees wait times, floor occupancies, weight limits, building height, and scheduling, while the lighting consultant sees window geometry, glazing material, finishes, tasks, and energy budgets. Each of these conceptual “frames” can be drawn around the same building, but not exactly the same data. Most require the addition of some specific information that derives from their point of view. The architect may select a floor material based on color, wear, and cost, but the lighting consultant wants to know the material’s reflectivity in the visible spectrum, and the sustainability consultant is concerned about the source, embodied energy, and transport of the material. All this information is available, somewhere, but probably not in a BIM model of the building, much less in the CAD drawings. Nonetheless, decisions will be made based on real or assumed values. Capturing and recording values as they become known is a challenge, but understanding what is assumed in your tools might be even more important. Filling in that information with appropriate informed (expert) choices is one of the roles of the consultant. Doing it as part of their analysis is common, and that’s a problem if the analysis needs to respond to, or propose, design changes.
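The idea of drawing different frames around the same material can be sketched as a small data structure. This is a minimal illustration, assuming a flat dictionary of properties per material; the attribute names, the frame definitions, and all values are hypothetical placeholders rather than data from any real project or BIM schema.

```python
# Hypothetical frames: the attributes each party needs from a floor material.
FRAMES = {
    "architect": ["color", "wear_rating", "cost_per_m2"],
    "lighting": ["visible_reflectance"],
    "sustainability": ["source", "embodied_energy", "transport_distance_km"],
}

# What the design model actually records (placeholder values).
floor = {
    "name": "floor finish",
    "color": "grey",
    "wear_rating": "high",
    "cost_per_m2": 40.0,
}


def frame_view(material, frame):
    """Return (known values, missing attribute names) for one frame."""
    needed = FRAMES[frame]
    known = {key: material[key] for key in needed if key in material}
    missing = [key for key in needed if key not in material]
    return known, missing


def apply_assumptions(material, assumptions, assumed_by):
    """Fill gaps with assumed values and record who supplied each one.

    The original material is left untouched, so the assumption stays
    visible as an assumption instead of silently becoming design data.
    """
    merged = dict(material)
    provenance = {}
    for key, value in assumptions.items():
        if key not in merged:
            merged[key] = value
            provenance[key] = assumed_by
    return merged, provenance


if __name__ == "__main__":
    for frame in FRAMES:
        known, missing = frame_view(floor, frame)
        print(frame, "known:", sorted(known), "missing:", missing)
    # The lighting consultant supplies a placeholder reflectance.
    merged, provenance = apply_assumptions(
        floor, {"visible_reflectance": 0.3}, "lighting consultant")
    print(provenance)
```

Recording provenance alongside the value is one possible way to keep track of what a tool or consultant has assumed, which is the concern raised above.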

Consultants and the Round-Trip Problem

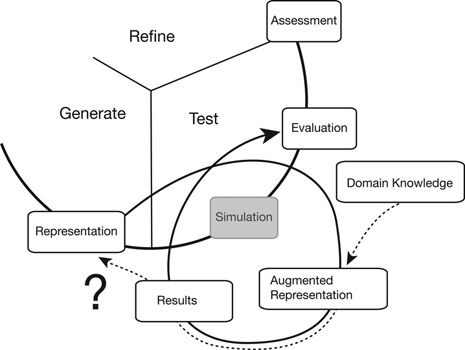

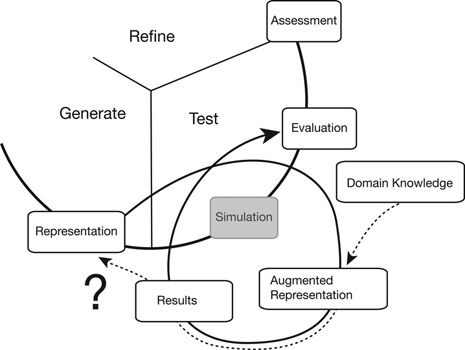

Figure 11.1 illustrates what is sometimes referred to as the “round-trip problem” by zooming in on the portion of the design cycle where outside expertise is often sought: simulation of performance. When an expert is brought in to perform analysis on the design, they will receive data from the designer, but often need to bring additional domain knowledge or detail to the model, as indicated by the dashed line. Further, their field of expertise will very likely require a different framing of the design. In the end, their analysis model will resemble the design model, but not match it exactly. For example, they may have overlaid a set of thermal or acoustic zones on the building plan, zones not represented explicitly in the architect’s design representation. The results of their analysis, taking into account the added information, will feed back to the design evaluation, but their model, which is separate from the design model, will exist outside the loop. Capturing and incorporating into the design model all the specific changes required to enable their analysis is rarely done, or even attempted, as indicated by the question mark. Reintegrating results into the building model in such a way that consistency and integrity of the model are maintained is problematic in practice and difficult to develop (Augenbroe 2003). This difficulty in delivering a “round trip” for detailed analysis data remains one of the roadblocks to efficient use of building models with consultants, and becomes increasingly important as workflows begin to incorporate expert knowledge earlier and execute multiple cycles of simulation (Kensek 2014a, 2014b).

Figure 11.1 The Round-Trip Problem – integrating expert domain knowledge into the design model in preparation for further design cycles.

Currently, if a second analysis is required and the same consultant is used, they may simply update or replace their original model, depending on the number and extent of design changes, and re-run their analysis. This is neither a very fluid exploration (from the point of view of the architect) nor an inexpensive one, which is why much design work simply begins with heuristics (rules of thumb) and uses analysis just once at the end, to verify performance.
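A toy version of the round-trip problem can be written down to make its two halves concrete: information the consultant adds that never returns to the design model, and design changes that leave the consultant's model out of date. This is a minimal sketch under strong assumptions (both models are plain dictionaries keyed by element id); the function names, element ids, and values are hypothetical and do not reflect any real BIM or simulation exchange format.

```python
import copy


def derive_analysis_model(design, additions):
    """Consultant's model: a snapshot of the design plus domain additions."""
    return {
        "snapshot": copy.deepcopy(design),
        "additions": copy.deepcopy(additions),
    }


def stale_elements(design, analysis):
    """Ids of elements added, removed, or changed since the snapshot."""
    snapshot = analysis["snapshot"]
    all_ids = design.keys() | snapshot.keys()
    return sorted(k for k in all_ids if design.get(k) != snapshot.get(k))


def unreturned_additions(design, analysis):
    """Ids the consultant added that have no counterpart in the design."""
    return sorted(k for k in analysis["additions"] if k not in design)


# The architect's model (placeholder rooms and areas).
design = {
    "room_101": {"area_m2": 20.0, "use": "office"},
    "room_102": {"area_m2": 25.0, "use": "office"},
}

# The consultant overlays a thermal zone that the design model lacks.
analysis = derive_analysis_model(
    design, {"thermal_zone_A": {"rooms": ["room_101", "room_102"]}})

# A later design change, made after the analysis model was derived.
design["room_102"]["area_m2"] = 30.0

if __name__ == "__main__":
    print("needs re-analysis:", stale_elements(design, analysis))
    print("never round-tripped:", unreturned_additions(design, analysis))
```

Even in this toy form, the second analysis requires the consultant to reconcile the stale snapshot by hand, and the thermal zone remains outside the design model unless someone deliberately carries it back.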

Growth and Change

Of course, design organizations seek to build in-house expertise as well as consult outside experts, and individuals gain experience that they then tap during future projects. But building institutional memory requires a process to extract and collect assessments of past work, such as best-practice details or assemblies. Offices keep archival copies of their design documents for legal reasons, often in the form of CAD files, so one fairly common practice is to browse the archives for similar projects and then extract decisions from the old project for use in new ones. The difficulty here is the absence of feedback loops: the CAD manager at one prominent Seattle firm once complained about the difficulty of “killing off” a particular roofing detail that kept cropping up in projects even though it had proven problematic in the field. Explicit project-reflection or knowledge-management systems remain a work in progress.

While designers tend to be generalists, there is an increasing need for data manipulation skills connected to expertise, and more young designers are building reputations around data visualization, parametric design, and related questions. This shift has moved data visualization and manipulation much closer to the core of design.

Another change that is occurring is in the level of expertise that designers are expected to bring to and exercise in the design process. In the twentieth century, as building-wide heating, lighting, and ventilating became common and high-rise steel construction was widely used, analysis tended to focus on establishing minimum threshold levels of light, heat, strength, etc. In the twenty-first century, as we become more sensitive to the waste associated with over-design, there is a trend toward more sophisticated analysis employing higher-resolution data and more complex models, further isolating such work from routine design activity. Individuals and firms that specialize in the relationship of data, designers, and expertise are increasingly common.

Harvesting and Feeding Leaf-Node Knowledge

Architects are not master masons or steelworkers; they rely on the expertise of laborers or supervisors to build projects. In the flow of knowledge from the architectural trunk through the various branches of contracts and sub-contracts these workers are the “leaf nodes.” They are the ultimate eyes, ears, and hands of the design team; they know how to nail, drill, bolt, weld, cut, and assemble. And while traditional construction documents do not tell contractors how to execute a project (that’s left to their expertise), sometimes the expertise out at the leaf would help the designer make a better choice. Unfortunately, the economics of bringing such fabrication expertise into the architectural design process, where each building is a “one-off” design, are daunting.

To illustrate the potential value of such information, consider the Boeing Company’s “Move to the Lake” project (NBBJ 2016). Designed by NBBJ, the project combined factory and office facilities into one co-located space, dramatically reducing the overhead of moving people and information from manufacturing to engineering or vice versa. The project not only won praise and awards for locating the design engineers’ offices adjacent to the actual plane production area; it also cut the time to produce a plane by 50 percent. This architectural approach dramatically changed costs. The potential for information technology to do something similar within environmental design is compelling, if challenging. Whether such tactics can be put to work in the United States building construction industry, where most construction is done by constantly shifting constellations of small firms, remains unclear.

If changing information systems might not make expertise more available to designers, it is fairly clear that changing contractual relationships can. Alternative models of contracting, including integrated project delivery (IPD) and design–build–operate (DBO) models, are gaining popularity, appealing in part because they offer the greatest synergy between current information technology and other users of the information. By bringing fabrication knowledge into the design process sooner, the MacLeamy Curve may actually begin to pay off in terms of impact on costs.

The benefits are not limited to the harvesting of leaf-node knowledge; more and better information can make the contractor’s job easier as well. M.A. Mortenson has adopted a strategy of bringing 3D visualizations to their daily construction-management meetings, after seeing a reduction in errors related to placing steel embeds during construction of building cores when the folks in the field had a clearer understanding of the ultimate configuration of the building structure.

Summary

The design process invariably leads designers to engage with expertise. Most design evaluation depends on digital models, each of which requires a uniquely flavored blend of information from the design data, depending on the design phase and the nature of the question(s) being asked. Answers should often be fed back into the design for the next iteration. Bringing efficiency to the process of tapping expertise, including that of constructors, is a serious challenge as it touches on relatively fluid representation and process needs. Evidence from other industries suggests how valuable such information might be, and changes in the AEC industry are occurring as different industry players attempt to position themselves to best use the tools currently available.

Use of 2D drawings to represent designs creates “unstructured” data (Augenbroe 2003), which means that experts familiar with the design are needed to interpret drawings and prepare data for analyses. Even where 3D BIM models are available, exceptional care must be exercised in their preparation if automated transfer is expected (Augenbroe 2003). The labor-intensive character of data preparation inhibits repeat analysis, pushing analyses toward a “design check” role rather than a central driver of design decisions. CAD system vendors have provided proprietary CAD-to-analysis software to simplify data preparation for their users, but this tends to create isolated systems. Interoperable design and analysis systems are still works in progress.

BIM models hold more information than CAD models, in a more structured way. Automatic extraction of analysis data sets from BIM models is increasing, and BIM vendors are increasingly providing conversion software and/or suites of analysis software tailored to their system. Given the breadth of analysis types, and the use of a few well-tested analysis packages (e.g., in structures and energy), there is an emerging convergence in BIM-to-analysis options.

As analysis becomes more tightly coupled to design, the volume of data generated and required will only increase. Whether current software models of design in the AEC market are robust and efficient enough to handle these volumes of data is uncertain. Whether a highly subdivided and litigious industry will share its intellectual property easily is also a question. Certain legal structures, such as project-specific corporations, design–build, and IPD, may be better positioned to benefit from the use of information technology and expertise.

Suggested Reading

Augenbroe, Godfried. 2003. Developments in interoperability, in Advanced building simulation. Edited by A. Malkawi and G. Augenbroe, 189–216. New York, NY: Spon.

Kensek, Karen. 2014. Analytical BIM: BIM fragments, domain gaps, and other impediments, in Building information modeling: BIM in current and future practice. Edited by K. Kensek and D. Noble, 157–172. Hoboken, NJ: John Wiley.

References

Augenbroe, Godfried. 2003. Developments in interoperability, in Advanced building simulation. Edited by A. Malkawi and G. Augenbroe, 189–216. New York, NY: Spon.

Heisenberg, Werner. 1971. Physics and beyond: Encounters and conversations. Translated by Arnold Pomerans. New York, NY: Harper & Row.

Kensek, Karen. 2014a. Building information modeling. New York, NY: Routledge.

Kensek, Karen. 2014b. Analytical BIM: BIM fragments, domain gaps, and other impediments, in Building information modeling: BIM in current and future practice. Edited by K. Kensek and D. Noble, 157–172. Hoboken, NJ: John Wiley.

NBBJ. 2016. Move to the lake. www.nbbj.com/work/boeing-move-to-the-lake