Introduction to Quadrant 4

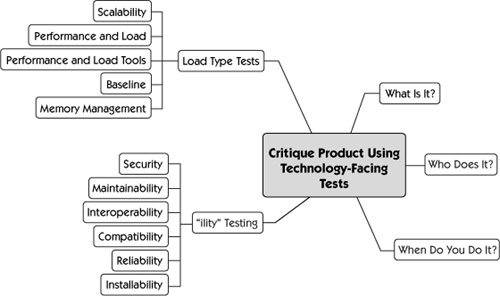

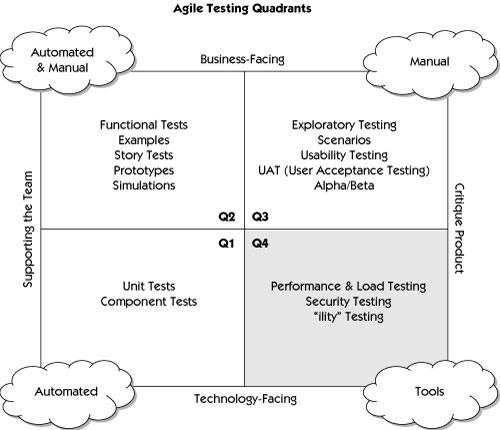

Individual stories are pieces of the puzzle, but there’s more to an application than that. The technology-facing tests that critique the product are more concerned with the nonfunctional requirements than the functional ones. We worry about deficiencies in the product from a technical point of view. Rather than using the business domain language, we describe requirements using a programming domain vocabulary. This is the province of Quadrant 4 (see Figure 11-1).

Nonfunctional requirements include configuration issues, security, performance, memory management, the “ilities” (e.g., reliability, interoperability, and scalability), recovery, and even data conversion. Not all projects are concerned about all of these issues, but it is a good idea to have a checklist to make sure the team thinks about them and asks the customer how important each one is.

Our customer should think about all of the quality attributes and factors that are important and make informed trade-offs. However, many customers focus on the business side of the application and don’t understand the criticality of many nonfunctional requirements in their role of helping to define the level of quality needed for the product. They might assume that the development team will just take care of issues such as performance, reliability, and security.

We believe that the development team has a responsibility to explain the consequences of not addressing these nonfunctional or cross-functional requirements. We’re really all part of one product team that wants to deliver good value, and these technology-oriented factors might expose make-or-break issues.

Many of these nonfunctional and cross-functional issues are deemed low-risk for many applications and so are not added to the test plan. However, when you are planning your project, you should think about the risks in each of these areas, address them in your test plan, and include the tools and resources needed for testing them in your project plan.

Because the types of testing in this quadrant are so diverse, we’ll give examples of tools that might be helpful as we go along instead of a separate toolkit section. Tools, whether homegrown or acquired, are essential to succeed with Quadrant 4 testing efforts. Still, the people doing the work count, so let’s consider who on an agile team can perform these tests.

All of the agile literature talks about teams being generalists; anyone should be able to pick up a task and do it. We know that isn’t always practical, but the idea is to be able to share the knowledge so that people don’t become silos of information.

However, there are many tasks that need specialized knowledge. A good example is security testing. We’re not talking about security within an application, such as who has access rights to administer it. Because that type of security is really part of the functional requirements and will be covered by regular stories, verifying that it works falls within the first three quadrants. We’re talking about probing for external security flaws and knowing the types of vulnerabilities in systems that hackers exploit. That is a specialized skill set.

Performance testing can be done by testers and programmers collaborating and building simple tools for their specific needs. Some organizations purchase load-testing tools that require team members who specialize in that tool to build the scripts and analyze and interpret the results. It can be difficult for a software development organization, especially a small one, to have enough resources to duplicate an accurate production-level load for a test, so external providers of performance testing may be needed.

Larger organizations may have groups such as database experts that your team can use to help with data conversion, security groups that will help you identify risks to your application, or a production support team that can help you test recovery or failover. Build a close relationship with these specialists. You’ll need to work together as a virtual team to gather the information you need about your product.

The more diverse the skill sets are in your team, the less likely you are to need outside consultants to help you. Identify the resources you need for each project. Many teams find that a good technical tester or toolsmith can take on many of these tasks. If someone already on the team can learn whatever specialized knowledge is required, great; otherwise, bring in the expertise you need.

Regardless of whether or not your team brings in additional resources for these types of tests, your team is still responsible for making sure the minimum testing is done. The information these tests provide may result in new stories and tasks in areas such as changing the architecture for better scalability or implementing a system-wide security solution. Be sure to complete the feedback loop from tests that critique the product to tests that drive changes that will improve the nonfunctional aspects of the product.

Just because this is the fourth out of four agile testing quadrants doesn’t mean these tests come last. Your team needs to think about when to do performance, security, and “ility” tests so that you ensure your product delivers the right business value.

As with functional testing, the sooner technology-facing tests that support the team are completed, the cheaper it is to fix any issues that are found. However, many of the cross-functional tests are expensive and hard to do in small chunks.

Technical stories can be written to address specific requirements, such as: “As user Abby, I need to retrieve report X in less than 20 seconds so that I can make a decision quickly.” This story is about performance and requires specialized tests to be written, and it can be done along with the story to code the report, or in a later iteration.
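A story like this can be turned into an automated check. The following is only a minimal sketch: `retrieve_report_x` is a hypothetical stand-in for the real report call, and the 20-second limit is taken from the story above.

```python
import time


def measure_seconds(operation):
    """Return how long a single call to `operation` takes, in seconds."""
    start = time.perf_counter()
    operation()
    return time.perf_counter() - start


def retrieve_report_x():
    # Hypothetical stand-in; a real test would call the application here.
    time.sleep(0.01)


MAX_SECONDS = 20.0  # limit stated in the story

elapsed = measure_seconds(retrieve_report_x)
assert elapsed < MAX_SECONDS, f"report X took {elapsed:.2f}s"
```

A single timing is noisy, so a team would probably repeat the measurement several times and run it in an environment that resembles production.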

Consider a separate row on your story board for tasks needed by the product as a whole. Lisa’s team uses this area to put cards such as “Evaluate load test tools” or “Establish a performance test baseline.” Janet has successfully used different colored cards to show that the story is meant for one of the expert roles borrowed from other areas of the organization.

Some performance tests might need to wait until much of the application is built if you are trying to baseline full end-to-end workflows. If performance and reliability are a top priority, you need to find a way to test those early in the project. Prioritize stories so that a steel thread or thin slice is complete early. You should be able to create a performance test that can be run and continue to run as you add more and more functionality to the workflow. This may enable you to catch performance issues early and redesign the system architecture for improvements. For many applications, correct functionality is irrelevant without the necessary performance.

The time to think about your nonfunctional tests is during release or theme planning. Plan to start early, tackling small increments as needed. For each iteration, see what tasks your team needs in order to determine whether the code design is reliable, scalable, usable, and secure. In the next section, we’ll look at some different types of Quadrant 4 tests.

If we could just focus on the desired behavior and functionality of the application, life would be so simple. Unfortunately, we have to be concerned with qualities such as security, maintainability, interoperability, compatibility, reliability, and installability. Let’s take a look at some of these “ilities.”

OK, security doesn’t end in -ility, but we include it in the “ility” bucket because we use technology-facing tests to appraise the security aspects of the product. Security is a top priority for every organization these days. Every organization needs to ensure the confidentiality and integrity of their software. They want to verify concepts such as nonrepudiation: a guarantee that a message has been sent by the party that claims to have sent it and received by the party that claims to have received it. The application needs to perform the correct authentication, confirming each user’s identity, and authorization, allowing the user access only to the services they’re authorized to use. Testing so many different aspects of security isn’t easy.

In the rush to deliver functionality, both business experts and development teams in newly started organizations may not be thinking of security first. They just want to get some software working so they can do business. Authorization is often the only aspect of security testing that they consider as part of business functionality.

Budgeting this type of work has to be a business priority. There’s a range of alternatives available, depending on your company’s priorities and resources. Understand your needs and the risks before you invest a lot of time and energy.

Testers who are skilled in security testing can perform security risk-based testing, which is driven by analyzing the architectural risk, attack patterns, or abuse and misuse cases. When specialized skills are required, bring in what you need, but the team is still responsible for making sure the testing gets done.

There are a variety of automated tools to help with security verification. Static analysis tools, which can examine the code without executing the application, can detect potential security flaws in the code that might not otherwise show up for years. Dynamic analysis tools, which run in real time, can test for vulnerabilities such as SQL injection and cross-site scripting. Manual exploratory testing by a knowledgeable security tester is indispensable to detect issues that automated tests can miss.
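To make the SQL injection case concrete, here is a small sketch using Python's built-in `sqlite3` module. The table, the two lookup functions, and the payload are all invented for illustration; a real dynamic analysis tool sends many such payloads through the application's actual interfaces.

```python
import sqlite3


def find_user_unsafe(conn, name):
    # Vulnerable: user input is concatenated into the SQL text.
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized: the driver treats the input strictly as data.
    return conn.execute(
        "SELECT name FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.executemany("INSERT INTO users VALUES (?)", [("abby",), ("lisa",)])

# A classic probe: the quote closes the string and the OR clause matches every row.
payload = "nobody' OR '1'='1"
leaked = find_user_unsafe(conn, payload)   # returns every user
blocked = find_user_safe(conn, payload)    # returns nothing
```

A probe like this only shows that one known attack pattern fails; it does not show that the application is secure.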

Just this brief look at security testing shows why specialized training and tools are so important to do a good job of it. For most organizations, this testing is absolutely required. One security intrusion might be enough to take a company out of business. Even if the probability were low, the stakes are too high to put off these tests.

Code that costs a lot to maintain might not kill an organization’s profitability as quickly as a security breach, but it could lead to a long, slow death. In the next section we consider ways to verify maintainability.

Maintainability is not something that is easy to test. In traditional projects, it’s often done by the use of full code reviews or inspections. Agile teams often use pair programming, which has built-in continual code review. There are other ways to make sure the code and tests stay maintainable.

We encourage development teams to develop standards and guidelines that they follow for application code, the test frameworks, and the tests themselves. Teams that develop their own standards, rather than having them set by some other independent team, will be more likely to follow them because they make sense to them.

The kinds of standards we mean include naming conventions for method names or test names. All guidelines should be simple to follow and make maintainability easier. Examples are: “Success is always zero and failure must be a negative value,” “Each class or module should have only one single responsibility,” or “All functions must be single entry, single exit.”

Standards for developing the GUI also make the application more testable and maintainable, because testers know what to expect and don’t need to wonder whether a behavior is right or wrong. It also adds to testability if you are automating tests from the GUI. Simple standards such as, “Use names for all GUI objects rather than defaulting to the computer assigned identifier” or “You cannot have two fields with the same name on a page” help the team achieve a level where the code is maintainable, as are the automated tests that provide coverage for it.
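Simple GUI standards like these can often be checked automatically. The sketch below, which uses only Python's standard `html.parser`, reports form fields that share a name or have no name at all. The sample page is made up, and a real check would need exceptions for cases such as radio button groups, which legitimately share a name.

```python
from collections import Counter
from html.parser import HTMLParser


class FieldNameCollector(HTMLParser):
    """Collects the name attribute of every form field on a page."""

    def __init__(self):
        super().__init__()
        self.names = []
        self.unnamed = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("input", "select", "textarea"):
            name = dict(attrs).get("name")
            if name:
                self.names.append(name)
            else:
                self.unnamed += 1


def check_page(html):
    """Return (sorted duplicate field names, count of unnamed fields)."""
    collector = FieldNameCollector()
    collector.feed(html)
    counts = Counter(collector.names)
    duplicates = sorted(name for name, count in counts.items() if count > 1)
    return duplicates, collector.unnamed


# Hypothetical page that breaks both standards.
page = """
<form>
  <input name="email">
  <input name="email">
  <input type="text">
  <select name="state"></select>
</form>
"""
duplicates, unnamed = check_page(page)
```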

Maintainable code supports shared code ownership. It is much easier for a programmer to move from one area to another if all code is written in the same style and easily understood by everyone on the team. Complexity adds risk and also makes code harder to understand. The XP value of simplicity should be applied to code. Simple coding standards can also include guidelines such as, “Avoid duplication—Don’t copy-paste methods.” These same concepts apply to test frameworks and the tests themselves.

Maintainability is an important factor for automated tests as well. Test tools have lagged behind programming tools in features that make them easy to maintain, such as IDE plug-ins to make writing and maintaining test scripts simpler and more efficient. That’s changing fast, so look for tools that provide easy refactoring and search-and-replace, and for other utilities that make it easy to modify the scripts.

Database maintainability is also important. The database design needs to be flexible and usable. Every iteration might bring tasks to add or remove tables, columns, constraints, or triggers, or to do some kind of data conversion. These tasks become a bottleneck if the database design is poor or the database is cluttered with invalid data.

Plan time to evaluate the database’s impact on team velocity, and refactor it just as you do production and test code. Maintainability of all aspects of the application, test, and execution environments is more a matter of assessment and refactoring than direct testing. If your velocity is going down, is it because parts of the code are hard to work on, or is it that the database is difficult to modify?

Interoperability refers to the capability of diverse systems and organizations to work together and share information. Interoperability testing looks at end-to-end functionality between two or more communicating systems. These tests are done in the context of the user—human or a software application—and look at functional behavior.

In agile development, interoperability testing can be done early in the development cycle. We have a working, deployable system at the end of each iteration so that we can deploy and set up testing with other systems.

Quadrant 1 includes code integration tests, which are tests between components, but there is a whole other level of integration tests in enterprise systems. You might find yourself integrating systems through open or proprietary interfaces. The API you develop for your system might enable your users to set up their own test framework easily. Easier testing for your customer makes for faster acceptance.

In one project Janet worked on, test systems were set up at the customer’s site so that they could start to integrate them with their own systems early. Interfaces to existing systems were changed as needed and tested with each new deployment.

If the system your team works on has to work together with external systems, you may not be able to represent them all in your test environments except with stubs and drivers that simulate the behavior of the other systems or equipment. This is one situation where testing after development is complete might be unavoidable. You might have to schedule test time in a test environment shared by several teams.
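As an illustration of a stub, the sketch below replaces an external system with a small class that returns canned responses and records what it was asked. The payment gateway, its `charge` method, and `place_order` are hypothetical names chosen for the example, not part of any real system.

```python
class PaymentGatewayStub:
    """Hypothetical stand-in for an external payment system."""

    def __init__(self, responses):
        self.responses = list(responses)  # canned replies, returned in order
        self.requests = []                # record of every call received

    def charge(self, account, amount):
        self.requests.append((account, amount))
        return self.responses.pop(0)


def place_order(gateway, account, amount):
    """Code under test; it depends only on the gateway's interface."""
    result = gateway.charge(account, amount)
    return "confirmed" if result == "approved" else "rejected"


stub = PaymentGatewayStub(["approved", "declined"])
first = place_order(stub, "acct-1", 25)
second = place_order(stub, "acct-2", 40)
```

Because the stub only imitates the other system, tests against the real interface are still needed when a shared environment becomes available.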

Consider all of the systems with which yours needs to communicate, and make sure you plan ahead to have an appropriate environment for testing them together. You’ll also need to plan resources for testing that your application is compatible with the various operating systems, browsers, clients, servers, and hardware with which it might be used. We’ll discuss compatibility testing next.

The type of project you’re working on dictates how much compatibility testing is required. If you have a web application and your customers are worldwide, you will need to think about all types of browsers and operating systems. If you are delivering a custom enterprise application, you can probably reduce the amount of compatibility testing, because you might be able to dictate which versions are supported.

As each new screen is developed as part of a user interface story, it is a good idea to check its operability in all supported browsers. A simple task can be added to the story to test on all browsers.

One organization that Janet worked at had to test compatibility with reading software for the visually impaired. Although the company had no formal test lab, it had test machines available near the team area for easy access. The testers made periodic checks to make sure that new functionality was still compatible with the third-party tools. It was easy to fix problems that were discovered early during development.

Having test machines available with different operating systems or browsers or third-party applications that need to work with the system under test makes it easier for the testers to ensure compatibility with each new story or at the end of an iteration. When you start a new theme or project, think about the resources you might need to verify compatibility. If you’re starting on a brand new product, you might have to build up a test lab for it. Make sure your team gets information on your end users’ hardware, operating systems, browsers, and versions of each. If the percentage of use of a new browser version has grown large enough, it might be time to start including that version in your compatibility testing.

When you select or create functional test tools, make sure there’s an easy way to run the same script with different versions of browsers, operating systems, and hardware. For example, Lisa’s team could use the same suite of GUI regression tests on each of the servers running on Windows, Solaris, and Linux. Functional test scripts can also be used for reliability testing. Let’s look at that next.

Software reliability is the ability of a system to perform and maintain its functions in routine circumstances as well as unexpected circumstances. The system also must perform and maintain its functions with consistency and repeatability. Reliability analysis answers the question, “How long will it run before it breaks?” Some statistics used to measure reliability are:

Mean time to failure: The average or mean time between initial operation and the first occurrence of a failure or malfunction. In other words, how long can the system run before it fails the first time?

Mean time between failures: A statistical measure of reliability, this is calculated to indicate the anticipated average time between failures. The longer the better.
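Both statistics are simple averages. The sketch below computes them from made-up numbers: the run times and failure counts are assumptions for illustration, and mean time between failures is calculated in the common way, as total operating time divided by the number of failures.

```python
def mean_time_to_failure(first_failure_hours):
    """Average hours from start-up to the first failure, across several runs."""
    return sum(first_failure_hours) / len(first_failure_hours)


def mean_time_between_failures(total_operating_hours, failure_count):
    """Total operating time divided by the number of failures observed."""
    return total_operating_hours / failure_count


# Hypothetical data: three test runs failed for the first time after
# 120, 80, and 100 hours.
mttf = mean_time_to_failure([120.0, 80.0, 100.0])   # 100.0 hours

# Hypothetical data: 6 failures over 720 hours (30 days) of operation.
mtbf = mean_time_between_failures(720.0, 6)          # 120.0 hours
```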

In traditional projects, we used to schedule weeks of reliability testing that tried to run simulations that matched a regular day’s work. Now, we should be able to deliver at the end of every iteration, so how can we schedule reliability tests?

We have automated unit and acceptance tests running on a regular basis. To do a reliability test, we simply need to use those same tests and run them over and over. Ideally, you would use statistics gathered that show daily usage, create a script that mirrors the usage, and run it on a stable build for however long your team thinks is adequate to prove stability. You can input random data into the tests to simulate production use and make sure the application doesn’t crash because of invalid inputs. Of course, you might want to mirror peak usage to make sure that it handles busy times as well.
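The idea of running the same operation over and over with random input can be sketched in a few lines. Everything here is illustrative: `parse_quantity` stands in for real application code, and the input samples are invented rather than drawn from production usage statistics.

```python
import random


def parse_quantity(text):
    """Hypothetical function under test: returns an int or raises ValueError."""
    value = int(text)
    if value < 0:
        raise ValueError("quantity must not be negative")
    return value


def soak(operation, iterations, seed=0):
    """Call `operation` repeatedly with random input; return unexpected crashes."""
    rng = random.Random(seed)  # fixed seed so a failing run can be repeated
    samples = ["1", "42", "-5", "", "abc", "9" * 30, " 7 "]
    crashes = []
    for i in range(iterations):
        text = rng.choice(samples)
        try:
            operation(text)
        except ValueError:
            pass  # rejecting invalid input is the expected behavior
        except Exception as exc:
            crashes.append((i, text, repr(exc)))
    return crashes


crashes = soak(parse_quantity, iterations=1000)
```

An empty `crashes` list means only that these inputs did not break the function; the mix of inputs should come from how the application is actually used.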

You can create stories in each iteration to develop these scripts and extend them as new functionality is added to the application. Your acceptance tests could be very specific, such as, “Functionality X must perform 10,000 operations in a 24-hour period for a minimum of 3 days.”

Beware: Running a thousand tests without any serious problems doesn’t mean you have reliable software. You have to run the right tests. To make a reliability test effective, think about your application and how it is used all day, every day, over a period of time. Specify tests that are aimed at demonstrating that your application will be able to meet your customers’ needs, even during peak times.

Ask the customer team for their reliability criteria in the form of measurable goals. For example, they might consider the system reliable if ten or fewer errors occur for every 10,000 transactions, or the web application is available 99.999% of the time. Recovery from power outages and other disasters might be part of the reliability objectives, and will be stated in the form of Service Level Agreements. Know what they are. Some industries have their own software reliability standards and guidelines.
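It helps to translate goals like these into numbers the team can test against. The sketch below works through the two examples above; the function names are made up, and the year is treated as 365 days.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(availability_percent):
    """Minutes per year the system may be down and still meet the goal."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


def meets_error_goal(errors, transactions, max_errors_per_10k=10):
    """True if the error rate is at most `max_errors_per_10k` per 10,000."""
    return errors * 10_000 <= max_errors_per_10k * transactions


downtime = allowed_downtime_minutes(99.999)   # a little over 5 minutes a year
within_goal = meets_error_goal(errors=10, transactions=10_000)
over_goal = meets_error_goal(errors=11, transactions=10_000)
```

Seeing that 99.999% availability leaves roughly five minutes of downtime per year can help customers decide whether that goal is worth its cost.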

Driving development with the right programmer and customer tests should enhance the application’s reliability, because this usually leads to better design and fewer defects. Write additional stories and tasks as needed to deliver a system that meets the organization’s reliability standards.

Your product might be reliable after it’s up and running, but it also needs to be installable by all users, in all supported environments. This is another area where following agile principles gives us an advantage.

One of the cornerstones of a successful agile team is continuous integration. This means that a build is ready for testing anytime during the day. Many teams choose to deploy one or more of the successful builds into test environments on a daily basis.

Automating the deployment creates repeatability and makes deployment a non-event. This is exciting to us because we have experienced weeks of trying to integrate and install a new system. We know that if we build once and deploy the same build to multiple environments, we have developed consistency.

As with any other functionality, risks associated with installation need to be evaluated and the amount of testing determined accordingly. Our advice is to do it early and often, and automate the process if possible.

There are other “ilities” to test, depending on your product’s domain. Safety-critical software, such as that used in medical devices and aircraft control systems, requires extensive safety testing, and the regression tests probably would contain tests related to safety. System redundancy and failover tests would be especially important for such a product. Your team might need to look at industry data around software-related safety issues and use extra code reviews. Configurability, auditability, portability, robustness, and extensibility are just a few of the qualities your team might need to evaluate with technology-facing tests.

Whatever “ility” you need to test, use an incremental approach. Start by eliciting the customer team’s requirements and examples of their objectives for that particular area of quality. Write business-facing tests to make sure the code is designed to meet those goals. In the first iteration, the team might do some research and come up with a test strategy to evaluate the existing quality level of the product. The next step might be to create a suitable test environment, to research tools, or to start with some manual tests.

As you learn how the application measures up to the customers’ requirements, close the loop with new Quadrant 1 and 2 tests that drive the application closer to the goals for that particular property. An incremental approach is also recommended for performance, load, and other tests that are addressed in the next section.

Performance, Load, Stress, and Scalability Testing

Performance, load, stress, and scalability testing all fall into Quadrant 4 because of their technology focus. Often specialized skills are required, although many teams have figured out ways to do their own testing in these areas. Let’s talk about scalability first, because it is often forgotten.

Scalability testing verifies the application remains reliable when more users are added. What that really means is, “Can your system handle the capacity of a growing customer base?” It sounds simple, but really isn’t, and is a problem that an agile team usually can’t solve by itself.

It is important to think about the whole system and not just the application itself. For example, the network is often the bottleneck, because it can’t handle the increased throughput. What about the database? Will it scale? Will the hardware you are using handle the new loads being considered? Is it simple just to add new hardware, or is it the bottleneck?

You will need to go outside the team to get the answers you require to address scalability issues, so plan ahead.

Performance and Load Testing

Performance testing is usually done to help identify bottlenecks in a system or to establish a baseline for future testing. It is also done to ensure compliance with performance goals and requirements, and to help stakeholders make informed decisions related to the overall quality of the application being tested.

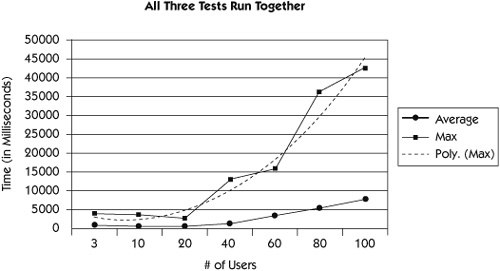

Load testing evaluates system behavior as more and more users access the system at the same time. Stress testing evaluates the robustness of the application under higher-than-expected loads. Will the application scale as the business grows? Characteristics such as response time can be more critical than functionality for some applications.

Grig Gheorghiu [2005] emphasizes the need for clearly defined expectations to get value from performance testing. He says, “If you don’t know where you want to go in terms of the system, then it matters little which direction you take (remember Alice and the Cheshire Cat?).” For example, you probably want to know the number of concurrent users and the acceptable response time for a web application.
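Once the number of concurrent users and the acceptable response time are known, even a small home-grown harness can check them. The sketch below uses threads from Python's standard library to simulate users. `handle_request` is a hypothetical stand-in for a call to the real system, and the user counts are arbitrary.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request():
    # Hypothetical stand-in for one request to the system under test.
    time.sleep(0.01)


def timed_call(operation):
    start = time.perf_counter()
    operation()
    return time.perf_counter() - start


def run_load(operation, concurrent_users, requests_per_user):
    """Simulate users in parallel; return all response times, sorted."""

    def user_session(user_number):
        return [timed_call(operation) for request in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        sessions = list(pool.map(user_session, range(concurrent_users)))
    return sorted(t for session in sessions for t in session)


def percentile(sorted_times, fraction):
    """Return the value at the given fraction (0.95 = 95th percentile)."""
    index = min(len(sorted_times) - 1, int(fraction * len(sorted_times)))
    return sorted_times[index]


times = run_load(handle_request, concurrent_users=5, requests_per_user=4)
p95 = percentile(times, 0.95)
```

Comparing a high percentile against the goal, rather than the average, keeps a few very slow responses from being hidden.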

Performance and Load-Testing Tools

After you’ve defined your performance goals, you can use a variety of tools to put a load on the system and check for bottlenecks. This can be done at the unit level, with tools such as JUnitPerf, httperf, or a home-grown harness. Apache JMeter, The Grinder, Pounder, ftptt, and OpenWebLoad are more examples of the many open source performance and load test tools available at the time of this writing. Some of these, such as JMeter, can be used on a variety of server types, from SOAP to LDAP to POP3 mail. Plenty of commercial tool options are available too, including NeoLoad, WebLoad, eValid LoadTest, LoadRunner, and SOATest.

See the bibliography for links to sites where you can research tools.

Use these tools to look for performance bottlenecks. Lisa’s team uses JProfiler to look for application bottlenecks and memory leaks, and JConsole to analyze database usage. Similar tools exist for .NET and other environments, including .NET Memory Profiler and ANTS Profiler Pro. As Grig points out, there are database-specific profilers to pinpoint performance issues at the database level; ask your database experts to work with you. Your system administrators can help you use shell commands such as top, or tools such as PerfMon to monitor CPU, memory, swap, disk I/O, and other hardware resources. Similar tools are available at the network level, for example, NetScout.

You can also use the tools the team is most familiar with. In one project, Janet worked very closely with one of the programmers to create the tests. She helped him to define the tests needed based on the customer’s performance and load expectations, and he automated them using JUnit. Together they analyzed the results to report back to the customer.

Establishing a baseline is a good first step for evaluating performance. The next section explores this aspect of performance testing.

Performance tuning can turn into a big project, so it is essential to establish a baseline against which you can compare the performance of new versions of the software. Even if performance isn’t your biggest concern at the moment, don’t ignore it. It’s a good idea to get a performance baseline so that you know later which direction your response time is headed. Lisa’s company hosts a website that has had a small load on it. They got a load test baseline on the site so that as it grew, they’d know how performance was being affected.

If there are specific performance criteria that have been defined for specific functionality, we suggest that performance testing be done as part of the iteration to ensure that issues are found before it is too late to fix them.

Benchmarking can be done at any time during a release. If new functionality is added that might affect the performance, such as complicated queries, rerun the tests to make sure there are no adverse effects. This way, you have time to optimize the query or code early in the cycle when the development team is still familiar with the feature.
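Comparing a new run against the baseline is easy to automate. In the sketch below, the operation names, the timings, and the 10% tolerance are all assumptions; a team would choose a tolerance that reflects how noisy its own measurements are.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return operations that slowed by more than `tolerance` (10% by default).

    Both arguments map an operation name to its response time in seconds.
    """
    regressions = {}
    for name, base_seconds in baseline.items():
        now_seconds = current.get(name)
        if now_seconds is not None and now_seconds > base_seconds * (1 + tolerance):
            regressions[name] = (base_seconds, now_seconds)
    return regressions


# Hypothetical timings from a baseline run and from the latest build.
baseline = {"login": 0.20, "report_x": 4.0}
current = {"login": 0.21, "report_x": 5.2}

regressions = find_regressions(baseline, current)
```

Here only `report_x` is flagged, because its time grew by 30% while `login` stayed within the tolerance.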

No performance, load, or stress test will be meaningful unless it’s run in an environment that mimics the production environment. Let’s talk more about environments.

Final runs of the performance tests will help customers make decisions about accepting their product. For accurate results, tests need to be run on equipment that is similar to that of production. Often teams will use smaller machines and extrapolate the results to decide if the performance is sufficient for the business needs. This should be clearly noted when reporting test results.

Stressing the application to see what load it can take before it crashes can also be done anytime during the release, but usually it is not considered high-priority by customers unless you have a mission-critical system with lots of load.

One resource that is affected by increasing load is memory. In the next section, we discuss memory management.

Memory is usually described in terms of the amount (normally the minimum or maximum) of memory to be used for RAM, ROM, hard drives, and so on. You should be aware of memory usage and watch for leaks, because they can cause catastrophic failures when the application is in production during peak usage. Some programming languages are more susceptible to memory issues, so understanding the strengths and weaknesses of the code will assist you in knowing what to watch for. Testing for memory issues can be done as part of performance, load, and stress testing.

Garbage collection is one tool used to release memory back to the program. However, it can mask severe memory issues. If you see the available memory steadily decreasing with usage and then all of a sudden increasing to maximum available, you might suspect the garbage collection has kicked in. Watch for anomalies in the pattern or whether the system starts to get slow under heavy usage. You may need to monitor for a while and work with the programmers to find the issue. The fix might be something simple, such as scheduling the garbage collection more often or setting the trigger level higher.

When you are working with the programmers on a story, ask them if they expect problems with memory. You can test specifically if you know there might be a risk in the area. Watching for memory leaks is not always easy, but there are tools to help. This is an area where programmers should have tools easily available. Collaborate with them to verify that the application is free of memory issues. Perform the performance and load tests described in the previous section to verify that there aren’t any memory problems.
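As one example of such a tool, Python's standard `tracemalloc` module can show whether memory keeps growing as an operation is repeated. This is only a sketch: `leaky_operation` is a deliberately broken, made-up function, and other languages and runtimes have their own profilers.

```python
import tracemalloc

_cache = []


def leaky_operation():
    # Hypothetical leak: every call keeps a 10 KB buffer alive forever.
    _cache.append(bytearray(10_000))


def memory_growth_bytes(operation, iterations):
    """Return how much traced memory grew while repeating `operation`."""
    tracemalloc.start()
    before, _peak = tracemalloc.get_traced_memory()
    for _i in range(iterations):
        operation()
    after, _peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return after - before


growth = memory_growth_bytes(leaky_operation, iterations=100)
```

Growth that keeps rising in step with the number of iterations is a sign of a leak worth investigating with the programmers.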

You don’t have to be an expert on how to do technology-facing testing that critiques the product to help your team plan for it and execute it. Your team can evaluate what tests it needs from this quadrant. Talk about these tests as you plan your release; you can create a test plan specifically for performance and load if you’ve not done it before. You will need time to obtain the expertise needed, either by acquiring it through identifying and learning the skills, or by bringing in outside help. As with all development efforts, break technology-facing tests into small tasks that can be addressed and built upon each iteration.