Chapter 4. The Software Paradox at Work

Because the implications of the Software Paradox are wide-ranging, it is important to study organizations that differ in both size and context to get a broad understanding of the potential impacts. In this chapter, we’ll look at how very different companies are looking at the value of software, both in the commercial and functional senses of the word. Some will serve as cautionary tales, others will provide constructive feedback for how to view software moving forward. All, however, should be useful for testing your own assumptions about what software is worth.

Adobe

In the year 2000, it was difficult to foresee just how powerful the idea of delivering Software-as-a-Service—a model in which browser-based applications replaced their native, operating system–specific counterparts—would become. Even following the initial public offering of Salesforce in June of 2004, skepticism remained regarding the existential threat the browser posed to native application development. Eventually, however, it became clear that this was not only a viable model, it was an inevitable one for most applications. Today, whether an application’s backend is hosted on premises or remotely, the overwhelming majority of interfaces are consumed via a browser.

There is one notable exception to this trend, however: Photoshop. It was such an obvious counterpoint that “everything short of Photoshop” became a cliché among industry watchers discussing the ascent of the browser-based software delivery model. Photoshop, and the other portions of what Adobe eventually packaged and marketed as the Creative Suite, was both sold and delivered as a traditional standalone software application. Buyers purchased a perpetual license, downloaded and installed a closed source software product, and the model changed little in spite of the tectonic shifts in the software world around it.

Today, this is largely still true. A limited-functionality edition of Photoshop did become available in the browser, but the majority of its users still consume the native versions of the product. Adobe did make one important change in 2013, however. Instead of selling perpetual licenses to a shipped product, it transitioned the majority of its user base to monthly subscriptions. This may seem like a distinction without a difference, but the implications for Adobe and its users were profound.

Instead of selling Photoshop outright for $650, for example, Adobe sold subscriptions to the software for $20 a month. At this subscription level, Photoshop users would pay the equivalent of the full retail price over a period of roughly 32 months. Presumably to upsell buyers, the payback period of the full suite (normally priced at around $2,600 but sold as a subscription at $50 a month) was 52 months, well beyond the less than three years for Photoshop alone.
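The payback arithmetic here is simple enough to check directly. The following is a minimal sketch in Python; the function name `payback_months` is hypothetical, and the prices are the approximate figures quoted in the text rather than an official Adobe price list.

```python
def payback_months(perpetual_price: float, monthly_fee: float) -> float:
    """Months of subscription payments needed to equal the perpetual license price."""
    if monthly_fee <= 0:
        raise ValueError("monthly_fee must be positive")
    return perpetual_price / monthly_fee


# Approximate prices quoted in the text (assumed to be in US dollars).
photoshop_months = payback_months(650, 20)
suite_months = payback_months(2600, 50)

print(f"Photoshop:  {photoshop_months:.1f} months ({photoshop_months / 12:.1f} years)")
print(f"Full suite: {suite_months:.1f} months ({suite_months / 12:.1f} years)")
```

The longer payback period on the suite means a subscriber takes more than four years to pay what a perpetual suite license cost, which is consistent with the suggestion that the bundle was priced to encourage upgrades from the single-product plan.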

The most common reaction to this sea change in Adobe’s business model was outrage. Design professionals penned scathing reviews on their blogs, and comment sections on media items covering the change were one vitriolic comment after another. One user even went so far as to create a petition on Whitehouse.gov asking the Obama administration to look into the subscription pricing, alleging that it was predatory in nature. (The petition did not accumulate enough signatures to meet the response threshold and was removed.)

While a great deal of the anger cited cost as the primary complaint, then-columnist for the New York Times David Pogue wrote in Scientific American:

Paying a monthly fee for software doesn’t feel the same. We download a program, and there it sits. Month after month we pay to use it, but we get nothing additional in return.

— David Pogue

This assertion is debatable, because aside from evening out Adobe’s cash flow, a monthly subscription model allows vendors to develop iteratively and ship features as they are ready, rather than withholding them to incent the purchase of major upgrades every few years. It’s easy to make the argument, in fact, that companies like Adobe that shift to this model must develop this way to limit churn.

But even assuming no changes in Adobe’s development model, a subscription model is by definition more accessible for casual users, and like public cloud pricing, may even allow capital-constrained designers to amortize the product’s cost over a longer period of time. From the level and tone of public rhetoric, however, it would appear that these proposed advantages were less than apparent to the majority of users.

The psychology of the price change would predict, in any event, a major downturn in Adobe’s business, accompanied by perceptible shifts toward free alternatives such as GIMP, even though they are more functionally limited or harder to use. The crucial question for Adobe (and, though they may not have been aware of it, for other vendors of shrink-wrapped software) was simple: did this happen?

The short answer seems to be no. As summarized by Bloomberg’s Joshua Brustein in March of 2014:

Adobe now has 1.8 million customers paying for these software subscriptions, and it added 405,000 in the last quarter, the company said on Tuesday in its quarterly earnings report. It is making more money selling monthly subscriptions to its Creative Cloud software—the family of programs that includes Photoshop and Illustrator—than it is by selling the software outright.

— Joshua Brustein

By making the change to a subscription model, Adobe effectively abandoned its traditional model—the same traditional model of software sales that built it into a $30 billion company—in favor of recurring revenue. This isn’t a change made lightly, nor one without costs: by transitioning to subscription revenue, Adobe is deliberately extending its revenue recognition period out over time while taking an upfront capital expense hit. Which, in turn, tells us several things. First, that Adobe expects the profits on a customer’s lifetime value to exceed the return it could realize from the revenue up front. Second, that such a model has the potential to expand Adobe’s addressable market by creating an avenue for casual users to become paying customers, if only for a brief period. And third, and perhaps most important, the shift in the model is a signal that the days of shrink-wrapped, paid upfront software are nearing an end.

Amazon

Incorporated in 1994 as Cadabra, Amazon.com debuted to the world in 1995. At the time, Amazon was focused strictly on the online sale of books. Over time, as Amazon outlasted a sea of competitors online and offline, the merchant’s scope expanded dramatically to what we know today: Amazon as a retailer of virtually every type of good, physical, virtual, and otherwise. It was this identity, Amazon as a mere retailer, that persisted for years even after the company transparently entered the technology services market and began competing directly with the industry’s massive incumbents.

When Amazon launched its Elastic Compute Cloud (EC2) and Simple Storage Service (S3) in 2006, most of its competitors at the time considered them “toy” applications, academically interesting but of no real import. Most focused on what the services could not do, and in their initial incarnations, that list was long. Comparatively fewer observers paid attention to what Amazon could do: spin up compute and storage instances in 90 seconds or less, and charge for them by the hour to anyone holding a credit card. But who was going to make money charging pennies on the hour? As one senior technology executive responded when questioned about Amazon at the time, “I don’t want to be in the hosting business.” Even technology companies directly in Amazon’s path seemed unable to perceive the threat and react accordingly. As Microsoft’s Ray Ozzie acknowledged in an interview in 2008, “[the cloud market] really isn’t being taken seriously right now by anybody except Amazon.”

Eight years later, Amazon is now correctly regarded as the dominant player in one of the most important emerging markets in the history of the technology industry. Like Microsoft in operating systems or office productivity software or VMware in virtualization, Amazon is the vendor to which other players must relate themselves in some way, whether as a sanctioned API-compatible ally in Eucalyptus or an open source alternative in OpenStack. Unlike Microsoft and VMware, however, Amazon sells no software. Or more accurately, it sells no software in the traditional distributed fashion. Besides its existing software-powered infrastructure businesses in compute, storage, and so on, Amazon also sells existing software products as a service. From discrete databases like MySQL, PostgreSQL, and SQL Server to packaged, all-in-one offerings such as Amazon Redshift (which the company recently said was the fastest growing service in the history of AWS), Amazon deliberately eschews labels like IaaS or PaaS but is the pre-eminent service business.

As such, the company’s value from a technology perspective isn’t software, strictly speaking, but rather outsourced effort. Any business can download and run software like MySQL or PostgreSQL at no cost. But hosting it, keeping it up and running, backing up the databases, and exposing them safely to other applications requires expertise and effort. For many customers, and AWS customers in particular, then, the value isn’t in the software itself—because that is available at no cost—but the saved expertise and effort of consuming the infrastructure software as a service.

Amazon, in other words, is making money with software, rather than from software. This may seem like a distinction without a difference to some, but it is actually an excellent illustration of one path forward for software monetization, and thus for mitigating the Software Paradox. By combining software with another, more readily monetized product (services, in this case), Amazon is able to efficiently extract profit from a growing, volume market. What’s more impressive, however, is that because Amazon is building primarily from either free software (in the economic sense) or software it developed internally, it is paying out minimal premiums to third parties for the services it offers. Which means that not only is AWS a volume business, it may be a high-margin business at the same time. Amazon does not break out its AWS revenues, so we’re forced to rely on estimates, but UBS analysts Brian Fitzgerald and Brian Pitz projected in 2010 that AWS’s margins would grow from 47% in 2006 to 53% in 2014. Last year, Andreas Gauger, the chief marketing officer for Amazon competitor ProfitBricks, estimated Amazon’s margins were better than 80%.

If Gauger is correct, that would put AWS in software territory from a margin perspective; IBM’s software margins in 2013, for example, were 88.8%. But even if the more conservative UBS figures are closer to the mark, AWS has found a reasonable middle ground between volume and margin. And if one assumes the Software Paradox to be correct, this is particularly true, because the high end of software margins is likely to be unsustainable. By leveraging software to generate revenue without having to sell it directly, however, AWS is inherently hedged against this prospect.

Apple

While it’s easy to forget today, given the company’s unprecedented success and growth in recent years, Apple’s approach was for many years considered a case study in how not to operate a technology business. For the better part of its early existence, Apple had resisted calls to license its software to other manufacturers, even as Microsoft grew explosively on the back of just that model. In describing why he left Apple for Microsoft in 1981, Jeff Raikes told a group of Albers School of Business students in 2004 that “I wasn’t sure who was going to win in the hardware business, but it sure looked like Microsoft was doing the software for all of them.”

The implication was simple: when software is where the value lies, it’s incumbent on businesses to maximize the addressable market for that software by making it available on whatever hardware it’s practical to run it on. Apple, and more specifically Steve Jobs, believed that it was the combination of Apple hardware and software that delivered the kind of experience users expected. The market, however, did not share that opinion, propelling Microsoft to lofty heights and punishing Apple at the same time.

Predictably, this led the post-Jobs Apple to experiment with licensing its operating system to other manufacturers in an attempt to increase penetration. For two years in the mid-1990s, manufacturers from Bandai to Motorola were producing Mac clones with Apple’s blessing. The program was effectively killed by Jobs following his return in 1997, and to this day, Apple has never returned to a software licensing model, with rare exceptions such as CarPlay.

As during his original tenure, Jobs pursued an integrated software and hardware model as opposed to software alone. The results some 16 years after Jobs returned to the company he founded will not surprise current followers of the company. In May of 2010, Apple’s market capitalization surpassed that of its long-time nemesis Microsoft, and the one-time underdog has become instead the biggest player in an industry full of them.

Why did the Jobs strategy fail initially only to succeed years later? Some of it undoubtedly is attributable to execution at both companies; Microsoft was astute in parlaying its overwhelming success in one market (operating systems) into dominance in another (office productivity). Apple, meanwhile, stalled after investing without focus in markets from digital cameras to tablets (though it would obviously have the last laugh there). But the most important factor in the shift may have less to do with either company and more to do with the wider market context.

In the nearly two decades that Microsoft dominated Apple, the underlying software platform was an all-important consideration because of application lock-in. It was difficult for Apple’s operating system to achieve mainstream traction because it was perpetually caught in a chicken-or-egg situation with respect to applications. There weren’t enough applications for mainstream consumers to adopt the platform, and because there weren’t enough mainstream consumers, the incentive for application manufacturers to support the Mac along with Windows was low. Two important shifts altered the fundamentals of this market, enabling Apple’s ascent.

While the trend had begun considerably beforehand, the launch of Gmail and the initial public offering of Salesforce in 2004 together heralded the arrival of browser-based applications. It would take years for the default desktop application to become browser based rather than native, but the writing was on the wall. For would-be adopters of Apple’s hardware, the rise of browser-based applications was transformative. Because browsers supported the major available operating systems, it no longer mattered as much what the underlying operating system was. Serve your application up via a browser and it didn’t matter whether a user was on Windows, Mac, or even Linux. Coupled with Apple’s aesthetically superior user interface, this gave Apple new life in its battle against Windows hegemony.

Even still, the sheer inertia behind Windows would be difficult to overcome. So Apple instead applied innovative software and hardware to net new markets: MP3 players first, then smartphones, and finally tablets. In none of these markets did Apple license the software to outside manufacturers; the value instead accrued to the company that could combine unparalleled software design expertise with a growing competence in supply chain management (courtesy of its current CEO, Tim Cook). Interestingly, Apple’s strategy relied initially on the browser-based application delivery model before replicating, effectively, the native lock-in strategy Microsoft had previously leveraged to great effect against Apple. When the iPhone was launched in 2007, it launched without any ability for companies other than Apple to add applications to the platform. A year later (against Steve Jobs’ initial preferences, according to some reports) Apple announced the availability of its SDK. Billions of applications later, Apple now benefits from the same application investment protection that Microsoft once enjoyed with Windows.

The lesson here is simple: software is an important piece of the equation, but only a piece. It’s difficult to imagine Apple, for example, enjoying the same financial success that Microsoft enjoyed with Windows had it licensed the platform to third parties. Google’s Android project is the closest to replicating the Windows model in the mobile world, and indeed is now the volume leader over iOS in most markets, but Google has never tried to monetize the platform directly, and any such attempt would likely have prevented its current market dominance. Android is as valuable strategically as Windows was; it’s simply worth billions less in licensing fees.

Whether we’re looking at Apple or Google, then, the lesson is that software is once again a means to an end as opposed to an end in and of itself. Which is a profound statement about the commercial value of the software powering the devices that we all use today.

Atlassian

Founded in 2002 by Mike Cannon-Brookes and Scott Farquhar, Atlassian was a small Australian software company bootstrapped on $10,000 in credit card funding. By 2011, the private company announced its annual revenues had eclipsed $100 million. As of March 2014, the company had 35,000 organizations using its products and was valued by the private market at $3.3 billion.

This growth trajectory is impressive but hardly unprecedented by technology industry standards; at the same age, for example, Microsoft was worth over $20 billion, not adjusted for inflation. Atlassian has achieved this valuation, however, in spite of some potential strategic limitations.

Most famously, Atlassian doesn’t employ salespeople. This likely isn’t technically true, as the company reports that 15–20% of annual revenue is applied to sales and marketing resources. But there is no question that relative to its peers, Atlassian is substantially under-resourced with respect to salespeople. Instead, Atlassian has relied on clear, accessible pricing, bottom-up adoption, and developer-enabled word-of-mouth to progress from a two-person startup to an 800-strong, pre-IPO software vendor.

More interesting, however, was the nature of the product itself. When Atlassian was founded in 2002, selling strictly proprietary software was not unusual. Quite the contrary: Red Hat, one of the few commercial open source vendors at the time, had only been a publicly traded entity for three years. By 2014, however, it had become increasingly unusual for organizations not to make some portion of their portfolio open source for competitive, strategic, or simply practical reasons. Atlassian, however, has not chosen to leverage open source as part of its strategy, possibly because it hasn’t had to. Sales growth has clearly not been an issue, and the company has generated goodwill in a variety of communities due to its decision to make its software available for free for open source projects.

Growth aside, however, it’s difficult to imagine the company achieving its $3+ billion valuation without the October 2011 addition of OnDemand. Prior to its introduction, the majority of the Atlassian product catalog was made available as software-only, rather than as a service. This meant that the burden of installation, configuration, and maintenance was principally on the user. With OnDemand, Atlassian made virtually its entire product catalog available as a remotely hosted and managed service.

It has become increasingly important for all companies to be capable of delivering their software as a service, to cater to customers that prefer to consume it in that fashion. But this was even more true of Atlassian. Because its typical adoption cycle begins with an individual or small team acquiring one or more components, minimizing the friction of adoption is enormously important. SaaS is an ideal way to accomplish this, because it shifts the operational burden from user to vendor.

What this means for Atlassian moving forward is that if the observed downward trajectory of available commercial software licensing revenue in the wider market continues, the company is inherently hedged with its OnDemand line. Longer term, as well, the company stands to gain by having operational visibility into a growing percentage of its user base’s implementations. Instead of having its customers’ usage patterns of the software be opaque, Atlassian can collect and store telemetry on hundreds of thousands of running instances to inform its product planning and support, and potentially even retail the aggregated and anonymized data back to customers, thus creating a new revenue stream from what is essentially waste data today.

IBM

While it’s clear in retrospect that IBM misjudged the potential of the software market and left an enormous amount of value on the table for Microsoft, the technology giant did adjust its strategy over time. IBM Software Group (SWG) is the business unit principally responsible for its standalone software—and as of 2010, solutions—product lines, and while software accounted for only 26% of its 2013 revenue, it contributed 47% of its total profit. IBM’s long-term goal, in fact, is for the majority of its profit to be derived from its software business.

To get an understanding of how realistic that is, it’s worth looking at the software group’s revenue growth over the last five years. The Compound Annual Growth Rate (CAGR) for the period is just shy of 5% (4.92%), but if we look at the data by year, we can better evaluate actual trajectory, as shown in Table 4-1.

| YEAR | REVENUE GROWTH | PROFIT GROWTH |
|------|----------------|---------------|
| 2009 | -3.1% | 0.6% |
| 2010 | 4.84% | 1.37% |
| 2011 | 9.86% | 0.68% |
| 2012 | 1.98% | 0.23% |
| 2013 | 1.87% | 0.11% |
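To see how yearly growth rates relate to a single compound rate, the revenue column of Table 4-1 can be chained together. This is only a sketch: the helper name `compound_growth` is hypothetical, and the chapter’s 4.92% CAGR was presumably computed from underlying revenue totals that are not reproduced here, so the values below should not be expected to match it exactly.

```python
def compound_growth(yearly_rates):
    """Constant annual rate equivalent to a sequence of yearly growth rates.

    Each rate is a fraction (0.0484 means 4.84%). The rates are chained
    multiplicatively and the geometric mean is taken over the number of years.
    """
    if not yearly_rates:
        raise ValueError("at least one yearly rate is required")
    factor = 1.0
    for rate in yearly_rates:
        factor *= 1.0 + rate
    return factor ** (1.0 / len(yearly_rates)) - 1.0


# Revenue growth column of Table 4-1, expressed as fractions.
revenue_growth = {2009: -0.031, 2010: 0.0484, 2011: 0.0986, 2012: 0.0198, 2013: 0.0187}

all_years = compound_growth(list(revenue_growth.values()))
post_crisis = compound_growth([revenue_growth[y] for y in (2010, 2011, 2012, 2013)])
last_two = compound_growth([revenue_growth[y] for y in (2012, 2013)])

print(f"2009-2013: {all_years:.2%}")
print(f"2010-2013: {post_crisis:.2%}")
print(f"2012-2013: {last_two:.2%}")
```

Chaining the table’s rates this way gives roughly 3% a year across all five years and about 4.6% a year once 2009 is excluded, while the final two years compound at under 2%. A single multi-year figure can therefore hide how sharply growth has slowed.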

The decline in 2009 is best viewed as an anomaly, given the context of the global financial crisis that negatively impacted spending across the technology industry. This reading is supported by the fact that, as with many of its technology industry counterparts, IBM rebounded nicely for two consecutive years following the panic. Since that time, however, software revenue growth has visibly stalled. Certainly growth is preferable to declines such as 2009’s, but for a business division expected to be a major engine of growth for the company, it’s less impressive.

There are many potential explanations for the less-than-robust performance, but IBM’s current strategy suggests that one component at least is a challenge to the traditional shrink-wrapped software business.

As much as any software provider in the industry, IBM’s software business was optimized and built for a traditional enterprise procurement model. This typically involves lengthy evaluations of software, commonly referred to as “bake-offs,” followed by the delivery of a software asset, which is then installed and integrated by some combination of buyer employees, IBM services staff, or third-party consultants.

This model, as discussed previously, has increasingly come under assault from open source software, software offered as a pure service or hosted and managed on public cloud infrastructure, or some combination of the two. Following the multi-billion dollar purchase of SoftLayer, acquired to beef up IBM’s cloud portfolio, IBM continued to invest heavily in two major cloud-related software projects: OpenStack and Cloud Foundry. The latter, commonly referred to as a Platform-as-a-Service (PaaS) offering, may give us an idea of both how IBM’s software group is responding to disruption within the traditional software sales cycle and its level of commitment to that response.

Specifically, IBM’s implementation of Cloud Foundry, a product called Bluemix, makes a growing portion of IBM’s software portfolio available as a consumable service. Rather than negotiate and purchase software on a standalone basis, then, IBM customers are increasingly able to consume the products in a hosted fashion. And in case it wasn’t clear how serious IBM is about this direction, the company publicly committed to investing a billion dollars in the Cloud Foundry ecosystem. This is not a first for IBM; the company has committed a billion to projects before, most notably with Linux. But as a signal of intent, it is notable that the company is making a Linux-sized commitment to an application layer that is optimized for making applications portable between many types of environments, including on-premises to cloud.

Obviously some, perhaps even a majority, of the company’s customers will prefer to acquire and implement software in the traditional manner. But if IBM’s revenue fortunes are any guide, its network-enabled strategy can’t be rolled out quickly enough.

Nest

Five years ago, people who claimed that a company producing two products—and unloved products at that, in thermostats and smoke detectors—would exit for $3.2 billion would have been laughed at and publicly mocked. Today, they might instead have been regarded as witches, because that’s exactly what Google paid in the transaction that landed them Nest. How did the company accomplish this?

Most credit, at least in part, the design acumen of Tony Fadell, who is commonly regarded as one of the fathers of the original iPod, which indeed the Nest bears more than a passing resemblance to. Fadell, this argument goes, built a team that was able to emphasize aesthetics and usability, which when combined with intelligent software, produced a product unlike anything the market had seen before. It was an industrial device with legitimate aesthetic appeal.

The most important decision Nest ever made, however, might have been to become what Chris Dixon, an investor with Marc Andreessen’s Andreessen Horowitz venture firm, describes as a “full stack startup.” Nest could certainly have pursued a less ambitious and lower risk strategy of developing its learning software and user interface, then licensing it to organizations with greater logistical and manufacturing capabilities but less competence in software.

The problem with this approach is that, by sacrificing control of the stack, you introduce opportunities for partners and customers to negatively impact the overall offering. Consider the difference between a Curb, for example, and an Uber: the latter controls the experience top to bottom, and is thus generally able to deliver a superior experience. Dixon lists multiple areas of concern for startups pursuing less than a full stack approach:

- Bad product experience. Nest is great because of deep, Apple-like integration between software, hardware, design, services, etc., something they couldn’t have achieved licensing to Honeywell, etc.

- Cultural resistance to new technologies. The media industry is notoriously slow to adopt new technologies, so Buzzfeed and Netflix are (mostly) bypassing them.

- Unfavorable economics. Your slice of the stack might be quite valuable but without control of the end customer it’s very hard to get paid accordingly.

— Chris Dixon

This argument, then, is establishing a value for software that is dependent on context. As a standalone offering, the software that makes the Nest a class-leading device has value. But it is likely a fraction of the value that can be realized by combining that software with other components—hardware and backend services, in Nest’s case—to create an interesting and distinct whole. E pluribus unum, in other words.

This value is challenging to realize, of course. Operational excellence in categories above and around software is exponentially more difficult to achieve than in software alone. But the rewards are much greater, and in many cases, the Software Paradox may mean that would-be providers of software have no choice but to expand their product focus. Nor has this trend gone unobserved by investors; per the Wall Street Journal, “US venture capitalists completed a record 31 fundraising deals for consumer-electronics makers last year, eclipsing the previous high of 29 in 1999, according to DJX VentureSource. They pumped $848 million into hardware startups, nearly twice the prior record of $442 million set in 2012.”

Although the greater risk and challenge of a full stack startup may be necessary, it also offers the potential for much higher upside. In the margins on the actual product, yes, but also in under-realized and under-appreciated ancillary lines of business. In the case of Nest, this is data. As we’ll see later, this is by itself an enormous potential revenue engine.

Which is why it’s logical to expect this trend to continue as VCs and startups alike grapple with a shrinking outlook for software-alone startups.

Oracle

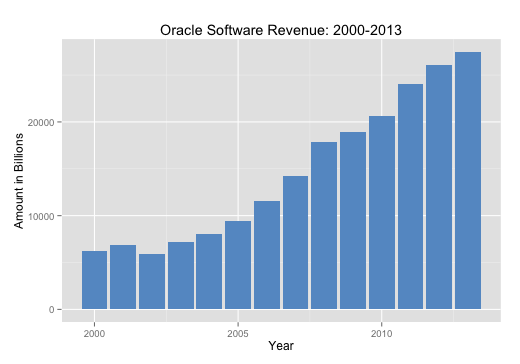

On paper, the story of Oracle’s software business is a success story. Not only has software, which accounted for 74% of the company’s revenue in 2013, built Oracle into one of the largest businesses in the world, it has demonstrated a consistent ability to grow revenue even amid challenging economic conditions. In every year of the last decade, Oracle has bettered its software revenue of the year prior. Revenue growth was more limited in some years than others, such as during the global financial crisis, but still present in spite of such catastrophes. The following chart depicts that growth.

This, for Oracle, is the good news. The bad news is that if one looks beneath the surface, there are questions about how sustainable this growth is over the longer term.

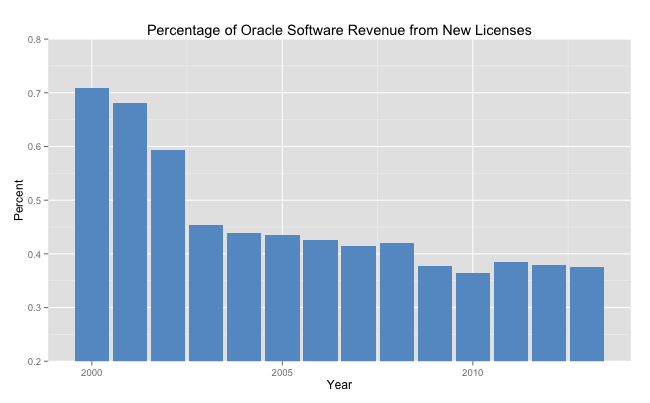

To a degree unusual among large technology organizations, Oracle provides detailed financial information. Among other distinctions, Oracle makes available to the public the precise breakdown in revenue between what it terms “new software licenses and cloud software subscriptions” (note: the inclusion of “cloud software subscriptions” is new as of 2013) and “software license updates and product support.” What this means in practical terms is that we can attempt to discern how much of Oracle’s revenue derives from new customers versus its monetization of existing customers, and what this trajectory looks like over time.

In its 2013 financial year, for example, Oracle’s filings state that $10.3 billion of its $27.5 billion in total software revenue came from the sale of new licenses and, in a new development, “cloud software subscriptions.” But how does this number compare to Oracle’s past history in the sales of new licenses? Unfortunately for the company, the answer is: not well.

In the year 2000, about 71 cents of every dollar Oracle generated from software revenue came from the sale of a new license. As of 2013, that figure was down to less than 38 cents. Which means that in less than 15 years, Oracle’s software revenue has shifted from almost three quarters from new licensing to just over a third. One logical explanation for this is that basic arithmetic says that growth is hard to scale. While it’s simple for a startup to double its revenues, for example, it is not realistic to expect a company of Oracle’s size to accomplish the same feat. But attributing Oracle’s decreasing ability to sell new perpetual licenses strictly to the revenue plateaus large companies inevitably face as their market becomes saturated would be a mistake. In the year 2000, when it was still posting new licensing figures above 70%, Oracle had already been selling databases for well over two decades.
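The “cents of every dollar” framing is a simple ratio, sketched below for the 2013 figures quoted above. The function name `new_license_share` is hypothetical, and the inputs are the rounded totals from the text (in billions of US dollars), not values pulled from Oracle’s filings directly.

```python
def new_license_share(new_license_revenue: float, total_software_revenue: float) -> float:
    """Fraction of software revenue that comes from new licenses and subscriptions."""
    if total_software_revenue <= 0:
        raise ValueError("total_software_revenue must be positive")
    return new_license_revenue / total_software_revenue


# Fiscal 2013 figures quoted in the text, in billions of US dollars.
share_2013 = new_license_share(10.3, 27.5)
updates_and_support_2013 = 1.0 - share_2013

print(f"New licenses:        {share_2013 * 100:.1f} cents of every software dollar")
print(f"Updates and support: {updates_and_support_2013 * 100:.1f} cents of every software dollar")
```

Run against the 2013 totals, the ratio comes out just under 38 cents, which matches the figure in the text; the remainder is revenue from existing customers paying for updates and support.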

Oracle’s decision in 2005, after two years of major drops in new license sales, to sell all-you-can-eat Enterprise Licensing Agreements clearly and demonstrably accelerated its revenue growth. But this somewhat artificial growth came with a catch; the audit teams that are a product of the ELAs are a constant reminder for customers to consider other, less costly (and unmonitored) alternatives, further depressing the sales of new licenses. Which means that growth must come from an ever-shrinking pool as customers driven by costs, functionality, or both aggressively examine other options. Even for a company able to consistently and impressively generate revenue growth from the sale of software, this is a problematic trend.

While the causal mechanisms are more difficult to prove, it’s certainly plausible that this trend in the area of its business that makes the largest contributions to its revenue pool is contributing to Oracle’s repeated misses of financial estimates over the last two years. Even if it’s not directly responsible, it certainly is not helping to mitigate the difficulties Oracle is having competing in a rapidly changing landscape. At the very least, it’s a more plausible explanation than Oracle’s famously aggressive sales force having suddenly been afflicted with what CFO Safra Catz characterized as a “lack of urgency” in March of 2013.

The irony of the Software Paradox for Oracle is that on paper, the company should be booming. As hundreds of new applications come online and struggle to scale to meet the demand of millions of new users, the opportunities for a battle-tested, production-quality database should be virtually limitless. But in a market where commercial software is worth less than it once was and customers have lower cost and more available options, you’d expect to see stalling license sales and a decreased ability to meet analyst expectations. What we’re seeing at Oracle, in other words.

To its credit, the company is responding to some of the more notable disruptive challenges it’s facing. By acquiring Sun Microsystems in January 2010, Oracle, the owner of the most successful proprietary database in the world, acquired ownership of the trademark, copyright, and significant development resources of the most widely used open source relational database in the world, MySQL. This gave it visibility into, and to some extent ownership of, the open source relational database market that had the theoretical ability to impact its flagship database product. More recently, the company has been aggressively bolstering its cloud portfolio through acquisition. Since October of 2011, the list of companies Oracle has acquired for their cloud or SaaS-related business model includes the following:

- BigMachines

- BlueKai

- ClearTrial

- Collective Intellect

- Compendium

- Corente

- DataRaker

- Eloqua

- Instantis

- Involver

- Nimbula

- RightNow Technologies

- SelectMinds

- Skire

- Taleo

- Tekelec

- Vitrue

- Xsigo Systems

If acquisition patterns can be assumed to be a manifestation of strategy, the narrative emerging from Oracle is quite clear: cloud and SaaS are where the dollars are going, and Oracle needs to get there as quickly as possible.

Salesforce

Salesforce’s toll-free contact number today is 1-800-667-6389. One potential alphabetical translation of that (the one the company features prominently on its website, in fact) is 1-800-NO-SOFTWARE. For a decade now, Salesforce has been investing substantial portions of its marketing budget in campaigns, conferences, and messaging about the idea of “no software.” Taken literally, this assertion is absurd. Without software, of course, there is no Salesforce: it’s not as if the company makes a tangible, physical product. Based on adoption rates of the service, however, and attendance at its conferences, the average customer seems perfectly fine with a message of no software.
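The translation between the number and the slogan can be checked with the standard telephone keypad layout. The sketch below is illustrative only: the function names are hypothetical, and it assumes that only the first seven digits after the 1-800 prefix are actually dialed, with the letters beyond that ignored.

```python
# Standard telephone keypad letter groups.
KEYPAD = {
    "2": "ABC", "3": "DEF", "4": "GHI", "5": "JKL",
    "6": "MNO", "7": "PQRS", "8": "TUV", "9": "WXYZ",
}
LETTER_TO_DIGIT = {
    letter: digit for digit, letters in KEYPAD.items() for letter in letters
}


def vanity_to_digits(text: str) -> str:
    """Replace each letter with its keypad digit; leave other characters alone."""
    return "".join(LETTER_TO_DIGIT.get(ch, ch) for ch in text.upper())


def dialed_number(text: str, prefix: str = "1800") -> str:
    """Return the seven digits dialed after the toll-free prefix, as NNN-NNNN."""
    only_digits = "".join(ch for ch in vanity_to_digits(text) if ch.isdigit())
    if not only_digits.startswith(prefix):
        raise ValueError(f"expected a number starting with {prefix}")
    local = only_digits[len(prefix):len(prefix) + 7]
    return f"{local[:3]}-{local[3:]}"


print(dialed_number("1-800-NO-SOFTWARE"))
```

Under those assumptions, the slogan maps back to the 667-6389 number given in the text.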

What Salesforce is capitalizing on with this campaign, in part, is the appalling failure rate of on-premises implementations of Customer Relationship Management (CRM) software packages. In a piece for ZDNet from 2009, Michael Krigsman collected these varying analyst estimates for the percentage of failed CRM projects:

- 2001 Gartner Group: 50%

- 2002 Butler Group: 70%

- 2002 Selling Power, CSO Forum: 69.3%

- 2005 AMR Research: 18%

- 2006 AMR Research: 31%

- 2007 AMR Research: 29%

- 2007 Economist Intelligence Unit: 56%

- 2009 Forrester Research: 47%

The estimates range widely, from 18% to 70%, but taken together they suggest that something close to every other CRM customer failed to implement their software successfully. Definitions of failure vary, clearly, and undoubtedly some functional implementations were considered failures for what might be non-issues for other customers. Still, it’s not difficult to understand why Salesforce would choose to go to market with a message of “no software”: CRM software was not then, nor is it now, popular.
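To see how the “every other customer” characterization relates to the estimates listed above, the following sketch summarizes them with simple statistics. It treats each survey as equally weighted, which is an assumption: the surveys differ in methodology, sample, and definition of failure, so the result is only a rough summary.

```python
from statistics import mean, median

# Failure-rate estimates (percent) as collected by Krigsman, keyed by year and source.
FAILURE_RATES = {
    "2001 Gartner Group": 50.0,
    "2002 Butler Group": 70.0,
    "2002 Selling Power, CSO Forum": 69.3,
    "2005 AMR Research": 18.0,
    "2006 AMR Research": 31.0,
    "2007 AMR Research": 29.0,
    "2007 Economist Intelligence Unit": 56.0,
    "2009 Forrester Research": 47.0,
}

rates = list(FAILURE_RATES.values())

# Unweighted summaries: every survey counts equally (an assumption).
mean_rate = mean(rates)
median_rate = median(rates)
lowest, highest = min(rates), max(rates)

print(f"Mean:   {mean_rate:.1f}%")
print(f"Median: {median_rate:.1f}%")
print(f"Range:  {lowest:.1f}% to {highest:.1f}%")
```

Both the mean and the median land in the high 40s, even though individual estimates run from 18% to 70%.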

What if, however, there were no software to implement, just a service to consume? Pedants might argue with the semantics of the terminology, but it cannot be denied that there is a material difference between traditional shrink-wrapped CRM software and the browser-based alternative Salesforce and other properties are built upon.

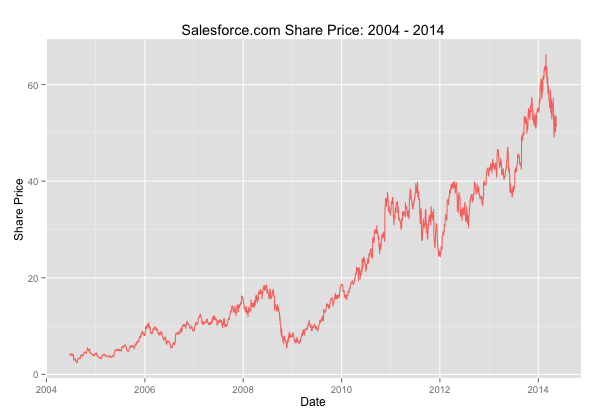

How has this model performed over time? From a bottom line perspective, it’s consistently been a money loser. In each of the last three financial years, Salesforce generated no profits, and its losses actually doubled in fiscal year 2014. Based on these facts alone, it would be reasonable to expect the market to have punished Salesforce. Instead, it has consistently rewarded it. Consider the following chart:

What possible explanation is there for the underperformance in profit and outperformance in share price? It’s difficult to infer market intent with any degree of precision, of course, but it seems reasonable to assume that investors are simply applying a different set of expectations to Salesforce than to the wider market. Much as is the case with Amazon.

It is often said, with a great deal of justification, that the market is tremendously shortsighted. This lack of patience, in turn, manifests itself as companies that manage themselves from quarter to quarter and thus have difficulty occasionally taking one step back in order to take two forward. This is, in many respects, a foundation of Clayton Christensen’s Innovator’s Dilemma. Microsoft might have been best served by cannibalizing its own operating system business, for example, to competitively respond to the disruptions from the public cloud. This is enormously difficult to do in practice, however, because it runs counter to the market’s expectation for consistent profit and reward.

In both Amazon and Salesforce, however, the market appears to be taking a longer view. Recognizing that the addressable market for each is in part a function of scale, investors have to date been willing to trade current profit for revenue growth and capital investments aimed at building for the longer term. How long this will continue is unclear, but it’s a multi-year trend at this point. Fred Wilson of Union Square Ventures discusses the company’s strategy from an investor’s perspective as follows:

The lesson here is that you can’t just value a company by taking its current performance into account. You really need to have a view towards its future performance. And you need to understand why the company is not currently profitable….

In the case of Salesforce…they are making huge investments in sales and marketing to secure additional customers. They are also making significant annual investments in R&D to maintain the market leadership of their existing products and bring new ones to market. If you think that Salesforce…can continue to grow their revenues at or near their current growth rates, then you ignore the current P&L and think about what a future P&L might look like.

— Fred Wilson

This special treatment is an important and under-appreciated competitive advantage for the company. As IBM’s senior vice president, Steve Mills, said in an interview with GigaOm’s Derrick Harris, “We don’t have permission to not make money. We’re not Amazon.” Setting the implied sarcasm aside, this is an important point.

Responding to the Software Paradox is, for many companies, likely to be an expensive proposition. Developing software is an expensive business to be in, but developing it and delivering it as a service is that much more so.

Those companies that have market permission to invest in the necessary operational scale at present have an important advantage over those that are slaves to quarterly results, because sacrificing profit now for opportunity later may pay big dividends as the market for software as a standalone entity continues to decline.

VMware/Pivotal

It was 2006 when Amazon launched what would later come to be known as the Infrastructure as a Service (IaaS) market. IaaS made the basic building blocks for a wide range of workloads, compute and storage, available in an on-demand fashion. While IaaS offered users fine-grained control of their infrastructure, it did little to offload the operational workload from them. Perceiving an opportunity among customers who were willing to trade control for the convenience of offloading management tasks, Salesforce launched its Force.com Platform as a Service (PaaS) offering a year after EC2, and Google followed seven months later with App Engine. Both of these products were aimed at users who wanted to deploy applications without worrying about the operational details. For all of their promise of lower operational overhead, the products failed to see the traction that many expected. Particularly when measured against the meteoric rise of Amazon Web Services, growth within the PaaS category was anemic.

Not everyone had given up on the category, however. Paul Maritz, then CEO of VMware, hired Mark Lucovsky away from Google and put him together with Derek Collison and Vadim Spivak to build, in Lucovsky’s words, “something in cloud, for developers.” The result of these efforts was Cloud Foundry, software intended to offer many of the same features that Google App Engine and Force.com offered.

When launching Cloud Foundry—what Maritz has termed “the 21st-century equivalent of Linux”—VMware made two important operational decisions. The first and most obvious was to release the project as open source, specifically under the permissive Apache license. This is an important decision in the abstract, but even more so given the context that it was created by VMware, a company whose financial fortunes were and are largely dependent on the sale of closed source, proprietary software. According to a November 2011 interview in Wired, this decision was driven by Collison and Lucovsky, and the ramifications are interesting to consider.

The biggest impact of the decision to open source the software, and later create an independent foundation around the asset, is that erstwhile competitors like IBM and VMware can collaborate with one another on the project. Reducing the friction associated with adoption of Cloud Foundry, meanwhile, has unquestionably fueled its rapid growth.

Less heralded than its open source availability, however, was the decision to make available a hosted version of the product from day one. Understanding, perhaps, that merely making the source code available is increasingly insufficient in a world in which the cloud has set an expectation of near-instant provisioning, VMware and subsequently Pivotal chose to invest in the infrastructure necessary for users to at least tinker with the software, if not host production applications.

It is a statement indeed when one of the largest providers of proprietary software creates a piece of software it compares in significance to the Linux kernel and chooses not only to release it as open source, but to work with competitors to improve it. To recognize that software’s commercial value may have changed from the principles the company was founded on, and to act on this, is impressive. To go one step further and combine the software with services to ease adoption is even more so. Both are suggestive of an organization that understands the Software Paradox, and is actively adjusting to it.