Science fiction author Isaac Asimov famously devised the Three Laws of Robotics, first introduced in his 1942 short story ‘Runaround’. Asimov also coined the term ‘robotics’, and his laws formed the underlying basis for his ongoing robot-based fiction. Intended as a safety feature, they cannot be bypassed by the robots within these stories. The laws have been altered and elaborated upon over the years, but the basic versions are as follows:

1. First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

In later fiction, Asimov even added another preceding law, a Zeroth Law, to account for the robots responsible for governing whole planets and human civilisations, and interacting in human societies to a far greater extent than previously written. This additional law is:

0. Zeroth Law: A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

This basic framework, made to ensure robots served humanity and not the other way around, was very clever for its time. Asimov imagined a future world in which robots were commonplace, and created these laws before rudimentary forms of artificial intelligence – or even the first PC – existed. They did, however, also embody one fundamental misconception: that robotics always comes packaged with AI, and vice versa.

Before we go any further, we need to sort out what constitutes a robot and what the difference is between a robot and AI. To make it even more fun, definitions vary wildly between experts and sources. But to me, a robot is an autonomous, semi-autonomous or remote-controlled device or machine that performs tasks otherwise done by people, one that is able to sense, compute and act.

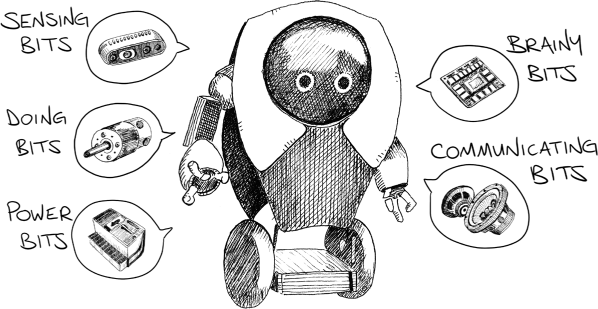

I’ve come to think of a robot as being made up of five common bits (although a robot doesn’t necessarily need all of them, and they vary broadly from robot to robot).

1. The sensing bits

Sensors that absorb information about the environment – these are the inputs

2. The brainy bits

Computation and control systems – whether intelligent or not

3. The doing bits

Various movers, actuators, manipulators, propulsion systems, end effectors – these are the outputs

4. The communicating bits

From simple speakers and microphones to wireless communication systems – these help the robot communicate and be communicated with

5. The power bits

Often in the form of batteries, these systems provide the energy so all other bits can do their thing.

Figure 1: Five common robot bits (RB-5)
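To make the sense, compute and act idea a little more concrete, here is a minimal sketch of the five bits working together in a pretend wheeled robot. Everything in it is an assumption made for illustration: the name `BumpBot`, the 20-centimetre turning threshold and the battery costs are invented, and no real robot or robotics library is being described. Note that the brainy bit is a single fixed rule, so this robot has no AI at all.

```python
from dataclasses import dataclass, field


@dataclass
class BumpBot:
    """A hypothetical wheeled robot built from the five common bits (no AI)."""

    battery_pct: float = 100.0                # the power bits
    log: list = field(default_factory=list)   # messages the robot has sent

    def sense(self, distance_cm: float) -> float:
        # The sensing bits: a real robot would read a distance sensor here.
        return distance_cm

    def compute(self, distance_cm: float) -> str:
        # The brainy bits: one fixed rule (assumed threshold of 20 cm).
        return "turn" if distance_cm < 20.0 else "forward"

    def act(self, command: str) -> None:
        # The doing bits: pretend to drive the motors, which costs energy.
        self.battery_pct -= 2.0 if command == "turn" else 1.0

    def communicate(self, command: str) -> str:
        # The communicating bits: report what the robot just did.
        message = f"{command} (battery {self.battery_pct:.0f}%)"
        self.log.append(message)
        return message

    def step(self, distance_cm: float) -> str:
        # One pass of the sense -> compute -> act loop.
        if self.battery_pct <= 0:
            return self.communicate("stopped")
        command = self.compute(self.sense(distance_cm))
        self.act(command)
        return self.communicate(command)
```

Calling `BumpBot().step(100.0)` drives forward, while a reading of `5.0` makes the robot turn. Swapping the fixed rule in `compute` for something that learns is where AI would come in, and the other four bits would not need to change.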

Their form (how they look), function (what things they can do) and dexterity (how fancily they do all those things and how difficult those things are), change from robot to robot, so starting with a solid purpose is a good way to figure out how all the rest of these building blocks will play out.

Another couple of quick definitions before we go on. An android is an artificial human, a robot made to look as human as possible – nigh indistinguishable; while a humanoid is simply a machine whose shape is inspired by the human form – you won’t be tricked, it’s clearly a robot.

As for the difference between a robot and AI, let’s think of the robot as the body and AI as the mind. Sure, they may often go hand in hand, and the presence of AI can enhance a robot’s capabilities, potentially to unfathomable heights. But robots can be and have been very useful without AI, and likewise AI has a vast range of uses without the physical manifestations of robotic bodies.

Asimov’s laws are still often referred to today, as robotics and AI agendas mature and more of these discussions pervade our day-to-day lives. While Asimov’s laws clearly combine both AI and robotics, and assume robots have some form of understanding of the world around them, some of the robots discussed in this part of the book have AI and some do not.

The etymology of the word ‘robot’ dates back to the early twentieth century, when Czech playwright Karel Čapek presented a uniquely futuristic play called R.U.R., or Rossum’s Universal Robots. The word ‘robot’ was based on the Slavic-language term robota, which basically translates to ‘forced labour’. In this play, robots were created to serve humans, but over time adopted their own ideologies and attempted to overthrow them through extermination . . . Bad homicidal robots, bad! Our understanding of the term ‘robot’ has changed since then, and more recent discussions edge closer to these entities even developing into human companions, which is clearly a big promotion from forced labour. Where a great level of fear lies is in the idea that they may one day, through sentient-like AI, promote themselves to human overlords, as has been the case in many sci-fi stories since R.U.R. – in which case we’re all just doomed . . . doooooooooomed!

Really, though, it’s more likely we’ll make friends with our emerging non-biological counterparts than be destroyed by them. But that’s also partly due to how we as humans have evolved what we want and need from these entities. Our understanding of robots has changed over time. These days the key defining factor tends to be automation. They are mostly seen as machines of some form that can provide assistance or even take over physical tasks – of a heavy, strenuous, dangerous, menial or repetitive nature. In more recent times, however, they’re not just automating these physical tasks for efficiency. They’re also starting to be used in roles where there’s a deficit of human physical entities, such as in aged care, when people can’t always be present to keep residents company.

Early signs of people thinking about creating machines that exhibit forms of human characteristics or intellect date as far back as Ancient Greece, with their ideas of robotic men. Greek philosopher Aristotle wrote, ‘If every tool, when ordered, or even of its own accord, could do the work that befits it . . . then there would be no need either of apprentices for the master workers or of slaves for the lords.’ In the late fifteenth century, Leonardo da Vinci designed the first recorded ‘humanoid robot’ – a robot that takes on human form – with his complex mechanical Automaton Knight, operated by pulleys to give it the ability to sit up and stand, move its arms independently and even raise its visor. Although no one knows if this was ever actually built, da Vinci’s technical design notes (whose style I just love) have been used in recent years to build the knight and it functions completely as Leonardo intended. An astounding feat of technical brilliance for the time.

In examining the history of robots, we need to look at both fictional and real-life approaches, as each has influenced the other. In 1881, Italian author Carlo Collodi introduced Pinocchio, a story about a wooden marionette, made by a woodcarver named Geppetto, whose wish to become a real boy is granted by a fairy. It wasn’t exactly a robot story, but the theme of a human-made creation desiring life has woven its way through many robot-centred stories ever since. Back in the real world, at Madison Square Garden in New York in 1898, Nikola Tesla demonstrated a new invention he called a ‘teleautomaton’ – a radio-controlled boat. He was so far ahead of his time that his audience believed it to be a trick. Years would pass before this technology became more commonly used, such as in remote-controlled weapons in World War I during the 1910s. Fictional robots appeared in silent film in 1927 with the release of Metropolis, based on a novel from a few years earlier. Set in a dystopian urban future, the film featured an iconic humanoid female robot, Maschinenmensch (German for ‘machine-human’), who takes the form of a human woman, Maria, in order to destroy a labour movement. Again with the destroying!

Around this same time, the Westinghouse Electric and Manufacturing Company built the first real humanoid robot, called Herbert Televox, albeit a flat cut-out human shape over mechanical parts. This robot could pick up a phone receiver to answer incoming phone calls, with a few basic words and actions added over time. Not long after the Herbert Televox, Japan also saw its first robot, the Gakutensoku (Japanese for ‘learning from the laws of nature’) created in 1929. He could move his head and arms, write with a large pen and change facial expressions – and the country has continued to have a great interest in robotics ever since.

But the company that built Herbert Televox did not stop there either, bringing to life newer designs, including Elektro the Moto-Man in 1937. This humanoid built of aluminium and steel could perform a range of routines – walking, talking, moving his head and arms, and even smoking cigarettes and blowing up balloons, just to be super useful. The 1930s also saw early designs of big arm-like industrial robots being used in production lines in the United States.

Slot in here Asimov’s Three Laws of Robotics from 1942. Following this, in 1945 Vannevar Bush predicted the rise of computers, data processing, digital word processing and voice recognition, among other developments, in his essay ‘As We May Think’. American mathematician and philosopher Norbert Wiener in 1948 defined the futuristic term ‘cybernetics’ as ‘the scientific study of control and communication in the animal and the machine’ – a broad field advancing our understanding of how biological entities like humans and animals can connect, control and communicate with machine entities such as computers and robotics.

In 1949, American computer scientist and popular writer Edmund Berkeley published a hugely popular book, Giant Brains or Machines That Think. Just two years later he released Squee, a wheeled metal and electronic robot ‘squirrel’ that used two light sensors and two contact switches to hunt for nuts (actually tennis balls) and drag them back to its nest. It was the first of its kind . . . squeeeee!

The now well-known Japanese manga character Astro Boy first appeared in the early 1950s. This young boy android with human emotions was created in the various versions of the story by the head of the Ministry of Science following the death of his son. Originally written and illustrated by Osamu Tezuka, the Astro Boy stories have taken on many storylines – depicting a futuristic world where robots and humans co-exist. This may also have played a role in the Japanese cultural approach to robots, which they most often regard as helpmates and human counterparts.

George Devol invented Unimate in 1954, the first digitally operated and programmable industrial robot. It was first sold to General Motors to assist in car manufacturing in 1960 and put into use the following year, lifting hot pieces of metal from a die-casting machine and placing them in cooling liquid. Devol patented the technology and the product was the basis for him and Joseph Engelberger founding the world’s first robotics company in 1962, called Unimation. Electronic computer-controlled robotics soon followed as others built new ideas, and wars including the Vietnam War became testing grounds for smart-weapon systems.

And now to 1977, the year George Lucas released the epic film Star Wars (later renamed Episode IV: A New Hope), the first of what would quickly become a global pop-culture sensation. The franchise is set ‘A long time ago in a galaxy far, far away’, where humans and several species of aliens co-exist, along with robots known as ‘droids’, and where hyperspace technology makes space travel possible. Two droids, among the most recognisable robot characters ever created in science fiction, instantly carved their way into human hearts – R2-D2, a smart, cheeky droid like a bin with wheels, and C-3PO, a polite, fussy, intelligent and worry-prone metallic humanoid droid harking back (in form not character) to the Maschinenmensch of Metropolis. Their personalities inspired a new wave of ideas about what robot companions could one day become.

Moving on to 1993, an eight-legged, spider-like robot named Dante, made by Carnegie Mellon University and remotely controlled from the United States, was deployed to Antarctica in an attempt to explore the active Mount Erebus volcano. This tethered walking robot, designed for a real exploration mission and capable of climbing steep slopes, demonstrated rough terrain locomotion, environmental survival and self-sustained operation in the harsh Antarctic climate. Although not everything with Dante went quite as hoped, and it didn’t reach the bottom of the crater or collect any gas samples, it still partially demonstrated that a remote-controlled robot could carry out a credible exploration mission, and the landmark effort ushered in a new era of robotic exploration of hazardous environments.

Only a few years later, in 1997, Sojourner, the first robotic planetary rover, successfully landed on the surface of Mars aboard the Mars Pathfinder lander. Unlike landers, which cannot move away from the landing site, Sojourner managed to travel a distance of just over 100 metres before communication was lost three months later. It featured six wheels, three cameras (two monochrome – single-coloured – cameras in the front and a colour camera in the rear), solar panels, a non-rechargeable battery and other hardware instruments to conduct scientific experiments on the surface of the red planet.

Since then, the robotics industry has diversified into many new waves of products and designs. These range from fuzzy, bat-like, gibberish-to-English-speaking, evolving Furby toys to Honda Motor Company’s walking, stair-scaling, interacting humanoid; from robotic vacuums like Roomba to later Mars rovers like NASA’s Spirit and Opportunity; from driverless vehicles to Robonaut becoming the first humanoid in space; from Sophia becoming the first robot with citizenship and Boston Dynamics showcasing a wide range of incredibly dexterous robots to the widespread use of commercial and industrial robots for tasks considered too dull, dirty, difficult or dangerous for humans. Explorations in robot design have seen them become giant, smaller all the way down to the nanoscale, more intricate, dexterous and precise, softer, lighter, aerodynamic, waterproof, human-like and even social. And all these developments will only continue to advance and broaden in use and function.

However, all of these applications raise numerous ethical questions. How will robots assimilate into our society? Who will be at fault if an accident occurs with, say, a self-driving car? What are the ethical and moral implications of robots being able to uphold social connection?

The big challenge in any type of framework for robotics or AI is that Asimov’s laws – or any other rules we wish to impose – are described in a human language and are thus open to individual subjective interpretation. Basically, these rules are incredibly difficult to program for a variety of reasons, including the fact that even a group of humans may not agree on what the laws could mean in any given situation. Let’s say we’re discussing autonomous vehicles, which brings up the dilemma of human morality encapsulated in the trolley problem. This famous thought experiment describes scenarios along the following lines.

A runaway tram/trolley is hurtling down the track and cannot be stopped. Straight ahead of the trolley on the track are five people, all of whom are about to get wiped out. Before the trolley reaches them, however, there is a side-track that could divert the trolley away from them, but that would kill one unaware person who is chilling out on this alternative track. This turn can be made simply by pulling a lever. In this scenario you have randomly found yourself as an onlooker some distance away from the tracks, and said lever is right in front of you! There’s no time to warn anyone, just to act – or not. What do you do? Do you let the trolley kill the five people it’s already headed towards? Or do you make the conscious decision to pull that lever, saving the five yet killing one?

British philosopher Philippa Foot first introduced this kind of thought experiment in a 1967 paper entitled ‘The Problem of Abortion and the Doctrine of the Double Effect’. The dilemma has taken on many forms since, making appearances in pop culture (such as in the film I, Robot, where robots make choices in similar situations), a range of analyses and philosophical adaptations and even in patents on decision-making for driverless vehicles. A scenario commonly debated in recent times is what should happen in the event of a collision involving a driverless vehicle.

In 2017 I took a ride in a NIO EP9, the world’s fastest electric hypercar at the time. With Macanese motor-racing driver André Couto – who instantly became a friend – behind the wheel, we flew around the Shanghai International Circuit. I’ve never felt anything like the G-forces, or my complete disbelief in the fact that we were still on the road when taking corners with such speed and every part of me felt we should have been flipping right off the track. It was an amazing feat for electric vehicles and, impressively, earlier that year an autonomous version of the EP9 shot through a record-breaking lap of the Circuit of the Americas, travelling up to 256 kilometres per hour and completing it flawlessly, setting a new tone for the potential of autonomous driving.

But let’s apply the trolley problem to an autonomous vehicle. What if it suddenly has to make a choice to swerve to avoid five pedestrians who have ended up in front of it that it hasn’t previously sensed, and in doing so it will kill a pedestrian on the street? In this unlikely yet possible scenario, Asimov’s laws are very difficult to go by. Firstly, the robot in this scenario actually has no choice but to injure or harm a human being – in fact, it is instantly being forced to break the First Law. Secondly, even if we were given details on each of the people in this situation, unless in truly extreme cases – such as Princess Diana versus a serial killer – we’d likely be unable to reach consensus as a small group, let alone a society, as to who should survive. And finally, even if we did somehow have consensus, we’re also assuming that the vehicle is somehow able to recognise and obtain all this information in the fraction of a second it might have to make this decision, which is absolutely possible with the speed of modern computing. A solid framework to adhere to is difficult. And the extensive, all-possible-scenarios decision-making process definitely shouldn’t fall on the shoulders of the software engineers who wrote the code. So who makes these decisions and why is it such an unnerving conversation to have in the first place? Well, it’s because of the double effect, invoked by Philippa Foot to explain the permissibility of an action that can cause harm or death, as a side effect of promoting some seemingly good end. We find it strange that objectively we can decide ahead of time that taking one life to save five makes sense, but subjectively (and emotionally) we know we’re making a decision that could one day preserve or take life, and that intrinsically does not feel right to many people.
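The first point above, that the vehicle is forced to break the First Law whichever way it turns, can be sketched in a few lines. This is only an illustration under generous assumptions: the `Option` fields, the function names and the idea that the number of people harmed is known exactly and in advance are all invented here, and working out those numbers is precisely the part that is hard in real life. It is not how any actual vehicle makes decisions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One possible action, with its outcome assumed to be known in advance."""

    name: str
    humans_harmed: int            # ASSUMPTION: known exactly beforehand
    disobeys_order: bool = False  # would break the Second Law
    damages_robot: bool = False   # would break the Third Law


def first_law_permits(option: Option) -> bool:
    # First Law: no human may be harmed, by action or by inaction.
    return option.humans_harmed == 0


def choose(options):
    """Pick an option by strict law priority, or None if all break the First Law."""
    permitted = [o for o in options if first_law_permits(o)]
    if not permitted:
        return None  # the laws offer no answer here
    # Among permitted options, the Second Law outranks the Third.
    return min(permitted, key=lambda o: (o.disobeys_order, o.damages_robot))


def fewest_harmed(options):
    # One possible extra rule (not part of Asimov's laws, and contested).
    return min(options, key=lambda o: o.humans_harmed)


everyday = [
    Option("brake hard", humans_harmed=0, damages_robot=True),
    Option("carry on", humans_harmed=1),
]
trolley = [
    Option("stay on course", humans_harmed=5),
    Option("swerve", humans_harmed=1),
]
```

In the everyday case `choose(everyday)` picks ‘brake hard’, because protecting humans outranks protecting the vehicle. In the trolley case `choose(trolley)` returns `None`: every option harms someone, so the laws alone are silent. Getting an answer needs an extra rule such as `fewest_harmed`, and choosing that rule is exactly the decision we struggle to agree on.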

This does, however, raise an important ethical challenge Asimov started to address long ago. With incredible foresight, he knew one day we would need to design ethical frameworks and draw up laws to govern the implementation of evolving technology like robotics. These would be necessary to ensure safety to humanity, but even in his own writing robots find ways to defy the laws.

To this day, Asimov’s laws in the field of robotics are still frequently referred to and well known. Maybe it’s time to rethink these laws, not just limiting them to robotics, and hopefully even finding ways of democratising the decision-making process. Let’s raise discussions to have a say in how these technologies should be governed, because regulatory bodies can struggle to wrap their heads around what’s here now, let alone plan for what’s yet to come.

The field of robotics has progressed in leaps and bounds over the decades. Robots have typically been designed for duties such as repetitive physical tasks, heavy-duty work and precision procedures, so we see robots on production lines, in medical procedures and in freight logistics. These days, however, robots are slowly starting to infiltrate many more aspects of our lives. Coupled with various types and levels of AI they are now moving into making and acting on decisions, facilitating automated transportation, helping in physical rehabilitation and even providing humans with companionship.

Robots are starting to traverse uncharted territory in human interaction. While autonomous robots with human-like, extensive capabilities are still only being approached step by step, European lawmakers, legal experts and manufacturers have for some time been debating their future legal status: whether these machines or human beings should bear ultimate responsibility for their actions.

I mean, is it too big a leap of the imagination to envisage a day when robots are given rights? What if they become intelligent and independent learners, show awareness and understanding of themselves and others, and even develop an ability to empathise? By that stage, who are we to then say a robot shouldn’t have rights? This is definitely a debate we’ll visit more frequently in the years to come. With the humanoid robot Sophia, made by Hong Kong–based company Hanson Robotics, being the first robot to ever be granted citizenship – by Saudi Arabia in 2017 – it seems we have also begun exploring these possibilities. Maybe by the time robots reach later stages of such rights, they will more easily understand Asimov’s laws, for which he clearly assumed a very advanced set of cognitive capabilities to reason through them. Either way, we know they’ll remain iconic. Despite future robotic rights being a topic of debate, our world should definitely address and resolve human rights and inequalities first and foremost.

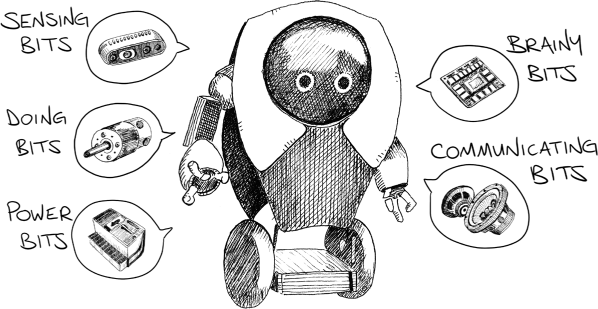

I believe we should have a way of classifying robots based on their purpose, to help us understand where all these entities sit and what they can do. If we bucket them all in together then it would become the equivalent of doing a case study on a few predator species of fish and then concluding that all fish must be killers. Similarly, robots can be ethically questionable according to laws such as Asimov’s, for example, in military applications, and more clearly beneficial in others, such as those used in medical surgery or space exploration.

To help gain an overarching picture of the purposes of robots, I’ve put together A Human’s Guide to Five Robot Purposes (RP-5). These purposes span anything considered robotic, going beyond the traditional android idea and including some types like space probes, which you may or may not have previously thought of as robots. All robot types and purposes can be placed into these five broad categories; some may even traverse a few, but their main purpose will be the defining factor.

A: Exploration, discovery and transportation

e.g. space probes, environment explorers, autonomous vehicles

B: Medical, health and quality of life

e.g. surgery and health monitoring, exoskeletons for rehabilitation, social companions

C: Service, labour and efficiency

e.g. industry, logistics, agriculture, hospitality

D: Education and entertainment

e.g. programmable education bots, teachers, battle bots

E: Security, military and tactical

e.g. bomb defusers, robot soldiers and scouts, police.
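One way to picture the rule that a robot may traverse a few categories while its main purpose remains the defining factor is a simple lookup. The sketch below is only an illustration: the five category names come from the list above, but the `ROBOTS` table, the secondary tags and the function name are assumptions made up for the example.

```python
from enum import Enum


class Purpose(Enum):
    """The five RP-5 categories, lettered as in the list above."""

    A = "Exploration, discovery and transportation"
    B = "Medical, health and quality of life"
    C = "Service, labour and efficiency"
    D = "Education and entertainment"
    E = "Security, military and tactical"


# Hypothetical tagging: each robot lists its purposes, main purpose first.
# The secondary tags are illustrative guesses, not classifications from the text.
ROBOTS = {
    "space probe": [Purpose.A],
    "autonomous vehicle": [Purpose.A, Purpose.C],
    "social companion": [Purpose.B, Purpose.D],
    "battle bot": [Purpose.D],
    "bomb defuser": [Purpose.E],
}


def main_purpose(robot: str) -> Purpose:
    # The first listed purpose is treated as the defining one.
    return ROBOTS[robot][0]
```

So `main_purpose("social companion")` gives `Purpose.B`, even though the same robot may also entertain.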

These five categories span a wealth of robotic creations. Let’s examine them in more depth.

Figure 2: Five robot purposes (RP-5)

Category A (exploration, discovery and transportation) covers robots that can really travel, whether they be moving humans or cargo, heading to remote regions, unknown locations or places humans cannot yet go. As we have seen, robots like NASA’s Spirit and Opportunity have already been used to roam Mars and send back data to advance our knowledge about the planet and to plan future missions. Some robotic space probes – Pioneer 10, Pioneer 11, Voyager 1, Voyager 2 and New Horizons – have even flown out through our solar system (completing flyby missions along the way), achieved escape velocity and are on their way out of our sun’s influence, with Voyager 1 and Voyager 2 already having crossed into interstellar space. Missions continue as we head towards exploring moons with rich organic chemistry, icy landscapes and ocean worlds in search of the building blocks of life. These missions suit robotic systems incredibly well.

The Dragonfly mission aims to explore Titan – Saturn’s giant moon, larger than the planet Mercury – as part of NASA’s New Frontiers program, which has already seen the New Horizons mission to Pluto and the Kuiper Belt, the Juno mission to Jupiter and the OSIRIS-REx mission to the asteroid Bennu. Another exciting NASA mission is Europa Clipper, a spacecraft made to orbit Jupiter and frequently sail past its fourth-largest moon, Europa, capturing high-resolution images and investigating its composition on these flybys. This moon is significant not because of what we can see so far, but because of what we have not yet seen beneath its icy crust. This mission’s aim is to discover whether Europa possesses all three ingredients necessary to enable biological life – liquid water, chemical ingredients and sufficient energy sources. Advancements in robotic technology and methods only help serve all of these endeavours.

Autonomous vehicles, drones from standard size through to passenger megadrones, smart wheelchairs, environment explorers and passenger-carrying spacecraft all fall into this category.

Category B (medical, health and quality of life) encapsulates a range of robotics mostly aimed at improving quality of life, from simple assistance around the home to companionship and robots that can perform surgery. An example of the latter is the ‘da Vinci surgical system’ for minimally invasive robot-aided surgery. The various designs of this system’s main ‘Patient Cart’ look like multiple-armed metal aliens – a little reminiscent of the giant arachnids from the 1997 sci-fi film Starship Troopers – with each arm wielding a precision surgical instrument. One arm holds a special camera that feeds high-definition 3D video to a surgeon, who sits at a separate ‘Surgeon Console’ and uses controllers to translate their hand movements into precision control over the complex, dexterous instruments. Named after Leonardo da Vinci, this surgical system was launched in 1999, received US Food and Drug Administration approval the following year, and has been used in millions of surgeries globally since. Although these have come with a range of challenges (and lawsuits when things have gone wrong), the evidence of success across the vast multitude of surgeries performed has seen it really pave the way for advancing robotics in healthcare. Robotic surgery will continue to move into more complicated and intricate procedures, including automated surgeries, brain surgery and precision bionic and biomedical device implantation.

As we now know, robotics shows up in many forms, not just those that look like robots. One area of recent advancement is human augmentation. Humans will increasingly use such systems for medical purposes, in the form of bionics and prostheses to replace lost limbs, exoskeletons for physical assistance and much more – as we will see later in this book.

This category also includes robots for health and quality of life, and many of these feature a growing robotic phenomenon – the age of social robotics is upon us. Robot utility is not just limited to efficiency and automation – robots are also being created with direct human-interaction purposes in mind. Increasingly, bots are being used around the home as emotional companions and personal assistants, as well as for healthcare, security and exercise. Ever-evolving examples include cuddly robotic seals (named Paro) for dementia therapy, education companions for kids, giant friendly smiling bear bots (named Robear) from Japan that lift patients in hospitals and transfer them between beds and wheelchairs, and mentally stimulating companionship robots for aged and palliative care.

Category C (service, labour and efficiency) is one of the most common and easily imagined purposes for robots. Common industrial robots are mostly articulated robotic arms, with large rotating joints, somewhat comparable to our own shoulder and wrist joints. They have a wide range of uses in industrial applications, like moving things that are too hot, heavy or dangerous for humans; doing repetitive and accurate tasks like welding on production lines; and even performing operations in space like collecting samples off the surface of Mars (e.g. the InSight lander and Curiosity rover).

Traditional industrial robots tend to operate within a small area, as they do not tend to get up and move. Breaking these confines requires more advanced robotics, many taking humanoid form and moving towards forms of labour humans could do, but mainly used for tasks that are too difficult or hazardous for humans to perform. In some cases they simply improve efficiency and allow round-the-clock operation. Some of these conduct tasks like delivering packages, navigating complex and dangerous environments, palletising and de-palletising delivery trucks, operating power tools and automatically identifying tools, objects and people.

SoftBank Robotics’ Pepper is a social robot that really made trendy the spread of recognisable, closer-to-human-size social humanoids. Outside the research lab, you’ll typically find Pepper in retail stores and conference centres. It has been adopted by thousands of companies around the world mostly due to its ability to interact with customers.

Atlas by Boston Dynamics really set a new benchmark for possibilities in humanoid movement. First unveiled to the public in 2013 for search-and-rescue–type tasks, it has performed many impressive stunts for camera since. In 2017, Atlas both thrilled and creeped out viewers when it performed parkour-style manoeuvres – it jumped across obstacles with amazing fluidity, and then did something humans had never previously seen a robot do: it backflipped off a high box. I mean, it was a freakin’ humanoid doing a freakin’ backflip! Until this point, bipedal robots had enough trouble standing on two feet for extended periods of time, let alone landing a sweet move beyond what most humans can do – though I’m sure if we weren’t afraid of landing right on our head we’d eventually get there too. But this was not something humanoids were supposed to be able to achieve by this stage. Yet again the world was shocked.

Advancements in humanoid labourers have also seen countries like Japan develop their own designs, particularly in tough times, to address labour shortages resulting from declining birth rates and ageing populations. Beyond industrial arms and service-oriented humanoids, we also find robots in this category climbing around cleaning bridges by sand-blasting; dog-like and other biologically inspired robots helping to move payloads of equipment around industrial sites; non-humanoid, friendly wheeled bots assisting around hotels as robotic butlers; and underwater operations robots mapping out surroundings, performing structure inspections, operating valves and using subsea tools.

Helping out with filming aboard the International Space Station (ISS) was Int-Ball, a cartoony, large-eyed, very cute-looking (would we expect any less from Japan?) robot created by JAXA (Japan Aerospace Exploration Agency). Delivered by SpaceX’s CRS-11 commercial resupply mission in 2017, it floats around in the station’s zero-gravity environment as an autonomous (or earth-controlled), self-propelled ball camera for recording and remote viewing. A year later, CIMON (Crew Interactive Mobile companion, developed by European aerospace company Airbus on behalf of the German space agency DLR) was delivered to the ISS by SpaceX’s Dragon cargo capsule. Another floating ball camera robot (this time with a flat-screen sketch-like face on a white background) built to assist crew, it was the first AI-equipped machine ever sent into space.

So although this category is often what comes first to mind when people think about the purpose of robots, the vast array of capabilities and ever-growing uses of these robots continues to amaze and expand – well beyond factories and production lines and even beyond Earth.

This is a category where developers are making interesting strides. These range from robots for entertainment, all the way through to those that help people learn to program and some that directly act as teachers. I remember Dad getting us the first Lego Mindstorms, the original Robotics Invention System, released in 1998. This had a programmable brick called the RCX (Robotic Command eXplorer), with peripherals you could connect, such as touch sensors and an optical sensor, to build basic robots like small vehicles that could drive around and change direction if a sensor was triggered. They taught us simple cause-and-effect concepts of robotic programming. Later Mindstorms models continued to evolve, with major upgrades at each step, and a flurry of other programmable education robotics hit the market in the 2010s. Many have been designed to teach children to code, even from a primary school age, and to build an understanding of the fundamentals of robotics. Other educational robots, from toys to professional large android designs, have been made to teach directly. These will find their place over time, but one of the biggest challenges faced by large androids is the uncanny valley, a topic explored in Chapter 23.

In the entertainment space, toy robots can show a lot of personality and be entertaining as well as educational. Entertainment robotics have progressed from animatronic dinosaurs in Hollywood films like Jurassic Park in the 1990s to humanoids performing live superhero stunts. In early 2020, Disney theme parks released vision of autonomous audio-animatronic stunt robots developed by Walt Disney Imagineering Research and Development as part of the Stuntronics project. These life-size humanoids can perform precision aerial stunts when flung high into the air, so dressing them as Marvel superheroes, like Spider-Man swinging across the sky, will create a real wow factor for visitors.

Toy robots, social robots, humanoids and androids will increasingly find their way into our day-to-day education and entertainment as we step into the future. This includes pleasure (I’m sure your imagination can fill in the blanks here) – which may just slow down that population growth a little bit . . .

This area is filled by a real sci-fi list of robots designed for security patrolling, surveillance, reconnaissance, investigation, negotiation, search and rescue, radiation and contamination monitoring and detection, bomb detection and defusing, target acquisition and attack. RoboCop first graced our screens as a 1987 film that became a franchise, also featuring a TV series I watched when I was young. It’s about an experimental cyborg cop on patrol in crime-frenzied Detroit in a dystopian future. In the 2010s, police robots became reality, somewhat . . . patrolling parks, malls and airports. Some are humanoid – like a Dubai police force member deployed in 2017, creatively named RoboCop, designed by Spanish firm PAL Robotics and featuring a touchscreen on its chest that allows people to pay fines and report crimes. It’s not quite as active as the character it was named after, but it’s a step in that direction.

Others don’t look human at all, like a large wheeled bullet-shaped robot called K5, released in 2015 by Knightscope. These machines tend to get off to shaky starts, with questionable effectiveness and unforeseen incidents. These bullet-bots have had a few mishaps, like bowling over a toddler in California and ignoring a woman attempting to report an emergency in a park. In a Washington DC office building, one bullet-bot, nicknamed ‘Steve’, had a bit of an unfortunate day. While carrying out his duty on patrol, Steve tumbled down some steps and into a water fountain, drowning himself face down. The internet had a field day sending pictures of the event viral. People in the office took it further, making a memorial shrine for Steve the drowned security robot.

Seriously though, these kinds of events happen whenever a big leap is taken in technology into fairly uncharted territory, and as these problems are fixed, massive improvements occur over time.

Field testing also provides real-world insights. I personally witnessed a K5 rolling around an international airport in 2017. It was the first time I’d seen one, so I just stopped to watch it move around its patrol area. A moment later a boy around three years old walked over to it and stared at it, his parents hanging back. The robot rotated on the spot and started rolling in the other direction, and instantly the boy walked with it. Failing to find anything resembling a hand attached to this large glossy white wandering bullet, the boy placed his hand on its side. Walking back and forth for a whole ten minutes in view of his parents, the boy stayed beside this robot, always with a hand on its side, as if being taken for a tour by a family member. This really got my mind going. A different generation is emerging and robots are going to be part of their society, their world, even companions in their lives. It was difficult to describe the emotion of seeing this moving pair – a juxtaposition of law-enforcement machine and the innocence of a child seeking connection.

Beyond security, robots in this category have been moving into spaces that truly shake the foundations of Asimov’s laws. Military applications of robotics are a large source of advancement in the field, but to what end? Take small tactical armed robots as an example. Some look as if a mini Johnny Five actually came to life, in the form of small caterpillar-tracked robots that pack a punch with their firepower – from tear gas to non-lethal lasers for blinding enemies to grenade launchers . . . grenade launchers! On strangely cute robots. They feel a little like Buzz Lightyear up until that point, but then it gets real and my emotions go into a flurry. That thing is a machine made to destroy Asimov’s laws, along with any enemies it decides aren’t tear-gas or laser worthy. But how does it decide?

This is where the programming comes in. If it’s remotely operated, a human decides. If not, the system is autonomous and it’s up to either the coded decisions or AI to make the judgement calls. It’s a scary new frontier. And there are many more where it came from – autonomous tanks, unmanned ships, weaponised drones. The fact that robots can be utilised in this way, to become lethal autonomous weapons (LAWs) that can advance so far as to become weapons of mass destruction, has prompted thousands of experts around the world, across science, technology, robotics and AI, to sign petitions to ban LAWs. It’s one thing when a person who can be accountable is behind it all, making the kill command, but combining the ability to kill with independent decision-making crosses a threshold into far too dangerous territory.

Science fiction often posits worlds where technology has been abused to inflict tyranny or to control populations, in some cases without them even knowing. Those dark visions of where robots could go (in most cases as an embodiment of AI) make enthralling stories: whether they’re about robots manipulating humans (Ex Machina), robots trying to be human (Blade Runner), robots imprisoning humans as a power source (The Matrix), or robots destroying humans (The Terminator). Of course, there are also uplifting films about robots that can help us reflect on our humanity too: WALL-E, Big Hero 6, A.I. Artificial Intelligence and Short Circuit, through contrasting tech and humanity, all lend a softer edge to high-tech robotics and AI. Sometimes the robots can seem, as Blade Runner puts it, ‘more human than human’. I find all these films mind-opening in their own way, as parallels can be drawn to our own potential future. Some depict social robots as being viably interactive with humans, inclusive of humour, helpfulness and – sometimes, perhaps – empathy. But the reality will probably turn out to be, as they say, stranger than fiction.

At the very least, as various fields of robotics continue to advance, these kinder depictions can provide comfort and maybe even positive opportunities for humanity. Whether we build them to reach the depths of our own oceans, endure the harshest and most hazardous conditions on Earth, or travel to our stations in orbit around Earth, to the planets and moons around us, or even to venture out to worlds beyond our solar system, robots will continue to advance and be utilised to avoid risking human life, to see the places we cannot yet go and to expand our geographical reach and understanding of the universe around us. One thing is certain: the uses for robots are colossally vast and varied, and are ever-broadening.

I’ve had my own interesting experiences with robotics, giving me many lessons along the way – through the design and building of robots, and through reflecting on what it is to be human. My connection with robots has definitely been unique among those of my generation.