In many ways a computer is a good analogy for human memory. Its systems for information processing and retrieval parallel those of memory, and the role of the programmer, both as a source of the information (including the possibility of misinformation) and as an influence that can itself be altered or improved, is much like a person’s relationship to his or her memory. There are things we can do to improve our memory, just as we must recognize that malleability is a factor to be reckoned with. Later in this chapter we shall offer some of these helpful “tricks.”

But it is the computer, in fact, which offers us the best and most sophisticated model of memory’s malleable nature. Computers are capable of storing large amounts of data efficiently and retrieving needed information rapidly. The tasks faced by computer memories and the manner in which material is recalled from computer memory closely parallel those of human memory.

A medical data bank, for example, may store detailed information about the course of thousands of patients. The data are organized so that a physician may type the characteristics of a new patient into the computer, and the computer will search its files, locate the most similar patients residing in those files, compute certain attributes about those patients, analyze their subsequent medical courses, and summarize this organized experience back on the computer screen. This process consists of identifying a specific memory, comparing it with other memories, and developing a sophisticated conclusion based upon previous experience, a problem frequently faced by human memory.

There are four requirements which underlie such computer magic. First, there must be a place to store the information. This may be on magnetic tape, on magnetic disk, or in a variety of other forms. Second, there has to be a way to know what is stored where. This function is served by a file structure and by one or several indexes. Third, there must be a method to get the information back out. This function is served by retrieval programs and subroutines. Fourth, there must be a method for aggregating raw data into meaningful concepts. This is accomplished by analysis programs and subroutines. Any memory system, biological or mechanical, must be designed to do these four things.

Computer hardware has evolved, for reasons which are more than coincidental, analogously to human memory. The computer has a central thinking area called “core.” The core contains a relatively small amount of data at any particular time, but these data are immediately available for manipulation. Core corresponds, more or less, to the sensory store where human memory briefly holds incoming information. The newer computers have extended this area to include so-called virtual core. This memory facility resembles human short-term memory; information in residence here can be processed almost instantaneously. Human long-term memory is represented by storage of information in the computer data bank on magnetic disk. Information on disk is readily accessible, with random access to any desired item, but a well-organized index is required to locate a particular piece of information there. Information that need not be accessed so quickly may be stored on magnetic tape instead of disk. The tape can serve as a back-up in case data are lost from magnetic disk, and it can hold information that is not needed very often, providing redundancy and security for the data. To find a desired piece of information on a tape, you must start at one end of the tape and run through it until you find what you want. These concepts (random access, back-up, redundancy, and security) must also be part of human memory. Indeed, removal of any single portion of a mammalian brain does not erase a particular memory. Thus, memory must be maintained redundantly and be backed up against loss due to injury to any one set of brain cells.
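The difference between reading a tape from one end and jumping straight to an item through an index can be sketched in a few lines of Python. This is an illustration only, under stated assumptions: a plain list stands in for the tape, a dictionary stands in for the disk index, and the patient records are hypothetical.

```python
# Hypothetical records: (key, contents), stored in order as on a tape.
records = [
    ("patient-001", "admitted 1978, pneumonia"),
    ("patient-002", "admitted 1979, fracture"),
    ("patient-003", "admitted 1980, arthritis"),
]

def tape_lookup(records, key):
    """Sequential access: start at one end and read until the key turns up."""
    steps = 0
    for k, contents in records:
        steps += 1
        if k == key:
            return contents, steps
    return None, steps

# Random access: build an index once, then go straight to any record.
index = {k: pos for pos, (k, _) in enumerate(records)}

def disk_lookup(records, index, key):
    """Indexed access: one look in the index, then one read."""
    pos = index.get(key)
    return None if pos is None else records[pos][1]

print(tape_lookup(records, "patient-003"))   # had to pass every earlier record
print(disk_lookup(records, index, "patient-003"))
```

Without the index, the cost of finding an item grows with its distance from the start of the tape; with it, every item is equally close, which is why the text stresses that a well-organized index is required.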

An important difference exists between the short-term memory and the long-term memory of the computer. The computer stores information in core electrically. However, when information must be maintained and accessed over a long period of time, it is represented by physical configurations of chemicals upon a magnetic disk. As in the human brain, electrical forms of data storage are easy to manipulate but too fragile for long-term use. If the electrical power to the computer goes off, or some other external influence interrupts the computer’s normal functioning, information stored electrically is lost; information represented chemically remains.

Computer information is stored as “bits,” simple switches that are either “on” or “off” and have no other values. A sequence of bits forms a code that can represent a number, a letter, a word, or a concept. Computer languages exist to translate instructions given in something close to human language into the storage language of the machine. The machine language performs binary operations on bits. A programming language allows certain instructions to be communicated to the machine, and a language of prompts designed for the naive user may allow the machine to interpret commands phrased in rather complicated constructions.
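How a row of on/off switches can stand for a number and then for a letter is easy to show in Python. The sketch assumes the ASCII code, one common convention that the text itself does not name; other codes assign different bit patterns.

```python
# Assumption: letters are coded with ASCII, one convention among several.
letter = "A"
code = ord(letter)           # the number the machine stores for "A"
bits = format(code, "08b")   # the same number written as eight on/off switches
print(letter, code, bits)    # A 65 01000001

# Reading the switches back recovers the number, and from it the letter.
decoded = chr(int(bits, 2))

# A word is simply a longer sequence of such codes.
word_bits = " ".join(format(ord(c), "08b") for c in "egg")
print(word_bits)             # 01100101 01100111 01100111
```

Each switch has only two states, yet eight of them already distinguish 256 different codes, and longer sequences can distinguish far more.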

Human memory is likely to have many of these same features. Ultimately, information will be stored in some form of biological code that may not be binary but must have a finite number of possible configurations, fewer than the number of possible memories. At some level of human memory, routines must exist for “reading” biologically coded information into electrical form, and for progressively aggregating that information into ever more complicated concepts. Human long-term memories may be represented by protein codes much like genetic information, by the physical connections between brain cells forming unique patterns, or by some mechanism yet to be suggested. But the four basic operations of storage, indexing, retrieval, and analysis must be present.

Two of these computer requirements, which must also exist in human memory, have received little attention. First, there must be a file structure, probably a branching structure or hierarchy. Second, there must be an index: a map of what is stored where. The actual memory traces may be widely scattered for reasons of protection or security, but the index must be able to locate them and bring them together, and it must be able to do this almost instantly.

Many other features of the computer data retrieval process are of particular interest as we study human memory. For example, a retrieval program may read a record stored on disk into core. It thus creates an electronic copy of the chemical memory but makes no change in the original record stored on the disk. After the copy has been used in core, it is simply discarded, with no effect upon the original memory.

More frequently, however, a different option is employed after a memory is retrieved. The memory is brought from chemical storage into electronic storage, modified to some extent in core, and then written back into the old file in place of the original! After this operation, the original memory can no longer be accessed. Some systems keep it for a while, unindexed, but ultimately it is destroyed entirely. This crucial system feature, without which no computer data bank could operate effectively, closely parallels the malleability of human memory. For a finite brain, a malleable memory system is far more efficient than an inflexible one.

A final option is to retrieve a memory, manipulate the memory in core, and then store both the original version of the memory and the modified one. Thus, for example, one might store a series of memories about how one’s opinions on a particular subject had shifted over the years. Again, such operations are critical for certain uses and must be part of any well-designed data retrieval system: a flexible system with finite capacity.
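The three options just described (use a copy and discard it, overwrite the original, or keep both versions) can be sketched in Python. This is a toy model, not any real data bank's interface: the dictionary standing in for the disk, the file name "opinion", and the three function names are all hypothetical.

```python
# Hypothetical toy "disk": each file holds a list of versions, oldest first.
disk = {"opinion": ["favorable"]}

def read_only(disk, name):
    """Option 1: bring a copy into core, use it, and let it be discarded."""
    working = disk[name][-1]   # strings are immutable, so the original is safe
    return working.upper()     # some use of the copy; nothing is written back

def save_replace(disk, name, modify):
    """Option 2: modify the record in core and write it over the old one."""
    disk[name] = [modify(disk[name][-1])]   # earlier versions are lost

def save_both(disk, name, modify):
    """Option 3: modify the record and keep both old and new versions."""
    disk[name].append(modify(disk[name][-1]))

read_only(disk, "opinion")
print(disk["opinion"])   # ['favorable']
save_both(disk, "opinion", lambda v: "mixed")
print(disk["opinion"])   # ['favorable', 'mixed']
save_replace(disk, "opinion", lambda v: "unfavorable")
print(disk["opinion"])   # ['unfavorable']
```

Only the second option lets the store stay the same size as records change, which is the trade-off the text links to the malleability of memory in a finite brain; the third option preserves history at the cost of space.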

Some other tidbits of interest: computer retrieval does not provide equal access to all possible pieces of information. Frequently retrieved or high-priority items are usually kept maximally accessible. The storage hierarchy allows the system to branch quickly to the appropriate subject, and data associated with that subject are often stored together, in the same record. This suggests that the efficiency of human memory might depend strongly upon how well its hierarchy is organized.

When a particular retrieval is performed several times, the programmer may devise a “macro,” a subroutine which will perform that search automatically. Thus, a particular chain of reasoning need not be repeated every time the same problem is encountered. Programs that are called to the core area when they are needed serve to abstract the data, to organize the data, to compute, and to conclude. These programs, subroutines, and macros process the data. In human terms, we might forget a chain of reasoning but remember the conclusion; this use of a human “macro” would be more efficient but less flexible. We sometimes see this phenomenon in aging persons.

Usually more data are recalled to core than are actually used. Data are stored in units called “records,” and even if only a portion of a record is needed, the entire record must be recalled. This inefficient use of space is offset by simpler programming and file structure, just one of many trade-offs. For humans, calling up a memory of, for example, a book one has read seems to bring with it many more facts about the book than were originally sought. Additional questions about that book can now be answered readily. Some waste, and some increased efficiency.
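The record-level trade-off can be modeled with a small Python class that, like a disk, will only hand back whole records and counts each trip to storage. The class name, its method, and the book record are hypothetical, chosen only to mirror the example in the text.

```python
class RecordStore:
    """A toy store that, like a disk, hands back only whole records."""

    def __init__(self, records):
        self._records = records
        self.reads = 0  # number of trips to storage so far

    def read_record(self, key):
        """Copy an entire record into 'core', however little of it is needed."""
        self.reads += 1
        return dict(self._records[key])


# Hypothetical record about a book one has read.
store = RecordStore({
    "book-42": {"title": "A Hypothetical Novel", "author": "A. Writer",
                "year": 1975, "setting": "Moscow"},
})

core = store.read_record("book-42")   # only the title was wanted...
print(core["title"])
# ...but the rest of the record came along, so follow-up questions
# are answered from core without another trip to storage.
print(core["author"], core["year"])
print("reads:", store.reads)          # reads: 1
```

The unused fields are the "waste"; answering the follow-up questions with a single read is the "increased efficiency."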

In a similar way, redundancy in computer files is usually good rather than bad. Sometimes the gain in data security or speed of access is enough to justify storing high-priority information two or more times. The human brain almost certainly does this. The central theme of these points is that the design of a computer memory system involves balancing one priority against another: speed versus size, for example, or available space versus competing demands for that space. Any design decision will make the memory system work better for some tasks than for others. The designer must choose which tasks to optimize.

Some of the possible parallels between computers and humans are listed in the accompanying table. The hippocampus might well be the physical location of the index file to human memory. It is activated each time a memory is sought, but the activation of hippocampal nerve cells is nonspecific as to the memory requested. Cutting off a computer’s power probably has its human equivalent in a seizure or an electric shock. The electrical portion of memory, including the index, is obliterated, while long-term memory is not affected. During the post-seizure period, the index may be reloaded electrically from back-up sources. Hippocampal seizures in particular appear to result in temporary loss of an index.

Short-term memory is represented in the computer by virtual core, and long-term storage is often held on magnetic disk. Temperature changes alter the functioning of the computer in ways similar to those in which drugs such as alcohol, marijuana, or LSD may affect the operation of the brain: certain processes may be sped up or slowed down, and certain short circuits may occur. The overnight updating of files, in which the computer manager makes retrieval more efficient by organizing the data acquired during the previous day, might have its counterpart in some form of human dreaming.

| Data bank | Human brain |
|---|---|
| Index file | Hippocampus |
| Power off | ECT/seizure |
| Reloading the index | Post-seizure period |
| Virtual core | Short-term memory |
| Disk storage | Cortex |
| Temperature change | Systemic drug |
| “Save file replace” | The malleability of memory |
| Updating files overnight | Dreaming |

Some possible parallels

The central message of this part of the chapter is that the human brain and the computer data bank are finite resources. Models of human memory have frequently ignored constraints upon the amount of data which might be stored and retrieved. The human brain, over many ages, has increased in size, possibly because of the increased likelihood of survival afforded the organism with increased powers of mentation. But the cranial cavity is clearly a space with a finite volume. To use portions of that space to store information that will never be accessed, or to fail to include provision for pruning unneeded data from time to time, or to fail to provide for the replacement of one memory item by another, would be very poor design indeed.

If the malleability of memory is a given, how are we to process the ever-expanding ocean of knowledge that comes our way? Magazines and newspapers, movies and plays, and all the other complexities of modern life immerse us in a flood of information. We are living in a world of information, and successful functioning requires that we master a great deal of knowledge. Like a computer programmer, we are forced to design “programs” that make optimum use of our memory capacities. Most people want to know something about politics and economics, about literature and art. To do this well, we must call upon our memory.

People also want to remember names and facts. Some of the top experts on memory training have said that forgetting things can be more than just an irritant; it can also be costly for a busy person. It creates stress and takes up valuable time. An unreliable memory also hurts a person’s self-confidence and peace of mind. People dodge other people at parties because they can’t for the life of them remember names. When people can’t recall things they have read, it affects their conversations. It can make an unfavorable impression on one’s boss or a potential client.

Memory is important, no doubt about it. But given that our memories are continually subject to distortions and transformations, how can we hang on to the truth? If it is natural and normal for people’s memories to become altered, is there anything that one can do?

Probably the most important thing a person can do to improve memory is learn how to pay attention. Every time you allow your attention to wander, you miss something. In my work, it is important to read a great deal; it is not uncommon for me to read an entire page of a book and then remember nothing from it. This happens because I start daydreaming and allow my attention to wander to some other matter — what I need to buy at the grocery store, where I am going on my next trip, why someone has been nasty to me. Sometimes I have to read the same material several times before it sinks in. This same thing happens to people while they are driving. Most people have had the experience of driving a few miles in a car and suddenly realizing they cannot remember anything of the scenery for the previous few minutes. Attention wanders, and memory suffers. But there is something that can be done.

Two factors affect attention, and to the extent that we understand them, we will be better able to control our attention. The first has to do with the stimuli in our environment. Some environmental stimuli force themselves on us more than others. Advertisers understand this. They use bright colors, catchy phrases, and movement, all known to grab attention. Novelty gets our attention.

The second factor that affects our attention is our own interest. We have some freedom in what we choose to focus on. It is possible to focus hard on some information and effectively block out most other stimuli and thoughts.

Increased attention raises the chances that everyday information will be remembered. It is, in part, the reason why trivia whizzes — those people who seem to be able to remember the star of every movie they’ve ever seen or the batting average of every player on their favorite team — do so well. The trivia whizzes typically have an interest in this sort of trivia, or they wouldn’t have focused their attention on it in the first place.

Once interest in a topic is strong enough, attention to that topic becomes almost automatic. Every time we hear the topic mentioned, our ears perk up. The more interest we have, the more we can learn. And the more we learn, the more interest we have. The cycle continues.

Many times, however, people must learn things that they are not overwhelmingly interested in. This can certainly be done. By paying attention to the topic under discussion, you can learn more about it. This may happen slowly at first, but the more you get to know (about football, about operas, about an individual), the easier it becomes for you to tell when people are talking about it and when you should pay attention. Once attention becomes automatic, picking up information about a topic becomes easier.

Paying attention has another benefit. Interesting information enters our memory more securely, is more resistant to distortion, and is better able to fend off potential suggestions for future malleability.

Let’s say you’re a tourist in New York and have just asked for directions to the Museum of Modern Art. One of the important techniques at this stage is to try to organize the information by relating it to what you already know. This forces you to think about the information to understand it better. It also makes you dredge up old information from memory and think about it again.

For getting to the Museum of Modern Art, it helps if your information source tells you of some familiar place near where you want to go — say Saks Fifth Avenue. Then you can remember the new place in relation to what you already know. (Another trick for getting back to your hotel: As you walk toward the Museum look back over your shoulder every so often so that you can see how things will look when you are on your way back. The world looks very different coming and going.)

If you hear a story about someone, or see some unusual event, it also helps to relate it to what you already know. But there is more you can do. Rehearsing the information, either aloud or to yourself, will do much to help transfer it to long-term memory where it can be retrieved later on.

Memory experts have developed a number of different tricks — called mnemonic devices — for helping people fight forgetting. Many people who suffer from what they think is a poor memory turn to these tricks, but do they really work?

“The horror of that moment,” the King went on, “I shall never, never forget!”

“You will, though,” the Queen said, “if you don’t make a memorandum of it.”

— Lewis Carroll

One evening the Greek poet Simonides was reciting poetry at a huge banquet given by a well-to-do nobleman. Midway through the banquet, Simonides was abruptly drawn away from the banquet by a messenger of the gods. Barely a few moments passed and the entire roof of the banquet hall collapsed, crushing all the guests. Not a single guest survived this horror. The grief-stricken relatives of the guests faced a further tragedy: The bodies of their loved ones were so mutilated that they could not be identified. How were these people to be buried properly? At first, the situation seemed to have no solution, but then Simonides discovered that the task might not be impossible. He found he could mentally picture the exact place at which each guest had been sitting, and in this way he could identify the bodies. Through this tragic experience, Simonides discovered a technique that could be used to remember all sorts of objects and ideas. He simply assigned these items fixed positions in space. This powerful method has come to be known as the “method of loci,” loci meaning roughly “locations.”

The method of loci has been discovered and successfully used by people who are able to memorize stupefying amounts of material. One example is the newspaper reporter S, the subject of Alexander Luria’s pioneering work mentioned in Chapter 1. S could visualize dozens of items that he wanted to remember by placing them along a familiar street in Moscow. When he wanted to recall the items, he would simply walk along the street in his mind, “seeing” the items as he passed. He rarely made a mistake, and when he did, he tended to blame it on a misperception rather than an error of memory. Once he forgot the word egg, which he had wished to remember. Why? Because he had imagined it against a white wall. It blended completely into the background, and he did not “see” it when he took his mental walk.

The method of loci is easy. First you memorize a series of mental snapshots, or locations that are familiar to you, a series that follows in a regular order. For example, you might think of the distinct places you pass as you walk from your house to the nearest bus stop. Or you might imagine the distinct locations you see each morning — your bed, the bathroom door, the bathroom sink, the top of the stairs, the breakfast table.

The locations will serve as pigeonholes for any items you want to learn. Say it is a shopping list of butter, eggs, hamburger, and toothpaste. First you will need to convert each item into a visual image. Then put an image of each item in a specific location on your mental snapshot. Thus, you might imagine a pound of butter sitting at the end of your bed, then some eggs on the floor in front of the bathroom door, then a hamburger in the bathroom sink, and finally toothpaste squeezed over the top step of the stairway. Think about each item in location for about five or ten seconds. Then, when you want to remember the list later, simply take a “mental walk” past the various locations and you will be able to note what you have placed in each one.
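The bookkeeping behind the method (a fixed, ordered set of locations used as pigeonholes, filled in order and read back by walking the route) can be sketched in Python. It is only an analogy: the vivid imagery that makes the method work for people has no counterpart in the code, and the function names are hypothetical. The locations and shopping list are the ones from the text.

```python
# The familiar morning route from the text, in its fixed order.
loci = ["bed", "bathroom door", "bathroom sink", "top of the stairs",
        "breakfast table"]

def place_items(loci, items):
    """Put each item at the next location along the route."""
    if len(items) > len(loci):
        raise ValueError("not enough locations for the list")
    return dict(zip(loci, items))

def mental_walk(loci, placements):
    """Walk the route in order and note what was placed at each stop."""
    return [placements[place] for place in loci if place in placements]

shopping = ["butter", "eggs", "hamburger", "toothpaste"]
placed = place_items(loci, shopping)
print(placed["bathroom sink"])        # hamburger
print(mental_walk(loci, placed))      # the list, in its original order
```

Because the route is fixed and already well learned, it supplies both the order of the list and a cue for each item; an image that cannot be "seen" at its location, like S’s egg against the white wall, simply drops out of the walk.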

The method of loci is an example of a mnemonic device, or memory trick: memory associates new items with old ones. Some of these devices are complex and require a good deal of effort and concentration to master, but most are relatively simple and easy to learn. They allow people to remember a good deal more information than they could by rote memorization alone. Occasionally people are found who have exceptional abilities to memorize by rote. One example is a man dubbed V. P. by the psychologists who studied him. Before he had reached the age of five, V. P. had memorized the street map of a city of half a million people. By the time he was ten, he had memorized 150 poems as part of a contest. V. P. was raised in Latvia and was schooled in a system that emphasized rote learning. It is tempting to conclude that this early training may have provided him with the impetus to improve his mnemonic skills. Most of us with ordinary memories cannot remember a lot of information by rote. But with mnemonic devices, we can remember a good deal more than we otherwise could.

Another efficient way to remember a series of items is the peg word method. The first step is to learn a list of peg words that correspond to the numbers one through twenty. Here’s a list that has been commonly used for the first ten numbers:

| One to five | Six to ten |
|---|---|
| One is a bun | Six is sticks |
| Two is a shoe | Seven is heaven |
| Three is a tree | Eight is a gate |
| Four is a door | Nine is wine |
| Five is a hive | Ten is a hen |

Basically, the method works by having you “hang” the items you want to remember on the pegs. Each item goes with one peg, and the two are imagined interacting with each other. Thus, if you wanted to remember the shopping list given before — butter, eggs, hamburger, toothpaste . . . — you might compose an image of butter resting between the two halves of a bun, the eggs spilling over the edge of a shoe, the hamburger lying next to a tree, then toothpaste dripping over a door. The pairs become associated in your mind. Then when you attempt to remember the list, you go through the peg words and note what object you visually placed on each peg. The pegs, like the locations in the method of loci, give you cues necessary to recover your memories.

Another technique for remembering a list is to make up a story. Weave the items into a story that ties them together. The crazier the story, the better. So, to ensure that I will be able to remember the shopping list, I might imagine that I am a cook in a local coffee shop. One day I am busy spreading butter over the sandwich bread when two people come in, nagging each other. The man says he wants some eggs. His wife says, “It’s dinner time. Wouldn’t you rather have a hamburger?” He tells her no. When she presses him for an explanation, he says, “Stop nagging,” pulls out a tube of toothpaste, and hits her over the head. This technique has been called the “narrative-chaining” method. It is effective for learning a single list, but its real power comes when several different lists have to be learned. In one study, people who had used narrative chaining remembered over six times as much as people who learned by ordinary rote memorization. While the method of loci and the peg word method are just as good as narrative chaining when a single list has to be remembered, narrative chaining is more efficient when many lists must be remembered. This is one example of how the technique that works best depends upon the material to be learned.

Suppose you are planning a trip to China and are nervous about the fact that you won’t know the men’s room from the ladies’. Or your company has just assigned you to spend six months in Mexico City and many of your customers won’t know a word of English. Or perhaps you’ve always wanted to read certain Swedish novels in their original language. Whatever your reason, for the first time in your life you have a strong desire to master a foreign language. How much effort you have to put in depends upon whether you are trying to learn a difficult language like Chinese or an easier one like Spanish.

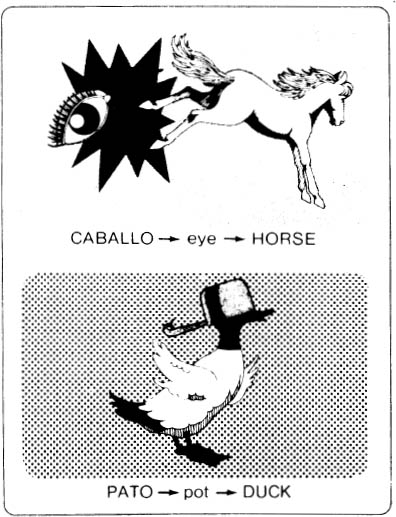

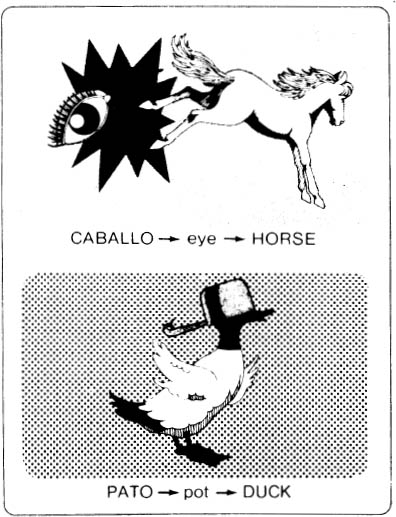

There are many approaches you can take. You might consider going to a commercial school such as Berlitz, the largest chain. You might plan on taking an extension course at a nearby college or university. You might try doing it on your own, either with a private tutor, or with an easy mnemonic system and a set of vocabulary cards. The mnemonic system involves essentially two steps. The first is to find a part of the foreign word that you want to remember that sounds like an English word. The Spanish caballo, which means “horse,” is pronounced cob-eye-yo. Thus, eye can be used as the key word. The next step is to use an image to connect the key word and the English equivalent. So, you might imagine a horse kicking a giant eye, as shown in the accompanying illustration. Later on, when you are trying to remember what the word caballo means, you would first retrieve eye and then the stored image that links it to horse. Similarly, the Spanish word pato, which means “duck,” is pronounced pot-o. Thus, pot can be used as the key word. An image that connects the key word to the English equivalent might be a duck with a pot over its head. When asked for the meaning of pato, you would first retrieve pot and then the stored image that links it to duck. At first this may sound quite complicated, but studies have shown that the key word method makes learning a foreign language a lot easier.

Mental images used to associate Spanish words with corresponding English terms.

(From Introduction to Psychology, 7th ed., by Ernest R. Hilgard, Rita L. Atkinson, and Richard C. Atkinson. Copyright © 1979 by Harcourt Brace Jovanovich, Inc. Reproduced by permission of the publisher.)

People commonly report having problems remembering the names of others. A face looks familiar, but the name escapes us. This problem, too, can be helped with a simple trick.

The overall problem breaks down into three subproblems: remembering the face, the name, and the connection between the two. For the first part, remembering the face, look at it carefully while focusing on some distinctive feature such as bushy eyebrows. For the second part, remembering the name, try to find some meaning in it. As in the case of foreign language, you might think about a part of the name that sounds like an English word. Finally, to remember the connection between the face and name, think of an image that links the key word and the distinctive feature in the face. If you have just been introduced to Mr. Clausen, the man with the bushy eyebrows, you might think of the English word claws as a key word for Clausen. Now imagine a large lobster claw tearing away at Mr. Clausen’s bushy eyebrows. Now, when you see Mr. Clausen the next time, conjure up the image of the claw at his eyebrow, and the name Clausen should come to mind.

Studies have shown that this method helps the facial feature, the key word, and the name become tightly woven together. As a result, people with no previous practice remember many more faces when they use the method than when they do not. In one study, if a person remembered the facial feature and the key word, the chances of remembering the name were about 90 percent. Errors do occur, but they usually result from a poor association between the key word and the name. Most people who forget the key word or the facial feature will not be able to remember the name, and people who forget both the feature and the key word are unlikely to remember the name at all. The trick in using this technique is to practice converting a name into some memorable key word. After that, it is easy.

Another technique for remembering people’s names involves the use of an expanding pattern of rehearsal, an idea developed by Tom Landauer of Bell Laboratories and Robert Bjork of UCLA. When you are introduced to someone, repeat the name immediately. You might say something like “Betty Johnson? Hello, Betty.” About ten or fifteen seconds later, look at the person and rehearse the name silently. Do this again after one minute, and then after three minutes, and the name will have a very good chance of becoming lodged in your long-term memory. Part of the reason this spacing technique works is that most forgetting occurs within a very short time after you learn some new fact.
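What makes the schedule “expanding” is that each gap between rehearsals is longer than the one before. A few lines of Python make the arithmetic explicit. The times are taken from the text, with 15 seconds assumed for the “ten or fifteen seconds” step; any value in that range gives the same pattern.

```python
# Rehearsal times in seconds after the introduction, from the text:
# immediately, ~10-15 s later (15 assumed here), then 1 minute, then 3 minutes.
schedule = [0, 15, 60, 180]

# The gap before each rehearsal after the first.
gaps = [later - earlier for earlier, later in zip(schedule, schedule[1:])]
print(gaps)   # [15, 45, 120]

for t in schedule:
    how = "aloud" if t == 0 else "silently"
    print(f"at {t:>3} s: rehearse the name {how}")
```

The early rehearsals come quickly, while forgetting is fastest; later ones can be spaced further apart as the name becomes more secure.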

There seems to be no doubt that these memory systems help people recall information that they could not otherwise remember. They work because they make use of well-established principles, such as aiding retrieval through a retrieval plan and exploiting the beneficial effects of imagery on memory. They may also work because they improve a person’s motivation to learn and ensure that the material to be learned is as well organized as possible. If a person is motivated to learn, he or she will pay more attention to the items that have to be remembered. If a person is asked to imagine the items, this may make the whole job more interesting and more significant, which in turn will help in the long run.

A major difficulty with many of these memory tricks is that they are useful largely for recalling simple lists, such as grocery lists. This may help in a pinch, but in general they don’t help a great deal with the kinds of memories that play a major part in everyday life. The two exceptions, of course, are the memory aids for foreign language learning and the aids for linking names and faces. The study of memory aids is important, in part because it will undoubtedly lead to a greater understanding of how best to help those with memory problems.

Most of the mnemonic tricks that psychologists study make use of what we might call “internal aids.” But these turn out not to be what people typically use in everyday life. In one study conducted in England, people were interviewed about which memory aids they used and how often. They were asked about the extent to which they used internal aids (such as the method of loci, face-name associations, and others) and the extent to which they used external aids, such as shopping lists, diaries, writing on their hands, and so on. Most people claimed to use external aids such as diaries or calendars, while very few ever used the internal aids advocated by memory experts such as Harry Lorayne, who has often gone on TV talk shows and reeled off the names and addresses of the studio audience. Lorayne claims he has no special gift, but that he is highly motivated and has trained himself. He insists that his techniques work because they rely on the mind’s natural tendency to create associations. He shuns external aids, like writing things on a piece of paper. “You can lose a piece of paper,” he says, “but you can’t lose your mind.”1 Lorayne is partly right and partly wrong. Your mind can’t fall behind a desk and accidentally into a wastebasket, the way a piece of paper can. But the mind cannot hold information unfailingly. Memory is malleable; the words on a piece of paper are not. Thus, the best advice for enhancing memory is to develop both internal and external aids.

One of the most widely discussed types of memory is “photographic” memory. Although people use this term freely, many do not know what a photographic memory actually is. The closest approximation to what we might call a photographic memory is “eidetic imagery.” People with eidetic imagery can visualize a scene with almost photographic clarity. A person with eidetic imagery experiences images as if they were “out there” in space rather than as if they were inside the mind. The eyes move over a visualized scene as if it had the quality of a real perception, and the person can at the same time describe the scene in uncanny detail.

Robert A. Lovett, former U.S. Secretary of Defense, is one such person. He first became aware of his unusual ability in high school, where he excelled in foreign languages and mathematics. His memory continued to flourish during his days at Harvard Law School, where he participated in several moot court cases. By coincidence, the final examination in one course asked about one of the cases he had prepared for moot court. Lovett answered the exam question by quoting the case verbatim, case numbers and all. He was suspected of cheating, and his professor, Dean Roscoe Pound, confronted him: “Mr. Lovett, you realize I must give you either an A or a zero.” Lovett replied, “Would it help, Dean Pound, if I quoted the case to you now?” He then proceeded to do so, once again, line by line.

Lovett’s memory was exceptional, although it occasionally left him feeling that his mind was cluttered. Such an ability is extremely rare, despite the attention it has drawn.

Forgetting is commonly a temporary rather than a permanent failure. It is precisely because of this that some have argued that no memory is ever completely lost: on this view, an experience leaves its mark on memory as clearly as a burglar leaves fingerprints. Yet the basic proposition that nothing a person experiences is ever lost is itself untestable. Even Freud was later forced to modify his position, albeit slightly, and became content to say that nothing that was in the mind need ever perish. But it is quite possible, as we have seen, that things do perish. Whenever new information is registered in memory, relevant earlier memories may be reactivated to assimilate it and may be modified in the process.

No doubt there are good evolutionary reasons for a human being to be designed with a malleable memory. No one knows the limits on the amount of information the human mind can store. But it is highly likely that, beyond certain limits, the more information stored in memory, the longer it takes to find a particular item, and the greater the chance of storing a fact incorrectly or retrieving the wrong one. There may therefore be sound economic reasons for discarding some information altogether, for updating memory, and for relegating less useful memories to the dusty back files of the mind. Only the memories that are biologically useful or that have personal value and interest need stay alive and active.

We can minimize potential distortions in important memories through a few simple techniques. Rehearsing an experience, silently or aloud, helps preserve the memory. Research has shown that people who are warned that someone may try to change their memories are better able to resist these influences than people who are not warned. The mechanism by which they resist is twofold: they rehearse the experience, and they scrutinize new information more carefully to detect inconsistencies with what is already stored in memory.

The malleability of human memory is at once perplexing and vexing. It means that our past might not be exactly as we remember it. The very nature of truth and of certainty is shaken. It is more comfortable for us to believe that somewhere within our brain, however well hidden, rests a bedrock of memory that corresponds absolutely with events that have passed. Unfortunately, we are simply not designed that way. It is time to start figuring out how to put the malleable memory to work in ways that serve us well. Now that we are beginning to understand this phenomenon, new research may begin to tell us how to control it. It would be nice, for example, if individuals could decide whether they wanted an accurate memory or a “rosy” one. By controlling the inputs to memory, we should in theory be able to achieve what we wish. For accuracy, we need balance; for a rosy memory, we need one-sided inputs. We need not be afraid of or disquieted by the nature of our memories; we need only make them work on our behalf.