Röntgen not only provided an example of brilliantly meticulous science; he also reminded scientists that the periodic table is never empty of surprises. There’s always something novel to discover about the elements, even today. But with most of the easy pickings already plucked by Röntgen’s time, making new discoveries required drastic measures. Scientists had to interrogate the elements under increasingly severe conditions—especially extreme cold, which hypnotizes them into strange behaviors. Extreme cold doesn’t always portend well for the humans making the discoveries either. While the latter-day heirs of Lewis and Clark had explored much of Antarctica by 1911, no human being had ever reached the South Pole. Inevitably, this led to an epic race among explorers to get there first—which led just as inevitably to a grim cautionary tale about what can go wrong with chemistry at extreme temperatures.

That year was chilly even by Antarctic standards, but a band of pale Englishmen led by Robert Falcon Scott nonetheless determined that they would be the first to reach ninety degrees south latitude. They organized their dogs and supplies, and a caravan set off in November. Much of the caravan was a support team, which cleverly dropped caches of food and fuel on the way out so that the small final team that would dash to the pole could retrieve them on the way back.

Little by little, more of the caravan peeled off, and finally, after slogging along for months on foot, five men, led by Scott, arrived at the pole in January 1912—only to find a brown pup tent, a Norwegian flag, and an annoyingly friendly letter. Scott had lost out to Roald Amundsen, whose team had arrived a month earlier. Scott recorded the moment curtly in his diary: “The worst has happened. All the daydreams must go.” And shortly afterward: “Great God! This is an awful place. Now for the run home and a desperate struggle. I wonder if we can do it.”

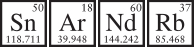

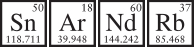

Dejected as Scott’s men were, their return trip would have been difficult anyway, but Antarctica threw up everything it could to punish and harass them. They were marooned for weeks in a monsoon of snow flurries, and their journals (discovered later) showed that they faced starvation, scurvy, dehydration, hypothermia, and gangrene. Most devastating was the lack of heating fuel. Scott had trekked through the Arctic the year before and had found that the leather seals on his canisters of kerosene leaked badly. He’d routinely lost half of his fuel. For the South Pole run, his team had experimented with tin-enriched and pure tin solders. But when his bedraggled men reached the canisters awaiting them on the return trip, they found many of them empty. In a double blow, the fuel had often leaked onto foodstuffs.

Without kerosene, the men couldn’t cook food or melt ice to drink. One of them took ill and died; another went insane in the cold and wandered off. The last three, including Scott, pushed on. They officially died of exposure in late March 1912, eleven miles short of a large supply depot, unable to get through the last nights.

In his day, Scott had been as popular as Neil Armstrong—Britons received news of his plight with gnashing of teeth, and one church even installed stained-glass windows in his honor in 1915. As a result, people have always sought an excuse to absolve him of blame, and the periodic table provided a convenient villain. Tin, which Scott used as solder, has been a prized metal since biblical times because it’s so easy to shape. Ironically, the better metallurgists got at refining tin and purifying it, the worse it became for everyday use. Whenever pure tin tools or tin coins or tin toys got cold, a whitish rust began to creep over them like hoarfrost on a window in winter. The white rust would break out into pustules, then weaken and corrode the tin, until it crumbled and eroded away.

Unlike iron rust, this was not a chemical reaction. As scientists now know, this happens because tin atoms can arrange themselves inside a solid in two different ways, and when they get cold, they shift from their strong “beta” form to the crumbly, powdery “alpha” form. To visualize the difference, imagine stacking atoms in a huge crate like oranges. The bottom of the crate is lined with a single layer of spheres touching only tangentially. To fill the second, third, and fourth layers, you might balance each atom right on top of one in the first layer. That’s one form, or crystal structure. Or you might nestle the second layer of atoms into the spaces between the atoms in the first layer, then the third layer into the spaces between the atoms in the second layer, and so on. That makes a second crystal structure with a different density and different properties. These are just two of the many ways to pack atoms together.
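To put a number on “a different density,” here is a small sketch that computes how much of the crate the oranges actually fill in each arrangement. It assumes idealized identical spheres and square layers: stacking each sphere straight on top of another gives a simple cubic packing, and nestling each layer into the hollows of the one below gives a face-centered cubic (close) packing. These are the crate analogy’s two packings, not the real structures of tin (alpha tin has a diamond-like structure and beta tin a tetragonal one).

```python
import math

def packing_fraction_simple_cubic() -> float:
    # Each sphere sits directly on top of the one below:
    # one sphere of radius r per cube of side 2r.
    r = 1.0
    return (4 / 3) * math.pi * r**3 / (2 * r) ** 3

def packing_fraction_close_packed() -> float:
    # Each layer nestles into the hollows of the layer below (face-centered
    # cubic): four spheres per cube whose face diagonal equals 4r.
    r = 1.0
    a = 4 * r / math.sqrt(2)  # cube edge length
    return 4 * (4 / 3) * math.pi * r**3 / a**3

sc = packing_fraction_simple_cubic()
fcc = packing_fraction_close_packed()
print(f"stacked straight up: {sc:.3f}")
print(f"nestled in hollows:  {fcc:.3f}")
print(f"density ratio:       {fcc / sc:.2f}")
```

Same oranges, same crate, but the nestled arrangement fills about 74 percent of the space versus about 52 percent, roughly 1.4 times as dense.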

What Scott’s men (perhaps) found out the hard way is that an element’s atoms can spontaneously shift from a weak crystal to a strong one, or vice versa. Usually it takes extreme conditions to promote rearrangement, like the subterranean heat and pressure that turn carbon from graphite into diamonds. Tin becomes protean at 56°F. Even a sweater evening in October can start the pustules rising and the hoarfrost creeping, and colder temperatures accelerate the process. Any abusive treatment or deformation (such as dents from canisters being tossed onto hard-packed ice) can catalyze the reaction, too, even in tin that is otherwise immune. Nor is this merely a topical defect, a surface scar. The condition is sometimes called tin leprosy because it burrows deep inside like a disease. The alpha–beta shift can even release enough energy to cause audible groaning—vividly called tin scream, although it sounds more like stereo static.

The alpha–beta shift of tin has been a convenient chemical scapegoat throughout history. Various European cities with harsh winters (e.g., St. Petersburg) have legends about expensive tin pipes on new church organs exploding into ash the instant the organist blasted his first chord. (Some pious citizens were more apt to blame the Devil.) Of more world historical consequence, when Napoleon stupidly attacked Russia during the winter of 1812, the tin clasps on his men’s jackets reportedly (many historians dispute this) cracked apart and left the Frenchmen’s inner garments exposed every time the wind kicked up. As with the horrible circumstances faced by Scott’s little band, the French army faced long odds in Russia anyway. But element fifty’s changeling ways perhaps made things tougher, and impartial chemistry proved an easier thing to blame* than a hero’s bad judgment.

There’s no doubt Scott’s men found empty canisters—that’s in his diary—but whether the disintegration of the tin solder caused the leaks is disputed. Tin leprosy makes so much sense, yet canisters from other teams discovered decades later retained their solder seals. Scott did use purer tin—although it would have to have been extremely pure for leprosy to take hold. Yet no other good explanation besides sabotage exists, and there’s no evidence of foul play. Regardless, Scott’s little band perished on the ice, victims at least in part of the periodic table.

Quirky things happen when matter gets very cold and shifts from one state to another. Schoolchildren learn about just three interchangeable states of matter—solid, liquid, and gas. High school teachers often toss in a fourth state, plasma, a superheated condition in stars in which electrons detach from their nuclear moorings and go roaming.* In college, students get exposed to superconductors and superfluid helium. In graduate school, professors sometimes challenge students with states such as quark-gluon plasma or degenerate matter. And along the way, a few wiseacres always ask why Jell-O doesn’t count as its own special state. (The answer? Colloids like Jell-O are blends of two states.* The water and gelatin mixture can either be thought of as a highly flexible solid or a very sluggish liquid.)

The point is that the universe can accommodate far more states of matter—different micro-arrangements of particles—than are dreamed of in our provincial categories of solid, liquid, and gas. And these new states aren’t hybrids like Jell-O. In some cases, the very distinction between mass and energy breaks down. Albert Einstein uncovered one such state while fiddling around with a few quantum mechanics equations in 1924—then dismissed his calculations and disavowed his theoretical discovery as too bizarre to ever exist. It remained impossible, in fact, until someone made it in 1995.

In some ways, solids are the most basic state of matter. (To be scrupulous, the vast majority of every atom sits empty, but the ultra-quick hurry of electrons gives atoms, to our dull senses, the persistent illusion of solidity.) In solids, atoms line up in a repetitive, three-dimensional array, though even the most blasé solids can usually form more than one type of crystal. Scientists can now coax ice into forming fifteen distinctly shaped crystals by using high-pressure chambers. Some ices sink rather than float in water, and others form not six-sided snowflakes, but shapes like palm leaves or heads of cauliflower. One alien ice, Ice X, doesn’t melt until it reaches 3,700°F. Even chemicals as impure and complicated as chocolate form quasi-crystals that can shift shapes. Ever opened an old Hershey’s Kiss and found it an unappetizing tan? We might call that chocolate leprosy, caused by the same alpha–beta shifts that doomed Scott in Antarctica.

Crystalline solids form most readily at low temperatures, and depending on how low the temperature gets, elements you thought you knew can become almost unrecognizable. Even the aloof noble gases, when forced into solid form, decide that huddling together with other elements isn’t such a bad idea. Violating decades of dogma, Canadian-based chemist Neil Bartlett created the first noble gas compound, a solid orange crystal, with xenon in 1962.* Admittedly, this took place at room temperature, but only with platinum hexafluoride, a chemical about as caustic as a superacid. Plus xenon, the largest stable inert gas, reacts far more easily than the others because its electrons are only loosely bound to its nucleus. To get smaller, closed-rank noble gases to react, chemists had to drastically screw down the temperature and basically anesthetize them. Krypton put up a good fight until about −240°F, at which point super-reactive fluorine can latch onto it.

Getting krypton to react, though, was like mixing baking soda and vinegar compared with the struggle to graft something onto argon. After Bartlett’s xenon solid in 1962 and the first krypton solid in 1963, it took thirty-seven frustrating years until Finnish scientists finally pieced together the right procedure for argon in 2000. It was an experiment of Fabergé delicacy, requiring solid argon; hydrogen gas; fluorine gas; a highly reactive starter compound, cesium iodide, to get the reaction going; and well-timed bursts of ultraviolet light, all set to bake at a frigid −445°F. When things got a little warmer, the argon compound collapsed.

Nevertheless, below that temperature argon fluorohydride was a durable crystal. The Finnish scientists announced the feat in a paper with a refreshingly accessible title for a scientific work, “A Stable Argon Compound.” Simply announcing what they’d done was bragging enough. Scientists are confident that even in the coldest regions of space, tiny helium and neon have never bonded with another element. So for now, argon wears the title belt for the single hardest element humans have forced into a compound.

Given argon’s reluctance to change its habits, forming an argon compound was a major feat. Still, scientists don’t consider noble gas compounds, or even alpha–beta shifts in tin, truly different states of matter. Different states require appreciably different energies, in which atoms interact in appreciably different ways. That’s why solids, where atoms are (mostly) fixed in place; liquids, where particles can flow around each other; and gases, where particles have the freedom to carom about, are distinct states of matter.

Still, solids, liquids, and gases have lots in common. For one, their particles are well-defined and discrete. But that sovereignty gives way to anarchy when you heat things up to the plasma state and atoms start to disintegrate, or when you cool things down enough and collectivist states of matter emerge, where the particles begin to overlap and combine in fascinating ways.

Take superconductors. Electricity consists of an easy flow of electrons in a circuit. Inside a copper wire, the electrons flow between and around the copper atoms, and the wire loses energy as heat when the electrons crash into the atoms. Obviously, something suppresses that process in superconductors, since the electrons flowing through them never flag. In fact, the current can flow forever as long as the superconductor remains chilled, a property first detected in mercury at −450°F in 1911. For decades, most scientists assumed that superconducting electrons simply had more space to maneuver: atoms in superconductors have much less energy to vibrate back and forth, giving electrons a wider shoulder to slip by and avoid crashes. That explanation’s true as far as it goes. But really, as three scientists figured out in 1957, it’s electrons themselves that metamorphose at low temperatures.

When zooming past atoms in a superconductor, electrons tug at the atoms’ nuclei. The positive nuclei drift slightly toward the electrons, and this leaves a wake of higher-density positive charge. The higher-density charge attracts other electrons, which in a sense become paired with the first. It’s not a strong coupling between electrons, more like the weak bond between argon and fluorine; that’s why the coupling emerges only at low temperatures, when atoms aren’t vibrating too much and knocking the electrons apart. At those low temperatures, you cannot think of electrons as isolated; they’re stuck together and work in teams. And during their circuit, if one electron gets gummed up or knocks into an atom, its partners yank it through before it slows down. It’s like that old illegal football formation where helmetless players locked arms and stormed down the field—a flying electron wedge. This microscopic state translates to superconductivity when billions of billions of pairs all do the same thing.

Incidentally, this explanation is known as the BCS theory of superconductivity, after the last names of the men who developed it: John Bardeen, Leon Cooper (the electron partners are called Cooper pairs), and Robert Schrieffer.* That’s the same John Bardeen who coinvented the germanium transistor, won a Nobel Prize for it, and dropped his scrambled eggs on the floor when he heard the news. Bardeen dedicated himself to superconductivity after leaving Bell Labs for Illinois in 1951, and the BCS trio came up with the full theory six years on. It proved so good, so accurate, they shared the 1972 Nobel Prize in Physics for their work. This time, Bardeen commemorated the occasion by missing a press conference at his university because he couldn’t figure out how to get his new (transistor-powered) electric garage door open. But when he visited Stockholm for the second time, he presented his two adult sons to the king of Sweden, just as he’d promised he would back in the fifties.

If elements are cooled below even superconducting temperatures, the atoms grow so loopy that they overlap and swallow each other up, a state called coherence. Coherence is crucial to understanding that impossible Einsteinian state of matter promised earlier in this chapter. Understanding coherence requires a short but thankfully element-rich detour into the nature of light and another once impossible innovation, lasers.

Few things delight the odd aesthetic sense of a physicist as much as the ambiguity, the two-in-oneness, of light. We normally think of light as waves. In fact, Einstein formulated his special theory of relativity in part by thinking about how the universe would appear to him—what space would look like, how time would (or wouldn’t) pass—if he rode sidesaddle on one of those waves. (Don’t ask me how he imagined this.) At the same time, Einstein proved (he’s ubiquitous in this arena) that light sometimes acts like particle BBs called photons. Combining the wave and particle views (called wave-particle duality), he correctly deduced that light is not only the fastest thing in the universe, it’s the fastest possible thing, at 186,000 miles per second, in a vacuum. Whether you detect light as a wave or photons depends on how you measure it, since light is neither wholly one nor the other.

Despite its austere beauty in a vacuum, light gets corrupted when it interacts with some elements. Sodium can slow light down to 38 mph, almost twenty times slower than sound. Praseodymium can even catch light, hold on to it for a few seconds like a baseball, then toss it in a different direction.
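A quick check on the sodium arithmetic, assuming sound travels about 767 mph in air at roughly room temperature (that figure is an assumption; the other two numbers come from the text):

```python
SLOW_LIGHT_MPH = 38          # light crawling through sodium, per the text
SOUND_MPH = 767              # assumed speed of sound in air near 20 degrees C
LIGHT_MPH = 186_000 * 3600   # 186,000 miles per second in a vacuum, per the text

sound_ratio = SOUND_MPH / SLOW_LIGHT_MPH
vacuum_ratio = LIGHT_MPH / SLOW_LIGHT_MPH
print(f"slower than sound by a factor of {sound_ratio:.1f}")
print(f"slower than light in a vacuum by a factor of {vacuum_ratio:,.0f}")
```

The first ratio comes out just over 20, consistent with “almost twenty times slower than sound”; the second is in the tens of millions.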

Lasers manipulate light in subtler ways. Remember that electrons are like elevators: they never rise from level 1 to level 3.5 or drop from level 5 to level 1.8. Electrons jump only between whole-number levels. When excited electrons crash back down, they jettison excess energy as light, and because electron movement is so constrained, so too is the color of the light produced. It’s monochromatic—at least in theory. In practice, electrons in different atoms are simultaneously dropping from level 3 to 1, or 4 to 2, or whatever—and every different drop produces a different color. Plus, different atoms emit light at different times. To our eyes, this light looks uniform, but on a photon level, it’s uncoordinated and jumbled.
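The elevator rule has a concrete payoff: each drop between whole-number floors fixes one color. The text doesn’t name an element here, so this sketch assumes hydrogen, whose floors obey the Rydberg formula, and computes the wavelength for a few drops, including the 3-to-1 and 4-to-2 drops mentioned above.

```python
# Rydberg constant for hydrogen, in inverse meters (assumed example element).
R_H = 1.09678e7

def emission_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength of the photon emitted when an electron drops n_upper -> n_lower."""
    if not n_upper > n_lower >= 1:
        raise ValueError("need n_upper > n_lower >= 1")
    inv_wavelength = R_H * (1 / n_lower**2 - 1 / n_upper**2)  # in 1/m
    return 1e9 / inv_wavelength  # convert meters to nanometers

for upper, lower in [(3, 1), (4, 2), (3, 2)]:
    print(f"{upper} -> {lower}: {emission_wavelength_nm(upper, lower):.1f} nm")
```

The 3-to-1 drop lands around 103 nm (ultraviolet, invisible), the 4-to-2 drop around 486 nm (blue-green), and the 3-to-2 drop around 656 nm (red). A glowing tube of hydrogen mixes all of these at once, which is exactly the jumble lasers are built to avoid.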

Lasers circumvent that timing problem by limiting what floors the elevator stops at (as do their cousins, masers, which work the same way but produce non-visible light). The most powerful, most impressive lasers today—capable of producing beams that, for a fraction of a second, produce more power than the whole United States—use crystals of yttrium spiked with neodymium. Inside the laser, a strobe light curls around the neodymium-yttrium crystal and flashes incredibly quickly at incredibly high intensities. This infusion of light excites the electrons in the neodymium and makes them jump way, way higher than normal. To keep with our elevator bit, they might rocket up to the tenth floor. Suffering vertigo, they immediately ride back down to the safety of, say, the second floor. Unlike normal crashes, though, the electrons are so disturbed that they have a breakdown and don’t release their excess energy as light; they shake and release it as heat. Also, relieved at being on the safe second floor, they get off the elevator, dawdle, and don’t bother hurrying down to the ground floor.

In fact, before they can hurry down, the strobe light flashes again. This sends more of the neodymium’s electrons flying up to the tenth floor and crashing back down. When this happens repeatedly, the second floor gets crowded; when there are more electrons on the second floor than the first, the laser has achieved “population inversion.” At this point, if any dawdling electrons do jump to the ground floor, they disturb their already skittish and crowded neighbors and knock them over the balcony, which in turn knocks others down. And notice the simple beauty of this: when the neodymium electrons drop this time, they’re all dropping from two to one at the same time, so they all produce the same color of light. This coherence is the key to a laser. The rest of the laser apparatus cleans up light rays and hones the beams by bouncing them back and forth between two mirrors. But at that point, the neodymium-yttrium crystal has done its work to produce coherent, concentrated light, beams so powerful they can induce thermonuclear fusion, yet so focused they can sculpt a cornea without frying the rest of the eye.
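The crowding of the second floor can be sketched as simple bookkeeping. This toy model is an assumption, not a model of a real neodymium laser: each flash sends a fixed fraction `pump` of ground-floor electrons up (they are assumed to tumble instantly to the second floor), and a fixed fraction `decay` of second-floor dawdlers drops back down. Both parameter names are hypothetical.

```python
def simulate_populations(pump: float, decay: float, steps: int, n_total: float = 1.0):
    """Toy two-floor bookkeeping for the elevator picture.

    Each step, a fraction `pump` of ground-floor electrons is flashed up and
    lands on the second floor, while a fraction `decay` of second-floor
    electrons drops to the ground floor. Returns (ground, second).
    """
    ground, second = n_total, 0.0
    for _ in range(steps):
        flow = pump * ground - decay * second  # net traffic up to floor two
        ground -= flow
        second += flow
    return ground, second

for pump in (0.01, 0.20):
    g, s = simulate_populations(pump=pump, decay=0.05, steps=1000)
    status = "inverted" if s > g else "not inverted"
    print(f"pump={pump:.2f}: ground={g:.3f}, second={s:.3f} ({status})")
```

In this picture the populations settle where the ratio of second floor to ground floor equals `pump / decay`, so population inversion appears only when the strobe refills the second floor faster than the dawdlers leave it.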

Based on that technological description, lasers may seem more engineering challenges than scientific marvels, yet lasers—and masers, which historically came first—encountered real scientific prejudice when they were developed in the 1950s. Charles Townes remembers that even after he’d built the first working maser, senior scientists would look at him wearily and say, Sorry, Charles, that’s impossible. And these weren’t hacks—small-minded naysayers who lacked the imagination to see the Next Big Thing. Both John von Neumann, who helped design the basic architecture of modern computers (and modern nuclear bombs), and Niels Bohr, who did as much to explain quantum mechanics as anyone, dismissed Townes’s maser to his face as simply “not possible.”

Bohr and von Neumann blew it for a simple reason: they forgot about the duality of light. More specifically, the famous uncertainty principle of quantum mechanics led them astray. Because Werner Heisenberg’s uncertainty principle is so easy to misunderstand—but once understood is a powerful tool for making new forms of matter—the next section will unpack this little riddle about the universe.

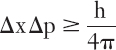

If nothing tickles physicists like the dual nature of light, nothing makes physicists wince like hearing someone expound on the uncertainty principle in cases where it doesn’t apply. Despite what you may have heard, it has (almost*) nothing to do with observers changing things by the mere act of observing. All the principle says, in its entirety, is this:

$$\Delta x \, \Delta p \geq \frac{h}{4\pi}$$

That’s it.

Now, if you translate quantum mechanics into English (always risky), the equation says that the uncertainty in something’s position (Δx) times the uncertainty in its speed and direction (its momentum, Δp) always exceeds or is equal to the number “h divided by four times pi.” (The h stands for Planck’s constant, which is such a small number, about 100 trillion trillion times smaller than one, that the uncertainty principle applies only to tiny, tiny things such as electrons or photons.) In other words, if you know a particle’s position very well, you cannot know its momentum well at all, and vice versa.
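To see why only tiny things notice, here is a worked version of the inequality. It uses Planck’s constant in SI units (about 6.6 × 10⁻³⁴ joule-seconds; its numerical size depends on the units chosen) and two illustrative cases that are assumptions, not from the text: an electron pinned down to about the width of an atom (0.1 nanometer) and a 145-gram baseball located to within a millimeter.

```python
import math

H = 6.62607015e-34  # Planck's constant, J*s (SI value)

def min_velocity_spread(mass_kg: float, position_spread_m: float) -> float:
    """Smallest velocity uncertainty allowed by dx * dp >= h / (4*pi)."""
    min_dp = H / (4 * math.pi * position_spread_m)
    return min_dp / mass_kg

electron = min_velocity_spread(9.109e-31, 1e-10)  # electron confined to ~an atom's width
baseball = min_velocity_spread(0.145, 1e-3)       # baseball located to within 1 mm
print(f"electron: {electron:.2e} m/s")
print(f"baseball: {baseball:.2e} m/s")
```

The electron’s speed is smeared over hundreds of kilometers per second, while the baseball’s fuzziness is around 10⁻³¹ meters per second, far too small for any instrument to notice.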

Note that these uncertainties aren’t uncertainties about measuring things, as if you had a bad ruler; they’re uncertainties built into nature itself. Remember how light has a dual nature, part wave, part particle? In dismissing the laser, Bohr and von Neumann got stuck on the ways light acts like particles, or photons. To their ears, lasers sounded so precise and focused that the uncertainty in the photons’ positions would be nil. That meant the uncertainty in the momentum had to be large, which meant the photons could be flying off at any energy or in any direction, which seemed to contradict the idea of a tightly focused beam.

They forgot that light behaves like waves, too, and that the rules for waves are different. For one, how can you tell where a wave is? By its nature, it spreads out—a built-in source of uncertainty. And unlike particles, waves can swallow and combine with other waves. Two rocks thrown into a pond will kick up the highest crests in the area between them, which receives energy from smaller waves on both sides.
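The pond picture can be sketched with a little arithmetic on waves. This sketch represents each wave as a unit-amplitude arrow with a phase (a standard trick, not something the text describes) and adds them: two crests that meet in step double the height, a crest that meets a trough cancels, and many waves that march in step add up enormously better than the same number of waves with jumbled timing.

```python
import cmath
import math
import random

def combined_intensity(phases):
    """Add unit-amplitude waves with the given phases; return |sum|^2."""
    total = sum(cmath.exp(1j * phi) for phi in phases)
    return abs(total) ** 2

print(combined_intensity([0.0, 0.0]))      # two crests meet: amplitude 2, intensity 4
print(combined_intensity([0.0, math.pi]))  # crest meets trough: cancels (up to rounding)

n = 1000
in_step = combined_intensity([0.0] * n)    # every wave in step, like a laser
rng = random.Random(0)
jumbled = combined_intensity([rng.uniform(0, 2 * math.pi) for _ in range(n)])
print(in_step, jumbled)
```

With a thousand waves in step the intensity is a thousand squared, a million; with random timing it typically lands near a thousand, which is roughly the difference between an ordinary bulb’s uncoordinated light and a coherent beam.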

In the laser’s case, there aren’t two but trillions of trillions of “rocks” (i.e., electrons) kicking up waves of light, which all mix together. The key point is that the uncertainty principle doesn’t apply to sets of particles, only to individual particles. Within a beam, a set of light particles, it’s impossible to say where any one photon is located. And with such a high uncertainty about each photon’s position inside the beam, you can channel its energy and direction very, very precisely and make a laser. This loophole is difficult to exploit but is enormously powerful once you’ve got your fingers inside it—which is why Time magazine honored Townes by naming him one of its “Men of the Year” (along with Pauling and Segrè) in 1960, and why Townes won a Nobel Prize in 1964 for his maser work.

In fact, scientists soon realized that much more fit inside the loophole than photons. Just as light beams have a dual particle/wave nature, the farther you burrow down and parse electrons and protons and other supposed hard particles, the fuzzier they seem. Matter, at its deepest, most enigmatic quantum level, is indeterminate and wavelike. And because, deep down, the uncertainty principle is a mathematical statement about the limits of drawing boundaries around waves, those particles fall under the aegis of uncertainty, too.

Now again, this works only on minute scales, scales where h, Planck’s constant, a number 100 trillion trillion times smaller than one, isn’t considered small. What embarrasses physicists is when people extrapolate up and out to human beings and claim that ΔxΔp ≥ h/4π really “proves” you cannot observe something in the everyday world without changing it—or, for the heuristically daring, that objectivity itself is a scam and that scientists fool themselves into thinking they “know” anything. In truth, there’s only about one case where uncertainty on a nanoscale affects anything on our macroscale: that outlandish state of matter—Bose-Einstein condensate (BEC)—promised earlier in this chapter.

This story starts in the early 1920s when Satyendra Nath Bose, a chubby, bespectacled Indian physicist, made an error while working through some quantum mechanics equations during a lecture. It was a sloppy, undergraduate boner, but it intrigued Bose. Unaware of his mistake at first, he’d worked everything out, only to find that the “wrong” answers produced by his mistake agreed very well with experiments on the properties of photons—much better than the “correct” theory.*

So as physicists have done throughout history, Bose decided to pretend that his error was the truth, admit that he didn’t know why, and write a paper. His seeming mistake, plus his obscurity as an Indian, led every established scientific journal in Europe to reject it. Undaunted, Bose sent his paper directly to Albert Einstein. Einstein studied it closely and determined that Bose’s answer was clever—it basically said that certain particles, like photons, could collapse on top of each other until they were indistinguishable. Einstein cleaned the paper up a little, translated it into German, and then expanded Bose’s work into another, separate paper that covered not just photons but whole atoms. Using his celebrity pull, Einstein had both papers published jointly.

In them, Einstein included a few lines pointing out that if atoms got cold enough—billions of times colder than even superconductors—they would condense into a new state of matter. However, producing atoms that cold lay so far beyond the technology of the day that not even far-thinking Einstein could comprehend the possibility. He considered his condensate a frivolous curiosity. Amazingly, scientists got a glimpse of Bose-Einstein matter a decade later, in a type of superfluid helium where small pockets of atoms bound themselves together. The Cooper pairs of electrons in superconductors also behave like the BEC in a way. But this binding together in superfluids and superconductors was limited, and not at all like the state Einstein envisioned—his was a cold, sparse mist. Regardless, the helium and BCS people never pursued Einstein’s conjecture, and nothing more happened with the BEC until 1995, when two clever scientists at the University of Colorado conjured some up with a gas of rubidium atoms.

Fittingly, one technical achievement that made real BEC possible was the laser—which was based on ideas first espoused by Bose about photons. That may seem backward, since lasers usually heat things up. But lasers can cool atoms, too, if wielded properly. On a fundamental, nanoscopic level, temperature just measures the average speed of particles. Hot molecules are furious little clashing fists, and cold molecules drag along. So the key to cooling something down is slowing its particles down. In laser cooling, scientists cross a few beams, Ghostbusters-like, and create a trap of “optical molasses.” When the rubidium atoms in the gas hurtled through the molasses, the lasers pinged them with low-intensity photons. The rubidium atoms were bigger and more powerful, so this was like shooting a machine gun at a screaming asteroid. Size disparities notwithstanding, shooting an asteroid with enough bullets will eventually halt it, and that’s exactly what happened to the rubidium atoms. After absorbing photons from all sides, they slowed, and slowed, and slowed some more, and their temperature dropped to just 1/10,000 of a degree above absolute zero.
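To make the machine-gun-versus-asteroid picture concrete, here is a rough count of the bullets. The text says only “rubidium,” so the isotope (rubidium-87) and the wavelength of the cooling light (780 nanometers) are assumptions. Strictly, temperature tracks average kinetic energy, which for a gas gives a typical (root-mean-square) speed of √(3kT/m). The sketch also ignores the clever part of laser cooling that makes the kicks land mostly against the atom’s motion; it simply counts how many photon-sized nudges it takes to cancel a typical speed.

```python
import math

H = 6.62607015e-34      # Planck's constant, J*s
K_B = 1.380649e-23      # Boltzmann constant, J/K
AMU = 1.66053907e-27    # atomic mass unit, kg

M_RB87 = 86.909 * AMU   # assumed isotope: rubidium-87
WAVELENGTH = 780e-9     # assumed cooling-light wavelength, m

def rms_speed(temperature_k: float, mass_kg: float = M_RB87) -> float:
    """Root-mean-square speed of a gas at the given temperature: sqrt(3kT/m)."""
    return math.sqrt(3 * K_B * temperature_k / mass_kg)

# Each absorbed photon carries momentum h / wavelength, so it changes the
# atom's velocity by this much.
recoil = H / (WAVELENGTH * M_RB87)

v_room = rms_speed(300.0)
print(f"room-temperature speed: {v_room:.0f} m/s")
print(f"kick per photon:        {recoil * 1000:.1f} mm/s")
print(f"photons to stop it:     ~{v_room / recoil:,.0f}")
print(f"speed at 1/10,000 K:    {rms_speed(1e-4):.2f} m/s")
```

Under these assumptions a room-temperature rubidium atom moves at roughly 300 meters per second, each photon trims only about 6 millimeters per second, and tens of thousands of hits are needed to bring it to a near standstill.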

Still, even that temperature is far too sweltering for the BEC (you can grasp now why Einstein was so pessimistic). So the Colorado duo, Eric Cornell and Carl Wieman, incorporated a second phase of cooling in which a magnet repeatedly sucked off the “hottest” remaining atoms in the rubidium gas. This is basically a sophisticated way of blowing on a spoonful of soup—cooling something down by pushing away warmer atoms. With the energetic atoms gone, the overall temperature kept sinking. By doing this slowly and whisking away only the few hottest atoms each time, the scientists plunged the temperature to a billionth of a degree (0.000000001) above absolute zero. At this point, finally, the sample of two thousand rubidium atoms collapsed into the Bose-Einstein condensate, the coldest, gooeyest, and most fragile mass the universe has ever known.
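The soup-blowing trick can be simulated in a few lines. This is a toy sketch in arbitrary units (Boltzmann’s constant and the atom’s mass both set to 1), and it makes a big simplifying assumption: after each round of removing the hottest atoms, the survivors instantly reshuffle their energies into a fresh thermal distribution at a lower temperature. The `cut_fraction` parameter is hypothetical, standing in for how aggressively the magnet skims.

```python
import random

def evaporate(n_atoms: int, rounds: int, cut_fraction: float, seed: int = 1):
    """Toy evaporative cooling in units where k_B = m = 1.

    Each round removes the `cut_fraction` most energetic atoms, then assumes
    the survivors re-thermalize at the temperature implied by their mean
    kinetic energy. Returns a list of (atoms_left, temperature) per round.
    """
    rng = random.Random(seed)
    temperature = 1.0
    n = n_atoms
    history = []
    for _ in range(rounds):
        # Each velocity component is Gaussian with variance T (k_B = m = 1).
        sigma = temperature ** 0.5
        energies = sorted(
            0.5 * sum(rng.gauss(0.0, sigma) ** 2 for _ in range(3))
            for _ in range(n)
        )
        keep = energies[: int(n * (1 - cut_fraction))]  # skim off the hottest
        n = len(keep)
        # Mean kinetic energy is (3/2) T, so T = (2/3) * mean energy.
        temperature = (2 / 3) * sum(keep) / n
        history.append((n, temperature))
    return history

for atoms, temp in evaporate(n_atoms=20_000, rounds=10, cut_fraction=0.1):
    print(f"{atoms:6d} atoms left, temperature {temp:.3f}")
```

Each round sacrifices a tenth of the atoms and cuts the temperature of the rest by something like a fifth; repeating the cycle patiently, as the text describes, trades atoms for coldness.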

But to say “two thousand rubidium atoms” obscures what’s so special about the BEC. There weren’t two thousand rubidium atoms as much as one giant marshmallow of a rubidium atom. It was a singularity, and the explanation for why relates back to the uncertainty principle. Again, temperature just measures the average speed of atoms. If the atoms’ temperature dips below a billionth of a degree, that’s not much speed at all—meaning the uncertainty about that speed is absurdly low. It’s basically zero. And because of the wavelike nature of atoms on that level, the uncertainty about their position must be quite large.
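Here is a rough sketch of how fast that position uncertainty swells as the gas cools. It plugs a typical momentum, mass times root-mean-square speed, into ΔxΔp ≥ h/4π as a stand-in for Δp. That substitution is a back-of-the-envelope assumption, as is the choice of rubidium-87 for the atom’s mass.

```python
import math

H = 6.62607015e-34                 # Planck's constant, J*s
K_B = 1.380649e-23                 # Boltzmann constant, J/K
M_RB87 = 86.909 * 1.66053907e-27   # assumed isotope: rubidium-87, kg

def min_position_spread(temperature_k: float) -> float:
    """Rough lower bound on dx, taking dp ~ m * v_rms with v_rms = sqrt(3kT/m)."""
    dp = M_RB87 * math.sqrt(3 * K_B * temperature_k / M_RB87)
    return H / (4 * math.pi * dp)

for label, t in [("room temperature", 300.0),
                 ("after laser cooling", 1e-4),
                 ("a billionth of a degree", 1e-9)]:
    print(f"{label:>24}: dx >= {min_position_spread(t) * 1e9:,.3f} nm")
```

At room temperature the smear is about a thousandth of a nanometer, tiny even by atomic standards; at a billionth of a degree it stretches to several hundred nanometers, more than half a million times wider, which is how neighboring atoms can blur into one another.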

So large that, as the two scientists relentlessly cooled the rubidium atoms and squeezed them together, the atoms began to swell, distend, overlap, and finally disappear into each other. This left behind one large ghostly “atom” that, in theory (if it weren’t so fragile), might be capacious enough to see under a microscope. That’s why we can say that in this case, unlike anywhere else, the uncertainty principle has swooped upward and affected something (almost) human-sized. It took less than $100,000 worth of equipment to create this new state of matter, and the BEC held together for only ten seconds before falling apart. But it held on long enough to earn Cornell and Wieman the 2001 Nobel Prize.*

As technology keeps improving, scientists have gotten better and better at inducing matter to form the BEC. It’s not like anyone’s taking orders yet, but scientists might soon be able to build “matter lasers” that shoot out ultra-focused beams of atoms thousands of times more powerful than light lasers, or construct “supersolid” ice cubes that can flow through each other without losing their solidity. In our sci-fi future, such things could prove every bit as amazing as light lasers and superfluids have in our own pretty remarkable age.