IN TIMES OF CHANGE, MANAGERS ALMOST ALWAYS KNOW WHICH DIRECTION THEY SHOULD GO IN, BUT USUALLY ACT TOO LATE AND DO TOO LITTLE. CORRECT FOR THIS TENDENCY: ADVANCE THE PACE OF YOUR ACTIONS AND INCREASE THEIR MAGNITUDE. YOU’LL FIND THAT YOU’RE MORE LIKELY TO BE CLOSE TO RIGHT.

ANDY GROVE, ONLY THE PARANOID SURVIVE, 1995

Combine the ability to exert control with the need to outperform rivals, and what happens? Now it’s not just possible to influence outcomes, but often necessary.

For a stark example, let’s look at the world of professional cycling. The Tour de France has been held every year since 1903, aside from a few interruptions during wartime. It’s a grueling race with twenty-one stages over three weeks, some long and flat, winding through fields and villages, and others rising over steep mountain passes in the Alps and the Pyrénées. Going fast is a matter of strong technique and stamina as well as positive thinking. As for performance, not only is it relative—the cyclist with the lowest total time is crowned champion and wears the maillot jaune—but payoffs are highly skewed, with a huge prize and massive prestige for the winning cyclist and his team, and lesser rewards for others. (Several other prizes are awarded—best sprinter, best climber—as well as for members of the winning team.) There is also a clear end point to each stage and a culmination on the last Sunday with the final sprint on the Champs Elysées in Paris.

In this context, cyclists naturally do all they can to improve their performance. There’s constant pressure for innovation in equipment, training, nutrition, and so forth. Not surprisingly, the temptation to find less ethical ways to go faster is also present.

The use of illicit drugs was already common in the 1960s and 1970s, with amphetamines and other stimulants in vogue. But in the 1990s, with the advent of erythropoietin, better known as EPO, the game changed completely. EPO stimulates the production of red blood cells, crucial to carrying oxygen to muscles in a long and arduous race, and can significantly improve racing times. American cyclist Tyler Hamilton estimated that EPO could boost performance by about 5 percent. That may not seem like much, but at the very highest levels of competitive racing, where everyone is fit, talented, and works hard, a 5 percent gain is massive. It was roughly the difference between finishing in first place and being stuck in the middle of the pack.1

For a few years in the mid-1990s, as EPO found its way into the peloton, reporters noted that there seemed to be two groups of cyclists: those who continued to race at standard times, and a small but growing number who were noticeably faster, their legs and lungs able to tap what appeared to be an extra reserve of energy. The cyclists were running at two speeds, à deux vitesses. Sports Illustrated’s Austin Murphy wrote: “Rampant EPO use had transformed middling talents into supermen. Teams riding pan y agua—bread and water—had no chance.”2 No wonder so many professional cyclists resorted to doping. The performance difference was so clear that those who resisted had no chance of winning. Many of them quit altogether.

From 1999 through 2005, while Lance Armstrong was winning the Tour de France an unprecedented seven years in a row, there were persistent rumors of doping. Suspicion was widespread but nothing was proven. By 2008, thanks to persistent investigations, evidence of doping began to emerge. One of the first American cyclists to admit wrongdoing, Kayle Leogrande, was asked if he thought Armstrong had taken performance-enhancing drugs. To Leogrande there could be little doubt: “He’s racing in these barbaric cycling races in Europe. If you were a rider at that level, what would you do?”3

When Armstrong finally admitted in 2013 that he had used performance-enhancing drugs, he acknowledged the full menu. EPO? “Yes.” Blood doping? “Yes.” Testosterone? “Yes.” Asked if he could have won the Tour de France without resorting to these measures, Armstrong said “No.” There would have been no way to win such a competitive race without using all means at his disposal. The sad fact is that Armstrong was probably correct, although by doping he exacerbated the problem, making it impossible for others to ride clean.4

None of this is meant to justify the use of illegal drugs, of course. Many cyclists refused to dope, and their careers suffered as a consequence. We should condemn both the cyclists who used drugs and the officials who were slow to insist on stronger controls. The advent of a biological passport, which establishes a baseline for each athlete and permits the detection of deviations in key markers, is an encouraging step forward.

For our purposes, the example illustrates something else: that even a small improvement in absolute performance can make an outsize difference in relative performance, in effect between winning and losing.

ABSOLUTE IMPROVEMENT AND RELATIVE SUCCESS

To illustrate how improvements in (absolute) performance can affect (relative) success, let’s return to the example in Chapter Two, when people were asked to putt toward a hole. (We’ll skip the projector and the circles that made the hole appear larger or smaller.)

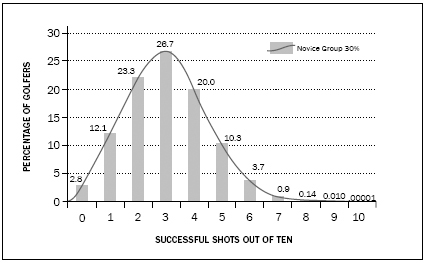

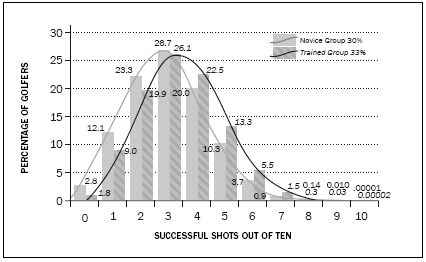

Let’s suppose that a group of novice golfers, shooting from a distance of six feet, have a 30 percent chance of sinking each putt. If we ask them to take 10 shots each (and if we assume each shot is independent, meaning there is no improvement from one shot to the next) they’ll produce the distribution in Figure 4.1. A very few (2.8 percent of the golfers) will miss all ten shots, while 12.1 percent will sink one putt, 23.3 percent will make two, and 26.7 percent (the most common result) will sink three putts. From there the distribution ramps down, with 20 percent sinking four putts, 10.3 percent making five, and 3.7 percent making six. Fewer than one percent will sink seven putts out of ten, and doing even better than that is not impossible but less and less probable.
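For readers who want to check these percentages, here is a minimal sketch that treats each golfer's ten putts as independent trials, so the number of putts made follows a binomial distribution. The function name `binomial_pmf` is my own label for illustration and is not taken from the original analysis.

```python
from math import comb


def binomial_pmf(n_shots: int, p_success: float) -> list[float]:
    """Probability of sinking exactly k putts, for k = 0..n_shots.

    Assumes every putt is independent and has the same chance of success.
    """
    return [
        comb(n_shots, k) * p_success**k * (1 - p_success) ** (n_shots - k)
        for k in range(n_shots + 1)
    ]


# Novice group: 10 putts each, 30 percent chance per putt.
novice = binomial_pmf(10, 0.30)
for putts, prob in enumerate(novice):
    print(f"{putts:2d} putts made: {prob:6.1%}")
```

Calling `binomial_pmf(10, 0.40)` in the same way gives the corresponding distribution for a group with a 40 percent success rate.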

FIGURE 4.1 NOVICE GROUP, 30% SUCCESS RATE

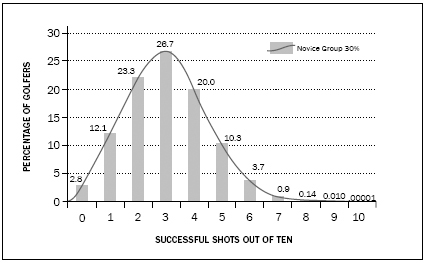

Suppose we assemble another group and provide them with putting lessons. We train them to make a smooth swing with a good follow-through. We teach them to focus their minds and to derive the benefits of positive thinking. Let’s assume that members of the Trained group have a 40 percent success rate, a considerable improvement over the 30 percent rate for Novices but still far short of the 54.8 percent for professional golfers mentioned in Chapter Two. If members of this group take 10 shots each, they’ll produce the distribution in Figure 4.2. Now almost none will miss all ten, 4 percent will sink just one, 12.1 percent will make two, 21.5 percent will sink three, 25.1 percent will make four, and so on.

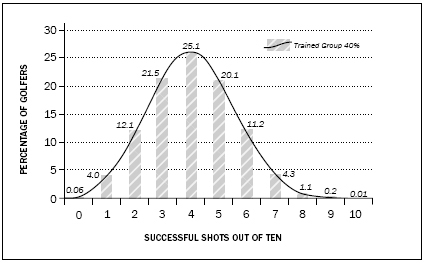

If we bring together the Novice and Trained golfers, as shown in Figure 4.3, we see quite a bit of overlap between the two groups. In any given competition, some Novice golfers will do better than Trained golfers.

FIGURE 4.2 TRAINED GROUP: 40% SUCCESS RATE

FIGURE 4.3 NOVICE 30% AND TRAINED 40% GROUPS, COMBINED

Here’s the question: If we hold a contest between Novice and Trained golfers—let’s say, 30 Novice and 30 Trained golfers each taking 20 shots—what’s the chance that a member of one or the other group will finish in first place out of all 60 contestants? Of course it’s more likely a Trained golfer will win, but just how likely? Is there a good chance that a Novice will come out on top, or only a slim one?

To find out, I used a Monte Carlo simulation, a technique developed in the 1940s when scientists at the Manhattan Project needed to predict the outcome of nuclear chain reactions. The physics of chain reactions is so complicated that a precise calculation of what would happen in a given instance was impossible. A better method was to calculate what would happen in many trials, and then aggregate the results to get a sense of the distribution of possible outcomes. The scientists, John von Neumann and Stanislaw Ulam, named their method after the Monte Carlo casino in Monaco, with its famous roulette wheel. Any single spin of a roulette wheel will land the ball in just one slot, which won’t tell us a great deal. Spin the roulette wheel a thousand times, however, and you’ll get a very good picture of what can happen.5

To examine the impact of a change in absolute performance on relative performance, I devised a Monte Carlo simulation to conduct one thousand trials of a competition where 30 Novice golfers and 30 Trained golfers take 20 shots each. The results showed that 86.5 percent of the time—865 out of 1,000 trials—the winner came from the Trained group. There was a tie between a Trained and a Novice golfer 9.1 percent of the time, and only 4.4 percent of the time—just 44 times out of 1,000 trials—did the top score come from the Novice group. The Trained group’s absolute advantage, a 40 percent success rate versus 30 percent, gave its members an almost insurmountable relative advantage. Less than one time in twenty would the top Novice finish ahead of all 30 Trained golfers.
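A simulation of this kind can be sketched in a few lines. What follows is an illustration under stated assumptions, not the code behind the figures reported above: I assume independent putts, I count a trial as a tie only when the top Novice score equals the top Trained score, and the function names and the random seed are hypothetical choices of mine. Because the draws are random, the percentages will differ somewhat from run to run and from the ones quoted in the text.

```python
import random


def putts_made(n_shots: int, p_success: float, rng: random.Random) -> int:
    """Simulate one golfer: count how many of n_shots putts drop."""
    return sum(rng.random() < p_success for _ in range(n_shots))


def simulate_contest(
    p_novice: float = 0.30,
    p_trained: float = 0.40,
    n_golfers: int = 30,   # golfers per group
    n_shots: int = 20,     # putts per golfer
    n_trials: int = 1000,  # number of simulated contests
    seed: int = 0,         # arbitrary seed, fixed only for repeatability
) -> dict[str, float]:
    """Return the share of contests won by each group, plus cross-group ties."""
    rng = random.Random(seed)
    tally = {"trained": 0, "novice": 0, "tie": 0}
    for _ in range(n_trials):
        best_novice = max(
            putts_made(n_shots, p_novice, rng) for _ in range(n_golfers)
        )
        best_trained = max(
            putts_made(n_shots, p_trained, rng) for _ in range(n_golfers)
        )
        if best_trained > best_novice:
            tally["trained"] += 1
        elif best_novice > best_trained:
            tally["novice"] += 1
        else:
            tally["tie"] += 1  # top score shared across the two groups
    return {group: count / n_trials for group, count in tally.items()}


results = simulate_contest()
for group, share in results.items():
    print(f"{group:8s} {share:.1%}")
```

Raising `n_trials` narrows the run-to-run variation, at the cost of a longer run.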

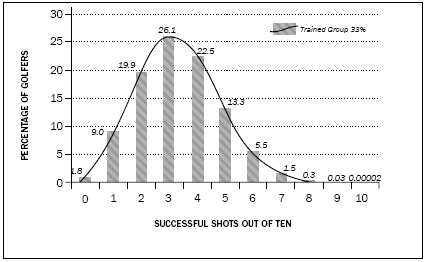

What if the gain from training was much smaller, say from a success rate of 30 percent to just 33 percent? Now the Trained group would have a distribution as shown in Figure 4.4, and there would be much more overlap with the Novice group, in Figure 4.5. The chance that a Novice might win should go up, and that’s what we find. Even so, the Monte Carlo simulation showed that in a competition where 30 members of each group take 20 shots, a member of the Novice group would finish first 19.9 percent of the time (199 out of 1,000 trials). A member of the Trained group would win 55.5 percent of the time (555 out of 1,000), with 24.6 percent resulting in a tie. Even with a relatively small improvement, from 30 percent to 33 percent, the winner would be more than twice as likely to come from the Trained group.
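Because putting is far simpler than a chain reaction, the same question can also be answered exactly rather than by simulation. The sketch below is my own cross-check, not the method used for the figures above. It assumes independent putts and the same tie convention (the top Novice score equals the top Trained score), and the function names are hypothetical. The key step is that the best score among 30 independent golfers is at most k with probability equal to one golfer's cumulative probability raised to the 30th power.

```python
from math import comb


def binomial_cdf(n_shots: int, p_success: float) -> list[float]:
    """P(one golfer sinks at most k putts), for k = 0..n_shots."""
    cdf, running = [], 0.0
    for k in range(n_shots + 1):
        running += comb(n_shots, k) * p_success**k * (1 - p_success) ** (n_shots - k)
        cdf.append(running)
    return cdf


def best_score_pmf(n_shots: int, p_success: float, n_golfers: int) -> list[float]:
    """P(the best score in a group of independent golfers is exactly k)."""
    at_most = [c**n_golfers for c in binomial_cdf(n_shots, p_success)]
    return [at_most[0]] + [at_most[k] - at_most[k - 1] for k in range(1, n_shots + 1)]


def exact_contest(
    p_novice: float, p_trained: float, n_golfers: int = 30, n_shots: int = 20
) -> dict[str, float]:
    """Exact chance that the top score comes from each group, or is shared."""
    novice = best_score_pmf(n_shots, p_novice, n_golfers)
    trained = best_score_pmf(n_shots, p_trained, n_golfers)
    # Trained wins when its best score t exceeds every possible Novice best.
    trained_wins = sum(trained[t] * sum(novice[:t]) for t in range(n_shots + 1))
    tie = sum(n * t for n, t in zip(novice, trained))
    return {"trained": trained_wins, "novice": 1.0 - trained_wins - tie, "tie": tie}


for p_trained in (0.40, 0.33):
    outcome = exact_contest(0.30, p_trained)
    print(p_trained, {group: f"{share:.1%}" for group, share in outcome.items()})
```

The exact values should land near the simulated ones, with any gap reflecting the sampling noise that comes with one thousand trials.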

FIGURE 4.4 TRAINED GROUP: 33% SUCCESS RATE

FIGURE 4.5 NOVICE 30% AND TRAINED 33% GROUPS, COMBINED

The lesson is clear: in a competitive setting, even a modest improvement in absolute performance can have a huge impact on relative performance. And conversely, failing to use all possible advantages to improve absolute performance has a crippling effect on the likelihood of winning. Under these circumstances, finding a way to do better isn’t just nice to have. For all intents and purposes, it’s essential.*

BETTER PERFORMANCE IN THE BUSINESS WORLD

The examples of EPO in cycling and the Monte Carlo golf simulation point to the same conclusion: even small improvements in absolute performance can have an outsize effect on relative performance. Still, we should be careful not to generalize too broadly. Surely the “barbaric” pressures of competitive cycling are unusual, and though a simulated putting tournament is illustrative, it’s nevertheless contrived. It’s therefore open to question whether we would find quite the same impact of modest absolute gains in real-world decisions.

For a point of comparison, let’s look at the business world. It’s very different from a bicycle race or putting competition, of course. The distribution of payoffs in business is rarely stated explicitly, with fixed prizes for first, second, and third place. Nor is there usually a precise end point when a company needs to be among the leaders or face elimination. Nor is there anything quite like EPO, a powerful drug that, all else being equal, can raise performance by 5 percent. Companies can’t pop a pill to become 5 percent more efficient or 5 percent more innovative.

Yet for all these differences, business nevertheless shares many of the same competitive dynamics. Although there may not be a clearly defined payoff structure, payoffs in business are often highly skewed, with a great disparity between top and low performers. There may be no precise end point, but that’s not necessarily a source of comfort, because the threat of elimination can be constant. Furthermore, unlike sports, in which the rules are known to all and the standings are clear for all to see, competition in business has many sources of uncertainty. Technologies can change suddenly and dramatically, new competitors can enter the fray at any time, consumer preferences can shift from one week to the next, and rivals can merge or form alliances. If anything, competition in business is more dynamic and less forgiving than in sports. Not surprisingly, there is incessant pressure to find ways of doing better, whether through innovative technology, new products and services, or simply better execution. Only by taking chances, by pushing the envelope, can companies hope to stay ahead of rivals. Michael Raynor of Deloitte Consulting calls this “the strategy paradox”: that strategies with the greatest possibility of success also run the greatest chance of failure. “Behaviorally, at least,” Raynor observes, “the opposite of success isn’t failure, but mediocrity. . . . Who dares wins . . . or loses.”6 A commitment to bold action may not be sufficient to guarantee success, but when performance is relative and payoffs are highly skewed, one thing is assured: playing it safe will almost guarantee failure. You’re bound to be overtaken by rivals who are willing to take big risks to come out ahead.

The importance of taking action in business—who dares wins—isn’t a new idea. Already in 1982, the first principle of success in Tom Peters and Robert Waterman’s In Search of Excellence was “a bias for action,” which they defined as “a preference for doing something—anything—rather than sending a question through cycles and cycles of analyses and committee reports.”7 One of Stanford professor Robert Sutton’s rules for innovation is to “reward success and failure, punish inaction.”8 The failure to act is a greater sin than taking action and failing, because action brings at least a possibility of success, whereas inaction brings none. Virgin founder Richard Branson titled one of his books Screw It, Let’s Do It—a provocative title, and surely not meant as an ironclad rule, but nevertheless conveying a crucial insight. In the highly competitive industries in which Branson operated, such as retail and airlines, the willingness to take bold action was a necessity. Standing pat would inevitably lead to failure. Heike Bruch and Sumantra Ghoshal, in A Bias for Action, took this thinking one step further: “While experimentation and flexibility are important for companies, in our observation the most critical challenge for companies is exactly the opposite: determined, persistent, and relentless action-taking to achieve a purpose, against all odds.”9 Why “against all odds”? Because in a competitive game with skewed payoffs, only those who are willing to defy the odds will be in a position to win.

The use of bias by Peters and Waterman and by Bruch and Ghoshal is worthy of note. A great deal of decision research has been concerned with guarding against biases. Why, then, does bias seem to be discussed here in a favorable way?

For that, we have to come back to the word itself. Decision research has often been concerned with cognitive biases, which are mental shortcuts that sometimes lead people to make incorrect judgments. Cognitive biases are unconscious. Once we’re aware of them, we can try (although often with difficulty) to correct for them. In everyday speech, however, a bias isn’t just an unconscious cognitive error. It refers more generally to a preference or predisposition and can even be deliberate. You might have a tendency to, say, vote for incumbents, perhaps on the theory that they have valuable experience that shouldn’t be lost. (Or maybe, given the gridlock in Washington, your bias is in the opposite direction—to throw the rascals out.) Or you might have a preference for aisle seats on airplanes, because you like to get up and walk around during flights. These are biases in the sense that they reflect a consistent preference or inclination. They’re programmed responses that let you act quickly and efficiently, without having to think carefully each time the question comes up. They’re rules of thumb. If your predisposition is to choose an aisle seat, you can be said to have a bias, although such a bias is neither unconscious nor dangerous.

In strategic management, a bias for action simply means a preference for action over inaction. Such a preference arises from the recognition that when performance is relative and payoffs are highly skewed, only those who take outsize risks will be in a position to win. The University of Alberta team displayed a bias for risk taking when it pursued what it admitted was “aggressive and sometimes extremely risky strategy.” They weren’t wrong. Their bias for taking risks reflected an astute understanding of the competitive context.

WHY IT’S OFTEN BETTER TO ACT

In Chapter Two we saw that contrary to popular thinking, it’s not true that people suffer from a pervasive illusion of control. The more serious error may be a Type II error, the failure to understand how much control they have. Similarly, in Chapter Three we saw that when it comes to understanding performance, the more serious error may be Type II, the failure to recognize the extent to which payoffs are skewed.

Put them together, and not only can we improve outcomes by taking action, but given the nature of competitive forces we’re much better off erring on the side of action. That’s what Intel chairman Andy Grove meant by the title of his book, Only the Paranoid Survive. Grove didn’t say that all who are paranoid will be sure to survive. He made no claim that paranoia leads predictably to survival. His point was merely that in the highly competitive industry he knew best—semiconductors—only companies that push themselves to be among the very best and are willing to take risks will have a chance of living to see another day. The choice of words was deliberate. Grove knew from experience that paranoia may not ensure success, but any companies that survive will have exhibited something resembling paranoia.

Is Grove’s dictum applicable to all industries? Not to the same extent as to semiconductors. It might not make sense for, say, running a restaurant or a law firm, or a company in whose industry the pace of change and the distribution of payoffs are more forgiving. There’s no need for the makers of candy bars or razor blades, products where technology is relatively stable and consumer tastes don’t change much, to gamble everything on a risky new approach. But high-tech companies, like makers of smartphones, absolutely need to. In fact, in many industries the intensity of competition, coupled with an acceleration in technological change, means that the need to outperform rivals is more intense than even a few years ago.10 As a rule, preferring to risk a Type I error rather than a Type II error makes more sense. As Grove remarked in the quote at the start of this chapter, the natural tendency of many managers is to act too late and to do too little. We should, he urged, correct this mistake. The best course of action is not only to go faster but to do more. True, you may not always win, but at least you will improve your chances. That was a good rule of thumb in the 1990s, when Grove took a gamble on moving into microprocessors, and it’s still true today. In 2013 Intel was under pressure as its position in microprocessors for PCs was fading because of the growing popularity of tablets, smartphones, and cloud computing. Chief executive Paul Otellini announced that he was stepping down three years short of the company retirement age, citing the need for new leadership. Meanwhile, Intel chairman Andy Bryant told employees to prepare for major change. Past success didn’t guarantee future profits, he reminded them. Bryant pointed out that customers had changed, and that Intel had to change as well. Where revenues come from today is not where they will in the future.11 Once again, the greater mistake would be to err on the side of complacency. That way extinction lies.

WHICH ONE IS THE SPECIAL CASE?

If we want to understand the mechanisms of judgment and choice, it makes good sense to design experiments that remove the ability to influence outcomes and the need to outperform rivals. That way, to use Dan Ariely’s metaphor, we can create the equivalent of a strobe light to capture a single frame.

Thanks to the abundance of such experiments and the insights they have given us about judgment and choice, it’s easy to imagine that such decisions represent a sort of baseline or norm. Decisions for which we can influence outcomes and for which we have to outperform rivals might be thought of as a special case, worth noting but hardly representative of most decisions.

But we could just as easily turn this logic on its head. In the real world, the combination of these two features—an ability to exert control over outcomes and the need to outperform rivals—isn’t a special case at all. In many domains it’s the norm. If anything, those carefully designed experiments that remove control and competition are more accurately seen as the special case. True, their findings can be applied to many real world decisions, including consumer behavior, in which people make discrete choices from explicit options, and financial investing, in which we cannot easily influence the value of an asset. But they should not be thought to capture the dynamics of many real-world decisions.

Unfortunately, in our desire to conduct careful research that conforms to the norms of social science, with its rigorous controls, we have sometimes generalized findings to situations that are markedly different. As an example, a recent study in Strategic Management Journal, a leading academic publication, contended that better strategic decisions could result from a technique called similarity-based forecasting.12 To demonstrate the effectiveness of this approach, it tested the accuracy of predictions about the success of Hollywood movies. A model that looked at the past performance of similar movies, rather than relying on hunches or anecdotes, produced better predictions of box office success. Decision models can be enormously powerful tools (as we’ll see in Chapter Nine), and predicting movie success is surely one kind of real-world decision. Yet predicting the success of The Matrix or The War of the Worlds calls for an accurate judgment, period. It’s a mistake to equate the prediction of an event we cannot influence with the broader field of strategic management, which not only affords the chance to influence outcomes but also involves a competitive dimension. In our desire to devise predictive models, we sometimes overlook the stuff of management.

I recently observed a similar oversight at my institute, IMD. One of our most successful programs, “Advanced Strategic Management,” attracts a range of senior executives who seek to boost company performance. Their desire, as the program title suggests, is to achieve better strategic management of their companies. In recent years two of my colleagues have added sessions about decision making. The finance professor showed how financial decisions are often distorted by cognitive biases, and the marketing professor did the same for consumer choices. That’s not a bad thing. Executives are surely better off knowing about common errors, and no doubt they enjoyed the sessions. But understanding decisions in finance and marketing does not adequately capture what is distinctive about strategic decisions. For a program about strategic management, we should consider situations in which executives can influence outcomes, and in which performance is not only relative but highly skewed.

Once we do that, we have to look at decision making very differently. In the next chapter we take a fresh look at a topic that’s widely mentioned but poorly understood: overconfidence.


* The improvements in these examples, from 30 to 40 percent (an increase of 33 percent) or from 30 to 33 percent (an increase of 10 percent) were greater than the gain attributed to EPO, which was estimated to boost performance by 5 percent. Does it follow that the overlap between the two groups in cycling would be greater, therefore weakening the claim that the winner very likely would have doped? The answer is no, because there’s another variable to consider. In my simulated golf competition, each participant putted twenty times. Had I asked for, say, one hundred putts, the overlap between the populations would have been much less, and the chance that a member of the Novice group would have outperformed members of the Trained group would have been far lower. Given that the Tour de France is a multistage bicycle race held over several thousand kilometers, the chance that a lower performer can beat a higher performer is very small. All else being equal, a 5 percent improvement, whether due to EPO or some other enhancement, would produce an almost insurmountable competitive advantage.
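The effect of the number of putts can be illustrated with a simpler head-to-head comparison: the exact chance that one Novice golfer strictly outscores one Trained golfer as the number of putts grows. This is a sketch under the same independence assumption, using the 30 and 40 percent success rates, and the function names are hypothetical. It says nothing directly about cycling, where the figure of 5 percent and the length of the race play the corresponding roles.

```python
from math import comb


def binomial_pmf(n_shots: int, p_success: float) -> list[float]:
    """Probability of sinking exactly k putts, for k = 0..n_shots."""
    return [
        comb(n_shots, k) * p_success**k * (1 - p_success) ** (n_shots - k)
        for k in range(n_shots + 1)
    ]


def prob_novice_outscores_trained(
    n_shots: int, p_novice: float = 0.30, p_trained: float = 0.40
) -> float:
    """Exact chance that a single Novice strictly beats a single Trained golfer."""
    novice = binomial_pmf(n_shots, p_novice)
    trained = binomial_pmf(n_shots, p_trained)
    total = 0.0
    trained_below = 0.0  # P(Trained score < k), updated as k increases
    for k in range(n_shots + 1):
        total += novice[k] * trained_below
        trained_below += trained[k]
    return total


for n_shots in (10, 20, 100):
    chance = prob_novice_outscores_trained(n_shots)
    print(f"{n_shots:3d} putts: Novice outscores Trained {chance:.1%} of the time")
```

The printed chance shrinks as the number of putts rises, which is the pattern the note describes: the longer the contest, the less room there is for the weaker performer to come out ahead.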