Chapter 13. Data Persistence

I want my programs to be able to share their data, or even reuse those data on their next run. For big applications, that might mean that I use a database server such as PostgreSQL or MariaDB, but that also means every application needs access to the database, whether that involves getting the permission to use it or being online to reach it. There are plenty of books that cover those solutions, but not many cover the other situations that don’t need that level of infrastructure.

In this chapter, I cover the lightweight solutions that don’t require a database server or a central resource. Instead, I can store data in regular files and pass those around liberally. I don’t need to install a database server, add users, create a web service, or keep everything running. My program output can become the input for the next program in a pipeline.

Perl-Specific Formats

Perl-specific formats output data that makes sense only to a single programming language and are practically useless to other programming languages. That’s not to say that some other programmer can’t read it, just that they might have to do a lot of work to create a parser to understand it.

pack

The pack built-in takes data and turns it into a single string according to

a template that I provide. It’s similar to sprintf,

although as the pack name suggests,

the output string uses space as efficiently as it can:

#!/usr/bin/perl # pack.pl my $packed = pack( 'NCA*', 31415926, 32, 'Perl' ); print 'Packed string has length [' . length( $packed ) . "]\n"; print "Packed string is [$packed]\n";

The string that pack creates in

this case is shorter than just stringing together the characters that

make up the data, and it is certainly not as easy to read for

humans:

Packed string has length [9] Packed string is [öˆ Perl]

The format string NCA* has one

(Latin) letter for each of the rest of my arguments to pack, with an optional modifier, in this case

the *, after the last letter. My

template tells pack how I want to

store my data. The N treats its

argument as a network-order unsigned long. The C treats its argument as an unsigned char, and

the A treats its argument as an ASCII

character. After the A I use a

* as a repeat

count to apply it to all the characters in its argument.

Without the *, I would only pack the

first character in Perl.

Once I have my packed string, I can write it to a file, send it

over a socket, or do anything else I can with a chunk of data. When I

want to get back my data, I use unpack with the same template string:

my( $long, $char, $ascii ) = unpack( "NCA*", $packed ); print <<"HERE"; Long: $long Char: $char ASCII: $ascii HERE

As long as I’ve done everything correctly, I get back the data I started with:

Long: 31415926 Char: 32 ASCII: Perl

There are many other formats I can use in the template string,

including many sorts of number format and storage. If I want to inspect

a string to see exactly what’s in it, I can unpack it with the H format to

turn it into a hex string. I don’t have to unpack the string in $packed with the same template I used to

create it:

my $hex = unpack( "H*", $packed ); print "Hex is [$hex]\n";

I can now see the hex values for the individual bytes in the string:

Hex is [01df5e76205065726c]

Fixed-Length Records

Since I can control the length of the packed string through its template, I can

pack several data together to form a record for a flat-file database

that I can randomly access. Suppose my record comprises the ISBN, title,

and author for a book. I can use three different A formats, giving each a length specifier. For

each length, pack will either

truncate the argument if it is too long or pad it with spaces if it’s

shorter:

#!/usr/bin/perl

# isbn_record.pl

my( $isbn, $title, $author ) = (

'0596527241', 'Mastering Perl', 'brian d foy'

);

my $record = pack( "A10 A20 A20", $isbn, $title, $author );

print "Record: [$record]\n";The record is exactly 50 characters long, no matter which data I give it:

Record: [144939311XMastering Perl brian d foy ]

When I store this in a file along with several other records, I

always know that the next 50 bytes is another record. The seek built-in puts

me in the right position, and I can read an exact number of bytes with

sysread:

open my $fh, '<', 'books.dat' or die ...; sysseek $fh, 50 * $ARGV[0], 0; # move to right record sysread $fh, my( $record ), 50; # read next record

Unpacking Binary Formats

The unpack built-in is handy

for reading binary formats quickly. Here’s a bit of code

to read Bitmap (PBM) data from the Image::Info distribution. The while loop reads a chunk of 8 bytes and

unpacks them as a long and a 4-character ASCII string. The number is the

length of the next block of data, and the string is the block type.

Further in, the subroutine uses even more unpacks:

package Image::Info::BMP;

use constant _CAN_LITTLE_ENDIAN_PACK => $] >= 5.009002;

sub process_file {

my($info, $source, $opts) = @_;

my(@comments, @warnings, @header, %info, $buf, $total);

read($source, $buf, 54) == 54 or die "Can't reread BMP header: $!";

@header = unpack((_CAN_LITTLE_ENDIAN_PACK

? "vVv2V2Vl<v2V2V2V2"

: "vVv2V2V2v2V2V2V2"

), $buf);

$total += length($buf);

...;

}Data::Dumper

With almost no effort I can serialize Perl data structures as (mostly) human-readable

text. The Data::Dumper module, which comes with Perl, turns its arguments into

Perl source code in a way that I can later turn back into the original

data. I give its Dumper function a

list of references to stringify:

#!/usr/bin/perl

# data_dumper.pl

use Data::Dumper qw(Dumper);

my %hash = qw(

Fred Flintstone

Barney Rubble

);

my @array = qw(Fred Barney Betty Wilma);

print Dumper( \%hash, \@array );The program outputs text that represents the data structures as Perl code:

$VAR1 = {

'Barney' => 'Rubble',

'Fred' => 'Flintstone'

};

$VAR2 = [

'Fred',

'Barney',

'Betty',

'Wilma'

];I have to remember to pass it references to hashes or arrays;

otherwise, Perl passes Dumper a

flattened list of the elements, and Dumper won’t be able to preserve the data

structures. If I don’t like the variable names, I can specify my own. I

give Data::Dumper->new an

anonymous array of the references to dump and a second anonymous array

of the names to use for them:

#!/usr/bin/perl

# data_dumper_named.pl

use Data::Dumper;

my %hash = qw(

Fred Flintstone

Barney Rubble

);

my @array = qw(Fred Barney Betty Wilma);

my $dd = Data::Dumper->new(

[ \%hash, \@array ],

[ qw(hash array) ]

);

print $dd->Dump;I can then call the Dump method

on the object to get the stringified version. Now my references have the

name I gave them:

$hash = {

'Barney' => 'Rubble',

'Fred' => 'Flintstone'

};

$array = [

'Fred',

'Barney',

'Betty',

'Wilma'

];The stringified version isn’t the same as what I had in the

program, though. I had a hash and an array before, but now I have

scalars that hold references to those data types. If I prefix my names

with an asterisk in my call to Data::Dumper->new, Data::Dumper

stringifies the data with the right names and types:

my $dd = Data::Dumper->new(

[ \%hash, \@array ],

[ qw(*hash *array) ]

);The stringified version no longer has references:

%hash = (

'Barney' => 'Rubble',

'Fred' => 'Flintstone'

);

@array = (

'Fred',

'Barney',

'Betty',

'Wilma'

);I can then read these stringified data back into the program, or

even send them to another program. It’s already Perl code, so I can use

the string form of eval to run it.

I’ve saved the previous output in data-dumped.txt, and now I want to load it

into my program. By using eval in its

string form, I execute its argument in the same lexical scope. In my

program I define %hash and @array as lexical variables but don’t assign

anything to them. Those variables get their values through the eval, and strict has no reason to complain:

#!/usr/bin/perl

# data_dumper_reload.pl

use strict;

my $data = do {

if( open my $fh, '<', 'data-dumped.txt' ) { local $/; <$fh> }

else { undef }

};

my %hash;

my @array;

eval $data;

print "Fred's last name is $hash{Fred}\n";Since I dumped the variables to a file, I can also use do. We covered this partially in

Intermediate

Perl, although in the context of loading subroutines

from other files. We advised against it then, because require or use work better for that. In this case we’re reloading data

and the do built-in has

some advantages over eval. For this

task, do takes a filename, and it can

search through the directories in @INC to find that

file. When it finds it, it updates %INC with the path to the file. This is almost

the same as require, but do will reparse the file every time,

whereas require or

use only do

that the first time. They both set %INC, so they know

when they’ve already seen the file and don’t need to do it again. Unlike

require or use, do doesn’t mind returning a false value,

either. If do can’t find the file, it

returns undef and sets $! with the error message. If it finds the

file but can’t read or parse it, it returns undef and sets $@. I modify my previous program to use

do:

#!/usr/bin/perl

# data_dumper_reload_do.pl

use strict;

use Data::Dumper;

my $file = "data-dumped.txt";

print "Before do, \$INC{$file} is [$INC{$file}]\n";

{

no strict 'vars';

do $file;

print "After do, \$INC{$file} is [$INC{$file}]\n";

print "Fred's last name is $hash{Fred}\n";

}When I use do, I lose out on

one important feature of eval. Since eval executes the code in the current context,

it can see the lexical variables that are in scope. Since do can’t do that, it’s not strict

safe and it can’t populate lexical variables.

I find the dumping method especially handy when I want to pass

around data in email. One program, such as a CGI program, collects the

data for me to process later. I could stringify the data into some

format and write code to parse that later, but it’s much easier to use

Data::Dumper,

which can also handle objects. I use my Business::ISBN module to parse a book number, then use Data::Dumper

to stringify the object, so I can use the object in another program. I

save the dump in isbn-dumped.txt:

#!/usr/bin/perl

# data_dumper_object.pl

use Business::ISBN;

use Data::Dumper;

my $isbn = Business::ISBN->new( '0596102062' );

my $dd = Data::Dumper->new( [ $isbn ], [ qw(isbn) ] );

open my $fh, '>', 'isbn-dumped.txt'

or die "Could not save ISBN: $!";

print $fh $dd->Dump();When I read the object back into a program, it’s like it’s been

there all along, since Data::Dumper

outputs the data inside a call to bless:

$isbn = bless( {

'common_data' => '0596102062',

'publisher_code' => '596',

'group_code' => '0',

'input_isbn' => '0596102062',

'valid' => 1,

'checksum' => '2',

'type' => 'ISBN10',

'isbn' => '0596102062',

'article_code' => '10206',

'prefix' => ''

}, 'Business::ISBN10' );I don’t need to do anything special to make it an object, but I still need to load the appropriate module to be able to call methods on the object. Just because I can bless something into a package doesn’t mean that package exists or has anything in it:

#!/usr/bin/perl

# data_dumper_object_reload.pl

use Business::ISBN;

my $data = do {

if( open my $fh, '<', 'isbn_dumped.txt' ) { local $/; <$fh> }

else { undef }

};

my $isbn;

eval $data; # Add your own error handling

print "The ISBN is ", $isbn->as_string, "\n";Similar Modules

The Data::Dumper

module might not be enough for every task, and there are several other

modules on CPAN that do the same job a bit differently. The concept is

the same: turn data into text files and later turn the text files back

into data. I can try to dump an anonymous subroutine with Data::Dumper:

use Data::Dumper;

my $closure = do {

my $n = 10;

sub { return $n++ }

};

print Dumper( $closure );I don’t get back anything useful, though. Data::Dumper

knows it’s a subroutine, but it can’t say what it does:

$VAR1 = sub { "DUMMY" };The Data::Dump::Streamer module can handle this situation to a limited

extent:

use Data::Dump::Streamer;

my $closure = do {

my $n = 10;

sub { return $n++ }

};

print Dump( $closure );Since Data::Dump::Streamer

serializes all of the code references in the same scope, all of the

variables to which they refer show up in the same scope. There are some

ways around that, but they may not always work:

my ($n);

$n = 10;

$CODE1 = sub {

return $n++;

};If I don’t like the variables Data::Dumper

has to create, I might want to use Data::Dump, which simply creates the data:

#!/usr/bin/perl use Business::ISBN; use Data::Dump qw(dump); my $isbn = Business::ISBN->new( '144939311X' ); print dump( $isbn );

The output is almost just like that from Data::Dumper,

although it is missing the $VARn

stuff:

bless({

article_code => 9311,

checksum => "X",

common_data => "144939311X",

group_code => 1,

input_isbn => "144939311X",

isbn => "144939311X",

prefix => "",

publisher_code => 4493,

type => "ISBN10",

valid => 1,

}, "Business::ISBN10")When I eval this, I won’t

create any variables. I have to store the result of the eval to use the variable. The only way to get

back my object is to assign the result of eval to $isbn:

#!/usr/bin/perl

# data_dump_reload.pl

use Business::ISBN;

my $data = do {

if( open my $fh, '<', 'data_dump.txt' ) { local $/; <$fh> }

else { undef }

};

my $isbn = eval $data; # Add your own error handling

print "The ISBN is ", $isbn->as_string, "\n";There are several other modules on CPAN that can dump data, so if I don’t like any of these formats I have many other options.

Storable

The Storable module is one step up from the human-readable data dumps

from the last section. The output it produces might be human-decipherable,

but in general it’s not for human eyes. The module is mostly written in C,

and part of this exposes the architecture on which I built perl, and the byte order of the data will depend

on the underlying architecture in some cases. On a big-endian machine I’ll

get different output than on a little-endian machine. I’ll get around that

in a moment.

The store function serializes the

data and puts it in a file. Storable

treats problems as exceptions (meaning it tries to die rather than recover), so I wrap the call to

its functions in eval and look at the

eval error variable $@ to see if something serious went wrong. More

minor errors, such as output errors, don’t die and return undef, so I check those too and find the error

in $! if it was related to something

with the system (i.e., couldn’t open the output):

#!/usr/bin/perl

# storable_store.pl

use Business::ISBN;

use Storable qw(store);

my $isbn = Business::ISBN->new( '0596102062' );

my $result = eval { store( $isbn, 'isbn_stored.dat' ) };

if( defined $@ and length $@ )

{ warn "Serious error from Storable: $@" }

elsif( not defined $result )

{ warn "I/O error from Storable: $!" }When I want to reload the data I use retrieve. As with store, I wrap my call in eval to catch any errors. I also add another

check in my if structure to ensure I

get back what I expect, in this case a Business::ISBN object:

#!/usr/bin/perl

# storable_retrieve.pl

use Business::ISBN;

use Storable qw(retrieve);

my $isbn = eval { retrieve( 'isbn_stored.dat' ) };

if( defined $@ and length $@ )

{ warn "Serious error from Storable: $@" }

elsif( not defined $isbn )

{ warn "I/O error from Storable: $!" }

elsif( not eval { $isbn->isa( 'Business::ISBN' ) } )

{ warn "Didn't get back Business::ISBN object\n" }

print "I loaded the ISBN ", $isbn->as_string, "\n";To get around this machine-dependent format, Storable

can use network order, which is

architecture-independent and is converted to the local order as

appropriate. For that, Storable

provides the same function names with a prepended n. Thus, to store the data in network order I

use nstore. The retrieve function figures it out on its own, so

there is no nretrieve function. In this

example, I also use Storable’s

functions to write directly to filehandles instead of a filename. Those

functions have fd in their name:

my $result = eval { nstore( $isbn, 'isbn_stored.dat' ) };

open my $fh, '>', $file or die "Could not open $file: $!";

my $result = eval{ nstore_fd $isbn, $fh };

my $result = eval{ nstore_fd $isbn, \*STDOUT };

my $result = eval{ nstore_fd $isbn, \*SOCKET };

$isbn = eval { fd_retrieve(\*SOCKET) };Now that you’ve seen filehandle references as arguments to Storable’s

functions, I need to mention that it’s the data from those filehandles

that Storable

affects, not the filehandles themselves. I can’t use these functions to

capture the state of a filehandle or socket that I can magically use

later. That just doesn’t work, no matter how many people ask about it on

mailing lists.

Freezing Data

The Storable

module can also freeze data

into a scalar. I don’t have to store it in a file or send

it to a filehandle; I can keep it in memory, although serialized. I

might store that in a database or do something else with it. To turn it

back into a data structure, I use thaw:

#!/usr/bin/perl

# storable_thaw.pl

use Business::ISBN;

use Data::Dumper;

use Storable qw(nfreeze thaw);

my $isbn = Business::ISBN->new( '0596102062' );

my $frozen = eval { nfreeze( $isbn ) };

if( $@ ) { warn "Serious error from Storable: $@" }

my $other_isbn = thaw( $frozen ); # add your own error handling

print "The ISBN is ", $other_isbn->as_string, "\n";This has an interesting use. Once I serialize the data, it’s completely disconnected from the variables in which I was storing it. All of the data are copied and represented in the serialization. When I thaw it, the data come back into a completely new data structure that knows nothing about the previous data structure.

Before I show this copying, I’ll show a shallow copy, in which I copy the top level of the data structure, but the lower levels are the same references. This is a common error in copying data. I think they are distinct copies, but later I discover that a change to the copy also changes the original.

I’ll start with an anonymous array that comprises two other

anonymous arrays. I want to look at the second value in the second

anonymous array, which starts as Y. I

look at that value in the original, and the copy before and after I make

a change in the copy. I make the shallow copy by dereferencing $AoA and using its elements in a new anonymous

array. Again, this is the naïve approach, but I’ve seen it quite a bit

and probably have even done it myself a couple or 50 times:

#!/usr/bin/perl

# shallow_copy.pl

my $AoA = [

[ qw( a b ) ],

[ qw( X Y ) ],

];

# Make the shallow copy

my $shallow_copy = [ @$AoA ];

# Check the state of the world before changes

show_arrays( $AoA, $shallow_copy );

# Now, change the shallow copy

$shallow_copy->[1][1] = "Foo";

# Check the state of the world after changes

show_arrays( $AoA, $shallow_copy );

print "\nOriginal: $AoA->[1]\nCopy: $shallow_copy->[1]\n";

sub show_arrays {

foreach my $ref ( @_ ) {

print "Element [1,1] is $ref->[1][1]\n";

}

}When I run the program, I see from the output that the change to

$shallow_copy also changes $AoA. When I print the stringified version of

the reference for the corresponding elements in each array, I see that

they are actually references to the same data:

Element [1,1] is Y Element [1,1] is Y Element [1,1] is Foo Element [1,1] is Foo Original: ARRAY(0x790c9320) Copy: ARRAY(0x790c9320)

To get around the shallow copy problem I can make a deep

copy by freezing and immediately thawing, and I don’t have to

do any work to figure out the data structure. Once the data are frozen,

they no longer have any connection to the source. I use nfreeze to get the data in network order, just

in case I want to send it to another machine:

use Storable qw(nfreeze thaw); my $deep_copy = thaw( nfreeze( $isbn ) );

This is so useful that Storable

provides the dclone function to do it

in one step:

use Storable qw(dclone); my $deep_copy = dclone $isbn;

Storable

is much more interesting and useful than I’ve shown in this section. It

can also handle file locking and has hooks to integrate it with classes,

so I can use its features for my objects. See the Storable

documentation for more details.

The Clone::Any module by Matthew Simon Cavalletto provides the same functionality through a façade to

several different modules that can make deep copies. With Clone::Any’s

unifying interface, I don’t have to worry about which module I actually

use, or which is installed on a remote system (as long as one of

them is):

use Clone::Any qw(clone); my $deep_copy = clone( $isbn );

Storable’s Security Problem

Storable

has a couple of huge security problems related to Perl’s

(and Perl programmers’!) trusting nature.

If you look in Storable.xs,

you’ll find a couple of instances of a call to load_module. Depending on what you’re trying

to deserialize, Storable

might load a module without you explicitly asking for it. When Perl

loads a module, it can run code right away. I know a file with the right

name loads, but I don’t know if it’s the code I intend.

My Perl module can define serialization hooks that replace the

default behavior of Storable

with my own. I can serialize the object myself and give Storable

the octets it should store. Along with that, I can take the octets from

Storable

and re-create the object myself. Perhaps I want to reconnect to a

resource as I rehydrate the object.

Storable

notes the presence of a hook with a flag set in the serialization

string. As it deserializes and notices that flag, it loads that module

to look for the corresponding STORABLE_thaw method.

The same thing happens for classes that overload

operators. Storable

sets a flag, and when it notices that flag, it loads the overload module too. It might need it when it re-creates the

objects.

It doesn’t really matter that a module actually defines hooks or

uses overload.

The only thing that matters is that the serialized data sets those

flags. If I store my data through the approved interface, bugs aside, I

should be fine. If I want to trick Storable,

though, I can make my own data and set whatever bits I like. If I can

get you to load a module, I’m one step closer to taking over your

program.

I can also construct a special hash serialization that tricks perl into running a method. If I use the approved interface to serialize a hash, I know that a key is unique and will only appear in the serialization once.

Again, I can muck with the serialization myself to construct

something that Storable

would not make itself. I can make a Storable

string that repeats a key in a hash:

#!/usr/bin/perl

# storable_dupe_key.pl

use v5.14;

use Storable qw(freeze thaw);

use Data::Printer;

say "Storable version ", Storable->VERSION;

package Foo {

sub DESTROY { say "DESTROY while exiting for ${$_[0]}" };

}

my $data;

my $frozen = do {

my $pristine = do {

local *Foo::DESTROY = sub {

say "DESTROY while freezing for ${$_[0]}" };

$data = {

'key1' => bless( \ (my $n = 'abc'), 'Foo' ),

'key2' => bless( \ (my $m = '123'), 'Foo' ),

};

say "Saving...";

freeze( $data );

};

$pristine =~ s/key2/key1/r;

};

my $unfrozen = do {

say "Retrieving...";

local *Foo::DESTROY = sub {

say "DESTROY while inflating for ${$_[0]}" };

thaw( $frozen );

};

say "Done retrieving, showing hash...";

p $unfrozen;

say "Exiting next...";In the first do block, I create

a hash with key1 and key2, both of which point to scalar references

I blessed into Foo. I freeze that and

immediately change the serialization to replace the literal key2 with key1. I can do that because I know things

about the serialization and how the keys show up in it. The munged

version ends up in $frozen.

When I want to thaw that string, I create a local version of

DESTROY to watch what happens. In the

output I see that while inflating, it handles one instance of key1 then destroys it when it handles the next

one. At the end I have a single key in the hash:

% perl storable_dupe_key.pl

Storable version 2.41

Saving...

Retrieving...

DESTROY while inflating for abc

Done retrieving, showing hash...

Exiting next...

DESTROY while exiting for 123

DESTROY while exiting for 123

DESTROY while exiting for abc

\ {

key1 Foo {

public methods (1) : DESTROY

private methods (0)

internals: 123

}

}If I haven’t already forced Storable

to load a module, I might be able to use the DESTROY method from a class that I know is

already loaded. One candidate is the core module CGI.pm. It

includes the CGITempFile

class, which tries to unlink a file when it cleans up an object:

sub DESTROY {

my($self) = @_;

$$self =~ m!^([a-zA-Z0-9_ \'\":/.\$\\~-]+)$! || return;

my $safe = $1; # untaint operation

unlink $safe; # get rid of the file

}Although this method untaints the filename, remember that taint

checking is not a prophylactic; it’s a development tool. Untainting

whatever I put in $$self isn’t going

to stop me from deleting a file—including, perhaps, the Storable

file I used to deliver my malicious payload.

Sereal

Booking.com, one of Perl’s big supporters, developed a new serialization format that avoids some of

Storable’s

problems. But the site developers wanted to preserve some special Perl

features in their format, including references, aliases, objects, and

regular expressions. And although it started in Perl, they’ve set it up so

it doesn’t have to stay there. Best of all, they made it really

fast.

The module use is simple. The encoders and decoders are separate by

design, but I only need to load Sereal to

get Sereal::Encoder:

use Sereal; my $data = ...; my $encoder = Sereal::Encoder->new; my $serealized = $encoder->encode( $data );

To go the other way, I use Sereal::Decoder:

my $decoder = Sereal::Decoder->new; my $unserealized = $decoder->decode( $serealized );

In the previous section, I showed that Storable

had a problem with duplicated keys. Here’s the same program using Sereal:

#!/usr/bin/perl

# sereal_bad_key.pl

use v5.14;

use Sereal;

use Data::Printer;

say "Sereal version ", Sereal->VERSION;

package Foo {

sub DESTROY { say "DESTROY while exiting for ${$_[0]}" };

}

my $data;

my $frozen = do {

my $pristine = do {

local *Foo::DESTROY = sub {

say "DESTROY while freezing for ${$_[0]}" };

$data = {

'key1' => bless( \ (my $n = 'abc'), 'Foo' ),

'key2' => bless( \ (my $m = '123'), 'Foo' ),

};

say "Saving...";

Sereal::Encoder->new->encode( $data );

};

$pristine =~ s/key2/key1/r;

};

my $unfrozen = do {

say "Retrieving...";

local *Foo::DESTROY = sub {

say "DESTROY while inflating for ${$_[0]}" };

Sereal::Decoder->new->decode( $frozen );

};

say "Done retrieving, showing hash...";

p $unfrozen;

say "Exiting next...";The output shows that a DESTROY

isn’t triggered during the inflation. I wouldn’t be able to trick CGITempFile

into deleting a file, as I could do with Storable.

Also, since Sereal

doesn’t support special per-class serialization and deserialization hooks,

I won’t be able to trick it into loading classes or running code.

Sereal,

unlike the other serializers I have shown so far, makes a deliberate and

conscious effort to create a small string. Imagine a data structure that

is an array of hashes: the keys in each hash are the same; only the values

are different.

The Sereal

specification includes a way for a later hash to reuse the string already

stored for that key, so it doesn’t have to store it again, as with JSON or

Storable.

I wrote a tiny benchmark to try this, comparing Data::Dumper, JSON, Sereal::Encoder, and Storable,

using the defaults from each:

#!/usr/bin/perl

use v5.18;

use Data::Dumper qw(Dumper);

use Storable qw(nstore_fd dclone);

use Sereal::Encoder qw(encode_sereal);

use JSON qw(encode_json);

my $stores = {

dumper => sub { Dumper( $_[0] ) },

jsoner => sub { encode_json( $_[0] ) },

serealer => sub { encode_sereal( $_[0] ) },

storer => sub {

open my $sfh, '>:raw', \ my $string;

nstore_fd( $_[0], $sfh );

close $sfh;

$string;

},

};

my $max_hash_count = 10;

my $hash;

my @keys = get_keys();

my @values = get_values();

@{$hash}{ @keys } = @values;

my %lengths;

my @max;

foreach my $hash_count ( 1 .. $max_hash_count ) {

my $data = [ map { dclone $hash } 1 .. $hash_count ];

foreach my $type ( sort keys %$stores ) {

my $string = $stores->{$type}( $data );

my $length = length $string;

$max[$hash_count] = $length if $length > $max[$hash_count];

$max[0] = $length if $length > $max[0]; # grand max

if( 0 == $length ) {

warn "$type: length is zero!\n";

}

push @{$lengths{$type}}, $length;

}

}

###########

# make a tab separated report with the normalized numbers

# for each method, in columns suitable for a spreadsheet

say join "\t", sort keys %$stores;

open my $per_fh, '>:utf8', "$0-per.tsv" or die "$!";

open my $grand_fh, '>:utf8', "$0-grand.tsv" or die "$!";

foreach my $index ( 1 .. $max_hash_count ) {

say { $per_fh } join(

"\t",

map { $lengths{$_}[$index - 1] / $max[$index] }

sort keys %$stores

);

say { $grand_fh } join(

"\t",

map { $lengths{$_}[$index - 1] / $max[0] }

sort keys %$stores

);

}

# make some long keys

sub get_keys {

map { $0 . time() . $_ . $$ } ( 'a' .. 'f' );

}

# make some long values

sub get_values {

map { $0 . time() . $_ . $$ } ( 'f' .. 'k' );

}This program serializes arrays of hashes, starting with an array

that has 1 hash and going up to an array with 10 hashes, all of them

exactly the same but not references of each other (hence the dclone). This way, the keys and values for each

hash should be repeated in the serialization.

To make the relative measures a bit easier to see, I keep track of the maximum string length for all serializations (the grand) and the per-hash-count maximum. I use those to normalize the numbers and create two graphs of the same data.

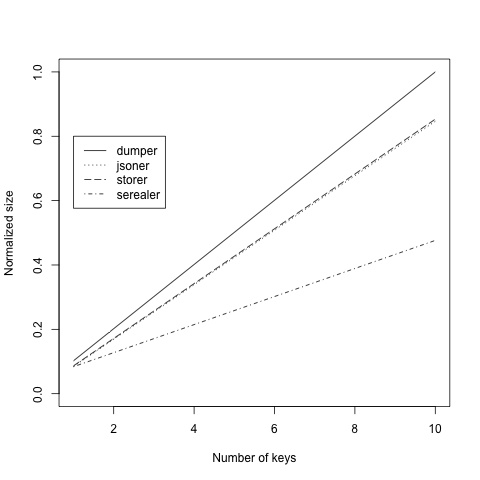

The plot in Figure 13-1 uses the

grand normalization and shows linear growth in each

serialization. For size, Data::Dumper

does the worst, with JSON and Storable

doing slightly better, mostly because they use much less whitespace. The

size of the Sereal

strings grows much more slowly.

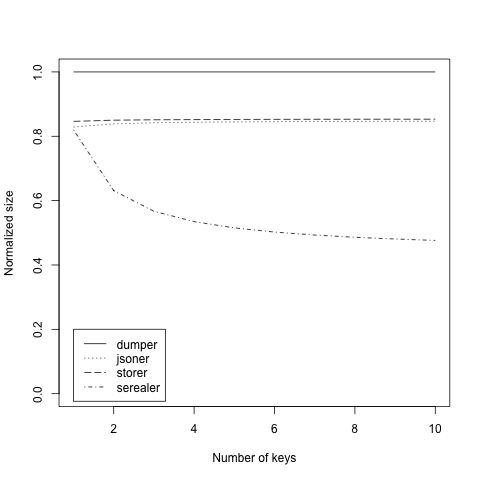

Many people would be satisfied with that plot, but I like the one

from the per-keys normalization, where the numbers are normalized just for

the maximum string size of the same hash size count, as in Figure 13-2. The Data::Dumper

size is always the largest, so it is always normalized to exactly 1. JSON

and Storable

still normalize to almost the same number (to two decimal places) and look

like a straight line. The Sereal curve

is more interesting: it starts at the same point as JSON and Storable

for one hash, when every serialization has to store the keys and values at

least once, then drops dramatically and continues to drop, although more

slowly, as the number of keys increases.

But, as I explained in Chapter 5, all benchmarks

have caveats. I’ve chosen a particular use case for this, but that does

not mean you would see the same thing for another problem. If all the

hashes had unique keys that no other hash stored, I expect that Sereal

wouldn’t save as much space. Create a benchmark that mimics your use to

test that.

DBM Files

The next step after Storable

is tiny, lightweight databases. These don’t require a database server but

still handle most of the work to make the data available in my program.

There are several facilities for this, but I’m only going to cover a

couple of them. The concept is the same even if the interfaces and fine

details are different.

dbmopen

Since at least Perl 3 I’ve been able to connect to DBM files,

which are hashes stored on disk. In the early days of Perl, when the

language and practice was much more Unix-centric, DBM access was

important, since many system databases used that format. The DBM was a

simple hash where I could specify a key and a value. I use dbmopen to connect

a hash to the disk file, then use it like a normal hash. dbmclose ensures that all of my changes make it to the disk:

#!/usr/bin/perl

# dbmopen.pl

dbmopen my %HASH, "dbm_open", 0644;

$HASH{'0596102062'} = 'Intermediate Perl';

while( my( $key, $value ) = each %HASH ) {

print "$key: $value\n";

}

dbmclose %HASH;In modern Perl the situation is much more complicated. The DBM format branched off into several competing formats, each of which had their own strengths and peculiarities. Some could only store values shorter than a certain length, or only store a certain number of keys, and so on.

Depending on the compilation options of the local perl binary, I might be using any of these

implementations. That means that although I can safely use dbmopen on the same machine, I might have

trouble sharing it between machines, since the next machine might use a

different DBM library.

None of this really matters, because CPAN has something much better.

DBM::Deep

Much more popular today is DBM::Deep, which I use anywhere that I would have previously used

one of the other DBM formats. With this module, I can create arbitrarily

deep, multilevel hashes or arrays. The module is pure Perl so I don’t

have to worry about different library implementations, underlying

details, and so on. As long as I have Perl, I have everything I need. It

works without worry on a Mac, Windows, or Unix, any of which can share

DBM::Deep

files with any of the others. And, best of all, it’s pure Perl.

Joe Huckaby created DBM::Deep

with both an object-oriented interface and a tie interface (see Chapter 16). The documentation recommends the object

interface, so I’ll stick to that here. With a single argument, the

constructor uses it as a filename, creating the file if it does not

already exist:

use DBM::Deep;

my $isbns = DBM::Deep->new( "isbns.db" );

if( ref $isbns ) {

warn 'Could not create database!\n";

}

$isbns->{'1449393098'} = 'Intermediate Perl';Once I have the DBM::Deep

object, I can treat it just like a hash reference and use all of the

hash operators.

Additionally, I can call methods on the object to do the same thing. I can even set additional features, such as file locking and flushing when I create the object:

#!/usr/bin/perl

use DBM::Deep;

my $isbns = DBM::Deep->new(

file => "isbn.db",

locking => 1,

autoflush => 1,

);

unless( defined $isbns ) {

warn "Could not create database!\n";

}

$isbns->put( '1449393098', 'Intermediate Perl' );

my $value = $isbns->get( '1449393098' );The module also handles objects based on arrays, which have their own set of methods. It has hooks into its inner mechanisms so I can define how it does its work.

By the time you read this book, DBM::Deep

should already have transaction support thanks to the work of Rob

Kinyon, its current maintainer. I can create my object, then use

the begin_work method to start a

transaction. Once I do that, nothing happens to the data until I call

commit, which writes all of my

changes to the data. If something goes wrong, I just call rollback to get to where I was when I

started:

my $db = DBM::Deep->new( 'file.db' );

eval {

$db->begin_work;

...

die q(Something didn't work) if $error;

$db->commit;

};

if( defined $@ and length $@ ) {

$db->rollback;

}Perl-Agnostic Formats

So far in this chapter I’ve used formats that are specific to Perl. Sometimes that works out, but more likely I’ll want something that I can exchange with other languages so I don’t lock myself into a particular language or tool. If my format doesn’t care about the language, I’ll have an easier time building compatible systems and integrating or switching technologies later.

In this section, I’ll show some other formats and how to work with them in Perl, but I’m not going to provide a tutorial for each of them. My intent is to survey what’s out there and give you an idea when you might use them.

JSON

JavaScript Object Notation, or JSON, is a very attractive format for data interchange because I can have my Perl (or Ruby or Python or whatever) program send the data as part of a web request, so a browser can use it easily and immediately. The format is actually valid Javascript code; this is technically language-specific for that reason, but the value of a format understandable by a web browser is so high that most mainstream languages already have libraries for it.

A JSON data structure looks similar to a Perl data structure,

although much simpler. Instead of => there’s a :, and strings are

double-quoted:

{

"meta" : {

"established" : 1991,

"license" : "416d656c6961"

},

"source" : "Larry's Camel Clinic",

"camels" : [

"Amelia",

"Slomo"

]

}I created that JSON data with a tiny program. I started with a Perl data structure and turned it into the JSON form:

#!/usr/bin/perl

# json_data.pl

use v5.10;

use JSON;

my $hash = {

camels => [ qw(Amelia Slomo) ],

source => "Larry's Camel Clinic",

meta => {

license => '416d656c6961',

established => 1991,

},

};

say JSON->new->pretty->encode( $hash );To load that data into my Perl program, I need to decode it.

Although the JSON specification allows several Unicode encodings, the

JSON module only handles UTF-8 text. I have to read that as

raw octets though:

#!/usr/bin/perl

# read_json.pl

use v5.10;

use JSON;

my $json = do {

local $/;

open my $fh, '<:raw', '/Users/Amelia/Desktop/sample.json';

<$fh>;

};

my $perl = JSON->new->decode( $json );

say "Camels are [ @{ $perl->{camels} } ]";Going the other way is much easier. I give the module a data structure and get back the result as JSON:

#!/usr/bin/perl

use v5.10;

# simple_json.pl

use JSON;

my $hash = {

camels => [ qw(Amelia Slomo) ],

source => "Larry's Camel Clinic",

meta => {

license => '416d656c6961',

established => 1991,

},

};

say JSON->new->encode( $hash );The output is compact with minimal whitespace. If machines are exchanging data, they don’t need the extra characters:

{"camels":["Amelia","Slomo"],"meta":{"license":"416d656c6961","established":1991}

,"source":"Larry's Camel Clinic"}The module has many options to specify the encoding, the style,

and other aspects of the output that I might want to control. If I’m

sending my data to a web browser, I probably don’t care if the output is

easy for me to read. However, if I want to be able to read it easily, I

can use the pretty option, as I did

in my first example:

say JSON->new->pretty->encode( $hash );

The JSON module

lists other options you might need. Read its documentation to see what

else you can do.

CPAN has other JSON implementations, such as JSON::Syck. This is based on libsyck, a YAML parser (read the next

section). Since some YAML parsers have some of the same problems that

Storable has, you might want to avoid parsing JSON, which doesn’t

create local objects, with a parser that can. See CVE-2013-0333 for an example of this

causing a problem in Ruby.

YAML

YAML (YAML Ain’t Markup

Language) seems like the same idea as Data::Dumper, although more concise and easier to read. The YAML 1.2

specification says, “There are hundreds of different languages

for programming, but only a handful of languages for storing and

transferring data.” That is, YAML aims to be much more than

serialization.

YAML was popular in the Perl community when I wrote the first

edition of this book, but JSON has largely eaten its lunch. Still, some

parts of the Perl toolchain use it, and it does have some advantages

over JSON. The META.yml file

produced by various module distribution creation tools is YAML.

I write to a file that I give the extension .yml:

#!/usr/bin/perl

# yaml_dump.pl

use Business::ISBN;

use YAML qw(Dump);

my %hash = qw(

Fred Flintstone

Barney Rubble

);

my @array = qw(Fred Barney Betty Wilma);

my $isbn = Business::ISBN->new( '144939311X' );

open my $fh, '>', 'dump.yml' or die "Could not write to file: $!\n";

print $fh Dump( \%hash, \@array, $isbn );The output for the data structures is very compact although still

readable once I understand its format. To get the data back, I don’t

have to go through the shenanigans I experienced with Data::Dumper:

--- Barney: Rubble Fred: Flintstone --- - Fred - Barney - Betty - Wilma --- !!perl/hash:Business::ISBN10 article_code: 9311 checksum: X common_data: 144939311X group_code: 1 input_isbn: 144939311X isbn: 144939311X prefix: '' publisher_code: 4493 type: ISBN10 valid: 1

YAML can preserve Perl data structures and objects because it has a way to label things (which is basically how Perl blesses a reference). This is something I couldn’t get (and don’t want) with plain JSON.

The YAML module

provides a Load function to do it for

me, although the basic concept is the same. I read the data from the

file and pass the text to Load:

#!/usr/bin/perl

# yaml_load.pl

use Business::ISBN;

use YAML;

my $data = do {

if( open my $fh, '<', 'dump.yml' ) { local $/; <$fh> }

else { undef }

};

my( $hash, $array, $isbn ) = Load( $data );

print "The ISBN is ", $isbn->as_string, "\n";YAML isn’t

part of the standard Perl distribution, and it relies on several other

noncore modules as well. Since it can create Perl objects, it has some

of the same problems as Storable.

YAML module variants

YAML has three common versions, and they aren’t necessarily compatible with each other. Parsers (and writers) target particular versions, which means that I’m likely to have a problem if I create a YAML file in one version and try to parse it as another.

YAML 1.0 allows unquoted dashes, -, as data, but YAML 1.1 and later do not.

This caused problems for me when I

created many files with an older dumper and tried to use a newer

parser. YAML::Syck, based on libsyck,

handles YAML 1.0 but not YAML 1.1.

YAML::LibYAML includes YAML::XS. Kirill Siminov’s libyaml is arguably the best YAML implementation. The C library is

written precisely to the YAML 1.1 specification. It was originally

bound to Python and was later bound to Ruby. For most things, I stick

with YAML::XS.

YAML::Tiny handles a subset of YAML 1.1 in pure Perl. Like the

other ::Tiny modules, YAML::Tiny

has no noncore dependencies, does not require a compiler to install,

is backward-compatible to Perl 5.004, and can be inlined into other

modules if needed. If you aren’t doing anything tricky, want a very

small footprint, or want minimal dependencies, this module might be for you.

MessagePack

The MessagePack format is

similar to JSON, but it’s smaller and faster. It’s a

serialization format (so it can be much smaller) that has

implementations in most of the mainstream languages. It’s like a

cross-platform pack that’s also

smarter. The Data::MessagePack module handles it:

#!/usr/bin/perl

# message_pack.pl

use v5.10;

use Data::MessagePack;

use Data::Dumper;

my %hash = qw(

Fred Flintstone

Barney Rubble

Key 12345

);

my $mp = Data::MessagePack->new;

$mp->canonical->utf8->prefer_integer;

my $packed = $mp->pack( \%hash );

say 'Length of packed is ', length $packed;

say Dumper( $mp->unpack( $packed ) );The Data::MessagePack

module comes with some benchmark programs (although remember what I

wrote in Chapter 5):

% perl benchmark/deserialize.pl-- deserialize JSON::XS: 2.34 Data::MessagePack: 0.47 Storable: 2.41 Rate storable json mp storable 64577/s -- -21% -45% json 81920/s 27% -- -30% mp 117108/s 81% 43% --% perl benchmark/serialize.pl-- serialize JSON::XS: 2.34 Data::MessagePack: 0.47 Storable: 2.41 Rate storable json mp storable 91897/s -- -22% -50% json 118154/s 29% -- -35% mp 182044/s 98% 54% --

Summary

By stringifying Perl data, I have a lightweight way to pass data between invocations of a program and even between different programs. Binary formats are slightly more complicated, although Perl comes with the modules to handle those, too. No matter which one I choose, I have some options before I decide that I have to move up to a full database server.

Further Reading

Programming the

Perl DBI by Tim Bunce and Alligator Descartes covers the Perl Database Interface (DBI). The

DBI is a generic interface to most popular database servers. If

you need more than I covered in this chapter, you probably need DBI. I could

have covered SQLite, an extremely lightweight, single-file relational

database in this chapter, but I access it through the

DBI just as I would any other database so I left it

out. It’s extremely handy for quick persistence tasks, though.

The BerkeleyDB module provides an interface to the BerkeleyDB library, which

provides another way to store data. It’s somewhat complex to use but very

powerful.

Alberto Simões wrote “Data::Dumper and Data::Dump::Streamer” for The Perl Review 3.1 (Winter 2006).

Vladi Belperchinov-Shabanski shows an example of Storable in “Implementing

Flood Control” for Perl.com.

Randal Schwartz has some articles on persistent data: “Persistent Data”, Unix Review, February 1999; “Persistent Storage for Data”, Linux Magazine, May 2003; and “Lightweight Persistent Data”, Unix Review, July 2004.

The JSON website explains the data format, as does RFC 4627. JavaScript: The Definitive Guide has a good section on JSON. I also like the JSON appendix in JavaScript: The Good Parts.

Randal Schwartz wrote a JSON parser as a single Perl Regex.

The YAML website has link to all the YAML projects in different languages.

There’s a set of Stack Overflow answers to “Should I use YAML or JSON to store my Perl data?” which discuss the costs and benefits of YAML, JSON, and XML.

Steffen Müller writes about Booking.com’s development of Sereal in “Sereal—a

binary data serialization format”.

The documentation for AnyDBM_File discusses the various implementations of DBM files.