3

THE SIMPLICITY, UNITY, AND COMPLEXITY OF LIFE

As emphasized in the opening chapter, living systems, from the smallest bacteria to the largest cities and ecosystems, are quintessential complex adaptive systems operating over an enormous range of multiple spatial, temporal, energy, and mass scales. In terms of mass alone, the overall scale of life covers more than thirty orders of magnitude (10³⁰) from the molecules that power metabolism and the genetic code up to ecosystems and cities. This range vastly exceeds that of the mass of the Earth relative to that of our entire galaxy, the Milky Way, which covers “only” eighteen orders of magnitude, and is comparable to the mass of an electron relative to that of a mouse.

Over this immense spectrum, life uses essentially the same basic building blocks and processes to create an amazing variety of forms, functions, and dynamical behaviors. This is a profound testament to the power of natural selection and evolutionary dynamics. All of life functions by transforming energy from physical or chemical sources into organic molecules that are metabolized to build, maintain, and reproduce complex, highly organized systems. This is accomplished by the operation of two distinct but closely interacting systems: the genetic code, which stores and processes the information and “instructions” to build and maintain the organism, and the metabolic system, which acquires, transforms, and allocates energy and materials for maintenance, growth, and reproduction. Considerable progress has been made in elucidating both of these systems at levels from molecules to organisms, and later I will discuss how it can be extended to cities and companies. However, understanding how information processing (“genomics”) integrates with the processing of energy and resources (“metabolics”) to sustain life remains a major challenge. Finding the universal principles that underlie the structures, dynamics, and integration of these systems is fundamental to understanding life and to managing biological and socioeconomic systems in such diverse contexts as medicine, agriculture, and the environment.

The extraordinary scale of life from complex molecules and microbes to whales and sequoias in relation to galactic and subatomic scales.

A unified framework for understanding genetics has been developed that can account for phenomena from the replication, transcription, and translation of genes to the evolutionary origin of species. Slower to emerge has been a comparable unified theory of metabolism: one that explains how the energy- and material-transforming processes generated by biochemical reactions within cells are scaled up to sustain life, power biological activities, and set the timescales of vital processes at levels from organisms to ecosystems.

The search for fundamental principles that govern how the complexity of life emerges from its underlying simplicity is one of the grand challenges of twenty-first-century science. Although this has been, and will continue to be, primarily the purview of biologists and chemists, it is becoming an activity where other disciplines, and in particular physics and computer science, are playing an increasingly important role. Understanding more generally the emergence of complexity from simplicity, an essential characteristic of adaptive evolving systems, is one of the founding cornerstones of the new science of complexity.

The field of physics is concerned with fundamental principles and concepts at all levels of organization that are quantifiable and mathematizable (meaning amenable to computation), and can consequently lead to precise predictions that can be tested by experiment and observation. From this perspective, it is natural to ask if there are “universal laws of life” that are mathematizable so that biology could also be formulated as a predictive, quantitative science much like physics. Is it conceivable that there are yet-to-be-discovered “Newton’s Laws of Biology” that would lead, at least in principle, to precise calculations of any biological process so that, for instance, one could accurately predict how long you and I would live?

This seems very unlikely. After all, life is the complex system par excellence, exhibiting many levels of emergent phenomena arising from multiple contingent histories. Nevertheless, it may not be unreasonable to conjecture that the generic coarse-grained behavior of living systems might obey quantifiable universal laws that capture their essential features. This more modest view presumes that at every organizational level average idealized biological systems can be constructed whose general properties are calculable. Thus we ought to be able to calculate the average and maximum life span of human beings even if we’ll never be able to calculate our own. This provides a point of departure or baseline for quantitatively understanding actual biosystems, which can be viewed as variations or perturbations around idealized norms due to local environmental conditions or historical evolutionary divergence. I will elaborate on this perspective in much greater depth below, as it forms the conceptual strategy for attacking most of the questions posed in the opening chapter.

1. FROM QUARKS AND STRINGS TO CELLS AND WHALES

Before launching into some of the big questions that have been raised, I want to make a short detour to describe the serendipitous journey that led me from investigating fundamental questions in physics to fundamental questions in biology and eventually to fundamental questions in the socioeconomic sciences that have a bearing on pivotal questions concerning global sustainability.

In October 1993 the U.S. Congress with the consent of President Bill Clinton officially canceled the largest scientific project ever conceived after having spent almost $3 billion on its construction. This extraordinary project was the mammoth Superconducting Super Collider (SSC), which, together with its detectors, was arguably the greatest engineering challenge ever attempted. The SSC was to be a giant microscope designed to probe distances down to a hundred trillionth of a micron with the aim of revealing the structure and dynamics of the fundamental constituents of matter. It would provide critical evidence for testing predictions derived from our theory of the elementary particles, potentially discover new phenomena, and lay the foundations for what was termed a “Grand Unified Theory” of all of the fundamental forces of nature. This grand vision would not only give us a deep understanding of what everything is made of but would also provide critical insights into the evolution of the universe from the Big Bang. In many ways it represented some of the highest ideals of mankind as the sole creature endowed with sufficient consciousness and intelligence to address the unending challenge of unraveling some of the deepest mysteries of the universe—perhaps even providing the very reason for our existence as the agents through which the universe would know itself.

The scale of the SSC was gigantic: it was to be more than fifty miles in circumference and would accelerate protons up to energies of 20 trillion electron volts at a cost of more than $10 billion. To give a sense of scale, an electron volt is a typical energy of the chemical reactions that form the basis of life. The energy of the protons in the SSC would have been eight times greater than that of the Large Hadron Collider now operating in Geneva that was recently in the limelight for discovering the Higgs particle.

The demise of the SSC was due to many, almost predictable, factors, including inevitable budget issues, the state of the economy, political resentment against Texas where the machine was being built, uninspired leadership, and so on. But one of the major reasons for its collapse was the rise of a climate of negativity toward traditional big science and toward physics in particular.1 This took many forms, but one that many of us were subjected to was the oft-repeated pronouncement I quoted earlier that “while the nineteenth and twentieth centuries were the centuries of physics, the twenty-first century will be the century of biology.”

Even the most arrogant hard-nosed physicist had a hard time disagreeing with the sentiment that biology would very likely eclipse physics as the dominant science of the twenty-first century. But what incensed many of us was the implication, oftentimes explicit, that there was no longer any need for further basic research in this kind of fundamental physics because we already knew all that needed to be known. Sadly, the SSC was a victim of this misguided parochial thinking.

At that time I was overseeing the high energy physics program at the Los Alamos National Laboratory, where we had a significant involvement with one of the two major detectors being constructed at the SSC. For those not familiar with the terminology, “high energy physics” is the name of the subfield of physics concerned with fundamental questions about the elementary particles, their interactions and cosmological implications. I was a theoretical physicist (and still am) whose primary research interests at that time were in this area. My visceral reaction to the provocative statements concerning the diverging trajectories of physics and biology was that, yes, biology will almost certainly be the predominant science of the twenty-first century, but for it to become truly successful, it will need to embrace some of the quantitative, analytic, predictive culture that has made physics so successful. Biology will need to integrate into its traditional reliance on statistical, phenomenological, and qualitative arguments a more theoretical framework based on underlying mathematizable or computational principles. I am embarrassed to say that I knew very little about biology at that time, and this outburst came mostly from arrogance and ignorance.

Nevertheless, I decided to put my money where my mouth was and started to think about how the paradigm and culture of physics might help solve interesting challenges in biology. There have, of course, been several physicists who made extremely successful forays into biology, the most spectacular of which was probably Francis Crick, who with James Watson determined the structure of DNA, which revolutionized our understanding of the genome. Another is the great physicist Erwin Schrödinger, one of the founders of quantum mechanics, whose marvelous little book titled What Is Life?, published in 1944, had a huge influence on biology.2 These examples were inspirational evidence that physics might have something of interest to say to biology, and had stimulated a small but growing stream of physicists crossing the divide, giving rise to the nascent field of biological physics.

I had reached my early fifties at the time of the demise of the SSC, and as I remarked at the opening of the book, I was becoming increasingly conscious of the inevitable encroachment of the aging process and the finiteness of life. Given the poor track record of males in my ancestry, it seemed natural to begin my thinking about biology by learning about aging and mortality. Because these are among the most ubiquitous and fundamental characteristics of life, I naively assumed that almost everything was known about them. But, to my great surprise, I learned that not only was there no accepted general theory of aging and death but the field, such as it was, was relatively small and something of a backwater. Furthermore, few of the questions that would be natural for a physicist to ask, such as those I posed in the opening chapter, seemed to have been addressed. In particular, where does the scale of one hundred years for human life span come from, and what would constitute a quantitative, predictive theory of aging?

Death is an essential feature of life. Indeed, implicitly it is an essential feature of the theory of evolution. A necessary component of the evolutionary process is that individuals eventually die so that their offspring can propagate new combinations of genes that eventually lead to adaptation by natural selection of new traits and new variations leading to the diversity of species. We must all die so that the new can blossom, explore, adapt, and evolve. Steve Jobs put it succinctly3:

No one wants to die. Even people who want to go to heaven don’t want to die to get there. And yet death is the destination we all share. No one has ever escaped it, and that is how it should be, because death is very likely the single best invention of life. It’s life’s change agent. It clears out the old to make way for the new.

Given the critical importance of death and of its precursor, the aging process, I assumed that I would be able to pick up an introductory biology textbook and find an entire chapter devoted to it as part of its discussion of the basic features of life, comparable to discussions of birth, growth, reproduction, metabolism, and so on. I had expected a pedagogical summary of a mechanistic theory of aging that would include a simple calculation showing why we live for about a hundred years, as well as answers to all of the questions I posed above. No such luck. Not even a mention of it, nor indeed was there any hint that these were questions of interest. This was quite a surprise, especially because, after birth, death is the most poignant biological event of a person’s life. As a physicist I began to wonder to what extent biology was a “real” science (meaning, of course, that it was like physics!), and how it was going to dominate the twenty-first century if it wasn’t concerned with these sorts of fundamental questions.

This apparent general lack of interest by the biological community in aging and mortality beyond a relatively small number of devoted researchers stimulated me to begin pondering these questions. As it appeared that almost no one was thinking about them in quantitative or analytic terms, there seemed to be an opportunity for a physics approach to make some small progress. Consequently, during interludes between grappling with quarks, gluons, dark matter, and string theory, I began to think about death.

As I embarked on this new direction, I received unexpected support for my ruminations about biology as a science and its relationship to mathematics from an unlikely source. I discovered that what I had presumed was subversive thinking had been expressed much more articulately and deeply almost one hundred years earlier by the eminent and somewhat eccentric biologist Sir D’Arcy Wentworth Thompson in his classic book On Growth and Form, published in 1917.4 It’s a wonderful book that has remained quietly revered not just in biology but in mathematics, art, and architecture, influencing thinkers and artists from Alan Turing and Julian Huxley to Jackson Pollock. A testament to its continuing popularity is that it still remains in print. The distinguished biologist Sir Peter Medawar, the father of organ transplants, who received the Nobel Prize for his work on graft rejection and acquired immune tolerance, called On Growth and Form “the finest work of literature in all the annals of science that have been recorded in the English tongue.”

Thompson was one of the last “Renaissance men” and is representative of a breed of multi- and transdisciplinary scientist-scholars that barely exists today. Although his primary influence was in biology, he was a highly accomplished classicist and mathematician. He was elected president of the British Classical Association, president of the Royal Geographical Society, and was a good enough mathematician to be made an honorary member of the prestigious Edinburgh Mathematical Society. He came from an intellectual Scottish family and had a name, much like Isambard Kingdom Brunel, that one might associate with a minor fictional character in a Victorian novel.

Thompson begins his book with a quote from the famous German philosopher Immanuel Kant, who had remarked that the chemistry of his day was “eine Wissenschaft, aber nicht Wissenschaft,” which Thompson translates as: chemistry is “a science, but not Science,” implying that “the criterion of true science lay in its relation to mathematics.” Thompson goes on to discuss how there now existed a predictive “mathematical chemistry” based on underlying principles, thereby elevating chemistry from “science” with a small s to “Science” with a capital S. On the other hand, biology had remained qualitative, without mathematical foundations or principles, so that it was still just “a science” with a lowercase s. It would only graduate to becoming “Science” when it incorporated mathematizable physical principles. Despite the extraordinary progress that has been made in the intervening century, I began to discover that the spirit of Thompson’s provocative characterization of biology still has some validity today.

Although he was awarded the prestigious Darwin Medal by the Royal Society in 1946, Thompson was critical of conventional Darwinian evolutionary theory because he felt that biologists overemphasized the role of natural selection and the “survival of the fittest” as the fundamental determinants of the form and structure of living organisms, rather than appreciating the importance of the role of physical laws and their mathematical expression in the evolutionary process. The basic question implicit in his challenge remains unanswered: are there “universal laws of life” that can be mathematized so that biology can be formulated as a predictive quantitative Science? He put it this way:

It behoves us always to remember that in physics it has taken great men to discover simple things. . . . How far even then mathematics will suffice to describe, and physics to explain, the fabric of the body, no man can foresee. It may be that all the laws of energy, and all the properties of matter, and all the chemistry of all the colloids are as powerless to explain the body as they are impotent to comprehend the soul. For my part, I think it is not so. Of how it is that the soul informs the body, physical science teaches me nothing; and that living matter influences and is influenced by mind is a mystery without a clue. Consciousness is not explained to my comprehension by all the nerve-paths and neurons of the physiologist; nor do I ask of physics how goodness shines in one man’s face, and evil betrays itself in another. But of the construction and growth and working of the body, as of all else that is of the earth earthy, physical science is, in my humble opinion, our only teacher and guide.

This pretty much expresses the credo of modern-day “complexity science,” including even the implication that consciousness is an emergent systemic phenomenon and not a consequence of just the sum of all the “nerve-paths and neurons” in the brain. The book is written in a scholarly but eminently readable style with surprisingly little mathematics. There are no pronouncements of great principles other than the belief that the physical laws of nature, written in the language of mathematics, are the major determinant of biological growth, form, and evolution.

Although Thompson’s book did not address aging or death, nor was it particularly helpful or sophisticated technically, its philosophy provided support and inspiration for contemplating and applying ideas and techniques from physics to all sorts of problems in biology. In my own thinking, this led me to perceive our bodies as metaphorical machines that need to be fed, maintained, and repaired but which eventually wear out and “die,” much like cars and washing machines. However, to understand how something ages and dies, whether an animal, an automobile, a company, or a civilization, one first needs to understand what the processes and mechanisms are that are keeping it alive, and then discern how these become degraded with time. This naturally leads to considerations of the energy and resources that are required for sustenance and possible growth, and their allocation to maintenance and repair for combating the production of entropy arising from destructive forces associated with damage, disintegration, wear and tear, and so on. This line of thinking led me to focus initially on the central role of metabolism in keeping us alive before asking why it can’t continue doing so forever.

2. METABOLIC RATE AND NATURAL SELECTION

Metabolism is the fire of life . . . and food, the fuel of life. Neither the neurons in your brain nor the molecules of your genes could function without being supplied by metabolic energy extracted from the food you eat. You could not walk, think, or even sleep without being supplied by metabolic energy. It supplies the power organisms need for maintenance, growth, and reproduction, and for specific processes such as circulation, muscle contraction, and nerve conduction.

Metabolic rate is the fundamental rate of biology, setting the pace of life for almost everything an organism does, from the biochemical reactions within its cells to the time it takes to reach maturity, and from the rate of uptake of carbon dioxide in a forest to the rate at which its litter breaks down. As was discussed in the opening chapter, the basal metabolic rate of the average human being is only about 90 watts, corresponding to a typical incandescent lightbulb and equivalent to the approximately 2,000 food calories you eat every day.
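As a rough arithmetic check on the equivalence between about 90 watts and about 2,000 food calories a day, the short Python sketch below converts a daily intake into average power. It assumes the standard conversion of one food calorie (a kilocalorie) to 4,184 joules; the function name is ours, chosen for illustration.

```python
# Convert a daily food-energy intake in kilocalories ("food calories")
# into an average power in watts (joules per second).
KCAL_TO_JOULES = 4184.0            # 1 kcal = 4,184 J (thermochemical calorie)
SECONDS_PER_DAY = 24 * 60 * 60     # 86,400 s


def kcal_per_day_to_watts(kcal_per_day: float) -> float:
    """Average power in watts corresponding to a daily energy intake."""
    return kcal_per_day * KCAL_TO_JOULES / SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"{kcal_per_day_to_watts(2000):.1f} W")  # roughly 97 W
```

The result, about 97 watts, is in the same ballpark as the round 90-watt figure; both numbers are approximations for an average adult.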

Like all of life, we evolved by a process of natural selection, interacting with and adapting to our fellow creatures, whether bacteria and viruses, ants and beetles, snakes and spiders, cats and dogs, or grass and trees, and everything else in a continuously challenging and evolving environment. We have been coevolving together in a never-ending multidimensional interplay of interaction, conflict, and adaptation. Each organism, each of its organs and subsystems, each cell type and genome, has therefore evolved following its own unique history in its own ever-changing environmental niche. The principle of natural selection, introduced independently by Charles Darwin and Alfred Russel Wallace, is key to the theory of evolution and the origin of species. Natural selection, or the “survival of the fittest,” is the gradual process by which a successful variation in some inheritable trait or characteristic becomes fixed in a population through the differential reproductive success of organisms that have developed this trait by interacting with their environment. As Wallace expressed it, there is sufficiently broad variation that “there is always material for natural selection to act upon in some direction that may be advantageous,” or as put more succinctly by Darwin: “each slight variation, if useful, is preserved.”

Out of this melting pot, each species evolves with its suite of physiological traits and characteristics that reflect its unique path through evolutionary time, resulting in the extraordinary diversity and variation across the spectrum of life from bacteria to whales. So after many millions of years of evolutionary tinkering and adaptation, of playing the game of the survival of the fittest, we human beings have ended up walking on two legs, standing around five to six feet tall, living for up to one hundred years, having a heart that beats about sixty times a minute and a systolic blood pressure of about 100 mm Hg, sleeping about eight hours a day, having an aorta about eighteen inches long and about five hundred mitochondria in each of our liver cells, and running on a metabolic rate of about 90 watts.

Is all of this solely arbitrary and capricious, the result of millions of tiny accidents and fluctuations in our long history that have been frozen in place by the process of natural selection, at least for the time being? Or is there some order here, some hidden pattern reflecting other mechanisms at work?

Indeed there is, and explaining it is the segue back to scaling.

3. SIMPLICITY UNDERLYING COMPLEXITY: KLEIBER’S LAW, SELF-SIMILARITY, AND ECONOMIES OF SCALE

We need about 2,000 food calories a day to live our lives. How much food and energy do other animals need? What about cats and dogs, mice and elephants? Or, for that matter, fish, birds, insects, and trees? These questions were posed at the opening of the book where I emphasized that despite the naive expectation from natural selection, and despite the extraordinary complexity and diversity of life, and despite the fact that metabolism is perhaps the most complex physical-chemical process in the universe, metabolic rate exhibits an extraordinarily systematic regularity across all organisms. As was shown in Figure 1, metabolic rate scales with body size in the simplest possible manner one could imagine when plotted logarithmically against mass, namely, as a straight line indicative of a simple power law scaling relationship.

The scaling of metabolic rate has been known for more than eighty years. Although primitive versions of it were known before the end of the nineteenth century, its modern incarnation is credited to the distinguished physiologist Max Kleiber, who formalized it in a seminal paper published in 1932 in Hilgardia, a relatively obscure agricultural journal.5 I was quite excited when I first came across Kleiber’s law because I had presumed that the randomness and unique historical path dependency implicit in how each species had evolved would have resulted in a huge uncorrelated variability among them. Even among mammals, after all, whales, giraffes, humans, and mice don’t look very much alike except for some very general features, and each operates in a vastly different environment facing very different challenges and opportunities.

In his pioneering work, Kleiber surveyed the metabolic rates for a spectrum of animals ranging from a small dove weighing about 150 grams to a large steer weighing almost 1,000 kilograms. Over the ensuing years his analysis has been extended by many researchers to include the entire spectrum of mammals ranging from the smallest, the shrew, to the largest, the blue whale, thereby covering more than eight orders of magnitude in mass. Remarkably, and of equal importance, the same scaling has been shown to be valid across all multicellular taxonomic groups including fish, birds, insects, crustacea, and plants, and even to extend down to bacteria and other unicellular organisms.6 Overall, it encompasses an astonishing twenty-seven orders of magnitude, perhaps the most persistent and systematic scaling law in the universe.

Because the range of animals in Figure 1 spans well over five orders of magnitude (a factor of more than 100,000), from a little mouse weighing only 20 grams (0.02 kg) to a huge elephant weighing almost 10,000 kilograms, we are forced to plot the data logarithmically, meaning that the scales on both axes increase by successive factors of ten. For instance, mass increases along the horizontal axis from 0.001 to 0.01 to 0.1 to 1 to 10 to 100 kilograms, and so on, rather than linearly from 1 to 2 to 3 to 4 kilograms, et cetera. Had we tried to plot this on a standard-size piece of paper using a conventional linear scale, all of the data points except the elephant would pile up in the bottom left-hand corner of the graph, because even the next lightest animals after the elephant, the bull and the horse, are more than ten times lighter. To be able to distinguish all of them with any reasonable resolution would require a ridiculously large piece of paper more than a kilometer wide. And to resolve the eight orders of magnitude between the shrew and the blue whale it would have to be more than 100 kilometers wide.
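To make the paper-size argument concrete, this Python sketch places a few of the animals mentioned in this chapter along a linear axis and along a logarithmic one, each position expressed as a fraction of the axis width. The masses are the round figures used in the text, and the helper names are illustrative assumptions.

```python
import math

# Round masses in kilograms taken from examples in the text
# (mouse 20 g, cat 3 kg, elephant roughly 10,000 kg).
MASSES_KG = {"mouse": 0.02, "cat": 3.0, "elephant": 10_000.0}


def linear_position(m: float, m_max: float) -> float:
    """Fraction of the way along a linear axis running from 0 to m_max."""
    return m / m_max


def log_position(m: float, m_min: float, m_max: float) -> float:
    """Fraction of the way along a logarithmic axis running from m_min to m_max."""
    return (math.log10(m) - math.log10(m_min)) / (math.log10(m_max) - math.log10(m_min))


if __name__ == "__main__":
    lo, hi = min(MASSES_KG.values()), max(MASSES_KG.values())
    print(f"orders of magnitude spanned: {math.log10(hi / lo):.1f}")
    for name, m in MASSES_KG.items():
        print(f"{name:>8}: linear {linear_position(m, hi):.6f}  log {log_position(m, lo, hi):.2f}")
```

On the linear axis the mouse lands about two millionths of the way across, while on the logarithmic axis the cat sits roughly 38 percent of the way along, comfortably separated from both ends.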

So as we saw when discussing the Richter scale for earthquakes in the previous chapter, there are very practical reasons for using logarithmic coordinates for representing data such as this which span many orders of magnitude. But there are also deep conceptual reasons for doing so related to the idea that the structures and dynamics being investigated have self-similar properties, which are represented mathematically by simple power laws, as I will now explain.

We’ve seen that a straight line on a logarithmic plot represents a power law whose exponent is its slope (⅔ in the case of the scaling of strength, shown in Figure 7). In Figure 1 you can readily see that for every four orders of magnitude increase in mass (along the horizontal axis), metabolic rate increases by only three orders of magnitude (along the vertical axis), so the slope of the straight line is ¾, the famous exponent in Kleiber’s law. To illustrate more specifically what this implies, consider a cat weighing 3 kilograms, which is 100 times heavier than a mouse weighing 30 grams. Kleiber’s law can straightforwardly be used to calculate their metabolic rates: calibrated to be consistent with the roughly 90 watts of an adult human, it gives around 8 watts for the cat and about a quarter of a watt for the mouse. Thus, even though the cat is 100 times heavier than the mouse, its metabolic rate is only about 32 times greater (100^¾ ≈ 32), an explicit example of economy of scale.
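The following Python sketch applies Kleiber’s law, B = B₀M^¾, to the mouse and the cat. The normalization B₀ is an assumption made for illustration: it is chosen so that a hypothetical 70-kilogram adult has a basal rate of 90 watts. The absolute wattages depend entirely on that choice, but the cat-to-mouse ratio of about 32 (that is, 100^¾) and the log-log slope of ¾ do not.

```python
import math

# Kleiber's law: B = B0 * M**(3/4), with M in kilograms and B in watts.
# ASSUMPTION: B0 is calibrated so a hypothetical 70 kg adult burns 90 W;
# published fits use slightly different constants.
KLEIBER_EXPONENT = 0.75
HUMAN_MASS_KG = 70.0
HUMAN_RATE_W = 90.0
B0 = HUMAN_RATE_W / HUMAN_MASS_KG ** KLEIBER_EXPONENT  # watts per kg**0.75


def metabolic_rate(mass_kg: float) -> float:
    """Basal metabolic rate in watts predicted by Kleiber's law."""
    return B0 * mass_kg ** KLEIBER_EXPONENT


if __name__ == "__main__":
    mouse, cat = metabolic_rate(0.03), metabolic_rate(3.0)
    print(f"mouse {mouse:.2f} W, cat {cat:.1f} W, ratio {cat / mouse:.1f}")
    # Slope on a log-log plot: four decades of mass give three decades of rate.
    slope = math.log10(metabolic_rate(1e4) / metabolic_rate(1.0)) / 4
    print(f"log-log slope {slope:.2f}")
```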

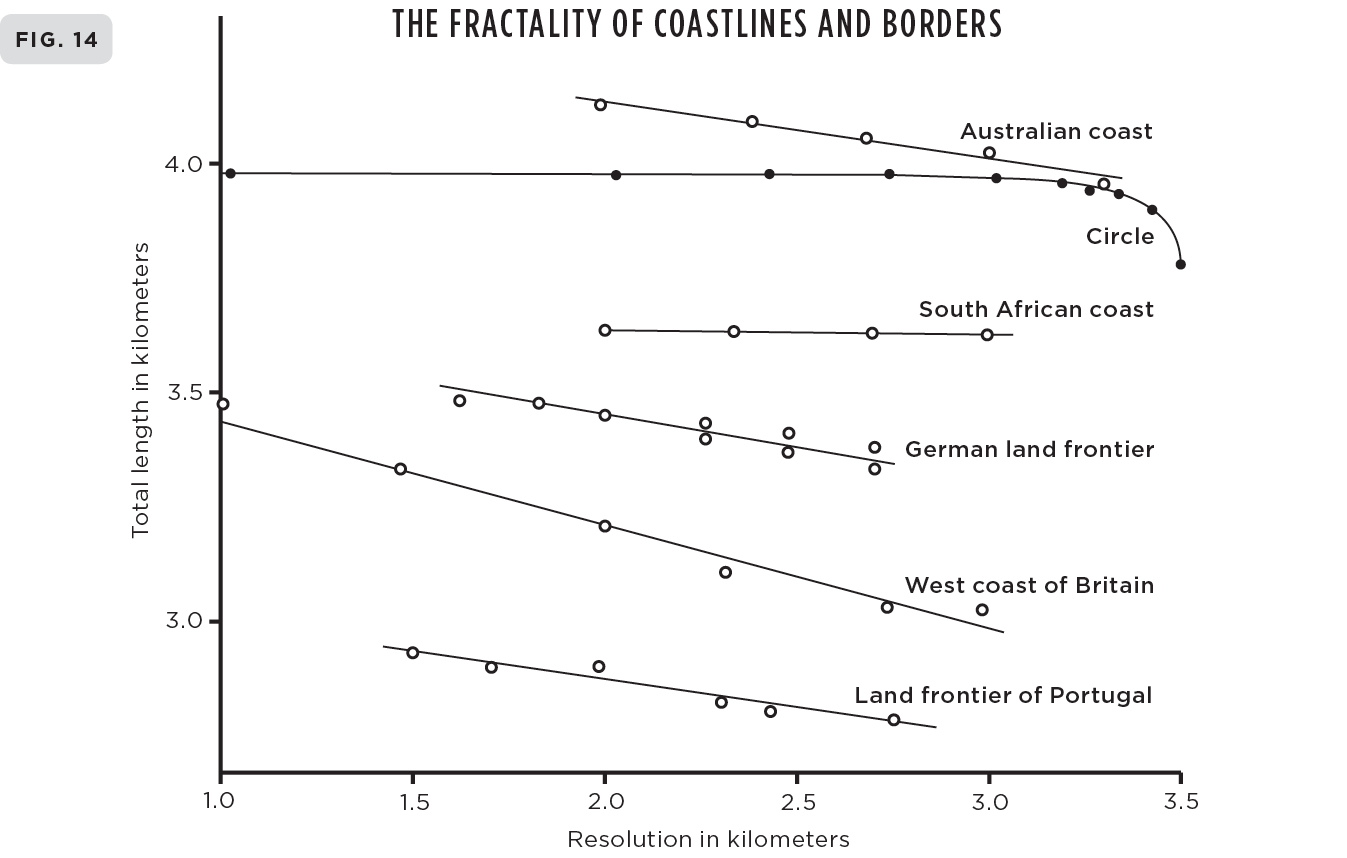

If we now consider a cow that is 100 times heavier than the cat, then Kleiber’s law predicts that its metabolic rate is likewise 32 times greater than the cat’s, and if we extend this to a whale that is 100 times heavier than the cow, its metabolic rate would be 32 times greater than the cow’s. This repetitive behavior, the recurrence in this case of the same factor 32 as we move up in mass by the same repetitive factor of 100, is an example of the general self-similar feature of power laws. More generally: if the mass is increased by any arbitrary factor at any scale (100, in the example), then the metabolic rate increases by the same factor (32, in the example) no matter what the value of the initial mass is, that is, whether it’s that of a mouse, cat, cow, or whale. This remarkably systematic repetitive behavior is called scale invariance or self-similarity and is a property inherent to power laws. It is closely related to the concept of a fractal, which will be discussed in detail in the following chapter. To varying degrees, fractality, scale invariance, and self-similarity are ubiquitous across nature from galaxies and clouds to your cells, your brain, the Internet, companies, and cities.
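Self-similarity can be seen directly by walking up the mouse, cat, cow, and whale chain in steps of 100 in mass. In this Python sketch the masses beyond the cat are illustrative values that simply multiply by 100 at each step, and the normalization constant is set to one because only ratios matter.

```python
# Self-similarity of a power law: multiplying mass by a fixed factor always
# multiplies metabolic rate by the same factor, whatever the starting mass.
EXPONENT = 0.75  # Kleiber exponent


def rate(mass_kg: float) -> float:
    # Normalization set to 1 for illustration: only ratios matter here.
    return mass_kg ** EXPONENT


# Mouse -> cat -> cow -> whale, each step 100 times heavier (illustrative masses).
chain = [("mouse", 0.03), ("cat", 3.0), ("cow", 300.0), ("whale", 30_000.0)]

if __name__ == "__main__":
    for (a, m_a), (b, m_b) in zip(chain, chain[1:]):
        print(f"{a} -> {b}: mass x{m_b / m_a:.0f}, rate x{rate(m_b) / rate(m_a):.1f}")
```

Every step prints the same pair of factors, 100 in mass and about 31.6 in metabolic rate, which is the scale invariance described above.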

We just saw that a cat that is 100 times heavier than a mouse requires only about 32 times as much energy to sustain it even though it has approximately 100 times as many cells, a classic example of an economy of scale resulting from the essentially nonlinear nature of Kleiber’s law. Naive linear reasoning would have predicted the cat’s metabolic rate to be 100 times larger, rather than only 32 times. Similarly, if the size of an animal is doubled, it doesn’t need 100 percent more energy to sustain it; it needs only about 68 percent more (because 2^¾ ≈ 1.68), so roughly a third of the extra energy that linear scaling would demand is saved with each doubling. Thus, in a systematically predictable and quantitative way, the larger the organism, the less energy each cell has to produce per second and the less energy is needed to sustain each gram of tissue. Your cells work less hard than your dog’s, but your horse’s work even less hard. Elephants are roughly 10,000 times heavier than rats, but their metabolic rates are only 1,000 times larger, despite their having roughly 10,000 times as many cells to support. Thus, an elephant’s cells operate at about a tenth the rate of a rat’s, resulting in a corresponding decrease in the rate of cellular damage and consequently in greater longevity for the elephant, as will be explained in greater detail in chapter 4. This is an example of how the systematic economy of scale has profound consequences that reverberate across life from birth and growth to death.
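The economy of scale in this paragraph follows from the same ¾ exponent. This Python sketch assumes, as the text does, that the number of cells is proportional to body mass, so the rate per cell changes by the total-rate factor divided by the mass factor. The function names are illustrative.

```python
# Economy of scale implied by Kleiber's law (total rate proportional to M**0.75).
# ASSUMPTION: the number of cells is proportional to body mass, as in the text.
EXPONENT = 0.75


def rate_factor(mass_factor: float) -> float:
    """Factor by which total metabolic rate grows when mass grows by mass_factor."""
    return mass_factor ** EXPONENT


def per_cell_factor(mass_factor: float) -> float:
    """Factor by which the metabolic rate per cell (or per gram) changes."""
    return rate_factor(mass_factor) / mass_factor


if __name__ == "__main__":
    print(f"doubling mass: total rate x{rate_factor(2):.2f}")  # about 1.68
    print(f"rat -> elephant (x10,000): total x{rate_factor(1e4):.0f}, "
          f"per cell x{per_cell_factor(1e4):.2f}")             # about 1000 and 0.10
```

Doubling mass multiplies the total rate by about 1.68, and a 10,000-fold increase from rat to elephant multiplies it by 1,000 while cutting the per-cell rate to about a tenth.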

4. UNIVERSALITY AND THE MAGIC NUMBER FOUR THAT CONTROLS LIFE

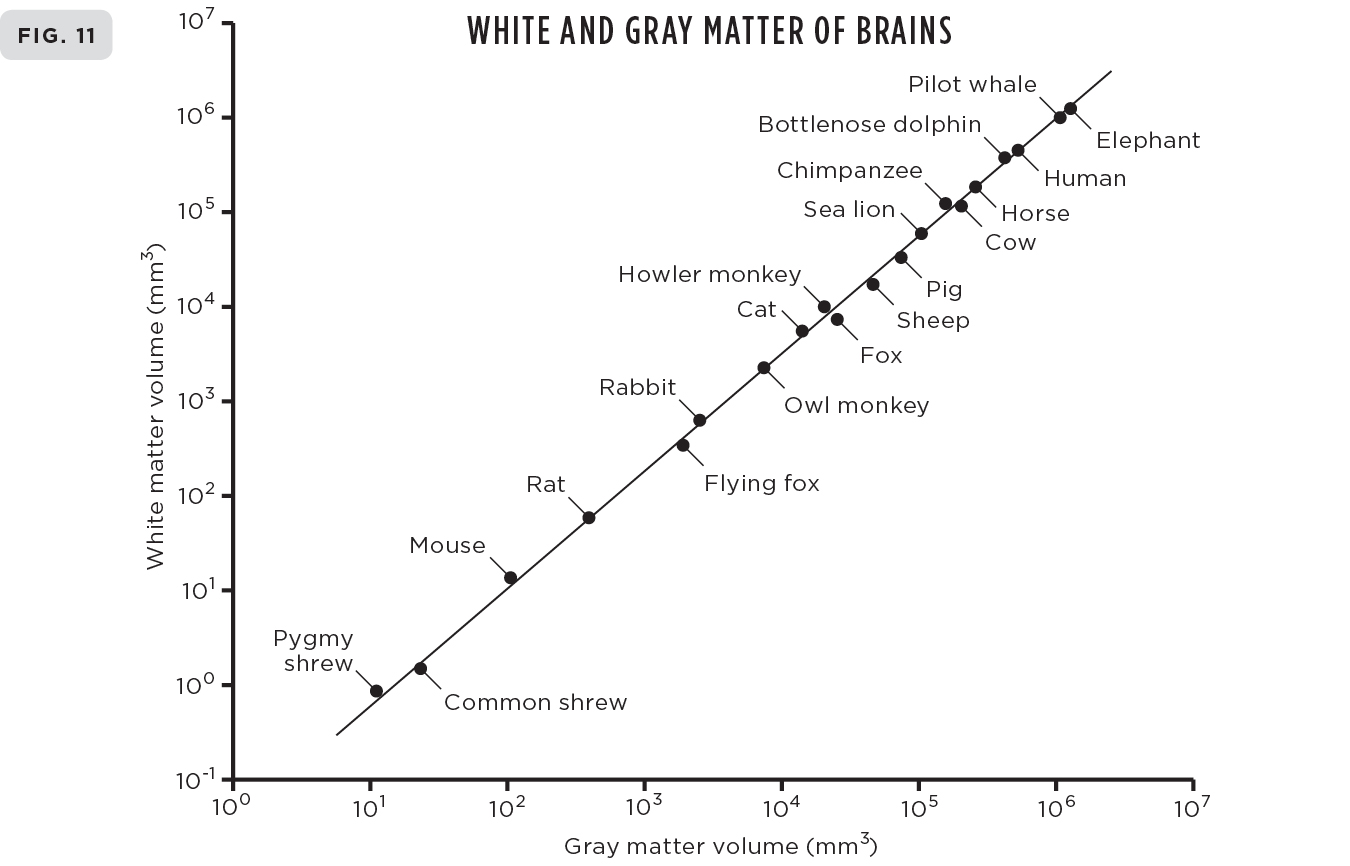

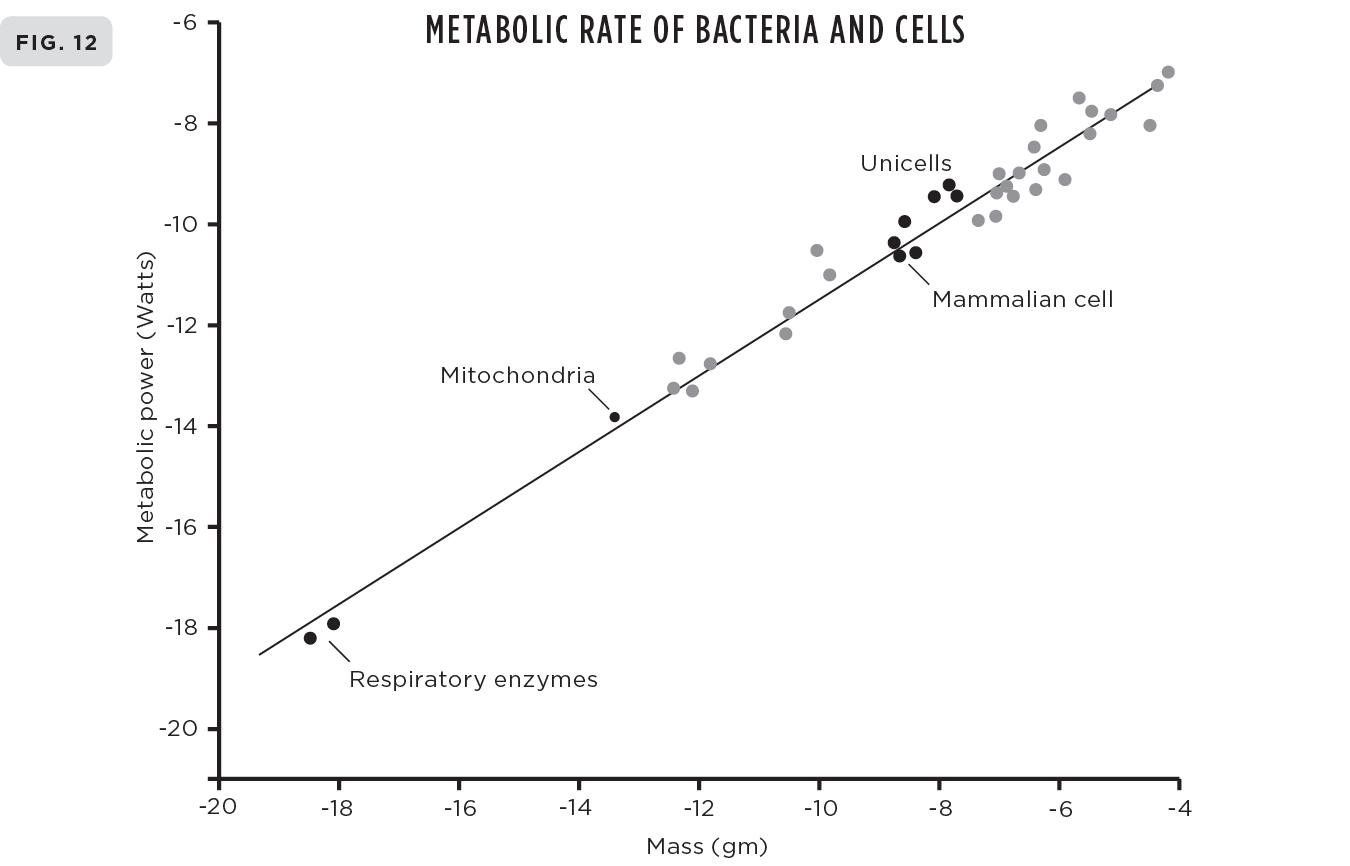

The systematic regularity of Kleiber’s law is pretty amazing, but equally surprising is that similar systematic scaling laws hold for almost any physiological trait or life-history event across the entire range of life from cells to whales to ecosystems. In addition to metabolic rates, these include quantities such as growth rates, genome lengths, lengths of aortas, tree heights, the amount of cerebral gray matter in the brain, evolutionary rates, and life spans; a sampling of these is illustrated in Figures 9–12. There are probably well over fifty such scaling laws, and (another big surprise) their corresponding exponents (the analog of the ¾ in Kleiber’s law) are invariably very close to simple multiples of ¼.

For example, the exponent for growth rates is very close to ¾, for lengths of aortas and genomes it’s ¼, for heights of trees ¼, for cross-sectional areas of both aortas and tree trunks ¾, for brain sizes ¾, for cerebral white matter relative to gray matter 5⁄4, for heart rates minus ¼, for mitochondrial densities in cells minus ¼, for rates of evolution minus ¼, for diffusion rates across membranes minus ¼, for life spans ¼ . . . and many, many more. The “minus” here simply indicates that the corresponding quantity decreases with size rather than increases, so, for instance, heart rates decrease with increasing body size as the inverse ¼ power of mass, as shown in Figure 10. I can’t resist drawing your attention to the intriguing fact that aortas and tree trunks scale in the same way.
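A power law Y = Y0·M^b plots as a straight line on logarithmic axes, and the exponent b is that line's slope. The Python sketch below shows how an exponent can be read off data. It fits synthetic data, not real measurements, generated from an assumed ¾-power law with random scatter. The normalization Y0 = 3, the noise level, and the helper `fit_power_law` are all illustrative assumptions. Published allometric studies use measured data and often more careful regression methods.

```python
import math
import random

# Hypothetical synthetic data: masses spanning eight orders of magnitude, with
# values drawn from an assumed power law Y = Y0 * M**0.75 plus multiplicative noise.
random.seed(1)
TRUE_EXPONENT = 0.75
Y0 = 3.0  # arbitrary normalization, chosen only for illustration

masses = [10 ** random.uniform(-2, 6) for _ in range(200)]
values = [Y0 * m ** TRUE_EXPONENT * math.exp(random.gauss(0, 0.2)) for m in masses]


def fit_power_law(xs, ys):
    """Ordinary least-squares fit of log(y) = log(Y0) + b*log(x); returns (Y0, b)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    sxx = sum((a - mean_x) ** 2 for a in lx)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), slope


y0_hat, b_hat = fit_power_law(masses, values)
print(f"fitted exponent b = {b_hat:.3f}, normalization Y0 = {y0_hat:.2f}")
```

The fitted slope comes out close to 0.75. The scatter stays small because the masses span such a wide range, which is one reason scaling laws show up so clearly on log-log plots.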

Particularly fascinating is the emergence of the number four in the guise of the ¼ powers that appear in all of these exponents. It occurs ubiquitously across the entire panoply of life and seems to play a special, fundamental role in determining many of the measurable characteristics of organisms regardless of their evolved design. Viewed through the lens of scaling, a remarkably general universal pattern emerges, strongly suggesting that evolution has been constrained by other general physical principles beyond natural selection.

These systematic scaling relationships are highly counterintuitive. They show that almost all the physiological characteristics and life-history events of any organism are primarily determined simply by its size. For example, the pace of biological life decreases systematically and predictably with increasing size: large mammals live longer, take longer to mature, have slower heart rates, and have cells that work less hard than those of small mammals, all to the same predictable degree. Doubling the mass of a mammal increases all of its timescales, such as its life span and time to maturity, by about 19 percent on average (2 raised to the ¼ power is about 1.19) and, concomitantly, decreases all rates, such as its heart rate, by the same factor, that is, by about 16 percent.
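The effect of doubling follows directly from the quarter-power exponents: timescales scale as M^¼ and rates as M^-¼. The Python sketch below computes both factors under those idealized exponents. The helper name `scale_factor` is illustrative.

```python
# Quarter-power scaling: biological timescales (life span, time to maturity)
# scale as M**(1/4); biological rates (heart rate) scale as M**(-1/4).


def scale_factor(mass_ratio: float, exponent: float) -> float:
    """Factor by which a quantity Y ∝ M**exponent changes when mass grows by mass_ratio."""
    return mass_ratio ** exponent


timescale_factor = scale_factor(2, 0.25)  # e.g. life span, time to maturity
rate_factor = scale_factor(2, -0.25)      # e.g. heart rate

print(f"timescales grow by about {100 * (timescale_factor - 1):.0f}%")
print(f"rates fall by about {100 * (1 - rate_factor):.0f}%")
# With exponents of exactly +1/4 and -1/4 the two factors cancel, so a rate
# multiplied by a timescale does not depend on mass at all.
print(f"rate factor x timescale factor = {timescale_factor * rate_factor:.3f}")
```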

Whales live in the ocean, elephants have trunks, giraffes have long necks, we walk on two legs, and dormice scurry around, yet despite these obvious differences, we are all, to a large degree, nonlinearly scaled versions of one another. If you tell me the size of a mammal, I can use the scaling laws to tell you almost everything about the average values of its measurable characteristics: how much food it needs to eat each day, what its heart rate is, how long it will take to mature, the length and radius of its aorta, its life span, how many offspring it will have, and so on. Given the extraordinary complexity and diversity of life, this is pretty amazing.

Figures 9-12: A small sampling of the many examples of scaling showing their remarkable universality and diversity. (9) Biomass production of both individual insects and insect colonies, showing how they both scale with mass with an exponent of ¾, just like the metabolic rate of animals shown in Figure 1. (10) Heart rates of mammals scale with mass with an exponent of -¼. (11) The volume of white matter in mammalian brains scales with the volume of gray matter with an exponent of 5⁄4. (12) The metabolic rate of single cells and bacteria scales with their mass following the classic ¾ exponent of Kleiber’s law for multicellular animals.

I was very excited when I realized that my quest to learn about some of the mysteries of death had unexpectedly led me to learn about some of the more surprising and intriguing mysteries of life. For here was an area of biology that was explicitly quantitative, expressible in mathematical terms, and, at the same time, manifested a spirit of “universality” beloved of physicists. In addition to the surprise that these “universal” laws seemed to be at odds with a naive interpretation of natural selection, it was equally surprising that they seemed not to have been fully appreciated by most biologists, even though many were aware of them. Furthermore, there was no general explanation for their origin. Here was something ripe for a physicist to get his teeth into.

Actually, it isn’t quite true that scaling laws had been entirely unappreciated by biologists. Scaling laws had certainly maintained an ongoing presence in ecology and, until the advent of the molecular and genomics revolution in biology in the 1950s, had attracted the attention of many eminent biologists, including Julian Huxley, J. B. S. Haldane, and D’Arcy Thompson.7 Indeed, Huxley coined the term allometric to describe how physiological and morphological characteristics of organisms scale with body size, though his focus was primarily on how that occurred during growth. Allometric was introduced as a generalization of the Galilean concept of isometric scaling, discussed in the previous chapter, where body shape and geometry do not change as size increases, so all lengths associated with an organism increase in the same proportion; iso is Greek for “the same,” and metric is derived from metrikos, meaning “measure.” Allometric, on the other hand, is derived from allo, meaning “different,” and refers to the typically more general situation where shapes and morphology change as body size increases and different dimensions scale differently. For example, the radii and lengths of tree trunks, or for that matter the limbs of animals, scale differently from one another as size increases: radii scale as the ⅜ power of mass, whereas lengths scale more slowly with ¼ power (that is, as the 2⁄8 power). Consequently, trunks and limbs become more thickset and stockier as the size of a tree or animal increases; just think of an elephant’s legs relative to those of a mouse. This is a generalization of Galileo’s original argument regarding the scaling of strength. Had it been isometric, then radii and lengths would have scaled in the same way and the shape of trunks and limbs would have remained unchanged, making the support of the animal or tree unstable as it increases in size. An elephant whose legs have the same spindly shape as a mouse would collapse under its own weight.
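The change of shape follows from subtracting exponents. If radius scales as M^⅜ and length as M^¼, their ratio, a measure of stockiness, scales as M^⅛. Under isometric scaling both would scale as M^⅓ and the ratio would never change. The Python sketch below applies this. The 30 g mouse and 3,000 kg elephant are assumed, illustrative masses, and `thickness_ratio` is a hypothetical helper.

```python
# Allometric (not isometric) scaling of limbs and trunks:
# radius r ∝ M**(3/8), length l ∝ M**(1/4) = M**(2/8), so r/l ∝ M**(1/8).

RADIUS_EXPONENT = 3 / 8
LENGTH_EXPONENT = 1 / 4


def thickness_ratio(mass_ratio: float) -> float:
    """Factor by which radius/length (stockiness) grows when mass grows by mass_ratio."""
    return mass_ratio ** (RADIUS_EXPONENT - LENGTH_EXPONENT)


# Assumed, illustrative masses: a 30 g mouse and a 3,000 kg elephant (ratio 1e5).
mouse_kg, elephant_kg = 0.03, 3000.0
print(f"elephant limbs are ~{thickness_ratio(elephant_kg / mouse_kg):.1f}x stockier than a mouse's")
```

A mass ratio of 10^5 gives a factor of about 4.2 in relative thickness. With isometric exponents the same function would return 1 for any mass ratio.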

Huxley’s term allometric was extended from its more restrictive geometric, morphological, and ontogenetic origins to describe the kinds of scaling laws that I discussed above, which include more dynamical phenomena such as how flows of energy and resources scale with body size, with metabolic rate being the prime example. All of these are now commonly referred to as allometric scaling laws.

Julian Huxley, himself a very distinguished biologist, was the grandson of the famous Thomas Huxley, the biologist who championed Charles Darwin and the theory of evolution by natural selection, and the brother of the novelist and futurist Aldous Huxley. In addition to the word allometric, Julian Huxley brought several other new words and concepts into biology, including replacing the much-maligned term race with the phrase ethnic group.

In the 1980s several excellent books were written by mainstream biologists summarizing the extensive literature on allometry.8 Data across all scales and all forms of life were compiled and analyzed and it was unanimously concluded that quarter-power scaling was a pervasive feature of biology. However, there was surprisingly little theoretical or conceptual discussion, and no general explanation was given for why there should be such systematic laws, where they came from, or how they related to Darwinian natural selection.

As a physicist, it seemed to me that these “universal” quarter-power scaling laws were telling us something fundamental about the dynamics, structure, and organization of life. Their existence strongly suggested that generic underlying dynamical processes that transcend individual species were at work constraining evolution. This therefore opened a possible window onto underlying emergent laws of biology and led to the conjecture that the generic coarse-grained behavior of living systems obeys quantifiable laws that capture their essential features.

It would seem impossible, almost diabolical, that these scaling laws could be just a coincidence, each an independent phenomenon, a “special” case reflecting its own unique dynamics and organization, a wicked series of accidents of evolutionary dynamics, so that the scaling of heart rates is unrelated to the scaling of metabolic rates and the heights of trees. Of course, every individual organism, biological species, and ecological assemblage is unique, reflecting differences in genetic makeup, ontogenetic pathways, environmental conditions, and evolutionary history. So in the absence of any additional physical constraints, one might have expected that different organisms, or at least each group of related organisms inhabiting similar environments, might exhibit different size-related patterns of variation in structure and function. The fact that they don’t—that the data almost always closely approximate a simple power law across a broad range of size and diversity—raises some very challenging questions. The fact that the exponents of these power laws are nearly always simple multiples of ¼ poses an even greater challenge.

The question of what underlying mechanism could account for their origin seemed a wonderful conundrum to think about, especially given my morbid interest in aging and death and the fact that even life spans seem to scale allometrically with ¼ power (albeit with large variance).

5. ENERGY, EMERGENT LAWS, AND THE HIERARCHY OF LIFE

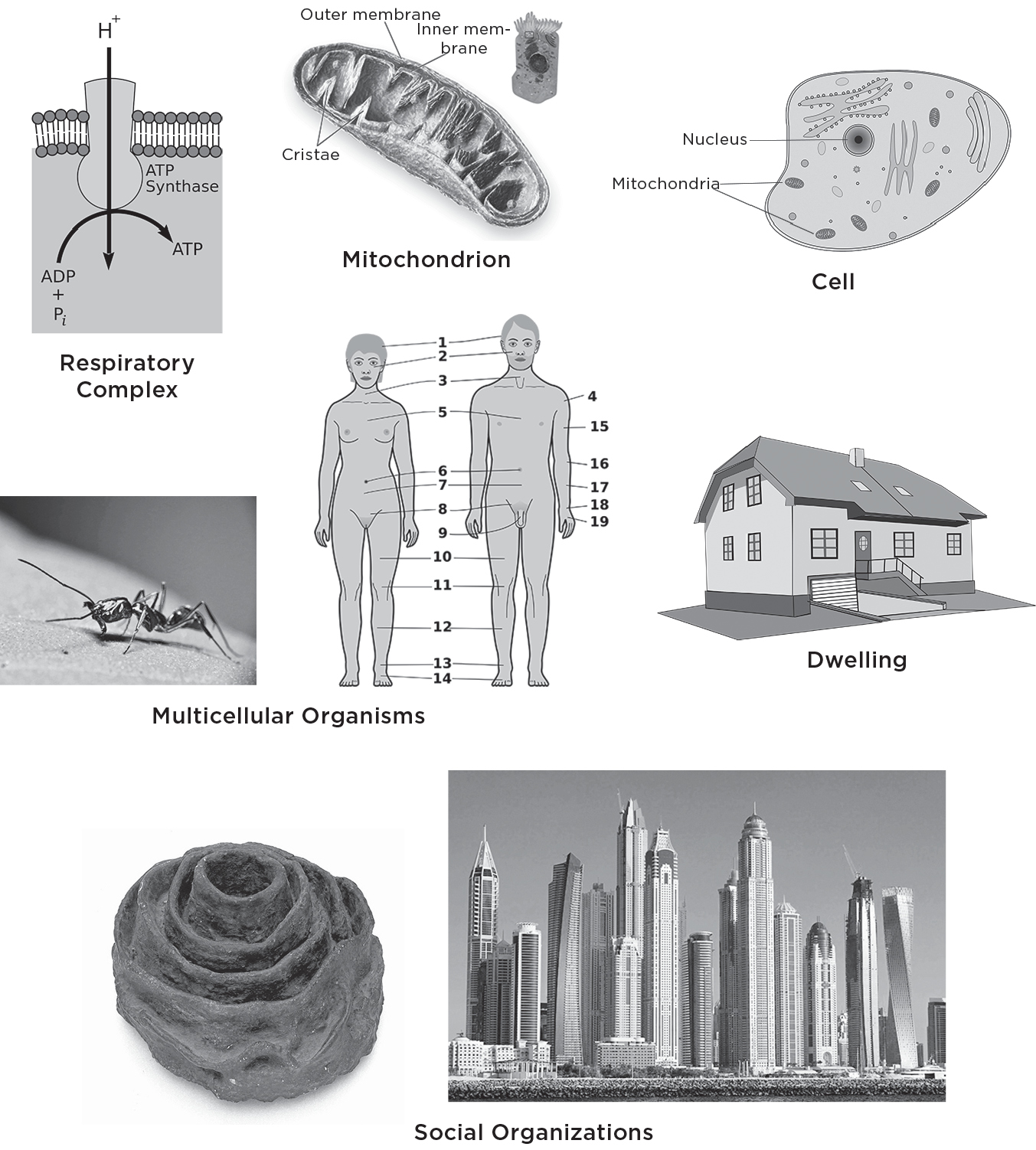

As I have emphasized, no aspect of life can function without energy. Just as every muscle contraction or any activity requires metabolic energy, so does every random thought in your brain, every twitch of your body even while you sleep, and even the replication of your DNA in your cells. At the most fundamental biochemical level metabolic energy is created in semiautonomous molecular units within cells called respiratory complexes. The critical molecule that plays the central role in metabolism goes by the slightly forbidding name of adenosine triphosphate, usually referred to as ATP. The detailed biochemistry of metabolism is extremely complicated but in essence it involves the breaking down of ATP, which is relatively unstable in the cellular environment, from adenosine triphosphate (with three phosphates) into ADP, adenosine diphosphate (with just two phosphates), thereby releasing the energy stored in the binding of the additional phosphate. The energy derived from breaking this phosphate bond is the source of your metabolic energy and therefore what is keeping you alive. The reverse process converts ADP back into ATP using energy from food via oxidative respiration in mammals such as ourselves (that’s why we have to breathe in oxygen), or via photosynthesis in plants. The cycle of releasing energy from the breakup of ATP into ADP and its recycling back from ADP to store energy in ATP forms a continuous loop process much like the charging and recharging of a battery. A cartoon of this process is shown here. Unfortunately it doesn’t do justice to the beauty and elegance of this extraordinary mechanism that fuels most of life.

Given its central role, it’s not surprising that the flux of ATP is often referred to as the currency of metabolic energy for almost all of life. At any one time our bodies contain only about half a pound (about 250 g) of ATP, but here’s something truly extraordinary that you should know about yourself: every day you typically make about 10²⁶ ATP molecules (that’s one hundred trillion trillion molecules), corresponding to a mass of about 80 kilograms (about 175 lbs.). In other words, each day you produce and recycle the equivalent of your own body weight of ATP! Taken together, all of these ATPs add up to meet our total metabolic needs at the rate of the approximately 90 watts we require to stay alive and power our bodies.
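These figures can be checked against one another with standard constants. The Python sketch below converts 80 kg of ATP into a molecule count using Avogadro's number and ATP's molar mass of about 507 g/mol. It then estimates the power that this daily turnover could supply. That second step assumes roughly 50 kJ is released per mole of ATP hydrolyzed under cellular conditions. The figure is a commonly quoted approximation, not a value from the text.

```python
# Back-of-envelope check of the daily ATP budget.
AVOGADRO = 6.022e23         # molecules per mole
ATP_MOLAR_MASS_G = 507.18   # grams per mole of ATP
SECONDS_PER_DAY = 86_400


def atp_molecules(mass_kg: float) -> float:
    """Number of ATP molecules in a given mass (kilograms)."""
    return mass_kg * 1000.0 / ATP_MOLAR_MASS_G * AVOGADRO


daily = atp_molecules(80.0)  # the ~80 kg recycled per day
print(f"80 kg of ATP is about {daily:.1e} molecules")

# Assumption: about 50 kJ released per mole of ATP hydrolyzed in the cell.
ENERGY_PER_MOL_J = 50e3
watts = daily / AVOGADRO * ENERGY_PER_MOL_J / SECONDS_PER_DAY
print(f"which corresponds to roughly {watts:.0f} W")
```

The result is about 9.5 × 10^25 molecules (roughly 10^26) and a power of roughly 90 watts. The molecule count, the daily mass, and the metabolic rate are therefore mutually consistent.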

These little energy generators, the respiratory complexes, are situated on crinkly membranes inside mitochondria, which are potato-shaped objects floating around inside cells. Each mitochondrion contains about five hundred to one thousand of these respiratory complexes . . . and there are about five hundred to one thousand of these mitochondria inside each of your cells, depending on the cell type and its energy needs. Because muscles require greater access to energy, their cells are densely packed with mitochondria, whereas fat cells have many fewer. So on average each cell in your body may have up to a million of these little engines distributed among its mitochondria working away night and day, collectively manufacturing the astronomical number of ATPs needed to keep you viable, healthy, and strong. The rate at which the total number of these ATPs is produced is a measure of your metabolic rate.

Your body is composed of about a hundred trillion (10¹⁴) cells. Even though they represent a broad range of diverse functionalities from neuronal and muscular to protective (skin) and storage (fat), they all share the same basic features. They all process energy in a similar way via the hierarchy of respiratory complexes and mitochondria. Which raises a huge challenge: the five hundred or so respiratory complexes inside your mitochondria cannot behave as independent entities but have to act collectively in an integrated coherent fashion in order to ensure that mitochondria function efficiently and deliver energy in an appropriately ordered fashion to cells. Similarly, the five hundred or so mitochondria inside each of your cells do not act independently but, like respiratory complexes, have to interact in an integrated coherent fashion to ensure that the 10¹⁴ cells that constitute your body are supplied with the energy they need to function efficiently and appropriately. Furthermore, these hundred trillion cells have to be organized into a multitude of subsystems such as your various organs, whose energy needs vary significantly depending on demand and function, thereby ensuring that you can do all of the various activities that constitute living, from thinking and dancing to having sex and repairing your DNA. And this entire interconnected multilevel dynamic structure has to be sufficiently robust and resilient to continue functioning for up to one hundred years!
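The nested hierarchy can be turned into rough counts by multiplying the ranges given in the text. The Python sketch below does so. Treating every cell as having the upper-end number of mitochondria is a crude assumption, since cells differ widely and mature red blood cells have no mitochondria at all. The whole-body figure is therefore only an upper-end order of magnitude.

```python
# Nested hierarchy of energy generators, using the ranges quoted in the text.
COMPLEXES_PER_MITOCHONDRION = (500, 1_000)
MITOCHONDRIA_PER_CELL = (500, 1_000)
CELLS_IN_BODY = 10 ** 14  # "about a hundred trillion" cells

per_cell_low = COMPLEXES_PER_MITOCHONDRION[0] * MITOCHONDRIA_PER_CELL[0]
per_cell_high = COMPLEXES_PER_MITOCHONDRION[1] * MITOCHONDRIA_PER_CELL[1]
body_high = per_cell_high * CELLS_IN_BODY  # crude upper bound for the whole body

print(f"respiratory complexes per cell: {per_cell_low:,} to {per_cell_high:,}")
print(f"upper-end estimate for the whole body: about {body_high:.0e}")
```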

The energy flow hierarchy of life beginning with respiratory complexes (top left) that produce our energy up through mitochondria and cells (middle and top right) to multicellular organisms and community structures. From this perspective, cities are ultimately powered and sustained by the ATP produced in our respiratory complexes. Although each of these looks quite different with very different engineered structures, energy is distributed through each of them by space-filling hierarchical networks having similar properties.

It’s natural to generalize this hierarchy of life beyond individual organisms and extend it to community structures. Earlier I talked about how ants collectively cooperate to create fascinating social communities that build remarkable structures by following emergent rules arising from their integrated interactions. Many other organisms, such as bees and plants, form similar integrated communities that take on a collective identity.

The most extreme and astonishing version of this is us. In a very short period of time we have evolved from living in small, somewhat primitive bands of relatively few individuals to dominating the planet with our mammoth cities and social structures encompassing many millions of individuals. Just as organisms are constrained by the integration of the emergent laws operating at the cellular, mitochondrial, and respiratory complex levels, so cities have emerged from, and are constrained by, the underlying emergent dynamics of social interactions. Such laws are not “accidents” but the result of evolutionary processes acting across multiple integrated levels of structure.

This hugely multifaceted, multidimensional process that constitutes life is manifested and replicated in myriad forms across an enormous scale ranging over more than twenty orders of magnitude in mass. A huge number of dynamical agents span and interconnect the vast hierarchy ranging from respiratory complexes and mitochondria to cells and multicellular organisms and up to community structures. The fact that this has persisted and remained so robust, resilient, and sustainable for more than a billion years suggests that effective laws that govern their behavior must have emerged at all scales. Revealing, articulating, and understanding these emergent laws that transcend all of life is the great challenge.

It is within this context that we should view allometric scaling laws: their systematic regularity and universality provide a window onto these emergent laws and underlying principles. As external environments change, all of these various systems must be scalable in order to meet the continuing challenges of adaptability, evolvability, and growth. The same generic underlying dynamical and organizational principles must operate across multiple spatial and temporal scales. The scalability of living systems underlies their extraordinary resilience and sustainability both at the individual level and for life itself.

6. NETWORKS AND THE ORIGINS OF QUARTER-POWER ALLOMETRIC SCALING

As I began to ponder what the origins of these surprising scaling laws might be, it became clear that whatever was at play had to be independent of the evolved design of any specific type of organism, because the same laws are manifested by mammals, birds, plants, fish, crustacea, cells, and so on. All of these organisms ranging from the smallest, simplest bacterium to the largest plants and animals depend for their maintenance and reproduction on the close integration of numerous subunits—molecules, organelles, and cells—and these microscopic components need to be serviced in a relatively “democratic” and efficient fashion in order to supply metabolic substrates, remove waste products, and regulate activity.

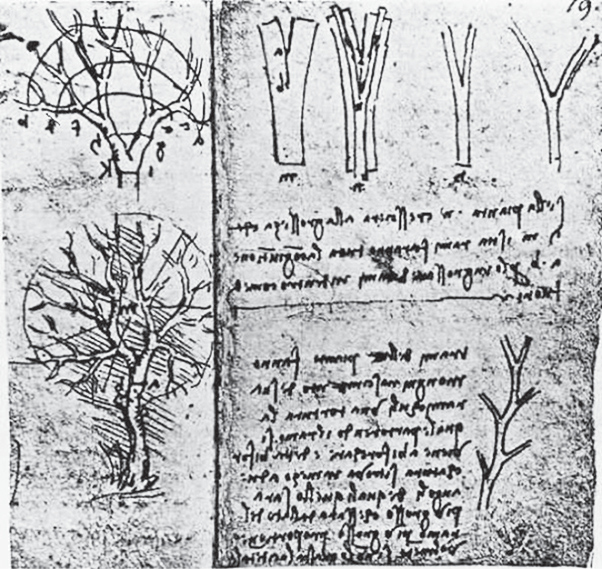

Natural selection has solved this challenge in perhaps the simplest possible way by evolving hierarchical branching networks that distribute energy and materials between macroscopic reservoirs and microscopic sites. Functionally, biological systems are ultimately constrained by the rates at which energy, metabolites, and information can be supplied through these networks. Examples include animal circulatory, respiratory, renal, and neural systems, plant vascular systems, intracellular networks, and the systems that supply food, water, power, and information to human societies. In fact, when you think about it, you realize that underneath your smooth skin you are effectively an integrated series of such networks, each busily transporting metabolic energy, materials, and information across all scales. Some of these are illustrated in the accompanying figure.

Examples of biological networks, counterclockwise from the top left-hand corner: the circulatory system of the brain; microtubule and mitochondrial networks inside cells; the white and gray matter of the brain; a parasite that lives inside elephants; a tree; and our cardiovascular system.

Because life is sustained at all scales by such hierarchical networks, it was natural to conjecture that the key to the origin of quarter-power allometric scaling laws, and consequently to the generic coarse-grained behavior of biological systems, lay in the universal physical and mathematical properties of these networks. In other words, despite the great diversity in their evolved structure—some are constructed of tubes like the plumbing in your house, some are bundles of fibers like electrical cables, and some are just diffusive pathways—they are all presumed to be constrained by the same physical and mathematical principles.

7. PHYSICS MEETS BIOLOGY: ON THE NATURE OF THEORIES, MODELS, AND EXPLANATIONS

As I was struggling to develop the network-based theory for the origin of quarter-power scaling, a wonderful synchronicity occurred: I was serendipitously introduced to James Brown and his then student Brian Enquist. They, too, had been thinking about this problem and had also been speculating that network transportation was a key ingredient. Jim is a distinguished ecologist (he was president of the Ecological Society of America when we met) and is well known, among many other things, for his seminal role in inventing an increasingly important subfield of ecology called macroecology.9 As its name suggests, this takes a large-scale, top-down systemic approach to understanding ecosystems, having much in common with the philosophy inherent in complexity science, including an appreciation of using a coarse-grained description of the system. Macroecology has whimsically been referred to as “seeing the forest for the trees.” As we become more concerned about global environmental issues and the urgent need for a deeper understanding of their origins, dynamics, and mitigation, Jim’s big-picture vision, articulated in the ideas of macroecology, is becoming increasingly important and appreciated.

When we first met, Jim had only recently moved to the University of New Mexico (UNM), where he is a Distinguished Regents Professor. He had concomitantly become associated with the Santa Fe Institute (SFI), and it was through SFI that the connection was made. Thus began “a beautiful relationship” with Jim, SFI, and Brian and, by extension, with the ensuing cadre of wonderful postdocs and students, as well as other senior researchers who worked with us. Over the ensuing years, the collaboration between Jim, Brian, and me, begun in 1995, was enormously productive, extraordinarily exciting, and tremendous fun. It certainly changed my life, and I venture to say that it did likewise for Brian and Jim, and possibly even for some of the others. But like all excellent, fulfilling, and meaningful relationships, it has also occasionally been frustrating and challenging.

Jim, Brian, and I met every Friday beginning around nine-thirty in the morning and finishing in mid-afternoon by around three with only short breaks for necessities (neither Jim nor I eat lunch). This was a huge commitment, as both of us ran sizable groups elsewhere—Jim had a large ecology group at UNM and I was still running high energy physics at Los Alamos. Jim and Brian very generously drove up most weeks from Albuquerque to Santa Fe, which is about an hour’s drive, whereas I did the reverse trip only every few months or so. Once the ice was broken and some of the cultural and language barriers that inevitably arise between fields were crossed, we created a refreshingly open atmosphere where all questions and comments, no matter how “elementary,” speculative, or “stupid,” were encouraged, welcomed, and treated with respect. There were lots of arguments, speculations, and explanations, struggles with big questions and small details, lots of blind alleys and an occasional aha moment, all against a backdrop of a board covered with equations and hand-drawn graphs and illustrations. Jim and Brian patiently acted as my biology tutors, exposing me to the conceptual world of natural selection, evolution and adaptation, fitness, physiology, and anatomy, all of which were embarrassingly foreign to me. Like many physicists, I was horrified to learn that there were serious scientists who put Darwin on a pedestal above Newton and Einstein. Given the primacy of mathematics and quantitative analysis in my own thinking, I could hardly believe it. However, since I became seriously engaged with biology my appreciation for Darwin’s monumental achievements has grown enormously, though I must admit that it’s still difficult for me to see how anyone could rank him above the even more monumental achievements of Newton and Einstein.

For my part, I tried to reduce complicated nonlinear mathematical equations and technical physics arguments to relatively simple, intuitive calculations and explanations. Regardless of the outcome, the entire process was a wonderful and fulfilling experience. I particularly enjoyed being reminded of the primal excitement of why I loved being a scientist: the challenge of learning and developing concepts, figuring out what the important questions were, and occasionally being able to suggest insights and answers. In high energy physics, where we struggle to unravel the basic laws of nature at the most microscopic level, we mostly know what the questions are and most of one’s effort goes into trying to be clever enough to carry out the highly technical calculations. In biology I found it to be mostly the other way around: months were spent trying to figure out what the problem actually was that we were trying to solve, the questions we should be asking, and the various relevant quantities that needed to be calculated, but once that was accomplished, the actual technical mathematics was relatively straightforward.

In addition to a strong commitment to solving a fundamental long-standing problem that clearly needed close collaboration between physicists and biologists, a crucial ingredient of our success was that Jim and Brian, as well as being outstanding biologists, thought a lot like physicists and were appreciative of the importance of a mathematical framework grounded in underlying principles for addressing problems. Of equal importance was their appreciation that, to varying degrees, all theories and models are approximate. It is often difficult to see that there are boundaries and limitations to theories, no matter how successful they might have been. This does not mean that they are wrong, but simply that there is a finite range of their applicability. The classic case of Newton’s laws is a standard example. Only when it was possible to probe very small distances on the atomic scale or very large velocities on the scale of the speed of light did serious deviations from the predictions from Newton’s laws become apparent. And these led to the revolutionary discovery of quantum mechanics to describe the microscopic, and to the theory of relativity to describe ultrahigh speeds comparable to the speed of light. Newton’s laws are still applicable and correct outside of these two extreme domains. And here’s something of great importance: modifying and extending Newton’s laws to these wider domains led to a deep and profound shift in our philosophical conceptual understanding of how everything works. Revolutionary ideas like the realization that the nature of matter itself is fundamentally probabilistic, as embodied in Heisenberg’s uncertainty principle, and that space and time are not fixed and absolute, arose out of addressing the limitations of classical Newtonian thinking.
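How small the departures from Newton's laws are at everyday speeds can be shown with the special-relativistic Lorentz factor γ = 1/√(1 − v²/c²). Relativistic momentum is γmv rather than Newton's mv, so γ − 1 is the fractional correction to Newtonian momentum. The Python sketch below evaluates it for a few speeds. The speeds are illustrative choices. `math.log1p` and `math.expm1` are used so that the tiny corrections are not lost to floating-point cancellation.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def lorentz_gamma_minus_one(speed_m_s: float) -> float:
    """gamma - 1 = 1/sqrt(1 - v^2/c^2) - 1, computed stably for small speeds."""
    beta_sq = (speed_m_s / C) ** 2
    # log(gamma) = -0.5 * log(1 - beta^2); expm1 then yields gamma - 1 without cancellation.
    return math.expm1(-0.5 * math.log1p(-beta_sq))


for label, v in [("highway car, 30 m/s", 30.0),
                 ("orbiting satellite, 7.8 km/s", 7_800.0),
                 ("particle at 0.9c", 0.9 * C)]:
    print(f"{label:30s} fractional correction ~ {lorentz_gamma_minus_one(v):.1e}")
```

The correction is about 5 × 10^-15 for a car and a few parts in 10^10 for a satellite. At 0.9c it exceeds 1, which is why the failure of Newton's laws only became apparent once such speeds could be probed.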

Lest you think that these revolutions in our understanding of fundamental problems in physics are just arcane academic issues, I want to remind you that they have had profound consequences for the daily life of everyone on the planet. Quantum mechanics is the foundational theoretical framework for understanding materials and plays a seminal role in much of the high-tech machinery and equipment that we use. In particular, it stimulated the invention of the laser, whose many applications have changed our lives. Among them are bar code scanners, optical disk drives, laser printers, fiber-optic communications, laser surgery, and much more. Similarly, relativity together with quantum mechanics spawned atomic and nuclear bombs, which changed the entire dynamic of international politics and continue to hang over all of us as a constant, though often suppressed and sometimes unacknowledged, threat to our very existence.

To varying degrees, all theories and models are incomplete. They need to be continually tested and challenged by increasingly accurate experiments and observational data over wider and wider domains and the theory modified or extended accordingly. This is an essential ingredient in the scientific method. Indeed, understanding the boundaries of their applicability, the limits to their predictive power, and the ongoing search for exceptions, violations, and failures has provoked even deeper questions and challenges, stimulating the continued progress of science and the unfolding of new ideas, techniques, and concepts.

A major challenge in constructing theories and models is to identify the important quantities that capture the essential dynamics at each organizational level of a system. For instance, in thinking about the solar system, the masses of the planets and the sun are clearly of central importance in determining the motion of the planets, but their color (Mars red, the Earth mottled blue, Venus white, etc.) is irrelevant for calculating the details of that motion. Similarly, we don’t need to know the color of the satellites that allow us to communicate on our cell phones when calculating their detailed motion.

However, this is clearly a scale-dependent statement in that if we look at the Earth from a very close distance of, say, just a few miles above its surface rather than from millions of miles away in space, then what was perceived as its color is now revealed as a manifestation of the huge diversity of the Earth’s surface phenomena, which include everything from mountains and rivers to lions, oceans, cities, forests, and us. So what was irrelevant at one scale can become dominant at another. The challenge at every level of observation is to abstract the important variables that determine the dominant behavior of the system.

Physicists have coined a concept to help formalize a first step in this approach, which they call a “toy model.” The strategy is to simplify a complicated system by abstracting its essential components, represented by a small number of dominant variables, from which its leading behavior can be determined. A classic example is the idea, developed in the nineteenth century, that gases are composed of molecules, viewed as hard little billiard balls, that are rapidly moving and colliding with one another and whose collisions with the surface of a container are the origin of what we identify as pressure. What we call temperature is similarly identified as the average kinetic energy of the molecules. This was a highly simplified model which in detail is not strictly correct, though it captured and explained for the first time the essential macroscopic coarse-grained features of gases, such as their pressure, temperature, heat conductivity, and viscosity. As such, it provided the point of departure for developing our modern, significantly more detailed and precise understanding not only of gases, but of liquids and materials, by refining the basic model and ultimately incorporating the sophistication of quantum mechanics. This simplified toy model, which played a seminal role in the development of modern physics, is called the “kinetic theory of gases” and was developed into a quantitative theory by two of the greatest physicists of all time: James Clerk Maxwell, who unified electricity and magnetism into electromagnetism, thereby revolutionizing the world with his prediction of electromagnetic waves, and Ludwig Boltzmann, who brought us statistical physics and the microscopic understanding of entropy.
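The billiard-ball picture can be turned into a few lines of code. The Python sketch below draws molecular velocities from the Maxwell-Boltzmann distribution, in which each velocity component is an independent Gaussian with variance k_B·T/m. From them it recovers both pressure and temperature. The sketch does not simulate wall collisions one by one. It uses the standard kinetic-theory result P = n·m·⟨v_x²⟩, which comes from averaging the momentum those collisions deliver. The nitrogen-like molecular mass, number density, and temperature are illustrative assumptions near room conditions.

```python
import math
import random

K_B = 1.380649e-23  # Boltzmann constant, J/K


def kinetic_gas(n_sample: int, mass_kg: float, temperature_k: float,
                number_density: float, seed: int = 0):
    """Return (pressure from <v_x^2>, temperature recovered from mean kinetic energy)."""
    rng = random.Random(seed)
    sigma = math.sqrt(K_B * temperature_k / mass_kg)  # std dev of one velocity component
    sum_vx2 = 0.0
    sum_v2 = 0.0
    for _ in range(n_sample):
        vx, vy, vz = rng.gauss(0.0, sigma), rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)
        sum_vx2 += vx * vx
        sum_v2 += vx * vx + vy * vy + vz * vz
    # Pressure: momentum delivered to a wall per unit area per unit time, P = n m <v_x^2>.
    pressure = number_density * mass_kg * sum_vx2 / n_sample
    # Temperature: mean kinetic energy <(1/2) m v^2> = (3/2) k_B T.
    mean_ke = 0.5 * mass_kg * sum_v2 / n_sample
    temperature = 2.0 * mean_ke / (3.0 * K_B)
    return pressure, temperature


# Illustrative inputs (assumptions): nitrogen-like molecules near room conditions.
MASS_N2 = 28 * 1.66054e-27  # kg
N_DENSITY = 2.45e25         # molecules per cubic meter
T = 300.0                   # kelvin

p_kin, t_kin = kinetic_gas(100_000, MASS_N2, T, N_DENSITY)
p_ideal = N_DENSITY * K_B * T  # ideal gas law, P = n k_B T
print(f"kinetic P = {p_kin:,.0f} Pa, ideal-gas P = {p_ideal:,.0f} Pa, recovered T = {t_kin:.1f} K")
```

The sampled pressure agrees with the ideal-gas value of roughly one atmosphere to within sampling error. This is the sense in which a deliberately crude model captures the coarse-grained behavior.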

A concept related to the idea of a toy model is that of a “zeroth order” approximation of a theory, in which simplifying assumptions are similarly made in order to give a rough approximation of the exact result. It is usually employed in a quantitative context as, for example, in the statement that “a zeroth order estimate for the population of the Chicago metropolitan area in 2013 is 10 million people.” Upon learning a little more about Chicago, one might make what could be called a “first order” estimate of its population of 9.5 million, which is more precise and closer to the actual number (whose precise value from census data is 9,537,289). One could imagine that after more detailed investigation, an even better estimate would yield 9.54 million, which would be called a “second order” estimate. You get the idea: each succeeding “order” represents a refinement, an improved approximation, or a finer resolution that converges to the exact result based on more detailed investigation and analysis. In what follows, I shall be using the terms “coarse-grained” and “zeroth order” interchangeably.
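In this example, the zeroth, first, and second order estimates happen to coincide with rounding the census figure to one, two, and three significant figures. The Python sketch below shows that correspondence and how the error shrinks at each order. The analogy is only illustrative. Genuine higher order estimates come from better models and more data, not from rounding an already known answer. The helper `estimate` is hypothetical.

```python
# Successive "orders" of approximation, illustrated as successively finer
# rounding of the census figure quoted in the text.
CHICAGO_METRO_2013 = 9_537_289


def estimate(value: int, significant_figures: int) -> int:
    """Round a positive integer to the given number of significant figures."""
    digits = len(str(abs(value)))
    return round(value, significant_figures - digits)


for order, sig in enumerate([1, 2, 3]):
    est = estimate(CHICAGO_METRO_2013, sig)
    error = abs(est - CHICAGO_METRO_2013) / CHICAGO_METRO_2013
    print(f"order {order}: {est:,}  (off by {error:.2%})")
```

The errors shrink from about 5 percent to about 0.4 percent to about 0.03 percent, mirroring the idea that each order refines the one before.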

This was the philosophical framework that Jim, Brian, and I were exploring when we embarked on our collaboration. Could we first construct a coarse-grained zeroth order theory for understanding the plethora of quarter-power allometric scaling relations based on generic underlying principles that would capture the essential features of organisms? And could we then use it as a point of departure for quantitatively deriving more refined predictions, the higher order corrections, for understanding the dominant behavior of real biological systems?

I later learned that, compared with the majority of biologists, Jim and Brian were the exception rather than the rule in appreciating this approach. Despite the seminal contributions that physics and physicists have made to biology, a prime example being the unraveling of the structure of DNA, many biologists appear to retain a general suspicion of, and lack of appreciation for, theory and mathematical reasoning.

Physics has benefited enormously from a continuous interplay between the development of theory and the testing of its predictions and implications by performing dedicated experiments. A great example is the recent discovery of the Higgs particle at the Large Hadron Collider at CERN in Geneva. It had been predicted many years earlier by several theoretical physicists as a necessary and critical component of our understanding of the basic laws of physics, but it took almost fifty years for the technical machinery to be developed and the large experimental team assembled to mount a successful search for it. Physicists take for granted the concept of the “theorist” who “only” does theory, whereas by and large biologists do not. A “real” biologist has to have a “lab” or a field site with equipment, assistants, and technicians who observe, measure, and analyze data. Doing biology with just pen, paper, and laptop, in the way many of us do physics, is considered a bit dilettantish and simply doesn’t cut it. There are, of course, important areas of biology, such as biomechanics, genetics, and evolutionary biology, where this is not the case. I suspect that this situation will change as big data and intense computation increasingly encroach on all of science and as we aggressively attack some of the big questions, such as understanding the brain and consciousness, environmental sustainability, and cancer. However, I agree with Sydney Brenner, the distinguished biologist and Nobel laureate who did pioneering work on the genetic code, who provocatively remarked that “technology gives us the tools to analyze organisms at all scales, but we are drowning in a sea of data and thirsting for some theoretical framework with which to understand it. . . . We need theory and a firm grasp on the nature of the objects we study to predict the rest.” The article containing this remark begins, by the way, with the astonishing pronouncement that “biological research is in crisis.”10

Many recognize the cultural divide between biology and physics.11 Nevertheless, we are witnessing an enormously exciting period as the two fields become more closely integrated, leading to new interdisciplinary subfields such as biological physics and systems biology. The time seems right for revisiting D’Arcy Thompson’s challenge: “How far even then mathematics will suffice to describe, and physics to explain, the fabric of the body, no man can foresee. It may be that all the laws of energy, and all the properties of matter, all . . . chemistry . . . are as powerless to explain the body as they are impotent to comprehend the soul. For my part, I think it is not so.” Many would agree with the spirit of this remark, though new tools and concepts, including closer collaboration, may well be needed to accomplish his lofty goal. I would like to think that the marvelously enjoyable collaboration between Jim, Brian, and me, and all of our colleagues, postdocs, and students has contributed just a little bit to this vision.

8. NETWORK PRINCIPLES AND THE ORIGINS OF ALLOMETRIC SCALING

Prior to this digression into the interrelationship between the cultures of biology and physics, I argued that the mechanistic origins of scaling laws in biology were rooted in the universal mathematical, dynamical, and organizational properties of the multiple networks that permeate organisms and distribute energy, materials, and information to local microscopic sites, such as cells and mitochondria in animals. I also argued that because the structures of biological networks are so varied and stand in marked contrast to the uniformity of the scaling laws, their generic properties must be independent of their specific evolved design. In other words, there must be a common set of network properties that holds regardless of whether the networks are constructed of tubes as in mammalian circulatory systems, fibers as in plants and trees, or diffusive pathways as in cells.

Formulating a set of general network principles and distilling out the essential features that transcend the huge diversity of biological networks proved to be a major challenge that took many months to resolve. As is often the case when moving into uncharted territory and trying to develop new ideas and ways of looking at a problem, the final product seems so obvious once the discovery or breakthrough has been made. It’s hard to believe that it took so long, and one wonders why it couldn’t have been done in just a few days. The frustrations and inefficiencies, the blind alleys, and the occasional eureka moments are all part and parcel of the creative process. There seems to be a natural gestation period, and this is simply the nature of the beast. However, once the problem comes into focus and has been solved, it is extremely satisfying and enormously exciting.

This was our collective experience in deriving our explanation for the origin of allometric scaling laws. Once the dust had settled, we proposed the following set of generic network properties that are presumed to have emerged as a result of the process of natural selection and which give rise to quarter-power scaling laws when translated into mathematics. In thinking about them it might be useful to reflect on their possible analogs in cities, economies, companies, and corporations, to which we will turn in some detail in later chapters.

I. Space Filling

The idea behind the concept of space filling is simple and intuitive. Roughly speaking, it means that the tentacles of the network have to extend everywhere throughout the system that it is serving, as is illustrated in the networks here. More specifically: whatever the geometry and topology of the network is, it must service all local biologically active subunits of the organism or subsystem. A familiar example will make it clear: Our circulatory system is a classic hierarchical branching network in which the heart pumps blood through the many levels of the network, beginning with the main arteries, passing through vessels of regularly decreasing size, and ending with the capillaries, the smallest ones, before looping back to the heart through the venous network system. Space filling is simply the statement that the capillaries, which are the terminal units or last branch of the network, have to service every cell in our body so as to efficiently supply each of them with sufficient blood and oxygen. Actually, all that is required is for capillaries to be close enough to cells for sufficient oxygen to diffuse efficiently across capillary walls and thence through the outer membranes of the cells.

Quite analogously, many of the infrastructural networks in cities are also space filling: for example, the terminal units or end points of the utility networks (gas, water, and electricity) have to supply all of the various buildings that constitute a city. The pipe that connects your house to the water line in the street and the electrical line that connects it to the main cable are analogs of capillaries, while your house can be thought of as an analog of cells. Similarly, all employees of a company, viewed as terminal units, have to be supplied with resources (wages, for example) and information through multiple networks connecting them with the CEO and the management.

II. The Invariance of Terminal Units

This simply means that the terminal units of a given network design, such as the capillaries of the circulatory system that we just discussed, all have approximately the same size and characteristics regardless of the size of the organism. Terminal units are critical elements of the network because they are points of delivery and transmission where energy and resources are exchanged. Other examples are mitochondria within cells, cells within bodies, and petioles (the last branch) of plants and trees. As individuals grow from newborn to adult, or as new species of varying sizes evolve, terminal units are neither reinvented nor significantly reconfigured or rescaled. For example, the capillaries of all mammals, whether children, adults, mice, elephants, or whales, are essentially all the same despite the enormous range and variation of body sizes.

This invariance of terminal units can be understood in the context of the parsimonious nature of natural selection. Capillaries, mitochondria, cells, et cetera, act as “ready-made” basic building blocks of the corresponding networks for new species; it is the networks built from them, rather than the building blocks themselves, that are rescaled. The invariant properties of the terminal units within a specific design characterize the taxonomic class. For instance, all mammals share the same capillaries. Different species within that class, such as elephants, humans, and mice, are distinguished from one another by having larger or smaller, but closely related, network configurations. From this perspective, the difference between taxonomic groups, for example between mammals, plants, and fish, is characterized by different properties of the terminal units of their various corresponding networks. Thus while all mammals share similar capillaries and mitochondria, as do all fish, the mammalian ones differ from those of fish in their size and overall characteristics.

Analogously, the terminal units of networks that service and sustain buildings in a city, such as electrical outlets or water faucets, are also approximately invariant. For example, the electrical outlets in your house are essentially identical to those of almost any building anywhere in the world, no matter how big or small it is. There may be small local variations in detailed design, but they are all pretty much the same size. Even though the Empire State Building in New York City and many other similar buildings in Dubai, Shanghai, or São Paulo may be more than fifty times taller than your house, all of them, including your house, share outlets and faucets that are very similar. If outlet size were naively scaled isometrically with the height of buildings, then a typical electrical outlet in the Empire State Building would have to be more than fifty times larger than the ones in your house, which means it would be more than ten feet tall and three feet wide rather than just a few inches. And as in biology, basic terminal units, such as faucets and electrical outlets, are not reinvented every time we design a new building, regardless of where it is or how big it is.
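The outlet arithmetic can be checked in a few lines. In this Python sketch, the outlet dimensions (2.5 inches by 0.75 inch) are hypothetical values chosen for illustration, and the factor of 50 is the height ratio quoted above; isometric scaling simply multiplies every length by that same factor.

```python
INCHES_PER_FOOT = 12.0

# Hypothetical outlet dimensions, for illustration only.
OUTLET_HEIGHT_IN = 2.5
OUTLET_WIDTH_IN = 0.75

# Height ratio of the Empire State Building to a house, as quoted in the text.
HEIGHT_RATIO = 50

def isometric_scale(length, factor):
    """Isometric scaling multiplies every linear dimension by the same factor."""
    return length * factor

scaled_height_ft = isometric_scale(OUTLET_HEIGHT_IN, HEIGHT_RATIO) / INCHES_PER_FOOT
scaled_width_ft = isometric_scale(OUTLET_WIDTH_IN, HEIGHT_RATIO) / INCHES_PER_FOOT
area_factor = HEIGHT_RATIO ** 2  # faceplate area grows with the square of the factor

print(f"scaled outlet: {scaled_height_ft:.1f} ft tall, {scaled_width_ft:.1f} ft wide")
print(f"faceplate area larger by a factor of {area_factor:,}")
```

With these assumed dimensions the scaled outlet comes out at about 10.4 feet tall and 3.1 feet wide, with a faceplate area 2,500 times larger, which is why invariant terminal units are the only sensible design.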

III. Optimization

The final postulate states that the multiple, continuous feedback and fine-tuning mechanisms implicit in the ongoing process of natural selection, which has been playing out over enormous periods of time, have led to network performance being “optimized.” So, for example, the energy used by the heart of any mammal, including us, to pump blood through the circulatory system is on average minimized. That is, it is the smallest it could possibly be given its design and the various network constraints. To put it slightly differently: of the infinite number of possibilities for the architecture and dynamics of circulatory systems that could have evolved, and that are space filling with invariant terminal units, the ones that actually did evolve and are shared by all mammals minimize the power the heart must expend. Networks have evolved so that the energy needed to sustain an average individual’s life and perform the mundane tasks of living is minimized in order to maximize the amount of energy available for sex, reproduction, and the raising of offspring. This maximization of offspring is an expression of what is referred to as Darwinian fitness, which is the genetic contribution of an average individual to the next generation’s gene pool.

This naturally raises the question as to whether the dynamics and structure of cities and companies are the result of analogous optimization principles. What, if anything, is optimized in their multiple network systems? Are cities organized to maximize social interactions, or to optimize transport by minimizing mobility times, or are they ultimately driven by the ambition of each citizen and company to maximize their assets, profits, and wealth? I will return to these issues in chapters 8, 9, and 10.

Optimization principles lie at the very heart of all of the fundamental laws of nature, whether Newton’s laws, Maxwell’s electromagnetic theory, quantum mechanics, Einstein’s theory of relativity, or the grand unified theories of the elementary particles. Their modern formulation is a general mathematical framework in which a quantity called the action, which is loosely related to energy, is minimized (more precisely, made stationary). All the laws of physics can be derived from the principle of least action which, roughly speaking, states that, of all the possible configurations that a system can have or that it can follow as it evolves in time, the one that is physically realized is the one that minimizes its action. Consequently, the dynamics, structure, and time evolution of the universe since the Big Bang, everything from black holes and the satellites transmitting your cell phone messages to the cell phones and messages themselves, all electrons, photons, Higgs particles, and pretty much everything else that is physical, are determined from such an optimization principle. So why not life?
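The principle of least action can be seen at work in a simple case. The following Python sketch, an illustration rather than part of the original argument, takes a ball thrown straight up under uniform gravity, fixes where it starts and where it lands, discretizes the action on a time grid (a modeling assumption), and then searches for the path of smallest action by repeated coordinate-wise minimization, a technique known as Gauss-Seidel relaxation. The function names and grid parameters are hypothetical. The path it settles on coincides with the parabola that Newton’s laws predict.

```python
G = 9.81    # gravitational acceleration, m/s^2
MASS = 1.0  # kg; the least-action path turns out not to depend on it

def action(path, dt, mass=MASS, g=G):
    """Discretized action: sum over time steps of (kinetic - potential energy) * dt,
    with L = (1/2) m v^2 - m g y and the height averaged over each step."""
    s = 0.0
    for y0, y1 in zip(path, path[1:]):
        v = (y1 - y0) / dt
        s += (0.5 * mass * v * v - mass * g * 0.5 * (y0 + y1)) * dt
    return s

def least_action_path(y_start, y_end, duration, n_steps=20, sweeps=5000, g=G):
    """Minimize the discretized action over the interior heights, endpoints fixed.

    Setting dS/dy_i = 0 gives y_i = (y_{i-1} + y_{i+1} + g dt^2) / 2, which
    minimizes S exactly along coordinate i. Sweeping this update repeatedly is
    Gauss-Seidel relaxation; because S is a convex quadratic here, it converges
    to the unique minimum."""
    dt = duration / n_steps
    # Start from a straight line between the endpoints, which is not the physical path.
    path = [y_start + (y_end - y_start) * i / n_steps for i in range(n_steps + 1)]
    for _ in range(sweeps):
        for i in range(1, n_steps):
            path[i] = 0.5 * (path[i - 1] + path[i + 1] + g * dt * dt)
    return path, dt

# Usage: a ball thrown up at t = 0 that lands again at t = 2 s.
T = 2.0
path, dt = least_action_path(0.0, 0.0, T)
# Newtonian solution with the same endpoints: y(t) = (1/2) g t (T - t).
exact = [0.5 * G * (i * dt) * (T - i * dt) for i in range(len(path))]
max_error = max(abs(a - b) for a, b in zip(path, exact))
print(f"largest deviation from Newton's parabola: {max_error:.2e} m")

# Any other path with the same endpoints has a larger action.
perturbed = list(path)
perturbed[10] += 0.1
print(action(perturbed, dt) > action(path, dt))  # True
```

For this problem the discretized action is a convex quadratic in the interior heights, so the path found is a true minimum rather than merely a stationary point; the mass drops out of the update rule, just as it drops out of Newton’s equation for free fall.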