The most important goal for coaches and triathletes is to raise the athlete’s physical, technical, and psychological abilities to the highest possible levels and to develop a precisely controlled training program that ensures maximal performance is attained at the right moment of the season (i.e., at each major triathlon competition). In many competitive endurance events such as triathlon, these top performances are often associated with a marked reduction in the training load undertaken by the athletes during the days before the competition. This period, known as the taper, has been defined as “a progressive, nonlinear reduction of the training load during a variable amount of time that is intended to reduce physiological and psychological stress of daily training and optimize sport performance” (Mujika and Padilla 2003).

In this perspective, the taper is of paramount importance to a triathlete’s performance and the outcome of the event. However, this is also the training phase in which coaches are least sure about the best training strategies for each individual triathlete, because they rely almost exclusively on a trial-and-error approach. Indeed, only recently have sport scientists increased their understanding of the relationships between the reduction of the training load before a competition and the associated performance changes.

A comprehensive and integrated analysis of the available scientific literature on tapering allows us to make a contribution to the optimization of tapering programs for triathletes. Although we acknowledge that designing training and tapering programs remains an art rather than a science, this chapter intends to establish the scientific bases for the precompetition tapering strategies in triathlon. We hope the following information will help individual triathletes, coaches, and sport scientists in their goal of achieving the optimal training mix during the taper, leading to more peak performances at the expected time of the season. Because the taper is also dependent on removal or minimization of the triathlete’s habitual stressors, permitting physiological systems to replenish or further enhance their capabilities, this chapter also addresses recovery strategies and acclimatization to stressful environments before competition.

The training load, or training stimulus, in a competitive sport such as triathlon can be described as a combination of training intensity, volume, and frequency (Wenger and Bell 1986). This training load is markedly reduced during the taper in an attempt to reduce accumulated fatigue, but reduced training should not be detrimental to training-induced adaptations. An insufficient training stimulus could result in a partial loss of training-induced anatomical, physiological, and performance adaptations, also known as detraining (Mujika and Padilla 2000). Therefore, triathletes and their coaches must determine the extent to which the training load can be reduced at the expense of the training components while retaining or even improving adaptations. A meta-analysis conducted by Bosquet et al. (2007) combined the results of tapering studies on highly trained athletes to establish the scientific bases for successfully reducing precompetition training loads to achieve peak performances at the desired point of the season. Although most of the studies were conducted on single activities (e.g., swimming, cycling, or running), they are certainly relevant for triathletes. Bosquet et al. assessed the effects of altering components of the taper on performance. The dependent variable analyzed was the performance change during the taper, whereas the independent variables included reductions in training intensity, volume, and frequency; taper pattern; and taper duration.

Overall, performance remains stable or drops slightly when training intensity is reduced, whereas it improves substantially when intensity is maintained or increased. Therefore, the training load of triathletes should not be reduced at the expense of training intensity during a taper (Bosquet et al. 2007).

With regard to training volume, several investigations have shown that this training component can be markedly reduced without a risk of losing training-induced adaptations or hampering performance. For instance, Hickson et al. (1982) reported that subjects trained in either cycling or treadmill running for 10 weeks retained most of their physiological and endurance performance adaptations during 15 subsequent weeks of reduced training, during which the volume of the sessions was diminished by as much as two-thirds. Studying highly trained middle-distance runners, both Shepley et al. (1992) and Mujika et al. (2000) reported better physiological and performance outcomes with low-volume than with moderate-volume tapers. Bosquet et al. (2007) determined through their meta-analysis that performance improvement during the taper was highly sensitive to the reduction in training volume. These researchers determined that maximal performance gains are obtained with a total reduction in training volume of 41 to 60 percent of pretaper value and that such a reduction should be achieved by decreasing the duration of the training sessions rather than decreasing the frequency of training. This finding suggests that triathletes would maximize taper-associated benefits by roughly dividing their training volume by half.

According to Bosquet et al. (2007), decreasing training frequency (i.e., the number of weekly training sessions) has not been shown to significantly improve performance. However, the decrease in training frequency interacts with other training variables, particularly training volume and intensity, which makes it difficult to isolate the precise effect of a reduction in training frequency on performance. Although further investigations are required, this result suggests that triathletes would benefit from maintaining a similar number of training sessions per week during the taper.

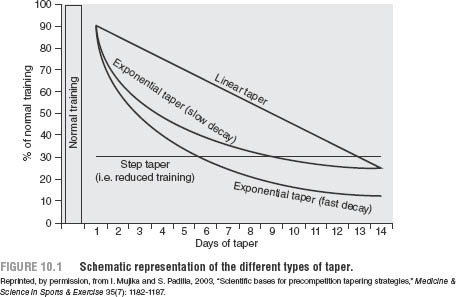

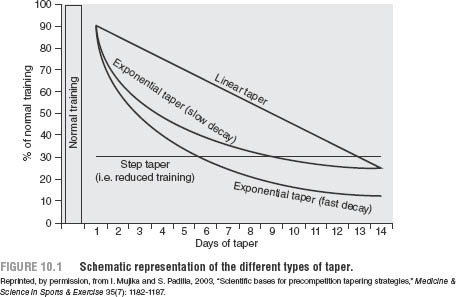

Mujika and Padilla (2003) identify three types of taper patterns: (1) linear taper, in which the training load is progressively reduced by a similar amount each day; (2) exponential taper, characterized by a large initial reduction of the training load followed by a leveling off of the training load; and (3) step taper, which is also referred to as reduced training and is characterized by a sudden constant reduction of the training load (see figure 10.1).
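
The three patterns can be made concrete with a short sketch. The Python below is illustrative only: the function names, the 14-day duration, the 50 percent final load, and the 4-day time constant are assumptions chosen for the example, not values prescribed by Mujika and Padilla (2003).

```python
import math

def linear_taper(pre_load, days, final_fraction):
    """Load drops by the same amount each day, ending at final_fraction of pre_load."""
    daily_drop = pre_load * (1.0 - final_fraction) / days
    return [pre_load - daily_drop * day for day in range(1, days + 1)]

def exponential_taper(pre_load, days, final_fraction, time_constant_days):
    """Large early reduction that levels off toward final_fraction of pre_load."""
    floor = pre_load * final_fraction
    return [floor + (pre_load - floor) * math.exp(-day / time_constant_days)
            for day in range(1, days + 1)]

def step_taper(pre_load, days, final_fraction):
    """Sudden reduction to a constant load, held for the whole taper."""
    return [pre_load * final_fraction] * days

# Hypothetical example: pretaper daily load of 100 arbitrary units, 14-day taper.
linear = linear_taper(100.0, 14, 0.5)
exponential = exponential_taper(100.0, 14, 0.5, time_constant_days=4.0)
step = step_taper(100.0, 14, 0.5)
```

With these assumed settings, the exponential pattern removes more load on the first day than the linear pattern does, whereas the step pattern holds the reduced load constant from the first day onward.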

The majority of available studies used a progressive decrease of the training load. The studies of Banister, Carter, and Zarkadas (1999) and Bosquet et al. (2007) reported bigger performance improvements after a progressive taper (i.e., linear or exponential) than after a step taper. Nevertheless, Bosquet et al. (2007) were not able to address the effect of the kind of progressive taper on performance. Recommendations therefore rely on the work of Banister et al. (1999) with triathletes, which suggested that a fast decay, implying a lower training volume, was more beneficial to cycling and running performance than a slow decay of the training load. Thomas, Mujika, and Busso (2009) recently reported that the taper may be optimized by increasing the training load by 20 to 30 percent during the final 3 days of the taper, which allows additional adaptations without compromising the removal of fatigue.

Bosquet et al. (2007) found a dose–response relationship between the duration of the taper and the performance improvement. A taper duration of 8 to 14 days seems to represent the borderline between the positive influence of fatigue disappearance and the negative influence of detraining on performance. Performance improvements can also be expected after 1-, 3-, or 4-week tapers. However, negative results may be experienced by some triathletes. This interindividual variability in the optimal taper duration has already been highlighted by some mathematical modeling studies (Mujika, Busso, et al. 1996; Thomas and Busso 2005). Differences in the physiological and psychological adaptations to reduced training (Mujika, Busso, et al. 1996; Mujika, Chatard, et al. 1996; Mujika, Padilla, and Pyne 2002), as well as the use of an overload intervention (i.e., a voluntary increase in the fatigue level of the athlete) in the weeks before the taper (Thomas and Busso 2005), are some of the variables that can account for this variability.

Recent mathematical modeling simulations suggest that the training performed in the lead-up to the taper greatly influences the optimal individual duration of the taper. A 20 percent increase over normal training during 28 days before the taper requires a step-load reduction of around 65 percent during 3 weeks, instead of 2 weeks when no overload training is performed. A progressive taper requires a smaller load reduction over a longer duration than a step taper, whatever the pretaper training. The impact of the pretaper training on the duration of the optimal taper seems obvious in regard to the reduction of the accumulated fatigue. Overload training before the taper causes a greater stress and needs a longer recovery time. Nevertheless, the more severe training loads could make adaptations peak at a higher level (Thomas, Mujika, and Busso 2008). In other words, greater training volume, intensity, or both before the taper would allow bigger performance gains but would also demand a reduction of the training load over a longer taper.
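
The text does not give the equations behind these simulations. As a rough illustration of how this kind of modeling works, the sketch below uses the classic two-component (fitness minus fatigue) impulse-response formulation, p(t) = p0 + k_fit Σ w(s) e^(−(t−s)/τ_fit) − k_fat Σ w(s) e^(−(t−s)/τ_fat), summed over training days s before day t. The cited studies may use different or more elaborate variants, and every parameter value and load below is an assumption made up for the example.

```python
import math

def modeled_performance(daily_loads, p0, k_fit, k_fat, tau_fit, tau_fat):
    """Modeled performance before day 0 and after each training day.

    Each past load w(s) adds a slowly decaying fitness term and a
    faster-decaying fatigue term; performance is their weighted difference.
    """
    performance = []
    for t in range(len(daily_loads) + 1):
        past = list(enumerate(daily_loads[:t]))  # (day index, load) for days before t
        fitness = sum(w * math.exp(-(t - s) / tau_fit) for s, w in past)
        fatigue = sum(w * math.exp(-(t - s) / tau_fat) for s, w in past)
        performance.append(p0 + k_fit * fitness - k_fat * fatigue)
    return performance

# Hypothetical parameters: fatigue weighs more than fitness but fades faster.
params = dict(p0=0.0, k_fit=1.0, k_fat=2.0, tau_fit=45.0, tau_fat=15.0)

# 74 days of constant training versus 60 days followed by a 14-day step taper.
no_taper = modeled_performance([100.0] * 74, **params)
with_taper = modeled_performance([100.0] * 60 + [50.0] * 14, **params)
```

Under these assumed parameters, the modeled performance on the final day is higher with the taper than without it, because the fatigue term decays faster than the fitness term once the load is reduced.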

This hypothesis was strengthened by Coutts, Slattery, and Wallace (2007), who compared physiological, biochemical, and psychological markers of overreaching in well-trained triathletes after either 4 weeks of overload training and a 2-week taper or 4 weeks of normal training and a similar taper. Overreaching was diagnosed in the intensified training group after the 4 weeks of overload training, with a worsened (–3.7 percent) 3-kilometer (1.9 mile) running time-trial performance. In contrast, a gain in performance (+3.0 percent) was observed in the normal training group during the same period. During the taper, gains (+7.0 percent) in 3-kilometer running time-trial performance were observed in the intensified training group. These findings suggest that a 2-week taper was enough for the intensified training group to recover and experience a positive training adaptation. Nevertheless, there was no difference in performance improvement between the two training groups, suggesting that the length of the taper for the intensified group was not sufficient to allow for full recovery. Future work should compare different strategies for the implementation of physical training load in preparation for triathlon competition.

Millet et al. (2005) used mathematical modeling to describe the relationships between training loads and anxiety and perceived fatigue as a new method for assessing the effects of training on the psychological status of athletes, in this case four professional triathletes. The time for self-perceived fatigue to return to its baseline level was 15 days, which was close to the time modeled by previous researchers as optimal for tapering (Busso et al. 2002; Busso, Candau, and Lacour 1994; Fitz-Clarke, Morton, and Banister 1991). Millet et al. concluded that a simple questionnaire to assess anxiety and perceived fatigue could be used to adjust the optimal duration of tapering in triathlon.

Taken together, these results suggest that, in general, the optimal taper duration in triathlon is 2 weeks, even though positive performance outcomes can occur with both shorter and longer tapers. Testing different taper durations (from 1 to 4 weeks) while using a training log will help triathletes determine their own optimal taper duration.

Although the data on swimming, cycling, and running were insufficient in the meta-analysis conducted by Bosquet et al. (2007) to provide specific recommendations for each sport, it was possible to identify some trends that may help optimize the taper in triathlon. The first, indisputable one is the need to maintain training intensity, whatever the mode of locomotion. Small to moderate improvements were indeed achieved only when training intensity was not decreased during the taper in swimming, cycling, and running. Although a 41 to 60 percent decrease in training volume seems to be optimal in swimming, Bosquet et al. (2007) were not able to find a similar cutoff value in cycling and running, the optimal decrease being somewhere between 21 and 60 percent. A period of 8 to 14 days seems to represent the optimal taper duration in cycling and running. It should be noted, however, that significant improvements can be expected from longer taper durations in swimming, but the number of cycling and running subjects for such durations was insufficient to test this hypothesis with adequate statistical power (n = 10 in running and 0 in cycling for tapers lasting 15 to 21 days and 22 days or more). Finally, there is limited evidence indicating that cyclists seem to respond particularly well to step tapers in which training frequency is reduced (Bosquet et al. 2007).

Maintaining training intensity is absolutely necessary to retain and enhance training-induced adaptations during tapering in triathlon, but it is obvious that reductions in other training variables should allow for sufficient recovery to optimize performance. Lowering training volume appears to induce positive physiological, psychological, and performance adaptations in highly trained triathletes. A safe bet in terms of training volume reduction would be 41 to 60 percent, but performance benefits could be attained with somewhat smaller or bigger volumes. A final increase of 20 to 30 percent of the training load during the last 3 days before the triathlon race may be beneficial.

High training frequencies (greater than 80 percent of pretaper values) seem to be necessary to avoid detraining and “loss of feel” in highly trained triathletes. Conversely, training-induced adaptations can be readily maintained with very low training frequencies (30 to 50 percent) in moderately trained athletes.

The optimal duration of the taper is not known. Indeed, positive physiological and performance adaptations can be expected as a result of tapers lasting 4 to 28 days, yet the negative effects of complete inactivity are readily apparent in athletes. When the individual adaptation profile of a particular triathlete, which determines the optimal taper duration, is unknown, 2 weeks seems to be a suitable general-purpose taper duration. This period may be beneficially increased to 4 weeks if a temporary increase of about 20 percent over the normal training load is planned during the month preceding the taper. Testing different taper methods while using a training log may also help triathletes determine their own optimal training strategy during the precompetition period.
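
As a worked example of these guidelines, the following sketch converts a triathlete’s pretaper week into taper targets. The function name, the dictionary layout, and the example athlete (20 hours and 12 sessions per week) are hypothetical, and rounding the frequency guideline up to at least 80 percent of pretaper sessions is a simplification made for the example.

```python
def taper_targets(weekly_volume_hours, weekly_sessions, taper_days=14):
    """Translate the general taper guidelines into target ranges for one athlete."""
    # Volume: reduce by 41 to 60 percent of the pretaper value.
    volume_low = weekly_volume_hours * (100 - 60) / 100
    volume_high = weekly_volume_hours * (100 - 41) / 100
    # Frequency: keep about 80 percent or more of pretaper sessions (rounded up).
    min_sessions = -(-weekly_sessions * 80 // 100)
    return {
        "taper_days": taper_days,              # 2 weeks as the default duration
        "weekly_volume_hours": (volume_low, volume_high),
        "min_weekly_sessions": min_sessions,
        "intensity": "maintain",               # intensity is not reduced
    }

# Hypothetical athlete: 20 hours and 12 sessions per week before the taper.
plan = taper_targets(weekly_volume_hours=20, weekly_sessions=12)
```

For this athlete the sketch yields roughly 8 to 12 hours of weekly training spread over at least 10 sessions, which reflects the advice to shorten sessions rather than drop them.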

Achieving an appropriate balance between training stress and recovery is important in maximizing performance in triathlon. The cumulative effects of training-induced fatigue must be reduced during the weeks immediately preceding competition, and a wide range of recovery modalities can be used as an integral part of the taper to help optimize performance (see chapter 8 for more information). Long-lasting fatigue experienced during the taper in endurance sports such as triathlon may be related to exercise-induced muscle injury, delayed-onset muscle soreness (DOMS) (Cheung, Hume, and Maxwell 2003), or an imbalance of the autonomic nervous system (Garet et al. 2004; Pichot et al. 2000). This section discusses interventions likely to improve recovery processes.

Many studies examining the efficacy of recovery modalities have focused on exercise-induced muscle damage, usually associated with DOMS, a sensation of pain or discomfort occurring 1 or 2 days postexercise. Although the underlying mechanism is not well understood, full recovery of strength and power after a training session that causes DOMS may take several days (Cheung, Hume, and Maxwell 2003). Therefore, its occurrence may be detrimental to an ongoing training program. Modalities that enhance the rate of recovery from DOMS and exercise-induced muscle damage may enhance the beneficial effects of the taper for triathletes.

Massage therapy is a commonly used recovery treatment after eccentric exercise that resulted in DOMS. Weber, Servedio, and Woodall (1994) investigated the effects of massage, aerobic exercise, microcurrent stimulation, and passive recovery on force deficits after eccentric exercise. None of the treatment modalities had any significant effects on soreness, maximal isometric contraction, and peak torque production. Hilbert, Sforzo, and Swensen (2003) reported no effect of massage administered 2 hours after a bout of eccentric exercise on peak torque produced by the hamstring muscle; however, muscle soreness ratings were decreased 48 hours postexercise. Farr et al. (2002) also reported no effect of 30 minutes of leg massage on muscle strength in healthy males, although soreness and tenderness ratings were lower 48 hours postexercise. However, a significant improvement in vertical jump performance was reported after high-intensity exercise in female college athletes (Mancinelli et al. 2006).

Although the outcome measures of a wide range of massage techniques have been studied, very few investigations have examined the effect of massage on sport performance. However, some evidence suggests that massage after eccentric exercise may reduce muscle soreness (Weerapong, Hume, and Kolt 2005). Moraska (2007) showed that the training level of the therapist influences the effectiveness of massage after a 10-kilometer (6 mile) race. Many studies investigating massage and its relevance to recovery examined the mechanisms of massage, and thus there is slightly more research in this area compared with performance. Interestingly, recent research (Jakeman, Byrne, and Eston 2010) reported that a combined treatment of 30 minutes of manual massage and 12 hours of lower limb compression (i.e., wearing compressive clothing) significantly moderated perceived soreness at 48 and 72 hours after plyometric exercise in comparison with passive recovery or compression alone.

Clothing with specific compressive qualities is becoming increasingly popular, especially as competition approaches, and studies have shown improved performance and recovery after exercise-induced damage (Ali, Caine, and Snow 2007; Kraemer et al. 2001; Trenell et al. 2006). The use of lower limb compression for athletes derives from research in clinical settings showing positive effects of compression after trauma or some chronic diseases. Bringard et al. (2006) observed positive effects of calf compression on calf muscle oxygenation and venous pooling in resting positions, while Hirai, Iwata, and Hayakawa (2002) reported reduced foot edema in patients with varicose veins. These effects can be attributed to the alteration in hemodynamics resulting from the application of compression (Ibegbuna et al. 2003). Studies investigating whether these effects are transferable to athletic populations found some encouraging results (Ali, Caine, and Snow 2007; Bringard, Perrey, and Belluye 2006), but other research did not (French et al. 2008; Trenell et al. 2006). The positive effect reported by some studies may be associated with the ability of compression to moderate the formation of edema and accelerate muscle recovery.

Compression has also been suggested to offer mechanical support to the muscle, allowing faster recovery after damaging exercise (Kraemer et al. 2001). Kraemer et al. speculated that a dynamic casting effect caused by compression may promote stable alignment of muscle fibers and attenuate the inflammatory response. This would, therefore, reduce both the magnitude of muscle damage and recovery time after injury. Although further research is required to test these hypotheses, triathletes could be encouraged to use lower limb compression during the taper, notably when engaged in long-haul travel.

Triathletes usually endure very severe training loads that induce both adaptive effects and stress reactions. The high frequency of the stimuli imposed ensures that these adaptive effects are cumulative. Unfortunately, incomplete recovery from frequent training can make the stress-related side effects cumulative as well. One key aspect of the stress response is decreased activity of the autonomic nervous system (ANS), which regulates the basic visceral (organ) processes needed for the maintenance of normal bodily functions. Garet et al. (2004) reported that the reduction of ANS activity during intensive training was correlated with the loss in performance of seven well-trained swimmers, and the rebound in ANS activity during tapering paralleled the gain in performance. In this perspective, one of the main goals of recovery during the taper would be to increase the magnitude of ANS reactivation (Garet et al. 2004).

Several recovery methods enhance autonomic tone, including nutrition strategies (promoting fruits and vegetables with a low glycemic index), massage (Weerapong, Hume, and Kolt 2005), and cold-water immersion of the whole body or face (Al Haddad et al. 2010; Buchheit et al. 2009). Nevertheless, the most important factor determining ANS reactivation seems to be sleep duration and quality. Maximizing sleep in a dark, calm, relaxing, and fresh atmosphere is essential during the week preceding the race for optimal performance (Halson 2008). A warm shower may help initiate sleep. Naps may also be planned by the triathlete at the beginning of the afternoon but should not last more than 30 minutes to prevent a lethargic state during the remainder of the day (Reilly et al. 2006).

The main aim of the taper is to reduce the negative physiological and psychological impact of daily training (i.e., accumulated fatigue), although further improvements in the positive consequences of training (i.e., fitness gains) can also be achieved. In this perspective, particular attention should be given during the taper to recovery strategies, which may help induce parasympathetic reactivation (sleep, hydrotherapy, massage) and reduce muscle fatigue (massage, compression garments).

For triathletes, maintaining a good nutrition and hydration status remains critical for successful participation in competition. Starting a race with a poor hydration status or low glycogen stores directly endangers the performance level of athletes engaged in long-duration events, such as triathlons. Triathletes need to adopt both nutrition and hydration strategies during the precompetition period to maximize the taper-associated benefits.

Environmental heat stress can challenge the limits of a triathlete’s cardiovascular and temperature regulation systems, body fluid balance, and performance. Evaporative sweating is the principal means of heat loss in warm to hot environments where sweat losses frequently exceed fluid intakes. When dehydration exceeds 3 percent of total body water (2 percent of body mass), then aerobic performance may be consistently impaired independent of and additive to heat stress. Dehydration augments hyperthermia and plasma volume reductions, which combine to accentuate cardiovascular strain and reduce V̇O2max (Cheuvront et al. 2010). Casa et al. (2010) showed that a small decrement in hydration status (body mass loss of 2.3 percent) at the start of a 12-kilometer (7.5 mile) race impaired physiological function and performance while running in the heat. This finding highlights that adequate hydration during the taper, especially during the 48 hours preceding a triathlon competition, is crucial for ensuring that work capacity is not diminished at the beginning of the race.
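
The body-mass figures above lend themselves to a simple check. The sketch below computes the percentage of body mass lost relative to a baseline and compares it with the 2 percent threshold; the function names and example masses are hypothetical, and the calculation assumes that the short-term change in body mass reflects fluid loss only.

```python
def body_mass_loss_percent(baseline_kg, current_kg):
    """Body mass lost since baseline, as a percentage of baseline mass."""
    return (baseline_kg - current_kg) / baseline_kg * 100.0

def exceeds_dehydration_threshold(baseline_kg, current_kg, threshold_percent=2.0):
    """True when the loss is above the threshold (2 percent of body mass by default)."""
    return body_mass_loss_percent(baseline_kg, current_kg) > threshold_percent

# Hypothetical 70 kg triathlete weighing 68.39 kg on race morning.
loss = body_mass_loss_percent(70.0, 68.39)
flagged = exceeds_dehydration_threshold(70.0, 68.39)
```

In this example the loss is about 2.3 percent, the same magnitude that impaired performance in the study by Casa et al. (2010), so the athlete would be flagged.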

Urine color is an inexpensive and reliable indicator of hydration status (Armstrong et al. 1994). Normal urine color is described as light yellow, whereas moderate and severe dehydration are associated with a dark yellow and brownish-green color, respectively. Although urine color tends to underestimate the level of hydration, and it may be misleading if a large amount of fluid is consumed rapidly, it provides a valid means for triathletes to self-assess hydration level, notably during the taper period.

Energy metabolism can be altered during a taper. Reductions in the training load in favor of rest and recovery lower a triathlete’s daily energy expenditure, potentially affecting energy balance and body composition. Triathletes should therefore pay special attention to their energy intake during the taper to avoid energy imbalance and undesirable changes in body composition. To the best of our knowledge, no scientific reports are available on the nutrition pattern of triathletes undergoing a taper characterized by light daily training loads. Some studies indicate that training-load alterations are not necessarily accompanied by matched changes in dietary habits, and this has a direct impact on athletes’ body composition (Almeras et al. 1997; Mujika, Chaouachi, and Chamari 2010). It is therefore advisable for triathletes to take into account training schedules and loads, which can vary dramatically between peak training and the taper. In this context, triathletes need to be educated to match their energy and macronutrient intakes to their training loads.

Wilson and Wilson (2008) suggest not only matching energy intake to energy expenditure but also doing carbohydrate loading during this precompetition period to optimize muscle glycogen storage. Although adequate muscle glycogen stores may be achieved by 24 to 36 hours of high carbohydrate intake for sprint-distance triathlons (Pitsiladis, Duignan, and Maughan 1996; Sherman et al. 1981), longer carbohydrate loading in preparation for short- to long-distance triathlons is beneficial (Burke, Millet, and Tarnopolsky 2007). In a study examining energy balance of 10 male and 8 female triathletes participating in an Ironman event, Kimber et al. (2002) demonstrated a relatively high rate of energy expenditure in relation to energy intake in both female and male triathletes. Since energy intake was calculated to provide approximately 40 percent of total energy expenditure, endogenous fuel stores were estimated to supply more than half the energy expended during the Ironman. This finding illustrates the importance of consuming a high-carbohydrate diet before long-distance triathlons to maximize endogenous fuel stores.

Nutrition strategies should be implemented in two phases: (1) to match reduced energy expenditure in the first phase of the taper and (2) to induce a supercompensation of the glycogen stores during the second phase of the taper. Walker et al. (2000) reported that cyclists increased their performance during a time-to-fatigue exercise performed at 80 percent of V̇O2max in response to a high-carbohydrate diet (approximately 78 percent carbohydrate) compared with a moderate-carbohydrate diet (approximately 48 percent carbohydrate) followed during the last 4 days of the taper. Interestingly, Sherman et al. (1981) showed that no glycogen-depleting period of exercise is needed to induce such supercompensation in well-trained runners undergoing 3 days of high carbohydrate intake during the taper. If a two-phase taper is planned (increase of the training load during the final days before competition), this strategy of matching energy intake to energy expenditure plus carbohydrate loading may be particularly beneficial (Mujika, Chaouachi, and Chamari 2010).

Developing an adequate hydration strategy during the taper is crucial for triathletes to ensure good hydration status at the start of the race. Paying attention to morning urine color, which should be light yellow, is a practical solution for reaching this goal. Carbohydrate-loading strategies are recommended during race preparation to help triathletes cross the finish line matching their potential. For the well-trained competitor, this may be as simple as tapering exercise over the final days and ensuring daily carbohydrate intakes of 10 to 12 grams per kilogram of body mass over the 36 to 48 hours before a race (e.g., if your body mass is 70 kilograms, or 154 pounds, your daily carbohydrate intake should range between 700 and 840 grams). It is not absolutely necessary to undertake a depletion phase before carbohydrate loading.
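
The carbohydrate-loading arithmetic can be written out for any body mass. In this minimal sketch the function name and argument names are hypothetical; the 10 and 12 grams per kilogram defaults come from the recommendation above.

```python
def carb_loading_range_g(body_mass_kg, low_g_per_kg=10, high_g_per_kg=12):
    """Daily carbohydrate target range in grams for the final 36 to 48 hours."""
    return (body_mass_kg * low_g_per_kg, body_mass_kg * high_g_per_kg)

# A 70 kg triathlete: 700 to 840 grams of carbohydrate per day.
low_g, high_g = carb_loading_range_g(70)
```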

A taper targets the removal or minimization of a triathlete’s habitual stressors, permitting physiological systems to replenish their capabilities or even undergo supercompensation. There is very little scientific information regarding the possible interactions of environmental variables on tapering processes in athletes, whether the stressor is heat, cold, or altitude. Experimental work on the additive effects of altitude on climatic stress and travel fatigue or jet lag is lacking (Pyne, Mujika, and Reilly 2009). This gap in knowledge is largely due to the enormous difficulties in addressing these problems adequately in experimental designs, as well as the challenges facing researchers in the field in controlling the many variables involved. Nevertheless, the likely effects of environmental factors must be considered in a systematic way when tapering is prescribed within a triathlete’s annual plan.

International travel is an essential part of the lives of elite triathletes both for competition and training. It is also becoming increasingly common among recreational triathletes, particularly those who participate in long-distance events. Long-distance travel is associated with a group of transient negative effects, collectively referred to as travel fatigue, that result from anxiety about the journey, changes in daily routine, and dehydration due to time spent in the dry air of the aircraft cabin. Travel fatigue lasts for only a day or so, but for those who fly across several time zones, there are longer-lasting difficulties associated with jet lag. The problems of jet lag can last for more than a week if the flight crosses 10 time zones or more, and performance can suffer. Knowledge of the properties of the body clock clarifies the cause of the difficulties (an unadjusted body clock) and forms the basis of using light in the new time zone to adjust the body clock (Waterhouse, Reilly, et al. 2007).

The timescale for adjustment of the body clock can be incorporated into the taper when competition requires travel across multiple meridians. It is logical to allow sufficient time for the triathlete to adjust completely to the new time zone before competing (Waterhouse, Reilly, et al. 2007). The period of readjustment might constitute a part of the lowered training volume integral to the taper. Because training in the morning is not advocated after traveling eastward, training over the first few days should be timed so that it does not erroneously promote a phase delay instead of the desired phase advance (Reilly, Waterhouse, and Edwards 2005). There also seems little point in training hard at home before leaving, since arriving tired at the airport of departure may delay adjustment later (Waterhouse et al. 2003). Similarly, attempting to shift the phase of the body clock in the required direction before departure is counterproductive because performance (and hence training quality) may be disrupted by this strategy (Reilly and Maskell 1989).

Tapering should proceed as planned in the presence of jet lag, even though the interactions between body clock disturbances and the recovery processes associated with tapering have not been fully delineated. Quality of sleep is an essential component of recovery, but napping at an inappropriate time of day when adjusting to a new time zone may delay resynchronization (Minors and Waterhouse 1981); in certain circumstances, however, a short nap of about 30 minutes can be restorative (Waterhouse, Atkinson, et al. 2007). Suppression of immune responses is more likely to be linked with sleep disruption than with jet lag per se (Reilly and Waterhouse 2007). Therefore, readjustment of the body clock should be harmonized with the moderation of training during the taper. Triathletes, coaches, and support staff should implement strategies to minimize the effects of travel stress before departure, during long-haul international travel, and upon arrival at the destination.

Most triathlon competitions take place during summer and in warm environmental conditions, and exercising in the heat can lead to serious performance decrements. Because heat acclimatization seems to be the most effective strategy to limit the deleterious effects of heat on performance, triathletes need to take this into account to optimize the benefits of the taper. Tapering in hot conditions before competition is compatible with the 7 to 14 days’ reduction in training volume advocated when encountering heat stress. The increased glycogen utilization associated with exercise in the heat should be compensated for by the reduced training load, in both intensity and duration (Armstrong 2006). Athletes should be acclimatized to the heat; otherwise, performance in the forthcoming competition might be compromised.

Regular exposure to hot environments results in a number of physiological adaptations that reduce the negative effects associated with exercise in the heat. These adaptations include decreased core body temperature at rest, decreased heart rate during exercise, increased sweat rate and sweat sensitivity, decreased sodium losses in sweat and urine, and expanded plasma volume (Armstrong and Maresh 1991). The effect of acclimatization on plasma volume is extremely important in terms of cardiovascular stability as it allows for a greater stroke volume and a lowering of the heart rate (Pandolf 1998).

The process of acclimatization to exercise in the heat begins within a few days, and full adaptation takes 1 to 2 weeks for most people (Wendt, van Loon, and Lichtenbelt 2007). It is clear that the systems of the human body adapt at varying rates to successive days of heat exposure. The early adaptations during heat acclimatization primarily include improved control of cardiovascular function through an expansion of plasma volume and a reduction in heart rate. An increase in sweat rate and cutaneous vasodilation is seen during the later stages of heat acclimatization (Armstrong and Maresh 1991). Triathletes exhibit many of the characteristics of heat-acclimatized people and are therefore thought to be partially adapted; however, full adaptation is not seen until at least a week is spent training in the heat (Pandolf 1998). It is not necessary to train every day in the heat; exercising in the heat every 3rd day for 30 days results in the same degree of acclimatization as exercising every day for 10 days (Fein, Haymes, and Buskirk 1975).
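
One way to read the comparison reported by Fein, Haymes, and Buskirk (1975) is that both schedules contain the same number of heat sessions. The short Python sketch below only counts sessions; the function name `heat_sessions` and its parameters are hypothetical, and the count says nothing about the underlying physiology.

```python
def heat_sessions(total_days: int, every_n_days: int) -> list[int]:
    """Return the 1-based day numbers on which a heat session is scheduled.

    Hypothetical helper: sessions start on day 1 and repeat every
    `every_n_days` days until `total_days` is reached.
    """
    if total_days < 1 or every_n_days < 1:
        raise ValueError("total_days and every_n_days must be positive")
    return list(range(1, total_days + 1, every_n_days))


daily = heat_sessions(total_days=10, every_n_days=1)        # every day for 10 days
every_third = heat_sessions(total_days=30, every_n_days=3)  # every 3rd day for 30 days

print(len(daily), len(every_third))  # both schedules contain 10 sessions
```

Under this reading, a triathlete who cannot train in the heat daily could spread the same ten exposures over a longer period, provided the travel and taper calendar allows it.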

Because maintenance of an elevated core body temperature and stimulation of sweating appear to be the critical stimuli for optimal heat acclimatization, strenuous interval training or continuous exercise should be performed at an intensity exceeding 50 percent of the maximal oxygen uptake (Armstrong and Maresh 1991). There is evidence that exercise bouts of about 100 minutes are most effective for the induction of heat acclimatization, and there is no advantage in spending longer periods exercising in the heat (Lind and Bass 1963).

Unfortunately, heat acclimatization is a transient process and will gradually disappear if not maintained by repeated exercise in the heat. It appears that the first physiological adaptations to occur during heat acclimatization are also the first to be lost (Armstrong and Maresh 1991). There is considerable variability concerning the rate of decay of heat acclimatization; some researchers report significant losses of heat acclimatization in less than a week, whereas others show that acclimatization responses are fairly well maintained for up to a month. In general, most studies show that dry-heat acclimatization is better retained than humid-heat acclimatization and that high levels of aerobic fitness are also associated with a greater retention of heat acclimatization (Pandolf 1998).

At altitude, V̇O2max is reduced according to the prevailing ambient pressure. An immediate consequence is that the exercise intensity or power output at a given relative aerobic load is decreased. In the first few days at altitude, a respiratory alkalosis (increased respiration which elevates the blood pH) occurs because of the increased ventilatory response to hypoxic (lack of oxygen) conditions. This condition is normally self-limiting due to a gradual renal compensation, meaning the kidneys can regulate the blood pH. Athletes in training camps at altitude resorts recognize that an initial reduction in training load is imperative at altitude as acclimatization begins. The extra hydration requirements due to the dry ambient air and the initial increased urination, combined with plasma volume changes (Rusko, Tikkanen, and Peltonen 2004), increased utilization of carbohydrate as a substrate for exercise (Butterfield et al. 1992), and tendency for sleep apnea (Pedlar et al. 2005), run counter to the benefits of tapering. In this instance, the reduced training load would not substitute for a taper. There is the added risk of illness because of decreased immunoreactivity associated with exposure to altitude (Rusko, Tikkanen, and Peltonen 2004). Maximal cardiac output may also be reduced in the course of a typical 14- to 21-day sojourn at altitude as a result of the impairment in training quality. Altitude training camps should therefore be lodged strategically in the annual plan to avoid unwanted, if unknown, interactions with environmental variables.

Many elite-level athletes use altitude training for conditioning purposes. For example, it is accepted as good practice among elite swimmers and rowing squads preparing for Olympic competition despite an absence of compelling evidence of its effectiveness. There remains a question as to the timing of the return to sea level for best effects, an issue relatively neglected by researchers in the field, with a few exceptions (Ingjer and Myhre 1992). Three phases have been observed by coaches (Millet et al. 2010). So far, however, these are not fully supported by scientific evidence and are therefore under debate:

1. A positive phase observed during the first 2 to 4 days, but not in all athletes.

2. A phase of progressive reestablishment of sea-level training volume and intensity, 2 to 4 days after the return to sea level. The probability of good performance is reduced. This decrease in performance fitness might be related to the altered energy cost and loss of the neuromuscular adaptations induced by training at altitude.

3. A third phase, 15 to 21 days after return to sea level, is characterized by a plateau in fitness. It is optimal to delay competition until this third phase, although some triathletes may reach their peak performance during the first phase. Improvement in energy cost and loss of the neuromuscular adaptations after several days at sea level, in conjunction with the further increase in oxygen transport and delayed ventilatory benefits of altitude training, may explain this third phase.

In this context, a period of lowered training is observed before competing after altitude training, which constitutes a form of tapering. The extent of the benefits, as well as the variation between athletes, has not been adequately explored. Future investigations are required.

Most experimental and observational research on tapering has been conducted in the context of singular sports events (Pyne, Mujika, and Reilly 2009). Triathletes competing in sprint- and short-distance competitions, however, sometimes have reduced opportunities to taper because of the repetition of the races during the competitive period (e.g., seven World Championship Series races between the end of March and the beginning of September in 2010). Peaking for major competitions each month (even every other week) usually poses the problem of choosing between recovering from the previous competition and rebuilding fitness or maintaining intensive training and capitalizing on adaptations acquired during the previous training cycle. Both approaches can be valid, and the choice should depend on the level of fatigue triathletes present after a race (or a series of competitions) and the time frame between the last triathlon and the next one. Additional research is required to examine the taper in the context of multiple peaking. Nevertheless, some guidelines can be proposed.

Optimized taper periods associated with a large training volume reduction (approximately 50 percent) over a prolonged period (approximately 2 weeks) should be scheduled two or three times per year. Additional taper periods may be detrimental for performance improvement by minimizing the total time of the normal heavy training load, which is essential to induce training adaptations.

Prioritizing a limited number of races each season (e.g., two or three major events) seems to be a good solution for planning the taper periods in the competitive season. Altitude camps may be adequately programmed before these competitions.

A sufficient training block lasting at least 2 months should be planned between two major objectives to allow for appropriate recovery, training, and taper phases.

Only short-duration tapers (4 to 7 days) should be programmed before minor events, paying special attention to recovery (nutrition, hydration, sleep, massage, hydrotherapy, compression garments). Because of the likely persistence of training-induced fatigue despite such short tapers, triathletes should be aware that this strategy may sometimes lead to below-optimal performances.

Because the recovery period after minor competitions (associated with nonoptimal taper) should be as short as possible to allow a quick restoration of the training load, long-haul travel should be avoided.
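
The guidelines above can be sketched as a simple season check. This is a minimal Python illustration, not a validated planning tool: the `Race` class, the `check_season` function, and the constants are hypothetical names, and "at least 2 months" is approximated here as 60 days.

```python
from dataclasses import dataclass
from datetime import date

MAX_MAJOR_TAPERS = 3   # "two or three times per year" (upper bound)
MIN_GAP_DAYS = 60      # assumption: "at least 2 months" taken as 60 days


@dataclass
class Race:
    name: str
    day: date
    major: bool  # True for a prioritized event with a full taper


def check_season(races: list[Race]) -> list[str]:
    """Return warnings where a season plan departs from the guidelines."""
    warnings = []
    majors = sorted((r for r in races if r.major), key=lambda r: r.day)
    if len(majors) > MAX_MAJOR_TAPERS:
        warnings.append(f"{len(majors)} major events planned; consider at most {MAX_MAJOR_TAPERS}")
    # Compare each major event with the next one in calendar order.
    for earlier, later in zip(majors, majors[1:]):
        gap = (later.day - earlier.day).days
        if gap < MIN_GAP_DAYS:
            warnings.append(f"{earlier.name} -> {later.name}: only {gap} days apart")
    return warnings


season = [
    Race("Race A", date(2010, 5, 1), major=True),
    Race("Race B", date(2010, 6, 15), major=True),
    Race("Race C", date(2010, 7, 1), major=False),
]
for message in check_season(season):
    print(message)
```

In this made-up season the two major events are flagged because they fall less than 60 days apart; the minor race is ignored by the check, since only short tapers of 4 to 7 days are suggested before such events.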

The taper is a key element of a triathlete’s physical preparation in the weeks before a competition. Since the early 1990s, there has been substantial research interest in the taper and its importance in transitioning athletes from the preparatory to competitive phase of the season. Physiological and performance adaptations can be optimized during periods of taper preceding triathlon competitions by means of significant reduction in training volume, moderate reduction in training frequency, and maintenance of training intensity. Particular attention given to nutrition, hydration, and recovery strategies during the preevent taper may help maximize its associated positive effects. In this context, tapering strategies may be associated with a competition performance improvement of about 3 percent (usual range is 0.5 to 6.0 percent).
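
To give a sense of scale, the short Python sketch below converts these percentages into time for a hypothetical 2-hour race. It assumes, purely for illustration, that the performance improvement applies directly to finishing time; the function name `time_saved_seconds` and the 2-hour figure are not from the research cited here.

```python
def time_saved_seconds(finish_time_s: float, improvement_pct: float) -> float:
    """Seconds gained if finishing time improves by `improvement_pct` percent.

    Assumption: the improvement is applied directly to finishing time.
    """
    return finish_time_s * improvement_pct / 100.0


two_hours = 2 * 60 * 60  # hypothetical 2-hour finishing time, in seconds

for pct in (0.5, 3.0, 6.0):
    saved = time_saved_seconds(two_hours, pct)
    print(f"{pct} percent -> {saved:.0f} s saved")
```

Under that assumption, a 3 percent gain corresponds to 216 seconds over 2 hours, and the usual range spans roughly half a minute to about 7 minutes.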

Future progress in sport science will play an important part in refining existing tapering methodologies and developing new ones. These developments should involve a combination of research and practical experience of coaches and triathletes, experimental and observational research, and elegant mathematical models to refine our understanding of the physiological and performance elements of the taper.