Software architecture analysis deals with the analysis of structures and concepts of software systems, in order to evaluate the quality of the software in terms of known requirements. It supports developers, architects, and other involved parties in maintaining and improving quality. Architecture analysis provides an overview of the components in the software system and their dependencies.

As well as architecture analysis, other methods exist for ensuring the quality of the software architecture. These include code and architecture reviews, data and process analyses, and tests (performance tests, load tests, stress tests, and so on). However, we will not be addressing this type of quality assurance here.

Software architecture analysis allows the quality of a software project to be evaluated at any time, revealing risks and enabling quality improvement measures to be derived. Inadequate quality assurance and insufficient checking of the architecture can result in significant risks and losses in the course of software development.

In addition to the evaluation of development structures and concepts, architecture analysis also helps determine the level to which quality requirements are fulfilled.

Architecture analysis and its methods are necessary for continuous quality evaluation of architectures of all sizes. Automated tools can save a lot of time, but they are only really suitable for pure code analysis and should not be regarded as the sole and sufficient solution. Later changes to a bad architecture cost considerable time and money, but architecture analysis can help you address the risks involved in changeability and the fulfillment of quality requirements.

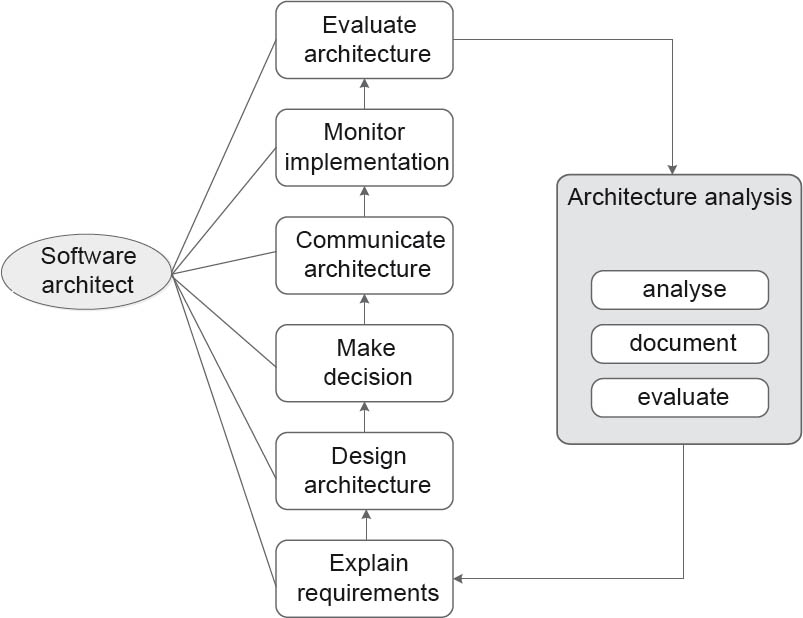

Figure 5–1 shows how architecture analysis is integrated with the architecture business cycle (ABC).

Figure 5-1 Integration of architecture analysis

The sub-aspects of architecture analysis can be summarized as a collection of analysis methods, evaluations, and documentations. These are specifically used by the architect to evaluate, document, and communicate the characteristics of the existing architecture.

An extract from the Software architectures and quality section of the iSAQB curriculum [isaqb-curriculum] is provided below.

LG4-1: Discuss quality models and quality characteristics

LG4-2: Define quality requirements for software architectures

LG4-3: Perform qualitative evaluation of software architectures

LG4-4: Understand the quantitative evaluation of software architectures

LG4-5: Understand how quality objectives can be achieved

A software project involves two types of “things” that can be evaluated:

Some of these artifacts (such as the source code) can be evaluated quantitatively (i.e., in numbers), while others that cannot be evaluated numerically are evaluated in terms of their properties or quality.

Various attributes and models exist for evaluating the quality of software architecture, each focusing on different aspects of the system. These quality models form the basis for evaluations and analyses, since they address the project’s quality requirements.

Various sources of information can be of assistance during qualitative evaluation of architectures—for example, requirements, quality trees and scenarios, architecture and design models, source code, and metrics.

DIN ISO/IEC 9126 [ISO/IEC 9126] defines software quality as the entirety of the features and relevant values of a software product that relate to its suitability to fulfill defined and presupposed requirements.

In 2011, this was replaced by the new DIN ISO/IEC 25010 [ISO/IEC 25010], which is part of the 250xx series of norms. Its official title is Systems and software engineering – Systems and software Quality Requirements and Evaluation (SQuaRE) – System and software quality models.

To ensure success, quality should be continuously assured. For object-oriented software development, this includes reviews, unit and regression tests, and architecture analysis.

Quality models refine the concept of software quality into detailed, specified characteristics so that it becomes measurable.

In the new ISO/IEC 25010 norm, the six main characteristics listed in ISO/IEC 9126 are supplemented by the new characteristics security and compatibility. Some sub-characteristics were added and other characteristics were renamed:

Functional suitability: Does the software have sufficient functions to fulfill the specified and presupposed requirements?

Reliability: Can the software maintain its level of performance under defined conditions for a specific period of time?

Usability: Is the program easy to learn and use? How attractive and user-friendly is the software for the user?

Performance efficiency: How economical is the software for the solution of a specific problem in terms of resources, query and processing response times, and storage space?

Security: How good is data protection? Can unauthorized persons or systems read or modify data? Is access to data allowed for all authorized persons or systems?

Compatibility: Can two or more systems or components exchange information with each other and/or execute their required functions while sharing the same hardware or software environment?

Maintainability: What is the effort involved in error correction, implementation of improvements, or adaptation to changes in the environment? How high is the level of reusability? How stable is the software in terms of changeability without introducing undesired side effects?

Portability: Can the software be used on other (hardware and software) systems?

Each of these main characteristics is refined using sub-characteristics (see Table 5-1).

Table 5-1 Quality characteristics in accordance with ISO/IEC 25010 [ISO/IEC 25010]

One example of a further quality characteristic is scalability, which describes the adaptability of the hardware and software to an increasing volume of requirements. A differentiation is made here between vertical and horizontal scaling. While vertical scaling replaces the system with a more performant solution (for example, by upgrading the server or replacing it with a more powerful model), in the case of horizontal scaling, additional hardware resources (for example, additional servers) are added to the system. Depending on its purpose, there can be other “characteristics” which are of relevance to the stakeholders as quality characteristics of a software system.

Although they are often regarded as self-evident, relevant quality characteristics should be explicitly documented. Only explicitly documented characteristics can be constructively used to improve quality and ensure transparency. Because the software architect bears the responsibility for the quality of the entire system, it is essential that the architect calls for explicit and specific quality characteristics or actively reworks the existing ones.

The various quality characteristics or attributes of a system can have a wide range of impacts on each other. Some examples are:

For these reasons, you usually need to balance the priorities of the individual quality requirements for each specific piece of software.

So how do we fulfill specific quality characteristics?

There is no universal method for developing solution strategies for specific quality characteristics. As an architect, you have to develop appropriate measures in parallel with the development of views and technical concepts, based on the individual situation and context.

The following sections provide a selection of sample tactics and practices for achieving specific quality characteristics. These tactics often help, but don’t work in all cases.

As already explained, individual quality characteristics can mutually impact each other. The desire for increased performance can affect a system’s flexibility, storage space requirements, or timely completion. In this case, the following tactics can help:

This list is, of course, not exhaustive. Situations exist in which performance can be improved via distribution. In another example, increased flexibility can result in a runtime optimizer increasing performance.

As already explained, requirements for increased flexibility and adaptability often conflict with a requirement for increased performance. You therefore need to ask which aspect of the system needs to be more flexible. This could be:

The answer to this question can help to narrow the scope of the required flexibility. You can then develop various scenarios to check the suitability of alternative architectures.

To improve the flexibility of a system, the following measures can be of assistance:

However, all these tactics have their limits and don’t work in all cases. They are merely intended to convey the basic idea here of how specific quality characteristics can be achieved.

Another point worth mentioning is traceability, which is indispensable for quality-oriented software development. All system requirements must be traceable in forward and reverse directions, i.e., from their source (via their description, specification, and implementation) to their verification, and back again.
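Forward and reverse tracing can be illustrated with a small sketch. It assumes that trace links are recorded as a simple mapping from each artifact to the artifacts derived from it and that the links contain no cycles. The identifiers (`REQ-1`, `SPEC-1`, `orders.py`, `test_orders.py`) and the function names are hypothetical and serve only as an illustration.

```python
from typing import Dict, List

# Hypothetical trace links: each artifact points to the artifacts derived from it.
FORWARD: Dict[str, List[str]] = {
    "REQ-1": ["SPEC-1"],
    "SPEC-1": ["orders.py"],
    "orders.py": ["test_orders.py"],
    "REQ-2": ["SPEC-2"],
    "SPEC-2": [],  # specified, but not yet implemented or verified
}


def reverse_links(forward: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Invert the forward links so that artifacts can be traced back to their source."""
    backward: Dict[str, List[str]] = {}
    for source, targets in forward.items():
        for target in targets:
            backward.setdefault(target, []).append(source)
    return backward


def trace(start: str, links: Dict[str, List[str]]) -> List[str]:
    """Follow the links breadth-first from a starting artifact (assumes no cycles)."""
    path: List[str] = []
    frontier = [start]
    while frontier:
        current = frontier.pop(0)
        path.append(current)
        frontier.extend(links.get(current, []))
    return path


BACKWARD = reverse_links(FORWARD)
print(trace("REQ-1", FORWARD))            # requirement through to its test
print(trace("test_orders.py", BACKWARD))  # test back to its requirement
print(trace("REQ-2", FORWARD))            # chain ends before any verification
```

In this sketch, a forward trace that does not end in a test artifact (as for `REQ-2`) points to a requirement that has not yet been verified.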

The following attributes are necessary to achieve traceability:

In addition to a qualitative appraisal, quantitative evaluation measures the artifacts of an architecture numerically. If carried out consistently and recorded for extended periods, these measurements can provide good indications of structural changes. They provide no information, however, with respect to the operability or runtime quality of a system. To be comparable, the results of a quantitative evaluation also require a functional and technical context. However, quantitative evaluation of software (and particularly of source code) can help to identify critical elements within systems.

Quantitative methods for quality assurance of architectures include:

Design and implementation are subject to specific limits placed on them by the system’s software architecture. For example, the various levels of an architecture restrict the permitted relationships and import capabilities in the source code. It is therefore important that so-called “architecture standards” are also complied with, and that the design and implementation adapt themselves to the architecture. However, checking compliance with architecture standards is not a simple matter. Depending on the nature of the project and the size of the system, there can be a wide range of different architecture standards involved.

The architecture effectively forms the framework (or basic structure) of a software system, and places restrictions on its design and implementation. For example, the logical levels of an architecture restrict the number of possible relationships in the UML design models as well as dependencies between classes in the source code.

A range of tools is available for checking compliance with architecture standards and for evaluating architectures. These tools can be configured to check the code against predefined architecture standards.
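The basic idea behind such a compliance check can be sketched in a few lines. The example assumes a hypothetical three-layer rule set (`ui`, `service`, `domain`) and assumes that the layer of a module can be read from the first segment of its dotted name. Real tools extract the dependencies from the source code or bytecode, whereas here they are simply listed by hand.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical architecture standard: each layer lists the layers it may depend on.
ALLOWED: Dict[str, Set[str]] = {
    "ui": {"service"},
    "service": {"domain"},
    "domain": set(),
}


def layer_of(module: str) -> str:
    """Derive the layer from the first segment of a dotted module name."""
    return module.split(".", 1)[0]


def find_violations(dependencies: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return every (source, target) dependency that the layering rule forbids."""
    violations = []
    for source, target in dependencies:
        src_layer, dst_layer = layer_of(source), layer_of(target)
        if src_layer == dst_layer:
            continue  # dependencies within one layer are allowed in this sketch
        if dst_layer not in ALLOWED.get(src_layer, set()):
            violations.append((source, target))
    return violations


# Hand-written sample dependencies (hypothetical module names).
deps = [
    ("ui.orders_view", "service.order_service"),  # allowed
    ("service.order_service", "domain.order"),    # allowed
    ("domain.order", "ui.orders_view"),           # upward dependency
    ("ui.orders_view", "domain.order"),           # skips the service layer
]

for source, target in find_violations(deps):
    print(f"violation: {source} -> {target}")
```

Whether skipping a layer counts as a violation depends on the architecture standard in question. The rule set above treats the layering as strict.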

Some static analysis tools are discussed in more detail in Chapter 6.

A large number of metrics can be determined for a project and its source code. Here are some examples:

Cyclomatic complexity is also referred to as the McCabe metric (M) and was introduced in 1976 by Thomas J. McCabe [McC76].

It shows the number of linearly independent paths through a program’s source code and is calculated using the formula:

M = e − n + 2p

where e is the number of edges of the graph, n is the number of nodes in the graph, and p is the number of individual control flow graphs (one graph per function/procedure).

The McCabe value is a measure of the complexity of a module. It represents a lower limit for the number of possible paths through the module and an upper limit for the number of test cases needed to cover all edges of the control flow graph.
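As a minimal sketch, the formula can be applied to a control flow graph stored as an adjacency list. The graph below is a hypothetical function with one if/else branch followed by one loop, and the function and node names are assumptions made for this illustration.

```python
from typing import Dict, List


def cyclomatic_complexity(graph: Dict[str, List[str]], components: int = 1) -> int:
    """Compute M = e - n + 2p for a control flow graph given as an adjacency list."""
    nodes = set(graph)
    for targets in graph.values():
        nodes.update(targets)
    edges = sum(len(targets) for targets in graph.values())
    return edges - len(nodes) + 2 * components


# Hypothetical control flow graph: one if/else followed by one loop.
cfg = {
    "entry": ["if"],
    "if": ["then", "else"],
    "then": ["loop"],
    "else": ["loop"],
    "loop": ["body", "exit"],
    "body": ["loop"],
    "exit": [],
}

print(cyclomatic_complexity(cfg))  # 8 edges - 7 nodes + 2 * 1 = 3
```

The result matches the usual rule of thumb for a single function: the number of binary decision points (here the `if` and the loop condition) plus one.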

In general terms, low cyclomatic complexity means that the module is easy to understand, test, and maintain. High cyclomatic complexity means the module is complex and difficult to test. If, however, the complexity can only be reduced with difficulty or the module is easy to understand in spite of a high McCabe value (for example, due to extensive switch statements), an excessively high cyclomatic complexity warning can also be suppressed.

Many different types of problems can arise in the course of a software development project. Either the stakeholders have problems formulating the requirements explicitly (and, above all, completely), or cooperation between system users and developers doesn’t function properly. Usually, cooperation ends with the analysis and design phase, since the developers then withdraw and only present the results of their work when the software is complete.

Coordination between teams is very important if they are to learn from one another. Various solutions have to be tested and discussed with the customer, and some requirements cannot be guaranteed based on their theoretical description (for example, real-time requirements). Before the definition phase can be completed, it may therefore be necessary to evaluate individual aspects of the project in a prototype system.

A technical proof of concept is used to implement such a prototype and enables clarification of any technical issues that may arise. It is used to determine whether interaction and cooperation between the technical components functions correctly. Unlike with a prototype, real-world functionality doesn’t play a role in the technical proof of concept.

A prototype is a simplified experimental model of a planned product or building block. It includes all the functions necessary to fulfill its designed purpose. It may correspond to the planned end product purely in terms of its appearance or in specific technical terms. A prototype often serves as a preliminary step to mass production, but can also be planned as a one-off item whose purpose is solely to demonstrate a specific concept.

A software prototype shows how the selected functions of the target application will look in practical use. This enables you to better explain and demonstrate the associated requirements and/or development issues. Prototype software enables users to gain important experimental experience, and thus serves as a basis for discussion and further decision-making. This process (known as “prototyping”) produces swift results and provides early-stage feedback on the suitability of a solution approach. Early feedback reduces the development risk, and the quality assurance staff can be integrated into the software development process from the very start. With the aid of suitable tools, the prototyping process can be accelerated. The process is then referred to as “rapid prototyping”.

As well as the advantages already mentioned, prototyping also has some disadvantages. It often increases the development effort (a prototype usually has to be developed in addition to the actual application). There is also the risk that planned “throwaway” prototypes are not discarded after all and end up providing sub-optimal solutions. In addition, prototypes can come to be regarded as an alternative to high-quality documentation, which is often neglected by the developers.

Types of software prototypes include:

In addition to reviews, unit tests, acceptance tests, and regression tests, architecture analysis is an important technique that supports software architects in their day-to-day work. It enables evaluation of the quality of a software architecture. In addition to the use of a suitable quality model and definition of functional processes, one of the most important prerequisites for architecture analysis is requirements analysis and analysis of the architecture’s objectives.

The results of architecture analysis can be evaluated based on specific quality criteria such as robustness, availability, or security. These criteria must be defined and prioritized at an early stage of requirements specification.

ATAM stands for Architecture Tradeoff Analysis Method. It is a methodical approach to qualitative architecture evaluation and aids selection of an appropriate architecture for a planned system. ATAM was developed at the Software Engineering Institute (SEI) at Carnegie Mellon University.

ATAM is a leading method in the field of architecture evaluation.

ATAM breaks down the evaluation of a software architecture into four phases (see figure 5–2).

Figure 5-2 The evaluation procedure

The first step of an architecture evaluation involves the customer or client identifying the relevant stakeholders for the procedure. This will usually be a small team that includes at least (client) management and project management.

Prior to definition of the evaluation objectives, the kick-off phase involves a brief presentation of the evaluation method to the stakeholders. It should be clear to all participants that the purpose is to determine risks (and non-risks) and to outline appropriate measures. The client presents the business objectives of the system for evaluation.

The responsible architect should then briefly present the architecture of the system. This presentation includes the complete context of the system (including all adjacent systems), top-level building blocks, and runtime views of the most important use cases or change scenarios.

The stakeholders should then compile all significant quality requirements and organize them hierarchically in a quality tree. To enable the evaluation team to begin work, they also need to describe scenarios for the most important quality objectives.

Following analysis of these scenarios, decisions should be classified into four aspects (see figure 5–3):

Risks are elements of the architecture that, depending on how things develop, can endanger the fulfillment of business objectives and cause other issues too.

At “sensitivity points” in an architecture, even minor changes can have wide-ranging consequences. These are the critical components in an architecture for fulfillment of a quality criterion.

Trade-offs specify whether (or how) a design decision could mutually affect multiple quality characteristics.

Non-risks are scenarios that are fulfilled in all cases (i.e., risk-free).

Risks are classified into themes that indicate how they may endanger the business objectives.

Figure 5-3 ATAM (Source: Software Engineering Institute)

A quality tree hierarchically refines the system-specific or product-specific quality requirements (see figure 5–4). Major criteria are located at the top of the tree, while more specific requirements are found at the bottom. The “leaves” of the quality tree are scenarios (see the Scenarios section below) that describe individual characteristics in as specific and detailed a way as possible.

Figure 5-4 The hierarchical form of a quality tree

The characteristics and their scenarios are prioritized by the leading stakeholders according to their respective business benefit (see figure 5–5), and also by the architects according to their technical complexity. This provides the architects with prioritized scenarios during the actual evaluation.

Figure 5-5 Prioritization within the quality tree
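A quality tree with prioritized scenarios can be sketched as a small data structure. The example assumes a high/medium/low (H/M/L) rating scale for both business benefit and technical complexity. The class names, the scale, and the sample scenarios are hypothetical and are not taken from the figures.

```python
from dataclasses import dataclass, field
from typing import List

RANK = {"H": 0, "M": 1, "L": 2}  # assumed high/medium/low rating scale


@dataclass
class Scenario:
    text: str
    business_benefit: str      # rated by the leading stakeholders
    technical_complexity: str  # rated by the architects


@dataclass
class QualityNode:
    name: str
    children: List["QualityNode"] = field(default_factory=list)
    scenarios: List[Scenario] = field(default_factory=list)  # the "leaves"


def prioritized_scenarios(root: QualityNode) -> List[Scenario]:
    """Collect all scenarios in the tree, ordered by benefit and then complexity."""
    found: List[Scenario] = []
    stack = [root]
    while stack:
        node = stack.pop()
        found.extend(node.scenarios)
        stack.extend(node.children)
    return sorted(
        found,
        key=lambda s: (RANK[s.business_benefit], RANK[s.technical_complexity]),
    )


# Hypothetical sample tree.
tree = QualityNode("Quality", children=[
    QualityNode("Performance", scenarios=[
        Scenario("Search results appear within 2 s under normal load", "H", "M"),
    ]),
    QualityNode("Reliability", scenarios=[
        Scenario("System restarts within 5 min after a server crash", "M", "H"),
        Scenario("No order data is lost when the database fails over", "H", "H"),
    ]),
])

for s in prioritized_scenarios(tree):
    print(s.business_benefit, s.technical_complexity, s.text)
```

Sorting by benefit first and complexity second is only one possible ordering. The evaluation team may weigh the two ratings differently.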

The evaluation of the quality characteristics¹ usually takes place in a small group together with the architect, and in the order determined by the priorities.

The process involves answering a range of different questions:

On completion of the evaluation you should have a good overview of:

Scenarios

In an ATAM context, quality characteristics are described by means of scenarios (scenario-based architecture evaluation). These scenarios describe how a system reacts in specific situations, they characterize the interaction of stakeholders with the system, and they enable assessment of the risks involved in achieving these quality characteristics. They are used to specify precisely what the parties involved in the project understand by specific quality characteristics—for example, what “reliability” actually means to all those involved.

Types of scenarios

Types of scenarios include:

Scenarios normally consist of the following main elements (quoted from [HS11]; the original list comes from [BCK03]):

Figure 5–6 provides an overview of the component elements of a scenario.

Figure 5-6 Elements of a scenario
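A scenario can also be captured as a structured record. The following sketch assumes the six-part form commonly attributed to [BCK03] (source, stimulus, artifact, environment, response, and response measure); the element names used in this text may differ. The class name and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityScenario:
    # Field names are an assumption based on the six-part scenario form.
    source: str            # who or what triggers the stimulus
    stimulus: str          # the event that reaches the system
    artifact: str          # the part of the system that is affected
    environment: str       # the conditions under which the stimulus occurs
    response: str          # how the system reacts
    response_measure: str  # how the reaction is measured

    def describe(self) -> str:
        """Render the scenario as one sentence for discussion with stakeholders."""
        return (
            f"{self.source} {self.stimulus} "
            f"({self.artifact}, {self.environment}): "
            f"{self.response}, measured as {self.response_measure}."
        )


# Hypothetical performance scenario.
search = QualityScenario(
    source="A user",
    stimulus="submits a search request",
    artifact="catalog service",
    environment="normal operation",
    response="the system returns the result list",
    response_measure="response time below 2 seconds",
)

print(search.describe())
```

Writing the response measure down explicitly is what makes a scenario testable, because it states what the parties involved actually mean by a characteristic such as “performance”.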

Sample scenarios

Here are some examples of the use of scenarios for detailed specification of quality requirements.

Table 5-2 lists some sample elements of performance scenarios.

Table 5-2 Sample elements of performance scenarios

Table 5-3 lists some sample elements of reliability scenarios.

Table 5-3 Sample elements of reliability scenarios

Here are some detailed excerpts from the Software architectures and quality section of the iSAQB curriculum [isaqb-curriculum] to help you consolidate what you have learned.

LG4-1: Discuss quality models and quality characteristics.

LG4-2: Define quality requirements for software architectures.

LG4-3: Perform qualitative evaluation of software architectures.

LG4-4: Understand the quantitative evaluation of software architectures.

LG4-5: Understand how quality objectives can be achieved.

¹ Strictly speaking, the risks associated with the fulfillment of each scenario are evaluated.