The term metadata was coined in 1968 by an American computer scientist, Philip Bagley, who used it in his technical report, “Extension of Programming Language Concepts.” Since that time, many fields, such as Information Technology, Information Science, Librarianship, and of course the Computer and Video fields, have adopted this term. The prefix meta is Greek for “among,” “after,” or “beyond.” In English, meta is used to indicate a concept that is an abstraction from another concept. So metadata is data developed about other data. It’s often defined simply as “data about data,” or “information about information.”

Types of Metadata

In general, metadata describes, explains, and locates a resource, and makes it easier to retrieve, use, or manage that resource. A book contains information, but there is also information about the book, such as the author’s name, book title, subtitle, published date, publisher’s name, and call number so you know where to find the book on a library shelf. This extra information outside of the book content is its metadata. Logging this information in a library system allows a user to search for the book by any of the criteria logged without knowing one word of the book content itself.

The same is true for media such as a movie on a DVD. You can’t hold up a DVD to see the movie that’s on it, so you have to search for information about it online or read the DVD label or case. While reading that information, you might become aware of the different types of metadata. In fact, there are three basic types of metadata: Structural, Descriptive, and Administrative. Using the DVD as an example, Descriptive metadata often includes the information you might search for first, such as the movie title and main actors, perhaps a tagline that further describes the film (Figure 17.1). Structural metadata includes how the movie data is organized, for example what slots of information the metadata will contain. The DVD will also contain Administrative information, often in small print, such as the creator, studio or production company that produced it, the date it was created, perhaps the type, or format, and so on.

As you look at this information, it’s quite possible you might never give a second thought to the type of metadata you’re reviewing. In truth, the type is less important than becoming aware of the different ways metadata can be used.

NOTE The ISO had a hand in standardizing metadata. Its standard, ISO/IEC 11179, specifies the kind and quality of metadata necessary to describe data, and it specifies the management and administration of that metadata in a metadata registry (MDR).

Metadata in Photos

Have you ever looked at an old photograph that’s started to yellow with age? If you turned the photo over, it’s very likely you saw something written on its back, usually the date and the name of the person in the photo. Before metadata became widely used, this was the “human” or analog way to enter metadata. Someone wrote down what they knew about the person, event, or time. In whatever form it took, that information was kept with the photo. Today, we do the same thing but we do it digitally. Using photo programs such as Apple’s iPhoto, Windows Live Photo Gallery, and Picasa, we are able to label and rate photos, enter captions, and add keywords about a photo. This makes it very easy to organize and retrieve images.

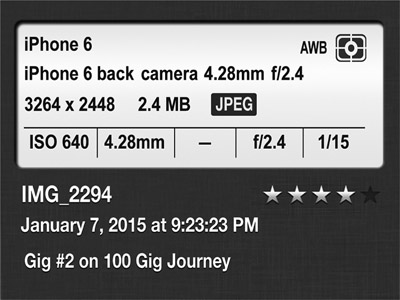

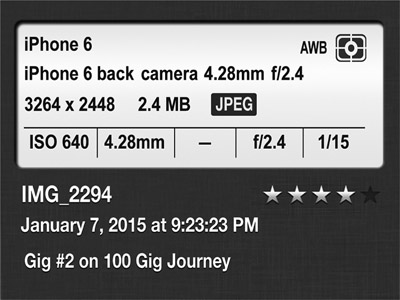

While humans can enter descriptive data about the photos, there is other information, let’s call it structural or sometimes administrative metadata, which the camera that captured the photo knows about the image. For example, the moment you snap a picture, the camera embeds information into the image such as the file type (e.g. JPEG, TIFF), image resolution (3264 × 2448), date and time the image was taken, perhaps even the name of the camera and size of the file (Figure 17.2). This metadata can also be used as search criteria alongside the descriptive information you manually entered yourself.

Figure 17.2

iPhoto Metadata for Image Imported from an iPhone

Metadata in Video

As in the DVD movie example cited previously, metadata is particularly useful in video because its contents aren’t directly decipherable by computers. And since video has become a primary form of communication and expression in the 21st Century, the need to organize it and access it quickly and easily is very important. One of the first forms of metadata used in video was timecode. Timecode is a labelling system that provides a way to search and edit individual frames of video. (Timecode is discussed in more detail later in this chapter.)

As video has become digital, metadata has become an essential part of the video data stream. Metadata in the serial digital video stream contains the information about what video standard is being used, the frame rate, line count, the number and type of audio channels, and the compression and encoding system used. Any necessary information to allow the digital data to be correctly interpreted for use and display is contained in the metadata. Incorrect metadata can cause the rest of the data itself to be unusable. Data that is incorrectly identified cannot be retrieved. For example, if the metadata indicates that the audio is Dolby surround sound when it is actually two channel stereo, the audio will not be reproduced correctly as the system will be trying to recreate a signal that is not there.

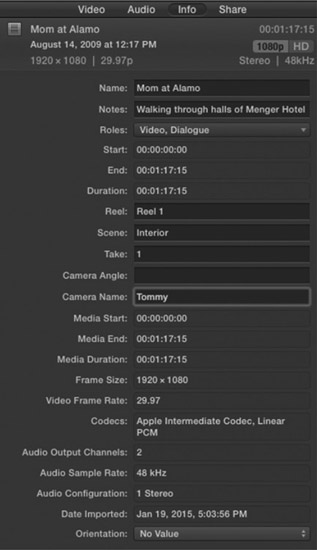

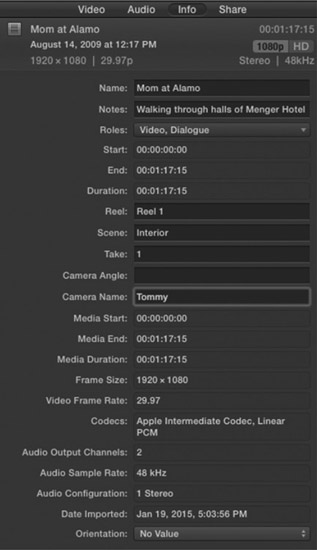

As with still images, there are two ways metadata can be attached to a video file: it can be captured automatically or entered manually. If it’s automatically generated and captured, it’s most likely administrative metadata, while the manually entered metadata tends to be more descriptive. Most video metadata is automatically generated in the camera or through the use of software. The information captured is contained within the video file and can easily be accessed in a video editing system (Figure 17.3).

NOTE When working with metadata, you may see a reference to a standard format called Exchangeable Image File Format, or Exif (often written EXIF). This is a standard that makes it possible for different systems to read the metadata that cameras embed in image files. Most EXIF data cannot be edited after capture, but sometimes the GPS and date/time stamp can be changed.

Logging footage has been a customary stage in the editing process, both film and video. Today, the video editor or producer can go way beyond just naming clips. Editors can add keywords that further identify the clip, such as who is in the clip, what scene the clip is from, who the client is, as well as notes or excerpts from the narration or script (Figure 17.3). Some editing systems can recognize the number of people in a shot and what type of shot it is—wide, medium, close-up, and so on. When this option is selected, the information is entered automatically during the capture process. More and more, editing software is including metadata entry options that increase the amount and variety of information you can add to a video file.

Figure 17.3

Clip Information in Apple’s Final Cut Pro X Editing Application.

In this video clip information window, notice that some fields are gray and some are black. The gray fields contain the camera-recorded data, which has been entered automatically. Those fields are not editable. The descriptive fields, such as Name, Notes, Reel, Scene, Take, and so on, are customizable fields where you can enter information manually within the editing application.

TIP Remember, metadata travels with video files as it does with still images. So include information that will make it easy to find or access that video in other projects or applications.

Metadata in Broadcast and on the Web

If you are the only person looking at your video, and you know where everything is, it may be less important to think about creating a structured metadata system. But when video is being broadcast or uploaded to the Internet, it’s very important to think about how the metadata will be used.

For example, a worldwide sports event might be shot by a major broadcaster and archived for future broadcast. But what if the News Department at the broadcast station wanted to air a clip of the winning touchdown? The automatically captured metadata might provide clip names, durations, running timecode, and other information. But if a logging assistant entered keywords such as touchdown, great catch, or quarterback sack at the locations where they occurred during the game, editors and operators who wanted to access that information later would have no trouble finding what they need.
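To make the logging idea concrete, here is a minimal Python sketch of a keyword log and a search over it. The `LogEntry` structure, the `find_moments` function, and every timecode and keyword in `GAME_LOG` are invented for illustration; a real logging system would store far more than this.

```python
from dataclasses import dataclass


@dataclass
class LogEntry:
    timecode: str    # where the moment occurs, written as HH:MM:SS:FF
    keywords: tuple  # terms typed in by the logging assistant


# Hypothetical log for one game; the timecodes and keywords are made up.
GAME_LOG = [
    LogEntry("01:12:40:10", ("great catch",)),
    LogEntry("01:47:03:22", ("quarterback sack",)),
    LogEntry("02:58:15:04", ("touchdown", "great catch")),
]


def find_moments(log, keyword):
    """Return the timecode of every entry tagged with the keyword."""
    wanted = keyword.lower()
    return [entry.timecode for entry in log
            if wanted in (k.lower() for k in entry.keywords)]


print(find_moments(GAME_LOG, "touchdown"))
```

An editor looking for the touchdown gets back a timecode to cue to, without having to scrub through the whole recording.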

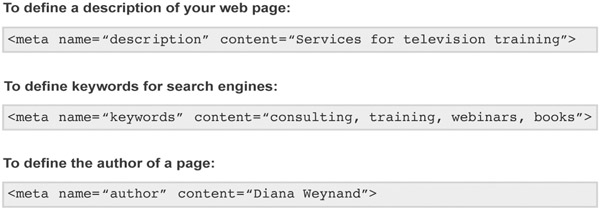

The same holds true for the Internet. Metadata on the web contains descriptions of the web page contents and keywords that link to the content. This metadata is referred to as metatags, which are tags or coding statements in HTML that describe some aspect of the contents of the Web page (Figure 17.4). That information might include the language the site is written in, the tools used to create it, and where to go for more information. Some metatags are only visible to search engines looking for that information. Some sites, such as Wikipedia, encourage the use of metadata by asking editors to add hyperlinks to category names in the articles, and to include information with citations such as title, source and access date.
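As a rough illustration of how a program such as a search engine might read metatags, the sketch below uses Python's standard `html.parser` module to collect them from a page. The sample page, its tag values, and the names `MetaTagCollector` and `read_meta_tags` are assumptions made up for this example.

```python
from html.parser import HTMLParser


class MetaTagCollector(HTMLParser):
    """Collect name/content pairs from <meta> tags, plus the page language."""

    def __init__(self):
        super().__init__()
        self.meta = {}
        self.lang = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = attributes.get("name")
        if tag == "html":
            self.lang = attributes.get("lang")
        elif tag == "meta" and name:
            self.meta[name.lower()] = attributes.get("content") or ""


# Hypothetical page, invented for this example.
SAMPLE_PAGE = """<html lang="en">
<head>
<title>Timecode Basics</title>
<meta name="description" content="An introduction to video timecode.">
<meta name="keywords" content="timecode, metadata, video">
<meta name="generator" content="ExampleSiteBuilder 1.0">
</head>
<body><p>Page content goes here.</p></body>
</html>"""


def read_meta_tags(html_text):
    """Return (language, dictionary of metatags) for an HTML document."""
    collector = MetaTagCollector()
    collector.feed(html_text)
    return collector.lang, collector.meta


language, tags = read_meta_tags(SAMPLE_PAGE)
print(language)
print(tags["keywords"])
```

None of the collected values appear in the visible page body, which is the point: the tags describe the page rather than form part of its content.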

Metatags and keywords not only provide ways of organizing and accessing media, but they offer great marketing potential as well. For example, keywords allow YouTube users to easily find specific videos even though 100 hours of video are uploaded every minute (as of this writing). YouTube, along with other video sharing sites, requires metadata for videos being uploaded so the over 3 billion unique users a month can find what they’re looking for quickly and learn more about the video they’re viewing.

Timecode Metadata

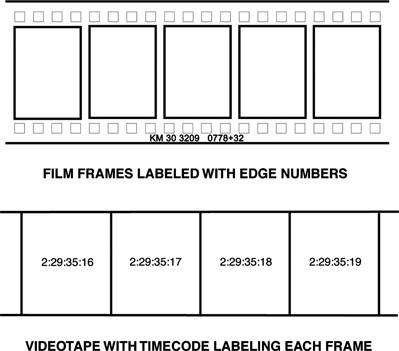

In the early days of television, all programs were broadcast live. The only means of archiving a television program was by using a process called kinescope. Kinescoping was the process of focusing a 16mm motion picture camera at a television monitor and photographing the images. Starting in the mid-1950s, when videotape recording was invented, programs were taped live and then played back for broadcast at a different time. These programs were played in real time without the benefit of instant replays or freeze frames. When videotape began to be used for editing purposes, it became critical to identify specific frames of video, access those frames, then cue and edit them to specific locations in an edited master tape. Film frames traditionally could be identified by numbers imprinted along the edge of the film. Unlike film, at the time of manufacture, video had no numbering that could be used to identify individual video frames.

In 1967, EECO, a video equipment manufacturing company, created timecode, a system by which videotape could be synchronized, cued, identified, and controlled. This format was adopted by SMPTE (the Society of Motion Picture and Television Engineers) as a standard. SMPTE timecode records a unique number on each frame of video (Figure 17.5). Working with timecoded video ensures that a specific video frame can be accessed using the same timecode number over and over again with complete accuracy. This is referred to as frame accuracy, as in having a frame-accurate editing system or making a frame-accurate edit.

Reading Timecode

Timecode is read as a digital display much like a digital clock. However, in addition to the hours, minutes, and seconds of a digital clock, timecode includes a frame count so the specific video frames can be identified and accessed. A timecode number is displayed with a colon separating each category, constructed as follows:

Table 17.1

Timecode

| 00: | 00: | 00: | 00 |
| --- | --- | --- | --- |
| hours | minutes | seconds | frames |

A timecode number of one hour, twenty minutes, and three frames would be written as 01:20:00:03. The zeros preceding the first nonzero digit do not affect the number and are often omitted when entering timecode numbers in editing systems or other equipment. However, the minutes, seconds, and frames categories that follow a number must keep their zeros, as they hold specific place values.

Here are some examples of how timecode is read and notated:

| Timecode | How it is read |
| --- | --- |
| 2:13:11 | Two minutes, thirteen seconds, and eleven frames. |
| 23:04 | Twenty-three seconds and four frames. |
| 10:02:00 | Ten minutes, two seconds, and zero frames. (It is necessary to hold the frames place even though its value is zero. If the last two zeros were left out, the value of this number would change to ten seconds and two frames.) |
| 3:00:00:00 | Three hours even. |
| 14:00:12:29 | Fourteen hours, twelve seconds, and twenty-nine frames. |
| 15:59:29 | Fifteen minutes, fifty-nine seconds, and twenty-nine frames. |
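The place-value rule in these examples can be sketched in a few lines of Python. The function name `parse_timecode` is an assumption for this example; the only rule it applies is the one described above, namely that fields are read from the right, so any omitted leading fields count as zero.

```python
def parse_timecode(text):
    """Split a timecode string into (hours, minutes, seconds, frames).

    Fields are read from the right, so omitted leading fields are zero.
    """
    fields = [int(part) for part in text.split(":")]
    if not 1 <= len(fields) <= 4:
        raise ValueError(f"expected 1 to 4 fields, got {len(fields)}")
    padded = [0] * (4 - len(fields)) + fields
    return tuple(padded)


for example in ["2:13:11", "23:04", "10:02:00", "10:02", "3:00:00:00"]:
    print(example, "->", parse_timecode(example))
```

Comparing the results for 10:02:00 and 10:02 shows why the trailing zeros matter: the first is ten minutes and two seconds, the second is ten seconds and two frames.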

Timecode uses military clock time in that it runs from 00:00:00:00 to 23:59:59:29, that is, from midnight to the frame before midnight. In a 30 fps standard, if one frame is added to this last number, the number turns over to all zeros again, or midnight. The frame count of a 30 fps video is represented by the 30 numbers between 00 and 29. The number 29 would be the highest value of frames in that system. Add one frame to 29, and the frame count goes to zeros while the seconds increase by 1. The frame count of a 24 fps video is represented by the 24 numbers between 00 and 23.

Timecode can be used with any available video standard regardless of that standard’s frame rate. The only difference in the timecode numbering process is that the last two digits indicating frames may appear differently. The frames will reflect whatever frame rate was chosen as the recording format. For example, a timecode location in a 24 fps HDTV standard might be 1:03:24:23, which represents the last frame of that second; whereas the last frame of a 30 fps HDTV standard would be 1:03:24:29.
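The rollover behavior is easy to check with a small sketch. The Python function below (the name `add_one_frame` is hypothetical) advances a continuously numbered, non-drop-frame timecode by one frame for whatever nominal frame rate you pass in, and wraps to zero at midnight.

```python
def add_one_frame(hours, minutes, seconds, frames, fps=30):
    """Advance a non-drop-frame timecode by one frame, wrapping at 24 hours."""
    total = ((hours * 60 + minutes) * 60 + seconds) * fps + frames + 1
    total %= 24 * 60 * 60 * fps   # one frame past the last number is midnight
    frames = total % fps
    seconds = (total // fps) % 60
    minutes = (total // (fps * 60)) % 60
    hours = total // (fps * 3600)
    return hours, minutes, seconds, frames


print(add_one_frame(23, 59, 59, 29, fps=30))  # turns over to all zeros
print(add_one_frame(1, 3, 24, 23, fps=24))    # last frame of the second at 24 fps
print(add_one_frame(1, 3, 24, 23, fps=30))    # still mid-second at 30 fps
```

The same timecode, 1:03:24:23, rolls over to the next second at 24 fps but simply becomes frame 24 at 30 fps.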

Timecode Formats

Since its development, timecode has had several formats. The original format was Longitudinal or Linear Timecode, or LTC. Longitudinal timecode is a digital signal recorded as an audio signal.

Another timecode format is Vertical Interval Timecode, or VITC, pronounced vit-see. While LTC is an audio signal, VITC is recorded as visual digital information in the vertical interval as part of the analog video signal. VITC must be recorded simultaneously with the video. Once recorded, VITC can be used to identify a frame of video either in still mode or in motion.

In digital recording formats, the timecode is carried as part of the metadata contained within the digital stream or file structure. In digital video signals such as SDI and HD-SDI, this is part of the HANC (Horizontal Ancillary Data). For file-based recording such as MXF and QuickTime, the timecode is encoded on a virtual track reserved for this purpose.

Non-Drop-Frame and Drop-Frame Timecode

When timecode was created, NTSC color video ran at 29.97 frames per second rather than the 30 fps of the original monochrome system. This was a technical adaptation to allow color broadcasts. In other words, it takes slightly more than 1 second to scan a full 30 frames. In fact, it takes about 1.001 seconds to scan 30 frames of color video.

Timecode had to account for this small difference. If every video frame was numbered sequentially with 30 fps timecode, at the end of an hour the timecode would read 59 minutes, 56 seconds, and 12 frames, or 3 seconds and 18 frames short of an hour, even though the program ran a full hour. There are fewer frames per second and thus fewer frames in an hour.
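The arithmetic behind these figures can be worked through in a short Python sketch. It uses the 29.97 fps figure quoted in the text, scaled by 100 so the calculation stays in whole numbers; the variable names are chosen only for this example.

```python
NOMINAL_FPS = 30         # numbers counted per timecode second
ACTUAL_FPS_X100 = 2997   # 29.97 fps, scaled by 100 to avoid rounding error

# Frames actually scanned in one real hour of 29.97 fps video.
frames_in_real_hour = 3600 * ACTUAL_FPS_X100 // 100      # 107892

# What continuous 30 fps numbering would read after that many frames.
total_seconds, frames_shown = divmod(frames_in_real_hour, NOMINAL_FPS)
minutes_shown, seconds_shown = divmod(total_seconds, 60)
print(minutes_shown, seconds_shown, frames_shown)        # 59 56 12

# How far short of a full hour of timecode numbers that is.
shortfall = 3600 * NOMINAL_FPS - frames_in_real_hour
print(shortfall, divmod(shortfall, NOMINAL_FPS))         # 108 (3, 18)
```

The 108-frame shortfall is the difference that the timecode numbering has to make up each hour.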

Broadcast programs have to be an exact length of time in order to maintain a universal schedule. In order to allow timecode to be used as a reference to clock time, SMPTE specified a method of timecoding that made up for the 3 seconds and 18 frames, or a 108-frame difference. It was determined that numbers should be dropped from the continuous code by advancing the clock at regular intervals. By doing this, there would be an hour’s duration in timecode numbers at the end of an hour’s worth of time.

The formula advances the clock by two frames of timecode numbers each minute, except every tenth minute (00, 10, 20, 30, 40, and 50). Advancing 2 frames on every one of the 60 minutes would drop a total of 120 frames, or 12 more than the 108 frames needed. But dropping 2 frames from every minute except the tens of minutes gives 54 minutes × 2 frames per minute, which equals 108 frames, accounting for the necessary number of frames to identify a true clock time period. SMPTE named the new alternative method of time coding color video drop-frame timecode in reference to its process. Drop-frame timecode is sometimes referred to as time of day, or TOD timecode. The original 30 fps continuous code was subsequently termed non-drop-frame timecode (Figure 17.6).

In drop-frame timecode, the timecode is always advanced by skipping the first two frame numbers of each minute except every tenth minute. For example, the numbering sequence would go from 1:06:59:29 on one frame to 1:07:00:02 on the very next frame (see Figure 17.6). The two frame numbers that are skipped are 1:07:00:00 and 1:07:00:01. At the tenth minute, no numbers are skipped. The numbering sequence at each tenth minute would go from, for example, 1:29:59:29 on one frame to 1:30:00:00 on the next.

Like non-drop-frame timecode, drop-frame timecode leaves no frames of video unlabeled, and no frames of picture information are deleted. The numbers that are skipped do not upset the ascending order that is necessary for some editing systems to read and cue to the timecode. But at the end of an hour’s worth of material, there will be an hour’s duration reflected in the timecode number.
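One common way to apply the skipping rule in software is to count frames from zero and work out how many numbers have been skipped so far. The Python sketch below takes that approach; the function name `frames_to_drop_frame` is an assumption for this example, and it is an illustration of the rule rather than the text of the SMPTE specification. It writes a semicolon before the frames value, the usual marker for drop-frame timecode.

```python
def frames_to_drop_frame(frame_number):
    """Label a frame (counted from 0) with a 30 fps drop-frame timecode."""
    frames_per_dropping_minute = 30 * 60 - 2    # 1798 numbers when 2 are skipped
    frames_per_ten_minutes = 30 * 600 - 2 * 9   # 17982: nine of ten minutes skip

    ten_minute_blocks, remainder = divmod(frame_number, frames_per_ten_minutes)

    # The first minute of each ten-minute block skips nothing (1800 numbers);
    # each later minute in the block skips its first two numbers.
    if remainder < 1800:
        skipped_in_block = 0
    else:
        minutes_started = (remainder - 1800) // frames_per_dropping_minute + 1
        skipped_in_block = 2 * minutes_started

    # Add back the skipped numbers, then read the result as ordinary 30 fps code.
    label = frame_number + 18 * ten_minute_blocks + skipped_in_block
    frames = label % 30
    seconds = (label // 30) % 60
    minutes = (label // 1800) % 60
    hours = (label // 108000) % 24
    return f"{hours:02d}:{minutes:02d}:{seconds:02d};{frames:02d}"


print(frames_to_drop_frame(120479))   # 01:06:59;29
print(frames_to_drop_frame(120480))   # 01:07:00;02
print(frames_to_drop_frame(107892))   # 01:00:00;00
```

Every frame still receives a label and the labels always ascend; only the numbers ;00 and ;01 are missing at the start of the affected minutes. Frame 107892, the first frame after one hour of 29.97 fps video, is labeled exactly one hour.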

NOTE A convention is followed when working with timecode numbers that helps to identify whether they represent drop-frame or non-drop-frame timecode. If the punctuation before the frames value is a semicolon, such as 1:07:00;00, it is drop-frame timecode. If there is a colon before the frames value, such as 1:07:00:00, it is non-drop-frame timecode.

Timecode at 24 Frames Per Second

While the terms 24 fps and 30 fps are used for convenience, the true frame rates that most systems use are 23.976 fps and 29.97 fps respectively. SMPTE timecode for video runs at the same frame rate the video does, 29.97 fps in drop-frame timecode mode. Timecode used in the 24 fps video system runs at 23.976 fps, also referred to as 23.98. There are no drop-frame or non-drop-frame modes in a 24 fps system because there are no conflicting monochrome versus color frame rates as there were in the original video systems developed by the NTSC. Because a single frame rate is decided upon and the synchronization is fixed, a single timecode system is developed to keep clock-accurate time. Had the original NTSC system started with color, the timecode system developed simultaneously would have eliminated the need for more than one type of timecode.

This alteration in the frame rate from 24 fps to 23.98 fps was necessary to simplify the 24 fps to 30 fps conversion. Without it, converting true 24 fps images and timecode to the existing 29.97 frame rate in video would be much more complex. When converting 24 fps film or HD to 30 fps video, the additional timecode numbers are added as the additional frames are added. When transferring the other way, from 30 fps to 24 fps, the additional numbers are deleted and the timecode is resequenced as the additional fields and frames are deleted.

Visual Timecode

Timecode can also be displayed as numbers over the visual images. This is achieved through the use of a character or text generator or sometimes through an internal filter in an editing system. The timecode signal is fed into the character generator, and the character generator in turn displays a visual translation of the timecode numbers. That display is placed over the video material and can be shown on the monitor or recorded into the picture (Figure 17.7). Visual timecode is not a signal that can be read by machine, computer, or timecode reader.

NOTE In addition to video productions, timecode can also be used in sound and music production. This is especially useful for audio artists working on sound design and scoring for film and video. The timecode signal for these applications can be recorded as AES-EBU digital audio or even transported using the MIDI interface.