A History of Biomaterials

Buddy D. Ratner

“History consists of a series of accumulated imaginative inventions”

Voltaire

A decade into the twenty-first century, biomaterials are widely used throughout medicine, dentistry, and biotechnology. Just 60 years ago biomaterials as we think of them today did not exist. The word “biomaterial” was not used. There were no medical device manufacturers (except for external prosthetics such as limbs, fracture fixation devices, glass eyes, and dental fillings and devices), no formalized regulatory approval processes, no understanding of biocompatibility, and certainly no academic courses on biomaterials. Yet, crude biomaterials have been used, generally with poor to mixed results, throughout history. This chapter will broadly trace the history of biomaterials from the earliest days of human civilization to the dawn of the twenty-first century. It is convenient to organize the history of biomaterials into four eras: prehistory; the era of the surgeon hero; designed biomaterials/engineered devices; and the contemporary era taking us into the new millennium. The emphasis of this chapter will be on the experiments and studies that set the foundation for the field we call biomaterials, largely between 1920 and 1980.

Biomaterials before World War II

Before Civilization

The introduction of non-biological materials into the human body has taken place throughout history. The remains of a human found near Kennewick, Washington, USA (often referred to as the “Kennewick Man”) were dated (with some controversy) to be 9000 years old. This individual, described by archeologists as a tall, healthy, active person, wandered through the region now known as southern Washington with a spear point embedded in his hip. The wound had apparently healed, and the spear point did not significantly impede his activity. This unintended implant illustrates the body’s capacity to deal with implanted foreign materials. The spear point bears little resemblance to modern biomaterials, but it was a “tolerated” foreign material implant just the same. Another example of the introduction of foreign material into the skin, dated to over 5000 years ago, is the tattoo. The carbon particles and other substances probably elicited a classic foreign-body reaction.

Dental Implants in Early Civilizations

Unlike the spear point described above, dental implants were deliberately devised as implants and used early in history. The Mayan people fashioned nacre teeth from sea shells in roughly 600 AD, and apparently achieved what we now refer to as osseointegration (see Chapter II.5.7), that is, a seamless integration into the bone (Bobbio, 1972). Similarly, in France, a wrought iron dental implant in a corpse was dated to 200 AD (Crubezy et al., 1998). This implant, too, was described as properly osseointegrated. There was no materials science, biological understanding, or medicine behind these procedures. Still, their success (and longevity) is impressive and highlights two points: the forgiving nature of the human body and the pressing drive, even in ancient times, to address the loss of physiologic/anatomic function with an implant.

Sutures Dating Back Thousands of Years

There is loose evidence that sutures may have been used even in the Neolithic period. Large wounds were closed early in history primarily by one of two methods – cautery or sutures. Linen sutures were used by the early Egyptians. Catgut was used in the middle ages in Europe. In South Africa and India, the heads of large, biting ants clamped wound edges together.

Metallic sutures are first mentioned in early Greek literature. Galen of Pergamon (circa 130–200 AD) described ligatures of gold wire. In 1816, Philip Physick, University of Pennsylvania Professor of Surgery, suggested the use of lead wire sutures, noting little reaction. J. Marion Sims of Alabama had a jeweler fabricate sutures of silver wire, and in 1849 performed many successful operations with this metal.

Consider the problems that must have been experienced with sutures in eras with no knowledge of sterilization, toxicology, immunological reaction to extraneous biological materials, inflammation, and biodegradation. Yet sutures were a relatively common fabricated or manufactured biomaterial for thousands of years.

Artificial Hearts and Organ Perfusion

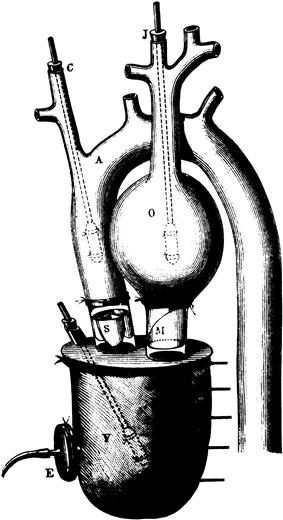

In the fourth century BC, Aristotle called the heart the most important organ in the body. Galen proposed that veins connected the liver to the heart to circulate “vital spirits throughout the body via the arteries.” English physician William Harvey, in 1628, espoused a relatively modern view of heart function when he wrote: “the heart’s one role is the transmission of the blood and its propulsion, by means of the arteries, to the extremities everywhere.” With the appreciation of the heart as a pump, it was logical to think of replacing the heart with an artificial pump. In 1812, the French physiologist Le Gallois expressed his idea that organs could be kept alive by pumping blood through them. A number of experiments on organ perfusion with pumps were performed from 1828 to 1868. In 1881, Étienne-Jules Marey, a brilliant scientist and thinker who published and invented in photographic technology, motion studies, and physiology, described an artificial heart device (Figure 1), primarily oriented to studying the beating of the heart.

FIGURE 1 An artificial heart by Étienne-Jules Marey, Paris, 1881.

In 1938, aviator (and engineer) Charles Lindbergh and surgeon (and Nobel Prize winner) Alexis Carrel wrote a visionary book, The Culture of Organs. They addressed issues of pump design (referred to as the Lindbergh pump), sterility, blood damage, the nutritional needs of perfused organs, and mechanics. This book is a seminal document in the history of artificial organs. In the mid-1950s, Dr. Paul Winchell, better known as a ventriloquist, patented an artificial heart. In 1957, Dr. Willem Kolff and a team of scientists tested an artificial heart in animals. More modern conceptions of the artificial heart (and left ventricular assist device) will be presented below and in Chapter II.5.3.D.

Contact Lenses

Leonardo da Vinci, in the year 1508, developed the concept of contact lenses. René Descartes is credited with the idea of the corneal contact lens (1632), and Sir John F. W. Herschel (1827) suggested that a glass lens could protect the eye. Adolf Gaston Eugen Fick (nephew of Adolf Eugen Fick of Fick’s Law of Diffusion fame) was an ophthalmologist by profession. One of his inventions (roughly 1888) was a glass contact lens, possibly the first contact lens offering real success. He experimented with contact lenses on both animals and humans. In the period from 1936 to 1948, plastic contact lenses were developed, primarily of poly(methyl methacrylate).

Basic Concepts of Biocompatibility

Most implants prior to 1950 had a low probability of success because of a poor understanding of biocompatibility and sterilization. As will be elaborated upon throughout the textbook, factors that contribute to biocompatibility include the chemistry of the implant, leachables, shape, mechanics, and design. Early studies, especially with metals, focused primarily on ideas from chemistry to explain the observed bioreaction.

Possibly the first study assessing the in vivo bioreactivity of implant materials was performed by H. S. Levert (1829). Gold, silver, lead, and platinum specimens were studied in dogs, and platinum, in particular, was found to be well tolerated. In 1886, bone fixation plates of nickel-plated sheet steel with nickel-plated screws were studied. In 1924, A. Zierold published a study on tissue reaction to various materials in dogs. Iron and steel were found to corrode rapidly, leading to resorption of adjacent bone. Copper, magnesium, aluminum alloy, zinc, and nickel discolored the surrounding tissue. Gold, silver, lead, and aluminum were tolerated, but inadequate mechanically. Stellite, a Co–Cr–Mo alloy, was well tolerated and strong. In 1926, M. Large noted the inertness displayed by 18-8 stainless steel containing molybdenum. By 1929, Vitallium alloy (65% Co–30% Cr–5% Mo) was developed and used with success in dentistry. In 1947, J. Cotton of the UK discussed the possible use of titanium and its alloys for medical implants.

The history of plastics as implantation materials does not extend as far back as that of metals, simply because there were few plastics prior to the 1940s. What is possibly the first paper on the implantation of a modern synthetic polymer, nylon, as a suture appeared in 1941. Papers on the implantation of cellophane, a polymer made from plant sources and used as a wrapping for blood vessels, were published as early as 1939. The response to this implant was described as a “marked fibrotic reaction.” In the early 1940s, papers appeared discussing the reaction to implanted poly(methyl methacrylate) (PMMA) and nylon. The first paper on polyethylene as a synthetic implant material was published in 1947 (Ingraham et al.). The paper pointed out that polyethylene production using a new high-pressure polymerization technique began in 1936. This process enabled the production of polyethylene free of initiator fragments and other additives. Ingraham et al. demonstrated good results on implantation (i.e., a mild foreign-body reaction), and attributed these results to the high purity of the polymer they used. A 1949 paper commented on the fact that additives to many plastics had a tendency to “sweat out,” and this might be responsible for the strong biological reaction to those plastics (LeVeen and Barberio, 1949). The authors found a vigorous foreign-body reaction to cellophane, Lucite®, and nylon, but an extremely mild reaction to “a new plastic,” Teflon®. They incisively concluded: “Whether the tissue reaction is due to the dissolution of traces of the unpolymerized chemical used in plastics manufacture or actually to the solution of an infinitesimal amount of the plastic itself cannot be determined.” The possibility that cellulose might trigger the severe reaction by activating the complement system could not have been imagined at the time, because complement activation by biomaterials had not yet been recognized.

World War II to the Modern Era: The Surgeon/Physician Hero

During World War II, and particularly at the end of the war, newly developed high-performance metal, ceramic, and especially polymeric materials transitioned from wartime restriction to peacetime availability. The possibilities for using these durable, inert materials immediately intrigued surgeons who needed to replace diseased or damaged body parts. Materials originally manufactured for airplanes, automobiles, clocks, and radios were taken “off-the-shelf” by surgeons and applied to medical problems. These early biomaterials included silicones, polyurethanes, Teflon®, nylon, methacrylates, titanium, and stainless steel.

A historical context helps us appreciate the contribution made primarily by medical and dental practitioners. Just after World War II, there was little precedent for surgeons to collaborate with scientists and engineers. Medical and dental practitioners of this era felt it was appropriate to invent (improvise) where the life or functionality of their patient was at stake. Also, there was minimal government regulatory activity, and human subject protections as we know them today were non-existent (see Chapters III.2.4 and III.2.7). The physician was implicitly entrusted with the life and health of the patient and had much more freedom than is seen today to take heroic action when other options were exhausted.1 These medical practitioners had read about the post-World War II marvels of materials science. Looking at a patient open on the operating table, they could imagine replacements, bridges, conduits, and even organ systems based on such materials. Many materials were tried on the spur of the moment. Some fortuitously succeeded. These were high-risk trials, but usually they took place where other options were not available. The term “surgeon hero” seems justified, since the surgeon often had a life (or a quality of life) at stake and was willing to take a huge technological and professional leap to repair the individual. This laissez-faire biomaterials era quickly led to a new order characterized by scientific/engineering input, government quality controls, and a sharing of decisions prior to attempting high-risk, novel procedures. Still, a foundation of ideas and materials for the biomaterials field was built by courageous, fiercely committed, creative individuals, and it is important to look at this foundation to understand many of the attitudes, trends, and materials common today.

Intraocular Lenses

Sir Harold Ridley, MD (1906–2001) (Figure 2), inventor of the plastic intraocular lens (IOL), made early, accurate observations of biological reaction to implants consistent with currently accepted ideas of biocompatibility. After World War II, he had the opportunity to examine aviators whose eyes had been unintentionally implanted with shards of plastic from the shattered canopies of Spitfire and Hurricane fighter planes (Figure 2). Most of these flyers still had plastic fragments in their eyes years after the war. The conventional wisdom at that time was that the human body would not tolerate implanted foreign objects, especially in the eye – the body’s reactions to a splinter or a bullet were cited as examples of the difficulty of implanting materials in the body. The eye is an interesting implant site, because you can look in through a transparent window to observe the reaction. When Ridley did so, he noted that the shards had healed in place with no further reaction. They were, by his standard, tolerated by the eye. Today, we would describe this stable healing without significant ongoing inflammation or irritation as “biocompatible.” This is an early observation of “biocompatibility” in humans, perhaps the first, using criteria similar to those accepted today. Based on this observation, Ridley traced the canopy plastic to its source, ICI Perspex® poly(methyl methacrylate). He used this material to fabricate implant lenses (intraocular lenses) that were found, after some experimentation, to function reasonably in humans as replacements for natural lenses that had been clouded by cataracts and surgically removed. The first implantation in a human was on November 29, 1949. For many years, Ridley was the center of fierce controversy because he challenged the dogma that spoke against implanting foreign materials in eyes – it is hard to believe today that the implantation of a biomaterial could provoke such an outcry.
Because of this controversy, the IOL industry did not arise instantly – it was the early 1980s before IOLs became a major force in the biomedical device market. Ridley’s insightful observation, creativity, persistence, and surgical talent in the late 1940s evolved into an industry that presently implants more than 7,000,000 of these lenses in humans annually. Through all of human history, cataracts had meant blindness or a surgical procedure that left the recipient needing thick, unaesthetic spectacle lenses that poorly corrected vision. Ridley’s concept, using a plastic material found to be “biocompatible,” changed the course of history and substantially improved the quality of life for millions of individuals with cataracts. Harold Ridley’s story is elaborated upon in an obituary (Apple and Trivedi, 2002).

FIGURE 2 Left: Sir Harold Ridley, inventor of the intraocular lens, knighted by Queen Elizabeth II for his achievement. Right: Shards from the canopy of the Spitfire airplane were the inspiration leading to intraocular lenses. (Image by Bryan Fury75 at fr.wikipedia [GFDL (www.gnu.org/copyleft/fdl.html), from Wikimedia Commons].)

Hip and Knee Prostheses

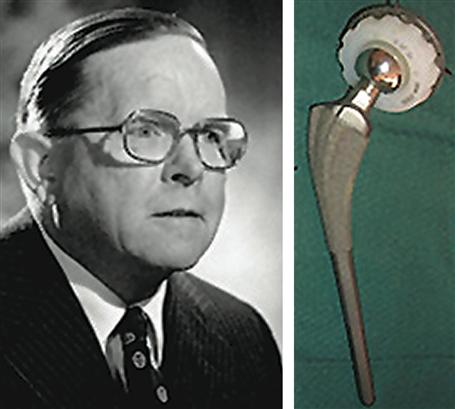

The first hip replacement was probably performed in 1891 by a German surgeon, Themistocles Gluck, using a cemented ivory ball. This procedure was not successful. Numerous attempts were made between 1920 and 1950 to develop a hip replacement prosthesis. Surgeon M. N. Smith-Petersen, in 1925, explored the use of a glass hemisphere to fit over the ball of the hip joint. This failed due to poor durability. Chrome–cobalt alloys and stainless steel offered improvements in mechanical properties, and many variants of these were explored. In 1938, the Judet brothers of Paris, Robert and Jean, explored an acrylic surface for hip procedures, but it had a tendency to loosen. The idea of using fast-setting dental acrylics to glue prosthetics to bone was developed by Dr. Edward J. Haboush in 1953. In 1956, McKee and Watson-Farrar developed a “total” hip with a metal acetabular cup that was cemented in place. Metal-on-metal wear products probably led to high complication rates. It was John Charnley (1911–1982) (Figure 3), working at an isolated tuberculosis sanatorium in Wrightington, Manchester, England, who invented the first really successful hip joint prosthesis. The femoral stem, ball head, and plastic acetabular cup proved to be a reasonable solution to the problem of replacing damaged joints. In 1958, Dr. Charnley used a Teflon acetabular cup with poor outcomes due to wear debris. By 1961 he was using a high-molecular-weight polyethylene cup, and was achieving much higher success rates. Interestingly, Charnley learned of high-molecular-weight polyethylene from a salesman selling novel plastic gears to one of his technicians. Dr. Dennis Smith contributed in an important way to the development of the hip prosthesis by introducing Dr. Charnley to poly(methyl methacrylate) cements, developed in the dental community, and optimizing those cements for hip replacement use.
Total knee replacements borrowed elements of the hip prosthesis technology, and successful results were obtained in the period 1968–1972 with surgeons Frank Gunston and John Insall leading the way.

FIGURE 3 Left: Sir John Charnley. Right: The original Charnley hip prosthesis. (Hip prosthesis photo courtesy of the South Australian Medical Heritage Society, Inc.)

Dental Implants

Some “prehistory” of dental implants has been described above. In 1809, Maggiolo implanted a gold post anchor into fresh extraction sockets. After allowing this to heal, he affixed a tooth to it. This has remarkable similarity to modern dental implant procedures. In 1887, this procedure was used with a platinum post. Gold and platinum gave poor long-term results, and so this procedure was never widely adopted. In 1937, Venable used surgical Vitallium, a Co–Cr–Mo alloy, for such implants. Also around 1937, Strock at Harvard used a screw-type implant of Vitallium, and this may be the first successful dental implant. A number of developments in surgical procedure and implant design (for example, the endosteal blade implant) then took place. In 1952, a fortuitous discovery was made. Per Ingvar Brånemark, an orthopedic surgeon at the University of Lund, Sweden, was implanting an experimental cage device in rabbit bone to observe healing reactions. The cage was a titanium cylinder that screwed into the bone. After completing an experiment that lasted several months, he tried to remove the titanium device and found it tightly integrated in the bone (Brånemark et al., 1964). Dr. Brånemark named the phenomenon “osseointegration,” and explored the application of titanium implants to surgical and dental procedures. He also developed low-impact surgical protocols for tooth implantation that reduced tissue necrosis and enhanced the probability of good outcomes. Most dental implants and many other orthopedic implants are now made of titanium and its alloys.

The Artificial Kidney

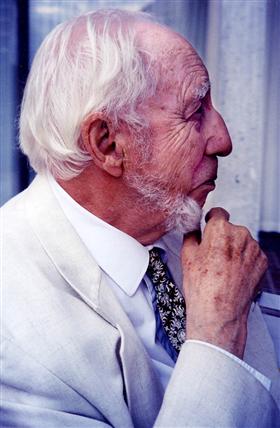

Kidney failure, through most of history, was a sentence to an unpleasant death over a period of about a month. In 1910, at Johns Hopkins University, the first attempts to remove toxins from blood were made by John Jacob Abel. The experiments were with rabbit blood, and it was not possible to perform this procedure on humans. In 1943, in Nazi-occupied Holland, Willem Kolff (Figure 4), a physician just beginning his career at that time, built a drum dialyzer system from a 100-liter tank, wood slats, and 130 feet of cellulose sausage casing tubing as the dialysis membrane. The system saved some lives in a setting where, previously, kidney failure had only one unpleasant outcome. Kolff took his ideas to the United States and in 1960, at the Cleveland Clinic, developed a “washing machine artificial kidney” (Figure 5).

FIGURE 4 Dr. Willem Kolff at age 92. (Photograph by B. Ratner.)

FIGURE 5 Willem Kolff (center) and the washing machine artificial kidney.

Major advances in kidney dialysis were made by Dr. Belding Scribner at the University of Washington (Figure 6). Scribner devised a method to routinely access the bloodstream for dialysis treatments. Prior to this, after just a few treatments, access sites to the blood were used up and further dialysis was not possible. Having seen the potential of dialysis to help patients, but only acutely, Scribner tells the story of waking up in the middle of the night with an idea for gaining easy access to the blood: a shunt implanted between an artery and a vein that emerged through the skin as a “U.” Through the exposed portion of the shunt, blood access could be readily achieved. When Dr. Scribner heard about the new plastic, Teflon®, he envisioned how to get the blood out of and into the blood vessels. His device, built with the assistance of Wayne Quinton (Figure 6), used Teflon tubes to access the vessels, a Dacron® sewing cuff through the skin, and a silicone rubber tube for blood flow. The Quinton–Scribner shunt made chronic dialysis possible, and is said to be responsible for more than a million patients being alive today. Interestingly, Dr. Scribner refused to patent his invention because of its importance to medical care. Additional important contributions to the artificial kidney were made by Chemical Engineering Professor Les Babb (University of Washington) who, working with Scribner, improved dialysis performance and invented a proportioning mixer for the dialysate fluid. The first dialysis center was opened in Seattle, making use of these important technological advances (Figure 6). The early experience of dialyzing patients when there were not enough dialyzers to meet the demand also made important contributions to the bioethics of medical devices (Blagg, 1998).

FIGURE 6 (a) Belding Scribner (Courtesy of Dr. Eli Friedman); (b) Wayne Quinton (Photo by B. Ratner); (c) Plaque commemorating the original location in Seattle of the world’s first artificial kidney center.

The Artificial Heart

Willem Kolff was also a pioneer in the development of the artificial heart. He implanted the first artificial heart in the Western hemisphere in a dog in 1957 (a Russian artificial heart was implanted in a dog in the late 1930s). The Kolff artificial heart was made of a thermosetting poly(vinyl chloride) cast inside hollow molds to prevent seams. In 1953, the heart-lung machine was invented by John Gibbon, but this was useful only for acute treatment, such as during open heart surgery. In 1964, the National Heart and Lung Institute of the NIH set a goal of a total artificial heart by 1970. Dr. Michael DeBakey implanted a left ventricular assist device in a human in 1966, and Dr. Denton Cooley and Dr. William Hall implanted a polyurethane total artificial heart in 1969. In the period 1982–1985, Dr. William DeVries implanted a number of Jarvik hearts based upon designs originated by Drs. Clifford Kwan-Gett and Donald Lyman – patients lived up to 620 days on the Jarvik 7® device.

Breast Implants

The breast implant evolved to address the poor results achieved with direct injection of substances into the breast for augmentation. In fact, in the 1960s, California and Utah classified the use of silicone injections as a criminal offense. In the 1950s, poly(vinyl alcohol) sponges were implanted as breast prostheses, but results with these were also poor. University of Texas plastic surgeons Thomas Cronin and Frank Gerow invented the first silicone breast implant in the early 1960s, a silicone shell filled with silicone gel. Many variations of this device have been tried over the years, including cladding the device with polyurethane foam (the Natural Y implant). This variant of the breast implant was fraught with problems. However, the basic silicone rubber–silicone gel breast implant was generally acceptable in performance (Bondurant et al., 1999).

Vascular Grafts

Surgeons have long needed methods and materials to repair damaged and diseased blood vessels. Early in the twentieth century, Dr. Alexis Carrel developed methods to anastomose (suture) blood vessels, an achievement for which he won the Nobel Prize in medicine in 1912. In 1942, Blackmore used Vitallium metal tubes to bridge arterial defects in war-wounded soldiers. Columbia University surgical intern Arthur Voorhees (1922–1992), in 1947, noticed during a post-mortem that tissue had grown around a silk suture left inside a lab animal. This observation stimulated the idea that a cloth tube might also heal by being populated by the tissues of the body. Perhaps such a healing reaction in a tube could be used to replace an artery? His first experimental vascular grafts were sewn from a silk handkerchief and then parachute fabric (Vinyon N), using his wife’s sewing machine. The first human implant of a prosthetic vascular graft was in 1952. The patient lived many years after this procedure, inspiring many surgeons to copy the procedure. By 1954, a paper had been published establishing the clear benefit of a porous (fabric) tube over a solid polyethylene tube (Egdahl et al., 1954). In 1958, the following technique was described in a textbook on vascular surgery (Rob, 1958): “The Terylene, Orlon or nylon cloth is bought from a draper’s shop and cut with pinking shears to the required shape. It is then sewn with thread of similar material into a tube and sterilized by autoclaving before use.”

Stents

Partially occluded coronary arteries lead to angina, diminished heart function, and eventually, when the artery occludes, death of a localized portion of the heart muscle (i.e., myocardial infarction). Bypass operations take a section of vein from another part of the body and use it as a clean conduit to route blood around the occluded coronary artery – this is major surgery, hard on the patient, and expensive. Synthetic vascular grafts of the roughly 3 mm diameter appropriate to human coronary artery anatomy will thrombose, and thus cannot be used. Another option is percutaneous transluminal coronary angioplasty (PTCA). In this procedure, a balloon is threaded on a catheter into the coronary artery and then inflated to open the lumen of the occluded vessel. However, in many cases the coronary artery can spasm and close from the trauma of the procedure. The invention of the coronary artery stent, an expandable mesh structure that holds the lumen open after PTCA, was revolutionary in the treatment of coronary occlusive disease. In his own words, Dr. Julio Palmaz (Figure 7) describes the origins and history of the cardiovascular stent.

I was at a meeting of the Society of Cardiovascular and Interventional Radiology in February 1978 when a visiting lecturer, Doctor Andreas Gruntzig from Switzerland, was presenting his preliminary experience with coronary balloon angioplasty. As you know, in 1978 the mainstay therapy of coronary heart disease was surgical bypass. Doctor Gruntzig showed his promising new technique to open up coronary atherosclerotic blockages without the need for open chest surgery, using his own plastic balloon catheters. During his presentation, he made it clear that in a third of the cases, the treated vessel closed back after initial opening with the angioplasty balloon because of elastic recoil or delamination of the vessel wall layers. This required standby surgery facilities and personnel, in case acute closure after balloon angioplasty prompted emergency coronary bypass. Gruntzig’s description of the problem of vessel reclosure elicited in my mind the idea of using some sort of support, such as used in mine tunnels or in oil well drilling. Since the coronary balloon goes in small (folded like an umbrella) and is inflated to about 3–4 times its initial diameter, my idealistic support device needed to go in small and expand at the site of blockage with the balloon. I thought one way to solve this was a malleable, tubular, criss-cross mesh. I went back home in the Bay Area and started making crude prototypes with copper wire and lead solder, which I first tested in rubber tubes mimicking arteries. I called the device a BEIS or balloon-expandable intravascular graft. However, the reviewers of my first submitted paper wanted to call it a stent. When I looked the word up, I found out that it derives from Charles Stent, a British dentist who died at the turn of the century. Stent invented a wax material to make dental molds for dentures. This material was later used by plastic surgeons to keep tissues in place, while healing after surgery. 
The word “stent” was then generically used for any device intended to keep tissues in place while healing.

I made the early experimental device of stainless steel wire soldered with silver. These were materials I thought would be appropriate for initial laboratory animal testing. To carry on with my project I moved to the University of Texas Health Science Center in San Antonio (UTHSCSA). From 1983 to 1986 I performed mainly bench and animal testing that showed the promise of the technique and the potential applications it had in many areas of vascular surgery and cardiology. With a UTHSCSA pathologist, Doctor Fermin Tio, we observed our first microscopic specimen of implanted stents in awe. Weeks to months after implantation by catheterization under X-ray guidance, the stent had remained open, carrying blood flow. The metal mesh was covered with translucent, glistening tissue similar to the lining of a normal vessel. The question remained whether the same would happen in atherosclerotic vessels. We tested this question in the atherosclerotic rabbit model and to our surprise, the new tissue free of atherosclerotic plaque encapsulated the stent wires, despite the fact that the animals were still on a high cholesterol diet. Eventually, a large sponsor (Johnson & Johnson) adopted the project and clinical trials were instituted under the scrutiny of the Food and Drug Administration.

FIGURE 7 Dr. Julio Palmaz, inventor of the coronary artery stent. (Photograph by B. Ratner.)

Well over 1.5 million coronary artery stenting procedures are now performed each year.

Pacemakers

In London in 1788, Charles Kite wrote “An Essay Upon the Recovery of the Apparently Dead,” where he discussed electrical discharges to the chest for heart resuscitation. In the period 1820–1880 it was already known that electric shocks could modulate the heartbeat (and, of course, consider the Frankenstein story from that era). The invention of the portable pacemaker, hardly portable by modern standards, may have taken place almost simultaneously in two groups in 1930–1931 – Dr. Albert S. Hyman (USA) (Figure 8), and Dr. Mark C. Lidwill (working in Australia with physicist Major Edgar Booth).

FIGURE 8 The Albert Hyman Model II portable pacemaker, circa 1932–1933. (Courtesy of the NASPE-Heart Rhythm Society History Project (www.Ep-History.org).)

Canadian electrical engineer John Hopps, while conducting research on hypothermia in 1949, invented an early cardiac pacemaker. Hopps discovered that if a cooled heart stopped beating, it could be restarted electrically. This led to Hopps’ invention of a vacuum tube cardiac pacemaker in 1950. Paul M. Zoll developed a pacemaker in conjunction with the Electrodyne Company in 1952. The device was about the size of a small microwave oven, was powered with external current, and stimulated the heart using electrodes placed on the chest – this therapy caused pain and burns, although it could pace the heart.

In the period 1957–1958, Earl E. Bakken, founder of Medtronic, Inc., developed the first wearable transistorized (external) pacemaker at the request of heart surgeon Dr. C. Walton Lillehei. Bakken quickly produced a prototype that Lillehei used on children with post-surgery heart block. Medtronic commercially produced this wearable, transistorized unit as the 5800 pacemaker.

In 1959 the first fully implantable pacemaker was developed by engineer Wilson Greatbatch and cardiologist W.M. Chardack. Greatbatch used two Texas Instruments transistors, a technical innovation that permitted small size and low power drain. The pacemaker was encased in epoxy to prevent body fluids from inactivating it.

Heart Valves

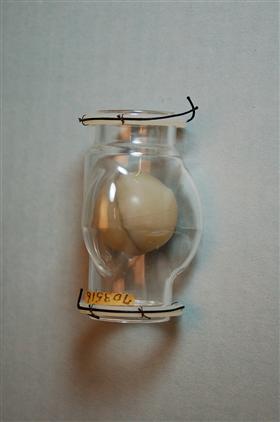

The development of the prosthetic heart valve paralleled developments in cardiac surgery. Until the heart could be stopped and blood flow diverted, the replacement of a valve would be challenging. Charles Hufnagel, in 1952, implanted a valve consisting of a poly(methyl methacrylate) tube and nylon ball in a beating heart (Figure 9). This was a heroic operation and basically unsuccessful, but an operation that inspired cardiac surgeons to consider that valve prostheses might be possible. The 1953 development of the heart-lung machine by Gibbon allowed the next stage in the evolution of the prosthetic heart valve to take place. In 1960, a mitral valve replacement was performed in a human by surgeon Albert Starr, using a valve design consisting of a silicone ball and poly(methyl methacrylate) cage (later replaced by a stainless steel cage). The valve was invented by engineer Lowell Edwards. The heart valve was based on a design for a bottle stopper invented in 1858. Starr was quoted as saying: “Let’s make a valve that works and not worry about its looks,” referring to its design that was radically different from the leaflet valve that nature evolved in mammals. Prior to the Starr–Edwards valve, no human had lived with a prosthetic heart valve longer than three months. The Starr–Edwards valve was found to permit good patient survival. The major issues in valve development in that era were thrombosis and durability. In 1969, Warren Hancock started the development of the first leaflet tissue heart valve based upon glutaraldehyde-treated pig valves, and his company and valve were acquired by Johnson & Johnson in 1979.

FIGURE 9 The Hufnagel heart valve consisting of a poly(methyl methacrylate) tube and nylon ball. (United States Federal Government image in the public domain.)

Drug Delivery and Controlled Release

Through most of history, drugs were administered orally or by hypodermic syringe. In general, there was no effort to modulate the rate of uptake of the drug into the body. In 1949, Dale Wurster invented what is now known as the Wurster process, which permitted pills and tablets to be encapsulated, thereby slowing their release rate. However, modern ideas of controlled release can be traced to a medical doctor, Judah Folkman. Dr. Folkman noted that dyes penetrated deeply into silicone rubber, and he surmised from this that drugs might do the same. He sealed isoproterenol (a drug used to treat heart block) into silicone tubes and implanted these into the hearts of dogs (Folkman and Long, 1964). He observed delayed release of the drug and later applied the same idea to delivering a birth control steroid. He donated this development, patent-free, to the Population Council. Alejandro Zaffaroni, a chemist and entrepreneur, heard of Folkman's work and in 1970 launched a company, Alza (originally called Pharmetrics), to develop these ideas for the pharmaceutical industry. The company developed families of new polymers for controlled release, as well as novel delivery strategies. Alza was a leader in launching this field, which is so important today; further details on controlled release are provided in an excellent historical overview (Hoffman, 2008).

Designed Biomaterials

In contrast to the biomaterials of the surgeon-hero era, when largely off-the-shelf materials were used to fabricate medical devices, the period from the 1960s onward saw the development of materials designed specifically for biomaterials applications. Here are some key classes of materials and their evolution from commodity materials to engineered/synthesized biomaterials.

Silicones

Although the class of polymers known as silicones had been explored for many years, it was not until the early 1940s that Eugene Rochow of GE pioneered the scale-up and manufacture of commercial silicones via the reaction of methyl chloride with silicon in the presence of catalysts. In his 1946 book, The Chemistry of Silicones (John Wiley & Sons, Publishers), Rochow commented anecdotally on the low toxicity of silicones but did not propose medical applications. Possibly the first report of silicones for implantation was by Lahey (1946) (see also Chapter II.5.18). The potential for medical uses of these materials was realized shortly afterward. In a 1954 book on silicones, McGregor devoted a whole chapter to “Physiological Response to Silicones.” He cited toxicological studies suggesting that the quantities of silicones that humans might take into their bodies should be “entirely harmless.” He also mentioned, without citation, the application of silicone rubber in artificial kidneys. Silicone-coated rubber grids were also used to support a dialysis membrane (Skeggs and Leonards, 1948). Many other early applications of silicones in medicine are cited in Chapter II.5.18.

Polyurethanes

Polyurethane was invented by Otto Bayer and colleagues in Germany in 1937. The chemistry of polyurethanes intrinsically offered a wide range of synthetic options leading to hard plastics, flexible films, or elastomers (Chapter I.2.2.A). Interestingly, this was the first class of polymers to exhibit rubber elasticity without covalent cross-linking. As early as 1959, polyurethanes were explored for biomedical applications, specifically heart valves (Akutsu et al., 1959). In the mid-1960s a class of segmented polyurethanes was developed that showed both good biocompatibility and outstanding flex life in biological solutions at 37°C (Boretos and Pierce, 1967). Sold under the name Biomer® by Ethicon and based upon DuPont Lycra®, these segmented polyurethanes comprised the pump diaphragms of the Jarvik 7® hearts that were implanted in seven humans.

Teflon®

DuPont chemist Roy Plunkett discovered a remarkably inert polymer, Teflon® (polytetrafluoroethylene) (PTFE), in 1938. William L. Gore and his wife, Vieve, started a company in 1958 to apply Teflon® to wire insulation. In 1969, their son Bob found that Teflon®, if heated and stretched, forms a porous membrane with attractive physical and chemical properties. Bill Gore told the story that, on a chairlift at a ski resort, he pulled from his parka pocket a piece of porous Teflon® tubing to show to his fellow passenger. The skier was a physician and asked for a specimen to try as a vascular prosthesis. Today, Gore-Tex® porous Teflon® and similar expanded PTFEs are the leading synthetic vascular grafts, and they are also used in numerous other applications in surgery and biotechnology (Chapters I.2.2.C and II.5.3.B).

Hydrogels

Hydrogels have been found in nature since life on earth evolved. Bacterial biofilms, hydrated extracellular matrix components, and plant structures are ubiquitous, water-swollen motifs in nature. Gelatin and agar were also known and used for various applications early in human history. But the modern history of hydrogels as a class of materials designed for medical applications can be accurately traced.

In 1936, DuPont scientists published a paper on recently synthesized methacrylic polymers. In this paper, poly(2-hydroxyethyl methacrylate) (polyHEMA) was mentioned. It was briefly described as a hard, brittle, glassy polymer, and clearly was not considered of importance. After that paper, polyHEMA was essentially forgotten until 1960. Wichterle and Lim published a paper in Nature describing the polymerization of HEMA monomer and a cross-linking agent in the presence of water and other solvents (Wichterle and Lim, 1960). Instead of a brittle polymer, they obtained a soft, water-swollen, elastic, clear gel. Wichterle went on to develop an apparatus (built originally from a children’s construction set; Figure 10) for centrifugally casting the hydrogel into contact lenses of the appropriate refractive power. This innovation led to the soft contact lens industry, and to the modern field of biomedical hydrogels as we know them today.

FIGURE 10 Left: Otto Wichterle (1913–1998). (Wikipedia.) Right: The centrifugal casting apparatus Wichterle used to create the first soft, hydrogel contact lenses. (Photograph by Jan Suchy, Wikipedia public domain.)

Interest in, and applications for, hydrogels have grown steadily over the years, and these are described in detail in Chapter I.2.5. Important early applications included acrylamide gels for electrophoresis, poly(vinyl alcohol) porous sponges (Ivalon) as implants, many hydrogel formulations as soft contact lenses, and alginate gels for cell encapsulation.

Poly(Ethylene Glycol)

Poly(ethylene glycol) (PEG), also called poly(ethylene oxide) (PEO) in its high molecular weight form, can be categorized as a hydrogel, especially when the chains are cross-linked. However, PEG has many other applications and implementations. It is so widely used today that its history is best discussed in its own section.

The low reactivity of PEG with living organisms has been known since at least 1944, when it was examined as a possible vehicle for intravenously administering fat-soluble hormones (Friedman, 1944). In the mid-1970s, Frank Davis and colleagues (Abuchowski et al., 1977) discovered that if PEG chains were attached to enzymes and proteins, these would have a much longer functional residence time in vivo than biomolecules that were not PEGylated. Professor Edward Merrill of MIT, based upon what he called “various bits of evidence” from the literature, concluded that surface-immobilized PEG would resist protein and cell pickup. The experimental results from his research group in the early 1980s bore out this conclusion (Merrill, 1992). The application of PEGs to a wide range of biomedical problems was significantly accelerated by the synthetic chemistry developments of Dr. Milton Harris while at the University of Alabama, Huntsville.

Poly(Lactic-Glycolic Acid)

Although poly(lactic acid) was originally discovered in 1833, it was the anionic polymerization of the cyclic lactide monomer in the early 1960s that made it possible to create materials with mechanical properties comparable to Dacron. The first publication on the application of poly(lactic acid) in medicine may be by Kulkarni et al. (1966). This group demonstrated that the polymer degraded slowly after implantation in guinea pigs or rats and was well tolerated by the animals. Cutright et al. (1971) were the first to apply this polymer for orthopedic fixation. Poly(glycolic acid) and copolymers of lactic and glycolic acid were subsequently developed. Early clinical applications of polymers in this family were for sutures, based upon the work of Joe Frazza and Ed Schmitt at Davis & Geck, Inc. (Frazza and Schmitt, 1971). The glycolic acid/lactic acid polymers have also been widely applied for controlled release of drugs and proteins. Professor Robert Langer’s group at MIT was the leader in developing these polymers in the form of porous scaffolds for tissue engineering (Langer and Vacanti, 1993).

Hydroxyapatite

Hydroxyapatite is one of the most widely studied materials for healing in bone. It is a naturally occurring mineral, a component of bone, and a synthesized material with wide application in medicine. Hydroxyapatite can be easily made as a powder. One of the first papers to describe biomedical applications of this material was by Levitt et al. (1969), in which they hot-pressed hydroxyapatite powder into useful shapes for biological experimentation. From this early appreciation of the materials science aspect of a natural biomineral, a literature of thousands of papers has evolved. In fact, the nacre implant described in the prehistory section may owe its effectiveness to hydroxyapatite: it has been shown that the calcium carbonate of nacre can transform in phosphate solutions to hydroxyapatite (Ni and Ratner, 2003).

Titanium

In 1791, William Gregor, a Cornish amateur chemist, used a magnet to extract the ore that we now know as ilmenite from a local river. He then extracted the iron from this black powder with hydrochloric acid, and was left with a residue that was the impure oxide of titanium. After 1932, a process developed by William Kroll permitted the commercial extraction of titanium from mineral sources. At the end of World War II, titanium metallurgy methods and titanium materials made their way from military application to peacetime uses. By 1940, satisfactory results had already been achieved with titanium implants (Bothe et al., 1940). The major breakthrough in the use of titanium for bony tissue implants was the Brånemark discovery of osseointegration, described above in the section on dental implants.

Bioglass

Bioglass is important to biomaterials as one of the first completely synthetic materials that seamlessly bonds to bone. It was developed by Professor Larry Hench and colleagues. In 1967 Hench was an assistant professor at the University of Florida. At that time his work focused on glass materials and their interaction with nuclear radiation. In August of that year, he shared a bus ride to an Army Materials Conference in Sagamore, New York, with a US Army colonel who had just returned from Vietnam, where he had been in charge of supplies to 15 MASH units. The colonel was not particularly interested in the radiation resistance of glass. Rather, he challenged Hench with the following: hundreds of limbs a week in Vietnam were being amputated because the body rejected the metal and polymer materials used to repair it. “If you can make a material that will resist gamma rays why not make a material the body won’t resist?”

Hench returned from the conference and wrote a proposal to the US Army Medical R and D Command. In October 1969 the project was funded to test the hypothesis that silicate-based glasses and glass-ceramics containing critical amounts of Ca and P ions would not be rejected by bone. In November 1969 Hench made small rectangles of what he called 45S5 glass (45 weight % SiO2), and Ted Greenlee, Assistant Professor of Orthopaedic Surgery at the University of Florida, implanted them in rat femurs at the VA Hospital in Gainesville. Six weeks later Greenlee called: “Larry, what are those samples you gave me? They will not come out of the bone. I have pulled on them, I have pushed on them, I have cracked the bone and they are still bonded in place.” Bioglass was born, and with the first composition studied! Later studies by Hench using surface analysis equipment showed that the surface of the bioglass, in biological fluids, transformed from a silicate-rich composition to a phosphate-rich structure, possibly hydroxyapatite (Clark et al., 1976).

The Contemporary Era (Modern Biology and Modern Materials)

It is probable that the modern era in the history of biomaterials, biomaterials engineered to control specific biological reactions, was ushered in by rapid developments in modern biology (second and third generation biomaterials; see Figure 2 in “Biomaterials Science: An Evolving, Multidisciplinary Endeavor”). In the 1960s, when the field of biomaterials was laying down its foundation principles and ideas, concepts such as cell-surface receptors, growth factors, nuclear control of protein expression and phenotype, cell attachment proteins, stem cells, and gene delivery were either controversial observations or not yet discovered. Thus, pioneers in the field could not have designed materials with these ideas in mind. It is to the credit of the biomaterials community that it has been quick to embrace and exploit new ideas from biology. Similarly, new ideas from materials science such as phase separation, anodization, self-assembly, surface modification, and surface analysis were quickly assimilated into the biomaterial scientists’ toolbox and vocabulary. A few of the important ideas in biomaterials literature that set the stage for the biomaterials science we see today are useful to list:

Since these topics are addressed later in some detail in Biomaterials Science: An Introduction to Materials in Medicine, third edition, they will not be expanded upon in this history section. Still, it is important to appreciate the intellectual leadership of many researchers that promoted these ideas that comprise modern biomaterials – this is part of a recent history of biomaterials that will someday be completed. We practice biomaterials today immersed within an evolving history.

Conclusions

Biomaterials have progressed from surgeon-heroes, sometimes working with engineers, to a field dominated by engineers, chemists, and physicists, to our modern era with biologists and bioengineers as the key players. As Biomaterials Science: An Introduction to Materials in Medicine, third edition, is being published, many individuals who were biomaterials pioneers in the formative days of the field are well into their eighth or ninth decades of life. A number of leaders of biomaterials, pioneers who spearheaded the field with vision, creativity, and integrity, have passed away. Biomaterials is a field so new that first-hand accounts of its roots are still available. I encourage readers of the textbook to document their conversations with pioneers of the field (many of whom still attend biomaterials conferences), so that the exciting stories that led to the successful and intellectually stimulating field we see today are not lost.

Bibliography

1. Abuchowski A, McCoy JR, Palczuk NC, van Es T, Davis FF. Effect of covalent attachment of polyethylene glycol on immunogenicity and circulating life of bovine liver catalase. J Biol Chem. 1977;252(11):3582–3586.

2. Akutsu T, Dreyer B, Kolff WJ. Polyurethane artificial heart valves in animals. J Appl Physiol. 1959;14:1045–1048.

3. Apple DJ, Trivedi RH. Sir Nicholas Harold Ridley, Kt, MD, FRCS, FRS. Arch Ophthalmol. 2002;120(9):1198–1202.

4. Blagg C. Development of ethical concepts in dialysis: Seattle in the 1960s. Nephrology. 1998;4(4):235–238.

5. Bobbio A. The first endosseous alloplastic implant in the history of man. Bull Hist Dent. 1972;20:1–6.

6. Bondurant S, Ernster V, Herdman R, eds. Safety of Silicone Breast Implants. Washington DC: National Academies Press; 1999.

7. Boretos JW, Pierce WS. Segmented polyurethane: A new elastomer for biomedical applications. Science. 1967;158:1481–1482.

8. Bothe RT, Beaton LE, Davenport HA. Reaction of bone to multiple metallic implants. Surg Gynecol Obstet. 1940;71:598–602.

9. Branemark PI, Breine U, Johansson B, Roylance PJ, Röckert H, Yoffey JM. Regeneration of bone marrow. Acta Anat. 1964;59:1–46.

10. Clark AE, Hench LL, Paschall HA. The influence of surface chemistry on implant interface histology: A theoretical basis for implant materials selection. J Biomed Mater Res. 1976;10:161–177.

11. Crubezy E, Murail P, Girard L, Bernadou J-P. False teeth of the Roman world. Nature. 1998;391:29.

12. Cutright DE, Hunsuck EE, Beasley JD. Fracture reduction using a biodegradable material, polylactic acid. J Oral Surg. 1971;29:393–397.

13. Egdahl RH, Hume DM, Schlang HA. Plastic venous prostheses. Surg Forum. 1954;5:235–241.

14. Folkman J, Long DM. The use of silicone rubber as a carrier for prolonged drug therapy. J Surg Res. 1964;4:139–142.

15. Frazza E, Schmitt E. A new absorbable suture. J Biomed Mater Res. 1971;5(2):43–58.

16. Friedman M. A vehicle for the intravenous administration of fat soluble hormones. J Lab Clin Med. 1944;29:530–531.

17. Hoffman A. The origins and evolution of “controlled” drug delivery systems. J Control Release. 2008;132(3):153–163.

18. Ingraham FD, Alexander Jr E, Matson DD. Polyethylene, a new synthetic plastic for use in surgery. J Am Med Assoc. 1947;135(2):82–87.

19. Kolff WJ. Early years of artificial organs at the Cleveland Clinic Part II: Open heart surgery and artificial hearts. ASAIO J. 1998;44(3):123–128.

20. Kulkarni RK, Pani KC, Neuman C, Leonard F. Polylactic acid for surgical implants. Arch Surg. 1966;93:839–843.

21. Lahey FH. Comments (discussion) made following the speech “Results from using Vitallium tubes in biliary surgery,” by Pearse HE before the American Surgical Association, Hot Springs, VA. Ann Surg. 1946;124:1027.

22. Langer R, Vacanti J. Tissue engineering. Science. 1993;260:920–926.

23. LeVeen HH, Barberio JR. Tissue reaction to plastics used in surgery with special reference to Teflon. Ann Surg. 1949;129(1):74–84.

24. Levitt SR, Crayton PH, Monroe EA, Condrate RA. Forming methods for apatite prostheses. J Biomed Mater Res. 1969;3:683–684.

25. McGregor RR. Silicones and their Uses. New York: McGraw Hill Book Company, Inc.; 1954.

26. Merrill EW. Poly(ethylene oxide) and blood contact. In: Harris JM, ed. Poly(ethylene glycol) chemistry: Biotechnical and biomedical applications. New York: Plenum Press; 1992:199–220.

27. Ni M, Ratner BD. Nacre surface transformation to hydroxyapatite in a phosphate buffer solution. Biomaterials. 2003;24:4323–4331.

28. Rob C. Vascular surgery. In: Gillis L, ed. Modern Trends in Surgical Materials. London: Butterworth & Co.; 1958:175–185.

29. Scales JT. Biological and mechanical factors in prosthetic surgery. In: Gillis L, ed. Modern Trends in Surgical Materials. London: Butterworth & Co.; 1958:70–105.

30. Skeggs LT, Leonards JR. Studies on an artificial kidney: Preliminary results with a new type of continuous dialyzer. Science. 1948;108:212.

31. Wichterle O, Lim D. Hydrophilic gels for biological use. Nature. 1960;185:117–118.

1 The regulatory climate in the US in the 1950s was strikingly different from today. This can be appreciated in this recollection from Willem Kolff about a pump oxygenator he made and brought with him from Holland to the Cleveland Clinic (Kolff, W. J., 1998): “Before allowing Dr. Effler and Dr. Groves to apply the pump oxygenator clinically to human babies, I insisted they do 10 consecutive, successful operations in baby dogs. The chests were opened, the dogs were connected to a heart-lung machine to maintain the circulation, the right ventricles were opened, a cut was made in the interventricular septa, the septa holes were closed, the right ventricles were closed, the tubes were removed and the chests were closed. (I have a beautiful movie that shows these 10 puppies trying to crawl out of a basket).